Abstract

In this paper, based on the discrete lifetime distribution, the residual and past versions of the Tsallis and Renyi extropy are introduced as new measures of information. Some of their properties and their relations to other measures are discussed, and the resulting models are illustrated for a uniform distribution. Because the softmax function yields a discrete probability vector with unit sum, these measures are then applied to softmax-transformed simulated and real data. For the real data, a suitable ARIMA model is also fitted to the softmax data.

1. Introduction

The entropy function marked a turning point in information theory as a measure of uncertainty. For a discrete random variable (R.V.) $X$ supported on $\{x_1, \ldots, x_N\}$ with corresponding probability vector $p = (p_1, \ldots, p_N)$, Shannon [1] introduced the non-negative discrete entropy function as follows:

$$H(X) = -\sum_{i=1}^{N} p_i \ln p_i. \tag{1}$$

Lad et al. [2] introduced the extropy as a complementary dual of entropy. The non-negative extropy of the discrete R.V. $X$ is given by

$$J(X) = -\sum_{i=1}^{N} (1 - p_i) \ln (1 - p_i). \tag{2}$$
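As a quick numerical illustration of the two definitions above, the following minimal sketch computes the Shannon entropy $-\sum p_i \ln p_i$ and the extropy $-\sum (1-p_i)\ln(1-p_i)$ of a probability vector. The function names and the example vectors are illustrative assumptions and do not come from the paper.

```python
import math

def shannon_entropy(p):
    """Shannon entropy -sum p_i ln p_i; terms with p_i = 0 contribute 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def extropy(p):
    """Extropy -sum (1 - p_i) ln(1 - p_i); terms with p_i = 1 contribute 0."""
    return -sum((1 - pi) * math.log(1 - pi) for pi in p if pi < 1)

# Hypothetical probability vector, used for illustration only.
p = [0.5, 0.3, 0.2]
print("entropy:", shannon_entropy(p))
print("extropy:", extropy(p))

# For N = 2 the two measures coincide, because 1 - p_1 = p_2.
q = [0.7, 0.3]
print("equal for N = 2:", abs(shannon_entropy(q) - extropy(q)) < 1e-12)
```

The `N = 2` check reflects the known fact that entropy and extropy agree for binary distributions and differ in general for longer vectors.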

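Several entropy measures and their generalizations have been presented in the literature. Among these generalizations of uncertainty, Tsallis [3] introduced the Tsallis entropy. The Tsallis entropy of the discrete R.V. $X$, for $\alpha > 0$, $\alpha \neq 1$, is given by

$$T_\alpha(X) = \frac{1}{\alpha - 1}\left(1 - \sum_{i=1}^{N} p_i^{\alpha}\right). \tag{3}$$

When $\alpha \to 1$, $T_\alpha(X)$ tends to the Shannon entropy $-\sum_{i=1}^{N} p_i \ln p_i$.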

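Recently, Xue and Deng [4] proposed the Tsallis extropy as a complementary dual of the Tsallis uncertainty function and studied its maximum value. Then, Balakrishnan et al. [5] studied the Tsallis extropy and applied it to pattern recognition. The Tsallis extropy of the discrete R.V. $X$, for $\alpha > 0$, $\alpha \neq 1$, is given by

$$JT_\alpha(X) = \frac{1}{\alpha - 1}\left(N - 1 - \sum_{i=1}^{N} (1 - p_i)^{\alpha}\right). \tag{4}$$

When $\alpha \to 1$, $JT_\alpha(X)$ tends to the extropy $-\sum_{i=1}^{N} (1 - p_i) \ln (1 - p_i)$.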
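The limiting behavior described above can be checked numerically. The sketch below assumes the standard forms $\frac{1}{\alpha-1}\left(1-\sum p_i^{\alpha}\right)$ for the Tsallis entropy and $\frac{1}{\alpha-1}\left(N-1-\sum (1-p_i)^{\alpha}\right)$ for the Tsallis extropy. The function names and the test vector are illustrative assumptions.

```python
import math

def tsallis_entropy(p, alpha):
    """Tsallis entropy (1 - sum p_i^alpha) / (alpha - 1), for alpha > 0, alpha != 1."""
    return (1 - sum(pi ** alpha for pi in p)) / (alpha - 1)

def tsallis_extropy(p, alpha):
    """Tsallis extropy (N - 1 - sum (1 - p_i)^alpha) / (alpha - 1), alpha > 0, alpha != 1."""
    n = len(p)
    return (n - 1 - sum((1 - pi) ** alpha for pi in p)) / (alpha - 1)

def shannon_entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def extropy(p):
    return -sum((1 - pi) * math.log(1 - pi) for pi in p if pi < 1)

p = [0.5, 0.3, 0.2]   # hypothetical probability vector
alpha = 1 + 1e-6      # close to 1, to approximate the limit numerically

print(tsallis_entropy(p, alpha), shannon_entropy(p))  # nearly equal
print(tsallis_extropy(p, alpha), extropy(p))          # nearly equal

# For alpha = 2 both measures reduce algebraically to 1 - sum p_i^2.
print(tsallis_entropy(p, 2.0), tsallis_extropy(p, 2.0))
```

The last line follows from expanding $(1-p_i)^2$ and using $\sum p_i = 1$, so it holds for any probability vector and not only for this example.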

Based on the continuous lifetime distribution, Ebrahimi [6] discussed the measure of the entropy of the residual lifetime distribution. Furthermore, Di Crescenzo and Longobardi [7] presented the measure of the entropy of the past lifetime distribution.

Based on the discrete lifetime distribution, Gao and Shang [8] developed the generalized past entropy via the grain exponent based on oscillations. Li and Shang [9] introduced the modified discrete generalized past entropy via the grain exponent.

The novelty of this paper lies in presenting the residual and past versions of the Tsallis and Renyi extropy based on the discrete lifetime distribution. Moreover, we apply those models to the softmax function for simulated and real data. In addition, we discuss the residuals of the softmax real data and give a suitable ARIMA model to fit the data. The remainder of the article is structured as follows: Section 2 gives the suggested models with their properties and relations to other measures, together with an example of the models for a uniform distribution. Section 3 applies the suggested models to softmax-transformed simulated and real data and discusses the residuals and the fitted ARIMA model for the softmax U.S. consumption data. Finally, Section 4 concludes the article.

2. The Suggested Models

Extropy, the complementary dual of information entropy, is a natural instrument for extending the Tsallis entropy. The Tsallis extropy combines the advantages of the Tsallis entropy and extropy. In this section, we present the residual and past versions of the Tsallis extropy based on the discrete lifetime distribution.

Let the discrete R.V. X supported with , and the corresponding probability vector . Then, the residual and past discrete Tsallis entropy, , is given, respectively, by

where and , and the interceptive parameter t is between 1 and . Moreover, the residual and past discrete extropy is given, respectively, by

Motivated by the concept of the Tsallis extropy and the discrete lifetime distribution, we introduce the residual and past of the Tsallis extropy, respectively, as follows:
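To make the role of the parameter t concrete, the following sketch builds residual and past probability vectors by truncating at t and renormalizing, then evaluates the Tsallis extropy on each. This conditioning construction is an assumption made here for illustration; the exact index conventions of Equations (5)–(10) (for example, whether the point t itself is included) may differ. All function names and the probability vector are hypothetical.

```python
def residual_pmf(p, t):
    """Assumed residual vector: p_i / sum_{j >= t} p_j for i >= t (t is 1-based)."""
    tail = p[t - 1:]
    total = sum(tail)  # assumes the tail carries positive probability
    return [pi / total for pi in tail]

def past_pmf(p, t):
    """Assumed past vector: p_i / sum_{j <= t} p_j for i <= t (t is 1-based)."""
    head = p[:t]
    total = sum(head)  # assumes the head carries positive probability
    return [pi / total for pi in head]

def tsallis_extropy(p, alpha):
    """Tsallis extropy (N - 1 - sum (1 - p_i)^alpha) / (alpha - 1) of a vector of length N."""
    n = len(p)
    return (n - 1 - sum((1 - pi) ** alpha for pi in p)) / (alpha - 1)

p = [0.1, 0.2, 0.3, 0.4]  # hypothetical probability vector
alpha = 2.0               # hypothetical parameter value
for t in range(1, len(p) + 1):
    res = tsallis_extropy(residual_pmf(p, t), alpha)
    past = tsallis_extropy(past_pmf(p, t), alpha)
    print(f"t={t}: residual={res:.4f}, past={past:.4f}")
```

Under this assumed construction, the residual vector shrinks and the past vector grows as t increases, which is the mechanism behind the opposite trends in t discussed for the two measures.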

In the following proposition, we describe how the sign of the past and residual Tsallis extropy depends on the value of the Tsallis parameter.

Proposition 1.

Let the discrete R.V. X supported with , and the corresponding probability vector . Then, from the past and residual Tsallis extropy given in Equations (10) and (9), respectively, we have:

1. The past Tsallis extropy is positive (negative) if ().

2. The residual Tsallis extropy is positive (negative) if ().

Proof.

- The past Tsallis extropy given in Equation (10) can be rewritten as follows:Moreover, we have that the term is greater than the other two terms, and . Then, the proof is obtained.

- The residual Tsallis extropy given in Equation (9) can be rewritten as follows:Moreover, the proof is obtained similarly.

□

Example 1.

Suppose that the discrete R.V. X has a uniform distribution over . Then, the residual and past Tsallis extropy are given, respectively, by

In the following proposition, we will establish the relation between the past and residual Tsallis extropy and the past and residual extropy.

Proposition 2.

In the next proposition, we will obtain the relation between the past and residual Tsallis extropy and the past and residual Tsallis entropy when the choice of the parameter .

Proposition 3.

In the following theorem, we show how the relation between the past and residual Tsallis extropy and the past and residual Tsallis entropy depends on the value of the Tsallis parameter.

Theorem 1.

Assume that the discrete R.V. X has finite support with the corresponding probability vector . Then, from the past and residual Tsallis extropy given in Equations (10) and (9), respectively, and the past and residual Tsallis entropy given in Equations (6) and (5), respectively, we have:

1. For , we obtain

2. For , we obtain

Proof.

Residual and Past Discrete Renyi Extropy

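In the same manner, we can discuss the residual and past discrete Renyi extropy. Let the discrete R.V. $X$ be supported on $\{x_1, \ldots, x_N\}$ with corresponding probability vector $p = (p_1, \ldots, p_N)$. Then, the Renyi extropy was introduced by Liu and Xiao [10] as follows:

$$J^{R}_\alpha(X) = \frac{1}{1 - \alpha}\left(-(N - 1)\ln(N - 1) + (N - 1)\ln\sum_{i=1}^{N} (1 - p_i)^{\alpha}\right), \quad \alpha > 0,\ \alpha \neq 1.$$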
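A short numerical sketch of the Renyi extropy is given below. It assumes the form $\frac{1}{1-\alpha}\left(-(N-1)\ln(N-1) + (N-1)\ln\sum (1-p_i)^{\alpha}\right)$ attributed to Liu and Xiao [10]; if the intended definition differs, the code should be adapted accordingly. The function names and example vectors are illustrative assumptions.

```python
import math

def renyi_extropy(p, alpha):
    """Assumed Renyi extropy of a vector of length N >= 2, alpha > 0, alpha != 1."""
    n = len(p)
    s = sum((1 - pi) ** alpha for pi in p)
    return (n - 1) * (math.log(s) - math.log(n - 1)) / (1 - alpha)

def extropy(p):
    """Extropy -sum (1 - p_i) ln(1 - p_i)."""
    return -sum((1 - pi) * math.log(1 - pi) for pi in p if pi < 1)

p = [0.5, 0.3, 0.2]  # hypothetical probability vector

# As alpha approaches 1, the assumed form approaches the extropy.
print(renyi_extropy(p, 1 + 1e-6), extropy(p))

# For a uniform vector the value does not depend on alpha:
# it equals (N - 1) * ln(N / (N - 1)).
u = [0.25] * 4
print(renyi_extropy(u, 0.5), renyi_extropy(u, 3.0), 3 * math.log(4 / 3))
```

The uniform case is a convenient sanity check because the dependence on the parameter cancels algebraically under the assumed form.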

Motivated by the concept of the Renyi extropy and the discrete lifetime distribution, we introduce the residual and past of the Renyi extropy, respectively, as follows:

In the following proposition, we will show the relation between the past and residual Renyi extropy and the past and residual extropy.

Proposition 4.

Example 2.

Suppose that the discrete R.V. X has a uniform distribution over . Then, the residual and past Renyi extropy are given, respectively, by

3. Applications

In this section, we use different data sources and the softmax function to obtain the corresponding probability vector, and then discuss the behavior of the residual and past Tsallis extropy.

3.1. Softmax Function

In practice, an R.V. may be continuous in nature but discrete when observed, for example, the number of days a patient stays in a hospital or survives after treatment. Accordingly, it is appropriate to model such situations by discrete distributions derived from continuous ones. One of the most well-known functions in engineering and science is the softmax function. It is used in many fields, such as game theory [11,12,13], reinforcement learning [14], and machine learning [15,16]. This normalized exponential function transforms a vector $x = (x_1, \ldots, x_N)$ into a vector with unit sum as follows:

$$p_i = \frac{e^{x_i}}{\sum_{j=1}^{N} e^{x_j}}, \quad i = 1, \ldots, N.$$
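The normalized exponential transformation can be sketched in a few lines. The version below subtracts the maximum before exponentiating, a common numerical safeguard that leaves the result unchanged; this stabilization and the function name are choices made here and are not described in the paper.

```python
import math

def softmax(x):
    """Map a real vector to a probability vector: exp(x_i) / sum_j exp(x_j)."""
    m = max(x)                               # shift by the maximum to avoid overflow
    exps = [math.exp(xi - m) for xi in x]    # the shift cancels in the ratio
    total = sum(exps)
    return [e / total for e in exps]

x = [-1.2, 0.3, 2.0, 0.0]  # hypothetical input vector
p = softmax(x)
print(p)
print("sum:", sum(p))       # equal to 1 up to floating-point rounding
```

Because every component is strictly positive and the components sum to one, the output can be used directly as the probability vector in the measures of Section 2.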

3.1.1. Standard Normal Distribution

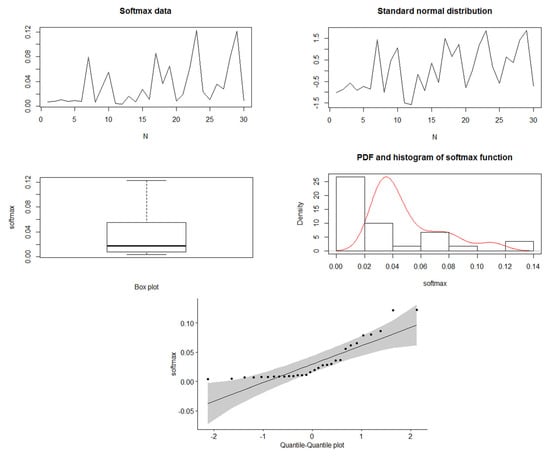

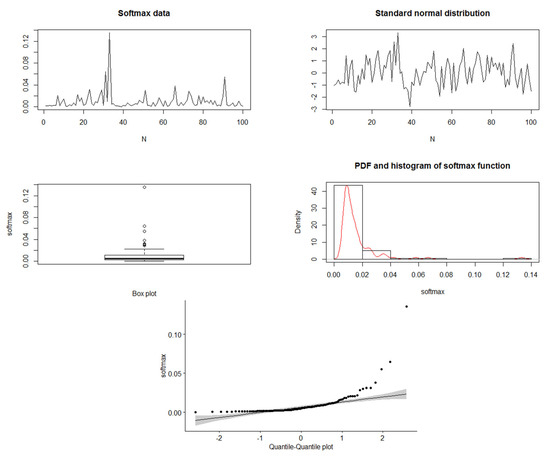

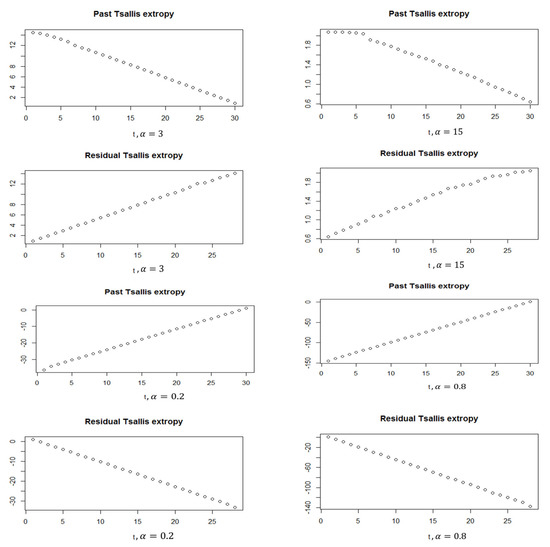

In this part, we generate data from the standard normal distribution ($\mathcal{N}(0,1)$) and use the softmax function to obtain the corresponding probability vector with unit sum. Figure 1 and Figure 2 show the simulated $\mathcal{N}(0,1)$ data together with the softmax data for sample sizes $N$ = 30 and 100, respectively. For $N = 30$, the softmax data have mean = 0.0333 and variance = 0.001195, and the quantile–quantile plot of the softmax data is far from normality. For $N = 100$, the mean = 0.01 and the variance = 0.0002753. The mean equals $1/N$ by construction, because the softmax values sum to one, and the variance decreases as the sample size increases. Furthermore, the quantile–quantile plot of the softmax data moves closer to normality, but the extreme standardized residuals (on both ends) are still larger than they would be for normal data. Moreover, Figure 3 and Figure 4 show the past and residual Tsallis extropy when , which can be noted as follows: By increasing t, the past Tsallis extropy decreases (increases) for . Furthermore, the residual Tsallis extropy increases (decreases) for .
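The simulation step can be reproduced in outline as follows. This is a minimal sketch: the seed, the helper names, and the use of the sample variance are assumptions, so the printed variances will not match the values reported above.

```python
import math
import random
import statistics

def softmax(x):
    """Normalized exponential, shifted by the maximum for numerical stability."""
    m = max(x)
    exps = [math.exp(xi - m) for xi in x]
    total = sum(exps)
    return [e / total for e in exps]

def simulate_softmax(n, seed):
    """Draw n standard normal values and return their softmax vector."""
    rng = random.Random(seed)  # arbitrary seed; the paper's seed is not stated
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return softmax(x)

for n in (30, 100):
    s = simulate_softmax(n, seed=0)
    # The mean is 1/n for any input, since the softmax values sum to one.
    print(n, statistics.mean(s), statistics.variance(s))
```

Only the variance and the shape of the softmax data depend on the simulated draw; the mean is fixed by the unit-sum constraint.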

Figure 1.

Simulated data of $\mathcal{N}(0,1)$ and the softmax data, $N = 30$.

Figure 2.

Simulated data of $\mathcal{N}(0,1)$ and the softmax data, $N = 100$.

Figure 3.

Past and residual Tsallis extropy, , .

Figure 4.

Past and residual Tsallis extropy, , .

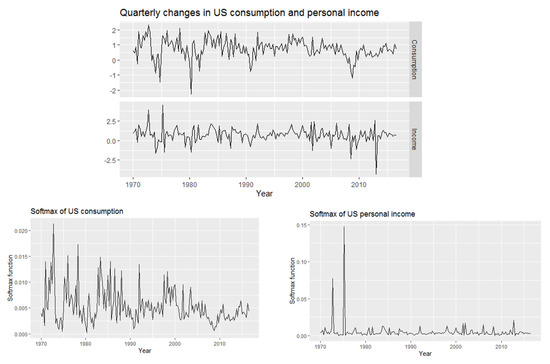

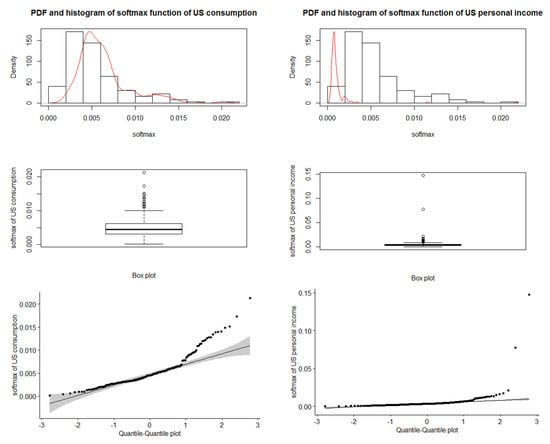

3.1.2. Real Data: U.S. Consumption and Personal Income Quarterly Changes

This part presents the quarterly changes in U.S. consumption and personal income data from 1970 to 2016. First, we discuss the softmax data obtained from these series. Figure 5 displays the U.S. consumption and income data and the corresponding softmax data. Figure 6 shows the data analysis of the softmax U.S. consumption and personal income: the softmax U.S. consumption has mean 0.00534759 and variance 0.000011557, and the softmax U.S. personal income has mean 0.00534759 and variance 0.0000149023. The two means coincide because each softmax vector sums to one, so its mean is the reciprocal of the number of observations (1/0.00534759 ≈ 187 quarters). Moreover, the extreme standardized residuals (on both ends) are larger than they would be for normal data.

Figure 5.

Quarterly changes in U.S. consumption and personal income data from 1970 to 2016 and the corresponding softmax data.

Figure 6.

Data analysis of softmax U.S. consumption (left panel) and softmax U.S. personal income (right panel).

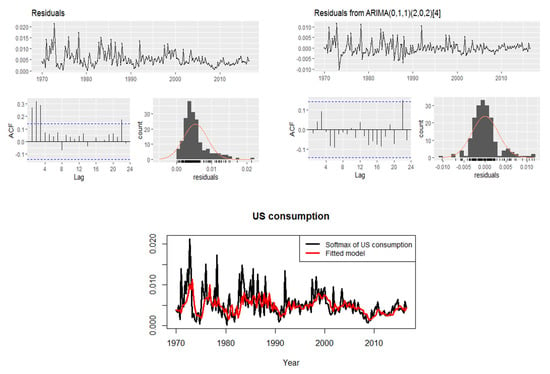

In Figure 7, based on the softmax U.S. consumption data, we first examine the residuals of the data before fitting a model. The ACF of these residuals shows significant spikes, so our aim is to find a suitable model based on the ACF. Correspondingly, Figure 7 also shows the residuals of the model fitted to the data. Thus, the best model that fits the data is with p-value = 0.1502 and AIC = 350.51, and it passes the Ljung–Box test. Accordingly, all the spikes are within the ACF significance limits, except for a single spike that exceeds them by a small margin. Note that the general form of a seasonal ARIMA model is ARIMA$(p,d,q)(P,D,Q)_s$, where $(P,D,Q)$ and $(p,d,q)$ are the seasonal and non-seasonal parts of the model, respectively, and s is the number of observations per year.
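The residual diagnostics mentioned above rest on two standard quantities: the sample autocorrelation at each lag, compared with approximate limits of $\pm 1.96/\sqrt{n}$, and the Ljung–Box statistic $Q = n(n+2)\sum_{k=1}^{h} \hat{\rho}_k^2/(n-k)$. The sketch below computes both in plain Python on a hypothetical residual series. It does not fit an ARIMA model and is not the software used for the reported results.

```python
import math

def autocorr(x, k):
    """Sample autocorrelation of the series x at lag k."""
    n = len(x)
    mean = sum(x) / n
    c0 = sum((xi - mean) ** 2 for xi in x)
    ck = sum((x[i] - mean) * (x[i + k] - mean) for i in range(n - k))
    return ck / c0

def ljung_box_q(x, h):
    """Ljung-Box statistic over lags 1..h; large values indicate autocorrelation."""
    n = len(x)
    return n * (n + 2) * sum(autocorr(x, k) ** 2 / (n - k) for k in range(1, h + 1))

# Hypothetical residual series with strong lag-1 dependence, for illustration only.
residuals = [1.0, -1.0] * 10
limit = 1.96 / math.sqrt(len(residuals))  # approximate 95% significance limit

for k in range(1, 4):
    r = autocorr(residuals, k)
    print(f"lag {k}: acf={r:.3f}, outside limits: {abs(r) > limit}")
print("Ljung-Box Q (h=3):", ljung_box_q(residuals, 3))
```

In practice Q is compared with a chi-squared quantile whose degrees of freedom are h minus the number of fitted ARMA parameters; a p-value above the chosen level, such as the 0.1502 reported above, means the hypothesis of uncorrelated residuals is not rejected.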

Figure 7.

Residuals of softmax U.S. consumption and its fit model .

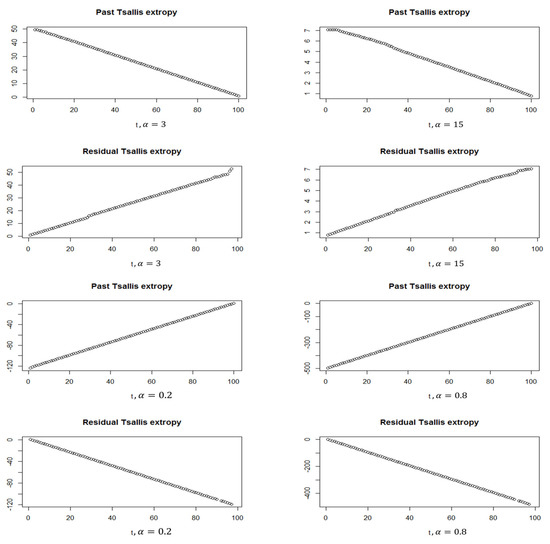

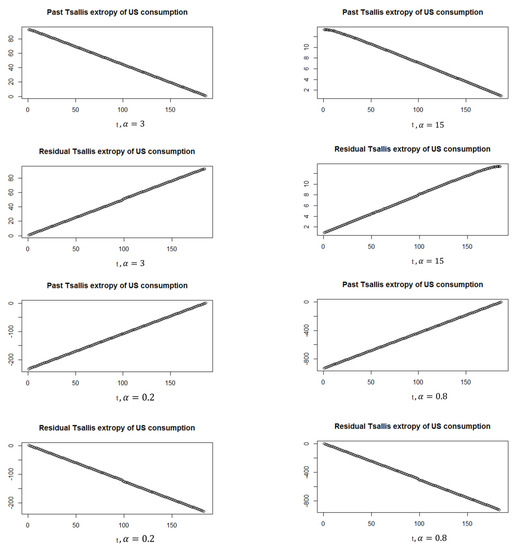

Moreover, Figure 8 shows the past and residual Tsallis extropy of the softmax U.S. consumption data, which can be noted as follows: By increasing t, the past Tsallis extropy decreases (increases) for . Furthermore, the residual Tsallis extropy increases (decreases) for .

Figure 8.

Past and residual Tsallis extropy of softmax U.S. consumption.

4. Conclusions

In this article, we introduced the residual and past discrete Tsallis and Renyi extropy. We discussed some of their properties and their relations to the residual and past discrete Tsallis entropy. We then chose the softmax function as a discrete probability distribution function and studied its behavior for simulated and real data. For the real data, we discussed the residuals of the softmax data and suggested an appropriate ARIMA model to fit them. Furthermore, we obtained the past and residual Tsallis extropy and studied where it increases and decreases according to the value of the Tsallis parameter. Finally, in future work, these models can be applied to ordered variables and their concomitants. For more details, see [17,18,19]. For applications, see [20,21,22].

Author Contributions

Methodology, T.M.J., N.S.-A. and M.S.M.; Software, R.A. and M.S.M.; Investigation, M.S.M.; Resources, N.F.; Supervision, M.S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Taif University Researchers Supporting Project number (TURSP-2020/318), Taif University, Taif, Saudi Arabia. The author Nahid Fatima would like to acknowledge the support of Prince Sultan University for paying the Article Processing Charges (APC) of this publication.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The simulated data used to support the findings of this study are included within the article.

Acknowledgments

The authors would like to thank the reviewers for their precious efforts.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

2. Lad, F.; Sanfilippo, G.; Agro, G. Extropy: Complementary dual of entropy. Stat. Sci. 2015, 30, 40–58.

3. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

4. Xue, Y.; Deng, Y. Tsallis eXtropy. Commun. Stat.-Theory Methods 2021, 1–14.

5. Balakrishnan, N.; Buono, F.; Longobardi, M. On Tsallis extropy with an application to pattern recognition. Stat. Probab. Lett. 2022, 180, 109241.

6. Ebrahimi, N. How to measure uncertainty in the residual lifetime distribution. Sankhya A 1996, 58, 48–56.

7. Di Crescenzo, A.; Longobardi, M. Entropy-based measure of uncertainty in past lifetime distributions. J. Appl. Probab. 2002, 39, 434–440.

8. Gao, J.; Shang, P. Analysis of financial time series using discrete generalized past entropy based on oscillation-based grain exponent. Nonlinear Dyn. 2019, 98, 1403–1420.

9. Li, S.; Shang, P. A new complexity measure: Modified discrete generalized past entropy based on grain exponent. Chaos Solitons Fractals 2022, 157, 111928.

10. Liu, J.; Xiao, F. Renyi extropy. Commun. Stat.-Theory Methods 2021, 1–12.

11. Young, H.; Zamir, S. Handbook of Game Theory, 1st ed.; Elsevier: Amsterdam, The Netherlands, 2015; Volume 4.

12. Sandholm, W.H. Population Games and Evolutionary Dynamics; MIT Press: Cambridge, MA, USA, 2010.

13. Goeree, J.K.; Holt, C.A.; Palfrey, T.R. Quantal Response Equilibrium: A Stochastic Theory of Games; Princeton University Press: Princeton, NJ, USA, 2016.

14. Sutton, R.; Barto, A. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

15. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

16. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Secaucus, NJ, USA, 2006.

17. Mohamed, M.S. On concomitants of ordered random variables under general forms of Morgenstern family. FILOMAT 2019, 33, 2771–2780.

18. Mohamed, M.S. A measure of inaccuracy in concomitants of ordered random variables under Farlie-Gumbel-Morgenstern family. FILOMAT 2019, 33, 4931–4942.

19. Mohamed, M.S. On Cumulative Residual Tsallis Entropy and its Dynamic Version of Concomitants of Generalized Order Statistics. Commun. Stat.-Theory Methods 2022, 51, 2534–2551.

20. Fatima, N. Solution of Gas Dynamic and Wave Equations with VIM. In Advances in Fluid Dynamics, Lecture Notes in Mechanical Engineering; Rushi Kumar, B., Sivaraj, R., Prakash, J., Eds.; Springer: Singapore, 2021.

21. Fatima, N. The Study of Heat Conduction Equation by Homotopy Perturbation Method. SN Comput. Sci. 2022, 3, 1–5.

22. Fatima, N.; Dhariwal, M. Solution of nonlinear coupled burger and linear burgers equation. Int. J. Eng. Technol. 2018, 7, 670–674.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).