1. Introduction

Over the last 20 years, several advances in thermodynamics have led to the development of results relating equilibrium quantities to nonequilibrium trajectories. Those advances have crystallized in a new area of research, nonequilibrium thermodynamics, where these relations play a major role [1,2,3]. Among them, two of the most remarkable are Jarzynski’s equality [4,5,6] and Crooks’ fluctuation theorem [7,8], for which experimental evidence has been reported in several contexts: unfolding and refolding processes involving RNA [9,10], electronic transitions between electrodes manipulating a charge parameter [11], the rotation of a macroscopic object inside a fluid surrounded by magnets where the current of a wire attached to the macroscopic object is manipulated [12], and a trapped-ion system [13,14].

These two results have been derived under several assumptions in the context of nonequilibrium thermodynamics, including both deterministic [

5,

6] and stochastic dynamics [

4,

7,

8,

15]. Moreover, it has been argued that both results can be obtained as a consequence of Bayesian retrodiction in a physical context [

16]. Here, we derive both of them using only the concepts from the theory of Markov chains. This allows us to both distinguish the mathematical from the physical assumptions underlying them and, thus, to make them available for application in other areas where the framework of thermodynamics may be useful. The distinction between mathematical and physical assumptions will be of particular importance for the definition of work, as we will see, since the usual definition based on physical considerations leads to an asymmetry of the definition in processes that run either forward or backward in time—see

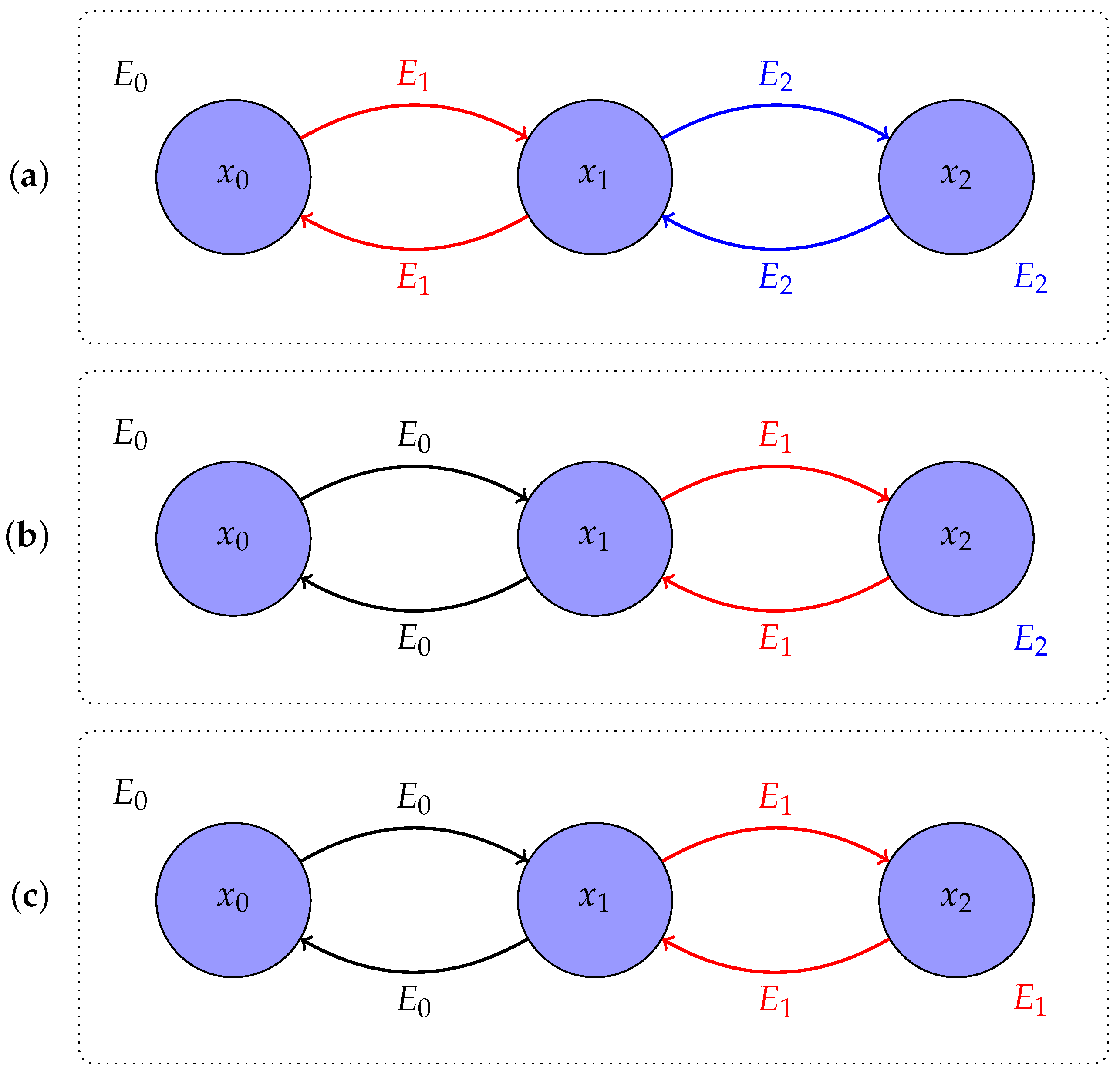

Figure 1 for a simple example. This is relevant, for instance, when analysing trajectories in terms of their work value, if we do not know whether they were recorded in the forward direction or whether they have been generated by playing them backwards. Ideally, we would like to be able to ascribe work values directly to trajectories without any additional information.

One of the application areas where the framework of thermodynamics has recently been investigated outside the realm of physics is the analysis of simple learning systems [1,18,19,20,21,22,23,24,25,26] and, in particular, the problem of decision-making under uncertainty and resource constraints [22,27,28,29,30,31]. The basic analogy follows from the idea that decision-making involves two opposing forces: (i) the tendency of the decision-maker toward better options (equivalently, to maximize a function called utility) and (ii) the restrictions on this tendency given by the limited information-processing capabilities of the decision-maker, which prevent him/her from always picking the best option and are usually modelled by a bound on the entropy of the probability distribution that describes the decision-maker’s behaviour. Thermodynamic systems are also explained in terms of two opposing forces, the first being the energy, which the system tries to minimize, and the second being the entropy, which prevents the minimization of the energy to its full extent. Thus, in both cases, we formally deal with optimization problems under information constraints, so we can conceptualize both decision-making and thermodynamics in terms of information theory. In particular, we can consider the environment in which a decision is being made or a thermodynamic system is immersed as a source of information (in the form of either utility or energy), which, due to the noise (modelled by entropy), reaches the decision-making or thermodynamic system with some error. This results in an imperfect response by the system.

The analogy between thermodynamics and decision-making is not restricted to the equilibrium case [22,27,28,29,30,31], but can be taken further from equilibrium to nonequilibrium systems. In particular, the aforementioned fluctuation theorems of Jarzynski and Crooks have been previously suggested to apply to decision-makers that adapt to changing environments [32]. That previous work investigated hysteresis and adaptation in decision-makers, but it relied on the physical convention of defining work differently for forward and backward processes. Here, we improve on that work by replacing this convention with a different energy protocol that naturally entails a symmetric definition of work and by weakening the assumptions that are actually needed in order for the fluctuation theorems to hold in the context of general Markov chains. Given the fact that the literature on this topic belongs for the most part to thermodynamics, we adopt the thermodynamic notation here. In particular, we consider energy functions instead of utilities and take Markov chains as the starting point.

Our manuscript is organized as follows. In Section 2, we introduce the notions of work and other thermodynamic concepts that are inherent to Markov chains. That is, in contrast to the formalism in physics [7,8,15], we start with the assumption of a Markov chain and deduce all other concepts from it without the need to presuppose the existence of an external energy function. We discuss under what conditions these concepts are uniquely specified by the Markov chain. In Section 3, we use this framework to derive Jarzynski’s equality (Theorem 1) under weaker assumptions than those of the derivation in the context of decision-making that was presented in [32]. In Section 4, we prove Crooks’ fluctuation theorem (Theorem 2 and Corollary 2) within the same setup. In particular, we use an additional assumption that is not mandatory in Crooks’ work [7,8,15], but is needed here given the inherent nature of our definition of work. In fact, we provide an example in which the new requirement is violated and, as a consequence, Crooks’ theorem is false everywhere (Proposition 3). In Section 5, we discuss how the concepts we have developed can be applied to decision-making systems.

Note that, for simplicity, we develop the results for discrete-time Markov chains with finite state spaces. However, the ideas can be translated, for example, to continuous state spaces by assuming for densities of Markov kernels the properties we use here for transition matrices. We include a few more details regarding this scenario in the discussion (Section 5), where we also briefly address the case of continuous-time Markov chains.

4. Crooks’ Fluctuation Theorem for Markov Chains

The original derivation of Crooks’ fluctuation theorem for Markovian dynamics [7,8,15] was carried out using a definition of work different from the one in (4). In this section, we derive the theorem for Markov chains using (4) and comment on the difference between these approaches in the discussion. As discussed in the Introduction, an additional hypothesis is needed for the result to hold in our setup. We derive Crooks’ fluctuation theorem using this additional assumption in Theorem 2 and Corollary 2 below, and then, in Proposition 3, we provide an example where this requirement is violated and the theorem is false everywhere.

Before proving the intermediate results and, finally, Crooks’ fluctuation theorem, let us briefly sketch the procedure we follow throughout this section. We start with Proposition 1, where we use the same Markov chain that we defined in the proof of Theorem 1 and obtain, along similar lines, a more precise relation between and , in particular between the probability of some realization of and that of the same realization (with the events taking place in reversed order) of . As a matter of fact, we show that, for a given , is (roughly) the only Markov chain fulfilling such a relation. Then, in Proposition 2, we show how the driving signals of and are related. In order to do so, we exploit the relation between the equilibrium distributions of and , which comes from the fact that both Markov chains share the same equilibrium distributions. By combining these two propositions, we reach a relation between the probability distributions of the driving signals of and in Theorem 2. Lastly, in Corollary 2, we impose an extra condition on the equilibrium distributions of in order to obtain Crooks’ fluctuation theorem in its usual form. We proceed now to show the details involved in the argument we just presented. We start by proving Proposition 1.

Proposition 1. If is a Markov chain on a finite state space S whose initial distribution has non-zero entries and whose transition matrices are irreducible, then there exists a unique Markov chain such that , , , where are the transition matrices of , and for ,for any family of energies of . Moreover, this unique satisfieswhere , , and denotes the reversal of . In particular, the probability of following is the same as the probability of following if and only if . Proof. Consider the Markov chain

defined in the proof of Theorem 1, which is well defined, since we have the same hypotheses. We can proceed in the same way as in Theorem 1 to obtain

where we applied the Markov property of

in

, (11) in

, and the definition of

Q plus the Markov property of

in

. This proves the existence of a Markov chain with the desired properties. Moreover, we have

where we applied (4), the definition of conditional probability and the fact

follows

in

, the definition of the conditional probability, the fact that

follows

in

, and both the definitions of

Q and

plus (12) in

.

It remains to show the uniqueness of

. Assume

is a Markov chain with transition matrices

such that

, (13) holds, and

for all

and

. Consider some

n such that

and some

with

. By (13), we have

Applying the Markov property, the fact that

,

, and the definition of

, we obtain

Since the argument also works for

and

, the case

holds by definition, and

, we have

. □

We say

is

microscopically reversible [15] if (13) is satisfied for

being the time reversal of

, i.e., if the unique Markov chain

that exists by Proposition 1 has initial distribution

and transition matrices

. Notice, if this property holds, then (14) relates the probability of observing a realization

of a Markov chain

with that of observing the reversed realization

in the time reversal

of

, that is when starting with the equilibrium distribution of the last environment and choosing according to the same conditional probabilities, but in reversed order. This is the case if and only if the transition matrices of

satisfy detailed balance, as we show in the following lemma.

Lemma 2. If is a Markov chain on a finite state space S with irreducible transition matrices and initial distribution with non-zero entries, then satisfies detailed balance for if and only if is microscopically reversible.

Proof. If satisfies detailed balance, that is if each satisfies (1), then by the definition of in (11), we have for each . Hence, in this case, the Markov chain constructed in Theorem 1 and Proposition 1 is the time reversal of , and so, is microscopically reversible by definition.

It remains to show that, if

is microscopically reversible, then its transition matrices satisfy detailed balance. Let

be the time reversal of

. Since, by assumption,

and

, where

are the transition matrices of

, we can follow the proof of Proposition 1 to obtain for

Thus, for each

,

satisfies detailed balance with respect to

. □
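The equivalence in Lemma 2 can be checked numerically on a small example. The following is a minimal sketch, assuming that the reversed matrix in (11) is the usual reversal of a transition matrix with respect to its stationary distribution, with entries `pi[y] * m[y][x] / pi[x]`. The helper names (`boltzmann`, `metropolis`, `reverse`, `same`) and the numerical values are illustrative and do not come from the text.

```python
import math


def boltzmann(energy, beta=1.0):
    """Boltzmann distribution exp(-beta * E(x)) / Z for a list of energies."""
    weights = [math.exp(-beta * e) for e in energy]
    z = sum(weights)
    return [w / z for w in weights]


def metropolis(energy, beta=1.0):
    """Row-stochastic matrix with strictly positive entries that satisfies
    detailed balance with respect to boltzmann(energy, beta)."""
    k = len(energy)
    m = [[0.0] * k for _ in range(k)]
    for x in range(k):
        for y in range(k):
            if x != y:
                m[x][y] = min(1.0, math.exp(-beta * (energy[y] - energy[x]))) / k
        m[x][x] = 1.0 - sum(m[x])  # remaining mass stays at x
    return m


def reverse(m, pi):
    """Assumed form of the reversal of m with respect to its stationary
    distribution pi: r[x][y] = pi[y] * m[y][x] / pi[x]."""
    k = len(pi)
    return [[pi[y] * m[y][x] / pi[x] for y in range(k)] for x in range(k)]


def same(a, b, tol=1e-12):
    """Entrywise comparison of two matrices up to a tolerance."""
    return all(abs(u - v) < tol for ra, rb in zip(a, b) for u, v in zip(ra, rb))


energy = [0.0, 1.0, 2.5]
pi = boltzmann(energy)
m_db = metropolis(energy)

# Doubly stochastic cyclic matrix: the uniform distribution is stationary,
# but probability flows preferentially along 0 -> 1 -> 2 -> 0.
m_cyc = [[0.1, 0.8, 0.1], [0.1, 0.1, 0.8], [0.8, 0.1, 0.1]]
uniform = [1.0 / 3.0] * 3

reversible = same(reverse(m_db, pi), m_db)           # reversal equals the matrix
irreversible = same(reverse(m_cyc, uniform), m_cyc)  # reversal is the transpose
print(reversible, irreversible)
```

Under this assumption, the Metropolis-type matrix coincides with its own reversal, whereas the cyclic matrix does not, although both have a stationary distribution with non-zero entries.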

In particular, this means that, if

satisfies detailed balance, then the time reversal

of

satisfies (14), that is

for any family of energies

of

, where

is the dissipated work of

. Thus, in this case, dissipated work is an unambiguous measure of the discrepancy between the probability of observing a realization of

and the probability of observing the same trajectory in reversed order in the time reversal of

. We have, hence, an unambiguous measure of

hysteresis (see Section 5).

Before showing Theorem 2 and Crooks’ fluctuation theorem, we relate the work of with that of its time reversal.

Proposition 2. If is a Markov chain on a finite state space S with the initial distribution with non-zero entries and irreducible transition matrices, then there exists a Markov chain and a constant such thatwhere and are families of energies of and , respectively. Moreover, if the stationary distribution of coincides with the initial distribution of , then there exists a constant such that Proof. Let

be the Markov chain defined in the proof of Theorem 1. Since work is well defined (up to a constant) for both

and

, by Lemma A2 (see Appendix A), we can use the relation between the energy functions of both chains in (A2) to show (16). We have

where in

we defined

, which is a constant since

and

by Lemma A2 (see Appendix A). In

, we applied the definition of

and (A2), cancelled the repeated

, defined as in Lemma A2, for all

, and introduced

. In

, we rewrite the sum in terms of

.

For the second statement, notice that, if is the stationary distribution of , then there exists a constant c such that by (2). Thus, we have for all , where is the constant in (16). □

If detailed balance holds, then (16) and (17) relate the work along a realization of with the reversed realization of its time reversal . More precisely, we obtain the following corollary.

Corollary 1 (When work is odd under time reversal). If all transition matrices of in Proposition 2 satisfy detailed balance and is the time reversal of , then the constants k in (16) and (17) can be taken to be zero.

Proof. For the constant in (16), we simply choose

and

, and for the constant in (17), we choose

and

, which we can do since

is the stationary distribution of

and, by Lemma A2 (see Appendix A), also that of

. □

Remark 1. Note that choosing the energy functions in Corollary 1 is unnecessary whenever both and are thermodynamic processes. Although energy is defined only up to a constant in thermodynamics, it would make no sense to pick the constants differently when dealing with a system where the same dynamics occur more than once. Thus, there, we have for , , and in case is a stationary distribution of , . In particular, when taking to be the time reversal of in thermodynamics, we always have in (16), and in case is a stationary distribution of , in (17).

The non-constant term

in (16), which remains even when

satisfies detailed balance, follows from an asymmetry between

and

. In particular,

goes from

to

, whereas

goes from

to

, because while

and the stationary distribution of

is

, which is also the initial distribution of

, the stationary distribution of the final transition matrix

of

is

(and not

). Furthermore, while

may begin with a change in the energy function, since

is allowed,

does not, as

is both the initial distribution of

and the stationary distribution of

. An example can be found in Figure 2. This asymmetry is erased if we assume that

is the stationary distribution of

, in which case, for Markov chains

that satisfy detailed balance, the work along any realization of

has the opposite sign of the work along the reversed realization of

. That is, thermodynamic work becomes

odd under time reversal.
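The asymmetry described above can be made concrete with a short computation. The sketch below assumes that the work in (4) is the sum, over the steps of a trajectory, of the energy change evaluated at the current state, and that the time reversal starts in equilibrium for the last energy function and then runs through the energy functions in reversed order. Both conventions, as well as the names `work`, `reversed_energies`, and `max_oddness_gap` and all numerical values, are assumptions made for illustration.

```python
import itertools


def work(path, energies):
    """Assumed work: sum over n < N of E_{n+1}(x_n) - E_n(x_n)."""
    return sum(energies[n + 1][x] - energies[n][x] for n, x in enumerate(path[:-1]))


def reversed_energies(energies):
    """Energies of the time reversal: it starts in equilibrium for E_N and is
    then driven by E_N, E_{N-1}, ..., E_1."""
    return [energies[-1]] + energies[:0:-1]


def max_oddness_gap(energies):
    """Largest |W_reversed(reversed path) + W(path)| over all paths."""
    states = range(len(energies[0]))
    rev = reversed_energies(energies)
    return max(
        abs(work(path[::-1], rev) + work(path, energies))
        for path in itertools.product(states, repeat=len(energies))
    )


e1, e2, e3 = [0.0, 1.0, 2.0], [0.5, 0.0, 1.5], [2.0, 0.3, 0.0]
e0 = [1.0, 0.0, 0.0]  # initial energy that differs from e1

gap_general = max_oddness_gap([e0, e1, e2, e3])     # E_0 != E_1
gap_stationary = max_oddness_gap([e1, e1, e2, e3])  # E_0 == E_1
print(gap_general, gap_stationary)
```

In this toy setting, the gap equals the largest initial energy jump when the initial energy differs from the first one, and it vanishes when the initial distribution is already stationary for the first transition matrix, which is the situation in which work is odd under time reversal.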

The following theorem contains Crooks’ fluctuation theorem as the special case when satisfies detailed balance (see Corollary 2 below).

Theorem 2. If is a Markov chain on a finite state space S whose initial distribution has non-zero entries, whose transition matrices are irreducible, and where is the stationary distribution of , that is , then there exists a Markov chain and a constant such thatwhere and are families of energies of and , respectively, and denotes the support of the probability distribution of , that is the values that can be taken by with non-zero probability. Proof. Let

be the Markov chain defined in the proof of Theorem 1. Note that, for any family of energies

of

, there exists a constant

c such that

by (2), since

is the stationary distribution of

. Given some

, we have

where we applied (14) in

and Proposition 2 plus the fact that

in

. To obtain (18), it remains to show that

for all

. By definition, there exists some

such that

and

. By Proposition 2,

. Since

and the entries of the (unique) stationary distributions of

are also non-zero by Lemma 1, we can use (11) plus the Markov property for both

and

to show

, implying

. □

As the special case when each transition matrix of in Theorem 2 satisfies detailed balance, we obtain Crooks’ fluctuation theorem modified by the additional assumption of .

Corollary 2 (Crooks’ fluctuation theorem for Markov chains). If all transition matrices of the Markov chain in Theorem 2 satisfy detailed balance, then (18) holds with and being the time reversal of , that is the Markov chain with initial distribution and transition matrices .

Proof. As can be seen from the proof of Theorem 2 and Corollary 1, in the case of detailed balance, we can choose to be the time reversal of . Moreover, the origin of the constant k in Theorem 2 is Equation (17). By Corollary 1, this constant can be set to zero if the transition matrices of satisfy detailed balance. □
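Corollary 2 can be verified by brute force on a small chain. The sketch below enumerates all trajectories of a three-state chain with three transitions and compares the ratio of the forward and backward work probabilities with the exponential of the dissipated work. It assumes that work is the sum of energy changes at fixed state, that the theorem takes the familiar form P_F(w) / P_B(-w) = exp(beta * (w - dF)), and that Metropolis-type matrices are used to enforce detailed balance. The energies, the value of `BETA`, and all function names are illustrative choices.

```python
import itertools
import math
from collections import defaultdict

BETA = 1.0


def boltzmann(energy):
    weights = [math.exp(-BETA * e) for e in energy]
    z = sum(weights)
    return [w / z for w in weights]


def free_energy(energy):
    return -math.log(sum(math.exp(-BETA * e) for e in energy)) / BETA


def metropolis(energy):
    """Strictly positive stochastic matrix in detailed balance with boltzmann(energy)."""
    k = len(energy)
    m = [[0.0] * k for _ in range(k)]
    for x in range(k):
        for y in range(k):
            if x != y:
                m[x][y] = min(1.0, math.exp(-BETA * (energy[y] - energy[x]))) / k
        m[x][x] = 1.0 - sum(m[x])
    return m


def work(path, energies):
    """Assumed work: sum over n < N of E_{n+1}(x_n) - E_n(x_n)."""
    return sum(energies[n + 1][x] - energies[n][x] for n, x in enumerate(path[:-1]))


def work_distribution(energies):
    """Exact law of the work for a chain that starts from boltzmann(energies[0])
    and uses metropolis(energies[n]) for its n-th transition."""
    p0 = boltzmann(energies[0])
    mats = [metropolis(e) for e in energies[1:]]
    dist = defaultdict(float)
    for path in itertools.product(range(len(p0)), repeat=len(energies)):
        prob = p0[path[0]]
        for n, m in enumerate(mats):
            prob *= m[path[n]][path[n + 1]]
        dist[round(work(path, energies), 9)] += prob
    return dist


e1, e2, e3 = [0.0, 1.0, 2.0], [0.5, 0.0, 1.5], [2.0, 0.3, 0.0]
forward = [e1, e1, e2, e3]   # E_0 = E_1: p_0 is stationary for the first matrix
backward = [e3, e3, e2, e1]  # time reversal: starts at equilibrium for E_N
delta_f = free_energy(e3) - free_energy(e1)

p_fwd = work_distribution(forward)
p_bwd = work_distribution(backward)
max_err = max(
    abs(p_fwd[w] / p_bwd[round(-w, 9)] - math.exp(BETA * (w - delta_f)))
    for w in p_fwd
)
print(max_err)
```

The strictly positive matrices guarantee that every work value has non-zero probability in both directions, so the ratio is defined on the whole support. The printed deviation should be at the level of floating-point rounding.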

Notice, in most of the literature on Crooks’ fluctuation theorem, one writes

for the probability

of the work along the so-called

forward process and

for the probability

of the work along the so-called

backward process (the time reversal of

), so that, by Corollary 2, under detailed balance, Equation (18) reads

The condition that

is the stationary distribution of

is not necessary in Crooks’ original work [7,8,15,17]. Nonetheless, it is fundamental in our approach: Crooks’ fluctuation theorem can even be false for every work value if

is not the stationary distribution of

, as we show in Proposition 3.

Proposition 3. If is not the stationary distribution of , then there exist Markov chains where Crooks’ fluctuation theorem is false everywhere, despite the other assumptions in Theorem 2 and Corollary 2 being fulfilled.

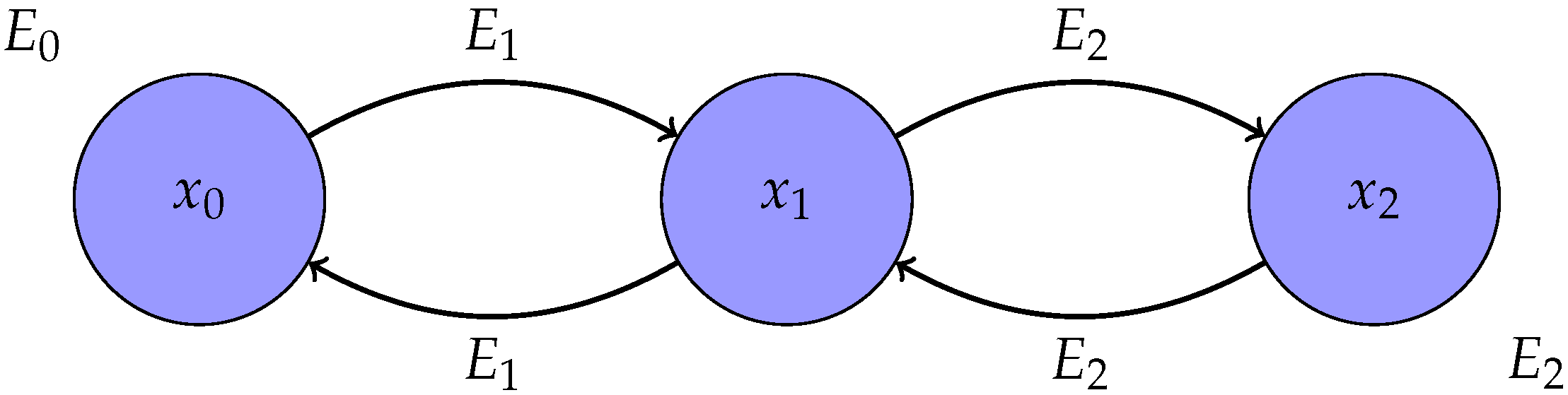

Proof. We consider a state space with three components

, a Markov chain with two steps

and

, where

and

. We take

as the energy function associated with

, where

,

and

, and

as the energy function associated with

, where

,

and

. Taking

, we obtain both free energies

and

equal to one. Notice that we have

, with non-zero entries, and

. Notice, also, that

, where

,

, and

. We fix

. We can easily see that (19) is not defined for

and that it is false, although defined, for

. We have

, since

is both the starting distribution of the time reversal of

and the stationary distribution of its only transition matrix. Thus, we have

and

, which means (19) is not defined at

. We obtain, analogously, that it is not defined for

. For

, we have

and

which means (19) is defined and false there. Although the argument is independent of the transition matrix for

, fix

for completeness, since it has non-zero entries and fulfils detailed balance with respect to

. □

5. Discussion: Application to Decision-Making

The bridge connecting thermodynamics and decision-making is an optimization principle directly inspired by the

maximum entropy principle [27,30,37,38]. In particular, given a finite set of possible choices

S, the optimal behaviour is given by the distribution

that optimally trades utility and uncertainty according to the following optimization principle:

where

is the set of probability distributions over

S,

H is the Shannon entropy,

denotes the expected value over

, and

is a

utility function, that is a function that assigns larger values to options in

S that are preferred by the decision-maker. Note that the main difference between (20) and the maximum entropy principle is the substitution of an energy function

by a utility

U, which behaves as a negative energy function

(in the sense that it is the

force that opposes uncertainty). As a result of their similarity, both principles yield the same result, namely the Boltzmann distribution:

where

is a trade-off parameter between uncertainty and utility/energy and

Z is a normalization constant.
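As a quick sanity check of the optimization principle, one can compare the Boltzmann distribution with randomly drawn competitors. The sketch below assumes that the objective in (20) is the expected utility plus the Shannon entropy weighted by the inverse of the trade-off parameter, so that the optimum is proportional to `exp(beta * U(x))`. The utilities, the value of `beta`, and the helper names are hypothetical.

```python
import math
import random


def boltzmann_from_utility(utility, beta):
    """p(x) = exp(beta * U(x)) / Z."""
    weights = [math.exp(beta * u) for u in utility]
    z = sum(weights)
    return [w / z for w in weights]


def objective(q, utility, beta):
    """Expected utility plus Shannon entropy weighted by 1/beta
    (the assumed form of the trade-off in (20))."""
    expected_utility = sum(qi * ui for qi, ui in zip(q, utility))
    entropy = -sum(qi * math.log(qi) for qi in q if qi > 0.0)
    return expected_utility + entropy / beta


def random_distribution(k, rng):
    raw = [rng.random() for _ in range(k)]
    total = sum(raw)
    return [r / total for r in raw]


utility = [1.0, 0.2, -0.5, 0.7]
beta = 2.0
p = boltzmann_from_utility(utility, beta)
best = objective(p, utility, beta)

rng = random.Random(0)
challengers = [random_distribution(len(utility), rng) for _ in range(1000)]
never_beaten = all(objective(q, utility, beta) <= best + 1e-12 for q in challengers)

# At the optimum, the objective equals log(Z) / beta.
log_z = math.log(sum(math.exp(beta * u) for u in utility))
print(never_beaten, abs(best - log_z / beta))
```

Under the stated assumption, no competitor exceeds the value attained by the Boltzmann distribution, and that value equals the logarithm of the normalization constant divided by the trade-off parameter, the decision-theoretic counterpart of a (negative) free energy.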

The analogy between thermodynamics and decision-making can be taken further by considering not only their optimal distribution, but how they transition between different distributions in the path towards the optimal one. Here, the notion of uncertainty is useful again, although this time, it is relative to the optimal distribution

p. More specifically, we can think of the transitions the decision-maker undergoes as being driven by the reduction of uncertainty with respect to the optimal distribution

p, which can be modelled by the dual of

p-majorization [39]. In this approach, given

, the decision-maker transitions from

q to

, which we denote by

, if

is

closer to

p than

q (see [39] for a rigorous definition of the dual of

and, hence, of

closer). For us, the important fact about

is that, as it turns out ([40], Theorem 2), the transitions that are allowed by

are precisely the ones that result from applying a transition matrix that has

p as a stationary distribution. More precisely,

where

is a matrix whose rows are normalized and fulfils

, that is a

stochastic matrix for which

is a stationary distribution [33]. Importantly, the transition matrix assumption is common in the study of both thermodynamic and decision-making systems [8,15].
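One familiar consequence of applying a stochastic matrix that leaves p invariant is that the relative entropy with respect to p cannot increase (the data-processing inequality). The sketch below illustrates this for a Metropolis-type matrix. Relative entropy is used here only as one convenient way to measure closeness to p and is not the definition from [39]. All names and numbers are illustrative assumptions.

```python
import math


def boltzmann(energy, beta=1.0):
    weights = [math.exp(-beta * e) for e in energy]
    z = sum(weights)
    return [w / z for w in weights]


def metropolis(energy, beta=1.0):
    """Stochastic matrix that has boltzmann(energy, beta) as stationary distribution."""
    k = len(energy)
    m = [[0.0] * k for _ in range(k)]
    for x in range(k):
        for y in range(k):
            if x != y:
                m[x][y] = min(1.0, math.exp(-beta * (energy[y] - energy[x]))) / k
        m[x][x] = 1.0 - sum(m[x])
    return m


def apply(q, m):
    """Row vector times row-stochastic matrix: (qM)(y) = sum_x q(x) M(x, y)."""
    k = len(q)
    return [sum(q[x] * m[x][y] for x in range(k)) for y in range(k)]


def kl(q, p):
    """Relative entropy D(q || p) in nats."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0.0)


energy = [0.0, 1.0, 2.5]
p = boltzmann(energy)
m = metropolis(energy)

q = [0.05, 0.15, 0.80]  # initial behaviour, far from the optimal one
divergences = []
for _ in range(6):
    divergences.append(kl(q, p))
    q = apply(q, m)  # one transition of the decision-maker

p_is_stationary = max(abs(a - b) for a, b in zip(apply(p, m), p)) < 1e-12
non_increasing = all(b <= a + 1e-12 for a, b in zip(divergences, divergences[1:]))
print(p_is_stationary, non_increasing)
```

Each application of the matrix moves the distribution of the decision-maker towards p in this sense, which matches the intuition of transitions driven by a reduction of uncertainty relative to the optimal distribution.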

In our current study, the situation we have in mind is that of a decision-maker that has to make a sequence of decisions under varying environmental conditions. We model this as a stochastic process that behaves like a Markov chain and introduce, starting from a Markov chain, the thermodynamic tools we use to describe it: energy, partition function, free energy, work, heat, and dissipated work. The behaviour of the decision-maker then corresponds to a decision vector

collected over

n potentially different environments. In this most general decision-making scenario, where the environment is changing over time, the optimal distribution

p is changing as well. Thus, we can regard the decision-making process as a sequence of transition matrices

, where each

corresponds to a particular environment with optimal response

p. We could imagine, for example, a gradient descent learner that would converge to

p for any given environment, presuming we allow for sufficient gradient update steps. Otherwise, the gradient learner, or any other optimization-based decision-making agent (e.g., following a Metropolis–Hastings optimization scheme), would lag behind the environmental changes and the environment would outpace the learner. In this general decision-making scenario, we can then study the relation between the optimal behaviour and the non-optimal one by fluctuation theorems [1,2,3] like Jarzynski’s equality [4,5,6] and Crooks’ fluctuation theorem [7,8]. While we focus on these two fluctuation theorems in our current study, similar arguments may be suitable to transfer other fluctuation theorems that have been considered in the thermodynamic literature (see, for example, [1]) to a decision-making scenario.

Although the strong requirements in Lemma 2 were used in [8] to derive Jarzynski’s equality through (15) and were assumed in the only approach we know for it in decision-making [32], weaker assumptions that do not involve the time reversal of

are sufficient (cf. Theorem 1). The same properties have been used to derive Jarzynski’s equality following a different method in [4].

In a decision scenario, the energy becomes a loss function that a decision-maker is trying to minimize. If this loss function changes over time, we can conceptually distinguish changes in loss that are induced externally by changes in the environment (e.g., given data) from changes in loss due to internal adaptation when a learning system changes its parameter settings. The externally induced changes in loss correspond to the physical concept of work and drive the adaptation process. Hence, we can consider the decision-theoretic equivalent of physical work as a driving signal: the (negative) surprise experienced by the decision-maker, which accumulates the (negative) surprise that he/she experiences at each step (quantified by the difference in energy/utility evaluated at the decision-maker’s state when the environment changes) [32,41]. With this in mind, we can use Jarzynski’s equality to obtain a bound on the decision-maker’s expected surprise. In particular, by applying Jensen’s inequality to Jarzynski’s equality, one obtains

(Note that this is a version of the second law of thermodynamics [2].) Hence, (22) provides a bound on the expected surprise. While a similar bound has been previously pointed out for decision-making systems [32], here, we re-derive it under a novel energy protocol and with weaker assumptions regarding time reversibility.
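Both Jarzynski’s equality and the resulting bound can be checked exactly on a small chain by enumerating all trajectories. The sketch below assumes that work is the sum of energy changes at fixed state, that Jarzynski’s equality reads E[exp(-beta * W)] = exp(-beta * dF), and that (22) is the bound E[W] >= dF. The Metropolis-type matrices are merely a convenient way to obtain transition matrices with the prescribed stationary distributions. The energies and function names are illustrative.

```python
import itertools
import math

BETA = 1.0


def boltzmann(energy):
    weights = [math.exp(-BETA * e) for e in energy]
    z = sum(weights)
    return [w / z for w in weights]


def free_energy(energy):
    return -math.log(sum(math.exp(-BETA * e) for e in energy)) / BETA


def metropolis(energy):
    """Stochastic matrix that has boltzmann(energy) as stationary distribution."""
    k = len(energy)
    m = [[0.0] * k for _ in range(k)]
    for x in range(k):
        for y in range(k):
            if x != y:
                m[x][y] = min(1.0, math.exp(-BETA * (energy[y] - energy[x]))) / k
        m[x][x] = 1.0 - sum(m[x])
    return m


def work(path, energies):
    """Assumed work: sum over n < N of E_{n+1}(x_n) - E_n(x_n)."""
    return sum(energies[n + 1][x] - energies[n][x] for n, x in enumerate(path[:-1]))


def averages(energies):
    """Exact E[exp(-BETA * W)] and E[W] obtained by summing over all paths."""
    p0 = boltzmann(energies[0])
    mats = [metropolis(e) for e in energies[1:]]
    exp_avg, work_avg = 0.0, 0.0
    for path in itertools.product(range(len(p0)), repeat=len(energies)):
        prob = p0[path[0]]
        for n, m in enumerate(mats):
            prob *= m[path[n]][path[n + 1]]
        w = work(path, energies)
        exp_avg += prob * math.exp(-BETA * w)
        work_avg += prob * w
    return exp_avg, work_avg


# The initial energy differs from the first driving energy on purpose.
energies = [[1.0, 0.0, 0.0], [0.0, 1.0, 2.0], [0.5, 0.0, 1.5], [2.0, 0.3, 0.0]]
delta_f = free_energy(energies[-1]) - free_energy(energies[0])

exp_avg, work_avg = averages(energies)
jarzynski_gap = abs(exp_avg - math.exp(-BETA * delta_f))
second_law = work_avg >= delta_f
print(jarzynski_gap, second_law)
```

In this example, the equality holds up to rounding even though the initial distribution is not stationary for the first transition matrix, and the expected work is bounded from below by the free energy difference.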

Even though the assumption that

must be the stationary distribution of

seems to restrict the applicability of Crooks’ fluctuation theorem in our decision-theoretic setup when compared to the usual thermodynamics one (see Corollary 2 and Proposition 3), it is actually not an issue from an experimental point of view when the Markov chains correspond to thermodynamic processes. This is the case because of the way one is able to sample from a Boltzmann distribution given a thermodynamic system. One of the assumptions in Corollary 2 is that

should follow such a distribution for

. For this to be fulfilled, one needs to wait until the system relaxes to such a state. Because of that, one can think of any trajectory as having an additional point that was also sampled from the Boltzmann distribution for

. Thus, the assumption that

is the equilibrium distribution of

is always fulfilled, and the experimental range of validity of Crooks’ fluctuation theorem in our setup remains equal to the one in nonequilibrium thermodynamics [8,15]. In particular, the new constraint is fulfilled in previous experimental setups supporting the theorem (see, for example, [9] or [11]).

Hysteresis is a well-known effect that takes place in some physical systems and refers to the difference in the system’s behaviour when interacting with a series of environments compared to its response when facing the same conditions in reversed order [2]. The same idea also applies to decision-making systems. In fact, hysteresis has been reported both in simulations of decision-making systems [32] and in biological decision-makers recorded experimentally [41,42]. Given that it refers to the difference between decisions when the order in which the environments are presented is reversed, (15) and (19) constitute quantitative measures of hysteresis. In particular, Reference [41] used this measure successfully to quantify hysteresis in human sensorimotor adaptation, where human learners had to solve a simple motor coordination task in a dynamic environment with changing visuomotor mappings. While a simple Markov model proved adequate to model sensorimotor adaptation, it should be noted that more complex learning scenarios involving long-term memory and abstraction would not be captured by such a simple model.

Detailed balance is required neither for Jarzynski’s equality (Theorem 1) nor for the more general form of Crooks’ fluctuation theorem we presented in Theorem 2. It is, however, required in order to choose to be the time reversal of , which leads to Crooks’ fluctuation theorem in Corollary 2.

While the definition of detailed balance (1) we adopted here is standard in the Markov chain literature, there is some ambiguity regarding its use in thermodynamics, where it has, at least, two more meanings. It is used both for the weaker condition that the Boltzmann distribution

is a stationary distribution of

for

[4] and as a synonym of microscopic reversibility [8,15]. Although we have shown that microscopic reversibility and detailed balance are indeed equivalent under some conditions (see Lemma 2), we followed the definition of microscopic reversibility in [15], which is not the only one in the literature (see [35,43,44]).

Note that what is called a stationary distribution in the literature on Markov chains is referred to as a nonequilibrium steady state in thermodynamics [45]. In order for it to be an equilibrium state, it needs to fulfil detailed balance (1) with respect to the transition matrix in question. Note also that detailed balance is not fulfilled in several applications of nonequilibrium thermodynamics throughout physics [46] and biology [47].

Notice, in the case that we have a continuous-time Markov chain, work becomes an integral, where the integrand for

at

and the one for

at

differ, aside from the sign, in a single point. Thus, work is odd under time reversal and the assumption that

can be dropped in both Theorem 2 and Corollary 2. However, the tools required to show Crooks’ fluctuation theorem or Jarzynski’s equality are technically more involved in the continuous-time case, as one can see in [36].

In case the state space is continuous, the results can be derived in a similar fashion. In this scenario, the role of the transition matrices is played by the densities of the Markov kernels (see, for example, [48]). These densities allow us to write conditions such as detailed balance analogously to the discrete case. In the case of Jarzynski’s equality for a Markov chain on a continuous state space

, one can see that the result follows like the one with a discrete state space. To convince ourselves, the only thing to take into account is the substitution of the sum in the expected value by the integral and that of the probability distribution by the density of the Markov kernel. Then, following the proof of Theorem 1, we can define a stochastic process

whose density Markov kernels are defined through the stationary distributions and density Markov kernels of

, in analogy to how we defined them in Theorem 1. The rest follows in exactly the same fashion. Crooks’ fluctuation theorem requires a longer explanation but, essentially, follows from the same considerations.