Residual and Past Discrete Tsallis and Renyi Extropy with an Application to Softmax Function

Abstract

1. Introduction

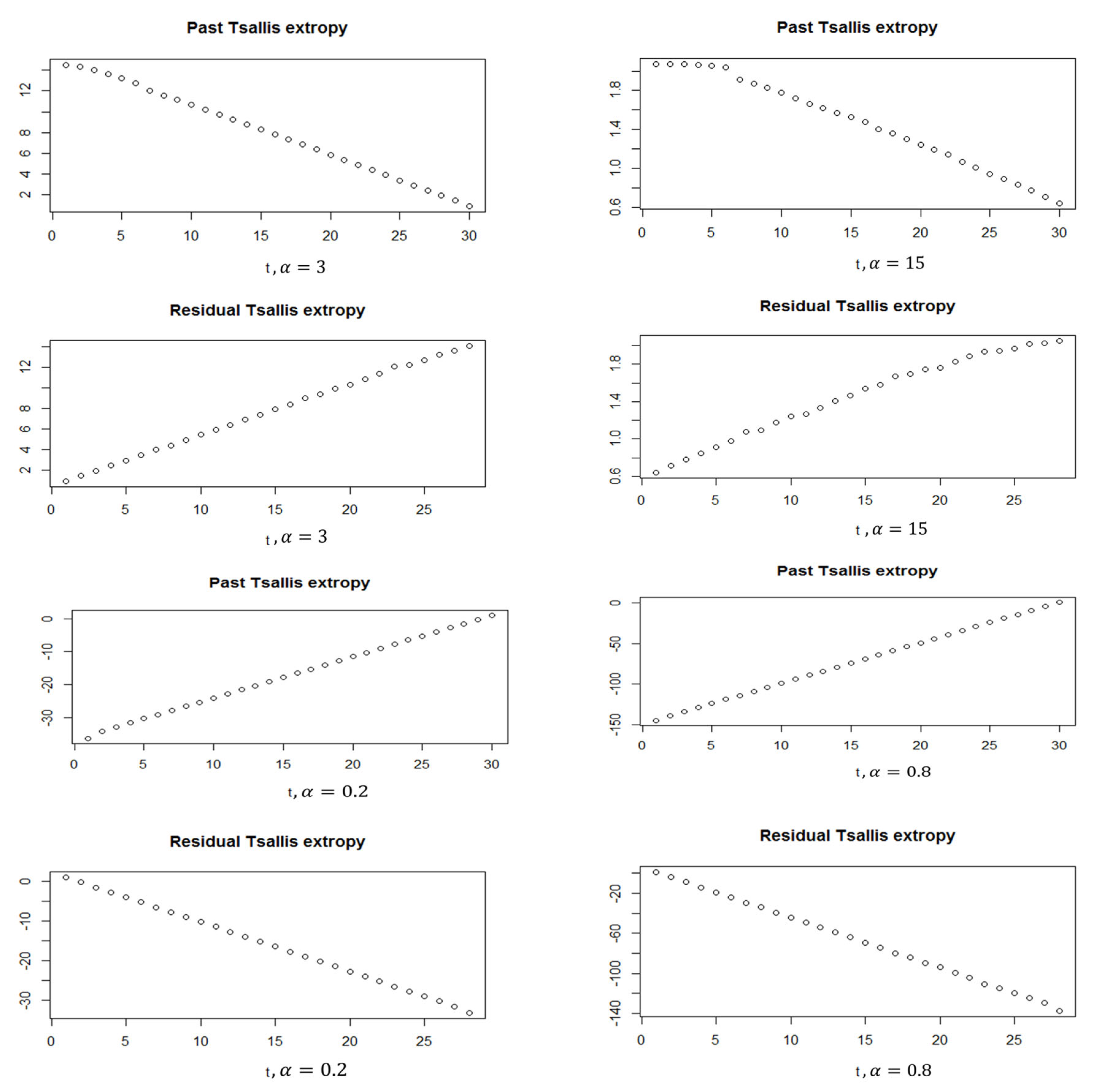

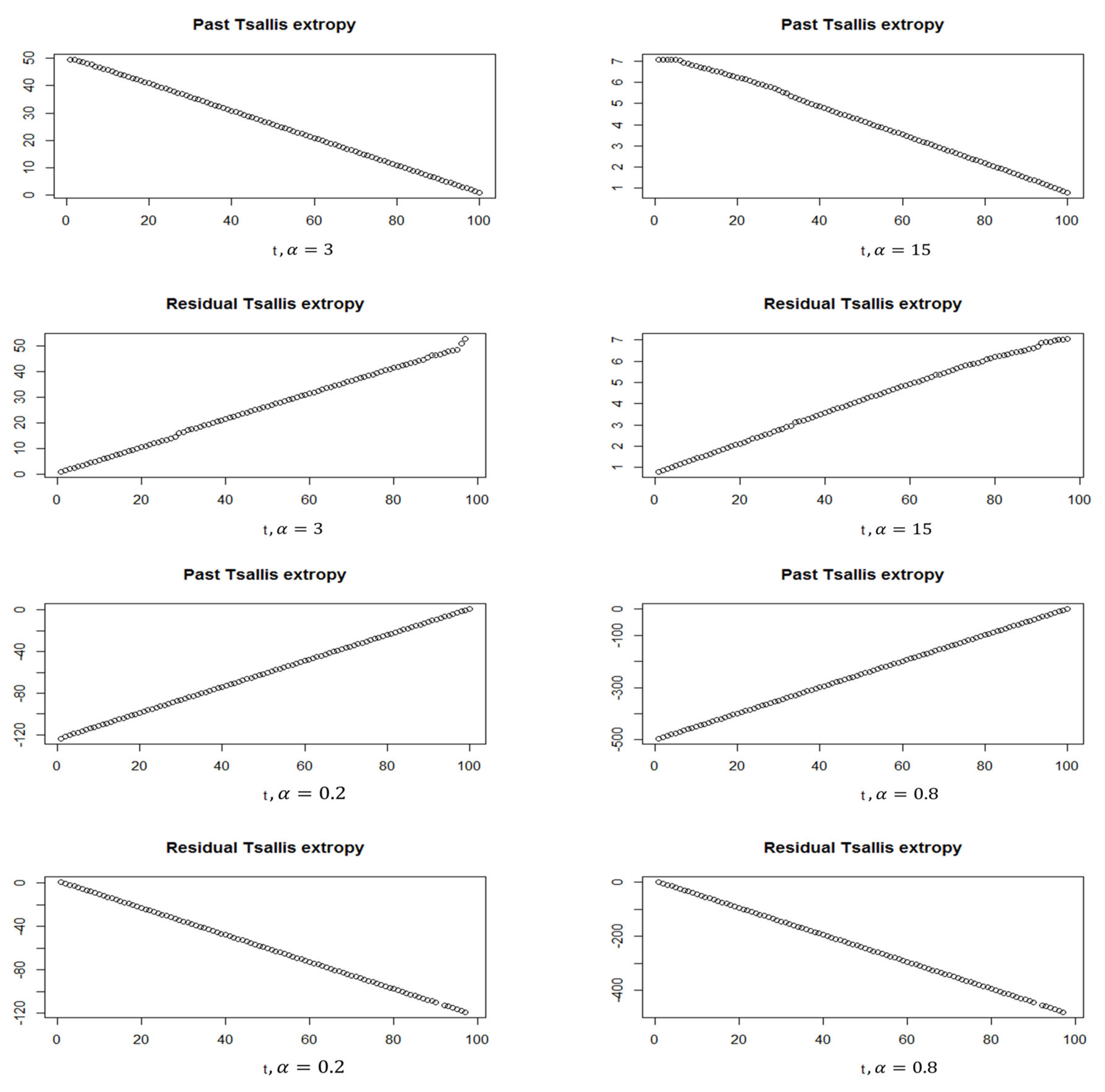

2. The Suggested Models

1. The past Tsallis extropy is positive (negative) if ().

2. The residual Tsallis extropy is positive (negative) if ().

- The past Tsallis extropy given in Equation (10) can be rewritten as follows: Moreover, the term is greater than the other two terms, and . This completes the proof.

- The residual Tsallis extropy given in Equation (9) can be rewritten as follows: The proof then follows similarly.
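To experiment numerically with statements like these, the sketch below assumes that the discrete Tsallis extropy of a pmf P = (p_1, ..., p_N) is J_q(P) = ((N - 1) - Σ(1 - p_i)^q) / (q - 1) for q > 0, q ≠ 1, with the classical extropy -Σ(1 - p_i) ln(1 - p_i) as the q → 1 limit. It takes the past (residual) version at x_k to be J_q applied to the conditional pmf of X given X ≤ x_k (X ≥ x_k). These definitions and all function names are assumptions for illustration, not a transcription of Equations (9) and (10), which are not reproduced here. Under this assumed form the value is nonnegative for every q > 0, so the sketch cannot by itself reproduce the sign condition stated above.

```python
import math
from typing import List, Sequence


def tsallis_extropy(p: Sequence[float], q: float) -> float:
    """Discrete Tsallis extropy, ASSUMING the form
    J_q(P) = ((N - 1) - sum_i (1 - p_i)**q) / (q - 1) for q > 0, q != 1.
    At q == 1 the limit, the classical extropy -sum_i (1 - p_i) ln(1 - p_i), is returned.
    """
    if q <= 0:
        raise ValueError("q must be positive")
    n = len(p)
    # Clamp to guard against tiny negative values from floating-point rounding.
    comp = [max(0.0, 1.0 - pi) for pi in p]
    if q == 1.0:
        # Terms with 1 - p_i == 0 contribute 0 (0 * ln 0 is taken as 0).
        return -sum(c * math.log(c) for c in comp if c > 0)
    return ((n - 1) - sum(c ** q for c in comp)) / (q - 1)


def _conditional(weights: Sequence[float]) -> List[float]:
    """Renormalise a block of probabilities into a conditional pmf."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("conditioning event has zero probability")
    return [w / total for w in weights]


def past_tsallis_extropy(p: Sequence[float], k: int, q: float) -> float:
    """Hypothetical past version: J_q of X given X <= x_k (k is 1-based)."""
    if not 1 <= k <= len(p):
        raise ValueError("k must satisfy 1 <= k <= N")
    return tsallis_extropy(_conditional(p[:k]), q)


def residual_tsallis_extropy(p: Sequence[float], k: int, q: float) -> float:
    """Hypothetical residual version: J_q of X given X >= x_k (k is 1-based)."""
    if not 1 <= k <= len(p):
        raise ValueError("k must satisfy 1 <= k <= N")
    return tsallis_extropy(_conditional(p[k - 1:]), q)


if __name__ == "__main__":
    probs = [0.1, 0.2, 0.3, 0.4]  # illustrative pmf on x_1 < x_2 < x_3 < x_4
    for q in (0.5, 2.0):
        for k in range(1, len(probs) + 1):
            past = past_tsallis_extropy(probs, k, q)
            resid = residual_tsallis_extropy(probs, k, q)
            print(f"q={q}  k={k}  past={past:.4f}  residual={resid:.4f}")
```

Running the module prints the past and residual values for each k at q = 0.5 and q = 2; the pmf [0.1, 0.2, 0.3, 0.4] is purely illustrative.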

1. For , we obtain

2. For , we obtain

Residual and Past Discrete Renyi Extropy
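A minimal sketch, assuming the discrete Renyi extropy of a pmf P = (p_1, ..., p_N) has the form J_q(P) = ((N - 1)/(1 - q)) [ln Σ(1 - p_i)^q - ln(N - 1)] for q > 0, q ≠ 1. This form tends to the classical extropy -Σ(1 - p_i) ln(1 - p_i) as q → 1 and, for N = 2, coincides with the Renyi entropy. As in the Tsallis case, the past and residual versions are taken, as an assumption, to be this quantity evaluated on the conditional pmf of X given X ≤ x_k or X ≥ x_k; the function names are hypothetical and the code does not reproduce the paper's own equations.

```python
import math
from typing import List, Sequence


def renyi_extropy(p: Sequence[float], q: float) -> float:
    """Discrete Renyi extropy, ASSUMING the form
    J_q(P) = (N - 1) * (ln(sum_i (1 - p_i)**q) - ln(N - 1)) / (1 - q), q > 0, q != 1.
    At q == 1 the classical extropy -sum_i (1 - p_i) ln(1 - p_i) is returned.
    """
    if q <= 0:
        raise ValueError("q must be positive")
    n = len(p)
    if n < 2:
        return 0.0  # a one-point distribution carries no uncertainty
    # Clamp to guard against tiny negative values from floating-point rounding.
    comp = [max(0.0, 1.0 - pi) for pi in p]
    if q == 1.0:
        return -sum(c * math.log(c) for c in comp if c > 0)
    # Positive because the comp values sum to N - 1 >= 1 for a proper pmf.
    s = sum(c ** q for c in comp)
    return (n - 1) * (math.log(s) - math.log(n - 1)) / (1 - q)


def _conditional(weights: Sequence[float]) -> List[float]:
    """Renormalise a block of probabilities into a conditional pmf."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("conditioning event has zero probability")
    return [w / total for w in weights]


def past_renyi_extropy(p: Sequence[float], k: int, q: float) -> float:
    """Hypothetical past version: J_q of X given X <= x_k (k is 1-based)."""
    if not 1 <= k <= len(p):
        raise ValueError("k must satisfy 1 <= k <= N")
    return renyi_extropy(_conditional(p[:k]), q)


def residual_renyi_extropy(p: Sequence[float], k: int, q: float) -> float:
    """Hypothetical residual version: J_q of X given X >= x_k (k is 1-based)."""
    if not 1 <= k <= len(p):
        raise ValueError("k must satisfy 1 <= k <= N")
    return renyi_extropy(_conditional(p[k - 1:]), q)


if __name__ == "__main__":
    probs = [0.1, 0.2, 0.3, 0.4]  # illustrative pmf on x_1 < x_2 < x_3 < x_4
    for q in (0.5, 2.0):
        for k in range(1, len(probs) + 1):
            past = past_renyi_extropy(probs, k, q)
            resid = residual_renyi_extropy(probs, k, q)
            print(f"q={q}  k={k}  past={past:.4f}  residual={resid:.4f}")
```

The N = 2 case gives a quick sanity check: for P = (0.3, 0.7) and q = 2 the assumed formula returns -ln(0.3^2 + 0.7^2) = -ln 0.58, the Renyi entropy of order 2.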

3. Applications

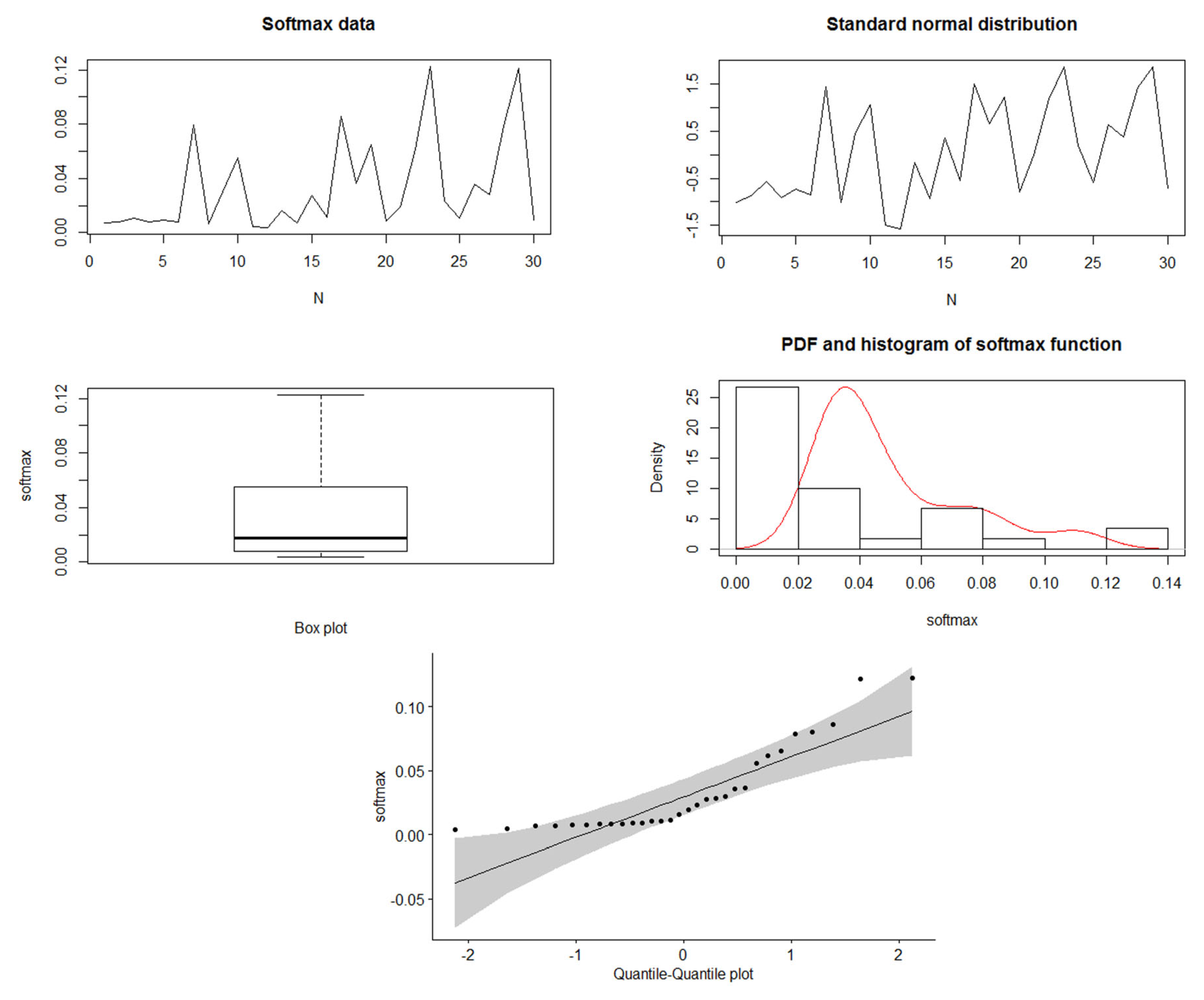

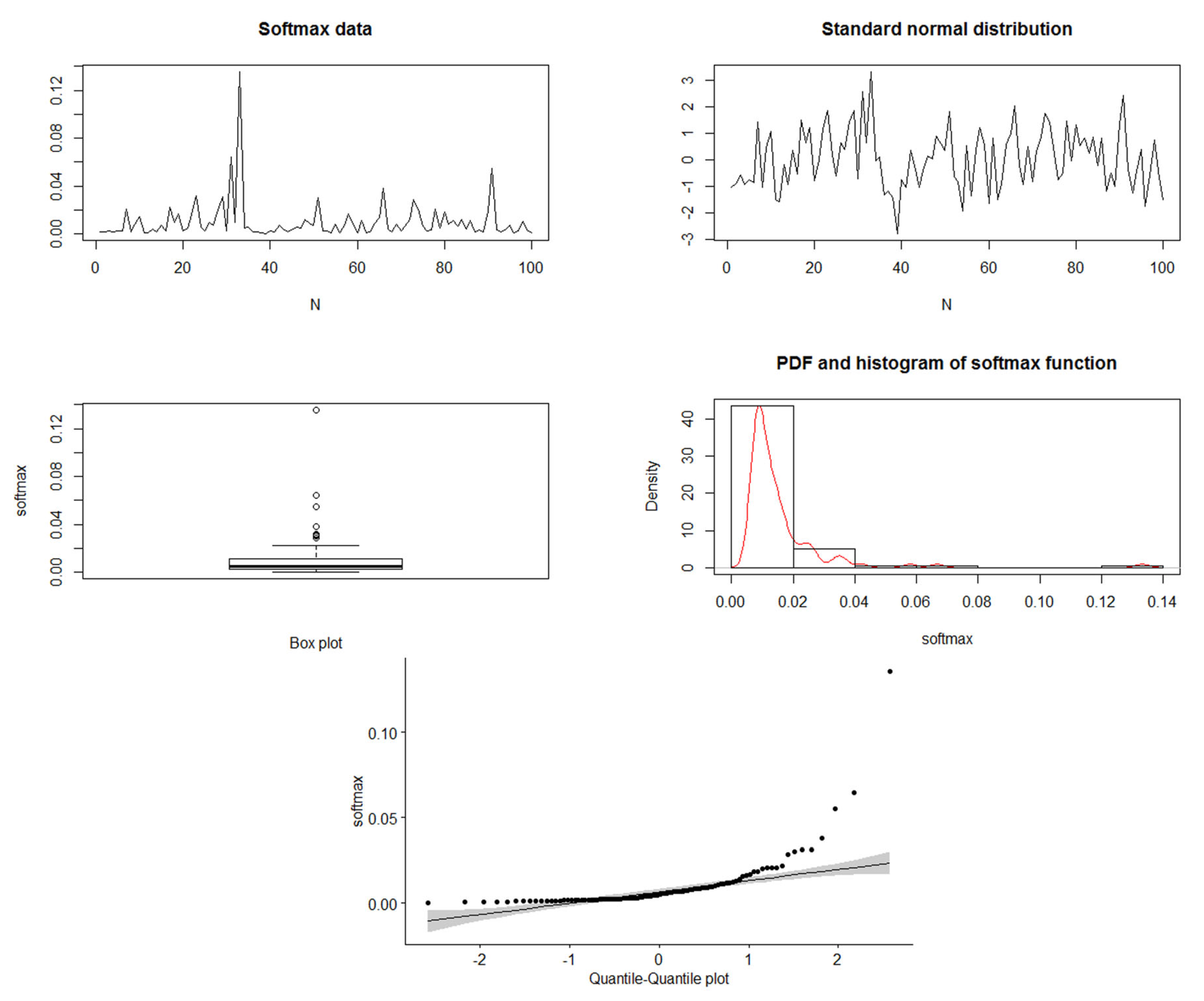

3.1. Softmax Function

3.1.1. Standard Normal Distribution

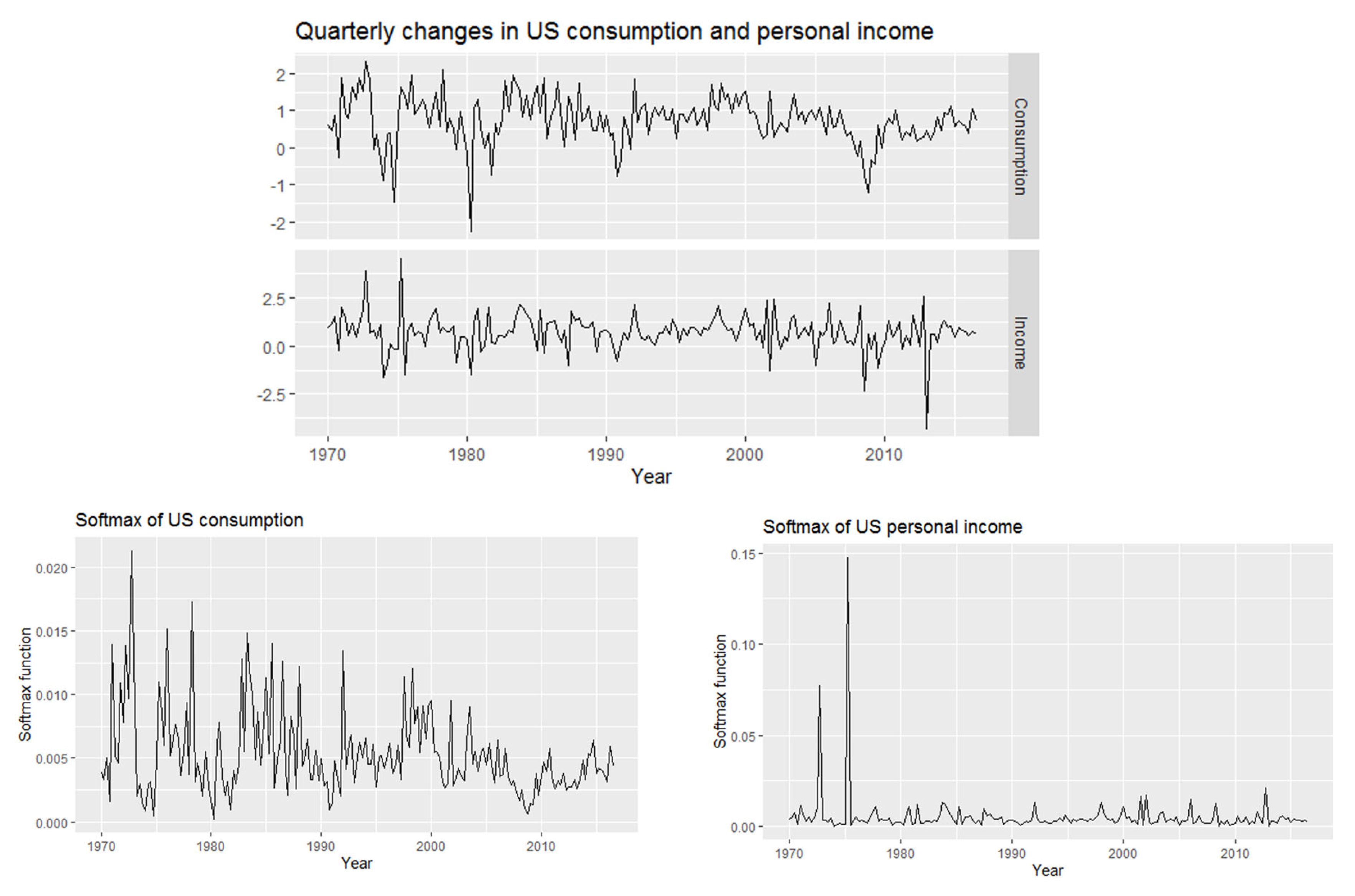

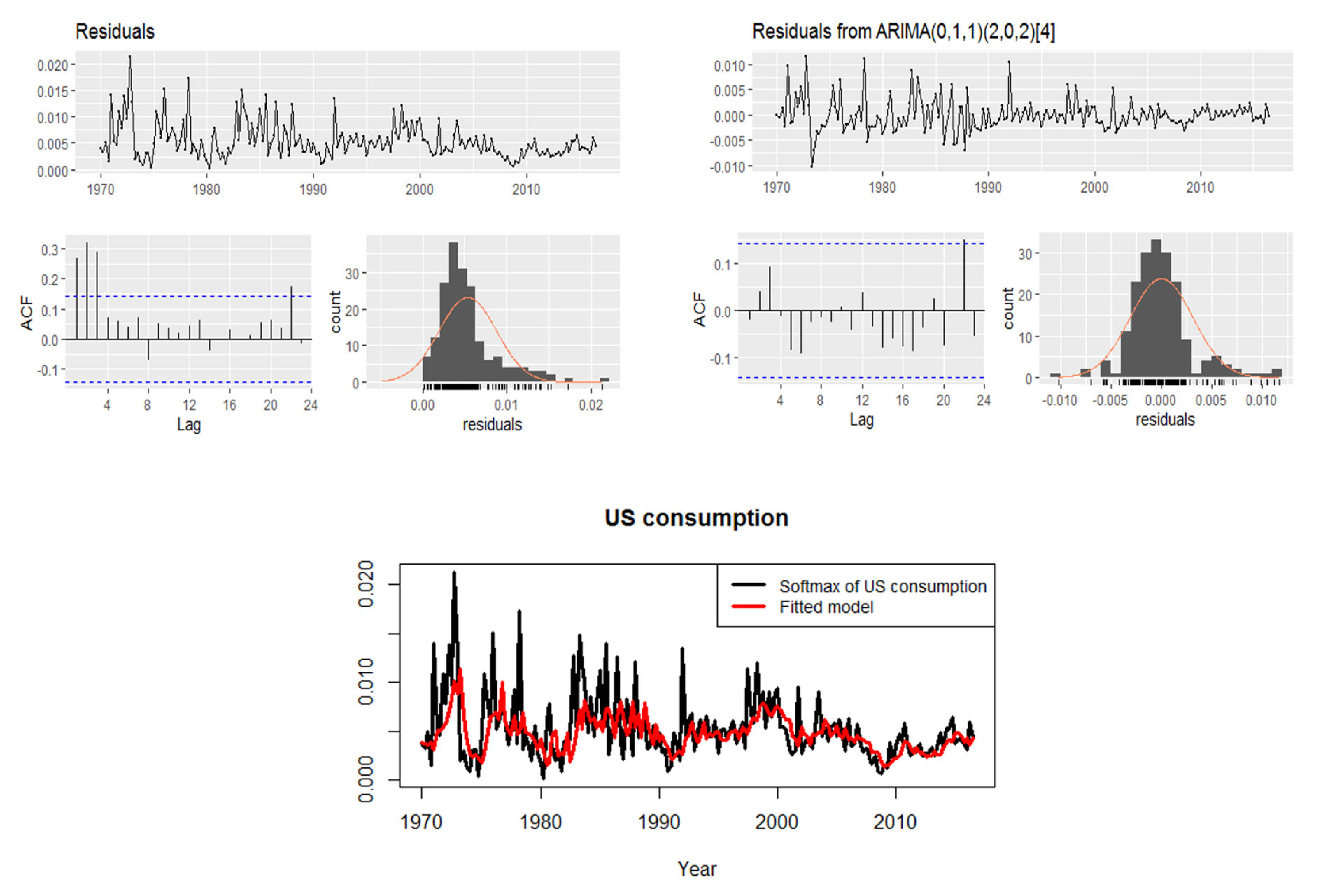

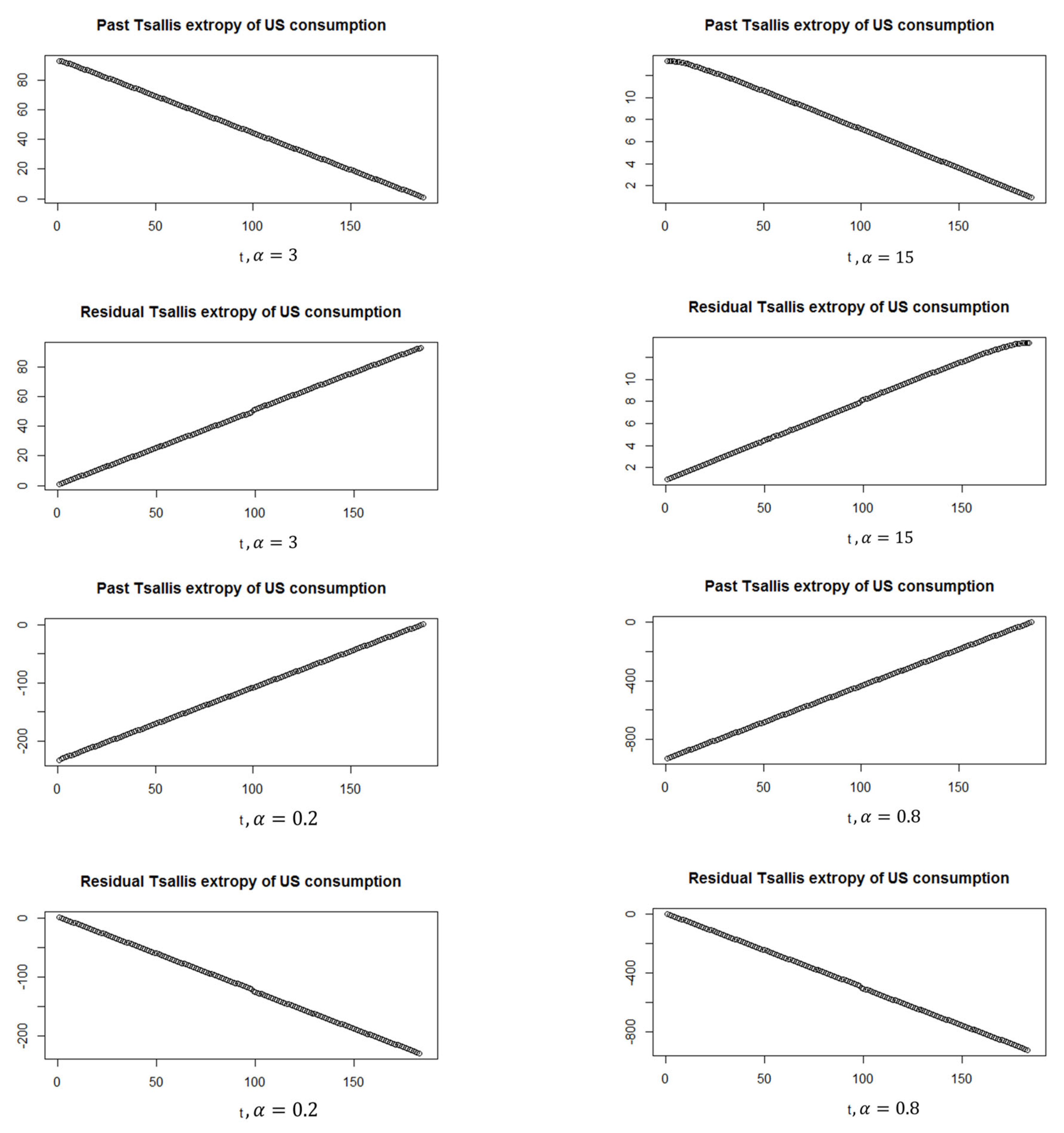
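A sketch of the kind of experiment this subsection's setting suggests: draw scores from the standard normal distribution, map them to probabilities with a numerically stable softmax at several temperatures, and evaluate the extropy of the result. The Tsallis extropy again uses the assumed form ((N - 1) - Σ(1 - p_i)^q)/(q - 1), with the classical extropy at q = 1; the sample size (10), seed, temperatures, and q = 2 are arbitrary choices for illustration, not the paper's settings.

```python
import math
import random
from typing import List, Sequence


def softmax(z: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax: exp(z_i / T) / sum_j exp(z_j / T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    m = max(z)  # subtracting the max avoids overflow in exp
    exps = [math.exp((zi - m) / temperature) for zi in z]
    total = sum(exps)
    return [e / total for e in exps]


def tsallis_extropy(p: Sequence[float], q: float) -> float:
    """ASSUMED form ((N - 1) - sum (1 - p_i)**q) / (q - 1); classical extropy at q == 1."""
    if q <= 0:
        raise ValueError("q must be positive")
    comp = [max(0.0, 1.0 - pi) for pi in p]
    if q == 1.0:
        return -sum(c * math.log(c) for c in comp if c > 0)
    return ((len(p) - 1) - sum(c ** q for c in comp)) / (q - 1)


if __name__ == "__main__":
    rng = random.Random(2022)  # arbitrary seed, for reproducibility only
    scores = [rng.gauss(0.0, 1.0) for _ in range(10)]  # standard normal scores
    for t in (0.1, 0.5, 1.0, 2.0, 10.0):
        probs = softmax(scores, t)
        j2 = tsallis_extropy(probs, 2.0)
        j1 = tsallis_extropy(probs, 1.0)
        print(f"T={t:5.1f}  J_2={j2:.4f}  J_1={j1:.4f}")
```

Low temperatures push the softmax output toward a one-hot vector (extropy near 0), while high temperatures push it toward the uniform pmf, whose extropy is (N - 1) ln(N/(N - 1)). For q = 2 the assumed form simplifies to 1 - Σp_i^2, so J_2 coincides with the Tsallis entropy of order 2.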

3.1.2. Real Data: U.S. Consumption and Personal Income Quarterly Changes

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jawa, T.M.; Fatima, N.; Sayed-Ahmed, N.; Aldallal, R.; Mohamed, M.S. Residual and Past Discrete Tsallis and Renyi Extropy with an Application to Softmax Function. Entropy 2022, 24, 1732. https://doi.org/10.3390/e24121732
