Abstract

In this paper, we generalize the notion of Shannon’s entropy power to the Rényi-entropy setting. With this, we propose generalizations of the De Bruijn identity, the isoperimetric inequality, and the Stam inequality. This framework not only allows for finding new estimation inequalities, but also provides a convenient technical framework for the derivation of a one-parameter family of Rényi-entropy-power-based quantum-mechanical uncertainty relations. To illustrate the usefulness of the Rényi entropy power obtained, we show how the information probability distribution associated with a quantum state can be reconstructed in a process that is akin to quantum-state tomography. We illustrate the inner workings of this with the so-called “cat states”, which are of fundamental interest and practical use in schemes such as quantum metrology. Salient issues, including the extension of the notion of entropy power to Tsallis entropy and ensuing implications in estimation theory, are also briefly discussed.

1. Introduction

The notion of entropy is undoubtedly one of the most important concepts in modern science. Very few other concepts can compete with it with respect to the number of attempts to clarify its theoretical and philosophical meaning [1]. Originally, the notion of entropy stemmed from thermodynamics, where it was developed to quantify the inefficiency of steam engines. It then transmuted into a description of the amount of disorder or complexity in physical systems. Though many such attempts were initially closely connected with the statistical interpretation of the phenomenon of heat, in the course of time they expanded their scope far beyond their original incentives. Along those lines, several approaches have been developed to quantify and qualify the entropy paradigm. These have been formulated largely independently and with different applications and goals in mind. For instance, in statistical physics, entropy counts the number of distinct microstates compatible with a given macrostate [2]; in mathematical statistics, it corresponds to the inference functional for an updating procedure [3]; and in information theory, it determines a limit on the shortest attainable encoding scheme [2,4].

Particularly distinct among these are the information-theoretic entropies (ITEs). This is not only because they distinguish themselves through firm operational prescriptions in terms of coding theorems and communication protocols [5,6,7,8,9], but also because they offer an intuitive measure of disorder phrased in terms of missing information about a system. Apart from innate issues in communication theory, ITEs have also proved to be indispensable tools in other branches of science. Typical examples are provided by chaotic dynamical systems and multifractals (see, e.g., [10] and citations therein). Fully developed turbulence, earthquake analysis, and generalized dimensions of strange attractors provide further examples [11]. An especially important arena for ITEs in the past two decades has been quantum mechanics (QM), with applications ranging from quantum estimation and coding theory to quantum entanglement. The catalyst has been an infusion of new ideas from (quantum) information theory [12,13,14,15], functional analysis [16,17], condensed matter theory [18,19], and cosmology [20,21]. On the experimental front, the use of ITEs has been stimulated not only by new high-precision instrumentation [22,23] but also by, e.g., recent advances in stochastic thermodynamics [24,25] or observed violations of Heisenberg’s error-disturbance uncertainty relations [26,27,28,29,30].

In his seminal 1948 paper, Shannon laid down the foundations of modern information theory [5]. He was also instrumental in pointing out that, in contrast with discrete signals or messages, where information is quantified by (Shannon’s) entropy, the case of continuous variables is less satisfactory. The continuous version of Shannon’s entropy (SE), the so-called differential entropy, may take negative values [5,31], and so it does not have the same status as its discrete-variable counterpart. To solve a number of information-theoretic problems related to continuous cases, Shannon shifted the emphasis from the differential entropy to yet another object, the entropy power (EP). The EP describes the variance of a would-be Gaussian random variable with the same differential entropy as the random variable under investigation. EP was used by Shannon [5,6] to bound the capacity of non-Gaussian additive noise channels. Since then, the EP has proved to be essential in a number of applications ranging from interference channels to secrecy capacity [32,33,34,35,36]. It has also led to new advances in information parametric statistics [37,38] and network information theory [39]. Apart from its significant role in information theory, the EP has found wide use in pure mathematics, namely in the theory of inequalities [39] and in mathematical statistics and estimation theory [40].

Recent developments in information theory [41], quantum theory [42,43], and complex dynamical systems in particular [10,44,45] have brought about the need for a further extension of the concept of ITE beyond Shannon’s conventional type. Consequently, numerous generalizations have proliferated in the literature, ranging from additive entropies [31,46] through a rich class of non-additive entropies [47,48,49,50,51,52] to more exotic types of entropies [53]. Particularly prominent among such generalizations are the ITEs of Rényi and Tsallis, which both belong to a broader class of so-called Uffink entropic functionals [54,55]. Both the Rényi entropy (RE) and the Tsallis entropy (TE) represent one-parameter families of deformations of Shannon’s entropy. An important point related to the RE is that it is not just a theoretical construct; it has a firm operational meaning in terms of various coding theorems [8,9]. Consequently, REs, along with their associated Rényi entropy powers (REPs), are, in principle, experimentally accessible [8,56,57]. That is indeed the case in specific quantum protocols [58,59,60]. In addition, REPs of various orders are often used as convenient measures of entanglement: e.g., the REP of order 2 represents the tangle $\tau = C^2$ (with $C$ being the concurrence) [61], is related to both the fidelity F and the robustness R of a pure state [62], quantifies the Bures distance to the closest separable pure state [63], etc. Even though our main focus here will be on REs and REPs, since they are more pertinent in information theory, we will include some discussion related to Tsallis entropy powers at the end of this paper.

The aim of this paper is twofold. First, we wish to appropriately extend the notion of SE-based EP to the RE setting. In contrast to our earlier works on the topic [13,64], we now do this by framing REP in the context of RE-based estimation theory. This is done by judiciously generalizing such key notions as the De Bruijn identity, the isoperimetric inequality (and the ensuing Cramér–Rao inequality), and the Stam inequality. In contrast to other similar works on the subject [65,66,67,68], our approach is distinct in three key respects: (a) we consistently use the notion of escort distribution and escort score vector in setting up the generalized De Bruijn identity and Fisher information matrix, (b) we generalize Stam’s uncertainty principle, and (c) the Rényi EP is related to the variance of the reference Gaussian distribution rather than of the Rényi maximizing distribution. As a byproduct, we derive within such a generalized estimation theory framework the Rényi-EP-based quantum uncertainty relations (REPURs) of Schrödinger–Robertson type. The REPURs obtained coincide with our earlier result [13], which was obtained in a very different context by means of the Beckner–Babenko theorem. This in turn serves as a consistency check of the proposed generalized estimation theory. Second, we identify interesting new playgrounds for the Rényi EPs obtained. In particular, we ask the following question: assuming one is able in specific quantum protocols to measure Rényi EPs of various orders, how does this constrain the underlying quantum state distribution? To answer this question, we invoke the concept of the information distribution associated with a given quantum state. The latter contains a complete “information scan” of the underlying state distribution. We set up a reconstruction method based on Hausdorff’s moment problem [69] to show explicitly how the information probability distribution associated with a given quantum state can be numerically reconstructed from EPs.
This is a process that is analogous to quantum-state tomography. However, whereas tomography extracts the full density matrix from an ensemble using many measurements on a tomographically complete basis, the EP reconstruction method extracts the probability density on a given basis. This is an alternative approach that may be advantageous, for example, in quantum metrology schemes, where only knowledge of the local probability density rather than the full quantum state is needed [70].

The paper is structured as follows. In Section 2, we introduce the concept of Rényi’s EP. With quantum metrology applications in mind, we discuss this in the framework of estimation theory. First, we duly generalize the notion of Fisher information (FI) by using a Rényi entropy version of De Bruijn’s identity. In this connection, we emphasize the role of the so-called escort distribution, which appears naturally in the definition of higher-order score functions. Second, we prove the RE-based isoperimetric inequality and the ensuing Cramér–Rao inequality, and find how knowledge of the Fisher information matrix restricts possible values of Rényi’s EP. Finally, we further illuminate the role of Rényi’s EP by deriving (through the Stam inequality) Rényi’s EP-based quantum uncertainty relations for conjugate observables. The second part of the paper is devoted to the use of Rényi EPs to extract the quantum state from incomplete data, which is of particular interest in various quantum metrology protocols. To this end, we introduce the concept of the information distribution in Section 3, and, in Section 4, we show how cumulants of the information distribution can be obtained from knowledge of the EPs. With the cumulants at hand, one can reconstruct the underlying information distribution in a process that we call an information scan. Details of how one could explicitly realize such an information scan for quantum state PDFs are provided in Section 5, where we employ generalized versions of the Gram–Charlier A and Edgeworth expansions. In Section 6, we illustrate the inner workings of the information scan using the example of a so-called cat state. This state is of interest in applications of quantum physics such as quantum-enhanced metrology, which is concerned with the optimal extraction of information from measurements subject to quantum mechanical effects.
The cat state we consider is a superposition of the vacuum state and a coherent state of the electromagnetic field; two cases are studied, with different probabilistic weightings of the superposition corresponding to balanced and unbalanced cat states. Section 7 is dedicated to EPs based on Tsallis entropy. In particular, we show that Rényi and Tsallis EPs coincide with each other. This, in turn, allows us to phrase various estimation theory inequalities in terms of TE. In Section 8, we end with conclusions. For the reader’s convenience, we relegate some technical issues concerning the generalized De Bruijn identity and the associated isoperimetric and Stam inequalities to three appendices.

2. Rényi Entropy Based Estimation Theory and Rényi Entropy Powers

In this section, we introduce the concept of Rényi’s EP. With quantum metrology applications in mind, we discuss this in the framework of estimation theory. This will not only allow us to find new estimation inequalities, such as the Rényi-entropy-based De Bruijn identity, isoperimetric inequality, or Stam inequality, but it will also provide a convenient technical and conceptual frame for deriving a one-parameter family of Rényi-entropy-power-based quantum-mechanical uncertainty relations.

2.1. Fisher Information—Shannon’s Entropy Approach

First, we recall that the Fisher information matrix $\mathbb{J}(X)$ of a random vector $X$ in $\mathbb{R}^D$ with the PDF $p(\mathbf{x})$ is defined as [38]

$$\mathbb{J}(X) = \mathrm{cov}\big(V(X)\big), \tag{1}$$

where the covariance matrix is associated with the random zero-mean vector, the so-called score vector,

$$V(X) = \nabla \log p(X). \tag{2}$$

The trace of $\mathbb{J}(X)$, i.e.,

$$J(X) = \mathrm{Tr}\,\mathbb{J}(X), \tag{3}$$

is known as the Fisher information. Both the FI and the FI matrix can be conveniently related to Shannon’s differential entropy via De Bruijn’s identity [66,67].

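De Bruijn’s identity: Let $X$ be a random vector in $\mathbb{R}^D$ with the PDF $p(\mathbf{x})$ and let $Z$ be a Gaussian random vector (noise vector) with zero mean and unit covariance matrix, independent of $X$. Then,

$$\frac{d}{d\epsilon}\,H\big(X + \sqrt{\epsilon}\,Z\big)\Big|_{\epsilon=0} = \frac{1}{2}\,J(X), \tag{4}$$

where

$$H(X) = -\int_{\mathbb{R}^D} p(\mathbf{x})\,\log p(\mathbf{x})\,d\mathbf{x} \tag{5}$$

is Shannon’s differential entropy (measured in nats). In the case when the independent additive noise $Z$ is non-Gaussian with zero mean and covariance matrix $\Sigma$, the following generalization holds [67]:

$$\frac{d}{d\epsilon}\,H\big(X + \sqrt{\epsilon}\,Z\big)\Big|_{\epsilon=0} = \frac{1}{2}\,\mathrm{Tr}\big(\Sigma\,\mathbb{J}(X)\big). \tag{6}$$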

The key point about De Bruijn’s identity is that it provides a very useful intuitive interpretation of FI, namely, FI quantifies the sensitivity of transmitted (Shannon type) information to an arbitrary independent additive noise. An important aspect that should be stressed in this context is that FI as a quantifier of sensitivity depends only on the covariance of the noise vector, and thus it is independent of the shape of the noise distribution. This is because De Bruijn’s identity remains unchanged for both Gaussian and non-Gaussian additive noise with the same covariance matrix.
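De Bruijn’s identity lends itself to a quick numerical sanity check. The sketch below is our own illustration, not a procedure from this paper: it assumes a hypothetical one-dimensional test PDF (a symmetric two-component Gaussian mixture), for which adding Gaussian noise $\sqrt{\epsilon}\,Z$ simply raises each component variance by $\epsilon$. It compares a central finite difference of $H(X+\sqrt{\epsilon}\,Z)$ at $\epsilon = 0$ with $\frac{1}{2}J(X)$, with all integrals approximated by Riemann sums on a uniform grid; the grid, the test PDF and the helper names are assumptions.

```python
import numpy as np

# Hypothetical test PDF (D = 1): symmetric two-component Gaussian mixture.
MU, S2 = 1.5, 0.25                      # component means +/-MU, component variance S2
x = np.linspace(-12.0, 12.0, 200_001)   # uniform grid wide enough for the tails
dx = x[1] - x[0]


def mixture_pdf(v):
    """Mixture PDF with component variance v.

    Adding independent noise sqrt(eps) * Z, Z ~ N(0, 1), maps v -> v + eps.
    """
    norm = 1.0 / np.sqrt(2.0 * np.pi * v)
    return 0.5 * norm * (np.exp(-(x - MU) ** 2 / (2.0 * v))
                         + np.exp(-(x + MU) ** 2 / (2.0 * v)))


def shannon_entropy(p):
    """Differential entropy in nats, H = -int p log p dx (Riemann sum)."""
    return -np.sum(p * np.log(p)) * dx


def fisher_information(p):
    """Fisher information J = int (p')^2 / p dx = E[(d/dx log p)^2]."""
    dp = np.gradient(p, dx)
    return np.sum(dp ** 2 / p) * dx


eps = 1e-4
# Central difference in eps around eps = 0 (the mixture family is smooth in eps).
dH = (shannon_entropy(mixture_pdf(S2 + eps))
      - shannon_entropy(mixture_pdf(S2 - eps))) / (2.0 * eps)
J = fisher_information(mixture_pdf(S2))
print(f"dH/d(eps) = {dH:.6f}, J/2 = {0.5 * J:.6f}")
```

The two printed numbers should agree to several decimal places; any other smooth, strictly positive PDF whose Gaussian convolution is known in closed form can be substituted in the same way.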

2.2. Fisher Information—Rényi’s Entropy Approach

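We now extend the notion of the FI matrix to the Rényi entropy setting. A natural way to do this is via an extension of De Bruijn’s identity to Rényi entropies. In particular, the following statement holds:

Generalized De Bruijn’s identity: Let $X$ be a random vector in $\mathbb{R}^D$ with the PDF $p(\mathbf{x})$ and let $Z$ be an independent (generally non-Gaussian) noise vector with zero mean and covariance matrix $\Sigma$. Then, for any $q > 0$,

$$\frac{d}{d\epsilon}\,I_q\big(X + \sqrt{\epsilon}\,Z\big)\Big|_{\epsilon=0} = \frac{1}{2}\,\mathrm{Tr}\big(\Sigma\,\mathbb{J}_q(X)\big), \tag{7}$$

where

$$I_q(X) = \frac{1}{1-q}\,\log\int_{\mathbb{R}^D} p^q(\mathbf{x})\,d\mathbf{x} \tag{8}$$

is Rényi’s differential entropy (measured in nats) with $q \neq 1$. The ensuing FI matrix of order $q$ has the explicit form

$$\mathbb{J}_q(X) = \frac{1}{q}\,\mathrm{cov}_q\big(V_q(X)\big), \tag{9}$$

with the score vector

$$V_q(X) = \nabla \log \rho_q(X). \tag{10}$$

Here, $\rho_q(\mathbf{x}) = p^q(\mathbf{x}) \big/ \int_{\mathbb{R}^D} p^q(\mathbf{y})\,d\mathbf{y}$ is the so-called escort distribution [71], and $\mathrm{cov}_q$ denotes the covariance matrix computed with respect to $\rho_q$. Since $\nabla\log\rho_q = q\,\nabla\log p$, Equation (9) can equivalently be written as $\mathbb{J}_q(X) = q\,\mathrm{cov}_q\big(\nabla\log p(X)\big)$, which reduces to (1) for $q \to 1$. Proofs of both the conventional (i.e., Shannon-entropy-based) and the generalized (i.e., Rényi-entropy-based) De Bruijn identity are provided in Appendix A. There we also discuss some further useful generalizations of De Bruijn’s identity. Finally, as in the Shannon case, we define the FI of order $q$, denoted as $J_q(X)$, as

$$J_q(X) = \mathrm{Tr}\,\mathbb{J}_q(X). \tag{11}$$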

2.3. Rényi’s Entropy Power and Generalized Isoperimetric Inequality

As in conventional estimation theory, one can expect a close connection between the FI matrix $\mathbb{J}_q(X)$ and the corresponding Rényi entropy power $N_q(X)$. In Shannon’s information theory, such a connection is phrased in terms of the isoperimetric inequality [67]. Here, we prove that a similar relationship also holds in Rényi’s information theory.

Let us start by introducing the concept of Rényi’s entropy power. It is defined as the solution of the equation [13,64]

$$I_q(X) = I_q\Big(\sqrt{N_q(X)}\;Z_G\Big), \tag{12}$$

where $Z_G$ represents a Gaussian random vector with zero mean and unit covariance matrix. Thus, $N_q(X)$ denotes the variance of a would-be Gaussian distribution that has the same Rényi information content as the random vector $X$ described by the PDF $p(\mathbf{x})$. Expression (12) was studied in [13,64,72], where it was shown that the only class of solutions of (12) is

$$N_q(X) = \frac{1}{2\pi}\,q^{-q'/q}\,\exp\!\Big(\frac{2}{D}\,I_q(X)\Big), \tag{13}$$

with $1/q + 1/q' = 1$, i.e., $q'$ is the Hölder conjugate of $q$. In addition, when $q \to 1$, one has $N_q(X) \to N(X)$, where $N(X) = \frac{1}{2\pi e}\exp\big(\frac{2}{D}H(X)\big)$ is the conventional Shannon entropy power [5]. In this latter case, one can use the asymptotic equipartition property [55,73] to relate $N(X)$ to the “typical size” of a state set, which in the present context is the effective support set size for a random vector. This, in turn, is equivalent to Einstein’s entropic principle [74]. In passing, it should be noted that the form of the Rényi EP expressed in (13) is not the universally accepted version. In a number of works, it is defined merely as an exponential of the RE; see, e.g., [75,76]. Our motivation for the form (13) is twofold: first, it has a clear interpretation in terms of variances of Gaussian distributions and, second, it leads to simpler formulas; cf., e.g., Equation (22).
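To make the definition concrete, the sketch below evaluates the REP in the form $N_q = q^{-q'/q}\exp(2I_q/D)/(2\pi)$, with $q'/q = 1/(q-1)$, for one-dimensional PDFs sampled on a grid ($D = 1$, entropies in nats). It is a minimal illustration under our own assumptions (grid, test PDFs and helper names are hypothetical): for a Gaussian of variance $\sigma^2$ it should return $\sigma^2$ for every $q$, while for a non-Gaussian two-component mixture with unequal weights it varies with $q$ and approaches the Shannon EP $N = e^{2H}/(2\pi e)$ as $q \to 1$.

```python
import numpy as np

x = np.linspace(-40.0, 40.0, 400_001)
dx = x[1] - x[0]


def renyi_entropy_power(p, q, D=1):
    """N_q = q^(-q'/q) exp(2 I_q / D) / (2 pi), with q'/q = 1/(q - 1) and I_q in nats."""
    I_q = np.log(np.sum(p ** q) * dx) / (1.0 - q)
    return q ** (-1.0 / (q - 1.0)) * np.exp(2.0 * I_q / D) / (2.0 * np.pi)


def shannon_entropy_power(p, D=1):
    """Conventional EP, N = exp(2 H / D) / (2 pi e), with H in nats."""
    nz = p > 0  # skip grid points where the PDF underflows to zero
    H = -np.sum(p[nz] * np.log(p[nz])) * dx
    return np.exp(2.0 * H / D) / (2.0 * np.pi * np.e)


# Gaussian with variance sigma2: N_q should equal sigma2 for every q.
sigma2 = 2.5
gauss = np.exp(-x ** 2 / (2.0 * sigma2)) / np.sqrt(2.0 * np.pi * sigma2)
gauss_powers = {q: renyi_entropy_power(gauss, q) for q in (0.3, 0.7, 1.5, 4.0)}

# Hypothetical unequal-weight mixture: N_q varies with q and tends to N as q -> 1.
mix = (0.7 * np.exp(-(x - 3.0) ** 2 / 2.0)
       + 0.3 * np.exp(-(x + 3.0) ** 2 / 2.0)) / np.sqrt(2.0 * np.pi)
mix_powers = {q: renyi_entropy_power(mix, q) for q in (0.5, 1.0 - 1e-5, 1.0 + 1e-5, 2.0)}
N_shannon = shannon_entropy_power(mix)
print(gauss_powers)
print(mix_powers, N_shannon)
```

Evaluating exactly at $q = 1$ would divide by zero, which is why the Shannon limit is approached from $q = 1 \pm 10^{-5}$ and compared with the separately computed Shannon EP.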

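Generalized isoperimetric inequality: Let $X$ be a random vector in $\mathbb{R}^D$ with the PDF $p(\mathbf{x})$. Then,

$$\frac{1}{D}\,J_q(X)\,N_q(X) \ge 1 \tag{14}$$

for an appropriate range of the Rényi parameter $q$ (for a Gaussian PDF, (14) is saturated for every $q$). We relegate the proof of the generalized isoperimetric inequality, including the admissible range of $q$, to Appendix B.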

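It is also worth noting that relation (14) implies another important inequality. Using the fact that the Shannon entropy is maximized (among all PDFs with identical covariance matrix $\Sigma_X$) by the Gaussian distribution, we have $H(X) \le \frac{1}{2}\log\big[(2\pi e)^D \det\Sigma_X\big]$ (see, e.g., [77]). If we further employ the fact that $I_q(X)$ is a monotonically non-increasing function of $q$ (see, e.g., [31,78]), so that $I_q(X) \le H(X)$ for $q \ge 1$, we can write

$$N_q(X) \le e\,q^{-q'/q}\,\big(\det\Sigma_X\big)^{1/D}, \qquad q \ge 1. \tag{15}$$

The isoperimetric inequality (14) then implies

$$J_q(X) \ge \frac{D}{N_q(X)} \ge \frac{D\,q^{q'/q}}{e\,\big(\det\Sigma_X\big)^{1/D}}. \tag{16}$$

We can further use the inequality

$$\big(\det\Sigma_X\big)^{1/D} \le \frac{1}{D}\,\mathrm{Tr}\,\Sigma_X \tag{17}$$

(valid for any positive semi-definite matrix $\Sigma_X$; it is the arithmetic–geometric mean inequality for its eigenvalues) to write

$$J_q(X) \ge \frac{D\,q^{q'/q}}{e\,\bar{\sigma}^2}, \tag{18}$$

where $\bar{\sigma}^2 = \mathrm{Tr}\,\Sigma_X / D$ is the average variance per component.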
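These statements can be probed numerically in one dimension. The sketch below is our own illustration; it assumes the escort-based form $J_q = q\,\mathbb{E}_{\rho_q}[(p'/p)^2]$ with $\rho_q = p^q/\int p^q$ (equivalently, $q^{-1}$ times the second moment of $\partial_x\log\rho_q$), which is the normalization under which the generalized De Bruijn identity reads $\frac{d}{d\epsilon}I_q = \frac{1}{2}J_q$ for unit-variance Gaussian noise. It checks three things that do not depend on the admissible range of $q$ in (14): a Gaussian saturates $N_q J_q = 1$ for every $q$ tested; the generalized De Bruijn identity holds for a hypothetical Gaussian mixture; and, in the Shannon case $q = 1$, the mixture obeys both $NJ \ge 1$ and the Cramér–Rao bound $J\,\mathrm{Var}(X) \ge 1$. Grid, test PDFs and helper names are assumptions.

```python
import numpy as np

x = np.linspace(-15.0, 15.0, 300_001)
dx = x[1] - x[0]


def integrate(f):
    """Riemann sum on the uniform grid (accurate for smooth, fast-decaying integrands)."""
    return np.sum(f) * dx


def gaussian(mean, var):
    return np.exp(-(x - mean) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)


def mixture(var):
    """Hypothetical bimodal test PDF; Gaussian noise of variance eps maps var -> var + eps."""
    return 0.5 * (gaussian(-2.0, var) + gaussian(2.0, var))


def renyi_entropy(p, q):
    """Rényi differential entropy in nats (q != 1)."""
    return np.log(integrate(p ** q)) / (1.0 - q)


def entropy_power(p, q):
    """N_q for D = 1; q = 1 gives the Shannon EP exp(2H) / (2 pi e)."""
    if q == 1.0:
        return np.exp(-2.0 * integrate(p * np.log(p))) / (2.0 * np.pi * np.e)
    return q ** (-1.0 / (q - 1.0)) * np.exp(2.0 * renyi_entropy(p, q)) / (2.0 * np.pi)


def fisher_q(p, q):
    """Assumed order-q FI: J_q = q E_rho[(p'/p)^2], escort rho_q = p^q / int p^q."""
    rho = p ** q / integrate(p ** q)
    score = np.gradient(p, dx) / p
    return q * integrate(rho * score ** 2)


# (i) A Gaussian saturates N_q J_q >= 1 for every q tested.
gauss = gaussian(0.0, 1.7)
saturation = {q: entropy_power(gauss, q) * fisher_q(gauss, q) for q in (0.5, 1.0, 2.0, 3.0)}

# (ii) Generalized De Bruijn identity: dI_q/d(eps) at eps = 0 versus J_q / 2.
S2, eps = 0.25, 1e-4
debruijn = {}
for q in (0.5, 2.0, 3.0):
    dI = (renyi_entropy(mixture(S2 + eps), q) - renyi_entropy(mixture(S2 - eps), q)) / (2.0 * eps)
    debruijn[q] = (dI, 0.5 * fisher_q(mixture(S2), q))

# (iii) Shannon case q = 1: isoperimetric N J >= 1 and Cramér-Rao J Var(X) >= 1.
mix = mixture(S2)
N1, J1 = entropy_power(mix, 1.0), fisher_q(mix, 1.0)
var = integrate(x ** 2 * mix)   # the mixture has zero mean by symmetry
print(saturation)
print(debruijn)
print(N1 * J1, J1 * var)
```

The sketch deliberately does not test (14) for non-Gaussian PDFs at $q \neq 1$, since that depends on the admissible range of $q$ established in Appendix B.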

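Relations (16)–(18) represent the $q$-generalizations of the celebrated Cramér–Rao information inequality. In the limit $q \to 1$, we recover the standard Cramér–Rao inequality that is widely used in statistical inference theory [38,79]. The final logical step needed to complete the proof of the REPURs is the so-called generalized Stam inequality. To this end, we first define the concept of conjugate random variables. We say that random vectors $X$ and $Y$ in $\mathbb{R}^D$ are conjugate if their respective PDFs $\mathcal{F}(\mathbf{x})$ and $\mathcal{G}(\mathbf{y})$ can be written as

$$\mathcal{F}(\mathbf{x}) = |\psi(\mathbf{x})|^2, \qquad \mathcal{G}(\mathbf{y}) = |\tilde{\psi}(\mathbf{y})|^2, \tag{19}$$

where the (generally complex) probability amplitudes $\psi$ and $\tilde{\psi}$ are mutual Fourier images, i.e.,

$$\tilde{\psi}(\mathbf{y}) = \int_{\mathbb{R}^D} e^{-2\pi i\,\mathbf{x}\cdot\mathbf{y}}\,\psi(\mathbf{x})\,d\mathbf{x}, \tag{20}$$

and analogously for $\psi(\mathbf{x})$ (with the opposite sign in the exponent). With this, we can state the generalized Stam inequality.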

Generalized Stam inequality (Stam’s uncertainty principle): Let $X$ and $Y$ be conjugate random vectors in $\mathbb{R}^D$. Then,

is valid for any $r$ and $s$ that are connected via the relation $1/r + 1/s = 2$. In particular, if we define $p = 2r$ and $q = 2s$, then $p$ and $q$ are Hölder conjugates. A proof of the generalized Stam inequality is provided in Appendix C.

Let us now consider Hölder conjugate indices $p$ and $q$, so that $1/p + 1/q = 1$. Combining the isoperimetric inequality (14) with the generalized Stam inequality (21), we obtain the following one-parameter class of REP-based inequalities:

$$N_{p/2}(X)\,N_{q/2}(Y) \ge \frac{1}{16\pi^2}. \tag{22}$$

By symmetry, the roles of $q$ and $p$ can be reversed. In Refs. [13,64], we presented an alternative derivation of inequalities (22) based on the Beckner–Babenko theorem. There it was also proved that the inequality saturates if and only if the distributions involved are Gaussian. The only exception to this rule is for the asymptotic values $p = 1$ and $q = \infty$ (or vice versa), where saturation happens whenever the peak of the PDF of $X$ and the tail of the PDF of $Y$ (or vice versa) are Gaussian.

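The passage to quantum mechanics is quite straightforward. First, we realize that, in QM, the Fourier conjugate wave functions are related via two reciprocal relations

$$\psi(\mathbf{x}) = \frac{1}{(2\pi\hbar)^{D/2}}\int_{\mathbb{R}^D} e^{i\,\mathbf{p}\cdot\mathbf{x}/\hbar}\,\phi(\mathbf{p})\,d\mathbf{p}, \qquad \phi(\mathbf{p}) = \frac{1}{(2\pi\hbar)^{D/2}}\int_{\mathbb{R}^D} e^{-i\,\mathbf{p}\cdot\mathbf{x}/\hbar}\,\psi(\mathbf{x})\,d\mathbf{x}. \tag{23}$$

The Plancherel (or Riesz–Fischer) equality implies that, when $\psi \in L^2(\mathbb{R}^D)$, then automatically also $\phi \in L^2(\mathbb{R}^D)$ (and vice versa). Thus, the connection between the amplitudes $\psi$ and $\tilde{\psi}$ from (19) and the amplitudes $\psi$ and $\phi$ from (23) is

$$\phi(\mathbf{p}) = \frac{1}{(2\pi\hbar)^{D/2}}\;\tilde{\psi}\Big(\frac{\mathbf{p}}{2\pi\hbar}\Big). \tag{24}$$

The factor $(2\pi\hbar)^{-D/2}$ ensures that both $\psi$ and $\phi$ are normalized to unity (in the $L^2$ sense); however, due to Equation (19), it might be easily omitted. In other words, the quantum position $\mathcal{X}$ coincides with $X$, while the quantum momentum is $\mathcal{P} = 2\pi\hbar\,Y$. Since the REP scales like a variance, the corresponding Rényi EPs change according to

$$N_q(\mathcal{X}) = N_q(X), \qquad N_q(\mathcal{P}) = (2\pi\hbar)^2\,N_q(Y), \tag{25}$$

and hence the REP-based inequalities (22) acquire in the QM setting a simple form

$$N_{p/2}(\mathcal{X})\,N_{q/2}(\mathcal{P}) \ge \frac{\hbar^2}{4}. \tag{26}$$

This represents an infinite tower of mutually distinct (generally irreducible) REPURs [13].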
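A one-dimensional numerical illustration of the REPUR $N_{p/2}(\mathcal{X})\,N_{q/2}(\mathcal{P}) \ge \hbar^2/4$ is sketched below, with $\hbar = 1$ and a hypothetical wave function $\psi(x) \propto e^{-(x-a)^2/2} + e^{-(x+a)^2/2}$, whose momentum amplitude is proportional to $\cos(pa)\,e^{-p^2/2}$ in closed form. For $a = 0$ the state is Gaussian and the bound should be saturated; for $a = 2$ (a cat-like superposition) the product should lie strictly above $1/4$. The grid and the chosen Hölder pairs are our own assumptions.

```python
import numpy as np

grid = np.linspace(-20.0, 20.0, 200_001)   # shared grid for x and p (hbar = 1)
dg = grid[1] - grid[0]


def entropy_power(density, order):
    """N_order of a 1D PDF on the grid (Rényi entropy in nats; order > 0, order != 1)."""
    renyi = np.log(np.sum(density ** order) * dg) / (1.0 - order)
    return order ** (-1.0 / (order - 1.0)) * np.exp(2.0 * renyi) / (2.0 * np.pi)


def densities(a):
    """Position and momentum PDFs of psi(x) ~ exp(-(x-a)^2/2) + exp(-(x+a)^2/2).

    With phi(p) = (2 pi)^(-1/2) int exp(-i p x) psi(x) dx, the momentum amplitude
    is proportional to cos(p a) exp(-p^2/2).
    """
    psi = np.exp(-(grid - a) ** 2 / 2.0) + np.exp(-(grid + a) ** 2 / 2.0)
    phi = np.cos(grid * a) * np.exp(-grid ** 2 / 2.0)
    rho_x = psi ** 2 / (np.sum(psi ** 2) * dg)
    rho_p = phi ** 2 / (np.sum(phi ** 2) * dg)
    return rho_x, rho_p


pairs = [(1.5, 3.0), (3.0, 1.5), (1.2, 6.0)]   # Hölder conjugates: 1/p + 1/q = 1
products = {}
for a in (0.0, 2.0):
    rho_x, rho_p = densities(a)
    for p, q in pairs:
        products[(a, p)] = entropy_power(rho_x, p / 2) * entropy_power(rho_p, q / 2)
print(products)   # a = 0 saturates hbar^2 / 4 = 0.25; a = 2 lies strictly above it
```

Using the closed-form momentum amplitude avoids FFT normalization conventions; for wave functions without an analytic Fourier transform, a discrete transform on a sufficiently fine grid would be needed instead.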

At this point, some comments are in order. First, historically, the most popular quantifier of quantum uncertainty has been the variance, because it is conceptually simple and relatively easy to extract from experimental data. The variance measures uncertainty in terms of the fluctuation (or spread) around the mean value, which, while useful for many distributions, does not provide a sensible measure of uncertainty in a number of important situations, including multimodal [12,13,64] and heavy-tailed distributions [13,14,64]. To deal with this, a multitude of alternative (non-variance-based) measures of uncertainty in QM have emerged. Among these, a particularly prominent role is played by information entropies such as the Shannon entropy [63], the Rényi entropy [63,64], the Tsallis entropy [80], the associated differential entropies, and their quantum-information generalizations [13,15,64]. REPURs (26) fit into this framework of entropic QM URs. In connection with (26), one might observe that the conventional variance-based URs, the so-called Robertson–Schrödinger URs [81,82], and the URs based on Shannon’s differential entropy (e.g., the Hirschman or Białynicki–Birula URs [15,83]) naturally appear as special cases in this hierarchy. Second, ITEs typically enter quantum information theory in three distinct ways: (a) as a measure of the quantum information content (e.g., how many qubits are needed to encode the message without loss of information), (b) as a measure of the classical information content (e.g., the amount of information in bits that can be recovered from the quantum system), and (c) to quantify the entanglement of pure and mixed bipartite quantum states. Logarithms in base 2 are used because, in quantum information, one quantifies entropy in bits and qubits (rather than nats). This in turn also modifies Rényi’s EP as

$$N_q(X) = \frac{1}{2\pi}\,q^{-q'/q}\,2^{\frac{2}{D}I_q(X)}, \qquad \frac{1}{q} + \frac{1}{q'} = 1, \tag{27}$$

where $I_q(X)$ is now measured in bits.

In the following, we will employ this QM practice.

3. Information Distribution

To put more flesh on the concept of Rényi’s EP, we devote the rest of this paper to the development of the methodology and application of Rényi EPs in extracting quantum states from incomplete data. The technique of quantum tomography is widely used for this purpose and involves making many different measurements on an ensemble of identical copies of a quantum state with a tomographically complete measurement basis [84,85]. This process is very measurement intensive, scaling exponentially with the number of particles, and so methods have been developed to approximate it with fewer measurements [86].

However, the method of Rényi EPs provides an efficient alternative approach. Instead of reconstructing the full quantum state, this process extracts the PDF of the quantum state in a given basis. For a broad class of quantum metrology problems, local rather than global approaches are preferred [70] and, for these, the local PDF of the state at each sensor is needed rather than the full density matrix. With this in mind, we first start with the notion of the information distribution.

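Let $p(\mathbf{x})$ be the PDF for the random variable $X$. We define the information random variable $i_X(X)$ so that $i_X(\mathbf{x}) = -\log_2 p(\mathbf{x})$. In other words, $i_X(\mathbf{x})$ represents the information in $\mathbf{x}$ with respect to $X$. In this connection, it is expedient to introduce the cumulative distribution function for $i_X(X)$ as

$$F(y) = \int_{\{\mathbf{x}\,:\,-\log_2 p(\mathbf{x}) \le y\}} p(\mathbf{x})\,d\mathbf{x}. \tag{28}$$

The function $F(y)$ thus represents the probability that the random variable $i_X(X)$ is less than or equal to $y$. We have denoted the corresponding probability measure as $dF(y)$. Taking the Laplace transform of both sides of (28), we get

$$\int_{\mathbb{R}} e^{-sy}\,dF(y) = \int_{\mathbb{R}^D} p(\mathbf{x})\,e^{s\log_2 p(\mathbf{x})}\,d\mathbf{x} = \big\langle e^{-s\,i_X}\big\rangle_F, \tag{29}$$

where $\langle\cdots\rangle_F$ denotes the mean value with respect to $F$. Assuming that $F$ is smooth, the PDF associated with $i_X(X)$, the so-called information PDF, is

$$g(y) = \frac{dF(y)}{dy}. \tag{30}$$

Setting $s = (q-1)\ln 2$, we have

$$\int_{\mathbb{R}^D} p^q(\mathbf{x})\,d\mathbf{x} = \big\langle 2^{(1-q)\,i_X}\big\rangle_g. \tag{31}$$

The mean here is taken with respect to the PDF $g$. Equation (31) can also be written explicitly as

$$\int_{\mathbb{R}^D} p^q(\mathbf{x})\,d\mathbf{x} = \int_{\mathbb{R}} g(y)\,2^{(1-q)y}\,dy. \tag{32}$$

Note that, when $p^q$ is integrable for $q$ in a neighborhood of $q = 1$, then (32) ensures that the moment-generating function for the PDF $g$ exists. Thus, in particular, the moment-generating function exists when $p$ represents Lévy $\alpha$-stable distributions, including the heavy-tailed stable distributions (i.e., PDFs with the Lévy stability parameter $\alpha < 2$). The same holds for the PDFs of conjugate random vectors due to the Beckner–Babenko theorem [13,87,88].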
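The identity $\int p^q\,d\mathbf{x} = \langle 2^{(1-q)\,i_X}\rangle_g$ can be checked without ever constructing $g$ explicitly: it suffices to sample $X$ from $p$ and average $2^{(1-q)\,i_X}$ with $i_X = -\log_2 p(X)$. The sketch below does this for a hypothetical one-dimensional Gaussian mixture and compares the Monte Carlo estimate with a grid integral of $p^q$; the values of $q$ are chosen above $1/2$ so that the Monte Carlo estimator has finite variance for this PDF. Sample size, seed and test PDF are our own assumptions.

```python
import numpy as np

MU, S2 = 2.0, 0.5   # hypothetical mixture: components N(+/-MU, S2), equal weights


def pdf(x):
    norm = 1.0 / np.sqrt(2.0 * np.pi * S2)
    return 0.5 * norm * (np.exp(-(x - MU) ** 2 / (2.0 * S2))
                         + np.exp(-(x + MU) ** 2 / (2.0 * S2)))


# Monte Carlo samples of X ~ p and of the information variable i_X = -log2 p(X).
rng = np.random.default_rng(seed=1)
n = 1_000_000
centers = rng.choice([-MU, MU], size=n)
samples = rng.normal(loc=centers, scale=np.sqrt(S2))
info = -np.log2(pdf(samples))

x = np.linspace(-15.0, 15.0, 300_001)
dx = x[1] - x[0]
comparison = {}
for q in (0.75, 1.5, 2.0):
    lhs = np.sum(pdf(x) ** q) * dx                  # int p^q dx on a grid
    rhs = np.mean(2.0 ** ((1.0 - q) * info))        # <2^{(1-q) i_X}>_g from samples
    comparison[q] = (lhs, rhs)
    renyi_bits = np.log2(rhs) / (1.0 - q)           # I_q in bits from the MGF of i_X
    print(f"q = {q}: grid {lhs:.5f}, Monte Carlo {rhs:.5f}, I_q = {renyi_bits:.4f} bits")
```

The agreement is limited by Monte Carlo noise (of relative order $10^{-3}$ here), not by the identity itself.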

4. Reconstruction Theorem

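Since $\langle e^{-s\,i_X}\rangle_g$ is, up to the sign of its argument, the moment-generating function of the information random variable $i_X$, one can generate all moments of the PDF $g$ (if they exist) by taking derivatives with respect to $s$. From a conceptual standpoint, it is often more useful to work with cumulants rather than moments. Using the fact that the cumulant generating function is simply the (natural) logarithm of the moment-generating function, we see from (32) that the differential RE is a reparametrized version of the cumulant generating function of the information random variable $i_X$. In fact, from (31), we have

$$I_q(X) = \frac{1}{1-q}\,\log_2\big\langle 2^{(1-q)\,i_X}\big\rangle_g = -\frac{1}{s}\,\ln\big\langle e^{-s\,i_X}\big\rangle_g, \qquad s = (q-1)\ln 2. \tag{33}$$

To understand the meaning of REPURs, we begin with the cumulant expansion of (33), i.e.,

$$I_q(X) = \sum_{n=1}^{\infty} \frac{\kappa_n}{n!}\,\big[(1-q)\ln 2\big]^{n-1}, \tag{34}$$

where $\kappa_n$ denotes the $n$-th cumulant of the information random variable $i_X$ (in units of bits). We note that

$$\kappa_1 = \big\langle i_X\big\rangle_g = H(X), \qquad \kappa_2 = \big\langle \big(i_X - H(X)\big)^2\big\rangle_g, \tag{35}$$

i.e., they represent the Shannon entropy and the varentropy, respectively. By employing the identity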

we can rewrite (34) in the form

From (37), one can see that

where $\delta$ is the Kronecker delta, which equals one when its two indices coincide and zero otherwise. In terms of the Grünwald–Letnikov derivative formula (GLDF) [89], we can rewrite (38) as

Thus, in order to determine the first m cumulants of , we need to know all entropy powers. In practice, corresponds to a characteristic resolution scale for the entropy index which will be chosen appropriately for the task at hand, but is typically of the order . Note that the last term in (38) and (39) can be also written

with $Z_G$ being a random vector distributed according to the Gaussian distribution with unit covariance matrix.
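The step from entropy powers to cumulants can be sketched numerically as follows. This is a simplified stand-in of our own for the Grünwald–Letnikov formula, not the formula itself: we use plain central differences of the cumulant generating function $K(t) = t\,I_q$, with $I_q$ in bits and $t = (1-q)\ln 2$, so that $\kappa_1 \approx [K(h) - K(-h)]/(2h)$ and $\kappa_2 \approx [K(h) + K(-h)]/h^2$ (using $K(0) = 0$), where $h = \Delta\ln 2$ corresponds to entropy powers at $q = 1 \mp \Delta$. The “measured” entropy powers are computed here from a hypothetical Gaussian-mixture PDF, and the direct moments of $i_X$ serve as the reference; the step $\Delta = 10^{-3}$ is our assumption.

```python
import numpy as np

D = 1
x = np.linspace(-15.0, 15.0, 300_001)
dx = x[1] - x[0]
# Hypothetical test PDF: unequal-weight mixture of N(1, 1) and N(-2.5, 0.25).
p = (0.7 * np.exp(-(x - 1.0) ** 2 / 2.0)
     + 0.3 * np.exp(-(x + 2.5) ** 2 / 0.5) / 0.5) / np.sqrt(2.0 * np.pi)


def entropy_power(q):
    """'Measured' N_q; here it is simply computed from the grid PDF (nats inside)."""
    renyi_nats = np.log(np.sum(p ** q) * dx) / (1.0 - q)
    return q ** (-1.0 / (q - 1.0)) * np.exp(2.0 * renyi_nats / D) / (2.0 * np.pi)


def renyi_bits_from_power(N, q):
    """Invert N_q = q^(-1/(q-1)) 2^(2 I_q / D) / (2 pi) for I_q in bits."""
    return 0.5 * D * np.log2(2.0 * np.pi * q ** (1.0 / (q - 1.0)) * N)


def cgf(q):
    """K(t) = t I_q with t = (1 - q) ln 2: the cumulant generating function of i_X."""
    t = (1.0 - q) * np.log(2.0)
    return t * renyi_bits_from_power(entropy_power(q), q)


delta = 1e-3
h = delta * np.log(2.0)                                # step in t for q = 1 -/+ delta
K_plus, K_minus = cgf(1.0 - delta), cgf(1.0 + delta)   # K(+h), K(-h); K(0) = 0
kappa1_rep = (K_plus - K_minus) / (2.0 * h)
kappa2_rep = (K_plus + K_minus) / h ** 2

info = -np.log2(p)                               # information variable on the grid
kappa1 = np.sum(p * info) * dx                   # Shannon entropy in bits
kappa2 = np.sum(p * (info - kappa1) ** 2) * dx   # varentropy in bits^2
print(f"kappa1: {kappa1_rep:.6f} (from REPs) vs {kappa1:.6f} (direct)")
print(f"kappa2: {kappa2_rep:.6f} (from REPs) vs {kappa2:.6f} (direct)")
```

Higher cumulants follow from higher-order differences on a wider stencil of $q$ values, which is where the resolution scale of the entropy index becomes important.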

When all the cumulants exist, the problem of recovering the underlying PDF for $i_X$ is equivalent to the Stieltjes moment problem [90]. Using this connection, there are a number of ways to proceed: the PDF in question can be reconstructed, e.g., in terms of sums involving orthogonal polynomials (e.g., the Gram–Charlier A series or the Edgeworth series [91]), via the inverse Mellin transform [92], or via various maximum entropy techniques [93]. Pertaining to this, the theorem of Marcinkiewicz [94] implies that there are no PDFs whose cumulants vanish for all orders beyond some finite order $m > 2$ while $\kappa_m \neq 0$. In other words, the cumulant generating function cannot be a finite-order polynomial of degree greater than 2. The important, and indeed the only, cases allowed by Marcinkiewicz’s theorem are the Gaussian PDFs, which can have nontrivial first two cumulants and $\kappa_n = 0$ for $n > 2$. Thus, apart from the special case of Gaussian PDFs, where only $\kappa_1$ and $\kappa_2$ are needed, one needs to work with as many entropy powers (or ensuing REPURs) as possible to obtain as much information as possible about the structure of the underlying PDF. In theory, the whole infinite tower of REPURs would be required to uniquely specify a system’s information PDF. From (37) and (39), we see that knowledge of the Shannon entropy power $N_1 \equiv N$ corresponds to knowledge of $\kappa_1$, while one further entropy power in the vicinity of $q = 1$ also determines $\kappa_2$, i.e., the varentropy. Since $N_1$ is involved (via (39)) in the determination of all cumulants, it is the most important entropy power in the tower. Thus, the entropy powers of a given process have an equivalent meaning to the PDF: they describe the morphology of uncertainty of the observed phenomenon.

We should stress that the focus of the reconstruction theorem we present is on cumulants, which can be directly used for a shape estimation of $g$ but not of $p$. However, by knowing $g$, we have a complete “information scan” of $p$. Such an information scan is, however, not unique: two PDFs that are rearrangements of each other, i.e., equimeasurable PDFs, have identical $g$ and $N_q$. Even though equimeasurable PDFs cannot be distinguished via their entropy powers, they can, as a rule, be distinguished via their respective momentum-space PDFs and the associated entropy powers. Thus, the information scan has a tomographic flavor to it. From the multi-peak structure of $g$, one can determine the number and height of the stationary points of $p$. These are invariant characteristics of a given family of equimeasurable PDFs. This will be further illustrated in Section 6.

5. Information Scan of Quantum-State PDF

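With knowledge of the entropy powers, the question now is how we can reconstruct the information distribution $g(y)$. The inner workings of this will now be explicitly illustrated with the (generalized) Gram–Charlier A expansion. However, other, often more efficient, methods are also available [91]. Let $\kappa_n$ be the cumulants obtained from the entropy powers and let $f(y)$ be some reference PDF whose cumulants are $\gamma_n$. The information PDF $g(y)$ can then be written as [91]

$$g(y) = \exp\!\Big[\sum_{n=1}^{\infty} \frac{\kappa_n - \gamma_n}{n!}\Big(-\frac{d}{dy}\Big)^{n}\Big]\,f(y). \tag{41}$$

With hindsight, we choose the reference PDF to be a shifted gamma PDF, i.e.,

$$f(y) = \frac{(y-c)^{a-1}\,e^{-(y-c)/b}}{\Gamma(a)\,b^{a}}, \tag{42}$$

with $y \ge c$ (and $f(y) = 0$ otherwise). In doing so, we have implicitly assumed that the PDF $p$ is, to a first approximation, equimeasurable with a Gaussian PDF. To reach the corresponding matching, we should choose the shape $a = D/2$, the scale $b = 1/\ln 2$, and the shift $c = \frac{D}{2}\log_2(2\pi\sigma^2)$, where $\sigma^2$ is the variance of that Gaussian; with these choices, (42) is exactly the information PDF of a Gaussian with covariance matrix $\sigma^2\mathbb{1}$. Using the fact that [95]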

(where is an associated Laguerre polynomial of order k with parameter ) and given that , and for we can write (41) as

If needed, one can use the relationship between moments and cumulants (Faà di Bruno’s formula [94]) to recast the expansion (44) in more familiar language. For the Gram–Charlier A expansion, various formal convergence criteria exist (see, e.g., [91]). In particular, the expansion for nearly Gaussian equimeasurable PDFs converges quite rapidly, and the series can be truncated fairly quickly. Since in this case one needs fewer $\kappa_n$’s to determine the information PDF $g$, only EPs in a small neighborhood of the index 1 are needed. On the other hand, the further $p$ is from Gaussian (e.g., heavy-tailed PDFs), the more cumulants $\kappa_n$ are required to determine $g$, and hence a wider neighborhood of the index 1 is needed for the EPs.
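The choice of reference PDF can be checked directly in one dimension: for a Gaussian of variance $\sigma^2$, $i_X = \frac{1}{2}\log_2(2\pi\sigma^2) + x^2/(2\sigma^2\ln 2)$, so the information PDF is a shifted gamma PDF with shape $1/2$, scale $1/\ln 2$ and shift $\frac{1}{2}\log_2(2\pi\sigma^2)$. The sketch below (our own illustration; the variance, grid and evaluation points are assumptions) compares the CDF $F(y)$ of $i_X$, obtained by integrating the Gaussian over the region $\{x : -\log_2 p(x) \le y\}$, with the CDF of `scipy.stats.gamma`, and checks that the gamma mean equals the Shannon entropy in bits.

```python
import numpy as np
from scipy import stats

sigma2 = 0.8                                  # variance of the hypothetical Gaussian (D = 1)
x = np.linspace(-20.0, 20.0, 400_001)
dx = x[1] - x[0]
p = np.exp(-x ** 2 / (2.0 * sigma2)) / np.sqrt(2.0 * np.pi * sigma2)
info = -np.log2(p)                            # information variable i_X on the grid

# Shifted gamma reference: shape a = D/2, scale b = 1/ln 2, shift c = (D/2) log2(2 pi sigma^2).
a, b, c = 0.5, 1.0 / np.log(2.0), 0.5 * np.log2(2.0 * np.pi * sigma2)
reference = stats.gamma(a, loc=c, scale=b)

ys = c + np.array([0.1, 0.5, 1.0, 2.0, 4.0])
cdf_grid = np.array([np.sum(p[info <= y]) * dx for y in ys])   # F(y) = P(i_X <= y)
cdf_gamma = reference.cdf(ys)
mean_info = np.sum(p * info) * dx                               # Shannon entropy in bits
print(np.column_stack([ys, cdf_grid, cdf_gamma]))
print(f"mean of i_X: {mean_info:.6f}, gamma mean: {reference.mean():.6f}")
```

For a non-Gaussian $p$, the same grid construction of $F(y)$ yields the target information distribution against which a truncated Gram–Charlier or Edgeworth reconstruction can be compared.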

6. Example—Reconstruction Theorem and (Un)Balanced Cat State

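We now demonstrate an example of the reconstruction in the context of a quantum system. Specifically, we consider cat states, which are often studied in the foundations of quantum physics as well as in various applications, including solid state physics [96] and quantum metrology [97]. The form of the state we consider is $|\psi\rangle = \mathcal{N}\big(|0\rangle + \nu\,|\alpha\rangle\big)$, where $\mathcal{N}$ is the normalization factor, $|0\rangle$ is the vacuum state, $\nu$ is a weighting factor, and $|\alpha\rangle$ is the coherent state given by

$$|\alpha\rangle = e^{-|\alpha|^2/2}\sum_{n=0}^{\infty}\frac{\alpha^n}{\sqrt{n!}}\,|n\rangle. \tag{45}$$

For $\nu = 1$, we refer to the state as a balanced cat state (BCS) and, for $\nu \neq 1$, as an unbalanced cat state (UCS). Changing the basis of $|\psi\rangle$ to the eigenstates of the general quadrature operator

$$\hat{x}_\theta = \frac{1}{\sqrt{2}}\big(\hat{a}\,e^{-i\theta} + \hat{a}^\dagger e^{i\theta}\big), \tag{46}$$

where $\hat{a}^\dagger$ and $\hat{a}$ are the creation and annihilation operators of the electromagnetic field, we find the PDF for the general quadrature variable $x_\theta$ to be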

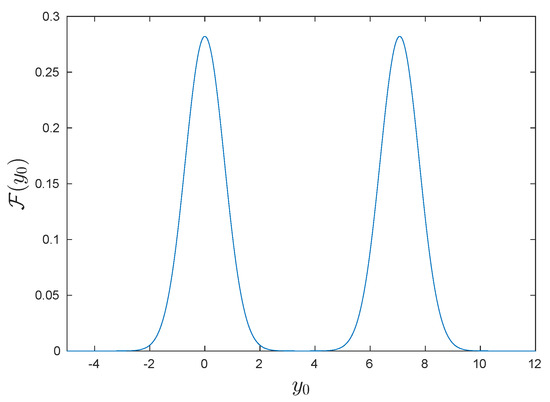

where the prefactor is the normalization constant. Setting $\nu = 1$ and $\theta = 0$ returns the PDF of the BCS for the position-like variable $x$. With this, the Rényi EPs are calculated and found to be constant across varying $p$. This is because the PDF of the BCS is in fact a piecewise rearrangement of a Gaussian PDF (yet has an overall non-Gaussian structure), as depicted in Figure 1; thus $N_p = \sigma^2$ for all $p$, where $\sigma^2$ is the variance of the “would-be Gaussian”. Taking the reference PDF to be the shifted gamma PDF of Section 5 with shape $a = 1/2$, scale $b = 1/\ln 2$, and shift $c = \frac{1}{2}\log_2(2\pi\sigma^2)$, it is evident that $\kappa_n = \gamma_n$ for all $n$, and, from the Gram–Charlier A series (41), a perfect matching in the reconstruction is achieved. Furthermore, it can be shown that the variance of (47) increases with $|\alpha|$, i.e., the variance increases as the peaks of the PDF move apart, which is in stark contrast to the Rényi EPs, which remain constant for increasing $|\alpha|$. This reveals the shortcomings of the variance as a measure of uncertainty for non-Gaussian PDFs.

Figure 1.

Probability distribution function of a balanced cat state (BCS) for the quantum mechanical state’s position-like quadrature variable $x$ ($\theta = 0$). This clearly displays an overall non-Gaussian structure; however, as this is a piecewise rearrangement of a Gaussian PDF for all $\alpha$, we have $N_p = \sigma^2$ for all $p$ and $\alpha$.

The peaks of the PDF, labelled by an index $j$, give rise to sharp singularities in the target information PDF $g$. With regard to the BCS position PDF, the distributions of the conjugate variable distinguish it from its equimeasurable Gaussian PDF, and hence the Rényi EPs of the conjugate variable also distinguish the different cases. The number of available cumulants $k$ is computationally limited, but, as it grows, information about the singularities will be recovered in the reconstruction. In the following, we show how the tail convergence and the location of a singularity of $g$ can be reconstructed using the cumulants $\kappa_n$.

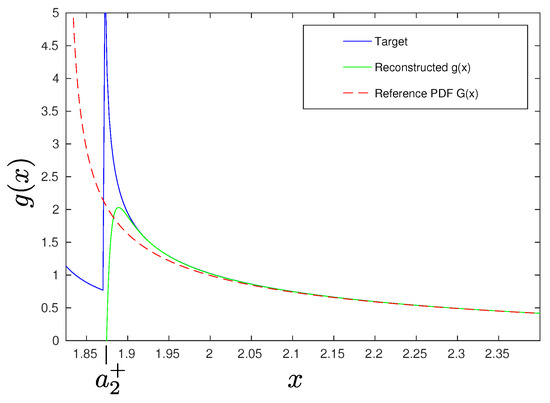

We now consider a UCS with , and take in Equation (47) to obtain the PDF of the quadrature, which is not a piecewise rearrangement of any Gaussian PDF. Consequently, the REPs vary with p and all cumulants carry information on . Here, we reconstruct the UCS information distribution by means of the Edgeworth series [91], so that

where the reference PDF is again the shifted gamma distribution. The Edgeworth series approximates the information PDF by an expansion in orders of n which, unlike the Gram–Charlier A expansion discussed above, has the advantage of bounding the approximation error. For the UCS considered here, expanding to order reveals convergence toward the analytic form of the information PDF, shown as the target line in Figure 2. This shows that, at a given characteristic resolution, control over the first five Rényi EPs can suffice for a useful information scan of a quantum state with an underlying non-Gaussian PDF. In the example shown in Figure 2, the information scan accurately predicts the tail behavior as well as the location of the singularity, which corresponds to the second (lower) peak of .
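The step from Rényi EPs to the cumulants of the information distribution, which the Gram–Charlier A and Edgeworth expansions take as input, can be sketched as follows. This minimal sketch assumes that the information distribution is the law of the information variable $-\ln F(X)$, whose cumulant generating function is $K(t) = \ln \int F^{1-t}\,dx = t\, I_{1-t}$, and the EP normalization $I_p = \frac{D}{2}\left[\ln(2\pi N_p) + \frac{\ln p}{p-1}\right]$. Cumulants are estimated by central finite differences of K at t = 0, a numerical choice made here for illustration only. For a Gaussian ($N_p$ independent of p) the sketch should recover the cumulants of a shifted gamma distribution with shape D/2 and unit scale, consistent with the choice of reference PDF above; for a hypothetical two-peak mixture, the first two cumulants are compared with direct grid evaluations.

```python
import math

import numpy as np


def info_cumulants(rep, D=1, h=5e-3):
    """kappa_1..kappa_4 of the information variable -ln F(X) from Rényi EPs.

    rep(p) returns N_p. Uses K(t) = t * I_{1-t}, with
    I_p = (D/2) [ln(2 pi N_p) + ln(p)/(p - 1)].
    """
    def K(t):
        if t == 0.0:
            return 0.0  # K(0) = ln(int F dx) = 0
        p = 1.0 - t
        i_p = 0.5 * D * (math.log(2.0 * math.pi * rep(p)) + math.log(p) / (p - 1.0))
        return t * i_p

    k = {s: K(s * h) for s in (-2, -1, 0, 1, 2)}
    k1 = (k[1] - k[-1]) / (2 * h)
    k2 = (k[1] - 2 * k[0] + k[-1]) / h**2
    k3 = (k[2] - 2 * k[1] + 2 * k[-1] - k[-2]) / (2 * h**3)
    k4 = (k[2] - 4 * k[1] + 6 * k[0] - 4 * k[-1] + k[-2]) / h**4
    return k1, k2, k3, k4


def gauss(u, m, s):
    return np.exp(-((u - m) ** 2) / (2 * s**2)) / math.sqrt(2 * math.pi * s**2)


x = np.linspace(-15.0, 15.0, 30_001)
dx = x[1] - x[0]
f = 0.7 * gauss(x, -2.0, 1.0) + 0.3 * gauss(x, 2.0, 0.5)  # hypothetical two-peak PDF


def rep_grid(p):
    """Rényi EP of the grid PDF f under the same assumed normalization."""
    i_p = math.log(np.sum(f**p) * dx) / (1.0 - p)
    return p ** (-1.0 / (p - 1.0)) * math.exp(2.0 * i_p) / (2.0 * math.pi)


k1, k2, k3, k4 = info_cumulants(rep_grid)
info = -np.log(f)  # information variable evaluated on the grid
print(k1, np.sum(f * info) * dx)  # kappa_1 is the Shannon entropy
print(k2, np.sum(f * info**2) * dx - (np.sum(f * info) * dx) ** 2)
```

With `rep = lambda p: 2.0` (a Gaussian of variance 2), the four values approach $\tfrac12\ln(4\pi) + \tfrac12$, 1/2, 1, and 3. The step h trades truncation error against round-off, and the latter grows quickly for higher cumulants.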

Figure 2.

Reconstructed information distribution of an unbalanced cat state with and . The Edgeworth expansion is used here to order , which requires control of the first five REPs. Good convergence is evident both in the tail behavior and in the location of the singularity corresponding to the second peak; corresponds to the value of x at the point of intersection with the second (lower) peak of .

7. Entropy Powers Based on Tsallis Entropy

Let us now briefly comment on the entropy powers associated with yet another important differential entropy, namely the Tsallis differential entropy, which is defined as [47]

where, as before, the PDF is associated with a random vector in .

Similarly to the RE case, the Tsallis entropy power is defined as the solution of the equation

The ensuing entropy power has not yet been studied in the literature, but it can easily be derived by observing that the differential Tsallis entropy obeys the following scaling property:

where and the q-deformed sum and logarithm are defined as [11]: $x \oplus_q y = x + y + (1-q)xy$ and $\ln_q x = (x^{1-q}-1)/(1-q)$, respectively. Relation (51) results from the following chain of identities:

We can further use the simple fact that

Here, q and satisfy with . By combining (50), (51), and (53), we get

where we have used the sum rule from the q-deformed calculus: . Equation (54) can be solved for by employing the q-exponential, $e_q^x = [1+(1-q)x]^{1/(1-q)}$, which, among other properties, satisfies the relation . With this, we have

In addition, when , one has

where is the conventional Shannon entropy power and is the Shannon entropy [5].
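The scaling property (51) can be checked numerically in one dimension. This minimal sketch takes (51) to read $S_q(aX) = S_q(X) \oplus_q \ln_q |a|^D$ (our reading of the relation, with D = 1 here) and uses the conventions of [11] for $\ln_q$ and $\oplus_q$, together with $S_q = (\int F^q\,dx - 1)/(1-q)$. The density of $aX$ is $F(y/a)/|a|$, and the two-peak test PDF is hypothetical.

```python
import numpy as np


def ln_q(x, q):
    """Tsallis q-logarithm, (x^(1-q) - 1) / (1 - q); tends to ln x as q -> 1."""
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def q_sum(x, y, q):
    """q-deformed sum x + y + (1 - q) x y."""
    return x + y + (1.0 - q) * x * y


def tsallis_entropy(f, dx, q):
    """Differential Tsallis entropy (int f^q dx - 1) / (1 - q) via a Riemann sum."""
    return (np.sum(f**q) * dx - 1.0) / (1.0 - q)


def pdf_x(x):
    """Hypothetical non-Gaussian test PDF: a two-component Gaussian mixture."""
    def g(u, m, s):
        return np.exp(-((u - m) ** 2) / (2 * s**2)) / np.sqrt(2 * np.pi * s**2)
    return 0.7 * g(x, -2.0, 1.0) + 0.3 * g(x, 2.0, 0.5)


y = np.linspace(-60.0, 60.0, 120_001)
dy = y[1] - y[0]
for q in (0.5, 2.0, 3.0):
    for a in (0.5, 3.0):
        lhs = tsallis_entropy(pdf_x(y / a) / abs(a), dy, q)  # S_q(aX), density F(y/a)/|a|
        rhs = q_sum(tsallis_entropy(pdf_x(y), dy, q), ln_q(abs(a), q), q)
        print(f"q={q}, a={a}: S_q(aX)={lhs:.10f}, rhs={rhs:.10f}")
```

The two columns should agree to grid accuracy for every q and a; the additive rule $S(aX) = S(X) + \ln|a|^D$ of the Shannon case is recovered as q approaches 1.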

In connection with the Tsallis EP, we note one interesting fact: starting from Rényi’s EP (with the RE measured in nats), we can write

Here, we have used the simple identity

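Thus, the Rényi and Tsallis EPs of the same order coincide. This is to be expected: the Tsallis entropy of order q is a strictly increasing function of the Rényi entropy of the same order, so the equations defining the two EPs have the same solution. In particular, Rényi’s EPI (22) can equivalently be written in the form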

Similarly, the generalized Stam inequality (21) and the generalized isoperimetric inequality (14) could be rephrased in terms of Tsallis EPs. Although such inequalities are interesting from a mathematical point of view, it is not yet clear how they could be used in estimation theory, since no obvious operational meaning is associated with the Tsallis entropy (e.g., there is no coding theorem for it). On the other hand, the Tsallis entropy is an important concept in the description of entanglement [98]. For instance, the Tsallis entropy of order 2 (also known as the linear entropy) directly quantifies state purity [63].
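The coincidence of the Rényi and Tsallis EPs can be checked numerically in one dimension. This minimal sketch defines the Tsallis EP directly through its defining equation, $S_q(X) = S_q(\sqrt{N} Z)$, and solves it by bisection in $\ln N$, using the closed-form Tsallis entropy of a Gaussian of variance N, $S_q = ((2\pi N)^{(1-q)/2} q^{-1/2} - 1)/(1-q)$. The Rényi EP uses the assumed normalization $N_q = (2\pi)^{-1} q^{-1/(q-1)} e^{2 I_q}$, and the two-peak test PDF is hypothetical.

```python
import math

import numpy as np


def tsallis_gaussian(N, q):
    """Closed-form Tsallis entropy of a 1D Gaussian with variance N."""
    return ((2 * math.pi * N) ** ((1 - q) / 2) / math.sqrt(q) - 1) / (1 - q)


def tsallis_ep(s_target, q, lo=1e-8, hi=1e8, iters=200):
    """Solve S_q(sqrt(N) Z) = s_target for N by bisection in log N.

    The Gaussian Tsallis entropy is strictly increasing in N for every q > 0, q != 1.
    """
    a, b = math.log(lo), math.log(hi)
    for _ in range(iters):
        m = 0.5 * (a + b)
        if tsallis_gaussian(math.exp(m), q) < s_target:
            a = m
        else:
            b = m
    return math.exp(0.5 * (a + b))


def renyi_ep(f, dx, q):
    """1D Rényi EP N_q = q^(-1/(q-1)) exp(2 I_q) / (2 pi), with I_q in nats."""
    i_q = math.log(np.sum(f**q) * dx) / (1 - q)
    return q ** (-1 / (q - 1)) * math.exp(2 * i_q) / (2 * math.pi)


x = np.linspace(-15.0, 15.0, 30_001)
dx = x[1] - x[0]
# Hypothetical mixture 0.7 N(-2, 1) + 0.3 N(2, 0.5^2)
f = (0.7 * np.exp(-((x + 2) ** 2) / 2) / math.sqrt(2 * math.pi)
     + 0.3 * np.exp(-((x - 2) ** 2) / 0.5) / math.sqrt(0.5 * math.pi))

for q in (0.5, 2.0, 3.0):
    s_q = (np.sum(f**q) * dx - 1) / (1 - q)  # Tsallis entropy of X
    print(q, tsallis_ep(s_q, q), renyi_ep(f, dx, q))
```

The two printed values should agree to within the bisection tolerance for every q, since both are determined by the same integral $\int F^q\,dx$.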

8. Conclusions

In the first part of this paper, we introduced the notion of Rényi’s EP. With quantum metrology applications in mind, we carried out our discussion in the framework of estimation theory. In doing so, we generalized the notion of Fisher information (FI) by using a Rényi entropy version of De Bruijn’s identity, and we highlighted the key role of the escort distribution in this context. With Rényi’s EP at hand, we proved the RE-based isoperimetric and Stam inequalities. We further clarified the role of Rényi’s EP by deriving (through the generalized Stam inequality) a one-parameter family of Rényi EP-based quantum mechanical uncertainty relations. The conventional variance-based URs of Robertson–Schrödinger and the Shannon differential entropy-based URs of Hirschman or Białynicki-Birula appear naturally as special cases in this hierarchy of URs. Interestingly, we found that the Tsallis entropy-based EP coincides with Rényi’s EP provided that the order is the same. This might open a new, hitherto unexplored role for the Tsallis entropy in estimation theory.

The second part of the paper was devoted to applying Rényi’s EP to the reconstruction of quantum states from incomplete data, which is of particular interest in various quantum metrology protocols. To that end, we introduced the concept of the information distribution and showed how the cumulants of the information distribution can be obtained from knowledge of EPs of various orders. With the cumulants thus obtained, one can reconstruct the underlying information distribution in a process that we call an information scan. The reconstruction procedure was implemented numerically via the Gram–Charlier A and Edgeworth expansions. As an explicit illustration of the information scan, we used non-Gaussian quantum states, namely (un)balanced cat states. In the unbalanced case, control of the first five significant Rényi EPs was found to provide enough information for a meaningful reconstruction of the information PDF, and it revealed non-trivial features of the original cat state PDF, such as the asymptotic tail behavior and the heights of the peaks.

Finally, let us stress one more point. The Rényi EP-based quantum mechanical uncertainty relations (26) represent a one-parameter class of inequalities that constrain higher-order cumulants of the state distributions of conjugate observables [13]. This raises two natural questions. Assuming one can control Rényi EPs of various orders, (i) how do such Rényi EPs constrain the underlying state distribution, and (ii) how do the ensuing REPURs restrict the state distributions of conjugate observables? The first question was addressed in this paper in terms of the information distribution and the reconstruction theorem. The second question is more intriguing and has not yet been properly addressed; work along these lines is currently in progress.

Author Contributions

Conceptualization, P.J. and J.D.; Formal analysis, M.P.; Methodology, P.J. and M.P.; Validation, M.P.; Visualization, J.D.; Writing—original draft, P.J.; Writing—review & editing, J.D. All authors have read and agreed to the published version of the manuscript.

Funding

P.J. and M.P. were supported by the Czech Science Foundation Grant No. 19-16066S. J.D. acknowledges support from DSTL and the UK EPSRC through the NQIT Quantum Technology Hub (EP/M013243/1).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| ITE | Information-theoretic entropy |
| UR | Uncertainty relation |
| RE | Rényi entropy |
| TE | Tsallis entropy |
| REPUR | Rényi entropy-power-based quantum uncertainty relation |
| QM | Quantum mechanics |
| EP | Entropy power |
| FI | Fisher information |
| PDF | Probability density function |
| EPI | Entropy power inequality |
| REP | Rényi entropy power |
| BCS | Balanced cat state |
| UCS | Unbalanced cat state |

Appendix A

Here, we provide an intuitive proof of the generalized De Bruijn identity.

Generalized De Bruijn identity I: Denoting the PDF associated with a random vector as and the noise PDF as , we can write the LHS of (7) as

Note that these manipulations make sense only for , as only in these cases are the RE and the escort distributions well defined. The right-hand side of (A1) can equivalently be written as

where the mean is taken with respect to the escort distribution , while is taken with respect to the distribution.

We note in passing that the conventional De Bruijn identity (6) emerges as a special case when . For a Gaussian noise vector, we can generalize the previous derivation as follows:

Generalized De Bruijn identity II: Let be a random vector in with PDF , and let be an independent Gaussian noise vector with zero mean and covariance matrix . Then,

The right-hand side is equivalent to

To prove identity (A3), we can follow the same line of reasoning as in (A1). The only difference is that in (A1) we had a small parameter in which one could expand to all orders of the correlation functions and readily perform the differentiation and the limit for any zero-mean noise distribution, whereas the same procedure cannot be carried out here for a generic noise distribution. In fact, only the Gaussian distribution has the property that the higher-order correlation functions and their derivatives with respect to are small when is small. This is a consequence of the Marcinkiewicz theorem [99].
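The special case mentioned above, the conventional De Bruijn identity, can be checked numerically in its standard one-dimensional form with unit-variance Gaussian noise, $\frac{d}{dt} H(X + \sqrt{t}\,Z) = \frac{1}{2} J(X + \sqrt{t}\,Z)$, where J is the Fisher information with respect to a location parameter. This minimal sketch uses a hypothetical Gaussian-mixture X: adding $\sqrt{t}\,Z$ keeps it a mixture with each component variance increased by t, so both sides can be evaluated on a grid, with the derivative taken by a central finite difference. It is only a sanity check of the classical identity, not of the generalized identities derived above.

```python
import numpy as np

# Hypothetical mixture parameters for X
WEIGHTS = np.array([0.6, 0.4])
MEANS = np.array([-1.5, 2.0])
VARS = np.array([0.25, 1.0])

x = np.linspace(-20.0, 20.0, 40_001)
dx = x[1] - x[0]


def smoothed_pdf(t):
    """PDF of X + sqrt(t) Z and its x-derivative (each component broadens by t)."""
    v = VARS[:, None] + t
    u = x[None, :] - MEANS[:, None]
    comp = WEIGHTS[:, None] * np.exp(-u**2 / (2 * v)) / np.sqrt(2 * np.pi * v)
    return comp.sum(axis=0), (-u / v * comp).sum(axis=0)


def shannon_entropy(t):
    f, _ = smoothed_pdf(t)
    return -np.sum(f * np.log(f)) * dx


def fisher_information(t):
    f, df = smoothed_pdf(t)
    return np.sum(df**2 / f) * dx


t, dt = 0.3, 1e-4
lhs = (shannon_entropy(t + dt) - shannon_entropy(t - dt)) / (2 * dt)
rhs = 0.5 * fisher_information(t)
print(lhs, rhs)
```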

Appendix B

Here, we prove the generalized isoperimetric inequality from Section 2. The starting point is the entropy-power inequality (EPI) [64]: let and be two independent continuous random vectors in with probability densities and , respectively. Suppose further that and , and let

then the following inequality holds:

Let us now consider a Gaussian noise vector (independent of ) with zero mean and covariance matrix . Within this setting, we can write the following EPIs:

with . Here, we have used the simple fact that , irrespective of the value of r.

Let us now fix r and maximize the RHS of inequality (A7) with respect to and q, subject to the constraint (A5). This yields the extremum condition

With this, q turns out to be

which in the limit reduces to . The latter implies that . The result (A10) implies that the RHS of (A7) reads

Had we started with the index p, we would have arrived at an analogous conclusion. Without loss of generality, we therefore stick to inequality (A7), which implies that

Next, we employ the identity with representing a Gaussian random vector with zero mean and unit covariance matrix, together with the fact that is a monotonically decreasing function of r, i.e., (see, e.g., Ref. [78]). With this, we have

Consequently, Equation (A12) can be rewritten as

We are now interested in the limit. To find the ensuing leading-order behavior of , we can use L’Hospital’s rule, namely

Now, we neglect the sub-leading term of order in (A14) and take on both sides. This gives

or equivalently

At this stage, we can use the inequality of arithmetic and geometric means to write (note that is a positive semi-definite matrix)

Consequently, we have

as stated in Equation (14).
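The AM–GM step used above rests on the fact that a positive semi-definite D×D matrix A has eigenvalues $\lambda_i \ge 0$, so $(\det A)^{1/D} = (\prod_i \lambda_i)^{1/D} \le \frac{1}{D}\sum_i \lambda_i = \operatorname{Tr} A / D$, with equality when A is proportional to the identity. This minimal sketch checks the inequality with random PSD matrices, which serve only as a hypothetical stand-in for the matrix in the derivation.

```python
import numpy as np

rng = np.random.default_rng(0)


def amgm_gap(A):
    """Tr(A)/D - det(A)^(1/D); nonnegative for positive semi-definite A."""
    D = A.shape[0]
    eig = np.linalg.eigvalsh(A)    # real eigenvalues of a symmetric matrix
    eig = np.clip(eig, 0.0, None)  # remove tiny negative round-off
    return eig.mean() - np.prod(eig) ** (1.0 / D)


for D in (2, 3, 5):
    B = rng.standard_normal((D, D))
    A = B @ B.T  # B B^T is positive semi-definite
    print(D, amgm_gap(A))
```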

Appendix C

In this appendix, we prove the generalized Stam inequality from Section 2. We start with the defining relation (13), i.e.,

and consider so that . For the q-norm, we can write

Here, and are Hölder conjugates so that . The inequality employed is due to the Hausdorff–Young inequality (which in turn is a simple consequence of the Hölder inequality [64]). We further have

where is an arbitrary -independent vector, and denotes the regularized volume of , i.e., of a D-dimensional ball of very large (but finite) radius R. In the first line of (A22), we have employed the triangle inequality (with equality if and only if ), namely

The inequality in the last line holds for (for all i), since in this case for all in the D-dimensional ball. One may then further estimate the integral from below by neglecting the positive integrand .

Note that (A22) implies

with equality if and only if (to see this, one should apply L’Hospital’s rule). Equation (A24) allows us to write

where is some as yet unspecified constant and . In deriving (A25), we have used the Hölder inequality

Here, and also in (A22) and (A25), denotes the regularized volume of .

As already mentioned, the best estimate in inequality (A25) is obtained for . As we have seen, goes to zero as , which allows us to choose the constant so that the denominator in (A25) stays finite in the limit . This implies that . Consequently, in the large-R limit, (A25) takes the form

With this, we can write [see Equations (A20)–(A21)]

where, in the last inequality, we have used the fact that for and that . As a final step, we employ Equations (A18) and (A28) to write

which completes the proof of the generalized Stam inequality.

References

1. Ben-Naim, A. Information, Entropy, Life and the Universe: What We Know and What We Do Not Know; World Scientific: Singapore, 2015.

2. Jaynes, E.T. Papers on Probability and Statistics and Statistical Physics; D. Reidel Publishing Company: Boston, MA, USA, 1983.

3. Millar, R.B. Maximum Likelihood Estimation and Inference; John Wiley and Sons Ltd.: Chichester, UK, 2011.

4. Leff, H.S.; Rex, A.F. (Eds.) Maxwell’s Demon 2: Entropy, Classical and Quantum Information, Computing; Institute of Physics: London, UK, 2002.

5. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423; 623–656.

6. Shannon, C.E.; Weaver, W. The Mathematical Theory of Communication; University of Illinois Press: Urbana, IL, USA, 1949.

7. Feinstein, A. Foundations of Information Theory; McGraw Hill: New York, NY, USA, 1958.

8. Campbell, L.L. A Coding Theorem and Rényi’s Entropy. Inf. Control 1965, 8, 423–429.

9. Bercher, J.-F. Source Coding with Escort Distributions and Rényi Entropy Bounds. Phys. Lett. A 2009, 373, 3235–3238.

10. Thurner, S.; Hanel, R.; Klimek, P. Introduction to the Theory of Complex Systems; Oxford University Press: Oxford, UK, 2018.

11. Tsallis, C. Introduction to Nonextensive Statistical Mechanics: Approaching a Complex World; Springer: New York, NY, USA, 2009.

12. Bialynicki-Birula, I. Rényi Entropy and the Uncertainty Relations. AIP Conf. Proc. 2007, 889, 52–61.

13. Jizba, P.; Ma, Y.; Hayes, A.; Dunningham, J.A. One-parameter class of uncertainty relations based on entropy power. Phys. Rev. E 2016, 93, 060104-1(R)–060104-5(R).

14. Maassen, H.; Uffink, J.B.M. Generalized entropic uncertainty relations. Phys. Rev. Lett. 1988, 60, 1103–1106.

15. Bialynicki-Birula, I.; Mycielski, J. Uncertainty relations for information entropy in wave mechanics. Commun. Math. Phys. 1975, 44, 129–132.

16. Dang, P.; Deng, G.-T.; Qian, T. A sharper uncertainty principle. J. Funct. Anal. 2013, 265, 2239–2266.

17. Ozawa, T.; Yuasa, K. Uncertainty relations in the framework of equalities. J. Math. Anal. Appl. 2017, 445, 998–1012.

18. Zeng, B.; Chen, X.; Zhou, D.-L.; Wen, X.-G. Quantum Information Meets Quantum Matter: From Quantum Entanglement to Topological Phase in Many-Body Systems; Springer: New York, NY, USA, 2018.

19. Melcher, B.; Gulyak, B.; Wiersig, J. Information-theoretical approach to the many-particle hierarchy problem. Phys. Rev. A 2019, 100, 013854-1–013854-5.

20. Ryu, S.; Takayanagi, T. Holographic derivation of entanglement entropy from AdS/CFT. Phys. Rev. Lett. 2006, 96, 181602-1–181602-4.

21. Eisert, J.; Cramer, M.; Plenio, M.B. Area laws for the entanglement entropy: A review. Rev. Mod. Phys. 2010, 82, 277–306.

22. Pikovski, I.; Vanner, M.R.; Aspelmeyer, M.; Kim, M.S.; Brukner, Č. Probing Planck-scale physics with quantum optics. Nat. Phys. 2012, 8, 393–397.

23. Marin, F.; Marino, F.; Bonaldi, M.; Cerdonio, M.; Conti, L.; Falferi, P.; Mezzena, R.; Ortolan, A.; Prodi, G.A.; Taffarello, L.; et al. Gravitational bar detectors set limits to Planck-scale physics on macroscopic variables. Nat. Phys. 2013, 9, 71–73.

24. An, S.; Zhang, J.-N.; Um, M.; Lv, D.; Lu, Y.; Zhang, J.; Yin, Z.-Q.; Quan, H.T.; Kim, K. Experimental test of the quantum Jarzynski equality with a trapped-ion system. Nat. Phys. 2014, 11, 193–199.

25. Campisi, M.; Hänggi, P.; Talkner, P. Quantum fluctuation relations: Foundations and applications. Rev. Mod. Phys. 2011, 83, 771–791.

26. Erhart, J.; Sponar, S.; Sulyok, G.; Badurek, G.; Ozawa, M.; Hasegawa, Y. Experimental demonstration of a universally valid error–disturbance uncertainty relation in spin measurements. Nat. Phys. 2012, 8, 185–189.

27. Sulyok, G.; Sponar, S.; Erhart, J.; Badurek, G.; Ozawa, M.; Hasegawa, Y. Violation of Heisenberg’s error-disturbance uncertainty relation in neutron-spin measurements. Phys. Rev. A 2013, 88, 022110-1–022110-15.

28. Baek, S.Y.; Kaneda, F.; Ozawa, M.; Edamatsu, K. Experimental violation and reformulation of the Heisenberg’s error-disturbance uncertainty relation. Sci. Rep. 2013, 3, 2221-1–2221-5.

29. Dressel, J.; Nori, F. Certainty in Heisenberg’s uncertainty principle: Revisiting definitions for estimation errors and disturbance. Phys. Rev. A 2014, 89, 022106-1–022106-14.

30. Busch, P.; Lahti, P.; Werner, R.F. Proof of Heisenberg’s Error-Disturbance Relation. Phys. Rev. Lett. 2013, 111, 160405-1–160405-5.

31. Jizba, P.; Arimitsu, T. The world according to Rényi: Thermodynamics of multifractal systems. Ann. Phys. 2004, 312, 17–59.

32. Liu, R.; Liu, T.; Poor, H.V.; Shamai, S. A Vector Generalization of Costa’s Entropy-Power Inequality with Applications. IEEE Trans. Inf. Theory 2010, 56, 1865–1879.

33. Costa, M.H.M. On the Gaussian interference channel. IEEE Trans. Inf. Theory 1985, 31, 607–615.

34. Polyanskiy, Y.; Wu, Y. Wasserstein continuity of entropy and outer bounds for interference channels. arXiv 2015, arXiv:1504.04419.

35. Bagherikaram, G.; Motahari, A.S.; Khandani, A.K. The Secrecy Capacity Region of the Gaussian MIMO Broadcast Channel. IEEE Trans. Inf. Theory 2013, 59, 2673–2682.

36. De Palma, G.; Mari, A.; Lloyd, S.; Giovannetti, V. Multimode quantum entropy power inequality. Phys. Rev. A 2015, 91, 032320-1–032320-6.

37. Costa, M.H. A new entropy power inequality. IEEE Trans. Inf. Theory 1985, 31, 751–760.

38. Frieden, B.R. Science from Fisher Information: A Unification; Cambridge University Press: Cambridge, UK, 2004.

39. Courtade, T.A. Strengthening the Entropy Power Inequality. arXiv 2016, arXiv:1602.03033.

40. Barron, A.R. Entropy and the Central Limit Theorem. Ann. Probab. 1986, 14, 336–342.

41. Pardo, L. New Developments in Statistical Information Theory Based on Entropy and Divergence Measures. Entropy 2019, 21, 391.

42. Biró, T.; Barnaföldi, G.; Ván, P. New entropy formula with fluctuating reservoir. Physica A 2015, 417, 215–220.

43. Bíró, G.; Barnaföldi, G.G.; Biró, T.S.; Ürmössy, K.; Takács, Á. Systematic Analysis of the Non-Extensive Statistical Approach in High Energy Particle Collisions: Experiment vs. Theory. Entropy 2017, 19, 88.

44. Hanel, R.; Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 2011, 96, 50003-1–50003-6.

45. Hanel, R.; Thurner, S.; Gell-Mann, M. How multiplicity determines entropy and the derivation of the maximum entropy principle for complex systems. Proc. Natl. Acad. Sci. USA 2014, 111, 6905–6910.

46. Burg, J.P. The Relationship Between Maximum Entropy Spectra and Maximum Likelihood Spectra. Geophysics 1972, 37, 375–376.

47. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

48. Havrda, J.; Charvát, F. Quantification Method of Classification Processes: Concept of Structural α-Entropy. Kybernetika 1967, 3, 30–35.

49. Frank, T.; Daffertshofer, A. Exact time-dependent solutions of the Renyi Fokker–Planck equation and the Fokker–Planck equations related to the entropies proposed by Sharma and Mittal. Physica A 2000, 285, 351–366.

50. Sharma, B.D.; Mitter, J.; Mohan, M. On measures of “useful” information. Inf. Control 1978, 39, 323–336.

51. Jizba, P.; Korbel, J. On q-non-extensive statistics with non-Tsallisian entropy. Physica A 2016, 444, 808–827.

52. Jizba, P.; Arimitsu, T. Generalized statistics: Yet another generalization. Physica A 2004, 340, 110–116.

53. Vos, G. Generalized additivity in unitary conformal field theories. Nucl. Phys. B 2015, 899, 91–111.

54. Uffink, J. Can the maximum entropy principle be explained as a consistency requirement? Stud. Hist. Phil. Mod. Phys. 1995, 26, 223–261.

55. Jizba, P.; Korbel, J. Maximum Entropy Principle in Statistical Inference: Case for Non-Shannonian Entropies. Phys. Rev. Lett. 2019, 122, 120601-1–120601-6.

56. Jizba, P.; Arimitsu, T. Observability of Rényi’s entropy. Phys. Rev. E 2004, 69, 026128-1–026128-12.

57. Elben, A.; Vermersch, B.; Dalmonte, M.; Cirac, J.I.; Zoller, P. Rényi Entropies from Random Quenches in Atomic Hubbard and Spin Models. Phys. Rev. Lett. 2018, 120, 050406-1–050406-6.

58. Bacco, D.; Canale, M.; Laurenti, N.; Vallone, G.; Villoresi, P. Experimental quantum key distribution with finite-key security analysis for noisy channels. Nat. Commun. 2013, 4, 2363-1–2363-8.

59. Müller-Lennert, M.; Dupuis, F.; Szehr, O.; Fehr, S.; Tomamichel, M. On quantum Renyi entropies: A new generalization and some properties. J. Math. Phys. 2013, 54, 122203-1–122203-20.

60. Coles, P.J.; Colbeck, R.; Yu, L.; Zwolak, M. Uncertainty Relations from Simple Entropic Properties. Phys. Rev. Lett. 2012, 108, 210405-1–210405-5.

61. Mintert, F.; Kuś, M.; Buchleitner, A. Concurrence of Mixed Bipartite Quantum States in Arbitrary Dimensions. Phys. Rev. Lett. 2004, 92, 167902-1–167902-4.

62. Vidal, G.; Tarrach, R. Robustness of entanglement. Phys. Rev. A 1999, 59, 141–155.

63. Bengtsson, I.; Życzkowski, K. Geometry of Quantum States: An Introduction to Quantum Entanglement; Cambridge University Press: Cambridge, UK, 2006.

64. Jizba, P.; Dunningham, J.A.; Joo, J. Role of information theoretic uncertainty relations in quantum theory. Ann. Phys. 2015, 355, 87–114.

65. Toranzo, I.V.; Zozor, S.; Brossier, J.-M. Generalization of the de Bruijn Identity to General ϕ-Entropies and ϕ-Fisher Informations. IEEE Trans. Inf. Theory 2018, 64, 6743–6758.

66. Rioul, O. Information Theoretic Proofs of Entropy Power Inequalities. IEEE Trans. Inf. Theory 2011, 57, 33–55.

67. Dembo, A.; Cover, T.M. Information Theoretic Inequalities. IEEE Trans. Inf. Theory 1991, 37, 1501–1517.

68. Lutwak, E.; Lv, S.; Yang, D.; Zhang, G. Extensions of Fisher Information and Stam’s Inequality. IEEE Trans. Inf. Theory 2012, 58, 1319–1327.

69. Widder, D.V. The Laplace Transform; Princeton University Press: Princeton, NJ, USA, 1946.

70. Knott, P.A.; Proctor, T.J.; Hayes, A.J.; Ralph, J.F.; Kok, P.; Dunningham, J.A. Local versus Global Strategies in Multi-parameter Estimation. Phys. Rev. A 2016, 94, 062312-1–062312-7.

71. Beck, C.; Schlögl, F. Thermodynamics of Chaotic Systems; Cambridge University Press: Cambridge, UK, 1993.

72. Gardner, R.J. The Brunn–Minkowski inequality. Bull. Am. Math. Soc. 2002, 39, 355–405.

73. Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley-Interscience: Hoboken, NJ, USA, 2006.

74. Einstein, A. Theorie der Opaleszenz von homogenen Flüssigkeiten und Flüssigkeitsgemischen in der Nähe des kritischen Zustandes. Ann. Phys. 1910, 33, 1275–1298.

75. De Palma, G. The entropy power inequality with quantum conditioning. J. Phys. A Math. Theor. 2019, 52, 08LT03-1–08LT03-12.

76. Ram, E.; Sason, I. On Rényi Entropy Power Inequalities. IEEE Trans. Inf. Theory 2016, 62, 6800–6815.

77. Stam, A. Some inequalities satisfied by the quantities of information of Fisher and Shannon. Inf. Control 1959, 2, 101–112.

78. Rényi, A. Probability Theory; Selected Papers of Alfred Rényi; Akadémiai Kiadó: Budapest, Hungary, 1976; Volume 2.

79. Cramér, H. Mathematical Methods of Statistics; Princeton University Press: Princeton, NJ, USA, 1946.

80. Wilk, G.; Włodarczyk, Z. Uncertainty relations in terms of the Tsallis entropy. Phys. Rev. A 2009, 79, 062108-1–062108-6.

81. Schrödinger, E. About Heisenberg Uncertainty Relation. Sitzungsber. Preuss. Akad. Wiss. 1930, 24, 296–303.

82. Robertson, H.P. The Uncertainty Principle. Phys. Rev. 1929, 34, 163–164.

83. Hirschman, I.I., Jr. A Note on Entropy. Am. J. Math. 1957, 79, 152–156.

84. D’Ariano, M.G.; De Laurentis, M.; Paris, M.G.A.; Porzio, A.; Solimeno, S. Quantum tomography as a tool for the characterization of optical devices. J. Opt. B 2002, 4, 127–132.

85. Lvovsky, A.I.; Raymer, M.G. Continuous-variable optical quantum-state tomography. Rev. Mod. Phys. 2009, 81, 299–332.

86. Gross, D.; Liu, Y.-K.; Flammia, S.T.; Becker, S.; Eisert, J. Quantum State Tomography via Compressed Sensing. Phys. Rev. Lett. 2010, 105, 150401-1–150401-4.

87. Beckner, W. Inequalities in Fourier Analysis. Ann. Math. 1975, 102, 159–182.

88. Babenko, K.I. An inequality in the theory of Fourier integrals. Am. Math. Soc. Transl. 1962, 44, 115–128.

89. Samko, S.G.; Kilbas, A.A.; Marichev, O.I. Fractional Integrals and Derivatives: Theory and Applications; Gordon and Breach: New York, NY, USA, 1993.

90. Reed, M.; Simon, B. Methods of Modern Mathematical Physics; Academic Press: New York, NY, USA, 1975; Volume II.

91. Wallace, D.L. Asymptotic Approximations to Distributions. Ann. Math. Stat. 1958, 29, 635–654.

92. Zolotarev, V.M. Mellin–Stieltjes Transforms in Probability Theory. Theory Probab. Appl. 1957, 2, 444–469.

93. Tagliani, A. Inverse two-sided Laplace transform for probability density functions. J. Comp. Appl. Math. 1998, 90, 157–170.

94. Lukacs, E. Characteristic Functions; Charles Griffin: London, UK, 1970.

95. Pal, N.; Jin, C.; Lim, W.K. Handbook of Exponential and Related Distributions for Engineers and Scientists; Taylor & Francis Group: New York, NY, USA, 2005.

96. Kira, M.; Koch, S.W.; Smith, R.P.; Hunter, A.E.; Cundiff, S.T. Quantum spectroscopy with Schrödinger-cat states. Nat. Phys. 2011, 7, 799–804.

97. Knott, P.A.; Cooling, J.P.; Hayes, A.; Proctor, T.J.; Dunningham, J.A. Practical quantum metrology with large precision gains in the low-photon-number regime. Phys. Rev. A 2016, 93, 033859-1–033859-7.

98. Wei, L. On the Exact Variance of Tsallis Entanglement Entropy in a Random Pure State. Entropy 2019, 21, 539.

99. Marcinkiewicz, J. On a Property of the Gauss law. Math. Z. 1939, 44, 612–618.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).