Abstract

We consider learning an undirected graphical model from sparse data. While several efficient algorithms have been proposed for the graphical lasso (GL), the alternating direction method of multipliers (ADMM) is the main approach taken for the joint graphical lasso (JGL). We propose proximal gradient procedures with and without a backtracking option for the JGL. These procedures are first-order methods and relatively simple, and the subproblems are solved efficiently in closed form. We further show the boundedness of the solution to the JGL problem and of the iterates in the algorithms. The numerical results indicate that the proposed algorithms can achieve high accuracy and precision, and their efficiency is competitive with state-of-the-art algorithms.

1. Introduction

Graphical models are widely used to describe the relationships among interacting objects [1]. Such models have been extensively used in various domains, such as bioinformatics, text mining, and social networks. The graph provides a visual way to understand the joint distribution of an entire set of variables.

In this paper, we consider learning Gaussian graphical models that are expressed by undirected graphs, which represent the relationships among continuous variables that follow a joint Gaussian distribution. In an undirected graph $G = (V, E)$, the edge set $E$ represents the conditional dependencies among the variables in the vertex set $V$.

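Let $X_1, \ldots, X_p$ ($p \ge 1$) be Gaussian variables with covariance matrix $\Sigma \in \mathbb{R}^{p \times p}$, and let $\Theta := \Sigma^{-1}$ be the precision matrix, if it exists. We remove the edges so that the variables $X_i$, $X_j$ are conditionally independent given the other variables if and only if the $(i,j)$-th element $\theta_{i,j}$ of $\Theta$ is 0:

$$\{i,j\} \notin E \iff \theta_{i,j} = 0 \iff X_i \perp X_j \mid X_{V \setminus \{i,j\}},$$

where each edge is expressed as a set of two elements in $\{1, \ldots, p\}$. In this sense, constructing a Gaussian graphical model is equivalent to estimating a precision matrix.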

Suppose that we estimate the undirected graph from data consisting of n tuples of p variables and that dimension p is much higher than sample size n. For example, if we have expression data of genes for case/control patients, how can we construct a gene regulatory network structure from the data? It is almost impossible to estimate the locations of the nonzero elements in $\Theta$ by obtaining the inverse of the sample covariance matrix $S$, which is the unbiased estimator of $\Sigma$. In fact, if $p > n$, then no inverse exists because the rank of $S \in \mathbb{R}^{p \times p}$ is, at most, n.

In order to address this situation, two directions are suggested:

- Sequentially find the variables on which each variable depends via regression so that the quasilikelihood is maximized [2].

- Find the locations in $\Theta$, the values of which are zeros, so that the regularized log-likelihood is maximized [3,4,5,6].

We follow the second approach, known as the graphical lasso (GL), because we assume Gaussian variables. The regularized log-likelihood is defined by:

$$\log\det\Theta - \mathrm{trace}(S\Theta) - \lambda\|\Theta\|_1, \quad (1)$$

where the tuning parameter $\lambda > 0$ controls the amount of sparsity, and $\|\Theta\|_1$ denotes the sum of the absolute values of the off-diagonal elements of $\Theta$. Several optimization techniques [4,7,8,9,10,11,12] have been studied for the optimization problem of (1).

In particular, we consider a generalized version of the abovementioned GL. For example, suppose that the gene regulatory networks of thirty case and seventy control patients are different. One might construct a gene regulatory network separately for each of the two categories. However, estimating each on its own does not provide an advantage if a common structure is shared. Instead, we use 100 samples to construct two networks simultaneously. Intuitively speaking, using both types of data improves the reliability of the estimation by increasing the sample size for the genes that show similar values between case and control patients, while using only one type of data leads to a more accurate estimate for genes that show significantly different values. Ref. [13] proposed a joint graphical lasso (JGL) model by including an additional convex penalty (fused or group lasso penalty) to the graphical lasso objective function for K classes. For example, K is equal to two for the case/control patients in the example. JGL includes fused graphical lasso with fused lasso penalty, which encourages sparsity and the similarity of the value of edges across K classes, and group graphical lasso with group lasso penalty, which promotes similar sparsity structure across K graphs. Although there are several approaches to handling the multiple graphical models, such as those of [14,15,16,17], the JGL is considered the most promising.

The main topic of this paper is improving the efficiency of solving the JGL problem. For the GL, relatively efficient solving procedures exist. If we differentiate the regularized log-likelihood (1) with respect to $\Theta$, then we have an equation to solve [4]. Moreover, several improvements have been considered for the GL, such as proximal Newton [12] and proximal gradient [10] procedures. However, for the JGL, even if we derive such an equation, we have no efficient way of handling it.

Instead, the alternating direction method of multipliers (ADMM) [18], which is a procedure for solving convex optimization problems for general purposes, has been the main approach taken [13,19,20,21]. However, ADMM does not scale well concerning the feature dimension p and number of classes K. It usually takes time to converge to a high-accuracy solution [22].

For efficient procedures to solve the JGL problem, ref. [23] proposed a method based on the proximal Newton method only when the penalty term is expressed by fused lasso. The existing method requires expensive computations for the Hessian matrix and Newton directions, which means that it would be expensive to use for high-dimensional problems.

In this paper, we propose efficient proximal-gradient-based algorithms to solve the JGL problem by extending the procedure in [10] and employing the step-size selection strategy proposed in [24]. Moreover, we provide the theoretical analysis of both methods for the JGL problem.

In our proximal gradient methods for the JGL problem, the proximal operator in each iteration is quite simple, which eases the implementation process and requires very little computation and memory at each step. Simulation experiments are used to justify our proposed methods over the existing ones.

Our main contributions are as follows:

- We propose efficient algorithms based on the proximal gradient method to solve the JGL problem. The algorithms are first-order methods and quite simple, and the subproblems can be solved efficiently with a closed-form solution. The numerical results indicate that the methods can achieve high accuracy and precision, and the computational time is competitive with state-of-the-art algorithms.

- We provide the boundedness for the solution to the JGL problem and the iterates in algorithms, which is related to the convergence rate of the algorithms. With the boundedness, we can guarantee that our proposed method converges linearly.

Table 1 summarizes the relationship between the proposed and existing methods.

Table 1.

Efficient JGL procedures.

The remaining parts of this paper are as follows. In Section 2, we first provide the background of our proposed methods and introduce the joint graphical lasso problem. In Section 3, we illustrate the detailed content of the proposed algorithms and provide a theoretical analysis. In Section 4, we report some numerical results of the proposed approaches, including comparisons with efficient methods and performance evaluations. Finally, we draw some conclusions in Section 5.

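Notation: In this paper, $\|x\|_p$ denotes the $\ell_p$ norm of a vector $x \in \mathbb{R}^d$, $\|x\|_p := (\sum_{i=1}^d |x_i|^p)^{1/p}$ for $p \in [1, \infty)$, and $\|x\|_\infty := \max_i |x_i|$. For a matrix $X \in \mathbb{R}^{p \times q}$, $\|X\|_F$ denotes the Frobenius norm, $\|X\|_2$ denotes the spectral norm, $\|X\|_\infty := \max_{i,j} |x_{i,j}|$, and $\|X\|_1 := \sum_{i,j} |x_{i,j}|$ if not specified. The inner product is defined by $\langle X, Y \rangle := \mathrm{trace}(X^\top Y)$.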

2. Preliminaries

This section first reviews the graphical lasso (GL) problem and introduces the graphical iterative shrinkage-thresholding algorithm (G-ISTA) [10] to solve it. Then, we introduce the step-size selection strategy that we apply to the joint graphical lasso (JGL) in Section 3.2.

2.1. Graphical Lasso

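Let $x_1, \ldots, x_n \in \mathbb{R}^p$ be $n \ge 1$ observations of dimension $p \ge 1$ that follow the Gaussian distribution with mean $\mu \in \mathbb{R}^p$ and covariance matrix $\Sigma \in \mathbb{R}^{p \times p}$, where without loss of generality, we assume $\mu = 0$. Let $\Theta := \Sigma^{-1}$, and let the empirical covariance matrix be $S := \frac{1}{n}\sum_{i=1}^{n} x_i^\top x_i$, where each $x_i$ is regarded as a row vector. Given penalty parameter $\lambda > 0$, the graphical lasso (GL) is the procedure to find the positive definite $\Theta \in \mathbb{R}^{p \times p}$ such that:

$$\min_{\Theta \succ 0} \; -\log\det\Theta + \mathrm{trace}(S\Theta) + \lambda\|\Theta\|_1, \quad (2)$$

where $\|\Theta\|_1 = \sum_{j \neq k} |\theta_{j,k}|$. If we regard $V := \{1, \ldots, p\}$ as a vertex set, then we can construct an undirected graph with edge set $\{\{j,k\} \mid \theta_{j,k} \neq 0\}$, where set $\{j,k\}$ denotes an undirected edge that connects the nodes $j, k \in V$.

If we take the subgradient of (2), then we find that the optimal solution $\Theta^*$ satisfies the condition:

$$-(\Theta^*)^{-1} + S + \lambda\Phi = 0, \quad (3)$$

where $\Phi = (\Phi_{j,k})$ is, for $j \neq k$,

$$\Phi_{j,k} \begin{cases} = 1, & \theta^*_{j,k} > 0, \\ \in [-1, 1], & \theta^*_{j,k} = 0, \\ = -1, & \theta^*_{j,k} < 0, \end{cases}$$

and $\Phi_{j,j} = 0$.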

2.2. ISTA for Graphical Lasso

In this subsection, we introduce the method for solving the GL problem (2) by the iterative shrinkage-thresholding algorithm (ISTA) proposed by [10], which is a proximal gradient method usually employed in dealing with nondifferentiable composite optimization problems.

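Specifically, the general ISTA solves the following composite optimization problem:

$$\min_{x} \; F(x) := f(x) + g(x),$$

where f and g are convex, with f differentiable and g possibly being nondifferentiable.

If we define the quadratic approximation $Q_\eta(x, y)$ w.r.t. $f$ and $\eta > 0$ by

$$Q_\eta(x, y) := f(y) + \langle x - y, \nabla f(y) \rangle + \frac{1}{2\eta}\|x - y\|_2^2 + g(x),$$

then we can describe the ISTA as a procedure that iterates

$$x_{t+1} = \arg\min_{x} Q_{\eta_t}(x, x_t) = \mathrm{prox}_{\eta_t g}\left(x_t - \eta_t \nabla f(x_t)\right)$$

given initial value $x_0$, where the value of step size $\eta_t$ may change at each iteration for efficient convergence, and we use the proximal operator:

$$\mathrm{prox}_{g}(z) := \arg\min_{\theta} \left\{ \frac{1}{2}\|z - \theta\|_2^2 + g(\theta) \right\}.$$

Note that the proximal operator of the function $g = \lambda\|\Theta\|_1$ is the soft-thresholding operator: the absolute value of each off-diagonal element $\theta_{i,j}$ with $i \neq j$ becomes either $|\theta_{i,j}| - \lambda$ or zero (if $|\theta_{i,j}| < \lambda$). We use the following function for the operator in Section 3:

$$[\mathcal{S}_\lambda(\Theta)]_{i,j} := \mathrm{sign}(\theta_{i,j})\,(|\theta_{i,j}| - \lambda)_+,$$

where $(x)_+ := \max(x, 0)$.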
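The soft-thresholding operator described above can be sketched in a few lines. This is a minimal illustration in plain Python, not the authors' implementation; the function names `soft_threshold` and `soft_threshold_offdiag` are hypothetical, and the choice to leave the diagonal untouched follows the statement in the text that only off-diagonal elements are shrunk.

```python
from typing import List

Matrix = List[List[float]]


def soft_threshold(x: float, lam: float) -> float:
    """Scalar soft-thresholding: sign(x) * max(|x| - lam, 0)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def soft_threshold_offdiag(theta: Matrix, lam: float) -> Matrix:
    """Shrink every off-diagonal entry by lam; keep the diagonal as is (assumption)."""
    p = len(theta)
    return [
        [theta[i][j] if i == j else soft_threshold(theta[i][j], lam) for j in range(p)]
        for i in range(p)
    ]
```

Entries whose absolute value is below the threshold become exactly zero, which is what produces a sparse estimate of the precision matrix.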

Definition 1.

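A differentiable function $f: \mathbb{R}^n \to \mathbb{R}$ is said to have a Lipschitz-continuous gradient if there exists $L > 0$ (Lipschitz constant) such that

$$\|\nabla f(x) - \nabla f(y)\|_2 \le L\|x - y\|_2, \quad \forall x, y \in \mathbb{R}^n. \quad (10)$$

It is known that if we choose $\eta_t = 1/L$ for each step in the ISTA that minimizes $F(\cdot)$, then the convergence rate is, at most, as follows [25]:

$$F(x_t) - F(x^*) \le \frac{L\|x_0 - x^*\|_2^2}{2t}. \quad (11)$$

However, for the GL problem (2), we know neither the exact value of the Lipschitz constant L nor any nontrivial upper bound. Ref. [10] implements a backtracking line search option in Step 1 of Algorithm 1 below to handle this issue.

The backtracking line search enables us to compute the $\eta_t$ value for each time $t$ by repeatedly multiplying $\eta_t$ by a constant $c \in (0, 1)$ until $\Theta_{t+1} \succ 0$ ($\Theta_{t+1}$ is positive definite) and

$$F(\Theta_{t+1}) \le Q_{\eta_t}(\Theta_{t+1}, \Theta_t) \quad (12)$$

for the $Q_{\eta_t}$ in (7). Additionally, (12) is a sufficient condition for (11), which was derived in [25] (see the relationship between Lemma 2.3 and Theorem 3.1 in [25]).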

The whole procedure is given in Algorithm 1.

| Algorithm 1 G-ISTA for problem (2). |

| Input: , tolerance , backtracking constant , initial value , , . While (until convergence) do |

|

| end Output: -optimal solution to problem (2), . |
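To make the backtracking idea concrete, the following is a toy sketch of a proximal gradient iteration with backtracking on a one-dimensional analogue of the GL objective, $-\log\theta + s\theta + \lambda|\theta|$ with $\theta > 0$. It is an illustration under stated assumptions rather than G-ISTA itself: the scalar setting, the function name `ista_backtracking`, the fixed initial step size at every iteration, and the stopping rule are all choices made for this example. In this scalar case the minimizer is $1/(s + \lambda)$, which gives a simple correctness check.

```python
import math


def f(theta: float, s: float) -> float:
    """Smooth part of the toy objective: -log(theta) + s * theta."""
    return -math.log(theta) + s * theta


def grad_f(theta: float, s: float) -> float:
    return -1.0 / theta + s


def soft_threshold(x: float, lam: float) -> float:
    return math.copysign(max(abs(x) - lam, 0.0), x)


def ista_backtracking(s: float, lam: float, theta0: float = 1.0, eta0: float = 1.0,
                      c: float = 0.5, tol: float = 1e-10, max_iter: int = 1000) -> float:
    """Proximal gradient with backtracking for min -log(theta) + s*theta + lam*|theta|."""
    theta = theta0
    for _ in range(max_iter):
        eta = eta0
        g = grad_f(theta, s)
        while True:
            # Proximal step: gradient step followed by soft-thresholding.
            cand = soft_threshold(theta - eta * g, eta * lam)
            if cand > 0.0:  # scalar analogue of the positive-definiteness check
                diff = cand - theta
                # The nonsmooth term appears on both sides of the sufficient
                # decrease condition and cancels, so only the smooth part is compared.
                upper = f(theta, s) + g * diff + diff * diff / (2.0 * eta)
                if f(cand, s) <= upper:
                    break
            eta *= c  # shrink the step size and try again
        if abs(cand - theta) <= tol * max(abs(theta), 1.0):
            return cand
        theta = cand
    return theta
```

Each rejected trial step costs one more evaluation of the smooth part of the objective, which is the overhead that motivates the step-size rule of Section 2.3.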

2.3. Composite Self-Concordant Minimization

The notion of the self-concordant function was proposed in [26,27,28]. In the following, we say a convex function f is self-concordant with parameter if

where is the domain of f.

Reference [24] considered a composite version of self-concordant function minimization and provided a way to efficiently calculate the step size for the proximal gradient method for the GL problem without relying on the Lipschitz gradient assumption in (10). They proved that

in (2) is self-concordant and considers the following minimization:

where f is convex, differentiable, and self-concordant, and g is convex and possibly nondifferentiable. As for Algorithm 1, without using the backtracking line search, we can compute direction with initial step size as follows:

where the operator is defined by (8). Then, we use the modified step size to update , which can be determined by the direction . After defining two parameters related to the direction: and , the modified step size can be obtained by

By Lemma 12 in [24], if the modified step size , then it can ensure a decrease in the objective function and guarantee convergence in the proximal gradient scheme. From (14), if , then the condition is satisfied. Therefore, we only need to check the case when . If the condition does not hold, we can change the value of the initial (such as the bisection method) to influence the value of in (13) until the condition is satisfied.

2.4. Joint Graphical Lasso

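Let $N \ge 1$, $p \ge 1$, $K \ge 2$, and $(x_1, y_1), \ldots, (x_N, y_N) \in \mathbb{R}^p \times \{1, \ldots, K\}$, where each $x_i$ is a row vector. Let $n_k$ be the number of occurrences in $y_1, \ldots, y_N$ such that $y_i = k$, so that $\sum_{k=1}^{K} n_k = N$.

For each $k = 1, \ldots, K$, we define the empirical covariance matrix of the data $x_i$ as follows:

$$S^{(k)} := \frac{1}{n_k} \sum_{i:\, y_i = k} x_i^\top x_i.$$

Given the penalty parameters $\lambda_1 > 0$ and $\lambda_2 > 0$, the joint graphical lasso (JGL) is the procedure to find the positive definite matrix $\Theta^{(k)} \in \mathbb{R}^{p \times p}$ for $k = 1, \ldots, K$, such that:

$$\min_{\Theta} \; -\sum_{k=1}^{K} n_k \left\{ \log\det\Theta^{(k)} - \mathrm{trace}(S^{(k)}\Theta^{(k)}) \right\} + P(\Theta), \quad (15)$$

where $P(\Theta)$ penalizes $\Theta := [\Theta^{(1)}, \ldots, \Theta^{(K)}]^\top$. For example, ref. [13] suggested the following fused and group lasso penalties:

$$P_F(\Theta) := \lambda_1 \sum_{k=1}^{K} \sum_{i \neq j} |\theta_{k,i,j}| + \lambda_2 \sum_{k < k'} \sum_{i,j} |\theta_{k,i,j} - \theta_{k',i,j}|$$

and

$$P_G(\Theta) := \lambda_1 \sum_{k=1}^{K} \sum_{i \neq j} |\theta_{k,i,j}| + \lambda_2 \sum_{i \neq j} \sqrt{\sum_{k=1}^{K} \theta_{k,i,j}^2},$$

where $\theta_{k,i,j}$ is the $(i,j)$-th element of $\Theta^{(k)}$ for $k = 1, \ldots, K$.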

Unfortunately, there is no equation like (3) for the JGL to find the optimum $\Theta^*$. Ref. [13] considered the ADMM to solve the JGL problem. However, ADMM is quite time consuming for large-scale problems.

3. The Proposed Methods

In this section, we propose two efficient algorithms for solving the JGL problem. One is an extended ISTA based on the G-ISTA in Section 2.2, and the other is based on the step-size selection strategy introduced in Section 2.3.

3.1. ISTA for the JGL Problem

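To describe the JGL problem, we define $f$ and $g$ by

$$f(\Theta) := -\sum_{k=1}^{K} n_k \left\{ \log\det\Theta^{(k)} - \mathrm{trace}(S^{(k)}\Theta^{(k)}) \right\}, \qquad g(\Theta) := P(\Theta). \quad (16)$$

Then, the problem (15) reduces to:

$$\min_{\Theta} \; F(\Theta) := f(\Theta) + g(\Theta),$$

where the function f is convex and differentiable, and g is convex and nondifferentiable. Therefore, the ISTA is available for solving the JGL problem (15).

The main difference between the G-ISTA and the proposed method is that the latter needs to simultaneously consider K categories of graphical models in the JGL problem (15). Moreover, there are two combined penalties in $P(\Theta)$, which complicate the proximal operator in the ISTA procedure. Consequently, the operator for the proposed method is not a simple soft-thresholding operator, as it is for the G-ISTA method.

If we define the quadratic approximation $Q_{\eta_t}$ of $F$ by:

$$Q_{\eta_t}(\Theta, \Theta_t) := f(\Theta_t) + \langle \Theta - \Theta_t, \nabla f(\Theta_t) \rangle + \frac{1}{2\eta_t}\|\Theta - \Theta_t\|_F^2 + g(\Theta),$$

then the update iteration is simplified as:

$$\Theta_{t+1} = \arg\min_{\Theta} Q_{\eta_t}(\Theta, \Theta_t) = \mathrm{prox}_{\eta_t g}\left(\Theta_t - \eta_t \nabla f(\Theta_t)\right).$$

Nevertheless, the Lipschitz gradient constant of $f$ is unknown over the whole domain in the JGL problem. Therefore, our approach needs a backtracking line search to calculate the step size $\eta_t$. We show the details in Algorithm 2.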

| Algorithm 2 ISTA for problem (15). |

| Input: , tolerance , backtracking constant , initial step size , initial iterate . For (until convergence) do |

|

| end Output: optimal solution to problem (15), . |

In the update of $\Theta_{t+1}$, we need to compute the proximal operators for the fused and group lasso penalties. In the following, for each of them, the problem can be divided into the fused lasso problems [29] and group lasso problems [30,31] for $i, j = 1, \ldots, p$. We apply the solutions given by (20) and (21) below.

3.1.1. Fused Lasso Penalty

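By the definition of the proximal operator in the update step, we have:

$$\Theta_{t+1} = \arg\min_{\Theta} \left\{ \frac{1}{2}\|\Theta - \Theta_t + \eta_t \nabla f(\Theta_t)\|_F^2 + \eta_t\lambda_1 \sum_{k=1}^{K} \sum_{i \neq j} |\theta_{k,i,j}| + \eta_t\lambda_2 \sum_{k < k'} \sum_{i,j} |\theta_{k,i,j} - \theta_{k',i,j}| \right\}. \quad (18)$$

Problem (18) is separable with respect to the elements $\theta_{k,i,j}$ in $\Theta^{(k)}$; hence, the proximal operator can be computed in a componentwise manner: Let $Z = \Theta_t - \eta_t \nabla f(\Theta_t)$; then, problem (18) reduces to the following for $i = 1, \ldots, p$, $j = 1, \ldots, p$:

$$\min_{\theta_{1,i,j}, \ldots, \theta_{K,i,j}} \left\{ \frac{1}{2} \sum_{k=1}^{K} (\theta_{k,i,j} - z_{k,i,j})^2 + \eta_t\lambda_1 I(i \neq j) \sum_{k=1}^{K} |\theta_{k,i,j}| + \eta_t\lambda_2 \sum_{k < k'} |\theta_{k,i,j} - \theta_{k',i,j}| \right\}, \quad (19)$$

where $I(i \neq j)$ is an indicator function, the value of which is 1 only when $i \neq j$.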

The problem (19) is known as the fused lasso problem [29,32] given for . In particular, let and . When , and , the solution to (19) can be obtained through the soft thresholding operator based on the solution when by the following Lemma.

Lemma 1.

([33]) Denote the solution to parameters α and β as , and then the solution of the fused lasso problem:

is given by when are given for .

Additionally, rather efficient algorithms are available for solving the fused lasso problem (20) when (i.e., ) such as [32,34,35].
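For intuition, the two-stage structure (solve the fusion-only problem first, then soft-threshold) can be illustrated for the special case of two classes, where the fusion-only problem has a well-known closed form. The sketch below assumes $K = 2$ and a single off-diagonal position; `alpha` and `beta` stand for the sparsity and fusion penalties already multiplied by the step size, and the function name `fused_prox_k2` is hypothetical. For larger $K$, a dedicated fused lasso solver would be needed instead.

```python
from typing import Tuple


def soft_threshold(x: float, lam: float) -> float:
    """Scalar soft-thresholding: sign(x) * max(|x| - lam, 0)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def fused_prox_k2(z1: float, z2: float, alpha: float, beta: float) -> Tuple[float, float]:
    """Minimize 0.5*((t1-z1)^2 + (t2-z2)^2) + alpha*(|t1|+|t2|) + beta*|t1-t2|."""
    # Stage 1: fusion-only solution (alpha = 0).
    if z1 > z2 + 2.0 * beta:
        t1, t2 = z1 - beta, z2 + beta
    elif z2 > z1 + 2.0 * beta:
        t1, t2 = z1 + beta, z2 - beta
    else:
        # The two values are close enough to be fused to their average.
        t1 = t2 = 0.5 * (z1 + z2)
    # Stage 2: soft-threshold the fused solution to add sparsity.
    return soft_threshold(t1, alpha), soft_threshold(t2, alpha)
```

When the two inputs differ by no more than `2 * beta`, both classes receive the same value, which is how the fused penalty encourages shared edge values across classes.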

3.1.2. Group Lasso Penalty

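By definition, the update of $\Theta_{t+1}$ for the group lasso penalty is as follows:

$$\Theta_{t+1} = \arg\min_{\Theta} \left\{ \frac{1}{2}\|\Theta - \Theta_t + \eta_t \nabla f(\Theta_t)\|_F^2 + \eta_t\lambda_1 \sum_{k=1}^{K} \sum_{i \neq j} |\theta_{k,i,j}| + \eta_t\lambda_2 \sum_{i \neq j} \sqrt{\sum_{k=1}^{K} \theta_{k,i,j}^2} \right\}.$$

Similarly, let $Z = \Theta_t - \eta_t \nabla f(\Theta_t)$; then, the problem becomes the following for $i = 1, \ldots, p$, $j = 1, \ldots, p$:

$$\min_{\theta_{1,i,j}, \ldots, \theta_{K,i,j}} \left\{ \frac{1}{2} \sum_{k=1}^{K} (\theta_{k,i,j} - z_{k,i,j})^2 + \eta_t\lambda_1 I(i \neq j) \sum_{k=1}^{K} |\theta_{k,i,j}| + \eta_t\lambda_2 I(i \neq j) \sqrt{\sum_{k=1}^{K} \theta_{k,i,j}^2} \right\}.$$

We have $\theta_{k,i,j} = z_{k,i,j}$ for $i = j$. In addition, for $i \neq j$, the solution [31,36,37] is given by

$$\theta_{k,i,j} = \mathcal{S}_{\eta_t\lambda_1}(z_{k,i,j}) \left( 1 - \frac{\eta_t\lambda_2}{\sqrt{\sum_{k'=1}^{K} \mathcal{S}_{\eta_t\lambda_1}(z_{k',i,j})^2}} \right)_+. \quad (21)$$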
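The closed-form group lasso update for one off-diagonal position can be sketched as follows: soft-threshold each class value, then shrink the resulting vector toward zero as a group. This is a minimal sketch, assuming `alpha` and `beta` are the sparsity and group penalties already multiplied by the step size; the function name `group_prox` is hypothetical.

```python
import math
from typing import List


def soft_threshold(x: float, lam: float) -> float:
    """Scalar soft-thresholding: sign(x) * max(|x| - lam, 0)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def group_prox(z: List[float], alpha: float, beta: float) -> List[float]:
    """Proximal map of alpha * sum|t_k| + beta * ||t||_2 applied to the K values in z."""
    shrunk = [soft_threshold(v, alpha) for v in z]
    norm = math.sqrt(sum(v * v for v in shrunk))
    if norm <= beta:
        # The whole group is set to zero: the edge is absent in every class.
        return [0.0 for _ in z]
    scale = 1.0 - beta / norm
    return [scale * v for v in shrunk]
```

Because the scaling factor is shared by all classes, an edge tends to be either present in all K graphs or absent from all of them, which matches the similar-sparsity-structure behavior attributed to the group penalty.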

3.2. Modified ISTA for JGL

Thus far, we have seen that $f$ in the JGL problem (15) does not have a globally Lipschitz-continuous gradient. The ISTA may not be efficient enough for the JGL case because it includes the backtracking line search, which needs to evaluate the objective function and check the positive definiteness of $\Theta_{t+1}$ in Step 1 of Algorithm 2 and is inefficient when the evaluation is expensive.

In this section, we modify Algorithm 2 to Algorithm 3 based on the step-size selection strategy in Section 2.3, which takes advantage of the properties of the self-concordant function. The self-concordant function does not rely on the Lipschitz gradient assumption on the function [24], and we can eliminate the need for the backtracking line search.

Lemma 2.

([38]) Self-concordance is preserved by scaling and addition: if f is a self-concordant function and $a \ge 1$ is a constant, then $af$ is self-concordant. If $f_1, f_2$ are self-concordant, then $f_1 + f_2$ is self-concordant.

By Lemma 2, the function $f$ in (16) is self-concordant. In Algorithm 3, for the initial step size $\eta_t$ in each iteration, we use the Barzilai–Borwein method [39]. We apply the step-size mechanism in Section 2.3, which is employed in Steps 3–5 of Algorithm 3.

| Algorithm 3 Modified ISTA (M-ISTA). |

| Input: , tolerance , initial step size , initial iterate . For (until convergence) do |

|

| end Output: optimal solution to problem (15), . |

Unlike Step 1 of Algorithm 2, this algorithm has no backtracking procedure that guarantees the positive definiteness of $\Theta_{t+1}$. We next show how to ensure the positive definiteness of $\Theta_{t+1}$ in the iterations of Algorithm 3.

Lemma 3.

([40], Theorem 2.1.1) Let f be a self-concordant function, and let . Additionally, if

then .

In Algorithm 3, because we know with and by Steps 3–5. Thus, we have :

which implies,

Hence, from Lemma 3, we see that stays in the domain and maintains positive definiteness.

3.3. Theoretical Analysis

For multiple Gaussian graphical models, Honorio and Samaras [14] and Hara and Washio [17] provided lower and upper bounds for the optimal solution . However, the models they considered are different than the JGL. To the best of our knowledge, no related research has provided the bounds of the optimal solution for the JGL problem (15).

In the following section, we show the bounds of the optimal solution for the JGL and the iterates generated by Algorithms 2 and 3, which are applied to both fused and group lasso-type penalties.

Proposition 1.

For the proof, see Appendix A.1.

Note that the objective function value always decreases with the increase in iteration in both algorithms due to [25] (Remark 3.1) and Lemma 12 in [24]. Therefore, the following inequality holds for Algorithms 2 and 3:

Then, based on the condition (22), we provide the explicit bounds of iterates in Algorithms 2 and 3 for the JGL problem (15).

Proposition 2.

Sequence generated by Algorithms 2 and 3 can be bounded:

where , , , and constant .

For the proof, see Appendix A.2.

With the help of Propositions 1 and 2, and the following Lemma, we can obtain the range of the step size that ensures the linear convergence rate of Algorithm 2.

Lemma 4.

Let be t-th iterate in Algorithm 2. Denote and as the minimum and maximum eigenvalues of the corresponding matrix, respectively. Define

and , , , and . The sequence generated by Algorithm 2 satisfies

with the convergence rate .

Proof.

It can be easily extended by Lemma 3 in [10]. □

Lemma 4 implies that to obtain the convergence rate , we require:

After using Propositions 1 and 2, we can obtain the bounds of . Further, we can obtain the step size that satisfies (23) and guarantees the linear convergence rate . However, this step size is quite conservative in practice. Hence, we consider the Barzilai–Borwein method for implementation and regard the step size that satisfies (23) as a safe choice. When the number of backtracking iterations in Step 1 of Algorithm 2 exceeds a given maximum without fulfilling the backtracking line search condition, we can use the safe step size for the subsequent calculations. In Section 4.2.3, we confirm the linear convergence rate of the proposed ISTA by experiment.

4. Experiments

In this section, we evaluate the performance of the proposed methods on both synthetic and real datasets, and we compare the following algorithms:

- ADMM: the general ADMM method proposed by [13].

- FMGL: the proximal Newton-type method proposed by [23].

- ISTA: the proposed method in Algorithm 2.

- M-ISTA: the proposed method in Algorithm 3.

We perform all the tests in RStudio on a MacBook Air with a 1.6 GHz Intel Core i5 and 8 GB of memory. The wall times are recorded as the run times for the four algorithms.

4.1. Stopping Criteria and Model Selection

In the experiments, we consider two stopping criteria for the algorithms.

1. Relative error stopping criterion:

2. Objective error stopping criterion:

Here, $\epsilon$ is a given accuracy tolerance; we terminate the algorithm if the above error is smaller than $\epsilon$ or the number of iterations exceeds 1000. We use the objective error for convergence rate analysis and the relative error for the time comparison.
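As an illustration of how a relative error check of this kind might be coded, the sketch below measures the change between consecutive iterates in the Frobenius norm, summed over the K classes and normalized by the size of the previous iterate. The exact normalization is an assumption made for this example, as are the function names; only the tolerance and the cap of 1000 iterations come from the text.

```python
import math
from typing import List

Matrix = List[List[float]]


def frobenius_norm(a: Matrix) -> float:
    return math.sqrt(sum(v * v for row in a for v in row))


def relative_change(new: List[Matrix], old: List[Matrix]) -> float:
    """Sum of Frobenius norms of the per-class changes, relative to the old iterate."""
    num = 0.0
    den = 0.0
    for a, b in zip(new, old):
        diff = [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]
        num += frobenius_norm(diff)
        den += frobenius_norm(b)
    # Guard against division by a tiny denominator (assumed convention).
    return num / max(den, 1.0)


def should_stop(new: List[Matrix], old: List[Matrix], eps: float,
                iteration: int, max_iter: int = 1000) -> bool:
    """Stop when the relative change is below eps or the iteration cap is reached."""
    return relative_change(new, old) < eps or iteration >= max_iter
```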

The JGL model is affected by the regularization parameters $\lambda_1$ and $\lambda_2$. For selecting the parameters, we use the V-fold crossvalidation method. First, the dataset is randomly split into V segments of equal size. Then, one segment is held out as test data, the model is estimated from the other segments (training data), and the estimate is evaluated on the held-out segment. This is repeated V times so that each segment is used once as test data.

Let be the sample covariance matrix of the v-th ( ) segment for class . We estimate the inverse covariance matrix by the remaining subsets and choose and , which minimize the average predictive negative log-likelihood as follows:
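The splitting step of V-fold crossvalidation can be sketched as below. This only shows how sample indices might be partitioned into folds and paired into training and test sets; fitting the JGL model on each training set and scoring the held-out segment are left out. The function names and the use of a fixed random seed are assumptions for illustration.

```python
import random
from typing import List, Tuple


def v_fold_indices(n: int, v: int, seed: int = 0) -> List[List[int]]:
    """Randomly partition the indices 0..n-1 into v folds of nearly equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # Taking every v-th shuffled index gives fold sizes that differ by at most one.
    return [sorted(idx[f::v]) for f in range(v)]


def train_test_split(folds: List[List[int]], held_out: int) -> Tuple[List[int], List[int]]:
    """Return (training indices, test indices) when fold `held_out` is the test set."""
    test = folds[held_out]
    train = sorted(i for f, fold in enumerate(folds) if f != held_out for i in fold)
    return train, test
```

In a multi-class setting one would typically apply such a split within each class so that every fold contains samples from all K classes; that refinement is omitted here.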

4.2. Synthetic Data

The performance of the proposed methods was assessed on synthetic data in terms of the number of iterations, the execution time, the squared error, and the receiver operating characteristic (ROC) curve. We follow the data generation mechanism described in [41] with some modifications for the JGL model. We put the details in Appendix B.

4.2.1. Time Comparison Experiments

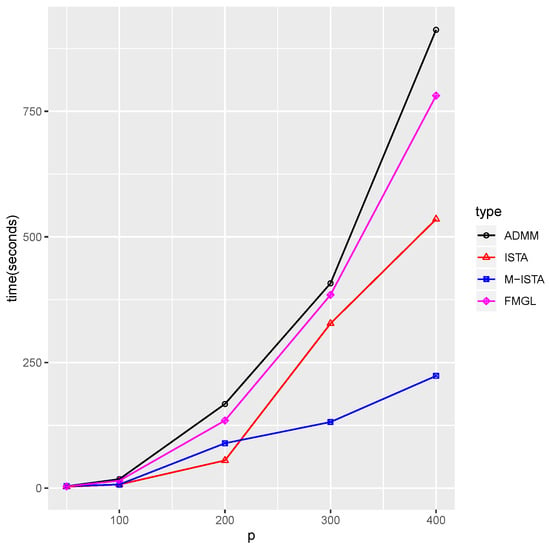

We vary to compare the execution time of our proposed methods with that of the existing methods. We consider only the fused penalty in our proposed method for a fair comparison in the experiments because the FMGL algorithm applies only to the fused penalty. First, we compare the performance among different algorithms under various dimensions p, which are shown in Figure 1.

Figure 1.

Plot of time comparison under different p. Setting , , , and .

Figure 1 shows that the execution time of the FMGL and ADMM increases rapidly as p increases. In particular, we observe that the M-ISTA significantly outperforms the other methods when p exceeds 200. The ISTA shows better performance than the other three methods when p is less than 200, but it requires more time than the M-ISTA as p grows. A plausible explanation is that evaluating the objective function in the backtracking line search at every iteration increases the computational burden, especially as p increases, which makes the M-ISTA a good choice for these cases. Conversely, the ISTA can be a good candidate when the evaluation is inexpensive.

Table 2 summarizes the performance of the four algorithms under different parameter settings to achieve a given precision, , of the relative error. The results presented in Table 2 reveal that when we increase the number of classes K, all the algorithms require more time. Moreover, the execution time of ADMM is by far the largest among them. When we vary , the algorithms become more efficient as the value increases. For most instances, the M-ISTA and ISTA outperform the existing methods, ADMM and FMGL. For the exceptional cases ( and ), the M-ISTA and ISTA are still comparable with the FMGL and faster than ADMM.

Table 2.

Computational time under different settings.

4.2.2. Algorithm Assessment

We generate the simulation data as described in Appendix B and regard the synthetic inverse covariance matrices as the true values for our assessment experiments.

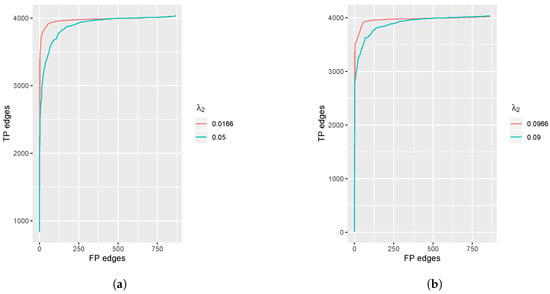

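First, we assessed our proposed method by drawing an ROC curve, which displays the number of true positive edges (i.e., TP edges) selected compared to the number of false positive edges (i.e., FP edges) selected. We say that an edge $(i,j)$ in the k-th class is selected in estimate $\hat{\Theta}^{(k)}$ if element $\hat{\theta}_{k,i,j} \neq 0$. A selected edge is a true positive if the true precision matrix element $\theta_{k,i,j} \neq 0$ and a false positive if the true precision matrix element $\theta_{k,i,j} = 0$, where the two quantities are defined by

$$\mathrm{TP} = \sum_{k=1}^{K} \sum_{i < j} I(\hat{\theta}_{k,i,j} \neq 0 \text{ and } \theta_{k,i,j} \neq 0)$$

and

$$\mathrm{FP} = \sum_{k=1}^{K} \sum_{i < j} I(\hat{\theta}_{k,i,j} \neq 0 \text{ and } \theta_{k,i,j} = 0),$$

where $I(\cdot)$ is the indicator function.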
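Counting true and false positive edges from estimated and true precision matrices can be sketched as follows. The sketch assumes that each undirected edge is counted once (upper triangle only) and that an entry is treated as nonzero when its absolute value exceeds a small tolerance `tol`; both conventions, and the function name `count_tp_fp`, are assumptions for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def count_tp_fp(estimates: List[Matrix], truths: List[Matrix],
                tol: float = 0.0) -> Tuple[int, int]:
    """Count selected edges that are truly present (TP) or truly absent (FP)."""
    tp = 0
    fp = 0
    for est, tru in zip(estimates, truths):
        p = len(est)
        for i in range(p):
            for j in range(i + 1, p):  # upper triangle: each edge counted once
                if abs(est[i][j]) > tol:  # the edge is selected in the estimate
                    if abs(tru[i][j]) > tol:
                        tp += 1
                    else:
                        fp += 1
    return tp, fp
```

Sweeping the sparsity parameter and recording the resulting (FP, TP) pairs gives the points of a curve like those in Figure 2.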

To confirm the validity of the proposed methods, we compare the ROC figures of the fused penalty and group penalty. We fix the parameters for each curve and change the value to obtain various numbers of selected edges because the sparsity penalty parameter can control the number of selected total edges.

We show the ROC curves for fused and group lasso penalties in Figure 2a,b respectively. From the figures, we observe that both penalties show highly accurate predictions for the edge selections. The result of in the fused penalty case is better than that in . Additionally, the result of in the group penalty case is better than that in , which means that if we select the tuning parameters properly, then we can obtain precise results while simultaneously meeting our different model demands.

Figure 2.

Plot of true positive edges vs. false positive edges selected. Setting , . (a) The fused penalty; (b) The group penalty.

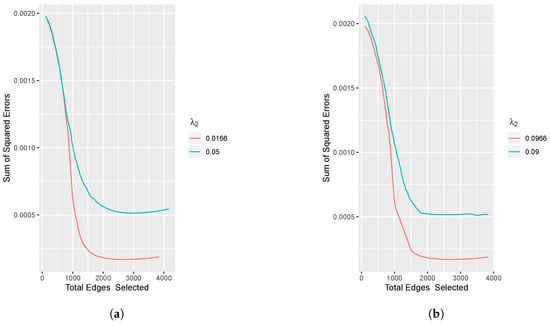

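Then, Figure 3a,b display the mean squared error (MSE) between the estimated values and true values:

$$\mathrm{MSE} = \frac{2}{Kp(p-1)} \sum_{k=1}^{K} \sum_{i < j} (\hat{\theta}_{k,i,j} - \theta_{k,i,j})^2,$$

where $\hat{\theta}_{k,i,j}$ is the value estimated by the proposed method, and $\theta_{k,i,j}$ is the true precision matrix value we used in the data generation.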
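A mean squared error of this kind can be computed as in the sketch below. The normalization used here, an average over the off-diagonal upper-triangle entries of all K matrices, is an assumption for this example, and the function name `mse_offdiag` is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def mse_offdiag(estimates: List[Matrix], truths: List[Matrix]) -> float:
    """Average squared error over the upper-triangle entries of all K matrices."""
    k = len(estimates)
    p = len(estimates[0])
    total = 0.0
    for est, tru in zip(estimates, truths):
        for i in range(p):
            for j in range(i + 1, p):
                total += (est[i][j] - tru[i][j]) ** 2
    # Each matrix contributes p * (p - 1) / 2 off-diagonal pairs.
    return 2.0 * total / (k * p * (p - 1))
```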

Figure 3.

Plot of the mean squared errors vs. total edges selected. Setting , . (a) The fused penalty; (b) The group penalty.

The figures illustrate that when the total number of edges selected increases, the errors decrease and finally achieve relatively low values.

Overall, the proposed method shows competitive efficiency not only in computational time but also in accuracy.

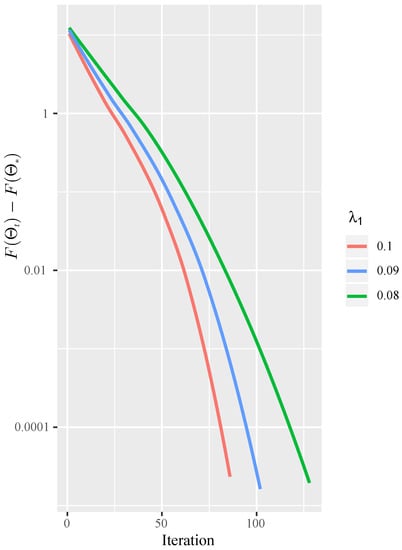

4.2.3. Convergence Rate

This section shows the convergence rate of the ISTA for solving the JGL problem (15) in practice, with , and . We recorded the number of iterations to achieve the different tolerance of in Figure 4 and ran it on a synthetic dataset, with , , , and . The figure reveals that as decreases, more iterations are needed to converge to the specified tolerance. Moreover, the figure shows the linear convergence rate of the proposed ISTA method, which corroborates the theoretical analysis in Section 3.3.

Figure 4.

Plot of vs. the number of iterations with different values. Setting and .

4.3. Real Data

In this section, we use two different real datasets to demonstrate the performance of our proposed method and visualize the result.

Firstly, we used the presidential speeches dataset in [42] for the experiment to jointly estimate common links across graphs and show the common structure. The dataset contains 75 most-used words (features) from several big speeches of the 44 US presidents (samples). In addition, we used the clustering result in [42], where the authors split the 44 samples into two groups with similar features, and then we obtained two classes of samples .

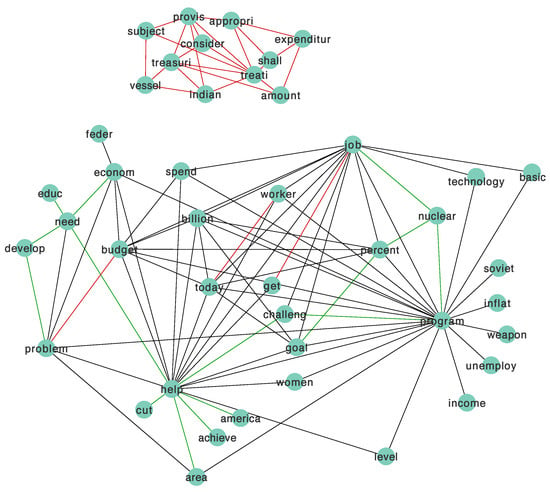

We used Cytoscape [43] to visualize the results when and . We chose these relatively large tuning parameters for better interpretation of the network figure. Figure 5 shows the relationship network graph of the high-frequency words identified by the JGL model with the proposed method. As shown in the figure, each node represents a word, and the edges demonstrate the relationships between words.

Figure 5.

Network figure of the words in the presidential speeches dataset.

We use different colors to show various structures. The black edges are a common structure between the two classes, the red edges are the specific structures for the first class , and the green edges are for the second class . Figure 5 shows a subnetwork on the top with red edges, meaning there are relationships among those words, and the connections only exist in the first group.

We compared the time cost among four algorithms and show the results in Table 3. We used the crossvalidation method () described in Section 4.1 to select the optimal tuning parameters (, ). In addition, we manually chose the other two pairs of parameters for more comparisons.

Table 3.

Time comparison result of two real datasets.

Table 3 shows that ISTA outperforms the other three algorithms, and our proposed methods offer stable performance when varying the parameters, while ADMM is the slowest in most cases.

Secondly, we use a breast cancer dataset [44] for time comparison. There are 250 samples and 1000 genes in the dataset, with 192 control samples and 58 case samples . Furthermore, we extract 200 genes with the highest variances among the original genes. The tuning parameter pair , was chosen by the crossvalidation method. Table 3 exhibits that our proposed methods (ISTA and M-ISTA) outperform ADMM and FMGL, and M-ISTA shows the best performance in the breast cancer dataset.

5. Discussion

We propose two efficient proximal gradient descent procedures with and without the backtracking line search option for the joint graphical lasso. The first (Algorithm 2) does not require extra variables, unlike ADMM, which needs manual tuning of the Lagrangian penalty parameters in [13] as well as storing and calculating dual variables. Moreover, we reduce the iterate update step to lasso-type subproblems that can be solved efficiently and precisely. Based on Algorithm 2, we modified the step-size selection by extending the strategy in [24] to obtain the second procedure (Algorithm 3), which does not rely on the Lipschitz assumption. Additionally, the second procedure does not require a backtracking line search, significantly reducing the computation time needed to evaluate objective functions.

From the theoretical perspective, we reach the linear convergence rate for the ISTA. Furthermore, we derive the lower and upper bounds of the solution to the JGL problem and the iterates in the algorithms, guaranteeing that the ISTA converges linearly. Numerically, the methods are demonstrated on simulated and real datasets to illustrate their robust and efficient performance over state-of-the-art algorithms.

Regarding further computational improvement, the most expensive step in the algorithms is the inversion of the K matrices of size $p \times p$ required by the gradient of $f$. Both algorithms have a complexity of $O(Kp^3)$ per iteration. This cost could be reduced by using more efficient matrix inversion algorithms with lower complexity. In addition, we can also use the faster computation procedure in [13] to decompose the optimization problem for the proposed methods and regard it as preprocessing. Overall, the proposed methods are highly efficient for the joint graphical lasso problem.

Author Contributions

Conceptualization, J.C., R.S. and J.S.; methodology, J.C., R.S. and J.S.; software, J.C. and R.S.; validation, J.C., R.S. and J.S.; formal analysis, J.C., R.S. and J.S.; writing—original draft preparation, J.C. and J.S.; writing—review and editing, J.C., R.S. and J.S.; visualization, J.C.; supervision, J.S.; project administration, J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Grant-in-Aid for Scientific Research (KAKENHI) C, Grant number: 18K11192.

Data Availability Statement

Publicly available datasets were analyzed in this paper. Presidential speeches dataset: https://www.presidency.ucsb.edu, accessed on 5 November 2021; Breast cancer dataset: https://www.rdocumentation.org/packages/doBy/versions/4.5-15/topics/breastcancer, accessed on 5 November 2021.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ADMM | alternating direction method of multipliers |
| FMGL | fused multiple graphical lasso algorithm |
| FP | false positive |
| G-ISTA | graphical iterative shrinkage-thresholding algorithm |
| GL | graphical lasso |
| ISTA | iterative shrinkage-thresholding algorithm |
| JGL | joint graphical lasso |
| M-ISTA | modified iterative shrinkage-thresholding algorithm |
| MSE | mean squared error |
| ROC | receiver operating characteristic |
| TP | true positive |

Appendix A. Proofs of Propositions

Appendix A.1. Proof of Proposition 1

We first introduce the Lagrange dual problem of (15). By introducing the auxiliary variables , we can rewrite the problem as follows:

Then, the Lagrange function of the above is given by:

where are dual variables. To obtain the dual problem, we minimize over the primal variables as follows:

Taking the derivative of the function gives:

Hence, we can obtain the duality gap [38] (the primal problem minus the dual problem) as follows:

When the gap value is 0, the optimal solution is found. Because the conjugate function is the indicator function, its value is 0 at the optimal solution.

First, for the group penalty , the duality gap is

From Equation (A2), we obtain

From Equation (A1), we have the following relationship of diagonal elements,

and due to dual variable , for . Hence,

By , we obtain the upper bound:

The proof is similar for the fused penalty ; therefore, we omit it here. Next, we continue to prove the lower bound of .

First, consider the group penalty . Let be a non-negative matrix satisfying . We introduce the Lagrange multipliers and for , following a procedure similar to that in [17].

Then, the new Lagrange problem becomes,

Taking the derivatives with respect to and , we obtain the following equations:

When , from Equation (A5),

Summing over k,

Then,

The last equation holds because

We only consider the case when for , because from Equations (A5) and (A6), we know . Overall, the lower bound is

The lower bound for the fused penalty can be derived in a similar way.

Appendix A.2. Proof of Proposition 2

By Equation (22) and the convexity of , it is easy to obtain

Since , then

Hence,

Then, by Equation (A3), we can complete the proof of the upper bound.

To prove the lower bound, denote

By the definition of the matrix norm, we have

Denote the upper bound of as M, and that of as , for . By the definition of the tensor norm, we have .

Let constant . By Equation (22), we have

Note that implies and because

Let the eigenvalues of be . Then , hence,

Then,

Let the coefficient of the term containing in be ; then

Because

denote , then,

Hence,

Then, we can obtain

Hence, the lower bound is proved:

Appendix B. Data Generation

We generate independent and identically distributed samples from a multivariate normal distribution , where is the inverse covariance matrix of the k-th category. Specifically, we generate p points randomly on a unit space and calculate their pairwise distances. Then, we find the m nearest neighbors of each point according to this distance and connect any two points that are m-nearest neighbors of each other. The integer m determines the degree of sparsity of the data, and m ranges from 4 to 9 in our experiments.

Additionally, we add heterogeneity to the common structure by building extra individual connections in the following way: we randomly choose a pair of symmetric zero elements, , and replace them with a value uniformly generated from the interval. This operation is repeated times, where M is the number of off-diagonal nonzero elements in .
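The two steps above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the code used in the experiments: the points are assumed to lie in a two-dimensional unit square, and the function names as well as the parameters `n_extra`, `low`, and `high` (the number of repetitions and the sampling interval) are hypothetical placeholders to be supplied by the caller. The sketch only builds the sparsity pattern and the extra connections; it does not assign the common edge weights or enforce positive definiteness.

```python
import numpy as np


def mutual_knn_adjacency(p, m, rng):
    """Boolean (p x p) adjacency: i and j are linked iff each is
    among the other's m nearest neighbors."""
    points = rng.random((p, 2))  # assumption: 2-D unit square
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbor
    nearest = np.argsort(dist, axis=1)[:, :m]
    is_nn = np.zeros((p, p), dtype=bool)
    is_nn[np.repeat(np.arange(p), m), nearest.ravel()] = True
    return is_nn & is_nn.T  # keep only mutual neighbors


def add_individual_edges(theta, n_extra, low, high, rng):
    """Fill n_extra randomly chosen symmetric zero pairs of theta with
    values drawn uniformly from [low, high) (hypothetical parameters)."""
    theta = theta.copy()
    iu, ju = np.triu_indices(theta.shape[0], k=1)
    zero = np.flatnonzero(theta[iu, ju] == 0)
    chosen = rng.choice(zero, size=min(n_extra, zero.size), replace=False)
    values = rng.uniform(low, high, size=chosen.size)
    theta[iu[chosen], ju[chosen]] = values
    theta[ju[chosen], iu[chosen]] = values  # keep the matrix symmetric
    return theta
```

For example, `adj = mutual_knn_adjacency(20, 4, np.random.default_rng(0))` gives a common structure, and `add_individual_edges(adj.astype(float), 3, 0.1, 0.3, rng)` adds three class-specific connections; the numeric values here are arbitrary illustrations.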

References

1. Lauritzen, S.L. Graphical Models; Clarendon Press: Oxford, UK, 1996; Volume 17.
2. Meinshausen, N.; Bühlmann, P. High-dimensional graphs and variable selection with the lasso. Ann. Stat. 2006, 34, 1436–1462.
3. Yuan, M.; Lin, Y. Model selection and estimation in the Gaussian graphical model. Biometrika 2007, 94, 19–35.
4. Friedman, J.; Hastie, T.; Tibshirani, R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics 2008, 9, 432–441.
5. Banerjee, O.; El Ghaoui, L.; d’Aspremont, A. Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data. J. Mach. Learn. Res. 2008, 9, 485–516.
6. Rothman, A.J.; Bickel, P.J.; Levina, E.; Zhu, J. Sparse permutation invariant covariance estimation. Electron. J. Stat. 2008, 2, 494–515.
7. Banerjee, O.; Ghaoui, L.E.; d’Aspremont, A.; Natsoulis, G. Convex optimization techniques for fitting sparse Gaussian graphical models. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 89–96.
8. Xue, L.; Ma, S.; Zou, H. Positive-definite l1-penalized estimation of large covariance matrices. J. Am. Stat. Assoc. 2012, 107, 1480–1491.
9. Mazumder, R.; Hastie, T. The graphical lasso: New insights and alternatives. Electron. J. Stat. 2012, 6, 2125.
10. Guillot, D.; Rajaratnam, B.; Rolfs, B.T.; Maleki, A.; Wong, I. Iterative thresholding algorithm for sparse inverse covariance estimation. arXiv 2012, arXiv:1211.2532.
11. d’Aspremont, A.; Banerjee, O.; El Ghaoui, L. First-order methods for sparse covariance selection. SIAM J. Matrix Anal. Appl. 2008, 30, 56–66.
12. Hsieh, C.J.; Sustik, M.A.; Dhillon, I.S.; Ravikumar, P. QUIC: Quadratic approximation for sparse inverse covariance estimation. J. Mach. Learn. Res. 2014, 15, 2911–2947.
13. Danaher, P.; Wang, P.; Witten, D.M. The joint graphical lasso for inverse covariance estimation across multiple classes. J. R. Stat. Soc. Ser. B Stat. Methodol. 2014, 76, 373.
14. Honorio, J.; Samaras, D. Multi-Task Learning of Gaussian Graphical Models; ICML: Baltimore, MA, USA, 2010.
15. Guo, J.; Levina, E.; Michailidis, G.; Zhu, J. Joint estimation of multiple graphical models. Biometrika 2011, 98, 1–15.
16. Zhang, B.; Wang, Y. Learning structural changes of Gaussian graphical models in controlled experiments. arXiv 2012, arXiv:1203.3532.
17. Hara, S.; Washio, T. Learning a common substructure of multiple graphical Gaussian models. Neural Netw. 2013, 38, 23–38.
18. Glowinski, R.; Marroco, A. Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité d’une classe de problèmes de Dirichlet non linéaires. ESAIM Math. Model. Numer. Anal.-Modél. Math. Et Anal. Numér. 1975, 9, 41–76.
19. Tang, Q.; Yang, C.; Peng, J.; Xu, J. Exact hybrid covariance thresholding for joint graphical lasso. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer: Berlin/Heidelberg, Germany, 2015; pp. 593–607.
20. Hallac, D.; Park, Y.; Boyd, S.; Leskovec, J. Network inference via the time-varying graphical lasso. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 205–213.
21. Gibberd, A.J.; Nelson, J.D. Regularized estimation of piecewise constant gaussian graphical models: The group-fused graphical lasso. J. Comput. Graph. Stat. 2017, 26, 623–634.
22. Boyd, S.; Parikh, N.; Chu, E. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Publishers Inc.: Norwell, MA, USA, 2011.
23. Yang, S.; Lu, Z.; Shen, X.; Wonka, P.; Ye, J. Fused multiple graphical lasso. SIAM J. Optim. 2015, 25, 916–943.
24. Tran-Dinh, Q.; Kyrillidis, A.; Cevher, V. Composite self-concordant minimization. J. Mach. Learn. Res. 2015, 16, 371–416.
25. Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.
26. Nesterov, Y.; Nemirovskii, A. Interior-Point Polynomial Algorithms in Convex Programming; SIAM: Philadelphia, PA, USA, 1994.
27. Renegar, J. A Mathematical View of Interior-Point Methods in Convex Optimization; SIAM: Philadelphia, PA, USA, 2001.
28. Nesterov, Y. Introductory Lectures on Convex Optimization: A Basic Course; Springer Science & Business Media: New York, NY, USA, 2003; Volume 87.
29. Tibshirani, R.; Saunders, M.; Rosset, S.; Zhu, J.; Knight, K. Sparsity and smoothness via the fused lasso. J. R. Stat. Soc. Ser. B 2005, 67, 91–108.
30. Simon, N.; Friedman, J.; Hastie, T.; Tibshirani, R. A sparse-group lasso. J. Comput. Graph. Stat. 2013, 22, 231–245.
31. Friedman, J.; Hastie, T.; Tibshirani, R. A note on the group lasso and a sparse group lasso. arXiv 2010, arXiv:1001.0736.
32. Hoefling, H. A path algorithm for the fused lasso signal approximator. J. Comput. Graph. Stat. 2010, 19, 984–1006.
33. Friedman, J.; Hastie, T.; Höfling, H.; Tibshirani, R. Pathwise coordinate optimization. Ann. Appl. Stat. 2007, 1, 302–332.
34. Tibshirani, R.J.; Taylor, J. The solution path of the generalized lasso. Ann. Stat. 2011, 39, 1335–1371.
35. Johnson, N.A. A dynamic programming algorithm for the fused lasso and L0-segmentation. J. Comput. Graph. Stat. 2013, 22, 246–260.
36. Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B 2006, 68, 49–67.
37. Suzuki, J. Sparse Estimation with Math and R: 100 Exercises for Building Logic; Springer Nature: Berlin/Heidelberg, Germany, 2021.
38. Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.
39. Barzilai, J.; Borwein, J.M. Two-point step size gradient methods. IMA J. Numer. Anal. 1988, 8, 141–148.
40. Nemirovski, A. Interior point polynomial time methods in convex programming. Lect. Notes 2004, 42, 3215–3224.
41. Li, H.; Gui, J. Gradient directed regularization for sparse Gaussian concentration graphs, with applications to inference of genetic networks. Biostatistics 2006, 7, 302–317.
42. Weylandt, M.; Nagorski, J.; Allen, G.I. Dynamic visualization and fast computation for convex clustering via algorithmic regularization. J. Comput. Graph. Stat. 2020, 29, 87–96.
43. Shannon, P.; Markiel, A.; Ozier, O.; Baliga, N.S.; Wang, J.T.; Ramage, D.; Amin, N.; Schwikowski, B.; Ideker, T. Cytoscape: A software environment for integrated models of biomolecular interaction networks. Genome Res. 2003, 13, 2498–2504.
44. Miller, L.D.; Smeds, J.; George, J.; Vega, V.B.; Vergara, L.; Ploner, A.; Pawitan, Y.; Hall, P.; Klaar, S.; Liu, E.T.; et al. An expression signature for p53 status in human breast cancer predicts mutation status, transcriptional effects, and patient survival. Proc. Natl. Acad. Sci. USA 2005, 102, 13550–13555.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).