Abstract

We revise and slightly generalize some variational problems related to the “informational approach” to the classical optimization problem for automatic control systems, which was popular from 1970 to 1990. We find extremals for various degenerate (derivative-independent) functionals and propose some interpretations of the obtained minimax relations. The main example of such functionals is given by the Gelfand–Pinsker–Yaglom formula for the amount of information about one random process contained in another. We find some balance relations in a linear stationary one-dimensional system with Gaussian signals and interpret them in terms of Legendre duality.

To Nikolay Petrovich Bukanov, with my gratitude and admiration

1. Introduction

An informational approach to optimality criteria for control system synthesis was proposed by Bukanov in [1]. He considered the one-dimensional control system shown in Figure 1.

Remark 1.

The “subtraction” and “addition” nodes of the diagram mean that, for example, the input function and while

His choice of an informational criterion was motivated by a direct engineering application: studies of the onboard automatic control system of the TU-154 passenger jet. Mathematically, he used the classical Gelfand–Pinsker–Yaglom functional formula [2]

for the amount of information about one random process (the defect, or error, signal Z) contained in another such process (the control signal U), where denotes the mutual correlation function of these processes.

Pinsker rewrote this functional in the following form:

Here, are the power spectral densities of the control signal U and the defect signal Z, and is their mutual spectral density.

Bukanov expressed the functional variables of (1) in terms of the transfer functions of the object and the regulator and the spectral densities of the perturbations, and obtained the following explicit integral:

The transfer functions of the object and the regulator are represented by the frequency responses and . (Below, we describe in detail the reduction of Pinsker’s Formula (1) to Bukanov’s expression (2) in a slightly more general context.)

The aim of this paper to revise and to generalize this optimal regulator problem (for a class of linear stationary systems with non-zero program ()) as the classical optimization problem for automatic control system of Figure 1. We get the following results:

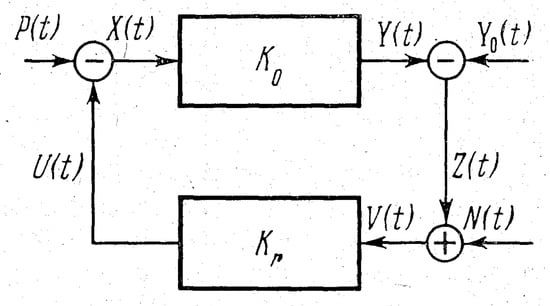

Figure 1.

This is a figure from [1]. Here, denotes the operator object which transform the input function to the output function which should be compared with the given function (the program function). The “defect” signal Z together with a measurement “noise” N forms the input of a regulator given by an operator . The resulting control signal U with an external perturbation P goes back to the input of the object operator .

- find extremals for a generalization of (1) and for some other degenerated (derivative independent) “informational” functionals (the main example of such functionals is given by the Pinsker formula for the entropy quantity of one random process in another.)

- obtained some minimax relations and propose its new interpretations as an “energy–balance equilibrium” in a spirit of the Brillouin’s and Schrodinger’s ideas of “negentropy” (we remind briefly this notion in the Discussion section).

- write our balance relations in a linear stationary one-dimensional system with Gaussian signals and interpret them in terms of Legendre duality.

We would like to warn the mathematically purist reader that this manuscript is not a “mathematical paper” in the formal sense. We (almost) never discuss or specify any “existence and finiteness conditions”; we prefer formal manipulations whenever they make sense. Therefore, we decided not to overload these short notes with precise statements, technicalities, and terminology from probability and measure theory. We refer interested readers to the book [2] for all rigorous conditions and other purely mathematical details and statements.

1.1. The Origins

Shannon’s famous formula [3] makes it possible to compute the capacity of a communication channel not only in the presence of white noise, but also when the transmission is perturbed by any Gaussian noise whose power spectrum is proportional to the squared modulus of a filter transfer function :

We recall it because it is probably the first case in which informational characteristics are implicitly subject to an “energy” constraint. Namely, let the power of the “transmitter” be bounded by some (positive) quantity, so that the spectral function of the transmitted signal is :

The Shannon formula mentioned above for the total capacity of reads, for the frequency bandwidth , as

Now, it is easy to give a mathematical answer to the natural question: how can the maximal transmission rate be achieved under the constraint (3)? Shannon proposes to consider the variational problem with a Lagrange multiplier:

which gives the condition

and implies that is a constant. Going further, we put in (3) and obtain

where and the extremal , while

In this paper, we apply these beautiful observations of the founding father of information theory to some (degenerate) variational problems with power constraints arising in the optimal control of automatic systems.
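Shannon’s Lagrange-multiplier argument recalled above is what is now usually called “water-filling”. The following minimal Python sketch illustrates it numerically on a discretized band. The noise spectrum, the band [0, 1], the power budget, and the function names `water_fill` and `capacity` are hypothetical choices for illustration only, and the capacity is taken in nats without the prefactor conventions of [3]. The water level is found by bisection; on the occupied part of the band, signal plus noise spectrum comes out constant, which is the classical form of Shannon’s conclusion.

```python
import numpy as np

def water_fill(noise, total_power, df, iters=200):
    """Allocate a signal spectrum S(f) >= 0 on a frequency grid so that
    sum(S) * df == total_power and sum(log(1 + S / noise)) * df is maximal.

    Uses bisection on the "water level" nu, with S(f) = max(nu - noise(f), 0).
    """
    lo, hi = 0.0, noise.max() + total_power / (df * noise.size)
    for _ in range(iters):
        nu = 0.5 * (lo + hi)
        used = np.clip(nu - noise, 0.0, None).sum() * df
        if used > total_power:
            hi = nu  # level too high: more power spent than allowed
        else:
            lo = nu  # level too low (or exact): raise it
    nu = 0.5 * (lo + hi)
    return np.clip(nu - noise, 0.0, None), nu

def capacity(signal, noise, df):
    """Discretized Shannon capacity integral in nats: sum(log(1 + S/N)) * df."""
    return np.sum(np.log1p(signal / noise)) * df

# Hypothetical band [0, W] with W = 1 and a hypothetical coloured noise spectrum.
f = np.linspace(0.0, 1.0, 1001)
df = f[1] - f[0]
noise = 0.2 + f**2
signal, level = water_fill(noise, total_power=0.5, df=df)

active = signal > 0
flat = np.full_like(noise, 0.5 / (df * noise.size))  # same power, spread uniformly
print("water level:", level)
print("S + N constant where S > 0:", np.allclose(signal[active] + noise[active], level))
print("capacity, water-filling vs flat:", capacity(signal, noise, df), capacity(flat, noise, df))
```

Bisection is used only because the allocated power is monotone in the water level; any one-dimensional root finder would do.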

1.2. Review of Some Previously Known Results

Informational criteria for control system synthesis were very popular in applied automatic control and measurement systems in 1970–1980. Here we should mention the works, relevant to our interests, of researchers from Academician Petrov’s school [4]. They concentrated on questions of correctness (well-posedness) and regularization (in the sense of Tikhonov) of control and statistical optimization problems. Their most important contribution (for our aims) was a study of the connection between regularization and correctness of problems on the one hand, and an approach motivated by Shannon’s channel capacity formulas above on the other. Mathematically, their main tool was based on Wiener filter theory and the related integral equations of Kolmogorov–Wiener type (see, for example, [5]).

The system considered by N. Bukanov seems conceptually similar to the models developed by researchers of Petrov’s school [5,6]. At the same time, one should stress that Bukanov’s choice of the informational criterion has some advantages: its mathematical toolbox does not rely on integral equations, and, as mentioned above, it was strongly motivated by engineering applications, namely numerical computations related to the optimization of the onboard automatic control system of the TU-154 jet.

We shall compare both approaches [1,5,6] on some basic examples of linear control systems.

Recall that [1] proposed a solution of the variational problem in the class of linear stationary systems with zero program () and Gaussian perturbation signals. Indeed, Formula (1) makes sense precisely in this class of random functions, admissible in the sense of [2].

More precisely, the main result of [1] is that the (degenerate) variational problem for the functional (2) has the following extremals:

which simultaneously minimize the functional of the error signal dispersion:

and the functional of the control signal power dispersion:

One can conclude that the extremal relations (5) between the object and regulator characteristics guarantee that the optimal regulator supplies minimal power to the controlled object. Another interesting observation made in [1] is that the minimal value of the functional (2) does not mean that the regulator does not use information at all; it only means that there is a certain “informational balance”:

between the channel capacity

of the object channel in the presence of perturbations P and N, the channel capacity

of the regulator channel in the presence of perturbations P and N, and the (half-)differences

of the informational performances of the outputs of the object and regulator channels with open () and closed () control loops:

Here, and are the corresponding complex frequency responses (see [1] for details).

1.3. Further Generalizations and the Subject of the Paper

Further studies of optimal linear control systems of this type were concerned with a natural generalization to systems with a non-trivial program ( ). At the same time, it was an interesting and mathematically natural question to study similar (degenerate) variational problems for various informational functionals analogous to (1) with a “power” constraint of type (3).

The first steps in this direction were taken by the author in collaboration with N. Bukanov and V. Kublanov. We reported our partial results in [7], which was, in fact, a short conference announcement. To the best of my knowledge, no details of those results were ever published. It should be mentioned that some of the results and the underlying idea of [7], namely, to consider an “entropy criterion” of optimal regulation, were rediscovered in 1986 in the announcement [8].

One of the aims of this manuscript is to revise and generalize the results reported in [7] and to consider them in the general context of variational problems with Legendre–Fenchel dual functionals.

The paper is organized as follows. Section 2 recalls and generalizes the basic results of [1]. We introduce the notation and recall the main definitions of the important notions, objects, and formulas used in the manuscript, among them the Pinsker functionals of the entropy and informational rates of one stationary Gaussian signal with respect to another, signal spectral densities, and transfer functions.

First, we recall the variational problem for the Pinsker functional in the form proposed by Bukanov and generalize it to the case of a non-trivial stationary Gaussian program signal. We observe and discuss (Proposition 1) an important formula that represents the Pinsker informational rate functional associated with the linear system in Figure 1 in a Shannon-like channel capacity form.

Theorem 2 in Section 3 solves two degenerate variational problems for the channel capacity functionals related to the program channel and to the error signal perturbation channel. We compare the expressions for both channel capacities with the Petrov–Uskov Formula (6) from [6]. Theorem 3 (essentially reported in [7]) gives the main balance relations for the system in Figure 1. We stress that the similarity of these relations to the famous Brillouin and Schrödinger notion of “negentropy” emerged from my numerous discussions with Bukanov, who also pointed out a close connection with the information gain, or Kullback–Leibler divergence (see, for example, [9] for a modern geometric approach to “informational thermodynamics”). In Section 4, we consider other informational functionals, not necessarily connected with the optimization of linear automatic control systems. Our main observation there is Theorem 4, which shows that the formula for the second main Pinsker functional, the entropy rate of one random process relative to another, can be interpreted as a Legendre duality relation for the first Pinsker functional, the informational rate.

2. Optimization of the Linear Control System with a Non-Trivial Stationary Gaussian Program

The results of this section slightly generalize and extend the material of [1] and give it a new interpretation. Note that our notation differs from the original one, and that our motivations and reasoning are purely theoretical rather than based on possible engineering applications.

2.1. Useful Formulas and Notations

For the convenience of readers, we collect here the basic theoretical formulas used throughout the text. Once and for all, all random variables and random processes (discrete or continuous) are understood in the same sense as in Pinsker’s book [2], to which we refer the “mathematically oriented” reader for precise definitions, descriptions, and properties.

2.1.1. Formulae

In what follows, we use the following important formulas. Let be two random functions, i.e., stationary (generalized) Gaussian random processes.

- The Gelfand–Pinsker–Yaglom functional (Formula (2.58) from [10]) for the amount of information about a random function contained in another such function: where and are, respectively, the spectral densities of and , and is the mutual spectral density of and . Here, is the mutual correlation function of the processes and (Formula (10.21) from [2]).

- Gelfand–Pinsker–Yaglom functional for Gaussian such that and are non-correlated (Formula (2.61) from [10]):

- The entropy rate of a random variable with respect to was defined by Pinsker [2]. For two one-dimensional Gaussian stationary (in the wide sense) stochastic processes and , the formula for the entropy rate (Formula (10.5.7) from [2]) reads

- For Gaussian such that and are non-correlated:
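Since these formulas are used repeatedly below, here is a minimal Python sketch for evaluating them from given spectral densities. It assumes the standard forms for zero-mean stationary Gaussian processes, with integration over the whole frequency axis and the factor 1/(4π): informational rate −(1/4π)∫ log(1 − |f_ξη|²/(f_ξ f_η)) dλ and entropy rate (1/4π)∫ (f_ξ/f_η − 1 − log(f_ξ/f_η)) dλ; other normalizations (for example, positive frequencies only) change the constant. The example spectra are hypothetical and were chosen because both integrals then have closed forms to compare with.

```python
import numpy as np

def integrate_real_line(g, n=20_000):
    """Integral of g over (-inf, inf) via lam = tan(theta) and the midpoint rule."""
    theta = -np.pi / 2 + (np.arange(n) + 0.5) * (np.pi / n)
    lam = np.tan(theta)
    return np.sum(g(lam) / np.cos(theta) ** 2) * (np.pi / n)

def information_rate(f_x, f_y, f_xy):
    """-(1/4pi) * integral of log(1 - |f_xy|^2 / (f_x f_y)) over all frequencies, in nats."""
    def g(lam):
        coherence = np.abs(f_xy(lam)) ** 2 / (f_x(lam) * f_y(lam))
        return -np.log1p(-coherence)
    return integrate_real_line(g) / (4 * np.pi)

def entropy_rate(f_x, f_y):
    """(1/4pi) * integral of (r - 1 - log r), r = f_x / f_y, over all frequencies, in nats."""
    def g(lam):
        r = f_x(lam) / f_y(lam)
        return r - 1.0 - np.log(r)
    return integrate_real_line(g) / (4 * np.pi)

# Hypothetical example: eta = s (spectrum S), xi = s + n with independent white noise n.
def S(lam):
    return 1.0 / (1.0 + lam**2)

N0 = 1.0 / 3.0
i_rate = information_rate(lambda lam: S(lam) + N0, S, S)  # cross-spectrum of (s + n, s) is S
h_rate = entropy_rate(S, lambda lam: S(lam) + S(lam) ** 2)

print(i_rate, (np.sqrt(1.0 + 1.0 / N0) - 1.0) / 2.0)  # numerical vs closed form (0.5)
print(h_rate, (3.0 * np.sqrt(2.0) - 4.0) / 8.0)       # numerical vs closed form
```

The substitution λ = tan θ maps the infinite frequency axis to a finite interval on which the integrands above are smooth, so a plain midpoint rule is already very accurate.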

2.1.2. Generalized Variational Problem

We now suppose that the program is a stationary Gaussian signal. Then, the following system of linear operator equations in the variables U and Z, written in Laplace transform coordinates, holds for our control system in Figure 1:

The main determinant of the system

Recall the frequency representations and
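Since the system (10) and its determinant are not reproduced in this text, here is a Python sketch of one plausible reading of Figure 1. The sign conventions are our assumption: U = W_reg(Z + N) and Z = X − W_obj(U + P), where X stands for the program, and `W_obj`, `W_reg` are hypothetical frequency responses (the notation of (10) and Appendix B may differ). Solving the 2 × 2 system numerically and by Cramer’s rule gives the same (U, Z), with determinant 1 + W_obj W_reg; for mutually uncorrelated X, P, N, the spectral density of Z then follows from the squared moduli of the coefficients.

```python
import numpy as np

# Hypothetical frequency responses of the object and the regulator (illustration only).
def W_obj(s):
    return 1.0 / (s + 1.0)

def W_reg(s):
    return 4.0 / (0.1 * s + 1.0)

def solve_loop(s, X, P, N):
    """Solve U = W_reg (Z + N), Z = X - W_obj (U + P) for (U, Z) at one complex frequency s."""
    A = np.array([[1.0, -W_reg(s)],
                  [W_obj(s), 1.0]], dtype=complex)
    b = np.array([W_reg(s) * N, X - W_obj(s) * P], dtype=complex)
    return np.linalg.solve(A, b)

def closed_form(s, X, P, N):
    """Cramer's rule for the same system; D is its determinant 1 + W_obj W_reg."""
    D = 1.0 + W_obj(s) * W_reg(s)
    U = W_reg(s) * (X - W_obj(s) * P + N) / D
    Z = (X - W_obj(s) * P - W_obj(s) * W_reg(s) * N) / D
    return np.array([U, Z])

def spectral_density_Z(omega, S_X, S_P, S_N):
    """Spectral density of Z for mutually uncorrelated X, P, N with spectra S_X, S_P, S_N."""
    s = 1j * omega
    D = 1.0 + W_obj(s) * W_reg(s)
    return (S_X + abs(W_obj(s)) ** 2 * S_P + abs(W_obj(s) * W_reg(s)) ** 2 * S_N) / abs(D) ** 2

s = 2.5j  # a point on the imaginary axis, s = i * omega with omega = 2.5
inputs = dict(X=1.0 + 0.5j, P=-0.3 + 0.2j, N=0.1 - 0.4j)  # arbitrary transform values at s
print(solve_loop(s, **inputs), closed_form(s, **inputs))
print(spectral_density_Z(2.5, S_X=1.0, S_P=0.5, S_N=0.1))
```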

2.1.3. Notations

For the automatic control system in Figure 1, we introduce the following notations:

In this notation, the functional (2) for the processes and reads

Solving the system (10), calculating the spectral densities of the signals and , and denoting the program signal by , we obtain

The explicit expressions for the transfer functions are given in Appendix B. We have computed the full functional generalizing the functional (2):

Theorem 1.

The functional has the following extremals:

Proof of Theorem 1.

We denote by the “Lagrangian” density for the functional (13):

The “degeneracy” of (13) means that the Lagrangian density does not depend on the derivatives of and . Hence, by the basic lemma of the calculus of variations, the Euler–Lagrange equations obtained from the system of variations

are replaced by simple and straightforward computations: the usual pointwise minimax conditions for functions. The first variation gives

which yields the first condition in Theorem 1. The second condition is verified by a somewhat more cumbersome computation, which we give in Appendix A. □
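The reduction used in this proof, where the Euler–Lagrange equations collapse to a pointwise condition at each frequency because the density contains no derivatives, can be seen on a toy example. The Python sketch below uses a hypothetical quadratic density L(ω, x) = S_a(ω)(x − 1)² + S_b(ω)x², not the density of (13): solving ∂L/∂x = 0 frequency by frequency gives x*(ω) = S_a/(S_a + S_b), and random perturbations of the whole function can only increase the discretized functional.

```python
import numpy as np

# Hypothetical spectra on a frequency grid (illustration only, not the densities of (13)).
omega = np.linspace(0.0, 10.0, 2001)
d_omega = omega[1] - omega[0]
S_a = 1.0 / (1.0 + omega**2)
S_b = np.full_like(omega, 0.1)

def density(x):
    """Derivative-free Lagrangian density L(omega, x(omega))."""
    return S_a * (x - 1.0) ** 2 + S_b * x**2

def functional(x):
    """Discretized J[x]: Riemann sum of the density over the grid."""
    return np.sum(density(x)) * d_omega

# The Euler-Lagrange equation reduces to dL/dx = 0 at each omega separately:
# 2 S_a (x - 1) + 2 S_b x = 0  =>  x*(omega) = S_a / (S_a + S_b).
x_star = S_a / (S_a + S_b)
residual = 2 * S_a * (x_star - 1.0) + 2 * S_b * x_star

# Random perturbations of the whole function x(omega) can only increase J.
rng = np.random.default_rng(0)
J_star = functional(x_star)
J_perturbed = np.array([functional(x_star + 0.05 * rng.standard_normal(omega.size))
                        for _ in range(100)])
print(np.max(np.abs(residual)), J_star, J_perturbed.min())
```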

Corollary 1.

- The minimal (extremal) value of the functional (13) is , but, exactly as in the case of the zero program (see (15) in [1]), this does not mean that the regulator of the control system uses no information. It only means that there is a balance between various channel capacities, generalizing (8). We discuss and interpret these generalizations in the next sections.

- The same extremals (14) minimize the (generalized) functional of the error signal “power”:

- This extremal value is easily computed and coincides with the minimal value of (14.1) in [1] for the zero-program case.

- The same is true for the analogous generalization of the functional (7) for the power of the regulator. We omit the corresponding straightforward formulas generalizing (14.2) in [1].

We now obtain another remarkable representation of the functional (13) using the spectral densities of the signals Z and :

Proposition 1.

Proof.

This formula will be very useful in further interpretations in Section 4.

3. Capacity of Channels and Balance Theorems for the Linear Stationary Control System

Now, using the generalization to , we can also generalize and extend the relations reported in [7]. At the same time, we propose some new interpretations of these relations. Throughout this section, we assume that all signals are stationary and Gaussian.

3.1. Capacity of Channels via Differential Entropy

We recall that the capacity of the channel for the given program of the linear control system in Figure 1 can be computed via the differential entropies (“per degree of freedom”) of this program and of the error (defect) signal Z (see, for example, Chapter IV, (12.5) in [11]):

where

and the expression (18) is written as

Analogously, one can obtain the capacity of the channel of the linear control system with respect to the perturbations P and N:

and

such that

Remark 2.

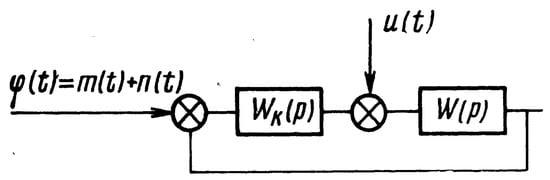

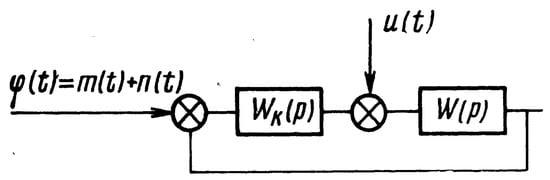

for a channel capacity of the linear system in Figure 2.

Figure 2.

This figure is taken from [5]. Here, denotes the transfer function of the object operator. The signal , together with the perturbation signal , forms the input of the regulator, given by the operator . The system should reproduce the “useful” signal at its output.

Remark 3.

Here, the “cross” nodes of the diagram have the same meaning as the “addition” nodes in Figure 1.

Here, and are the spectral densities of the “useful” signal and the “error” signal, respectively.

The integral is taken over the whole frequency band W. In particular, if and m and n are uncorrelated, then

Assume that the perturbation has the spectral density with an additional “white noise” spectral density . Then, from (24), we have

The authors of [5] argue that the entropy quantity is a measure of the information lost as the signal passes through the system of Figure 2, and that the minimum of this loss is achieved when . When , the entropy , i.e., the “error” entropy, tends to the entropy of the “useful” signal, and the channel capacity tends to

Remark 4.

We should stress here that:

- all signals are supposed to be stationary random Gaussian with zero expectation value;

- the “optimal” solution in [5] was found under an “energy” constraint of type (3), which in this context means the condition of minimal second moment (“dispersion”) of the error signal;

- the minimal value is achieved when , and this condition shows that the “energy” and informational criteria give the same result (compare with the similar conclusion for the system in Figure 1);

- the independence condition (the signals and are uncorrelated) can be relaxed, which leads to the following generalization of the channel capacity formula (24):
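The coincidence of the “energy” and informational criteria noted in Remark 4 can be illustrated with a short Python sketch. We assume, for illustration only (this is not taken from [5]), uncorrelated m and n, a real non-causal Wiener-type gain H = S_m/(S_m + S_n) that minimizes the error spectrum pointwise, and hypothetical spectra on the band [0, 5]. The resulting error spectrum S_m S_n/(S_m + S_n) makes the entropy-difference integrand log(S_m/S_ε) equal to Shannon’s log(1 + S_m/S_n), while any detuned gain gives a pointwise larger error spectrum.

```python
import numpy as np

# Hypothetical band [0, W], W = 5, and hypothetical spectra of the useful signal m
# and the (uncorrelated) perturbation n: a white part 0.05 plus a small coloured part.
f = np.linspace(0.0, 5.0, 501)
df = f[1] - f[0]
S_m = 2.0 / (1.0 + f**2)
S_n = 0.05 + 0.01 * f

def error_spectrum(H):
    """Spectrum of the error m - H(m + n) for a real gain H(f) and uncorrelated m, n."""
    return (1.0 - H) ** 2 * S_m + H**2 * S_n

H_wiener = S_m / (S_m + S_n)      # pointwise minimizer of error_spectrum
S_eps = error_spectrum(H_wiener)  # equals S_m * S_n / (S_m + S_n)

# Entropy-difference form and Shannon form (nats; constant prefactors omitted).
c_entropy = np.sum(np.log(S_m / S_eps)) * df
c_shannon = np.sum(np.log1p(S_m / S_n)) * df

# Detuned gains give a pointwise larger error spectrum, hence a smaller "capacity".
detuned_worse = all(np.all(error_spectrum(k * H_wiener) >= S_eps) for k in (0.5, 0.9, 1.1, 1.5))
print(c_entropy, c_shannon, detuned_worse)
```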

3.2. Variational Problems for the Channel Capacity Functionals

Substituting the spectral densities of all signals into Formulas (20) and (22), we obtain the following two channel capacity functionals:

and

Remark 5.

The functional (28) clearly generalizes the analogous functional for the case

whose extremal value under conditions (5) is

Remark 6.

One can check directly that this extremal value is the sum of the informational performances of the outputs of the object and regulator channels with closed control loops under the conditions (5):

These remarks indicate that there are more general information–performance balance relations, which we now discuss.

Theorem 2.

The functional (27) has the maximal value

and the functional (28) has the minimal value

for the same set of extremals (14):

Proof of Theorem 2.

Both functionals are degenerate in the same sense as above. Therefore, finding their extremals reduces to the usual pointwise minimax computations for their Lagrangian densities. Similarly, to determine the character of the extrema obtained, it suffices to check the definiteness of the Hessian matrices of these densities instead of computing the second variations and . We omit these routine but tedious computations, which are entirely similar to those in Theorem 1. □
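For derivative-free densities, the type of an extremum can be read off pointwise from the Hessian of the density with respect to the unknown functions. The Python sketch below, with hypothetical two-variable densities (not those of (27) and (28)), estimates the Hessian by central finite differences and classifies the critical point by the signs of the eigenvalues; `hessian_fd` and `classify` are illustrative names.

```python
import numpy as np

def hessian_fd(L, point, h=1e-4):
    """Central finite-difference Hessian of a scalar function L at `point`."""
    point = np.asarray(point, dtype=float)
    n = point.size
    steps = np.eye(n) * h
    H = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            H[i, j] = (L(point + steps[i] + steps[j]) - L(point + steps[i] - steps[j])
                       - L(point - steps[i] + steps[j]) + L(point - steps[i] - steps[j])) / (4 * h * h)
    return H

def classify(H, tol=1e-6):
    """Classify a critical point from the eigenvalues of the symmetrized Hessian."""
    eig = np.linalg.eigvalsh(0.5 * (H + H.T))
    if np.all(eig > tol):
        return "minimum"
    if np.all(eig < -tol):
        return "maximum"
    return "saddle or degenerate"

# Hypothetical two-variable densities with a critical point at the origin.
examples = {
    "log(1 + x^2 + y^2)": lambda p: np.log1p(p[0] ** 2 + p[1] ** 2),
    "-log(1 + x^2) - log(1 + y^2)": lambda p: -np.log1p(p[0] ** 2) - np.log1p(p[1] ** 2),
    "x^2 - y^2": lambda p: p[0] ** 2 - p[1] ** 2,
}
results = {name: classify(hessian_fd(L, [0.0, 0.0])) for name, L in examples.items()}
print(results)
```

In a frequency-dependent problem, the same test would be applied at every frequency of the grid; a single frequency is shown here for brevity.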

3.3. Informational Balance in the Linear Control System

The following theorem was obtained in collaboration with Bukanov and Kublanov and announced in [7].

Theorem 3.

There are two balance relations between the channel capacities:

- For the channel capacity of the program and the informational rate about the “defect” signal Z contained in the control signal U:

- For the channel capacity of perturbations P and N and the above informational rate:

Proof.

Both identities are easily checked by manipulating the definitions of the corresponding channel capacities and the computed extrema. Let us verify the first one:

□

Remark 7.

On the other hand, this means that

which shows a similarity with the Brillouin “negentropy” principle, stating that a system loses information during a transition from a state with low entropy to a state with higher entropy (we include a brief description of this notion in the Discussion section below).

Naively speaking, the entropy of the error signal of the linear system with any regulator is larger (“more chaos”) than the error signal entropy of the system with the optimal regulator:

Similarly, the same result can be obtained from the second balance relation

Here, one can view the informational rate as an “informational difference” between Z and , or as a “Brillouin–Schrödinger negentropy” [12].

Remark 8.

We should note that the first balance relation (33) does not exist in the absence of the program signal (), while the second one generalizes the informational balance relation (8) described above. Under the condition , the LHS of the identity (34) is:

which is exactly the RHS of (34) under the same condition.

4. Entropy Rate Functional for the Linear Stationary Control System

We now discuss the entropy rate functional (9) for the pair formed by the stationary Gaussian error signal Z and the error signal () of the system with the optimal regulator, for the linear control system above:

Introduce the function of a positive argument and study its behavior.

4.1. Properties of the Function and Legendre–Fenchel Transformation

Lemma 1.

The function has the following (almost) evident properties:

- and

- Let such that , then

- If and are integrable on with some measure and , then

- Let and ; then, the function admits the Legendre–Fenchel transformation

Proof.

- To prove the first property (positivity) of , we split the half-line into two subsets: and . We start with the second subset: for , we have and . In the first subset, one similarly has if and .

- This is a tautological corollary of the computations in (1).

- Using the inequality (2), we obtain:

- The Legendre–Fenchel transformation of the function exists because the function is smooth and convex for . The only critical point, with the critical value , is obtained from . By definition (see, for example, [13]), we put and find . Then, we find the Legendre-dual function from . We shall check that it satisfies the duality relation

□
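Part 4 of Lemma 1 can be checked numerically. Because the defining formula of the function is not reproduced in this text, we assume here φ(x) = x − 1 − ln x, which is smooth and convex on x > 0 with its only critical point at x = 1 (critical value 0), consistent with the proof above; this choice is our assumption. Then the supremum of px − φ(x) is attained at x = 1/(1 − p) for p < 1, giving φ*(p) = −ln(1 − p) (for p ≥ 1 the supremum is infinite). The Python sketch compares a brute-force supremum over a logarithmic grid with this closed form.

```python
import numpy as np

def phi(x):
    """Assumed function of Lemma 1: phi(x) = x - 1 - ln x on x > 0 (convex, minimum 0 at x = 1)."""
    return x - 1.0 - np.log(x)

def legendre_fenchel(p, x_grid):
    """Brute-force phi*(p) = sup over x > 0 of (p x - phi(x)), restricted to a finite grid."""
    return np.max(p * x_grid - phi(x_grid))

x_grid = np.exp(np.linspace(-12.0, 12.0, 400_001))  # wide enough for the maximizers 1/(1 - p) below
ps = np.array([-2.0, -0.5, 0.0, 0.3, 0.7, 0.9])      # p < 1, where phi*(p) is finite
numeric = np.array([legendre_fenchel(p, x_grid) for p in ps])
exact = -np.log1p(-ps)                               # -ln(1 - p), attained at x = 1 / (1 - p)
print(np.column_stack([ps, numeric, exact]))
```

Under this assumption, −ln(1 − p) has the same form as the integrand of the informational rate, with p in the role of the squared coherence.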

Note the famous Fenchel–Young inequality, which in this case reads

which gives the evident inequality
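For the assumed choice φ(x) = x − 1 − ln x, φ*(p) = −ln(1 − p) (our assumption, since the defining formulas are not reproduced here), the Fenchel–Young inequality can be verified in one line, because it reduces to the positivity of φ at the point (1 − p)x:

```latex
\begin{aligned}
\varphi(x) + \varphi^{*}(p) - p\,x
  &= x - 1 - \ln x - \ln(1-p) - p\,x \\
  &= (1-p)\,x - 1 - \ln\bigl((1-p)\,x\bigr)
   = \varphi\bigl((1-p)\,x\bigr) \;\ge\; 0,
  \qquad x > 0,\ p < 1 .
\end{aligned}
```

Equality holds exactly when (1 − p)x = 1, i.e., when p = φ′(x) = 1 − 1/x.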

4.2. Legendre Transformation Analogy for the Control System Functionals

Observe that the Pinsker entropy rate functional (9) can be written in the form

where . Then, the Legendre–Fenchel dual functional should be

with . Using part 4 of Lemma 1, one can compute

Thus, we have obtained the following Legendre duality relation:

Returning to the Pinsker entropy rate Formula (9), one observes that the duality implies

Formula (37) expresses the duality relation between the entropy rate and the functional for any pair of stationary Gaussian signals. Let us consider the case of the Pinsker entropy rate for our control system (36), i.e., and . In this case, (37) and (36) give us the Legendre duality condition between the functionals:

Summing up the discussion in Section 4.2, one can formulate the following theorem:

Theorem 4.

The Pinsker entropy rate functional of the error signal of the stationary linear control system, with respect to the error signal of the same system equipped with the regulator that is optimal under the informational criterion, and the informational rate functional for these signals are in Legendre duality:

Remark 9.

One can also check that the duality relation (37) generalizes easily to the multidimensional case of two dimensional stationary Gaussian processes ξ and η such that and such that the pairs are pairwise uncorrelated. Then, one can define dimensional analogues of the entropy rate and informational rate functionals:

which can be simplified (because of the mutual independence of the signals ξ and η) to

where (resp. ) is the matrix of mutual spectral densities of the components of ξ (resp. η), and denotes the entry of the matrix
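The simplification for pairwise uncorrelated components can be checked at a single frequency. The Python sketch below assumes the standard log-determinant form of the multidimensional informational rate integrand, log(det F_ξ · det F_η / det F), where F is the joint spectral matrix (equivalently −log det(I − F_ξ⁻¹F_ξη F_η⁻¹F_ηξ)), and compares it with the sum of scalar terms when all blocks are diagonal. The matrices are random, hypothetical values, and a generic non-diagonal case is included to confirm that the two matrix forms agree.

```python
import numpy as np

def logdet(A):
    """log|det A|; for the Hermitian positive-definite matrices used here this is log det A."""
    return np.linalg.slogdet(A)[1]

def info_integrand_conditional(F_x, F_y, F_xy):
    """-log det(I - F_x^{-1} F_xy F_y^{-1} F_xy^H) at one frequency."""
    M = np.linalg.solve(F_x, F_xy) @ np.linalg.solve(F_y, F_xy.conj().T)
    return -logdet(np.eye(F_x.shape[0]) - M)

def info_integrand_joint(F):
    """log(det F_x * det F_y / det F) for the 2n x 2n joint spectral matrix F."""
    n = F.shape[0] // 2
    return logdet(F[:n, :n]) + logdet(F[n:, n:]) - logdet(F)

rng = np.random.default_rng(1)
n = 3

# Pairwise-uncorrelated components: all four n x n blocks are diagonal (hypothetical values).
f = rng.uniform(1.0, 2.0, n)
g = rng.uniform(1.0, 2.0, n)
c = 0.9 * np.sqrt(f * g) * np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, n))
F_x, F_y, F_xy = np.diag(f), np.diag(g), np.diag(c)
F = np.block([[F_x, F_xy], [F_xy.conj().T, F_y]])
componentwise = -np.sum(np.log1p(-np.abs(c) ** 2 / (f * g)))

# A generic (non-diagonal) Hermitian positive-definite joint matrix for comparison.
A = rng.standard_normal((2 * n, 2 * n)) + 1j * rng.standard_normal((2 * n, 2 * n))
G = A @ A.conj().T + np.eye(2 * n)

print(componentwise, info_integrand_conditional(F_x, F_y, F_xy), info_integrand_joint(F))
print(info_integrand_conditional(G[:n, :n], G[n:, n:], G[:n, n:]), info_integrand_joint(G))
```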

We want to stress that our multidimensional considerations are purely formal. We do not discuss the existence and finiteness conditions for the formulas above and refer to [2,14] for the necessary mathematical details. We also do not know whether these multidimensional generalizations apply directly to optimal automatic control problems, but we hope that such functionals might be useful for comparison with the multidimensional optimization method of [15], where the criteria of minimum mean-square error and maximum mean information were used.

5. Discussion

The relationship between entropy and information was first discovered in the seminal work of Szilard [16]. Later, in the works of Brillouin [17], the negentropy principle of information was formulated, generalizing the second law of thermodynamics. According to this principle, entropy and information should be considered jointly and cannot be interpreted separately. Brillouin showed [18] that the definition of “information” leads to a direct connection between information and the negative of entropy (hence the abbreviation “negentropy”). Every experiment consumes negentropy (increases entropy) and yields information. The negentropy principle of information is a generalization of Carnot’s principle in thermodynamics, which Brillouin formulated as follows: the amount of information contained in a physical system must be considered as a negative term in the total entropy of this system. If a system is isolated, it satisfies Carnot’s principle in this generalized form, according to which the total entropy of the system does not decrease. Our Theorem 4 is a good illustration of this energy–information principle.

The functional enters the Legendre duality relation (37) together with the entropy rate functional and has an explicit interpretation in terms of the informational rate in the case of the control system. The Legendre balance relation suggests some thermodynamic analogies going back to the Gibbs relation for thermodynamic potentials:

which relates the Helmholtz free energy F to the entropy S, the total energy E, and , the inverse temperature (the Boltzmann constant is set to 1 here). If we follow Bukanov’s initial idea of a “similarity” between the informational rate functional and the Brillouin negentropy and the information gain, or Kullback–Leibler divergence, which is “similar (up to sign) to the differential entropy expression”:

for a Gaussian , which has the same mean value as (notation and statement from p. 15 of [9]), then we should conclude that, in our case, the informational rate functional is rather the Legendre dual of the entropy rate , though it plays the “role of a Hamiltonian” while appearing here as a “Lagrangian”. Following the “mechanical and thermodynamical” analogies of [9], we could interpret the results of our Theorems 1 and 2 as a specific example of the “principle of minimal information gain”, while the balance relations in Theorem 3 show the connection with Jaynes’ principle of maximum entropy. It would be interesting to give further interpretations along this line and to understand the role of the “partition function”, which, in our context, should naively read

This question, together with a deeper understanding of the appropriate Lagrangian or canonical coordinates in these applied approaches, would open the door to powerful invariant methods in the theory of informational control systems.
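The Gaussian case behind the quoted “similarity (up to sign)” between the Kullback–Leibler divergence and differential entropy is easy to make explicit. For zero-mean one-dimensional Gaussian densities with variances σ₁² and σ₂², the divergence equals ½(r − 1 − ln r) with r = σ₁²/σ₂², the same function r − 1 − ln r that appears as the integrand of the Gaussian entropy rate. The Python sketch checks this standard closed form against direct numerical integration; the variances are arbitrary.

```python
import numpy as np

def kl_gauss_closed_form(var1, var2):
    """KL(N(0, var1) || N(0, var2)) = 0.5 * (r - 1 - ln r) with r = var1 / var2."""
    r = var1 / var2
    return 0.5 * (r - 1.0 - np.log(r))

def kl_gauss_numeric(var1, var2, half_width=40.0, n=400_001):
    """Riemann sum of p(t) * log(p(t) / q(t)) over a wide symmetric grid."""
    t = np.linspace(-half_width, half_width, n)
    dt = t[1] - t[0]
    log_p = -0.5 * t**2 / var1 - 0.5 * np.log(2.0 * np.pi * var1)
    log_q = -0.5 * t**2 / var2 - 0.5 * np.log(2.0 * np.pi * var2)
    return np.sum(np.exp(log_p) * (log_p - log_q)) * dt

for var1, var2 in [(1.0, 2.0), (3.0, 0.5), (0.7, 0.7)]:
    print(var1, var2, kl_gauss_closed_form(var1, var2), kl_gauss_numeric(var1, var2))
```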

There are many other possible developments, but all of them look more traditional. We could almost straightforwardly generalize our results to slightly more complicated cases of the channel capacity formulas, for comparison with the results of Petrov et al. for correlated signals, multidimensional systems generalizing the system in Figure 2, etc. The conditions of “physical realizability” are also beyond the scope of this paper.

Funding

I was partially supported during this work by the Interdisciplinary CNRS Project “Granum” (“Graded Geometry, Numerical methods, Mechanics, and Control Theory”) and by the Russian Foundation for Basic Research under Grant RFBR 18-01-00461.

Acknowledgments

It is my pleasure to dedicate this paper to Nikolay Petrovich Bukanov, who many years ago had the courage to hire me as a young researcher and the patience to introduce me to the subject of applied information theory. His ideas and vision were very influential, and his support was crucial at some difficult moments at the start of my scientific career. I am also very grateful to V. S. Kublanov for his collaboration on [7] and to V.V. Lychagin for numerous discussions of various aspects of the geometric approach to thermodynamics and its analogies in information theory. He encouraged me to revise my old computations and to turn them into these notes. It is also my pleasure to thank O.N. Poberejnaia for numerous useful discussions in the early stages of this work. Finally, I am very grateful to the anonymous referee No. 2 for his useful remarks.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Proof of the Theorem 1, Second Condition

Now, using the basic lemma of variational calculus, we obtain the second condition in (14):

References

- Bukanov, N.P. Information criterion of optimality of automatic control systems. Avtomat. Telemekh. 1972, 1, 57–62. (In Russian)

- Pinsker, M.S. Information and Informational Stability of Random Variables and Processes; Problemy Peredači Informacii; Izdat. Akad. Nauk SSSR: Moscow, Russia, 1960; Volume 7, 203p, (In Russian). English Translation: Pinsker, M.S., Translated and edited by Amiel Feinstein; Holden-Day, Inc.: San Francisco, CA, USA; London, UK; Amsterdam, The Netherlands, 1964; 243p.

- Shannon, C. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

- Petrov, B.N.; Petrov, V.V.; Ulanov, G.M.; Zaporczets, A.W.; Uskov, A.S.; Kotchubievsky, l.L. Stochastic Process in Control and Information Systems, Survey Paper 63; IFAC: Warszawa, Poland, 1969.

- Petrov, V.V.; Uskov, A.S. Informational aspects of the regularization problem. Doklady AN SSSR 1970, 195, 780–782. (In Russian)

- Petrov, V.V.; Uskov, A.S. Channel capacities of feed-back informational systems with internal perturbations. Doklady AN SSSR 1970, 194, 1029–1031. (In Russian)

- Bukanov, N.P.; Kublanov, V.S.; Rubtsov, V.N. About a question on a synthesis of an automatic control system optimal with respect to an informational criterion. In Proceedings of the All-Union Conference Optimization and Efficiency of Systems and Processes in Civil Aviation, MIIGA, Moscow, Russia, March 1979; pp. 83–88. (In Russian)

- Petrov, V.V.; Sobolev, V.I. Entropy approach to a quality criterial of automatic control systems. Doklady AN SSSR 1986, 287, 799–801. (In Russian)

- Lychagin, V.V. Contact Geometry, Measurement, and Thermodynamics. In Nonlinear PDEs, Their Geometry, and Applications; Kycia, R.A., Ulan, M., Schneider, E., Eds.; Birkhäuser: Basel, Switzerland, 2019; pp. 3–52.

- Gelfand, I.M.; Yaglom, A.M. Computation of the amount of information about a stochastic function contained in another such function. Uspehi Mat. Nauk 1957, 12, 3–52. (In Russian)

- Tarasenko, F.P. Introduction in the course of Information Theory; Tomsk State University: Tomsk, Russia, 1963; p. 239. (In Russian)

- Schrödinger, E. What Is Life from the Point of View of Physics? State Edition of Foreign Literature: Moscow, Russia, 1947; p. 147. (In Russian)

- Arnold, V.I. Mathematical Methods of Classical Mechanics; Translated by K. Vogtmann and A. Weinstein; Graduate Texts in Mathematics, 60; Springer: New York, NY, USA, 1989; 516p.

- Pinsker, M.S. Entropy, entropy rate and entropy stability of Gaussian random variables and processes. Doklady AN SSSR 1960, 133, 531–534. (In Russian)

- Petrov, V.V.; Zaporozhets, A.V. Principle of developable systems. Doklady AN SSSR 1975, 224, 779–782. (In Russian)

- Szilard, L. On the reduction of entropy in a thermodynamic system by the intervention of intelligent beings. Z. Phys. 1929, 53, 840–856. (In German)

- Brillouin, L. Science and Information Theory; State Edition of Foreign Literature: Moscow, Russia, 1960; p. 392. (In Russian)

- Brillouin, L. Thermodynamics, statistics, and information. Am. J. Phys. 1961, 29, 318–328.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).