On Energy–Information Balance in Automatic Control Systems Revisited

Abstract

To Nikolay Petrovich Bukanov with my gratitude and admiration

1. Introduction

- find extremals for a generalization of (1) and for some other degenerate (derivative-independent) “informational” functionals (the main example of such functionals is given by the Pinsker formula for the amount of entropy of one random process in another);

- obtain some minimax relations and propose a new interpretation of them as an “energy–balance equilibrium” in the spirit of Brillouin’s and Schrödinger’s ideas of “negentropy” (we briefly recall this notion in the Discussion section);

- write our balance relations in a linear stationary one-dimensional system with Gaussian signals and interpret them in terms of Legendre duality.

1.1. The Origins

1.2. Review of Some Previously Known Results

1.3. Further Generalizations and the Subject of the Paper

2. Optimization of the Linear Control System with a Non-Trivial Stationary Gaussian Program

2.1. Useful Formulas and Notations

2.1.1. Formulae

- The Gelfand–Pinsker–Yaglom functional (Formula (2.58) from [10]) for the amount of information about a random function contained in another such function: where and are, respectively, the spectral densities of and and is the mutual spectral density of and Here, is the common correlation function for the processes and (Formula (10.21) from [2]).

- Gelfand–Pinsker–Yaglom functional for Gaussian such that and are non-correlated (Formula (2.61) from [10]):

- For Gaussian such that and are non-correlated:
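As an illustration that does not rely on the paper's own notation, the following minimal sketch evaluates an information-rate functional of this type numerically. It assumes the standard coherence form, rate = -(1/4π) ∫ log(1 - |f_xy|² / (f_xx f_yy)) dω, with angular frequency and natural logarithms; this normalization is an assumption and may differ from the convention of [10]. The Lorentzian signal spectrum, the white-noise level, and all function names are hypothetical choices made only for the example. For an observation y = s + n with uncorrelated s and n, the coherence form should reduce to the Shannon-type form (1/4π) ∫ log(1 + f_s/f_n) dω.

```python
import numpy as np


def trapezoid(y, x):
    """Plain trapezoidal rule (avoids depending on a specific NumPy version)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def rate_from_coherence(f_xx, f_yy, f_xy, omega):
    """Information rate in nats per unit time from the squared coherence.

    Assumed normalization: 1/(4*pi) with angular frequency omega.
    """
    coherence2 = np.abs(f_xy) ** 2 / (f_xx * f_yy)
    return trapezoid(-np.log1p(-coherence2), omega) / (4.0 * np.pi)


def rate_signal_plus_noise(f_s, f_n, omega):
    """Shannon-type form for y = s + n with s and n uncorrelated."""
    return trapezoid(np.log1p(f_s / f_n), omega) / (4.0 * np.pi)


# Hypothetical spectra: Lorentzian signal, white noise of level 0.5.
omega = np.linspace(-2000.0, 2000.0, 400_001)
f_s = 1.0 / (1.0 + omega**2)
f_n = np.full_like(omega, 0.5)

# For y = s + n with uncorrelated s, n: f_yy = f_s + f_n and f_sy = f_s.
rate_general = rate_from_coherence(f_s, f_s + f_n, f_s, omega)
rate_special = rate_signal_plus_noise(f_s, f_n, omega)

# Closed form for this particular choice of spectra:
# integral of log((w^2 + 3) / (w^2 + 1)) over the real line is 2*pi*(sqrt(3) - 1).
rate_exact = (np.sqrt(3.0) - 1.0) / 2.0

print(rate_general, rate_special, rate_exact)
```

The two numerical values agree up to rounding, and both approach the closed-form value as the frequency window grows; the remaining gap comes from truncating the integral to a finite window.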

2.1.2. Generalized Variational Problem

2.1.3. Notations

- The extremal (minimal) value of the functional (13) is but, exactly as in the case of the zero program (see (15) in [1]), this does not mean that the regulator of the control system does not use the information. It only means that there is a balance of various channel capacities generalizing (8). We discuss and interpret these generalizations in the next sections.

- The same extremals (14) minimize the (generalized) functional of the error-signal “power”:

- This extremal value is easily computed and coincides with the minimal value of (14.1) in [1] for the zero-program case.

3. Capacity of Channels and Balance Theorems for the Linear Stationary Control System

3.1. Capacity of Channels via Differential Entropy

- all signals are assumed to be stationary Gaussian random processes with zero mean;

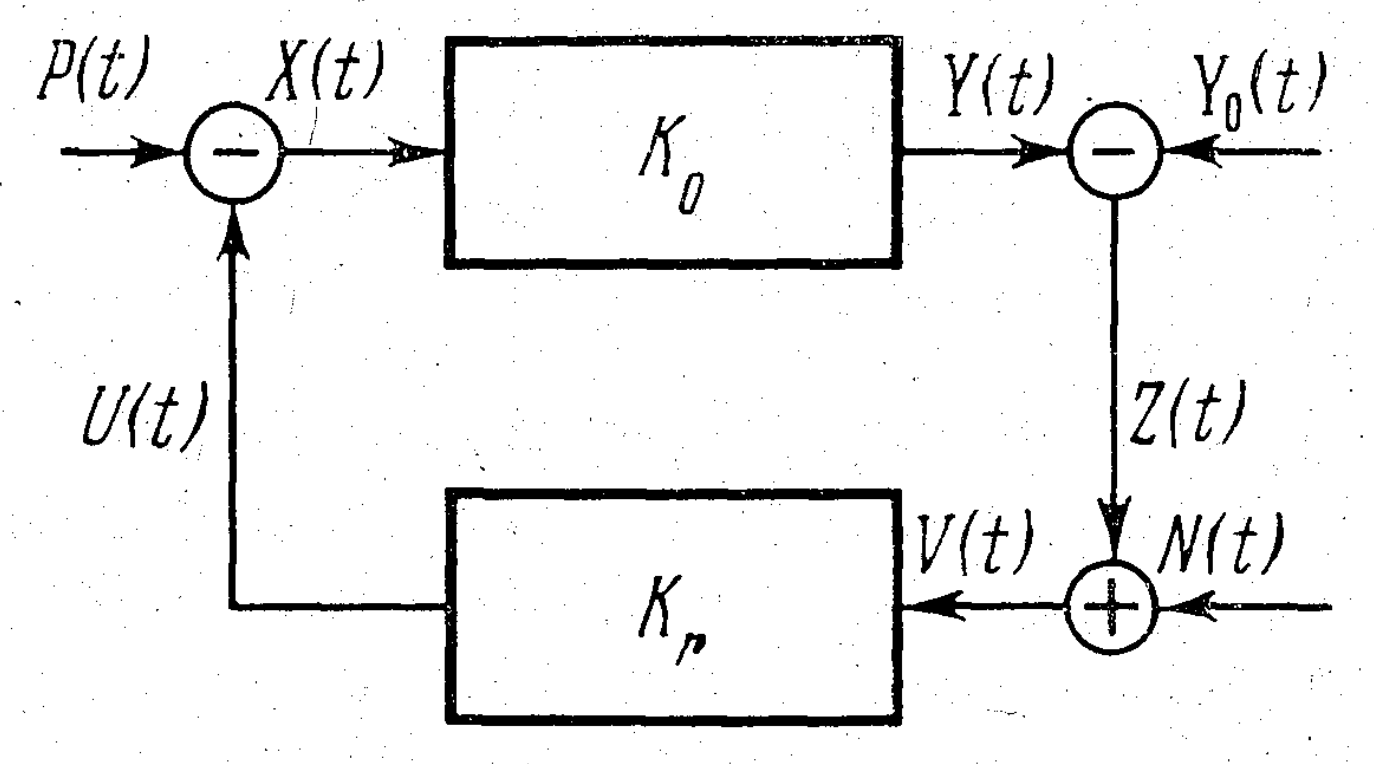

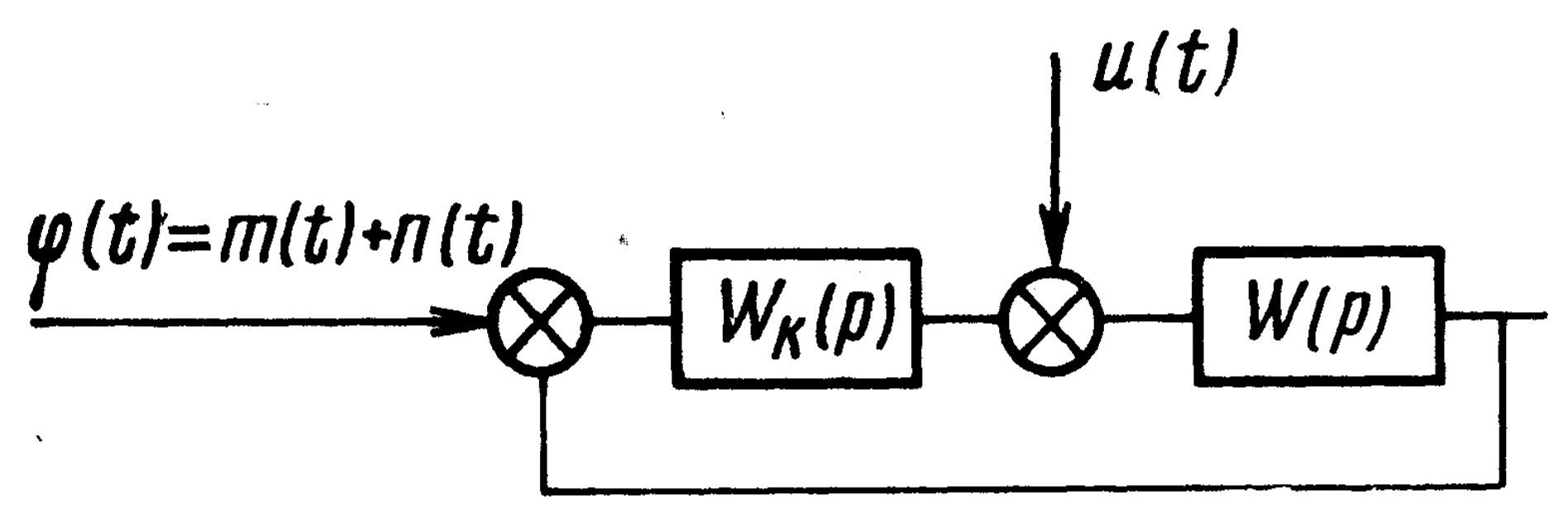

- The minimal value is achieved when and this condition shows that the “energy” and informational criteria give the same result (compare with the similar conclusion for the case of the system in Figure 1).

- The independence condition (the signals and are non-correlated) can be relaxed, and this leads to the following generalization of the channel capacity formula (24):

3.2. Variational Problems for the Channel Capacity Functionals

3.3. Informational Balance in the Linear Control System

- For the channel capacity of the program and the informational rate in the control signal U in the “defect” signal Z:

- For the channel capacity of perturbations P and N and the above informational rate:

4. Entropy Rate Functional for the Linear Stationary Control System

4.1. Properties of the Function and Legendre–Fenchel Transformation

- and

- Let such that , then

- If and are integrable on with some measure and then

- Let and ; then, the function admits the Legendre–Fenchel transformation

- To prove the first positivity property of , we split the half line into two subsets: and Start with the second subset: for , we have and In the first subset, one has similarly if and

- Tautological corollary of the computations in (1).

- Using the inequality (2), we obtain:

- The Legendre–Fenchel transformation of the function exists because the function is smooth and convex for The only critical point with the critical value is obtained from . By definition (see, e.g., [13]), we put and find . Then, we find the Legendre-dual function from We shall check that it satisfies the duality relation
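The mechanics of such a check can be sketched numerically on a stand-in function. The example below uses f(x) = x - 1 - ln x on x > 0, which is smooth, convex, and has a single critical point x = 1 with critical value 0; this function is a hypothetical illustration and is not claimed to be the function studied in this section. Following the definition in [13], one puts p = f'(x), solves for x, and obtains the dual f*(p) = -ln(1 - p) for p < 1. The code compares this closed form with a brute-force supremum and checks the duality (Fenchel–Young) equality f(x) + f*(p) = x p at p = f'(x).

```python
import numpy as np


def f(x):
    """Stand-in convex function on x > 0 (an assumption for illustration)."""
    return x - 1.0 - np.log(x)


def f_prime(x):
    """Derivative of f; p = f'(x) defines the dual variable."""
    return 1.0 - 1.0 / x


def f_star_closed(p):
    """Closed-form Legendre dual of f, valid for p < 1."""
    return -np.log1p(-p)


def f_star_numeric(p, x_grid):
    """Legendre-Fenchel transform as a supremum over a grid of x values."""
    return float(np.max(p * x_grid - f(x_grid)))


x_grid = np.linspace(1e-3, 50.0, 500_001)
p_values = np.array([-2.0, -0.5, 0.0, 0.3, 0.6])

numeric = np.array([f_star_numeric(p, x_grid) for p in p_values])
closed = f_star_closed(p_values)
max_gap = float(np.max(np.abs(numeric - closed)))

# Duality relation at a sample point: f(x0) + f*(p0) = x0 * p0 with p0 = f'(x0).
x0 = 2.0
p0 = f_prime(x0)
duality_residual = float(f(x0) + f_star_closed(p0) - x0 * p0)

print(max_gap, duality_residual)
```

The grid-based supremum is deliberately naive; it only serves as an independent check of the closed-form dual on the sampled values of p.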

4.2. Legendre Transformation Analogy for the Control System Functionals

5. Discussion

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Proof of Theorem 1, Second Condition

Appendix B. Formulas for Transition Functions in Frequency Variables

References

1. Bukanov, N.P. Information criterion of optimality of automatic control systems. Avtomat. Telemekh. 1972, 1, 57–62. (In Russian)

2. Pinsker, M.S. Information and Informational Stability of Random Variables and Processes; Problemy Peredači Informacii; Izdat. Akad. Nauk SSSR: Moscow, Russia, 1960; Volume 7, 203p. (In Russian). English Translation: Pinsker, M.S., Translated and edited by Amiel Feinstein; Holden-Day, Inc.: San Francisco, CA, USA; London, UK; Amsterdam, The Netherlands, 1964; 243p.

3. Shannon, C. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

4. Petrov, B.N.; Petrov, V.V.; Ulanov, G.M.; Zaporczets, A.W.; Uskov, A.S.; Kotchubievsky, l.L. Stochastic Process in Control and Information Systems, Survey Paper 63; IFAC: Warszawa, Poland, 1969.

5. Petrov, V.V.; Uskov, A.S. Informational aspects of the regularization problem. Doklady AN SSSR 1970, 195, 780–782. (In Russian)

6. Petrov, V.V.; Uskov, A.S. Channel capacities of feed-back informational systems with internal perturbations. Doklady AN SSSR 1970, 194, 1029–1031. (In Russian)

7. Bukanov, N.P.; Kublanov, V.S.; Rubtsov, V.N. About a question on a synthesis of an automatic control system optimal with respect to an informational criterion. In Proceedings of the All-Union Conference Optimization and Efficiency of Systems and Processes in Civil Aviation, MIIGA, Moscow, Russia, March 1979; pp. 83–88. (In Russian)

8. Petrov, V.V.; Sobolev, V.I. Entropy approach to a quality criterial of automatic control systems. Doklady AN SSSR 1986, 287, 799–801. (In Russian)

9. Lychagin, V.V. Contact Geometry, Measurement, and Thermodynamics. In Nonlinear PDEs, Their Geometry, and Applications; Kycia, R.A., Ulan, M., Schneider, E., Eds.; Birkhäuser: Basel, Switzerland, 2019; pp. 3–52.

10. Gelfand, I.M.; Yaglom, A.M. Computation of the amount of information about a stochastic function contained in another such function. Uspehi Mat. Nauk 1957, 12, 3–52. (In Russian)

11. Tarasenko, F.P. Introduction in the course of Information Theory; Tomsk State University: Tomsk, Russia, 1963; p. 239. (In Russian)

12. Schrödinger, E. What Does Life Is from the Physics Point of View? State Edition of Foreign Literature: Moscow, Russia, 1947; p. 147. (In Russian)

13. Arnold, V.I. Mathematical Methods of Classical Mechanics; Translated by K. Vogtmann and A. Weinstein; Graduate Texts in Mathematics, 60; Springer: New York, NY, USA, 1989; 516p.

14. Pinsker, M.S. Entropy, entropy rate and entropy stability of Gaussian random variables and processes. Doklady AN SSSR 1960, 133, 531–534. (In Russian)

15. Petrov, V.V.; Zaporozhets, A.V. Principle of developable systems. Doklady AN SSSR 1975, 224, 779–782. (In Russian)

16. Szilard, L. On the reduction of entropy in a thermodynamic system by the intervention of intelligent beings. Z. Phys. 1929, 53, 840–856. (In German)

17. Brillouin, L. Science and Information Theory; State Edition of Foreign Literature: Moscow, Russia, 1960; p. 392. (In Russian)

18. Brillouin, L. Thermodynamics, statistics, and information. Am. J. Phys. 1961, 29, 318–328.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rubtsov, V. On Energy–Information Balance in Automatic Control Systems Revisited. Entropy 2020, 22, 1300. https://doi.org/10.3390/e22111300