Abstract

In this paper, a noise enhanced binary hypothesis-testing problem is studied for a variable detector under constraints that allow the detection probability to be increased and the false-alarm probability to be decreased simultaneously. According to the constraints, three alternative cases are proposed. The first two cases concern minimization of the false-alarm probability and maximization of the detection probability, respectively, each without deterioration of the other. The third case is achieved by a randomization of the two optimal noise enhanced solutions obtained in the first two limit cases. Furthermore, the noise enhanced solutions that satisfy the three cases are determined both when randomization between different detectors is allowed and when it is not. In addition, the practicality of the third case is demonstrated from the perspective of Bayes risk. Finally, numerical examples and conclusions are presented.

1. Introduction

Stochastic resonance (SR) is a physical phenomenon where noise plays an active role in enhancing the performance of some nonlinear systems under certain conditions. Since the concept of SR was first put forward by Benzi et al. in 1981 [1], the positive effect of SR has been widely investigated and applied in various research fields, such as physics, chemistry, biology and electronics [2,3,4,5,6,7,8,9,10]. In signal detection theory, it is also called noise enhanced detection [3]. The classical signature of noise enhanced detection can be an increase in output signal-to-noise ratio (SNR) [11,12,13] or mutual information (MI) [14,15,16,17,18], a decrease in Bayes risk [19,20,21] or probability of error [22], or an increase in detection probability without increasing the false-alarm probability [23,24,25,26,27,28].

Studies in recent years indicate that the detection performance of a nonlinear detector in a hypothesis testing problem can be improved by adding additive noise to the system input or adjusting the background noise level based on the Bayesian [19], Minimax [20] or Neyman–Pearson [24,25,28] criteria. In [19], S. Bayram et al. analyzed the noise enhanced M-ary composite hypothesis testing problem in a restricted Bayesian framework. Specifically, the minimax criterion can be used when the prior probabilities are unknown. The research results showed that the noise enhanced detection in the Minimax framework [20] can be viewed as a special case in the restricted Bayesian framework.

Numerous studies have addressed how to increase the detection probability according to the Neyman–Pearson criterion. In [23], S. Kay showed that for the detection of a direct current (DC) signal in a Gaussian mixture noise background, the detection probability of a sign detector can be enhanced by adding a suitable white Gaussian noise under certain conditions. In [24], H. Chen et al. established a mathematical framework to analyze the mechanism of the SR effect on the binary hypothesis testing problem according to the Neyman–Pearson criterion for a fixed detector. The optimal additive noise that maximizes the detection probability without increasing the false-alarm probability, together with its probability density function (pdf), is derived in detail. In addition, sufficient conditions for improvability and non-improvability are given. In [27], Ashok Patel and Bart Kosko presented theorems and an algorithm to search for the optimal or near-optimal additive noise for the same problem as in [24] from another perspective. In [28], binary noise enhanced composite hypothesis-testing problems are investigated according to the Max-sum, Max-min and Max-max criteria, respectively. Furthermore, a noise enhanced detection problem for a variable detector according to the Neyman–Pearson criterion is investigated in [25]. Similar to [24,27,28], it only considers how to increase the detection probability and ignores the importance of decreasing the false-alarm probability.

Few researchers focus on how to reduce the false-alarm probability, and there is no evidence that the false-alarm probability cannot be decreased by adding additive noise without deteriorating the detection probability. In fact, decreasing the false-alarm probability without decreasing the detection probability is of practical significance. In [2], a noise enhanced model for a fixed detector is proposed, which considers how to use the additive noise to decrease the false-alarm probability and increase the detection probability simultaneously. However, it does not take into account the case where the detector is variable. When no randomization exists between different detectors, we just need to find the most appropriate detector, since the optimum solution for each detector can be obtained straightforwardly by utilizing the results in [2]. On the other hand, if the randomization between different detectors is allowed, some new noise enhanced solutions can be introduced by the randomization of multiple detector and additive noise pairs. Therefore, the aim of this paper is to find the optimal noise enhanced solutions for the randomization case.

In many cases, although the structure of the detector cannot be altered, some of its parameters can be adjusted to obtain a better performance. In some particular situations, the structure itself can also be changed. In this paper, we consider the noise enhanced model established in [2] for a variable detector, where a candidate set of decision functions can be utilized. Instead of solving the model directly, three alternative cases are considered. The first two cases are to minimize the false-alarm probability and to maximize the detection probability, respectively, each without deterioration of the other. When the randomization between the detectors is not allowed, the first two cases can be realized by choosing a suitable detector and adding the corresponding optimal additive noise. When the randomization between different detectors is allowed, the optimal noise enhanced solution for each of the first two cases is a suitable randomization between two detector and additive noise pairs. Whether the randomization between the detectors is allowed or not, the last case can be obtained by a convex combination of the optimal noise enhanced solutions for the first two cases with corresponding weights. In addition, the noise enhanced model also provides a solution to reduce the Bayes risk for the variable detector, which is different from the minimization of Bayes risk under the Bayesian criterion in [19], where the false-alarm and detection probabilities are not of concern.

The remainder of this paper is organized as follows. In Section 2, a noise enhanced binary hypothesis-testing model for a variable detector is established, which is simplified into three different cases. In Section 3, the forms of the noise enhanced solutions are discussed. The exact parameter values of these noise enhanced solutions are then determined in Section 4 for the case where randomization between the detectors is allowed. Numerical results are presented in Section 5 and the conclusions are provided in Section 6.

Notation: Lower-case bold letters denote vectors, is a K-dimensional observation vector; upper-case hollow letters denote sets, e.g., denotes a set of real numbers; is used to denote pdf, while its corresponding conditional counterpart; denotes the decision function, denotes a set of decision functions; denotes the Dirac function; , , , , , and denote convolution, summation, integral, expectation, minimum, maximum and argument operators, respectively; and denote infimum and supremum operators, respectively; denotes a Gaussian pdf with mean and variance .

2. Noise Enhanced Detection Model for Binary Hypothesis-Testing

2.1. Problem Formulation

A binary hypothesis-testing problem is considered as follows

where and denote the original and alternative hypotheses, respectively, is a K-dimensional observation vector, i.e., , denotes a set of real numbers, and is the pdf of under , . Let represent the decision function, which is also the probability of choosing , and . For a given , the original false-alarm probability and detection probability can be calculated as

The new noise modified observation is obtained by adding an independent additive noise to the original observation such that

Then the pdf of under can be formulated as the following convolutions of and ,

The noise modified false-alarm probability and detection probability for the given can be calculated by

such that

From (6) and (7), and are the respective expected values of and based on the distribution of the additive noise . Especially, and for the given according to (8).
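The expectation relation described above can be illustrated with a minimal sketch for a discrete additive noise, i.e., a noise pdf that is a weighted sum of Dirac functions. The function name `noise_modified_probability` and its arguments are hypothetical names introduced only for this illustration; `cond_prob` stands for the false-alarm (or detection) probability obtained when a constant noise value is added to the observation.

```python
from typing import Callable, Sequence


def noise_modified_probability(
    cond_prob: Callable[[float], float],
    noise_points: Sequence[float],
    noise_weights: Sequence[float],
) -> float:
    """Expected value of cond_prob(n) under a discrete noise distribution.

    The noise takes the value noise_points[i] with probability
    noise_weights[i] (hypothetical names, scalar noise assumed).
    """
    if len(noise_points) != len(noise_weights):
        raise ValueError("points and weights must have the same length")
    if any(w < 0.0 for w in noise_weights):
        raise ValueError("weights must be non-negative")
    if abs(sum(noise_weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    # Weighted average of the per-noise-value probabilities.
    return sum(w * cond_prob(n) for n, w in zip(noise_points, noise_weights))
```

With a single mass point at zero, the function returns `cond_prob(0.0)`, which corresponds to the original (noise-free) probability.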

2.2. Noise Enhanced Detection Model for a Variable Detector

Actually, although the detector cannot be substituted in many cases, some parameters of the detectors can be adjusted to achieve a better detection performance, such as the decision threshold. Even in some particular cases, the structure of the detector can also be altered. Instead of the strictly fixed , a candidate set of decision functions is provided to be utilized here. As a result, for a variable detector, the optimization of detection performance can be achieved by adding a suitable noise and/or changing the detector. If the randomization between the detectors is allowed, the optimal solution of the noise enhanced detection problem would be a combination of multiple decision function and additive noise pairs.

Under the constraints that and , a noise enhanced detection model for a variable detector is established as follows

where and represent the improvements of false-alarm and detection probabilities, respectively. Let be the overall improvement of the detectability. Namely, is the sum of and such that .

It is obvious that the ranges of and are limited and the maximum values of and cannot be obtained at the same time. In order to solve the noise enhanced detection model, we can first consider two limit cases, i.e., the noise enhanced optimization problems of maximizing and , respectively, under the constraints that and . Then a new suitable solution of the noise enhanced detection model in (9) can be obtained by a convex combination of two optimal noise enhanced solutions obtained in the two limit cases with corresponding weights. Consequently, the new noise enhanced solution can always guarantee and , and the corresponding value of is between the values of obtained in the two limit cases. The three cases discussed above can be formulated as below.

- (i)

- When , the minimization of is explored such that the maximum achievable is denoted by , the corresponding is remarked as and . Thus, the corresponding false-alarm and detection probabilities can be written as below,

- (ii)

- When , the maximum is searched such that the corresponding and are denoted by and , respectively, where and is the maximum achievable . The corresponding false-alarm and detection probabilities can be expressed by

- (iii)

- A noise enhanced solution obtained as a randomization between two optimal solutions of case (i) and case (ii) with weights and , respectively, is applied in this case. Combining (10) and (11), the corresponding false-alarm and detection probabilities are calculated by

Naturally, and . It is obvious that case (iii) is identical to case (i) when , while case (iii) is the same as case (ii) when . In addition, when , more noise enhanced solutions can be obtained by adjusting the value of to increase the detection probability and decrease the false-alarm probability simultaneously.

Notably, if and are required, we only need to replace and in this model with and , respectively.
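The convex combination used in case (iii) can be sketched as follows. This is only an illustration under assumptions: the weight is called `eta` here, with `eta` applied to the case (i) solution and `1 - eta` to the case (ii) solution, and each solution is represented by a hypothetical `(false_alarm, detection)` tuple.

```python
from typing import Tuple

OperatingPoint = Tuple[float, float]  # (false-alarm prob., detection prob.)


def combine_cases(case_i: OperatingPoint,
                  case_ii: OperatingPoint,
                  eta: float) -> OperatingPoint:
    """Randomize between the case (i) and case (ii) solutions.

    The case (i) solution is used with probability eta and the case (ii)
    solution with probability 1 - eta (assumed weight convention).
    """
    if not 0.0 <= eta <= 1.0:
        raise ValueError("eta must lie in [0, 1]")
    p_fa = eta * case_i[0] + (1.0 - eta) * case_ii[0]
    p_d = eta * case_i[1] + (1.0 - eta) * case_ii[1]
    return p_fa, p_d
```

Because both probabilities are linear in `eta`, any value of `eta` in [0, 1] yields an operating point on the segment joining the two limit cases, so constraints satisfied by both limit cases remain satisfied.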

3. Form of Noise Enhanced Solution under Different Situations

For the noise enhanced detection problem, when the detector is fixed, we only need to consider how to find the suitable or optimal additive noise. Nevertheless, when the detector is variable, we also need to consider how to choose a suitable detector. In this section, the noise enhanced detection problem for the variable detector is discussed for the case where the randomization between different detectors is allowed or not.

3.1. No Randomization between Detectors

For the case where no randomization between detectors is allowed, only one detector can be applied for each decision, thereby the noise enhanced detection problem for the variable detector can be simplified to that for a fixed detector . That means the optimal noise enhanced solution is to find the optimal detector from and add the corresponding optimal additive noise. The actual noise modified false-alarm and detection probabilities can be expressed by and .

For any , the corresponding and can be obtained straightforwardly by utilizing the results in [2]. Then the optimal detectors corresponding to case (i) and case (ii) can be selected as and . When , the optimal detector for case (iii) is selected as . When , if , is selected as the optimal detector for case (iii). Otherwise, is selected.
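For the non-randomization case, the selection step for cases (i) and (ii) can be sketched as a simple search over the candidate detectors. The sketch assumes that the per-detector optima have already been computed (for instance with the results of [2]) and that the selection rule is "smallest false-alarm probability for case (i), largest detection probability for case (ii)". The dictionary layout and the keys `"pfa_case_i"` and `"pd_case_ii"` are hypothetical.

```python
from typing import Dict, Tuple


def select_detectors(per_detector: Dict[str, Dict[str, float]]) -> Tuple[str, str]:
    """Pick one detector for case (i) and one for case (ii).

    per_detector maps a detector label to its precomputed optima:
      "pfa_case_i": minimum false-alarm probability reachable with that detector
      "pd_case_ii": maximum detection probability reachable with that detector
    """
    best_case_i = min(per_detector, key=lambda k: per_detector[k]["pfa_case_i"])
    best_case_ii = max(per_detector, key=lambda k: per_detector[k]["pd_case_ii"])
    return best_case_i, best_case_ii
```

The two selected detectors need not coincide, which is why case (iii) requires an additional rule when no randomization between detectors is allowed.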

3.2. Randomization between Detectors

For the case where the randomization between different detectors is allowed, multiple detector and additive noise pairs can be utilized for each decision, thereby the actual noise modified false-alarm and detection probabilities can be expressed as

where is the number of detectors involved in the noise enhanced solution, and are the respective false-alarm and detection probabilities for , is the probability of , and .

Let , then we have and where is a function which maps to based on function . Thus can be a one-to-one or one-to-multiple function with respect to (w.r.t.) , and vice versa. In addition, let be the set of all pairs of , i.e., . On the basis of these definitions, the forms of the optimal enhanced solutions for case (i) and case (ii) can be presented in the following theorem.

Theorem 1.

The optimal noise enhanced solution for case (i) (case (ii)) is a randomization of at most two detectors and discrete vector pairs, i.e., and with the corresponding probabilities. The corresponding proof is presented in Appendix A and omitted here.

4. Solutions of the Noise Enhanced Model with Randomization

In this section, we will explore and find the optimal enhanced solutions corresponding to cases (i) and (ii), then achieve case (iii) through utilizing the solutions of cases (i) and (ii). From (8), and can be treated as the false-alarm and detection probabilities, respectively, which are obtained by choosing a suitable and adding a discrete vector to . Thus we can find the minimum marked as and the maximum denoted by from the set . Then realizations of the case (i) and case (ii) can start with and , respectively. A more detailed solving process is given as follows.

4.1. The Optimal Noise Enhanced Solution for Case (i)

In this subsection, the main goal is to determine the exact values of two detectors and constant vector pairs in the optimal noise enhanced solution for case (i).

Define and . Namely, and are the minimum corresponding to a given for a fixed and for all , respectively. According to the definitions of and , is rewritten as , and the maximum corresponding to can be denoted by . Combining with the location of and Theorem 1, we have the following theorem.

Theorem 2.

If , then and , the minimum achievable is obtained by choosing the detector and adding a discrete vector . If , the optimal noise enhanced solution that minimizes is a randomization of two detectors and discrete vector pairs, i.e., and with probabilities and , and the corresponding . The corresponding proof is given in Appendix B.

Obviously, when , the detector and constant vector that minimizes can be determined by and . Moreover, and .

In order to determine the exact values of , and for the case of , an auxiliary function is provided. There exists at least one that makes obtain the same minimum value marked as in two intervals and . The maximum corresponding to in and are expressed by and , respectively. As a result, the optimal false-alarm probability and the corresponding detection probability can be calculated as

where , and are determined by and , and are determined by and . As a result, and .
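The two-pair structure stated in Theorems 1 and 2 can be explored numerically with a brute-force sketch. It assumes a finite list of candidate operating points, each a hypothetical `(false_alarm, detection)` tuple produced by one detector and one constant noise vector, and a lower bound `beta` on the detection probability. It is not the analytical procedure of this section, only an exhaustive check over pairs.

```python
from itertools import combinations_with_replacement
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (false-alarm prob., detection prob.)


def min_false_alarm(points: List[Point], beta: float,
                    tol: float = 1e-12) -> Optional[Tuple[float, float, Point, Point]]:
    """Minimize lam*f0_a + (1-lam)*f0_b subject to lam*f1_a + (1-lam)*f1_b >= beta.

    Returns (p_fa, lam, point_a, point_b), or None if no pair is feasible.
    The objective is linear in lam, so only lam = 0, lam = 1 and the value
    that makes the constraint tight need to be examined for each pair.
    """
    best = None
    for a, b in combinations_with_replacement(points, 2):
        candidates = [0.0, 1.0]
        if a[1] != b[1]:
            lam_tight = (beta - b[1]) / (a[1] - b[1])
            if 0.0 <= lam_tight <= 1.0:
                candidates.append(lam_tight)
        for lam in candidates:
            p_d = lam * a[1] + (1.0 - lam) * b[1]
            if p_d < beta - tol:
                continue  # detection constraint violated
            p_fa = lam * a[0] + (1.0 - lam) * b[0]
            if best is None or p_fa < best[0]:
                best = (p_fa, lam, a, b)
    return best
```

In the small usage example below, mixing two points gives a lower false-alarm probability than any single point that meets the detection constraint.

```python
pts = [(0.0, 0.0), (0.4, 0.8), (0.3, 0.5)]
result = min_false_alarm(pts, beta=0.5)
```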

4.2. The Optimal Noise Enhanced Solution for Case (ii)

The focus of this subsection is to determine the exact values of the parameters in the optimal noise enhanced solution for case (ii).

Define and , such that and are the maximum corresponding to a given for a fixed and all , respectively. In addition, since denotes the maximum , and the minimum corresponding to can be denoted by . Combined with the location of and Theorem 1, the following theorem is obtained.

Theorem 3.

If , then and , the maximum achievable in case (ii) is obtained by choosing the detector and adding a constant vector to . Otherwise, the maximization of in case (ii) is obtained by a randomization of two pairs of and with the probabilities and , respectively, and the corresponding . The corresponding proof is similar to that of Theorem 2 and omitted.

According to Theorem 3, when , the detector and constant vector that maximizes in case (ii) is determined by and . Also, and .

In addition, in order to determine the exact values of , and that maximizes when , we define an auxiliary function that . There is at least one that makes obtain the same maximum value denoted by in two intervals and . The minimum corresponding to in and are expressed by and , respectively. As a consequence, the optimal detection probability and the corresponding false-alarm probability are recalculated as

where , and are determined by the two equations and , and are determined by and . Then we have and .

4.3. The Suitable Noise Solution for Case (iii)

According to the analyses in Section 4.1 and Section 4.2, the model in (9) can be achieved by choosing if and/or choosing if . When and hold at the same time, the model can also be achieved by a randomization of two detectors and noise pairs and with probabilities and , respectively, where .

If and , the model in (9), i.e., case (iii) can be achieved by the randomization of the two optimal noise enhanced solutions for case (i) and case (ii) with probabilities and , respectively, where . In other words, case (iii) can be achieved by a suitable randomization of , , , and with probabilities , , , and , respectively, as shown in Table 1.

Table 1.

The probability of each component in the suitable noise enhanced solution for case (iii).

The corresponding false-alarm and detection probabilities are calculated as

where , and . In particular, denotes case (i) and denotes case (ii). It is clear that different available false-alarm and detection probabilities can be obtained by adjusting the value of under the constraints that and .

From the perspective of Bayesian criterion, the noise modified Bayes risk can be expressed in the form of a false-alarm and detection problem such that

where is the prior probability of , is the cost of choosing when is true, , and if . According to case (iii), the improvement of Bayes risk can be obtained by

where is the Bayes risk of the original detector. As a result, case (iii) provides a solution to decrease the Bayes risk.
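The dependence of the Bayes risk on the false-alarm and detection probabilities can be made concrete with a short sketch. It uses the standard textbook expression for the binary Bayes risk and assumes the convention that `c_ji` is the cost of choosing hypothesis j when hypothesis i is true; the function and argument names are hypothetical, and the default costs correspond to a uniform cost assignment.

```python
def bayes_risk(p_fa: float, p_d: float, prior_h0: float,
               c00: float = 0.0, c10: float = 1.0,
               c01: float = 1.0, c11: float = 0.0) -> float:
    """Bayes risk of a binary detector from its operating point.

    c_ji is the cost of deciding H_j when H_i is true (assumed convention).
    """
    prior_h1 = 1.0 - prior_h0
    risk_under_h0 = c00 * (1.0 - p_fa) + c10 * p_fa   # conditional risk given H0
    risk_under_h1 = c01 * (1.0 - p_d) + c11 * p_d     # conditional risk given H1
    return prior_h0 * risk_under_h0 + prior_h1 * risk_under_h1
```

When a wrong decision costs more than a correct one under each hypothesis, the risk is non-decreasing in `p_fa` and non-increasing in `p_d`, so any solution that lowers the false-alarm probability without lowering the detection probability cannot increase the risk.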

When are unknown and are known, an alternative method considers the Minimax criterion, i.e., , where and are the conditional risks of choosing and , respectively, for the noise modified detector. Accordingly, case (i) and case (ii) also provide the optimal noise enhanced solution to minimize and , respectively, for the variable case.

The minimization of Bayes risk for a variable detector has also been discussed in [25]. There, however, the minimum Bayes risk is obtained without considering the false-alarm and detection probabilities, which is the main difference between [25] and this work. In addition, the minimization of Bayes risk in [25] is studied only under uniform cost assignment (UCA), i.e., and , when is known.

5. Numerical Results

In this section, numerical detection examples are given to verify the theoretical conclusions presented in the previous sections. A binary hypothesis-testing problem is given by

where is a K-dimensional observation vector, , is a known signal and are i.i.d. symmetric Gaussian mixture noise samples with the pdf

where . Let , , and . A general decision process of a suboptimal detector is expressed as

where is the decision threshold.

5.1. A Detection Example for

In this subsection, suppose that and

The corresponding decision function is

When we add an additive noise to , the noise modified decision function can be written as

It is obvious from (27) that the detection performance obtained by setting the threshold as and adding a noise is identical to that achieved by keeping the threshold as zero and adding a noise . As a result, the optimal noise enhanced performances obtained are the same for different thresholds. In other words, the randomization between different thresholds cannot improve the optimum performance further and only the non-randomization case should be considered in this example. According to (8), we have

where . Based on the analysis on Equation (8), the original false-alarm and detection probabilities are and , respectively.
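For a scalar observation, the false-alarm and detection probabilities as functions of a constant added noise value can be sketched numerically. The sketch rests on several assumptions that are not confirmed by the text above: the detector decides in favor of the alternative hypothesis when the noise modified observation exceeds the threshold, the background noise pdf is an equal-weight mixture of two Gaussians with means `-mu` and `+mu` and common standard deviation `sigma`, and the signal is a constant `amplitude`. All names and parameter values are hypothetical.

```python
import math


def q_function(x: float) -> float:
    """Standard Gaussian tail probability Q(x) = P(Z > x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def mixture_tail(t: float, mu: float, sigma: float) -> float:
    """P(v > t) for v ~ 0.5*N(-mu, sigma^2) + 0.5*N(mu, sigma^2)."""
    return 0.5 * q_function((t + mu) / sigma) + 0.5 * q_function((t - mu) / sigma)


def false_alarm_given_noise(n: float, threshold: float,
                            mu: float, sigma: float) -> float:
    """P(v + n > threshold) under the null hypothesis (observation = v)."""
    return mixture_tail(threshold - n, mu, sigma)


def detection_given_noise(n: float, threshold: float, amplitude: float,
                          mu: float, sigma: float) -> float:
    """P(amplitude + v + n > threshold) under the alternative hypothesis."""
    return mixture_tail(threshold - n - amplitude, mu, sigma)
```

Both functions depend on the threshold and the noise value only through their difference, which reflects the equivalence noted in the text between shifting the threshold and shifting the added noise. Both are also increasing in the noise value under these assumptions.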

From the definition of function , , and are monotonically increasing with and for any . In addition, both and are one-to-one mapping functions w.r.t. . Therefore, we have and for any . Furthermore, and . The relationship between and is one-to-one, as well as that between and . As a result, , , , and for any . That is to say, and , where and are the respective optimal noise components for case (i) and case (ii) for any . From [2,24], we have

Then the pdf of the optimal additive noise corresponding to case (i) and case (ii) for the detector given in (26) can be expressed as

where and . Thus the suitable additive noise for case (iii) can be given by

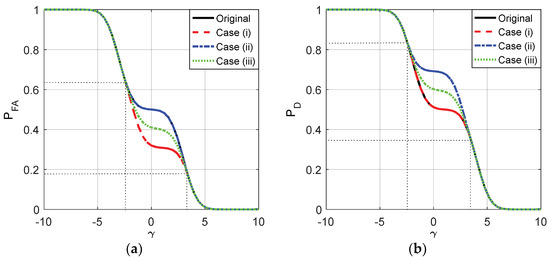

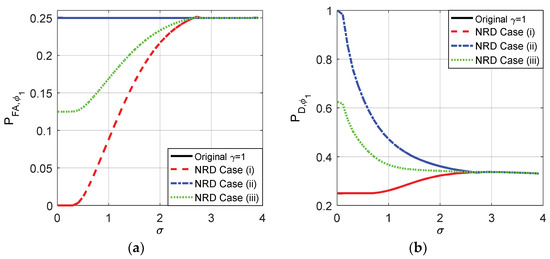

where . In this example, let . The false alarm and detection probabilities for the three cases versus different are shown in Figure 1.

Figure 1.

and as functions of for the original detector and the three cases where , , and

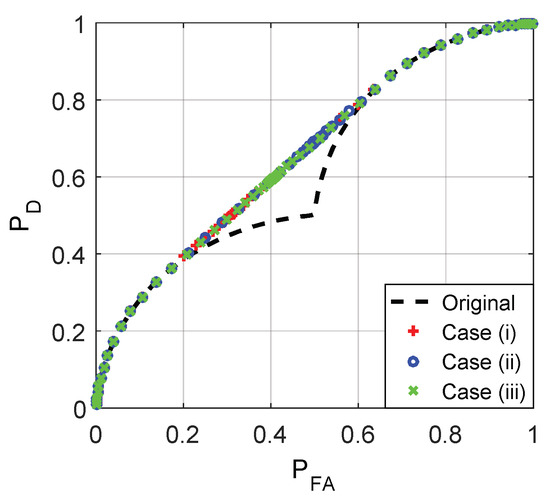

As plotted in Figure 1, with the increase of , the false alarm and detection probabilities for the three cases and the original detector gradually decrease from 1 to 0, and the noise enhanced phenomenon only occurs when . Namely, the detection performance can be improved by adding additive noise when the value of is between −2.5 and 3.5. When , and , the corresponding and , thereby the additive noises as shown in (32) and (33) exist to improve the detection performance. Furthermore, the receiver operating characteristic (ROC) curves for the three cases and the original detector are plotted in Figure 2. The ROC curves for the three cases overlap with each other exactly, and the detection probability can be increased by adding additive noise only when the false-alarm probability is between 0.1543 and 0.6543.

Figure 2.

Receiver operating characteristic (ROC) curves for the original detector and the three cases where , , and .

Given and the prior probability , , the noise enhanced Bayes risk obtained according to case (iii) is given by

where and . Let , and , then the Bayes risk of the original detector is calculated as

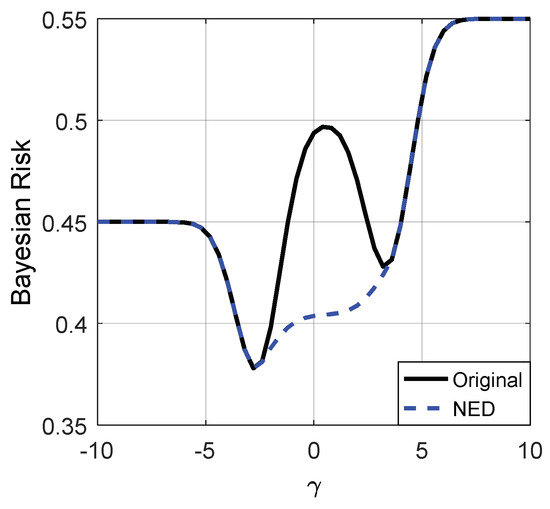

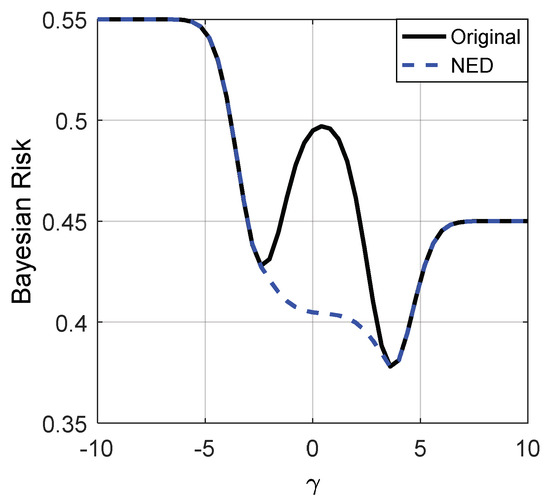

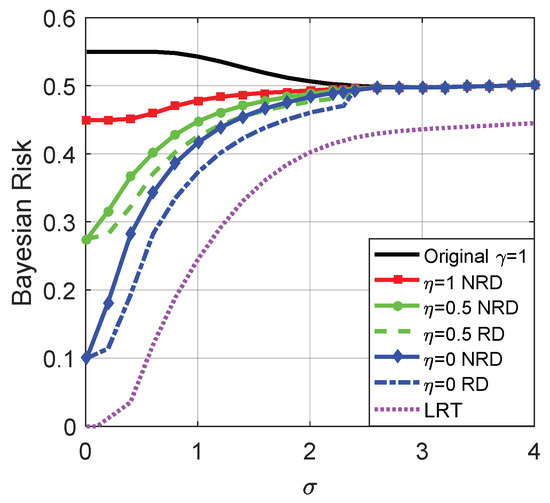

Figure 3 and Figure 4 depict the Bayes risks of the noise enhanced and the original detectors versus different for and 0.55, respectively.

Figure 3.

Bayes risks of the noise enhanced and original detectors for . “NED” denotes the noise enhanced detector.

Figure 4.

Bayes risks of the noise enhanced and the original detectors for .

From Figure 3 and Figure 4, we can see that when the decision threshold is very small, the Bayes risks of the noise enhanced and the original detectors are close to . The Bayes risk can be decreased by adding additive noise only when . With the increase of , the difference between the Bayes risks of the noise enhanced detector and the original detector first increases and then decreases to zero, and reaches the maximum value when . If the decision threshold is large enough, the Bayes risks for the two detectors are close to . In addition, whether the detection performance can be improved via additive noise does not depend on the values of , which is consistent with (35).

5.2. A Detection Example for

In this example, suppose that

where . When , we have

It is obvious that when and when , which implies the detection result is invalid if or . In addition, the detection performance is the same for (). Therefore, suppose that two alternative thresholds are and , the corresponding decision functions are denoted by and , respectively. Let , then we have

where , is the decision function given in (26) with . Based on the theoretical analysis in Section 4, , and . Furthermore, and .

Through a series of analyses and calculations, it is true that , where and are determined by and , respectively. Similarly, , where and are determined by and , respectively. Moreover, where .

On the other hand, where and are determined by and . Moreover, , where and . As a consequence, where .

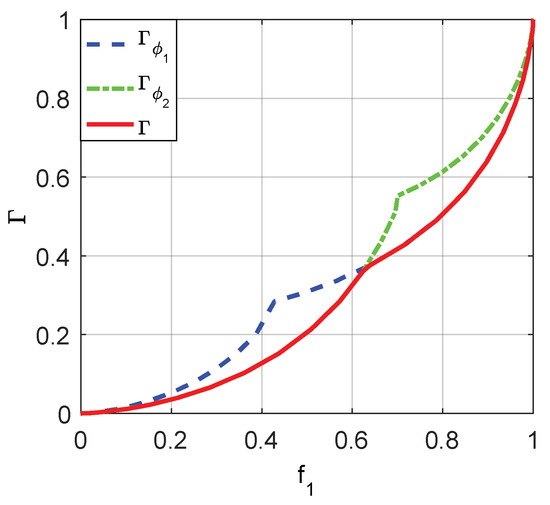

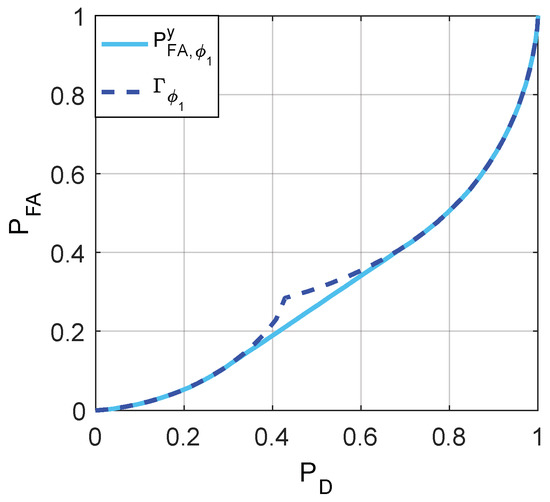

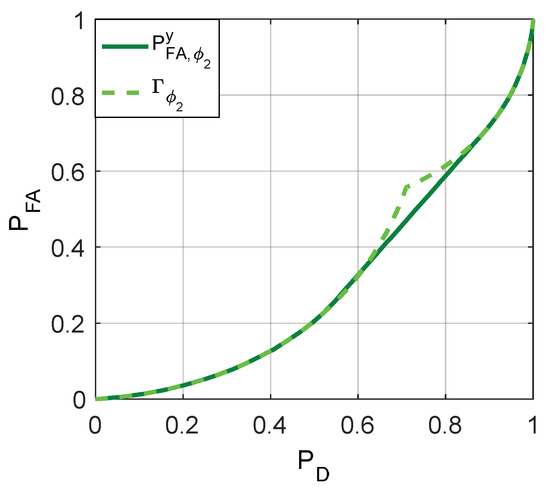

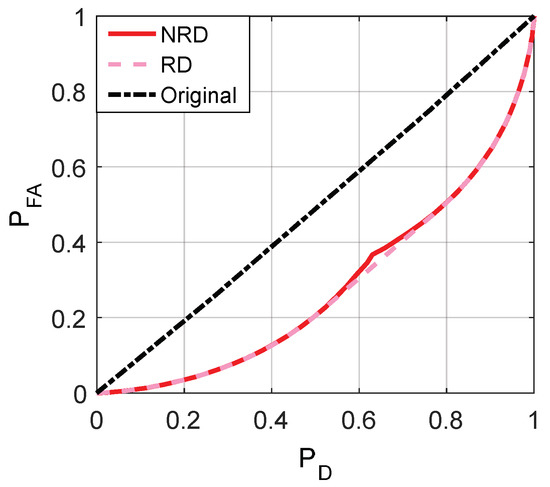

The minimum achievable false-alarm probabilities for and , i.e., and , can be obtained, respectively, by utilizing the relationships between , , and as depicted in Figure 5. Then Figure 6, Figure 7 and Figure 8 are given to illustrate the relationship between and clearly under two different decision thresholds. As illustrated in Figure 6, for the case of threshold , the false-alarm probability can be decreased by adding an additive noise when . Correspondingly, the false-alarm probability can be decreased by adding an additive noise only when for as shown in Figure 7. The minimum false-alarm probability for a given without threshold randomization is , which is plotted in Figure 8 and represented by the legend “NRD”.

Figure 5.

The relationships between , and .

Figure 6.

and the achievable minimum obtained when .

Figure 7.

The achievable minimum obtained when and .

Figure 8.

Comparison of minimum achieved by the original detector and noise enhanced decisions for the “NRD” and “RD” cases, which denote that randomization between the thresholds is not allowed and is allowed, respectively.

As illustrated in Figure 8, when the randomization between decision thresholds is allowed, the noise modified false-alarm probability can be decreased further compared with the case where no randomization between decision thresholds is allowed for . Actually, the minimum achievable noise modified false-alarm probability is obtained by a suitable randomization between two threshold and discrete vector pairs, i.e., and , with probabilities and , respectively, such that

Remarkably, the minimum false-alarm probability obtained in the “NRD” case is always superior to the original false-alarm probability for any .

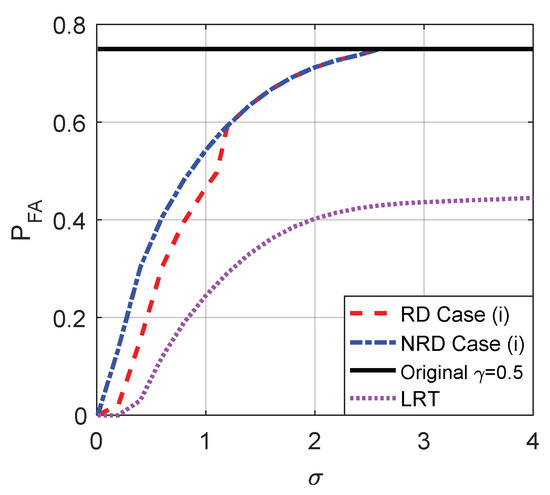

If the randomization between different decision thresholds is not allowed, the detection probability can be increased by adding additive noise when and for and , respectively. When the randomization is allowed, for , the maximum achievable detection probability can be obtained by a randomization of two pairs and with the corresponding weights and .

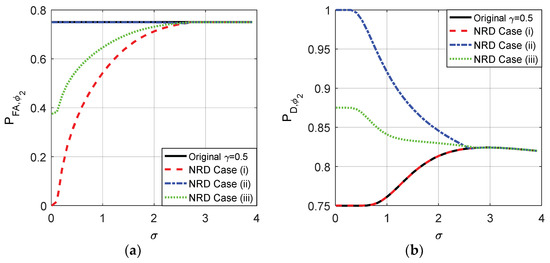

The probabilities of false-alarm and detection for different of the original detector and cases (i), (ii), and (iii) when the decision threshold and are compared in Figure 9 and Figure 10, respectively. As shown in Figure 9a,b, the original maintains 0.25 for any and the original is between 0.25 and 0.3371 when . As plotted in Figure 10a,b, the original maintains 0.75 and the original is between 0.75 and 0.8242 when .

Figure 9.

and as functions of for the original detector and the three cases without randomization existing between thresholds when for , , and .

Figure 10.

and as functions of for the original detector and the three cases without randomization existing between thresholds when for , , and .

According to the analyses above, the original and obtained when and are in the interval where the noise enhanced detection could occur. When the randomization between the thresholds is not allowed, according to the theoretical analysis, the optimal solutions of the noise enhanced detection performance for both case (i) and (ii) are to choose a suitable threshold and add the corresponding optimal noise to the observation. After some comparisons, the suitable threshold is just the original detector under the constraints in which and in this example. Naturally, case (iii) can be achieved by choosing the original detector and adding the noise which is a randomization between two optimal additive noises obtained in case (i) and case (ii). The details are plotted in Figure 9 and Figure 10 for the original threshold and 0.5, respectively.

From Figure 9 and Figure 10, it is clear that the smaller the , the smaller the false-alarm probability and the larger the detection probability. When is close to 0, the false-alarm probability obtained in case (i) is close to 0 and the detection probability obtained in case (ii) is close to 1. As shown in Figure 9, compared to the original detector, the noise enhanced false-alarm probability and the detection probability obtained in case (iii) are decreased by 0.125 and increased by 0.35, respectively, when is close to 0 where and . As shown in Figure 10, compared to the original detector, the noise enhanced false-alarm probability obtained in case (iii) is decreased by 0.375 and the corresponding detection probability is increased by 0.125, respectively, when and . With the increase of , the improvements of false-alarm and detection probabilities decrease gradually to zero as shown in Figure 9 and Figure 10. When , because the pdf of gradually becomes a unimodal noise, the detection performance cannot be enhanced by adding any noise.

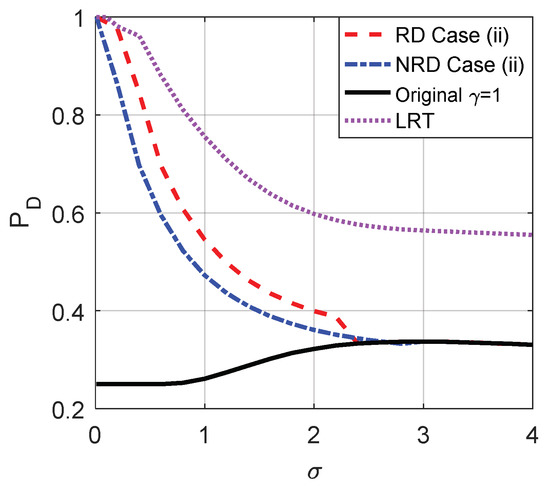

After some calculations, we find that under the constraints that and , the false-alarm probability cannot be decreased further by allowing randomization between the two thresholds compared to the non-randomization case when the original threshold is . This means that even if randomization is allowed, the minimum false-alarm probability in case (i) is obtained by choosing threshold and adding the corresponding optimal additive noise, and the achievable minimum false-alarm probability in case (i) is the same as that plotted in Figure 9. In contrast, the detection probability obtained under the same constraints when randomization exists between different thresholds is greater than that obtained in the non-randomization case for , which is shown in Figure 11. Based on the analysis in Section 3.2, the maximum detection probability in case (ii) can be achieved by a suitable randomization of the two decision threshold and noise pairs and with probabilities and , respectively, where . For example, , , and when . In addition, Figure 11 also plots the under the likelihood ratio test (LRT) based on the original observation . It is obvious that the obtained under LRT is superior to that obtained in case (ii) for each . Although the performance of LRT is much better than that of the original and noise enhanced decision solutions, its implementation is much more complicated.

Figure 11.

Comparison of as function of for the original detector, LRT and case (ii) with or without randomization between thresholds, respectively, when , and the original threshold . “LRT” is the obtained under the Likelihood ratio test (LRT) based on the original observation .

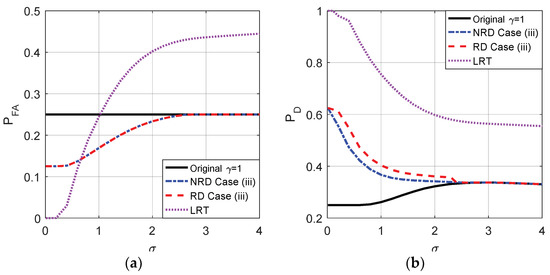

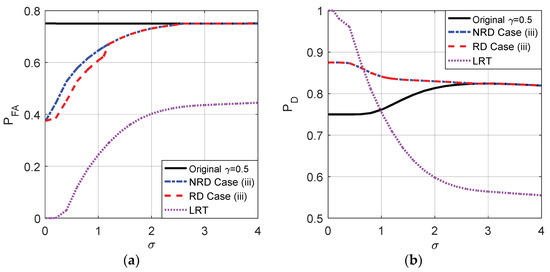

Naturally, case (iii) can also be achieved by randomization of the noise enhanced solution for case (i) and the new solution for case (ii) with the probabilities and , respectively. Figure 12 compares the probabilities of false-alarm and detection obtained by the original detector, LRT and case (iii), with and without randomization, where and the original threshold is . As shown in Figure 12, compared to the non-randomization case, the detection probability obtained in case (iii) is further improved for by allowing randomization of the two thresholds, while the false-alarm probability cannot be further decreased. Moreover, the of LRT increases when increases and will be greater than that obtained in case (iii) when and the original detector when .

Figure 12.

Comparison of and as functions of for the original detector, LRT and case (iii) with or without randomization between thresholds, respectively, when , , and the original threshold .

Figure 13 illustrates the Bayes risks for the original detector, LRT and noise enhanced decision solutions, with and without randomization between detectors, for different , where , , and denote case (i), case (ii), and case (iii), respectively. As plotted in Figure 13, the Bayes risks obtained in cases (i), (ii), and (iii) are smaller than that of the original detector, and the Bayes risk of LRT is the smallest. Furthermore, the Bayes risk obtained in the randomization case is smaller than that obtained in the non-randomization case.

Figure 13.

Bayes risks of the original detector, LRT and noise enhanced decision solutions for different when , , and the original threshold .
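The relation between the Bayes risks of the three cases can be illustrated with a minimal Python sketch. It assumes uniform costs, so that the Bayes risk reduces to the prior-weighted sum of the false-alarm and miss probabilities; the operating points, the prior `prior_h0`, the weight `eta`, and the function names are all hypothetical and are not taken from the figures.

```python
def bayes_risk(p_fa, p_d, prior_h0):
    """Bayes risk under uniform costs (an assumption of this sketch):
    prior-weighted sum of the false-alarm and miss probabilities."""
    return prior_h0 * p_fa + (1.0 - prior_h0) * (1.0 - p_d)


def randomize(point_a, point_b, eta):
    """Operating point when point_a is used with probability eta, else point_b."""
    return tuple(eta * a + (1.0 - eta) * b for a, b in zip(point_a, point_b))


# Hypothetical (P_FA, P_D) operating points for illustration only.
case_i = (0.05, 0.60)    # stands in for a case (i) solution
case_ii = (0.10, 0.75)   # stands in for a case (ii) solution
prior_h0, eta = 0.4, 0.5

case_iii = randomize(case_i, case_ii, eta)
risk_i = bayes_risk(*case_i, prior_h0)
risk_ii = bayes_risk(*case_ii, prior_h0)
risk_iii = bayes_risk(*case_iii, prior_h0)
print(risk_i, risk_ii, risk_iii)
```

Because this risk is linear in the false-alarm and detection probabilities, the risk of the randomized solution is the same weighted average of the two constituent risks, and therefore always lies between them.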

As shown in Figure 14, when the original threshold , under the constraints that and , the false-alarm probability can be greatly decreased by allowing randomization between different thresholds compared to the non-randomization case when . In addition, LRT performs best on . Accordingly, the minimum false-alarm probability in case (i) is obtained by a suitable randomization of and with probabilities and , respectively, where . A simple analysis shows that, under the same constraints, the detection probability obtained with randomization between different thresholds cannot be greater than that obtained in the non-randomization case. Thus, the maximum detection probability in case (ii) is the same as that illustrated in Figure 10, which is achieved by choosing a threshold and adding the corresponding optimal additive noise to the observation.

Figure 14.

Comparison of as a function of for the original detector, LRT and case (i) with or without randomization between thresholds, respectively, when , , and the original threshold .

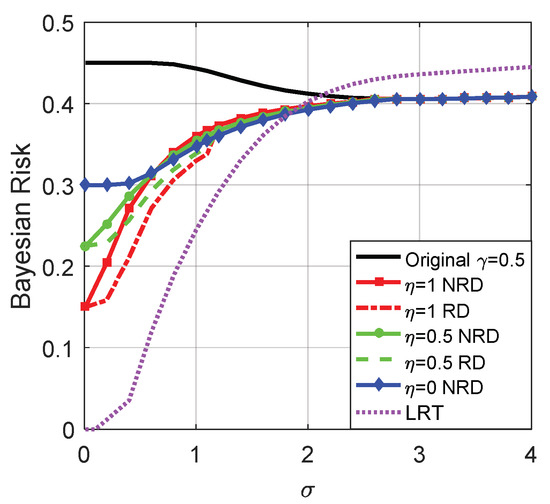

Case (iii) can also be achieved by the randomization of the noise enhanced solutions for case (i) and case (ii) with the probabilities and , respectively. As shown in Figure 15, compared to the non-randomization case, the false-alarm probability obtained in case (iii) is greatly reduced by allowing randomization of the two thresholds, while the detection probability cannot be increased, when the original threshold . Although the of LRT is always superior to that obtained in other cases, the of LRT becomes smaller than that obtained by the original detector and case (iii) when is sufficiently large.

Figure 15.

Comparison of and as functions of for the original detector, LRT and case (iii) with or without randomization between thresholds, respectively, when , , and the original threshold .

Figure 16 illustrates the Bayes risks for the original detector, LRT and the noise enhanced decision solutions for different . Again, , , and denote case (i), case (ii) and case (iii), respectively. As plotted in Figure 16, the Bayes risks obtained in the three cases are smaller than that of the original detector for . The smallest of the three is achieved in case (i) if or case (ii) if when no randomization between the thresholds is allowed, while it is achieved in case (i) if or case (ii) if when randomization between the thresholds is allowed. As expected, the Bayes risk obtained in the randomization case is not greater than that obtained in the non-randomization case. In addition, LRT achieves the minimum Bayes risk when and the maximum Bayes risk when .

Figure 16.

Bayes risks of the original detector, LRT and the noise enhanced decision solutions for different when , , and the original threshold .

As analyzed in Section 5.1, if the structure of a detector does not change with the decision threshold, the optimal noise enhanced detection performance is the same for different thresholds and can be achieved by adding the corresponding optimal noise. In such a case, no improvement can be obtained by allowing randomization between different decision thresholds. On the other hand, if different thresholds correspond to different structures, as shown in (39) and (40), randomization between different decision thresholds can introduce new noise enhanced solutions that further improve the detection performance under certain conditions.
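The mechanics of randomizing between two decision thresholds can be checked numerically. The following Python sketch does not implement the detectors of (39) and (40); it assumes a hypothetical scalar test with unit-variance Gaussian observations (mean 0 under the null hypothesis and mean `mean_h1` under the alternative), and all names and parameter values are illustrative. One of two thresholds is drawn per trial, and the empirical false-alarm and detection rates are compared with the convex combination of the two single-threshold operating points.

```python
import random
from math import erfc, sqrt


def q_function(x):
    """Upper-tail probability of the standard normal distribution."""
    return 0.5 * erfc(x / sqrt(2.0))


def mixture_rates(tau_a, tau_b, eta, mean_h1=1.0):
    """Convex combination of the operating points of thresholds tau_a and tau_b."""
    p_fa = eta * q_function(tau_a) + (1.0 - eta) * q_function(tau_b)
    p_d = eta * q_function(tau_a - mean_h1) + (1.0 - eta) * q_function(tau_b - mean_h1)
    return p_fa, p_d


def simulate_randomized_detector(tau_a, tau_b, eta, mean_h1=1.0,
                                 n_trials=100_000, seed=0):
    """Monte Carlo estimate of (P_FA, P_D) when tau_a is used with probability eta."""
    rng = random.Random(seed)
    false_alarms = 0
    detections = 0
    for _ in range(n_trials):
        # The threshold is drawn independently of the observation.
        tau = tau_a if rng.random() < eta else tau_b
        false_alarms += rng.gauss(0.0, 1.0) > tau      # observation under H0
        detections += rng.gauss(mean_h1, 1.0) > tau    # observation under H1
    return false_alarms / n_trials, detections / n_trials


empirical = simulate_randomized_detector(0.5, 2.0, 0.3)
analytic = mixture_rates(0.5, 2.0, 0.3)
print(empirical, analytic)
```

Since the threshold is selected independently of the observation, the operating point of the randomized detector lies on the segment joining the two single-threshold points. Whether that segment improves on the non-randomized solutions depends on the detector structure.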

6. Conclusions

In this study, a noise enhanced binary hypothesis-testing problem for a variable detector was investigated. Specifically, a noise enhanced model that can increase the detection probability and decrease the false-alarm probability simultaneously was formulated for a variable detector. To solve the model, three alternative cases were considered, i.e., cases (i), (ii), and (iii). First, the false-alarm probability was minimized without decreasing the detection probability in case (i). When randomization between different detectors is allowed, the optimal noise enhanced solution of case (i) was proven to be a randomization of at most two detector and additive noise pairs. In particular, under certain conditions, no improvement can be obtained by allowing randomization between different detectors. Furthermore, the detection probability was maximized without increasing the false-alarm probability in case (ii), and the corresponding optimal noise enhanced solution was determined both with and without randomization of detectors. In addition, case (iii) was achieved by randomizing between the two optimal noise enhanced solutions of cases (i) and (ii) with the corresponding weights. Notably, adjusting the weights provides numerous solutions that increase the detection probability and decrease the false-alarm probability simultaneously.

Moreover, the minimization of the Bayes risk based on the noise enhanced model was discussed for the variable detector. The Bayes risk obtained in case (iii) lies between those obtained in case (i) and case (ii). The noise enhanced Bayes risks obtained in the three cases are smaller than the original one obtained without additive noise. Cases (i) and (iii) are worth investigating, especially when the solution of case (ii) is not ideal or does not exist. For example, as shown in Figure 16, the Bayes risks obtained in cases (i) and (iii) are smaller than that obtained in case (ii) under certain conditions. Studies on cases (i) and (iii) thus supplement case (ii) and provide a more general noise enhanced solution for signal detection. Finally, the simulation results corroborated the theoretical analyses.

In future work, the noise enhanced detection problem can be studied under the maximum likelihood (ML) or the maximum a posteriori probability (MAP) criterion. The theoretical results can also be extended to the Rao test, which is a simpler alternative to the generalized likelihood ratio test (GLRT) [29], and applied to a stable system, a spectrum sensing problem in cognitive radio systems, and a decentralized detection problem [30,31,32]. In addition, a generalized noise enhanced parameter estimation problem based on the minimum mean square error (MMSE) criterion is another issue worth studying.

Author Contributions

Conceptualization, T.Y. and S.L.; Methodology, W.L.; Software, P.W.; Validation, T.Y. and S.L.; Formal Analysis, W.L.; Investigation, T.Y.; Resources, S.L.; Data Curation, J.G.; Writing-Original Draft Preparation, P.W.; Writing-Review & Editing, T.Y. and S.L. All authors have read and approved the final manuscript.

Funding

This research was partly supported by the National Natural Science Foundation of China (Grant No. 61701055), graduate scientific research and innovation foundation of Chongqing (Grant No. CYB17041), and the Basic and Advanced Research Project in Chongqing (Grant No. cstc2016jcyjA0134, No. cstc2016jcyjA0043).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 1.

Due to , is a two-dimensional linear space. Further, suppose that is the convex hull of . It can be verified that is also the set of all possible . In general, the optimal pairs of for cases (i) and (ii) can only exist on , i.e., the boundary of . Furthermore, according to Carathéodory’s theorem, an arbitrary point on can be expressed as a convex combination of no more than two elements in . Consequently, case (i) and case (ii) can be achieved by a randomization of at most two detector and discrete vector pairs.☐
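The geometric argument of this proof can be illustrated with a short Python sketch. It takes a finite, hypothetical set of achievable (false-alarm probability, detection probability) points with distinct false-alarm values, builds the upper boundary of their convex hull, and expresses the largest detection probability attainable at a target false-alarm probability as a mixture of at most two points. The function names and the sample points are assumptions made for illustration and do not come from the paper.

```python
def cross(o, a, b):
    """Cross product of vectors o->a and o->b (positive for a left turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def upper_hull(points):
    """Upper convex hull of 2-D points, ordered by increasing first coordinate."""
    hull = []
    for p in sorted(set(points)):
        # Drop the last vertex while it lies on or below the chord hull[-2] -> p.
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    return hull


def best_pd_at(p_fa_target, points):
    """Largest P_D at P_FA = p_fa_target, with the mixture that attains it."""
    hull = upper_hull(points)
    for left, right in zip(hull, hull[1:]):
        if left[0] <= p_fa_target <= right[0]:
            weight = (right[0] - p_fa_target) / (right[0] - left[0])
            p_d = weight * left[1] + (1.0 - weight) * right[1]
            return p_d, [(weight, left), (1.0 - weight, right)]
    raise ValueError("target false-alarm probability is outside the hull range")


# Hypothetical operating points (P_FA, P_D) of detector and constant-noise pairs.
points = [(0.05, 0.30), (0.10, 0.35), (0.15, 0.38), (0.20, 0.70), (0.30, 0.72)]
p_d, mixture = best_pd_at(0.10, points)
print(p_d, mixture)
```

In this example the mixture of the points at false-alarm probabilities 0.05 and 0.20 yields a higher detection probability at 0.10 than the single point (0.10, 0.35), which is the kind of gain that randomization between two pairs can provide.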

Appendix B

Proof of Theorem 2.

If , there must exist a detector and a constant vector that satisfy and . Thus, the minimum achievable is obtained by choosing the detector and adding a discrete vector to . For the case of , the optimal noise enhanced solution that minimizes is, according to Theorem 1, a randomization of two detector and discrete vector pairs, i.e., and with probabilities and . In addition, proof by contradiction can be used here to prove . First, suppose the minimum false-alarm probability is obtained when with a noise enhanced solution . Now consider another valid solution which is a randomization of and with probabilities and . Then the new noise modified detection and false-alarm probabilities can be calculated as

where the last inequality follows from . Therefore, since the result in (A2) contradicts the definition of .☐

References

- Benzi, R.; Sutera, A.; Vulpiani, A. The mechanism of stochastic resonance. J. Phys. A Math. Gen. 1981, 14, 453–457.

- Liu, S.; Yang, T.; Zhang, X.; Hu, X.; Xu, L. Noise enhanced binary hypothesis-testing in a new framework. Digit. Signal Process. 2015, 41, 22–31.

- Lee, I.; Liu, X.; Zhou, C.; Kosko, B. Noise-enhanced detection of subthreshold signals with carbon nanotubes. IEEE Trans. Nanotechnol. 2006, 5, 613–627.

- Wannamaker, R.A.; Lipshitz, S.P.; Vanderkooy, J. Stochastic resonance as dithering. Phys. Rev. E 2000, 61, 233–236.

- Dai, D.; He, Q. Multiscale noise tuning stochastic resonance enhances weak signal detection in a circuitry system. Meas. Sci. Technol. 2012, 23, 115001.

- Kitajo, K.; Nozaki, D.; Ward, L.M.; Yamamoto, Y. Behavioral stochastic resonance within the human brain. Phys. Rev. Lett. 2003, 90, 218103.

- Moss, F.; Ward, L.M.; Sannita, W.G. Stochastic resonance and sensory information processing: A tutorial and review of applications. Clin. Neurophys. 2004, 115, 267–281.

- Patel, A.; Kosko, B. Stochastic resonance in continuous and spiking neuron models with Levy noise. IEEE Trans. Neural Netw. 2008, 19, 1993–2008.

- McDonnell, M.D.; Stocks, N.G.; Abbott, D. Optimal stimulus and noise distributions for information transmission via suprathreshold stochastic resonance. Phys. Rev. E 2007, 75, 061105.

- Gagrani, M.; Sharma, P.; Iyengar, S.; Nadendla, V.S.; Vempaty, A.; Chen, H.; Varshney, P.K. On Noise-Enhanced Distributed Inference in the Presence of Byzantines. In Proceedings of the Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 28–30 September 2011.

- Gingl, Z.; Makra, P.; Vajtai, R. High signal-to-noise ratio gain by stochastic resonance in a double well. Fluctuat. Noise Lett. 2001, 1, L181–L188.

- Makra, P.; Gingl, Z. Signal-to-noise ratio gain in non-dynamical and dynamical bistable stochastic resonators. Fluctuat. Noise Lett. 2002, 2, L145–L153.

- Makra, P.; Gingl, Z.; Fulei, T. Signal-to-noise ratio gain in stochastic resonators driven by coloured noises. Phys. Lett. A 2003, 317, 228–232.

- Stocks, N.G. Suprathreshold stochastic resonance in multilevel threshold systems. Phys. Rev. Lett. 2000, 84, 2310–2313.

- Godivier, X.; Chapeau-Blondeau, F. Stochastic resonance in the information capacity of a nonlinear dynamic system. Int. J. Bifurc. Chaos 1998, 8, 581–589.

- Kosko, B.; Mitaim, S. Stochastic resonance in noisy threshold neurons. Neural Netw. 2003, 16, 755–761.

- Kosko, B.; Mitaim, S. Robust stochastic resonance for simple threshold neurons. Phys. Rev. E 2004, 70, 031911.

- Mitaim, S.; Kosko, B. Adaptive stochastic resonance in noisy neurons based on mutual information. IEEE Trans. Neural Netw. 2004, 15, 1526–1540.

- Bayram, S.; Gezici, S.; Poor, H.V. Noise enhanced hypothesis-testing in the restricted bayesian framework. IEEE Trans. Signal Process. 2010, 58, 3972–3989.

- Bayram, S.; Gezici, S. Noise-enhanced M-ary hypothesis-testing in the mini-max framework. In Proceedings of the 3rd International Conference on Signal Processing and Communication Systems, Omaha, NE, USA, 28–30 September 2009; pp. 1–6.

- Bayram, S.; Gezici, S. Noise enhanced M-ary composite hypothesis-testing in the presence of partial prior information. IEEE Trans. Signal Process. 2011, 59, 1292–1297.

- Kay, S.M.; Michels, J.H.; Chen, H.; Varshney, P.K. Reducing probability of decision error using stochastic resonance. IEEE Signal Process. Lett. 2006, 13, 695–698.

- Kay, S. Can detectability be improved by adding noise. IEEE Signal Process. Lett. 2000, 7, 8–10.

- Chen, H.; Varshney, P.K.; Kay, S.M.; Michels, J.H. Theory of the stochastic resonance effect in signal detection: Part I—Fixed detectors. IEEE Trans. Signal Process. 2007, 55, 3172–3184.

- Chen, H.; Varshney, P.K. Theory of the stochastic resonance effect in signal detection: Part II—Variable detectors. IEEE Trans. Signal Process. 2008, 56, 5031–5041.

- Chen, H.; Varshney, P.K.; Kay, S.; Michels, J.H. Noise enhanced nonparametric detection. IEEE Trans. Inf. Theory 2009, 55, 499–506.

- Patel, A.; Kosko, B. Optimal noise benefits in Neyman–Pearson and inequality constrained signal detection. IEEE Trans. Signal Process. 2009, 57, 1655–1669.

- Bayram, S.; Gezici, S. Stochastic resonance in binary composite hypothesis-testing problems in the Neyman–Pearson framework. Digit. Signal Process. 2012, 22, 391–406.

- Ciuonzo, D.; Papa, G.; Romano, G.; Rossi, P.S.; Willett, P. One-bit decentralized detection with a Rao test for multisensor fusion. IEEE Signal Process. Lett. 2013, 20, 861–864.

- Ciuonzo, D.; Rossi, P.S.; Willett, P. Generalized rao test for decentralized detection of an uncooperative target. IEEE Signal Process. Lett. 2017, 24, 678–682.

- Wu, J.; Wu, C.; Wang, T.; Lee, T. Channel-aware decision fusion with unknown local sensor detection probability. IEEE Trans. Signal Process. 2010, 58, 1457–1463.

- Ciuonzo, D.; Rossi, P.S. Decision fusion with unknown sensor detection probability. IEEE Signal Process. Lett. 2014, 21, 208–212.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).