Abstract

This paper studies the matrix completion problem when the entries are contaminated by non-Gaussian noise or outliers. The proposed approach employs a nonconvex loss function induced by the maximum correntropy criterion. With the help of this loss function, we develop a rank-constrained model and a nuclear norm regularized model, both of which are resistant to non-Gaussian noise and outliers. However, the nonconvexity of the loss also makes the models harder to solve. To tackle this problem, we use simple iterative soft and hard thresholding strategies. We show that, when the models are extended to the general affine rank minimization problem, certain recoverability results can be obtained for the proposed algorithms under proper conditions. Numerical experiments indicate the improved performance of the proposed approach.

1. Introduction

Arising from a variety of applications such as online recommendation systems [1,2], image inpainting [3,4] and video denoising [5], the matrix completion problem has drawn tremendous and continuous attention over recent years [6,7,8,9,10,11,12]. The matrix completion aims at recovering a low rank matrix from partial observations of its entries [7]. The problem can be mathematically formulated as:

$$\min_{X \in \mathbb{R}^{m \times n}} \operatorname{rank}(X) \quad \text{s.t.} \quad X_{ij} = B_{ij}, \ (i,j) \in \Omega, \tag{1}$$

where $B \in \mathbb{R}^{m \times n}$ and $\Omega$ is an index set. Due to the nonconvexity of the rank function $\operatorname{rank}(\cdot)$, solving this minimization problem is NP-hard in general. To obtain a tractable convex relaxation, the nuclear norm heuristic was proposed [7]. Combined with the least squares loss, nuclear norm regularization was proposed to solve (1) when the observed entries are contaminated by Gaussian noise [13,14,15,16]. In real-world applications, datasets might be contaminated by non-Gaussian noise or sparse gross errors, which can appear in both explanatory and response variables. However, it is well understood that the least squares loss is not resistant to non-Gaussian noise or outliers.

To address this problem, some efforts have been made in the literature. Ref. [17] proposed a robust approach by using the least absolute deviation loss. Huber’s criterion was adopted in [18] to introduce robustness into matrix completion. Ref. [19] proposed to use an $L_p$ loss to enhance the robustness. However, as explained later, the approaches mentioned above are not robust to impulsive errors. In this study, we propose to use the correntropy-induced loss function in matrix completion problems to pursue robustness.

Correntropy, which serves as a similarity measurement between two random variables, was proposed in [20] within the information-theoretic learning framework developed in [21]. It is shown that in prediction problems, error correntropy is closely related to the error entropy [21]. The correntropy and the induced error criterion have been drawing a great deal of attention in the signal processing and machine learning communities. Given two scalar random variables $U$, $V$, the correntropy $\mathcal{V}_\sigma$ between $U$ and $V$ is defined as $\mathcal{V}_\sigma(U,V) = \mathbb{E}\, K_\sigma(U,V)$, with $K_\sigma$ a Gaussian kernel given by $K_\sigma(u,v) = \exp\left(-(u-v)^2/\sigma^2\right)$, $\sigma > 0$ the scale parameter and $(u,v)$ a realization of $(U,V)$. It is noticed in [20] that the correntropy can induce a new metric between $U$ and $V$.
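To make the definition concrete, the expectation can be approximated by a sample mean over paired realizations. The following is a minimal sketch under stated assumptions: the function name `empirical_correntropy` is hypothetical, and the unnormalized Gaussian kernel $\exp(-(u-v)^2/\sigma^2)$ is assumed.

```python
import math

def empirical_correntropy(u, v, sigma):
    """Sample-mean estimate of the correntropy between paired samples u and v.

    Assumes the unnormalized Gaussian kernel exp(-(a - b)^2 / sigma^2).
    """
    if len(u) != len(v) or len(u) == 0:
        raise ValueError("u and v must be non-empty and of equal length")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    total = sum(math.exp(-((a - b) ** 2) / sigma ** 2) for a, b in zip(u, v))
    return total / len(u)

clean = [0.0, 1.0, 2.0, 3.0]
noisy = [0.1, 0.9, 2.1, 50.0]  # the last pair contains a gross outlier

same = empirical_correntropy(clean, clean, sigma=1.0)
perturbed = empirical_correntropy(clean, noisy, sigma=1.0)
print(same, perturbed)
```

In this toy example the gross outlier contributes almost nothing to the estimate, since the kernel value of a very large difference is essentially zero; the similarity is therefore driven by the three well-matched pairs.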

In this study, by employing the correntropy-induced losses, we propose a nonconvex relaxation approach to robust matrix completion. Specifically, we develop two models: one with a rank constraint and the other with a nuclear norm regularization term. To solve them, we propose to use simple, but efficient algorithms. Experiments on synthetic, as well as real data are implemented and show that our methods are effective even for heavily-contaminated datasets. We make the following contributions in this paper:

- In Section 3, we propose a nonconvex relaxation strategy for the robust matrix completion problem, where the robustness benefits from using a robust loss. Based on this loss, a rank constraint, as well as a nuclear norm penalized model is proposed. We also extend the proposed models to deal with the affine rank minimization problem, which includes the matrix completion as a special case.

- In Section 4, we propose simple but effective algorithms to solve the proposed models, which are based on gradient descent and employ the hard/soft shrinkage operators. By verifying the Lipschitz continuity of the gradient, the convergence of the algorithms can be proven. When extended to affine rank minimization problems, under proper conditions, certain recoverability results are obtained. These results shed light on this loss function from an algorithmic perspective, which is in accordance with and extends our previous work [22].

This paper is organized as follows: In Section 2, we review some existing (robust) matrix completion approaches. In Section 3, we propose our nonconvex relaxation approach. Two algorithms are proposed in Section 4 to solve the proposed models. Theoretical results will be presented in Section 4.1. Experimental results are reported in Section 5. We end this paper in Section 6 with concluding remarks.

2. Related Work and Discussions

In matrix completion, solving the optimization problem in Model (1) is NP-hard, and a usual remedy is to consider the following nuclear norm convex relaxation:

Theoretically, it has been demonstrated in [7,8] that under proper assumptions, with an overwhelming probability, one can reconstruct the original matrix. Situations of the matrix completion with noisy entries have been also considered; see, e.g., [6,9]. In the noisy setting, the corresponding observed matrix turns out to be:

where denotes the projection of B onto , and E refers to the noise. The following two models are frequently adopted to deal with the noisy case:

and its convex relaxed and regularized heuristic:

where $\lambda > 0$ is a regularization parameter. Theoretical reconstruction results similar to those of the noiseless case have also been derived under technical assumptions. Along this line, various approaches have been proposed [14,15,16,23,24]. Among others, Refs. [10,25] interpreted the matrix completion problem as a specific case of the trace regression problem endowed with an entry-wise least squares loss. In the above-mentioned settings, the noise term E is usually assumed to be Gaussian or sub-Gaussian to ensure good generalization ability, which certainly excludes heavy-tailed noise and/or outliers.

Existing Robust Matrix Completion Approaches

It has been well understood that the least squares estimator cannot deal with non-Gaussian noise or outliers. To alleviate this limitation, some efforts have been made.

In a seminal work, Ref. [17] proposed a robust matrix completion approach, in which the model takes the following form:

The above model can be further formulated as:

where is a regularization parameter. The robustness of the model (4) results from using the least absolute deviation loss (LAD). This model was later applied to the column-wise robust matrix completion problem in [26].

By further decomposing E into , where refers to the noise and stands for the outliers, Ref. [18] proposed the following robust reconstruction model:

where the weights on the penalty terms are regularization parameters. They further showed that the above estimator is equivalent to the one obtained by using Huber’s criterion when evaluating the data-fitting risk. We also note that [19] adopted an $L_p$ loss to enhance the robustness.

3. The Proposed Approach

3.1. Our Proposed Nonconvex Relaxation Approach

As stated previously, matrix completion models based on the least squares loss do not perform well with non-Gaussian noise and/or outliers. Accordingly, robustness can be pursued by using a robust loss, as mentioned earlier. Combined with a nuclear norm penalization term, such models are essentially regularized M-estimators. However, note that the LAD loss and the $L_p$ loss penalize small residuals strongly and hence cannot lead to accurate prediction for unobserved entries from the trace regression viewpoint. Moreover, robust statistics reminds us that models based on the three loss functions mentioned above are not robust to impulsive errors [27,28]. These limitations encourage us to employ more robust surrogate loss functions. In this paper, we present a nonconvex relaxation approach to deal with the matrix completion problem with entries heavily contaminated by noise and/or outliers.

In our study, we propose the robust matrix completion model based on a robust and nonconvex loss, which is defined by:

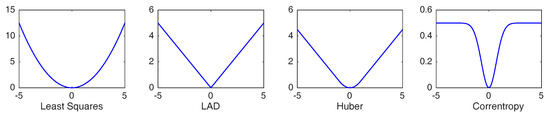

with $\sigma > 0$ a scale parameter. To give an intuitive impression, plots of the loss functions mentioned above are given in Figure 1. As mentioned above, this loss function is induced by the correntropy, which measures the similarity between two random variables [20,21] and has found many successful applications [29,30,31]. Recently, it was shown in [22] that regression with the correntropy-induced losses regresses towards the conditional mean function with a diverging scale parameter when the sample size goes to infinity. It was also shown in [32] that when the noise variable admits a unique global mode, regression with the correntropy-induced losses regresses towards the conditional mode. As argued in [22,32], learning with correntropy-induced losses can be resistant to non-Gaussian noise and outliers, while ensuring good prediction accuracy simultaneously with a properly chosen $\sigma$.

Figure 1.

Different losses: least squares, absolute deviation loss (LAD), Huber’s loss and $\ell_\sigma$ (Welsch loss).
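The qualitative difference between these losses can also be checked numerically. The sketch below is illustrative only: the defining formula of the loss appears in the source as an image, so the normalization $\frac{\sigma^2}{2}\left(1 - \exp(-t^2/\sigma^2)\right)$ used here is an assumption, as are the function names and the Huber threshold `delta`.

```python
import math

def least_squares(t):
    """Squared residual; grows without bound."""
    return t * t

def lad(t):
    """Least absolute deviation loss."""
    return abs(t)

def huber(t, delta=1.0):
    """Huber's loss: quadratic near zero, linear beyond delta."""
    a = abs(t)
    return 0.5 * t * t if a <= delta else delta * (a - 0.5 * delta)

def welsch(t, sigma=1.0):
    # Assumed normalization: (sigma^2 / 2) * (1 - exp(-t^2 / sigma^2)).
    # The value is bounded above by sigma^2 / 2.
    return 0.5 * sigma ** 2 * (1.0 - math.exp(-(t * t) / sigma ** 2))

def welsch_derivative(t, sigma=1.0):
    # Derivative of the assumed form; it tends to zero for large |t|.
    return t * math.exp(-(t * t) / sigma ** 2)

for t in (0.1, 1.0, 10.0, 100.0):
    print(f"t={t:6.1f}  ls={least_squares(t):10.2f}  lad={lad(t):7.2f}  "
          f"huber={huber(t):7.2f}  welsch={welsch(t):.4f}  "
          f"welsch'={welsch_derivative(t):.4f}")
```

Under this assumed form, the Welsch loss behaves like half the squared residual near zero, yet both its value and its derivative stay bounded, so a single huge residual has a vanishing influence on a gradient-based update.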

Associated with the loss, our rank-constraint robust matrix completion problem is formulated as:

where the data-fitting risk is given by:

The nuclear norm heuristic model takes the following form:

where is a regularization parameter.

3.2. Affine Rank Minimization Problem

In this part, we will show that our robust matrix completion approach can be extended to deal with the robust affine rank minimization problems.

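It is known that the matrix completion problem (1) is a special case of the following affine rank minimization problem:

$$\min_{X \in \mathbb{R}^{m \times n}} \operatorname{rank}(X) \quad \text{s.t.} \quad \mathcal{A}(X) = b, \tag{7}$$

where $b \in \mathbb{R}^{p}$ is given, and $\mathcal{A}: \mathbb{R}^{m \times n} \to \mathbb{R}^{p}$ is a linear operator defined by:

$$\mathcal{A}(X) = \left( \langle A_1, X \rangle, \ldots, \langle A_p, X \rangle \right)^{\top},$$

where $A_i \in \mathbb{R}^{m \times n}$ for each $i$. Introduced and studied in [33], this problem has drawn much attention in recent years [14,15,16,23]. Note that (7) reduces to the matrix completion problem (1) if we set $p = |\Omega|$ (the cardinality of $\Omega$) and let each $A_i$ be of the form $e_j^{(m)} \big(e_k^{(n)}\big)^{\top}$ for an index pair $(j,k) \in \Omega$, where $e_j^{(m)}$ and $e_k^{(n)}$ are the canonical basis vectors of $\mathbb{R}^m$ and $\mathbb{R}^n$, respectively.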
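The reduction from the affine problem to matrix completion can be illustrated with a small sketch. It assumes each measurement matrix is an outer product of canonical basis vectors, so that the inner product with X picks out a single entry; the names `sampling_operator` and `sampling_adjoint` are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np

def sampling_operator(X, omega):
    """Apply the affine map: the i-th output is <A_i, X> = X[j, k] for omega[i] = (j, k)."""
    return np.array([X[j, k] for (j, k) in omega], dtype=float)

def sampling_adjoint(y, omega, shape):
    """Adjoint map: scatter the vector y back onto the index set omega."""
    Z = np.zeros(shape)
    for value, (j, k) in zip(y, omega):
        Z[j, k] += value
    return Z

X = np.arange(12, dtype=float).reshape(3, 4)
omega = [(0, 1), (2, 3), (1, 0)]
y = sampling_operator(X, omega)

# Explicit check against the inner-product definition for the first index.
A_first = np.outer(np.eye(3)[0], np.eye(4)[1])
inner_first = float(np.sum(A_first * X))
print(y, inner_first)
```

The adjoint is included because gradient-based schemes for the affine model need it to map residuals in the measurement space back to the matrix space.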

In fact, (5) and (6) can be naturally extended to handle cases with noise and outliers of (7). Denote the risk as follows:

The rank constrained model can be formulated as:

and the nuclear norm regularized heuristic takes the form:

4. Algorithms and Analysis

We consider gradient descent-based algorithms for solving the proposed models. Gradient descent is usually regarded as not very efficient. In our experiments, however, it remains efficient and is comparable with some state-of-the-art methods. In addition, we present recoverability and convergence rate results for gradient descent applied to the proposed models. Such results and analysis may help us better understand the models and the nonconvex loss function from an algorithmic perspective.

We first consider gradient descent with hard thresholding for solving (8). The derivation is standard. Denote . By the differentiability of , when Y is sufficiently close to X, can be approximated by:

Here, is a parameter, and , the gradient of at Y, is equal to:

Now, the iterates can be generated as follows:

with:

We simply write (11) as , where denotes the hard thresholding operator, i.e., the best rank-R approximation to . The algorithm is presented in Algorithm 1.

Algorithm 1. Gradient descent iterative hard thresholding for (8).

The algorithm starts from an initial guess and continues until some stopping criterion is satisfied, e.g., , where is a certain given positive number. Indeed, such a stopping criterion makes sense, as Proposition A3 shows that . To ensure the convergence, the step-size should satisfy , where denotes the spectral norm of . For matrix completion, the spectral norm is smaller than one, and thus, we can set . In Appendix A, we have shown the Lipschitz continuity of , which is necessary for the convergence of the algorithm. can also be self-adaptive by using a certain line-search rule. Algorithm 2 is the line-search version of Algorithm 1.
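The iteration described above can be sketched for the matrix completion case as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the loss normalization $\frac{\sigma^2}{2}(1 - \exp(-t^2/\sigma^2))$, the unit step-size, the relative-change stopping rule, and all function and parameter names (`rmc_iht`, `step`, `tol`) are assumptions, and the test data are synthetic toy values.

```python
import numpy as np

def welsch_risk(X, B, mask, sigma):
    """Sum of the assumed Welsch losses over the observed entries."""
    R = (X - B)[mask]
    return 0.5 * sigma ** 2 * np.sum(1.0 - np.exp(-(R ** 2) / sigma ** 2))

def welsch_risk_grad(X, B, mask, sigma):
    """Gradient of the risk; it is zero on unobserved entries."""
    R = np.where(mask, X - B, 0.0)
    return R * np.exp(-(R ** 2) / sigma ** 2)

def hard_threshold(Y, rank):
    """Best rank-`rank` approximation of Y via a truncated SVD."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U[:, :rank] * s[:rank]) @ Vt[:rank]

def rmc_iht(B, mask, rank, sigma, step=1.0, tol=1e-6, max_iter=500):
    """Gradient step followed by hard thresholding, starting from zero."""
    X = np.zeros(B.shape)
    for _ in range(max_iter):
        X_new = hard_threshold(X - step * welsch_risk_grad(X, B, mask, sigma), rank)
        change = np.linalg.norm(X_new - X)
        X = X_new
        if change <= tol * max(1.0, np.linalg.norm(X)):
            break
    return X

rng = np.random.default_rng(0)
M = rng.standard_normal((30, 2)) @ rng.standard_normal((2, 20))  # rank-2 target
mask = rng.random(M.shape) < 0.8                                 # observed entries
E = np.zeros(M.shape)
E[rng.random(M.shape) < 0.05] = 50.0                             # sparse gross errors
B = np.where(mask, M + E, 0.0)

X_hat = rmc_iht(B, mask, rank=2, sigma=5.0)
print("relative error:", np.linalg.norm(X_hat - M) / np.linalg.norm(M))
```

With the assumed normalization, the second derivative of the loss is bounded by one in absolute value, which is why a unit step-size is used in this sketch; a line search, as in Algorithm 2, would replace the fixed `step`.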

Algorithm 2. Line-search version of Algorithm 1.

Solving (9) is similar, with only the hard thresholding replaced by the soft thresholding , which can be derived as follows. Denote as the SVD of . Then, is the matrix soft thresholding operator [13,16] defined as Gradient descent-based soft thresholding is summarized in Algorithm 3.

Algorithm 3. Gradient descent iterative soft thresholding for (9).
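The matrix soft thresholding operator used in place of hard thresholding can be sketched as below. The threshold is passed as a free parameter `thresh`, since its exact relation to the regularization parameter and the step-size is not recoverable from the text here; the function name is hypothetical.

```python
import numpy as np

def soft_threshold(Y, thresh):
    """Shrink every singular value of Y by `thresh`, clipping at zero."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    s_shrunk = np.maximum(s - thresh, 0.0)
    return (U * s_shrunk) @ Vt

Y = np.diag([3.0, 1.0, 0.2])
Z = soft_threshold(Y, 0.5)
print(np.linalg.svd(Z, compute_uv=False))
```

Unlike hard thresholding, which keeps a fixed number of singular values unchanged, this operator lowers all of them and discards those below the threshold, so the rank of the result is determined by the data.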

4.1. Convergence

With the Lipschitz continuity of the gradient presented in Appendix A, it is a standard routine to show the convergence of Algorithms 1 and 3: let $\{X^k\}$ be a sequence generated by Algorithm 1 or 3. Then, every limit point of the sequence is a critical point of the problem. In fact, the result can be strengthened to the statement that “the entire sequence converges to a critical point”, namely one can prove that $\lim_{k \to \infty} X^k = X^*$, where $X^*$ is a critical point. This can be achieved by verifying the so-called Kurdyka–Łojasiewicz (KL) property [34] of the problems (8) and (9). As this is not the main concern of this paper, we omit the verification here.

4.2. Recoverability and Linear Convergence Rate

For affine rank minimization problems, the convergence rate results have been obtained in the literature; see, e.g., [23,24]. However, all the existing results are obtained for algorithms that solve the optimization problems incorporating the least squares loss. In this part, we are concerned with the recoverability and convergence rate of Algorithm 1. These results give the understanding of this loss function from the algorithmic aspect, which is in accordance with and extends our previous work [22].

It is known that the convergence rate analysis requires the matrix restricted isometry property (RIP) condition [33]. In our context, instead of using the matrix RIP, we adopt the concept of the matrix scalable restricted isometry property (SRIP) [24].

Definition 1

(SRIP [24]). For any , there exist constants such that:

Due to the scalability of on the operator , SRIP is a generalization of the RIP [33] as commented in [24]. We point out that the results of Algorithm 1 for the affine rank minimization problem (8) rely on the SRIP condition. However, in the matrix completion problem (5), this condition cannot be met, since in this case is zero. Consequently, the results provided below cannot be applied directly to the matrix completion problem (5). However, similar results might be established for (5), if some refined RIP conditions are assumed to hold for the operator in the situation of matrix completion [23]. To obtain the convergence rate results, besides the SRIP condition, we also need to make some assumptions.

Assumption 1.

- At the -th iteration of Algorithm 1, the parameter in the loss function is chosen as:where , and is a positive constant.

- The spectral norm of A is upper bounded as

Based on Assumption 1, the following results for Algorithm 1 can be derived.

Theorem 1.

Assume that , where is the matrix to be recovered and . Assume that Assumption 1 holds. Let be generated by Algorithm 1, with the step-size . Then

- at iteration , Algorithm 1 will recover a matrix satisfying:where depending on β.

- If there is no noise or outliers, i.e., , then the algorithm converges linearly in the least squares and sense, respectively, i.e.,where and , depending on the choice of β.

The proof of Theorem 1 relies on the following lemmas, which reveal certain properties of the loss function .

Lemma 1.

For any and , it holds:

Proof.

For any , let . Since is even, we need to only consider . Note that , which is nonnegative when . Therefore, is a nondecreasing function on . On the other hand, and . Thus, the minimum of is . As a result, . This completes the proof. ☐

Lemma 2.

Assuming that , and , it holds:

Proof.

Since , it is not hard to check that . From the range of , it follows . This completes the proof. ☐

Lemma 3.

Given a fixed , for , is nondecreasing with respect to σ.

Proof.

It is not hard to check that is nonnegative on . ☐

Proof of Theorem 1.

By the fact that is rank-R and is the best rank-R approximation to , we have:

Since:

we know that:

where the last inequality follows from:

and the choice of the step-size . It remains to estimate . We first see that:

To verify our first assertion, it remains to bound the first two terms by means of . We consider the first term. Denoting , we know that:

The choice of tells us that:

and consequently:

Then, by the fact that and the choice of the step-size , we observe that the second term of (13) can be upper bounded by:

Combining (14) and (15) and denoting , we come to the following conclusion:

where the last inequality follows from the SRIP condition and the fact that by the range of . As a result, we get the following estimation:

where the last inequality follows from the assumption . Denote . The range of tells us that Iterating (16), we obtain:

Therefore, The first assertion concerning the recoverability is proven.

Suppose there is no noise or outliers, i.e., we have . In this case, it follows from (16) that:

and then, the SRIP condition tells us that:

where the last inequality comes from the inequality chain . Denote . Then, . Therefore, the algorithm converges linearly to in the least squares sense.

We now proceed to show the linear convergence in the sense. Following from the inequality , we obtain:

Combining with Inequality (A1), we see that can be upper bounded by:

We need to upper bound and in terms of . We first consider the second term. Under the SRIP condition, we have:

By setting , we get . Lemma 2 tells us that:

Summing the above inequalities over i from 1 to p, we have:

Therefore, can be bounded as follows:

We proceed to bound . It follows from (14) and Lemma 1 that:

By Lemma 3, the function is nondecreasing with respect to . This in connection with the fact that:

yields . Let , and consequently, . We thus have:

The proof is now completed. ☐

The above results show that it is possible that Algorithm 1 will find if the magnitude of the noise is not too large. Moreover, the results also imply that the algorithm is safe when there is no noise.

5. Numerical Experiments

This section presents numerical experiments to illustrate the effectiveness of our methods. Empirical comparisons with other methods are implemented on synthetic and real data contaminated by outliers or non-Gaussian noise.

The following four algorithms are implemented. RMC--IHT and RMC--IST denote Algorithms 1 and 3 incorporated with the line-search rule, respectively. The approach proposed in [16] is denoted as MC--IST, which is an iterative soft thresholding algorithm based on the least squares loss. The robust approach based on the LAD loss proposed in [17] is denoted by RMC--ADM. Empirically, the value of is set to be ; the tuned parameter of RMC--IST and MC--IST is set to , while for RMC--ADM, , as suggested in [17]. All the numerical computations are conducted on an Intel i7-3770 CPU desktop computer with 16 GB of RAM. The supporting software is MATLAB R2013a. Some notations used frequently in this section are introduced first in Table 1. Bold numbers in the tables of this section indicate the best result among the competitors.

Table 1.

Notations used in the experiments.

5.1. Evaluation on Synthetic Data

The synthetic datasets are generated in the following way:

- Generating a low rank matrix: We first generate an matrix with i.i.d. Gaussian entries ∼N(0,1), where . Then, a -rank matrix M is obtained from the above matrix by rank truncation, where varies from –.

- Adding outliers: We create a zero matrix and uniformly randomly sample entries, where varies from 0–. These entries are randomly drawn from the chi-square distribution, with four degrees of freedom. Multiplied by 10, the matrix E is used as the sparse error matrix.

- Missing entries: of the entries are randomly missing, with varying between . Finally, the observed matrix is denoted as .
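The three generation steps above can be sketched as follows. The matrix size, rank, and the outlier and missing ratios used in the example call are hypothetical placeholders, because the corresponding values are not recoverable from the text here; the function name `make_synthetic` and the convention of storing zeros at missing positions are likewise assumptions.

```python
import numpy as np

def make_synthetic(m, n, rank, outlier_frac, missing_frac, seed=0):
    """Generate a low rank matrix, sparse chi-square outliers and a sampling mask."""
    rng = np.random.default_rng(seed)

    # Low rank matrix: i.i.d. N(0, 1) entries followed by rank truncation.
    G = rng.standard_normal((m, n))
    U, s, Vt = np.linalg.svd(G, full_matrices=False)
    M = (U[:, :rank] * s[:rank]) @ Vt[:rank]

    # Outliers: chi-square (4 degrees of freedom) at random positions, scaled by 10.
    E = np.zeros((m, n))
    n_out = int(round(outlier_frac * m * n))
    idx = rng.choice(m * n, size=n_out, replace=False)
    E.flat[idx] = 10.0 * rng.chisquare(df=4, size=n_out)

    # Missing entries: mask is True where an entry is observed.
    mask = rng.random((m, n)) >= missing_frac
    B = np.where(mask, M + E, 0.0)
    return M, E, mask, B

M, E, mask, B = make_synthetic(40, 40, rank=5, outlier_frac=0.1, missing_frac=0.3)
print(M.shape, np.count_nonzero(E), mask.mean())
```

Fixing the seed makes each instance reproducible, which is convenient when the same tuple of settings is repeated over several runs.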

RMC--IHT (Algorithm 1), RMC--IST (Algorithm 3) and RMC--ADM [17] are implemented respectively on the matrix completion problem with the datasets generated above. For these three algorithms, the same initial guess with the all-zero matrix is applied. The stopping criterion is , or restrictions on the number of iterations, which is set to be 500. For each tuple , we repeat 10 runs. The algorithm is regarded as successful if the relative error of the result satisfies .

Experimental results of RMC--IHT (top), RMC--IST (middle) and RMC--ADM (bottom) are reported in Figure 2, which are given in terms of phase transition diagrams. In Figure 2, the white zones denote perfect recovery in all the experiments, while the black ones denote failure for all the experiments. In each diagram, the x-axis represents the ratio of rank, i.e., we let , and the y-axis represents the level of outliers, i.e., we let . The level of missing entries varies from left to right in each row. As shown in Figure 2, our approach outperforms RMC--ADM when and increase. We also observe that RMC--IHT performs better than RMC--IST when the level of outliers increases, while RMC--IST outperforms RMC--IHT when the ratio of missing entries increases.

Figure 2.

Phase transition diagrams of RMC--IHT (Algorithm 1), RMC--IST (Algorithm 3) and RMC--ADM [17]. The first row: RMC--IHT; the second row: RMC--IST; the last row: RMC--ADM. x-axis: ; y-axis: . From the first column to the last column, varies from 0–.

Comparison of the computational time and the relative error are also reported in Table 2. In this experiment, the level of missing entries , the ratio of rank and the level of outliers varies between . For each , we randomly generate 20 instances and then average the results. In the table, “time” denotes the CPU time, with the unit being second, and “rel.err” represents the relative error introduced in the previous paragraph. The results also demonstrate the improved performance of our methods in most of the cases on CPU time and relative error, especially for RMC--IHT.

Table 2.

Comparison of RMC--IHT (Algorithm 1), RMC--IST (Algorithm 3) and RMC--ADM [17] on CPU time and the relative error on synthetic data. , . rel.err, relative error.

5.2. Image Inpainting and Denoising

One typical application of matrix completion is the image inpainting problem [4]. The datasets and the experiment are conducted as follows:

- We first choose five gray images, named “Baboon”, “Camera Man”, “Lake”, “Lena” and “Pepper” (the size of each image is ), each of which is stored in a matrix M.

- The outliers matrix E is added to each M, where E is generated in the same way as the previous experiment, and the level of outliers varies among .

- The ratio of the missing entries is set to . RMC--IST, RMC--ADM and MC--IST, are tested in this experiment. In addition, we also test the Cauchy loss-based model , which is denoted as RMC--IST, where:where is a parameter controlling the robustness. Empirically, we set . Other parameters are set to the same as those of RMC--IST. The above model is also solved by soft thresholding similar to Algorithm 3. Note that Cauchy loss has a similar shape as that of Welsch loss and also enjoys the redescending property; such a loss function is also frequently used in the robust statistics literature. The initial guess is . The stopping criterion is , or the iterations exceed 500.
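The redescending behaviour attributed to the Cauchy loss in the list above can be checked with a short sketch. The defining formula is not recoverable from the text here, so the common normalization $\frac{c^2}{2}\log(1 + t^2/c^2)$ is assumed, and the function names are hypothetical.

```python
import math

def cauchy_loss(t, c=1.0):
    # Assumed normalization: (c^2 / 2) * log(1 + t^2 / c^2).
    return 0.5 * c ** 2 * math.log1p((t * t) / c ** 2)

def cauchy_influence(t, c=1.0):
    # Derivative of the assumed loss: t / (1 + t^2 / c^2).
    return t / (1.0 + (t * t) / c ** 2)

for t in (0.01, 1.0, 10.0, 100.0):
    print(f"t={t:7.2f}  loss={cauchy_loss(t):.6f}  influence={cauchy_influence(t):.6f}")
```

Under this assumed form, the influence peaks at |t| = c and then decays towards zero, so very large residuals contribute little to a gradient step, which mirrors the behaviour of the Welsch loss.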

Detailed comparison results in terms of the relative error and CPU time are listed in Table 3, from which one can see the efficiency of our method. Indeed, experimental results show that our method terminates within 80 iterations. According to the relative error in Table 3, our method performs the best in almost all cases, followed by the Cauchy loss-based variant. This is not surprising because the Cauchy loss-based model enjoys properties similar to those of the proposed model. We also observe that the RMC--ADM algorithm cannot deal with situations in which images are heavily contaminated by outliers. This illustrates the robustness of our method.

Table 3.

Experimental results of RMC--IST (Algorithm 3), RMC--ADM [17] and MC--IST [16] on different images with , and varying from to .

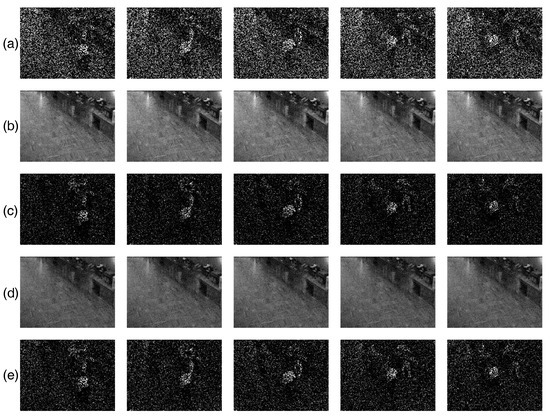

To better illustrate the robustness of our method empirically, we also show the images recovered by the tested methods in Figure 3. To save space, we merely list the recovery results for the case with missing entries. In Figure 3, the first column represents five original images, namely, “Baboon”, “Camera Man”, “Lake”, “Lena” and “Pepper”. Images in the second column are contaminated images with outliers and missing entries. The recovered results for each image are reported in the remaining columns, obtained by RMC--IST, RMC--ADM, MC--IST and the Cauchy loss-based RMC--IST, respectively. One can observe that the images recovered by our method retain most of the important information, followed by the Cauchy loss-based variant.

Figure 3.

Comparison of RMC--IST, RMC--ADM and MC--IST on different images with outliers and missing entries. (a) The original low rank images; (b) images with missing entries and contaminated by outliers; (c) images recovered by RMC--IST (Algorithm 3); (d) images recovered by RMC--ADM [17]; (e) images recovered by MC--IST [16]; (f) images recovered by RMC--IST.

Our next experiment is designed to show the effectiveness of our method in dealing with the non-Gaussian noise. We assume that the entries of the noise matrix E are i.i.d drawn from Student’s t distribution, with three degrees of freedom. We then scale E by a factor , and we denote the corresponding . The noise scale factor varies in , and varies in . The results are shown in Table 4, where the image “Building” is used. We list the recovered images in Figure 4 with the case . From the table and the recovered images, we can see that our method also performs well when the image is only contaminated by non-Gaussian noise.

Table 4.

Experimental results on the image “Building”, contaminated by non-Gaussian noise with varying and the noise scale.

Figure 4.

Recovery results of RMC--IST (third), RMC--ADM (fourth) and MC--IST (fifth) on the image “Building” contaminated by non-Gaussian noise with and 30% missing entries.

5.3. Background Subtraction

Background subtraction, also known as foreground detection, is one of the major tasks in computer vision, which aims at detecting changes in image or video sequences and finds application in video surveillance, human motion analysis and human-machine interaction from static cameras [35].

Given a sequence of images, one can cast them into a matrix B by vectorizing each image and then stacking row by row. In many cases, it is reasonable to assume that the background varies little. Consequently, the background forms a low rank matrix M, while the foreground activity is spatially localized and can be seen as the error matrix E. Correspondingly, the image sequence matrix B can be expressed as the sum of a low rank background matrix M and a sparse error matrix E, which represents the activity in the scene.
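The construction of the matrix B from an image sequence can be sketched as below. The frame sizes are toy values chosen for illustration and the function names are hypothetical.

```python
import numpy as np

def frames_to_matrix(frames):
    """Vectorize each frame and stack the vectors row by row."""
    return np.stack([np.asarray(f, dtype=float).ravel() for f in frames], axis=0)

def matrix_to_frames(B, height, width):
    """Inverse map: reshape each row back into a height-by-width frame."""
    return B.reshape(B.shape[0], height, width)

# A perfectly static background gives identical rows, hence a rank-1 matrix.
static_frames = [np.full((3, 4), 7.0) for _ in range(5)]
B_static = frames_to_matrix(static_frames)
print(B_static.shape, np.linalg.matrix_rank(B_static))
```

This toy case is the idealized limit of the low rank assumption: a background that varies slightly across frames yields a matrix that is only approximately low rank.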

In practice, it is reasonable to assume that some entries of the image sequence are missing and the images are contaminated by noise or outliers. Therefore, the foreground object detection problem can be formulated as a robust matrix completion problem. Ref. [36] proposed to use the LAD-loss-based matrix completion approach to separate M and E. The data of this experiment were downloaded from http://perception.i2r.a-star.edu.sg/bkmodel/bkindex.html.

Our experiment in this scenario is implemented as follows:

- We choose the sequence named “Restaurant” for our experiment, which consists of 3057 color images. Each image of “Restaurant” is in size. From the sequence, we pick 100 continuous images and convert them to gray images to form the original matrix B, which is in size, where each row is a vector converted from an image.

- Two types of non-Gaussian noise are added to B. The first type of noise is drawn from the chi-square distribution, with four degrees of freedom; the second type of noise is drawn from Student’s t distribution, with three degrees of freedom. Then, the two types of noise are simultaneously rescaled by . The last of the entries are missing randomly.

- RMC--IHT and RMC--ADM are used to deal with this problem. We set in RMC--IHT. The initial guess is the zero matrix. The stopping criterion is , or the iterations exceed 200.

The running time and relative error are reported in Table 5. From the table, we see that the proposed approach is faster and gives smaller relative errors. To give an intuitive impression, we choose five frames from each image sequence, as shown in Figure 5. We observe that when the image sequences are corrupted by noise () and missing entries, both methods can successfully extract the background and foreground images. Our method appears to perform better, because the details of the background images are recovered well, whereas with the LAD-based approach some details of the background are added to the foreground. It can also be observed that neither of the two methods can recover the missing entries in the foreground. More effective approaches may be needed to achieve this.

Table 5.

Experiment results on “Restaurant” contaminated by non-Gaussian noise and missing entries.

Figure 5.

Comparison between RMC--IHT (Algorithm 1) and RMC--ADM [17] on extracting the image sequence “Restaurant” with and contaminated by two types of non-Gaussian noise with . (a) The original image sequence; (b) the image sequence with missing entries and contaminated by noise; (c) background extracted by RMC--IHT (Algorithm 1); (d) foreground extracted by RMC--IHT (Algorithm 1); (e) background extracted by RMC--ADM [17]; (f) foreground extracted by RMC--ADM [17].

6. Concluding Remarks

The correntropy loss function has been studied in the literature [20,21] and has found many successful applications [29,30,31]. Learning with correntropy-induced losses can be resistant to non-Gaussian noise and outliers while ensuring good prediction accuracy simultaneously with a properly chosen parameter $\sigma$. This paper addressed the robust matrix completion problem based on the correntropy loss. The proposed approach was shown to be efficient in dealing with non-Gaussian noise and sparse gross errors. The nonconvexity of the proposed approach was due to using the loss. Based on the above approach, we proposed two nonconvex optimization models and extended them to the more general robust affine rank minimization problems. Two gradient-based iterative schemes to solve the nonconvex optimization problems were offered, with convergence rate results being obtained under proper assumptions. It would be interesting to investigate similar convergence and recoverability results for models based on other redescending-type loss functions. Numerical experiments verified the improved performance of our methods, where empirically, the parameter for is set to and for the nuclear norm model (6) is .

Acknowledgments

The research leading to these results has received funding from the European Research Council under the European Union’s Seventh Framework Programme (FP7/2007-2013)/ERC AdG A-DATADRIVE-B (290923). This paper reflects only the authors’ views; the Union is not liable for any use that may be made of the contained information. Research Council KUL: GOA/10/09 MaNet, CoE PFV/10/002 (OPTEC), BIL12/11T; PhD/Postdoc grants. Flemish Government: FWO: PhD/Postdoc grants, projects: G.0377.12 (Structured systems), G.088114N (Tensor-based data similarity); IWT: PhD/Postdoc grants, projects: SBO POM (100031); iMinds Medical Information Technologies SBO 2014. Belgian Federal Science Policy Office: IUAP P7/19 (DYSCO, Dynamical systems, control and optimization, 2012–2017).

Author Contributions

Y.Y., Y.F., and J.A.K.S. proposed and discussed the idea; Y.Y. and Y.F. conceived and designed the experiments; Y.Y. performed the experiments; Y.Y. and Y.F. analyzed the data; J.A.K.S. contributed analysis tools; Y.Y. and Y.F. wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Lipschitz Continuity of the Gradient of ℓ_σ and Some Propositions

The propositions given in the Appendix hold for both and . For simplicity, we only present the formulas for . We first introduce some notation. Let be the vectorization operator over any matrix space , with and:

We further define matrix , where:

Based on the above notations, the vectorized form of is written as:

and the gradient of at X can be rewritten as:

where is a diagonal matrix with:

Let be the spectral norm of A. The following proposition shows that the gradient of is Lipschitz continuous.

Proposition A1.

The gradient of is Lipschitz continuous. That is, for any , it holds that:

Proof.

With the notation introduced above, we know that:

where and are the diagonal matrices corresponding to and . It remains to show that:

By letting and , we observe that:

Combining with the fact that for any and ,

we have:

As a result, . This completes the proof. ☐
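The scalar inequality behind Proposition A1 can be checked numerically. The sketch below assumes the loss has the form (σ²/2)(1 − exp(−r²/σ²)) per observed entry, so that each gradient entry is g(r) = r·exp(−r²/σ²). This form and the helper names are assumptions for illustration. Since |g′(r)| = |1 − 2r²/σ²|·exp(−r²/σ²) ≤ 1, the difference quotient of g should never exceed 1.

```python
import math
import random


def grad_entry(r, sigma):
    """Entry-wise gradient of the assumed correntropy-induced loss."""
    return r * math.exp(-(r * r) / sigma ** 2)


def max_slope(sigma, trials=20000, seed=0):
    """Largest sampled difference quotient |g(a) - g(b)| / |a - b|."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        a = rng.uniform(-5.0 * sigma, 5.0 * sigma)
        b = rng.uniform(-5.0 * sigma, 5.0 * sigma)
        if a == b:
            continue  # the quotient is undefined for identical points
        slope = abs(grad_entry(a, sigma) - grad_entry(b, sigma)) / abs(a - b)
        worst = max(worst, slope)
    return worst


if __name__ == "__main__":
    for sigma in (0.3, 1.0, 5.0):
        print(sigma, max_slope(sigma))
```

A sampled maximum below 1 is consistent with an entry-wise Lipschitz constant of 1 in the matrix completion setting; it is a sanity check rather than a proof.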

The following conclusion is a consequence of Proposition A1.

Proposition A2.

For any , it holds that:
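For orientation, the standard consequence of a Lipschitz continuous gradient is the descent lemma, stated here for a generic differentiable function f whose gradient has Lipschitz constant L. The symbols f and L are placeholders, and it is an assumption that the inequality of Proposition A2 takes this form with L the constant from Proposition A1.

```latex
f(Y) \;\le\; f(X) + \langle \nabla f(X),\, Y - X \rangle + \frac{L}{2}\,\| Y - X \|_F^2
\qquad \text{for all } X,\, Y .
```

This bound is what lets a gradient step followed by a thresholding step be compared with the previous iterate, as in the proof of Proposition A3.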

Proposition A3.

Let be generated by Algorithm 1 or Algorithm 3 with . Then, it holds that:

Proof.

We first consider generated by Algorithm 1. Following from the fact that and is the best rank-R approximation of , we know that:

This together with (A1) gives:

which implies that the sequence is monotonically decreasing. Due to the lower boundedness of , we see that .

When is generated by Algorithm 3, after simple computation, we have that is the minimizer of:

we thus have:

This in connection with Proposition A2 reveals:

Analogously, we have . This completes the proof. ☐
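The monotone decrease established in the first part of the proof can be observed in a small experiment. The sketch below is not Algorithm 1 itself: it is an iterative hard thresholding loop written under stated assumptions, namely the loss (σ²/2)·Σ(1 − exp(−r²/σ²)) over observed entries, a unit step size, and a zero starting point. All function names are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np


def correntropy_loss(X, B, mask, sigma):
    """Assumed correntropy-induced loss over the observed entries."""
    R = (X - B) * mask  # residuals, zero on unobserved entries
    return 0.5 * sigma ** 2 * np.sum(1.0 - np.exp(-(R ** 2) / sigma ** 2))


def correntropy_grad(X, B, mask, sigma):
    """Gradient of the assumed loss with respect to X."""
    R = (X - B) * mask
    return R * np.exp(-(R ** 2) / sigma ** 2)


def best_rank_r(Y, r):
    """Best rank-r approximation of Y via a truncated SVD."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r, :]


def iht(B, mask, r, sigma, iters=50):
    """Gradient step with unit step size, then hard thresholding to rank r."""
    X = np.zeros_like(B)
    losses = [correntropy_loss(X, B, mask, sigma)]
    for _ in range(iters):
        X = best_rank_r(X - correntropy_grad(X, B, mask, sigma), r)
        losses.append(correntropy_loss(X, B, mask, sigma))
    return X, losses


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M = rng.standard_normal((30, 2)) @ rng.standard_normal((2, 20))
    mask = (rng.random(M.shape) < 0.6).astype(float)
    outliers = 20.0 * (rng.random(M.shape) < 0.05)  # sparse gross errors
    B = mask * (M + outliers)
    X, losses = iht(B, mask, r=2, sigma=2.0)
    print(losses[0], losses[-1])
```

With a unit step and a gradient Lipschitz constant of 1, the descent lemma combined with the best rank-r property gives a non-increasing loss sequence, which is what the recorded `losses` list should show.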

References

- Srebro, N.; Jaakkola, T. Weighted low-rank approximations. In Proceedings of the 20th International Conference on Machine Learning, Copenhagen, Denmark, 11–12 June 2003; Volume 3, pp. 720–727.

- Netflix Prize Website. Available online: http://www.netflixprize.com (accessed on 2 March 2018).

- Komodakis, N. Image completion using global optimization. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 1, pp. 442–452.

- Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 23–28 July 2000; pp. 417–424.

- Ji, H.; Liu, C.; Shen, Z.; Xu, Y. Robust video denoising using low rank matrix completion. In Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 1791–1798.

- Candès, E.J.; Plan, Y. Matrix completion with noise. Proc. IEEE 2010, 98, 925–936.

- Candès, E.J.; Recht, B. Exact matrix completion via convex optimization. Found. Comput. Math. 2009, 9, 717–772.

- Gross, D. Recovering low-rank matrices from few coefficients in any basis. IEEE Trans. Inf. Theory 2011, 57, 1548–1566.

- Keshavan, R.H.; Montanari, A.; Oh, S. Matrix completion from noisy entries. J. Mach. Learn. Res. 2010, 11, 2057–2078.

- Koltchinskii, V.; Lounici, K.; Tsybakov, A.B. Nuclear-norm penalization and optimal rates for noisy low-rank matrix completion. Ann. Stat. 2011, 39, 2302–2329.

- Signoretto, M.; Van de Plas, R.; De Moor, B.; Suykens, J.A.K. Tensor versus matrix completion: A comparison with application to spectral data. IEEE Signal Process. Lett. 2011, 18, 403–406.

- Hu, Y.; Zhang, D.; Ye, J.; Li, X.; He, X. Fast and Accurate Matrix Completion via Truncated Nuclear Norm Regularization. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2117–2130.

- Cai, J.F.; Candès, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Goldfarb, D.; Ma, S. Convergence of fixed-point continuation algorithms for matrix rank minimization. Found. Comput. Math. 2011, 11, 183–210.

- Ji, S.; Ye, J. An accelerated gradient method for trace norm minimization. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 457–464.

- Ma, S.; Goldfarb, D.; Chen, L. Fixed point and Bregman iterative methods for matrix rank minimization. Math. Program. 2011, 128, 321–353.

- Candès, E.J.; Li, X.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM (JACM) 2011, 58, 11.

- Hastie, T. Matrix Completion and Large-Scale SVD Computations. Available online: http://www.stanford.edu/~hastie/TALKS/SVD_hastie.pdf (accessed on 21 February 2018).

- Nie, F.; Wang, H.; Cai, X.; Huang, H.; Ding, C. Robust matrix completion via joint Schatten p-norm and ℓp-norm minimization. In Proceedings of the 2012 IEEE 12th International Conference on Data Mining (ICDM), Brussels, Belgium, 10–13 December 2012; pp. 566–574.

- Liu, W.; Pokharel, P.P.; Príncipe, J.C. Correntropy: Properties and applications in non-Gaussian signal processing. IEEE Trans. Signal Process. 2007, 55, 5286–5298.

- Príncipe, J.C. Information Theoretic Learning: Renyi’s Entropy and Kernel Perspectives; Springer Science & Business Media: Berlin, Germany, 2010.

- Feng, Y.; Huang, X.; Shi, L.; Yang, Y.; Suykens, J.A. Learning with the maximum correntropy criterion induced losses for regression. J. Mach. Learn. Res. 2015, 16, 993–1034.

- Jain, P.; Meka, R.; Dhillon, I.S. Guaranteed Rank Minimization via Singular Value Projection. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–11 December 2010; Volume 23, pp. 937–945.

- Beck, A.; Teboulle, M. A linearly convergent algorithm for solving a class of nonconvex/affine feasibility problems. In Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Springer: Berlin, Germany, 2011; pp. 33–48.

- Rohde, A.; Tsybakov, A.B. Estimation of high-dimensional low-rank matrices. Ann. Stat. 2011, 39, 887–930.

- Chen, Y.; Xu, H.; Caramanis, C.; Sanghavi, S. Robust Matrix Completion with Corrupted Columns. arXiv, 2011; arXiv:1102.2254.

- Huber, P.J. Robust Statistics; Springer: Berlin, Germany, 2011.

- Warmuth, M.K. From Relative Entropies to Bregman Divergences to the Design of Convex and Tempered Non-Convex Losses. Available online: http://classes.soe.ucsc.edu/cmps290c/Spring13/lect/9/holycow.pdf (accessed on 21 February 2018).

- Chen, B.; Xing, L.; Liang, J.; Zheng, N.; Príncipe, J.C. Steady-state mean-square error analysis for adaptive filtering under the maximum correntropy criterion. IEEE Signal Process. Lett. 2014, 21, 880–884.

- Chen, B.; Xing, L.; Zhao, H.; Zheng, N.; Príncipe, J.C. Generalized correntropy for robust adaptive filtering. IEEE Trans. Signal Process. 2016, 64, 3376–3387.

- Chen, B.; Liu, X.; Zhao, H.; Príncipe, J.C. Maximum correntropy Kalman filter. Automatica 2017, 76, 70–77.

- Feng, Y.; Fan, J.; Suykens, J. A Statistical Learning Approach to Modal Regression. arXiv, 2017; arXiv:1702.05960.

- Recht, B.; Fazel, M.; Parrilo, P.A. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 2010, 52, 471–501.

- Bolte, J.; Sabach, S.; Teboulle, M. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 2014, 146, 459–494.

- Li, L.; Huang, W.; Gu, I.Y.H.; Tian, Q. Statistical modeling of complex backgrounds for foreground object detection. IEEE Trans. Image Process. 2004, 13, 1459–1472.

- Wright, J.; Ganesh, A.; Rao, S.; Peng, Y.; Ma, Y. Robust principal component analysis: Exact recovery of corrupted low-rank matrices via convex optimization. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–8 December 2009; pp. 2080–2088.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).