Abstract

In this paper, a new framework for variable detectors is formulated to solve several noise enhanced signal detection optimization problems, in which six mutually disjoint sets of detector and discrete vector pairs are defined according to the two inequality constraints on the detection and false-alarm probabilities. Theorems and algorithms based on the new framework are then presented to search for the optimal noise enhanced solutions that maximize the relative improvements of the detection and the false-alarm probabilities, respectively. Further, the optimal noise enhanced solution for the maximum overall improvement is obtained within the new framework, and the relationship among the three maxima is presented. In addition, sufficient conditions for improvability and non-improvability under the two constraints are given. Finally, numerical examples are presented to illustrate the theoretical results, and the proofs of the main theorems are given in the Appendix.

1. Introduction

Noise commonly coexists with the useful signal, and more noise in a system often leads to lower channel capacity and worse detectability. Filters and algorithms are therefore usually employed to remove unwanted noise, and understanding the distribution and characteristics of noise is an essential research topic in traditional signal detection theory. Nevertheless, although it may seem counterintuitive, noise can play an active role in many signal processing problems, and the performance of some nonlinear systems can be enhanced by adding noise under certain conditions [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27]. The phenomenon in which noise benefits a system is the so-called stochastic resonance (SR), first proposed by Benzi et al. [1] in 1981 when they studied the periodic recurrence of ice ages. The positive effects of noise have drawn the attention of researchers in various fields. For example, the effect of SR on the global stability of complex networks is investigated in [26], and Kohar and Sinha demonstrated in [27] how noise can make a bistable system behave as a memory device.

In signal detection problems, researchers are commonly concerned with increasing the output signal-to-noise ratio (SNR) [7,8,9,10,11], the mutual information [12,13], or the detection probability at a constant false-alarm rate [14,15,16,17,18,19,20], or with decreasing the Bayes risk [21,22] or the probability of error [23], by adding noise to the input of the system or by changing the background noise level. As presented in [8], the output SNR obtained by adding suitable noise to the input of a system can be higher than the input SNR. The results in [14] indicate that the detection probability of the sign detector can be increased by adding an appropriate amount of white Gaussian noise.

Depending on the detection metric, the SR phenomenon in hypothesis testing or detection problems is usually investigated under the Bayesian, minimax, or Neyman–Pearson criterion. In [23], additive noise that optimizes the performance of a suboptimal detector is explored under the Bayesian criterion with uniform cost assignment. It is demonstrated that the optimal noise for minimizing the probability of error is a constant, and that the improvement obtained by adding this constant noise can also be achieved by shifting the decision region without adding any noise. The probability distribution of the optimal additive noise that minimizes the Bayes risk is investigated in [21] under the restricted Bayesian criterion, and the results extend easily to the Bayesian and minimax criteria. For an M-ary hypothesis testing problem, the optimal noise is shown to be a randomization of at most M mass points.

In [15], a mathematical framework is established to search for the optimal noise that maximizes the detection probability under the Neyman–Pearson criterion, leading to the significant conclusion that the optimal noise is a randomization of at most two discrete vectors. In addition, sufficient conditions under which the detection probability can or cannot be increased are given. However, Patel [18] points out a minor flaw in the proof of the optimal noise presented in [15]. Moreover, [18] studies the same noise enhanced problem for a fixed detector within a different mathematical framework, where the optimal noise that maximizes the detection probability is also proved to be a random signal consisting of no more than two discrete points with their corresponding probabilities. Building on the results in [15], the authors of [16] studied the noise enhanced detection performance of variable detectors under the Neyman–Pearson criterion and showed that the optimal noise enhanced solution that maximizes the detection probability is a randomization of no more than two detector and constant vector pairs.

The review above shows that most researchers have focused on maximizing the detection probability via additive noise, and few studies address minimizing the false-alarm probability. The possibility that the false-alarm probability can be decreased by adding noise without degrading the original detectability cannot be excluded, especially when the detection probability cannot be increased by adding any noise. For example, a noise enhanced model that increases the detection probability and decreases the false-alarm probability simultaneously was first formulated in [25] for a fixed detector. That model is solved by a convex combination of the optimal noises for two limiting cases, i.e., the minimization of the false-alarm probability and the maximization of the detection probability. However, such a convex combination is generally not the solution that maximizes the overall improvement of the model. In this paper, we directly seek the optimal solution that maximizes the overall improvement of the detection and false-alarm probabilities instead of using the convex combination. In practical applications, although the structure of a detector usually cannot be replaced, some of its parameters can be varied to obtain better performance. Moreover, noise enhanced detection problems for a fixed detector can generally be solved by specializing the results for variable detectors. Thus, it is necessary to study noise enhanced detection problems for variable detectors.

To obtain the optimal noise enhanced solution that maximizes the overall improvement of the detection and false-alarm probabilities for variable detectors under two inequality constraints, we formulate a new framework that defines six mutually disjoint sets of detector and discrete vector pairs based on the signs of the relative improvements of the detection and the false-alarm probabilities. We then derive the optimal noise enhanced solutions for the maximum detection probability and the minimum false-alarm probability and give the corresponding algorithms within the new framework. Further, the optimal noise enhanced solution for the maximum overall improvement of the detection and false-alarm probabilities is proved to be a randomization of at most two detector and discrete vector pairs from two different sets, and the relationship among the three maxima is presented. In addition, the theoretical results for the case where randomization between detectors is allowed apply straightforwardly to the case where it is not; namely, the optimization problem for variable detectors then reduces to choosing a fixed detector and searching for the optimal additive noise. The maximization of the detection probability in this paper is equivalent to the noise enhanced detection problem for variable detectors studied in [16], which also requires the detection and false-alarm probabilities obtained by every detector and discrete vector pair. The new framework subdivides the single set in [16] into six subsets. By the definitions of the six sets, each detector and discrete vector pair, as a component of the additive noise, is available, partially available, or unavailable for meeting the two constraints. The available and partially available pairs can then be used to construct optimal noise enhanced solutions that satisfy different requirements. In this way, the division into six sets provides the foundation for maximizing the relative improvements of the detection and false-alarm probabilities, as well as their sum.

The main contributions of this paper can be summarized as follows:

- Formulation of a new framework, in which six mutually disjoint sets of detector and discrete vector pairs are defined according to two inequality constraints.

- Algorithms, based on the new framework, for the noise enhanced solutions that maximize the relative improvements of the detection and the false-alarm probabilities.

- The noise enhanced solution for the maximum overall improvement, provided for the first time based on the new framework, together with the relationship among the three maxima.

- Determination of sufficient conditions for improvability and non-improvability under the two constraints.

The remainder of this paper is organized as follows. In Section 2, three optimization problems are formulated for a binary hypothesis testing problem with variable detectors, and the six disjoint sets of detector and discrete vector pairs are defined. In Section 3, the optimal noise enhanced solutions for the three optimization problems are discussed for the cases where randomization between detectors is or is not allowed, and the corresponding algorithms are given. Numerical results are presented in Section 4, and conclusions are provided in Section 5.

2. Problem Formulation

Consider a binary hypothesis testing problem as follows:

where is the probability density function (pdf) of the observation under , , and . For any , the probability of choosing can be characterized by and . Generally, is also treated as the decision function of the detector. To investigate the possible enhancement of detectability, a new noise modified observation is obtained by adding independent noise to the original observation , i.e., . Correspondingly, the pdf of under can be expressed as the convolution of and as follows:

where ∗ denotes the convolution operator.
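When the noise is a randomization of discrete vectors, as it is for the optimal solutions in this paper, the convolution collapses to a weighted sum of shifted copies of the observation pdf. The following minimal pure-Python sketch illustrates this for a scalar observation; the symmetric Gaussian mixture used for the observation pdf and the two noise mass points are hypothetical choices made only for illustration.

```python
import math


def gauss_pdf(x, mean, sigma):
    """Gaussian pdf N(mean, sigma^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, mu=1.0, sigma=0.5):
    """Hypothetical observation pdf: 0.5*N(-mu, sigma^2) + 0.5*N(mu, sigma^2)."""
    return 0.5 * gauss_pdf(x, -mu, sigma) + 0.5 * gauss_pdf(x, mu, sigma)


def noisy_pdf(y, px, noise_points, noise_weights):
    """pdf of y = x + n for discrete noise p_n(n) = sum_k w_k * delta(n - n_k).

    The convolution px * p_n then reduces to sum_k w_k * px(y - n_k).
    """
    return sum(w * px(y - nk) for nk, w in zip(noise_points, noise_weights))


def integrate(f, lo, hi, steps=20000):
    """Trapezoidal rule on [lo, hi], used only to sanity-check total mass."""
    h = (hi - lo) / steps
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, steps):
        total += f(lo + i * h)
    return total * h


if __name__ == "__main__":
    pts, wts = [-0.8, 0.3], [0.4, 0.6]  # hypothetical noise mass points and weights
    mass = integrate(lambda y: noisy_pdf(y, mixture_pdf, pts, wts), -10.0, 10.0)
    print(round(mass, 6))
```

A valid noise pdf keeps the total mass of the noise modified observation at one, which is what the usage block checks numerically.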

For the same detector, the decision function for is the same as that for . Then the detection and false-alarm probabilities based on the new noise modified observation are calculated by:

Given the two constraints and , where and represent the lower limit on the detection probability and the upper limit on the false-alarm probability, respectively, the following three quantities are defined:

where and can be regarded as the relative improvements of the detection and false-alarm probabilities obtained by adding noise, respectively, and is the sum of and .
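The three quantities can be sketched in code. Because the defining equations are not reproduced here, the sketch assumes the forms implied by the numerical discussion in Section 4: the detection improvement is the margin of the noise modified detection probability over the lower limit (P_D - beta), the false-alarm improvement is the margin under the upper limit (alpha - P_FA), and the overall improvement is their sum. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Improvement:
    zd: float   # assumed form: P_D^y - beta   (margin over the detection floor)
    zfa: float  # assumed form: alpha - P_FA^y (margin under the false-alarm ceiling)

    @property
    def z(self) -> float:
        """Overall improvement: the sum of the two relative improvements."""
        return self.zd + self.zfa

    def meets_constraints(self) -> bool:
        """True when P_D^y >= beta and P_FA^y <= alpha both hold."""
        return self.zd >= 0.0 and self.zfa >= 0.0


def improvement(pd: float, pfa: float, alpha: float, beta: float) -> Improvement:
    """Build the (assumed) relative improvements from noise modified probabilities."""
    return Improvement(zd=pd - beta, zfa=alpha - pfa)


if __name__ == "__main__":
    r = improvement(pd=0.9, pfa=0.1, alpha=0.3, beta=0.7)  # illustrative numbers
    print(r.zd, r.zfa, r.z, r.meets_constraints())
```

Both margins being nonnegative is exactly the condition that the two inequality constraints hold, which is why their signs drive the set definitions that follow.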

In many cases, although the structure of the detector cannot be changed, some of its parameters, such as the decision threshold, can be varied. In some special cases, the whole detector can even be replaced. Instead of a fixed decision function , we suppose that there exists a set of candidate decision functions, denoted by , any of which can be used. Then, for any decision function , , the detection and false-alarm probabilities based on are obtained by replacing with , i.e.:

Consider additive noise that is a discrete vector with pdf , where denotes the delta function, i.e., only if and otherwise, . The corresponding noise modified detection and false-alarm probabilities can then be rewritten as:
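With a single discrete noise vector, each noise modified probability is the integral of the decision function against the shifted observation pdf. A minimal scalar sketch, assuming a hypothetical threshold detector (decide H1 when y exceeds gamma) and a hypothetical symmetric Gaussian mixture observation, compares that integral with its closed form:

```python
import math


def std_normal_cdf(t):
    """Standard normal CDF via math.erf."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def mixture_pdf(x, mean, mu, sigma):
    """pdf of mean + w, with w ~ 0.5*N(-mu, sigma^2) + 0.5*N(mu, sigma^2) (assumed model)."""
    c = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return 0.5 * c * (math.exp(-0.5 * ((x - mean + mu) / sigma) ** 2)
                      + math.exp(-0.5 * ((x - mean - mu) / sigma) ** 2))


def prob_numeric(decision, pdf, n0, lo=-15.0, hi=15.0, steps=30000):
    """Midpoint-rule approximation of the integral of decision(y) * pdf(y - n0) dy."""
    h = (hi - lo) / steps
    return h * sum(decision(lo + (i + 0.5) * h) * pdf(lo + (i + 0.5) * h - n0)
                   for i in range(steps))


def prob_closed_form(gamma, mean, mu, sigma, n0):
    """P(mean + w + n0 > gamma) for the hypothetical threshold detector 1{y > gamma}."""
    t = gamma - n0 - mean
    return 0.5 * (1.0 - std_normal_cdf((t + mu) / sigma)) + 0.5 * (1.0 - std_normal_cdf((t - mu) / sigma))


if __name__ == "__main__":
    dec = lambda y: 1.0 if y > 0.5 else 0.0   # hypothetical threshold gamma = 0.5
    for n0 in (-0.7, 0.0, 0.9):               # candidate discrete noise values
        # mean = 1.0 plays the role of H1 (signal present); mean = 0.0 would be H0
        print(n0, prob_closed_form(0.5, 1.0, 1.0, 0.5, n0))
```

Sweeping the shift n0 is the scalar analogue of scanning candidate discrete vectors: each value yields one pair of noise modified detection and false-alarm probabilities.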

Accordingly, under the constraints of and , the relative improvements of the detection and false-alarm probabilities corresponding to the additive noise with pdf can be written as:

Correspondingly:

To make full use of the information provided by the discrete vector , we define the following six mutually disjoint sets for each according to the values of and , denoted by , , , , , . Further, define and ; then , where and .

The six sets thus constitute the new framework. Accordingly, the purpose of this paper is to investigate the optimal noise enhanced solutions for the maximum , and , respectively, under the two inequality constraints. Whether a pair is useful, partially useful, or useless for noise enhancement can be determined directly from the definitions of the six sets. For instance, any detector and discrete vector pair of , and can meet the two constraints that and ; the maximum may be obtained by a suitable detector and discrete vector pair of or ; the maximum may be achieved by a detector and discrete vector pair of or ; and the maximum may be reached by a suitable detector and discrete vector pair of , or . The corresponding theorems and algorithms are provided in the following sections.

3. The Noise Enhanced Solutions

Let , and be the maximum achievable , and , respectively, obtained by adding a discrete vector as additive noise when randomization between detectors is allowed; namely, , and . If any of , and is less than zero, the constraints and cannot both be satisfied by adding any noise. Hence, this paper assumes that , and are greater than zero.

In general, when the randomization between different detectors is allowed, the noise enhanced solution can be viewed as a randomization of one or more detector and noise pairs with the corresponding weights. Suppose that the additive noise pdf is for any , , then , and can be expressed as:

where and . In general, the additive noise for any can be viewed as a randomization of a finite or infinite number of discrete vectors, i.e., , where and , and may be finite or infinite.

3.1. The Optimal Noise Enhanced Solution to Maximize zd

From Equation (15), can be rewritten as:

Further, can also be expressed by:

where , , , , , , . Let . In other words, is obtained by the randomization of two detector and discrete vector pairs from two different sets, i.e., and . Then is the convex combination of multiple , which means that can be obtained by the randomization of multiple different randomizations consisting of two detector and discrete vector pairs , from two different sets with the corresponding probabilities. From Equation (18), if there exists at least one detector and discrete vector pair which belongs to , the constraints and can be satisfied by choosing the suitable detector and adding the discrete vector. Otherwise, according to Equation (19) and the definitions of , a randomization of two detector and discrete vector pairs from two different sets may satisfy the two constraints and .
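The point that two pairs which individually violate different constraints can jointly satisfy both follows from linearity: when a pair is drawn with some probability and then applied, the resulting detection and false-alarm probabilities are convex combinations. A minimal sketch with illustrative numbers (beta = 0.7 and alpha = 0.3 are assumed here, and the function name is hypothetical):

```python
from typing import Sequence, Tuple


def randomize(pairs: Sequence[Tuple[float, float]],
              weights: Sequence[float]) -> Tuple[float, float]:
    """(P_D, P_FA) of a randomization of detector and discrete vector pairs.

    pairs[k] holds the (P_D, P_FA) obtained with the k-th detector and its noise
    vector; weights[k] is the probability of using that pair. Because one pair is
    drawn and then applied, both probabilities are convex combinations.
    """
    if len(pairs) != len(weights):
        raise ValueError("pairs and weights must have the same length")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must form a probability vector")
    pd = sum(w * p for w, (p, _) in zip(weights, pairs))
    pfa = sum(w * q for w, (_, q) in zip(weights, pairs))
    return pd, pfa


if __name__ == "__main__":
    alpha, beta = 0.3, 0.7                   # illustrative constraint levels
    pair_a = (0.9, 0.4)                      # meets P_D >= beta, violates P_FA <= alpha
    pair_b = (0.6, 0.1)                      # meets P_FA <= alpha, violates P_D >= beta
    pd, pfa = randomize([pair_a, pair_b], [0.5, 0.5])
    print(pd, pfa, pd >= beta and pfa <= alpha)
```

Neither pair alone is feasible, yet the equal-weight randomization gives P_D = 0.75 and P_FA = 0.25, which satisfies both constraints.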

Let the maximum achievable obtained by any noise solution under the two constraints and be denoted by , and let denote the set of all detector and discrete vector pairs corresponding to . Then the following theorem and corollary hold; their proofs are presented in Appendix A.

Theorem 1.

can be achieved by the randomization of at most two detector and discrete vector pairs and from two different sets, i.e., and .

Corollary 1.

(a) If there exists at least one pair of which belongs to can be obtained by selecting and . (b) When , the corresponding to is zero. (c) When is obtained by the randomization of and from or with the respective probabilities and , or the detector and discrete pair .

Next, we search for the maximum achievable obtained by the randomization of and from , or when . In general, the corresponding and can be expressed as:

where and . Under the constraint that , the Lagrangian of the optimization problem of maximizing can be formulated as:

where denotes the distribution of and . By Lagrange duality, we have:

Hence, finding the solution that maximizes is equivalent to finding and such that Equation (23) holds. Let us define an auxiliary function such that:

Let and be the respective suprema of over the sets and , i.e.,

Since when , is a decreasing function of . When , , which means that increases with . Thus, there exists a such that , i.e., there exist and such that . The and the obtained by the randomization of and with the respective probabilities and can then be calculated by:

Combining Equations (27) and (28), the and the randomization of and are the solution of Equation (23), i.e., is the maximum achievable obtained by the randomization of and from , or when . Based on this analysis, Algorithm B1 in Appendix B is provided to search for the two detector and discrete vector pairs.
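For a finite list of candidate pairs, the same optimum can be cross-checked by brute force. The sketch below is not Algorithm B1; it is a plain enumeration, under the assumption that each pair is summarized by its (zd, zfa) values. It considers every single pair that already satisfies zfa >= 0, plus every two-pair randomization whose weights make the false-alarm margin exactly zero. Because maximizing zd over randomizations with zfa >= 0 is a linear program whose optimum lies at a vertex of the feasible region, and every such vertex is one of these candidates, the enumeration finds the optimum at O(K^2) cost for K pairs.

```python
from itertools import combinations
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]          # (zd, zfa) of one detector/noise pair
Mixture = List[Tuple[int, float]]    # (pair index, probability)


def max_zd(points: Sequence[Point]) -> Optional[Tuple[float, Mixture]]:
    """Maximize zd subject to zfa >= 0 over randomizations of at most two pairs.

    Returns (best_zd, mixture) or None if no randomization satisfies zfa >= 0.
    """
    best: Optional[Tuple[float, Mixture]] = None
    # Single pairs that already satisfy the false-alarm constraint.
    for i, (zd, zfa) in enumerate(points):
        if zfa >= 0 and (best is None or zd > best[0]):
            best = (zd, [(i, 1.0)])
    # Two-pair mixtures with opposite-sign false-alarm margins.
    for i, j in combinations(range(len(points)), 2):
        (zd_i, zfa_i), (zd_j, zfa_j) = points[i], points[j]
        if zfa_i * zfa_j >= 0:
            continue
        lam = zfa_j / (zfa_j - zfa_i)   # weight on pair i that makes zfa exactly 0
        zd = lam * zd_i + (1.0 - lam) * zd_j
        if best is None or zd > best[0]:
            best = (zd, [(i, lam), (j, 1.0 - lam)])
    return best


if __name__ == "__main__":
    pts = [(0.2, -0.1), (-0.1, 0.1), (0.05, 0.02)]  # illustrative (zd, zfa) values
    print(max_zd(pts))
```

In the usage example, the best solution mixes the first and third pairs with probabilities 1/6 and 5/6 (zd = 0.075). This beats the only attractive single feasible pair (zd = 0.05), consistent with Theorem 1's statement that at most two pairs are needed.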

3.2. The Optimal Noise Enhanced Solution to Maximize zfa

In this subsection, the optimal noise enhanced solution that maximizes is considered. Let the maximum achievable obtained by any noise solution under the two constraints and be denoted by . Define . Then the following theorem and corollary hold; their proofs, which are similar to those of Theorem 1 and Corollary 1, respectively, are omitted here.

Theorem 2.

can be obtained by the randomization of at most two detector and discrete vector pairs and from two different sets, i.e., and .

Corollary 2.

(a) If there exists at least one pair of which also belongs to , can be achieved by selecting and . (b) When , the corresponding to is zero. (c) When , is obtained by the randomization of from , or and with the respective probabilities and , or the detector and discrete pair .

Then we focus on the maximum obtained by the randomization of from , or and with respective probabilities and when . The corresponding and can be expressed by:

where and . Under the constraint of , the Lagrangian of the maximization of is:

By Lagrange duality:

In order to solve Equation (32), let us define an auxiliary function such that:

Suppose that and are the respective suprema of over the sets and , i.e.,

When , and thus increases with . Since when , decreases with ; hence, there exists a such that . Namely, there exist and such that . The and the obtained by the randomization of and with the respective probabilities and can be calculated by:

From Equations (36) and (37), the and the randomization of and are the solution of Equation (32), i.e., is the maximum achievable obtained by the randomization of from , or and when . According to the derivation above, Algorithm B2 in Appendix B can be used to search for the corresponding detector and discrete vector pairs.
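The tight-constraint mixture behind this subsection can also be written in closed form. As a worked sketch in assumed shorthand (z_d^a and z_{fa}^a for pair a, and similarly for pair b; these symbols are not the paper's own notation), let pair a violate the detection constraint while improving the false-alarm probability (z_d^a < 0 < z_{fa}^a), and let pair b satisfy the detection constraint with margin (z_d^b > 0). Choosing the weight so that the detection constraint holds with equality gives:

```latex
% Assumed shorthand: (z_d^a, z_{fa}^a) and (z_d^b, z_{fa}^b) are the relative
% improvements of pairs a and b, with z_d^a < 0 < z_d^b.
\lambda z_d^a + (1-\lambda) z_d^b = 0
\quad\Longrightarrow\quad
\lambda^{\ast} = \frac{z_d^b}{z_d^b - z_d^a} \in (0,1),
\qquad
1-\lambda^{\ast} = \frac{-z_d^a}{z_d^b - z_d^a},

z_{fa}(\lambda^{\ast})
  = \lambda^{\ast} z_{fa}^a + (1-\lambda^{\ast}) z_{fa}^b
  = \frac{z_d^b\, z_{fa}^a - z_d^a\, z_{fa}^b}{z_d^b - z_d^a}.
```

Maximizing the last expression over all such pairs, and comparing the result with the best single pair that already meets both constraints, yields the largest false-alarm improvement among two-pair randomizations. Algorithm B2 may organize this search differently, for example through the auxiliary function defined above.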

3.3. The Optimal Noise Enhanced Solution to Maximize z

Let represent the maximum achievable under the two constraints and . Define . The optimal noise enhanced solution that maximizes is explored in this subsection; the related theorem and corollary are given below.

Theorem 3.

can be obtained by the randomization of at most two detector and discrete vector pairs and from two different sets such that , and . The proof of Theorem 3 is similar to that of Theorem 1 and is omitted here.

Corollary 3.

(a) If there exists at least one pair of which belongs to , the maximum can be realized by choosing and . (b) If , . (c) If and , we have . The proofs are provided in Appendix A.

In particular, when , we can directly select the two pairs and , according to the analysis above and the properties of , to form an available noise enhanced solution that makes the value of as large as possible.

If , then we can let and . Since , the maximum is achieved when . The greater the value of , the greater the value of . So and can be selected as and , where .

Similarly, when , let and , where .
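For a finite candidate list, the maximum overall improvement can again be cross-checked by enumeration. The sketch below uses vertex enumeration and is not the paper's selection procedure; each pair is summarized by assumed (zd, zfa) values. The largest value of zd + zfa over the convex hull of the candidate points, restricted to the quadrant zd >= 0, zfa >= 0, is attained at a vertex of that region. Such a vertex is either a feasible single pair or a point where a hull edge crosses the line zd = 0 or zfa = 0. The origin can also be a vertex, but it only matters when the maximum is zero, which the positivity assumption at the start of Section 3 excludes. Every candidate therefore uses at most two pairs, in line with Theorem 3.

```python
from itertools import combinations
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]          # (zd, zfa) of one detector/noise pair
Mixture = List[Tuple[int, float]]    # (pair index, probability)


def max_z(points: Sequence[Point], tol: float = 1e-12) -> Optional[Tuple[float, Mixture]]:
    """Maximize z = zd + zfa subject to zd >= 0 and zfa >= 0 (at most two pairs)."""
    best: Optional[Tuple[float, Mixture]] = None

    def consider(zd: float, zfa: float, mix: Mixture) -> None:
        nonlocal best
        # Keep the candidate only if it meets both constraints (up to rounding).
        if zd >= -tol and zfa >= -tol and (best is None or zd + zfa > best[0]):
            best = (zd + zfa, mix)

    for i, (zd, zfa) in enumerate(points):
        consider(zd, zfa, [(i, 1.0)])
    for i, j in combinations(range(len(points)), 2):
        p_i, p_j = points[i], points[j]
        for axis in (0, 1):          # axis 0: put the mixture on zd = 0; axis 1: on zfa = 0
            if p_i[axis] * p_j[axis] < 0:
                lam = p_j[axis] / (p_j[axis] - p_i[axis])
                consider(lam * p_i[0] + (1.0 - lam) * p_j[0],
                         lam * p_i[1] + (1.0 - lam) * p_j[1],
                         [(i, lam), (j, 1.0 - lam)])
    return best


if __name__ == "__main__":
    print(max_z([(0.3, -0.1), (-0.1, 0.3)]))  # illustrative (zd, zfa) values
```

In the usage example, z stays at 0.2 along the whole segment between the two pairs, so any weight on the first pair between 0.25 and 0.75 is optimal. This mirrors the non-uniqueness of the mixing probability for the maximum overall improvement discussed in Section 4.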

3.4. Sufficient Conditions for and

In this subsection, based on the analysis in Sections 3.1–3.3 and the properties of the six sets, sufficient conditions under which the two constraints and can or cannot be satisfied are given below.

Theorem 4.

(a) If , any pair can meet and ; (b) When , if there exist and such that:

then and can be realized by the randomization of and , otherwise there exists no noise enhanced solution to make and hold. The proofs are presented in Appendix A.

When randomization between detectors is not allowed, only one detector can be used. Supposing that the selected detector is , the conclusions for the case where randomization between detectors is allowed apply straightforwardly to the nonrandomized case by replacing with and , where .

4. Numerical Results

In this section, a binary hypothesis-testing problem is considered to verify the theoretical results explored in the previous sections. The binary hypotheses are given by:

where , is a known constant, and are independent identically distributed (i.i.d.) symmetric Gaussian mixture noise samples such that:

where . The test statistic of a suboptimal detector is shown as:

where . From Equation (41), the test result in this case is obtained through a two-stage decision. First, the sign detector determines the sign of each observation component . Second, the proportion of positive components in the observation vector is computed and compared with the decision threshold to obtain the final result.
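The two-stage decision can be sketched directly. Because Equation (41) is not reproduced here, the sketch assumes that H1 is chosen when the fraction of positive components is at least the threshold gamma. For N = 2 this gives an AND rule when gamma is above 0.5 and an OR rule when gamma is at or below 0.5, which appears consistent with the two-threshold description of the detector that follows. The function name is hypothetical.

```python
from typing import Sequence


def sign_proportion_detector(x: Sequence[float], gamma: float) -> int:
    """Two-stage test: returns 1 (decide H1) or 0 (decide H0).

    Assumption: H1 is chosen when the fraction of positive components is >= gamma,
    with gamma in (0, 1].
    """
    if not x:
        raise ValueError("observation must be non-empty")
    positives = sum(1 for xi in x if xi > 0)   # stage 1: sign detector per component
    return int(positives / len(x) >= gamma)    # stage 2: compare proportion with gamma


if __name__ == "__main__":
    for obs in [(1.0, -1.0), (1.0, 2.0), (-1.0, -2.0)]:
        print(obs, sign_proportion_detector(obs, 0.75), sign_proportion_detector(obs, 0.25))
```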

Let ; then the detector in Equation (41) can be represented by two decision thresholds and , whose corresponding decision functions are and , respectively. When , the detector chooses only if and simultaneously. When , the detector chooses if or . Assume that is an unconstrained discrete vector. Then the detection and false-alarm probabilities of the sign detector for the noise modified observation component , , can be calculated by and , where , and . Further, the detection and false-alarm probabilities of for are computed as and , respectively. The detection and false-alarm probabilities of for can be expressed by and . Correspondingly, and , .
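The product and complement formulas can be sketched for N = 2. The model details are assumptions, since the hypotheses and mixture pdf are not reproduced here: H0 gives x_i = w_i, H1 gives x_i = A + w_i, and w_i follows a symmetric equal-weight Gaussian mixture with modes at -mu and +mu and common standard deviation sigma. The parameter values in the usage block are hypothetical. Because the w_i are i.i.d. and the noise vector is deterministic, the sign decisions on the two components are independent.

```python
import math
from typing import Dict, Sequence, Tuple


def std_normal_cdf(t: float) -> float:
    """Standard normal CDF via math.erf."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def p_positive(shift: float, mu: float, sigma: float) -> float:
    """P(shift + w > 0) for w ~ 0.5*N(-mu, sigma^2) + 0.5*N(mu, sigma^2) (assumed pdf)."""
    return 0.5 * std_normal_cdf((shift - mu) / sigma) + 0.5 * std_normal_cdf((shift + mu) / sigma)


def and_rule(p: Sequence[float]) -> float:
    """phi_1: decide H1 only if both components are positive."""
    return p[0] * p[1]


def or_rule(p: Sequence[float]) -> float:
    """phi_2: decide H1 if at least one component is positive."""
    return 1.0 - (1.0 - p[0]) * (1.0 - p[1])


def detector_probs(n: Sequence[float], A: float, mu: float,
                   sigma: float) -> Dict[str, Tuple[float, float]]:
    """(P_D, P_FA) of phi_1 and phi_2 for a deterministic noise vector n = (n1, n2)."""
    pd = [p_positive(A + ni, mu, sigma) for ni in n]   # assumed H1: x_i = A + w_i
    pfa = [p_positive(ni, mu, sigma) for ni in n]      # assumed H0: x_i = w_i
    return {"phi1": (and_rule(pd), and_rule(pfa)),
            "phi2": (or_rule(pd), or_rule(pfa))}


if __name__ == "__main__":
    for n in [(0.0, 0.0), (1.5, -1.5)]:                 # hypothetical noise vectors
        print(n, detector_probs(n, A=1.0, mu=2.0, sigma=0.5))
```

Swapping n1 and n2 leaves both rules unchanged, which is the symmetry noted later in this section when only one of two equivalent noise vectors is listed.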

Let and . Under the two constraints that and , for any , we can determine the six sets , , for and according to the definitions of the six sets and the values of and , respectively. Naturally, the six sets obtained by allowing randomization between and can be determined by , . Afterwards, we can search for the maximum , , and the corresponding noise enhanced solutions using the algorithms provided in Section 3.
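An end-to-end search of this kind can be sketched under the same assumed model as above (H1 adds a known A to symmetric Gaussian mixture samples, N = 2, AND and OR rules). The noise components are restricted to a hypothetical uniform grid, and the maximum detection improvement under the false-alarm constraint is found by brute-force enumeration rather than by Algorithm B1. Pooling the candidate points of both detectors corresponds to allowing randomization between them. All parameter values are illustrative, not the ones used for the figures.

```python
import math
from itertools import combinations, product
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (zd, zfa) with assumed forms (P_D - beta, alpha - P_FA)


def std_normal_cdf(t: float) -> float:
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def p_positive(shift: float, mu: float, sigma: float) -> float:
    """P(shift + w > 0) for w ~ 0.5*N(-mu, sigma^2) + 0.5*N(mu, sigma^2) (assumed pdf)."""
    return 0.5 * std_normal_cdf((shift - mu) / sigma) + 0.5 * std_normal_cdf((shift + mu) / sigma)


def candidate_points(A: float, mu: float, sigma: float, alpha: float, beta: float,
                     grid: Sequence[float]) -> Dict[str, List[Point]]:
    """(zd, zfa) for each detector and each noise vector n = (n1, n2) on the grid."""
    pts: Dict[str, List[Point]] = {"phi1": [], "phi2": []}
    for n in product(grid, repeat=2):
        pd = [p_positive(A + ni, mu, sigma) for ni in n]
        pf = [p_positive(ni, mu, sigma) for ni in n]
        pts["phi1"].append((pd[0] * pd[1] - beta, alpha - pf[0] * pf[1]))   # AND rule
        pts["phi2"].append(((1 - (1 - pd[0]) * (1 - pd[1])) - beta,          # OR rule
                            alpha - (1 - (1 - pf[0]) * (1 - pf[1]))))
    return pts


def max_zd(points: Sequence[Point]) -> Optional[float]:
    """Max zd subject to zfa >= 0 over randomizations of at most two points."""
    best = max((zd for zd, zfa in points if zfa >= 0), default=None)
    for (zd_i, zf_i), (zd_j, zf_j) in combinations(points, 2):
        if zf_i * zf_j < 0:
            lam = zf_j / (zf_j - zf_i)        # makes the false-alarm margin exactly zero
            val = lam * zd_i + (1 - lam) * zd_j
            if best is None or val > best:
                best = val
    return best


if __name__ == "__main__":
    grid = [-2.0 + 0.25 * k for k in range(17)]   # hypothetical grid for n1 and n2
    pts = candidate_points(A=1.0, mu=2.0, sigma=0.5, alpha=0.3, beta=0.7, grid=grid)
    print("phi1 only:", max_zd(pts["phi1"]))
    print("phi2 only:", max_zd(pts["phi2"]))
    print("randomized:", max_zd(pts["phi1"] + pts["phi2"]))
```

Pooling the two candidate lists can never lower the optimum, because every mixture available to one detector alone is still available. This matches the observation in the figures that allowing randomization between detectors widens the range where noise enhancement is possible.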

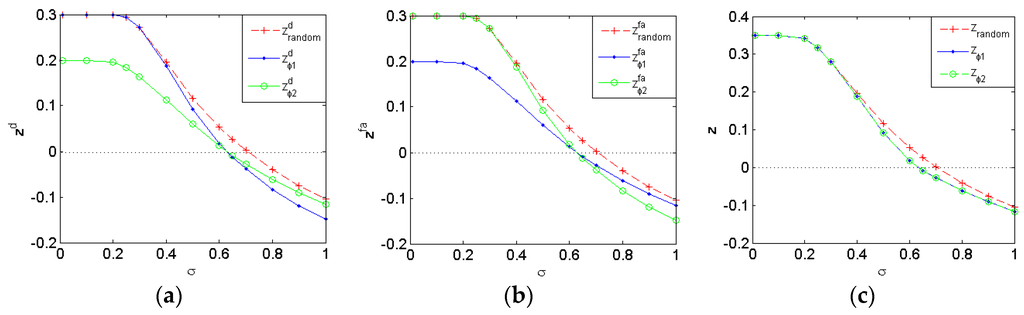

Figure 1 illustrates the maximum achievable , and for , and the case of allowing randomization between and , for different values of when and . The plotted in Figure 1a is the relative improvement of the maximum achievable detection probability over under the constraint , i.e., . Hence, and can be realized only when . As shown in Figure 1a, when increases to a certain extent, which means the feasible range of for the noise enhanced phenomenon is limited. When is close to 0, the maximum achievable for is 0.3, which equals that for the case of allowing randomization between detectors, and the corresponding is close to 1, while the maximum for can only reach 0.9. As increases, for , and the case of allowing randomization between them gradually decrease. When , the maximum achievable for is lower than that for the case of allowing randomization between detectors. The maximum achievable for and when , and the maximum for gradually exceeds that for when . In particular, for the case where randomization between detectors is allowed, the maximum achievable decreases to 0 when . Consequently, for , the noise enhanced phenomenon under the constraints and can still occur when randomization between and is allowed, because the union of and , , provides more available noise enhanced solutions.

Figure 1.

The maximum achievable , and for , and the case of allowing the randomization between and for different values of when and . Plotted in (a), (b) and (c), respectively.

The depicted in Figure 1b is the relative improvement of the minimum achievable false-alarm probability over under the constraint , i.e., . Similarly, a noise enhanced solution meeting the two constraints and exists only if . When approaches 0, the maximum for is equal to that for the case of allowing randomization between and , and the corresponding minimum is close to 0, while the minimum for can only reach 0.1. From Figure 1b, as increases, the maximum achievable for , and the case of allowing randomization between them gradually decrease. The maximum achievable for and is lower than zero when , while that obtained in the case of allowing randomization between and is still greater than zero for . In other words, for , whereas the noise enhanced phenomenon cannot occur in the nonrandomized case, and can still be realized by allowing randomization between and .

From Figure 1a,b, it is clear that, under the constraints and , the maximum achievable for is greater than that for , and the minimum achievable for is smaller than that for when . In such cases, we can choose for a greater or select for a smaller when randomization between detectors is not allowed. As illustrated in Figure 1c, the maximum for is equal to that for . When is close to 0, the maximum for the case of allowing randomization can reach , which is greater than the corresponding maximum and . Obviously, there exists to obtain the maximum in the whole . As increases, the number of elements in the set decreases. When , , and then the maximum obtained in the case of allowing randomization is equal to the maximum or according to Corollary 3, i.e., .

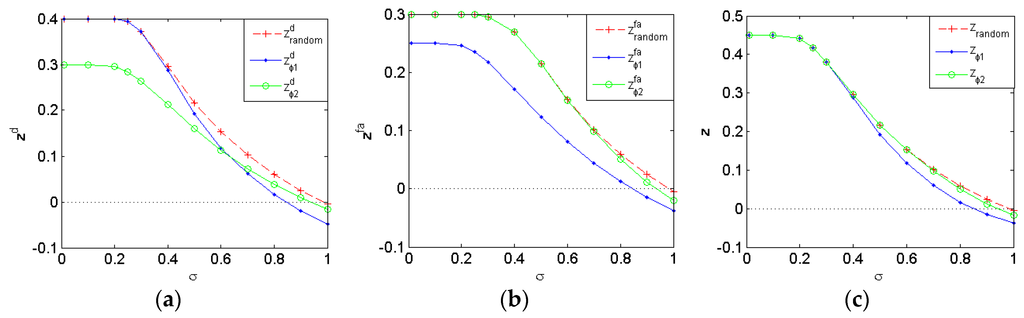

As a comparison, Figure 2 and Figure 3 show the maximum achievable , and for , and the case of allowing randomization between them for different values of when , and , , respectively. Comparing Figure 1a with Figure 2a, both plot the corresponding to the maximum under the constraint , so the in the two figures show the same trend. In Figure 2b, the maximum obtained for the case of allowing randomization between detectors equals that for when . Compared to Figure 1b, when is close to 0, the minimum in Figure 2b for remains zero, while the minimum for decreases from 0.1 to 0.05 as the corresponding increases from 0.2 to 0.25. Further, comparing the minimum for when and , they are equal when , and then the latter gradually becomes greater than the former as increases, which is consistent with Figure 1b and Figure 2b. From the definition of , i.e., , as decreases, the value of increases and some may change from negative to positive. In other words, the decrease of changes the distribution of the detector and discrete vector pairs in . For any , some pairs belonging to for are reallocated to the set or when decreases to 0.6. In addition, some when may belong to or when . These new elements in can then be used to construct more available noise enhanced solutions that achieve a lower false-alarm probability. Note, however, that the improvement of comes at the cost of a decrease in the corresponding .

Figure 2.

The maximum achievable , and for , and the case of allowing the randomization between and for different values of when and . Plotted in (a), (b) and (c), respectively.

Figure 3.

The maximum achievable , and for , and the case of allowing the randomization between and for different values of when and . Plotted in (a), (b) and (c), respectively.

Comparing Figure 3 with Figure 1, as decreases from 0.3 to 0.2, some will change from positive to negative, i.e., the distribution of changes. Consequently, for any , there may be some change to and , change to , or change to . As shown in Figure 3a, when is close to 0, the maximum achievable for , and the case of allowing randomization between them are 0.2, 0.15 and 0.25, and the corresponding maximum can reach 0.9, 0.85 and 0.95, respectively. As increases, the maximum for , and the case of allowing randomization decrease, with the maximum decreasing fastest for and slowest for . Further, the maximum achievable for is greater than that for when , and the difference between the maximum for and the case of allowing randomization becomes smaller as increases. Comparing Figure 3b with Figure 1b, both plot the corresponding to the minimum under the constraint . In particular, for any , i.e., according to Corollary 3. In addition, compared with Figure 1, under the two constraints and , the feasible ranges of for , and the case of allowing randomization between them become smaller.

In summary, as decreases, the values of , and increase. This is mainly because the noise enhanced phenomenon generally occurs when the observation has a multimodal pdf, and the multimodal structure is more pronounced for a smaller [21]. To investigate the simulation results of Figure 1, Figure 2 and Figure 3 further, Table 1, Table 2 and Table 3 present the optimal noise enhanced solutions that maximize , and for , and the case of allowing randomization, respectively, for different when and .

Table 1.

The optimal additive noises to maximize , and for various values of for when and .

Table 2.

The optimal additive noises to maximize , and for various values of for when and .

Table 3.

The optimal additive noises to maximize , and for various values of for the case of allowing the randomization between and when and .

It is worth noting that the optimal noise enhanced solutions that maximize , and , respectively, are not unique in many cases. Moreover, owing to the property of the detector, the noise modified detectability for , , obtained by adding is the same as that achieved by adding . For brevity, only one noise enhanced solution is listed for each for the maximum , as well as for the maximum and . As shown in Table 1, Table 2 and Table 3, the optimal noise enhanced solutions that maximize , and , respectively, are randomizations of at most two detector and discrete vector pairs and from two different sets, which is consistent with Theorems 1–3.

First, the noise enhanced solution for is taken as an example. When , the maximum obtained by is equal to the maximum obtained by . Through some calculations, is one of the discrete vectors that maximize , so is the optimal noise to maximize for when and . At the same time, the obtained by is also the maximum for , so is the optimal noise to maximize for . Besides, the maximum obtained from and is smaller than the maximum for ; thus, the maximum under the two constraints and is obtained by the randomization of from and from with probabilities 0.4 and 0.6, respectively. The case of is similar to the case of .

When , both and are empty, and the maximum , and for cannot be obtained by a discrete vector from . Based on Theorems 1–3, the maximum , and can be achieved by the randomization of two detector and discrete vector pairs from and . Further, the noise enhanced solutions for the maximum and have the same additive noise components, i.e., and , but with different probabilities. Moreover, according to Corollary 3(b), . When randomization between and is allowed, the obtained by is equal to the obtained by , and it is the maximum obtained in . According to Corollary 3(c), the maximum , and can be obtained by the randomization of and with different probabilities. In particular, the probability of for the maximum or is unique, while the probability of for the maximum can take any value within a certain interval. When , no noise enhanced solution meets the two constraints in the nonrandomized case (Table 1 and Table 2), while noise enhanced solutions that improve the detectability under the same constraints still exist when randomization between and is allowed (Table 3); these solutions are also obtained according to Corollary 3(c).

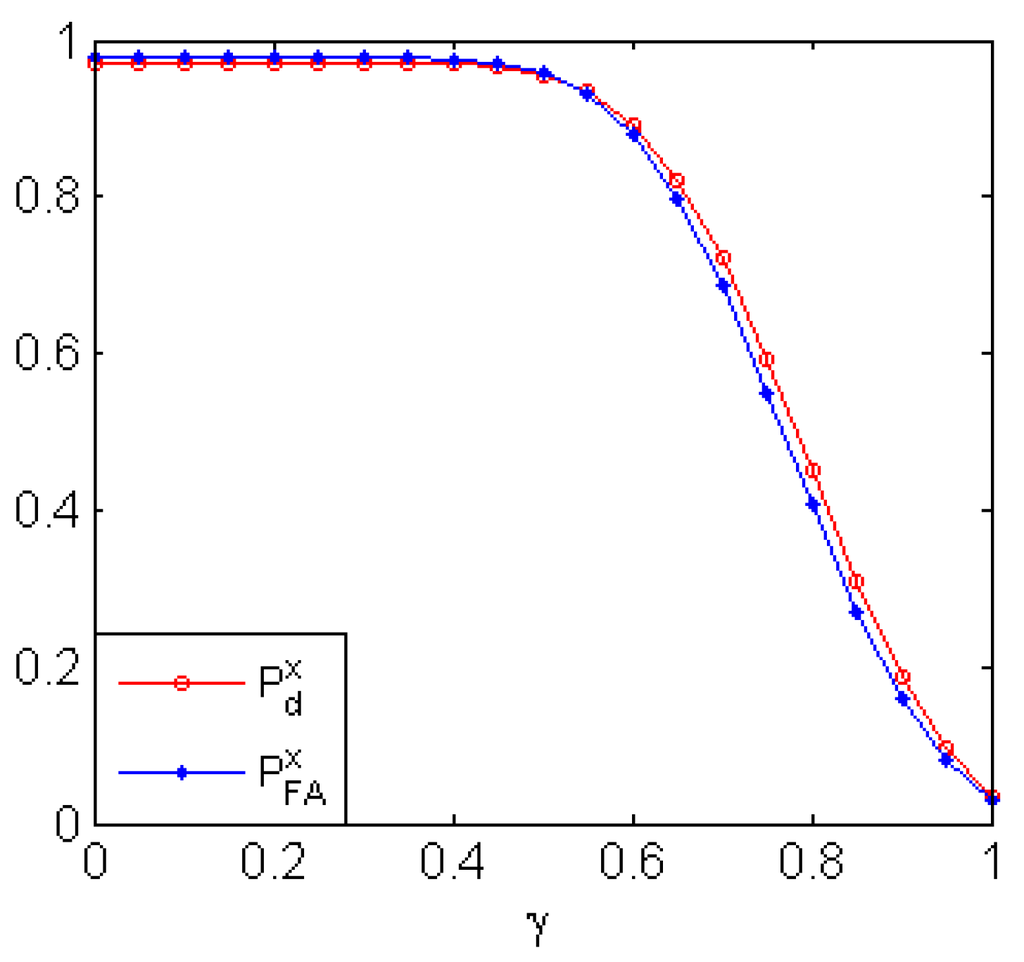

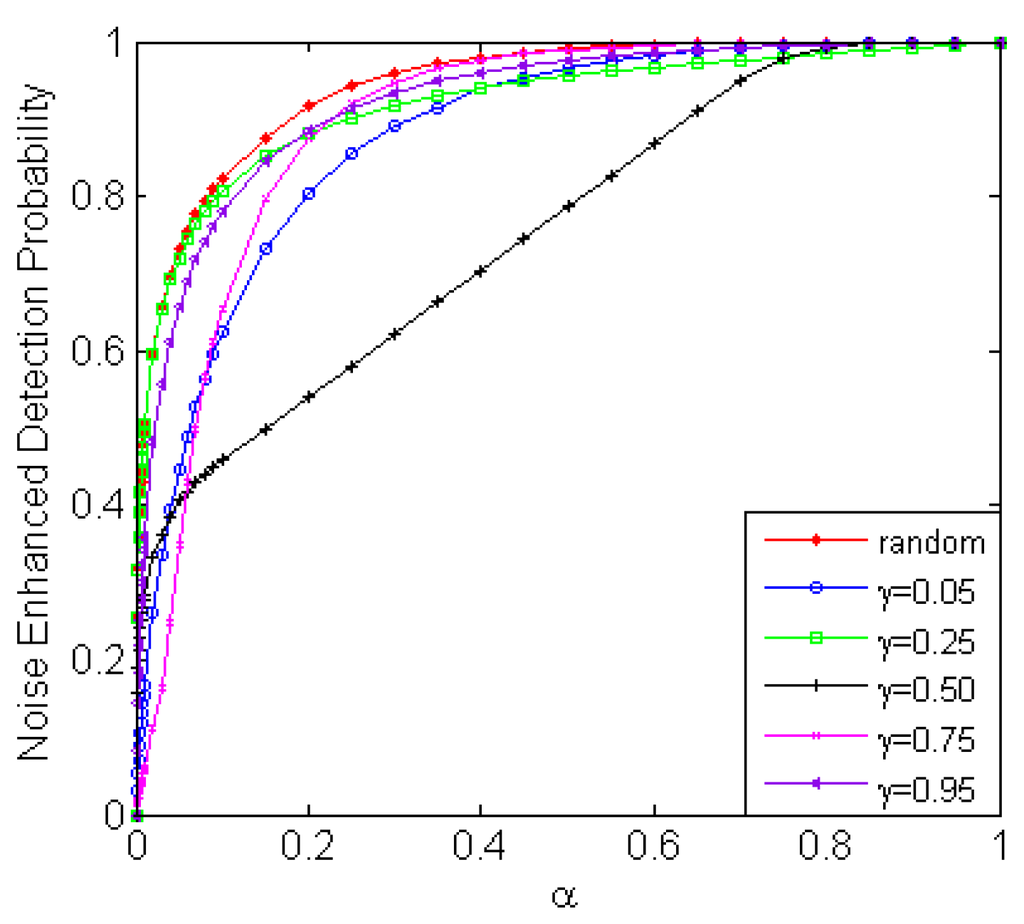

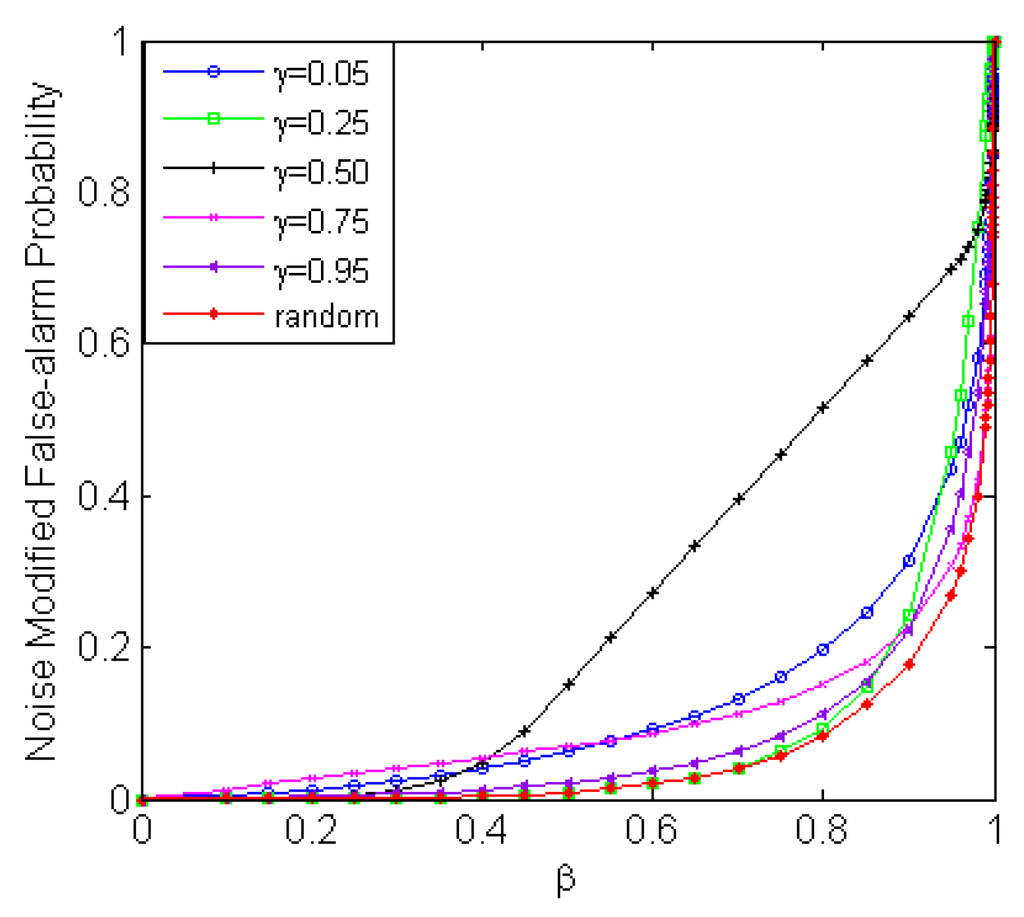

In order to examine the effect of the decision threshold on the detection and false-alarm probabilities, the proposed noise enhanced method is applied for different values of . Further, the relationship between the maximum achievable detection probability and , and that between the minimum achievable false-alarm probability and , are explored for different . The results of the original detector and the noise enhanced detector for different are given in Figure 4, Figure 5 and Figure 6.

Figure 4.

The original detection and false-alarm probabilities for different when , , and .

Figure 5.

The maximum achievable detection probability as a function of for and , and for the case allowing randomization between thresholds, when , , and .

Figure 6.

The minimum achievable false-alarm probability as a function of for and , and for the case allowing randomization between thresholds, when , , and .

Figure 4 gives the original detection and false-alarm probabilities for different when . From Figure 4, both the original detection and false-alarm probabilities decrease as increases, and the original detection probability is close to the original false-alarm probability for any . In other words, a smaller yields a better detection probability, while a greater yields a lower false-alarm probability.

Figure 5 plots the maximum achievable detection probability obtained by adding noise as a function of for and , and for the case allowing randomization between thresholds, when , , and . Comparing Figure 5 with Figure 4, the detection probabilities for and 0.75 can be increased significantly by adding suitable noise, while a lower false-alarm probability is also achieved. Figure 6 presents the minimum achievable false-alarm probability obtained by adding noise as a function of for and , and for the case allowing randomization between thresholds, when , , and . Comparing Figure 6 with Figure 4, the false-alarm probabilities for , 0.25 and 0.5 can be decreased significantly by adding suitable noise, while a higher detection probability is also achieved.

As shown in Figure 5 and Figure 6, for the cases of and , the detector with shows the worst performance among the thresholds considered; thus, in such cases, is not a suitable threshold. Figures 5 and 6 also show that different detection performance can be realized by adding noise. Namely, our method can provide various noise enhanced solutions to satisfy different performance requirements. As a result, for any decision threshold , we can determine whether the performance of the detector can be improved, and search for a noise enhanced solution that realizes the improvement, using the method proposed in this paper.

It is worth noting that the method proposed in this paper places no restriction on the detector. Whether the detection performance can be improved by adding noise depends only on the detector itself. The algorithms proposed in this paper not only provide a way to establish improvability or nonimprovability, but also show how to search for the optimal noise enhanced solutions. For any detector, whether an optimal Neyman–Pearson (Bayesian, minimax) detector or a suboptimal one, we first calculate the values of obtained by every detector and discrete vector pair . Then, we assign each pair to one of the six sets according to the values of and , where and . If some detector and discrete vector pairs satisfy the sufficient conditions given in Section 3.4, the noise enhanced solutions that maximize the relative improvement of the detection probability, the relative improvement of the false-alarm probability and the overall improvement can be obtained according to Section 3.1, Section 3.2 and Section 3.3, respectively, subject to and . Otherwise, no noise enhanced solution satisfies and simultaneously.
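The first step of this procedure, computing the detection and false-alarm probabilities of every detector and discrete vector pair and comparing them with those of the original detector, can be sketched in Python as follows. The observation model is a hypothetical toy chosen only for illustration and is not one of the paper's examples: under H0 the observation is x = w, under H1 it is x = A + w with A = 1, w follows a symmetric two-component Gaussian mixture, each detector is a threshold test on x + n, and n ranges over a small set of discrete noise values. The two boolean flags only record the two constraint comparisons; they do not reproduce the paper's six sets.

```python
import math
from itertools import product


def q_func(z: float) -> float:
    """Standard Gaussian tail probability Q(z) = P(N(0, 1) > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def mixture_tail(t: float, mu: float = 3.0, sigma: float = 1.0) -> float:
    """P(w > t) for the assumed noise w ~ 0.5 N(-mu, sigma^2) + 0.5 N(mu, sigma^2)."""
    return 0.5 * q_func((t + mu) / sigma) + 0.5 * q_func((t - mu) / sigma)


def operating_point(tau: float, n: float, amp: float = 1.0):
    """(PD, PFA) of the rule 'decide H1 if x + n > tau' in the toy model."""
    pd = mixture_tail(tau - n - amp)   # H1: x = amp + w
    pfa = mixture_tail(tau - n)        # H0: x = w
    return pd, pfa


def tabulate(thresholds, noise_values, tau0):
    """Evaluate every (threshold, noise value) pair against the original
    detector, i.e. threshold tau0 with no added noise."""
    pd0, pfa0 = operating_point(tau0, 0.0)
    rows = []
    for tau, n in product(thresholds, noise_values):
        pd, pfa = operating_point(tau, n)
        rows.append({"tau": tau, "n": n, "pd": pd, "pfa": pfa,
                     "pd_not_lower": pd >= pd0, "pfa_not_higher": pfa <= pfa0})
    return (pd0, pfa0), rows


(pd0, pfa0), rows = tabulate([0.0, 0.5, 1.0], [-2.0, 0.0, 2.0], tau0=0.5)
for row in rows:
    print(row)
```

The resulting table of operating points is the only input the later search steps need; in a real application `operating_point` would be replaced by the detector and noise model at hand.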

5. Conclusions

In this paper, a framework consisting of six mutually disjoint sets is established according to two inequality constraints on the detection and false-alarm probabilities. Based on this framework, the relative improvements of the detection and false-alarm probabilities and the overall improvement are maximized. Theorems 1–3 give the forms of the optimal noise enhanced solutions that maximize these three quantities, and their maxima are calculated in Corollaries 1, 2 and 3, respectively. In particular, according to Corollary 3, the maximum overall improvement is equal to the maximum relative improvement of the detection probability or that of the false-alarm probability under certain conditions. Furthermore, sufficient conditions for improvability and nonimprovability are given in Theorem 4.

Compared with the method proposed in [16], which only maximizes the detection probability under a constant false-alarm rate (CFAR) constraint, our method likewise requires all the values of obtained by every detector and discrete vector pair , but, owing to the division into six sets, it may use this information more effectively to improve the overall performance or to decrease the false-alarm probability.

Furthermore, the theoretical results in this paper can be extended to a broad class of noise enhanced optimal problems subject to two inequality constraints, such as the minimization of the Bayes risk under a constraint on the conditional risk in the binary hypothesis testing problem, and the minimization of linear combinations of error probabilities under constraints on the type I and type II error probabilities, as discussed in [28].

Acknowledgments

This research is supported by the Fundamental Research Funds for the Central Universities (Grant No. CDJZR11160003 and No. 106112015CDJZR165510) and the National Natural Science Foundation of China (Grant No. 41404027, No. 61471072, No. 61301224, No. 61103212, No. 61471073 and No. 61108086).

Author Contributions

Shujun Liu proposed the idea of the new framework for solving different noise enhanced signal detection optimal problems. Ting Yang and Shujun Liu contributed to drafting the manuscript, interpreting the results and designing some of the experiments, and checked the manuscript. Mingchun Tang and Kui Zhang designed the experiments on the maximum achievable and for different cases. Ting Yang and Xinzheng Zhang contributed to the proofs of the theorems developed in this paper. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

A.1. Proofs of Theorems and Corollaries

Theorem A1.

can be achieved by the randomization of at most two detector and discrete vector pairs and from two different sets, i.e., , , and .

Proof.

Combining Equation (19) with the definitions of , if some randomization of two detector and discrete vector pairs from two different sets makes and hold, then the two constraints and can be satisfied by a randomization that combines one or more such two-pair randomizations with corresponding probabilities. Otherwise, no noise enhanced solution meets and .

Consequently, under the two constraints and , the obtained by any randomization of detector and discrete vector pairs cannot be greater than the maximum obtained by a randomization of two detector and discrete vector pairs and from two different sets with probabilities and , respectively, where . In particular, and represent the case where the maximum under the two constraints is obtained by a single detector and discrete vector pair. That is, under the two constraints and , the maximum can be obtained by the randomization of at most two detector and discrete vector pairs from two different sets. □
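The bound used in this proof can be made explicit with a convexity argument. The sketch below uses assumed notation that does not appear in the text above: candidate pairs are indexed by k, pair k has operating point (P_{FA,k}, P_{D,k}) and is used with probability w_k, and (P_{FA}^x, P_D^x) is the original operating point. It treats the maximization of P_D^y, which is equivalent to maximizing a relative improvement of the detection probability whose denominator is fixed.

```latex
\begin{aligned}
&P_D^y=\sum_k w_k P_{D,k},\qquad P_{FA}^y=\sum_k w_k P_{FA,k},\qquad w_k\ge 0,\quad \sum_k w_k=1,\\
&\mathcal{C}=\operatorname{conv}\bigl\{(P_{FA,k},P_{D,k})\bigr\}_k,\qquad
\mathcal{G}=\mathcal{C}\cap\bigl\{(u,v):\,u\le P_{FA}^x\bigr\},\\
&\max_{\{w_k\}}\bigl\{P_D^y : P_{FA}^y\le P_{FA}^x\bigr\}=\max_{(u,v)\in\mathcal{G}} v .
\end{aligned}
```

The set G is a convex polygon, so the linear function v attains its maximum at a vertex of G. Each vertex of G is either a vertex of C, that is, a single pair, or the point where an edge of C crosses the line u = P_FA^x, that is, a randomization of the two pairs at the ends of that edge. If this maximum is at least P_D^x, the constraint P_D^y ≥ P_D^x holds as well; otherwise, no randomization satisfies both constraints.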

Corollary A1.

(a) If there exists at least one pair of which belongs to , can be obtained by selecting and . (b) When , the corresponding to is zero. (c) When , is obtained either by the randomization of and from , or with the respective probabilities and , or by the detector and discrete vector pair .

Proof.

Part (a): According to the definition of , the obtained by each pair is equal to . Since , the of can only come from , or . If there exists at least one pair of which belongs to , it is obvious that can be obtained by choosing .

Part (b): We argue by contradiction. When , suppose that the corresponding to is greater than zero, and denote it by . From the definition of , for any pair we have and , since is defined without the two constraints and . Then the and the obtained by the randomization of and the optimal noise enhanced solution for , with probabilities and , respectively, can be calculated as follows:

because . Thus, contradicts the definition of . Hence, the corresponding to is zero when .

Part (c): First, according to Theorem 1, is obtained by the randomization of at most two detector and discrete vector pairs from two different sets. Second, according to Corollary 1(b), the corresponding to is zero. Then, combined with the definitions of , can only be realized by a detector and discrete vector pair from or by a randomization of from and from , or with the respective probabilities and , and vice versa. Further, means that , i.e., . If the maximum obtained by the above randomization is greater than the maximum obtained by from , the optimal noise enhanced solution to maximize is the corresponding randomization. Otherwise, the optimal noise enhanced solution to maximize is the detector and discrete vector pair . □

Corollary A3.

(a) If there exists at least one pair of which belongs to , the maximum can be realized by choosing and . (b) If , . (c) If and , we have .

Proof.

Part (a): According to the definition of , the obtained by each pair is equal to . Since , the of can come from . If there exists at least one pair of which belongs to , it is obvious that can be achieved by choosing .

Part (b): When , the maximum is obtained by the randomization of and with probabilities and , so:

In order to satisfy the two constraints that and , and need to meet the following inequalities:

Accordingly, when and when .

Since , there is at least one pair of and which belongs to . If can be selected only from , or .

When and , the randomization is available if and only if . From the definitions of and , Equation (A4) holds for any , and Equation (A5) holds only for . Hence, the maximum is obtained when , as .

When and , Equations (A4) and (A5) hold simultaneously if and only if . If , attains its maximum when ; otherwise, reaches its maximum when .

When and , suppose that . Based on the definitions of and , Equation (A4) holds for and Equation (A5) holds for any . Hence, the maximum is reached when .

If or , and . According to Corollary 1, is obtained when and the corresponding . If or , and . According to Corollary 2, is obtained when and the corresponding equals zero, i.e., . As a result, the maximum is equal to the maximum or , i.e., .

Part (c): When and , let and . Then we have . As , and . Some simple calculations show that , which means that and can be satisfied by the randomization of and with the respective probabilities and , where . Accordingly, we always have for any . Furthermore, and reach their maxima when and , respectively, and . □

Theorem 4.

(a) If , any pair can meet and ; (b) When , if there exist and such that

then and can be realized by the randomization of and ; otherwise, no noise enhanced solution makes and hold.

Proof.

Part (a): If , from the definitions of , and for any . Accordingly, the corresponding and .

Part (b): When , for the and obtained by the randomization of and to meet the two constraints and , the two inequalities (A3) and (A4) must be satisfied simultaneously. Further, (A3) and (A4) hold simultaneously if and only if . Since and for any , and for any , we have:

□
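Theorem 4 separates the case where a single pair already meets both constraints from the case where two pairs must be randomized. A hedged Python sketch of such an improvability test follows, assuming the constraints P_D^y ≥ P_D^x and P_FA^y ≤ P_FA^x with the original operating point passed as the hypothetical arguments `pd0` and `pfa0`. The split into "over" and "under" pairs and the cross-multiplied inequality are an illustration derived from the two linear constraints, not the exact condition stated in Theorem 4, and pairs that violate both constraints are simply not used.

```python
from itertools import product
from typing import List, Optional, Tuple

Pair = Tuple[float, float]  # (pd, pfa) of one detector/discrete-vector pair


def improving_randomization(pairs: List[Pair], pd0: float,
                            pfa0: float) -> Optional[Tuple[Pair, ...]]:
    """Return one pair, or two pairs to randomize, meeting PD >= pd0 and PFA <= pfa0.

    Returns None when no such single pair or two-pair randomization is found.
    """
    # (a) A single pair that already meets both constraints.
    for p in pairs:
        if p[0] >= pd0 and p[1] <= pfa0:
            return (p,)
    # (b) Otherwise mix a pair that violates only the PFA constraint ("over")
    # with one that violates only the PD constraint ("under").
    over = [p for p in pairs if p[0] >= pd0 and p[1] > pfa0]
    under = [p for p in pairs if p[0] < pd0 and p[1] <= pfa0]
    for a, b in product(over, under):
        # With probability lam on a, PD >= pd0 needs lam >= (pd0 - b_pd) / (a_pd - b_pd)
        # and PFA <= pfa0 needs lam <= (pfa0 - b_pfa) / (a_pfa - b_pfa). Both
        # denominators are positive, so a valid lam exists exactly when:
        if (pd0 - b[0]) * (a[1] - b[1]) <= (pfa0 - b[1]) * (a[0] - b[0]):
            return (a, b)
    return None


candidates = [(0.9, 0.4), (0.3, 0.05), (0.2, 0.5)]  # hypothetical operating points
print(improving_randomization(candidates, pd0=0.6, pfa0=0.2))  # None
print(improving_randomization(candidates, pd0=0.5, pfa0=0.2))  # ((0.9, 0.4), (0.3, 0.05))
```

Cross-multiplying avoids dividing by small differences and keeps the test a single comparison per candidate pair.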

Appendix B

| Algorithm B1. Optimal noise solution to maximize zd. |
|---|
| while |
| if |
| else |
| end |
| end |

Note that the parameter in Algorithm B1 is a small positive constant close to zero, which is used to ensure the accuracy of the algorithm.

| Algorithm B2. Optimal noise solution to maximize zfa. |
|---|
| while |
| if |
| else |
| end |
| end |
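Algorithms B1 and B2 are listed above only as control-flow skeletons, so the following Python sketch shows a simple exhaustive alternative rather than the authors' algorithms. In line with Theorems 1 and 2, it enumerates single pairs and all two-pair randomizations, computes the interval of admissible probabilities for each two-pair candidate, and evaluates the end points of that interval. It assumes that z_d increases with P_D^y and z_fa increases as P_FA^y decreases, so maximizing P_D^y (or minimizing P_FA^y) under the two constraints yields the same solution. `EPS` plays a role similar to the small positive parameter of Algorithm B1, and all names and values are hypothetical.

```python
from itertools import combinations
from typing import List, Optional, Tuple

Pair = Tuple[float, float]   # (pd, pfa) of one detector/discrete-vector pair
EPS = 1e-12                  # assumed small tolerance for floating-point comparisons


def _restrict(lo: float, hi: float, c: float, d: float) -> Optional[Tuple[float, float]]:
    """Intersect [lo, hi] with {lam : c + d * lam >= -EPS}."""
    if d > EPS:
        lo = max(lo, (-EPS - c) / d)
    elif d < -EPS:
        hi = min(hi, (-EPS - c) / d)
    elif c < -EPS:
        return None
    return (lo, hi) if lo <= hi else None


def best_randomization(pairs: List[Pair], pd0: float, pfa0: float, goal: str = "pd"):
    """Search single pairs and two-pair randomizations meeting PD >= pd0, PFA <= pfa0.

    goal="pd" maximizes PD (the aim of Algorithm B1); goal="pfa" minimizes PFA
    (the aim of Algorithm B2). Returns (pd, pfa, [(index, probability), ...]) or None.
    """
    if goal not in ("pd", "pfa"):
        raise ValueError("goal must be 'pd' or 'pfa'")
    score = (lambda pd, pfa: pd) if goal == "pd" else (lambda pd, pfa: -pfa)
    best = None

    def consider(pd: float, pfa: float, mix) -> None:
        nonlocal best
        if best is None or score(pd, pfa) > score(best[0], best[1]) + EPS:
            best = (pd, pfa, mix)

    for i, (pd, pfa) in enumerate(pairs):
        if pd >= pd0 - EPS and pfa <= pfa0 + EPS:
            consider(pd, pfa, [(i, 1.0)])
    for i, j in combinations(range(len(pairs)), 2):
        a, b = pairs[i], pairs[j]  # lam is the probability of pair i
        iv = _restrict(0.0, 1.0, b[0] - pd0, a[0] - b[0])          # PD constraint
        if iv is not None:
            iv = _restrict(*iv, pfa0 - b[1], b[1] - a[1])          # PFA constraint
        if iv is None:
            continue
        for lam in iv:  # PD and PFA are linear in lam, so an end point is optimal
            consider(lam * a[0] + (1 - lam) * b[0], lam * a[1] + (1 - lam) * b[1],
                     [(i, lam), (j, 1 - lam)])
    return best


pairs = [(0.5, 0.2), (0.9, 0.4), (0.3, 0.05)]  # hypothetical; pair 0 is the original
print(best_randomization(pairs, pd0=0.5, pfa0=0.2, goal="pd"))
print(best_randomization(pairs, pd0=0.5, pfa0=0.2, goal="pfa"))
```

The search is quadratic in the number of pairs, which is acceptable for the small candidate sets used in examples; the tolerance slightly relaxes both constraints so that boundary solutions are not lost to rounding.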

References

- Benzi, R.; Sutera, A.; Vulpiani, A. The mechanism of stochastic resonance. J. Phys. A Math. Gen. 1981, 14, 453–457.

- Gammaitoni, L.; Hanggi, P.; Jung, P.; Marchesoni, F. Stochastic resonance. Rev. Mod. Phys. 1998, 70, 223–287.

- Xu, B.; Li, J.; Zheng, J. How to tune the system parameters to realize stochastic resonance. J. Phys. A Math. Gen. 2003, 36, 11969–11980.

- Rousseau, D.; Chapeau-Blondeau, F. Stochastic resonance and improvement by noise in optimal detection strategies. Digit. Signal Process. 2005, 15, 19–32.

- Patel, A.; Kosko, B. Noise benefits in quantizer-array correlation detection and watermark decoding. IEEE Trans. Signal Process. 2011, 59, 488–505.

- Chen, H.; Varshney, L.R.; Varshney, P.K. Noise-enhanced information systems. Proc. IEEE 2014, 102, 1607–1621.

- Chapeau-Blondeau, F. Input-output gains for signal in noise in stochastic resonance. Phys. Lett. A 1997, 232, 41–48.

- Chapeau-Blondeau, F. Periodic and aperiodic stochastic resonance with output signal-to-noise ratio exceeding that at the input. Int. J. Bifurc. Chaos 1999, 9, 267–272.

- Gingl, Z.; Makra, P.; Vajtai, R. High signal-to-noise ratio gain by stochastic resonance in a double well. Fluct. Noise Lett. 2001, 1, L181–L188.

- Makra, P.; Gingl, Z. Signal-to-noise ratio gain in non-dynamical and dynamical bistable stochastic resonators. Fluct. Noise Lett. 2002, 2, L145–L153.

- Makra, P.; Gingl, Z.; Fulei, T. Signal-to-noise ratio gain in stochastic resonators driven by coloured noises. Phys. Lett. A 2003, 317, 228–232.

- Stocks, N.G. Suprathreshold stochastic resonance in multilevel threshold systems. Phys. Rev. Lett. 2000, 84, 2310–2313.

- Mitaim, S.; Kosko, B. Adaptive stochastic resonance in noisy neurons based on mutual information. IEEE Trans. Neural Netw. 2004, 15, 1526–1540.

- Kay, S. Can detectability be improved by adding noise? IEEE Signal Process. Lett. 2000, 7, 8–10.

- Chen, H.; Varshney, P.K.; Kay, S.M.; Michels, J.H. Theory of the stochastic resonance effect in signal detection: Part I—Fixed detectors. IEEE Trans. Signal Process. 2007, 55, 3172–3184.

- Chen, H.; Varshney, P.K. Theory of the stochastic resonance effect in signal detection: Part II—Variable detectors. IEEE Trans. Signal Process. 2008, 56, 5031–5041.

- Chen, H.; Varshney, P.K.; Kay, S.; Michels, J.H. Noise enhanced nonparametric detection. IEEE Trans. Inf. Theory 2009, 55, 499–506.

- Patel, A.; Kosko, B. Optimal noise benefits in Neyman–Pearson and inequality-constrained signal detection. IEEE Trans. Signal Process. 2009, 57, 1655–1669.

- Bayram, S.; Gezici, S. Stochastic resonance in binary composite hypothesis-testing problems in the Neyman–Pearson framework. Digit. Signal Process. 2012, 22, 391–406.

- Bayram, S.; Gultekin, S.; Gezici, S. Noise enhanced hypothesis-testing according to restricted Neyman–Pearson criterion. Digit. Signal Process. 2014, 25, 17–27.

- Bayram, S.; Gezici, S.; Poor, H.V. Noise enhanced hypothesis-testing in the restricted Bayesian framework. IEEE Trans. Signal Process. 2010, 58, 3972–3989.

- Bayram, S.; Gezici, S. Noise enhanced M-ary composite hypothesis-testing in the presence of partial prior information. IEEE Trans. Signal Process. 2011, 59, 1292–1297.

- Kay, S.; Michels, J.H.; Chen, H.; Varshney, P.K. Reducing probability of decision error using stochastic resonance. IEEE Signal Process. Lett. 2006, 13, 695–698.

- Bayram, S.; Gezici, S. Noise-enhanced M-ary hypothesis-testing in the minimax framework. In Proceedings of the 3rd International Conference on Signal Processing and Communication Systems (ICSPCS), Omaha, NE, USA, 28–30 September 2009.

- Liu, S.J.; Yang, T.; Zhang, X.Z.; Hu, X.P.; Xu, L.P. Noise enhanced binary hypothesis-testing in a new framework. Digit. Signal Process. 2015, 41, 22–31.

- Choudhary, A.; Kohar, V.; Sinha, S. Noise enhanced activity in a complex network. Eur. Phys. J. B 2014, 87, 1–8.

- Kohar, V.; Sinha, S. Noise-assisted morphing of memory and logic function. Phys. Lett. A 2012, 376, 957–962.

- Pericchi, L.; Pereira, C. Adaptative significance levels using optimal decision rules: Balancing by weighting the error probabilities. Braz. J. Probab. Stat. 2016, 30, 70–90.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).