Abstract

A class of complex self-organizing systems subjected to fluctuations of environmental or intrinsic origin and to nonequilibrium constraints in the form of an external periodic forcing is analyzed from the standpoint of information theory. Conditions under which the response of information entropy and related quantities to the nonequilibrium constraint can be optimized via a stochastic resonance-type mechanism are identified, and the role of key parameters is assessed.

1. Introduction

One of the principal features of complex self-organizing systems is the multitude of a priori available states [1]. This confers on their evolution an element of unexpectedness, reflected by the ability to choose among several outcomes and the concomitant difficulty for an observer to localize the actual state in state space. This is reminiscent of a central problem of information and communication theories [2], namely how to recognize a particular signal blurred by noise among the multitude of signals emitted by a source.

The connection between self-organization and information finds its origin in the pioneering works of Haken and Nicolis [3,4]. In the present work, we explore this connection in a class of multistable systems subjected to stochastic variability generated by fluctuations of intrinsic or environmental origin, as well as to a systematic nonequilibrium constraint in the form of a weak external periodic forcing. As is well known, stochasticity typically induces transitions between the states [5]. Furthermore, under appropriate conditions, one witnesses sharp, stochasticity-induced amplification of the response to the periodic forcing, referred to as stochastic resonance [6]. Our objective is to relate these phenomena to information processing.

A general formulation of the stochastic dynamics in the presence of a periodic forcing for multistable systems involving one variable is presented in Section 2, where, building on previous work by one of the present authors [7], the classical linear response theory of stochastic resonance in bistable systems is extended to the case of an arbitrary number of simultaneously stable states. In Section 3, a set of entropy-like quantities characterizing the complexity, variability and predictability of the system viewed as an information processor is introduced. Their dynamics, as induced by the dynamics of the underlying multistable system, is analyzed in Section 4. It is shown that by varying some key parameters the system can attain states of optimal response and predictability. The main conclusions are summarized in Section 5.

2. Self-Organization and Stochastic Resonance in a Periodically-Forced Multistable System

Consider a one-variable nonlinear system subjected to additive periodic and stochastic forcings. The evolution of such a system can be cast in a potential form [1,5],

where x is the state variable and the stochastic forcing is assimilated to a Gaussian white noise of variance ,

We decompose the generalized potential U as:

Here, is the potential in the absence of the periodic forcing, and ϵ, ω stand for the amplitude and frequency of the forcing, respectively. In the classical setting of stochastic resonance, the unforced potential possesses two minima (associated with two stable steady states of the system) separated by a maximum. In the present work, this setting is extended by allowing for the existence of an arbitrary number n of stable steady states and, thus, for an unforced potential possessing n minima separated by intermediately situated maxima. Furthermore, we stipulate that the leftmost and rightmost minima, 1 and n, are separated from the environment by impermeable boundaries, such that there are no probability fluxes directed from these states to the environment [7].
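As an illustration of the kind of dynamics described here, the following minimal sketch integrates a periodically forced, noisy one-variable system in the classical bistable setting with the Euler–Maruyama scheme. Everything specific in it is an assumption made for the example and is not taken from the text: the quartic double-well potential x⁴/4 − x²/2, the forcing entering the drift as ϵ sin(ωt), the noise convention ⟨F(t)F(t′)⟩ = 2D δ(t − t′), and all parameter values and names.

```python
import numpy as np

def simulate(eps=0.1, omega=0.05, D=0.1, dt=0.01, steps=20000, x0=-1.0, seed=0):
    """Euler-Maruyama integration of dx/dt = -U0'(x) + eps*sin(omega*t) + F(t).

    Assumed (hypothetical) ingredients: U0(x) = x**4/4 - x**2/2 and a
    Gaussian white noise F with <F(t)F(t')> = 2*D*delta(t - t').
    """
    rng = np.random.default_rng(seed)
    x = np.empty(steps + 1)
    x[0] = x0
    # Pre-draw the Gaussian increments; each has variance 2*D*dt.
    kicks = rng.standard_normal(steps) * np.sqrt(2.0 * D * dt)
    for m in range(steps):
        t = m * dt
        # Deterministic drift: minus the gradient of U0 plus the periodic forcing.
        drift = -(x[m] ** 3 - x[m]) + eps * np.sin(omega * t)
        x[m + 1] = x[m] + drift * dt + kicks[m]
    return x

trajectory = simulate()
```

Plotting `trajectory` against time would typically show small-scale fluctuations around one minimum interrupted by occasional jumps to the other, which is the composite motion the discrete-state description is meant to capture.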

A simple implementation of this setting amounts to choosing in such a way that the successive minima and maxima are equidistant and of equal depth and height, respectively. These conditions become increasingly difficult to fulfill for increasing n if has a polynomial form. For the sake of simplicity, we will therefore adopt the following model for :

with stable and unstable states located respectively at:

Equations (1) and (2) describe a composite motion consisting of a combination of small-scale diffusion around each of the stable states and of large-scale transitions between neighboring stable states across the intermediate unstable state. The latter is an activated process whose rate depends sensitively on the potential barrier (cf. Equation (2b)):

As long as the noise is sufficiently weak in the sense of , the characteristic time scale of this motion is much slower than the characteristic time of diffusion around a given stable state, the corresponding rate being given by Kramers’ formula [1,5],

where the accents denote derivatives with respect to x.
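For orientation, the standard overdamped Kramers rate can be evaluated numerically as sketched below. The noise convention is an assumption of this example: the white noise is taken to have correlation 2D δ(t − t′), so that the exponent reads −ΔU/D; with a different definition of the noise variance the exponent rescales accordingly. The function name, its arguments and the double-well numbers are hypothetical.

```python
import math

def kramers_rate(curv_min, curv_max, barrier, D):
    """Overdamped Kramers escape rate.

    curv_min: U''(x) at the stable state (positive)
    curv_max: U''(x) at the neighboring unstable state (negative)
    barrier:  potential difference between the maximum and the minimum
    D:        noise intensity, assuming <F(t)F(t')> = 2*D*delta(t - t')
    """
    prefactor = math.sqrt(curv_min * abs(curv_max)) / (2.0 * math.pi)
    return prefactor * math.exp(-barrier / D)

# Hypothetical example: U(x) = x**4/4 - x**2/2 has U''(+-1) = 2, U''(0) = -1
# and a barrier of 1/4 between each minimum and the maximum at x = 0.
rate = kramers_rate(curv_min=2.0, curv_max=-1.0, barrier=0.25, D=0.05)
rate_weaker_noise = kramers_rate(curv_min=2.0, curv_max=-1.0, barrier=0.25, D=0.025)
```

Halving the noise intensity in this example multiplies the rate by exp(−5), which illustrates how sensitively the transition rate depends on the ratio of barrier height to noise strength.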

Placing ourselves in this limit, we can map Equation (1) into a discrete state process [5,7] describing the transfer of probability masses contained in the attraction basins of the stable states i ():

The corresponding kinetic equations read [7]:

where is the conditional probability per unit time to reach state i starting from state j. The transfer operator T appearing in this equation is a tridiagonal matrix satisfying the normalization condition , whose structure can be summarized as follows:

- Elements along the principal diagonal:

- Elements along the upper sub-diagonal:

- Elements along the lower sub-diagonal:

The rate constants can be evaluated from Equations (4a) and (4b),

with:

Equation (5) constitutes a linear system with time-periodic coefficients. In what follows, we focus on the linear response, which will provide us with both qualitative and quantitative insights into the role of the principal parameters involved in the problem.

The starting point is to expand Equation (7a) in ϵ,

with:

This induces a decomposition of the transfer operator T and of the probability vector in Equation (5) in the form:

Here, and Δ are again tridiagonal matrices with elements given by Equations (6a)–(6c), where are replaced by and , respectively. is the invariant probability in absence of the periodic forcing and the forcing-induced response. Notice that and are normalized to unity and to zero, respectively. Furthermore, since in absence of the forcing all k’s are equal (cf. Equations (7c) and (3a)), the corresponding invariant probabilities are uniform, .

Substituting Equation (9) into Equation (5) and adopting for compactness a vector notation, we obtain to the first order in ϵ:

The solution of this equation in the long time limit is of the form:

where the components and of and determine the amplitudes and phases of the ’s with respect to the periodic forcing,

Substituting Equation (11) into Equation (10) and identifying the coefficients of and , one obtains following the lines of [7] the following explicit expressions of and ,

where is given by:

and is the value of the unperturbed rates .

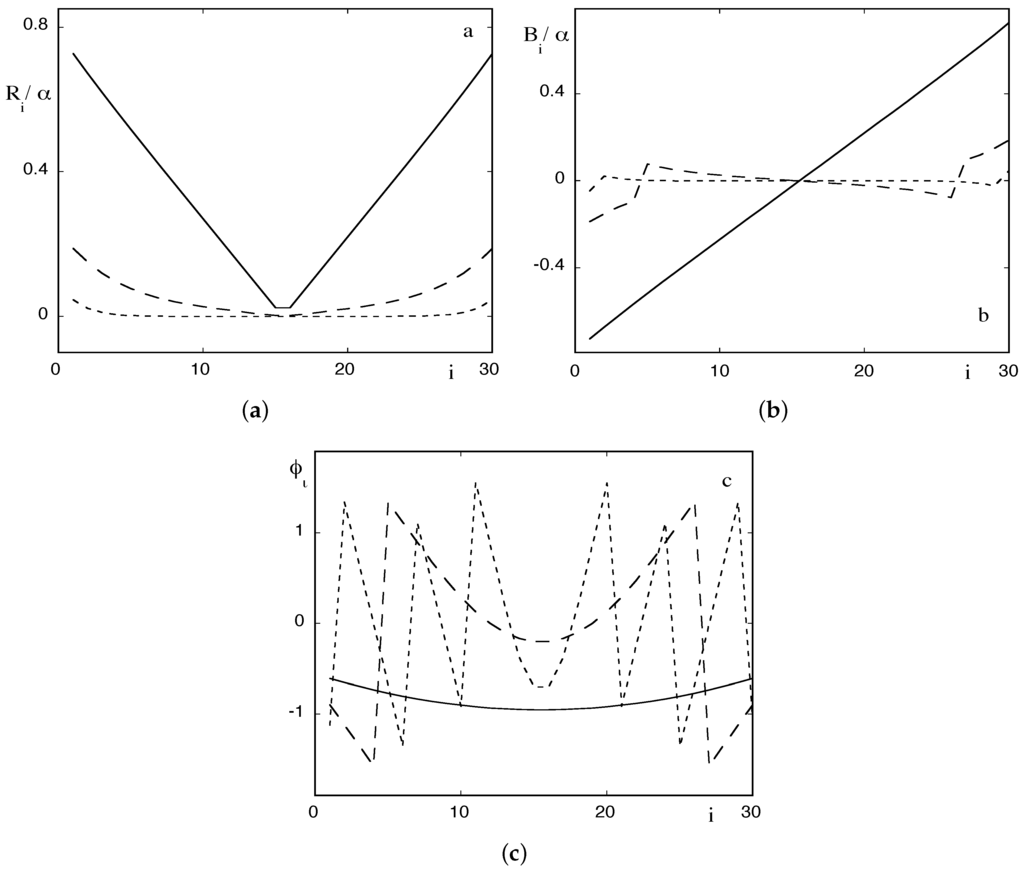

Figure 1a–c depicts the maxima and the phases of as a function of i, keeping n and fixed as obtained by numerical evaluation of the analytic expressions (12). The plot of the coefficient as a function of i in Figure 1b shows that this coefficient undergoes several changes in sign. This entails that the corresponding response (Equation (11b)) acquires an additional phase shift of π in regions where is negative. As can be seen, the maximal response is obtained for the boundary states 1 and n. This is due to the fact that, while the intermediate states are depleted by transferring probability masses to both of their neighbors, the depletion is asymmetric for the boundary states. Furthermore, for a given noise strength, the response is more pronounced in the range of low frequencies, as expected in stochastic resonance. Note that for n odd, the response in the middle state is strictly zero. Finally, varying the noise strength for fixed ω provides an optimal value for which the amplitude of the response is maximized.
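The qualitative features just described can be reproduced with a small numerical sketch of the linear response of a nearest-neighbor chain. It does not evaluate the analytic expressions (12); instead it solves the first-order problem dδp/dt = T0 δp + b sin(ωt) directly by inserting δp = A cos(ωt) + B sin(ωt). The conventions are assumptions of the example: `T0[i, j]` is the rate from state j to state i, all unperturbed rates equal `k0`, the ends are no-flux, and the forcing is taken to multiply rightward rates by (1 + g sin ωt) and leftward rates by (1 − g sin ωt), where `g` is a hypothetical dimensionless tilt of first order in ϵ.

```python
import numpy as np

def reference_operator(n, k0):
    """Tridiagonal operator T0 (dp/dt = T0 @ p) with equal rates and no-flux ends."""
    T0 = np.zeros((n, n))
    for i in range(n - 1):
        # Jump i -> i+1
        T0[i + 1, i] += k0
        T0[i, i] -= k0
        # Jump i+1 -> i
        T0[i, i + 1] += k0
        T0[i + 1, i + 1] -= k0
    return T0

def linear_response(n, k0, omega, g=1.0):
    """Long-time first-order response delta_p = A*cos(omega*t) + B*sin(omega*t)."""
    T0 = reference_operator(n, k0)
    # Source term: perturbed operator acting on the uniform state 1/n.
    # Under the assumed tilt it is nonzero only at the two boundary states.
    b = np.zeros(n)
    b[0] = -2.0 * k0 * g / n
    b[-1] = 2.0 * k0 * g / n
    # Matching cos and sin coefficients gives
    #   omega*B = T0 @ A   and   -omega*A = T0 @ B + b,
    # hence (T0 @ T0 + omega**2 * I) A = -omega * b.
    A = np.linalg.solve(T0 @ T0 + omega**2 * np.eye(n), -omega * b)
    B = T0 @ A / omega
    return A, B, np.hypot(A, B)

A, B, amplitude = linear_response(n=5, k0=1.0, omega=0.1)
```

For n = 5 in this sketch, `amplitude` is largest at the two boundary states, decreases toward the interior and vanishes in the middle state, while `A` and `B` each sum to zero so that normalization is preserved.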

Figure 1.

Amplitude scaled by : (a) of the response (Equation (11c)); (b) of the coefficient (Equation (12)); and (c) the behavior of the phase (Equation (11d)) in the case of coexisting stable states for different values of the ratio = 0.01 (full lines), 0.1 (dashed lines) and 1 (dotted lines).

3. Multistability, Information Entropy, Information Production and Information Transfer

In this section, we introduce a set of quantities serving as measures of the choice and unexpectedness associated with self-organization and, in particular, with the multiplicity of available states of the system introduced in Section 2. We start with information (Shannon) entropy [1,2,3,4]:

where the probabilities of the various states are defined by Equations (5), (9b) and (11). As a reference, we notice that in the absence of the nonequilibrium constraint provided by the external forcing, the probabilities are uniform, each being equal to 1/n, and in this state of full randomness the information entropy attains its maximum value:

The deviation from full randomness, and thus, the ability to reduce errors, is conveniently measured by the redundancy:

We come next to the link with dynamics. Differentiating both sides of Equation (14) with respect to time and utilizing Equation (5), we obtain a balance equation for the rate of change of .

Setting:

we can rewrite this equation in the more suggestive form:

Writing:

we finally obtain:

where the information entropy production and the associated flux are defined by [8,9]:

We notice the bilinear structure of the information entropy production, in which the factors within the sum can be viewed as generalized (probability) fluxes and their associated generalized forces. This is reminiscent of the expression of entropy production of classical irreversible thermodynamics [10]. As a reference, in the state of equipartition realized in the absence of the external forcing, one has uniform probabilities and equal transition rates, and the information entropy production vanishes along with all individual generalized fluxes and forces. This property of detailed balance, characteristic of thermodynamic equilibrium, breaks down in the presence of the forcing, which introduces a differentiation in the probabilities and an asymmetry in the transition rates. The information entropy production therefore measures the distance between equilibrium and nonequilibrium on the one side and between direct (i to j) and reverse (j to i) processes on the other. In this latter context, it is also closely related to the Kullback information.
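The flux-times-force structure can be made concrete with a short sketch. It uses the standard form of the entropy production of a continuous-time Markov process, a sum over pairs of states of (flux) × ln(forward/backward); that this coincides term by term with the definition adopted here is an assumption of the example, as are the function names and the rate values. `K[i, j]` denotes the rate from state j to state i.

```python
import numpy as np

def entropy_production(K, p):
    """Sum over pairs i < j of (K_ij p_j - K_ji p_i) * ln(K_ij p_j / (K_ji p_i))."""
    n = len(p)
    sigma = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            forward = K[i, j] * p[j]   # probability flow j -> i
            backward = K[j, i] * p[i]  # probability flow i -> j
            if forward > 0.0 and backward > 0.0:
                # Generalized flux times generalized force; never negative.
                sigma += (forward - backward) * np.log(forward / backward)
    return sigma

def chain_rates(n, k_right, k_left):
    """Nearest-neighbor rates: k_right for i -> i+1, k_left for i+1 -> i."""
    K = np.zeros((n, n))
    for i in range(n - 1):
        K[i + 1, i] = k_right
        K[i, i + 1] = k_left
    return K

p_uniform = np.full(3, 1.0 / 3.0)
sigma_balanced = entropy_production(chain_rates(3, 1.0, 1.0), p_uniform)
sigma_biased = entropy_production(chain_rates(3, 1.2, 0.8), p_uniform)
```

With equal rates and uniform probabilities every pair satisfies detailed balance and `sigma_balanced` is zero; biasing the rates while keeping the probabilities uniform makes each pair contribute a positive term to `sigma_biased`.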

Finally, in an information theory perspective, one is led to consider the information transfer between a part of the system playing the role of “transmitting set” X and a “receiver set” Y separated by a “noisy channel” [2,4]. In the dynamical perspective developed in this work, the analogs are two states, say i and j, and a conditional probability matrix, . The information transfer is then simply the sum of the Shannon entropy and the Kolmogorov–Sinai entropy [1,4]:

To relate h to the quantities governing the evolution of our multistable system, we need to relate the transition probabilities to the transition rates (probabilities per unit time) featured in Equations (6) and (17). This in turn requires mapping the continuous-time process of the previous section to a discrete-time Markov chain. To this end, we introduce the discretized expression of the time derivative in Equation (5), utilize Equation (17) and choose the time step as a fraction of the Kramers time associated with the passage over the potential barriers as discussed in Section 2:

This leads to the discrete master-type equation:

where the stochastic matrix W is defined by:

Introducing again as a reference the state in the absence of the forcing, one sees straightforwardly that , and for , for and . Expression (19) reduces then to:

where the two terms on the right-hand side account, respectively, for the contributions of the intermediate states and of the boundary states 1 and n.
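The split into intermediate-state and boundary-state contributions can be checked numerically with the sketch below. It uses the standard Kolmogorov–Sinai entropy of a stationary Markov chain, h = −Σ_i p_i Σ_j W_ij ln W_ij, with the row-stochastic convention `W[i, j]` = probability of going from i to j in one time step (an assumption of this example, since the convention of the text may be the transpose). The hopping probability `a`, standing for the product of the unperturbed rate and the time step, and the value n = 6 are hypothetical.

```python
import numpy as np

def ks_entropy(W, p):
    """h = -sum_i p_i sum_j W[i, j] * ln W[i, j] for a row-stochastic matrix W."""
    safe = np.where(W > 0.0, W, 1.0)  # ln(1) = 0 where W vanishes
    return float(-np.sum(p[:, None] * W * np.log(safe)))

def reference_chain(n, a):
    """Forcing-free chain: hop to each available neighbor with probability a."""
    W = np.zeros((n, n))
    for i in range(n):
        if i > 0:
            W[i, i - 1] = a
        if i < n - 1:
            W[i, i + 1] = a
        W[i, i] = 1.0 - W[i].sum()  # probability of staying put
    return W

n, a = 6, 0.1
W = reference_chain(n, a)
p0 = np.full(n, 1.0 / n)  # uniform invariant distribution of the reference chain

h_numeric = ks_entropy(W, p0)
# Closed form: n - 2 intermediate states with two exits, 2 boundary states with one.
h_interior = -(2.0 * a * np.log(a) + (1.0 - 2.0 * a) * np.log(1.0 - 2.0 * a))
h_boundary = -(a * np.log(a) + (1.0 - a) * np.log(1.0 - a))
h_closed = ((n - 2) * h_interior + 2.0 * h_boundary) / n
```

In this sketch `h_numeric` and `h_closed` agree, and the weights (n − 2)/n and 2/n make explicit how the relative importance of the boundary states decreases as the number of states grows.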

It is worth noting that for n even, the Markov chain associated with the forcing-free system is lumpable [11], in the sense that upon grouping the original states, one can reduce Equation (21) into a system of just two states a and b, with and . In other words, in the absence of the nonequilibrium constraint, the intermediate states play no role. The presence of the forcing will change this situation radically by inducing non-trivial correlations and information exchanges within the system.

4. Nonequilibrium Dynamics of Information and Stochastic Resonance

Our next step is to evaluate the quantities introduced in the preceding section in the presence of the nonequilibrium constraint provided by the external forcing with emphasis on the roles of key parameters, such as forcing amplitude and frequency, noise strength and number of states.

4.1. Information Entropy and Redundancy

Substituting Equation (9b) into Equation (14) and using the fact that the forcing-induced response is normalized to zero, one sees straightforwardly that the first-order contributions in ϵ to the information entropy cancel identically. Keeping the first non-trivial (i.e., second-order in ϵ) parts, one obtains:

where:

and:

Using Equation (11b) for the forcing-induced response, we may further decompose the excess entropy into its time-averaged part and a periodic modulation around the average:

where the effective amplitude and phase of the modulation are expressed in terms of and .

The evaluation of the redundancy, Equation (16), follows straightforwardly from that of , leading to:

where is given by Equations (24c) and (25).
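The second-order expansion underlying this subsection can be verified numerically. For probabilities 1/n + δ_i with Σ_i δ_i = 0, expanding −Σ p ln p around the uniform state gives an excess entropy of −(n/2) Σ_i δ_i² to leading order, the linear term dropping out by normalization. The sketch below compares this with the exact value; the natural logarithm, the definition of the redundancy as 1 − S/ln n and the particular perturbation are assumptions made for the example.

```python
import numpy as np

def shannon_entropy(p):
    """Information entropy -sum_i p_i ln p_i (natural logarithm assumed)."""
    p = np.asarray(p, dtype=float)
    nonzero = p[p > 0.0]
    return float(-np.sum(nonzero * np.log(nonzero)))

def redundancy(p):
    """Assumed definition: 1 - S / S_max, with S_max = ln n."""
    return 1.0 - shannon_entropy(p) / np.log(len(p))

n = 5
# Hypothetical small deviation from uniformity; its components sum to zero.
delta = 1e-3 * np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
p = 1.0 / n + delta

excess_exact = shannon_entropy(p) - np.log(n)
excess_quadratic = -0.5 * n * np.sum(delta**2)
redundancy_exact = redundancy(p)
```

Here `excess_exact` and `excess_quadratic` agree to within terms of fourth order in the deviation, the excess entropy is negative and the redundancy positive, which is the sign pattern expected from a reduction of randomness.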

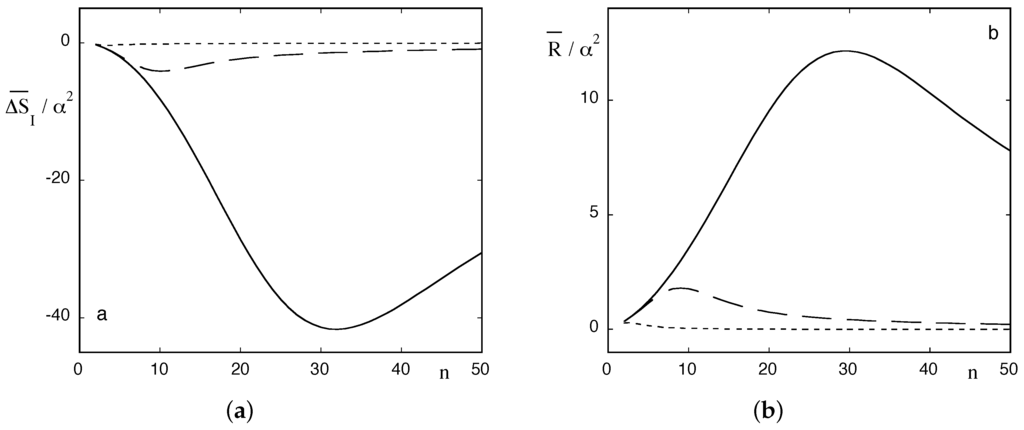

In Figure 2a,b, the time-averaged excess entropy and redundancy are plotted as a function of the number n of states using Equations (11)–(13). In all cases, the excess entropy is negative and the redundancy positive, reflecting the enhancement of predictability induced by the nonequilibrium constraint. Furthermore, and similarly to Figure 1a, for a given noise strength, the enhancement is more pronounced in the range of low frequencies ω and is further amplified when the conditions of stochastic resonance are met. Interestingly, for given noise strength and ω, the enhancement exhibits a clear-cut extremum for a particular value of the number of states. This unexpected result suggests that, to optimize its function, our multistable system, viewed as an information processor, should preferably be endowed with a number of states (essentially a “variety”) that is neither too small nor too large. Finally, in Figure 3, the time evolution of the full excess entropy in the low-frequency range is plotted using Equations (11)–(13) and (25).

Figure 2.

(a) Time-averaged excess entropy (Equation (25a)) and (b) redundancy (Equation (26)) as a function of the number n of states present for different values of the ratio = 0.01 (full lines), 0.1 (dashed lines) and 1 (dotted lines). Normalization parameter α as in Figure 1.

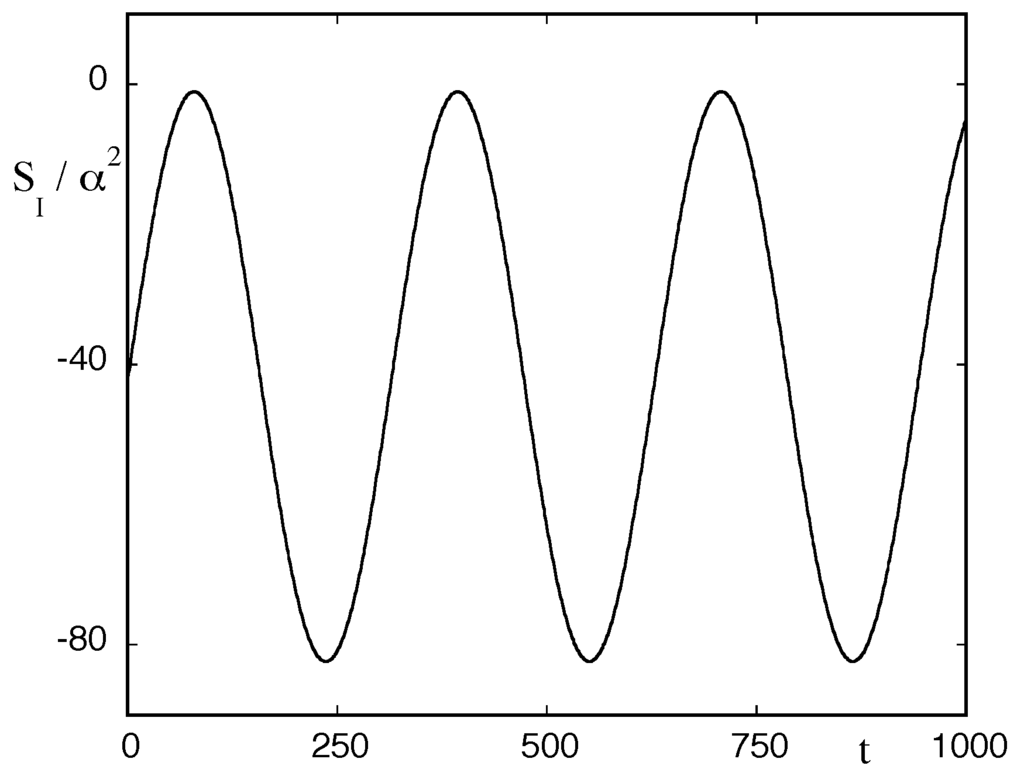

Figure 3.

Time evolution of the full excess entropy (Equation (24c)) in the case of coexisting states with = 0.01, corresponding to the minimum of the full line of Figure 2a.

4.2. Information Entropy Production

Our starting point is Equation (18b). We have shown in the preceding section that the zeroth-order part of the information entropy production vanishes, since it corresponds to a state where detailed balance holds. To obtain the first non-trivial contribution, we therefore need to expand both the probabilities and the transition rates in the forcing amplitude ϵ. Actually, since each of the two factors in the expression of the information entropy production vanishes for ϵ = 0, it suffices to take each of them to first order in ϵ in order to obtain the dominant, second-order contribution. Substituting Equation (9b) along with the analogous expressions for the transition rates:

where and , we obtain:

where is given by Equations (11)–(13) and (see Equations (6), (7) and (17)):

Taking the time average over a period of the forcing and denoting for compactness sign , one finally obtains:

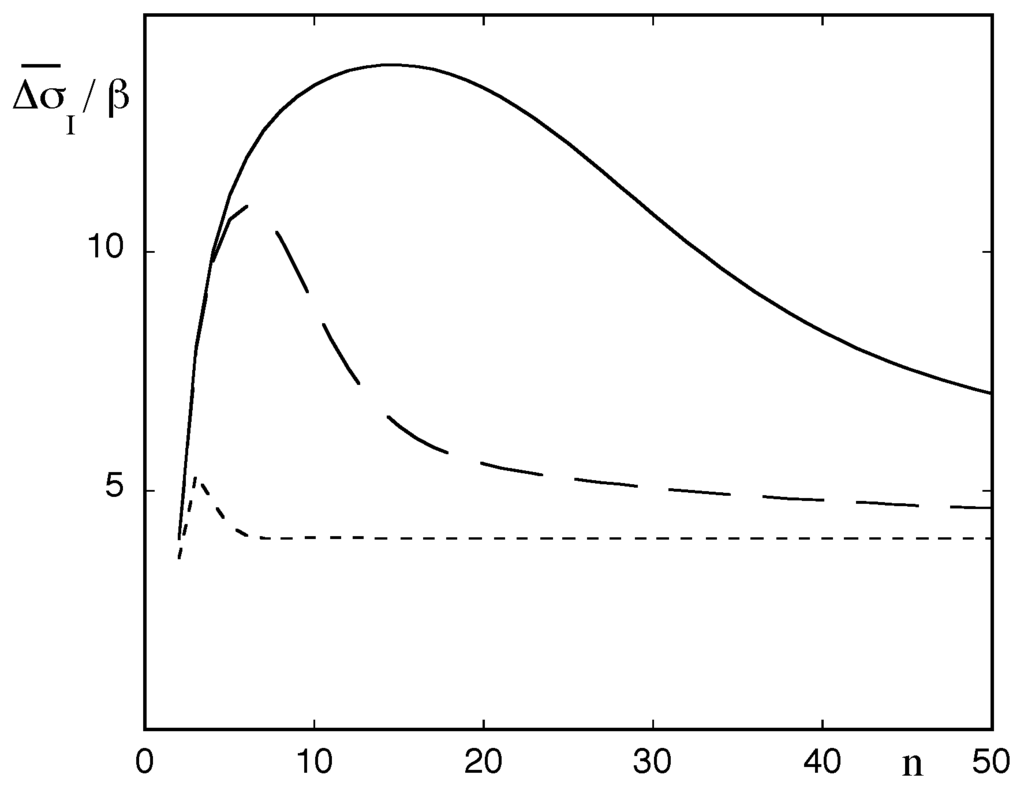

Figure 4 depicts the scaled, time-averaged information entropy production as a function of the number of states n for different values of the forcing frequency. We observe a trend similar to that of Figure 2a,b: an enhancement under nonequilibrium conditions near stochastic resonance for an optimal number of intermediate states and practically no effect of the nonequilibrium constraint for higher frequencies. Since the time average of the rate of change of the information entropy in Equation (18a) is necessarily zero, it follows that the information flux (Equation (18c)) will display a similar behavior albeit with an opposite sign, i.e., a pronounced dip for low frequencies and for an optimal number of states. In a sense, the excess information produced remains confined within the system at the expense of a negative excess information flux, in much the same way as in the entropy balance of classical irreversible thermodynamics [10].

Figure 4.

Information entropy production averaged over the period of the forcing (Equation (30)), scaled by as a function of the number of states n present and for different values of the ratio = 0.01 (full line), 0.1 (dashed line) and 1 (dotted line).

4.3. Information Transfer and Kolmogorov–Sinai Entropy

We begin by decomposing and in Equation (19) into a reference part and a deviation arising from the presence of the nonequilibrium constraint:

where and the ’s have been evaluated in Section 3. Using Equations (7), (8) and (22), one can establish the following properties:

- vanish for the intermediate states .

- The contributions of coming from second-order terms in the expansion of Equation (7a) in powers of ϵ do not contribute up to order to , which can therefore be limited for our purposes to its first order in ϵ part .

- , and satisfy the symmetry relations:

Substituting into Equation (19) and using the symmetry property along with the normalization condition , one obtains after some straightforward manipulations:

with:

Taking the average of Equation (33) over a period of the forcing leads to the following expression for the mean excess Kolmogorov–Sinai entropy:

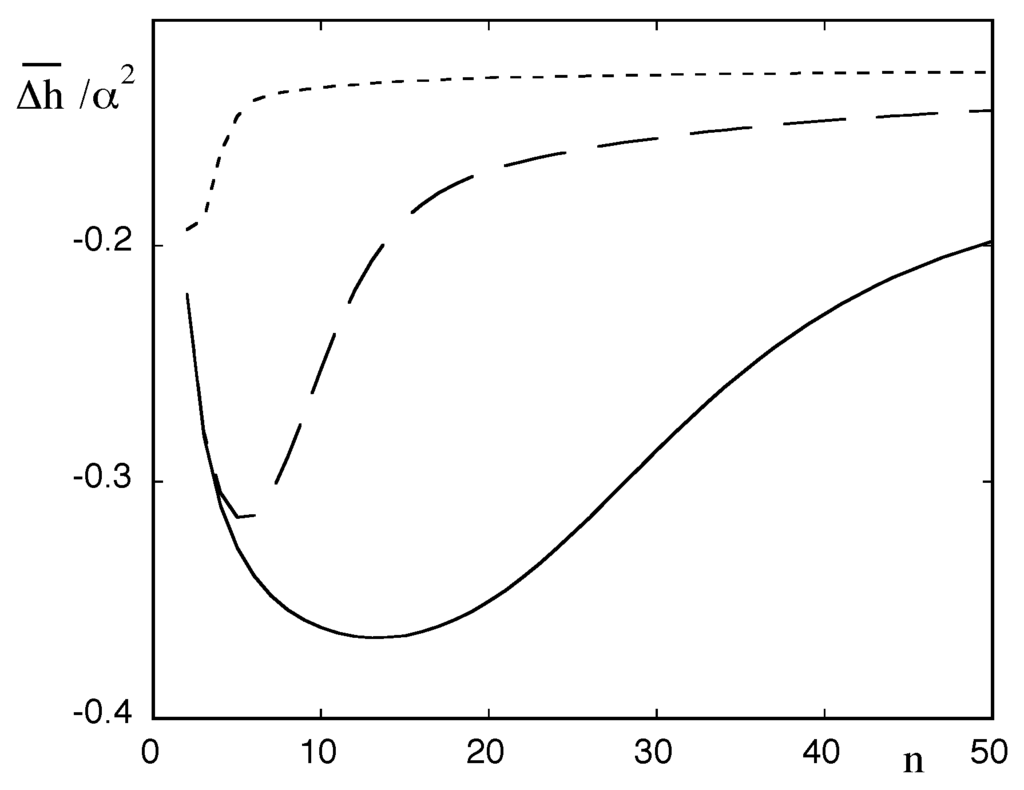

In Figure 5, the dependence of the scaled mean excess Kolmogorov–Sinai entropy on the number of states is plotted for various values of the forcing frequency. We find a trend similar to the one in Figures 2–4, namely an optimal response for frequency values close to conditions of stochastic resonance and for a particular number of intermediate states. Its negative values reflect the reduction of randomness (of which h is a characteristic measure) induced by the nonequilibrium constraint.

Figure 5.

Kolmogorov–Sinai entropy averaged over the period of the forcing (Equation (35)) as a function of the number of states n present and for different values of the ratio = 0.01 (full line), 0.1 (dashed line) and 1 (dotted line). Normalization parameter α as in Figure 1.

5. Conclusions

In this work, a nonlinear system subjected to a nonequilibrium constraint in the form of a periodic forcing, giving rise to complex behavior in the form of fluctuation-induced transitions between multiple steady states and of stochastic resonance, was considered. Mapping the dynamics into a discrete-state Markov process allowed us to view the system as an information processor. Subsequently, the link between the dynamics and, in particular, the self-organization induced by the nonequilibrium constraint, on the one side, and quantities of interest in information theory, on the other side, was addressed. It was shown that the nonequilibrium constraint leaves a clear-cut signature on these quantities by reducing randomness and by enhancing predictability, which is maximized under conditions of stochastic resonance. Of special interest is the a priori unexpected existence of an optimum for a particular number of simultaneously stable states suggesting the existence of optimal alphabets on which information is to be generated and transmitted.

In summary, it appears that when viewed in a dynamical perspective, generalized entropy-like quantities as used in information theory can provide useful characterizations of self-organizing systems led to choose among a multiplicity of possible outcomes. Conversely and in line with the pioneering work in [3,4], information constitutes in turn one of the basic attributes emerging out of the dynamics of wide classes of self-organizing systems and conveying to them their specificity.

Acknowledgments

This work is supported, in part, by the Science Policy Office of the Belgian Federal Government.

Author Contributions

The authors contributed equally to the definition of the subject and to the analytic and computational parts of the work. They jointly wrote the paper and read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Nicolis, G.; Nicolis, C. Foundations of Complex Systems, 2nd ed.; World Scientific: Singapore, 2012.

2. Ash, R.B. Information Theory; Dover: New York, NY, USA, 1990.

3. Haken, H. Information and Self-Organization; Springer: Berlin, Germany, 1988.

4. Nicolis, J.S. Chaos and Information Processing; World Scientific: Singapore, 1999.

5. Gardiner, C. Handbook of Stochastic Methods; Springer: Berlin, Germany, 1983.

6. Gammaitoni, L.; Hänggi, P.; Jung, P.; Marchesoni, F. Stochastic resonance. Rev. Mod. Phys. 1998, 70, 223–287.

7. Nicolis, C. Stochastic resonance in multistable systems: The role of intermediate states. Phys. Rev. E 2010, 82, 011139.

8. Luo, J.L.; van den Broeck, C.; Nicolis, G. Stability criteria and fluctuations around nonequilibrium states. Z. Phys. B 1984, 56, 165–170.

9. Gaspard, P. Time-reversed dynamical entropy and irreversibility in Markovian random processes. J. Stat. Phys. 2004, 117, 599–615.

10. De Groot, S.; Mazur, P. Nonequilibrium Thermodynamics; North Holland: Amsterdam, The Netherlands, 1962.

11. Kemeny, J.; Snell, J. Finite Markov Chains; Springer: Berlin, Germany, 1976.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).