Abstract

The rapid expansion of e-commerce has significantly influenced consumer purchasing behavior, making user reviews a critical source of product-related information. However, the large volume of low-quality and superficial reviews limits the ability to obtain reliable insights. This study aims to classify Turkish e-commerce reviews as either useful or useless, thereby highlighting high-quality content to support more informed consumer decisions. A dataset of 15,170 Turkish product reviews collected from major e-commerce platforms was analyzed using traditional machine learning approaches, including Support Vector Machines and Logistic Regression, and transformer-based models such as BERT and RoBERTa. In addition, a novel Multi-Transformer Fusion Framework (MTFF) was proposed by integrating BERT and RoBERTa representations through concatenation, weighted-sum, and attention-based fusion strategies. Experimental results demonstrated that the concatenation-based fusion model achieved the highest performance with an F1-score of 91.75%, outperforming all individual models. Among standalone models, Turkish BERT achieved the best performance (F1: 89.37%), while the BERT + Logistic Regression hybrid approach yielded an F1-score of 88.47%. The findings indicate that multi-transformer architectures substantially enhance classification performance, particularly for agglutinative languages such as Turkish. To improve the interpretability of the proposed framework, SHAP (SHapley Additive exPlanations) was employed to analyze feature contributions and provide transparent explanations for model predictions, revealing that the model primarily relies on experience-oriented and semantically meaningful linguistic cues. The proposed approach can support e-commerce platforms by automatically prioritizing high-quality and informative reviews, thereby improving user experience and decision-making processes.

1. Introduction

With the acceleration of digitalization, e-commerce has become one of the dominant forms of modern trade, significantly transforming consumer purchasing behavior. The widespread availability of the internet, increased mobile device usage, and advances in secure online payment systems have fundamentally reshaped traditional shopping habits. Today, consumers rely heavily on user-generated product reviews; however, the abundance of comments makes it difficult to locate informative and reliable content. Superficial expressions, such as “good,” “nice,” or “bad,” fail to address essential questions regarding product quality, usability, and limitations, rendering them useless from the consumer’s perspective. Therefore, identifying and highlighting useful reviews that provide meaningful insights has become a critical requirement for improving user experience. Addressing this need, the present study aims to classify Turkish e-commerce reviews as useful or useless.

Recent advancements in natural language processing (NLP) have enabled machine learning (ML) techniques to be effectively utilized in this field. Traditional algorithms, such as Support Vector Machines (SVM) and Logistic Regression (LR), have been widely applied in text classification tasks [1,2]. SVM, based on statistical learning theory, offers strong generalization capability in high-dimensional feature spaces and mitigates overfitting through margin optimization [1,3]. Methods for multi-class classification using SVM have been proposed in earlier studies [4], while other work has demonstrated high performance in detecting aggressive language in Turkish social media comments [2]. Further research has reported an accuracy of over 93% for cosmetic product sentiment classification using SVM [5], and additional studies have highlighted its superiority in multi-label e-commerce reviews [6]. Similarly, LR is frequently preferred due to its simplicity, interpretability, and robustness. The success of LR in classifying student arguments has been highlighted in the literature [7], and improved LR efficiency has been achieved through the Logistic Regression Matching Pursuit (LRMP) algorithm [8].

A paradigm shift in NLP has occurred with the introduction of transformer-based models, particularly Google’s Bidirectional Encoder Representations from Transformers (BERT), which has outperformed conventional methods across numerous tasks [9]. Strong results have been achieved using BERT–SVM hybrid architectures for short-text semantic matching [10], while multimodal transformers have proven effective across different data modalities [11]. The Robustly Optimized BERT Approach (RoBERTa), proposed to improve pre-training optimization, has shown further performance gains [12]. For morphologically rich and agglutinative languages such as Turkish, language-specific versions of BERT and RoBERTa have demonstrated superior results [13]. Studies have shown that BERT- and RoBERTa-based models outperform multilingual alternatives in Turkish datasets, supporting the importance of language-specific models, as demonstrated by Hugging Face [14]. Research targeting e-commerce review analysis further reinforces these findings. LSTM-based sentiment classification for Turkish reviews has been conducted in previous work [15], while transformer-based architectures have achieved higher accuracy on Turkish news texts [16].

Studies focused specifically on Turkish e-commerce reviews provide valuable insights into the effectiveness of both traditional and transformer-based approaches. Nine BERT variants were tested on 10,000 Turkish reviews, with ConvBERTurk achieving a 97.04% F1-score [17]. Other work obtained 96% accuracy using BERTurk-based sentiment analysis on 73,392 multi-domain reviews, while also showing that SVM remains competitive among traditional classifiers [18]. The effectiveness of fusion architectures has additionally been noted in the literature. A BERT–RoBERTa fusion model achieved 94.3% accuracy on COVID-19 tweets [19], and RoBERTa demonstrated better generalization for depression detection tasks [20]. Meanwhile, additional studies have shown that traditional models such as SVM, Naive Bayes, and Random Forest still perform strongly on Turkish datasets, depending on their characteristics [5,21].

The comparison of multilingual and language-specific transformer models has also been extensively explored. Research indicates that XLM-RoBERTa slightly outperformed BERT on the TRSAv1 sentiment dataset [22], whereas other work has shown that BERTurk variants significantly surpassed ELECTRA-based models on 150,000 Turkish examples [23]. Additional findings verify that language-specific transformer models outperform multilingual ones due to Turkish’s morphological complexity [13]. Moreover, TF-IDF, Word2Vec, GloVe, and BERT embeddings have been compared in multi-label classification tasks, with BERT achieving the highest Micro F1-score [6]. The present study builds upon these findings by revisiting the dataset introduced in earlier research [15], analyzing the same 15,170 e-commerce reviews under a new problem definition—classification of reviews as useful or useless.

A comprehensive analysis of the existing literature reveals two key gaps: (i) most studies focus primarily on English datasets, and research on agglutinative languages remains limited, and (ii) Turkish studies predominantly concentrate on sentiment analysis [18,21,23], while the prediction of review usefulness has not been adequately explored. Addressing this gap, the present study evaluates both traditional ML methods (SVM, LR) and modern transformer-based architectures (BERT, RoBERTa, BERTurk) for usefulness classification in Turkish e-commerce reviews. Additionally, a novel Multi-Transformer Fusion Framework (MTFF) is proposed, incorporating three fusion strategies—concatenation, weighted sum, and attention-based fusion—to identify the most effective architecture.

The major contributions of this study are as follows:

- (i) It extends beyond sentiment analysis and introduces a usefulness classification task for Turkish e-commerce reviews;

- (ii) It provides a comprehensive comparison of traditional ML models and transformer-based architectures using the same dataset;

- (iii) It demonstrates the effectiveness of transformer models for morphologically rich languages;

- (iv) It proposes a multi-transformer fusion framework, where the concatenation strategy yields the highest performance.

In conclusion, this study fills an important gap in the Turkish NLP literature and offers an original model for enhancing the user experience on e-commerce platforms.

Research Hypotheses

Based on the identified research gaps and the objectives of this study, the following research hypotheses are formulated:

H1: Transformer-based models (BERT, RoBERTa, and BERTurk) achieve higher classification performance than traditional machine learning approaches (SVM, LR) in the task of Turkish e-commerce review usefulness classification.

H2: Language-specific transformer models trained for Turkish outperform multilingual transformer models in terms of F1-score and accuracy, due to the morphological richness of the Turkish language.

H3: The proposed MTFF provides statistically significant performance improvements compared to single transformer-based models, with the concatenation-based fusion strategy yielding the highest performance.

The remainder of this paper is organized as follows: Section 2 details the materials and methods, including preprocessing, manual labeling, and model architectures; Section 3 presents comparative experimental results; and Section 4 discusses implications, limitations, and directions for future research.

2. Materials and Methods

2.1. Materials

The dataset used in this study consists of Turkish text. Turkish poses challenges for natural language processing because of its agglutinative structure and rich morphology. Words are formed by combining roots and affixes, which gives rise to root-affix disambiguation problems and context-dependent polysemy. Furthermore, the semantic changes that affixes introduce in different contexts directly affect the preprocessing strategies used in review classification [24].

2.1.1. Data Set

The data set used in the study was prepared for analyzing user experiences in the field of e-commerce. The dataset was created by Çabuk et al. [15] and made available on the online sharing platform Kaggle. A total of 15,170 product reviews were collected from e-commerce platforms commonly used in Turkey, including Hepsiburada, Trendyol, and N11, covering various product categories such as shavers, office chairs, and game consoles. This diversity ensures that different product groups, such as electronics and furniture, are represented [25].

2.1.2. Data Preprocessing

Classic preprocessing steps, such as removing punctuation marks, emojis, and stop words, were applied to the original dataset. In this study, however, these steps were not repeated, in order to preserve the naturalness of the user comments. In particular, expressions consisting solely of punctuation marks or short repetitions were retained as genuine user comments. As a result, content-rich and insightful comments were kept alongside superficial and uninformative expressions in the same dataset, and the class labels were assigned through a manual labeling process.

2.1.3. Manual Labeling Strategy

The labeling process was conducted entirely manually, with each comment reviewed individually and labeled as “useful” (1) or “useless” (0): (i) Useful comments: content that provides detailed information about the product’s performance, size, durability, price-performance balance, or user experience. (ii) Useless comments: comments that only contain short phrases such as “nice” or “okay,” evaluations made without using the product, or comments focused on topics unrelated to the product (e.g., shipping, packaging, wedding gifts). Since a single review may contain multiple sentences and mixed content, labeling was performed at the review level rather than the sentence level. If a review included at least one concrete, experience-based statement related to product functionality, performance, or usage, it was labeled as useful (1). Examples of useful reviews are presented in Table 1. In this classification, reviews were evaluated based on criteria such as product performance, ease of use, and ergonomic features. In contrast, Table 2 shows examples of reviews classified as useless. These reviews do not provide direct information about the product; they typically consist of brief and superficial statements or evaluations made without actually using the product.

Table 1.

Examples of useful reviews.

Table 2.

Examples of useless comments.

The distribution of useful and useless comments in the dataset, as shown in Table 3, indicates that 55.9% of the total 15,170 comments were labeled as useful, while 44.1% were labeled as useless. This distribution indicates that the dataset has a slight imbalance between classes; however, it provides a sufficiently balanced structure for model training and performance evaluations. The labeling process was conducted by a single researcher. Therefore, inter-annotator agreement (e.g., Cohen’s Kappa) was not calculated.

Table 3.

General distribution of the data set.

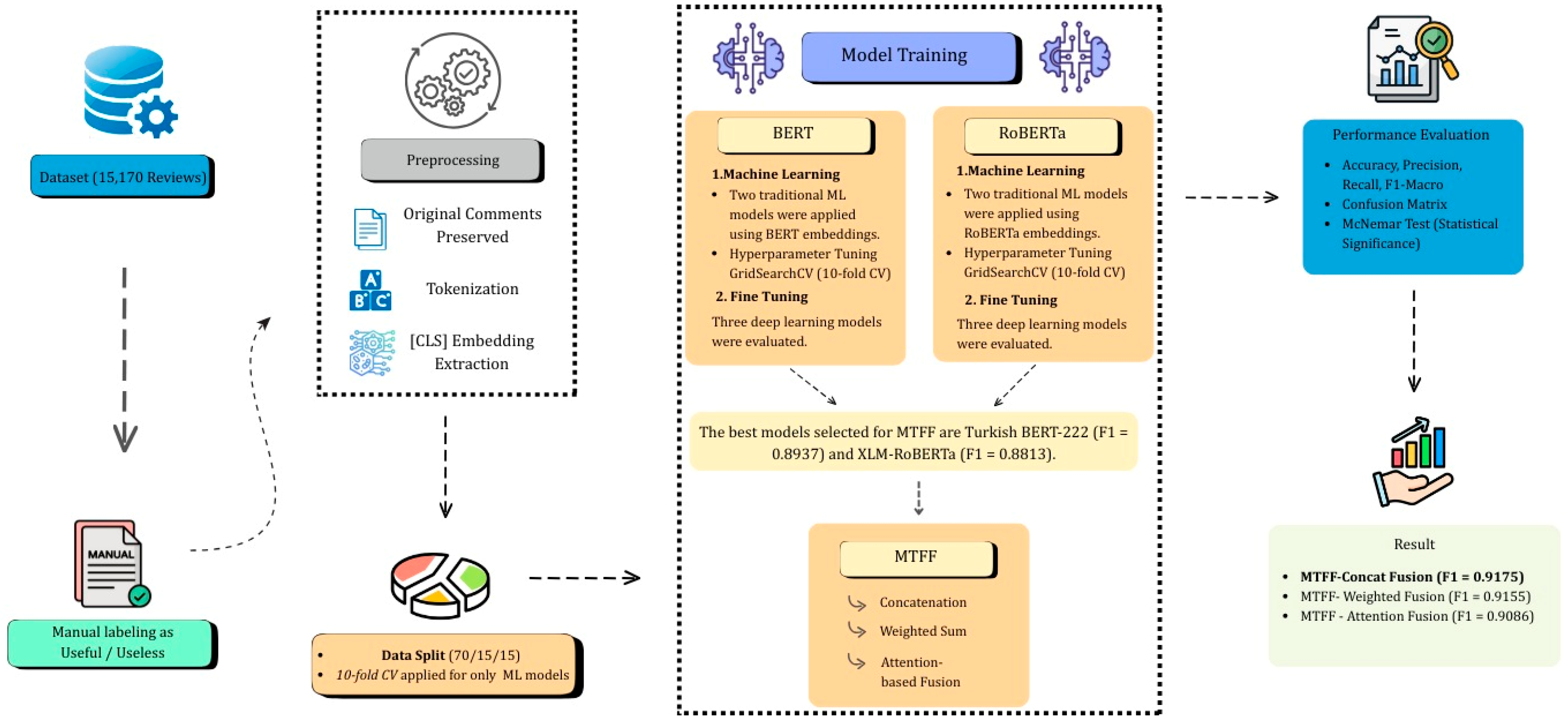

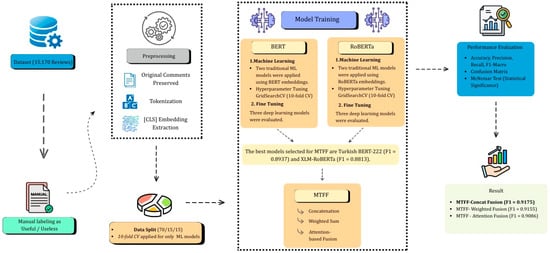

2.2. Method

In this study, transformer-based language models and fusion strategies were used together to classify Turkish e-commerce product reviews as useful (1) or useless (0). The general process of the proposed method includes data preprocessing, modeling, and fusion stages, as shown schematically in Figure 1.

Figure 1.

General flowchart of the proposed method.

In this approach, both fine-tuning and embedding-based ML approaches were first applied to two different pre-trained language models (Turkish BERT and XLM-RoBERTa). Both models were evaluated in four scenarios, as shown in Table 4.

Table 4.

Scenarios for BERT and RoBERTa approaches.

The results obtained from these four scenarios were compared, and it was determined that the Turkish BERT-222 (Fine-Tuning) and XLM-RoBERTa (Fine-Tuning) models yielded the highest accuracy rates. Therefore, these two models served as the basis for developing fusion strategies in subsequent stages.

2.2.1. BERT-Based Approaches

In this study, the process of classifying Turkish e-commerce reviews was addressed using a two-stage approach. In the first stage, the Turkish BERT model was used solely as a feature extractor. The model generated contextual representations for each review, which were then fed as input to the LR and SVM algorithms. Thus, BERT’s language representation power was combined with the learning capabilities of traditional classification algorithms. In embedding-based approaches, the hyperparameter optimization of the SVM and LR models was performed using the GridSearchCV method, where different parameter combinations were systematically evaluated to determine the configurations that yielded the highest accuracy and F1-macro scores. Additionally, 10-fold cross-validation was applied to increase the generalizability of the models, thereby reducing the risk of overfitting. To mitigate potential multicollinearity issues arising from the high dimensionality of BERT and RoBERTa embeddings, L2 regularization was applied in all LR models. The regularization strength (C) was optimized using GridSearchCV. The same regularization and validation strategy was consistently applied to both BERT- and RoBERTa-based embedding models.

To improve on these results, the models were fine-tuned directly for the classification task in the second stage. In this stage, three pre-trained BERT models were evaluated: Turkish BERT, Turkish BERT-222, and Multilingual BERT (mBERT). All models were loaded via the Hugging Face Transformers library and integrated into a PyTorch-based fine-tuning process. During fine-tuning, the model weights were updated on the task of classifying comments as “useful (1)” or “useless (0)”. Training was run with the Hugging Face Trainer API, which supports validation, early stopping, and learning rate scheduling. The AdamW optimization algorithm was chosen to maintain model stability and reduce overfitting.

As a result, two different BERT-based approaches were applied within the scope of this study: (i) an embedding-based machine learning model and (ii) a fine-tuning-based direct classification model.

2.2.2. RoBERTa-Based Approaches

In this study, the RoBERTa architecture was also evaluated alongside the BERT-based models. RoBERTa was first utilized as a feature extractor for classifying Turkish e-commerce reviews. In this context, the XLM-RoBERTa Base model produced 768-dimensional embedding vectors corresponding to the [CLS] token for each review, and these vectors were fed as input to classical machine learning algorithms (SVM and LR). Hyperparameter optimization was performed using the GridSearchCV method, where different parameter combinations were tested to determine the configurations that yielded the highest accuracy and F1-macro scores. To increase the model’s generalizability, 10-fold stratified cross-validation was applied.
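The stratified 10-fold scheme mentioned above can be sketched in plain Python. This is an illustration under stated assumptions, not the code used in the study: the function name `stratified_kfold`, the seed, and the toy label list are hypothetical, and in practice a library routine such as scikit-learn's `StratifiedKFold` would normally be used.

```python
import random
from collections import defaultdict

def stratified_kfold(labels, n_splits=10, seed=42):
    """Yield (train_idx, test_idx) pairs whose class proportions mirror the full set."""
    rng = random.Random(seed)  # the seed is an arbitrary, hypothetical choice
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(n_splits)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold receives a near-equal share.
        for position, idx in enumerate(indices):
            folds[position % n_splits].append(idx)

    for k in range(n_splits):
        test_idx = sorted(folds[k])
        train_idx = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        yield train_idx, test_idx

# Toy labels with roughly the useful/useless ratio reported for the dataset.
labels = [1] * 56 + [0] * 44
splits = list(stratified_kfold(labels, n_splits=10))
```

Each review index appears in exactly one test fold, and every fold keeps approximately the same share of useful and useless reviews as the full set.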

Additionally, a fine-tuning approach was applied to the RoBERTa architecture. In this context, three different pre-trained models were used: XLM-RoBERTa Base, RoBERTa Base, and DistilRoBERTa. During the fine-tuning phase, a task-specific classification layer was added on top of the model’s final layer, and all network parameters were retrained on the Turkish comment dataset. An early stopping mechanism (patience = 3) was applied during training, and the best model was selected based on the F1 score. The hyperparameters and training settings followed the common configuration summarized in Table 5.
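The early stopping rule (patience = 3, best checkpoint chosen by F1) can be illustrated with a short sketch. The function name `early_stopping_epoch` and the validation scores are hypothetical and serve only to show the logic. The study itself relies on the training framework's built-in mechanism.

```python
def early_stopping_epoch(val_f1_scores, patience=3):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once `patience` consecutive epochs fail to beat the best F1.
    """
    best_f1 = float("-inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, f1 in enumerate(val_f1_scores, start=1):
        if f1 > best_f1:
            best_f1, best_epoch = f1, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_f1_scores)

# Hypothetical validation F1 values, for illustration only.
history = [0.84, 0.87, 0.89, 0.88, 0.885, 0.87, 0.90]
best_epoch, stop_epoch = early_stopping_epoch(history, patience=3)
```

With these made-up scores, training would stop after the sixth epoch and the checkpoint from the third epoch would be kept. The later, higher score is never reached, which is the usual trade-off of a small patience value.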

As with the BERT-based models, the RoBERTa-based models were therefore evaluated in two ways: (i) an embedding-based machine learning approach and (ii) a fine-tuning-based direct classification model.

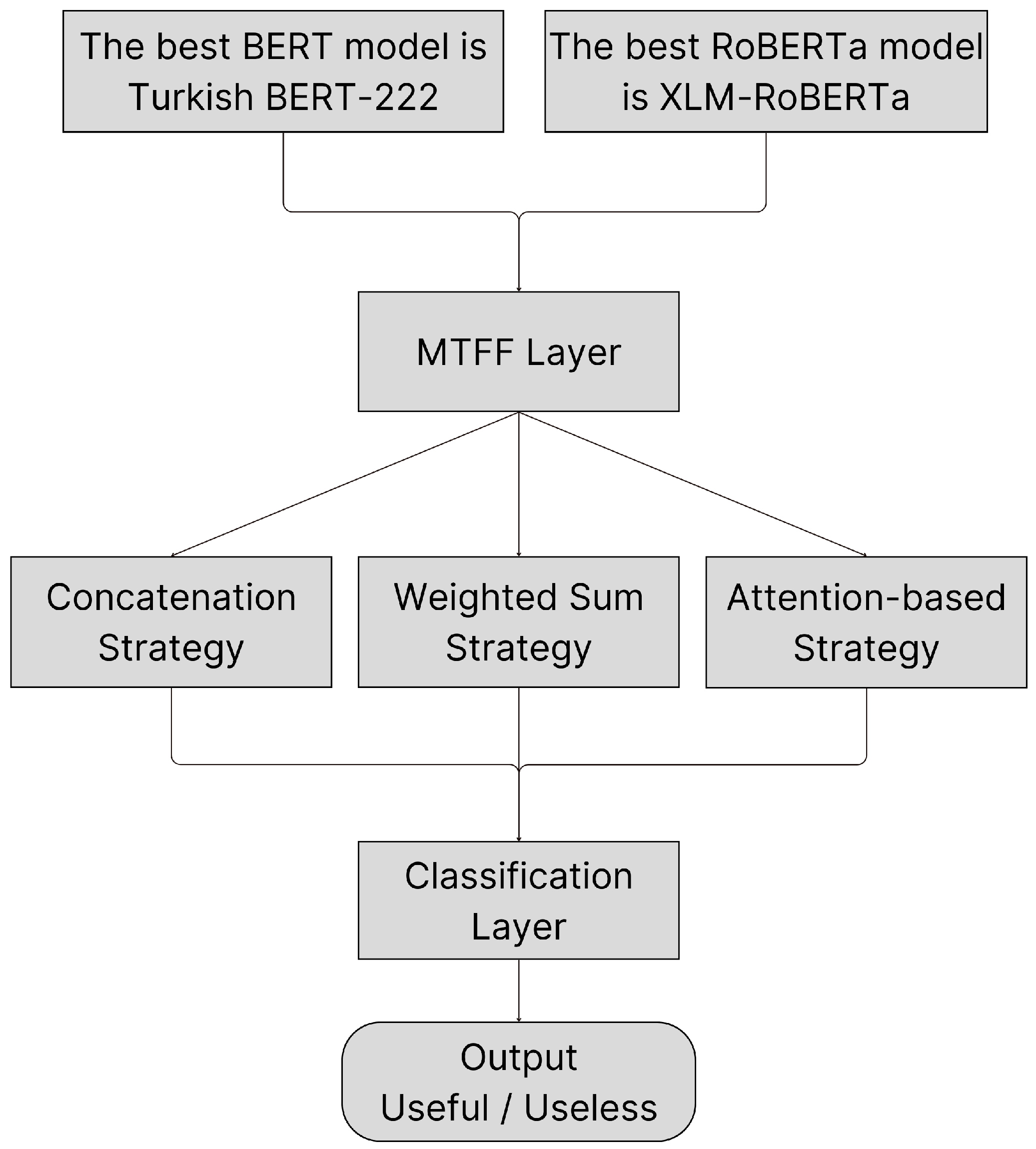

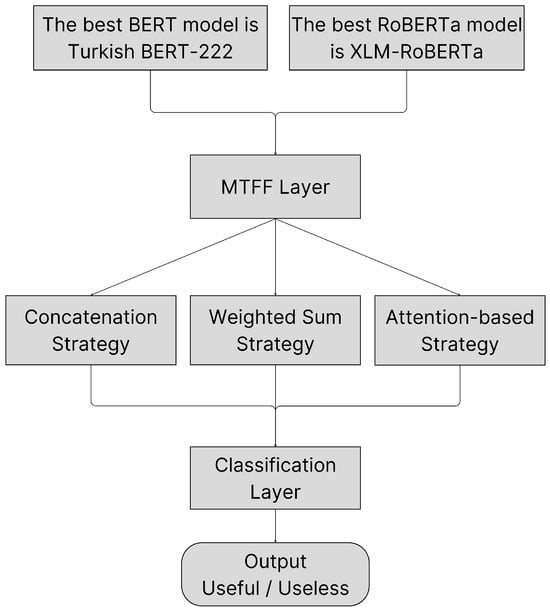

2.2.3. Proposed Multi-Transformer Fusion Framework (MTFF)

The proposed MTFF model is a multi-transformer approach that combines the outputs of the BERT and RoBERTa models using three different fusion strategies (concatenation, weighted sum, and attention). MTFF aims to achieve higher accuracy and generalizability in classifying Turkish e-commerce reviews. The architecture is built on the Turkish BERT-222 and XLM-RoBERTa Base models, which demonstrated the highest performance in the previous experimental stages. The general flowchart of the architecture is illustrated in Figure 2.

Figure 2.

MTFF Flowchart.

In the proposed work, token representations obtained from the [CLS] token in BERT and RoBERTa models are used. Each model’s [CLS] vector is a summary representation of the hidden states in the model’s final layer, serving as an embedding that represents the entire review. These representations are combined using three different fusion strategies:

- Concatenation (Concat) Strategy: In the simplest method, the 768-dimensional [CLS] vectors obtained from BERT and RoBERTa were directly combined to form a 1536-dimensional combined vector. This vector was fed into a two-output fully connected classifier. This process is shown in Equation (1).

- Weighted Sum Strategy: In this approach, learnable weights (α, β) are defined for the outputs of the two models. To ensure that neither model is favored at the outset, α and β are both initialized to 0.5. During training, these coefficients are optimized by backpropagation to minimize the cross-entropy loss (CrossEntropyLoss), which measures the model’s prediction error.

- Attention-based Strategy: In this method, the [CLS] vectors of the two models are combined and passed through a small fully connected layer to dynamically calculate attention weights (w1, w2) for each example. These weights are normalized using the softmax function, ensuring their sum equals 1 (w1 + w2 = 1). The mathematical representation of this process is presented in Equation (3).
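The three strategies can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: the exact forms of Equations (1) and (3) are not reproduced in this text, so the weighted sum is assumed to be α·h_BERT + β·h_RoBERTa, and the attention variant is assumed to weight the two [CLS] vectors by softmax scores from a single linear layer. All function names, the 4-dimensional toy vectors, and the scoring weights are hypothetical; an actual model would operate on 768-dimensional tensors in PyTorch.

```python
import math

def concat_fusion(h_bert, h_roberta):
    """Concatenation: two d-dimensional vectors become one 2d-dimensional vector."""
    return h_bert + h_roberta  # list concatenation

def weighted_sum_fusion(h_bert, h_roberta, alpha=0.5, beta=0.5):
    """Weighted sum; alpha and beta start at 0.5 and would be learned in training."""
    return [alpha * b + beta * r for b, r in zip(h_bert, h_roberta)]

def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fusion(h_bert, h_roberta, score_weights, score_bias):
    """Per-example weights (w1, w2) from a linear layer over the concatenation."""
    joint = concat_fusion(h_bert, h_roberta)
    logits = [sum(w * x for w, x in zip(row, joint)) + b
              for row, b in zip(score_weights, score_bias)]
    w1, w2 = softmax(logits)
    fused = [w1 * b + w2 * r for b, r in zip(h_bert, h_roberta)]
    return fused, (w1, w2)

# Toy 4-dimensional stand-ins for the 768-dimensional [CLS] vectors.
h_bert = [0.2, -0.1, 0.4, 0.0]
h_roberta = [0.1, 0.3, -0.2, 0.5]
score_weights = [[0.1] * 8, [-0.1] * 8]  # hypothetical 2 x 8 scoring layer
score_bias = [0.0, 0.0]

concat_vec = concat_fusion(h_bert, h_roberta)
weighted_vec = weighted_sum_fusion(h_bert, h_roberta)
attn_vec, (w1, w2) = attention_fusion(h_bert, h_roberta, score_weights, score_bias)
```

Concatenation doubles the input width of the classifier (1536 dimensions for two 768-dimensional vectors), whereas the weighted-sum and attention variants keep the original width and differ only in whether the mixing weights are global or computed per example.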

In addition to the three fusion strategies, the training and hyperparameter settings of all models used in the study are summarized in detail in Table 5.

Table 5.

Model parameter details.

AdamW, used in the models, is an optimization method that utilizes momentum and an adaptive learning rate in updating weights, and includes weight decay to mitigate overfitting [26]. During training, fundamental hyperparameters such as learning rate, batch size, epoch count, and early stopping were determined. These adjustments aimed to increase model stability and reduce the risk of overfitting.
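The combination of momentum, adaptive step size, and decoupled weight decay can be made concrete with a single-parameter sketch of the AdamW update. The function name `adamw_step` and the hyperparameter values are illustrative assumptions and are not the settings listed in Table 5. In the experiments, the optimizer is provided by the training framework.

```python
import math

def adamw_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update for a scalar parameter; t is the 1-indexed step count."""
    m = beta1 * m + (1 - beta1) * grad        # momentum: running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)              # bias correction for early steps
    v_hat = v / (1 - beta2 ** t)
    adaptive_step = m_hat / (math.sqrt(v_hat) + eps)
    # Weight decay is applied to the parameter itself, separately from the gradient.
    param = param - lr * (adaptive_step + weight_decay * param)
    return param, m, v

# Three updates with made-up gradients.
param, m, v = 1.0, 0.0, 0.0
for t, grad in enumerate([0.5, 0.4, 0.3], start=1):
    param, m, v = adamw_step(param, grad, m, v, t)
```

Because the decay term acts directly on the parameter, it shrinks the weights even when the gradient is zero, which is the regularizing effect the paragraph above refers to.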

2.2.4. Evaluation Metrics

In deep learning models, the data was evaluated by dividing it into three subsets: 70% for training, 15% for validation, and 15% for testing. In contrast, 10-fold cross-validation was applied to ML models to measure performance more consistently. Classification performance was evaluated using the confusion matrix, which was obtained by comparing model predictions with actual labels. This matrix is based on four key components showing the model’s correct and incorrect classifications.

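Accuracy: As indicated in Equation (4), it is the ratio of correctly classified examples to all model predictions, where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives in the confusion matrix:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \quad (4)$$

Precision: As per Equation (5), it indicates the ratio of truly positive examples among those predicted as positive:

$$\text{Precision} = \frac{TP}{TP + FP} \quad (5)$$

Recall: As defined in Equation (6), it measures the proportion of actual positive examples that are correctly predicted as positive:

$$\text{Recall} = \frac{TP}{TP + FN} \quad (6)$$

F1-Score: Defined as the harmonic mean of precision and recall values, as shown in Equation (7):

$$F1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \quad (7)$$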

Confusion Matrix: In addition to calculating metrics, it allows for a detailed examination of the model’s performance on a class-by-class basis. It visualizes which classes experience more confusion and which classes the model predicts with greater accuracy.
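As a worked illustration of these metrics, the sketch below computes them from the four confusion-matrix counts and also derives the macro-averaged F1 reported in the results tables. The counts are hypothetical and the function names are introduced only for this example. Macro-F1 is taken here as the unweighted mean of the per-class F1 scores, which is the standard definition.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 for the class treated as positive."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def macro_f1(tp, fp, fn, tn):
    """Unweighted mean of the F1 scores of the 'useful' and 'useless' classes."""
    f1_useful = binary_metrics(tp, fp, fn, tn)["f1"]
    # Swap the roles of the two classes to score 'useless' as the positive class.
    f1_useless = binary_metrics(tp=tn, fp=fn, fn=fp, tn=tp)["f1"]
    return (f1_useful + f1_useless) / 2

# Hypothetical confusion-matrix counts, not taken from the paper.
scores = binary_metrics(tp=80, fp=10, fn=20, tn=90)
macro = macro_f1(tp=80, fp=10, fn=20, tn=90)
```

On a nearly balanced dataset such as this one, macro-F1 and accuracy tend to be close, but macro-F1 penalizes a model that performs well on only one of the two classes.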

2.2.5. Working Environment

All experiments were performed on the Google Colab Pro platform. The working environment was configured to include Python 3.12.11, PyTorch 2.8.0+cu126, and Transformers 4.56.1 libraries. On the hardware side, an NVIDIA A100-SXM4-40GB GPU (39.6 GB of VRAM), 83.5 GB of RAM, and a CPU with 6 physical and 12 logical cores were utilized. CUDA 12.6 support was actively provided on the GPU side.

3. Results

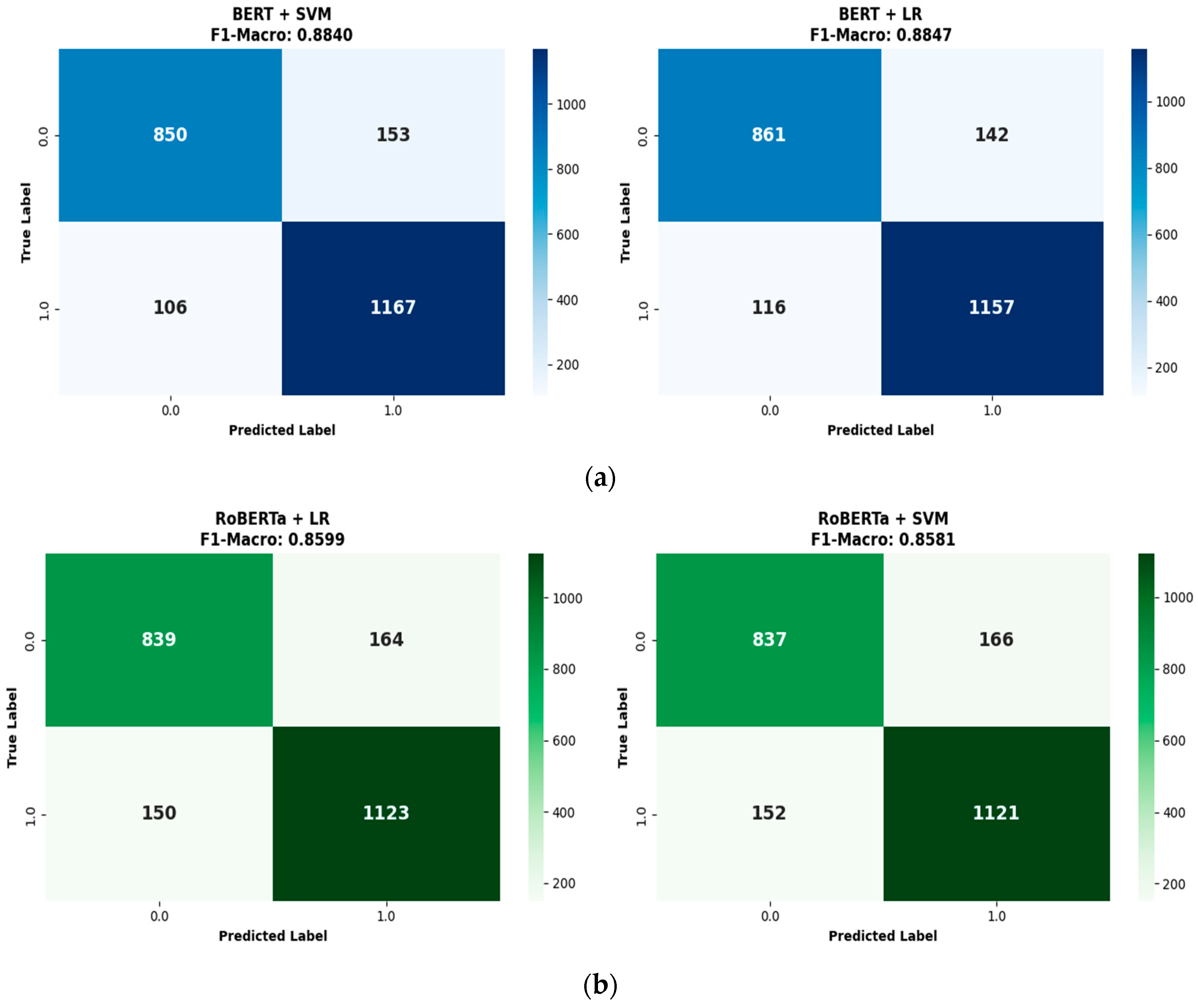

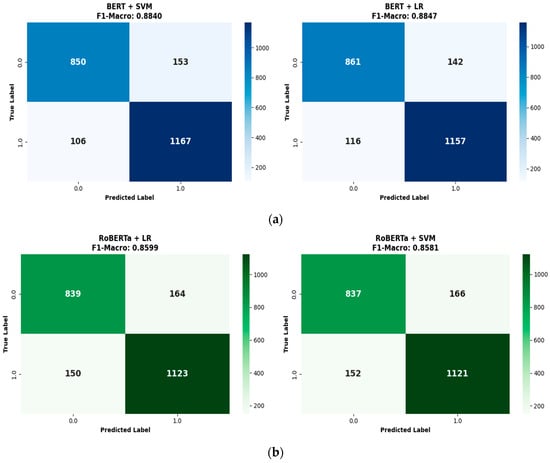

3.1. Embedding Results

This section presents experimental results where BERT and RoBERTa-based embeddings are used in conjunction with ML classifiers (LR and SVM). The performance metrics obtained for both model families are presented together in Table 6. As shown in Table 6, BERT-based embeddings achieved higher classification accuracy compared to RoBERTa-based embeddings. In the tables, values in bold represent the best results. The best performance was achieved with the BERT + LR combination (F1-Macro = 0.8847, Accuracy = 0.8866). The BERT + SVM model also produced a very close result with an F1-Macro value of 0.8840.

Table 6.

Performance comparison of BERT and RoBERTa embedding-based ML classifiers.

In RoBERTa-based embeddings, the LR classifier produced the best result with an F1-Macro of 0.8599. The fact that the performance differences are quite low suggests that both embedding types have achieved balanced learning success.

The confusion matrix analysis presented in Figure 3 shows that all four models perform a similarly balanced classification. In particular, the BERT + LR model’s lower error rate in the positive class (useful comments) explains its slight superiority.

Figure 3.

Confusion matrix visualizations for (a) BERT embedding + ML classifiers and (b) RoBERTa embedding + ML classifiers.

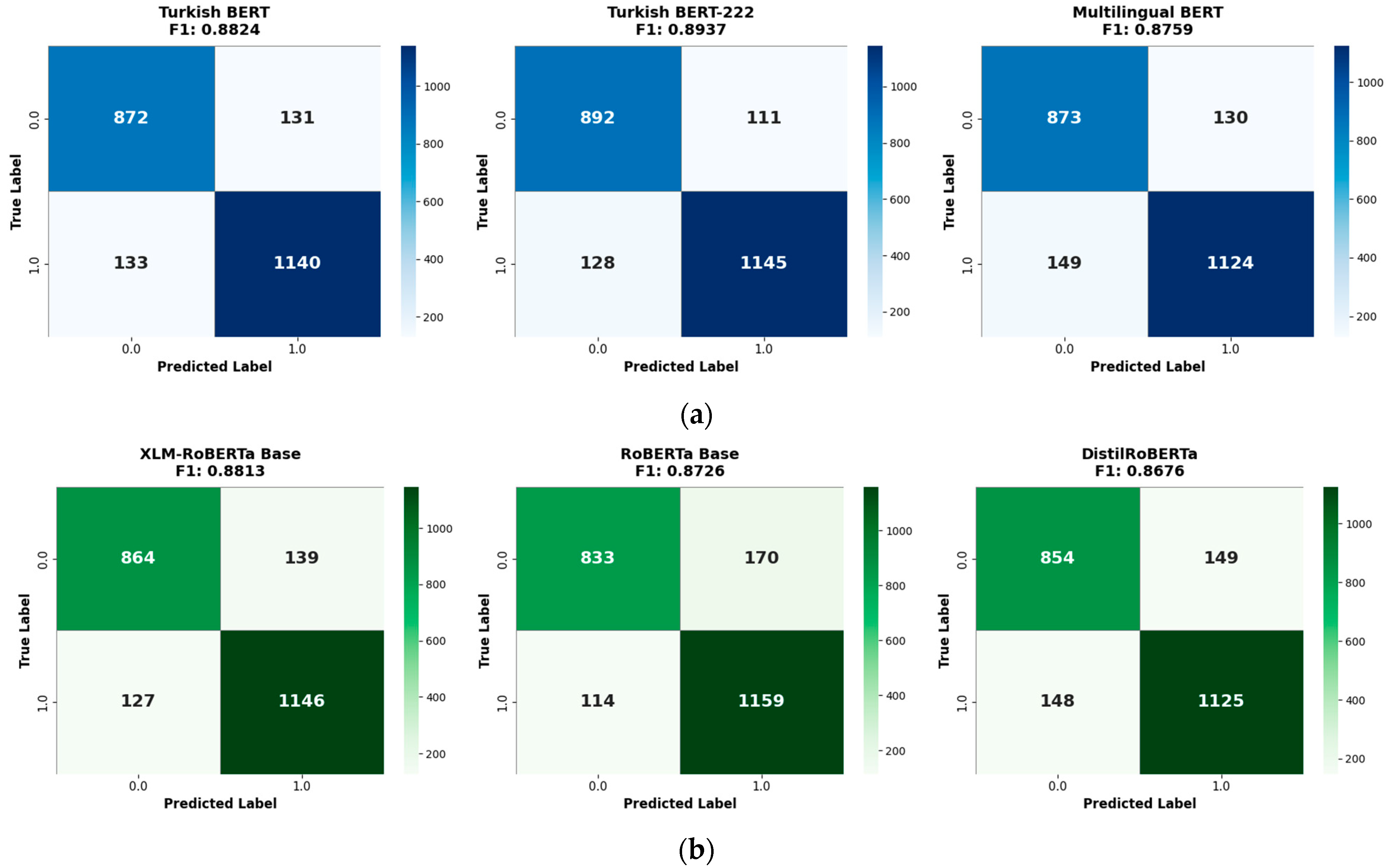

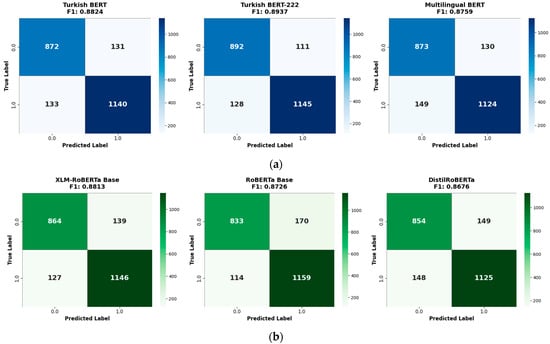

3.2. Fine-Tuning Results

Various variants of the BERT and RoBERTa model families were evaluated. The F1-Macro, Accuracy, Precision, and Recall values obtained on the test dataset are presented in Table 7.

Table 7.

Fine-Tuning performance results for BERT and RoBERTa model families.

As shown in Table 7, the Turkish BERT-222 model demonstrated the highest performance among all models with 0.8937 F1-Macro and 0.8950 Accuracy values. The Turkish BERT and XLM-RoBERTa Base models showed similar performance, and it was observed that both models could adapt to the Turkish language structure.

The confusion matrix results presented in Figure 4 indicate that the Turkish BERT-222 model performs a more balanced classification between the “useful” and “useless” classes. The XLM-RoBERTa Base model also achieves a similar balance thanks to its multilingual training advantage, whereas the DistilRoBERTa model experiences a performance loss due to compression. Overall, it can be said that BERT-based models achieve higher accuracy and F1 scores on the Turkish dataset compared to RoBERTa-based models. This is considered to stem from the fact that BERT models include variants specifically trained for Turkish.

Figure 4.

Confusion matrices for fine-tuning results of (a) BERT and (b) RoBERTa models.

3.3. MTFF Results

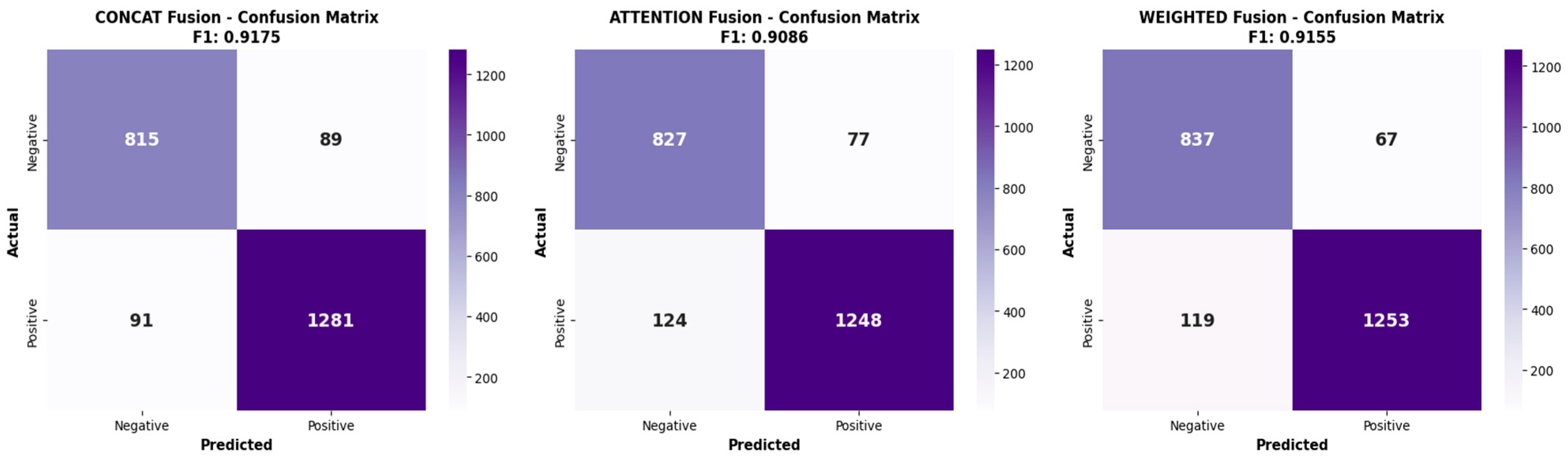

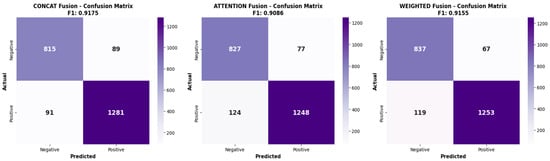

In the fusion phase, the output representations of the BERT and RoBERTa models were combined using three different fusion strategies: Concat Fusion, Attention Fusion, and Weighted Fusion. Each strategy was trained as described in Section 2.2 (Method). These strategies aimed to improve overall performance by leveraging the complementary features of the models.

As shown in Table 8, the highest performance was achieved with the Concat Fusion strategy (F1-Macro = 0.9175). The Weighted Fusion model ranked second with an F1 score of 0.9155, while Attention Fusion ranked third with an F1 score of 0.9086. All strategies showed an accuracy rate above 91%, demonstrating that BERT and RoBERTa representations are complementary.

Table 8.

Performance results of fusion strategies.

Figure 5 presents the confusion matrix visuals for the three fusion strategies. The analysis revealed that the Concat Fusion model distinguished between the two classes in a balanced manner and achieved a higher correct classification rate in the “useful” class (1.0). The Weighted Fusion model produced similar accuracy results but generated more false positives in the “useless” (0.0) class. In the Attention Fusion model, although class balance was maintained, the overall F1 value remained lower.

Figure 5.

Confusion matrix outputs of Fusion Strategies.

3.4. Feature Importance Analysis Using SHAP

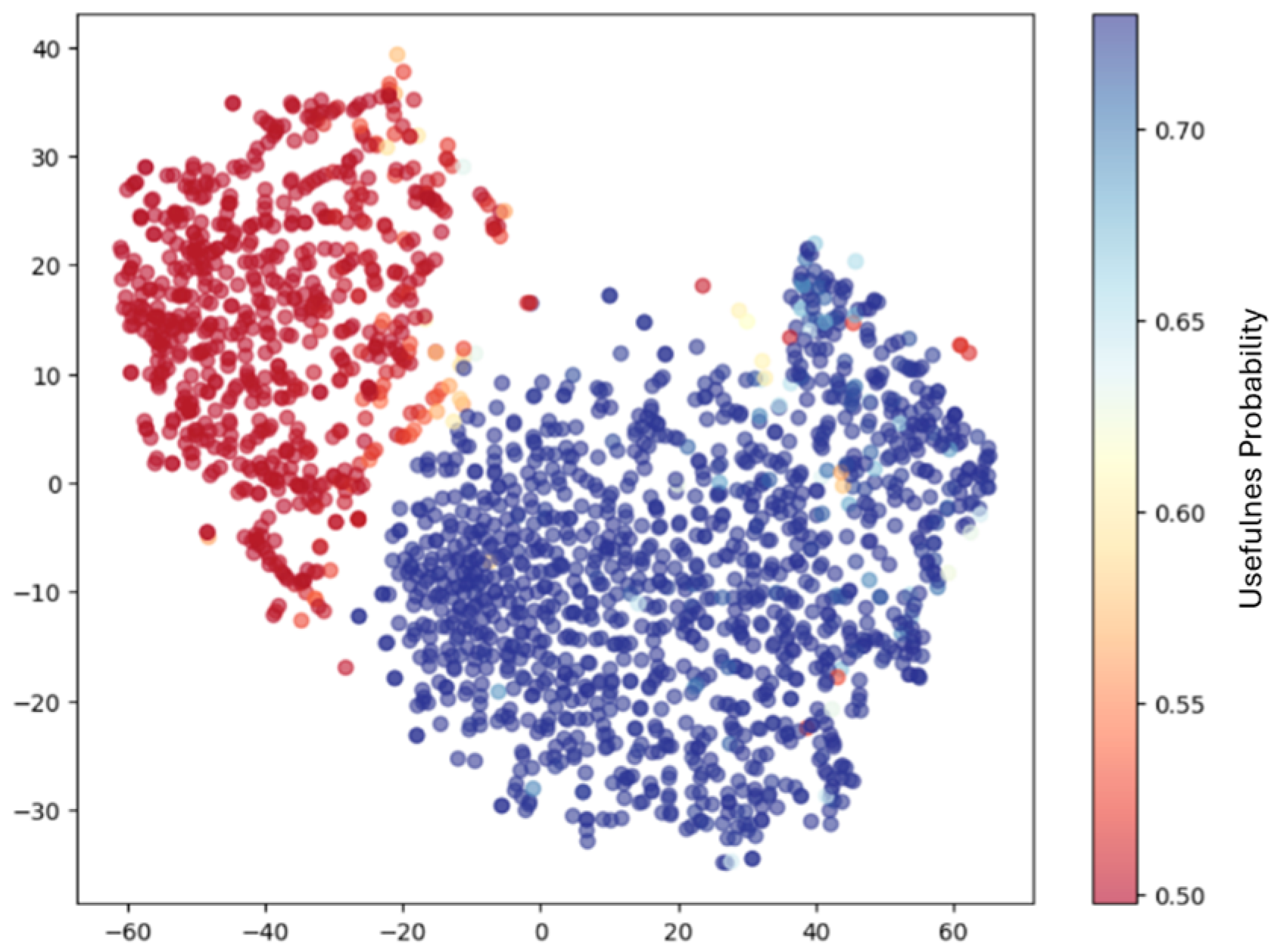

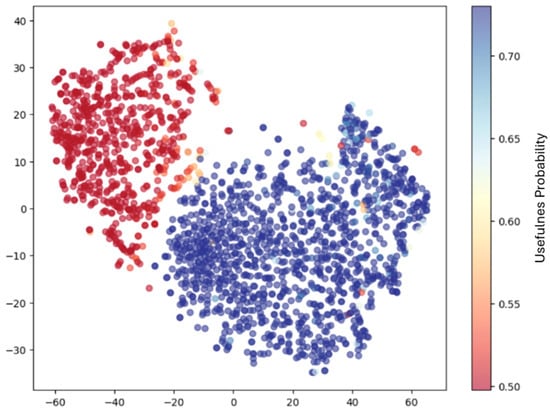

To improve the interpretability of the best-performing concat-based fusion model, SHAP (SHapley Additive exPlanations) analysis was employed. The SHAP framework enables a quantitative assessment of how individual features contribute to the model’s predictions, providing insights into the linguistic cues that drive usefulness classification.

Figure 6 presents a two-dimensional visualization of the model’s prediction space, illustrating the separation between useful and useless reviews along with their predicted usefulness probabilities. The visualization shows a clear clustering pattern, indicating that the model effectively distinguishes informative reviews from non-informative ones. Reviews predicted as useful are associated with higher usefulness probabilities and form a more compact and coherent cluster, whereas useless reviews exhibit lower probabilities and greater dispersion. This separation suggests that the concatenated representations produce a well-structured feature space with robust decision boundaries.

Figure 6.

t-SNE visualization of the concat-based fusion model predictions.

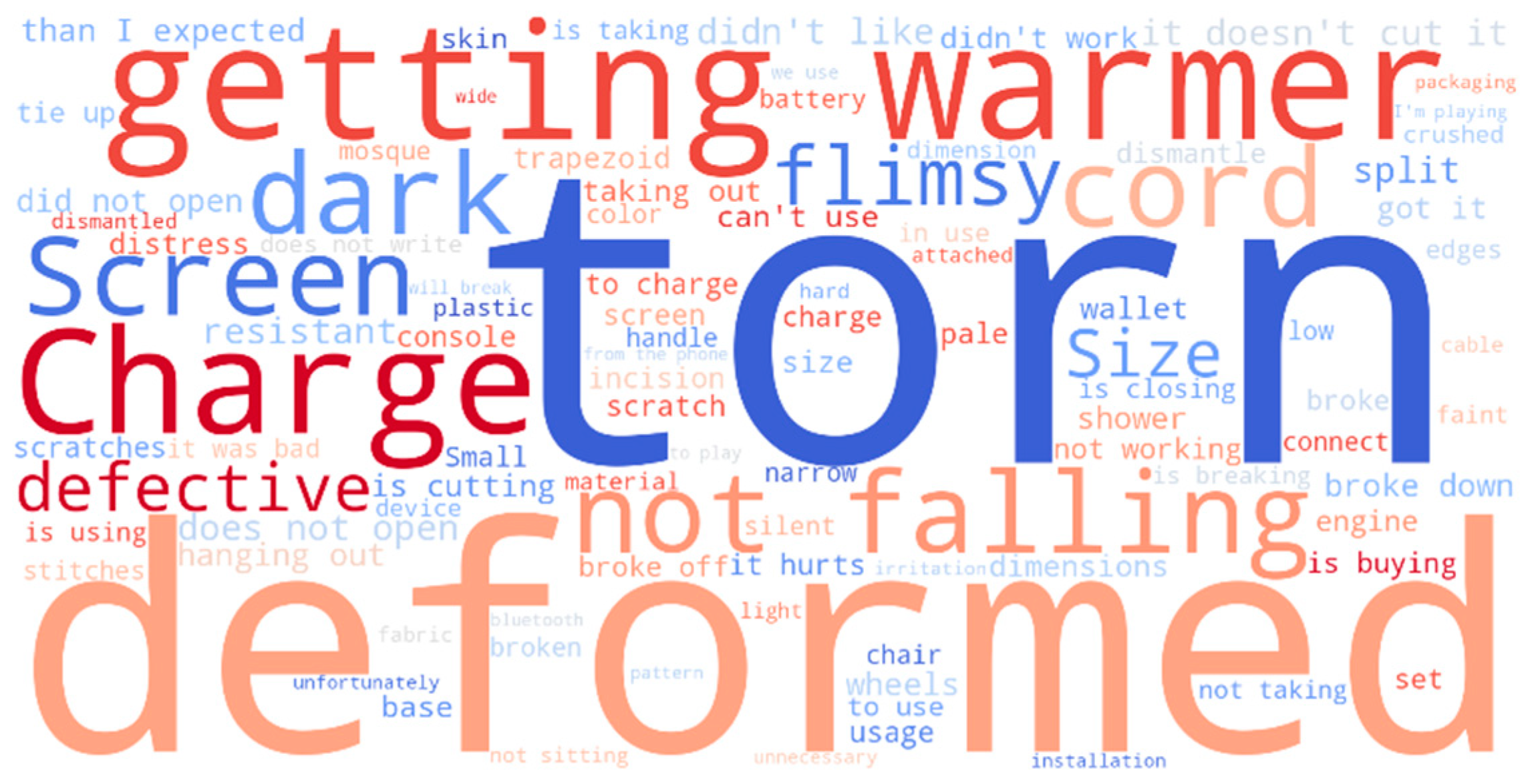

Figure 7 illustrates the most influential concepts based on the average absolute SHAP values computed over approximately 15,000 samples. The results indicate that words related to product functionality, physical condition, and user experience—such as “charge,” “screen,” “deformed,” “defective,” and “torn”—receive substantially higher importance scores. This finding demonstrates that the model prioritizes experience-based and information-rich expressions rather than surface-level sentiment indicators.

Figure 7.

SHAP-based word cloud of the most influential concepts.
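The ranking behind such a word cloud can be sketched as an aggregation of per-token attributions. The example assumes that token-level SHAP values have already been computed for each review and averages their absolute values over every occurrence of a token. The paper does not state the exact aggregation, so this is one plausible reading. The function name and the attribution values are hypothetical.

```python
from collections import defaultdict

def mean_abs_shap(per_review_attributions):
    """Rank tokens by mean |SHAP|; input is a list of [(token, value), ...] per review."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for attributions in per_review_attributions:
        for token, value in attributions:
            totals[token] += abs(value)  # the sign is dropped: only magnitude matters
            counts[token] += 1
    scores = {token: totals[token] / counts[token] for token in totals}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Made-up attributions for three reviews, using English glosses of the tokens.
reviews = [
    [("charge", 0.30), ("good", 0.02)],
    [("charge", -0.20), ("screen", 0.18)],
    [("good", -0.04), ("screen", 0.22)],
]
ranking = mean_abs_shap(reviews)
```

Using absolute values means a token is ranked highly whether it pushes predictions toward "useful" or toward "useless", so the ranking reflects influence rather than direction.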

To further analyze individual predictions, representative useful and useless samples were examined. In useful reviews, experience-oriented tokens (e.g., terms related to charging behavior, screen quality, or functional performance) exhibit strong positive contributions to the model output. In contrast, tokens with limited semantic relevance, punctuation marks, or context-independent fragments contribute negatively or marginally to the prediction. For useless reviews, generic or vague expressions (e.g., brief recommendations or unspecific evaluations) fail to generate strong discriminative signals, reflecting their low informational value.

Additionally, the SHAP-based analysis indicates that features originating from both BERT and XLM-RoBERTa embeddings contribute meaningfully to the final classification decisions, with neither representation consistently dominating the other. This observation confirms that the concatenation strategy captures complementary semantic information from both models rather than introducing redundant features.

Overall, the SHAP analysis demonstrates that the concat-based fusion model relies on semantically meaningful and experience-driven linguistic cues and that its decision-making process aligns well with human intuition regarding review usefulness.

To further investigate the relative contribution of each representation within the fusion architecture, the concat fusion mechanism was analyzed in detail. In this architecture, BERT and XLM-RoBERTa representations are directly concatenated and fed into a multi-layer classifier without any predefined static weighting mechanism. Instead, the relative contribution of each representation is automatically learned by the classifier layers during training. To quantitatively assess this contribution, the weights of the first linear layer processing the concatenated vector were examined. The results indicate that BERT contributes 50.04% and XLM-RoBERTa contributes 49.96% to the final decision. This finding demonstrates that both representations are utilized almost equally in the decision-making process and that the fusion mechanism integrates them in a balanced and complementary manner rather than prioritizing a single model. Therefore, the proposed approach performs a data-driven and adaptive representation fusion rather than relying on static weighting.
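One way such contribution percentages could be derived from the first linear layer is to compare the total absolute weight attached to each half of the concatenated input. The text does not specify the statistic that was used, so the absolute-weight share below is an assumption, and the function name and the toy weight matrix are hypothetical. In the actual model the split point would be 768.

```python
def representation_shares(first_layer_weights, split_at):
    """Share of absolute first-layer weight on each half of the concatenated input.

    Rows are hidden units; columns are input features, with the first
    `split_at` columns belonging to BERT and the rest to XLM-RoBERTa.
    """
    bert_mass = sum(abs(w) for row in first_layer_weights for w in row[:split_at])
    roberta_mass = sum(abs(w) for row in first_layer_weights for w in row[split_at:])
    total = bert_mass + roberta_mass
    return bert_mass / total, roberta_mass / total

# Toy 2 x 4 weight matrix: two hidden units, two features per encoder.
weights = [
    [0.2, -0.1, 0.1, 0.2],
    [-0.3, 0.4, 0.3, -0.3],
]
bert_share, roberta_share = representation_shares(weights, split_at=2)
```

A near-even split under this measure indicates that the classifier places similar weight magnitude on both encoders. It does not by itself show that the two halves carry non-redundant information.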

3.5. Statistical Significance Analysis

The McNemar test was used to evaluate whether the differences in prediction between fusion strategies were statistically significant. This test aims to determine whether the difference between models is random, based on the number of examples predicted differently by two classification models working on the same sample set. The McNemar test is a non-parametric method recommended for comparing the performance of two classifiers on the same dataset [27]. In this study, the McNemar test was applied to the confusion matrix results of the models to compare the prediction performance of the fusion strategies. Results obtained in the McNemar test with p < 0.05 indicate a statistically significant difference between the two models. In this case, it is accepted that the prediction performance of the models is not random and that one model produces results that are significantly different from the other. Conversely, when p ≥ 0.05, it is considered that the difference between the models may be random and that their performance is at a similar level. The results obtained are presented in Table 9. According to this, no significant difference was observed between the Concat and Weighted strategies (p = 0.4795), indicating that the two models produced similar decisions. However, in the Concat–Attention (p = 0.0029) and Attention–Weighted (p = 0.0003) comparisons, the p-values were found to be less than 0.05, indicating that these differences were statistically significant. These results show that the concat fusion strategy within the MTFF architecture provides the most stable and generalizable results.

Table 9.

McNemar test results.

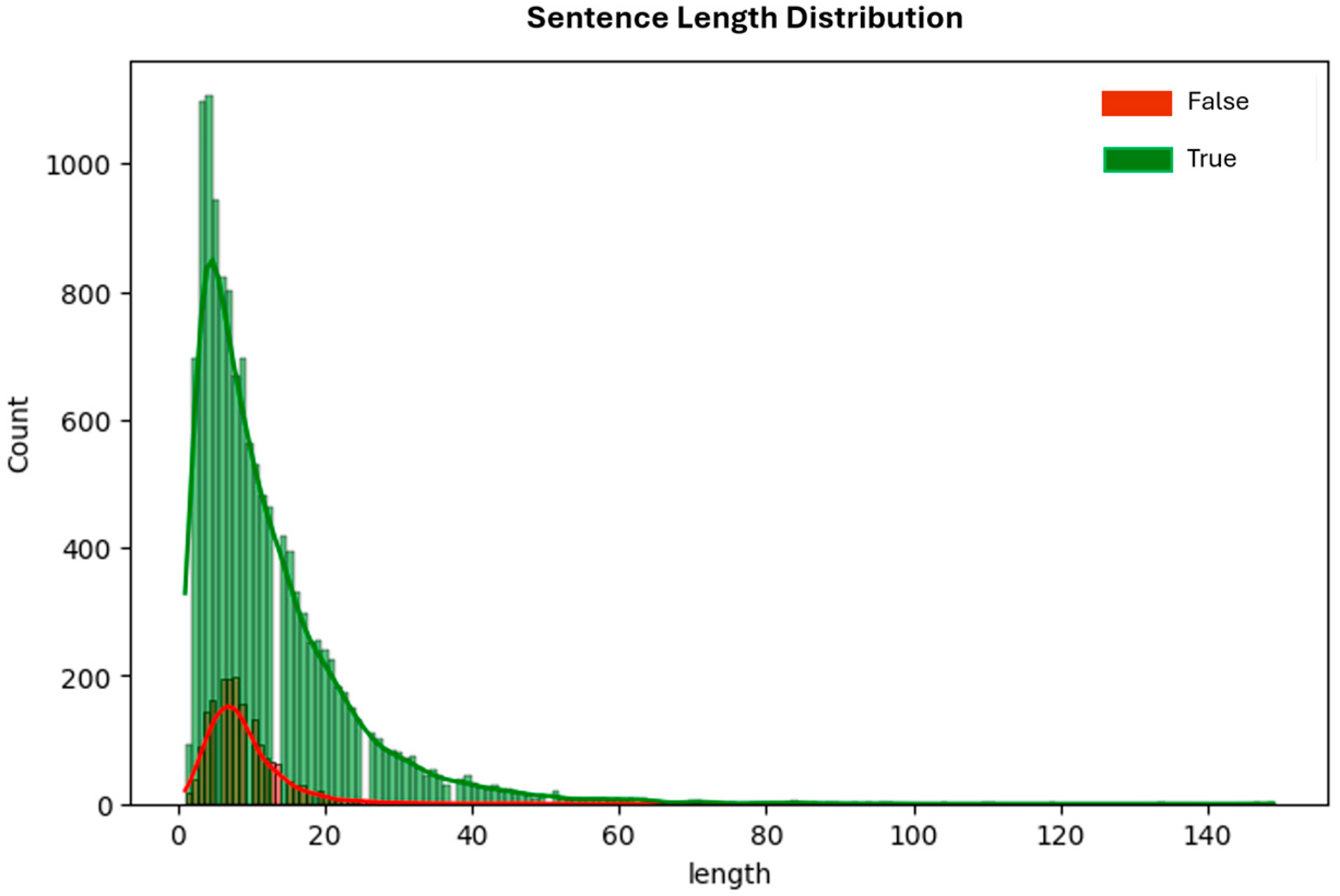

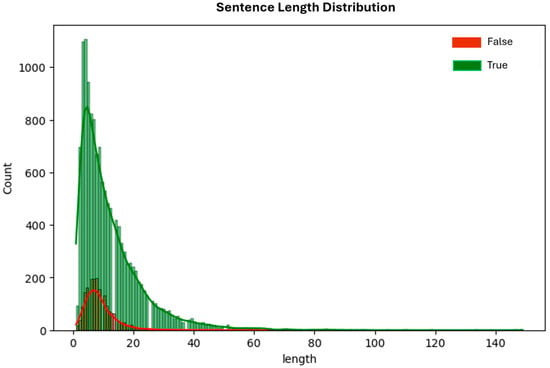
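The pairwise comparison described in this section can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the chi-square form of the McNemar test with continuity correction, computed from the paired predictions of two models on the same test samples. The function name `mcnemar_test` and the toy predictions are hypothetical.

```python
import math


def mcnemar_test(y_true, pred_a, pred_b):
    """McNemar test (chi-square form with continuity correction).

    Returns (b, c, statistic, p_value), where
      b = samples model A classified correctly and model B did not,
      c = samples model B classified correctly and model A did not.
    """
    b = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a == t and m != t)
    c = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a != t and m == t)
    if b + c == 0:
        return b, c, 0.0, 1.0  # the models never disagree on correctness
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return b, c, statistic, p_value


# Toy data: 20 samples, all with true label 1.
y_true = [1] * 20
pred_a = [1] * 18 + [0] * 2   # model A errs on the last 2 samples
pred_b = [0] * 10 + [1] * 10  # model B errs on the first 10 samples
b, c, stat, p = mcnemar_test(y_true, pred_a, pred_b)
print(b, c, round(stat, 3), round(p, 4))
```

Only the discordant pairs (`b` and `c`) enter the statistic; samples on which both models are right or both are wrong do not affect it. When `b + c` is small, an exact binomial version of the test is generally preferred over the chi-square approximation.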

3.6. Error Analysis of Misclassified Samples

When the linguistic differences between correctly and incorrectly classified samples are examined, it is observed that the model tends to make more errors, particularly on short sentences that are highly context-dependent and contain superficial expressions. As illustrated in Figure 8, misclassified samples are predominantly composed of shorter sentences that provide limited contextual information, whereas correctly classified samples generally exhibit longer, more explanatory structures. Sentences involving slang usage, interrogative forms, and numerical expressions often include indirect meanings and are open to interpretation, which makes semantic inference more challenging for the model. In contrast, higher classification performance is observed for sentences that contain explicit linguistic cues, such as negation structures and contrastive conjunctions. Overall, these findings indicate that the amount and clarity of contextual information play a decisive role in the model’s decision-making process, and that context-dependent expressions carrying implicit meanings increase the likelihood of classification errors.

Figure 8.

Sentence length distribution of correctly and incorrectly classified reviews.
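A length comparison of the kind shown in Figure 8 could be computed along the following lines. This is a minimal sketch that assumes sentence length is measured in whitespace-separated words; the function name `length_summary` and the example reviews are hypothetical and are not drawn from the dataset.

```python
from statistics import mean, median


def length_summary(texts, y_true, y_pred):
    """Summarize word counts for correctly and incorrectly classified texts."""
    groups = {"correct": [], "misclassified": []}
    for text, t, p in zip(texts, y_true, y_pred):
        key = "correct" if t == p else "misclassified"
        groups[key].append(len(text.split()))
    # Skip a group entirely if it has no samples.
    return {
        name: {"n": len(lengths), "mean": mean(lengths), "median": median(lengths)}
        for name, lengths in groups.items()
        if lengths
    }


# Hypothetical reviews with labels: 1 = useful, 0 = useless.
texts = [
    "good",
    "is it worth 500",
    "the battery lasts two days and the screen is bright",
    "arrived late but the fabric quality is better than expected",
]
y_true = [0, 1, 1, 1]
y_pred = [0, 0, 1, 1]
print(length_summary(texts, y_true, y_pred))
```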

4. Discussion

The primary objective of this study is to identify useful customer reviews that support users’ purchase decisions on e-commerce platforms and to distinguish them from non-informative reviews. To this end, the study develops a classification system that highlights high-quality reviews and facilitates users’ access to reliable product-related information.

In this context, transformer-based models and fusion strategies were systematically evaluated on 15,170 Turkish e-commerce reviews. Among embedding-based methods, the BERT + Logistic Regression (LR) approach performed best, achieving an F1-score of 88.47%. Among individual models, the fine-tuned Turkish BERT-222 model achieved the best performance, with an F1-score of 89.37%. The highest overall performance was obtained using the proposed Multi-Transformer Fusion Framework (MTFF) with a concatenation-based fusion strategy, which achieved an F1-score of 91.75% and an accuracy of 92.09%.

The superior performance of the concat-based fusion approach compared to both single-model baselines and alternative fusion strategies can be attributed to its ability to preserve the full representational capacity of each pre-trained language model. By directly concatenating the embeddings from BERT and XLM-RoBERTa, the model retains the distinct and complementary linguistic features captured by each encoder without imposing premature compression or information bottlenecks. This allows the downstream classifier to learn, in a data-driven manner, how much emphasis should be placed on each representation. Supporting this interpretation, the SHAP-based analysis indicates that the model primarily relies on semantically meaningful and experience-oriented linguistic cues, such as references to product functionality, condition, and usage, rather than superficial sentiment expressions. Moreover, an examination of the classifier weights reveals that BERT and XLM-RoBERTa representations contribute almost equally to the final predictions, suggesting that the fusion mechanism integrates both sources in a balanced and complementary manner rather than prioritizing a single model. In contrast, fusion mechanisms such as weighted averaging, attention-based fusion, or projection-based integration often involve early-stage aggregation or dimensionality reduction, which may suppress subtle yet discriminative features. This effect becomes particularly critical in short, noisy, and highly variable user-generated texts, where preserving diverse contextual cues is essential. Consequently, the concat strategy enables a richer joint feature space, leading to more robust decision boundaries and improved classification performance.
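The information-preservation argument can be illustrated with a toy example. The sketch below is an assumption-laden illustration, not the MTFF implementation: it uses two-dimensional stand-ins for the encoder outputs and a fixed weight `alpha`, and the function names are hypothetical. It shows that two different pairs of encoder outputs can collapse to the same weighted-sum vector while remaining distinguishable after concatenation.

```python
def concat_fusion(h_a, h_b):
    """Concatenate two embeddings; the result keeps every input feature."""
    return list(h_a) + list(h_b)


def weighted_sum_fusion(h_a, h_b, alpha=0.5):
    """Blend two equal-length embeddings into one vector of the same length."""
    if len(h_a) != len(h_b):
        raise ValueError("embeddings must have the same length")
    return [alpha * a + (1 - alpha) * b for a, b in zip(h_a, h_b)]


# Two different (encoder A, encoder B) output pairs, as toy 2-d vectors.
pair_1 = ([1.0, 0.0], [0.0, 1.0])
pair_2 = ([0.0, 1.0], [1.0, 0.0])

# The weighted sum maps both pairs to the same point ...
print(weighted_sum_fusion(*pair_1), weighted_sum_fusion(*pair_2))
# ... whereas concatenation keeps them apart for the downstream classifier.
print(concat_fusion(*pair_1), concat_fusion(*pair_2))
```

The trade-off is that the concatenated vector is twice as wide, so the first classifier layer has correspondingly more parameters.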

In the literature, Arzu and Aydoğan reported an accuracy of approximately 83% for sentiment classification of Turkish e-commerce reviews using BERT-based models [23]. In the present study, an F1-score of 89.37% was achieved with the Turkish BERT-222 model; although the tasks and metrics differ, this suggests that individual BERT-based models can reach or exceed previously reported performance levels on Turkish e-commerce reviews. Similarly, Kumar and Sadanandam achieved 94.3% accuracy using a BERT–RoBERTa fusion architecture for English-language texts [19]. For Turkish texts, the present study represents, to the best of our knowledge, the first application of a BERT + RoBERTa fusion strategy for usefulness classification on a dataset created by Çabuk et al. [25].

The results indicate that a concatenation-based fusion strategy, which combines the strengths of BERT and RoBERTa representations, is an effective approach for classifying Turkish e-commerce reviews. The proposed architecture, particularly the concatenation-based fusion strategy (91.75% F1-score), is directly applicable to improving user experience on e-commerce platforms. Automatically identifying and highlighting useful customer reviews enables consumers to make more informed purchasing decisions while also providing a valuable tool for platform operators.

Limitations and Future Research

Despite the promising findings, this study has several limitations that should be acknowledged. First, the annotation process was conducted by a single researcher, which may introduce subjectivity and potential labeling bias. Future studies could involve multiple annotators and report inter-annotator agreement metrics such as Cohen’s Kappa.
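For reference, Cohen's Kappa for two annotators can be computed as in the sketch below. The function name `cohens_kappa` and the example labels are hypothetical; no multi-annotator labels exist for the present dataset.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement from each annotator's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # degenerate case: both annotators used a single shared label
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators: 1 = useful, 0 = useless.
annotator_1 = [1, 1, 0, 0, 1, 0, 1, 1]
annotator_2 = [1, 0, 0, 0, 1, 0, 1, 1]
print(cohens_kappa(annotator_1, annotator_2))
```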

Second, although the McNemar test was employed to assess the statistical significance of performance differences between fusion strategies, the evaluation was limited to pairwise comparisons on a single train–test split. Future research should conduct more robust statistical analyses across multiple data partitions or repeated cross-validation folds to enhance the generalizability of the findings.

Future studies may investigate the generalization capability of the proposed model across various forms of Turkish text, including social media content, product-related questions, and long-form consumer reviews. Furthermore, evaluating the approach on larger and domain-specific datasets could provide deeper insights into the robustness of fusion-based architectures.

Additionally, optimizing the model for real-time applications and integrating further explainable artificial intelligence (XAI) techniques beyond SHAP can offer greater transparency into the model’s decision-making mechanisms. Finally, extending the proposed fusion strategy to other Turkish NLP tasks, such as topic classification, sarcasm detection, and multi-label sentiment analysis, could further expand the applicability of the framework.

5. Conclusions

The experimental findings reveal clear performance differences among the evaluated modeling approaches. Hybrid methods combining transformer-based representations with machine learning classifiers, such as BERT + LR and RoBERTa + LR, achieved higher classification performance than traditional machine learning models alone. In addition, Turkish-specific fine-tuned BERT models produced the strongest results among individual transformer architectures.

With respect to model fusion, approaches integrating BERT and RoBERTa representations demonstrated more stable and improved performance than single-model configurations. In particular, the proposed MTFF employing a concatenation-based fusion strategy achieved the highest overall performance across all experimental settings. These results indicate that leveraging complementary information from different transformer architectures provides a substantial advantage in usefulness classification.

Based on these findings, all proposed research hypotheses are supported. The superior performance of transformer-based models over traditional machine learning methods confirms H1, the consistent advantage of Turkish-specific models over multilingual alternatives supports H2, and the performance gains obtained through the BERT–RoBERTa fusion within the MTFF validate H3. Overall, the results demonstrate that fusion-based transformer architectures constitute an effective and practical solution for real-world e-commerce applications.

Author Contributions

Conceptualization, S.Ç. and E.A.Z.; methodology, S.Ç. and E.A.Z.; software, S.Ç.; validation, S.Ç. and E.A.Z.; formal analysis, S.Ç.; investigation, S.Ç.; resources, S.Ç.; data curation, S.Ç.; writing—original draft preparation, S.Ç.; writing—review and editing, S.Ç. and E.A.Z.; visualization, S.Ç.; supervision, E.A.Z.; project administration, E.A.Z.; funding acquisition, E.A.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The base dataset used in this study is publicly available on Kaggle [25]. The labeled dataset created for this research is not publicly available, as it contains derived annotations based on third-party data; however, the labeling methodology is described in detail in Section 2.1.3 to ensure reproducibility.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT (GPT-5, GPT-4o) and Claude (Claude Sonnet 4.5) for language polishing, readability improvements, and assistance with some code snippets. These tools were not used for generating content, performing analyses, or drawing conclusions. The authors reviewed and edited all AI-assisted outputs and take full responsibility for the final content.

Conflicts of Interest

The authors state no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| A100 | NVIDIA A100-SXM4 GPU |
| ALBERT | A Lite BERT |
| BERT | Bidirectional Encoder Representations from Transformers |
| BERTurk | Turkish BERT model |
| CLS/[CLS] | Classification Token |
| Concat | Concatenation |
| CUDA | Compute Unified Device Architecture |
| DL | Deep Learning |
| DistilRoBERTa | Distilled RoBERTa Model |
| ELECTRA | Efficiently Learning an Encoder that Classifies Token Replacements Accurately |
| FN | False Negative |
| FP | False Positive |
| GPU | Graphics Processing Unit |
| GridSearchCV | Grid Search with Cross-Validation |
| LSTM | Long Short-Term Memory |
| LR | Logistic Regression |
| LRMP | Logistic Regression Matching Pursuit |
| ML | Machine Learning |
| mBERT | Multilingual BERT |
| MTFF | Multi-Transformer Fusion Framework |
| NLP | Natural Language Processing |
| PyTorch | Python Machine Learning Framework |
| RoBERTa | Robustly Optimized BERT Pretraining Approach |
| SVM | Support Vector Machine |
| TF-IDF | Term Frequency–Inverse Document Frequency |
| TN | True Negative |
| TP | True Positive |
| XLM-RoBERTa | Cross-Lingual RoBERTa |

References

1. Joachims, T. Text Categorization with Support Vector Machines: Learning with Many Relevant Features. In Machine Learning: ECML-98; Nédellec, C., Rouveirol, C., Eds.; Springer: Berlin/Heidelberg, Germany, 1998; pp. 137–142.

2. Karayiğit, H.; İnan Acı, Ç.; Akdağlı, A. Detecting Abusive Instagram Comments in Turkish Using Convolutional Neural Network and Machine Learning Methods. Expert Syst. Appl. 2021, 174, 114802.

3. Al-Smadi, M.; Qawasmeh, O.; Al-Ayyoub, M.; Jararweh, Y. Deep Recurrent Neural Network vs. Support Vector Machine for Aspect-Based Sentiment Analysis of Arabic Hotels’ Reviews. J. Comput. Sci. 2018, 27, 386–393.

4. Xu, Z.; Li, P.; Wang, Y. Text Classifier Based on an Improved SVM Decision Tree. Phys. Procedia 2012, 33, 1986–1991.

5. Özmen, C.G.; Gündüz, S. Comparison of Machine Learning Models for Sentiment Analysis of Big Turkish Web-Based Data. Appl. Sci. 2025, 15, 2297.

6. Deniz, E.; Erbay, H.; Coşar, M. Multi-Label Classification of E-Commerce Customer Reviews via Machine Learning. Axioms 2022, 11, 436.

7. Wahyuningsih, T.; Manongga, D.; Sembiring, I.; Wijono, S. Comparison of Effectiveness of Logistic Regression, Naive Bayes, and Random Forest Algorithms in Predicting Student Arguments. Procedia Comput. Sci. 2024, 234, 349–356.

8. Li, Q.; Zhao, S.; Zhao, S.; Wen, J. Logistic Regression Matching Pursuit Algorithm for Text Classification. Knowl.-Based Syst. 2023, 277, 110761.

9. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

10. Xiao, Z.; Ning, X.; Duritan, M.J.M. BERT-SVM: A Hybrid BERT and SVM Method for Semantic Similarity Matching Evaluation of Paired Short Texts in English Teaching. Alex. Eng. J. 2025, 126, 231–246.

11. Yu, Q.; Ma, Q.; Da, L.; Li, J.; Wang, M.; Xu, A.; Li, Z.; Li, W. A Transformer-Based Unified Multimodal Framework for Alzheimer’s Disease Assessment. Comput. Biol. Med. 2024, 180, 108979.

12. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

13. Zümberoğlu, K.B.; Dik, S.Z.; Karadeniz, B.S.; Sahmoud, S. Towards Better Sentiment Analysis in the Turkish Language: Dataset Improvements and Model Innovations. Appl. Sci. 2025, 15, 2062.

14. Çizmeci, İ.H.; Gencer, K. Performance Analysis of the Most Downloaded Turkish and English Language Models on the HuggingFace Platform. J. Sci. Rep.-A 2025, 61, 13–24.

15. Çabuk, M.; Yücalar, F.; Toçoğlu, M.A. Makine Öğrenmesi ile E-Ticaret Ürün Yorumlarının Otomatik Analizi. Avrupa Bilim Ve Teknol. Derg. 2023, 52, 110–121.

16. Demir, E.; Bilgin, M. Sentiment Analysis from Turkish News Texts with BERT-Based Language Models and Machine Learning Algorithms. In Proceedings of the 2023 8th International Conference on Computer Science and Engineering (UBMK), Burdur, Turkey, 14–17 September 2023; pp. 1–4.

17. Mayda, İ.; Uğurlu, Y. Predicting the Usefulness of Turkish Consumer Reviews on E-Commerce Websites. In Proceedings of the 2024 32nd Signal Processing and Communications Applications Conference (SIU), Mersin, Turkey, 23–26 May 2024.

18. Teke, B.; Yazıcı, S.N.; Zamir, G.; Budak, A.B.; Aksakallı, I.K. BERTurk-Based Sentiment Analysis on E-Commerce Multi-Domain Product Reviews. Afyon Kocatepe Univ. J. Sci. Eng. 2025, 25, 497–509.

19. Kumar, B.V.; Sadanandam, M. A Fusion Architecture of BERT and RoBERTa for Enhanced Performance of Sentiment Analysis of Social Media Platforms. Int. J. Comput. Digit. Syst. 2024, 15, 51–66.

20. Kurniadi, F.I.; Paramita, N.L.P.S.P.; Sihotang, E.F.A.; Anggreainy, M.S.; Zhang, R. BERT and RoBERTa Models for Enhanced Detection of Depression in Social Media Text. Procedia Comput. Sci. 2024, 245, 202–209.

21. Kılıçer, S.; Şamlı, R. E-Ticaret Sitelerindeki Türkçe Ürün Yorumları Üzerine Makine Öğrenmesi Algoritmaları ile Duygu Analizi. Veri Bilim. 2023, 6, 15–23.

22. Vural, M.; Aydoğan, M. Duygu Analizi Görevi Üzerinde Geniş Dil Modellerinin Performansının Kıyaslanması. In Proceedings of the 14th International Academic Research Congress (ICAR), Online, 14–15 October 2024.

23. Arzu, A.; Aydoğan, M. Transformers Tabanlı Mimariler ile Türkçe Duygu Sınıflandırması Karşılaştırmalı Analizi. 2023. Available online: https://dergipark.org.tr/tr/download/article-file/3364427 (accessed on 8 December 2025).

24. Oflazer, K. Türkçe ve Doğal Dil İşleme. T.B.V. Bilgisayar Bilimleri ve Mühendisliği Dergisi. 1994, 5. Available online: https://dergipark.org.tr/tr/pub/tbbmd/issue/22245/238795 (accessed on 8 December 2025).

25. Çabuk, M. E-Ticaret Ürün Yorumları Veri Seti. Kaggle. 2023. Available online: https://www.kaggle.com/datasets/mujdatcabuk/eticaret-urun-yorumlari (accessed on 8 December 2025).

26. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2017, arXiv:1711.05101.

27. de Leeuw, J.; Jia, H.; Yang, L.; Liu, X.; Schmidt, K.; Skidmore, A.K. Comparing Accuracy Assessments to Infer Superiority of Image Classification Methods. Int. J. Remote Sens. 2006, 27, 223–232.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.