Abstract

The growing use of Unmanned Aerial Vehicles (UAVs) raises the need to improve their autonomous navigation capabilities. Visual odometry makes it possible to dispense with positioning systems such as GPS, especially in indoor flights. This paper reports an effort toward UAV autonomous navigation by proposing a translational velocity observer for a quadrotor based on inertial and visual measurements. The proposed observer complementarily fuses measurements from different domains and is synthesized following the Immersion and Invariance observer design technique. A formal Lyapunov-based proof that the observer error converges to zero is provided. The proposed observer algorithm is evaluated through numerical simulations in the Parrot Mambo Minidrone App from Simulink-Matlab.

1. Introduction

Thanks to advances in unmanned aerial vehicle technology, these vehicles have gained ground in various applications, including law enforcement, precision agriculture, and architectural/industrial inspection. Researchers have also adopted this aircraft type, which offers excellent academic advantages, to test new nonlinear control methods. As crewless aircraft have advanced rapidly, nonlinear control theory has evolved with them: the foundations of classical nonlinear control theory, laid by, for instance, [,], have been quickly adapted to this century’s new technologies, as reported in [,]. Hence, quadrotor aerial vehicles have become a worldwide standard platform for robotics research. In particular, the Parrot Mambo Minidrone App in Simulink-Matlab offers excellent functionality for testing new control and state observation algorithms. It provides semi-realistic models of inertial and visual sensors, which makes real-time implementation straightforward.

Regarding the first developments of sensor fusion for unmanned aerial vehicles, in [] the measurements from an Inertial Navigation System (INS) and GPS sensors are fused using a Kalman filter; a simulation experiment was used to examine the data from both sensors, and tool implementations of the filter were considered. The research article [] establishes a basic requirement for an autonomous robot that merges data from different sensors: odometric and sonar sensors are fused by means of an Extended Kalman Filter (EKF). The adaptive algorithm localizes the vehicle precisely and can be applied in a wide range of experimental situations. The researchers in [] describe a simple yet powerful statistical technique for fusing information from different sensors, which aims to obtain the spectral error densities of the navigation gravity model, yielding a robust aerial model that absorbs vertical disturbances. The information is computed in the time-dependent frequency domain.

In [], the authors present a real-time implementation of position controllers based on the Adaptive–Proportional–Integral–Derivative (APID) method for the Parrot Mambo Minidrone. An adaptive mechanism based on second-order sliding mode control is also included to tune the conventional parameters of the altitude controller. Simulations and experimental flights validate this approach. The research reported in [] synthesizes Proportional–Integral–Derivative (PID), Linear Quadratic Regulator (LQR), and Model Predictive Control (MPC) algorithms for a Parrot Minidrone and analyzes the strengths and weaknesses of each. These control techniques were chosen because an MPC design for Parrot Minidrones was not available in the existing literature. The automatic code generation capabilities of the Simulink Coder facilitated the experimental implementation of the proposed control algorithms. However, the use of a linear quadrotor model causes a relatively large mismatch between simulations and experimental results. The work in [] presents the real-time implementation of a novel robust Cartesian translational control strategy for the Parrot Mambo Minidrone. Data were collected from flight experiments involving a set of four Parrot Mambo Minidrones, and a first-order dynamic with time delay was identified as the mathematical model of the Cartesian translational dynamics. The control strategy was designed based on a parameter-varying discrete-time linear system. Reference [] reports the design and implementation of a cascade attitude controller for the Parrot Mambo Minidrone. The controller was designed using the triple-step method and nonlinear integral sliding mode control (NISMC), based on the three-DOF nonlinear model. In [], a hand gesture-based drone controller for the Parrot Mambo Minidrone is developed; the controller can perform take-off, hovering, and landing operations. The delay between consecutive commands is only three seconds, and the hand gesture detection accuracy is high. The system could be extended by modifying the controller so that the UAV can move and execute multiple commands using hand gestures.

Current research aims to develop nonlinear observer strategies for autonomous navigation using only onboard sensors. The work in [] proposes an embedded fast Nonlinear Model Predictive Control (NMPC) algorithm. This controller ensures stable and safe flight of micro aerial robots that rely solely on onboard sensors to localize themselves; it can drive the micro aerial vehicle to track a path and withstand external disturbances. The design of a computationally efficient optical flow algorithm for tiny multirotor aerial vehicles, also known as pocket drones, is the focus of the work reported in []. The Edge-Flow algorithm uses a compressed representation of an image frame to match it with the frame from the previous time step; its adaptive time horizon also enables it to detect sub-pixel flow, from which velocities can be observed. Reference [] presents a fusion filter that combines the Kalman Filter (KF) with the No Motion No Integration Filter (NMNI) to reduce the influence of noise when observing the tilt angle using only one accelerometer and one gyroscope. This approach relieves the burden of multiple sensors for attitude tracking, reduces battery energy consumption, and helps the drone obtain a precise angle under rotor vibrations.

Translational velocity observation for quadrotors is an appealing problem from both practical and theoretical perspectives. In [], a globally exponentially convergent observer based on nonlinear adaptive techniques is proposed. The observer uses measurements from an Attitude and Heading Reference System (AHRS), and numerical simulations show that it performs well in the presence of noisy acceleration measurements. Reference [] reports the design of a deterministic quadrotor translational velocity observer used to reconstruct the scale factor of the position determined by a Simultaneous Localization And Mapping (SLAM) algorithm. This observer uses the translational acceleration and the non-scaled translational position, and its performance is evaluated through numerical simulations. Translational velocity observation is also an essential issue for fixed-wing aircraft. Reference [] presents an exponentially stable nonlinear wind velocity observer for fixed-wing unmanned aerial vehicles. The observer employs a GNSS-aided Inertial Navigation System (INS), an attitude observer, and a pitot-static probe measuring dynamic pressure and airspeed in the longitudinal direction. It estimates the wind velocity and, as by-products, the angles of attack and sideslip and the scaling factor of the pitot-static probe measurement, without requiring persistently exciting (PE) UAV maneuvers. The algorithm is well suited for embedded systems and was tested through simulations. Finally, in [], a uniformly semi-globally exponentially stable nonlinear observer for attitude, gyro bias, position, velocity, and specific force estimation for a fixed-wing UAV is presented. This observer uses inertial and visual measurements without any assumptions about the flight altitude or the structure of the terrain being recorded. Experimental data from a UAV test flight and simulated data show that it performs robustly.

A review of the existing literature reveals significant gaps in quadrotor translational velocity observation: there is no deterministic observer that complementarily fuses inertial and visual measurements, comes with a formal proof of convergence of the observation error, and is validated through semi-realistic numerical simulation. By employing the Immersion and Invariance method, this research fills these gaps and presents a technique to complementarily fuse both measurements, accompanied by a formal proof that the observation error converges to zero. This research was driven by the aim of enhancing the capability of quadrotors to fly autonomously using only onboard sensors. Regarding the autonomous operation of aerial vehicles, [] considers UAV-mounted base stations, which are expected to become an integral component of future intelligent transportation systems. The aerial vehicle, integrated with the ground vehicular network, is used to bridge coverage gaps, offer broader communication services, and improve network connection stability; understanding its own situation is the main task performed by the autonomous unmanned vehicle. As mentioned, a fundamental goal of autonomous UAVs is the development of navigation systems that enable all kinds of aerial operations; in [], a nano-UAV learns to detect and fly through programmed trajectories with an autonomous navigation system based on neural networks. That work is highly ambitious because, besides the autonomy requirements, it must establish appropriate sensor fusion as well as an overall perception of the whole aerial environment. Key insights from the present research include the complementary nature of inertial and visual sensors. Moreover, the findings reported in this article complement the work presented in []. The nonlinear translational velocity observer of [] degrades when the quadrotor remains in hover; this problem is overcome here by adding the optical flow measurement. Adding the optical flow measurement also gives the proposed observer an advantage over the observer reported in []. Finally, note that the nonlinear observers reported in [,] use different sensors.

This paper introduces a novel sensor fusion strategy for observing the quadrotor translational velocity. The proposed observer algorithm fuses inertial and visual measurements and is synthesized following the Immersion and Invariance approach introduced in []. Convergence of the observer error to zero is guaranteed using Lyapunov theory arguments, and the observer’s performance is evaluated through numerical simulations in the semi-realistic Parrot Minidrone app from Matlab-Simulink. The simulations show that the proposed observer operates effectively under noisy measurements and for different quadrotor motions.

This work has the following structure: Section 2 presents the quadrotor model and describes the available measurements. Section 3 reports the sensor fusion algorithm and formally states the nonlinear observer design. Section 4 is devoted to the numerical simulation study, the observation error, and the stability properties of the designed observer; finally, Section 5 presents the conclusions of this work.

2. Quadrotor Mathematical Model and Available Measurements

2.1. Quadrotor Dynamics

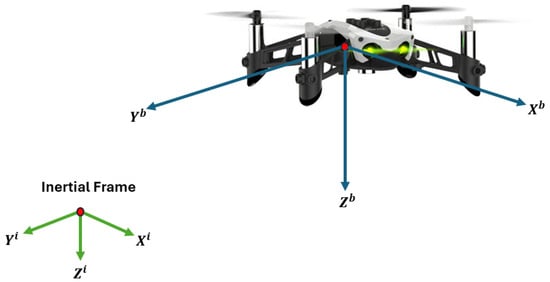

The basic structure of the quadrotor dynamic model has been reported in various research papers and books. This work considers the quadrotor dynamic model reported in [,]. The model contains states expressed in two reference frames, the inertial frame and the body-fixed frame, and is described by the following set of differential equations (see Figure 1).

where is the quadrotor position in the inertial reference frame, and is the rotation matrix from body-fixed to inertial coordinates, with

where is the identity matrix, and denotes the translational velocity expressed in the body-fixed frame. Moreover, m represents the vehicle’s mass, g is the gravitational acceleration, , is the total thrust force produced by the four rotors,

and is a skew symmetric matrix such that

is the inertia matrix, is the quadrotor angular velocity expressed in body-fixed frame coordinates, and is the vector of moments generated by the differential rotor thrusts and the rotor reaction moments.
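Two structural properties used throughout the model, that the rotation matrix is orthonormal with unit determinant and that the skew-symmetric matrix reproduces the cross product, can be checked numerically. This is a minimal sketch that assumes a ZYX (yaw-pitch-roll) Euler parameterization of the body-to-inertial rotation; the source does not state which parameterization the simulator uses, and the helper names `skew` and `rot_zyx` are hypothetical.

```python
import numpy as np

def skew(w):
    """Skew-symmetric matrix S(w) such that S(w) @ b equals np.cross(w, b)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def rot_zyx(phi, theta, psi):
    """Body-to-inertial rotation R = Rz(psi) @ Ry(theta) @ Rx(phi) (assumed ZYX Euler angles)."""
    cf, sf = np.cos(phi), np.sin(phi)
    ct, st = np.cos(theta), np.sin(theta)
    cp, sp = np.cos(psi), np.sin(psi)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cf, -sf], [0.0, sf, cf]])
    ry = np.array([[ct, 0.0, st], [0.0, 1.0, 0.0], [-st, 0.0, ct]])
    rz = np.array([[cp, -sp, 0.0], [sp, cp, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx

R = rot_zyx(0.1, -0.2, 0.7)          # roll, pitch, yaw [rad], arbitrary test values
w = np.array([0.3, -0.5, 1.2])       # angular velocity [rad/s]
b = np.array([1.0, 2.0, -0.5])       # arbitrary body-frame vector

orthonormal = np.allclose(R.T @ R, np.eye(3)) and np.isclose(np.linalg.det(R), 1.0)
cross_ok = np.allclose(skew(w) @ b, np.cross(w, b))
antisym_ok = np.allclose(skew(w).T, -skew(w))
print(orthonormal, cross_ok, antisym_ok)   # True True True
```

The same `skew` construction is what turns the cross product with the angular velocity into a matrix product, which is convenient when the model is written in vector form.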

Figure 1.

Parrot Mambo’s coordinate frames.

2.2. Available Measurements

This work considers that the quadrotor has an Inertial Measurement Unit (IMU), a monocular camera pointing downwards, and a height sensor. Thus, the following signals are available.

2.2.1. Quadrotor’s Specific Translational Acceleration

As their main electronic sensor, multirotors carry an IMU, which provides measurements expressed in body-fixed coordinates. An IMU delivers the specific translational acceleration , the angular velocity , and the intensity of the Earth’s magnetic field. According to the work reported in [], the specific translational acceleration measured by the accelerometer of an IMU mounted on board an aerial vehicle is given by

where models the total external forces acting on the vehicle where the IMU is mounted. From the second equation in (1), it follows that

Notice that the above equation does not include the term because the Coriolis acceleration is an inertial force. Substituting (3) into (2) yields the specific force measured by an accelerometer onboard a quadrotor:

The first available measurement is thus the specific translational acceleration, which reads component-wise as follows:
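The link between the body-frame velocity dynamics and what an accelerometer senses can be verified numerically. This sketch assumes the standard accelerometer model, in which the body-axis specific force equals R^T (d²p/dt² - g e3) for inertial position p in a North-East-Down frame (e3 pointing down), together with the kinematics dp/dt = R V and dR/dt = R S(Ω). The paper’s own symbols in (2)-(4) were lost in extraction, so these conventions and all helper names are assumptions. Differentiating R V gives d²p/dt² = R(dV/dt + Ω × V), so the specific force also equals dV/dt + Ω × V - g R^T e3; the code compares both expressions.

```python
import numpy as np

G = 9.81                          # gravitational acceleration [m/s^2]
E3 = np.array([0.0, 0.0, 1.0])    # NED convention: inertial z axis points down

def skew(w):
    return np.array([[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]])

def expm_so3(w):
    """Rodrigues formula for exp(S(w)), the rotation by the rotation vector w."""
    th = np.linalg.norm(w)
    if th < 1e-12:
        return np.eye(3) + skew(w)
    K = skew(w / th)
    return np.eye(3) + np.sin(th) * K + (1.0 - np.cos(th)) * (K @ K)

omega = np.array([0.2, -0.1, 0.5])             # constant body angular velocity [rad/s]

def R(t):
    return expm_so3(omega * t)                 # satisfies R_dot = R S(omega), R(0) = I

def V(t):
    return np.array([np.sin(t), 0.5 * t, np.cos(2 * t)])        # body velocity [m/s]

def V_dot(t):
    return np.array([np.cos(t), 0.5, -2.0 * np.sin(2 * t)])

def p_dot(t):
    return R(t) @ V(t)                         # inertial velocity

t, h = 1.3, 1e-4
p_ddot = (p_dot(t + h) - p_dot(t - h)) / (2 * h)        # central difference

f_inertial = R(t).T @ (p_ddot - G * E3)                 # definition, body axes
f_body = V_dot(t) + np.cross(omega, V(t)) - G * R(t).T @ E3
print(np.allclose(f_inertial, f_body, atol=1e-6))       # True
```

In level hover (V = 0, Ω = 0, R = I) the second expression reduces to -g e3, that is, an accelerometer at rest reads -g along the downward body axis under this convention.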

2.2.2. Quadrotor’s Attitude and Angular Velocity

The IMU data, processed through an estimation algorithm, constitute an Attitude and Heading Reference System (AHRS) that provides the quadrotor attitude R and angular velocity . The AHRS can deliver the attitude through a parameterization such as Euler angles or quaternions, or deliver the rotation matrix R directly. Thus, one has

2.2.3. Optical Flow

Nowadays, the second crucial sensor for autonomous navigation is the monocular camera. By processing the camera images, the pixel velocity can be measured and related to the quadrotor velocity.

The cornerstone of interpreting an image inside a computer is brightness. The camera sensor integrates the irradiance from the scene so that defines the brightness of the pixel located at ; thus, the k-th image captured at time t can be characterized as [],

Computer vision deals with extracting meaningful information from images, that is, from brightness. A much more straightforward problem is computing image motion information from brightness. Consider two images of the same pixel location, and , taken from infinitesimally close vantage points at infinitesimally close time instants; thus,

where with an infinitesimal time increment. Assume that only pixels belonging to flat and parallel image plane portions and moving parallel to the image plane are considered. Then,

with and the pixel velocity. As a result,

Expanding the right-hand-side of Equation (7) by the Taylor series, one has

Neglecting the high-order terms, it follows that

This equation is known as the brightness constancy constraint [] or the optical flow constraint equation [,]. Since it is a single scalar equation in the two unknown speeds and , the brightness constancy constraint only determines the velocity component along the image gradient; the component perpendicular to the image gradient cannot be computed from it. This drawback is known as the aperture problem []. It is therefore necessary to evaluate the brightness constancy constraint at every pixel location belonging to a region where and can be assumed constant, for example, the window

with the image plane. If the window is fixed inside the image plane, the computed speeds and are known as the optical flow. Using the differential method proposed by Lucas–Kanade [], the computation of constant values of and in each small neighborhood can be implemented as the minimization of

with , a function giving more influence to constraints at the center of than those on the boundary. As reported in [], this method is one of the most reliable.
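The brightness constancy constraint, the aperture problem, and the weighted Lucas–Kanade least-squares step can be illustrated on synthetic images. In this minimal sketch, spatial derivatives are approximated with `np.gradient` (central differences) and the temporal derivative with a frame difference; the test images, the Gaussian weighting window, and the function name `lucas_kanade_window` are assumptions made for illustration, not the implementation used in the simulator.

```python
import numpy as np

ys, xs = np.mgrid[0:64, 0:64].astype(float)

# 1) Brightness constancy: a pattern translated by (vx, vy) px/frame satisfies
#    Ix*vx + Iy*vy + It ~ 0, up to the neglected higher-order Taylor terms.
vx, vy = 0.4, -0.25
I0 = np.sin(0.3 * xs) + np.cos(0.2 * ys)
I1 = np.sin(0.3 * (xs - vx)) + np.cos(0.2 * (ys - vy))   # I(x, y, t+1) = I(x - vx, y - vy, t)
Iy, Ix = np.gradient(I0)             # axis 0 = rows = y, axis 1 = columns = x
residual = Ix * vx + Iy * vy + (I1 - I0)
print(np.abs(residual[2:-2, 2:-2]).max() < 0.02)          # True

# 2) Aperture problem: stripes varying only along x give a rank-1 normal matrix,
#    so the flow component along the stripes (perpendicular to the gradient) is lost.
Sy, Sx = np.gradient(np.sin(0.3 * xs))
A_stripes = np.array([[np.sum(Sx * Sx), np.sum(Sx * Sy)],
                      [np.sum(Sx * Sy), np.sum(Sy * Sy)]])
print(np.linalg.matrix_rank(A_stripes))                   # 1

# 3) Lucas-Kanade: weighted least squares over a window; w plays the role of W^2,
#    giving constraints near the window centre more influence.
def lucas_kanade_window(J0, J1, center, half=7, sigma=3.0):
    Jy, Jx = np.gradient(J0)
    Jt = J1 - J0
    r, c = center
    win = (slice(r - half, r + half + 1), slice(c - half, c + half + 1))
    dy, dx = np.mgrid[-half:half + 1, -half:half + 1]
    w = np.exp(-(dx**2 + dy**2) / (2.0 * sigma**2))
    jx, jy, jt = Jx[win], Jy[win], Jt[win]
    A = np.array([[np.sum(w * jx * jx), np.sum(w * jx * jy)],
                  [np.sum(w * jx * jy), np.sum(w * jy * jy)]])
    b = -np.array([np.sum(w * jx * jt), np.sum(w * jy * jt)])
    return np.linalg.solve(A, b)     # minimizer of sum w * (Jx*vx + Jy*vy + Jt)^2

def blob(x0, y0):
    return np.exp(-((xs - x0)**2 + (ys - y0)**2) / (2.0 * 6.0**2))

v_hat = lucas_kanade_window(blob(32.0, 32.0), blob(32.3, 31.8), center=(32, 32))
print(v_hat)                         # approximately [0.3, -0.2] px/frame
```

The rank-1 matrix in step 2 is exactly why the constraint must be pooled over a window with sufficient texture: the 2 × 2 system in step 3 is solvable only when the window contains gradients in two independent directions.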

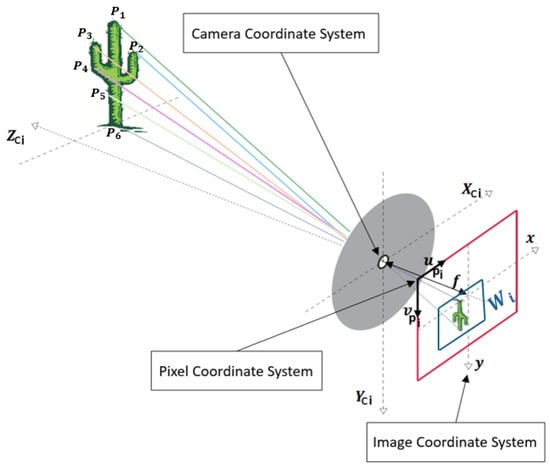

Consider a camera onboard the aerial vehicle monitoring several characteristic points located at , as illustrated in Figure 2. This figure is adapted from [] with slight additions. The velocity of each point relative to the camera is given by:

From the perspective projection condition, it follows that the location of each characteristic point projects on the image plane as follows

with f the focal length of the camera lens. Combining (10) and (11), one obtains

the pixel velocity registered by the camera due to the quadrotor motion.
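The chain from the relative point velocity to the image-plane velocity can be checked with a finite-difference test. This sketch assumes that a static scene point seen from the moving camera has relative velocity -V - Ω × P (camera axes taken aligned with the body axes) and that the projection is x = f X/Z, y = f Y/Z; since the paper’s equations (10)-(12) were lost in extraction, these forms, the focal length value, and the function name are assumptions.

```python
import numpy as np

def pixel_velocity(P, V, omega, f):
    """Image-plane velocity of a static scene point P (camera frame) for camera motion (V, omega)."""
    P_dot = -V - np.cross(omega, P)             # assumed relative velocity of a fixed point
    X, Y, Z = P
    x, y = f * X / Z, f * Y / Z                 # perspective projection
    x_dot = (f * P_dot[0] - x * P_dot[2]) / Z   # quotient rule: f (X_dot Z - X Z_dot) / Z^2
    y_dot = (f * P_dot[1] - y * P_dot[2]) / Z
    return np.array([x_dot, y_dot]), P_dot

f = 1.0                                         # hypothetical focal length (normalized units)
P = np.array([0.4, -0.3, 1.5])
V = np.array([0.5, 0.2, -0.1])
omega = np.array([0.1, -0.2, 0.3])
flow, P_dot = pixel_velocity(P, V, omega, f)

def project(Q):
    return f * Q[:2] / Q[2]

h = 1e-6
flow_fd = (project(P + h * P_dot) - project(P - h * P_dot)) / (2 * h)
print(np.allclose(flow, flow_fd, atol=1e-6))    # True
```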

Figure 2.

Pinhole camera principle [].

Figure 2 shows that . Using the differential method proposed by Lucas–Kanade to determine and in a region , it is possible to obtain the quadrotor translational velocity as follows

in an image region for some i.

Consider the following assumption,

Assumption 1.

The optical flow is computed in a region that contains the image-origin pixel; that is, . Moreover, the quadrotor has a height controller that ensures for some constant and .
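Evaluating the flow at the image origin, as Assumption 1 allows, makes the velocity recovery particularly simple. Under the assumed relative-motion model P_dot = -V - Ω × P with P = (0, 0, Z), the origin flow is x_dot = -f Vx/Z - f Ωy and y_dot = -f Vy/Z + f Ωx, so the rotational part can be removed with the AHRS angular rates and the depth Z supplied by the height sensor. This is a minimal sketch that assumes camera axes aligned with the body axes and a flat scene, so that Z equals the measured height; the paper’s expression (13) may differ in sign conventions, and the function name is hypothetical.

```python
import numpy as np

def velocity_from_origin_flow(flow, omega, Z, f):
    """Recover (Vx, Vy) from the optical flow measured at the image origin (x = y = 0).

    Assumes camera axes aligned with body axes, a static flat scene at depth Z
    (the measured height under Assumption 1), and P_dot = -V - omega x P.
    """
    x_dot, y_dot = flow
    vx = -Z * (x_dot / f + omega[1])   # removes the rotation-induced term -f * omega_y
    vy = -Z * (y_dot / f - omega[0])   # removes the rotation-induced term  f * omega_x
    return np.array([vx, vy])

# Synthetic check: generate the flow at the origin from a known motion
f, Z = 1.0, 1.2                        # hypothetical focal length and height
V = np.array([0.35, -0.15, 0.0])
omega = np.array([0.05, -0.1, 0.2])
P = np.array([0.0, 0.0, Z])            # scene point on the optical axis
P_dot = -V - np.cross(omega, P)
flow = f * P_dot[:2] / Z               # at x = y = 0 the x * Z_dot / Z terms vanish
v_rec = velocity_from_origin_flow(flow, omega, Z, f)
print(v_rec)                           # approximately [0.35, -0.15]
```

Without the angular-rate correction, a pure rotation would be misread as translation, which is one reason the attitude and rate measurements of Section 2.2.2 are needed alongside the camera.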

2.2.4. Height Sensor

Ultrasonic or laser distance sensors can be mounted on quadrotors. Hence, it is assumed that the following signals are also measured

thus, the matrix is also measurable since it can be expressed as

Finally, note that in terms of the measurable signals, the second equation in (1) can be expressed as

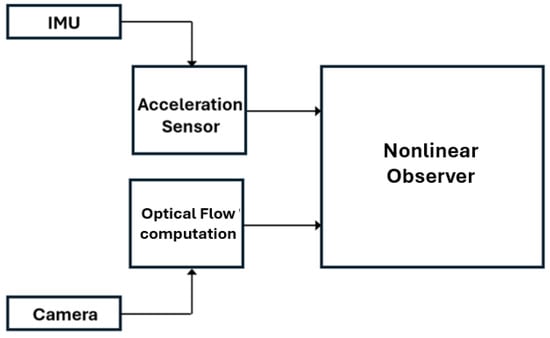

3. Nonlinear Observer Design

Sensor fusion has become essential for solving state observation problems in mobile robotics []. Better spatial and temporal coverage, robustness to sensor failures, and increased state observation accuracy are the main desirable properties of sensor fusion algorithms. This article proposes a sensor fusion algorithm to estimate the quadrotor Cartesian velocity based on the Immersion and Invariance observer design technique proposed in []. The proposed fusion algorithm can be classified as complementary fusion across domains [,]. Both sensors measure the same quantity in different domains, namely acceleration and pixel velocity, and work in a complementary configuration. Figure 3 schematically illustrates the proposed sensor fusion. The sensor fusion algorithm is designed in a deterministic, nonlinear, time-invariant framework.

Figure 3.

Sensor fusion.

The observer design method can be explained as follows. Consider the following non-linear, deterministic, time-invariant system [].

where and are the unmeasured and measured states, respectively.

Definition 1.

The dynamic system

- is positively invariant;

The design of the observer of the form given in Definition 1 requires additional properties on the mapping , as stated in the following result.

Theorem 1.

Consider the system (17). Assume that the vector fields and are forward complete and that there exist differentiable maps such that

- A1 For all and the map satisfies,

- A2 The dynamic system has a (globally) asymptotically stable equilibrium at uniformly in and .

Remark 1.

The result in Theorem 1 is a simplified version of the general observer design theory reported in []. The proof of Theorem 1 was reported in []. The sensor fusion characteristics of the observer in Definition 1 are as follows. Assume that two measurements and contain information on the nonmeasurable state η. Then, it is possible to define a function that fuses both measurements, and this function then incorporates the fusion into the observer design procedure.
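To make the roles of the map β and of the invariant manifold in Theorem 1 concrete, the following sketch applies the same construction to a textbook double integrator rather than to the quadrotor: the position y is measured, the velocity η is not, and the acceleration a is a known input. Choosing β(y) = k y and the observer-state dynamics chi_dot = a - k (chi + k y) gives the off-manifold coordinate z = chi + β(y) - η with z_dot = -k z, so the estimate chi + β(y) converges exponentially without ever differentiating y. The example, the gain k, and the variable names are illustrative assumptions and do not reproduce the observer (29).

```python
import numpy as np

k, dt = 2.0, 1e-3                  # observer gain and Euler step (illustrative)
t = np.arange(0.0, 5.0, dt)
a = np.cos(t)                      # measured acceleration (known input)

y, eta = 0.0, 1.0                  # plant: measured position y, unmeasured velocity eta
chi = 0.0                          # observer state; initial estimate eta_hat = chi + k*y = 0
errors = []
for a_k in a:
    eta_hat = chi + k * y          # eta_hat = chi + beta(y), with beta(y) = k*y
    errors.append(eta_hat - eta)   # off-manifold coordinate z
    chi += dt * (a_k - k * eta_hat)    # chi_dot uses measured signals only
    y += dt * eta                  # plant: y_dot = eta
    eta += dt * a_k                #        eta_dot = a
errors = np.array(errors)

# With forward Euler the error obeys z[n+1] = (1 - k*dt) * z[n] exactly
print(np.allclose(errors[1:], (1 - k * dt) * errors[:-1]), abs(errors[-1]) < 1e-3)
```

The unknown velocity never has to be integrated directly: β injects the measured output, and the observer state only has to cancel the term that β’s time derivative would otherwise require.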

Cartesian Velocity Observer

Here, the quadrotor Cartesian velocity observer is designed. The observer fuses all measurements described in Section 2.2. First, the following signals are constructed from the available measurements

with

From the definition of the manifold (19), the observer error is defined as

with

with and scalar constants. Note that the function fuses information on the same quantity, the translational velocity, expressed in two different domains: the body-axis acceleration , and the optical flow . The scalars and modulate the fusion. The time derivative of the observer error reads as

Using (16) and (22), one obtains

Now, to express (26) in terms of the observer error, Equation (23) is solved for and substituted into (26). Thus,

Defining

with a matrix gain, the state observer dynamic can be defined as follows

Note that the observer dynamics depend only on available measurements and known parameters. Then, the following vector differential equation describes the observer error dynamics

Hence, one has the following.

Proposition 1.

Consider that Assumption 1 holds. Assume that the quadrotor is equipped with a set of sensors to measure , . Assume further that the quadrotor flies over a surface with enough visual features and that there is a region containing the pixel location , where the optical flow is constant. Then, there exist constants , and a matrix Γ such that the observer dynamics (29) complementarily fuse the available measurements and the observer error converges exponentially to zero.

Proof.

The function in (24) performs the complementary fusion of the available measurements directly related to the quadrotor translational velocity. From this point, the observer design follows the lines of Theorem 1.

To analyze the observation error’s stability properties, consider the following Lyapunov function []

with a diagonal positive definite matrix. Thus, one has

with and the smallest and greatest eigenvalues of any matrix A.
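The eigenvalue bounds above are what turn a decreasing Lyapunov function into an exponential bound on the error norm. The sketch below illustrates this on a simplified, hypothetical linear error dynamics with diagonal gains, z_dot = -diag(gamma) z, and a diagonal positive definite weight P; it is not the paper’s error dynamics (30), which are nonlinear and time-varying, and all numbers are arbitrary. In this case V = z^T P z satisfies V_dot = -2 sum_i p_i gamma_i z_i^2 <= -2 min(gamma) V, and the eigenvalue bounds then give ||z(t)||^2 <= (lambda_max(P)/lambda_min(P)) ||z(0)||^2 exp(-2 min(gamma) t).

```python
import numpy as np

P = np.diag([0.5, 2.0, 3.5])          # hypothetical diagonal positive definite weight
gamma = np.array([1.0, 0.4, 2.5])     # hypothetical diagonal error gains
lam = np.linalg.eigvalsh(P)           # eigenvalues in ascending order
z0 = np.array([1.0, -2.0, 0.5])

t = np.linspace(0.0, 10.0, 201)
z = z0[None, :] * np.exp(-np.outer(t, gamma))     # exact solution of z_dot = -diag(gamma) z
V = np.einsum("ti,ij,tj->t", z, P, z)             # V(t) = z^T P z

bound = (lam[-1] / lam[0]) * (z0 @ z0) * np.exp(-2.0 * gamma.min() * t)
print(np.all(np.diff(V) <= 0.0), np.all(np.sum(z**2, axis=1) <= bound + 1e-12))   # True True
```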

4. Results

This section presents a semi-realistic numerical simulation study to validate the theoretical developments of the previous section. First, the available measurements—the body axes acceleration and the optical flow—must be computed. Then, the proposed observer is implemented. It is essential to underscore that the Parrot Minidrone simulator provided by MATLAB-Simulink incorporates realistic quadrotor dynamics and sensor models. The works in [,,] illustrate that the experimental implementation is straightforward after performing numerical simulations using this simulator; see also the work in [], where semi-realistic simulations are performed.

The Parrot Minidrone’s physical characteristics and the camera specifications are summarized in Table 1.

Table 1.

Drone’s physical characteristics and camera specifications.

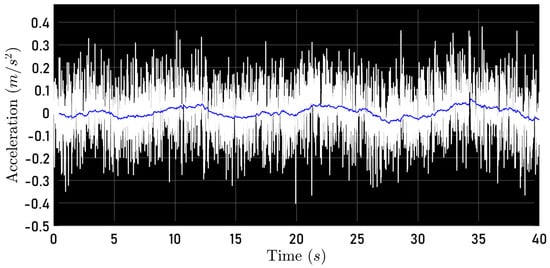

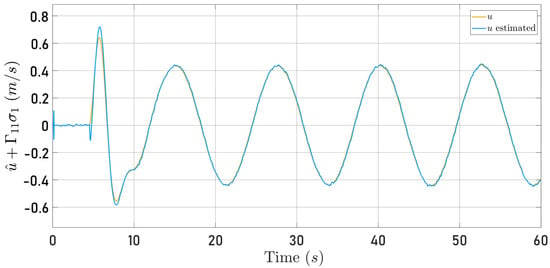

4.1. Determination of the Parameter

Note that in Equation (24), two measurements essential for reconstructing the quadrotor velocity are and . Table 1 shows that the quadrotor’s mass is available, but the parameter is not. Hence, to determine it, the quadrotor was flown along a circular trajectory while its acceleration and velocity u along the axis were recorded; the parameter then follows from the relationship

It is important to highlight that, to compute , the measured acceleration was filtered using a first-order low-pass filter, as recommended in []. The value of was then computed as the average of the values obtained during the flight. Figure 4 shows the recorded acceleration (white line) and the reconstructed acceleration (blue line), that is,

Hence, for this quadrotor, one has . The parameter is related to the blade’s aerodynamic profile and induced drag forces. This positive constant is known in helicopter literature as blade drag [,].

Figure 4.

Measured acceleration and reconstructed acceleration .

Note that this is an open-loop reconstruction, so and are not expected to coincide exactly. However, as reported in [,], this procedure gives an adequate approximation of .
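The identification procedure of this subsection can be sketched as follows. The sketch assumes the linear blade-drag relation a = -(mu/m) u between body-axis acceleration and velocity (the paper’s relationship was lost in extraction), uses synthetic data with hypothetical values of m and mu instead of those in Table 1, and the names `lowpass` and `mu_hat` are illustrative. Unlike the procedure described above, it passes the velocity through the same first-order filter as the acceleration, so both signals keep the same phase before their ratio is averaged; samples with small |u| are discarded because the ratio is ill-conditioned there.

```python
import numpy as np

def lowpass(x, dt, tau):
    """First-order low-pass filter y_dot = (x - y)/tau, discretized with backward Euler."""
    alpha = dt / (tau + dt)
    y = np.empty_like(x)
    y[0] = x[0]
    for k in range(1, len(x)):
        y[k] = y[k - 1] + alpha * (x[k] - y[k - 1])
    return y

m, mu_true = 0.07, 0.02                  # hypothetical mass [kg] and drag coefficient [kg/s]
dt = 0.005
t = np.arange(0.0, 20.0, dt)
u = 0.5 * np.sin(2 * np.pi * 0.1 * t)    # synthetic body-axis velocity [m/s]
rng = np.random.default_rng(1)
a_meas = -(mu_true / m) * u + 0.05 * rng.normal(size=t.size)   # noisy accelerometer (assumed model)

a_f = lowpass(a_meas, dt, tau=0.2)
u_f = lowpass(u, dt, tau=0.2)            # same filter on u keeps both signals in phase
mask = np.abs(u_f) > 0.1                 # skip samples where the ratio is ill-conditioned
mu_hat = np.mean(-m * a_f[mask] / u_f[mask])
a_rec = -(mu_hat / m) * u                # open-loop reconstructed acceleration
print(mu_hat)                            # close to mu_true = 0.02
```

Because the filter is linear, filtering both signals leaves their ratio unchanged in the noise-free case, so only the filtered noise perturbs the averaged estimate.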

4.2. Optical Flow Algorithm Design

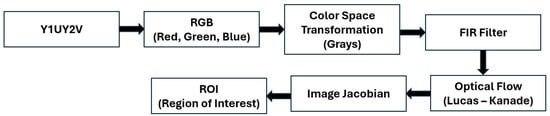

The optical flow estimation algorithm is implemented in the Parrot Minidrone Competition simulation environment, specifically in the Image Processing subsystem of the Flight Control System block. It proceeds as follows: (a) The monocular camera data, which arrive in the Y1UY2V format, are transformed into RGB format. (b) The RGB image is converted to grayscale and filtered using an FIR (Finite Impulse Response) filter represented as a 2D coefficient matrix or a pair of separable filter coefficient vectors. (c) The filtered image is used to estimate the optical flow with the Lucas–Kanade method. The block implementing the Lucas–Kanade method delivers the pixel displacement per frame as a complex number for each pixel of the image.

These displacements are multiplied by the camera’s frame rate (frames per second) to obtain the pixel velocity. Finally, the pixel velocities undergo statistical processing, which consists of selecting a region of interest on the image plane that, in accordance with Assumption 1, contains the image origin. Figure 5 shows a block diagram of the optical flow algorithm.
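The post-processing that follows the Lucas–Kanade block can be sketched as below. The sketch assumes the block output is a complex array whose real and imaginary parts are the x and y displacements in pixels per frame, takes the image origin as the central pixel, and uses the median over a square region of interest as the statistic; the frame rate, the ROI size, the choice of the median, and the function names are hypothetical. The grayscale helper uses the weights 0.2989, 0.5870, and 0.1140, which are the weights MATLAB’s `rgb2gray` applies.

```python
import numpy as np

def rgb_to_gray(rgb):
    """Weighted sum of R, G, B with the weights used by MATLAB's rgb2gray."""
    return rgb @ np.array([0.2989, 0.5870, 0.1140])

def roi_pixel_velocity(disp, fps, half=5, stat=np.median):
    """disp: complex (rows x cols) displacement per frame, real = x and imag = y [px/frame]."""
    rows, cols = disp.shape
    r0, c0 = rows // 2, cols // 2                  # image origin taken as the central pixel
    roi = disp[r0 - half:r0 + half + 1, c0 - half:c0 + half + 1]
    vel = roi * fps                                # [px/frame] * [frame/s] = [px/s]
    return np.array([stat(vel.real), stat(vel.imag)])

fps = 60.0                                         # hypothetical camera frame rate
disp = np.full((120, 160), 0.1 - 0.05j)            # uniform flow near the image origin...
disp[:10, :] = 5.0 + 5.0j                          # ...plus spurious vectors far from it
gray = rgb_to_gray(np.ones((120, 160, 3)))         # a white frame maps to about 1.0
print(roi_pixel_velocity(disp, fps))               # approximately [6.0, -3.0] px/s
```

A robust statistic such as the median limits the influence of isolated spurious flow vectors inside the region; outside the region they are ignored altogether.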

Figure 5.

Optical flow block design.

4.3. Quadrotor Trajectories

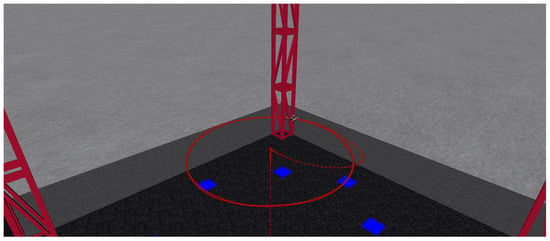

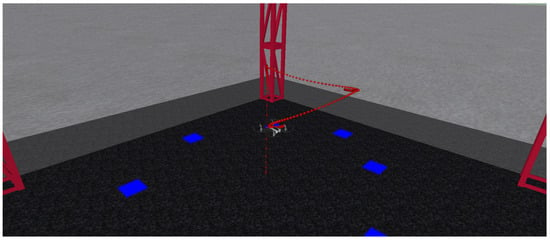

To test the proposed observer, two trajectories are considered. In the first, the quadrotor tracks a circle; in the second, the quadrotor visits two waypoints. Figure 6 shows the quadrotor’s path during the circle-tracking flight at constant altitude. Figure 7 depicts the quadrotor’s path during the two-waypoint flight, also at constant altitude.

Figure 6.

Aircraft tracking: circular-type trajectory.

Figure 7.

Aircraft tracking: square-type trajectory.

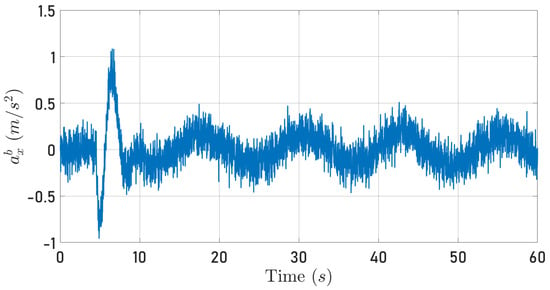

4.4. Measurements

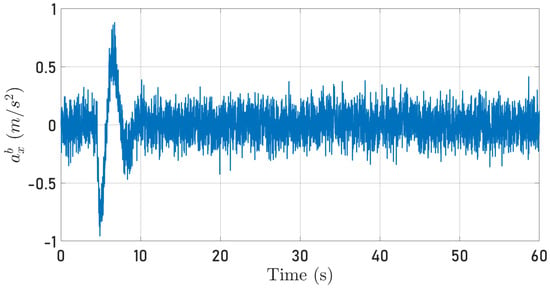

Note that the parameter is required to implement the proposed observer, since it is used to reconstruct the quadrotor specific force. The quadrotor acceleration is obtained directly from the IMU implemented in the simulator. Figure 8 and Figure 9 show the specific force along the axis delivered by the IMU when the quadrotor tracks the circular and the square-type trajectories, respectively (in the latter, the quadrotor first moves along the axis and, 10 s later, along the axis). Note that the Simulink IMU is implemented such that the accelerometers also measure the gravitational force, in contrast to Equation (4). Hence, the gravitational contribution is subtracted from the IMU accelerometer measurement to match (4).
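Because the gravity vector is fixed in the inertial frame, removing it from a body-axis accelerometer reading requires rotating it into body axes with the AHRS attitude rather than subtracting a constant from the vertical channel. The sketch below assumes that the simulator’s reading equals the specific force of (4) plus g R^T e3, with e3 the downward axis of a North-East-Down frame; this sign convention and the helper name `remove_gravity` are assumptions that should be checked against the simulator’s IMU model.

```python
import numpy as np

G = 9.81
E3 = np.array([0.0, 0.0, 1.0])            # NED: inertial z axis points down

def remove_gravity(acc_imu, R):
    """Subtract the gravity term g * R^T e3 (body axes) from an accelerometer sample.

    Assumes: simulator reading = specific force of (4) + g * R^T e3.
    Flip the sign of the subtraction if the simulator uses the opposite convention.
    """
    return acc_imu - G * (R.T @ E3)

phi = 0.2                                  # roll angle [rad]; pitch and yaw are zero
R = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(phi), -np.sin(phi)],
              [0.0, np.sin(phi), np.cos(phi)]])
a_true = np.array([-0.3, 0.1, -9.6])       # hypothetical specific force from (4)
a_imu = a_true + G * (R.T @ E3)            # simulated reading under the stated assumption
print(np.allclose(remove_gravity(a_imu, R), a_true))          # True
print(np.allclose(a_imu - np.array([0.0, 0.0, G]), a_true))   # False: the tilt matters
```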

Figure 8.

Specific force measured along the axis while the quadrotor follows a circular trajectory.

Figure 9.

Specific force measured along the axis while the quadrotor follows a square-type trajectory.

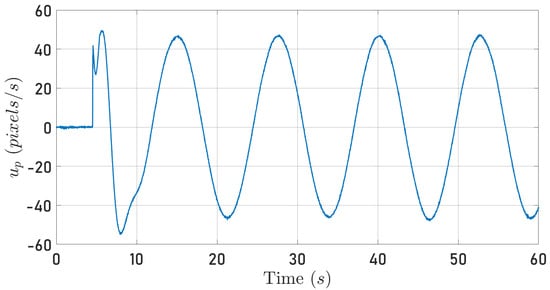

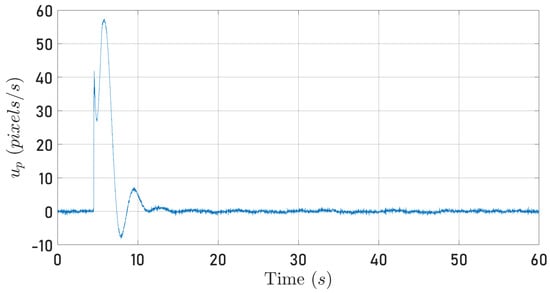

Figure 10 and Figure 11 depict the quadrotor optical flow along the axis computed as described in Section 4.2 when the quadrotor follows the circular and square-type trajectories, respectively.

Figure 10.

Computed optical flow along the axis while the quadrotor tracks a circular trajectory.

Figure 11.

Computed optical flow along the axis while the quadrotor tracks a square-type trajectory.

From Equation (22), it is clear that the signals plotted in Figure 8, Figure 9, Figure 10 and Figure 11 contain information about the translational velocity . No relationship between the specific force and optical flow measurements is evident by inspection. Nevertheless, the proposed observer fuses this information and extracts the translational velocity .

4.5. Observer Evaluation

The observer state dynamic described by Equation (29) is implemented inside the Parrot Mambo Minidrone simulator. The observed velocity

is compared with the velocity computed by the internal algorithm of the Parrot Mambo Minidrone simulator, which employs a Kalman filter.

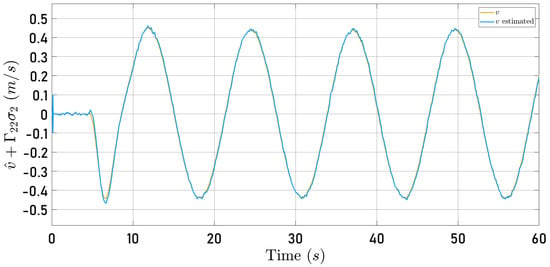

Figure 12 and Figure 13 show the time history of the observed velocity along the and axes, respectively, together with the speeds computed by the internal algorithm of the Parrot Mambo Minidrone simulator when the quadrotor follows the circular trajectory. The observer gain matrices were fixed as follows

The scalar constants and were fixed at the following values

Figure 12.

Observed speed (blue line) and speed computed by the Parrot Mambo simulator algorithm u (yellow line).

Figure 13.

Observed speed (blue line) and speed computed by the Parrot Mambo simulator algorithm v (yellow line).

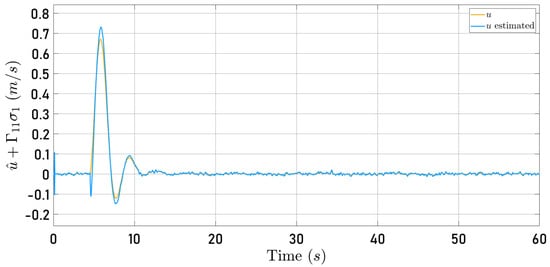

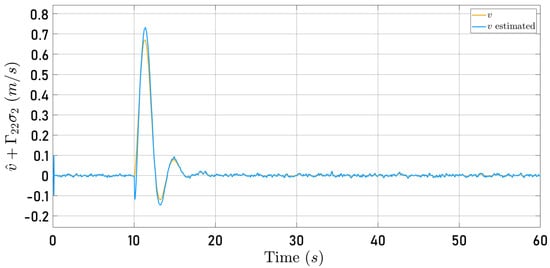

Figure 14 and Figure 15 present the observed speeds when the quadrotor follows the square-type trajectory, together with the speeds computed by the Parrot Mambo Minidrone algorithm.

Figure 14.

Observed speed (blue line) and speed computed by the Parrot Mambo simulator algorithm u (yellow line).

Figure 15.

Observed speed (blue line) and speed computed by the Parrot Mambo simulator algorithm v (yellow line).

Figure 12, Figure 13, Figure 14 and Figure 15 illustrate that, with the selected gains, the observer adequately reconstructs both translational speeds.

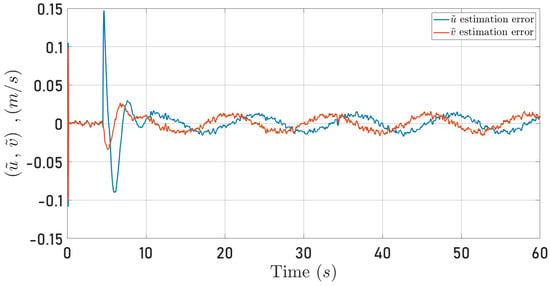

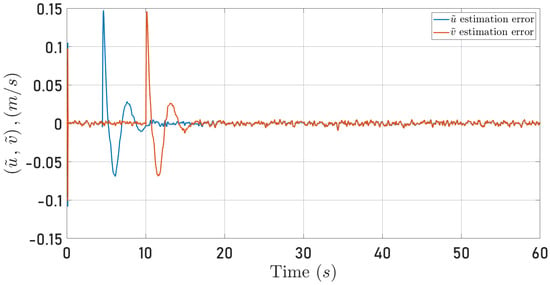

Figure 16 and Figure 17 present the observation errors, which allow a closer examination of the proposed algorithm’s performance. Note that the observation errors converge only to a neighborhood of zero. This behavior results from the semi-realistic simulation, which includes measurement noise. Nevertheless, the exponential convergence guaranteed by Proposition 1 in the noise-free case anticipates this robust behavior, as discussed in Remark 2.

Figure 16.

Observed speed errors for the circular trajectory. (blue line), (red line).

Figure 17.

Observed speed errors for the square-type trajectory. (blue line), (red line).

Remark 2.

Under mild assumptions, consider that measurement noise enters into the translational velocity dynamics (16), as follows

with a bounded time-varying disturbance modeling the noise in all measurements. Then, the observer error dynamics become

Now, from the analysis in Proposition 1, one obtains

with . Finally,

Hence, from Lemma 9.2 in [], it follows that the observer error is ultimately bounded, as observed in Figure 16. Note in (44) that the ultimate bound depends on the noise level modeled by .

A more challenging problem, in which the proposed observer design method may fail, is the case where the noise enters the translational velocity dynamics as follows

with and bounded time-varying disturbances.
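The ultimate-boundedness argument of Remark 2 can be visualized on a scalar stand-in for the perturbed error dynamics: with z_dot = -lam z + delta(t) and |delta| <= d_bar, the function V = z^2/2 satisfies V_dot <= -lam z^2 + |z| d_bar < 0 whenever |z| > d_bar/lam, so the error enters and stays in the set |z| <= d_bar/lam. The gain, the noise bound, and the worst-case disturbance delta = d_bar sign(z) are hypothetical, and the paper’s bound (44) depends on its own gains and Lyapunov weight, which this scalar sketch does not reproduce.

```python
import numpy as np

lam, d_bar, dt = 3.0, 0.3, 1e-3           # hypothetical decay rate, noise bound, Euler step
z = 2.0                                   # initial observer error
history = []
for _ in range(20000):                    # 20 s of forward-Euler integration
    delta = d_bar * (1.0 if z >= 0.0 else -1.0)   # worst-case bounded disturbance
    z += dt * (-lam * z + delta)
    history.append(z)
history = np.array(history)

tail = np.abs(history[-5000:])            # last 5 s
print(tail.max(), d_bar / lam)            # the tail settles at the bound d_bar / lam
```

With a worst-case disturbance the error settles exactly at the bound; with random noise of the same magnitude it typically stays well inside it, consistent with the neighborhood of zero seen in the simulations.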

4.6. Observer Gains

A nonlinear time-varying model describes the observer error dynamics (see Equation (30)). Thus, determining adequate observer gains is a complex task; however, with some mild assumptions, at least locally, it is possible to determine a suitable observer gains combination. For example, assume that ; then, the observer error dynamics reduces to

Hence, the following eigenvalues locally shape the observer error dynamics

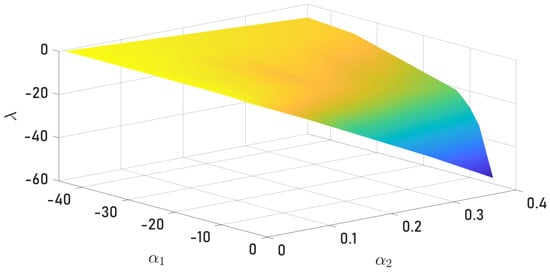

From Equation (47), the selection follows directly. Now, assuming that , the combination of and was selected as follows. For a fixed value of , the eigenvalue was computed over intervals of and that yield a negative value, as illustrated in Figure 18. Note that a negative eigenvalue alone does not guarantee an adequate observation of the quadrotor velocity, owing to numerical problems in the simulation.

Figure 18.

Eigenvalues for different combinations of gains and .

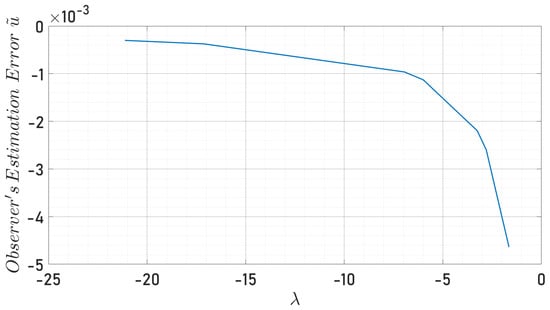

Figure 18 shows the region of suitable values of the scalar constants, together with the corresponding eigenvalues described previously. The color scale indicates the eigenvalue obtained for each combination of the scalar constants. Finally, Figure 19 shows the estimation error along the x-axis only, since the y-axis exhibits analogous behavior. As expected, increasing the value of decreases the observer error until, at a specific value of , numerical errors appear and the observer error diverges.
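One plausible mechanism for the divergence at large gains, assuming the observer is integrated with a fixed-step explicit (forward-Euler-like) solver, is the step-size stability limit: for z_dot = -lam z the discrete update z[n+1] = (1 - lam dt) z[n] decays only while lam dt < 2. The sketch below uses a hypothetical 5 ms step; the source does not state which solver or step size the Parrot Minidrone model uses, so this is an illustration rather than a diagnosis.

```python
def euler_error(lam, dt=0.005, steps=400, z0=1.0):
    """|z| after `steps` forward-Euler steps of z_dot = -lam * z (hypothetical 5 ms step)."""
    z = z0
    for _ in range(steps):
        z += dt * (-lam * z)
    return abs(z)

# Decay improves up to lam*dt = 1, oscillates for 1 < lam*dt < 2, diverges beyond 2
for lam in (10.0, 100.0, 300.0, 450.0):
    print(f"lam={lam:6.1f}  lam*dt={lam * 0.005:5.3f}  |z|={euler_error(lam):.3e}")
```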

Figure 19.

Eigenvalues vs. observer estimation error along the x-axis.
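One plausible mechanism for the divergence at large gains, assuming the observer is integrated with a fixed-step explicit (forward Euler) solver, is numerical instability. For a scalar error mode e' = -lam*e, forward Euler gives e[n+1] = (1 - lam*h)*e[n], which decays faster as lam grows but diverges once lam*h > 2, even though lam > 0. The sketch below illustrates this with an assumed step size h; the solver and step actually used in the Simulink model may differ.

```python
def euler_error(lam: float, h: float, e0: float = 1.0, steps: int = 200) -> float:
    """Final |e| after forward-Euler integration of e' = -lam*e with step h."""
    e = e0
    for _ in range(steps):
        e = (1.0 - lam * h) * e      # one explicit Euler step
    return abs(e)


h = 0.005                            # assumed fixed step size [s]
for lam in (50.0, 200.0, 399.0, 401.0, 600.0):
    print(f"lambda={lam:6.1f}  lambda*h={lam * h:5.3f}  |e_final|={euler_error(lam, h):.3e}")
```

With h = 0.005 s the critical value is lam = 2/h = 400: just below it the error still shrinks, just above it the error grows without bound, matching the qualitative trend of a gain that improves the estimate up to a point and then causes divergence.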

5. Conclusions

This article proposed a novel sensor-fusion observer that estimates the translational velocity of a quadrotor from the measurements available from its onboard sensors. Besides the successful implementation of the designed observer, the main contributions are as follows:

- The designed observer accurately estimates the linear velocities of the aircraft along different trajectories.

- Unlike other research works, the optical flow was obtained without using image features or patterns.

- A suitable Lyapunov function was proposed, and Lyapunov’s theory was applied to prove that the nonlinear observer error converges asymptotically to zero.

- As stated at the outset, this article aimed to compensate for the loss of positioning information in indoor flights by estimating the vehicle’s translational velocities. The estimated velocity is accurate enough to fulfill this main objective.

- As anticipated in the introduction, the proposed observer outperformed the observer proposed in [], mainly on flight trajectories in which the MAV remains stationary (hovering) at certain moments; this improvement is due to the incorporation of optical flow into the observation algorithm.

Author Contributions

Conceptualization, H.R.-C.; methodology H.R.-C. and L.A.M.-P.; software J.R.M.-I.; validation H.R.-C., L.A.M.-P. and J.M.-U.; formal analysis H.R.-C.; investigation H.R.-C., L.A.M.-P., J.M.-U. and J.R.M.-I.; writing—original draft preparation J.R.M.-I. and J.M.-U.; writing—review and editing H.R.-C., L.A.M.-P., J.M.-U. and J.R.M.-I.; supervision H.R.-C. and L.A.M.-P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Simulation files are available upon request from the first or second author.

Acknowledgments

The first and second authors would like to acknowledge the financial support from CONACyT.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lyapunov, A.M. The general problem of the stability of motion. Int. J. Control. 1992, 55, 531–534.

- Khalil, H.K. Control of Nonlinear Systems; Prentice Hall: New York, NY, USA, 2002.

- Astolfi, A.; Karagiannis, D.; Ortega, R. Nonlinear and Adaptive Control with Applications; Springer: Cham, Switzerland, 2008; Volume 187.

- Van der Schaft, A. L2-Gain and Passivity Techniques in Nonlinear Control; Communications and Control Engineering; Springer: Cham, Switzerland, 2016.

- Sasiadek, J.; Hartana, P. Sensor fusion for navigation of an autonomous unmanned aerial vehicle. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 4, pp. 4029–4034.

- Jetto, L.; Longhi, S.; Venturini, G. Development and experimental validation of an adaptive extended Kalman filter for the localization of mobile robots. IEEE Trans. Robot. Autom. 1999, 15, 219–229.

- Harriman, D.W.; Harrison, J.C. Gravity-induced errors in airborne inertial navigation. J. Guid. Control. Dyn. 1986, 9, 419–426.

- Noordin, A.; Mohd Basri, M.A.; Mohamed, Z. Adaptive PID Control via Sliding Mode for Position Tracking of Quadrotor MAV: Simulation and Real-Time Experiment Evaluation. Aerospace 2023, 10, 512.

- Okasha, M.; Kralev, J.; Islam, M. Design and Experimental Comparison of PID, LQR and MPC Stabilizing Controllers for Parrot Mambo Mini-Drone. Aerospace 2022, 9, 298.

- Rubio Scola, I.; Guijarro Reyes, G.A.; Garcia Carrillo, L.R.; Hespanha, J.P.; Burlion, L. A Robust Control Strategy With Perturbation Estimation for the Parrot Mambo Platform. IEEE Trans. Control. Syst. Technol. 2021, 29, 1389–1404.

- Zhu, C.; Chen, J.; Zhang, H. Attitude Control for Quadrotors Under Unknown Disturbances Using Triple-Step Method and Nonlinear Integral Sliding Mode. IEEE Trans. Ind. Electron. 2023, 70, 5004–5012.

- Naseer, F.; Ullah, G.; Siddiqui, M.A.; Jawad Khan, M.; Hong, K.S.; Naseer, N. Deep Learning-Based Unmanned Aerial Vehicle Control with Hand Gesture and Computer Vision. In Proceedings of the 2022 13th Asian Control Conference (ASCC), Jeju, Republic of Korea, 4–7 May 2022; pp. 1–6.

- Nascimento, T.; Saska, M. Embedded fast nonlinear model predictive control for micro aerial vehicles. J. Intell. Robot. Syst. 2021, 103, 1–11.

- McGuire, K.; de Croon, G.; de Wagter, C.; Remes, B.; Tuyls, K.; Kappen, H. Local histogram matching for efficient optical flow computation applied to velocity estimation on pocket drones. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3255–3260.

- Hoang, M.L.; Carratù, M.; Paciello, V.; Pietrosanto, A. Fusion Filters between the No Motion No Integration Technique and Kalman Filter in Noise Optimization on a 6DoF Drone for Orientation Tracking. Sensors 2023, 23, 5603.

- Benzemrane, K.; Damm, G.; Santosuosso, G. Nonlinear adaptive observer for Unmanned Aerial Vehicle without GPS measurements. In Proceedings of the 2009 European Control Conference (ECC), Budapest, Hungary, 23–26 August 2009; pp. 597–602.

- Gómez-Casasola, A.; Rodríguez-Cortés, H. Scale Factor Estimation for Quadrotor Monocular-Vision Positioning Algorithms. Sensors 2022, 22, 8048.

- Borup, K.T.; Fossen, T.I.; Johansen, T.A. A nonlinear model-based wind velocity observer for unmanned aerial vehicles. IFAC-PapersOnLine 2016, 49, 276–283.

- Hosen, J.; Helgesen, H.H.; Fusini, L.; Fossen, T.I.; Johansen, T. A Vision-aided Nonlinear Observer for Fixed-wing UAV Navigation. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, San Diego, CA, USA, 4–8 January 2016; pp. 1–19.

- He, Y.; Wang, D.; Huang, F.; Zhang, R.; Min, L. Aerial-Ground Integrated Vehicular Networks: A UAV-Vehicle Collaboration Perspective. IEEE Trans. Intell. Transp. Syst. 2023.

- Kalenberg, K.; Müller, H.; Polonelli, T.; Schiaffino, A.; Niculescu, V.; Cioflan, C.; Magno, M.; Benini, L. Stargate: Multimodal Sensor Fusion for Autonomous Navigation On Miniaturized UAVs. IEEE Internet Things J. 2024.

- Mahony, R.; Kumar, V.; Corke, P. Multirotor Aerial Vehicles: Modeling, Estimation, and Control of Quadrotor. IEEE Robot. Autom. Mag. 2012, 19, 20–32.

- Leishman, R.C.; Macdonald, J.C.; Beard, R.W.; McLain, T.W. Quadrotors and Accelerometers: State Estimation with an Improved Dynamic Model. IEEE Control. Syst. Mag. 2014, 34, 28–41.

- Ma, Y.; Soatto, S.; Košecká, J.; Sastry, S. An Invitation to 3-D Vision: From Images to Geometric Models; Springer: Cham, Switzerland, 2004; Volume 26.

- Xie, N.; Lin, X.; Yu, Y. Position estimation and control for quadrotor using optical flow and GPS sensors. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016; pp. 181–186.

- Weiss, L.; Sanderson, A.; Neuman, C. Dynamic sensor-based control of robots with visual feedback. IEEE J. Robot. Autom. 1987, 3, 404–417.

- Wu, Y. Optical Flow and Motion Analysis; Advanced Computer Vision Notes Series 6. 2001. Available online: http://www.eecs.northwestern.edu/~yingwu/teaching/EECS432/Notes/optical_flow.pdf (accessed on 1 May 2024).

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 674–679.

- Barron, J.L.; Fleet, D.J.; Beauchemin, S.S. Performance of optical flow techniques. Int. J. Comput. Vis. 1994, 12, 43–77.

- Spagl, M. Optische Positions-und Lagebestimmung einer Drohne im Geschlossenen Raum. Master’s Thesis, Hochschule Rosenheim University of Applied Sciences, Rosenheim, Germany, 2021.

- Mitchell, H.B. Multi-Sensor Data Fusion: An Introduction; Springer Science & Business Media: Berlin, Germany, 2007.

- Durrant-Whyte, H.F. Sensor models and multisensor integration. Int. J. Robot. Res. 1988, 7, 97–113.

- Boudjemaa, R.; Forbes, A. Parameter Estimation Methods in Data Fusion; NPL Report CMSC 38/04. 2004. Available online: https://eprintspublications.npl.co.uk/2891/1/CMSC38.pdf (accessed on 1 May 2024).

- Noordin, A.; Mohd Basri, M.A.; Mohamed, Z. Position and attitude tracking of MAV quadrotor using SMC-based adaptive PID controller. Drones 2022, 6, 263.

- Bramwell, A.R.S.; Balmford, D.; Done, G. Bramwell’s Helicopter Dynamics; Elsevier: Amsterdam, The Netherlands, 2001.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).