Abstract

Artificial intelligence (AI) shows promise in streamlining MRI workflows by reducing radiologists’ workload and improving diagnostic accuracy. Despite MRI’s extensive clinical use, systematic evaluation of AI-driven productivity gains in MRI remains limited. This review addresses that gap by synthesizing evidence on how AI can shorten scanning and reading times, optimize worklist triage, and automate segmentation. On 15 November 2024, we searched PubMed, EMBASE, MEDLINE, Web of Science, Google Scholar, and Cochrane Library for English-language studies published between 2000 and 15 November 2024, focusing on AI applications in MRI. Additional searches of grey literature were conducted. After screening for relevance and full-text review, 66 studies met inclusion criteria. Extracted data included study design, AI techniques, and productivity-related outcomes such as time savings and diagnostic accuracy. The included studies were categorized into five themes: reducing scan times, automating segmentation, optimizing workflow, decreasing reading times, and general time-saving or workload reduction. Convolutional neural networks (CNNs), especially architectures like ResNet and U-Net, were commonly used for tasks ranging from segmentation to automated reporting. A few studies also explored machine learning-based automation software and, more recently, large language models. Although most demonstrated gains in efficiency and accuracy, limited external validation and dataset heterogeneity could reduce broader adoption. AI applications in MRI offer potential to enhance radiologist productivity, mainly through accelerated scans, automated segmentation, and streamlined workflows. Further research, including prospective validation and standardized metrics, is needed to enable safe, efficient, and equitable deployment of AI tools in clinical MRI practice.

1. Introduction

In the last two decades, artificial intelligence (AI), particularly machine learning (ML) and natural language processing (NLP), has significantly transformed the field of radiology []. AI applications have demonstrated promise in reducing the diagnostic workload of radiologists while potentially enhancing productivity and diagnostic accuracy across various modalities. Magnetic resonance imaging (MRI) plays a crucial role in modern diagnostics due to its superior soft-tissue contrast, multi-planar capabilities, and lack of ionizing radiation, making it essential for diagnosing a wide range of conditions, from neurological disorders to musculoskeletal injuries []. As of 2022, there were an estimated 50,000 MRI systems installed worldwide, with over 95 million MRI scans performed yearly []. Despite the widespread use and critical importance of MRI in clinical diagnostics, there has been limited systematic assessment of how AI applications affect clinical productivity within MRI workflows across various specialties. Moreover, although deep learning (DL) for computer imaging has advanced, with increased emphasis on quality optimization whilst maintaining accuracy [,,,,], it remains to be seen whether these advances can be translated into medical imaging (specifically MRI) in a way that yields net time savings.

The complexity of MRI interpretation significantly contributes to radiologists’ increased workload and the potential for diagnostic delays. Radiologists are tasked with analyzing large volumes of intricate images—often in the thousands—requiring the analysis of one image every 3–4 s during a typical 8 h workday to meet workload demands []. Furthermore, interobserver variability can result in inconsistent diagnoses, underscoring the need for tools that standardize and improve diagnostic accuracy []. AI holds promise in addressing these challenges by automating routine tasks, optimizing scan protocols, and aiding in image interpretation, ultimately enhancing workflow efficiency and reducing diagnostic errors [,].

Previous reviews have primarily focused on specific subspecialties or examined AI applications across multiple imaging modalities, often offering limited insight into the implications of AI specifically within MRI [,,,]. The heterogeneity in study designs, endpoints, and performance metrics makes it difficult to conduct a formal meta-analysis with robust reasoning []. Given the wide variations in study designs, AI models, clinical settings, and outcome measures, a narrative review is more suitable. This approach allows for the synthesis of findings and insights despite the lack of uniformity, providing a flexible framework to explore the impact of AI on MRI.

Measuring productivity in radiology is multifaceted. While time savings and increased throughput are often-cited benefits of AI, other aspects such as diagnostic accuracy, workflow efficiency, and reduction in radiologist fatigue are equally important [,]. However, there is no clear consensus on which metrics should be used to assess these outcomes. Studies often focus on specific applications of AI—such as lesion detection, scan protocolling and scan prioritization—which limits the ability to make an overall assessment of AI’s contribution to productivity [,,]. Furthermore, AI models developed in specific institutions or datasets may not generalize well to broader clinical practice, raising concerns about bias and reproducibility [,]. Ethical considerations, including data privacy and the need for transparency in AI algorithms, also play a significant role in adoption of AI in MRI []. Radiologist acceptance of AI tools depends on their reliability and ability to integrate seamlessly into existing workflows, highlighting the importance of collaborative efforts between AI developers and clinicians [].

This review aims to provide a comprehensive overview of AI applications in MRI across different clinical specialties, with a particular focus on their potential to enhance radiologist productivity during scanning and/or interpretation. We will examine studies that assess AI tools in terms of time savings, accuracy improvements, and workflow integration, while also identifying gaps in the literature where further research is needed. By addressing the current landscape and identifying areas for improvement, we hope to inform future research and guide the practical integration of AI tools in MRI.

2. Materials and Methods

2.1. Literature Search Strategy

To enhance the transparency and completeness of our review, we combined a systematic search with our narrative review methodology. The initial search strategy was performed according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [].

On 15 November 2024, a literature search of the major databases (PubMed, EMBASE, MEDLINE, Web of Science, Google Scholar, and Cochrane Library) was conducted using the following strategy: (productivity OR productiveness OR prolificacy) AND (radiology OR radiologist) AND (artificial intelligence OR AI) AND (magnetic resonance imaging OR MRI). Limits were applied to include only English-language studies published between 2000 and 15 November 2024.
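As an illustration only, the Boolean structure of this search string can be sketched in Python: synonyms are joined with OR within each concept group, and the groups are joined with AND. The names `CONCEPT_GROUPS` and `build_query` are hypothetical helpers introduced here, and database-specific syntax (field tags, phrase quoting, date limits) is deliberately not modeled.

```python
# Concept groups copied from the search strategy described in the text.
CONCEPT_GROUPS = [
    ["productivity", "productiveness", "prolificacy"],
    ["radiology", "radiologist"],
    ["artificial intelligence", "AI"],
    ["magnetic resonance imaging", "MRI"],
]


def build_query(groups):
    """Join synonyms with OR inside a group, then join the groups with AND."""
    clauses = ["(" + " OR ".join(terms) + ")" for terms in groups]
    return " AND ".join(clauses)


query = build_query(CONCEPT_GROUPS)
print(query)
```

Keeping the concept groups as data makes it straightforward to add or remove a synonym and regenerate the same string for each database.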

Additional grey literature searches were also conducted by hand (OATD, OpenGrey, OCLC, NIH Clinical Trials, TRIP medical database).

2.2. Study Screening and Selection Criteria

A two-stage screening process was used. Studies were first screened by title and abstract (A.N.). A full-text review was then performed for any potentially eligible studies. Any disagreements at either stage were reviewed by multiple authors (W.O., A.L., J.T.P.D.H.) during assessment for study eligibility.

The inclusion criteria were as follows: studies focusing on the use of AI in MRI to enhance productivity, English-language studies, and studies performed on human subjects. The exclusion criteria were as follows: non-original research (for example, review articles and editorial correspondences), unpublished work, non-peer-reviewed work, conference abstracts, and case reports. Studies focused primarily on other imaging modalities (for example, radiographs, CT, or nuclear medicine imaging) were also excluded.

2.3. Data Extraction and Reporting

Data from the selected studies were extracted and compiled in a spreadsheet using Microsoft Excel Version 16.81 (Microsoft Corporation, Redmond, WA, USA). The following data were extracted:

- Publication details: title, authorship, year of publication, and journal name.

- Application and primary outcome measure.

- Study details: sample size, region studied, MRI sequences used.

- Artificial intelligence technique used.

- Key results and conclusion.

3. Results

3.1. Search Results

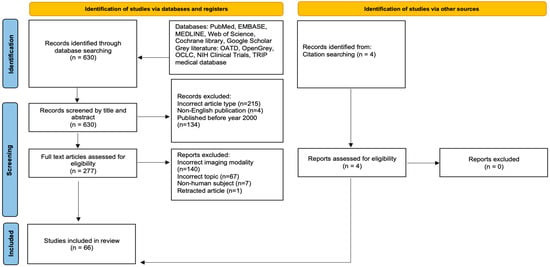

Our initial literature search identified 630 studies after application of the specified criteria and removal of duplicates. Subsequently, 134 studies that did not meet the date range, 215 of incorrect article types (as specified in the exclusion criteria), and 4 non-English publications were excluded during screening by title and abstract alone. This left 277 studies for full-text screening. Four additional studies were identified from citation searching and assessed for eligibility during full-text screening. In total, 66 studies were included in the present review (see Figure 1 for a detailed flowchart). The articles are summarized, based on the extracted data, in Table 1 and Table 2.

Figure 1.

PRISMA flowchart showing the two-step study screening process. Adapted from PRISMA Group, 2020 [].
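The screening counts reported in Section 3.1 can be cross-checked with a short tally. This is a minimal sketch that uses only the numbers stated in the text; the variable names are illustrative and the script is not part of the review methodology.

```python
# Counts as reported in the search results paragraph.
identified = 630
excluded_at_title_abstract = {
    "outside date range": 134,
    "excluded article type": 215,
    "non-English": 4,
}
from_citation_search = 4
included = 66

# Studies remaining after title/abstract screening.
full_text_screened = identified - sum(excluded_at_title_abstract.values())

# Studies assessed at full text, including those found by citation searching.
assessed_for_eligibility = full_text_screened + from_citation_search

print(full_text_screened)
print(assessed_for_eligibility)
print(f"{included} of {assessed_for_eligibility} assessed studies were included")
```

The first value reproduces the 277 studies selected for full screening reported above.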

Table 1.

Summary of selected studies.

Table 2.

Productivity measures of selected studies.

We classified the included studies based on the following themes: reducing scan times to improve efficiency (13/66, 19.7%), automating segmentation (26/66, 39.4%), optimizing worklist triage and workflow processes (7/66, 10.6%), decreasing reading times (16/66, 24.2%), and time-saving and workload reduction (6/66, 9.1%). In terms of overall productivity gains, around half of the studies (35/66, 53.0%) demonstrated this clearly across one or more of the themes identified. The remaining studies were deemed to have unclear productivity gains based on the limitations highlighted in Table 2 and the subsequent discussion, and a few (3/66, 4.5%) showed productivity losses due to the use of AI.
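For readers who wish to reproduce the proportions, the following sketch recomputes each share from the reported counts. The dictionary keys and the `percent` helper are illustrative names, not taken from the source.

```python
TOTAL_STUDIES = 66

# Theme counts as reported in the text.
theme_counts = {
    "reducing scan times": 13,
    "automating segmentation": 26,
    "worklist triage and workflow": 7,
    "decreasing reading times": 16,
    "time-saving and workload reduction": 6,
}


def percent(count, total=TOTAL_STUDIES):
    """Share of the included studies, rounded to one decimal place."""
    return round(100 * count / total, 1)


shares = {theme: percent(n) for theme, n in theme_counts.items()}
for theme, share in shares.items():
    print(f"{theme}: {share}%")

clear_gain_share = percent(35)   # studies with clear productivity gains
loss_share = percent(3)          # studies with productivity losses
theme_total = sum(theme_counts.values())
print(clear_gain_share, loss_share, theme_total)
```

The theme counts sum to 68 rather than 66, which would be consistent with a small number of studies being assigned to more than one theme, although the text does not state this explicitly.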

3.2. Overview of How AI Can Help in MRI Interpretation

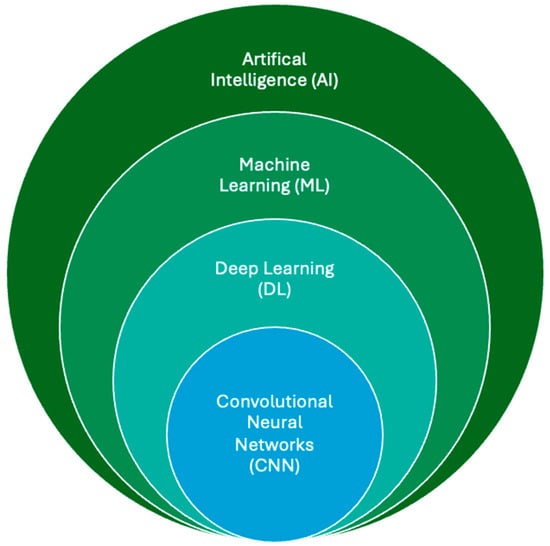

Artificial intelligence (AI) refers to the use of computers and technology to perform tasks that mimic human intelligence and critical thinking []. First introduced at the 1956 Dartmouth Conference, AI gained significant traction in medicine during the third AI boom in the 2010s, driven by advancements in machine learning (ML) and deep learning (DL) []. ML involves training models to make predictions using existing datasets, while DL employs artificial neural networks modeled on the brain’s architecture to solve complex problems [,]. The exponential growth in digital imaging and reporting data has further accelerated advancements in medical imaging, particularly through the application of convolutional neural networks (CNNs), a key subset of DL. CNNs have revolutionized medical imaging by enabling the development of computer-aided diagnostic (CAD) systems, which assist radiologists in detecting lesions and tumors across various imaging modalities, including MRI []. These systems aim to enhance diagnostic accuracy and efficiency, reducing workload and interobserver variability. Many CAD systems rely on CNN models to deliver expert-level performance across different areas of imaging research []. The relationship between AI, ML, DL, and CNNs is depicted in Figure 2, highlighting the interconnected nature of these technologies and their role in advancing medical imaging.

Figure 2.

The relationship between AI, ML, DL, and CNNs. ML lies within the field of AI and allows computer systems to learn without explicit programming or knowledge. As a subset of ML, DL uses computational models similar to the neuronal architecture within the brain, simulating multilayer neural networks in order to resolve complex tasks. A CNN automatically learns and adapts to spatial hierarchies of features through backpropagation using multiple building blocks, allowing analysis of 2D and 3D medical images.

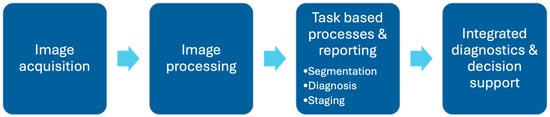

While AI has demonstrated success in improving accuracy, enhancing efficiency is equally critical to facilitate the seamless integration of these models into clinical practice []. The clinical application of AI in MRI can be broadly categorized into five key steps: image acquisition, image processing, task-specific applications, reporting, and integrated diagnostics. This workflow is further illustrated in Figure 3.

Figure 3.

Outline of AI applications in the radiology workflow in a typical clinical setting. AI has the potential to reduce scan times during image acquisition and processing, support specific image-based tasks such as segmentation, diagnosis, and staging, reduce reading time during reporting, and improve integrated diagnostic processes beyond the reporting phase, including deciding on appropriate treatment plans and evaluating prognosis in patients.

3.3. Reducing Scan Times to Improve Efficiency

Implementing more efficient protocols and eliminating unnecessary sequences can significantly reduce scan times, streamlining the initial stages of image acquisition and processing. This, in turn, eases the pressure on subsequent steps, such as reducing MRI reading times, ultimately helping to alleviate radiologist fatigue.

One key example is the improvements in AI-driven lung morphometry using diffusion-weighted MRI (DW-MRI). A deep cascade of residual dense networks (DC-RDN) has enabled the rapid production of high-quality images []. This approach reduced the required breath-holding time from 17.8 s to 4.7 s, with each slice (64 × 64 × 5) reconstructed within 7.2 ms.

In one study [] exploring an MRI acceleration framework to reconstruct dynamic 2-D cardiac MRI sequences from undersampled data, a deep cascade of convolutional neural networks (CNNs) was successfully used to accelerate data acquisition. This approach resulted in improvements in both image quality and reconstruction speed. Each complete dynamic sequence was reconstructed in under 10 s, with each 2-D image frame taking only 23 ms. Faster reconstruction enables more timely image availability for diagnosis and intervention, directly contributing to increased radiologist throughput.

For assessing hepatobiliary phase (HBP) adequacy in gadoxetate disodium (EOB)-enhanced MRI, a convolutional neural network (CNN) was shown to evaluate HBP adequacy in real time, potentially reducing examination times by up to 48% for certain patient groups []. In gastric cancer workups, a deep learning-accelerated single-shot breath-hold (DSLB) T2-weighted imaging technique demonstrated superior quality, faster acquisition times, and improved staging accuracy compared to current BLADE T2-weighted imaging methods []. DSLB reduced the acquisition time of T2-weighted imaging from a mean of 4 min 42 s per patient to just 18 s per patient while delivering significantly better image quality, with 9.43-fold, 8.00-fold, and 18.31-fold improvements in image quality scores across three readers compared to the standard BLADE.

In spinal MRI, one study [] utilized a deep learning-based reconstruction algorithm combined with an accelerated protocol for lumbar MRI, reducing the average acquisition time by 32.3% compared to standard protocols while maintaining image quality. A separate study [] on lumbar central canal stenosis (LCCS) demonstrated improved measurement accuracy using sagittal lumbar MR slices alone, compared to models that combined sagittal and axial slices. This approach showed robustness across moderate-to-severe LCCS cases, suggesting that automated LCCS assessment using sagittal T2 MRI alone could reduce the need for additional axial imaging, thereby shortening scan times.

However, limitations remain. Prior studies on DL techniques for MRI acceleration [,] did not specify improvements across all full spine MRI examination sequences, and their clinical utility was constrained by the lack of multicenter validation. Despite these caveats, DL-based reconstruction in musculoskeletal (MSK) MRI offers a promising direction for reducing examination times. For instance, one study [] demonstrated that a 10 min accelerated 3D SPACE MRI protocol for the knee delivered comparable image quality and diagnostic performance to a 20 min 2D TSE MRI protocol.

A novel study [] on MRI of the knee introduced a variational network framework that integrates data consistency with deep learning-based regularization, enabling the reconstruction of high-quality images from undersampled MRI data. This method outperformed non-AI reconstruction algorithms by a factor of 4 and achieved reconstruction times under 193 ms on a single graphics card, requiring no parameter tuning once trained. These characteristics make it easily integrable into clinical workflows.
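To make the idea of data consistency concrete, the sketch below shows one gradient step on the data term 0.5 * ||M F x - y||^2 for a simplified single-coil Cartesian setting, where F is the 2D Fourier transform, M the sampling mask, and y the measured k-space. This is an assumption-laden illustration rather than the cited study's implementation: the learned regularization term that such a network alternates with this step is omitted, and the function name, array sizes, and random data are hypothetical.

```python
import numpy as np


def data_consistency_step(image, kspace_measured, mask, step_size=1.0):
    """One gradient step on 0.5 * ||M F x - y||^2 (single coil, Cartesian).

    image           : current complex image estimate x
    kspace_measured : measured k-space y, zero where nothing was acquired
    mask            : boolean sampling mask M, True at acquired locations
    """
    kspace_estimate = np.fft.fft2(image, norm="ortho")
    # Residual is only defined where data were actually measured.
    residual = mask * (kspace_estimate - kspace_measured)
    # Adjoint of the (unitary) forward transform maps the residual to image space.
    gradient = np.fft.ifft2(residual, norm="ortho")
    return image - step_size * gradient


# Synthetic example: random "ground truth" and roughly 40% k-space sampling.
rng = np.random.default_rng(0)
truth = rng.standard_normal((8, 8)) + 1j * rng.standard_normal((8, 8))
mask = rng.random((8, 8)) < 0.4
measured = mask * np.fft.fft2(truth, norm="ortho")

# Start from an arbitrary estimate, e.g. the output of a regularization step.
estimate = rng.standard_normal((8, 8)) + 0j
updated = data_consistency_step(estimate, measured, mask)

updated_kspace = np.fft.fft2(updated, norm="ortho")
print(np.allclose(updated_kspace[mask], measured[mask]))
```

With a unit step size and an orthonormal transform, the updated estimate agrees with the measurements at every sampled k-space location while leaving unsampled locations unchanged; in a trained network the step size would typically be a learned parameter.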

DL models [,,,] have also been developed to accelerate reconstruction times for various brain MRI sequences while maintaining accuracy and improving image quality. These models enhance the signal-to-noise ratio (SNR) and reduce artifacts, such as those caused by head motion. In one study [], an accelerated DL reconstruction method improved image quality and reduced sequence scan times by 60% for brain MRI.

Not all studies demonstrated reduced scan times despite improvements in image quality. For instance, a DL-based reconstruction model for intracranial magnetic resonance angiography (MRA) significantly improved SNR and contrast-to-noise ratio (CNR) for basilar artery analysis [], but increased scan times, potentially affecting clinical productivity. Similarly, for shoulder MRI, while DL-based reconstructions significantly improved SNR and CNR, they showed inconsistencies in reducing scan times when applied [,].

3.4. Automating Segmentation

A significant majority of the included studies focused on automating segmentation in MRI across various specialties. Manual segmentation is often time-consuming and labor-intensive for radiologists []. AI models provide a promising solution by easing this workload and enabling radiologists to deliver more concise and time-efficient imaging-based diagnoses.

Multiple studies [,,] focusing on breast tumors have demonstrated the successful development of AI programs for automating tumor segmentation in dynamic contrast-enhanced MRI (DCE-MRI). One such program achieved a 20-fold reduction in manual annotation time for expert radiologists, while another showed the potential to reduce unnecessary biopsies of benign breast lesions by up to 36.2%. Automated tumor segmentation not only enhances productivity but also minimizes interobserver variability, leading to more consistent and accurate diagnoses.

For lung upper airway segmentation in static and dynamic 2D and 3D MRI, a study utilizing the VGG19 architecture [] demonstrated a deep learning segmentation system that was statistically indistinguishable from manual segmentations, accounting for human variability. This represents a significant improvement over earlier models using classic augmentation, enabling more efficient structure identification. However, time savings were not detailed, leaving questions about overall efficiency in broader clinical applications.

In head, neck, and lung oncology, AI-based approaches to radiation planning have shown significant time savings with automated segmentation models combining various imaging modalities, including MRI [,]. These models eliminated labor-intensive, observer-dependent manual delineation of organs-at-risk (OARs). One study reported average model runtimes of 56 s and 3.8 min, compared to 12.4 min for clinical target volume (CTV) delineation and 30.7 min for OAR delineation by junior radiation oncologists. These time reductions allow radiologists and radiation oncologists to focus on complex decision-making tasks and patient care.

Studies on hepatocellular carcinoma detection and liver segmentation [,,,] have shown that radiomic and deep learning approaches can automate segmentation and delineation of pathological subtypes across MRI sequences, achieving 10- to 20-fold improvements in measurement time compared to manual methods.

AI pipelines for MRI have also demonstrated potential in automating segmentation and reducing contouring time for renal lesions [,]. In one study, combining MRI and AI for renal lesion differentiation during follow-up resulted in cost savings over 10 years, with total estimated costs of $8054 for the MRI strategy and $7939 for the MRI + AI strategy. This approach also yielded marginal gains in quality-adjusted life years (QALYs), demonstrating potential for reallocating radiologist resources to other tasks. Additionally, a novel automated deep learning-based abdominal multiorgan segmentation (ALAMO) technique [] successfully segmented multiple OARs for MR-only radiation therapy and MR-guided adaptive radiotherapy. This technique offers potential time savings and reduces fatigue by replacing manual delineation with comparable accuracy.

For shoulder pathologies such as rotator cuff tears, deep learning frameworks utilizing parameters like occupation ratio and fatty infiltration [] have achieved fast and accurate segmentation of the supraspinatus muscle and fossa from shoulder MRI. These frameworks enhance diagnostic efficiency and objectivity, with some performance metrics surpassing those of experienced radiologists [].

In brain MRI segmentation, skull stripping is a critical preprocessing step that removes non-brain tissue (e.g., skull, skin, and eyeballs) to reduce computational burden and misclassification risks, enabling more targeted analysis of brain tissue []. One deep learning model using an anatomical context-encoding network (ACEnet) [] could segment an MRI head scan of 256 × 256 × 256 within approximately 9 s on an NVIDIA TITAN XP GPU, facilitating potential real-time applications. The model achieved Dice scores greater than 0.976. Another application [] focused on cerebrospinal fluid (CSF) volume segmentation, demonstrating strong reliability in measuring age-related brain atrophy. This application automatically segmented brain and intracranial CSF and measured their volumes and volume ratios within 1 min, using a popular 3D workstation in Japan. For brain pathologies, a CNN model [] accurately segmented meningiomas and provided volumetric assessments during serial follow-ups, achieving a median performance of 88.2% (within the inter-expert variability range of 82.6–91.6%). The study also reported a 99% reduction in segmentation time, averaging 2 s per segmentation (p < 0.001).
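Because several of the segmentation results above are reported as Dice scores, a brief sketch of the metric may be helpful. The Dice score for two binary masks A and B is 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical masks). The function name and toy masks below are illustrative, and returning 1.0 when both masks are empty is a convention assumed here rather than something taken from the cited studies.

```python
import numpy as np


def dice_score(pred, truth):
    """Dice similarity coefficient between two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denominator = pred.sum() + truth.sum()
    if denominator == 0:
        # Both masks empty: treated as perfect agreement (assumed convention).
        return 1.0
    overlap = np.logical_and(pred, truth).sum()
    return 2.0 * overlap / denominator


# Toy 2D example: the reference mask has 4 pixels, the prediction has 6,
# and the two share 4 pixels.
truth_mask = np.zeros((4, 4), dtype=bool)
truth_mask[1:3, 1:3] = True
pred_mask = np.zeros((4, 4), dtype=bool)
pred_mask[1:3, 1:4] = True

print(dice_score(pred_mask, truth_mask))
```

In this toy case the score is 2 × 4 / (6 + 4) = 0.8; the same function applies unchanged to 3D volumes.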

Automated segmentation has also enabled assessments of generalized parameters, such as tissue volume and distribution. One deep learning pipeline [] automated measurements across various landmarks in the lower limbs. While this study reported an increase in time rather than time savings, the potential for improved clinical value in diagnosing or monitoring lipoedema or lymphedema represents a potential productivity enhancement. For rarer hand tumors, a CNN-based segmentation system [] improved tumor analysis on hand MRIs, though its accuracy (71.6%) was limited by a small sample size and narrow tumor selection.

Advances in image segmentation have extended to niche subspecialties in MRI. In neonatology, models integrating clinical variables (e.g., gestational age and sex) with automated segmentation techniques [,,] have been used to screen for pathologies such as hypoxic–ischemic encephalopathy (HIE) and predict neurodevelopmental outcomes up to two years later. In nasopharyngeal carcinoma (NPC) treatment, a deep learning-based segmentation model [] accurately delineated gross tumor volume (GTV), surpassing previous methods and improving clinicians’ efficiency in creating radiation plans for targeted therapies.

AI models have also been applied to sleep apnea evaluation. A study [] using deep learning-based segmentation of the pharynx, tongue, and soft palate on mid-sagittal MRI efficiently identified sleep apnea-related structures. Results were consistent with intra-observer variability, and the model’s processing time of only 2 s on a modern GPU demonstrates its potential for real-time clinical applications, such as computer-aided reporting.

3.5. Optimizing Worklist Triage and Workflow Processes

A multicenter study [] developed an automated sorting tool for cardiac MRI examinations by linking examination times with appropriate post-processing tools. This tool directed images that did not require quantitative post-processing to PACS more quickly for interpretation. By streamlining the workflow, radiologists received cardiac MRI images sooner, enhancing clinical efficiency. However, the tool demonstrated poor accuracy when handling images with high variability in acquisition parameters, such as perfusion imaging across different centers, highlighting a key limitation.

AI has also made significant progress in prostate MRI, particularly in the early detection and classification of prostate cancer []. One proposed model [] aimed to optimize worklist triage by automating the grading of extra-prostatic extension using T2-weighted MRI, ADC maps, and high b-value DWI sequences. This approach shows promise for improving workflow efficiency in prostate cancer evaluations.

In the workup for hepatocellular carcinoma (HCC), an AI model demonstrated the ability to differentiate between pathologically proven HCC and non-HCC lesions, including those with atypical imaging features on MRI []. This CNN-based model achieved an overall accuracy of 87.3% and a computational time of less than 3 ms, suggesting its potential as a clinical support tool for prioritizing worklists and streamlining MRI workflows.

Workflow efficiencies can also be achieved through improved and automated protocoling of MRI studies. A recent study [] using a secure institutional large language model demonstrated significantly better clinical detail in spine MRI request forms and an accurate protocol suggestion rate of 78.4%. Another study [] on musculoskeletal MRI evaluated a CNN-based chatbot-friendly short-text classifier, which achieved 94.2% accuracy. The study suggested extending this text-based framework to other repetitive radiologic tasks beyond image interpretation to enhance overall efficiency.

For breast malignancy evaluation using MRI, artificial intelligence has shown great potential in improving diagnostic accuracy and workflow efficiency. A notable multicenter study [] utilized machine learning algorithms to identify axillary-tumor radiomic signatures in patients with invasive breast cancer. This approach successfully detected axillary lymph node metastases at early stages, which is crucial for surgical decision-making. Integrating such AI tools into clinical workflows could help radiologists prioritize scans more effectively and reduce the need for additional surgical interventions in select patients.

Beyond assisting with report generation, several studies have targeted workflow improvements in integrated diagnostics, including decision support and outcome prediction. While these advancements currently have limited impact on routine reporting practices, the growing demand for real-time data and follow-up reporting could significantly burden radiologists if workflow efficiencies are not implemented [].

Various AI models [,] have also demonstrated success in predicting mortality outcomes and early recurrence pre- and post-surgery. These models identified deep learning signatures linked to risk factors such as increased microvascular invasion and tumor number, outperforming conventional clinical nomograms in accuracy. However, most studies did not discuss how these innovations could be seamlessly integrated into clinical practice.

One AI model [] focusing on hepatocellular carcinoma (HCC) described an automated risk assessment framework with a runtime of just over one minute per patient (0.7 s for automated liver segmentation, 65.4 s for extraction of radiomic features, and 0.42 s for model prediction). If implemented, such frameworks could enhance clinical reporting while maintaining workflow efficiency.

3.6. Decreasing Reading Times

Reading times can vary significantly depending on procedure type, individual radiologist, and specialty []. However, reducing reading time is a straightforward way to measure increased efficiency achieved by radiologists in a typical clinical workflow, assuming workload and report accuracy remain broadly consistent.

The use of AI has advanced to improve reporting times for post-processed images across various specialties. One CNN-based model [], used for aortic root and valve measurements, achieved an average speed improvement of 100 times compared to expert cardiologists in the UK during image interpretation.

In spinal MR imaging, AI has brought multifaceted improvements, particularly in tasks where measurements by reporting radiologists are repetitive and time-consuming. One study [] introduced a deep learning model for detecting and classifying lumbar spine central canal and lateral recess stenosis, achieving accuracy comparable to a panel of expert subspecialized radiologists. A subsequent study [] focusing on productivity metrics for this model demonstrated significant reductions in reporting times. Similar performance metrics have been observed in other DL models [,,] across various centers using different training datasets, such as SpineNet and Deep Spine. However, a key limitation is that time savings, if any, were not always clearly specified.

In musculoskeletal (MSK) imaging, AI applications have also reduced reading times, particularly for detecting ligament and meniscal injuries in the knee. A multicenter study [] evaluating an AI assistant program reported marked improvements in accuracy (over 96%) and time savings in diagnosing anterior cruciate ligament (ACL) ruptures on MRI, particularly among trainee radiologists. Diagnostic time for trainees improved to 10.6 s (from 13.8 s) for routine tasks and 13.0 s (from 37.5 s) for difficult tasks, compared to 8.5 s (from 10.5 s) and 13.6 s (from 23.3 s), respectively, for expert radiologists.
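The reported diagnostic times can be converted into relative reductions to compare reader groups on a common scale. The sketch below uses only the values quoted above; the function and dictionary names are illustrative.

```python
def relative_reduction(before_s, after_s):
    """Percentage reduction in time when going from before_s to after_s."""
    return 100.0 * (before_s - after_s) / before_s


# (reader group, task difficulty) -> (time without AI, time with AI), in seconds.
reported_times = {
    ("trainee", "routine"): (13.8, 10.6),
    ("trainee", "difficult"): (37.5, 13.0),
    ("expert", "routine"): (10.5, 8.5),
    ("expert", "difficult"): (23.3, 13.6),
}

reductions = {
    key: relative_reduction(before, after)
    for key, (before, after) in reported_times.items()
}
for (reader, task), value in reductions.items():
    print(f"{reader:8s} {task:10s} {value:5.1f}%")
```

On these figures, trainees gain most on difficult tasks (roughly a 65% reduction, against about 42% for experts), while the reductions for routine tasks are closer (about 23% and 19%, respectively).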

In neuroradiological reporting of multiple sclerosis (MS) lesions, prior deep learning methods were limited by their susceptibility to error. A multicenter study [] introduced a novel automation framework called “Jazz”, which identified two to three times more new lesions than standard clinical reports. Reading time using “Jazz” averaged 2 min and 33 s, though the study did not compare this to the time required for a standard radiologist-generated report without AI assistance. Another multicenter study [] tested a deep learning model to automatically differentiate active from inactive MS lesions using non-contrast FLAIR MRI images. The model achieved an average accuracy of up to 85%, but a significant limitation was its reliance on manually selected regions of interest (ROIs) already diagnosed and categorized by radiologists, which limits any productivity gain from reduced reading time. In other neurological presentations, various AI models [,,] have demonstrated improvements in MRI assessment of Parkinson’s disease, with fully automated networks achieving accuracies of up to 93.5% and processing times of under one minute to distinguish between patients with Parkinson’s disease and healthy controls.

For prostate MRI scans, a DL-based computer-aided diagnosis (CAD) system [] improved diagnostic accuracy for suspicious lesions by 2.9–4.4% and reduced average reading time by 21%, from 103 to 81 s. However, a study [] examining an AI clinical decision support tool for brain MRI found significant improvements in performance among non-specialists in diagnosis, producing top differential diagnoses, and identifying rare diseases, while specialist performance showed no significant differences with or without the AI tool. This underscores the need to benchmark AI models against expert performance to justify their integration into radiologists’ workflows.

In prostate multiparametric MRI (mpMRI), a retrospective multicenter study [] reported a significant reduction in radiologist reading time (by 351 s) when grading was AI-assisted. Another study [] on prostate segmentation showed that, for prostate cancer diagnosis, AI-based CAD methods improved consistency in internal (κ = 1.000; 0.830) and external (κ = 0.958; 0.713) tests compared to radiologists (κ = 0.747; 0.600). The AI-first read (8.54 s/7.66 s) was faster than both the readers (92.72 s/89.54 s) and the concurrent-read method (29.15 s/28.92 s). Nonetheless, there is criticism that the training datasets currently used to validate deep learning CAD models for prostate cancer workup are inadequate. Insufficient training data have been shown to result in worse performance compared to expert panels of radiologists [].
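For readers less familiar with the κ statistic used above, the following is a minimal sketch of unweighted Cohen's kappa for two raters. It is an assumption that this variant is relevant: the cited study may have used a weighted or multi-rater form, and the example labels are hypothetical rather than study data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohen_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same cases."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: fraction of cases where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical reads of four lesions by two readers (illustration only).
reader_1 = ["suspicious", "suspicious", "benign", "benign"]
reader_2 = ["suspicious", "benign", "benign", "benign"]
kappa = cohen_kappa(reader_1, reader_2)
print(f"kappa = {kappa:.2f}")
```

Because κ corrects for agreement expected by chance, raw agreement of 75% in this toy example corresponds to a κ of only 0.5.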

3.7. Time-Saving and Workload Reduction

Several studies have explored radiologist time-saving and workload reduction strategies that extend beyond previously discussed themes.

A deep learning model [] utilizing time-resolved angiography with stochastic trajectories (TWIST) sequences was developed to identify normal ultrafast breast MRI examinations and exclude them from the radiologist’s workload when integrated into the workflow. The trained model achieved an AUC of 0.81, reducing workload by 15.7% and scan times by 16.6% through elimination of unnecessary additional sequences. This demonstrates AI’s potential to streamline imaging protocols and enhance workflow efficiency.
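The rule-out idea described above can be sketched as a thresholding step: exams whose predicted probability of abnormality falls below a cut-off are removed from the worklist, and the cut-off is chosen so that sensitivity stays above a target. The sketch below is a hypothetical illustration; the function names, the scores, and the labels are invented for this example and do not reproduce the cited model or its reported figures.

```python
def rule_out_threshold(scores, labels, min_sensitivity=1.0):
    """Pick the highest score threshold that keeps sensitivity >= min_sensitivity.

    scores: model-estimated probability of abnormality per exam.
    labels: 1 for abnormal, 0 for normal (reference standard).
    Exams scoring below the threshold would be removed from the worklist.
    """
    n_abnormal = sum(labels)
    if n_abnormal == 0:
        raise ValueError("need at least one abnormal exam")
    best = 0.0
    # Sensitivity can only fall as the threshold rises, so the last
    # threshold that meets the target is the highest acceptable one.
    for t in sorted(set(scores)):
        detected = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        if detected / n_abnormal >= min_sensitivity:
            best = t
    return best


def workload_reduction(scores, threshold):
    """Fraction of exams scoring below the threshold (removed from the worklist)."""
    return sum(1 for s in scores if s < threshold) / len(scores)


# Hypothetical model outputs for eight exams; not data from any study.
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.80, 0.90]
labels = [0, 0, 0, 1, 0, 1, 0, 1]

threshold = rule_out_threshold(scores, labels)
saved = workload_reduction(scores, threshold)
print(f"threshold={threshold:.2f}, exams removed={saved:.1%}")
```

In practice the threshold would be fixed on a separate validation set, since choosing it on the same data used to report the workload reduction would overstate the benefit.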

To address challenges in obtaining high-quality training data for AI models, one study [] evaluated manual prostate MRI segmentation performed by radiology residents. The study found that residents achieved segmentation accuracy comparable to experts, suggesting that residents could be employed to create training datasets. This approach could alleviate work pressures on expert radiologists while maintaining the high-quality data necessary for AI development.

Exploring broader applications, a CNN [] addressed limitations in learning from small or suboptimal MRI datasets using a progressive growing generative adversarial network augmentation model. By generating realistic MRIs of brain tumors, the model enabled accurate classification of gliomas, meningiomas, and pituitary tumors, achieving an accuracy of 98.54%. This augmentation model offers a novel method to reduce the burden on radiologists by minimizing the need to manually generate large training datasets for deep learning frameworks.

In spine MRI, a study [] investigated the effectiveness of AI-generated reports. The study reported improvements in patient-centered summaries and workflow efficiency by assisting radiologists in producing concise reports. However, the technology also presented challenges, with 1.12% artificial hallucinations and 7.4% potentially harmful translations identified. These error rates highlight that, without optimization, the current implementation of such technology could increase radiologists’ workload, as they would need to verify AI-generated reports before sharing them with patients.

4. Discussion

This review highlights the wide-ranging AI solutions applied to MRI across multiple specialties. In particular, many studies focused on accelerating scan times, automating segmentation, optimizing workflows (e.g., MRI form vetting), and reducing radiologist reading times, with each contributing to improved productivity in different ways. In the following sections, we examine these findings in greater detail, beginning with a discussion of the dominant AI architectures employed. We then address the strengths and limitations of the current evidence, consider the ethical and regulatory frameworks that are essential for real-world adoption, and conclude with future directions and recommendations that emphasize broader validation, feedback loops, and next-generation AI modalities.

4.1. Dominant AI Architectures and Approaches

In our review, the studies employed a range of AI-based techniques, with convolutional neural network (CNN) architectures being the most frequently utilized. CNNs can be customized [] for a wide array of tasks, including image processing, lesion detection, and characterization. Notable architectures used in the models include ResNet, DenseNet, and EfficientNet, which address specific limitations of earlier CNN models. ResNet, for instance, mitigates the vanishing gradient issue, while EfficientNet reduces computational and memory loads, leading to enhanced performance in both accuracy and efficiency [,,,,,]. Additionally, U-Net, a widely adopted CNN-based architecture, enables rapid and precise image segmentation and is commonly integrated into studies focused on automating segmentation tasks in MRI.
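The way residual connections ease gradient flow can be seen from a short derivation. Writing a residual block as its input plus a learned correction (the symbols $x$, $y$, $F$, $W$, and $\mathcal{L}$ are notation introduced here for illustration), the Jacobian of the block contains an identity term, so the backpropagated gradient retains a direct path even when the learned branch contributes little:

```latex
\begin{aligned}
y &= x + F(x; W), \\
\frac{\partial \mathcal{L}}{\partial x}
  &= \frac{\partial \mathcal{L}}{\partial y}
     \left( I + \frac{\partial F}{\partial x} \right).
\end{aligned}
```

Stacking many such blocks multiplies factors of the form $I + \partial F / \partial x$ rather than $\partial F / \partial x$ alone, which is the usual explanation for why very deep residual networks remain trainable.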

Beyond CNNs, other AI-based techniques, including some emerging technologies, were also implemented. For example, certain models utilized machine learning-driven intelligent automation software []. Classical machine learning (ML) methods continue to excel in simpler feature extraction tasks and often demonstrate more consistent performance across certain imaging modalities []. However, DL-based neural networks, particularly CNNs, have been shown to outperform classical ML approaches in most MRI-related applications []. A key advancement driving the success of DL is the integration of self-supervised learning (SSL).

Unlike traditional supervised learning, which relies on manually labeled datasets for “ground truth” supervisory signals, SSL enables models to generate implicit labels from unstructured data. By leveraging pretext tasks and downstream tasks, SSL-based ML models can effectively analyze large volumes of unlabeled medical imaging data while reducing the need for extensive human fine-tuning []. This approach has facilitated previously impractical developments, such as automated anatomical tracking in medical imaging []. Beyond deep learning-based applications, SSL has also been incorporated into novel ML frameworks for MRI, including dynamic fetal MRI, functional MRI for autism and dementia diagnosis, and cardiac MRI, all of which have demonstrated promising results [,,]. Although SSL may not directly enhance daily clinical workflow efficiency or reduce workload, it has the potential to accelerate the development of novel ML imaging techniques aimed at improving these aspects.
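To make the notion of implicit labels concrete, the sketch below builds a rotation-prediction pretext task from unlabeled images. This is one commonly used pretext task chosen for illustration, not necessarily the one used in the cited works (which may rely on contrastive or masked-reconstruction objectives); the function names and the toy 2 × 2 "images" are hypothetical.

```python
import random


def rotate90(image):
    """Rotate a 2D list-of-lists image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def make_rotation_pretext(images, seed=0):
    """Build (rotated_image, rotation_label) pairs from unlabeled images.

    The label (0-3, the number of 90-degree clockwise turns) comes from the
    transformation itself, so no human annotation is required.
    """
    rng = random.Random(seed)
    samples = []
    for image in images:
        k = rng.randrange(4)
        rotated = image
        for _ in range(k):
            rotated = rotate90(rotated)
        samples.append((rotated, k))
    return samples


# Toy unlabeled "images" standing in for MRI slices (illustration only).
unlabeled = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
pretext = make_rotation_pretext(unlabeled)
for rotated, label in pretext:
    print(label, rotated)
```

A network trained to predict the rotation label must learn anatomical structure to succeed, and those learned features can then be reused for a downstream task with far fewer manual labels.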

Another significant development since 2017 is the adoption of Transformer-based architectures for imaging tasks. Originally designed for sequence-to-sequence prediction, the Transformer architecture employs a self-attention mechanism that dynamically adjusts to input content, offering notable advantages over traditional CNNs in image processing tasks []. This innovation has also contributed to the emergence of large language models (LLMs), such as OpenAI’s Generative Pre-Trained Transformer (GPT), which leverage Transformer architecture and extensive text-based corpora to perform a wide range of natural language processing (NLP) tasks. Other foundational LLMs, including Bidirectional Encoder Representations from Transformers (BERT), have served as precursors to domain-specific models like Med-PaLM, which focuses on healthcare applications []. Unlike earlier ML architectures that relied on either supervised or unsupervised learning, LLMs primarily utilize SSL to derive labels from input data.
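The self-attention mechanism mentioned above can be sketched as scaled dot-product attention, in which each token's output is a weighted average of all tokens, with weights computed from the input itself. The sketch below is a simplified, dependency-free illustration: it omits the learned query, key, and value projections and the multi-head structure of a real Transformer, and the three toy token vectors are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights); weights[i][j] is how strongly token i
    attends to token j, and each row of weights sums to 1.
    """
    d_k = len(keys[0])
    weights = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[d] for w, v in zip(row, values)) for d in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Three toy token embeddings (for images, these would be patch embeddings).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Because the weights depend on the content of the tokens rather than on fixed kernel positions, each output can draw on any part of the input, which is the property contrasted with convolution in the paragraph above.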

While LLMs have gained traction in various fields, including medical chatbot development [], their application in MRI remains limited. Among the reviewed studies, only one implemented an LLM-based approach for MRI analysis [], highlighting the need for further exploration in this domain. Future research could investigate the potential of LLMs to automate text-intensive radiology tasks, such as report generation and clinical protocol selection, thereby enhancing efficiency in radiological workflows.

4.2. Strengths and Limitations of Current Evidence

Some studies included in this review were sourced outside the initial search strategy to allow a more comprehensive discussion of certain topics. Because the journal archives and grey literature sources were limited to those commonly available in public or academic settings, the search was not exhaustive. Consequently, AI applications under vendor development or within non-commercial settings may have been overlooked. In addition, by adopting a broad scope that examines AI developments in MRI across multiple specialties, this review may have sacrificed depth in favor of breadth compared to more narrowly focused analyses.

The heterogeneity observed in the included research limited the feasibility of conducting a formal meta-analysis, thereby reducing the ability to draw robust, statistically aggregated conclusions. Variations in study design, patient population data, and MRI protocols (across training and testing datasets) in turn reduce the reproducibility of the models discussed. Issues such as data leakage can also prevent the same results from being reproduced in other clinical settings []. With that said, the majority of studies (52/66, 78.8%) described attempts at internal cross-validation using different splits of training and test datasets. This would have helped prevent overfitting, thereby supporting more reliable model generalization []. Apart from discrepancies in data use or preprocessing, computational costs and hardware variations are major sources of irreproducibility. The included studies either did not report this information or did not replicate their results on lower-performance hardware or software. This made it difficult to compare them directly or to judge their relevance for clinical practice.
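One common source of data leakage in imaging studies is splitting at the scan level, so that images from the same patient appear in both training and test sets. As a minimal sketch of a safeguard, assuming each scan carries a patient identifier, the function below assigns whole patients to cross-validation folds. The function name, the greedy balancing rule, and the scan and patient IDs are all hypothetical and are not drawn from any included study.

```python
from collections import defaultdict


def patient_level_folds(scan_to_patient, n_folds=5):
    """Assign scans to folds so that no patient spans two folds.

    scan_to_patient: dict mapping scan ID -> patient ID.
    Returns a list of n_folds lists of scan IDs.
    """
    scans_by_patient = defaultdict(list)
    for scan_id, patient_id in scan_to_patient.items():
        scans_by_patient[patient_id].append(scan_id)
    if n_folds > len(scans_by_patient):
        raise ValueError("n_folds exceeds number of patients")
    folds = [[] for _ in range(n_folds)]
    # Greedy balancing: patients with the most scans are placed first,
    # each into whichever fold currently holds the fewest scans.
    ordered = sorted(scans_by_patient, key=lambda p: (-len(scans_by_patient[p]), p))
    for patient_id in ordered:
        smallest = min(folds, key=len)
        smallest.extend(scans_by_patient[patient_id])
    return folds


# Hypothetical mapping of six scans to four patients.
scan_to_patient = {
    "s1": "pA", "s2": "pA", "s3": "pB",
    "s4": "pC", "s5": "pC", "s6": "pD",
}
folds = patient_level_folds(scan_to_patient, n_folds=2)
print(folds)
```

Each fold can then serve in turn as the held-out test set, with the guarantee that the model has never seen another scan from the same patient during training.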

Most included studies (48/66, 72.7%) lacked external validation. This limitation is common: a 2019 meta-analysis [] reported that only 6% of 516 studies on novel AI applications in medical imaging included external validation as a key component, even though such validation is a definitive step toward ensuring that models can generalize to diverse clinical settings, patient populations, and imaging protocols. In addition, aside from one study [], the long-term impact and cost-effectiveness of AI applications were not thoroughly compared across different specialties.

Finally, the retrospective nature of many studies raises questions about their applicability to real-world clinical settings []. The potential sources of bias, including differences in patient demographics and potential conflicts of interest, were also not discussed in detail, which raises questions about the fairness and equity of certain AI solutions. Although this review sought to highlight productivity metrics enhanced by AI, most studies reported outcomes related primarily to time savings and diagnostic accuracy. Radiologist-specific productivity indicators, including reductions in repetitive tasks or improved workflow satisfaction, were often relegated to secondary outcomes, complicating cross-study comparisons.

4.3. Recommended Enhancements

4.3.1. Regulatory Frameworks and Compliance

Regulatory and compliance factors are crucial for a successful and consistent translation of AI tools into routine MRI practice. Concerns related to patient privacy, data security, and algorithmic transparency must be balanced with the potential productivity gains []. Regulatory requirements, such as those of the United States Food and Drug Administration (FDA) and the European CE marking process, often mandate rigorous validation of AI algorithms, including prospective trials and post-market surveillance [,]. However, significant heterogeneity persists among vendors regarding the deployment, pricing, and maintenance of these AI products for specific clinical applications []. This variability poses challenges for stakeholders in selecting the most appropriate AI solution.

To address these complexities, several radiological organizations, including the American College of Radiology (ACR), Canadian Association of Radiologists (CAR), European Society of Radiology (ESR), Royal Australian and New Zealand College of Radiologists (RANZCR), and Radiological Society of North America (RSNA), have released joint guidelines to support the development, implementation, and use of AI tools []. These guidelines prioritize patient safety, ethical considerations, seamless integration with existing clinical workflows, and the stability of AI models through ongoing monitoring. Nevertheless, as these guidelines do not directly govern AI products or vendor selection, the responsibility falls on individual radiology departments or institutions to establish tailored frameworks. These frameworks must enable the selection and integration of suitable AI solutions while adhering to region-specific data standards [].

4.3.2. Generalizability and Multi-Center Validation

To ensure fairness and equity in AI-driven MRI applications, future research should prioritize minimizing biases related to demographics and disease prevalence. For smaller institutions that may lack the resources to compile large datasets, multicenter collaboration and external validation are crucial. Although many AI models achieve high accuracy within their home institutions, differences in imaging protocols, sequences, and MRI hardware often remain underexplored, leading to model underspecification and limited generalizability [,]. By incorporating multicenter validation, models can be trained on larger, more diverse datasets from multiple regions, enabling the development of algorithms that adapt to varying clinical populations and imaging protocols. In cases where legislative constraints hinder multicenter data sharing, synthetic data augmentation offers an alternative strategy to enhance dataset diversity. Publicly available, anonymized datasets, such as PROSTATEx, have already been utilized in some of the reviewed studies [,] and were recently combined with regional datasets to facilitate a novel multicenter, multi-scanner validation study for multiparametric MRI in prostate cancer [].

Another approach to improving generalizability is the adoption of a federated learning (FL) framework, which has gained increasing recognition in medical AI research []. FL enables institutions to collaboratively develop machine learning models while maintaining data privacy, as each institution retains local control over its dataset and model updates are orchestrated through a centralized server. This framework allows institutions to selectively share or receive data within a trusted execution environment. However, FL presents challenges, including concerns about data heterogeneity and the protection of sensitive medical information []. Additionally, stress testing is an effective strategy to address model underspecification and improve robustness. By simulating variations in imaging parameters, introducing artificial noise, and modifying datasets to mimic real-world conditions, stress testing can identify model vulnerabilities. While studies on stress testing in MRI-based AI remain limited, findings from other imaging domains suggest its potential benefits [].
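The server-side aggregation step in federated learning can be sketched as a sample-size-weighted average of the parameters returned by each institution, in the style of the widely used federated averaging (FedAvg) rule. This is an illustrative assumption rather than the method of any cited work; the function name, the two hypothetical hospitals, and their parameter values are invented, and real deployments add safeguards such as secure aggregation that are not shown.

```python
def federated_average(client_updates):
    """Sample-size-weighted average of client model parameters (FedAvg-style).

    client_updates: list of (num_samples, weights), where weights is a
    list of floats. Only parameters and sample counts reach the server;
    the underlying images never leave the contributing site.
    """
    total = sum(n for n, _ in client_updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    length = len(client_updates[0][1])
    if any(len(w) != length for _, w in client_updates):
        raise ValueError("all clients must share the same model shape")
    return [
        sum(n * w[i] for n, w in client_updates) / total
        for i in range(length)
    ]


# Two hypothetical hospitals with different dataset sizes.
hospital_a = (100, [0.25, 1.0])
hospital_b = (300, [0.75, 2.0])
global_weights = federated_average([hospital_a, hospital_b])
print(global_weights)
```

Weighting by sample count means larger sites pull the global model further toward their local solution, which is one reason data heterogeneity between institutions remains a concern in this setting.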

From an implementation perspective, several infrastructure-related challenges must be addressed to enable real-world AI deployment in MRI. Many hospitals lack in-house machine learning expertise, necessitating collaborations with external research groups or commercial organizations. These partnerships often rely on third-party cloud-based computing systems, raising concerns regarding data security and privacy []. Furthermore, seamless integration of AI tools into existing radiology information systems (RIS) and picture archiving and communication systems (PACS) is essential for widespread adoption. Given the complexity and variability of radiology IT infrastructures, the development of open-source, vendor-agnostic PACS-AI platforms holds promise in addressing these integration challenges [,].

Beyond technical considerations, adequate human IT support and radiologist training are critical to ensuring the successful implementation of AI in clinical practice. Without appropriate infrastructure and user engagement, even the most advanced AI models may struggle to demonstrate tangible benefits in real-world settings. A recent systematic review [] highlighted a significant gap in AI education among radiologists and radiographers, despite widespread enthusiasm for its adoption. This challenge is further exacerbated by the “black box” nature of certain AI models, which obscure decision-making processes and hinder practitioners’ ability to validate outputs []. Moreover, standardized training programs for imaging professionals in AI applications remain largely absent []. Increasing support for “white box” AI systems, which provide greater interpretability, alongside the development of dedicated educational platforms, could enhance transparency and trust. By equipping healthcare professionals with the knowledge to assess AI-generated recommendations and communicate their implications effectively, these efforts could ultimately foster greater public confidence in AI-driven medical technologies.

4.3.3. Ethical Considerations

The expansion of digital healthcare has heightened concerns regarding ethical data usage and patient confidentiality. As hospitals and imaging centers rapidly upgrade IT systems to integrate AI tools, cybersecurity measures sometimes fail to keep pace. This has led to well-documented breaches of medical record databases and instances of fraudulent claims, exacerbating patient mistrust in AI-driven healthcare solutions []. In response, some regions have incorporated ethical AI guidelines into their legal frameworks []. For instance, the European Parliament’s AI Act, adopted in 2024 and set for full enforcement by 2026, complements existing legislation such as the General Data Protection Regulation (GDPR) and the Data Act. The AI Act establishes requirements for safety, reliability, and individual rights while fostering innovation and competitiveness. As the continued expansion of AI in healthcare relies on access to large-scale patient datasets, implementing robust regulatory frameworks is essential to maintaining public trust.

Hospitals and imaging centers must establish clear strategies for integrating AI-driven workflow changes to optimize efficiency while maintaining high standards of patient care. One key approach involves redesigning tasks to allow radiologists to focus on complex image interpretation and direct patient interactions while delegating routine or repetitive processes to automated AI tools []. However, for successful implementation, radiologists should play a central role in defining AI system performance parameters and must have the ability to pause or override the system if clinical targets are not met []. Ensuring transparency and control over AI-assisted decision-making will be essential for fostering trust and adoption among healthcare professionals.

Additionally, defining clear roles, responsibilities, and liabilities for clinicians involved in AI-integrated workflows is crucial for smooth and effective implementation []. AI adoption is expected to contribute to a rising clinical workload, making it imperative that AI-driven workflows align seamlessly with existing administrative and logistical processes []. In many cases, these supporting processes may also require AI-driven optimizations to prevent additional administrative burdens on radiologists and radiographers. A well-coordinated, symbiotic relationship between AI automation and hospital workflow management will be essential to maximizing the benefits of AI in radiology while ensuring operational efficiency and clinician satisfaction.

4.3.4. Current Recommendations

The prominence of segmentation among the included studies is unsurprising, given that it represents one of the earliest applications of AI in medical imaging []. However, a key limitation in comparing these studies is the heterogeneity of computational metrics and the lack of standardization for clinical implementation. Additionally, over a third of the segmentation studies (10/26, 38.5%) did not report inference time alongside accuracy improvements, limiting the ability to assess their potential clinical applicability. Despite these limitations, common trends in convolutional neural network (CNN) usage were observed across the included segmentation studies. U-Net, an encoder–decoder CNN with skip connections and a fully convolutional architecture, enables the preservation of spatial information and achieves high precision in pixel-level segmentation. As a result, it has become the mainstream choice for medical image segmentation [].

The majority of the included segmentation studies employed U-Net, either in customized forms or in combination with other CNNs for specific segmentation tasks. One study focusing on liver segmentation [] utilized UNet++, an enhanced variant of U-Net that incorporates denser skip connections between different stages to reduce the semantic gap between the encoder and decoder. Although this approach improved efficiency, the Dice similarity coefficient (DSC) for liver tumor segmentation remained below 0.7, indicating room for further accuracy improvements. Two studies that demonstrated overall productivity gains [,] applied U-Net architectures with an EfficientNet encoder. EfficientNet encoders require up to eight times fewer parameters compared to similar networks, making them well-suited for building efficient models with limited computational resources []. Despite these resource optimizations, both studies maintained high segmentation accuracy, reporting strong DSC scores between 0.97 and 0.99. This suggests that the combination of U-Net architectures with EfficientNet encoders is an effective approach for achieving both accuracy and computational efficiency in medical image segmentation.
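For reference, the DSC between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical masks). The following is a minimal sketch on flattened binary masks; the function name, the handling of two empty masks, and the example masks are choices made here for illustration and are not taken from the included studies.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice similarity coefficient between two flat binary masks (0/1 values)."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    size_sum = sum(pred_mask) + sum(true_mask)
    if size_sum == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * intersection / size_sum


# Hypothetical six-voxel masks: two voxels overlap, each mask has three.
predicted = [1, 1, 1, 0, 0, 0]
reference = [0, 1, 1, 1, 0, 0]
dsc = dice_coefficient(predicted, reference)
print(f"DSC = {dsc:.3f}")
```

Because the DSC is normalized by the combined mask size, small structures such as tumors are penalized heavily for a few misclassified voxels, which partly explains why tumor DSC values tend to be lower than whole-organ values.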

Among the included studies focusing on reduced reading times, the choice of AI model for lesion classification was largely determined by the anatomical region and disease pathology under investigation. ResNet is one of the most widely recommended deep learning models for classification due to its superior feature extraction capabilities []. It was employed in several studies [,] examining brain and spine pathologies, with one study identifying ResNet50 as the most effective pre-trained model for discriminating lesions in multiple sclerosis (MS), achieving an AUC of 0.81. However, the same study demonstrated even greater accuracy with a custom-designed network, achieving an AUC of 0.90. This aligns with a broader trend observed in other studies comparing CNN performances, where task-specific architectures often outperformed standard pre-trained models. Despite variations in model selection, all studies reported measurable improvements in classification accuracy with AI assistance. However, several studies did not compare AI processing times against human reading times, which left their productivity gains unclear and limited their clinical applicability.

Rather than focusing solely on model selection, our analysis suggests that future research should prioritize simulated AI applications in time-constrained settings. These studies should incorporate a diverse range of readers with varying levels of experience, not just expert radiologists, to better reflect real-world clinical workflows. An example of this approach is seen in one of the included studies [], which focused on automated classification of lumbar spine central canal and lateral recess stenosis. Conducted under standardized reading conditions, the study found that AI-assisted reporting required 47 to 71 s, compared to 124 to 274 s for unassisted radiologists. Additionally, DL-assisted general and in-training radiologists demonstrated improved interobserver agreement for four-class neural foraminal stenosis, with κ values of 0.71 and 0.70 (with DL) vs. 0.39 and 0.39 (without DL), respectively (both p < 0.001). Beyond the measurable gains in efficiency and accuracy, the study highlights the advantages of an “AI and human collaboration model”, which should be a focal point for future research.

Among all productivity themes analyzed, the use of AI for reducing MRI scanner time demonstrated the highest success in achieving measurable productivity gains, with 84.6% (11/13) of the included studies reporting positive outcomes. Even in studies where productivity losses were observed [,], improvements in image quality—such as enhanced signal-to-noise ratio (SNR) and contrast-to-noise ratio (CNR)—were still achieved. However, these studies did not clarify how such enhancements would translate to improved classification or diagnostic accuracy. Despite the demonstrated benefits, only a limited number of deep learning-based reconstruction (DLR) techniques are currently available for clinical MRI scanners []. Moreover, most of these techniques remain vendor-specific, including Advanced Intelligent Clear-IQ Engine (AiCE; Canon Medical Systems), AIR Recon DL (GE Healthcare), and Deep Resolve (Siemens). Another significant barrier to broader adoption is the lack of publicly available training datasets, which restricts independent advancements and customization for different clinical settings [].

To overcome these challenges, our study recommends further vendor-agnostic research aimed at improving image quality, particularly for older MRI scanners from various manufacturers. One example is a multicenter study [] that investigated deep learning reconstruction for brain MRI using SubtleMR, an FDA-cleared, vendor-agnostic CNN trained on thousands of MRI datasets spanning multiple vendors, scanner models, field strengths, and clinical sites. By leveraging such diverse datasets, studies can provide stronger evidence that deep learning-based processing enhances MRI efficiency and diagnostic value across different clinical environments.

Because of the limited number of studies demonstrating novel improvements in workflow processes or workload reduction, our paper advocates for increased integration of Transformer-based architectures and large language models (LLMs) in future machine learning (ML) applications for MRI. These advancements could facilitate automated draft report generation, standardization of clinical protocols, and triaging of complex cases by leveraging information from request forms and electronic medical records []. Given their low customization costs and compatibility with existing IT infrastructure [], LLMs have the potential to surpass other AI tools in overcoming current technological barriers. Recent innovations, such as voice assistants, could further enhance the utility of LLMs by enabling conversational interactions. Integrating LLMs into existing speech recognition dictation systems used in radiology departments may offer real-time productivity benefits, fostering seamless collaboration between AI and human radiologists. This approach could help mitigate potential time losses or increased workload associated with text-based reporting [].

Despite the early success of LLMs in other imaging modalities, such as radiograph interpretation [], recent studies evaluating their applicability in MRI have highlighted several challenges. One major limitation is that human–LLM interactions have primarily been assessed in controlled environments rather than real-world clinical settings, where frequent interruptions and high workloads influence radiologists’ behavior []. Additionally, the phenomenon of “hallucinations” in generative AI remains a significant barrier to its adoption in MRI-reporting workflows []. Future LLMs must address these shortcomings to ensure reliability, safety, and clinical trustworthiness. As with other emerging AI applications, the successful integration of LLMs in MRI will require close collaboration among AI developers, clinical radiologists, healthcare administrators, and regulatory bodies. Establishing best practices for ethical and efficient implementation will also be critical for ensuring the widespread adoption of LLMs across MRI and other medical specialties [].

4.3.5. Future Directions

Looking ahead, more standardized and multicenter prospective studies are needed to validate AI models under real-world conditions. Such trials could compare AI-driven MRI workflows with conventional practices, providing clearer insights into time savings, cost-effectiveness, and overall patient outcomes. In parallel, guidelines such as the recent PRISMA extensions for AI research [] should continue to evolve in step with rapidly expanding AI research and applications. Establishing standardized productivity metrics, such as reduced turnaround times, minimized radiologist fatigue, and improved throughput, would allow more meaningful comparisons across different studies.

Beyond technical measures, future research should also focus on user experiences, radiologist satisfaction, and patient-centered outcomes. While improvements in conventional metrics remain valuable, AI imaging has significant potential to reduce the fatigue and cognitive overload commonly experienced by radiologists. Burnout is a pressing concern, with one systematic review [] finding rates as high as 88% in some regions. Although AI could theoretically mitigate burnout by streamlining tasks and maintaining diagnostic performance during prolonged reading sessions, a cross-sectional study [] indicated that frequent AI use may paradoxically raise burnout risks if ease of adoption, radiologist satisfaction, and workflow integration are not properly addressed. Thus, tackling these interlinked factors is vital for the long-term viability of AI tools in MRI.

Lastly, initiatives that include iterative feedback loops, where radiologists refine AI outputs, could enhance model accuracy, yield institution-specific solutions, and build trust in AI recommendations. However, integrating AI into radiological workflows is not easy and requires significant resources and time, highlighting the need for strategies to continuously retrain and adapt models to evolving clinical settings []. Diversifying data sources, for instance by involving radiology residents in dataset creation [], could lessen the load on senior radiologists and bolster model robustness. In addition, incorporating AI education into the radiology residency curriculum will equip future radiologists with the necessary technical competencies []. Further evidence is also required to support vendor-neutral platforms that can seamlessly integrate AI systems from multiple developers, thereby improving MRI access and facilitating enhanced combined reporting [,].

5. Conclusions

This review highlights significant advances in productivity metrics achieved through AI applications in MRI across various specialties, particularly in automating segmentation and reducing both scanning and reading times. Although many studies showed improvements in diagnostic accuracy, quantitative descriptions of efficiency gains were often limited. To enable meaningful clinical translation, future AI models must prioritize maintaining or improving productivity metrics without compromising diagnostic accuracy. To address the underexplored themes of workflow optimization and workload reduction, our study recommends greater focus on incorporating LLM advancements into MRI workflows, so that radiologists can engage more quickly with the substantial volumes of textual information encountered in day-to-day clinical practice. Good practices seen in other imaging modalities, where topics such as large-scale workload reduction or refined worklist triage have seen more maturity, should be explored and adopted in MRI [,].

Collaborative efforts among researchers, clinicians, and industry partners remain essential for developing standardized benchmarks and guidelines to measure productivity. Encouragingly, emerging initiatives are also addressing reproducibility concerns by integrating feedback loops for continuous model retraining and optimization. Exploring alternative training data sources could further reduce radiologist workload while retaining the credibility of reported productivity metrics. Ultimately, striking the right balance between efficacy, safety, and ease of implementation will be key to ensuring that AI-driven tools can meaningfully enhance radiologist productivity in MRI.

Author Contributions

Conceptualization, methodology, validation, data curation, writing—review and editing, A.N., W.O., A.L., A.M., Y.H.T., Y.J.L., S.J.O. and J.T.P.D.H.; software, resources, A.N., N.W.L., J.J.H.T., N.K. and J.T.P.D.H.; writing—original draft preparation, A.N. and J.J.H.T.; project administration, A.N., A.L., Y.H.T., S.J.O. and J.T.P.D.H.; supervision, funding acquisition, J.T.P.D.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by direct funding from the Singapore Ministry of Health National Medical Research Council (MOH/NMRC) under the NMRC Clinician Innovator Award (CIA); Grant Title: Deep learning pipeline for augmented reporting of MRI whole spine (CIAINV23jan-0001, MOH-001405) (J.T.P.D.H.).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction in the Abstract. This change does not affect the scientific content of the article.

References

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing Healthcare: The Role of Artificial Intelligence in Clinical Practice. BMC Med. Educ. 2023, 23, 689.

- Najjar, R. Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging. Diagnostics 2023, 13, 2760.

- GE Healthcare—Committing to Sustainability in MRI. Available online: https://www.gehealthcare.com/insights/article/committing-to-sustainability-in-mri (accessed on 15 November 2024).

- Zhou, M.; Zhao, X.; Luo, F.; Luo, J.; Pu, H.; Tao, X. Robust RGB-T Tracking via Adaptive Modality Weight Correlation Filters and Cross-modality Learning. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 95.

- Zhou, M.; Lan, X.; Wei, X.; Liao, X.; Mao, Q.; Li, Y. An End-to-End Blind Image Quality Assessment Method Using a Recurrent Network and Self-Attention. IEEE Trans. Broadcast. 2023, 69, 369–377.

- Zhou, M.; Chen, L.; Wei, X.; Liao, X.; Mao, Q.; Wang, H. Perception-Oriented U-Shaped Transformer Network for 360-Degree No-Reference Image Quality Assessment. IEEE Trans. Broadcast. 2023, 69, 396–405.

- Zhou, M.; Shen, W.; Wei, X.; Luo, J.; Jia, F.; Zhuang, X.; Jia, W. Blind Image Quality Assessment: Exploring Content Fidelity Perceptibility via Quality Adversarial Learning. Int. J. Comput. Vis. 2025.

- Zhou, M.; Wu, X.; Wei, X.; Tao, X.; Fang, B.; Kwong, S. Low-Light Enhancement Method Based on a Retinex Model for Structure Preservation. IEEE Trans. Multimed. 2024, 26, 650–662.

- McDonald, R.J.; Schwartz, K.M.; Eckel, L.J.; Diehn, F.E.; Hunt, C.H.; Bartholmai, B.J.; Erickson, B.J.; Kallmes, D.F. The Effects of Changes in Utilization and Technological Advancements of Cross-Sectional Imaging on Radiologist Workload. Acad. Radiol. 2015, 22, 1191–1198.

- Quinn, L.; Tryposkiadis, K.; Deeks, J.; De Vet, H.C.W.; Mallett, S.; Mokkink, L.B.; Takwoingi, Y.; Taylor-Phillips, S.; Sitch, A. Interobserver Variability Studies in Diagnostic Imaging: A Methodological Systematic Review. Br. J. Radiol. 2023, 96, 20220972.

- Khalifa, M.; Albadawy, M. AI in Diagnostic Imaging: Revolutionising Accuracy and Efficiency. Comput. Methods Programs Biomed. Update 2024, 5, 100146.

- Potočnik, J.; Foley, S.; Thomas, E. Current and Potential Applications of Artificial Intelligence in Medical Imaging Practice: A Narrative Review. J. Med. Imaging Radiat. Sci. 2023, 54, 376–385.

- Younis, H.A.; Eisa, T.A.; Nasser, M.; Sahib, T.M.; Noor, A.A.; Alyasiri, O.M.; Salisu, S.; Hayder, I.M.; Younis, H.A. A Systematic Review and Meta-Analysis of Artificial Intelligence Tools in Medicine and Healthcare: Applications, Considerations, Limitations, Motivation and Challenges. Diagnostics 2024, 14, 109.

- Gitto, S.; Serpi, F.; Albano, D.; Risoleo, G.; Fusco, S.; Messina, C.; Sconfienza, L.M. AI Applications in Musculoskeletal Imaging: A Narrative Review. Eur. Radiol. Exp. 2024, 8, 22.

- Vogrin, M.; Trojner, T.; Kelc, R. Artificial Intelligence in Musculoskeletal Oncological Radiology. Radiol. Oncol. 2021, 55, 1–6.

- Wenderott, K.; Krups, J.; Zaruchas, F.; Weigl, M. Effects of Artificial Intelligence Implementation on Efficiency in Medical Imaging—A Systematic Literature Review and Meta-Analysis. NPJ Digit. Med. 2024, 7, 265.

- Stec, N.; Arje, D.; Moody, A.R.; Krupinski, E.A.; Tyrrell, P.N. A Systematic Review of Fatigue in Radiology: Is It a Problem? Am. J. Roentgenol. 2018, 210, 799–806.

- Ranschaert, E.; Topff, L.; Pianykh, O. Optimization of Radiology Workflow with Artificial Intelligence. Radiol. Clin. N. Am. 2021, 59, 955–966.

- Clifford, B.; Conklin, J.; Huang, S.Y.; Feiweier, T.; Hosseini, Z.; Goncalves Filho, A.L.M.; Tabari, A.; Demir, S.; Lo, W.-C.; Longo, M.G.F.; et al. An Artificial Intelligence-Accelerated 2-Minute Multi-Shot Echo Planar Imaging Protocol for Comprehensive High-Quality Clinical Brain Imaging. Magn. Reson. Med. 2022, 87, 2453–2463.

- Jing, X.; Wielema, M.; Cornelissen, L.J.; van Gent, M.; Iwema, W.M.; Zheng, S.; Sijens, P.E.; Oudkerk, M.; Dorrius, M.D.; van Ooijen, P.M.A. Using Deep Learning to Safely Exclude Lesions with Only Ultrafast Breast MRI to Shorten Acquisition and Reading Time. Eur. Radiol. 2022, 32, 8706–8715.

- Chen, G.; Fan, X.; Wang, T.; Zhang, E.; Shao, J.; Chen, S.; Zhang, D.; Zhang, J.; Guo, T.; Yuan, Z.; et al. A Machine Learning Model Based on MRI for the Preoperative Prediction of Bladder Cancer Invasion Depth. Eur. Radiol. 2023, 33, 8821–8832.

- Ivanovska, T.; Daboul, A.; Kalentev, O.; Hosten, N.; Biffar, R.; Völzke, H.; Wörgötter, F. A Deep Cascaded Segmentation of Obstructive Sleep Apnea-Relevant Organs from Sagittal Spine MRI. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 579–588.

- Li, Y.; Wang, Y.; Qi, H.; Hu, Z.; Chen, Z.; Yang, R.; Qiao, H.; Sun, J.; Wang, T.; Zhao, X.; et al. Deep Learning–Enhanced T1 Mapping with Spatial-Temporal and Physical Constraint. Magn. Reson. Med. 2021, 86, 1647–1661.

- Herington, J.; McCradden, M.D.; Creel, K.; Boellaard, R.; Jones, E.C.; Jha, A.K.; Rahmim, A.; Scott, P.J.H.; Sunderland, J.J.; Wahl, R.L.; et al. Ethical Considerations for Artificial Intelligence in Medical Imaging: Data Collection, Development, and Evaluation. J. Nucl. Med. 2023, 64, 1848–1854.

- Martín-Noguerol, T.; Paulano-Godino, F.; López-Ortega, R.; Górriz, J.M.; Riascos, R.F.; Luna, A. Artificial Intelligence in Radiology: Relevance of Collaborative Work between Radiologists and Engineers for Building a Multidisciplinary Team. Clin. Radiol. 2021, 76, 317–324.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Zhang, J.; Cui, Z.; Shi, Z.; Jiang, Y.; Zhang, Z.; Dai, X.; Yang, Z.; Gu, Y.; Zhou, L.; Han, C.; et al. A Robust and Efficient AI Assistant for Breast Tumor Segmentation from DCE-MRI via a Spatial-Temporal Framework. Patterns 2023, 4, 100826.

- Pötsch, N.; Dietzel, M.; Kapetas, P.; Clauser, P.; Pinker, K.; Ellmann, S.; Uder, M.; Helbich, T.; Baltzer, P.A.T. An A.I. Classifier Derived from 4D Radiomics of Dynamic Contrast-Enhanced Breast MRI Data: Potential to Avoid Unnecessary Breast Biopsies. Eur. Radiol. 2021, 31, 5866–5876.

- Zhao, Z.; Du, S.; Xu, Z.; Yin, Z.; Huang, X.; Huang, X.; Wong, C.; Liang, Y.; Shen, J.; Wu, J.; et al. SwinHR: Hemodynamic-Powered Hierarchical Vision Transformer for Breast Tumor Segmentation. Comput. Biol. Med. 2024, 169, 107939.

- Lim, R.P.; Kachel, S.; Villa, A.D.M.; Kearney, L.; Bettencourt, N.; Young, A.A.; Chiribiri, A.; Scannell, C.M. CardiSort: A Convolutional Neural Network for Cross Vendor Automated Sorting of Cardiac MR Images. Eur. Radiol. 2022, 32, 5907–5920.

- Parker, J.; Coey, J.; Alambrouk, T.; Lakey, S.M.; Green, T.; Brown, A.; Maxwell, I.; Ripley, D.P. Evaluating a Novel AI Tool for Automated Measurement of the Aortic Root and Valve in Cardiac Magnetic Resonance Imaging. Cureus 2024, 16, e59647.

- Duan, C.; Deng, H.; Xiao, S.; Xie, J.; Li, H.; Zhao, X.; Han, D.; Sun, X.; Lou, X.; Ye, C.; et al. Accelerate Gas Diffusion-Weighted MRI for Lung Morphometry with Deep Learning. Eur. Radiol. 2022, 32, 702–713.

- Xie, L.; Udupa, J.K.; Tong, Y.; Torigian, D.A.; Huang, Z.; Kogan, R.M.; Wootton, D.; Choy, K.R.; Sin, S.; Wagshul, M.E.; et al. Automatic Upper Airway Segmentation in Static and Dynamic MRI via Anatomy-Guided Convolutional Neural Networks. Med. Phys. 2022, 49, 324–342.

- Wang, J.; Peng, Y.; Jing, S.; Han, L.; Li, T.; Luo, J. A Deep-Learning Approach for Segmentation of Liver Tumors in Magnetic Resonance Imaging Using UNet++. BMC Cancer 2023, 23, 1060.

- Gross, M.; Huber, S.; Arora, S.; Ze’evi, T.; Haider, S.P.; Kucukkaya, A.S.; Iseke, S.; Kuhn, T.N.; Gebauer, B.; Michallek, F.; et al. Automated MRI Liver Segmentation for Anatomical Segmentation, Liver Volumetry, and the Extraction of Radiomics. Eur. Radiol. 2024, 34, 5056–5065.

- Oestmann, P.M.; Wang, C.J.; Savic, L.J.; Hamm, C.A.; Stark, S.; Schobert, I.; Gebauer, B.; Schlachter, T.; Lin, M.; Weinreb, J.C.; et al. Deep Learning–Assisted Differentiation of Pathologically Proven Atypical and Typical Hepatocellular Carcinoma (HCC) versus Non-HCC on Contrast-Enhanced MRI of the Liver. Eur. Radiol. 2021, 31, 4981–4990.

- Yan, M.; Zhang, X.; Zhang, B.; Geng, Z.; Xie, C.; Yang, W.; Zhang, S.; Qi, Z.; Lin, T.; Ke, Q.; et al. Deep Learning Nomogram Based on Gd-EOB-DTPA MRI for Predicting Early Recurrence in Hepatocellular Carcinoma after Hepatectomy. Eur. Radiol. 2023, 33, 4949–4961.

- Wei, H.; Zheng, T.; Zhang, X.; Wu, Y.; Chen, Y.; Zheng, C.; Jiang, D.; Wu, B.; Guo, H.; Jiang, H.; et al. MRI Radiomics Based on Deep Learning Automated Segmentation to Predict Early Recurrence of Hepatocellular Carcinoma. Insights Imaging 2024, 15, 120.

- Gross, M.; Haider, S.P.; Ze’evi, T.; Huber, S.; Arora, S.; Kucukkaya, A.S.; Iseke, S.; Gebauer, B.; Fleckenstein, F.; Dewey, M.; et al. Automated Graded Prognostic Assessment for Patients with Hepatocellular Carcinoma Using Machine Learning. Eur. Radiol. 2024, 34, 6940–6952.

- Cunha, G.M.; Hasenstab, K.A.; Higaki, A.; Wang, K.; Delgado, T.; Brunsing, R.L.; Schlein, A.; Schwartzman, A.; Hsiao, A.; Sirlin, C.B.; et al. Convolutional Neural Network-Automated Hepatobiliary Phase Adequacy Evaluation May Optimize Examination Time. Eur. J. Radiol. 2020, 124, 108837.

- Liu, G.; Pan, S.; Zhao, R.; Zhou, H.; Chen, J.; Zhou, X.; Xu, J.; Zhou, Y.; Xue, W.; Wu, G. The Added Value of AI-Based Computer-Aided Diagnosis in Classification of Cancer at Prostate MRI. Eur. Radiol. 2023, 33, 5118–5130.

- Simon, B.D.; Merriman, K.M.; Harmon, S.A.; Tetreault, J.; Yilmaz, E.C.; Blake, Z.; Merino, M.J.; An, J.Y.; Marko, J.; Law, Y.M.; et al. Automated Detection and Grading of Extraprostatic Extension of Prostate Cancer at MRI via Cascaded Deep Learning and Random Forest Classification. Acad. Radiol. 2024, 31, 4096–4106.

- Wang, K.; Xing, Z.; Kong, Z.; Yu, Y.; Chen, Y.; Zhao, X.; Song, B.; Wang, X.; Wu, P.; Wang, X.; et al. Artificial Intelligence as Diagnostic Aiding Tool in Cases of Prostate Imaging Reporting and Data System Category 3: The Results of Retrospective Multi-Center Cohort Study. Abdom. Radiol. 2023, 48, 3757–3765.

- Hosseinzadeh, M.; Saha, A.; Brand, P.; Slootweg, I.; de Rooij, M.; Huisman, H. Deep Learning–Assisted Prostate Cancer Detection on Bi-Parametric MRI: Minimum Training Data Size Requirements and Effect of Prior Knowledge. Eur. Radiol. 2022, 32, 2224–2234.

- Oerther, B.; Engel, H.; Nedelcu, A.; Schlett, C.L.; Grimm, R.; von Busch, H.; Sigle, A.; Gratzke, C.; Bamberg, F.; Benndorf, M. Prediction of Upgrade to Clinically Significant Prostate Cancer in Patients under Active Surveillance: Performance of a Fully Automated AI-Algorithm for Lesion Detection and Classification. Prostate 2023, 83, 871–878.

- Li, Q.; Xu, W.-Y.; Sun, N.-N.; Feng, Q.-X.; Hou, Y.-J.; Sang, Z.-T.; Zhu, Z.-N.; Hsu, Y.-C.; Nickel, D.; Xu, H.; et al. Deep Learning-Accelerated T2WI: Image Quality, Efficiency, and Staging Performance against BLADE T2WI for Gastric Cancer. Abdom. Radiol. 2024, 49, 2574–2584.

- Goel, A.; Shih, G.; Riyahi, S.; Jeph, S.; Dev, H.; Hu, R.; Romano, D.; Teichman, K.; Blumenfeld, J.D.; Barash, I.; et al. Deployed Deep Learning Kidney Segmentation for Polycystic Kidney Disease MRI. Radiol. Artif. Intell. 2022, 4, e210205.