Abstract

The equilibrium optimizer (EO) is a recently developed physics-based optimization technique for complex optimization problems. Although the algorithm shows excellent exploitation capability, it still has some drawbacks, such as the tendency to fall into local optima and poor population diversity. To address these shortcomings, an enhanced EO algorithm is proposed in this paper. First, a spiral search mechanism is introduced to guide the particles to more promising search regions. Then, a new inertia weight factor is employed to mitigate the oscillation phenomena of particles. To evaluate the effectiveness of the proposed algorithm, it has been tested on the CEC2017 test suite and the mobile robot path planning (MRPP) problem and compared with some advanced metaheuristic techniques. The experimental results demonstrate that our improved EO algorithm outperforms the comparison methods in solving both numerical optimization problems and practical problems. Overall, the developed EO variant has good robustness and stability and can be considered as a promising optimization tool.

1. Introduction

Optimization problems have gained significant attention in engineering and scientific domains. In general, the objective of an optimization problem is to achieve the best possible outcome by minimizing (or maximizing) the corresponding objective function while limiting undesirable factors [1]. These problems may involve constraints that must be satisfied during the optimization process. Based on their characteristics, optimization problems can be classified into two categories: local optimization and global optimization. Local optimization aims to determine the optimal value within a local region [2], whereas global optimization aims to find the optimal value over the entire search region. Therefore, global optimization is more challenging than local optimization.

To address various types of global optimization problems, numerous optimization techniques have been developed [3]. Among the current optimization techniques, metaheuristic algorithms have gained widespread attention due to their advantages of being gradient-free, not requiring prior information about the problem, and offering high flexibility. Metaheuristic algorithms provide acceptable solutions with relatively fewer computational costs [4]. Based on their sources of inspiration, metaheuristic algorithms can be classified into four categories: swarm intelligence algorithms, evolutionary optimization algorithms, physics-inspired algorithms, and human-inspired algorithms [5,6]. Swarm intelligence optimization algorithms simulate the cooperative behavior observed in animal populations in nature. Examples of such algorithms include Artificial Bee Colony (ABC) [7], Particle Swarm Optimization (PSO) [8], Grey Wolf Optimization (GWO) [9], Firefly Optimization (FA) [10], Ant Colony Optimization (ACO) [11], Harris Hawks Optimization Algorithm (HHO) [12], Salp Swarm Algorithm (SSA) [13], and others. The second category draws inspiration from the concept of natural evolution. These algorithms include, but are not limited to, Evolution Strategy (ES) [14], Differential Evolution (DE) [15], Backtracking Search Algorithm (BSA) [16], Stochastic Fractal Search (SFS) [17], Wildebeests Herd Optimization (WHO) [18]. The third class of metaheuristic algorithms is inspired by physics concepts. The following algorithms are some examples of physics-inspired algorithms: Simulated Annealing (SA) [19] algorithm, Big Bang-Big Crunch (BB-BC) [20] algorithm, Central Force Optimization (CFO) [21], Intelligent Water Drops (IWD) [22], Slime Mold Algorithm (SMA) [23], Gravitational Search Algorithm (GSA) [24], Black Hole Algorithm (BHA) [25], Water Cycle Algorithm (WCA) [26], Lightning Search Algorithm (LSA) [27]. 
As these physics-inspired algorithms proved to be effective in engineering and science, more similar algorithms were developed, such as Multi-Verse Optimizer (MVO) [28], Thermal Exchange Optimization (TEO) [29], Henry Gas Solubility Optimization (HGSO) [30], Equilibrium Optimizer (EO) [31], Archimedes Optimization Algorithm (AOA) [32], and Special Relativity Search (SRS) [33]. The last class of metaheuristic techniques simulates human behavior, such as Seeker Optimization Algorithm (SOA) [34], Imperialist Competitive Algorithm (ICA) [35], Brain Storm Optimization (BSO) [36], and Teaching-Learning-Based Optimization (TLBO) [37].

The most popular categories among these are swarm intelligence algorithms and physics-inspired algorithms, as they offer reliable metaphors and simple yet efficient search mechanisms. In this work, we consider leveraging the search behavior of swarm intelligence algorithms to enhance the performance of a physics-inspired algorithm called EO. EO simulates the dynamic equilibrium concept of mass in physics. In a container, the attempt to achieve dynamic equilibrium of mass within a controlled volume is performed by expelling or absorbing particles, which are referred to as a set of operators employed during the search in the solution space. Based on these search models, EO has demonstrated its performance across a range of real-world problems, such as solar photovoltaic parameter estimation [38], feature selection [39], multi-level threshold image segmentation [40], and so on. Despite the simple search mechanism and effective search capability of the EO algorithm, it still suffers from limitations, such as falling into local optima traps and imbalanced exploration and exploitation. To address these limitations, this paper proposes a novel variant of EO called SSEO by introducing an adaptive inertia weight factor and a swarm-based spiral search mechanism. The adaptive inertia weight factor is employed to enhance population diversity and strengthen the algorithm’s global exploration ability, while the spiral search mechanism is introduced to expand the search space of particles. These two mechanisms work synergistically to achieve a balance between exploration and exploitation phases of the algorithm. To evaluate the performance of the proposed algorithm, 29 benchmark test functions from the IEEE CEC 2017 are used. The results obtained by SSEO are compared against several state-of-the-art metaheuristic algorithms, including the basic EO, spiral search mechanism-based metaheuristics, and recently proposed variants of EO. 
The test results demonstrate that SSEO provides competitive results on almost all functions compared to the benchmark algorithms. Additionally, the SSEO algorithm is tested on a real-world problem of MRPP and compared against several classical metaheuristic algorithms. Simulation results on three maps with different characteristics indicate that the developed SSEO-based path planning approach can find obstacle-free paths with smaller computational costs, suggesting its promising potential as a path planner. The main contributions of this work can be summarized as follows:

- Utilizing the structure of EO, an enhanced variant called SSEO is proposed, which employs two simple yet effective mechanisms to improve population diversity, convergence performance, and the balance between exploration and exploitation.

- SSEO incorporates an adaptive inertia weight mechanism to enhance population diversity in EO and a swarm-inspired spiral search mechanism to expand the search space. The simultaneous operation of these two mechanisms ensures a stable balance between exploration and exploitation.

- To evaluate the effectiveness and problem-solving capability of SSEO, the CEC 2017 benchmark function set is utilized. Experimental results demonstrate that the proposed algorithm outperforms the basic EO, several recently reported EO variants, and other state-of-the-art metaheuristic algorithms.

- To investigate the ability of the proposed EO variant in solving real-world problems, it is applied to address the MRPP problem. Simulation results indicate that, compared to the benchmark algorithms, SSEO can provide reasonable collision-free paths for the mobile robot in different environmental settings.

The remaining parts of this paper are organized as follows: Section 2 provides a literature review. Section 3 introduces the search framework and mathematical model of the basic EO. Section 4 reports the developed strategies and the framework of the SSEO algorithm. The validation of the SSEO algorithm’s effectiveness using CEC 2017 functions is presented in Section 5. Section 6 introduces the developed SSEO-based MRPP approach and validates its performance. Finally, Section 7 summarizes the research and extends future research directions.

2. Related Work

Well-established metaheuristic algorithms are equipped with reasonable mechanisms to transition between exploration and exploitation. Global exploration allows the algorithm to comprehensively search the solution space and explore unknown regions, while local exploitation aids in fine-tuning solutions within specific areas to improve solution accuracy. The EO algorithm is a recently proposed metaheuristic based on metaphors from the field of physics. Its efficiency and applicability have been demonstrated on benchmark function optimization problems as well as real-world problems. However, although EO builds its search models on reliable metaphors, the transition from exploration to exploitation during the search process is still imperfect, resulting in limitations such as getting trapped in local optima and premature convergence.

To mitigate the inherent limitations of EO and provide a viable alternative efficient optimization tool for the optimization community, many researchers have made improvements and proposed different versions of EO variants. Gupta et al. [41] introduced mutation strategies and additional search operators, referred to as mEO, into the basic EO. The mutation operation is used to overcome the problem of population diversity loss during the search process, and the additional search operators assist the population in escaping local optima. The performance of mEO was tested on 33 commonly used benchmark functions and four engineering design problems. Experimental results demonstrated that mEO effectively enhances the search capability of the EO algorithm.

Houssein et al. [42] strengthened the balance between exploration and exploitation in the basic EO algorithm by employing the dimension hunting technique. The performance of the proposed EO variant was tested using the CEC 2020 benchmark test suite and compared with advanced metaheuristic methods. Comparative results showed the superiority of the proposed approach. Additionally, the proposed EO variant was applied to multi-level thresholding image segmentation of CT images. Comparative results with a set of popular image segmentation tools showed good performance in terms of segmentation accuracy.

Liu et al. [43] introduced three new strategies into EO to improve algorithm performance. In this version of EO, Levy flight was used to enhance particle search in unknown regions, the WOA search mechanism was employed to strengthen local exploitation tendencies, and the adaptive perturbation technique was utilized to enhance the algorithm’s ability to avoid local optima. The performance of the algorithm was tested on the CEC 2014 benchmark test suite and compared with several well-known algorithms. Comparative results showed that the proposed EO variant outperformed the compared algorithms in the majority of cases. Furthermore, the algorithm’s capability to solve real-world problems was investigated using engineering design cases, demonstrating its practicality in addressing real-world problems.

Tan et al. [44] proposed a hybrid algorithm called EWOA, which combines EO and WOA, aiming to compensate for the inherent limitations of the EO algorithm. Comparative results with the basic EO, WOA, and several classical metaheuristic algorithms showed that EWOA mitigates the tendency of the basic EO algorithm to get trapped in local optima to a certain extent.

Zhang et al. [45] introduced an improved EO algorithm, named ISEO, by incorporating an information exchange reinforcement mechanism to overcome the weak inter-particle information exchange capability in the basic EO. In ISEO, a global best-guided mechanism was employed to enhance the guidance towards a balanced state, a reverse learning technique was utilized to assist the population in escaping local optima, and a differential mutation mechanism was expected to improve inter-particle information exchange. These three mechanisms were simultaneously embedded in EO, resulting in an overall improved algorithm performance. The effectiveness of ISEO was demonstrated on a large number of benchmark test functions and engineering design cases.

Minocha et al. [46] proposed an EO variant called MEO, which enhances the convergence performance of the basic EO. In MEO, adjustments were made to the construction of the balance pool to strengthen the algorithm’s search intensity, and the Levy flight technique was introduced to improve global search capability. To investigate the convergence performance of MEO, 62 benchmark functions with different characteristics and five engineering design cases were utilized. Experimental results demonstrated that MEO provides excellent robustness and convergence compared to other algorithms.

Balakrishnan et al. [47] introduced an improved version of EO, called LEO, for feature selection problems. LEO combines the framework of EO with a Levy flight mechanism, with the expectation of providing a better search capability than the basic EO. To validate the performance of LEO, the algorithm was tested on a microarray cancer dataset and compared with several high-performing feature selection methods. Comparative results showed significant advantages of LEO in terms of accuracy and speed over the competing methods.

3. The Original EO

EO is a recently proposed novel physics-based metaheuristic algorithm designed for addressing global optimization problems. In the initial stage, EO randomly generates a set of particles to initiate the optimization process. In EO, the concept of concentration is used to represent the state of particles, similar to the particle positions in PSO. The algorithm expects the particles to achieve a state of balance within a mass volume, and the process of striving towards this balance state constitutes the optimization process of the algorithm, with the final balanced state being the optimal solution discovered by the algorithm. The EO algorithm generates the initial population in the following manner:

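$$C_i^{\text{initial}} = C_{\min} + \text{rand}_i \, (C_{\max} - C_{\min}), \quad i = 1, 2, \ldots, N \tag{1}$$

where $\text{rand}_i$ is a random vector in [0, 1], $C_{\min}$ and $C_{\max}$ are the lower and upper boundaries of the search region, and $N$ is the population size.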
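The initialization step described above can be sketched in a few lines. This is a minimal illustration rather than the authors' MATLAB implementation; the function name `init_population` and the bounds in the usage lines are hypothetical.

```python
import random

def init_population(n_particles, lower, upper, rng=random):
    """Draw n_particles concentration vectors uniformly inside [lower, upper].

    lower and upper are per-dimension bounds of equal length.
    """
    dim = len(lower)
    return [
        [lower[d] + rng.random() * (upper[d] - lower[d]) for d in range(dim)]
        for _ in range(n_particles)
    ]

# Usage: 30 particles in a 5-dimensional box (illustrative bounds).
random.seed(0)
population = init_population(30, [-100.0] * 5, [100.0] * 5)
```

Each dimension uses its own random draw, so the particles are spread over the whole box rather than along its diagonal.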

After the search process is started, the initial particles are updated in concentration according to the following equation:

$$C = C_{eq} + (C - C_{eq}) \cdot F + \frac{G}{\lambda V}(1 - F) \tag{2}$$

where $C_{eq}$ is the concentration of a randomly selected particle in the equilibrium pool; $F$ represents the exponential term, responsible for adjusting the global and local search behavior; $G$ represents the generation rate, responsible for local search; $\lambda$ is a random value; and $V$ is a constant with a value of 1. The second term in the equation is responsible for global exploration, while the third term is responsible for local exploitation. The equilibrium pool is constructed as follows:

$$C_{eq,pool} = \{C_{eq(1)}, C_{eq(2)}, C_{eq(3)}, C_{eq(4)}, C_{eq(ave)}\} \tag{3}$$

where $C_{eq(1)}$, $C_{eq(2)}$, $C_{eq(3)}$, and $C_{eq(4)}$ are the four particles with the best concentration in the population, which are called equilibrium candidates, and $C_{eq(ave)}$ is the mean value of these four particles. During optimization, the true equilibrium state is unknown, so the equilibrium candidates act as surrogate equilibrium states that drive the optimization process.
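The pool construction can be illustrated as follows. This sketch assumes minimization and builds the pool from the current population only; an implementation that remembers the best candidates across iterations would update the four slots incrementally instead. The function name and the toy data are hypothetical.

```python
def build_equilibrium_pool(population, fitness):
    """Return the four best particles (lowest fitness) plus their mean."""
    order = sorted(range(len(population)), key=lambda i: fitness[i])
    best_four = [list(population[i]) for i in order[:4]]
    dim = len(best_four[0])
    # Element-wise average of the four equilibrium candidates.
    average = [sum(p[d] for p in best_four) / 4.0 for d in range(dim)]
    return best_four + [average]

# Usage on a toy 2-D population scored by the sphere function.
particles = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [9.0, 9.0]]
scores = [sum(x * x for x in p) for p in particles]
pool = build_equilibrium_pool(particles, scores)
```

During the concentration update, one of the five pool members is picked at random for each particle.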

The concept of exponential term is used in EO to adjust the global search and local search behavior, and the mathematical model of the exponential term F is calculated according to the following equation:

$$F = e^{-\lambda (t - t_0)} \tag{4}$$

where $t$ is a nonlinear function of the number of iterations, and $t_0$ is a parameter that adjusts the local and global search capabilities of the algorithm. $t$ and $t_0$ are calculated according to the following equations, respectively.

$$t = \left(1 - \frac{Iter}{Max\_iter}\right)^{a_2 \frac{Iter}{Max\_iter}} \tag{5}$$

$$t_0 = \frac{1}{\lambda} \ln\left(-a_1 \, \mathrm{sign}(r - 0.5)\left[1 - e^{-\lambda t}\right]\right) + t \tag{6}$$

In Equation (5), $Iter$ and $Max\_iter$ denote the current iteration and the maximum number of iterations, respectively, and the parameter $a_2$ is responsible for adjusting the local exploitation capacity of the algorithm and is set as a constant. In Equation (6), the parameter $a_1$ is responsible for managing the global exploration capacity of the algorithm and is set as a constant. In the basic EO, $a_1$ and $a_2$ are set to 2 and 1, respectively. In addition, $r$ and $\lambda$ are random vectors in [0, 1]. Correspondingly, $\mathrm{sign}(r - 0.5)$ controls the direction of particle concentration change.
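Substituting the expression for the time parameter of Equation (6) into Equation (4) cancels the logarithm and gives the closed form F = a1 · sign(r − 0.5) · (exp(−λt) − 1). The sketch below computes the iteration-dependent time t and this closed form for a single dimension. The defaults a1 = 2 and a2 = 1 are the values commonly reported for the basic EO and should be treated as assumptions here; the function names are hypothetical.

```python
import math

def time_factor(iteration, max_iter, a2=1.0):
    """Nonlinear time t of Equation (5); decreases from 1 to 0 over the run."""
    ratio = iteration / max_iter
    return (1.0 - ratio) ** (a2 * ratio)

def exponential_term(t, lam, r, a1=2.0):
    """Closed form of F obtained by inserting Equation (6) into Equation (4)."""
    if r > 0.5:
        sign = 1.0
    elif r < 0.5:
        sign = -1.0
    else:
        sign = 0.0
    return a1 * sign * (math.exp(-lam * t) - 1.0)

# Usage: the magnitude of F shrinks as the search approaches its last iteration.
early = exponential_term(time_factor(10, 500), lam=0.8, r=0.9)
late = exponential_term(time_factor(490, 500), lam=0.8, r=0.9)
```

Because the magnitude of F falls as t decreases, the step taken relative to the equilibrium candidate becomes smaller late in the run.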

The generation rate G is the key factor, which is used to fine tune the given region and improve the solution accuracy. G is calculated according to the following equation:

$$G = G_0 \, e^{-\lambda (t - t_0)} = G_0 F \tag{7}$$

where $F$ is the exponential term, which is calculated according to Equation (4), and $G_0$ is the initial value, which is calculated according to the following equations.

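$$G_0 = GCP \, (C_{eq} - \lambda C) \tag{8}$$

$$GCP = \begin{cases} 0.5 \, r_1, & r_2 \ge GP \\ 0, & r_2 < GP \end{cases} \tag{9}$$

where $r_1$ and $r_2$ are random values in [0, 1], $GP$ is the generation probability, and the generation rate control factor $GCP$ controls whether the generation rate participates in the concentration update process.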
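The gating role of the generation rate control factor can be made concrete with a short sketch. The threshold `gp = 0.5` is the value usually quoted for the basic EO and is an assumption here, as are the function name and the toy inputs.

```python
def generation_rate(c, c_eq, lam, f, r1, r2, gp=0.5):
    """Per-dimension generation rate G = G0 * F with G0 = GCP * (C_eq - lam * C).

    c, c_eq, lam and f are equal-length lists; r1 and r2 are scalars in [0, 1].
    """
    # GCP switches the generation term on (0.5 * r1) or off (0).
    gcp = 0.5 * r1 if r2 >= gp else 0.0
    return [gcp * (ce - l * ci) * fi for ci, ce, l, fi in zip(c, c_eq, lam, f)]

# Usage: the same particle with the generation term switched on and off.
g_on = generation_rate([1.0], [2.0], [0.5], [1.0], r1=0.4, r2=0.9)
g_off = generation_rate([1.0], [2.0], [0.5], [1.0], r1=0.4, r2=0.1)
```

When the second random draw falls below the threshold, the third term of the concentration update vanishes and the particle moves using the first two terms only.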

From Equation (2), it can be seen that the concentration update mechanism consists of three terms. The first term is the equilibrium candidate that guides the particle update; the second and third terms are the concentration variations, which are responsible for global exploration and local exploitation, respectively. With the help of these three behaviors, the EO algorithm is able to achieve global exploration in the early stage and local exploitation in the later stage.
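To show how the three terms combine, the following sketch evaluates them separately for one particle and then sums them. It is an illustration under assumptions, not the authors' code: the defaults a1 = 2, a2 = 1, and gp = 0.5 are the values commonly quoted for the basic EO, and the function names are hypothetical.

```python
import math
import random

def eo_update_terms(c, c_eq, iteration, max_iter,
                    a1=2.0, a2=1.0, gp=0.5, v=1.0, rng=random):
    """Return the (equilibrium, exploration, exploitation) terms per dimension."""
    ratio = iteration / max_iter
    t = (1.0 - ratio) ** (a2 * ratio)
    r1, r2 = rng.random(), rng.random()
    gcp = 0.5 * r1 if r2 >= gp else 0.0
    equilibrium, exploration, exploitation = [], [], []
    for ci, ce in zip(c, c_eq):
        lam = 1.0 - rng.random()  # lies in (0, 1], so the division below is safe
        sign = 1.0 if rng.random() >= 0.5 else -1.0
        f = a1 * sign * (math.exp(-lam * t) - 1.0)
        g = gcp * (ce - lam * ci) * f
        equilibrium.append(ce)                          # first term: C_eq
        exploration.append((ci - ce) * f)               # second term: (C - C_eq) F
        exploitation.append(g / (lam * v) * (1.0 - f))  # third term: G/(lam V) (1 - F)
    return equilibrium, exploration, exploitation

def eo_update(c, c_eq, iteration, max_iter, **kwargs):
    """New concentration: the sum of the three terms in each dimension."""
    terms = eo_update_terms(c, c_eq, iteration, max_iter, **kwargs)
    return [sum(parts) for parts in zip(*terms)]

# Usage: at the final iteration t = 0, so F = 0 and the particle lands on C_eq.
final_position = eo_update([3.0, -1.0], [1.0, 2.0], 10, 10)
```

Separating the terms makes it easy to log how much each one contributes as the iterations progress.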

4. Proposed Improved EO

The effectiveness of the EO algorithm has been demonstrated in numerical optimization problems, engineering design cases, and real-world scenarios, attracting numerous researchers to apply this algorithm to solve problems in their respective fields. However, when faced with challenging optimization tasks, EO still exhibits insufficient exploration capabilities and can get trapped in local optima. To mitigate these limitations, this paper proposes two customized strategies that are embedded into the basic EO algorithm, aiming to develop a competitive optimization approach. In the proposed SSEO, adaptive inertia weight factors are employed to enhance global exploration tendencies, while a spiral search mechanism is introduced to expand the search space. In the following sections, detailed descriptions of the two strategies utilized in this study are presented.

The introduction of customized strategies in SSEO addresses the limitations of EO, resulting in a more robust optimization solution. By integrating adaptive inertia weight factors and the spiral search mechanism, SSEO significantly enhances its global exploration capabilities and expands the search space. These improvements provide SSEO with a competitive advantage, enabling it to effectively tackle complex optimization tasks. Extensive evaluations and comparisons with state-of-the-art algorithms across diverse domains, such as numerical optimization, engineering design, and real-world applications, confirm the exceptional performance of SSEO. These experimental findings validate the reliability and efficiency of SSEO as a powerful optimization tool.

4.1. Adaptive Inertia Weight Strategy

The basic EO algorithm employs a simple, easy-to-implement, and effective concentration updating mechanism, which enables it to rapidly converge to the optimal or a suboptimal solution on simple optimization problems. However, when dealing with complex multimodal optimization problems, the algorithm often gets trapped in local optima during the concentration updating process. The main reason is that the information carried by the equilibrium candidates is not fully utilized. Specifically, one distinctive feature of the basic EO lies in the creation of an equilibrium pool. The candidates within the equilibrium pool offer knowledge about equilibrium states and establish search patterns for particles, so the pool forms a fundamental component of the EO algorithm. By fully harnessing the information stored in the equilibrium candidates, it is possible to guide particles towards more promising regions. In the basic EO, however, this part of the process does not yield the expected results, which degrades algorithm performance. Therefore, in this study, we apply an inertia weight factor to the equilibrium candidates, helping them exert a more proactive influence on particles and consequently enhancing the particles’ ability to escape local optima. The adaptive concentration update equation, Equation (10), formed by incorporating the inertia weight, replaces the original concentration update equation. The new mathematical model for concentration updating is as follows:

Here, the coefficient applied to the equilibrium candidate is the inertia weight factor, which is calculated with the following equation:

The inertia weight factor is determined by a constant, the current iteration round, and the maximum and minimum values of the inertia weight factor.

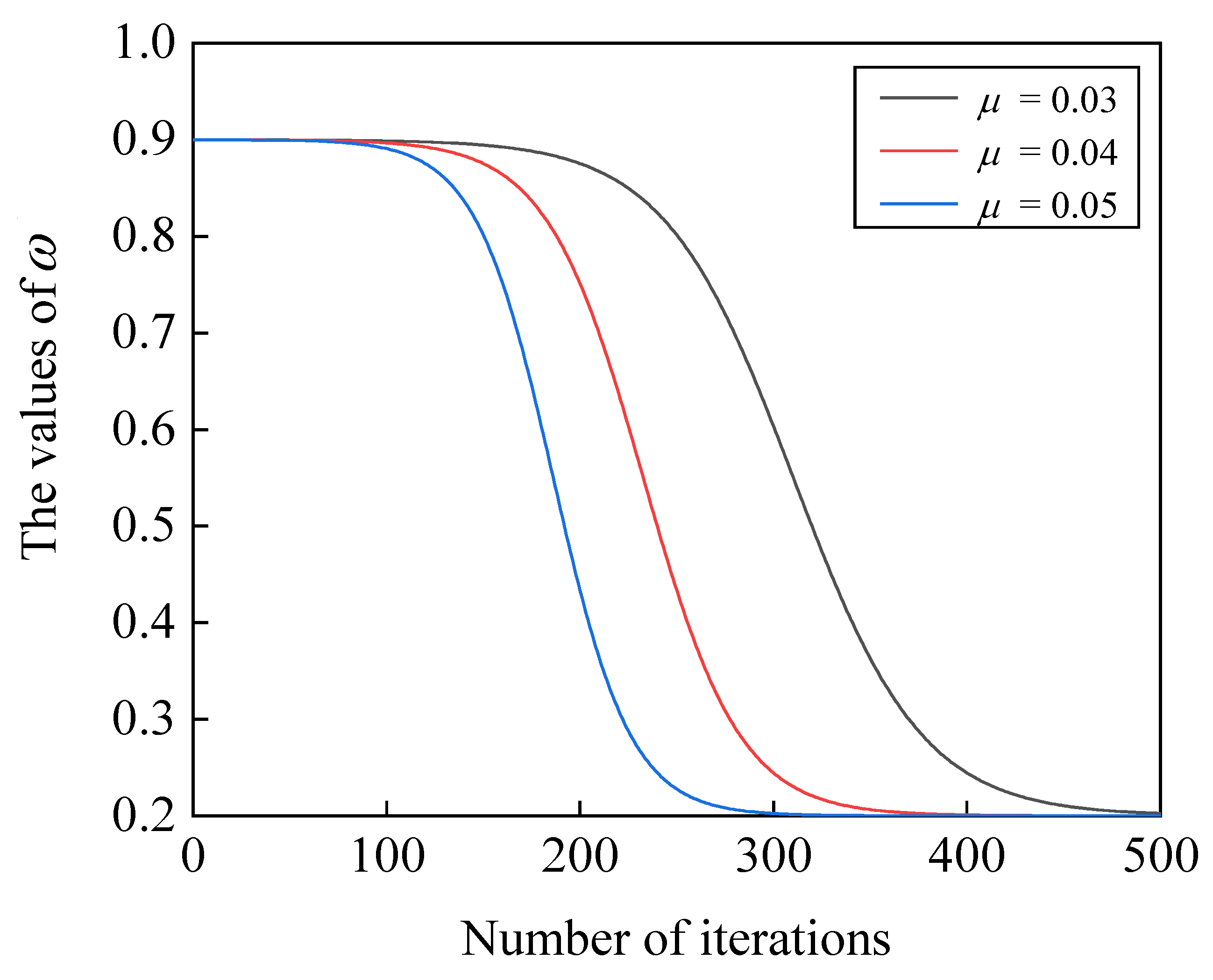

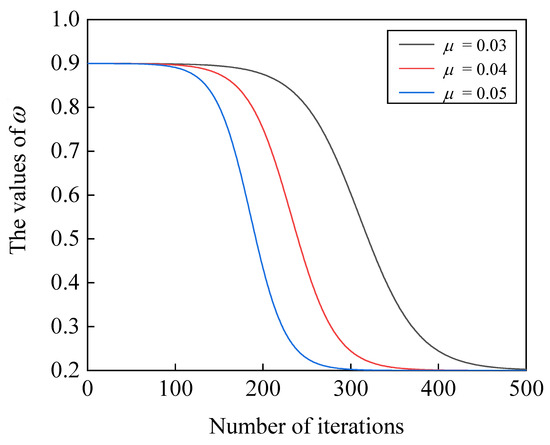

To visualize how the proposed inertia weight factor changes during the iterations, Figure 1 illustrates its nonlinear decay process. According to Figure 1, the value of the inertia weight factor decreases as the iterations progress. This provides larger concentration variations to the particles in the early stages of iteration and smaller concentration variations in the later stages. As a result, the algorithm is able to extensively explore the solution space during the initial iterations and finely adjust promising regions towards the end of the iterations.

Figure 1. Nonlinear decay of the proposed inertia weight.
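The general behavior of a nonlinearly decaying inertia weight can be illustrated with a simple power-law schedule. This is a stand-in chosen for illustration and is not the paper's own inertia weight equation: the functional form, the exponent `k`, and the default bounds of 0.55 and 0.2 are all assumptions.

```python
def inertia_weight(iteration, max_iter, w_max=0.55, w_min=0.2, k=2.0):
    """Hypothetical decay from w_max (first iteration) to w_min (last iteration).

    k controls the curvature; k = 1 would give a linear schedule.
    """
    return w_max - (w_max - w_min) * (iteration / max_iter) ** k

# Usage: sample the schedule at a few points of a 500-iteration run.
schedule = [inertia_weight(it, 500) for it in (0, 125, 250, 375, 500)]
```

A larger weight early in the run lets the equilibrium candidate induce larger concentration changes, while the smaller weight near the end favors fine adjustment.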

4.2. Spiral Search Strategy

The EO algorithm incorporates the concept of an equilibrium pool, which consists of five equilibrium candidates that stand in for the equilibrium state and guide the particles in their concentration updates. While this approach increases population diversity and helps the algorithm escape from local optima, it simultaneously reduces the particles’ ability to finely search a given region. In other words, the basic EO algorithm suffers from limited local exploitation capability. To enhance the algorithm’s local exploitation ability, the spiral search mechanism is integrated into the concentration update process of the EO algorithm. This mechanism introduces a spiral movement pattern that allows particles to search the vicinity of the current position in a more comprehensive and systematic manner. The proposed concentration update equation based on the spiral search is formulated as follows:

In this equation, the distance term denotes the distance between the current particle and the equilibrium candidate, c is a constant, and l is a random value in [0, 1]. The distance is calculated according to the following equation:

By incorporating the spiral search mechanism, the particles are guided to search a given region with a specific movement pattern. This integration addresses the inadequate local exploitation capability caused by the lack of particle interaction in the algorithm. Consequently, the algorithm’s local exploitation capacity is enhanced, leading to improved solution precision. The spiral search mechanism enables the particles to probe the local search space efficiently, allowing for finer adjustments and refinements of the solutions. As a result, the algorithm refines the obtained solutions with higher accuracy.
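A spiral move of this kind is often written as a logarithmic spiral around a guiding point, as in the whale optimization and moth-flame optimization algorithms. The sketch below uses that familiar form as an assumption; it is not necessarily the exact update equation of SSEO, and the function name and the `shape` parameter (playing the role of the constant c) are hypothetical.

```python
import math

def spiral_update(c, c_eq, l, shape=1.0):
    """Move a particle along a logarithmic spiral centred on the candidate c_eq.

    l is a random value in [0, 1]; shape sets how fast the spiral radius grows.
    """
    factor = math.exp(shape * l) * math.cos(2.0 * math.pi * l)
    # abs(ce - ci) is the per-dimension distance to the equilibrium candidate.
    return [abs(ce - ci) * factor + ce for ci, ce in zip(c, c_eq)]

# Usage: different values of l place the particle at different points
# around the equilibrium candidate.
at_zero = spiral_update([1.0], [3.0], l=0.0)
at_half = spiral_update([1.0], [3.0], l=0.5)
```

Because the cosine changes sign with l, the new position can fall on either side of the candidate, which is what lets the particle sample its neighborhood.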

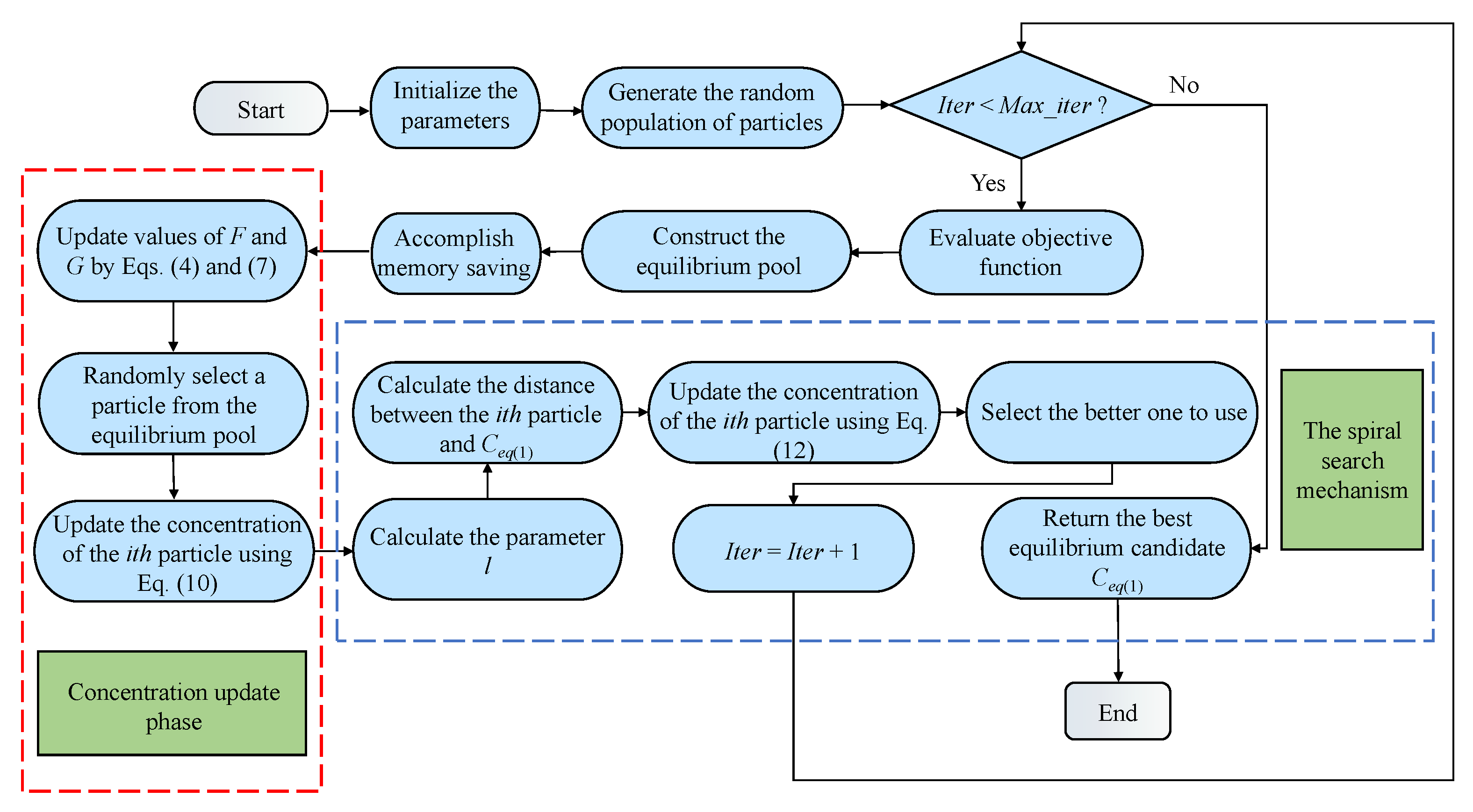

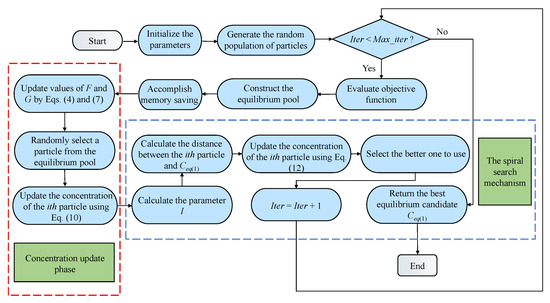

4.3. The Flowchart of SSEO

To facilitate a better understanding of the implementation details of SSEO, Figure 2 illustrates the flowchart of the SSEO. From the diagram, it can be observed that, in comparison to the basic EO algorithm, SSEO incorporates a new concentration update equation and introduces a spiral search phase.

Figure 2. The flowchart of SSEO.

5. Simulation Results and Discussion

In this section, the performance of the proposed SSEO algorithm is evaluated using the CEC 2017 benchmark function set. A comprehensive comparison is conducted with the basic EO algorithm and several popular EO variants to assess the effectiveness of SSEO. The following subsections describe the experimental setup, the methodology, and the analysis of the results. The experiments investigate the algorithm’s performance in terms of convergence speed, solution quality, and robustness across a diverse set of optimization problems.

5.1. Benchmark Functions

In this study, we conducted a performance evaluation of the algorithm using the CEC 2017 benchmark function set. The CEC 2017 test suite comprises 29 functions with diverse characteristics, which can be categorized into four types: unimodal, multimodal, hybrid, and composition. The details of the benchmark functions in the CEC 2017 test suite are reported in Table 1. By executing the SSEO algorithm on these functions, the obtained results provide comprehensive insights into the algorithm’s performance. The use of the CEC 2017 test suite ensures a thorough assessment of the algorithm’s capability to handle various types of optimization problems.

Table 1. Summary of the 29 CEC 2017 benchmark problems.

5.2. Experimental Setup

The performance of the SSEO algorithm was compared with the basic EO and several advanced EO variants using the CEC 2017 test suite. For the compared algorithms, the parameter values recommended in their original publications were used. In the SSEO algorithm, the maximum and minimum values of the inertia weight factor were set to 0.55 and 0.2, respectively. The maximum number of iterations was set to 500, and the population size was set to 30. Each algorithm was executed 30 times on each function, and the mean and variance were recorded for evaluation purposes. All algorithms were implemented in MATLAB 2016b. This experimental setup supports a fair and reliable comparison of SSEO against the other algorithms on the diverse functions in the CEC 2017 test suite.
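The repeated-run protocol can be sketched as a small harness. A plain random search and the sphere function stand in for the real optimizers and the CEC 2017 functions, so every name below is hypothetical, and the budget in the usage lines is reduced to keep the example fast.

```python
import random
import statistics

def sphere(x):
    """Toy objective standing in for a benchmark function."""
    return sum(v * v for v in x)

def random_search(objective, dim, lower, upper, pop_size, max_iter, rng):
    """Placeholder optimizer: returns the best objective value it samples."""
    best = float("inf")
    for _ in range(max_iter):
        for _ in range(pop_size):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, objective(x))
    return best

def run_trials(optimizer, objective, n_runs=30, **kwargs):
    """Run the optimizer n_runs times with distinct seeds; return (mean, variance)."""
    results = [
        optimizer(objective, rng=random.Random(seed), **kwargs)
        for seed in range(n_runs)
    ]
    # statistics.variance is the sample variance (divides by n_runs - 1).
    return statistics.mean(results), statistics.variance(results)

# Usage with a deliberately small budget.
mean_best, var_best = run_trials(
    random_search, sphere, n_runs=30,
    dim=5, lower=-100.0, upper=100.0, pop_size=10, max_iter=20,
)
```

Seeding each run separately makes the 30 runs independent yet reproducible, which matters when the same protocol is repeated for every algorithm in a comparison.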

5.3. Comparison of SSEO with Other Well-Performing EO-Based Methods

In this section, we conducted experiments using the SSEO algorithm on the 29 functions from the CEC 2017 test suite. The performance of SSEO was compared against the basic EO, recently reported EO variants, metaheuristic algorithms based on the spiral search mechanism, and other well-performing metaheuristic algorithms. The EO variants included in the comparison were mEO [41], LWMEO [43], ISEO [45], and IEO [42]. The metaheuristic algorithms based on the spiral search mechanism included MFO [48], DMMFO [49], and WEMFO [50]. The other well-performing metaheuristic algorithms included PSO [8] and OOSSA [51]. Table 2 lists the parameter settings of the compared algorithms. Table 3 lists the results obtained by these algorithms on 30-dimensional functions, and the statistics of the corresponding Wilcoxon signed-rank tests are reported in Table 4. Table 5 lists the results obtained by these algorithms on 100-dimensional functions, and the statistics of the corresponding Wilcoxon signed-rank tests are shown in Table 6. The symbols “+/=/−” in Table 4 and Table 6 represent better than, similar to, and inferior to, respectively. These tables provide a comprehensive evaluation of the algorithms’ performance and facilitate a comparative analysis of their optimization capabilities across various test functions.

Table 2. Parameter settings of the ten algorithms.

Table 3. Comparisons of eleven algorithms on CEC 2017 benchmark functions with 30 dimensions.

Table 4. Statistical conclusions based on Wilcoxon signed-rank test on 30-dimensional benchmark problems.

Table 5. Comparisons of eleven algorithms on CEC 2017 benchmark functions with 100 dimensions.

Table 6. Statistical conclusions based on Wilcoxon signed-rank test on 100-dimensional benchmark problems.

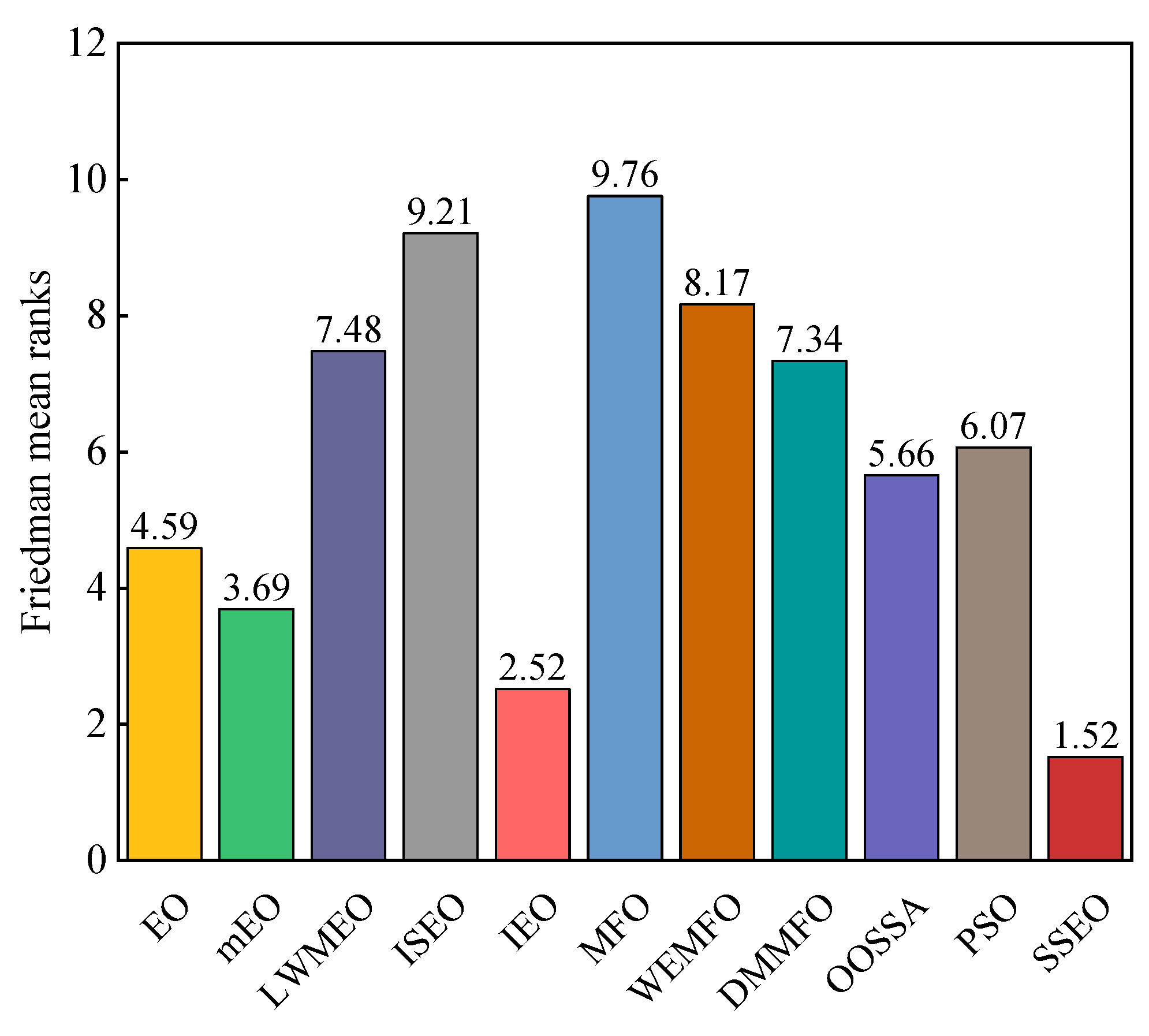

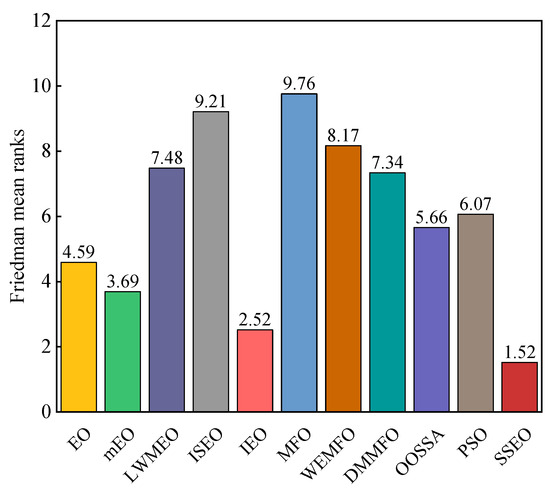

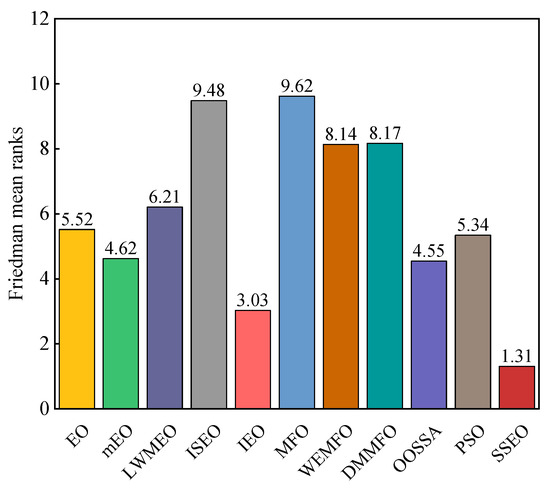

According to Table 3, the SSEO algorithm achieved the best performance on more than half of the functions. Specifically, SSEO outperformed MFO, WEMFO, DMMFO, and OOSSA on all benchmark functions. Among the 29 functions evaluated, SSEO outperformed the basic EO on 28 functions, the exception being F23. In comparison to IEO, SSEO surpassed it on 22 functions but fell behind on 7 functions. When compared to LWMEO, SSEO outperformed it on 25 functions but was surpassed on F4 and F13. With the exception of F17, F20, and F27, SSEO demonstrated better performance than mEO on all functions. SSEO performed worse than ISEO on F27 but outperformed it on the other functions. SSEO was superior to PSO on 28 functions, the exception being F4. Additionally, Figure 3 presents the Friedman average ranking results of the algorithms on these functions. According to Figure 3, SSEO obtained the highest ranking, followed by IEO, mEO, EO, OOSSA, PSO, DMMFO, LWMEO, WEMFO, ISEO, and MFO. Moreover, according to Table 4, the p-values of the Wilcoxon signed-rank tests are less than 0.05 on the vast majority of functions, which indicates a significant difference between SSEO and the other algorithms. Based on these analyses, we can conclude that the experimental results favor SSEO over the other algorithms.

Figure 3. Friedman mean ranks obtained by the employed algorithms on CEC 2017 benchmark functions with 30 dimensions.

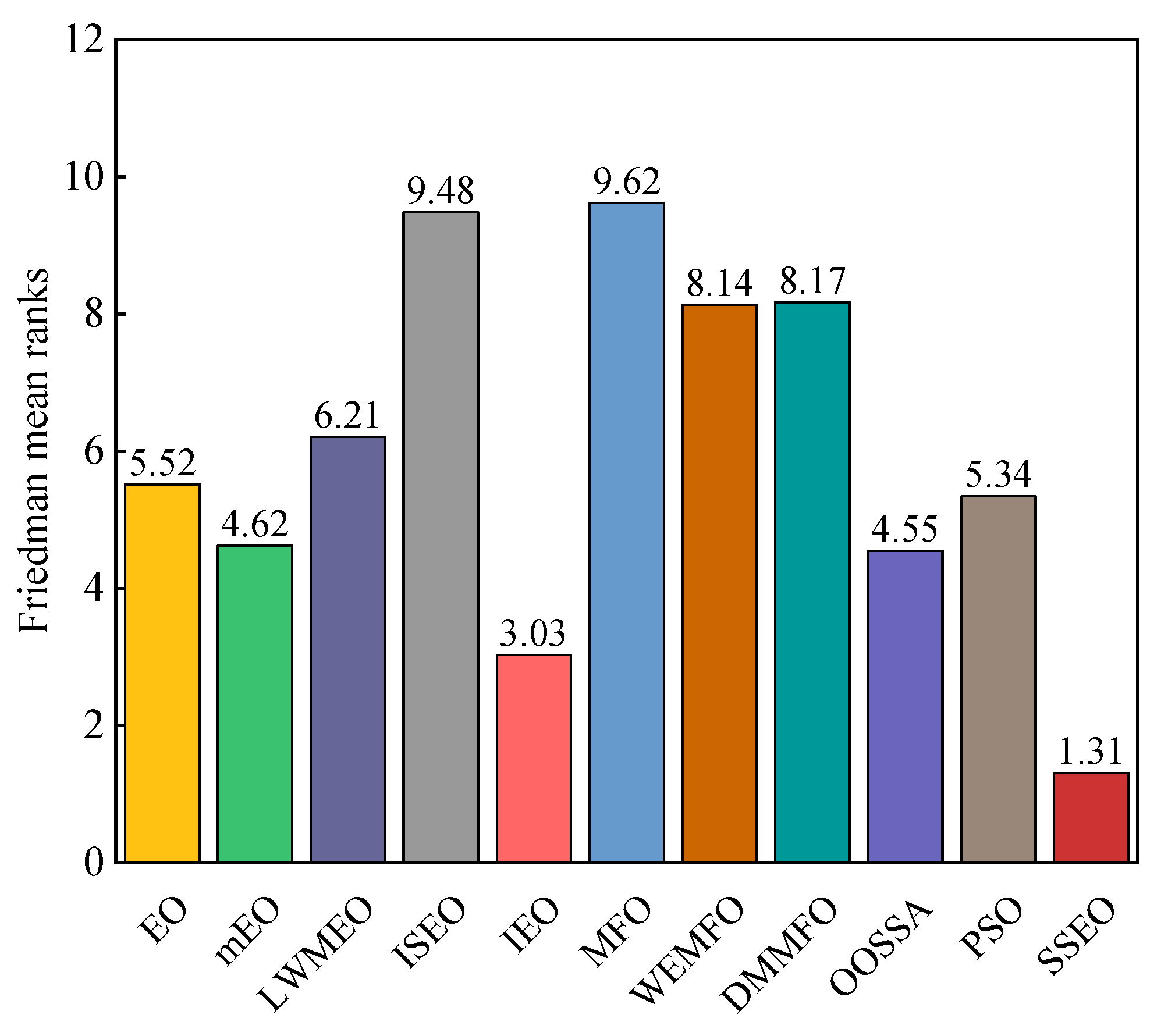

From the results reported in Table 5, it can be observed that SSEO achieved the best performance on most of the benchmark functions. In pairwise comparisons, SSEO outperformed the basic EO across all test cases. SSEO also beat LWMEO, ISEO, MFO, WEMFO, and DMMFO across all benchmark functions. SSEO exhibited better performance than IEO on a significant number of functions and inferior performance in a few cases. Except for F11, SSEO performed better than mEO on all functions. SSEO was inferior to OOSSA on one function but surpassed it on 28 functions. Regarding PSO, SSEO was inferior on F25 and outperformed PSO on the remaining functions. The Friedman average ranking results of these algorithms on the 29 100-dimensional functions are plotted in Figure 4. According to Figure 4, the proposed SSEO obtained the highest ranking, followed by IEO, OOSSA, mEO, PSO, EO, LWMEO, WEMFO, DMMFO, ISEO, and MFO. Furthermore, based on Table 6, the p-values of the Wilcoxon signed-rank tests are below 0.05 in almost all cases. This shows that there is a significant difference between the SSEO algorithm and the comparative algorithms.

Figure 4. Friedman mean ranks obtained by the employed algorithms on CEC 2017 benchmark functions with 100 dimensions.

Based on these analyses, we can conclude that the experimental results consistently support the superior performance of SSEO over the other algorithms.
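Friedman mean ranks of the kind plotted in Figures 3 and 4 are obtained by ranking the algorithms on each function and averaging the ranks over all functions. The sketch below shows the computation on made-up numbers; the values are purely illustrative and are not taken from Tables 3 or 5.

```python
def average_ranks(values):
    """Rank values ascending (1 = smallest error); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of entries tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def friedman_mean_ranks(results):
    """results[f][a] is the mean error of algorithm a on function f."""
    n_algorithms = len(results[0])
    totals = [0.0] * n_algorithms
    for row in results:
        for a, rank in enumerate(average_ranks(row)):
            totals[a] += rank
    return [total / len(results) for total in totals]

# Usage: three hypothetical algorithms on three hypothetical functions.
toy_results = [
    [1.0, 2.0, 3.0],
    [1.0, 3.0, 2.0],
    [2.0, 2.0, 5.0],
]
mean_ranks = friedman_mean_ranks(toy_results)
```

The algorithm with the smallest mean rank is the one described in the text as obtaining the highest ranking.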

6. Architecture of Mobile Robot Path Planning Using SSEO

The MRPP problem in autonomous mobile robots is a pivotal issue in robotics. This problem can be transformed into an optimization problem and solved using metaheuristic algorithms. In [52], the SSA algorithm was employed for the MRPP problem. The developed algorithm was tested in different environments, and the results showed that the algorithm was able to plan reasonable obstacle-free paths for autonomous mobile robots. In [53], a PSO-based MRPP approach was developed, and comprehensive experiments validated the effectiveness of the introduced method. In [54], a navigation strategy for a mobile robot encountering stationary obstacles was proposed using the FA algorithm. Simulation results show that the method successfully achieves the three basic objectives of path length, path smoothness, and path safety. In [55], the ABC algorithm is used in the MRPP problem to help autonomous mobile robots to generate suitable paths. The effectiveness of the algorithm is verified by simulating the algorithm under two terrains. In [56], GWO was employed for MRPP, and simulation outcomes highlighted its favorable performance in terms of path length and obstacle avoidance. In this section, we employ SSEO to address the MRPP problem and compare it with several classical metaheuristic algorithms. This evaluation aims to assess SSEO’s performance in real-world scenarios and validate its effectiveness in tackling practical optimization challenges. The empirical assessment intends to showcase SSEO’s efficacy in practical problem-solving and offer valuable insights for its practical applications.

6.1. Robot Path Planning Problem Description

In simple terms, the objective of the MRPP problem is to find an obstacle-free path from a starting point to a destination point. This process takes into consideration two main factors: minimizing the path length and avoiding collisions with obstacles. Based on these two factors, the following objective function has been devised:

Here, L is the path length. The other two quantities in the objective function are the penalty factor and a flag variable that indicates whether an interpolated point lies inside one of the threatening areas; this flag is used to determine whether the route collides with an obstacle.

The objective function of the MRPP problem is designed to balance the trade-off between finding the shortest path and ensuring obstacle avoidance. It combines the consideration of path length minimization with the incorporation of collision avoidance constraints. By formulating the problem in this way, the objective function guides the optimization algorithm, such as SSEO, to explore feasible solutions that optimize both path length and obstacle avoidance simultaneously. This formulation enables the algorithm to search for efficient and collision-free paths for the mobile robot, addressing the complexities of real-world scenarios.
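To make the trade-off between path length and collision avoidance concrete, the following sketch evaluates a penalized path cost for a polyline path. It is only an illustration under stated assumptions: the multiplicative form `length * (1 + penalty * flag)`, the circular obstacles, the linear interpolation between waypoints, and all function and parameter names are hypothetical choices, not the exact formulation used in this paper.

```python
import math

def path_length(points):
    """Total Euclidean length of the polyline through the given waypoints."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

def interpolate(points, per_segment=10):
    """Sample each segment linearly so collisions between waypoints are caught."""
    samples = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        for s in range(per_segment):
            t = s / per_segment
            samples.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    samples.append(points[-1])
    return samples

def collision_flag(samples, obstacles):
    """Return 1 if any sample lies inside a circular obstacle (cx, cy, r), else 0."""
    for x, y in samples:
        for cx, cy, r in obstacles:
            if math.hypot(x - cx, y - cy) < r:
                return 1
    return 0

def mrpp_cost(points, obstacles, penalty=100.0):
    """Assumed cost: path length, inflated by the penalty when the path collides."""
    length = path_length(points)
    flag = collision_flag(interpolate(points), obstacles)
    return length * (1.0 + penalty * flag)

# Hypothetical map: one circular obstacle between start (0, 0) and goal (10, 0).
obstacles = [(5.0, 0.0, 1.0)]
straight = [(0.0, 0.0), (10.0, 0.0)]             # shortest, but collides
detour = [(0.0, 0.0), (5.0, 3.0), (10.0, 0.0)]   # longer, but collision-free
print(mrpp_cost(straight, obstacles), mrpp_cost(detour, obstacles))
```

With this kind of cost, an optimizer that minimizes it is pushed toward the collision-free detour even though the straight path is geometrically shorter.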

6.2. Simulation Results

In this section, the SSEO algorithm is compared with several well-established metaheuristic algorithms whose performance on the MRPP problem has been validated. The MRPP problem is simulated on five different maps to evaluate the performance of these algorithms. The algorithms selected for comparison are ABC [7], PSO [8], GWO [9], FA [10], and SSA [13], which have been widely used and studied in the field. Table 7 lists the parameter settings of the algorithms.

Table 7.

Parameter settings of the algorithms.

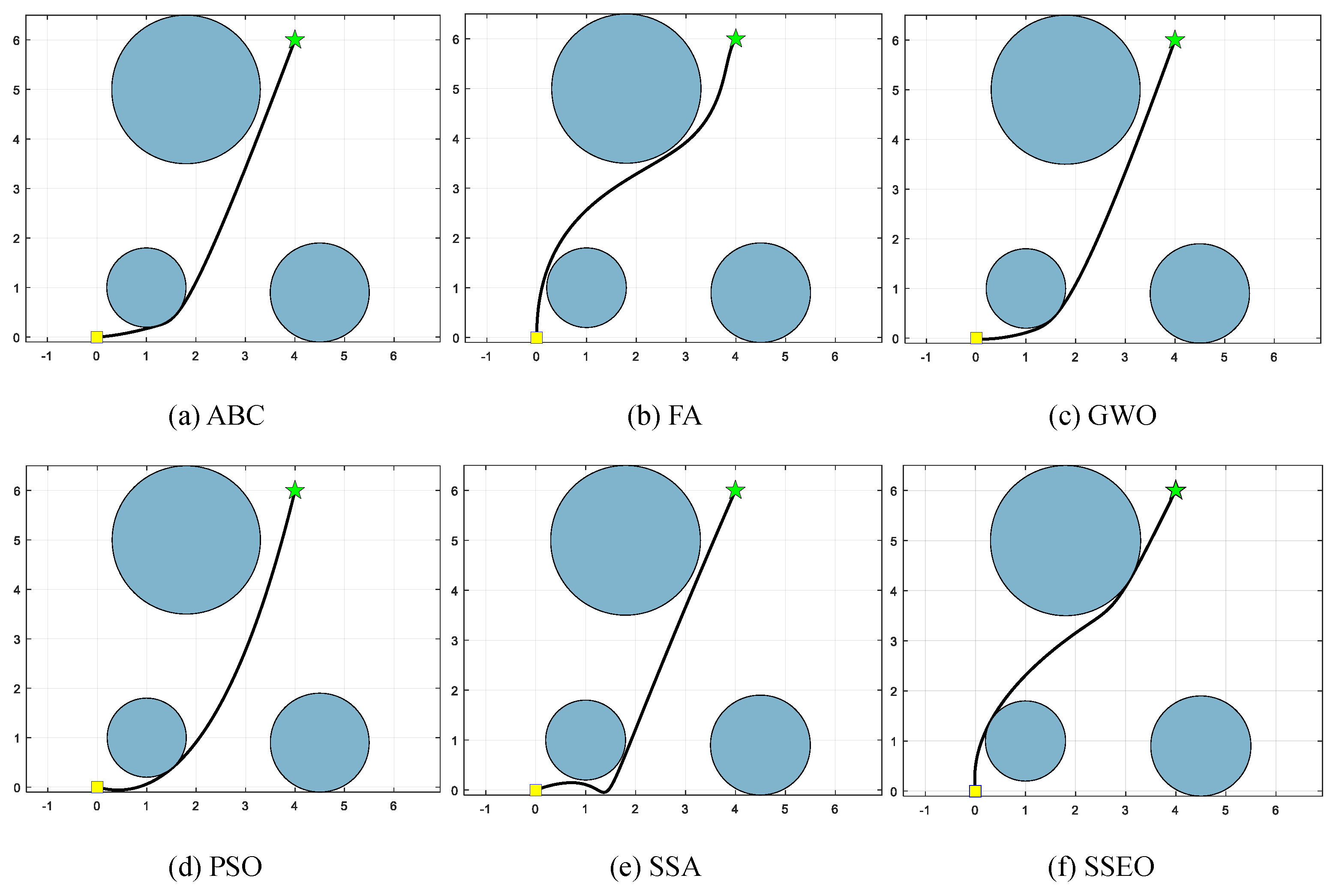

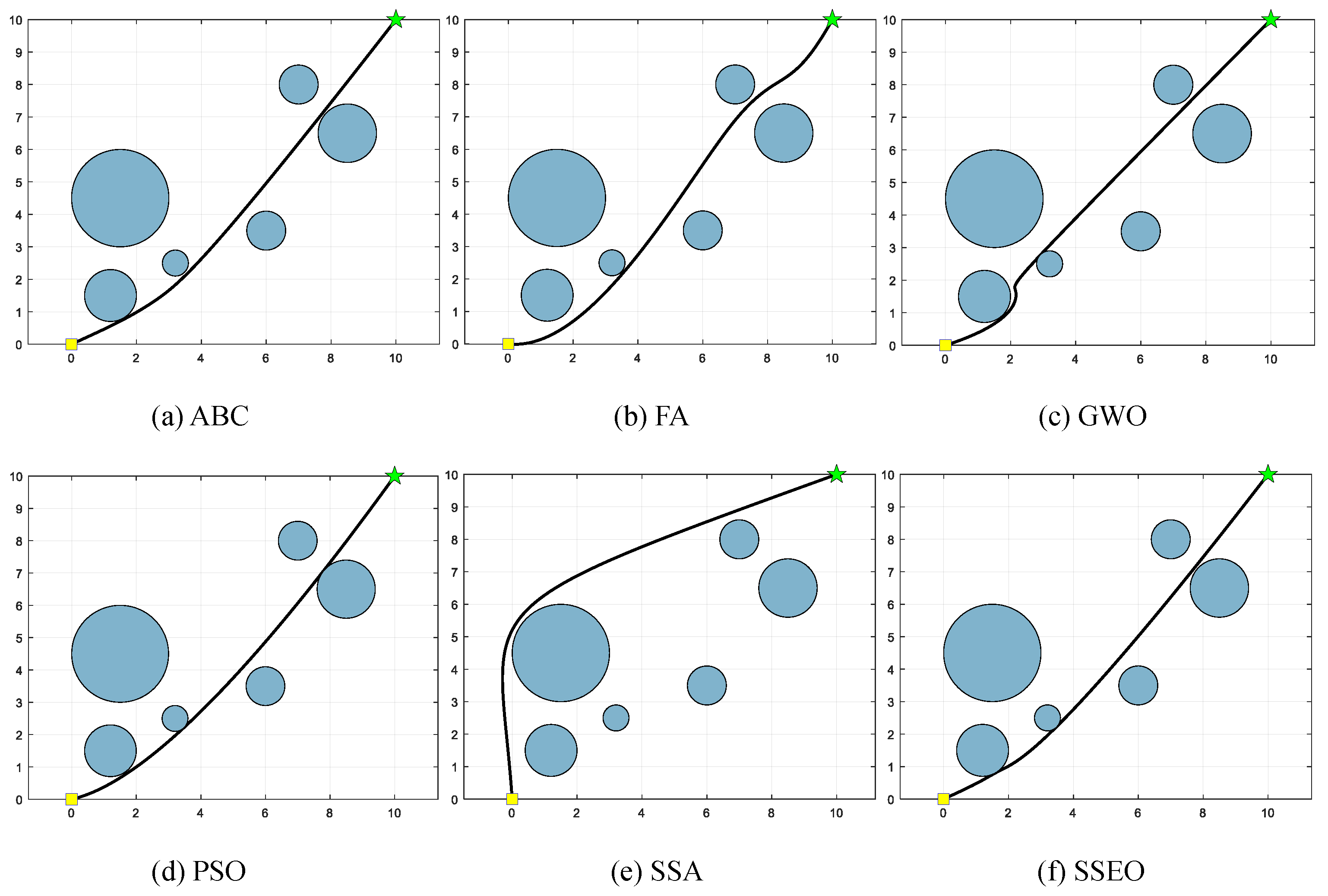

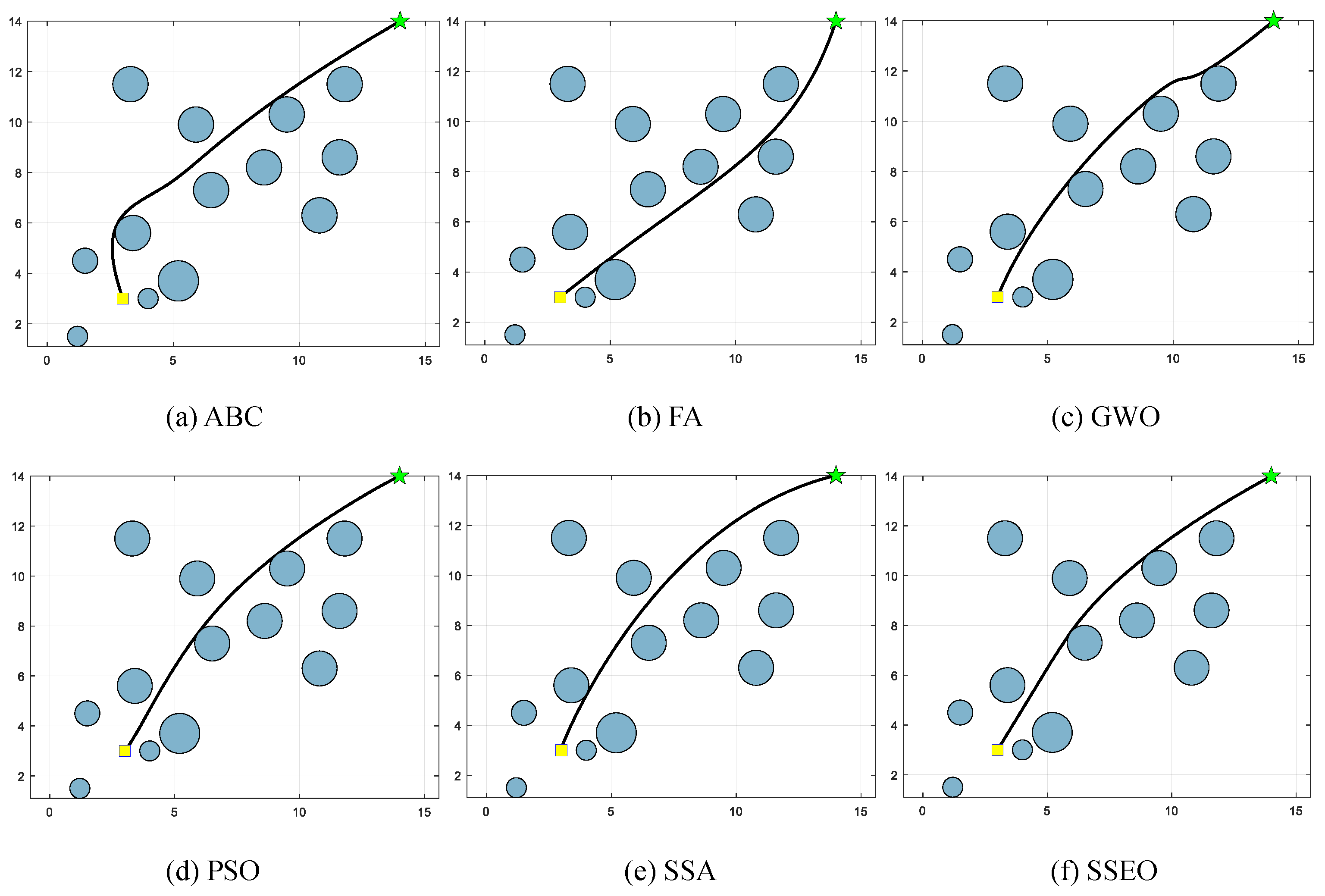

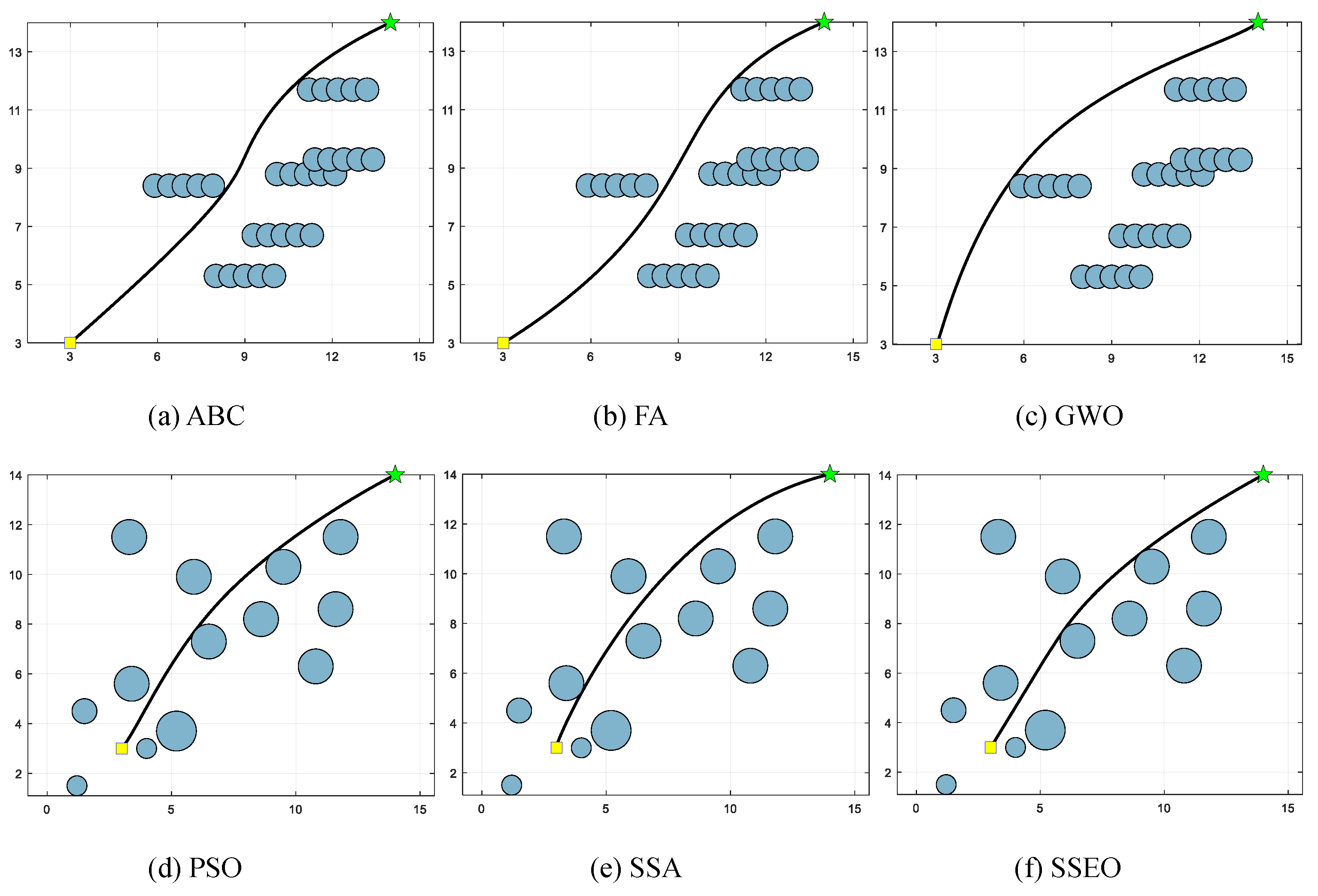

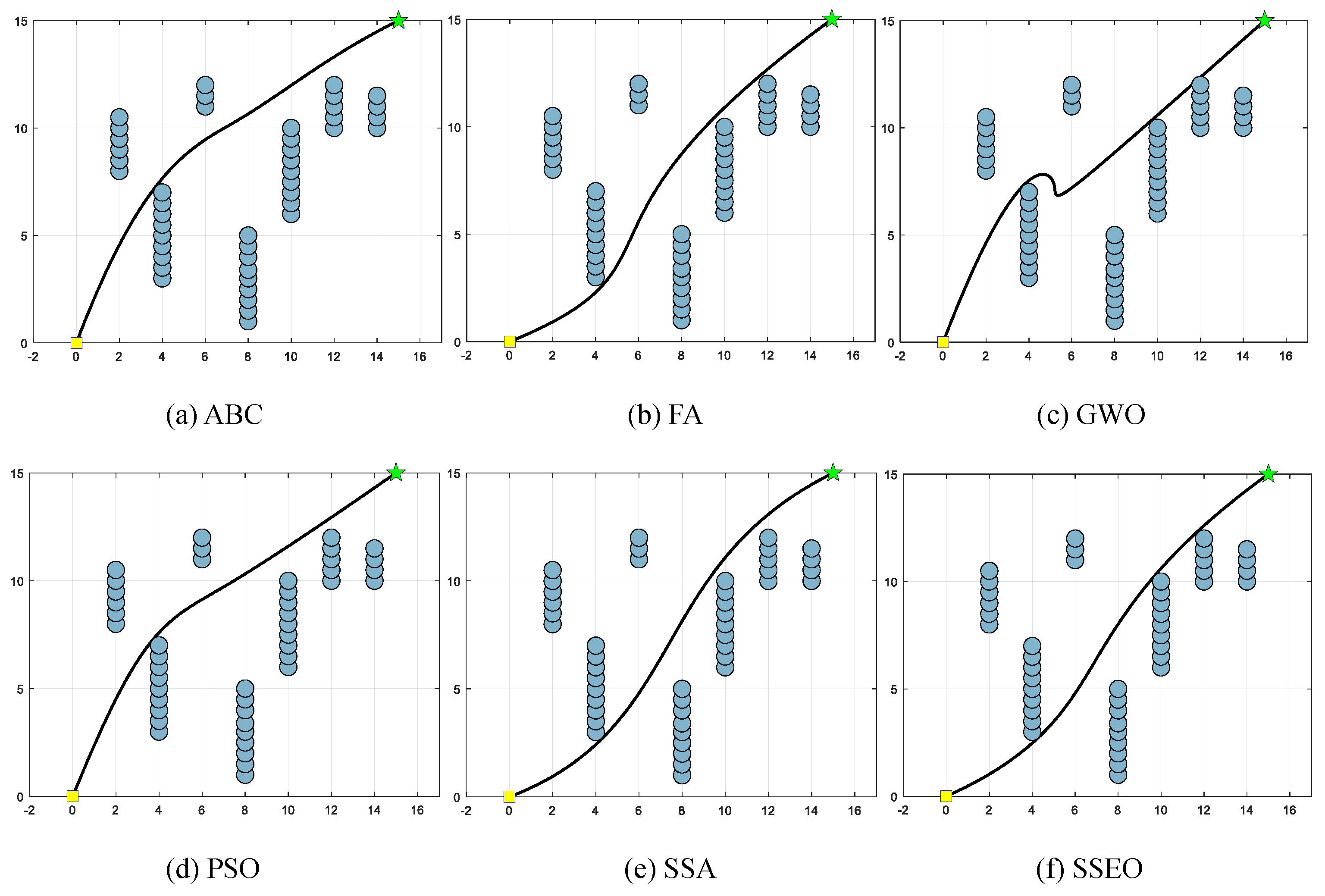

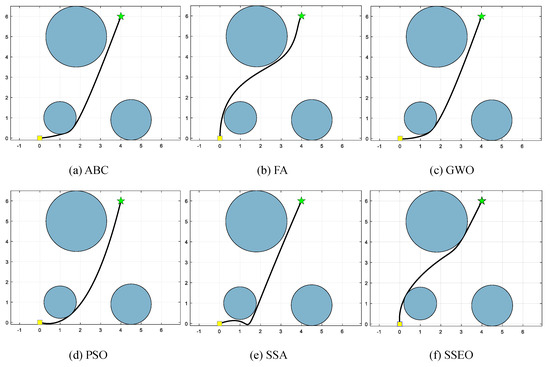

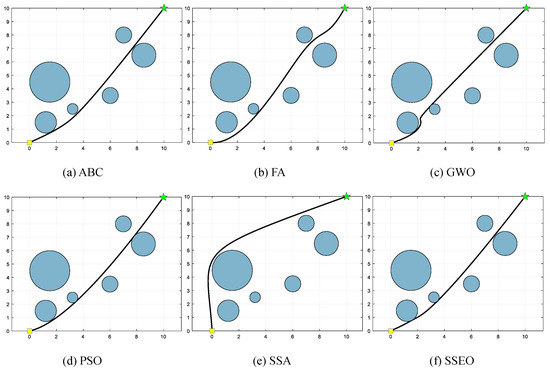

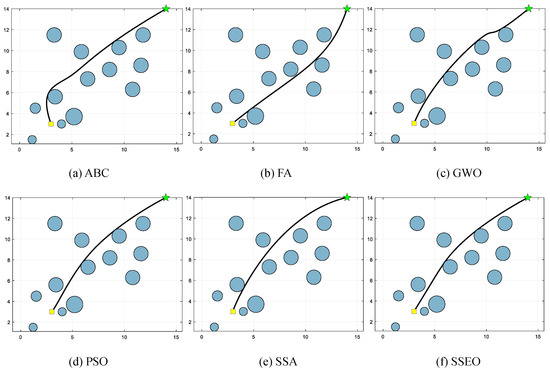

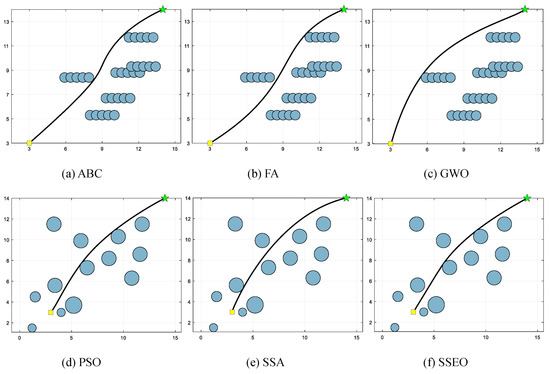

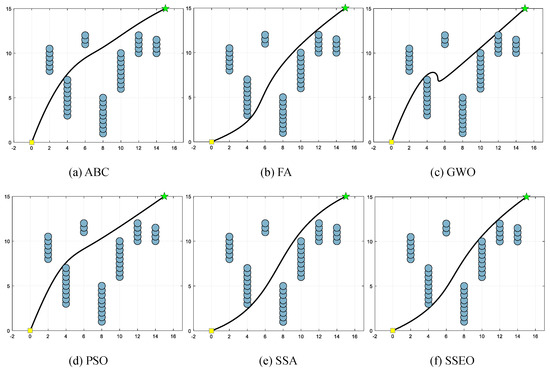

Five environment settings, derived from [51], are chosen for simulating the MRPP problem. The details of these environment settings are presented in Table 8. The path lengths obtained by the algorithms are shown in Table 9, and the corresponding trajectories are presented in Figures 5–9, in which the green star indicates the end point.

Table 8.

Type of environment.

Table 9.

The minimum route length comparison of SSEO-based MRPP method and comparison approaches under five environmental setups.

Figure 5.

Map 1: (a) ABC, (b) FA, (c) GWO, (d) PSO, (e) SSA, and (f) SSEO.

Figure 6.

Map 2: (a) ABC, (b) FA, (c) GWO, (d) PSO, (e) SSA, and (f) SSEO.

Figure 7.

Map 3: (a) ABC, (b) FA, (c) GWO, (d) PSO, (e) SSA, and (f) SSEO.

Figure 8.

Map 4: (a) ABC, (b) FA, (c) GWO, (d) PSO, (e) SSA, and (f) SSEO.

Figure 9.

Map 5: (a) ABC, (b) FA, (c) GWO, (d) PSO, (e) SSA, and (f) SSEO.

According to Table 9, the SSEO algorithm consistently produces the shortest trajectory among the compared algorithms in all five environment settings. This indicates that SSEO possesses strong global optimization capability and is able to avoid local optima. In summary, the SSEO algorithm shows promising potential as a path planner for the MRPP problem, outperforming the benchmark methods in terms of path length while producing collision-free paths in diverse environments.

7. Conclusions

This study proposes an improved variant of EO, called SSEO, by introducing an adaptive inertia weight factor and a nature-inspired spiral search mechanism. In SSEO, the overall search framework of the basic EO is retained while incorporating the adaptive inertia weight factor and spiral search mechanism to overcome the imbalanced exploitation–exploration trade-off and suboptimal convergence performance encountered by the basic EO. The performance of the proposed SSEO algorithm is evaluated using 29 functions from the CEC 2017 benchmark test suite, and comparisons are made against the basic EO, improved EO variants, and spiral search-based metaheuristic techniques. The test results demonstrate the effectiveness of SSEO. Furthermore, the capability of the SSEO algorithm to solve real-world problems is tested using an MRPP problem. Comparative results with several classical metaheuristic algorithms reveal that SSEO is a promising path planner.

Author Contributions

Conceptualization, H.D. and Z.W.; methodology, Y.L. and Z.W.; software, Z.W. and G.J.; validation, H.D., Y.L. and P.H.; formal analysis, Z.W., G.J. and G.D.; investigation, Y.L. and Z.W.; resources, H.D. and G.D.; data curation, Z.W. and P.H.; writing—original draft preparation, Y.L. and Z.W.; writing—review and editing, Z.W.; visualization, H.D. and G.J.; supervision, P.H.; project administration, H.D. and P.H.; funding acquisition, P.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China (Grant No. 61461053, 61461054, and 61072079); the Yunnan Provincial Education Department Scientific Research Fund Project (Grant No. 2022Y008); the Yunnan University’s Research Innovation Fund for Graduate Students, China (Grant No. KC-22222706); and the Ding Hongwei Expert Grassroots Research Station of Youbei Technology Co., Yunnan Province.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors would like to thank the editor and the anonymous reviewers for their helpful and constructive comments, which have helped us to improve the manuscript significantly.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gharehchopogh, F.S. Quantum-inspired metaheuristic algorithms: Comprehensive survey and classification. Artif. Intell. Rev. 2022, 56, 5479–5543.

- Wang, Z.; Ding, H.; Yang, J.; Wang, J.; Li, B.; Yang, Z.; Hou, P. Advanced orthogonal opposition-based learning-driven dynamic salp swarm algorithm: Framework and case studies. IET Control Theory Appl. 2022, 16, 945–971.

- Kaveh, A.; Zaerreza, A. A new framework for reliability-based design optimization using metaheuristic algorithms. Structures 2022, 38, 1210–1225.

- Wang, Z.; Ding, H.; Yang, J.; Hou, P.; Dhiman, G.; Wang, J.; Yang, Z.; Li, A. Orthogonal pinhole-imaging-based learning salp swarm algorithm with self-adaptive structure for global optimization. Front. Bioeng. Biotechnol. 2022, 10, 1018895.

- Abdel-Basset, M.; Mohamed, R.; Azeem, S.A.A.; Jameel, M.; Abouhawwash, M. Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowl.-Based Syst. 2023, 268, 110454.

- Khishe, M.; Mosavi, M.R. Chimp optimization algorithm. Expert Syst. Appl. 2020, 149, 113338.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 2018, 22, 387–408.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Gandomi, A.H.; Yang, X.-S.; Alavi, A.H. Mixed variable structural optimization using firefly algorithm. Comput. Struct. 2011, 89, 2325–2336.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Choi, K.; Jang, D.-H.; Kang, S.-I.; Lee, J.-H.; Chung, T.-K.; Kim, H.-S. Hybrid Algorithm Combing Genetic Algorithm with Evolution Strategy for Antenna Design. IEEE Trans. Magn. 2015, 52, 1–4.

- Price, K.V. Differential evolution. In Handbook of Optimization: From Classical to Modern Approach; Springer: Berlin/Heidelberg, Germany, 2013; pp. 187–214.

- Civicioglu, P. Backtracking Search Optimization Algorithm for numerical optimization problems. Appl. Math. Comput. 2013, 219, 8121–8144.

- Salimi, H. Stochastic Fractal Search: A powerful metaheuristic algorithm. Knowl.-Based Syst. 2015, 75, 1–18.

- Amali, D.G.B.; Dinakaran, M. Wildebeest herd optimization: A new global optimization algorithm inspired by wildebeest herding behaviour. J. Intell. Fuzzy Syst. 2019, 37, 8063–8076.

- Bertsimas, D.; Tsitsiklis, J. Simulated annealing. Stat. Sci. 1993, 8, 10–15.

- Erol, O.K.; Eksin, I. A new optimization method: Big bang–big crunch. Adv. Eng. Softw. 2006, 37, 106–111.

- Formato, R.A. Central force optimization. Prog. Electromagn. Res. 2007, 77, 425–491.

- Hosseini, H.S. The intelligent water drops algorithm: A nature-inspired swarm-based optimization algorithm. Int. J. Bio-Inspired Comput. 2009, 1, 71–79.

- Nguyen, T.-T.; Wang, H.-J.; Dao, T.-K.; Pan, J.-S.; Liu, J.-H.; Weng, S. An Improved Slime Mold Algorithm and its Application for Optimal Operation of Cascade Hydropower Stations. IEEE Access 2020, 8, 226754–226772.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Abualigah, L.; Elaziz, M.A.; Sumari, P.; Khasawneh, A.M.; Alshinwan, M.; Mirjalili, S.; Shehab, M.; Abuaddous, H.Y.; Gandomi, A.H. Black hole algorithm: A comprehensive survey. Appl. Intell. 2022, 52, 11892–11915.

- Sadollah, A.; Eskandar, H.; Lee, H.M.; Yoo, D.G.; Kim, J.H. Water cycle algorithm: A detailed standard code. SoftwareX 2016, 5, 37–43.

- Shareef, H.; Ibrahim, A.A.; Mutlag, A.H. Lightning search algorithm. Appl. Soft Comput. 2015, 36, 315–333.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-Verse Optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2015, 27, 495–513.

- Kaveh, A.; Dadras, A. A novel meta-heuristic optimization algorithm: Thermal exchange optimization. Adv. Eng. Softw. 2017, 110, 69–84.

- Hashim, F.A.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W.; Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 2019, 101, 646–667.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551.

- Goodarzimehr, V.; Shojaee, S.; Hamzehei-Javaran, S.; Talatahari, S. Special relativity search: A novel metaheuristic method based on special relativity physics. Knowl.-Based Syst. 2022, 257, 109484.

- Dai, C.; Chen, W.; Zhu, Y.; Zhang, X. Seeker optimization algorithm for optimal reactive power dispatch. IEEE Trans. Power Syst. 2009, 24, 1218–1231.

- Kaveh, A.; Talatahari, S. Optimum design of skeletal structures using imperialist competitive algorithm. Comput. Struct. 2010, 88, 1220–1229.

- Cheng, S.; Qin, Q.; Chen, J.; Shi, Y. Brain storm optimization algorithm: A review. Artif. Intell. Rev. 2016, 46, 445–458.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Abdel-Basset, M.; Mohamed, R.; Mirjalili, S.; Chakrabortty, R.K.; Ryan, M.J. Solar photovoltaic parameter estimation using an improved equilibrium optimizer. Sol. Energy 2020, 209, 694–708.

- Ahmed, S.; Ghosh, K.K.; Mirjalili, S.; Sarkar, R. AIEOU: Automata-based improved equilibrium optimizer with U-shaped transfer function for feature selection. Knowl.-Based Syst. 2021, 228, 107283.

- Wunnava, A.; Naik, M.K.; Panda, R.; Jena, B.; Abraham, A. A novel interdependence based multilevel thresholding technique using adaptive equilibrium optimizer. Eng. Appl. Artif. Intell. 2020, 94, 103836.

- Gupta, S.; Deep, K.; Mirjalili, S. An efficient equilibrium optimizer with mutation strategy for numerical optimization. Appl. Soft Comput. 2020, 96, 106542.

- Houssein, E.H.; Helmy, B.E.-D.; Oliva, D.; Jangir, P.; Premkumar, M.; Elngar, A.A.; Shaban, H. An efficient multi-thresholding based COVID-19 CT images segmentation approach using an improved equilibrium optimizer. Biomed. Signal Process. Control 2022, 73, 103401.

- Liu, J.; Li, W.; Li, Y. LWMEO: An efficient equilibrium optimizer for complex functions and engineering design problems. Expert Syst. Appl. 2022, 198, 116828.

- Tan, W.-H.; Mohamad-Saleh, J. A hybrid whale optimization algorithm based on equilibrium concept. Alex. Eng. J. 2023, 68, 763–786.

- Zhang, X.; Lin, Q. Information-utilization strengthened equilibrium optimizer. Artif. Intell. Rev. 2022, 55, 4241–4274.

- Minocha, S.; Singh, B. A novel equilibrium optimizer based on levy flight and iterative cosine operator for engineering optimization problems. Expert Syst. 2022, 39, e12843.

- Balakrishnan, K.; Dhanalakshmi, R.; Akila, M.; Sinha, B.B. Improved equilibrium optimization based on Levy flight approach for feature selection. Evol. Syst. 2023, 14, 735–746.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Ma, L.; Wang, C.; Xie, N.-G.; Shi, M.; Ye, Y.; Wang, L. Moth-flame optimization algorithm based on diversity and mutation strategy. Appl. Intell. 2021, 51, 5836–5872.

- Shan, W.; Qiao, Z.; Heidari, A.A.; Chen, H.; Turabieh, H.; Teng, Y. Double adaptive weights for stabilization of moth flame optimizer: Balance analysis, engineering cases, and medical diagnosis. Knowl.-Based Syst. 2021, 214, 106728.

- Wang, Z.; Ding, H.; Yang, Z.; Li, B.; Guan, Z.; Bao, L. Rank-driven salp swarm algorithm with orthogonal opposition-based learning for global optimization. Appl. Intell. 2022, 52, 7922–7964.

- Ding, H.; Cao, X.; Wang, Z.; Dhiman, G.; Hou, P.; Wang, J.; Li, A.; Hu, X. Velocity clamping-assisted adaptive salp swarm algorithm: Balance analysis and case studies. Math. Biosci. Eng. 2022, 19, 7756–7804.

- Song, B.; Wang, Z.; Zou, L. An improved PSO algorithm for smooth path planning of mobile robots using continuous high-degree Bezier curve. Appl. Soft Comput. 2021, 100, 106960.

- Hidalgo-Paniagua, A.; Vega-Rodríguez, M.A.; Ferruz, J.; Pavón, N. Solving the multi-objective path planning problem in mobile robotics with a firefly-based approach. Soft Comput. 2017, 21, 949–964.

- Xu, F.; Li, H.; Pun, C.-M.; Hu, H.; Li, Y.; Song, Y.; Gao, H. A new global best guided artificial bee colony algorithm with application in robot path planning. Appl. Soft Comput. 2020, 88, 106037.

- Ou, Y.; Yin, P.; Mo, L. An Improved Grey Wolf Optimizer and Its Application in Robot Path Planning. Biomimetics 2023, 8, 84.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).