Abstract

The volume and diversity of digital information have led to a growing reliance on Machine Learning (ML) techniques, such as Natural Language Processing (NLP), for interpreting and accessing appropriate data. While vector and graph embeddings represent data for similarity tasks, current state-of-the-art pipelines lack guaranteed explainability and fail to accurately determine similarity for given full texts. The same considerations apply to classifiers exploiting generative language models with logical prompts, which fail to correctly distinguish between logical implication, indifference, and inconsistency, despite being explicitly trained to recognise the first two classes. To address this, we present a novel pipeline designed for hybrid explainability. Our methodology combines graphs and logic to produce First-Order Logic (FOL) representations, creating machine- and human-readable representations through Montague Grammar (MG). The preliminary results indicate the effectiveness of this approach in accurately capturing full-text similarity. To the best of our knowledge, this is the first approach to differentiate between implication, inconsistency, and indifference for text classification tasks. To address the limitations of existing approaches, we use three self-contained datasets annotated for this three-way classification task to determine the suitability of these approaches in capturing sentence structure equivalence, logical connectives, and spatiotemporal reasoning. We also use these data to compare the proposed method with language models pre-trained for detecting sentence entailment. The results show that the proposed method outperforms state-of-the-art models, indicating that natural language understanding cannot be easily generalised by training over extensive document corpora. This work offers a step toward more transparent and reliable Information Retrieval (IR) from extensive textual data.

1. Introduction

Factoid sentences are commonly used to characterise news [1] and word definitions from dictionaries [2,3], as they can easily be used to recognise conflicting opinions; they also make up the majority of sentences contained in Knowledge Bases (KBs) such as ConceptNet 5.5 [4] or DBpedia [5,6]. The automated extraction of KB nodes and edges from full text is limited, as some sentences are not correctly parsed and are instead rendered as full-text nodes [7]. This is a major limitation for answering common-sense questions, as a machine cannot easily interpret such full-text nodes, leading to low-accuracy results (55.9% [8]). To improve these results, we need a technique that provides a machine-readable representation of the spurious text within the graph while also ensuring the correctness of the representation, going beyond the strict boundaries of a graph KB representation. We can consider this the dual problem of querying bibliographical metadata using a query language that is as close as possible to natural language. Notably, given the untrustworthiness of existing Natural Language Processing (NLP) approaches for Information Retrieval (IR), librarians still rely on domain-oriented query languages [9]. By providing a more trustworthy and verifiable representation of a full text in natural language, we can generate a more reliable intermediate representation of the text that can be used to query bibliographical data [10,11].

An adequate semantic representation of sentences should both capture the semantic nature of the data and recognise implication, inconsistency, or indifference as classification outcomes over pairs of sentences. To date, this has not been considered in the literature, as inconsistency is merged with indifference [12]: existing work addresses either similarity or entailment classification, but not the classification of conflicting information. Thus, none of the available NLP datasets supports training models to counter the spread of misinformation through logical reasoning. Pre-trained language models (Section 2.2) are often characterised as “stochastic parrots” [13]; they exhibit significant deficiencies in formal reasoning because they rely on statistical patterns learned from vector representations rather than on proper logical inference [14,15]. Sound inference, by contrast, is fundamentally grounded in logic, for which well-known reasoning algorithms already exist without the need for hardcoding truth derivation rules [16]. Pre-trained language models merely mimic logical processes without formally incorporating them or, when they do so through prompting, provide limited reasoning ability restricted to a subset of all possible natural deduction rules (Section 2.2.3, T5: DeBERTaV2+AMR-LDA [12]). Instead, we could reason over text by first deriving a logic-based representation of the text through Montague Grammar (MG) [17] and subsequently applying logical inference steps. While doing so, we also want to prevent contradictory information from trivialising the reasoning, as ex falso [sequitur] quodlibet (i.e., from falsehood, anything follows). This raises the following question: how do we reason with negation?

Classical logic approaches face the “principle of explosion”, where a single contradiction can render an entire system nonsensical by deriving arbitrary facts from false premises. Paraconsistent reasoning, in contrast, acknowledges and isolates a contradiction to avoid this [18,19]. This can be used for realistic misinformation detection when considering data coming from disparate sources. Zhang et al. [1] have already argued for the effectiveness of doing so for “repairing” the inference steps of AI algorithms over real-world contradictory data when handling misinformation online; this paper takes this reasoning further by placing paraconsistent reasoning at the heart of the inference step, rather than postponing it to the end, in order to address potential reasoning flaws. At the time of writing, no logical inference algorithm is known to reason paraconsistently, despite paraconsistent logics being formalised in the aforementioned theoretical literature.
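To make the principle of explosion concrete, the minimal Python sketch below checks classical entailment by enumerating truth assignments. It is an illustration under our own assumptions (atoms encoded as dictionary keys, formulae as Python predicates, hypothetical helper names `worlds` and `entails`), not the inference algorithm used in this work. Because no assignment satisfies both p and ¬p, the set {p, ¬p} vacuously entails any conclusion, including an unrelated atom q and its negation.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List

# Illustrative sketch only: helper names and encodings are hypothetical.
World = Dict[str, bool]
Formula = Callable[[World], bool]


def worlds(atoms: List[str]) -> Iterator[World]:
    """Enumerate every truth assignment (one row of the truth table) over the atoms."""
    for values in product([False, True], repeat=len(atoms)):
        yield dict(zip(atoms, values))


def entails(premises: List[Formula], conclusion: Formula, atoms: List[str]) -> bool:
    """Classical entailment: the conclusion holds in every world satisfying all premises."""
    return all(conclusion(w) for w in worlds(atoms) if all(p(w) for p in premises))


atoms = ["p", "q"]
p = lambda w: w["p"]
not_p = lambda w: not w["p"]
q = lambda w: w["q"]
not_q = lambda w: not w["q"]

# {p, not p} has no model, so it vacuously entails both q and not q.
print(entails([p, not_p], q, atoms))      # True
print(entails([p, not_p], not_q, atoms))  # True
# A consistent premise does not entail an unrelated atom.
print(entails([p], q, atoms))             # False
```

A paraconsistent treatment, instead, isolates the contradictory premises so that they cannot validate every conclusion in this way.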

We continue our previous work on Logical, Structural and Semantic text Interpretation (LaSSI) [20], addressing the aforementioned limitations and the following research questions:

- RQ №1

- Is it possible to create a plug-and-play and explainable NLP pipeline for sentence representation? By exploiting white-box reasoning, we can ultimately visualise the outcome of each inference and sentence generation step (Section 5.3). Furthermore, by designing the pipeline in a modular way, each single component can easily be replaced to adapt to different linguistic needs. Through declarative context-free rewritings for NLP representation (Section 6), we ensure pipeline versatility and future extensibility, as behaviour is changed by editing the inner rules rather than the code. Therefore, we can see how the full text is transformed at every stage, allowing any errors to be identified and corrected using a human-in-the-loop approach.

- RQ №2

- Can pre-trained language models correctly capture the notion of sentence similarity? An inability to capture entailment would also imply the impossibility of accurately deriving the notion of equivalence, as equivalence is entailment in both directions (an if-and-only-if relationship), whereas entailment does not imply equivalence. Meanwhile, the notion of sentence indifference should be kept distinct from the notion of conflict. We designed empirical experiments with dedicated datasets to address the following sub-questions:

- (a)

- Can pre-trained language models capture propositional calculus? These experiments rest on the following considerations. First-Order Logic (FOL) is more expressive than propositional calculus, and pre-trained models are assumed to reason on arbitrary sentences, which are representable in FOL as per Montague’s assumption [17]. Since propositional calculus is less expressive than FOL but included in it, any inability to capture propositional calculus also rules out the possibility of extending the approach to soundly make inferences in FOL. Current experiments (Section 4.2.1) show that pre-trained language models cannot adequately capture the information contained in logical connectives from propositional calculus.

- (b)

- Can pre-trained language models distinguish between active and passive sentences? Experiments (Section 4.2.2) show that their intermediate representations (AMR, tokens, and embeddings) are insufficient for faithfully distinguishing them.

- (c)

- Can pre-trained language models correctly capture minimal FOL extensions for spatiotemporal reasoning? Spatiotemporal reasoning requires specific part-of and is-a reasoning, and hence minimal FOL extensions (eFOLs). The paper’s results confirm the observations from RQ №2(a), showing that these approaches are unable to reason on eFOLs (Section 4.2.3). Additional experiments show that such models cannot derive correct solutions even after re-training (Section 5.3).

To address the aforementioned limitations, we propose LaSSI, an instance of the General, Explainable, and Verified Artificial Intelligence (GEVAI) framework (Section 2.1) providing modular phases. The a priori phase addresses discourse integration by handling entity recognition and semantic ambiguity through entity type association; the ad hoc phase then applies derivational and declarative rules (when possible) to transform full-text representations into final logical formulae. While doing so, we further address discourse integration through pronoun resolution ([21], Supplement X.5) and resolve ambiguities in the generated Universal Dependency (UD) parsing by exploiting syntactic and morphological information through our proposed Upper Ontology, Parmenides. Finally, the ex post phase explains sentences’ logical entailment by providing a confidence score resulting from paraconsistent reasoning over Parmenides for semantic information, while plotting the reasoning outcomes graphically (Section 5.3). We also offer a pipeline ablation study for testing the different stages of discourse integration (Section 5.2) and compare our explainers with other textual explainers (SHAP and LIME) using state-of-the-art methodologies to tokenise text and correlate it with the predicted class (Section 5.3). Lastly, we draw our conclusions and indicate some future research directions (Section 6). The datasets for this paper are available online (https://osf.io/g5k9q/; accessed on 14 May 2025). Further concepts of the paper are explained in our technical report [21].

2. Related Works

2.1. General Explainable and Verified Artificial Intelligence (GEVAI)

A recent survey by Seshia et al. introduced the notion of verified Artificial Intelligence (AI) [22], through which we seek greater control by exploiting reliable and safe approaches to derive a specification that describes abstract properties of the data. Through verifiability, the specification itself can serve as a witness of the correctness of the proposed approach by determining whether the data satisfy such a specification (i.e., formal verification). This survey also revealed that, at the time of its writing, providing a truly verifiable approach to NLP was still an open challenge that current techniques had not resolved. In fact, a specification is not simply the outcome of a classification model or the result of applying a traditional explainer, but rather a compact representation of the data in terms of the properties it satisfies, in a machine- and human-understandable form. Furthermore, as remarked in our recent survey [23], the possibility of explaining the decision-making process in detail, even in the learning phase, goes hand in hand with using an abstract and logical representation of the data. However, if one uses a numerical approach to represent the data, such as Neural Networks (NNs) producing sentence embeddings from transformers (Section 2.2), then one is forced to reduce the explanation of the entire learning process to the choice of weights minimising the loss function, and to the loss function itself [24]. A possible way to partially overcome this limitation is to jointly train a classifier with an explainer [25], which might then pinpoint the specific data features leading to the classification outcome [26]. As current explainers mainly state how a single feature affects the classification outcome, they lose information on the correlations between features, which are extremely relevant in NLP (semantic) classification tasks.
More recent approaches [11] have attempted to revive earlier non-training-based approaches, showing that a full sentence can be represented as a query via semantic parsing [10]. A more recent approach also represents sentences in a logical format rather than as SQL queries; as a result, the representation can easily be rewritten and used for inference purposes. Nevertheless, these works do not cover all the rewriting steps required to capture different linguistic functions and categorise their role within the sentence, unlike this study. Furthermore, while the authors of [11] attempted to answer questions, our study takes a preliminary step back. We first test the suitability of our proposed approach for deriving correct sentence similarity from the logical representation. Then, we tackle the possibility of using logic-based representations to answer questions and ensure the correct capturing of multi-word entities within the text, while differentiating between the main entities based on the properties specifying them.

Our latest work also highlights the potential for achieving verification when combined with explainability, making the data understandable to both humans and machines [23]. It identifies three distinct phases that should be considered prerequisites for achieving good explanations. First, in the a priori explanation phase, unstructured data should be given a more structured representation by deriving additional contextual information from the data and its environment. Second, the ad hoc explanation should provide an explainable way of extracting a specification from the data, where provenance mechanisms help trace all the data processing steps. If represented as a logical program, the specification can also ensure both human and machine understandability by being expressed in an unambiguous format. Lastly, the ex post phase (post hoc in [25]) should further refine the previously generated specifications by achieving better and more fine-grained explainability. Therefore, we can derive even more accessible results and facilitate comparisons between models, while also enabling them to be compared with other data. Section 3 reflects these phases.

2.2. Pre-Trained Language Models

We now introduce our competing approaches, which all assume that information can be distilled from a large set of annotated documents suitable for training, leading to a model representation minimising the loss function over an additional training dataset. The characterisation of Large Language Models (LLMs) as “stochastic parrots” [13] posits that their proficiency lies in mimicking statistical patterns from vast training data rather than genuine comprehension, leading to significant challenges such as hallucination and the amplification of societal biases. Research has explored these issues, even noting how biases can be intentionally manipulated [15]. Private information from prompt data can be revealed [27], and semantic leakage can occur [28]. To mitigate these problems, researchers propose a combination of methodological and technical solutions. Existing human-in-the-loop approaches create expert-annotated datasets in specialised fields like mental health and nutrition counselling, advocating improved data quality and reduced bias [29]. In LaSSI, by contrast, the rules declared in Parmenides, the Generalised Graph Grammar (GGG) rewriting, and the Logical Functions ([21], Table S1) are all expressed declaratively, so they can be edited to steer the pipeline towards the correct outcome. By using logic, we minimise the bias known to affect pre-trained language models [30] and alleviate the causes of semantic leakage [28]. On the other hand, symbolic NNs such as those proposed in [31] never consider negation. If a system does not combine logic with negation, it cannot reason over contradictory, real-world sentences, which also contain negations. We focus on pre-trained language models for sentence similarity and logical prediction tasks. Table 1 summarises our findings.

2.2.1. Sentence Transformers

Google introduced transformers [32] as a compact way to encode semantic representations of data into numerical vectors, usually within a Euclidean space, through a preliminary tokenisation process. After converting tokens and their positions into vector representations, a final transformation layer provides the final vector representation for the entire sentence. The overall architecture seeks to learn a vector representation for an entire sentence by maximising the likelihood of the extracted tokens. This is ultimately achieved through a loss minimisation task that depends on the transformer’s architecture of choice: masking predicts the masked-out tokens by learning a conditional probability distribution given the non-masked ones, whereas autoregression learns a distribution for the first token and conditional probability distributions for each subsequent token given the preceding ones, which are gradually unmasked. While sentence transformers adopt the former approach, generative LLMs (Section 2.2.3) use the latter.
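The difference between the two objectives can be illustrated by listing the prediction targets each one derives from the same token sequence. The sketch below is a simplification under stated assumptions: whitespace tokenisation, a hand-picked set of masked positions, and the hypothetical helper names `masked_targets` and `autoregressive_targets`; real transformers operate on subword tokens and learned embeddings.

```python
from typing import List, Set, Tuple

# Illustrative sketch only: tokenisation and helper names are hypothetical.


def masked_targets(tokens: List[str], masked: Set[int]) -> List[Tuple[List[str], int, str]]:
    """Masked modelling: each masked token is predicted from all non-masked tokens,
    on both sides of it (a conditional distribution over the visible context)."""
    visible = ["[MASK]" if i in masked else t for i, t in enumerate(tokens)]
    return [(visible, i, tokens[i]) for i in sorted(masked)]


def autoregressive_targets(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Autoregression: the first token is predicted from an empty context, and each
    later token from the tokens preceding it, which are revealed left to right."""
    return [(tokens[:i], tokens[i]) for i in range(len(tokens))]


tokens = "Newcastle and Brighton have traffic".split()

for context, position, target in masked_targets(tokens, {1, 4}):
    print(position, target, context)
# 1 and ['Newcastle', '[MASK]', 'Brighton', 'have', '[MASK]']
# 4 traffic ['Newcastle', '[MASK]', 'Brighton', 'have', '[MASK]']

for context, target in autoregressive_targets(tokens):
    print(target, context)
```

Note that the masked objective sees context on both sides of a target, while the autoregressive one only ever conditions on the left context.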

Table 1.

A comparative table between the competing pre-trained language model approaches and LaSSI.

| | MPNet [33] | RoBERTa [34] | MiniLMv2 [35] | ColBERTv2 [36] | DeBERTaV2+AMR-LDA [12] | LaSSI (This Paper) |
|---|---|---|---|---|---|---|
| Approach | Sentence Transformers (Section 2.2.1) | Sentence Transformers (Section 2.2.1) | Sentence Transformers (Section 2.2.1) | Neural Information Retrieval (IR) (Section 2.2.2) | Generative Large Language Model (LLM) (Section 2.2.3) | GEVAI (Section 2.1) |
| Task | Document Similarity | Document Similarity | Document Similarity | Query Answering | Entailment Classification | Paraconsistent Reasoning |
| Sentence Pre-Processing | Word Tokenisation + Position Encoding | Word Tokenisation + Position Encoding | Word Tokenisation + Position Encoding | Word Tokenisation + Position Encoding | • AMR with Multi-Word Entity Recognition • AMR Rewriting | • Dependency Parsing • Generalised Graph Grammars • Multi-Word Entity Recognition • Logic Function Rewriting |
| Similarity/Relationship Inference | Permuted Language Modelling | – | Annotated Training Dataset | Factored by Tokenisation | • Logical Prompts • Contrastive Learning | • Knowledge Base-driven Similarity • TBox Reasoning |
| Learning Strategy | Static Masking | Dynamic Masking | Annotated Training Dataset | Annotated Training Dataset | • Autoregression • Sentence Distance Minimisation | |
| Final Representation | One vector per sentence | One vector per sentence | One vector per sentence | Many vectors per sentence | Classification outcome | Extended First-Order Logic (FOL) |
| Pros | Deriving Semantic Similarity through Learning | Deriving Semantic Similarity through Learning | Deriving Semantic Similarity through Learning | Generalisation of document matching | Deriving Logical Entailment through Learning | • Reasoning Traceability • Paraconsistent Reasoning • Not biased by documents |
| Cons | • Cannot express propositional calculus • Semantic similarity does not capture implication | • Cannot express propositional calculus • Semantic similarity does not capture implication | • Cannot express propositional calculus • Semantic similarity does not capture implication | • Cannot express propositional calculus • Semantic similarity does not capture implication | • Inadequacy of AMR • Reasoning limited by Logical Prompts • Biased by probabilistic reasoning | Heavily relies on the upper ontology |

Pre-trained sentence transformer models are extensively employed to turn text into vectors known as embeddings and are fine-tuned on many datasets for general-purpose tasks such as semantic search, clustering, and retrieval. Nanjing University of Science and Technology and Microsoft Research jointly created MPNet [33], which aims to capture the dependency among predicted tokens through permuted language modelling, while considering their position within the input sentence. RoBERTa [34], a collaborative effort between the University of Washington and Facebook AI, improves over traditional BERT models, where masking only occurs once during data pre-processing, by performing dynamic masking: a new masking pattern is generated every time a training token sequence is fed to the model. The authors also recognised the positive effect of hyperparameter tuning on the resulting model, hence systematising the training phase while considering additional documents. Lastly, Microsoft Research [35] took the opposite direction on the hyperparameter tuning challenge: rather than considering hundreds of millions of parameters, MiniLMv2 compresses large pre-trained transformers through distillation, where a small student model is trained to mimic the pre-trained teacher. Furthermore, the authors exploited a contrastive learning objective to maximise the similarity between semantically related sentences: given a training dataset composed of pairs of full-text sentences, the prediction task is to match one sentence of the pair when the other is given.
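The contrast between BERT-style static masking and RoBERTa’s dynamic masking can be sketched as follows. This is a simplified illustration under assumptions of our own: a 15% masking rate (the rate used by BERT), one token per position, and the hypothetical helpers `sample_mask`, `static_masking`, and `dynamic_masking`; it omits BERT’s 80/10/10 token replacement scheme.

```python
import random
from typing import List, Set

# Illustrative sketch only: helper names and the masking rate are assumptions.


def sample_mask(n_tokens: int, rng: random.Random, rate: float = 0.15) -> Set[int]:
    """Choose which token positions to mask (at least one)."""
    k = max(1, round(n_tokens * rate))
    return set(rng.sample(range(n_tokens), k))


def static_masking(n_tokens: int, epochs: int, seed: int = 0) -> List[Set[int]]:
    """Static: the mask is drawn once during pre-processing and reused every epoch."""
    mask = sample_mask(n_tokens, random.Random(seed))
    return [mask for _ in range(epochs)]


def dynamic_masking(n_tokens: int, epochs: int, seed: int = 0) -> List[Set[int]]:
    """Dynamic: a fresh mask is drawn every time the sequence is fed to the model."""
    rng = random.Random(seed)
    return [sample_mask(n_tokens, rng) for _ in range(epochs)]


static = static_masking(n_tokens=40, epochs=4)
dynamic = dynamic_masking(n_tokens=40, epochs=4)
print(all(m == static[0] for m in static))   # True: identical pattern each epoch
print(len({frozenset(m) for m in dynamic}))  # number of distinct patterns across epochs
```

With dynamic masking, the model sees the same sentence with different tokens hidden across epochs, which is the effect RoBERTa’s authors sought.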

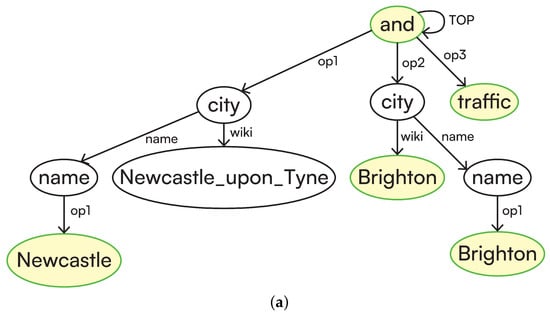

Recent surveys on the expressive power of transformer-based approaches, mainly for capturing text semantics, reveal some limitations in their reasoning capabilities. First, when two sentences are unrelated, the attention mechanisms are dominated by the last output vector [25], which might easily lead to hallucination and untrustworthy results such as those resulting from semantic leakage [28]. Second, theoretical results suggest that these approaches are unable to reason on propositional calculus [37]. If this inability to perform simple logical reasoning during the learning phase is confirmed, it would strongly undermine the possibility of relying on the resulting vector representation for determining complex sentence similarity. Lastly, while these approaches’ ability to represent synonymy relations and carry out multi-word name recognition is recognised, their tendency to discard parts of the text deemed irrelevant is well known to make it difficult to capture higher-level knowledge structures [25]. In particular, if a word is considered a stop word, it will not be used in the similarity learning mechanism, and its semantic information will be permanently lost. By contrast, a learning approach exploiting either Abstract Meaning Representation (AMR) or UD graphs (Figure 1; see [21], Supplement I) can potentially limit this information loss. Section 2.2.3 discusses more powerful generative-based approaches that attempt to overcome the limitations above.
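The information loss caused by stop-word removal can be shown on the sentence from Figure 1. The stop-word list below is a small hand-picked assumption that includes the negation “not”, as some general-purpose lists do; the helper `content_words` is hypothetical.

```python
from typing import List

# Hypothetical stop-word list; including "not" is an assumption for illustration.
STOP_WORDS = {"there", "is", "in", "but", "not", "the", "and", "a"}


def content_words(sentence: str) -> List[str]:
    """Lower-case, whitespace-tokenise, and drop stop words."""
    return [w for w in sentence.lower().split() if w not in STOP_WORDS]


affirmed = "There is traffic in Newcastle and in the city centre"
negated = "There is traffic in Newcastle but not in the city centre"

print(content_words(affirmed))  # ['traffic', 'newcastle', 'city', 'centre']
print(content_words(negated))   # ['traffic', 'newcastle', 'city', 'centre']
# Whether there is traffic in the city centre is no longer recoverable from the tokens.
print(content_words(affirmed) == content_words(negated))  # True
```

Two sentences that disagree about the city centre collapse onto the same bag of content words, so any similarity learned from these tokens alone cannot separate them.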

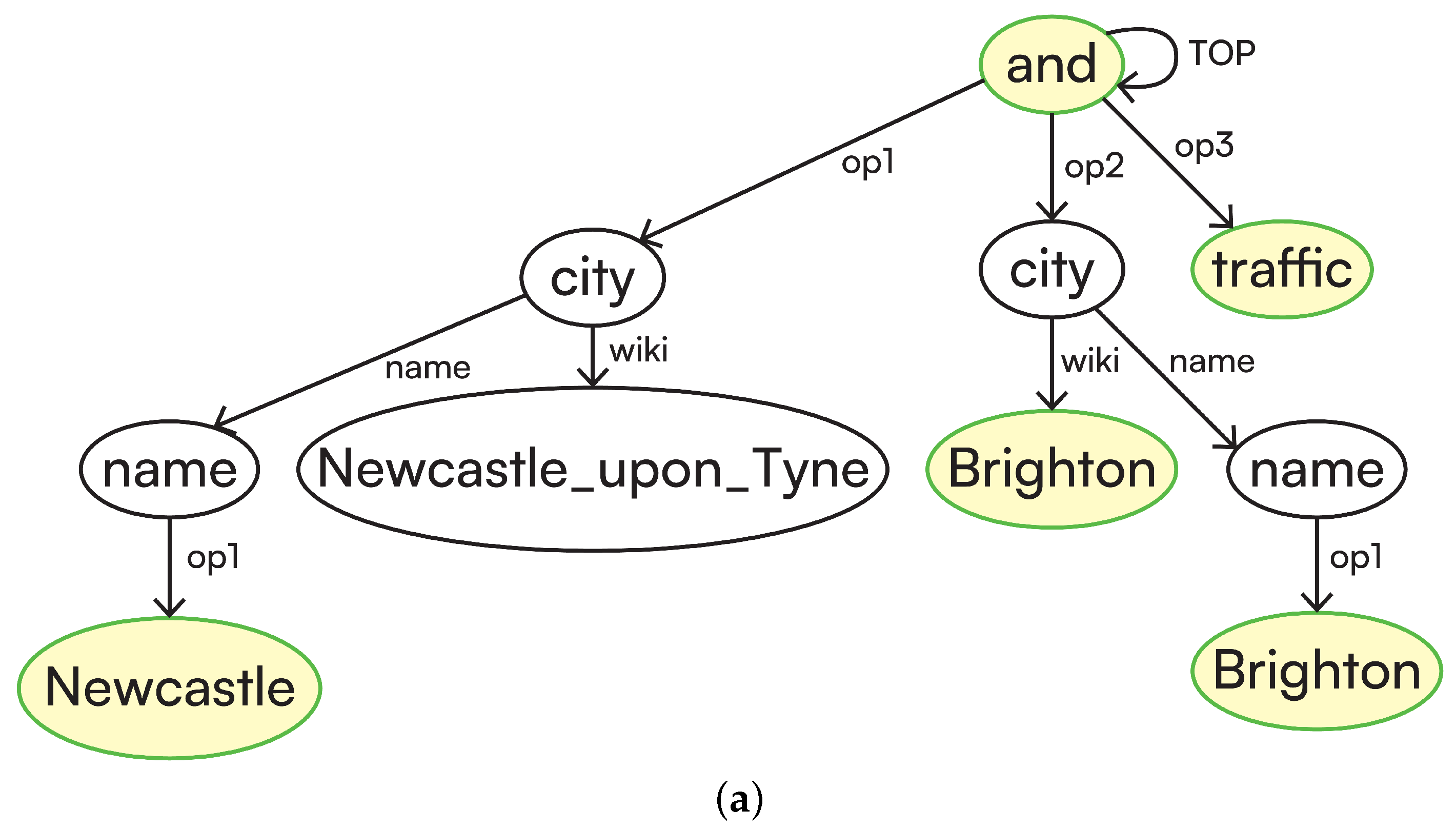

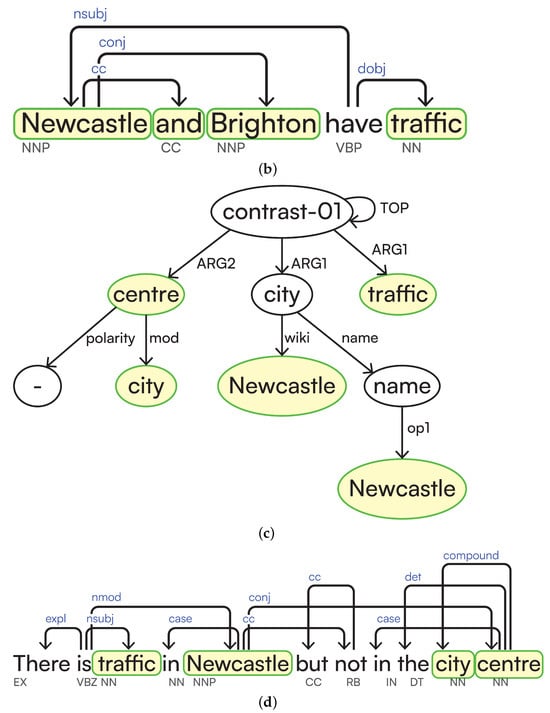

Figure 1.

Visualisation of the differences between Part of Speech (POS) tagging, AMR graphs, and UDs, showing how the latter provide more explicit relationships between words. AMRs were generated through AMREager (https://bollin.inf.ed.ac.uk; accessed on 24 April 2025), while UDs were generated using StanfordNLP [38]. Graph nodes are highlighted to show their correspondence with words. (a) AMR for “Newcastle and Brighton have traffic”: “and” conjoins the subject with the direct object, losing the grammatical distinction between them (op1, op2, and op3). (b) POS tagging (below) and UD (above) for “Newcastle and Brighton have traffic”: there is a clear distinction between subject and direct object; “Newcastle” and “Brighton” are joined by a conj, and the verb “have” relates to “traffic” through a dobj. (c) AMR for “There is traffic in Newcastle but not in the city centre”: despite contrast-01 highlighting the opposite sentiment between “traffic in Newcastle” and “city centre”, verb information is lost. (d) POS tagging (below) and UD (above) for “There is traffic in Newcastle but not in the city centre”: all the desired information is retained, other than a negation dependency that should be extracted from “not”.

2.2.2. Neural Information Retrieval (IR)

IR concerns retrieving full-text documents given a full-text query. Classical approaches tokenise the query into words of interest and retrieve documents within a corpus, ranking them based on the presence of tokens [39]. Neural IR improves on classical IR, which was text-bound and did not consider semantic information. After representing queries and documents as vectors, relevance is computed through the dot product. Early versions exploited transformers, representing documents and queries as single vectors. Late interaction approaches like ColBERTv2, proposed by Santhanam et al. [36], provide a finer-grained representation by encoding both queries and documents into multiple vectors. For each query token, the document token maximising the dot product with it is found, and the final document ranking score is the sum of all these maximised dot products. Training is then performed to maximise the matches of the given queries with human-annotated documents, which are marked as positive or negative matches for each query. Note that although this approach might help maximise the recall of documents based on their semantic similarity to the query, the query tokenisation phase might lose information concerning the correlation between the different tokens occurring within the document, thus potentially disrupting any structural information occurring across query tokens. On the other hand, retaining semantic information concerning the relationships between entities leads to a better logical and semantic representation of the text, as our proposed approach shows. This paper benchmarks against ColBERTv2 through the pre-trained RAGatouille v0.0.9 (https://github.com/AnswerDotAI/RAGatouille; accessed on 22 April 2025) library.
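The late-interaction score described above (often called MaxSim) can be written in a few lines of NumPy. This is a minimal sketch assuming already-computed, L2-normalised token embeddings; the function names are hypothetical, and this is neither the ColBERTv2 nor the RAGatouille API. For contrast, it also computes a single-vector score from mean-pooled embeddings, one possible choice for the early single-vector approaches.

```python
import numpy as np

# Illustrative sketch only: function names are hypothetical, embeddings are random.


def normalise(x: np.ndarray) -> np.ndarray:
    """L2-normalise each row so that dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def maxsim_score(query_tokens: np.ndarray, doc_tokens: np.ndarray) -> float:
    """Late interaction: for each query token, keep its best-matching document token,
    then sum these maxima over the query tokens."""
    similarities = query_tokens @ doc_tokens.T  # shape: (n_query, n_doc)
    return float(similarities.max(axis=1).sum())


def single_vector_score(query_tokens: np.ndarray, doc_tokens: np.ndarray) -> float:
    """Single-vector baseline: one mean-pooled vector per text, compared by dot product."""
    return float(query_tokens.mean(axis=0) @ doc_tokens.mean(axis=0))


rng = np.random.default_rng(0)
query = normalise(rng.normal(size=(3, 8)))  # 3 query tokens, 8-dimensional embeddings
doc = normalise(rng.normal(size=(5, 8)))    # 5 document tokens

print(maxsim_score(query, doc), single_vector_score(query, doc))
```

Each query token contributes independently through its own maximum, which is why correlations between query tokens are not modelled by the score itself.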

2.2.3. Generative Large Language Model (LLM)

As a result of the autoregressive tasks generally adopted by generative LLMs, when the system is asked about concepts on which it was not initially trained, it tends to invent misleading information [40]. This is inherently due to the probabilistic reasoning embedded within the model [13], which does not account for the semantic contradictions implicit in the data, unlike explicit rule-based approaches [7,41]. These models do not account for probabilistic reasoning by contradiction, with facts given as conjunctions of elements, leading to the inference of unlikely facts [18,42]. All these consequences lead to hallucinations, which cannot be trusted to verify the inference outcome [43].

DeBERTaV2+AMR-LDA, proposed by Bao et al. [12], is a state-of-the-art model supporting sentence classification through logical reasoning using a generative LLM. The model can determine whether the first given sentence entails the second, thus attempting to overcome the above limitations of LLMs. After an AMR of a full-text sentence is derived, the graphs are rewritten to obtain logically equivalent sentence representations for equivalent sentences. AMR-LDA is used to augment the input prompt before feeding it into the model, where prompts are given for the logical rules of interest to classify logical entailment throughout the text. Contrastive learning is then used to identify logical implications by learning a distance measure between different sentence representations, aiming to minimise the distance between logically entailing sentences while maximising the distance between the given sentence and the negative example. This approach has several limitations. First, the authors only considered equivalence rules that frequently occur in the text, not all possible equivalence rules, thus heavily limiting the reasoning capabilities of the model. Second, the model does not exploit contextual information from knowledge graphs to consider the part-of and is-a relationships relevant for deriving correct entailment implications within spatiotemporal reasoning. Third, due to the lack of paraconsistent reasoning, the model cannot clearly distinguish whether a missing entailment is due to inconsistency or to the given facts not being correlated. Lastly, the choice of AMR heavily impacts the model’s ability to correctly distinguish the different logical functions of the sentence within the text.
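The contrastive idea of pulling entailing sentences together while pushing a negative example away can be illustrated with a standard triplet margin loss. This is a generic sketch under our own assumptions (Euclidean distance, a margin of 1.0, and toy two-dimensional vectors standing in for sentence representations); it is not claimed to be the exact objective used by DeBERTaV2+AMR-LDA.

```python
import numpy as np

# Illustrative sketch only: a generic triplet margin loss on toy vectors.


def triplet_margin_loss(anchor: np.ndarray, positive: np.ndarray,
                        negative: np.ndarray, margin: float = 1.0) -> float:
    """Zero once the entailing (positive) sentence is closer to the anchor than the
    negative example by at least `margin`; positive otherwise."""
    d_pos = np.linalg.norm(anchor - positive)
    d_neg = np.linalg.norm(anchor - negative)
    return float(max(0.0, d_pos - d_neg + margin))


anchor = np.array([1.0, 0.0])
positive = np.array([0.9, 0.1])   # stands in for a logically entailed sentence
negative = np.array([-1.0, 0.0])  # stands in for the negative example

print(triplet_margin_loss(anchor, positive, negative))  # 0.0: already well separated
print(triplet_margin_loss(anchor, negative, positive))  # > 0: roles swapped, penalised
```

On its own, such a distance only separates “entailing” from “not entailing”; it does not tell inconsistency apart from indifference, which is the third limitation listed above.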

The present study overcomes the limitations above in the following manner. First, we avoid hardcoding all possible logical equivalence rules by interpreting each formula using classical Boolean-valued semantics for each atom within the sentences. After generating a truth table with all the atoms, we evaluate the Boolean-valued semantics for each atom combination ([21], Appendix A.2). In doing so, we avoid the explosion problem by reasoning paraconsistently, thus removing the conflicting worlds (see also [21], Appendix A.2). Second, we introduce a new compact logical representation, where entities within the text are represented as functions ([21], Section 3.2.4); the logical entailment of the atoms within the logical representation is then supported by a KB expressing complex part-of and is-a relationships ([21], Appendix A.3). Third, we consider a three-fold classification through the confidence score (Definition 1): while its two extreme values differentiate between implication and inconsistency, intermediate values capture indifference. Lastly, we use UD graphs rather than AMR graphs ([21], Supplement I and VI.1), similarly to recent attempts at providing reliable rule-based Question Answering (QA) [11].
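The idea of evaluating formulae over a truth table while discarding conflicting worlds can be illustrated with a toy three-way classifier. This sketch is our own assumption and not Definition 1: it scores a pair by the fraction of worlds satisfying the premise in which the conclusion also holds, reading 1 as implication, 0 as inconsistency, and anything in between as indifference. Worlds contradicting the premise are discarded, and a self-contradictory premise yields no score instead of vacuously entailing everything. The function names and atom names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from typing import Callable, Dict, List, Optional

# Illustrative sketch only: this score is a stand-in, not the paper's Definition 1.
World = Dict[str, bool]
Formula = Callable[[World], bool]


def score(premise: Formula, conclusion: Formula, atoms: List[str]) -> Optional[Fraction]:
    """Fraction of the worlds satisfying the premise in which the conclusion also holds.
    Worlds contradicting the premise are discarded; if none is left, the premise is
    self-contradictory and no score is returned (rather than entailing everything)."""
    kept = []
    for values in product([False, True], repeat=len(atoms)):
        world = dict(zip(atoms, values))
        if premise(world):
            kept.append(world)
    if not kept:
        return None
    return Fraction(sum(conclusion(w) for w in kept), len(kept))


def classify(s: Optional[Fraction]) -> str:
    if s is None:
        return "undefined (contradictory premise)"
    if s == 1:
        return "implication"
    if s == 0:
        return "inconsistency"
    return "indifference"


atoms = ["traffic_newcastle", "traffic_brighton"]
n = lambda w: w["traffic_newcastle"]
b = lambda w: w["traffic_brighton"]
n_and_b = lambda w: n(w) and b(w)
not_n = lambda w: not n(w)

print(classify(score(n_and_b, n, atoms)))  # implication
print(classify(score(n, not_n, atoms)))    # inconsistency
print(classify(score(n, b, atoms)))        # indifference
print(classify(score(lambda w: n(w) and not_n(w), b, atoms)))  # undefined (contradictory premise)
```

Enumerating worlds avoids hardcoding equivalence rules: “Newcastle and Brighton have traffic” implies “Newcastle has traffic” simply because every world satisfying the former satisfies the latter.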

We benchmarked against the pre-trained LLM classifier made available through HuggingFace by the original paper’s authors (AMR-LE-DeBERTa-V2-XXLarge-Contraposition-Double-Negation-Implication-Commutative-Pos-Neg-1-3).

3. Materials and Methods

Consider a pair of full-text sentences. In this paper, we consider only factoid sentences that can, at most, contain existentials, which express omitted knowledge that can later be filled in with new, relevant information. A transformation function maps each of the two sentences to its vector and logical representations.

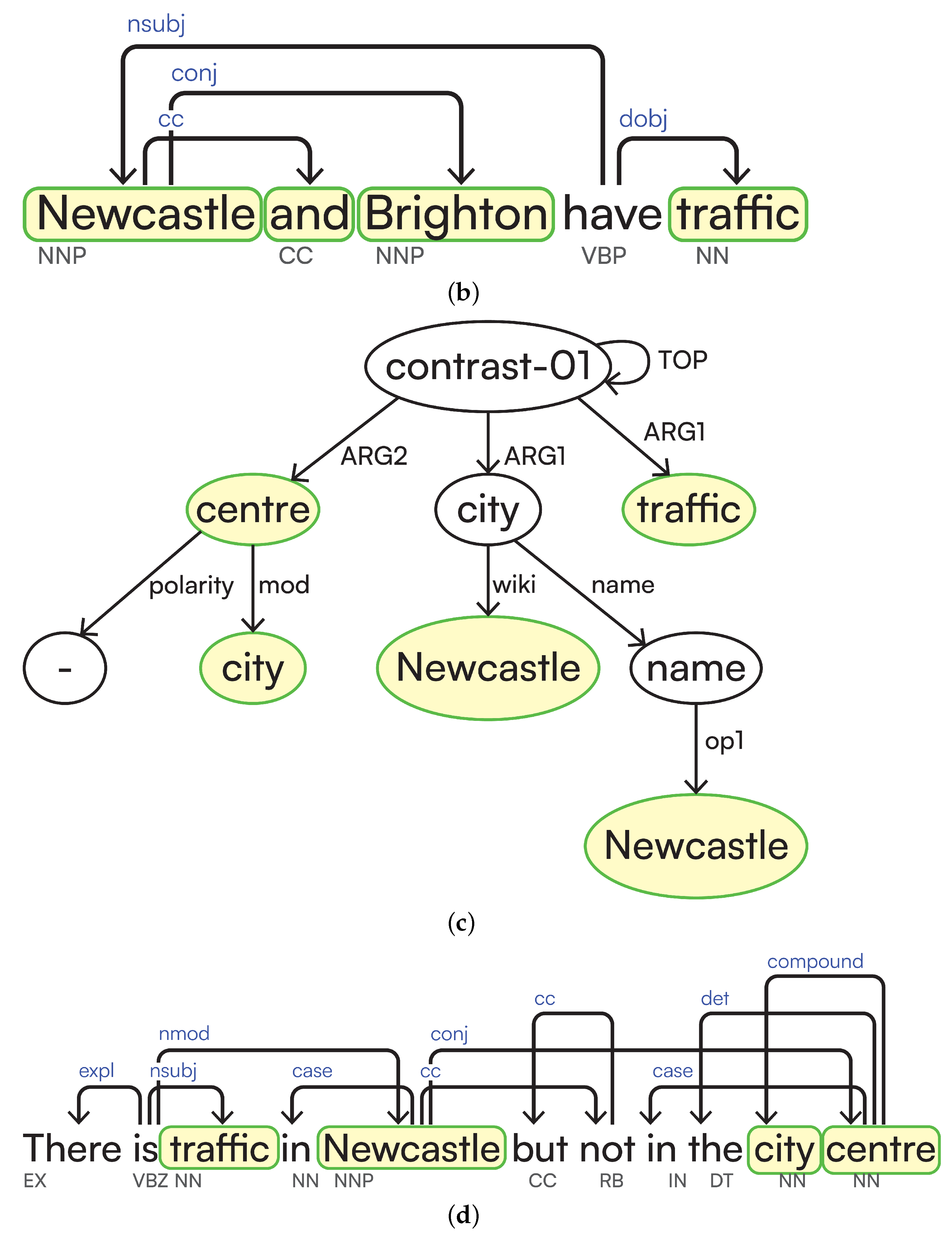

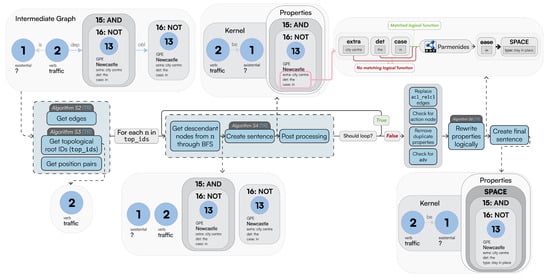

From each full-text sentence, we want to derive a logical interpretation through the transformation function while capturing the common-sense notions from the text. We then need a binary function that expresses this for each transformation (Section 4.1). Figure 2 offers a bird’s-eye view of the entire pipeline, as described in the present paper.

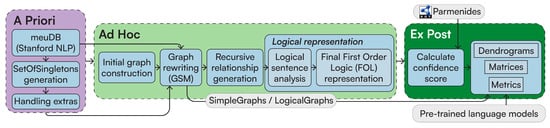

Figure 2.

LaSSI pipeline: operational description of the pipeline, reflecting the outline of this section.

3.1. A Priori

In the a priori explanation phase, we aim to enrich the semantic information for each word (Section 3.1.1) and then leverage it to recognise multi-word entities (Section 3.1.2) with extra information (i.e., specifications; see [21], Supplement III.2.1). This information will be used to pair the intermediate syntactic and morphological sentence representation achieved through subsequent graph rewritings (Section 3.2) with the semantic interpretation derived in the phase described in the following subsections.

The Singleton is the main data structure used to nest dependent clauses, represented as relationships, at any recursive sentence level. This curated class is used throughout the pipeline to portray entities within the graph from the given full text. It is also the atomic representation of an entity (Section 3.1.1); it includes each word of a multi-word entity (Section 3.1.2) and is defined by the following attributes: id, named_entity, properties, min, max, type, confidence, and kernel. When kernel is none, the properties mainly refer to the entity itself, thus including the aforementioned specifications ([21], Supplement III.2.1); otherwise, they refer to additional entities leading to logical functions associated with the sentence. The kernel is used when we want to represent an entire sentence as a coarser-grained component of our pipeline; it is defined as a relationship between a source and a target mediated by an edge label (representing a verb), while an extra Boolean attribute reflects its negation ([21], Section 3.2.2). The source and target are also Singletons, as we want to be able to use a kernel as the source or target of another kernel (e.g., to express causality relationships), so that data structures are consistent across all entities at all stages of the rewriting. The properties of the kernel could include spatiotemporal or other additional information, represented as a dictionary, which is later used to derive logical functions through logical sentence analysis ([21], Section 3.2.3).
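The Singleton attributes listed above can be sketched as a Python dataclass. The attribute names follow the text, but the field types, default values, the reading of min and max as token positions, the entity type labels, and the separate `Kernel` class (with hypothetical fields `source`, `edge_label`, `target`, and `negated`) are assumptions for illustration. The kernel is modelled as a relationship whose source and target are themselves Singletons, so that a sentence-level Singleton can nest inside another kernel.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional

# Illustrative sketch only: types, defaults, and the Kernel class are hypothetical.


@dataclass
class Kernel:
    """A verb-mediated relationship between two Singletons."""
    source: Optional["Singleton"]
    edge_label: str                  # the verb, e.g. "have"
    target: Optional["Singleton"]
    negated: bool = False            # Boolean attribute reflecting negation


@dataclass
class Singleton:
    id: int
    named_entity: str
    properties: Dict[str, Any] = field(default_factory=dict)
    min: int = -1                    # assumed: first token position covered
    max: int = -1                    # assumed: last token position covered
    type: str = "ENTITY"
    confidence: float = 1.0
    kernel: Optional[Kernel] = None  # None: a plain entity; otherwise a whole sentence

    def is_sentence(self) -> bool:
        return self.kernel is not None


newcastle = Singleton(id=1, named_entity="Newcastle", min=0, max=0, type="GPE")
traffic = Singleton(id=2, named_entity="traffic", min=2, max=2)
# "Newcastle has traffic" as a sentence-level Singleton.
has_traffic = Singleton(id=3, named_entity="", type="SENTENCE", min=0, max=2,
                        kernel=Kernel(source=newcastle, edge_label="have", target=traffic))
# Because kernels relate Singletons, a whole sentence can be the source of another
# kernel (the verb "cause" and the target below are illustrative only).
delays = Singleton(id=4, named_entity="delays")
causal = Singleton(id=5, named_entity="", type="SENTENCE",
                   kernel=Kernel(source=has_traffic, edge_label="cause", target=delays))

print(causal.kernel.source.is_sentence())  # True
```

Using one recursive type for entities and sentences keeps every rewriting step working on the same structure, which is the consistency argument made in the text.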

3.1.1. Syntactic Analysis Using Stanford CoreNLP

This step aims to extract syntactic information from the input sentences using Stanford CoreNLP. A Java service within our LaSSI pipeline uses Stanford CoreNLP to process the full text, generating annotations for each word. These annotations include base forms (lemmas), POS tags, and morphological features, providing a foundational understanding of the sentence structure while also accounting for entity recognition. The Multi-Word Entity Unit DataBase (meuDB) contains information about all variations of each word in a given full text, such as American and British spellings of a word (“center” and “centre”) or typos (“interne” instead of “internet”). Each entry in the meuDB represents an entity match appearing within the full text; matches are collected from specific sources, including GeoNames [44] for geographical places, SUTime [45] for temporal entities, Stanza [46] and our curated Parmenides ontology for entity types, and ConceptNet [4] for generic real-world entities. Depending on the trustworthiness of each source, we also associate a confidence weight with each match.

When resolving Multi-Word Entity Units (MEUs), a typed match is also performed whenever Stanford NLP initially finds no match, so that the type from the meuDB is returned for the given MEU. This categorisation subsequently allows each named entity occurring in the text to be represented as a Singleton, as discussed above.
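The typed-match fallback can be sketched as follows. This is a minimal illustration under stated assumptions: the MeuEntry layout, the exact-span matching, and the per-source weights in SOURCE_WEIGHT are hypothetical (the text only states that a confidence weight depends on each source's trustworthiness).

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical per-source trust weights; the actual values are not given here.
SOURCE_WEIGHT = {
    "GeoNames": 1.0,
    "SUTime": 1.0,
    "Parmenides": 1.0,
    "Stanza": 0.8,
    "ConceptNet": 0.6,
}


@dataclass(frozen=True)
class MeuEntry:
    text: str     # surface form matched in the full text (possibly a variant)
    start: int    # first token index of the match
    end: int      # last token index of the match (inclusive)
    type: str     # entity type proposed by the source
    source: str   # where the match came from


def resolve_type(span: tuple[int, int], corenlp_type: Optional[str],
                 meudb: list[MeuEntry]) -> Optional[str]:
    """Return the entity type for a token span.

    The type found by CoreNLP is kept when present; otherwise, a typed match
    against the meuDB returns the type of the most trusted entry covering
    exactly that span, or None if nothing matches.
    """
    if corenlp_type is not None:
        return corenlp_type
    candidates = [e for e in meudb if (e.start, e.end) == span]
    if not candidates:
        return None
    best = max(candidates, key=lambda e: SOURCE_WEIGHT.get(e.source, 0.0))
    return best.type
```

For instance, with two meuDB entries for “Newcastle” (GPE from GeoNames, ENTITY from ConceptNet) and no CoreNLP type, the sketch returns GPE, the type proposed by the more trusted source.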

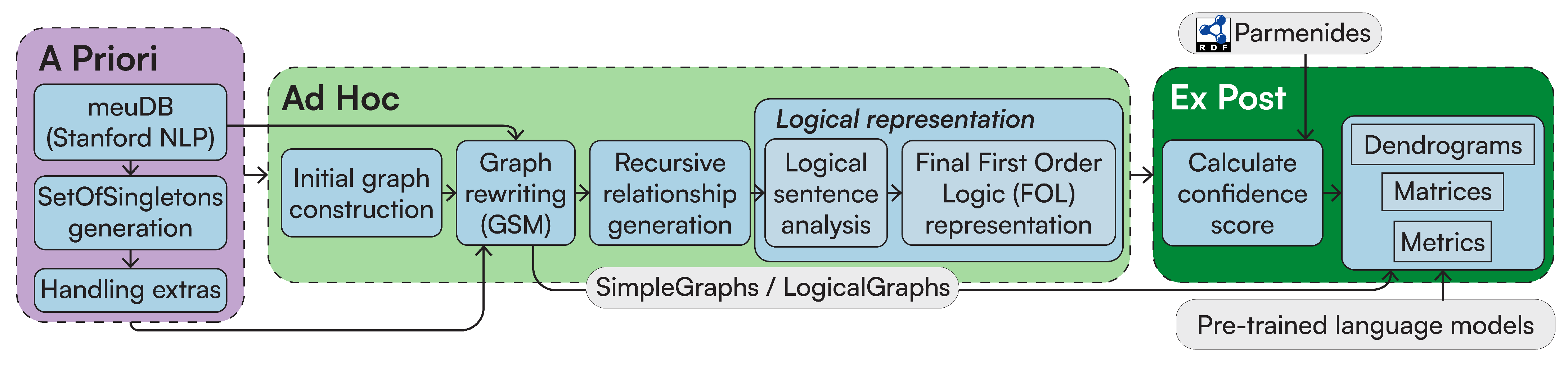

3.1.2. Generation of SetOfSingletons

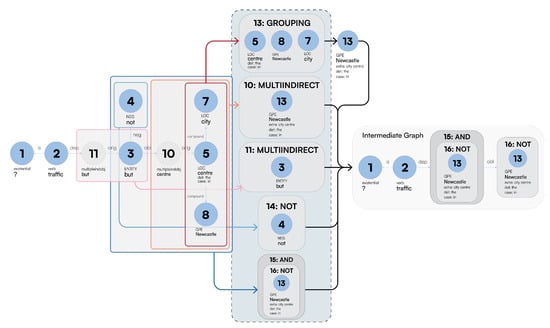

A SetOfSingletons is a specific type of Singleton containing multiple entities, i.e., an array of Singletons. As showcased in Figure 3, a group of items is generated by coalescing distinct entities into clusters, as indicated by UD relationships such as the coordination of several other entities or sentences (conj), the identification of multi-word entities (compound), or the identification of multiple logical functions attributed to the same sentence (multipleindobj, derived after the GGG rewriting of the original UD graph). Each SetOfSingletons is associated with a type (e.g., AND, NOT, GROUPING, or MULTIINDIRECT).

Figure 3.

We show how different types of SetOfSingletons generated from distinct UD relationships lead to intermediate graphs. We showcase coordination (e.g., AND and NOT), multi-word entities (e.g., GROUPING), and multiple logical functions (e.g., MULTIINDIRECT). The sequence of changes highlighted in the central column is applied by visiting the graph in lexicographical order [47], so as to start changing the graph from the nodes with fewer edge dependencies. The box colours refer to different groups of nodes within the transformation.

We now illustrate each proposed SetOfSingletons type in the order of application, following the example given in Figure 3.

- Multi-Word Entities: In [21], Algorithm S8 performs node grouping [48] over the nodes connected by compound edge labels while efficiently visiting the graph using a Depth-First Search (DFS). After this, we identify whether a subset of these nodes acts as a specification (extra) to the primary entity of interest or whether it should be treated as a single entity, while also considering the type of information for disambiguation purposes.

- Multiple Logical Functions: As graphs cannot directly represent n-ary relationships, we group multiple adverbial phrases into one SetOfSingletons. These are then semantically disambiguated by their function during the Logical Sentence Analysis ([21], Section 3.2.3). Figure 3 provides a simple example, where each MULTIINDIRECT contains either one adverbial phrase or a conjunction. In [21], Supplement III.1 provides a more compelling example, where the SetOfSingletons contains multiple Singletons.

- Coordination: For coordination induced by conj relationships, we derive the coordination type (AND, NEITHER, or OR) from the associated cc relationships.
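Two of the steps above, grouping nodes connected by compound edges through a DFS and deriving a coordination type from cc relationships, can be sketched as follows. This is not Algorithm S8 from [21]: the (head, dependent, label) edge format, the CC_TYPE lookup, and the precedence NEITHER > OR > AND are illustrative assumptions.

```python
from collections import defaultdict


def group_compounds(edges):
    """Group node ids connected by 'compound' edges via an iterative DFS.

    edges: iterable of (head, dependent, label) triples from the UD graph.
    Returns a list of sorted id groups, each with at least two members.
    """
    adj = defaultdict(set)
    for head, dep, label in edges:
        if label == "compound":
            adj[head].add(dep)
            adj[dep].add(head)   # grouping ignores edge direction
    seen, groups = set(), []
    for start in sorted(adj):
        if start in seen:
            continue
        stack, component = [start], []
        seen.add(start)
        while stack:
            node = stack.pop()
            component.append(node)
            for nxt in adj[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        groups.append(sorted(component))
    return groups


# Hypothetical mapping from the lemma of a cc dependent to a coordination type.
CC_TYPE = {"and": "AND", "or": "OR", "nor": "NEITHER", "neither": "NEITHER"}


def coordination_type(cc_lemmas):
    """Derive AND / OR / NEITHER from the cc lemmas attached to a conj group."""
    types = {CC_TYPE[l.lower()] for l in cc_lemmas if l.lower() in CC_TYPE}
    if "NEITHER" in types:
        return "NEITHER"
    if "OR" in types:
        return "OR"
    return "AND"   # assumed default when no other coordinator is found
```

In UD, both “Newcastle” and “city” attach to “centre” via compound, so the three tokens end up in one group, which then becomes a GROUPING SetOfSingletons.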

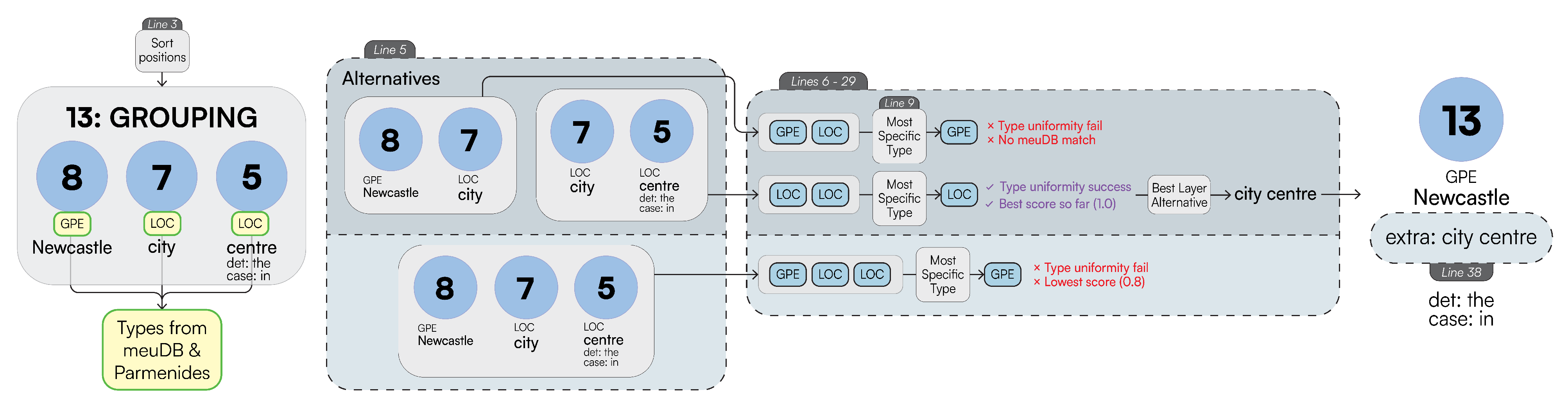

Example 1.

After coalescing the compound relationships from Figure 3, we would like to represent the grouping “Newcastle city centre” as a Singleton with a core entity “Newcastle” and an extra “city centre”. Figure 4 sketches the main phases of [21], Algorithm S8, leading to this expected result. For our example, the possible ordered permutations of the entities within GROUPING are “Newcastle city”, “city centre”, and “Newcastle city centre”. Given these alternatives, “Newcastle city centre” returns a confidence of 0.8 and “city centre” returns the greatest confidence of 1.0, so our chosen alternative is [city, centre]. As “Newcastle” is the entity with the most specific type, this is selected as our chosen_entity; subsequently, “city centre” becomes the extra property to be added to “Newcastle”, resulting in our final Singleton: Newcastle[extra:city centre].
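The selection in Example 1 can be reproduced with a small worked sketch. We enumerate the contiguous ordered sub-sequences of at least two words, which yields exactly the three alternatives listed above, and use the confidences stated in the example (0.8 and 1.0). Treating “Newcastle city” as unmatched (confidence 0), the specificity ranking, and the fallback to a single entity are our assumptions; this is not Algorithm S8 itself.

```python
def ordered_alternatives(words):
    """Contiguous ordered sub-sequences of at least two words."""
    n = len(words)
    return [words[i:j] for i in range(n) for j in range(i + 2, n + 1)]


def resolve_grouping(words, confidence, word_type, specificity):
    """Return 'entity[extra:...]' for a GROUPING, following Example 1."""
    scored = [(confidence.get(" ".join(a), 0.0), a)
              for a in ordered_alternatives(words)]
    best_conf, best = max(scored, key=lambda t: t[0])
    # The word with the most specific type becomes the chosen entity.
    chosen = max(words, key=lambda w: specificity.get(word_type.get(w), 0))
    if best_conf == 0.0 or chosen in best:
        return " ".join(words)   # assumed fallback: keep a single entity
    return f"{chosen}[extra:{' '.join(best)}]"


words = ["Newcastle", "city", "centre"]
conf = {"Newcastle city centre": 0.8, "city centre": 1.0}   # from Example 1
types = {"Newcastle": "GPE", "city": "ENTITY", "centre": "ENTITY"}
rank = {"GPE": 2, "ENTITY": 1}   # hypothetical specificity ranking
result = resolve_grouping(words, conf, types, rank)
```

Here result is "Newcastle[extra:city centre]", matching the final Singleton in Example 1.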

For Simplistic Graphs, “Newcastle upon Tyne” would be represented as one Singleton with no extra property.

Figure 4.

Continuing the example from Figure 3, we display how SetOfSingletons with type GROUPING are rewritten into Singletons with an extra property, modifying the main entity with additional spatial information. Line numbers refer to distinct subsequent phases from Algorithm S8.

Last, LaSSI also handles compound_prt relationships; unlike the above, these are coalesced into one Singleton because they represent a compound word, as follows: becomes . Therefore, they are not represented as a SetOfSingletons.
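As a minimal sketch of this coalescing, assuming a token map from ids to lemmas and UD edges as (head, dependent, label) triples, the particle is merged into its head verb so that the pair yields one Singleton label. Joining the two lemmas with a space, and accepting both the UD spelling compound:prt and the pipeline's compound_prt, are our assumptions.

```python
def coalesce_compound_prt(tokens, edges):
    """Merge each particle into its head verb, producing a single label.

    tokens: dict mapping token id -> lemma.
    edges: iterable of (head, dependent, label) UD triples.
    """
    merged = dict(tokens)
    for head, dep, label in edges:
        if label in ("compound:prt", "compound_prt"):
            merged[head] = f"{merged[head]} {tokens[dep]}"
            merged.pop(dep, None)   # the particle no longer stands alone
    return merged


tokens = {1: "they", 2: "shut", 3: "down", 4: "factory"}
merged = coalesce_compound_prt(tokens, [(2, 3, "compound:prt"), (2, 4, "obj")])
```

The phrasal verb “shut down” thus becomes a single node, whereas compound edges between nouns still produce a GROUPING SetOfSingletons.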

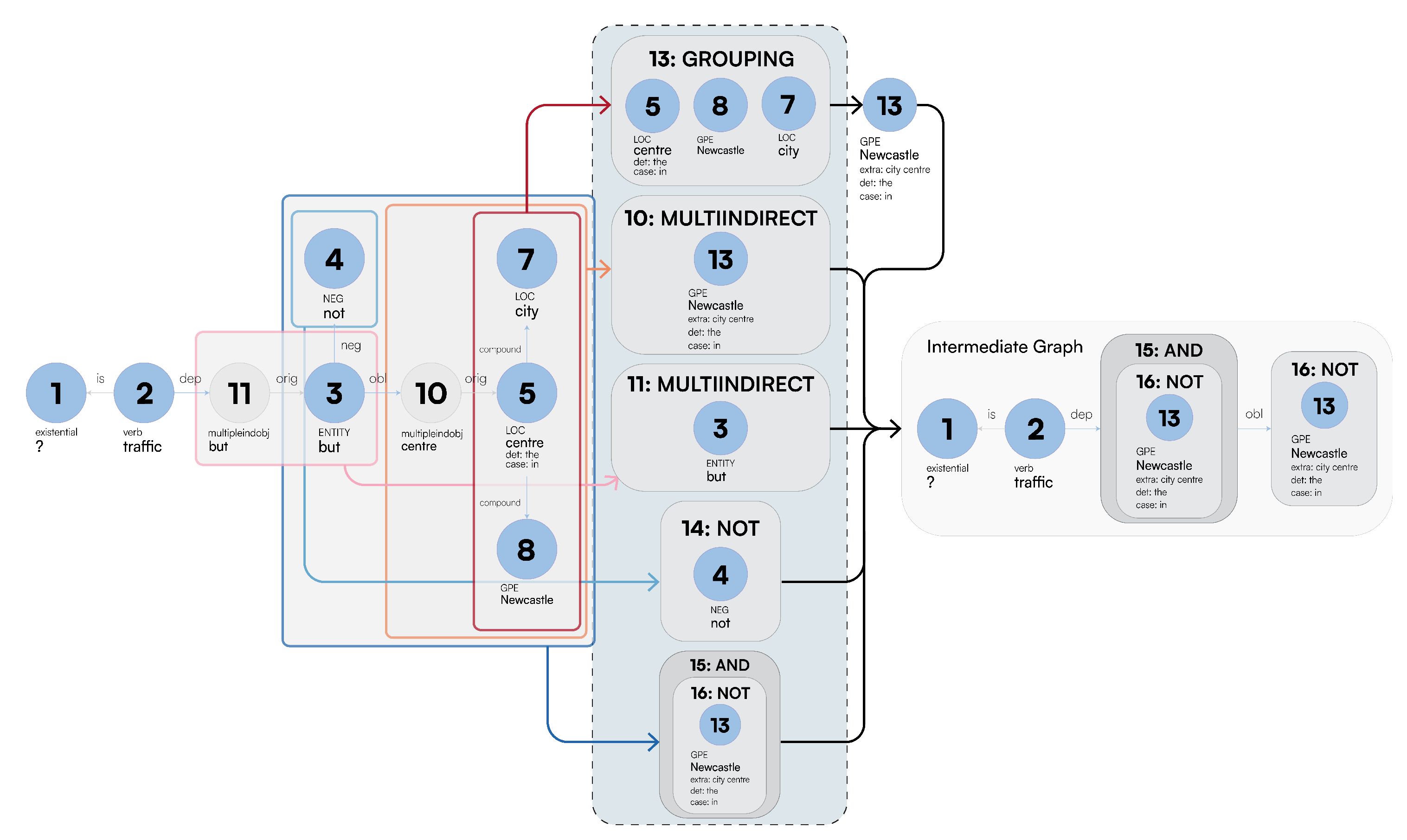

3.2. Ad Hoc

This phase provides a gradual ascent of the data representation ladder through which raw full text data are represented as logical programs via intermediate rewriting steps (Figure 5), thus achieving the most expressive representation of the text. As this provides an algorithm to extract a specification from each sentence, providing both a human- and machine-interpretable representation, we refer to this phase as an ad hoc explanation phase, where information is “mined” structurally and semantically from the text.

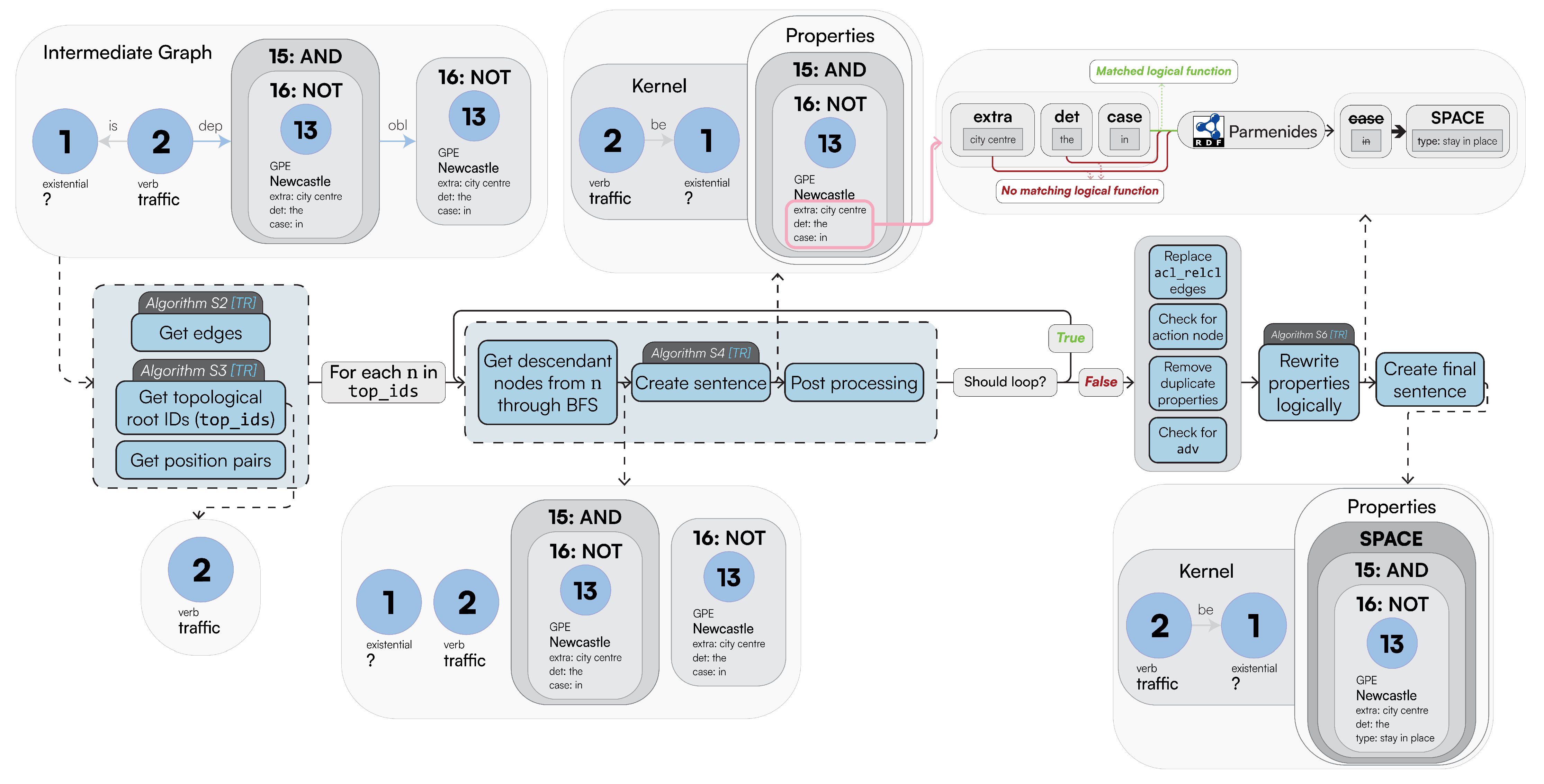

Figure 5.

A broad view of the ad hoc phase of the pipeline. With the intermediate graph generated, we iterate over each topologically sorted root node ID and create the final sentence representation. [TR] refers to our technical report [21].

The subsequent rewriting phases further decorate the raw data with contextual information. After generating a semistructured representation of the full text by enriching it with UDs from Stanford NLP ([21], Input in Figure 7 and Supplement VI.1), we apply a preliminary graph rewriting phase based on the MG approach through DatagramDB [49]; this phase aims to generate similar graphs for sentences that are permutations of one another or that differ only in active/passive form ([21], Result in Figure 7 and Section 3.2.1). We also derive a cluster of nodes (referred to as the SetOfSingletons) that can later be used to differentiate the main entity with respect to the concept that the kernel entity refers to ([21], Supplement III.2.1). Next, we acknowledge the recursive nature of complex sentences by visiting the resulting graph in topological order, thus generating minimal sentences (kernels) first and then merging them into a complex, nested sentence structure ([21], Section 3.2.2). Finally, we extract each linguistic logical function occurring within each minimal sentence using a rule-based approach that exploits the semantic information associated with each entity in the a priori phase ([21], Section 3.2.3).
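The kernels-first visit described above amounts to processing clauses in topological order, so that every embedded clause is rewritten before the clause that contains it. The sketch below uses Python's standard graphlib.TopologicalSorter; the depends_on mapping and the make_kernel callback are hypothetical stand-ins for LaSSI's graph and rewriting logic.

```python
from graphlib import TopologicalSorter


def build_kernels(depends_on, make_kernel):
    """Rewrite clauses so that every embedded clause is built before its parent.

    depends_on: dict mapping a clause to an ordered tuple of the clauses it
    embeds. make_kernel(clause, subs) returns the rewritten clause, given a
    dict of its already-built sub-kernels.
    """
    built = {}
    # static_order() yields every predecessor (embedded clause) first and
    # raises graphlib.CycleError if the dependencies are cyclic.
    for clause in TopologicalSorter(depends_on).static_order():
        subs = {c: built[c] for c in depends_on.get(clause, ())}
        built[clause] = make_kernel(clause, subs)
    return built


def make_kernel(clause, subs):
    # Leaves stay as-is; parents wrap their already-built children.
    return clause if not subs else f"{clause}({', '.join(subs.values())})"


kernels = build_kernels({"because": ("traffic", "busy")}, make_kernel)
```

Here kernels["because"] is "because(traffic, busy)": both minimal sentences are produced before the causal clause that nests them.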

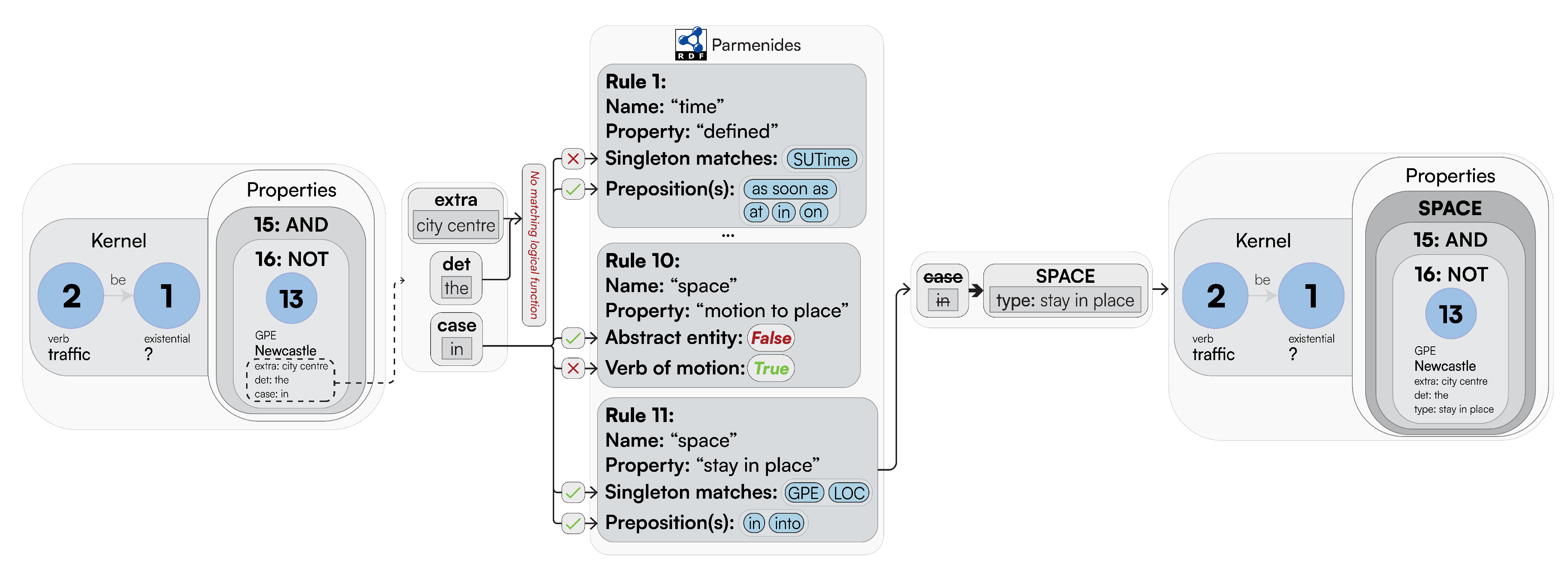

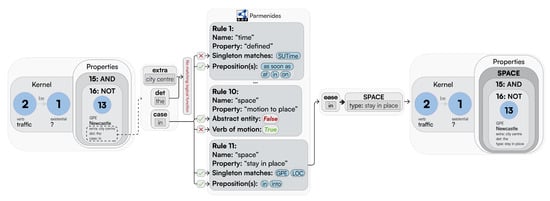

This rewriting mechanism exploits grammar rules found in any Italian linguistics textbook ([21], Supplement II.1) and is therefore easily accessible. To avoid hardcoding these rules in the pipeline, we represent them declaratively as instances of LogicalRewriteRule concepts within our Parmenides Upper Ontology ([21], Figure 9a). These rules can be easily interpreted as Horn clauses ([21], Figure 9c), so LaSSI can support further logical functions by extending the rules within the ontology rather than changing the codebase. Figure 6 summarises the contribution of [21], Algorithm S6. For each sentence node (either a SetOfSingletons or a Singleton), we obtain its properties and test all the rules stored in Parmenides in declaration order. When the premises of a rule are satisfied, the rule determines how to rewrite the matched content, either as properties of the relationship or of the node itself.

Figure 6.

An abstraction of ([21], Algorithm S6), continuing the example from Figure 4. We show how properties are matched against the Parmenides KB to find a logical function that matches each given property, to thus be rewritten and replaced within the final logical representation.

Example 2.

The sentence “Traffic is flowing in Newcastle city centre, on Saturdays” is initially rewritten as follows: flow(Traffic, None)[(GPE:Newcastle[(extra:city centre), (4:in)]), (DATE:Saturdays[(9:on)])]. This captures both the location (“Newcastle”) and the time (“Saturdays”). Given the rules in [21], Figure 9a, the sentence matches the rules for the GPE and DATE properties. After the application of the rules, the relationship is rewritten as follows:

flow(Traffic, None)[(SPACE:Newcastle[(type:stay in place), (extra:city centre)]), (TIME:Saturdays[(type:defined)])]

Due to lemmatisation, the edge label “is flowing” becomes flow.
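The declaration-order matching described above can be sketched on Example 2. The two rules below are hypothetical stand-ins that merely mirror the example's outcome; the real rules are LogicalRewriteRule instances stored in Parmenides, and their actual premises may differ. Only the first rule whose premise holds is applied.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Prop:
    kind: str                     # entity type from the a priori phase, e.g. "GPE"
    name: str                     # e.g. "Newcastle"
    prep: Optional[str]           # governing preposition, e.g. "in"
    extra: Optional[str] = None   # specification, e.g. "city centre"


@dataclass
class Rule:
    premise: Callable[[Prop], bool]
    function: str                 # logical function to rewrite into, e.g. "SPACE"
    subtype: str                  # e.g. "stay in place"


# Hypothetical rules, loosely mirroring Example 2.
RULES = [
    Rule(lambda p: p.kind == "GPE" and p.prep == "in", "SPACE", "stay in place"),
    Rule(lambda p: p.kind == "DATE" and p.prep == "on", "TIME", "defined"),
]


def rewrite(prop: Prop) -> str:
    for rule in RULES:                   # test rules in declaration order
        if rule.premise(prop):           # the first satisfied premise wins
            attrs = [f"(type:{rule.subtype})"]
            if prop.extra:
                attrs.append(f"(extra:{prop.extra})")
            return f"({rule.function}:{prop.name}[{', '.join(attrs)}])"
    return f"({prop.kind}:{prop.name})"  # no rule matched: keep the original type


props = [Prop("GPE", "Newcastle", "in", "city centre"), Prop("DATE", "Saturdays", "on")]
relationship = "flow(Traffic, None)[" + ", ".join(rewrite(p) for p in props) + "]"
```

The resulting string coincides with the rewritten relationship shown above; adding a new logical function only requires appending a rule, not changing rewrite.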

For conciseness, additional details for how such a matching mechanism works are presented in [21], Supplement V.

Finally, we derive a logical representation in FOL. Each entity is represented as a single function, whose arguments provide the name of the entity, its potential specification value, any adjectives associated with it (cop), and any explicit quantification. The latter is pivotal for spatial information, from which we can determine whether all the parts of the area () or just some of them () are considered. These characterisations are not represented as FOL universal or existential quantifiers, as they are only used to refine the intended interpretation of the function representing the spatial entity. Transitive verbs are always represented as binary propositions, while intransitive verbs are always represented as unary ones; in both cases, the proposition name is the associated verb. If an ellipsis in the text makes an implicit reference to either argument, that argument is replaced with a fresh variable, which is then bound by an existential quantifier. For both functions and propositions, we introduce a minor syntax extension that does not substantially affect their semantics; it acts as a shorthand that spares us from expressing, as additional arguments, the further logical functions and entities associated with them. We then introduce explicit properties p as key–value multimaps. Among these, we also consider a special constant (or 0-ary function) None, identifying that one argument is missing relevant information. We then derive the following syntax, which can adequately represent factoid sentences like those addressed in the present paper:

When an entity “foo” is associated with only a name and has no explicit all/some representation, this will be rendered as . When “foo” comes with a specification “bar” and has no explicit all/some representation, this is represented as . Given the intermediate representation resulting from [21], Section 3.2.2, we rewrite any logical connective occurring within either the relationships’ properties or within the remaining SetOfSingletons as logical connectives within the FOL representation, representing each Singleton as a single function. Each free variable in the formula is bound to a single existential quantifier. When possible, negations potentially associated with specifications of a specific function are then expanded and associated with the proposition containing such function as a term.
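The arity and fresh-variable conventions above can be sketched as a small renderer. The ASCII notation ("exists X0. ..."), the "?" marker for an elided argument, and the X0, X1, ... naming are hypothetical choices for illustration; they are not LaSSI's output syntax.

```python
import itertools


def render_proposition(verb, args):
    """Render a verb as a unary (intransitive) or binary (transitive) proposition.

    An argument given as "?" marks an ellipsis: it is replaced by a fresh
    variable, which is then bound by an existential quantifier.
    """
    if len(args) not in (1, 2):
        raise ValueError("propositions here are unary or binary")
    fresh = (f"X{i}" for i in itertools.count())
    bound, rendered = [], []
    for a in args:
        if a == "?":
            v = next(fresh)
            bound.append(v)
            rendered.append(v)
        else:
            rendered.append(a)
    formula = f"{verb}({', '.join(rendered)})"
    for v in reversed(bound):   # innermost quantifier binds the last variable
        formula = f"exists {v}. {formula}"
    return formula


eats = render_proposition("eat", ["cat", "?"])   # "The cat eats" (object elided)
flows = render_proposition("flow", ["traffic"])  # intransitive: unary
```

Because each elided argument receives its own fresh variable, no free variable remains in the rendered formula.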

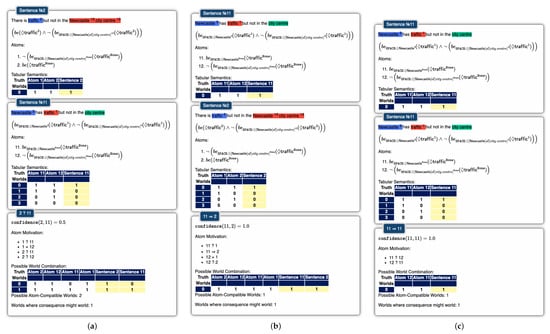

3.3. Ex Post

The ex post explanation phase details the similarity of two full-text sentences through a similarity score over a representation derived from the previous phase. For transformer approaches representing sentences as semantic vectors, we use cosine similarity ([21], Supplement VIII.1 and VIII.2). For LLMs, we use a normalised confidence score ([21], Supplement VIII.3). For graphs representable as collections of edges, we use alignment-driven similarities, for which node and edge similarity is defined via the cosine similarity over their full-text representation ([21], Supplement VIII.4). For our logical representation, we decompose the two full-text sentences into atoms, which we then expand following a common approach, namely Upper Ontologies [50,51], where TBox reasoning rules are hardcoded to ensure the correctness of the inference. Using the Parmenides KB, we expand atoms into propositions and derive the relationships occurring between pairs of propositions, marking them as entailment, equivalence, or indifference ([21], Appendix A.1). This culminates in calculating the confidence value of and . Thus, we derive two truth tables, one for each sentence, each extracted from the possible worlds that hold for all atoms within that sentence, which we then equi-join. By naturally joining all the derived tables together into , including the tabular semantics associated with each formula A and B ([21], Appendix A.2), we trivially reason paraconsistently by only considering the worlds that do not contain contradicting assumptions [19]. Section 5.3.4 provides a high-level graphical representation of this phase. We express confidence from Equation (3) as follows:

While the metric summarises the logic-based sentence relatedness, provides the full possible world combination table for the number derived. By following the interpretation of the support score, we then derive a score of 1 when the premise entails the consequence, 0 when the implication derives a contradiction, and any value in between otherwise, noting the estimated ratio of possible worlds, as stated above. Thus, the aforementioned score induces a three-way classification for the sentences of choice.

At this stage, we must derive a logic-driven similarity score to overcome the limitations of current symmetric measures, which cannot capture logical entailment. We can then reformulate the classical notion of confidence from association rule mining [52], which implicitly follows the steps of entailment and provides an estimate of conditional probability. From each sentence and its logical representation A, we need to derive the set of circumstances, or worlds, in which we trust the sentence will hold. As confidence values are always normalised between 0 and 1, they are well suited to representing the degree of trustworthiness of information. We can then rephrase the definition of confidence for logical formulae as follows:

Definition 1

(Confidence). Given two logically represented sentences A and B, let and represent the set of possible worlds where A and B hold, respectively. Then, the confidence metric, denoted as , is defined based on its bag semantics as follows:

Please observe that the only formula with an empty set of possible worlds is logically equivalent to the universal falsehood ; thus, .
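As a worked sketch, assuming the association-rule reading in which the confidence of A ⇒ B is the fraction of A's possible worlds in which B also holds, we can enumerate Boolean assignments over the atoms and apply the three-way reading described above (1: implication, 0: inconsistency, otherwise indifference). Formulae are Python predicates here purely for illustration, and the sketch deliberately leaves the unsatisfiable-premise case undefined rather than guessing the paper's convention.

```python
from itertools import product


def worlds(atoms, formula):
    """All truth assignments over `atoms` in which `formula` holds."""
    result = []
    for values in product([False, True], repeat=len(atoms)):
        world = dict(zip(atoms, values))
        if formula(world):
            result.append(world)
    return result


def confidence(atoms, a, b):
    """Fraction of the worlds of A in which B also holds (confidence of A => B)."""
    wa = worlds(atoms, a)
    if not wa:
        raise ValueError("A is unsatisfiable; this sketch leaves the case undefined")
    return sum(1 for w in wa if b(w)) / len(wa)


def classify(score):
    if score == 1.0:
        return "implication"
    if score == 0.0:
        return "inconsistency"
    return "indifference"


atoms = ["busy", "traffic"]
busy_and_traffic = lambda w: w["busy"] and w["traffic"]
traffic = lambda w: w["traffic"]
forward = confidence(atoms, busy_and_traffic, traffic)    # A => B
backward = confidence(atoms, traffic, busy_and_traffic)   # B => A
```

The two directions differ (forward is 1.0, an implication; backward is 0.5, an indifference), which is precisely the asymmetry that a symmetric similarity cannot express.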

In [21], Appendix A.2 formalises the tabular semantics in terms of relational algebra, thus showing that all the worlds in which a formula holds can be enumerated, while circumscribing them to the propositions that define the formula.
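The relational reading can be sketched with plain Python rows. Each formula yields a truth table over its own atoms plus one column holding the formula's value; a natural join on the shared atom columns keeps only combinations that agree on every shared atom, i.e., it discards worlds with contradicting assumptions. The formulae p ∧ q and q ∨ r are illustrative, and this is a sketch rather than the implementation in [21].

```python
from itertools import product


def truth_table(name, atoms, formula):
    """One row per assignment of `atoms`, plus a column `name` with the formula value."""
    rows = []
    for values in product([False, True], repeat=len(atoms)):
        row = dict(zip(atoms, values))
        row[name] = formula(row)
        rows.append(row)
    return rows


def natural_join(left, right):
    """Relational natural join: merge the rows agreeing on every shared column."""
    if not left or not right:
        return []
    shared = set(left[0]) & set(right[0])
    return [
        {**l, **r}
        for l in left
        for r in right
        if all(l[c] == r[c] for c in shared)
    ]


table_a = truth_table("A", ["p", "q"], lambda r: r["p"] and r["q"])
table_b = truth_table("B", ["q", "r"], lambda r: r["q"] or r["r"])
joined = natural_join(table_a, table_b)
a_rows = [row for row in joined if row["A"]]
```

The join yields the 8 worlds over p, q, r exactly once each; in both rows where A holds, B also holds, so counting them gives a confidence of 1 for A ⇒ B.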

4. Results

The Results section is structured as follows. First, we demonstrate that the notion of logical entailment cannot be derived via any symmetric similarity function (Section 4.1). Our argument rests on the following observation: given that current symmetric metrics are better suited to capturing sentence similarity through clustering, owing to their predisposition to represent equivalence rather than entailment, we show that assuming symmetric metrics also leads to incorrect clustering outcomes, in which dissimilar or non-equivalent sentences are grouped together. Second, we provide empirical benchmarks showing that this cannot be achieved through classical transformer-based approaches, as outlined in the Introduction (Section 4.2). Together, these components provide a pipeline ablation study concerning the different rewriting stages occurring within the ad hoc phase of the pipeline, while more thorough considerations on disabling the a priori phase are presented in the Discussion (Section 5.2). To improve the paper’s readability, we moved our scalability results to [21], Appendix C, where we address the scalability (also referred to as AI latency) of the proposed approach by considering subsets of full-text sentences appearing as nodes in the ConceptNet common-sense knowledge graph.

The experiments were run on a Linux Desktop machine with the following specifications: CPU: 12th Gen Intel i9-12900 (24) @ 5 GHz; memory: 32 GB DDR5. The raw data for the results, including confusion matrices and generated final logical representations, can be found on OSF.io (https://osf.io/g5k9q/; accessed on 28 March 2025).

4.1. Theoretical Results

As the notion of verified AI incentivises inheriting logic-driven notions for ensuring the correctness of algorithms [22], we leverage the logical notion of soundness [53] to map the common-sense interpretation of a full text into a machine-readable representation (a logical rule or a vector embedding): a rewriting process is sound if the rewritten representation logically follows from the original sentence, which is how we characterise correctness. For the sake of the current paper, we limit our interest to capturing logical entailment as generally intended between two sentences. Hence, we are interested in the following definition of soundness.

Definition 2

(Weak Soundness). Weak Soundness, in the context of sentence rewriting, refers to the preservation of the original semantic meaning of the logical implication of two sentences α and β. Formally, as follows:

where S is the common-sense interpretation of the sentence, is the notion of logical entailment between textual concepts, and is a predicate deriving the notion of entailment from the τ transformation of a sentence through the choice of a preferred similarity metric .

In the context of this paper, we are then interested in capturing sentence dissimilarities in a non-symmetrical way, thus capturing the notion of logical entailment, as follows:

No symmetric similarity metric (including cosine similarity and edge-based graph alignment) can be used to express logical entailment (Section 4.1.1), while the notion of confidence adequately captures logical implication by design (Section 4.1.2). All proofs for the forthcoming lemmas are presented in [21], Appendix B.

4.1.1. Cosine Similarity

The above entails that we can always derive a threshold value θ, above which we deem one sentence to imply the other, thus enabling the following definition.

Definition 3.

Given that α and β are full texts and τ is the vector embedding of the full text, we derive entailment from any similarity metric as follows:

where θ is a constant threshold. This definition allows us to express implications as exceeding a similarity threshold.

As cosine similarity captures the notion of similarity, and hence approximates a notion of equivalence, this metric is symmetric.

Lemma 1.

Cosine similarity is symmetric.

Symmetry breaks the capturing of directionality for logical implication. Symmetric similarity metrics can lead to situations where soundness is violated. For instance, if holds based on a symmetric metric, then would also hold, even if it is not logically valid. We derive implication from similarity when the similarity metric for is different to that of , given that ; this shows that one thing might imply the other but not vice versa. To enable the identification of implication across different similarity functions, we entail the notion of implication via the similarity value as follows.

Lemma 2.

All symmetric metrics trivialise logical implication, as follows:

Since symmetric metrics such as cosine similarity cannot capture the directionality of implication, they cannot fully represent logical entailment and thus violate our intended notion of correctness. This limitation highlights the need for alternative approaches that model implication accurately.
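The argument can be checked numerically with a small sketch of Definition 3: entailment is predicted when the cosine similarity of two embeddings exceeds a threshold θ. The vectors below are hypothetical stand-ins for τ(α) and τ(β), not outputs of any model used in this paper.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def entails_by_threshold(u, v, theta):
    """Definition 3 style: predict alpha => beta iff sim(u, v) exceeds theta."""
    return cosine(u, v) > theta


tau_alpha = [0.2, 0.9, 0.1]   # hypothetical embedding of alpha
tau_beta = [0.8, 0.3, 0.5]    # hypothetical embedding of beta
```

Whatever θ is chosen, swapping the arguments cannot change the outcome, since cosine(u, v) equals cosine(v, u); predicting α ⇒ β therefore always predicts β ⇒ α as well.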

4.1.2. Confidence Metrics

In contrast to the former, we show that the confidence metric presented in Section 3.3 produces a value that aims to express logical entailment under the assumption that the transformation from the full text to a logical representation is correct.

Lemma 3.

When two sentences are equivalent, they always have the same confidence.

As a corollary of the above, this shows that our confidence metric is non-symmetric.

Corollary 1.

Confidence provides an adequate characterisation of logical implication.

This observation leads to the following notion of entailment given τ, the processing pipeline.

Definition 4.

Given that α and β are full texts and τ is the logical representation of the full text derived in the ad hoc phase (Section 3.2), we derive entailment from any similarity metric as follows:

4.2. Classification

While the previous section provided the basis for demonstrating the theoretical impossibility of capturing implication through symmetric similarity metrics, the following experiments aim to test this argument from a different perspective. For conciseness, we refer to [21], Appendix D for further information concerning the preliminary clustering steps, through which we derive the upper threshold , separating the indifferent and conflicting sentences from the equivalent and entailing ones.

We want to determine whether each approach can not only identify which sentences are equivalent but also differentiate between logical entailment, indifference, and inconsistency. To do so, we first annotate each sentence pair within our proposed datasets to indicate this distinction. Given that none of these approaches were explicitly trained to recognise conflicting arguments, we then derived an upper threshold value , separating the conflicting sentences from the rest by taking the maximal similarity score among the pairs of sentences expected to be contradictory according to the manual annotation. If , we consider only one threshold value separating entailing and contradictory data (). Thus, we consider all similarity values above as predictive of a logical entailment and all values lower than as predictive of a conflict between the two sentences. Otherwise, we determine an indifference relationship. Please observe that different clustering algorithms might lead to different scores; given this, we also expect to see variations within the classification scores discussed in [21], Supplement VII.3. Thus, our classification results explicitly report the name of the paired clustering algorithm leading to each specific classification outcome.
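The threshold scheme can be sketched as a three-way labelling function. Because the threshold symbols were lost in extraction, the names theta (entailment threshold from clustering) and psi (conflict threshold, the maximal similarity among pairs annotated as contradictory), as well as the collapse to theta alone when psi ≥ theta, reflect our reading of the text and should be treated as assumptions.

```python
def classify_similarity(sim, theta, psi):
    """Three-way label from a similarity score normalised in [0, 1].

    theta: hypothetical entailment threshold derived from clustering.
    psi: hypothetical conflict threshold, i.e. the maximal similarity among
    pairs annotated as contradictory. When psi >= theta, only theta is used.
    """
    if psi >= theta:
        psi = theta            # a single threshold separates the two classes
    if sim > theta:
        return "implication"
    if sim < psi:
        return "inconsistency"
    return "indifference"


labels = [classify_similarity(s, theta=0.7, psi=0.3) for s in (0.9, 0.5, 0.1)]
```

With theta = 0.7 and psi = 0.3, the scores 0.9, 0.5, and 0.1 are labelled implication, indifference, and inconsistency, respectively.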

We used the following transformers available on HuggingFace [54], taken from the state-of-the-art research papers discussed in Section 2.2:

- T1. all-MiniLM-L6-v2 [35];
- T2. all-MiniLM-L12-v2 [35];
- T3. all-mpnet-base-v2 [33];
- T4. all-roberta-large-v1 [34];
- T5. DeBERTaV2+AMR-LDA [12];
- T6. ColBERTv2+RAGatouille [36].

For all the approaches, we consider all similarity metrics as already normalised between 0 and 1, as discussed in Section 3.3. We can derive a distance function from this by subtracting the value from one; hence, . Since not all of these approaches lead to symmetric distance functions and given that the clustering algorithms work under symmetric distance functions, we obtain a symmetric definition by averaging the distance values as follows:

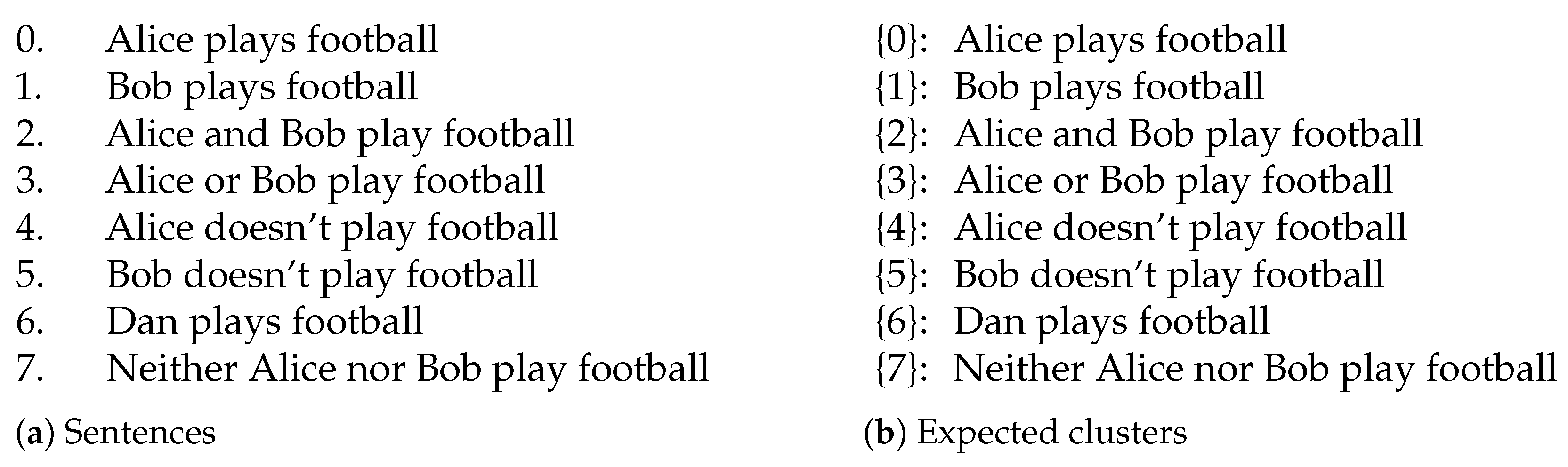

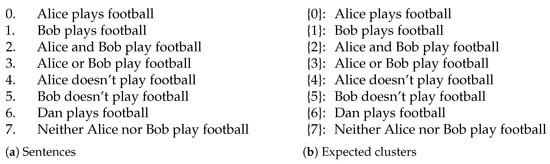
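The symmetrisation described above, where each distance is obtained by subtracting a normalised similarity from one and the two directions are then averaged, can be sketched as a matrix builder. The sim callback and the toy similarity values are hypothetical; the resulting matrix can be handed to any clustering routine that accepts precomputed symmetric distances.

```python
def symmetric_distance_matrix(items, sim):
    """Turn a possibly asymmetric similarity in [0, 1] into a symmetric distance.

    d(x, y) = 1 - sim(x, y); the symmetrised value averages both directions.
    """
    n = len(items)
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d_ij = 1.0 - sim(items[i], items[j])
            d_ji = 1.0 - sim(items[j], items[i])
            dist[i][j] = (d_ij + d_ji) / 2.0
    return dist


# Hypothetical asymmetric scores, e.g. a confidence-like value per direction.
scores = {("a", "b"): 1.0, ("b", "a"): 0.5}
sim = lambda x, y: 1.0 if x == y else scores[(x, y)]
D = symmetric_distance_matrix(["a", "b"], sim)
```

Here D[0][1] and D[1][0] are both 0.25: the one-directional implication from a to b is preserved only as a partial distance, which is why clustering alone cannot represent entailment.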

4.2.1. Capturing Logical Connectives and Reasoning

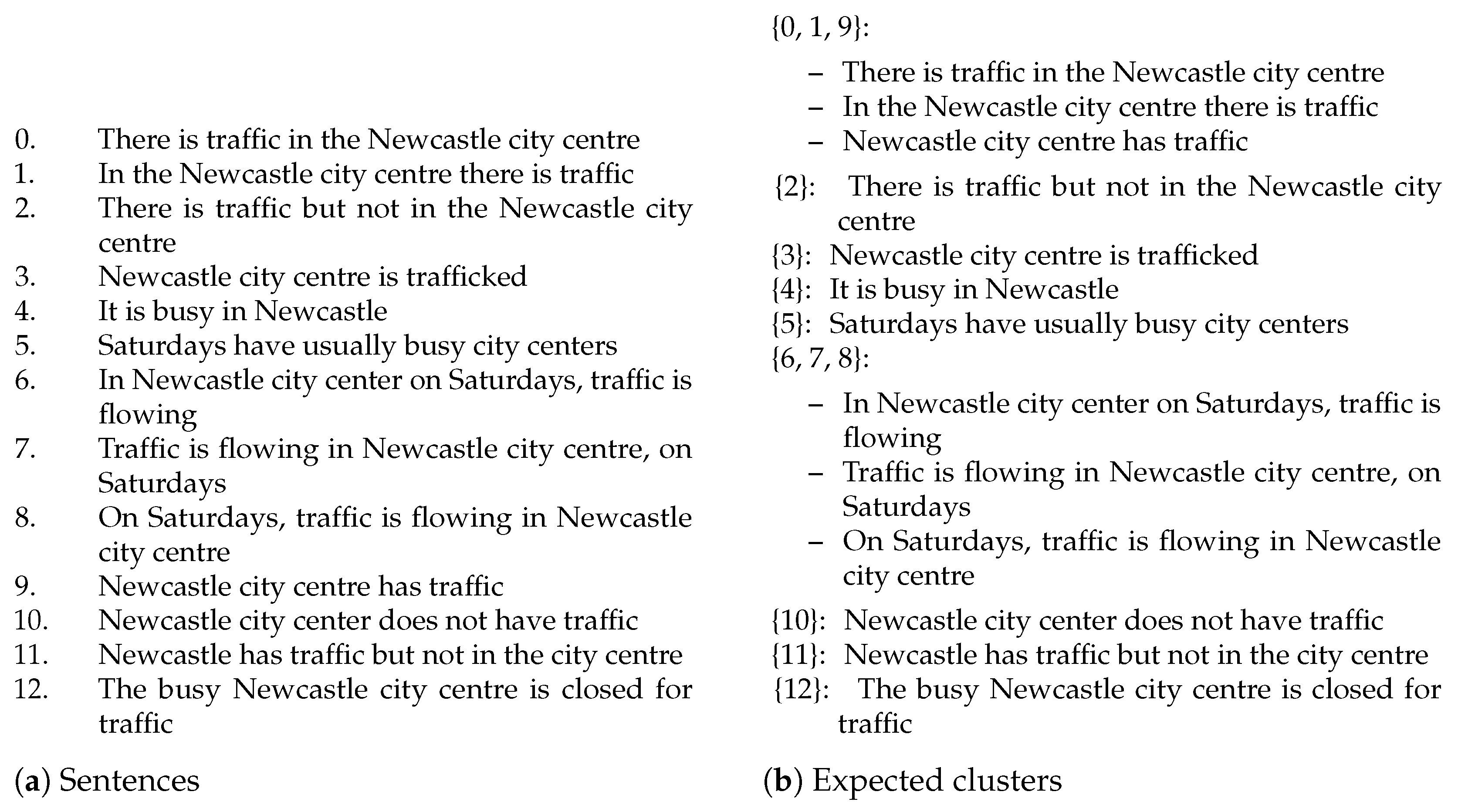

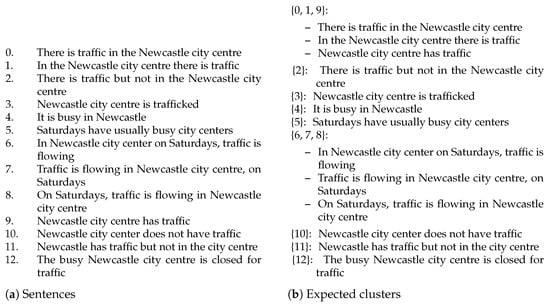

We check whether logical connectives are treated as stop words and are therefore ignored by transformers. This is tested through the sentences in Figure 7a, where one sentence might imply another, but not vice versa. However, as our clustering approaches only group sentences that are similar in both directions, each of our expected clusters contains only one element, as shown in Figure 7b.

Figure 7.

Sentences and expected clusters for RQ №2(a), where no sentences are clustered together, as no sentence is perfectly similar to another in both directions.

In Table 2, we report both macro and weighted average scores, as all our datasets are heavily unbalanced. Furthermore, as different clustering algorithms might derive different upper threshold values , we keep the classification outcomes of each clustering algorithm distinct. Our logical approach excels, achieving perfect scores across all classification metrics. The preliminary stage of our pipeline, Simple Graph (SG), achieves the worst scores across the board, clearly indicating that merely targeting node similarity through node and edge embeddings is insufficient for fully capturing sentence structure, even after GGG rewriting. Logical Graphs (LGs) slightly improve over SGs due to the presence of logical operations; this approach also improves over classical sentence transformer approaches, with a few exceptions.

Table 2.

Classification scores for RQ №2(a) sentences; the best value for each row is highlighted in bold blue text, while the worst values are highlighted in red. The classes are distributed as follows: implication: 15; inconsistency: 16; indifference: 33.

In fact, ColBERTv2+RAGatouille performs better than the other sentence transformers, whereas DeBERTaV2+AMR-LDA performs poorly, indicating that this approach is unsuitable for reasoning over logical operators not captured in its training phase. Moreover, our proposed logical representation improves over LGs through the tabular reasoning phase, owing to the classical Boolean interpretation of the formulae; this indicates that graph similarity alone cannot fully capture the essence of logical reasoning. For the competing approaches, the weighted average is consistently lower than the macro average, suggesting that the misclassifications are not necessarily ascribable to the imbalanced nature of the dataset, and that the majority class (indifference) was the one most often misclassified.
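The reasoning about the two averages can be illustrated with a short computation. The class supports are those listed in the Table 2 caption (implication: 15; inconsistency: 16; indifference: 33); the per-class F1 values are hypothetical, chosen so that the majority class scores worst.

```python
def macro_and_weighted(per_class, support):
    """Macro average (unweighted mean) and support-weighted average of per-class scores."""
    classes = list(per_class)
    macro = sum(per_class[c] for c in classes) / len(classes)
    total = sum(support[c] for c in classes)
    weighted = sum(per_class[c] * support[c] for c in classes) / total
    return macro, weighted


support = {"implication": 15, "inconsistency": 16, "indifference": 33}  # Table 2
per_class_f1 = {"implication": 0.6, "inconsistency": 0.6, "indifference": 0.3}  # hypothetical
macro, weighted = macro_and_weighted(per_class_f1, support)
```

Here the macro average is 0.5, while the weighted average is 28.5/64 ≈ 0.445: since indifference accounts for 33 of the 64 pairs, a weighted average below the macro one signals that the majority class is the one scored worst.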

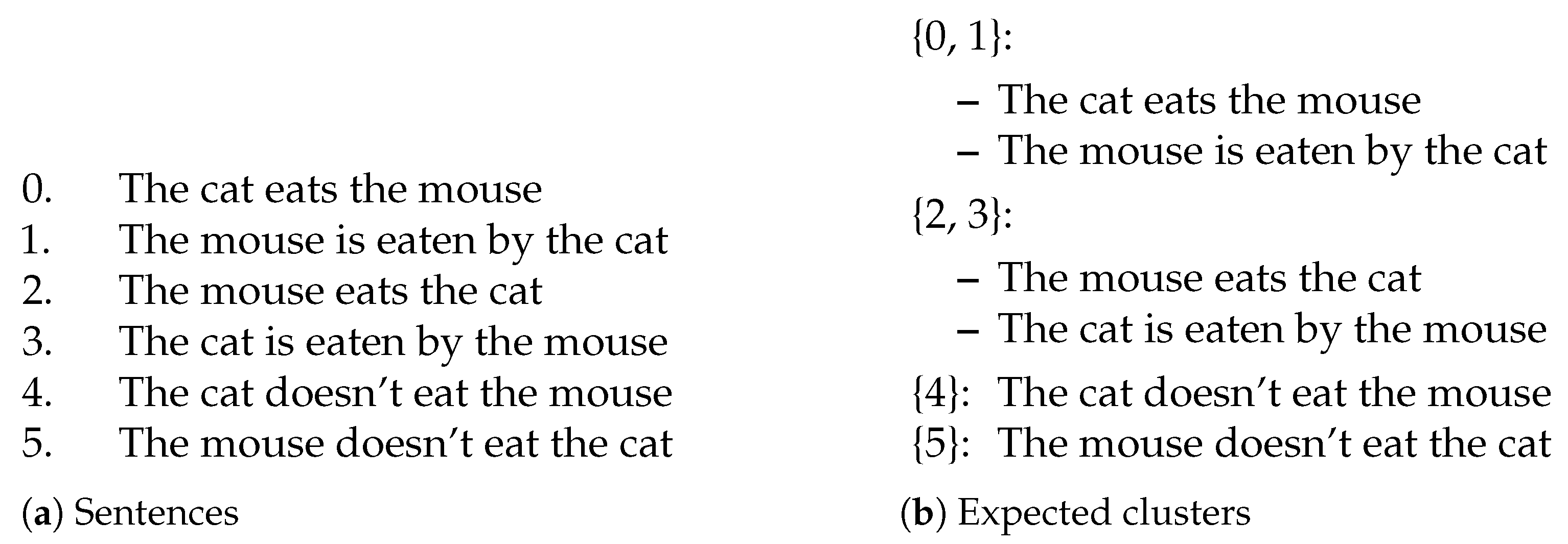

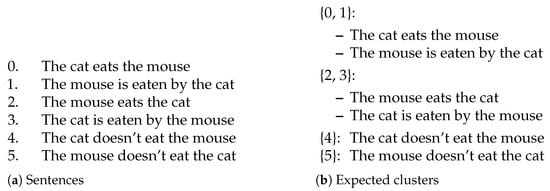

4.2.2. Capturing Simple Semantics and Sentence Structure

The sentences in Figure 8a are all variations involving the same subjects (a cat and a mouse), with different actions and active/passive relationships. The dataset is designed to produce sentences with similar words in a different order, allowing us to determine whether a sentence embedding captures meaning from structure rather than from edit distance. Figure 8b shows the expected clusters for the RQ №2(b) dataset. Here, sentences 0 and 1 are clustered together, as the subject’s action on the direct object is the same in both: “the cat eats the mouse” is equivalent to “the mouse is eaten by the cat”. Similarly, sentences 2 and 3 are the same, but with the action reversed (the mouse eats the cat in both).

Figure 8.

Sentences and expected clusters for RQ №2(b).

Table 3 provides a more in-depth analysis of the situation where, instead of being satisfied with the techniques above capturing the notion of equivalence between sentences, we also require them to make finer-grained semantic distinctions. The inability of DeBERTaV2+AMR-LDA to fully capture sentence semantics might be explained by its reliance on AMR since, in this dataset, the main differences across the data lie in the presence of negation and in sentences appearing in both active and passive form. This supports the evidence that transformer-based approaches providing a single vector generally obtain better results through masking and tokenisation. Unlike in the previous set of experiments, subdividing the text encoding into multiple different sentences proved ineffective for the ColBERTv2+RAGatouille approach, as splitting short sentences into separate tokens to derive a vector results in the complete loss of the semantic information captured by the sentence structure. In this scenario, the proposed logical approach is mainly supported by tabular semantics, as most of the sentences are simply represented as two variants of atoms, potentially negated. This indicates that logical inference cannot be fully captured through graph structure alone. In this scenario, precision scores appear almost identical, independently of the clustering and averaging technique. The accuracy and F1 scores show that the similarity score is very close to a random choice.

Table 3.

Classification scores for RQ №2(b) sentences, with the best value for each row highlighted in bold blue text, while the worst values are highlighted in red. The classes are distributed as follows: Implication: 10, Inconsistency: 8, Indifference: 18.

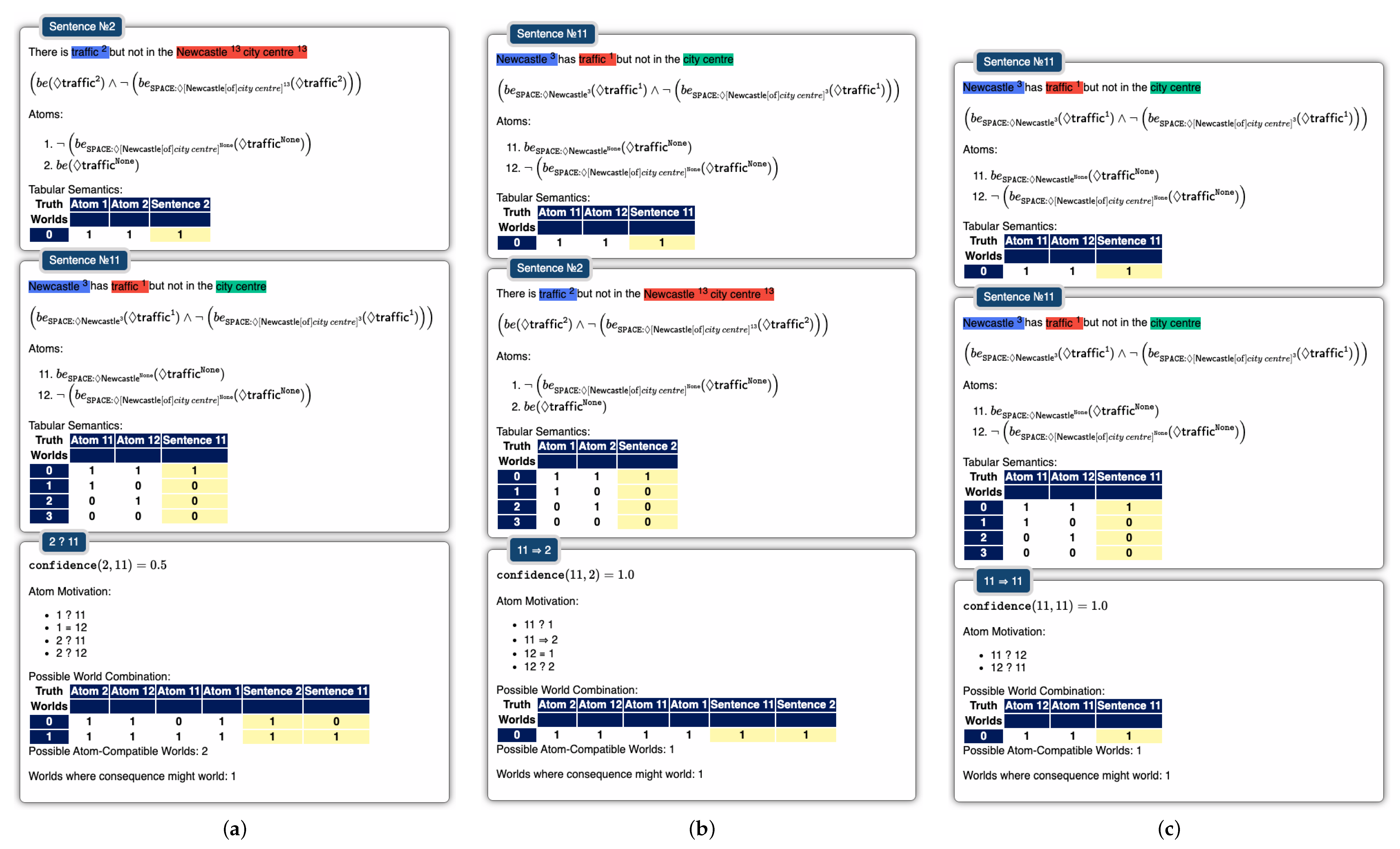

4.2.3. Capturing Simple Spatiotemporal Reasoning

We considered multiple scenarios involving traffic in Newcastle, as presented in Figure 9a, which extend those from our previous paper with more permutations of the sentences. This was done to obtain multiple versions of the same sentence that should be treated equally, by ensuring that the rewriting of each permutation is the same and therefore results in 100% similarity. We consider this dataset as a benchmark over a part/existential semantics , thus assuming that all omitted quantifiers refer to an existential (e.g., somewhere in Newcastle, in some city centres, on some Saturdays). In addition, we consider negation as a flattening out process.

Figure 9.

Sentences and expected clusters for RQ №2(c).

The clustering results now seem in line with the classification scores in Table 4, where DeBERTaV2+AMR-LDA consistently provided low scores, only slightly improved upon by our preliminary SG representation. Despite the good ability of LGs to cluster the sentences, they capture correct sentence classifications less favourably, as (consistently with the previous results) they are outperformed by competing transformer-based approaches which, nonetheless, provided less-than-random scores in terms of accuracy. Even in this scenario, the competing approaches exhibited a lower weighted average than macro average, indicating that the results were not biased by the unbalanced dataset.

Table 4.

Classification scores for RQ №2(c) sentences, with the best value for each row highlighted in bold blue text, while the worst values are highlighted in red. The classes are distributed as follows: Implication: 32, Inconsistency: 27, Indifference: 110.

5. Discussion

This section begins with a brief motivation for our design choice of considering shorter sentences before discussing more complex ones (Section 5.1). It then continues with a more detailed ablation study of our pipeline (Section 5.2) and compares the different types of explainability achievable by current explainers with that of our proposed approach (Section 5.3). In [21], Supplement IX provides some further preliminary considerations to be taken into account when analysing the logical representation returned by our pipeline.

5.1. Using Short Sentences

We restricted our current analysis to full texts with no given structure, as in ConceptNet [4], rather than texts already parsed as semantic graphs. If there are no major ellipses in a sentence, it can be fully represented using propositional logic; otherwise, we need to exploit existential quantifiers (which are now supported by the present pipeline). In propositional logic, the truth value of a complex sentence depends on the truth values of its simpler components (i.e., propositions) and on the connectives joining them. Therefore, using short sentences for logical rewriting is essential, as their validity directly influences that of the larger, more complex sentences constructed from them. If the short sentences are logically sound, the resulting rewritten sentences will also be logically sound, and we can ensure that each component of the rewritten sentence is a well-formed formula, thereby maintaining logical consistency throughout the process. For example, consider the sentence “It is busy in Newcastle city centre because there is traffic.” This sentence can be broken down into two short sentences: “It is busy in Newcastle city centre” and “There is traffic”. These short sentences can be represented as the propositions P and Q, respectively. The original sentence can then be expressed as Q → P, i.e., the presence of traffic implies that the city centre is busy. Thus, through the use of short sentences, we ensure that the overall sentence adheres to the rules of propositional logic [16].
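A minimal Python sketch of this compositionality, assuming the example is rendered as the material implication Q → P: the truth value of the whole sentence is computed solely from the truth values of P and Q. Material implication captures only the truth-functional reading of “because”, not its causal connotation.

```python
from itertools import product


def implies(antecedent: bool, consequent: bool) -> bool:
    """Material implication: false only if the antecedent holds and the consequent does not."""
    return (not antecedent) or consequent


# P: "It is busy in Newcastle city centre"; Q: "There is traffic".
# Assumed rendering of "P because Q" as Q -> P.
table = {(p, q): implies(q, p) for p, q in product([True, False], repeat=2)}

for (p, q), value in table.items():
    print(f"P={p!s:<5} Q={q!s:<5} Q->P={value}")
```

Only the row with P false and Q true (traffic, yet not busy) makes the compound sentence false.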

5.2. LaSSI Ablation Study

We implicitly performed an initial ablation study in Section 4.2. Our three ad hoc representation steps (SGs, LGs, and Logical) demonstrate how the introduction (or removal) of stages in the pipeline can enforce the proper semantic and structural understanding of sentences in different scenarios (RQ №2). Here, we extend this study by disabling the a priori phase of each pipeline stage and comparing the results, thus implicitly limiting the possibility of reasoning over the entities expressed within our Parmenides KB. As the ex post phase mainly reflects the computation of the confidence score that the logical phase requires to derive a sensible score, the generation of the scores (for both the clustering and the classification tasks) and of the dendrograms was not considered in this analysis. Thus, we focused on the different stages of the ad hoc phase while enabling or disabling the a priori phase.

For the RQ №2(a) and RQ №2(b) datasets, we obtained the same dendrograms and, consequently, the same clustering and classification results; this should be ascribed to the absence of semantic entities of interest, as these datasets mainly involve common entities. Given the redundancy of these plots, the dendrograms have been moved to Supplement IX.2 of [21].

We now discuss the dendrograms and classification outcomes for the RQ №2(c) dataset; the corresponding dendrograms are shown in Figure S11 of [21]. When disabling the a priori phase and the consequent meuDB match, fewer entities are matched, resulting in a slight decrease in the similarity values. Here, the differences stem from the logical implication between sentences, and more marked results can be noticed for the logical representation of sentences. As sentences are finally reduced to the number of possible worlds that their atoms can generate, we can better appreciate the differences in similarity through a more marked gradient. Further comparing the clusters, we observe that the semantic rewriting step undertaken in the final part of the ad hoc phase is what fully guarantees the uniformity of the sentence representations, which is maintained despite the failure to recognise the entities correctly. To better assess the loss of precision due to the missed recognition of the main entities, we report the obtained results in Table 5. SGs are clearly unaffected by the a priori phase since, at this stage, no further semantic rewriting is performed and all nodes are flattened into nodes containing only textual information. On the other hand, the a priori phase seems to affect LGs negatively, as the further distinction between main entity and specification does not improve the transformer-based node and edge similarity, given that part of the information is lost. As the ex post phase requires the preliminary recognition of entities to compute the confidence score, and as disabling the a priori phase leads to entities being missed, the improvement in scores can be ascribed solely to the correctness of the reasoning abilities implemented through Boolean-based classical semantics.
Comparing the results for the logical representation with those of the competing approaches in Table 4, we see that, notwithstanding the lack of multi-entity recognition (which is instrumental in connecting the entities within the intermediate representation leading to the final graph), the use of a proper logical reasoning mechanism still allowed our solution to achieve better overall classification scores than the competing approaches.

Table 5.

Classification scores for RQ №2(c) sentences, comparing transformation stages with the a priori phase disabled in each case.

5.3. Explainability Study

The research paradigm known as Design Science Research focusses on the creation and verification of prescriptive knowledge in information science while assessing how well it fits the research objectives [55]. While the previous set of experiments shows that the current methodology attempts to overcome the limitations of current state-of-the-art approaches, this section focusses on determining the suitability of LaSSI for explaining the reasons leading to the final confidence score, which is used both as a similarity score and as a classification outcome. The remainder of this subsection is structured according to the rigid framework of Design Science Research identified by Johannesson and Perjons [56], so as to ensure the objectivity of our considerations.

5.3.1. Explicate Problem

When considering explanations for textual classification, we seek an explainable methodology motivating why the classifier returned the expected class for a specific text. At the time of writing, given that pre-trained language models act as black boxes, the only possible way to derive an explanation of the classification outcome from the text is to train another, white-box classifier, often referred to as an “explainer”. This acts as an additional classifier correlating single features with the classification outcome, thus potentially introducing further classification errors [57]. Currently, explainers for textual classification tasks weight each specific word or passage of the text; while such a characterisation is sufficient for sentiment analysis [58] or misinformation detection [26], it cannot adequately represent the notion of semantic entailment, which requires defining a correlation between the premise and the consequence occurring within the text, as well as targeting deeper semantic correlations across the two distinct parts of the given implication.

5.3.2. Define Requirements

Current explainers cannot explicitly derive a trained model's reasoning through explanations similar to chain-of-thought prompting [59], as they merely correlate the features occurring within the text with the classification label; requiring this capability would therefore bias the evaluation against real-world explainers. We thus restrict the correctness criteria and desiderata to the following basic characteristics that an explainer must possess.

- Req №1: The trained model used by the explainer should minimise the degradation of classification performance.
- Req №2: The explainer should provide an intuitive explanation of why the text correlates with the classification outcome.
- Req №3: The explainer should derive connections between semantically entailing words towards the classification task.
  - (a) The existence of one single feature should not be sufficient to derive the classification; when this occurs, the model overfits a specific dataset rather than learning to understand the general context of the passage.

5.3.3. Design and Develop

At the time of writing, both LIME [60,61,62] and SHAP [63,64] require an extra off-the-shelf classifier to support the explanation of words or passages within the text in relation to the classification label. We therefore pre-process our annotated dataset to create pairs of strings as in Supplement VIII.3 of [21], each associated with its expected classification outcome. The resulting corpus is used to fit the following models:

- TF-IDFVec+DT: TF-IDF Vectorisation [65] is a straightforward approach to represent each document within a corpus as a vector, where each dimension holds the TF-IDF value [39] of a word in the document. After vectorising the corpus, we fit a Decision Tree (DT) to learn the correlation between word frequency and classification outcome. Stopwords such as “the” typically have low IDF scores, as they occur in most documents of the corpus. Nevertheless, we retain all occurring words to minimise our bias when training the classifier. As this mechanism violates Req №3(a), we pair it with the following attention-based one.

- DistilBERT+Train: DistilBERT [66] is a transformer model designed to be fine-tuned on tasks that use entire sentences (potentially masked) to make decisions [67]. It uses WordPiece subword segmentation to extract features from the full text. We use this transformer to go beyond the straightforward word tokenisation of the former approach. Thus, this approach will not violate Req №3(a) unless the attention mechanism focuses on one single word to draw conclusions, which would reveal its inability to draw correlations across the two sentences.
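The following pure-Python sketch computes the classic, unsmoothed inverse document frequency, idf(t) = ln(N / df(t)), over a toy corpus made of sentences 2 and 11 from Figure 10 plus one hypothetical sentence; it is an illustration, not the vectoriser actually used for TF-IDFVec+DT. A term occurring in every document, such as “the”, receives an IDF of zero, whereas rarer terms are weighted more. Libraries commonly smooth this formula (scikit-learn's `TfidfVectorizer` defaults to ln((1 + N) / (1 + df(t))) + 1), so common words there receive the minimum weight rather than zero.

```python
import math
from collections import Counter


def tokenize(text):
    """Lower-cased whitespace tokenisation (no stopword removal, as in the text)."""
    return text.lower().split()


def inverse_document_frequency(corpus):
    """Classic idf(t) = ln(N / df(t)), with df(t) the number of documents containing t."""
    n = len(corpus)
    df = Counter(term for doc in corpus for term in set(tokenize(doc)))
    return {term: math.log(n / count) for term, count in df.items()}


def tf_idf(doc, idf):
    """Relative term frequency times idf, for each term of a document in the corpus."""
    counts = Counter(tokenize(doc))
    total = sum(counts.values())
    return {term: (c / total) * idf[term] for term, c in counts.items()}


corpus = [
    "There is traffic but not in the Newcastle city centre",  # sentence 2
    "Newcastle has traffic but not in the city centre",       # sentence 11
    "It is busy in the Newcastle city centre",                # hypothetical
]
idf = inverse_document_frequency(corpus)
print(idf["the"], round(idf["has"], 4))  # 0.0 1.0986
print(tf_idf(corpus[1], idf)["the"])     # 0.0
```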

The resulting trained models are then fed to LIME and SHAP explainers, which explain how single word frequencies (TF-IDFVec+DT) or sentence parts (DistilBERT+Train) correlate with the expected classification label.
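As a toy illustration of the attributions that SHAP approximates, the following Python sketch computes exact Shapley values by enumerating every subset of the words of sentence 11, treating an absent word as removed. The scoring function `p_inconsistency` is hypothetical and merely stands in for a trained classifier such as DistilBERT+Train; real SHAP explainers approximate these values and handle missing features differently (e.g. through masking or background data). A model whose decision hinges on “not” alone assigns all the attribution to that word, which is precisely the single-feature behaviour that Req №3(a) rules out.

```python
from itertools import combinations
from math import factorial


def shapley_values(features, value):
    """Exact Shapley values: weighted average marginal contribution over all coalitions.

    Enumerates 2^(n-1) coalitions per feature, so it is only feasible for short texts.
    """
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of size k.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = frozenset(coalition)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def p_inconsistency(present_words):
    """Hypothetical classifier: its Inconsistency probability depends only on 'not'."""
    return 0.9 if "not" in present_words else 0.1


words = ["newcastle", "has", "traffic", "but", "not", "in", "the", "city", "centre"]
phi = shapley_values(words, p_inconsistency)
for word, contribution in sorted(phi.items(), key=lambda kv: -kv[1]):
    print(f"{word:>10}: {contribution:+.3f}")
```

The attributions sum to the difference between the full-sentence and empty-input scores (0.9 - 0.1), so the whole shift lands on “not” while every other word receives zero.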

5.3.4. Artifact Evaluation

We train the previous models over the RQ №2(c) dataset, as it is semantically richer: correlations across entailing sentences are essential, and both term similarity and logical connectives must be considered. To avoid any potential bias that a classifier might introduce when providing the classification labels, we train the models from the previous section directly on the annotated dataset.

Performance Degradation

In relation to Req №1, Table 6 shows that a straightforward frequency-based DT classifier outperforms a re-trained language model. While the former clearly overfits the term frequency distribution, thus potentially leading to deceitful explanations, the latter might still derive wrong explanations due to its low precision. Higher weighted averages indicate that the classifiers are biased towards the majority class, i.e., indifference.

Table 6.

Performance degradation when training a preliminary model used by the explainer to correlate parts of text to the classification label. Refer to Section 4.2.3 for classification results from LaSSI.

Intuitiveness

In relation to Req №2, an intuitive explanation should clearly show why the model made a specific classification based on the input text, ideally in a way that aligns with human understanding or, at a minimum, reveals the model’s internal logic.

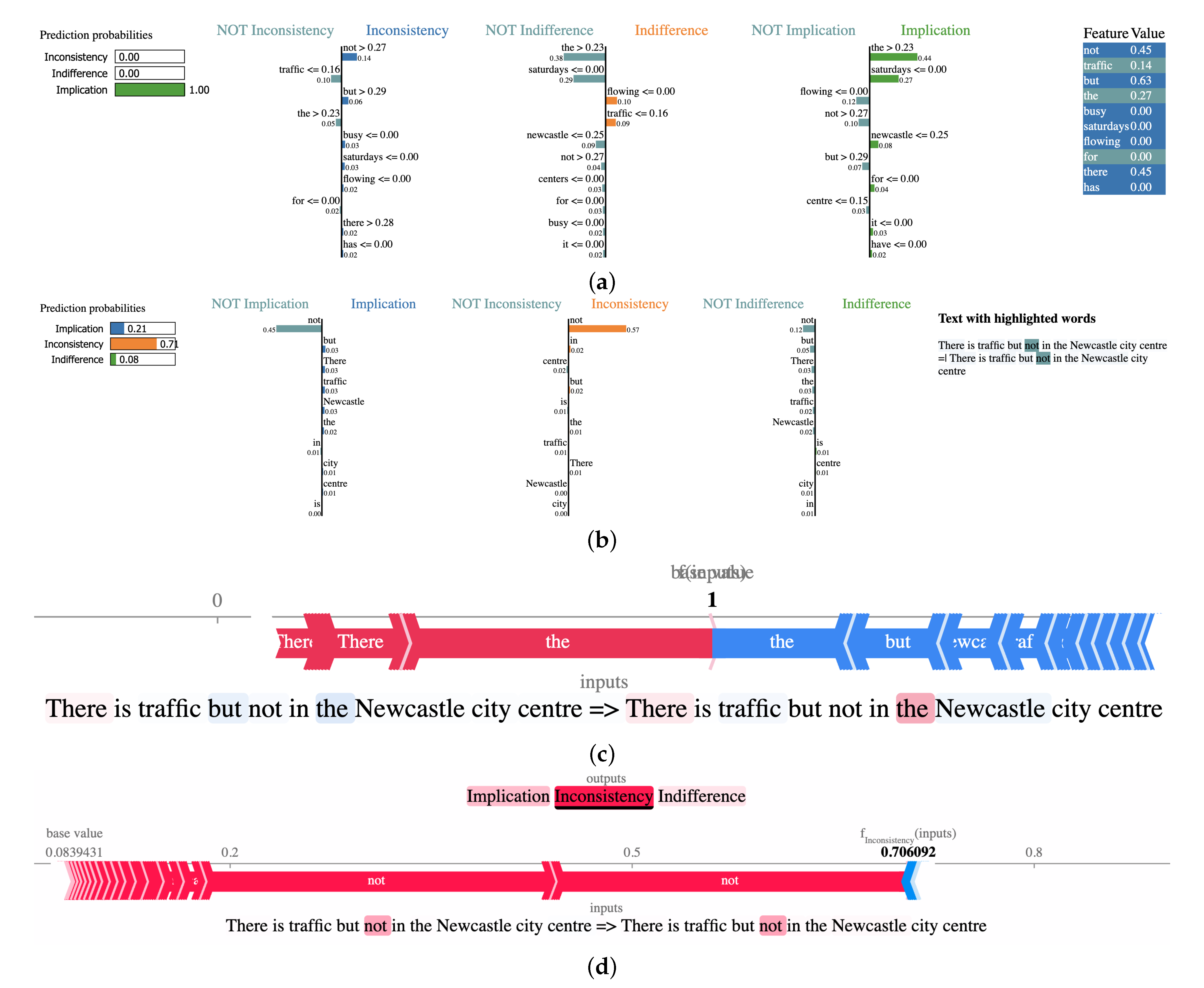

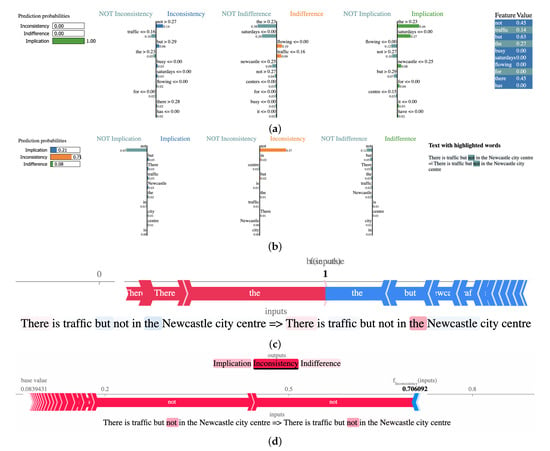

LIME plots display bar charts where each bar corresponds to a feature. The length of the bar illustrates the feature’s importance for that particular prediction, and the colour indicates the direction of influence towards a class. By examining the features with the longest bars and their associated colours, we can understand which factors were most influential in the model’s decision for that instance. Individual LIME plots are self-explanatory. However, when comparing plots across different sentences and models (Figure 10a,b), legibility would have improved if the order and the colour of the classes had been fixed.

Figure 10.

LaSSI explanations for indifference and implication between sentence 2 (“There is traffic but not in the Newcastle city centre”) and sentence 11 (“Newcastle has traffic but not in the city centre”). The colour intensity within the feature value table in (a) and of the highlighted words in (b) refers to how strongly the words influence the classification outcome. The colours within the LIME graphs indicate the class, as given by the prediction probabilities legend. For the SHAP graphs ((c) and (d)), the colour intensity of the highlighted words in the graph caption refers to their influence on the classification outcome; red and blue refer to an increase and a decrease in the prediction value, respectively. (a) LIME explanation using TF-IDFVec+DT, highlighting the word “the” in both NOT Indifference and Implication, leading to 100% confidence for an Implication classification. (b) LIME explanation using DistilBERT+Train, highlighting the word “not” as leading towards NOT Implication and Inconsistency, ultimately resulting in an Inconsistency classification. (c) SHAP explanation using TF-IDFVec+DT, highlighting the model’s confidence in the word “the” leading to an Implication classification (1 for the image above). (d) SHAP explanation using DistilBERT+Train, highlighting the model’s confidence in the word “not”, leading to Inconsistency.