Abstract

In supervised fine-tuning (SFT) for Text2SQL tasks, particularly for databases with numerous tables, encoding schema features requires excessive tokens, escalating GPU resource requirements during fine-tuning. To bridge this gap, we propose LR-SQL, a general dual-model SFT framework comprising a schema linking model and an SQL generation model. At the core of our framework lies the schema linking model, which is trained on a novel downstream task termed slice-based related table filtering. This task dynamically partitions a database into adjustable slices of tables and sequentially evaluates the relevance of each slice to the input query, thereby reducing token consumption per iteration. However, slicing fragments destroys database information, impairing the model’s ability to comprehend the complete database. Thus, we integrate Chain of Thought (CoT) in training, enabling the model to reconstruct the full database context from discrete slices, thereby enhancing inference fidelity. Ultimately, the SQL generation model uses the result from the schema linking model to generate the final SQL. Extensive experiments demonstrate that our proposed LR-SQL reduces total GPU memory usage by 40% compared to baseline SFT methods, with only a 2% drop in table prediction accuracy for the schema linking task and a negligible 0.6% decrease in overall Text2SQL Execution Accuracy.

1. Introduction

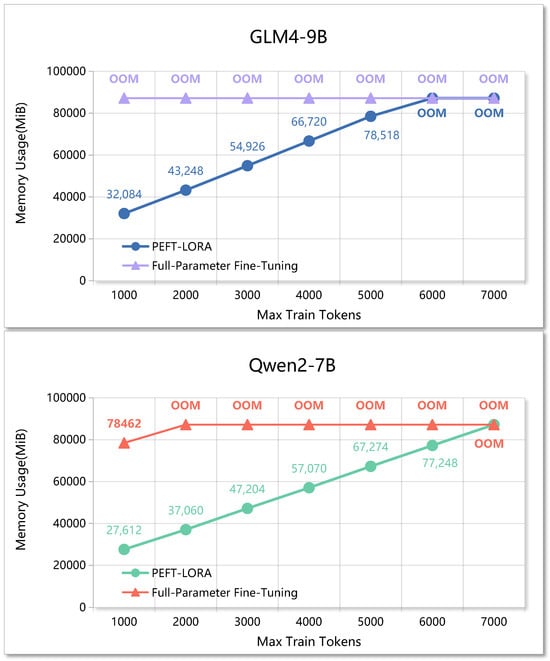

Text2SQL is the process of translating natural language questions into executable SQL queries, enabling users to access and analyze data without learning query languages and bridging the gap between human intent and structured database retrieval. Given a natural language query and the schema of the target database, which includes its tables, columns, data types, and relationships, the Text2SQL system identifies the relevant tables and columns, infers the logical reasoning steps, and generates an SQL statement that is both syntactically correct and semantically faithful to the question. Text2SQL has evolved from early embedding-based approaches [1,2,3], which encode questions and database schemas into a shared semantic space before symbolic decoding, to large language model (LLM)-based approaches [4,5] that directly generate SQL in a sequence-to-sequence manner. Embedding-based methods perform well on small or moderately complex databases but struggle to generalize to large schemas or capture deeper semantic relations, whereas LLM-based methods leverage broad knowledge and reasoning abilities from large-scale pretraining to achieve higher accuracy and stronger adaptability to schema variations. However, the reliance of Text2SQL on commercial LLMs [6,7,8,9] limits its applicability in security-sensitive scenarios, underscoring the growing need for open-source models that can be deployed on premises and integrate advanced architectures with privacy-preserving capabilities to enable practical and efficient Text2SQL systems. To bridge the gap between open-source models and commercial counterparts in pretraining data coverage, and to enable open-source models to form richer connections within complex database schemas, supervised fine-tuning (SFT) provides an effective means of knowledge injection. By compensating for the absence of task-specific pretraining data, SFT can transform large language models into domain specialists. However, full-parameter fine-tuning requires updating each parameter in the LLM. As shown in Figure 1, even for the Qwen2-7B model with an input length of 1000 tokens and a batch size of 1, the memory footprint reaches 78,462 MiB, which is close to the 80 GB single-card limit of commercial GPUs. This leads to the first challenge: how to train an LLM to capture database characteristics with limited GPU memory.

Figure 1.

The relationship between the number of tokens and GPU memory usage under a fine-tuning batch size of one.

To address the substantial GPU memory demands of SFT in large-scale LLMs, parameter-efficient fine-tuning (PEFT) methods [10,11] have emerged as the preferred strategy. PEFT reduces both memory usage and computation cost by adjusting only a small subset of parameters, such as low-rank adapters, bias terms, or projection matrices, while keeping the remainder frozen. Recent Text2SQL frameworks, such as DTS-SQL [12] and DB-GPT [13], employ LoRA [11] to fine-tune large language models, thereby establishing connections among the input question, the database schema, and the resulting SQL query. However, as databases increase in size and complexity with a growing number of tables [14], the token budget required to encode schemas and associated metadata grows rapidly. As illustrated in Figure 1, even when PEFT removes most of the parameter update overhead, the long token sequences generated during schema encoding remain the dominant source of GPU memory consumption in fine-tuning. This leads to the second challenge: how to control the number of input tokens to further reduce GPU memory usage during fine-tuning.

Therefore, efficient token management during fine-tuning is crucial for alleviating memory bottlenecks. To control input token length, short-context-window LLMs [15] typically address overlength inputs through long-text information extraction [16,17] or sliding-window inference [18], ensuring that essential content fits within the limited context window. Motivated by this, we compare two fine-tuning strategies for Text2SQL: (1) DB-GPT [13], which directly establishes the relationship among the question, database schema, and SQL in a single generation step, and (2) DTS-SQL [12], which decomposes the task into two subtasks—schema linking, to connect the question with relevant tables, and SQL generation, to produce the query from the linked schema. Our analysis shows that DB-GPT’s one-step generation makes it difficult to integrate summarization-based token control for schema compression. In contrast, DTS-SQL’s schema linking stage first filters relevant tables before SQL generation, allowing the schema to be split into multiple segments (slices). The LLM then examines each slice to determine whether relevant tables exist, iterating until all slices are processed and the complete relevant schema elements are obtained. The selected schema elements are then passed to the fine-tuned SQL generation model. Building on this design, we extend DTS-SQL into a low-memory supervised fine-tuning framework that scales effectively to increasingly large schemas. Although this slicing strategy reduces the usage of training-time memory, it also introduces a problem: independently processing slices can obscure the global relational structure of the database, making it difficult for the model to reconstruct a holistic view during inference. To mitigate this, we integrate the Chain-of-Thought (CoT) reasoning paradigm [19,20] into supervised fine-tuning, enabling intermediate reasoning results to be carried over across slices.

Our proposed LR-SQL framework operates as follows: For a given database, we first construct schema slices based on foreign key relationships among its tables. We then design a fine-tuning task for schema linking named slice-based related table filtering in which the LLM selects relevant tables from each slice. To maintain a global understanding of the database despite slice-wise processing, we integrate CoT prompts during training, enabling reasoning results accumulated from all previously processed slices to inform the inference on the current slice. Finally, the fine-tuned SQL generation model produces executable queries from the selected schema elements. In summary, our contributions are as follows.

- (1)

- Low-memory Text2SQL fine-tuning framework. We develop a general supervised fine-tuning framework for Text2SQL under low-GPU-memory scenarios, which includes both the schema linking model and the SQL generation model. Our framework is publicly available at https://github.com/hongWin/LR-SQL (accessed on 25 August 2025).

- (2)

- Large-scale benchmark construction. We construct a large-scale dataset, based on the Spider dataset, to simulate Text2SQL tasks in large- and medium-scale databases, closely reflecting real-world scenarios.

- (3)

- Schema slicing for efficient linking. We propose an innovative schema linking strategy that decomposes databases into multiple slices with adjustable token capacities, enabling flexible adaptation to resource constraints.

2. Related Work

2.1. Fine-Tuning-Based Text2SQL Methods

Existing Text2SQL fine-tuning approaches can be broadly categorized into single-model fine-tuning and multi-model fine-tuning.

Single-model fine-tuning: The creators of DB-GPT [13] combine multiple PEFT techniques to train a single SQL generation model that captures the relationship between schema information, natural language questions, and SQL queries. They also evaluate several open-source LLMs on Text2SQL tasks and establish a low-resource Text2SQL fine-tuning benchmark. Sun et al. [21] fine-tune a proprietary SQL generation model for the power grid domain, demonstrating the effectiveness of supervised fine-tuning in domain-specific industrial applications. Hong et al. [22] construct a preference dataset enabling interaction between an SQL generation model and a database benchmark and subsequently apply direct preference optimization [23] to continually refine the model. More recent studies [24,25] enhance the CoT reasoning capabilities of SQL generation models using reinforcement learning.

Multi-model fine-tuning: The creators of Sql-palm [26] and DTS-SQL [12] and Shen et al. [27] establish supervised fine-tuning pipelines primarily targeting SQL generation. Among them, Sun et al. [21] and Pourreza et al. [12] fine-tune two models—a schema linking model and an SQL generation model—where the schema linking model extracts schema elements relevant to the question, thereby reducing input noise for the SQL generation stage. In contrast, Shen et al. [27] fine-tune three models: a table prediction model, a column prediction model, and an SQL generation model.

However, the aforementioned fine-tuning methods do not address scenarios involving very large databases, where constructing the relationship between a question and the database requires the full schema as input. This forces the LLM to encode a large number of tokens, leading to substantial GPU memory consumption during fine-tuning. To overcome this challenge, our approach dynamically controls the amount of context included in each training batch, enabling efficient fine-tuning of a private database management assistant tailored to specific needs.

2.2. Low-Resource Training Based on PEFT

Parameter-efficient fine-tuning methods focus on selectively updating only a small subset of parameters in the target model during fine-tuning. As a representative PEFT approach, LoRA [11] freezes the pretrained model parameters and inserts trainable low-rank decomposition matrices into specific weight matrices, thus greatly reducing the number of trainable parameters and memory requirements while preserving the original model knowledge. To further push the limit of parameter compression, LoRA-FA [28] trains only the downsampling layers of LoRA, while FourierFT [29] compresses the trainable parameter volume even more aggressively. However, GPU memory consumption during fine-tuning is not solely determined by the number of trainable parameters: excessive compression can even harm final model performance. The floating-point precision of model parameters is another critical factor that influences both the model size and the memory cost during training. For example, QLoRA [30] combines 4-bit quantization with LoRA to further reduce fine-tuning memory usage, while APIQ [31] pushes this further by quantizing floating-point precision down to 2 bits. In parallel, other studies focus on token-level optimization. LongLoRA [32] employs short-shift attention to split long sequences into shorter ones for parallel computation, while Simoulin et al. [33] selectively train only informative tokens. However, current open-source LLMs exhibit diverse architectural designs, with significant differences in transformer layer implementation, normalization placement, and parameter partitioning, which impose structural constraints on the reusability of PEFT methods [34,35]. For example, the approaches in [32,33] are only compatible with specific architectures such as LLaMA2 [15]. Our method overcomes this limitation by designing new downstream tasks that take advantage of the strengths of these approaches while remaining compatible with diverse model architectures in the Text2SQL setting.

2.3. Short-Context-Window Handling for Long Contexts

Although recent open-source models [36,37] can process texts containing tens of thousands of tokens, fine-tuning them on long-context inputs still demands substantial GPU resources [38]. Guiding short-context-window LLMs to perform long-text reading comprehension is emerging as an effective strategy to control the number of input tokens. For example, Li et al. [39] construct concise representations by identifying and pruning redundant information. Recomp [17] uses two-stage retrieval: an extractive compressor distills question-relevant content, followed by a generative compressor that produces the final answer, effectively limiting the input length per query. ReadAgent [40] segments the contexts into semantically coherent episodes, compresses them into gist memories to preserve global narrative context, and selectively retrieves original pages to recover critical details. Similarly, SEGMENT+ [16] divides text into segments, evaluates the relevance of each segment to the query with the LLM, and synthesizes summaries of useful segments for the final answer. MEMWALKER [41] builds a hierarchical memory tree by summarizing chunks into nodes and recursively integrating them into higher-level summaries; at the query stage, the LLM traverses the tree to efficiently retrieve the most relevant segments. In particular, these long-text reasoning approaches share a common strategy: segmenting the text, summarizing each segment, and then aggregating the summaries to produce a final response. Inspired by how short-context-window LLMs handle long-text input, we adopt a similar approach for Text2SQL schema linking. Specifically, we partition the database into slices, allow the LLM to extract relevant tables from each slice, and then consolidate the extracted information for the SQL generation model to produce the final query. This approach effectively controls the number of tokens per LLM input, reducing GPU memory usage at the token level during fine-tuning.

3. Methodology

We first analyze two supervised fine-tuning strategies for Text2SQL tasks: (i) end-to-end SQL generation and (ii) schema linking-assisted SQL generation.

In the first strategy, end-to-end SQL generation fine-tuning [13] directly learns the mapping between natural language questions and the corresponding SQL statements. The fine-tuning objective is given in function (1):

function (1) trains the SQL generation model to map each question to the corresponding ground-truth SQL , given the complete table set S of the target database D. Here, denotes trainable parameters and Loss is the entropy loss. Since S must be provided in full during fine-tuning, this approach incurs high GPU memory usage.

The second strategy, schema linking-assisted SQL generation [12], decomposes the Text2SQL task into two subtasks: schema linking and SQL generation. The schema linking fine-tuning objective is given in function (2):

function (2) trains the schema linking model to predict the set of tables and their contents that satisfy the SQL query requirements , given the database D and all table descriptions S. The SQL generation stage then uses the schema linking output and related tables from the target SQL to construct its fine-tuning object, as expressed in function (3):

function (3) trains the SQL generation model to produce SQL queries matching based on the query and the descriptions of relevant tables from database D. The union combines the predictions of the schema linking model with the ground-truth tables , which improves generalization and error correction compared to using alone [14].

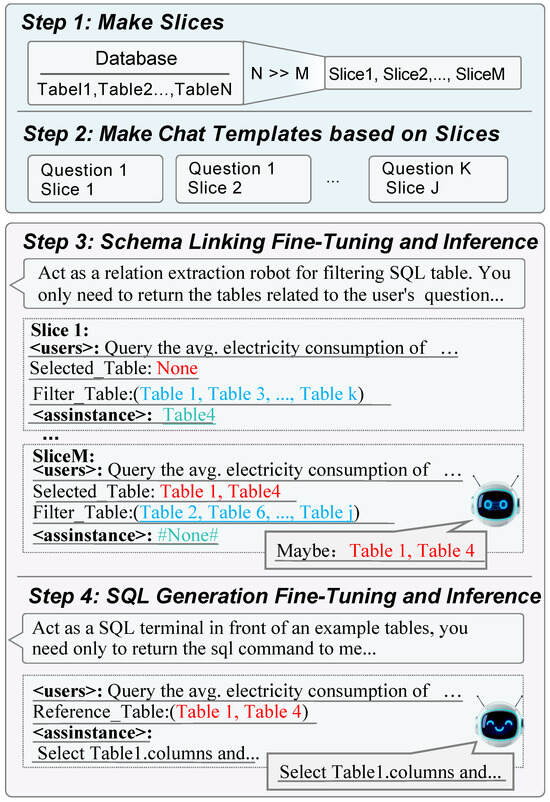

Comparing the two categories, the objective in function (3) requires significantly fewer tokens during fine-tuning, as it only involves the union of relevant table subsets , whereas function (1) requires the entire schema S. In strategy (ii), the main computational bottleneck arises from schema linking (2), mainly due to the large context size of S. During fine-tuning, a single training sample containing the entire database schema S substantially increases GPU memory usage, which may result in excessive hardware capacity. Since the schema linking only needs to predict tables relevant to the query, processing the entire schema at once is unnecessary. Instead, S can be partitioned into smaller segments (slices), each forming an independent training instance, until all tables in the database are covered. Therefore, we propose the LR-SQL framework, whose overall workflow is illustrated in Figure 2. The first key challenge in LR-SQL is designing an effective partitioning strategy that balances GPU memory constraints while preserving the critical relationships among tables in the database.

Figure 2.

The overall framework of LR-SQL.

3.1. Slice Construction

The core idea of splitting the entire database for partial fine-tuning is to ensure that the relationships between table structures are preserved within each limited input context while enabling the model to recover its understanding of the complete database during inference. Our first focus is on how to effectively disassemble the database. Inspired by the concept in [16,17,40], we control the context length for each fine-tuning iteration in the schema linking task and collect the final answer through a fragmented question-answering process during inference. Based on the observation in [42] that tables connected via foreign keys tend to appear more frequently in join queries, we aim to group such tables within the same slice whenever possible.

Accordingly, we first define the formal expression of table relationships in the database as follows.

As shown in function (4), we categorize all tables in the database into two distinct groups. The set contains groups of tables interconnected through foreign keys, expressed as , where denotes the i-th correlation group, meaning that every table in is linked by the i-th foreign key or its associated foreign keys. We define , where includes the table name and all its columns in the i-th correlation group, formally written as , where denotes the k-th column in the j-th table of the i-th correlation group and represents a foreign key correlation. The second set, , contains tables without foreign key connections, defined as , where each table in this set comprises its table name and all columns, formally expressed as , where denotes the p-th column in the o-th table.

By constructing and , we define the minimum granularity w of the table information for supervised fine-tuning. The construction procedure of the slice set W is outlined in Algorithm 1. To ensure the integrity of foreign key relationships, the algorithm first processes the groups with foreign key references . Specifically, for each group , the corresponding table schema is encoded, and the number of tokens produced by the encoder is counted. This token count is then added to the accumulated token length of the current slice and compared against the predefined maximum length of the slice . If the total length does not exceed , the table is appended to the current slice. Otherwise, the current slice is finalized and stored in the slice collection W, and a new slice is initialized with the current table. After all foreign key-related groups are processed, the algorithm proceeds to handle the non-referenced groups in a similar manner. Unlike the foreign key groups, these only require checking whether the current table can fit into the existing slice; if not, a new slice is created. Through this procedure, the database is reorganized and partitioned into a set of coherent table slices W. The relationship between tables and slices can be expressed as follows:

where M denotes the total number of slices and represents the j-th constructed slice. is the encoding function that maps natural language text to its machine-readable token representation, and denotes the function that counts the total number of tokens. The detail performemce on random slicing and foreign key slicing is deploy in Appendix A.

| Algorithm 1 Constructing the slice set W |

| Require: , , , Ensure: Slice set W 1: Initialize , 2: while do 3: while do 4: if then 5: 6: else 7: , 8: 9: end if 10: end while 11: end while 12: while do 13: if then 14: 15: else 16: , 17: 18: end if 19: end while 20: return W |

3.2. Fine-Tuning on the Slice-Based Related Table Filtering Task

Based on the set of slices W, we construct a schema linking the fine-tuning task with token quantity constraints: we submit the build question templates, slices, and relevant tables to the LLM for fine-tuning. The objective for model fine-tuning is constructed as follows.

where N represents the total number of questions contained in the dataset. stands for the j-th slice and M indicates the total number of slices. denotes the target table names for slice ; if no tables related to the question are found within that slice, the special token “#None#” is assigned. During the inference stage, once the predictions for all slices related to the question are complete, a definitive table prediction result is derived.

When using function (6) to achieve the objectives of the task, the model faces a challenge: It can only identify tables potentially relevant to the query within each slice based on current information during the inference process. However, the table information inferred from previous slices may contribute to partial solutions to the overall problem, indicating that earlier conclusions could positively impact future decisions.

The method that incrementally breaks down the problem and combines the reasoning results in each step is known as CoT [19]. While CoT stimulates the model’s inherent knowledge, the model lacks relevant knowledge about the private database before fine-tuning. Therefore, during supervised fine-tuning, we instill database knowledge into the model along with CoT reasoning capabilities, allowing the model to recognize short-term connections between the current slice and previous slices, as well as long-term relationships between each discrete slice and the original intact database. We construct the final objective for supervised fine-tuning as follows:

Based on (7), for the same problem , we integrate the information from the target table covered by the first j − 1 slices into the training objective of the j-th slice. By constructing optimal objectives for different problems across different slices, we allow the model to learn the relationship between the slices and the problems while injecting the CoT reasoning.

4. Experiments

4.1. Dataset Construction

To build a large-scale dataset that simulates the real-world scenario, we use the Spider [43] training set and expand it by curating 254 distinct tables from the original 164 databases, filtering out duplicates. This forms Spider-Large, comprising 4030 question–answer pairs divided into training and validation sets in a 9:1 ratio (3647 for training and 383 for evaluation). During our empirical study, we observed that encoding information from 256 tables resulted in a very high token count, and memory requirements for PEFT on a medium-scale language model (batch size of 1) exceeded the limits of a single 80 GB GPU. To evaluate LR-SQL and the baseline methods, we randomly sampled 172 Spider-Large tables to create Spider-Medium, containing 1914 pairs of questions and answers, also divided 9:1 into 1736 training and 178 validation instances.

4.2. Experimental Setup

Base models: Our framework incorporates three open-source models: ChatGLM4 9B [44], Qwen2 7B [45], and DeepSeek 7B [46]. The DeepSeek model is limited to a context length of 4096 tokens, restricting its ability to manage conversational input up to this length. In contrast, both ChatGLM4 9B and Qwen2 7B demonstrate the capacity to handle context lengths exceeding a hundred thousand tokens.

Metrics. Our method includes two distinct models: a schema linking model and an SQL generation model. The schema linking model is evaluated using four key metrics for table prediction: total accuracy (TotalAcc), filtered accuracy (FilteredAcc), average precision (AvgPre), and average recall (AvgRecall) [12]. Total accuracy measures exact table matching, where the predicted tables align perfectly with the query targets. Filtered accuracy assesses table coverage, ensuring that predicted tables include all query targets. The SQL generation model employs two main metrics: Execution Accuracy (EX) and Logical Form Accuracy (EM) [43]. EX requires predicted SQL results to match standard SQL queries exactly. EM evaluates the similarity between generated and annotated SQL. GPU_usage denotes the maximum memory consumption (in MiB) required to run the full Text2SQL model. Inference time refers to the total time (in seconds) needed to complete inference on the validation set.

Hyperparameter Settings. All experimental procedures are executed on a solitary A800-80G GPU, using LoRA layers for supervised fine-tuning. Our hyperparameter tuning builds upon the fine-tuning settings of the baseline DTS-SQL [12], followed by a targeted search to identify optimal values (see Table 1). For LoRA, we test ranks {32, 64, 128} and scaling factors , selecting rank 64 and to balance adaptation capacity and memory usage. LoRA dropout is tuned over {0.05, 0.1, 0.2}, with 0.1 providing effective regularization. We explore learning rates {, , }; results in faster and more stable convergence. Weight decay values {0.0, 0.01, 0.05} show that 0.01 effectively reduces overfitting. Among learning rate schedulers (linear, cosine, and constant), cosine decay achieves smoother convergence and better final performance. Warmup ratios {0.0, 0.01, 0.05} indicate that 1% warmup best avoids early gradient spikes. Gradient clipping norms {0.1, 0.3, 0.5} suggest that 0.3 balances stability and efficiency. The final configuration (Table 1) represents the best trade-off among performance, stability, and resource usage.

Table 1.

Hyperparameter search space. Best-performing values are in bold.

4.3. Schema Linking Task Evaluation

To show the efficacy of LR-SQL, we conduct a comparison with three distinct approaches to predict database tables in two datasets. In the table, we use “-” to represent cases where experimental results could not be obtained.

Baselines.Zero-shot: This method transfers question-answering templates to the model during inference, stimulating the model’s existing knowledge while enhancing its ability to follow instructions. Compromise: This is a highly streamlined supervised fine-tuning strategy that simply correlates questions, table names, and corresponding answers. DTS-SQL [12]: This is a methodology that establishes connections between questions, comprehensive database contents, and table or column names.

Hyperparameter setting. In all the experiments, we configure the total slice count by adjusting , represented as total (w) = total (), to 8 for the GLM4 and Qwen2 models. Specifically, we define total(w) as the total number of slices used during training, while total () is defined as the total number of slices used during inference. For the DeepSeek model, we assign the slice number as total(w) = total() = 11 on Spider-Large and total(w) = total() = 8 on Spider-Medium. To facilitate a more accurate comparison of memory usage during supervised fine-tuning, we set the training batch size to 1 for the DTS-SQL approach and to 2 for both the compromise and LR-SQL during the training phase. The ultimate table prediction outcomes for each model, employing the different methods, are detailed in Table 1.

Results and Analysis. As shown in Table 2, the zero-shot setting consistently yields the lowest accuracy across both Spider-Large and Spider-Medium, confirming that relying solely on pretrained knowledge without schema-aware fine-tuning remains insufficient for complex Text2SQL tasks. Although zero-shot models achieve moderate recall in some cases, their precision is generally poor, indicating that the extracted tables often contain irrelevant candidates. The compromise strategy, which establishes a minimal link between questions and table names while omitting column-level context, demonstrates a clear improvement in accuracy over zero-shot. Its low GPU footprint makes it suitable for constrained environments; however, the absence of fine-grained schema information limits its scalability to large and complex databases, where performance improvements are restricted. DTS-SQL achieves the highest overall accuracy among baselines by effectively leveraging comprehensive schema representations to bridge questions and corresponding tables. Nevertheless, its reliance on encoding entire database structures leads to a substantial increase in token counts during fine-tuning, which in turn drives GPU memory usage to levels that can cause out-of-memory or out-of-context-window errors, particularly in Spider-Large. This constraint limits its applicability to high-resource settings. In contrast, the proposed LR-SQL achieves accuracy that is comparable to, and in some cases approaching, that of DTS-SQL while substantially reducing GPU memory requirements. For example, in Spider-Large, GLM4 (LR-SQL) reaches 91.38% total accuracy with 27% lower GPU usage compared to GLM4 (compromise) and avoids the memory issues encountered by DTS-SQL. This demonstrates that the dual-model architecture of LR-SQL, combined with slice-based schema linking, not only preserves schema awareness but also provides a practical solution for large-scale Text2SQL tasks under limited computational budgets. Overall, these results validate the effectiveness of LR-SQL in balancing accuracy, efficiency, and scalability, making it a viable alternative to resource-intensive methods in real-world database environments.

Table 2.

Performance of table prediction for different models in the schema linking task. LR-SQL rows are highlighted in gray, and the best values for each attribute are underlined. OOW and OOM denote errors caused by exceeding the model’s maximum context window and GPU memory limits, respectively.

4.4. CoT Effectiveness Evaluation

To validate the effectiveness of our proposed CoT-accompanied training, we conducted comparative experiments on three approaches. These are the no_CoT fine-tuning method based on function (6), the method that incorporates CoT during the inference phase while also being based on function (6) in fine-tuning, depicted as CoT, and the CoT-accompanied fine-tuning based on function (7), depicted as CoT_Injection.

Results and Analysis. As shown in Table 3, CoT_Injection consistently outperforms no_CoT across all three models and both dataset scales, with improvements in total accuracy reaching up to +5.22% (DeepSeek and Spider-Large) and +2.81% (GLM4 and Spider-Medium). This confirms that explicitly integrating CoT into the training process helps the model internalize multi-step reasoning patterns, rather than relying on heuristic reasoning during inference. In contrast, applying CoT only during inference generally fails to match the performance of no_CoT, especially in Qwen2 on Spider-Large, where accuracy drops by over 13%. This suggests that without prior exposure to structured reasoning during training, the model may misinterpret intermediate steps, leading to reasoning drift and degraded final predictions. Notably, CoT_Injection also provides modest but consistent gains in filtered accuracy, precision, and recall, indicating that the improvement is not merely due to random chance or dataset bias but reflects a genuine enhancement in both understanding and execution of multi-step queries. This highlights the importance of aligning reasoning strategies between training and inference to ensure stable generalization.

Table 3.

Experimental analysis of Chain-of-Thought injection effectiveness. LR-SQL rows are highlighted in gray, and the best values for each attribute are underlined.

4.5. SQL Generation Evaluation

After the schema linking stage, the SQL generation model is required to produce the final executable query. Our goal for LR-SQL is to reduce GPU memory consumption during supervised fine-tuning without sacrificing query generation accuracy.

Baselines. We compare LR-SQL with three representative approaches. The first, zero-shot, uses handcrafted prompts to directly generate SQL without task-specific fine-tuning. The second, DTS-SQL [12], splits Text2SQL into table selection and SQL generation stages, fine-tuning each stage separately. The third, DB-GPT [13], maps natural language questions and database schemas directly to SQL queries in an end-to-end manner.

Results and Analysis. The results in Table 4 reveal several key findings. In both Spider-Large and Spider-Medium, LR-SQL achieves EX and EM scores comparable to those of DTS-SQL and higher than those of DB-GPT, despite using substantially less GPU memory (e.g., vs. for DTS-SQL). DTS-SQL fails to run on Spider-Large due to out-of-memory errors, whereas LR-SQL maintains stable inference, demonstrating its scalability advantage for large-schema databases. Compared with zero-shot prompting, LR-SQL yields improvements of more than in EX and in EM in Spider-Large, confirming the need for fine-tuning for complex schema-aware generation. The slight EM gap between LR-SQL and DTS-SQL on Spider-Medium suggests that while table–SQL decoupling benefits accuracy, our token-constrained schema linking achieves a better balance between efficiency and effectiveness, making it more suitable for resource-constrained deployments.

Table 4.

The final results of using different supervised fine-tuning methods for Text2SQL. LR-SQL rows are highlighted in gray, and the best values for each attribute are underlined.

Overall, these results validate that two-stage fine-tuning with token-efficient schema linking not only reduces GPU requirements but also sustains high SQL generation performance, outperforming end-to-end SFT in both robustness and scalability.

4.6. Slice Token, GPU Memory, and Performance Evaluation

In LR-SQL, the GPU memory consumption during training can be effectively controlled by adjusting the parameter. Different sizes correspond to varying amounts of table information encapsulated within each slice, which in turn can influence both training efficiency and inference performance. Table 5 presents the results of the experiments carried out on the GLM4 model, which achieved the best overall performance, showing how different granularities affect memory usage, inference time, and table prediction accuracy.

Table 5.

Experiment on the impact of using slices with different granularities on GPU memory and table prediction accuracy during fine-tuning. The best values for each attribute are underlined.

Results and Analysis. The results in Table 5 indicate that by tuning , LR-SQL can flexibly balance GPU memory requirements and inference efficiency. In particular, smaller values reduce memory usage but may slightly increase inference time due to the larger number of slices to process. Despite this trade-off, the filtered accuracy metric remains relatively stable across different slice granularities, demonstrating that the model can maintain high table prediction precision even under reduced memory conditions. These findings suggest that offers a practical mechanism for adapting LR-SQL to hardware constraints without compromising prediction quality, providing a valuable guideline for deploying the model in resource-limited scenarios.

4.7. Slice Size During Inference Evaluation

During inference, we denote the constructed slice as . Varying the size of influences both the number of inference iterations and the predictive performance of the model. A smaller causes each slice to cover more table information, leading to an increased number of encoded tokens per slice. Consequently, different settings produce varying inference times and prediction accuracies. In all experiments, the setting of w remains consistent with that in Section 4.3.

Results and Analysis.Table 6 shows that reducing the number of slices () decreases the number of inference steps, resulting in shorter inference times. However, this efficiency gain comes at the cost of reduced performance in all evaluation metrics. In contrast, inference achieves the best performance when is set close to the size of the training slice w, indicating that alignment between the training and granularity of the inference slice allows the model to fully leverage the table representations learned while maintaining a reasonable inference speed.

Table 6.

Results of constructing slices with different granularities during inference.The best values for each attribute are underlined.

5. Conclusions

We propose LR-SQL, a supervised fine-tuning framework that innovatively reduces GPU memory consumption by focusing on the schema linking subtask within the Text2SQL pipeline. LR-SQL decomposes large-scale databases into manageable schema slices, enabling efficient training on limited hardware. By integrating Chain-of-Thought reasoning, LR-SQL effectively preserves global relational context across slices during training and inference, allowing the model to capture complex schema interactions without compromising accuracy. Experimental results demonstrate that LR-SQL achieves performance on par with state-of-the-art baselines while significantly lowering memory requirements. Its compatibility with parameter-efficient fine-tuning methods such as QLoRA further enhances its practicality.

From a managerial perspective, LR-SQL enables a cost-efficient and privacy-compliant deployment of Text2SQL models on resource-constrained hardware, thereby broadening accessibility for organizations with limited computational resources or stringent data governance requirements. In future work, we plan to use reinforcement learning with human feedback (RLHF) to further enhance the reasoning capabilities of the schema linking model, with the aim of improving the accuracy of schema element prediction, as well as investigating slice construction strategies based on query frequency.

Limitations. LR-SQL reduces GPU memory usage by decomposing large schemas into multiple slices, but this increases inference latency since results from different slices must be aggregated (e.g., 4.53 + 6.49 s vs. 2.87 s on Spider-Large compared with zero-shot). This trade-off between memory efficiency and latency can be partly mitigated through parallel inference, caching, or VLLM-based acceleration. In practice, LR-SQL has been applied to databases with up to 200–300 tables, achieving strong performance, while handling extremely large databases remains a topic for future work. Although slice-based processing may slightly increase training time, controlling the number of slices and leveraging multi-GPU parallelization allows a practical balance between accuracy, memory usage, and training/inference speed.

Author Contributions

Writing—original draft, W.W.; Visualization, Y.S.; Supervision, P.L. and C.D.; Project administration, Y.Z. and S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China (Grant No. 62071244).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Authors Yongpan Zhang, Pengwei Lu and Cheng Ding were employed by the company China Telecom Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

Appendix A. Ablation Study on Different Slicing Methods

To evaluate the effectiveness of the first step in LR-SQL, namely constructing data slices based on foreign key relationships, we conducted an ablation study on the Spider-Medium dataset, comparing random slicing with f foreign key slicing. All experimental settings follow those described in Section 4.3. The results are summarized in Table A1.

Table A1.

Performance comparison of Qwen2 and GLM4 on Spider-Medium with different slicing strategies (random vs. foreign key). Foreign key based slicing method rows are highlighted in gray.

Table A1.

Performance comparison of Qwen2 and GLM4 on Spider-Medium with different slicing strategies (random vs. foreign key). Foreign key based slicing method rows are highlighted in gray.

| Model | Slicing | TotalAcc | FilteredAcc | AvgPre | AvgRecall |

|---|---|---|---|---|---|

| GLM4 | Random | 91.57 | 93.82 | 97.61 | 96.44 |

| Foreign Key | 94.38 | 97.19 | 96.91 | 97.85 | |

| Qwen2 | Random | 84.27 | 87.08 | 94.94 | 92.37 |

| Foreign Key | 89.89 | 92.13 | 94.94 | 94.48 |

As shown in Table A1, slicing based on foreign key relationships consistently improves model performance compared to random slicing. For GLM4, foreign key slicing increases total accuracy and filtered accuracy by approximately 2.81% and 3.37%, respectively. These results suggest that incorporating foreign key information in the slicing process allows models to better capture relational structures across tables, leading to more accurate SQL predictions. Overall, the foreign key slicing strategy provides consistent performance improvements across different LLMs, demonstrating its effectiveness and practical utility in the LR-SQL preprocessing pipeline.

Appendix B. Further Experiment for QLORA

QLoRA [30] reduces the memory footprint of fine-tuning LLMs by quantizing pretrained weights to 4-bit precision while keeping them frozen and only training lightweight low-rank adapter matrices. This design eliminates the need to store full-precision gradients and optimizer states for the entire model, thereby lowering GPU memory consumption by an order of magnitude while maintaining performance close to full fine-tuning. Building on this idea, we integrate quantization into LoRA to further compress the GPU memory required for fine-tuning. We evaluate QLoRA on the Spider-Medium dataset using Qwen2, focusing on both the memory savings and the trade-off in model performance. Specifically, QLoRA is tested under two settings: (1) loading the pretrained weights at full precision (QLoRA No Quant.) and (2) loading them in 4-bit quantization (QLoRA Quant.). After training, the LoRA adapters are merged for inference. All experimental settings follow those described in Section 4.3.

The results are reported in Table A2. Compared to standard LoRA, QLoRA indeed reduces GPU memory usage, but the reduction is relatively modest. More importantly, quantization introduces a noticeable drop in performance. In particular, aligning the precision between training and inference proves essential: QLoRA (Quant.) consistently outperforms QLoRA (No Quant.), suggesting that the quantized training–inference pipeline maintains better consistency, while a mismatch between training and inference precision can severely degrade performance.

Table A2.

Comparison between LoRA and QLoRA on Spider-Medium with Qwen2. QLoRA results are reported with and without quantized loading. The best values for each attribute are underlined.

Table A2.

Comparison between LoRA and QLoRA on Spider-Medium with Qwen2. QLoRA results are reported with and without quantized loading. The best values for each attribute are underlined.

| Method | TotalAcc | FilteredAcc | AvgPre | AvgRecall | GPU Usage |

|---|---|---|---|---|---|

| LoRA | 89.89 | 92.13 | 94.94 | 94.48 | 38,374 |

| QLoRA (No Quant.) | 53.38 | 59.55 | 71.3 | 67.6 | 35,813 |

| QLoRA (Quant.) | 62.36 | 63.48 | 80.15 | 73.17 | 35,813 |

Appendix C. Further Experiment on Wiki-SQL Dataset

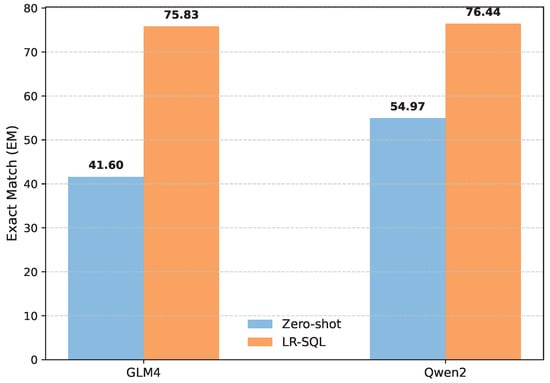

To evaluate the SQL generation capability of LR-SQL on different datasets, we further assessed the performance of the LR-SQL model on the Wiki-SQL benchmark. Figure A1 summarizes the EM results under both zero-shot and fine-tuned (LoRA) settings.

Figure A1.

Exact Match (EM) results on WikiSQL.

The results in Figure A1 indicate that fine-tuning the SQL generation model using LR-SQL significantly improves its performance over the base models. Specifically, both GLM4 and Qwen2 exhibit substantial gains in EM scores after LoRA-based adaptation, with Qwen2 achieving the highest performance. This shows that LR-SQL can effectively enhance SQL generation capabilities across different datasets, confirming the robustness and generalizability of its fine-tuning strategy. Furthermore, the improvements suggest that even strong pretrained LLMs benefit from lightweight, task-specific adaptation when applied to structured query tasks.

References

- Xiao, D.; Chai, L.; Zhang, Q.W.; Yan, Z.; Li, Z.; Cao, Y. CQR-SQL: Conversational Question Reformulation Enhanced Context-Dependent Text-to-SQL Parsers. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; pp. 2055–2068. [Google Scholar] [CrossRef]

- Zheng, Y.; Wang, H.; Dong, B.; Wang, X.; Li, C. HIE-SQL: History Information Enhanced Network for Context-Dependent Text-to-SQL Semantic Parsing. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; Muresan, S., Nakov, P., Villavicencio, A., Eds.; pp. 2997–3007. [Google Scholar] [CrossRef]

- Hui, B.; Geng, R.; Wang, L.; Qin, B.; Li, Y.; Li, B.; Sun, J.; Li, Y. S2SQL: Injecting Syntax to Question-Schema Interaction Graph Encoder for Text-to-SQL Parsers. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; Muresan, S., Nakov, P., Villavicencio, A., Eds.; pp. 1254–1262. [Google Scholar] [CrossRef]

- Shi, L.; Tang, Z.; Zhang, N.; Zhang, X.; Yang, Z. A Survey on Employing Large Language Models for Text-to-SQL Tasks. ACM Comput. Surv. 2025. [Google Scholar] [CrossRef]

- Li, H.; Zhang, J.; Liu, H.; Fan, J.; Zhang, X.; Zhu, J.; Wei, R.; Pan, H.; Li, C.; Chen, H. Codes: Towards building open-source language models for text-to-sql. Proc. ACM Manag. Data 2024, 2, 1–28. [Google Scholar] [CrossRef]

- Lee, D.; Park, C.; Kim, J.; Park, H. MCS-SQL: Leveraging Multiple Prompts and Multiple-Choice Selection For Text-to-SQL Generation. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; Rambow, O., Wanner, L., Apidianaki, M., Al-Khalifa, H., Eugenio, B.D., Schockaert, S., Eds.; pp. 337–353. [Google Scholar]

- Pourreza, M.; Rafiei, D. Din-sql: Decomposed in-context learning of text-to-sql with self-correction. Adv. Neural Inf. Process. Syst. 2024, 36, 36339–36348. [Google Scholar]

- Wang, B.; Ren, C.; Yang, J.; Liang, X.; Bai, J.; Chai, L.; Yan, Z.; Zhang, Q.W.; Yin, D.; Sun, X.; et al. MAC-SQL: A Multi-Agent Collaborative Framework for Text-to-SQL. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; Rambow, O., Wanner, L., Apidianaki, M., Al-Khalifa, H., Eugenio, B.D., Schockaert, S., Eds.; pp. 540–557. [Google Scholar]

- Dong, X.; Zhang, C.; Ge, Y.; Mao, Y.; Gao, Y.; Lin, J.; Lou, D. C3: Zero-shot text-to-sql with chatgpt. arXiv 2023, arXiv:2307.07306. [Google Scholar]

- Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-Efficient Transfer Learning for NLP. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; PMLR; Proceedings of Machine Learning Research. Volume 97, pp. 2790–2799. [Google Scholar]

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. Lora: Low-rank adaptation of large language models. ICLR 2022, 1, 3. [Google Scholar]

- Pourreza, M.; Rafiei, D. DTS-SQL: Decomposed Text-to-SQL with Small Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 8212–8220. [Google Scholar] [CrossRef]

- Xue, S.; Qi, D.; Jiang, C.; Cheng, F.; Chen, K.; Zhang, Z.; Zhang, H.; Wei, G.; Zhao, W.; Zhou, F.; et al. Demonstration of DB-GPT: Next Generation Data Interaction System Empowered by Large Language Models. Proc. VLDB Endow. 2024, 17, 4365–4368. [Google Scholar] [CrossRef]

- yu Zhu, G.; Shao, W.; Zhu, X.; Yu, L.; Guo, J.; Cheng, X. Text2Sql: Pure Fine-Tuning and Pure Knowledge Distillation. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 3: Industry Track), Albuquerque, NM, USA, 30 April–2 May 2025; pp. 54–61. [Google Scholar]

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288. [Google Scholar] [CrossRef]

- Shi, W.; Li, S.; Yu, K.; Chen, J.; Liang, Z.; Wu, X.; Qian, Y.; Wei, F.; Zheng, B.; Liang, J.; et al. SEGMENT+: Long Text Processing with Short-Context Language Models. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 16605–16617. [Google Scholar] [CrossRef]

- Xu, F.; Shi, W.; Choi, E. Recomp: Improving retrieval-augmented lms with compression and selective augmentation. arXiv 2023, arXiv:2310.04408. [Google Scholar] [CrossRef]

- Cao, B.; Cai, D.; Lam, W. InfiniteICL: Breaking the Limit of Context Window Size via Long Short-term Memory Transformation. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2025, Vienna, Austria, 27 July–1August 2025; Che, W., Nabende, J., Shutova, E., Pilehvar, M.T., Eds.; pp. 11402–11415. [Google Scholar] [CrossRef]

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837. [Google Scholar]

- Zhang, Z.; Yao, Y.; Zhang, A.; Tang, X.; Ma, X.; He, Z.; Wang, Y.; Gerstein, M.; Wang, R.; Liu, G.; et al. Igniting language intelligence: The hitchhiker’s guide from chain-of-thought reasoning to language agents. ACM Comput. Surv. 2025, 57, 1–39. [Google Scholar] [CrossRef]

- Sun, G.; Shen, R.; Jin, L.; Wang, Y.; Xu, S.; Chen, J.; Jiang, W. Instruction Tuning Text-to-SQL with Large Language Models in the Power Grid Domain. In Proceedings of the 2023 4th International Conference on Control, Robotics and Intelligent System, Guangzhou, China, 25–27 August 2023; pp. 59–63. [Google Scholar]

- Hong, Z.; Yuan, Z.; Chen, H.; Zhang, Q.; Huang, F.; Huang, X. Knowledge-to-SQL: Enhancing SQL Generation with Data Expert LLM. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Ku, L.W., Martins, A., Srikumar, V., Eds.; pp. 10997–11008. [Google Scholar] [CrossRef]

- Rafailov, R.; Sharma, A.; Mitchell, E.; Manning, C.D.; Ermon, S.; Finn, C. Direct preference optimization: Your language model is secretly a reward model. Adv. Neural Inf. Process. Syst. 2023, 36, 53728–53741. [Google Scholar]

- Stoisser, J.L.; Martell, M.B.; Fauqueur, J. Sparks of Tabular Reasoning via Text2SQL Reinforcement Learning. In Proceedings of the 4th Table Representation Learning Workshop, Vienna, Austria, 1 August 2025; Chang, S., Hulsebos, M., Liu, Q., Chen, W., Sun, H., Eds.; pp. 229–240. [Google Scholar] [CrossRef]

- Ma, P.; Zhuang, X.; Xu, C.; Jiang, X.; Chen, R.; Guo, J. Sql-r1: Training natural language to sql reasoning model by reinforcement learning. arXiv 2025, arXiv:2504.08600. [Google Scholar] [CrossRef]

- Sun, R.; Arik, S.Ö.; Muzio, A.; Miculicich, L.; Gundabathula, S.; Yin, P.; Dai, H.; Nakhost, H.; Sinha, R.; Wang, Z.; et al. Sql-palm: Improved large language model adaptation for text-to-sql (extended). arXiv 2023, arXiv:2306.00739. [Google Scholar]

- Shen, R.; Sun, G.; Shen, H.; Li, Y.; Jin, L.; Jiang, H. SPSQL: Step-by-step parsing based framework for text-to-SQL generation. In Proceedings of the 2023 7th International Conference on Machine Vision and Information Technology (CMVIT), Xiamen, China, 24–26 March 2023; pp. 115–122. [Google Scholar]

- Zhang, L.; Zhang, L.; Shi, S.; Chu, X.; Li, B. Lora-fa: Memory-efficient low-rank adaptation for large language models fine-tuning. arXiv 2023, arXiv:2308.03303. [Google Scholar]

- Gao, Z.; Wang, Q.; Chen, A.; Liu, Z.; Wu, B.; Chen, L.; Li, J. Parameter-efficient fine-tuning with discrete fourier transform. arXiv 2024, arXiv:2405.03003. [Google Scholar]

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. Qlora: Efficient finetuning of quantized llms. Adv. Neural Inf. Process. Syst. 2024, 36, 10088–10115. [Google Scholar]

- Liao, B.; Herold, C.; Khadivi, S.; Monz, C. ApiQ: Finetuning of 2-Bit Quantized Large Language Model. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 20996–21020. [Google Scholar] [CrossRef]

- Chen, Y.; Qian, S.; Tang, H.; Lai, X.; Liu, Z.; Han, S.; Jia, J. Longlora: Efficient fine-tuning of long-context large language models. arXiv 2023, arXiv:2309.12307. [Google Scholar]

- Simoulin, A.; Park, N.; Liu, X.; Yang, G. Memory-Efficient Fine-Tuning of Transformers via Token Selection. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 21565–21580. [Google Scholar] [CrossRef]

- Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online, Punta Cana, Dominican Republic, 7–11 November 2021; Moens, M.F., Huang, X., Specia, L., Yih, S.W.t., Eds.; pp. 3045–3059. [Google Scholar] [CrossRef]

- Li, X.L.; Liang, P. Prefix-Tuning: Optimizing Continuous Prompts for Generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–16 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; pp. 4582–4597. [Google Scholar] [CrossRef]

- Yang, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; Wei, H.; et al. Qwen2.5 technical report. arXiv 2024, arXiv:2412.15115. [Google Scholar] [CrossRef]

- Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. Deepseek-v3 technical report. arXiv 2024, arXiv:2412.19437. [Google Scholar]

- Gao, T.; Wettig, A.; Yen, H.; Chen, D. How to train long-context language models (effectively). arXiv 2024, arXiv:2410.02660. [Google Scholar] [CrossRef]

- Li, Y.; Dong, B.; Guerin, F.; Lin, C. Compressing Context to Enhance Inference Efficiency of Large Language Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 7–11 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; pp. 6342–6353. [Google Scholar] [CrossRef]

- Lee, K.H.; Chen, X.; Furuta, H.; Canny, J.; Fischer, I. A human-inspired reading agent with gist memory of very long contexts. arXiv 2024, arXiv:2402.09727. [Google Scholar] [CrossRef]

- Chen, H.; Pasunuru, R.; Weston, J.; Celikyilmaz, A. Walking down the memory maze: Beyond context limit through interactive reading. arXiv 2023, arXiv:2310.05029. [Google Scholar] [CrossRef]

- Silberschatz, A.; Korth, H.F.; Sudarshan, S. Database System Concepts; McGraw-Hill: Columbus, OH, USA, 2011. [Google Scholar]

- Yu, T.; Zhang, R.; Yang, K.; Yasunaga, M.; Wang, D.; Li, Z.; Ma, J.; Li, I.; Yao, Q.; Roman, S.; et al. Spider: A large-scale human-labeled dataset for complex and cross-domain semantic parsing and text-to-sql task. arXiv 2018, arXiv:1809.08887. [Google Scholar]

- GLM, T.; Zeng, A.; Xu, B.; Wang, B.; Zhang, C.; Yin, D.; Rojas, D.; Feng, G.; Zhao, H.; Lai, H.; et al. ChatGLM: A Family of Large Language Models from GLM-130B to GLM-4 All Tools. arXiv 2024, arXiv:2406.12793. [Google Scholar]

- Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Zhou, C.; Li, C.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2 technical report. arXiv 2024, arXiv:2407.10671. [Google Scholar]

- Bi, X.; Chen, D.; Chen, G.; Chen, S.; Dai, D.; Deng, C.; Ding, H.; Dong, K.; Du, Q.; Fu, Z.; et al. Deepseek llm: Scaling open-source language models with longtermism. arXiv 2024, arXiv:2401.02954. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).