Abstract

We compare the convergence balls and the dynamical behaviors of two efficient weighted-Newton-like equation solvers by Sharma and Arora, and Grau-Sánchez et al. First, ball convergence results for these algorithms are established by employing generalized Lipschitz constants and assumptions on the first derivative only. Consequently, the radii of convergence, computable error distances and the existence–uniqueness regions for the solution are discussed. Then, the complex dynamical behaviors of these solvers are compared by applying the attraction basin tool. It is observed that the solver suggested by Grau-Sánchez et al. has bigger basins than the method described by Sharma and Arora. Lastly, our ball analysis findings are verified on application problems and the convergence balls are compared. It is found that the method given by Grau-Sánchez et al. has larger convergence balls than the solver of Sharma and Arora. Hence, the solver presented by Grau-Sánchez et al. is more suitable for practical application. The convergence analysis uses only the first derivative, in contrast to the aforementioned studies, which rely on the seventh derivative even though it does not appear in these methods. The developed process can be used on other methods in order to increase their applicability.

Keywords:

Banach space; Fréchet derivative; basin of attraction; local convergence; convergence ball

MSC:

65H10; 65G99; 47H99; 49M15

1. Introduction

Let us consider two Banach spaces and . Suppose is a subset of , which is convex and open. We denote the set { linear and bounded operators} by . For a Fréchet derivable operator , define the equation

This equation regularly appears when physical, chemical and other scientific problems are modeled mathematically, and numerical methods are needed to solve it. This is a critical task, since analytical solutions to such equations are rarely available. Therefore, nonlinear equations are usually approached by an iterative method, through which an approximate solution can be found. Developing more accurate iterative approaches for approximating the solution of these equations is a considerable challenge in general. The conventional Newton’s iterative approach is the most often employed technique for this problem. Besides this, a number of algorithms have been suggested to boost the convergence rate of the classical solvers such as Newton’s [1] and Chebyshev–Halley’s [2], among others. Cordero and Torregrosa [3] proposed several variants of Newton’s solver of second, third and fifth convergence orders based on quadrature rules of fifth order for solving nonlinear equations. Two families of zero-finding iterative approaches to address nonlinear equations are presented in [4] by applying Obreshkov-like techniques [5], and Hueso et al. [20] developed a third-order and fourth-order class of predictor–corrector algorithms free from the second derivative to solve systems of nonlinear equations. By composing two weighted-Newton steps, Sharma et al. [6] constructed an efficient fourth-order weighted-Newton method to solve nonlinear systems. Cordero et al. [7] studied two bi-parametric fourth-order families of predictor–corrector iterative solvers by generalizing Ostrowski’s and Chun’s algorithms [8,9]. They used Newton’s solver as the predictor of the first family, and Steffensen’s method as the predictor of the second one. For solving systems of nonlinear equations, Sharma and Arora [10] designed Newton-like iterative approaches of the fifth and eighth orders of convergence.
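For orientation, the classical Newton iteration mentioned above can be sketched for a scalar equation as follows. This is only a minimal illustration: the function name `newton`, the tolerance, the iteration cap and the test equation e^x - 1 = 0 are assumptions made for this sketch and are not taken from the cited works.

```python
import math


def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Classical Newton iteration x_{k+1} = x_k - f(x_k) / f'(x_k) for a scalar equation.

    Returns the last iterate and the number of Newton steps performed.
    The stopping rule |f(x)| < tol is an assumption of this sketch.
    """
    x = x0
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x, k
        x = x - fx / df(x)
    return x, max_iter


# Hypothetical test problem: f(x) = exp(x) - 1 with the simple root x = 0.
root, steps = newton(lambda x: math.exp(x) - 1.0, lambda x: math.exp(x), x0=0.3)
print(root, steps)
```

The starting point 0.3 is chosen close to the root; how far a starting point may lie from the solution while still guaranteeing convergence is exactly the question that a ball convergence analysis addresses.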

In this study, we are interested in two sixth convergence order equation solvers proposed by Sharma and Arora [11] and Grau-Sánchez et al. [12], respectively. For a starting point , the iterative formula designed by Sharma and Arora is written as follows:

The solver constructed by Grau-Sánchez et al. can be presented as

where is a starter, . For these iterative procedures, the convergence theorems have been developed by applying the expensive Taylor’s formula and imposing constraints on the derivative of the seventh order. The scope of utilization of these solvers is restricted due to such convergence results based on derivatives of higher order. To demonstrate this, we choose

where and D is defined on . Then, it is noteworthy that the existing convergence theorems for (2) and (3) do not hold for this example, since is unbounded on V. In addition, these convergence results produce no statements on , the convergence ball and the precise location of . The ball analysis of an iterative formula is useful in the estimation of the radii of convergence balls and bounds on , and in determining the area of uniqueness for . It should be noted that the effects of ball convergence are extremely beneficial because they shed light on the challenging issue of selecting starting guesses. This motivates us to establish ball convergence of solvers (2) and (3) by applying conditions only on . Our work allows computation of the convergence radii and the estimates , and also provides an accurate location of .

The rest of the paper is organized as follows. The results of ball convergence for solvers (2) and (3) are established in Section 2. Section 3 deals with the comparison of attraction basins for these algorithms. In Section 4, numerical studies are performed. Finally, concluding remarks are given in Section 5.

2. Ball Analysis

First, it is convenient for the local convergence analysis of solver (2) to develop real parameters and functions. Let . Suppose the following:

- (1)

- Function has a least root for some function that is non-decreasing and continuous. Set .

- (2)

- Function has a least root for some function that is non-decreasing and continuous, and is defined as

- (3)

- Function has a least root . Set and .

- (4)

- Function has a least root for some functions , that are non-decreasing and continuous, with function defined as

- (5)

- Function has a least root . Set and .

- (6)

- Function has a least root , with defined as

Define parameter

It shall be shown that is a convergence radius for solver (2). By , we denote the closure of ball with center and of radius . We use the conditions (C) in the ball convergence of solver (2) provided that functions are as defined previously and is a simple root of D. Suppose the following:

- ()

- For all

  Set .

- ()

- For all

- ()

- for some to be determined later.

- ()

- There exists , satisfying

  Set .

Next, the ball convergence of solver (2) is presented.

Theorem 1.

Suppose that the conditions (C) hold. Then, iteration generated by solver with is well defined in ; remains in for all ; and . Moreover, the following assertions hold:

and

where the functions and the radius ρ were given previously. Furthermore, the only root of D in the set is .

Proof.

Assertions (6)–(8) are shown using induction on m. Let . Then, using (5) and (), we obtain

It then follows by (9), and the lemma due to Banach on linear invertible operators [4,13] that and

Notice that exist and we can write by () the first substep of solver (2) for :

showing and (6) for .

Similarly, we have from (), () and the third substep of solver (2) for :

showing and (8) for . Simply exchange by , respectively, in the previous calculations to complete the induction for (6)–(8). It then follows from the estimation

where that and .

Set for some with . Then, in view of () and (), we obtain

so, we conclude from the invertibility of T and the identity . □

Suppose functions have least root in denoted by , respectively.

Set ; and in conditions (C).

The definitions of functions are motivated by the estimates:

and similarly,

Hence, we arrive at the ball convergence result for solver (3).

Theorem 2.

Suppose that the conditions (C) hold for . Then, the conclusions of Theorem 1 hold for solver (3) with replaced by , respectively.

Remark 1.

The continuity condition

is used in existing works on higher-order iterative algorithms instead of the assumption.

. However, then, since , we obtain

This is a significant result because all earlier findings can be rewritten in terms of Γ since , which is a more accurate location of . This expands the radius of the convergence ball, tightens the upper error distances and helps in providing a better location of . It is worth considering the example for . Then, we obtain

and using Rheinboldt or Traub [13,14] (for ), we obtain ; using previous studies by Argyros [15,16] (for ), ; and with our proposed analysis, , so

3. Attraction Basins Comparison

We compare the complex dynamical behaviors of solvers (2) and (3) by applying the basin of attraction tool. For solving equations in , the methods (2) and (3) can be presented as follows:

Let the sequence stand for the sequence of iterates produced by an iterative algorithm starting from . The set of points constructs the attraction basin related to a zero of , where O indicates a second or higher degree polynomial with complex coefficients. The area × on is used with a grid of points to create attraction basins. Every point is regarded as a starting estimation, and solvers (11) and (12) are applied to seven different test functions. The point remains in the basin of a zero of a test function if . We paint the starter using a specific color associated with . Based on the number of iterations, we assign the light to dark colors for each initial guess . If is not a member of the attraction basin of any zero of the test polynomial, it is displayed in black color. If , then we stop the iteration procedure. Otherwise, we terminate the process after 400 iterations. The test polynomials are selected from [8,17,18]. The fractal pictures are constructed with the help of MATLAB 2019a.
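The procedure described above can be sketched in a few lines of Python. This is a hedged illustration, not the authors' MATLAB code: a plain Newton step stands in for one step of solvers (11) and (12), and the region [-2, 2] × [-2, 2], the 41 × 41 grid, the tolerance 1e-6 and the test polynomial z^2 - 1 are assumptions of this sketch. Only the cap of 400 iterations and the convention of painting non-convergent starters black come from the text. All function names are hypothetical.

```python
def newton_step(p, dp, z):
    """One Newton step; a stand-in for one full step of solver (11) or (12)."""
    return z - p(z) / dp(z)


def classify(z0, p, dp, zeros, step=newton_step, tol=1e-6, max_iter=400):
    """Return (index of the zero reached, iterations used).

    Index -1 means the starter is not assigned to any zero and would be painted black.
    """
    z = z0
    for k in range(max_iter):
        for idx, zero in enumerate(zeros):
            if abs(z - zero) < tol:
                return idx, k
        if dp(z) == 0:  # the step is undefined at a critical point
            return -1, k
        z = step(p, dp, z)
    return -1, max_iter


def basin_grid(p, dp, zeros, lo=-2.0, hi=2.0, n=41, **kwargs):
    """Classify every point of an n-by-n grid on [lo, hi] x [lo, hi]."""
    h = (hi - lo) / (n - 1)
    return [
        [classify(complex(lo + j * h, hi - i * h), p, dp, zeros, **kwargs)[0]
         for j in range(n)]
        for i in range(n)
    ]


# Assumed test polynomial z^2 - 1 with zeros 1 and -1.
p = lambda z: z * z - 1
dp = lambda z: 2 * z
zeros = [1, -1]

grid = basin_grid(p, dp, zeros)
black = sum(row.count(-1) for row in grid)
print("points without convergence:", black, "of", len(grid) ** 2)
```

Passing a different `step` function allows another solver to be compared on the same grid, and the count of points labeled -1 gives a rough numerical counterpart to the black zones discussed for the figures. The iteration count returned by `classify` can drive the light-to-dark shading.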

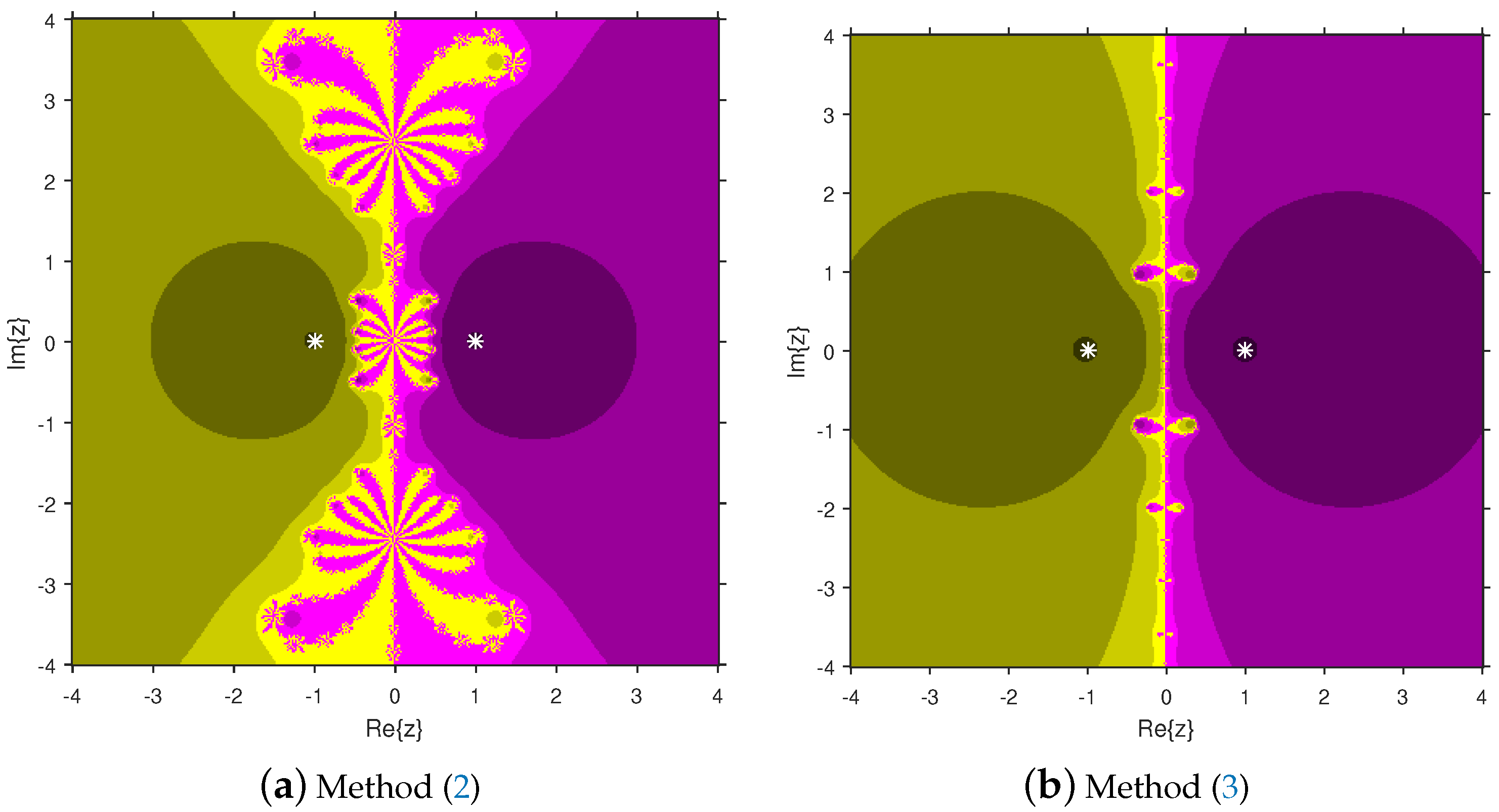

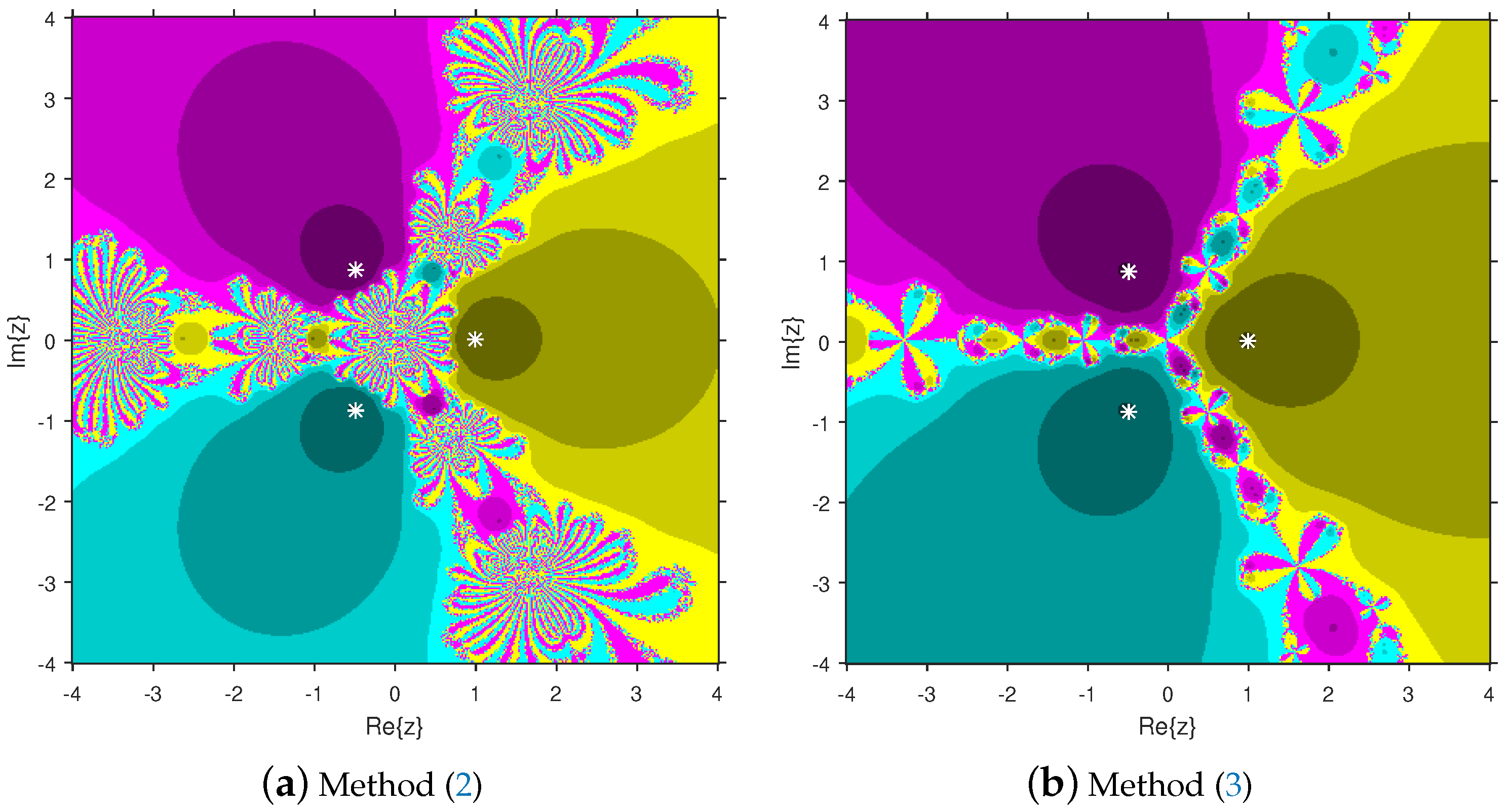

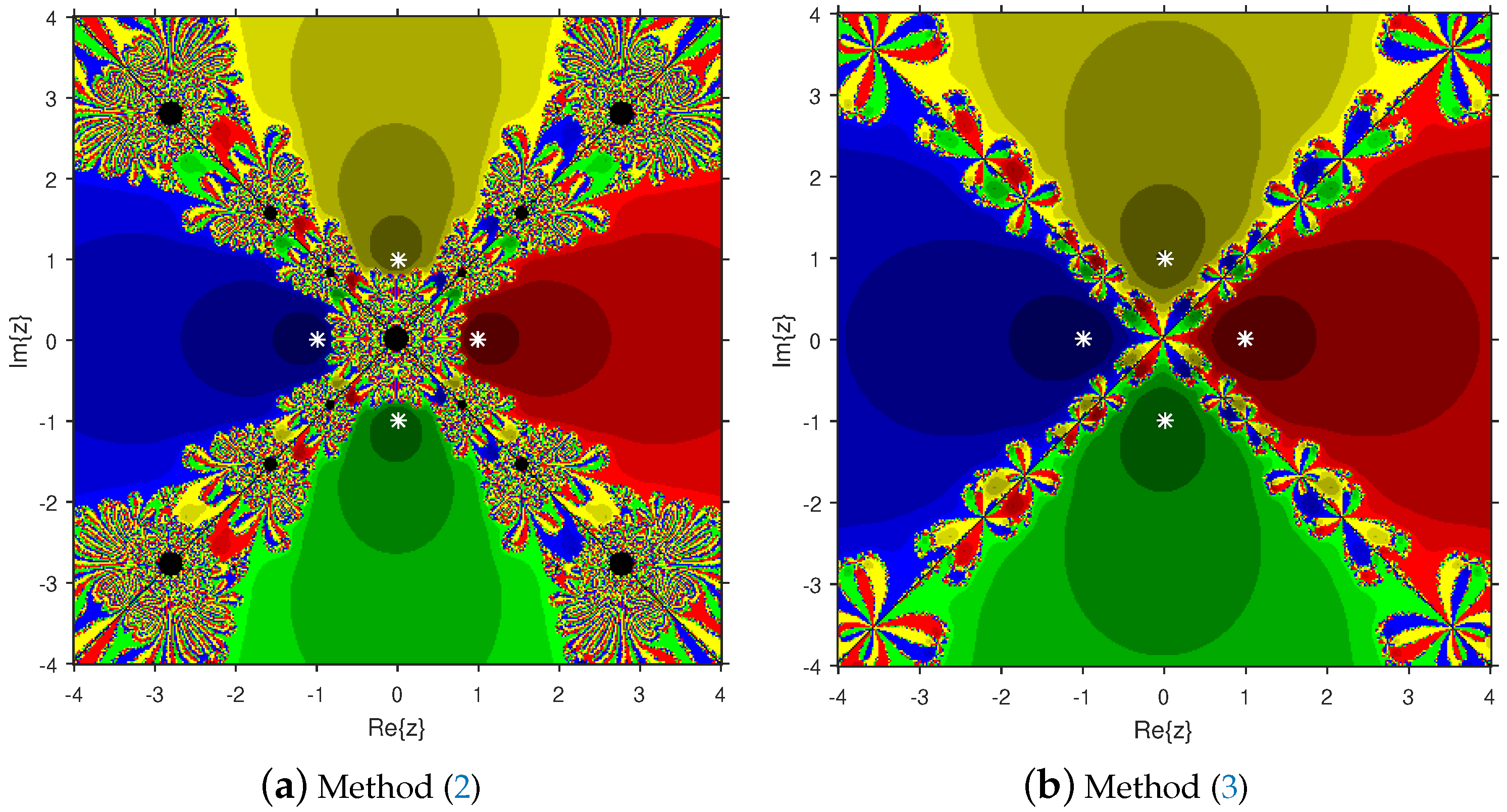

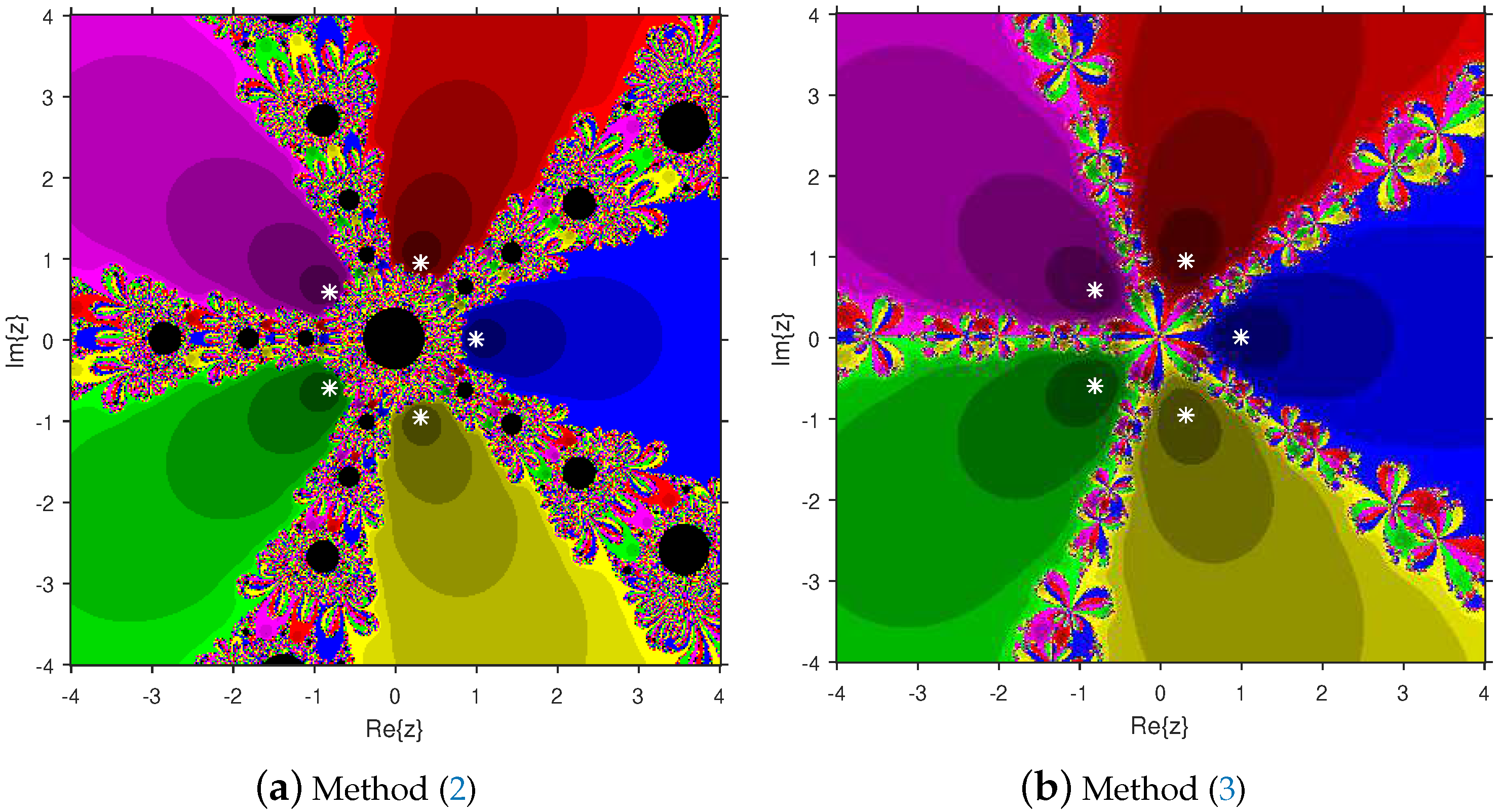

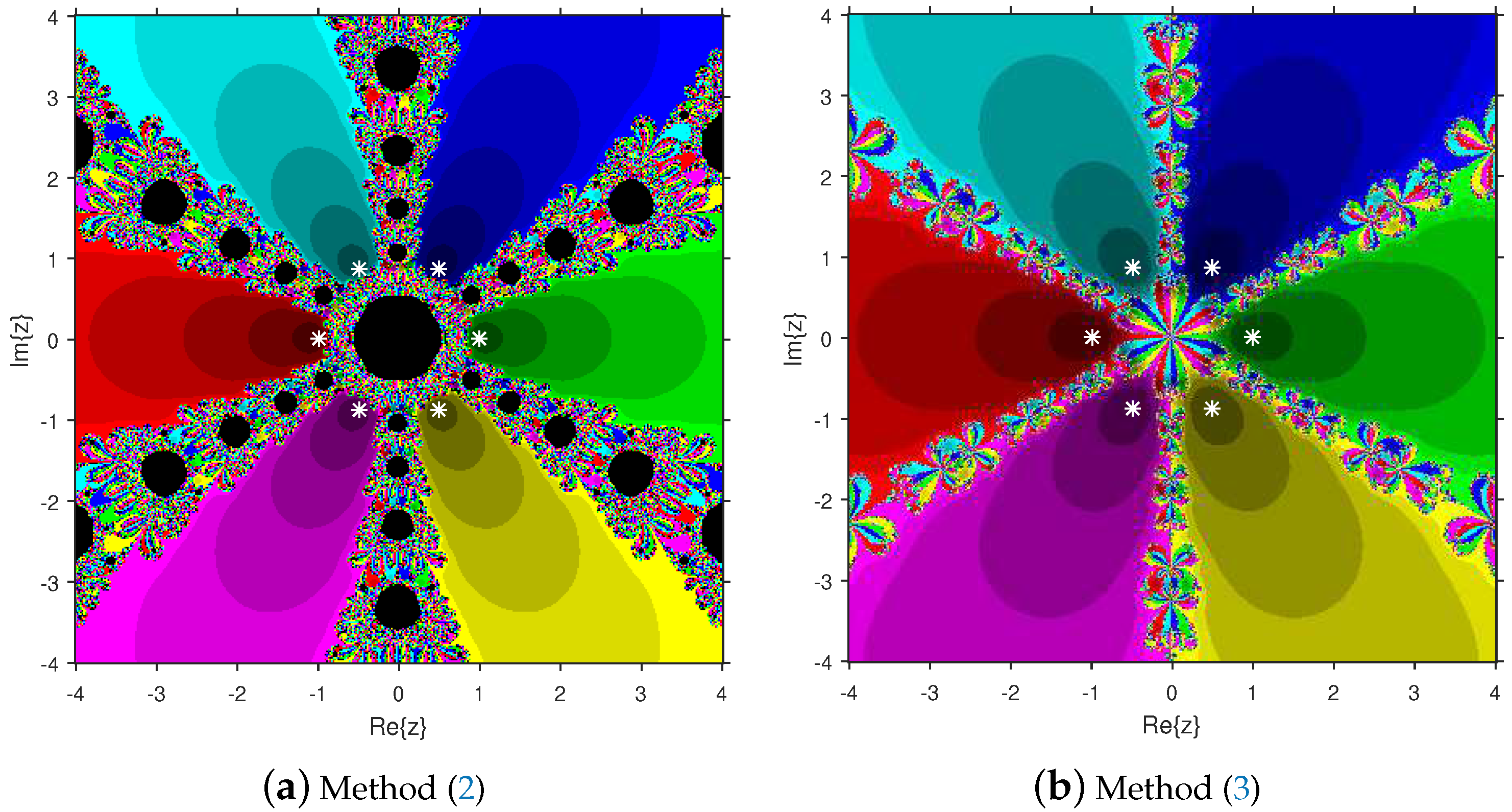

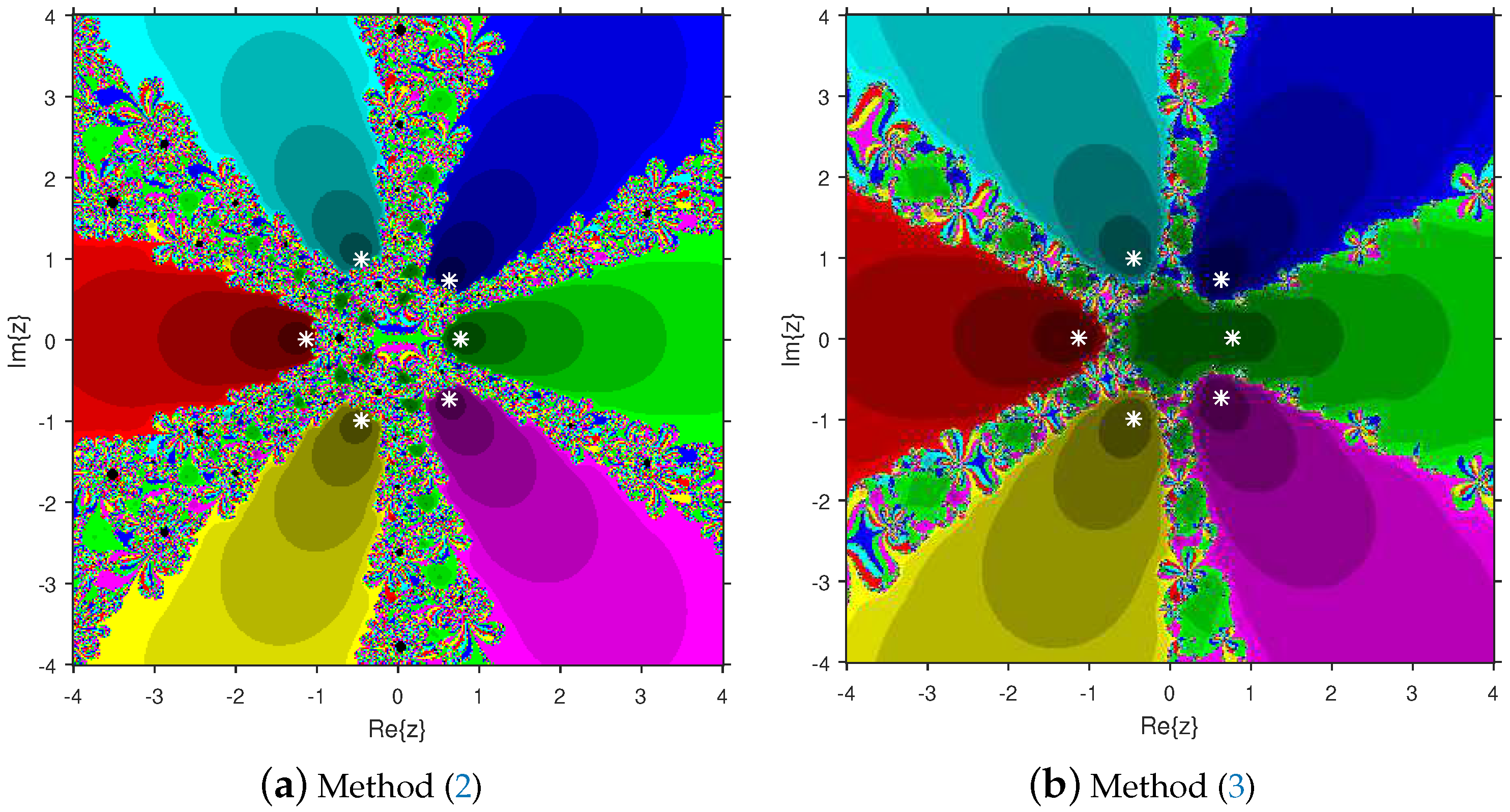

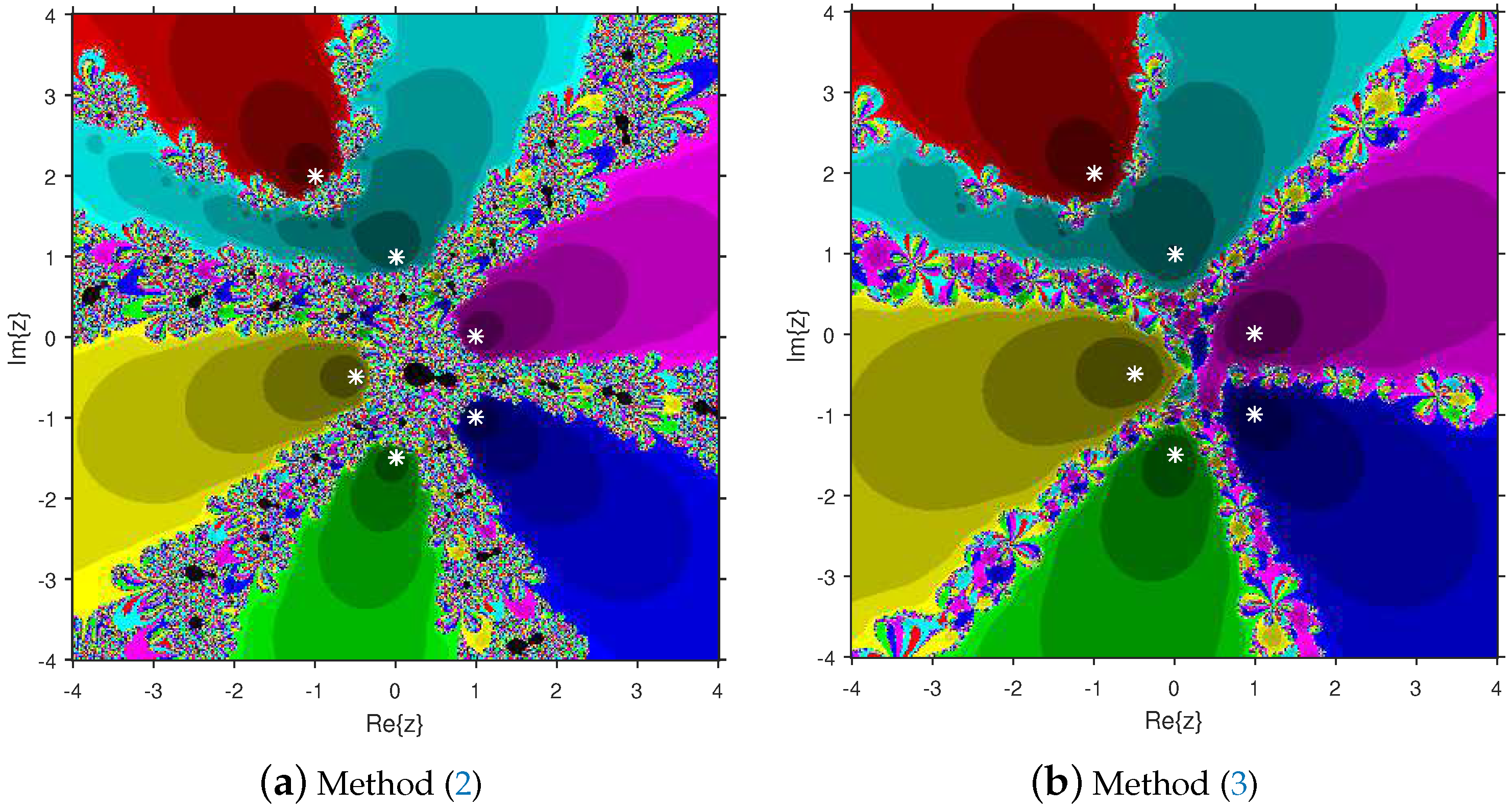

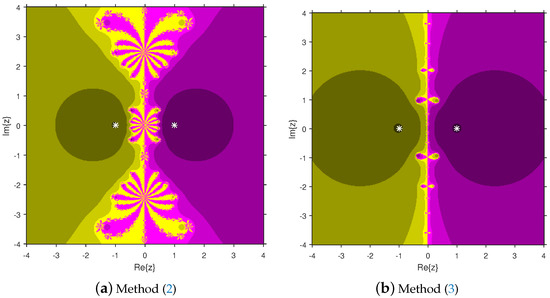

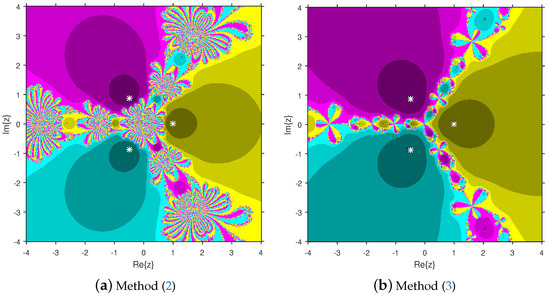

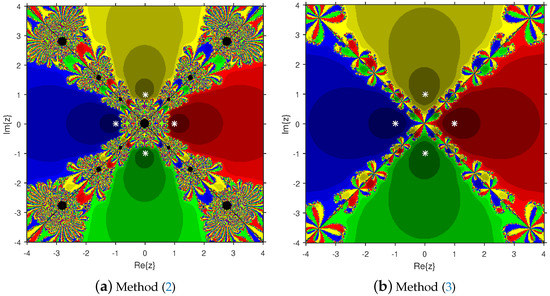

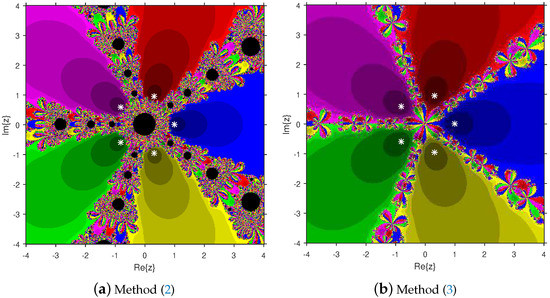

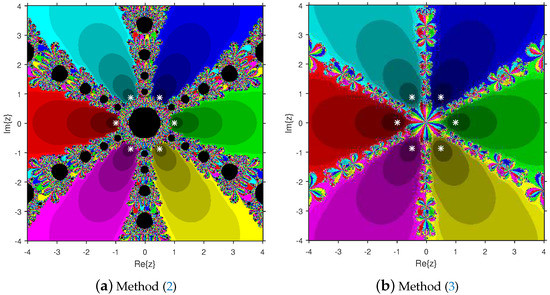

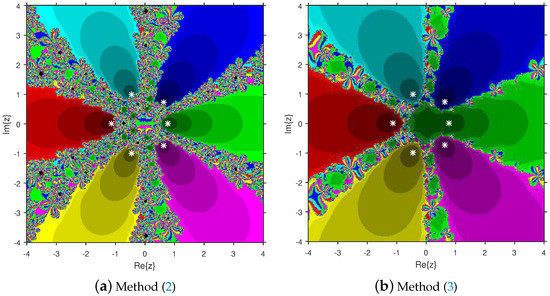

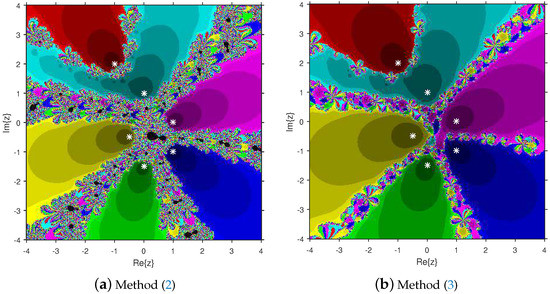

Firstly, we select a second-degree polynomial to show the attraction basins for solvers (11) and (12) in Figure 1a and Figure 1b, respectively. In these figures, magenta and yellow regions denote the attraction basins of the zeros 1 and , respectively, of . Figure 2a and Figure 2b represent the attraction basins for solvers (11) and (12), respectively, related to the zeros of . In these diagrams, the basins of , 1 and are displayed in magenta, yellow and cyan, respectively. Next, a fourth-degree polynomial is chosen to demonstrate the attraction basins for solvers (11) and (12) in Figure 3a and Figure 3b, respectively. The basins of the solutions , , 1 and i of are, respectively, displayed in blue, green, red and yellow regions in these figures. Furthermore, we use a fifth-degree polynomial to construct the basins for solvers (11) and (12) in Figure 4a and Figure 4b, respectively. In these pictures, yellow, magenta, blue, green and red colors are applied to represent the attraction basins of the zeros , , 1, and , respectively, of . Finally, the sixth-degree complex polynomials , and are taken. Figure 5a and Figure 5b provide the attraction basins for solvers (11) and (12) related to the zeros , 1, , , and of in red, green, magenta, blue, yellow and cyan colors, respectively. Then, is selected to illustrate the basins for solvers (11) and (12) in Figure 6a and Figure 6b, respectively. In these diagrams, red, green, magenta, blue, yellow and cyan colors are applied to display the basins associated with the solutions , , , , and of , respectively. In Figure 7a and Figure 7b, the basins for solvers (11) and (12) related to the roots , , , 1, i and of are painted in blue, yellow, green, magenta, cyan and red, respectively.

Figure 1.

Comparison of attraction basins associated with second-degree polynomial .

Figure 2.

Comparison of attraction basins associated with third-degree polynomial .

Figure 3.

Comparison of attraction basins associated with fourth-degree polynomial .

Figure 4.

Comparison of attraction basins associated with fifth-degree polynomial .

Figure 5.

Comparison of attraction basins associated with sixth-degree polynomial .

Figure 6.

Comparison of attraction basins associated with sixth-degree polynomial .

Figure 7.

Comparison of attraction basins associated with sixth-degree polynomial .

Based on the diagrams, we conclude that solver (12) has larger basins than solver (11). On the boundary points, solver (12) exhibits less chaotic behavior than (11). Moreover, the fractal pictures (Figure 3a, Figure 4a, Figure 5a, Figure 6a and Figure 7a) of formula (11) contain large black zones, which indicate no convergence to the zeros of the corresponding polynomials. Therefore, we conclude that solver (12) is more numerically stable than solver (11) and is preferable to solver (11) for practical use.

4. Numerical Examples

We apply the proposed techniques to estimate the convergence radii of iterative algorithms (2) and (3).
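In practice, each radius in Section 2 is the least positive root of a scalar function, so it can be approximated by a sign-change scan followed by bisection. The sketch below illustrates this under loudly stated assumptions: it uses linear functions w0(t) = L0 t and w(t) = L t with hypothetical constants L0 = e - 1 and L = e, and the single Newton-type function g1(t) = L t / (2 (1 - L0 t)), whose equation g1(t) - 1 = 0 has the closed-form root 2 / (2 L0 + L). The functions actually attached to solvers (2) and (3) are more involved, and the numbers produced here are not the entries of Tables 1 to 3.

```python
import math


def least_positive_root(h, upper, samples=10_000, tol=1e-12):
    """Approximate the least root of h in (0, upper).

    Scans a uniform grid for the first sign change of h and refines it by bisection.
    Returns None when no sign change is detected on the grid.
    """
    step = upper / samples
    a, ha = step, h(step)
    for i in range(2, samples):
        if ha == 0.0:
            return a
        b = i * step
        hb = h(b)
        if ha * hb < 0.0:
            while b - a > tol:
                m = 0.5 * (a + b)
                hm = h(m)
                if ha * hm <= 0.0:
                    b = m  # the root lies in [a, m]
                else:
                    a, ha = m, hm  # the root lies in [m, b]
            return 0.5 * (a + b)
        a, ha = b, hb
    return None


# Hypothetical constants, chosen only for illustration.
L0 = math.e - 1.0
L = math.e


def g1_minus_one(t):
    """g1(t) - 1 with g1(t) = L t / (2 (1 - L0 t)), valid for 0 < t < 1 / L0."""
    return L * t / (2.0 * (1.0 - L0 * t)) - 1.0


rho_numeric = least_positive_root(g1_minus_one, upper=1.0 / L0)
rho_closed_form = 2.0 / (2.0 * L0 + L)
print(rho_numeric, rho_closed_form)
```

The scan is restricted to (0, 1 / L0) because the denominator 1 - w0(t) must stay positive. A convergence radius built from several such functions is then the minimum of their least roots.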

Example 1

([15]). Let and . Consider D on V for as

We have . Then, , the identity operator. Let

where

In turn, we can write for ,

So,

Moreover, we have

and

Hence, we can choose . Then, the condition is validated. The equation gives ; so,

Concerning the third condition in , recall that the divided difference is usually defined by

or

In either case, the left-hand side of the third condition gives

Therefore, we can choose

Hence, by these choices of the functions and , the conditions are validated for this example. Similarly, for the first condition in , notice that ,

for some between and with . It follows that

Thus, the condition is verified for . In order to validate the second condition in , notice that

However, since . Hence, if we choose , the second condition is validated. Using the proposed theorems, we obtain ρ and . These values are given in Table 1.

Table 1.

Comparison of convergence radii for Example 1.

Example 2

([1]). Let us consider and . Define D on V by

where . It follows by this definition that the Fréchet derivative is given as

It follows by this definition that . If we substitute the last two formulas on the left-hand side of condition and use the max-norm on V, we see that

provided that ; so, and . Similarly, the first condition in gives

provided that . Then, according to the work in Example 1, we can choose and . The convergence radii ρ and are obtained using the suggested theorems and presented in Table 2.

Table 2.

Comparison of convergence radii for Example 2.

Example 3

([16]). Let us consider and . Define the nonlinear integral equation as

where and is given on as

We have . By repeating the work in Example 2 and using the max-norm, we see that . Therefore, we can choose , and . We apply the suggested results to compute ρ and (Table 3).

Table 3.

Comparison of convergence radii for Example 3.

5. Conclusions

The convergence balls as well as the dynamical behaviors of two efficient weighted-Newton-like equation solvers are compared. The ball convergence outcomes of methods (2) and (3) are produced by considering the generalized Lipschitz continuity of the first derivative only. Then, the complex dynamical behaviors of these algorithms are compared by employing the attraction basin tool. It is noticed that solver (3) has bigger basins than method (2). Lastly, our analytical findings are verified on application problems. It is found that method (3) has larger convergence balls than solver (2). Hence, solver (3) is better than method (2) for practical application. Our approach can be used to extend other methods [2,17,18,19,20] in a similar fashion. This will be the topic of our future research.

Author Contributions

Conceptualization, I.K.A., S.R., C.I.A. and D.S.; methodology, I.K.A., S.R., C.I.A. and D.S.; software, I.K.A., S.R., C.I.A. and D.S.; validation, I.K.A., S.R., C.I.A. and D.S.; formal analysis, I.K.A., S.R., C.I.A. and D.S.; investigation, I.K.A., S.R., C.I.A. and D.S.; resources, I.K.A., S.R., C.I.A. and D.S.; data curation, I.K.A., S.R., C.I.A. and D.S.; writing—original draft preparation, I.K.A., S.R., C.I.A. and D.S.; writing—review and editing, I.K.A., S.R., C.I.A. and D.S.; visualization, I.K.A., S.R., C.I.A. and D.S.; supervision, I.K.A., S.R., C.I.A. and D.S.; project administration, I.K.A., S.R., C.I.A. and D.S.; funding acquisition, I.K.A., S.R., C.I.A. and D.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Argyros, I.K. Unified Convergence Criteria for Iterative Banach Space Valued Methods with Applications. Mathematics 2021, 9, 1942.

- Amat, S.; Busquier, S. Advances in Iterative Methods for Nonlinear Equations; Springer: Cham, Switzerland, 2016.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Potra, F. On Q-order and R-order of Convergence. J. Optim. Theory Appl. 1989, 63, 415–431.

- Grau-Sánchez, M.; Gutiérrez, J.M. Zero-finder methods derived from Obreshkov’s techniques. Appl. Math. Comput. 2009, 215, 2992–3001.

- Sharma, J.R.; Guna, R.K.; Sharma, R. An efficient fourth order weighted-Newton method for systems of nonlinear equations. Numer. Algor. 2013, 62, 307–323.

- Cordero, A.; García-Maimó, J.; Torregrosa, J.R.; Vassileva, M.P. Solving nonlinear problems by Ostrowski-Chun type parametric families. J. Math. Chem. 2015, 53, 430–449.

- Neta, B.; Scott, M.; Chun, C. Basins of attraction for several methods to find simple roots of nonlinear equations. Appl. Math. Comput. 2012, 218, 10548–10556.

- Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint Methods for Solving Nonlinear Equations; Elsevier: Amsterdam, The Netherlands, 2013.

- Sharma, J.R.; Arora, H. Improved Newton-like methods for solving systems of nonlinear equations. SeMA J. 2016, 74, 1–7.

- Sharma, J.R.; Arora, H. On efficient weighted-Newton methods for solving systems of nonlinear equations. Appl. Math. Comput. 2013, 222, 497–506.

- Grau-Sánchez, M.; Grau, Á.; Noguera, M. Ostrowski type methods for solving systems of nonlinear equations. Appl. Math. Comput. 2011, 218, 2377–2385.

- Rheinboldt, W.C. An adaptive continuation process for solving systems of nonlinear equations. In Mathematical Models and Numerical Methods; Tikhonov, A.N., Ed.; Banach Center: Warsaw, Poland, 1978; pp. 129–142.

- Traub, J.F. Iterative Methods for Solution of Equations; Prentice-Hall: Upper Saddle River, NJ, USA, 1964.

- Argyros, I.K. The Theory and Applications of Iteration Methods, 2nd ed.; Engineering Series; CRC Press: Boca Raton, FL, USA; Taylor and Francis Group: Abingdon, UK, 2022.

- Argyros, I.K.; Magreñán, Á.A. A Contemporary Study of Iterative Methods; Elsevier: Amsterdam, The Netherlands; Academic Press: New York, NY, USA, 2018.

- Liu, T.; Qin, X.; Wang, P. Local Convergence of a Family of Iterative Methods with Sixth and Seventh Order Convergence under Weak Condition. Int. J. Comput. Methods 2019, 16, 1850120.

- Scott, M.; Neta, B.; Chun, C. Basin attractors for various methods. Appl. Math. Comput. 2011, 218, 2584–2599.

- Magreñán, Á.A. Different anomalies in a Jarratt family of iterative root-finding methods. Appl. Math. Comput. 2014, 233, 29–38.

- Hueso, J.L.; Martínez, E.; Torregrosa, J.R. Third and fourth order iterative methods free from second derivative for nonlinear systems. Appl. Math. Comput. 2009, 211, 190–197.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).