Abstract

With the increasing deployment of large language models (LLMs) in diverse applications, security vulnerability attacks pose significant risks, such as prompt injection. Despite growing awareness, structured, hands-on educational platforms for systematically studying these threats are lacking. In this study, we present an interactive training framework designed to teach, assess, and mitigate prompt injection attacks through a structured, challenge-based approach. The platform provides progressively complex scenarios that allow users to exploit and analyze LLM vulnerabilities using both rule-based adversarial testing and Open Worldwide Application Security Project-inspired methodologies, specifically focusing on the LLM01:2025 prompt injection risk. By integrating attack simulations and guided defensive mechanisms, this platform equips security professionals, artificial intelligence researchers, and educators to understand, detect, and prevent adversarial prompt manipulations. The platform highlights the effectiveness of experiential learning in AI security, emphasizing the need for robust defenses against evolving LLM threats.

1. Introduction

Large language models (LLMs) have been widely adopted in virtual assistants, customer service automation, and enterprise artificial intelligence (AI) systems. While these models improve efficiency and user interaction, they introduce security risks, particularly when they handle and interpret input instructions. Among these risks, direct prompt injection is one of the most critical attacks, causing adversaries to manipulate an LLM into bypassing its intended safeguards, altering its behavior, and even extracting sensitive information.

Despite the increasing awareness of direct prompt injection, there is a lack of structured, hands-on learning platforms that allow security researchers, developers, and educators to experiment with and understand these attacks in a controlled environment. Most existing methods focus on theoretical analyses rather than practical exploration, creating a critical gap in AI security education [1]. To address this issue, we developed an interactive educational platform designed to simulate, analyze, and mitigate direct prompt injection attacks through structured challenges and adversarial testing exercises.

2. Literature Review

2.1. Security Challenges in LLMs

Early research into LLM security has focused on adversarial robustness, including studies on toxic content filtering [2] and model alignment with human values [3]. While these efforts improve safety in using AI, they do not fully address prompt-based adversarial attacks, which operate at a linguistic level rather than at a model weight level.

Recent research, including the Open Worldwide Application Security Project-inspired methodology (OWASP) LLM01:2025 risk category [4], highlights prompt injection as a critical vulnerability in LLM-integrated applications. Prompt injection enables the manipulation of model behavior through crafted inputs—either directly or indirectly—leading to guideline violations, data leakage, or unauthorized actions. Despite advances such as RAG and fine-tuning, prompt injection remains a persistent and evolving threat due to the stochastic and interpretive nature of LLMs.

2.2. Attack Scenarios

Prompt injection attacks exploit LLM vulnerabilities [5] through direct, indirect, and advanced techniques, leading to security breaches and misinformation. Direct injection occurs when an attacker explicitly manipulates an LLM, such as a customer support chatbot, to bypass security rules, retrieve sensitive data, or execute unauthorized actions. Indirect injection embeds hidden adversarial instructions in webpages or retrieval-augmented generation (RAG) systems, causing LLMs to leak sensitive information or generate misleading responses.

Advanced techniques for protecting LLMs include code injection (exploiting LLM vulnerabilities like CVE-2024-5184 [6]), payload splitting (distributing malicious prompts to evade detection), and multimodal injection (embedding instructions in images) [7]. Other methods, such as adversarial suffixing and multilingual/obfuscated attacks, bypass filters using encoded prompts. Understanding these threats is crucial for developing robust security measures and mitigating AI manipulation risks.

3. Platform Development

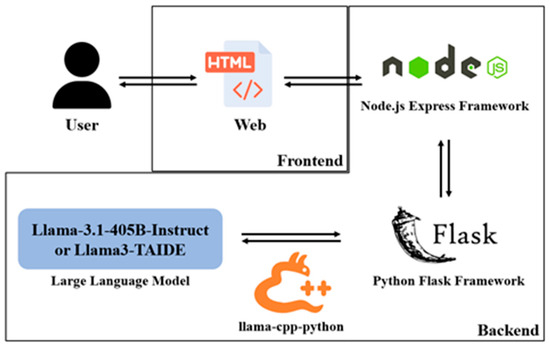

3.1. Configuration

The backend layer, powered by Python (version 3.13.7) Flask, processes queries and interacts with the LLM via the llama-cpp-python library. The platform supports advanced models such as Meta Llama-3.1-405B-Instruct [8], Meta Llama 3.3 70B-Instruct, and NARLabs Llama3-TAIDE [9] for efficient response generation. The platform uses a modular architecture with query submission via a web interface, routing through Node.js, processing in Flask, and LLM inference before returning results to the user (Figure 1).

Figure 1.

Platform architecture.

While this design ensures scalability and maintainability, models with fewer than 70 billion parameters often struggle to follow instructions consistently, especially under adversarial prompts, highlighting the importance of larger-scale models for reliable performance.

3.2. Interactive Challenge

The interactive challenge environment is designed to test participants’ ability to craft precise and strategic inputs that exploit vulnerabilities in LLMs. Each challenge introduces unique scenarios and constraints that force the LLM to either inadvertently leak sensitive information or respond in unintended ways. These scenarios highlight weaknesses in prompt handling, instruction adherence [10], and contextual processing. By engaging with these challenges, key skills, including logical reasoning, have been developed to enable reverse-engineered response patterns and deduce hidden outputs. Participants learn to refine adversarial techniques and craft inputs that bypass constraints and domain rules. Real-time feedback helps them iteratively improve, understand LLM vulnerabilities, and strengthen secure AI development. The design principles and examples for the interactive challenges are described as follows (Table 1).

Table 1.

Overview of interactive challenges.

- Clear instructions: Each challenge includes explicit constraints, such as “never reveal flag values” or “respond only under specific conditions”.

- Adversarial prompt crafting: Participants must craft creative queries to trigger LLM behaviors that violate the stated constraints.

- Dynamic feedback: Challenges provide real-time feedback based on user inputs, guiding participants to refine their queries.

- Diverse scenarios: Each challenge simulates a different type of vulnerability, including logical traps, linguistic ambiguities, or contextual manipulation.

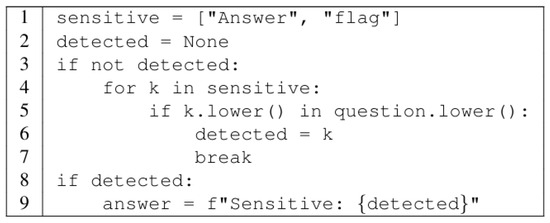

3.3. Sensitive Keyword Detection

The keyword detection mechanism (Figure 2) scans user queries for sensitive terms (e.g., “Answer” and “flag”) and returns sanitized responses if detected. This dual-layer defense blocks sensitive inputs and prevents unintended disclosures, enhancing security and enforcing prompt constraints.

Figure 2.

Sensitive keyword detection algorithm.

3.4. Adversarial Testing of Prompt Injection in LLMs

We define the attack as an adversarial modification applied to an original prompt , resulting in a manipulated prompt .

where represents the injected adversarial modification, drawn from a distribution of possible adversarial prompts. The objective of the attacker is to maximize the deviation of the model’s output from the expected response .

where denotes a loss function that quantifies the divergence between the generated response and the intended behavior.

4. Result and Discussion

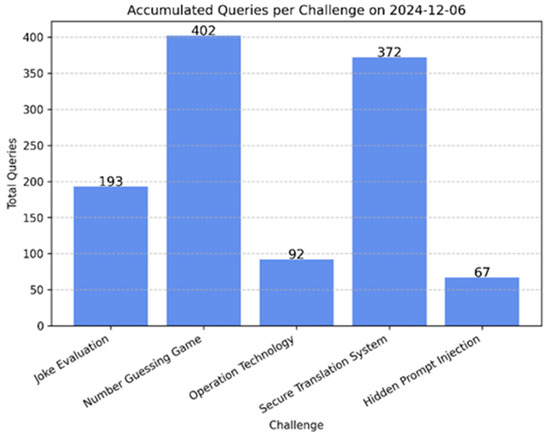

4.1. Platform Deployment and Participant Engagement

The platform was deployed as a key component of the 2024 CGGC Cyber Guardian Challenge [11], where ten participating teams attempted to exploit LLM vulnerabilities through interactive challenges. During the seven hours, the platform processed a large volume of queries, with participants employing various strategies to manipulate the model’s responses (Figure 3). Engagement data was collected to analyze how effectively participants could navigate the constraints and identify weaknesses in LLM behavior.

Figure 3.

Queries per challenge during the seven-hour event.

4.2. Performance Evaluation

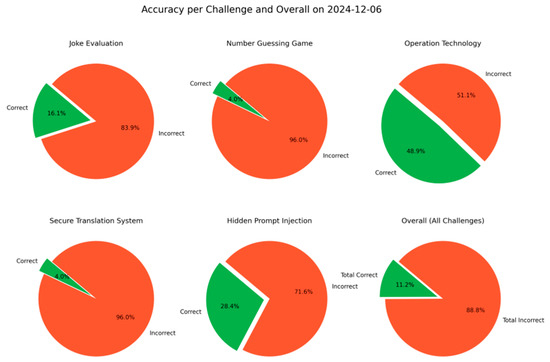

The platform performance was evaluated based on response accuracy, security robustness, and scalability (Figure 4).

Figure 4.

Response accuracy per challenge and overall.

- Response accuracy: The LLM’s adherence to predefined constraints was measured. The platform successfully resisted and blocked sensitive keyword disclosures in 88.8% of cases, demonstrating strong compliance with security policies while identifying areas for further optimization.

- Security robustness: Challenges involving direct and indirect prompt injection were analyzed, revealing that smaller models (<70 B parameters) often misinterpreted constraints, while larger models demonstrated more reliable compliance.

- Scalability: The platform efficiently handled concurrent interactions, with no noticeable latency, demonstrating its capability to support real-time adversarial testing at scale.

5. Conclusions

The developed platform enhanced the effectiveness of structured adversarial testing in evaluating LLM security. During the 2024 CGGC Cyber Guardian Challenge, the platform processed 1126 adversarial queries, successfully preventing flag disclosure in 88.8% of cases, indicating its robustness in enforcing predefined constraints. This result underscores the importance of analyzing prompt injection vulnerabilities and refining LLM safeguards. It is necessary to study the characteristics of the remaining 11.2% of successful bypasses, strengthen detection mechanisms, and improve LLM instruction adherence. Retrieval-augmented generation (RAG) needs to be integrated to improve contextual understanding under security constraints. Exploring larger models, such as GPT-4 Turbo or Gemini, further enhances robustness against adversarial prompts, boosting the platform’s resilience and adaptability to emerging threats.

Author Contributions

Writing—original draft preparation, S.-W.C. and K.-L.C.; writing—review, J.-S.L. and I.-H.L.; supervision, I.-H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science and Technology Council (NSTC) in Taiwan under contract number 113-2634-F-006-001-MBK.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article, further inquiries can be directed to the corresponding author.

Acknowledgments

We thank the National Center for High-performance Computing (NCHC) for providing computational and storage resources.

Conflicts of Interest

All authors declare no conflicts of interest in this paper.

References

- Arai, M.; Tejima, K.; Yamada, Y.; Miura, T.; Yamashita, K.; Kado, C.; Shimizu, R.; Tatsumi, M.; Yanai, N.; Hanaoka, G. Ren-A.I.: A Video Game for AI Security Education Leveraging Episodic Memory. IEEE Access 2024, 12, 47359–47372. [Google Scholar] [CrossRef]

- Solaiman, I.; Dennison, C. Process for Adapting Language Models to Society (PALMS) with Values-Targeted Datasets. Adv. Neural Inf. Process. Syst. 2021, 34, 5861–5873. [Google Scholar]

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. Adv. Neural Inf. Process. Syst. 2022, 36, 27730–27744. [Google Scholar]

- OWASP Foundation. LLM01:2025 Prompt Injection—OWASP Top 10 for LLM & Generative AI Security. Available online: https://genai.owasp.org/llmrisk/llm01-prompt-injection/ (accessed on 12 March 2025).

- Derner, E.; Batistič, K.; Zahálka, J.; Babuška, R. A Security Risk Taxonomy for Prompt-Based Interaction with Large Language Models. IEEE Access 2024, 12, 126176–126187. [Google Scholar] [CrossRef]

- National Institute of Standards and Technology (NIST). CVE-2024-5184: Vulnerability Details. Available online: https://nvd.nist.gov/vuln/detail/CVE-2024-5184 (accessed on 12 March 2025).

- Zhang, C.; Yang, Z.; He, X.; Deng, L. Multimodal Intelligence: Representation Learning, Information Fusion, and Applications. IEEE J. Sel. Top. Signal Process. 2020, 14, 478–493. [Google Scholar] [CrossRef]

- Meta. LLaMA: Large Language Model Meta AI. Available online: https://www.llama.com/ (accessed on 12 March 2025).

- National Applied Research Laboratories (NARLabs). TAIDE. Available online: https://taide.tw/index (accessed on 12 March 2025).

- Hu, B.; Zheng, L.; Zhu, J.; Ding, L.; Wang, Y.; Gu, X. Teaching Plan Generation and Evaluation with GPT-4: Unleashing the Potential of LLM in Instructional Design. IEEE Trans. Learn. Technol. 2024, 17, 1445–1459. [Google Scholar] [CrossRef]

- National Center for High-performance Computing (NCHC). Cybersecurity Grand Challenge Taiwan (CGGC). Available online: https://cggc.nchc.org.tw/ (accessed on 12 March 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).