Abstract

The World Health Organization estimates that between 10% and 20% of young people worldwide experience mental health problems during adolescence, a crucial developmental period. The COVID-19 pandemic has made the issue considerably worse, with adolescent mental health cases increasing by 31%. Traditional mental health care frequently struggles to reach adolescents because of stigma, restricted access, and the difficulty of evaluating emotional states. The goal of this research is to create a chatbot system that uses machine learning and natural language processing (NLP) to identify emotional distress and mental health issues in teenagers at an early stage. The study offers a safe environment for teenagers to express their emotions while analyzing chatbot interactions to find patterns suggestive of mental health problems. To improve the efficacy of the chatbot, the methodology integrates quantitative and qualitative techniques, drawing on data from open datasets and mental health services. Sentiment analysis and emotion identification are key strategies that enable the chatbot to respond empathetically. By offering readily available support and highlighting the role of technology in addressing adolescent mental health issues, this project ultimately seeks to improve youth mental health outcomes.

1. Introduction

Adolescence is a crucial period marked by profound physical, mental, and social changes. Many teenagers face emotional, social, and intellectual demands at this time that may affect their mental health [1]. The World Health Organization (WHO) estimates that between 10% and 20% of youth globally have mental health issues. The COVID-19 pandemic worsened this situation, causing a 31% increase in teenage mental health issues [2]. Social pressure, academic stress, and the stigma associated with mental health are among the factors that make it difficult for teenagers to get timely care [3]. The growing prevalence of teenage mental health problems underscores the urgent need for effective, accessible, and scalable mental health care [1].

People of all ages can experience depression [4]. It can be extremely dangerous, contributing to problems such as diabetes, high blood pressure, and anxiety attacks, and even to death following a heart attack. It is therefore crucial to identify its causes and to detect it so that the right treatment can be provided. There is also a need to de-stigmatize depression and mental health more broadly, which can be supported through Social Network Mental Disorder Detection [5]. Tests based on various artificial intelligence and machine learning algorithms can be conducted in different settings to identify emotional imbalance. As technology has advanced, a number of AI-based methods have been developed to give machines emotional intelligence so that they can recognize human emotions.

One of the difficulties that researchers and practitioners in psychology and psychiatry face is finding data that accurately depict a subject’s or patient’s mental state. Conventional methods rely on collecting information from the subject and their close friends and family members, or on asking selected members of a particular group to complete surveys and questionnaires that could reveal information about the mental health of individuals or groups [6]. Early detection and intervention are crucial because parents frequently find it difficult to relate to or support their adolescent children. Artificial intelligence (AI) technologies, especially those that use natural language processing (NLP), offer novel solutions to these problems. NLP-powered chatbots can give young people a safe, private, and convenient way to express their feelings, get support, and potentially reveal early warning signs of mental health issues [7,8].

Our approach uses machine learning algorithms and NLP techniques to detect and respond to human emotions [9]. We developed this solution after a careful and in-depth examination of prior methods, drawing on data mining, AI, and machine learning approaches. Emotion-detection systems have a variety of uses beyond mental health. In market research, for example, identifying emotions helps companies understand consumer sentiment: emotion-detection algorithms can infer customers’ feelings from their reviews of different products [4].

This project aims to significantly improve youth mental health outcomes by giving teenagers an easily accessible forum to share their feelings and receive prompt feedback [10]. We believe that this investigation will raise awareness of the value of cutting-edge technological solutions in addressing teenage mental health issues. The primary objective of this study is to develop a chatbot system that uses natural language processing (NLP) to assist in the early detection of emotional states and mental health issues in teenagers [11]. Specifically, the study examines how users interact with the chatbot in order to identify patterns that may point to mental health problems. It also aims to implement machine learning algorithms that can accurately identify emotional distress and possible mental health issues from user input.

Several key terms are used throughout this study. Chatbots are AI-driven conversational agents designed to mimic human interaction through text or voice communication. Natural language processing (NLP) is a subfield of artificial intelligence that allows machines to comprehend, interpret, and respond to human language. Emotional states are the spectrum of emotions people experience, which substantially affect their overall mental health. Early detection is the process of recognizing early warning signs of mental health concerns so that prompt intervention is possible.

2. Literature Review

2.1. Concept of Early Mental Health Detection

Early mental health detection refers to identifying mental health issues at an initial stage, which is crucial for preventing conditions from worsening. Early recognition of symptoms can lead to prompt interventions that greatly improve outcomes [3]. This proactive approach highlights the importance of monitoring emotional states and behavior in order to identify possible mental health issues before they become more serious. By concentrating on early indicators, mental health practitioners can put procedures in place that lessen the impact of these problems. By reducing the overall societal cost of mental illness, early detection supports both individual recovery and broader public health initiatives. Integrating technology such as chatbots and AI-powered tools improves the capacity to identify mental health problems early, making it easier for people to get the treatment they need [4].

2.2. Approaches to Early Detection

Conventional approaches use self-reported surveys and structured psychological evaluations to uncover underlying mental health problems. These instruments are frequently used in therapeutic settings to assess emotional health and identify conditions such as depression. In-person clinical interviews with mental health specialists can provide a thorough understanding of a person’s mental condition, including subtle information that standardized testing could overlook. Peers, parents, and teachers are also essential in spotting behavioral changes during adolescence; their observations may serve as early indicators of possible mental health problems, allowing for appropriate treatment [4].

Emerging technologies such as wearable devices, chatbots, and mental health applications are increasingly used to track mental health indicators. These tools can offer real-time data and support when someone needs assistance [5]. Natural language processing (NLP) and artificial intelligence (AI) have the potential to greatly enhance early detection techniques. By examining text data from messages and social media posts, AI can spot signs of distress that conventional techniques might miss. AI-driven sentiment analysis and emotion-detection methods can identify emotional patterns and warning signs in communication, making it possible to identify those at risk and enabling earlier support and intervention.

2.3. Challenges in Early Detection

Stigma surrounding mental health problems frequently deters teenagers from seeking treatment. They may be reluctant to talk about their difficulties for fear of being judged or misunderstood, which can delay the help they need [3]. Although technology offers novel approaches to mental health monitoring, these tools have their own biases and limitations. For example, algorithms might not fairly represent a range of demographics, which could result in inappropriate or ineffective interventions [10].

3. Methodology

3.1. Research Design

The study adopts a mixed-methods research design that combines quantitative and qualitative approaches, pairing statistical data with user experiences and insights to provide a thorough analysis of the efficacy of NLP-based chatbots in early mental health detection [12]. NLP-based chatbots are especially useful for this purpose because they support natural language interactions, which make it easier for adolescents to express their feelings. This accessibility can encourage users to talk about their mental health, which is essential for prompt intervention [11]. The study uses several AI and machine learning methods, including sentiment analysis and emotion recognition algorithms, which analyze user interactions to find emotional patterns suggestive of mental distress so that proactive assistance and intervention can be provided. In addition, machine learning models are trained on large datasets to improve the accuracy of distress detection over time [3,12].

3.2. Data Collection

One of the main sources of text data is the Shout mental health text messaging service, which records conversations in real time and offers insights into users’ mental health needs and behaviors during crises [8]. The study may also use openly accessible mental health datasets to improve natural language processing (NLP) model training, enabling a more thorough understanding of mental health concerns [13]. One of the most important ethical considerations is the anonymization of user data, which protects individual identities while still enabling meaningful research into mental health patterns. By establishing stringent procedures for data processing and storage, the research complies with data privacy laws and the relevant legal frameworks for protecting user information [8].
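As a rough illustration of the anonymization step described above, the sketch below replaces a few obvious identifiers (email addresses, Reddit-style `u/` usernames, `@` handles, and phone numbers) with placeholder tokens before text is stored or used for training. The patterns and placeholder names are hypothetical assumptions, not the procedure used in this study; real de-identification needs much broader coverage (personal names, locations, school names) and usually some human review.

```python
import re

# Hypothetical redaction rules, applied in order. Emails go first because
# they contain "@", which the handle rule would otherwise partially match.
PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "[EMAIL]"),
    (re.compile(r"\bu/[A-Za-z0-9_-]+"), "[USER]"),   # Reddit usernames
    (re.compile(r"@\w+"), "[USER]"),                # @handles
    (re.compile(r"\+?\d[\d\s-]{7,}\d"), "[PHONE]"), # 9+ digit phone-like runs
]


def redact(text):
    """Replace each matched identifier with its placeholder token."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


print(redact("Contact me at sam.lee@example.com or 07700 900123, u/sad_teen"))
# Contact me at [EMAIL] or [PHONE], [USER]
```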

3.3. NLP and Machine Learning Techniques

Tokenization, which divides text into discrete words or phrases, and stop-word removal, which eliminates common words that may carry little information for sentiment analysis, are crucial preprocessing steps. Stemming and lemmatization are also used to reduce words to their base forms, improving the model’s generalization across word variants [6]. Various machine learning and deep learning models are employed for these tasks, with BERT, LSTM, and CNN models showing promising results.
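The preprocessing steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the stop-word list is a tiny hypothetical one, and `naive_stem` is a crude suffix-stripper standing in for a real stemmer (such as Porter’s) or a dictionary-based lemmatizer. Negations such as "not" are deliberately kept, since removing them can flip the sentiment of a sentence.

```python
import re

# Hypothetical, deliberately tiny stop-word list (negations intentionally absent).
STOP_WORDS = {"a", "an", "the", "is", "am", "are", "was", "i", "to", "and", "of", "it", "so", "my"}

# Crude suffix stripping; a stand-in for a real stemmer, not a lemmatizer.
SUFFIXES = ("ing", "ed", "ly", "es", "s")


def tokenize(text):
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]


def naive_stem(token):
    """Strip the first matching suffix, keeping a stem of at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    return [naive_stem(t) for t in remove_stop_words(tokenize(text))]


print(preprocess("I am feeling so worried and not sleeping well"))
# ['feel', 'worri', 'not', 'sleep', 'well']
```

The output shows the usual trade-off of stemming: variants collapse to a shared form, but that form need not be a real word ("worri"), whereas lemmatization would return "worry".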

Long Short-Term Memory (LSTM) networks, and BiLSTM models in particular, work well for sentiment classification. Because they can capture long-term dependencies in text, they are well suited to examining emotional content in social media posts and other text formats. Sophisticated classifiers such as BERT and LSTM have demonstrated notable efficacy in identifying emotions in text, using deep learning to capture subtleties of sentiment and context. A variety of models are used to assign text to emotion categories such as joyful, sad, and worried. This classification can be achieved through supervised learning, in which models are trained on labeled datasets, or unsupervised learning, which finds patterns in unlabeled data [14].
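To make the supervised setting concrete, the sketch below trains a multinomial Naive Bayes classifier on a handful of labeled sentences. Naive Bayes is swapped in here only because it fits in a few lines; it is not the LSTM or BERT model discussed above, and the training sentences and the labels `joyful`, `sad`, and `worried` are hypothetical examples.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesEmotion:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)       # documents per emotion
        self.word_counts = defaultdict(Counter)   # word frequencies per emotion
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = text.lower().split()
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_docs = len(labels)
        return self

    def predict(self, text):
        tokens = text.lower().split()
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Log prior plus the sum of smoothed log likelihoods of each token.
            score = math.log(n_docs / self.total_docs)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for tok in tokens:
                score += math.log((self.word_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled training data.
texts = [
    "i feel so happy today", "what a joyful day",
    "i am sad and lonely", "i miss everyone and cry",
    "i am worried about exams", "so nervous and worried",
]
labels = ["joyful", "joyful", "sad", "sad", "worried", "worried"]

model = NaiveBayesEmotion().fit(texts, labels)
print(model.predict("worried about tomorrow"))  # worried
```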

To improve the chatbot’s conversational abilities, the architecture can use advanced natural language processing (NLP) models such as GPT, which are designed to understand context and produce human-like text, enabling more natural interactions with users [7]. An emotion-detection model integrated into the system analyzes user input to pinpoint the user’s emotional state [15,16]. Several machine learning techniques are used for this purpose to ensure precise identification of emotions such as joy, sorrow, and anxiety [17]. Based on the identified sentiment, the chatbot can then adapt its responses to match the user’s emotional state [16]. This improves the user experience by offering empathetic and pertinent responses, personalizing and strengthening the engagement [15].
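The detect-then-adapt loop described above can be sketched as follows. A small keyword lexicon stands in for the trained emotion-detection model, and both the lexicon and the response templates are hypothetical placeholders; the reply wording is illustrative only.

```python
import re

# Hypothetical keyword lexicon standing in for a trained emotion classifier.
EMOTION_KEYWORDS = {
    "sadness": {"sad", "lonely", "cry", "hopeless"},
    "anxiety": {"worried", "nervous", "anxious", "scared"},
    "joy": {"happy", "glad", "excited", "great"},
}

# Hypothetical response templates keyed by detected emotion.
RESPONSES = {
    "sadness": "I'm sorry you're feeling this way. Do you want to tell me more about what happened?",
    "anxiety": "That sounds stressful. What is worrying you the most right now?",
    "joy": "That's great to hear! What made today feel good?",
    "neutral": "Thanks for sharing. How have you been feeling lately?",
}


def detect_emotion(message):
    """Return the emotion whose keywords overlap most with the message, else 'neutral'."""
    tokens = set(re.findall(r"[a-z']+", message.lower()))
    scores = {emotion: len(tokens & words) for emotion, words in EMOTION_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def respond(message):
    """Pick a reply template that matches the detected emotional state."""
    emotion = detect_emotion(message)
    return emotion, RESPONSES[emotion]


print(respond("I feel lonely and sad."))
```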

Accuracy is the proportion of correct predictions out of all predictions made; a score of 0.9 or above is often regarded as outstanding. Precision measures the accuracy of positive predictions, that is, the fraction of instances predicted as positive that are actually positive; high precision means fewer false positives [4]. Recall measures how successfully the model finds all relevant instances, expressed as the ratio of true positives to all actual positives; high recall means fewer false negatives. The F1-score, the harmonic mean of precision and recall, combines the two into a single figure and is helpful when evaluating models on imbalanced datasets. Finally, a confusion matrix, which displays the numbers of true positives, true negatives, false positives, and false negatives, provides a visual summary of the model’s performance [16].
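The metrics above follow directly from the four confusion-matrix counts. The sketch below computes them for a binary task (for example, distress versus no distress); the labels are hypothetical. For multiclass problems such as the emotion and subreddit tasks in this study, the same formulas are typically applied per class and then averaged.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN) for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred, positive=1):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)                # correct / all predictions
    precision = tp / (tp + fp) if tp + fp else 0.0    # TP / predicted positives
    recall = tp / (tp + fn) if tp + fn else 0.0       # TP / actual positives
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)             # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 1 = distress, 0 = no distress.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(confusion_counts(y_true, y_pred))  # (2, 5, 1, 2)
print(classification_metrics(y_true, y_pred))
```

Here accuracy is 0.7 even though half of the true distress cases are missed (recall 0.5), which is why precision, recall, and F1 matter when classes are imbalanced.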

The usability and interaction quality of chatbots such as BETSY and XiaoE have also been assessed. For example, BETSY’s text-only version achieved higher usability scores than a version with a digital human interface, suggesting that simpler interfaces could improve user experience [11].

NLP-enabled emotional intelligence can improve the quality of chatbot interactions by enabling chatbots to identify and react to user emotions more accurately, resulting in a more customized experience [18]. Recent research highlights key issues in AI chatbot development, such as emotion detection, bias mitigation, and data privacy [19]. Other work investigates privacy-enhancing technologies, proposing techniques such as federated learning and differential privacy to protect user data in LLM-based chatbots [20]. AI chatbots for mental health assistance have also been analyzed, with emphasis on the importance of empathetic responses and a proposed federated learning framework to address bias and privacy concerns. Finally, a framework of input, design, and functional diversity, equity, and inclusion (DEI) safeguards has been proposed to help chatbots support organizational DEI goals [21].
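As a minimal sketch of the federated learning idea mentioned above, the function below performs the server-side aggregation step of federated averaging (FedAvg): each client trains locally, and only parameter vectors, never raw conversations, are sent to the server, which averages them weighted by each client’s number of training examples. The client values are hypothetical, and this is not the framework proposed in the cited work; differential privacy would additionally add calibrated noise to the shared updates.

```python
def federated_average(client_params, client_sizes):
    """Average client parameter vectors, weighting each by its local dataset size."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one dataset size per client")
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[j] * size for params, size in zip(client_params, client_sizes)) / total
        for j in range(n_params)
    ]


# Hypothetical: two clients with locally trained weights and 1 vs. 3 training examples.
global_params = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_params)  # [2.5, 3.5]
```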

4. Result

This section presents the outcomes of our deep learning-based natural language processing (NLP) models for sentiment analysis and mental health detection. It evaluates how well the models identify sentiment/emotions and mental health-related content in social media text. The results are analyzed using performance indicators including accuracy, loss, and label distribution. We also discuss the main findings about the datasets, emphasizing trends in sentiment analysis and mental health categorization. Efficacy is assessed by the models’ ability to process and comprehend textual data from two datasets: text_emotions.csv for sentiment classification and reddit_mentalhealth_sample.csv for mental health detection.

4.1. Dataset Overview

4.1.1. Emotion Dataset Overview

The model’s efficacy in sentiment classification is assessed on textual data from the text_emotions.csv dataset. Table 1 outlines the structure of this dataset, detailing the columns used for analysis.

Table 1.

Emotion dataset overview.

The dataset is imbalanced: neutral (8638 instances) and anxiety (8459 instances) are the most common sentiment categories, whereas anger (110 instances) and boredom (179 instances) are the least common. The full label distribution is given in Table 2, and example samples are given in Table 3.
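A common way to counter this kind of imbalance is to weight each class’s contribution to the loss inversely to its frequency. The sketch below applies the heuristic w_c = N / (K * n_c), the same formula scikit-learn uses for `class_weight="balanced"`, to the four counts reported above. It is illustrative only: a real run would use all classes in Table 2, and it is not stated that class weighting was used in this study.

```python
def balanced_class_weights(counts):
    """w_c = N / (K * n_c): rarer classes receive proportionally larger weights."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Counts for the two most and two least frequent classes reported above.
counts = {"neutral": 8638, "anxiety": 8459, "boredom": 179, "anger": 110}
weights = balanced_class_weights(counts)
for label, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{label}: {w:.2f}")
```

With these weights every class contributes equally in aggregate (w_c * n_c = N / K for each class). In Keras, a dictionary of this kind, keyed by integer class index, can be passed to `model.fit` through its `class_weight` argument.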

Table 2.

Label distributions.

Table 3.

Example samples.

4.1.2. Mental Health Dataset Overview

The mental health dataset consists of 700 Reddit posts, categorized by the subreddit in which they were posted. The seven categories represent different mental health issues or topics. Each class contains exactly 100 samples, making the dataset balanced (Table 4); examples from this dataset are provided in Table 5.
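Because every class has exactly 100 posts, a stratified split keeps the classes balanced in both the training and validation sets. The sketch below assumes an 80/20 split and uses generic placeholder class names, since neither the split ratio nor the subreddit names are given in the text (see Table 4 for the actual labels).

```python
import random
from collections import defaultdict


def stratified_split(labels, val_fraction=0.2, seed=42):
    """Return (train_indices, val_indices) with the same class mix in each part."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = round(len(indices) * val_fraction)
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)


# Placeholder labels: 7 classes x 100 posts, mirroring the dataset's shape.
labels = [f"class_{i % 7}" for i in range(700)]
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))  # 560 140
```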

Table 4.

Label distribution of mental health dataset.

Table 5.

Example sample.

4.2. Model and NLP Techniques

4.2.1. Preprocessing Steps

Both datasets underwent the preprocessing steps listed in Table 6.
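Before text reaches an LSTM, cleaned tokens must also be mapped to integer indices and padded to a fixed length. The sketch below shows one common way to do that; the reserved indices (0 for padding, 1 for out-of-vocabulary words), post-padding, and the `max_len` value are assumptions for illustration, not settings reported in this study.

```python
from collections import Counter

PAD_ID, OOV_ID = 0, 1  # reserved indices (assumed convention)


def build_vocab(token_lists, max_words=None):
    """Map each token to an integer id, most frequent tokens first (ids start at 2)."""
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    return {tok: i + 2 for i, (tok, _) in enumerate(counts.most_common(max_words))}


def encode_and_pad(tokens, vocab, max_len):
    """Convert tokens to ids, truncate to max_len, then right-pad with PAD_ID."""
    ids = [vocab.get(tok, OOV_ID) for tok in tokens][:max_len]
    return ids + [PAD_ID] * (max_len - len(ids))


docs = [["feel", "sad"], ["feel", "worried", "today"]]
vocab = build_vocab(docs)
print(encode_and_pad(["feel", "happy"], vocab, max_len=4))  # [2, 1, 0, 0]
```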

Table 6.

Preprocessing steps.

4.2.2. LSTM Model Architecture

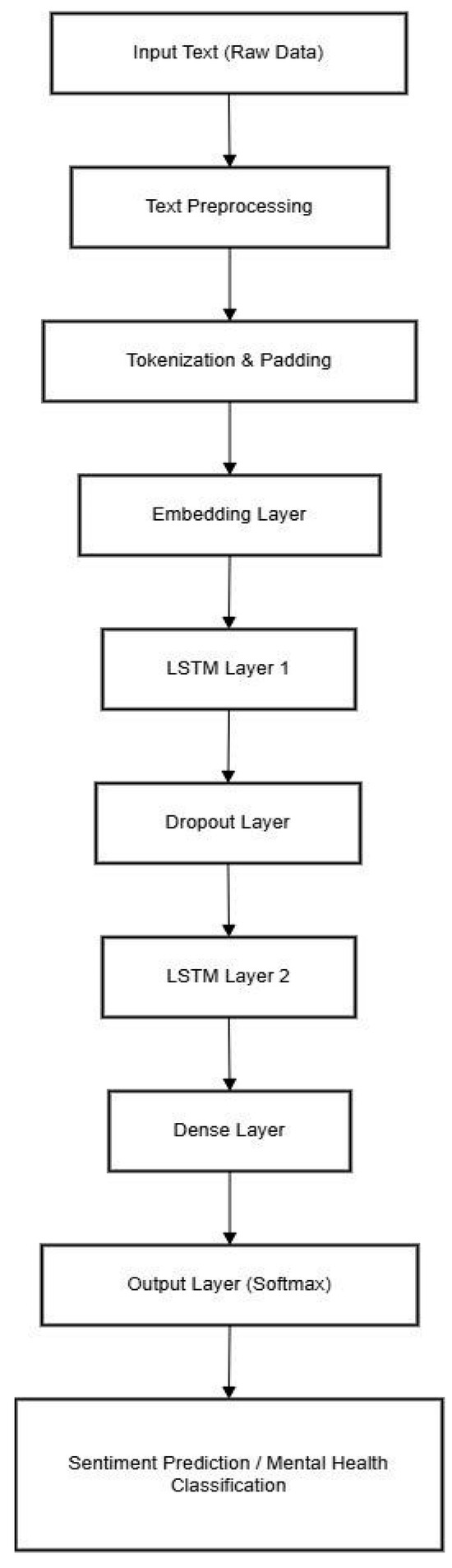

The NLP model used in this investigation is based on Long Short-Term Memory (LSTM), a recurrent neural network (RNN) architecture designed for processing sequential input. Separate models were trained for sentiment analysis and mental health classification. LSTMs are well suited to NLP tasks such as these because they are good at capturing long-term dependencies in text (see Figure 1).
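The gating that lets an LSTM retain long-range information can be written out for a single time step. The pure-Python sketch below implements one step of a standard LSTM cell (forget, input, and output gates plus a candidate cell state) and runs it over a short sequence. The tiny weight values are arbitrary assumptions, and the study’s actual layer sizes and framework are not reproduced here.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step. params[gate] = (W, U, b) for gates 'f', 'i', 'o', 'g'."""
    def pre(gate):
        W, U, b = params[gate]
        return [wx + uh + bb for wx, uh, bb in zip(matvec(W, x), matvec(U, h_prev), b)]

    f = [sigmoid(z) for z in pre("f")]    # forget gate: how much old memory to keep
    i = [sigmoid(z) for z in pre("i")]    # input gate: how much new content to write
    o = [sigmoid(z) for z in pre("o")]    # output gate: how much memory to expose
    g = [math.tanh(z) for z in pre("g")]  # candidate values for the cell state
    c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
    return h, c


def run_lstm(sequence, params, hidden_size):
    """Feed a sequence of input vectors through the cell; return the final hidden state."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h


# Arbitrary 1-dimensional example: input size 1, hidden size 1.
zero = ([[0.0]], [[0.0]], [0.0])
params = {"f": zero, "i": zero, "o": zero, "g": ([[1.0]], [[0.0]], [0.0])}
print(run_lstm([[1.0], [1.0], [1.0]], params, hidden_size=1))
```

A bidirectional LSTM, mentioned later as a possible improvement, runs a second cell over the reversed sequence and combines the two final hidden states.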

Figure 1.

LSTM architecture model diagram.

Performance evaluations across a variety of applications demonstrate the effectiveness of LSTM models in handling sequential data. Training and validation metrics are essential for evaluating model performance and diagnosing problems such as overfitting [22].

4.2.3. LSTM Sentiment Analysis Model

Over ten epochs, the training progress of the LSTM sentiment analysis model shows a slow initial learning phase, followed by appreciable gains and then signs of overfitting. For the first four epochs, accuracy stays at about 21% and the loss remains high, indicating that the model is having difficulty learning. By the fifth epoch, validation accuracy rises to 26.76% and the loss begins to decline, a notable improvement. Learning improves further between epochs six and seven, with training accuracy of 34.53% and validation accuracy of 31.84%.

Signs of overfitting appear from epoch eight onward. Validation accuracy stagnates at about 31% and validation loss starts to rise, while training accuracy continues to increase, reaching 45.33% in the final epoch; this suggests that the model is memorizing the training data rather than generalizing. Techniques such as increasing dropout, adding L2 regularization, using a learning rate scheduler, or applying early stopping could help avoid overfitting and improve performance. Feature extraction could also be improved by using a bidirectional LSTM or by adding LSTM layers to increase model capacity. A detailed breakdown of the performance metrics for each epoch is presented in Table 7.
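Early stopping, one of the remedies listed above, can be sketched as a patience counter on validation loss: training halts once the loss has failed to improve for `patience` consecutive epochs, and the weights from the best epoch are kept. The loss values below are hypothetical and are not the figures in Table 7.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch), both 1-indexed, for a validation-loss history."""
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0  # improvement: reset counter
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch  # stop; restore weights from best_epoch
    return best_epoch, len(val_losses)


# Hypothetical validation losses that bottom out and then rise (overfitting).
val_losses = [2.30, 2.28, 2.25, 2.10, 1.95, 1.90, 1.88, 1.91, 1.95, 2.02]
print(early_stopping_epoch(val_losses, patience=2))  # (7, 9)
```

Keras provides comparable behavior through its `EarlyStopping` callback (`monitor="val_loss"`, `patience`, `restore_best_weights=True`).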

Table 7.

Detailed breakdown of each epoch in Sentiment Analysis Model Performance.

4.2.4. LSTM Mental Health Classification Model Performance

Over 10 epochs, the training of the LSTM mental health classification model shows an initial learning phase, followed by improvements and, finally, signs of overfitting. The model struggles to learn during the first two epochs, with high loss and low accuracy of about 15–17%. By the third epoch, however, training accuracy rises significantly to 27.92%, indicating that the model is beginning to capture useful patterns. Accuracy continues to improve in the fourth and fifth epochs, reaching 32.18%, although validation accuracy fluctuates, indicating inconsistent generalization.

After the sixth epoch, training accuracy increases significantly, reaching 43%, but validation loss starts to rise, suggesting possible overfitting. This tendency becomes more pronounced in later epochs: validation accuracy stays low at 22.86% and validation loss keeps increasing, even as training accuracy reaches 66.94% in the final epoch. These findings imply that the model is memorizing the training data rather than generalizing to new data. Dropout regularization, reducing model complexity, hyperparameter tuning, or gathering more varied data could improve generalization by mitigating overfitting. The per-epoch results are detailed in Table 8.
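Dropout, suggested above as a remedy for overfitting, randomly zeroes activations during training. The sketch below shows the common "inverted" variant, which scales surviving activations by 1 / (1 - rate) so that their expected value is unchanged and no rescaling is needed at inference time. The rate and inputs are arbitrary illustrative values; deep learning frameworks provide this as a built-in layer.

```python
import random


def inverted_dropout(activations, rate, rng, training=True):
    """Zero each activation with probability `rate`; rescale survivors by 1 / (1 - rate)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # identity at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
out = inverted_dropout([1.0] * 1000, rate=0.5, rng=rng)
print(sum(out) / len(out))  # close to 1.0 on average
```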

Table 8.

Detailed breakdown of each epoch in Mental Health Classification Model Performance.

5. Discussion

Recent research has investigated the potential of machine learning and sentiment analysis methods to predict mental health conditions from social media content. Several models, such as Random Forest, Extra Trees, and Logistic Regression, have shown promise in diagnosing mental health disorders, with accuracies ranging from 82% to 99% [23]. Nonetheless, issues such as class imbalance and the difficulty of distinguishing between closely related disorders such as depression and anxiety remain [24]. Although sentiment analysis shows promise for evaluating mental health, further study is required to resolve these issues and improve precision for psychiatric applications [25]. Similarly, although NLP-based chatbots have shown efficacy in identifying mental health issues in teenagers, certain obstacles still need to be addressed. One of the primary obstacles is the difficulty of comprehending more complex situations, particularly those involving the informal or slang language that teenagers frequently use in everyday conversation [26]. To account for variations in users’ culture, language, and communication preferences, the chatbot’s NLP model must be trained on a more varied and representative dataset [27].

Furthermore, bias in the training data is a significant factor that may affect the model’s ability to accurately identify different emotional expressions [28]. Prior research has shown that NLP models tend to be biased towards particular groups depending on the data sources used in their training, so more inclusive data-processing methods are required to improve chatbot performance across a range of user demographics [29].

Data security and privacy are also major concerns when chatbots are used to help diagnose mental health issues. Laws such as the General Data Protection Regulation (GDPR) and health data security regulations require ethical management of user data, particularly in AI-based healthcare. Without robust security measures in place, users may be hesitant to share their emotions with chatbots.

Additionally, although chatbots can serve as early detection tools, professional intervention is still necessary to follow up on their results. Integration with mental health professionals, such as psychologists or counselors, can increase the effectiveness of early detection and intervention, as research indicates that human interaction remains necessary in more complex cases [30].

6. Conclusions

This study indicates that NLP-based chatbots hold considerable promise for the early detection of teenagers’ emotional states and mental health issues. By using sentiment analysis and machine learning techniques, the chatbot can recognize communication patterns that point to mental health conditions such as anxiety and sadness. To make the system more effective, however, further work is needed to improve user security and privacy, diversify the training data, and increase model accuracy. It is also crucial to integrate this technology with expert assistance so that detection results can be followed up with appropriate treatment. With improvements in these areas, NLP-based chatbots could become a useful and approachable tool that helps teenagers take a more proactive approach to managing their mental health.

Two datasets, both obtained from GitHub, were used in this project. The first, the Reddit Mental Health Sample, contains Reddit users’ discussions about mental health and offers real-world textual data that can be used to train models to identify patterns linked to various mental health conditions. The second, the Text Emotion Dataset, consists of text samples tagged with different emotions and is used to train models to identify emotional sentiment in user input.

Author Contributions

Conceptualization, N.G.F., D.A.H. and K.K.; methodology, N.G.F. and M.A.F.; software, N.G.F.; validation, N.G.F.; investigation, N.G.F., D.A.H. and M.A.F.; data curation, N.G.F. and M.A.F.; writing—original draft preparation, N.G.F.; writing—review and editing, N.G.F., D.A.H., M.A.F., K.K. and I.L.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are not publicly available due to privacy or ethical restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mishra, U. Emotion Detection In Virtual Assistants and Chatbots. Int. Res. J. Mod. Eng. Technol. Sci. 2023, 5, 1107–1112.

- Seoyunion. RedditMentalhealth Sample Dataset (CSV). Available online: https://github.com/seoyunion/RedditMentalhealth/blob/main/reddit_mentalhealth_sample.csv (accessed on 20 June 2025).

- Kanchitank. Text-Emotion-Analysis Dataset. Available online: https://github.com/kanchitank/Text-Emotion-Analysis/tree/master/dataset/data (accessed on 20 June 2025).

- World Health Organization (WHO). Mental Health of Adolescents. 10 October 2024. Available online: https://www.who.int/news-room/fact-sheets/detail/adolescent-mental-health (accessed on 20 June 2025).

- Gomez, A.; Roberts, L. Integrating AI Chatbots with Professional Mental Health Services. Healthc. AI Psychol. 2023, 14, 135–150.

- Infant, A.; Indirapriyadharshini, A.; Narmatha, P. Parents and Teenagers Mental Health Suggestions Chatbot Using Cosine Similarity. In E3S Web of Conferences; EDP Sciences: Les Ulis, France, 2024.

- Rajput, A.; Nazlah Al Yamaniyyah, A.; Arabia, S. Natural Language Processing, Sentiment Analysis and Clinical Analytics. In Innovation in Health Informatics; Academic Press: Amsterdam, The Netherlands, 2020.

- Singh, A.; Singh, J. Synthesis of Affective Expressions and Artificial Intelligence to Discover Mental Distress in Online Community. Int. J. Ment. Health Addict. 2024, 22, 1921–1946.

- Zygadło, A.; Kozłowski, M.; Janicki, A. Text-Based Emotion Recognition in English and Polish for Therapeutic Chatbot. Appl. Sci. 2021, 11, 10146.

- Kevin, B.; Vikin, B.; Nair, M. Building a Chatbot for Healthcare Using NLP. TechRxiv Prepr. 2023, 1–8.

- Luo, B.; Lau, R.Y.K.; Li, C.; Si, Y.W. A Critical Review of State-of-the-Art Chatbot Designs and Applications. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2022, 12, e1434.

- Belser, C.A. Comparison of Natural Language Processing Models for Depression Detection in Chatbot Dialogues. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2023.

- Grové, C. Co-Developing a Mental Health and Well-Being Chatbot with and for Young People. Front. Psychiatry 2021, 11, 606041.

- Kostenius, C.; Lindström, F.; Potts, C.; Pekkari, N. Young Peoples’ Reflections about Using a Chatbot to Promote Their Mental Well-Being in Northern Periphery Areas—A Qualitative Study. Int. J. Circumpolar Health 2024, 83, 2369349.

- Miller, C. Enhancing Accessibility of Mental Health Support through AI. J. Digit. Health Innov. 2021, 17, 80–95.

- Telezhenko, D.; Tolstoluzka, O. Development and training of LSTM models for control of virtual distributed systems using TensorFlow and Keras. GoS 2024, 38, 163–168.

- Abdelhalim, E.; Anazodo, K.S.; Gali, N.; Robson, K. A Framework of Diversity, Equity, and Inclusion Safeguards for Chatbots. Bus. Horiz. 2024, 67, 487–498.

- Jain, E.; Rathour, A. Understanding Mental Health through Sentiment Analysis: Using Machine Learning to Analyze Social Media for Emotional Insights. In Proceedings of the 5th International Conference on Data Intelligence and Cognitive Informatics (ICDICI), Tirunelveli, India, 18–20 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 782–786.

- European Union. General Data Protection Regulation (GDPR). 2018. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj (accessed on 20 June 2025).

- Bilquise, G.; Ibrahim, S.; Shaalan, K. Emotionally Intelligent Chatbots: A Systematic Literature Review. Hum. Behav. Emerg. Technol. 2022, 2022, 9601630.

- Smith, J.; Brown, A. Challenges in NLP-Based Chatbots for Mental Health. J. AI Psychol. 2023, 15, 120–135.

- Zhang, J. An Overview of the Application of Sentiment Analysis in Mental Well-Being. Appl. Comput. Eng. 2023, 8, 354–359.

- Lee, K.; Zhang, M. Bias in AI-Based Psychological Assessments. IEEE Trans. Comput. Intell. 2021, 30, 78–90.

- Machová, K.; Szabóová, M.; Paralič, J.; Mičko, J. Detection of Emotion by Text Analysis Using Machine Learning. Front. Psychol. 2023, 14, 1190326.

- Casu, M.; Triscari, S.; Battiato, S.; Guarnera, L.; Caponnetto, P. AI Chatbots for Mental Health: A Scoping Review of Effectiveness, Feasibility, and Applications. Appl. Sci. 2024, 14, 5889.

- Joshi, M.L.; Kanoongo, N. Depression Detection Using Emotional Artificial Intelligence and Machine Learning: A Closer Review. Mater. Today Proc. 2022, 58, 217–226.

- Wang, M. Data Representation and Bias in Sentiment Analysis Models. AI Soc. 2022, 18, 112–125.

- Sahu, P. Emotional Intelligence in Chatbots: Revolutionizing Human-Machine Interaction. Available online: https://www.researchgate.net/publication/375526296 (accessed on 20 June 2025).

- AlMakinah, R.; Norcini-Pala, A.; Disney, L.; Canbaz, M.A. Enhancing Mental Health Support through Human-AI Collaboration: Toward Secure and Empathetic AI-Enabled Chatbots. arXiv 2024, arXiv:2410.02783.

- Ashlin Deepa, R.N.; Karlapati, P.; Mulagondla, M.R.; Amaranayani, P.; Toram, A.P. An Innovative Emotion Recognition and Solution Recommendation Chatbot. In Proceedings of the 8th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 25–26 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1100–1105.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).