Abstract

This research explores and compares several nonparametric regression techniques: smoothing splines, natural cubic splines, B-splines, and penalized splines. The focus is on estimating parameters and determining the optimal number of knots to forecast cyclic and nonlinear patterns, applying these methods to simulated and real-world datasets, such as Thailand’s coal import data. Cross-validation is used to control and specify the number of knots so that the curve fits the data points accurately. The study applies nonparametric regression to forecast time series data with cyclic patterns and nonlinear forms in the dependent variable, treating the independent variable as sequential data. For experimentation, we use simulated data featuring cyclical patterns resembling economic cycles and nonlinear data generated from complex equations that capture variable interactions, with varying standard deviations and sample sizes. For the simulated data, the evaluation criterion is the average mean square error (AMSE), where the minimum value indicates the most efficient parameter estimation. For the real data, monthly coal import data from Thailand are used to estimate the parameters of the nonparametric regression model, with the MSE as the evaluation metric. The performance of these techniques in forecasting future values is also assessed using the mean absolute percentage error (MAPE). Among the methods, the natural cubic spline consistently yields the lowest AMSE across all standard deviations and sample sizes in the simulated data. While the natural cubic spline excels in parameter estimation, B-splines show strong performance in forecasting future values.

1. Introduction

Regression analysis is a statistical method used to determine the relationship between an independent and a dependent variable, allowing the dependent variable to be predicted from the independent one. However, the assumptions underlying regression analysis may hold for only some variables, and an incorrect parametric model can lead to misleading interpretations [1,2]. Furthermore, a suitable parametric model may not exist at all. Nonparametric regression methods can address these challenges by estimating the regression function when the data exhibit nonlinear relationships. Constructing a smooth curve from the available data in this way is often called the smoothing method, and it provides an alternative when traditional parametric models are unsuitable or the assumptions of regression analysis are not satisfied.

Nonparametric regression offers several advantages, making it a valuable tool in various research and data analysis fields. Its flexibility allows the model to capture complex and nonlinear relationships that parametric methods might overlook. Amodio et al. [3] noted that nonlinear models enabled nonparametric regression techniques to fit generalized additive models effectively. Salibian-Barrera [4] reviewed robust nonparametric regression methods for handling symmetric error distributions, which are particularly useful when the underlying relationships in the data are not well defined or vary across different sections. This approach allows for accurate predictions that capture intricate patterns and fluctuations in the data without imposing rigid constraints.

Parametric methods, by contrast, often assume that the data follow a specific distribution. EL-Morshedy et al. [5] introduced the discrete Burr–Hatke distribution to highlight the importance of parameter estimation in regression models. Nonparametric regression does not rely on such assumptions, making it suitable for analyzing data with unknown or nonstandard distributions. Gal et al. [6] proposed a method for estimating parameters in nonparametric regression using residuals based on both symmetric and nonsymmetric distributions. Moreover, nonparametric regression handles outliers and influential observations more effectively than some parametric methods. Because it focuses on local data points, outliers exert less influence on the overall fit, resulting in a model that is more robust to extreme values. Cizek and Sadikoglu [7] studied the robustness of nonparametric regression, noting that it requires only weak identification assumptions, thus minimizing the risk of model misspecification.

Nonparametric regression methods typically involve a dependent variable and one or more independent variables. The primary goal of nonparametric regression is to estimate a smoothing function that provides a refined representation of the data rather than to estimate regression coefficients. This smoothing function captures the underlying trend between the dependent variable and one or more independent variables. When there is only one independent variable, the technique is known as scatterplot smoothing, which improves the visual clarity of the scatterplot and makes trends in the relationship between the dependent and independent variables easier to identify [8]. Nonparametric regression can also estimate relationships between variables without assuming a specific functional form and supply the resulting estimates as generated covariates to models in which multiple equations are solved simultaneously [9].

Various methods exist for estimating nonparametric regression models, including the kernel smoothing method [10], local polynomial regression [11], regression splines [12], smoothing splines [13], and penalized splines [14]. Additionally, nonparametric regression models have been adapted for time series analysis, allowing for the modeling of potential nonlinear relationships. Chen and Hong [15] proposed nonparametric estimation techniques for testing smooth structural changes in time series models.

Nonparametric regression, a well-established smoothing technique, has recently seen applications in various fields. Demir and Toktamis [16] explored the nonparametric kernel estimator with a fixed bandwidth and the adaptive kernel estimator for long-tailed and multi-modal distributions. Shang and Cheng [17] examined the computational trade-offs of a distributed algorithm for smoothing splines, addressing critical challenges in applying distributed algorithms. Feng and Qian [18] proposed a dynamic natural cubic spline model with a two-step procedure for forecasting the yield curve. Than and Tjahjowidodo [19] discussed B-spline functions in various applications, including computer graphics, computer-aided design, and computer numerical control systems. Xiao [20] comprehensively studied the large-sample asymptotic theory of penalized splines, incorporating B-splines and an integrated squared derivative penalty.

In the context of economic growth, which is crucial for developing countries, many of the relevant data come in sequential form, such as time series data, user action sequences, or DNA sequences. Such sequential data include daily, monthly, quarterly, and yearly metrics such as unemployment, economic growth, gold prices, and exchange rates. Adams and Asemota [21] evaluated the performance of smoothing parameter selection methods in nonparametric regression models applied to time series data. Sriliana et al. [22] developed a mixed estimator nonparametric regression model for longitudinal data and applied it to model the poverty gap index. Nevertheless, accurate estimation or prediction of business-related information remains a challenge.

To address this challenge, we aim to evaluate the performance of nonparametric regression models, particularly in cases where the dependent variable exhibits cyclic patterns and nonlinear relationships with time series data. We apply various techniques, including smoothing splines, natural cubic splines, B-splines, and penalized splines. Gascoigne and Smith [23] proposed a new method for modeling unequal age–period–cohort data using penalized smoothing splines to select the best approximating function. Klankaew and Pochai [24] used natural cubic spline techniques to approximate solutions for groundwater quality assessments. Jiang and Lin [25] studied a methodology for parametrizing B-spline surfaces using a fast progressive-iterative approximation method to refit section curves. Tan and Zhang [26] applied penalized methods to find the indemnity function for designing weather index insurance.

The primary objective of this study is to systematically evaluate and compare the performance of various nonparametric regression methods, including smoothing splines, natural cubic splines, B-splines, and penalized splines, in the context of time series data exhibiting cyclic patterns and nonlinear relationships. By applying these methods, we aim to improve the accuracy of forecasting and parameter estimation, particularly in scenarios where traditional parametric models fail to capture the complexities inherent in the data.

An essential contribution of this research is the application of these nonparametric methods to both simulated and real-world datasets, focusing on Thailand’s monthly coal imports. This approach underscores the practical utility of nonparametric regression in addressing real-world challenges related to energy forecasting. The study also offers a comparative analysis of these methods based on performance metrics such as mean square error (MSE) and mean absolute percentage error (MAPE), providing insights into the most suitable techniques for different data types.

This research advances the field of nonparametric regression by demonstrating the flexibility of these models in capturing complex, nonlinear relationships while offering a detailed examination of how the choice of knots and smoothing parameters can influence forecast accuracy. The findings have significant implications for future work in time series analysis, particularly in areas where data do not adhere to parametric assumptions, making these techniques invaluable across various fields such as economics, engineering, and environmental studies.

This paper is structured as follows: Section 2 provides an overview of the nonparametric regression methodology. Section 3 outlines the process and presents our simulated data analysis results. Section 4 demonstrates the application of our proposed models to real-world data. Section 5 discusses the findings, and Section 6 presents our conclusions.

2. The Nonparametric Regression Model

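Nonparametric regression estimates the relationship between an independent variable ($t$) and a dependent variable ($y$) without specifying a parametric form for the regression function. Given observations $(t_i, y_i)$, the model is

$$y_i = f(t_i) + \varepsilon_i, \quad i = 1, \dots, n, \tag{1}$$

where $f(\cdot)$ is an unknown smooth function and $\varepsilon_i$ is the random error of the $i$th observation. Here, we present an overview of some standard methods for estimating $f$.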

Nonparametric regression is based on smoothing techniques, which produce a smoother: a tool for estimating the function $f$ of the predictor variable that can also make trends in a scatterplot easier to see.

As described by Eubank [27,28], a regression spline specifies local neighborhoods through a group of locations

$$\tau_0 < \tau_1 < \tau_2 < \cdots < \tau_K < \tau_{K+1} \tag{2}$$

in the interval of interest $[a, b]$, where $a = \tau_0$ and $b = \tau_{K+1}$. These locations are known as knots, and $\tau_r$, $r = 1, \dots, K$, are called interior knots.

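A regression spline can be constructed using the $k$th-degree truncated power basis with $K$ knots $\tau_1, \dots, \tau_K$:

$$\Phi_p(t) = \left[1, t, \dots, t^k, (t - \tau_1)_+^k, \dots, (t - \tau_K)_+^k\right]^T, \tag{3}$$

where $w_+^k = (w_+)^k$ denotes the $k$th power of the positive part of $w$, with $w_+ = \max(0, w)$, and $p = K + k + 1$ is the number of basis functions. The first $k + 1$ basis functions of the truncated power basis (3) are polynomials of degree up to $k$, and the others are all truncated power functions of degree $k$. A regression spline can be expressed as

$$f(t) = \sum_{s=0}^{k} \beta_s t^s + \sum_{r=1}^{K} \beta_{k+r} (t - \tau_r)_+^k = \Phi_p(t)^T \beta, \tag{4}$$

where $\beta = [\beta_0, \beta_1, \dots, \beta_{K+k}]^T$ are the unknown coefficients to be estimated by a suitable loss minimization.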
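To make the construction concrete, the following Python/NumPy sketch builds the truncated power basis (3) and estimates the coefficients of a regression spline by ordinary least squares. It is only an illustration, not the study's R code; the function names, the noisy sine data, and the five equally spaced interior knots are assumptions chosen for the example.

```python
import numpy as np

def truncated_power_basis(t, knots, degree=3):
    """n x (degree + 1 + K) design matrix with columns
    1, t, ..., t^k, (t - tau_1)_+^k, ..., (t - tau_K)_+^k."""
    t = np.asarray(t, dtype=float)
    knots = np.asarray(knots, dtype=float)
    poly = np.vander(t, degree + 1, increasing=True)                 # 1, t, ..., t^k
    trunc = np.maximum(t[:, None] - knots[None, :], 0.0) ** degree   # (t - tau_r)_+^k
    return np.hstack([poly, trunc])

def fit_regression_spline(t, y, knots, degree=3):
    """Ordinary least-squares estimate of beta in f(t) = Phi_p(t)^T beta."""
    X = truncated_power_basis(t, knots, degree)
    beta, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return beta, X @ beta

# Illustrative data (not from the study): a noisy sine curve with 5 interior knots
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 100)
y = np.sin(2 * np.pi * t) + rng.normal(0.0, 0.1, t.size)
knots = np.linspace(0.0, 1.0, 7)[1:-1]
beta, fitted = fit_regression_spline(t, y, knots)
```

Because the truncated power columns become nearly collinear as knots are added, this basis is numerically fragile for large $K$; the B-spline basis of Section 2.3 spans the same spline space with better conditioning.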

The smoothing techniques compared in this study are described in detail in the following subsections.

2.1. Smoothing Spline Method

The smoothing spline method estimates $f$ by minimizing the penalized least squares (PLS) criterion

$$\mathrm{PLS}(f) = \sum_{i=1}^{n} \left[y_i - f(t_i)\right]^2 + \lambda \int_a^b \left[f''(t)\right]^2 dt, \tag{5}$$

where the first term is the residual sum of squares, $\int_a^b [f''(t)]^2\,dt$ is the roughness penalty over the finite interval $[a, b]$, and $\lambda \ge 0$ is the smoothing parameter, which controls the trade-off between fidelity to the data and smoothness of the curve. We focus on the roughness penalty based on the second derivative, which yields the cubic smoothing spline commonly considered in the statistical literature [29].

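Following Wu and Zhang [30], the criterion can be written in matrix form. For a natural cubic spline with knots $\tau_1, \dots, \tau_K$, the roughness penalty can be expressed as

$$\int_a^b \left[f''(t)\right]^2 dt = \mathbf{f}^T G \mathbf{f}, \tag{6}$$

where $\mathbf{f} = [f(\tau_1), \dots, f(\tau_K)]^T$. In general, $K$ is the number of knots, and $\tau_1, \dots, \tau_K$ are all the knots of the smoothing spline (the distinct design points $t_i$), sorted in increasing order as $\tau_1 < \tau_2 < \cdots < \tau_K$.

Set $h_r = \tau_{r+1} - \tau_r$, $r = 1, \dots, K - 1$. Define the matrix $G$ as a $K \times K$ matrix:

$$G = Q R^{-1} Q^T. \tag{7}$$

Define $Q$ as a $K \times (K - 2)$ matrix, with columns indexed by $r = 2, \dots, K - 1$ and all the entries being 0 except for

$$q_{r-1,r} = \frac{1}{h_{r-1}}, \quad q_{r,r} = -\frac{1}{h_{r-1}} - \frac{1}{h_r}, \quad q_{r+1,r} = \frac{1}{h_r}, \quad r = 2, \dots, K - 1.$$

Define $R$ as a symmetric $(K - 2) \times (K - 2)$ matrix, indexed in the same way, with all the entries being 0 except

$$r_{r,r} = \frac{h_{r-1} + h_r}{3}$$

for $r = 2, \dots, K - 1$,

and

$$r_{r,r+1} = r_{r+1,r} = \frac{h_r}{6}, \quad r = 2, \dots, K - 2.$$

It follows that the PLS criterion from (6) and (7) can be written as

$$\mathrm{PLS}(\mathbf{f}) = \|\mathbf{y} - X\mathbf{f}\|^2 + \lambda\, \mathbf{f}^T G \mathbf{f}, \tag{8}$$

where $\mathbf{y} = [y_1, \dots, y_n]^T$ are the response variables and $X = (x_{ir})$ is an $n \times K$ incidence matrix with $x_{ir} = 1$ if $t_i = \tau_r$ and $x_{ir} = 0$ otherwise, for $i = 1, \dots, n$ and $r = 1, \dots, K$. Therefore, an explicit expression for the smoothing spline function $\hat{\mathbf{f}}$ evaluated at the knots is as follows:

$$\hat{\mathbf{f}} = \left(X^T X + \lambda G\right)^{-1} X^T \mathbf{y}. \tag{9}$$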
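The matrix form in (6) and (7) translates directly into code. The sketch below, in Python with NumPy, builds $Q$, $R$, and $G = QR^{-1}Q^T$ from the knots and solves the penalized normal equations for the fitted values at the knots. It assumes at least three distinct design points, and the function names and test data are illustrative assumptions rather than the paper's implementation.

```python
import numpy as np

def roughness_matrix(tau):
    """G = Q R^{-1} Q^T for sorted knots tau_1 < ... < tau_K (K >= 3)."""
    tau = np.asarray(tau, dtype=float)
    K = tau.size
    h = np.diff(tau)                      # h[r] = tau[r + 1] - tau[r] (0-based)
    Q = np.zeros((K, K - 2))
    R = np.zeros((K - 2, K - 2))
    for j in range(1, K - 1):             # interior knots r = 2, ..., K - 1 (1-based)
        c = j - 1                         # matching column of Q and row/column of R
        Q[j - 1, c] = 1.0 / h[j - 1]
        Q[j, c] = -1.0 / h[j - 1] - 1.0 / h[j]
        Q[j + 1, c] = 1.0 / h[j]
        R[c, c] = (h[j - 1] + h[j]) / 3.0
        if c + 1 < K - 2:
            R[c, c + 1] = R[c + 1, c] = h[j] / 6.0
    return Q @ np.linalg.solve(R, Q.T)

def smoothing_spline(t, y, lam):
    """Knots tau (distinct design points) and f_hat = (X^T X + lam G)^{-1} X^T y."""
    t = np.asarray(t, dtype=float)
    tau, idx = np.unique(t, return_inverse=True)
    X = np.zeros((t.size, tau.size))
    X[np.arange(t.size), idx] = 1.0       # incidence matrix: x_ir = 1 if t_i = tau_r
    G = roughness_matrix(tau)
    f_hat = np.linalg.solve(X.T @ X + lam * G, X.T @ np.asarray(y, dtype=float))
    return tau, f_hat

# Illustrative data: 50 equally spaced time points
rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 50)
y = np.sin(2 * np.pi * t) + rng.normal(0.0, 0.2, t.size)
tau, f_hat = smoothing_spline(t, y, lam=1e-4)
```

Since $G$ annihilates constant and linear vectors, a straight line is reproduced exactly for every $\lambda$, whereas $\lambda = 0$ interpolates the data; intermediate values of $\lambda$ trade off these two extremes.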

2.2. Natural Cubic Spline Method

Hastie and Tibshirani [31] studied the generalized additive model as a framework for determining the type of model required. In this framework, the smoothing functions can be estimated by spline smoothers, such as a thin plate regression spline or the natural cubic spline estimator.

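Polynomial fits tend to be erratic near the boundaries of the data, so extrapolation there is unreliable. The natural cubic spline reduces this uncertainty by imposing linearity beyond the boundary knots. For example, if the data lie in $[0, 1]$, the fitted function is linear to the left of 0 and to the right of 1; here, 0 and 1 are called boundary knots, and the other knots are interior knots. The linearity is enforced through the constraints $f''(t) = f'''(t) = 0$ that the spline satisfies at the boundary knots. These conditions are automatically satisfied by choosing the following basis for knots $\tau_1 < \cdots < \tau_K$:

$$N_1(t) = 1, \quad N_2(t) = t, \quad N_{j+2}(t) = d_j(t) - d_{K-1}(t), \quad j = 1, \dots, K - 2, \tag{10}$$

with

$$d_j(t) = \frac{(t - \tau_j)_+^3 - (t - \tau_K)_+^3}{\tau_K - \tau_j}. \tag{11}$$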

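The function of the predictor variable can be written in terms of the basis functions as

$$f(t) = \sum_{j=1}^{K} N_j(t)\, \theta_j, \tag{12}$$

where $N_j(t)$, $j = 1, \dots, K$, are a $K$-dimensional set of basis functions for representing this family of natural splines, and $\theta = [\theta_1, \dots, \theta_K]^T$ is the coefficient vector of the natural cubic spline with $K$ knots. Using the basis (10), the fitted natural cubic spline evaluated at the knots is as follows:

$$\mathbf{f} = N\theta, \tag{13}$$

where $N$ is the $n \times K$ matrix with entries $\{N\}_{ij} = N_j(t_i)$.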

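The coefficient estimator can be obtained by minimizing the penalized least squares criterion, as follows:

$$\mathrm{PLS}(\theta) = (\mathbf{y} - N\theta)^T(\mathbf{y} - N\theta) + \lambda\, \theta^T \Omega_N \theta, \tag{14}$$

where $\{\Omega_N\}_{jl} = \int N_j''(t)\, N_l''(t)\, dt$, so that $\hat{\theta} = (N^T N + \lambda \Omega_N)^{-1} N^T \mathbf{y}$.

Therefore, the fitted natural cubic spline evaluated at the knots depends on the smoothing parameter $\lambda$ as follows:

$$\hat{\mathbf{f}} = N\left(N^T N + \lambda \Omega_N\right)^{-1} N^T \mathbf{y}. \tag{15}$$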
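The natural cubic spline estimator can be sketched in the same way. The Python/NumPy code below builds the basis $N_1, \dots, N_K$ from the truncated cubics $d_j(t)$, computes the penalty matrix $\Omega_N$ with entries $\int N_j''(t) N_l''(t)\,dt$, and solves for $\hat\theta$. It is an illustrative sketch under stated assumptions: sorted distinct knots, hypothetical function names, and Simpson integration, which is exact here because every $N_j''$ is linear between adjacent knots.

```python
import numpy as np

def natural_cubic_basis(t, knots):
    """n x K natural cubic spline basis N_1, ..., N_K for sorted, distinct knots."""
    t = np.asarray(t, dtype=float)
    xi = np.asarray(knots, dtype=float)
    K = xi.size

    def d(j):
        # d_j(t) with a 0-based index j; d(K - 2) is d_{K-1} in the text's notation
        num = np.maximum(t - xi[j], 0.0) ** 3 - np.maximum(t - xi[K - 1], 0.0) ** 3
        return num / (xi[K - 1] - xi[j])

    d_last = d(K - 2)
    return np.column_stack([np.ones_like(t), t] + [d(j) - d_last for j in range(K - 2)])

def natural_cubic_second_derivs(t, knots):
    """Second derivatives N_j''(t); they vanish outside [tau_1, tau_K]."""
    t = np.asarray(t, dtype=float)
    xi = np.asarray(knots, dtype=float)
    K = xi.size

    def d2(j):
        diff = np.maximum(t - xi[j], 0.0) - np.maximum(t - xi[K - 1], 0.0)
        return 6.0 * diff / (xi[K - 1] - xi[j])

    d2_last = d2(K - 2)
    zeros = np.zeros_like(t)
    return np.column_stack([zeros, zeros] + [d2(j) - d2_last for j in range(K - 2)])

def natural_cubic_penalty(knots):
    """Omega_N[j, l] = integral of N_j'' N_l''. On each knot interval the integrand
    is a product of two linear functions, so Simpson's rule is exact there."""
    xi = np.asarray(knots, dtype=float)
    omega = np.zeros((xi.size, xi.size))
    for a, b in zip(xi[:-1], xi[1:]):
        pts = np.array([a, 0.5 * (a + b), b])
        w = (b - a) / 6.0 * np.array([1.0, 4.0, 1.0])
        S = natural_cubic_second_derivs(pts, xi)
        omega += S.T @ (w[:, None] * S)
    return omega

def fit_natural_cubic_spline(t, y, knots, lam):
    """theta_hat = (N^T N + lam Omega_N)^{-1} N^T y and the fitted values N theta_hat."""
    N = natural_cubic_basis(t, knots)
    Omega = natural_cubic_penalty(knots)
    theta = np.linalg.solve(N.T @ N + lam * Omega, N.T @ np.asarray(y, dtype=float))
    return theta, N @ theta

# Illustrative data (not from the study): 8 knots on [0, 1], 80 observations
rng = np.random.default_rng(2)
knots = np.linspace(0.0, 1.0, 8)
t = np.linspace(0.0, 1.0, 80)
y = np.cos(3 * t) + rng.normal(0.0, 0.1, t.size)
theta, fitted = fit_natural_cubic_spline(t, y, knots, lam=1e-3)
```

Evaluating the basis beyond the boundary knots gives functions that are linear there, which is the property that stabilizes the fit near the ends of a series.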

2.3. B-Splines Method

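A spline is a piecewise polynomial: a set of polynomial pieces joined at knot points [32]. Knots are the points at which the behavior of the data changes from one subinterval to the next. Given a nondecreasing knot sequence $\tau_1 \le \tau_2 \le \cdots$, the B-spline basis functions can be defined recursively, starting from

$$B_{i,1}(t) = \begin{cases} 1, & \tau_i \le t < \tau_{i+1}, \\ 0, & \text{otherwise}, \end{cases} \tag{16}$$

where $B_{i,1}(t)$ is the $i$th B-spline basis function of order 1 (degree 0) for the knot sequence $\tau$. Liu et al. [33] used an algorithm to compute B-splines of any degree on the piecewise polynomial function. The basis function of order $m$ (degree $m - 1$) is obtained from those of order $m - 1$ as

$$B_{i,m}(t) = \frac{t - \tau_i}{\tau_{i+m-1} - \tau_i} B_{i,m-1}(t) + \frac{\tau_{i+m} - t}{\tau_{i+m} - \tau_{i+1}} B_{i+1,m-1}(t), \tag{17}$$

where $B_{i,m}(t)$ is the $i$th B-spline basis function of order $m$ for the knot sequence $\tau$, and any term with a zero denominator is set to zero. The nonparametric regression model can be written in the form of B-splines as

$$y_i = \sum_{j=1}^{J} \beta_j B_{j,m}(t_i) + \varepsilon_i, \quad i = 1, \dots, n, \tag{18}$$

where $J$ is the number of basis functions ($J = K + m$ for $K$ interior knots when each boundary knot is repeated $m$ times).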

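Therefore, the fitted B-spline function evaluated at the knots is as follows:

$$\mathbf{f} = B\beta, \tag{19}$$

where $B$ is the $n \times J$ matrix with entries $\{B\}_{ij} = B_{j,m}(t_i)$. The penalized least squares criterion is given by

$$\mathrm{PLS}(\beta) = \|\mathbf{y} - B\beta\|^2 + \lambda\, \beta^T \Omega_B \beta, \tag{20}$$

where $\{\Omega_B\}_{jl} = \int B_{j,m}''(t)\, B_{l,m}''(t)\, dt$ and $\beta = [\beta_1, \dots, \beta_J]^T$ is the coefficient vector of the B-spline.

Therefore, the solution of the B-spline function $\hat{\mathbf{f}}$ to the minimization of PLS is

$$\hat{\mathbf{f}} = B\left(B^T B + \lambda \Omega_B\right)^{-1} B^T \mathbf{y}. \tag{21}$$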
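For B-splines, the recursion for $B_{i,m}(t)$ can be coded directly. The following Python/NumPy sketch evaluates all basis functions of a given order and, for simplicity, fits the coefficients by unpenalized least squares (that is, $\lambda = 0$). The study itself used R's splines package; the clamped knot vector, function names, and data here are illustrative assumptions.

```python
import numpy as np

def bspline_basis(t, knots, order):
    """All B-spline basis functions B_{i,m}(t) of order m (degree m - 1) on a
    nondecreasing knot sequence, computed with the Cox-de Boor recursion.
    Returns an n x (len(knots) - order) matrix."""
    t = np.asarray(t, dtype=float)
    tau = np.asarray(knots, dtype=float)
    # Order 1: indicator functions of the half-open intervals [tau_i, tau_{i+1})
    B = ((t[:, None] >= tau[None, :-1]) & (t[:, None] < tau[None, 1:])).astype(float)
    for m in range(2, order + 1):
        n_basis = tau.size - m
        B_new = np.zeros((t.size, n_basis))
        for i in range(n_basis):
            left = tau[i + m - 1] - tau[i]
            right = tau[i + m] - tau[i + 1]
            if left > 0:                  # terms with a zero denominator are dropped
                B_new[:, i] += (t - tau[i]) / left * B[:, i]
            if right > 0:
                B_new[:, i] += (tau[i + m] - t) / right * B[:, i + 1]
        B = B_new
    return B

def fit_bspline_ls(t, y, knots, order=4):
    """Unpenalized least-squares fit y ~ B beta (lambda = 0 in the PLS criterion)."""
    B = bspline_basis(t, knots, order)
    beta, *_ = np.linalg.lstsq(B, np.asarray(y, dtype=float), rcond=None)
    return beta, B @ beta

# Cubic B-splines (order 4) with 3 interior knots; boundary knots repeated 4 times
knots = np.r_[np.zeros(4), [0.25, 0.5, 0.75], np.ones(4)]
rng = np.random.default_rng(5)
t = np.linspace(0.0, 0.999, 60)           # stops short of 1: intervals are half-open
y = np.sin(2 * np.pi * t) + rng.normal(0.0, 0.1, t.size)
beta, fitted = fit_bspline_ls(t, y, knots)
```

With each boundary knot repeated $m$ times, the basis functions sum to one between the boundary knots; a point exactly at the right boundary falls outside every half-open interval, which is why the sample grid stops just short of 1.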

2.4. Penalized Spline Method

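The smoothing spline method [34] requires computing the integral that defines the roughness penalty $\int_a^b [f''(t)]^2\,dt$. The penalized spline method is designed to overcome this drawback by using the truncated power basis in Equation (3). Let $\Phi_p(t)$ denote the truncated power basis of degree $k$ with knots $\tau_1, \dots, \tau_K$. Then, we can express $f(t)$ in (1) as $f(t) = \Phi_p(t)^T \beta$, where $\beta = [\beta_0, \dots, \beta_{K+k}]^T$ is the associated coefficient vector. Let $D$ be a $(K + k + 1) \times (K + k + 1)$ diagonal matrix, with its first $k + 1$ diagonal entries being 0 and its other $K$ diagonal entries being 1. That is,

$$D = \mathrm{diag}(\underbrace{0, \dots, 0}_{k+1}, \underbrace{1, \dots, 1}_{K}). \tag{22}$$

Then, the penalized spline smoother is defined as $\hat{f}(t) = \Phi_p(t)^T \hat{\beta}$, where $\hat{\beta}$ is the minimizer of the following PLS criterion:

$$\mathrm{PLS}(\beta) = \|\mathbf{y} - \Phi\beta\|^2 + \lambda\, \beta^T D \beta, \tag{23}$$

where $\mathbf{y} = [y_1, \dots, y_n]^T$ and $\Phi = [\Phi_p(t_1), \dots, \Phi_p(t_n)]^T$. Because $D$ leaves the $k + 1$ polynomial coefficients unpenalized, a large $\lambda$ shrinks the fit toward a polynomial of degree $k$.

The penalized spline smoother evaluated at the design points can be expressed as

$$\hat{\mathbf{f}} = \Phi\left(\Phi^T \Phi + \lambda D\right)^{-1} \Phi^T \mathbf{y}. \tag{24}$$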
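A compact Python/NumPy sketch of the penalized spline smoother follows. It forms the truncated power design matrix $\Phi$, the diagonal penalty matrix $D$ (zeros for the $k + 1$ polynomial coefficients, ones for the $K$ truncated-power coefficients), and the smoother matrix $A_\lambda = \Phi(\Phi^T\Phi + \lambda D)^{-1}\Phi^T$. The knot placement (20 equally spaced interior knots), the data, and the function name are assumptions for illustration only.

```python
import numpy as np

def penalized_spline(t, y, knots, lam, degree=3):
    """Penalized spline smoother with a truncated power basis.
    Returns the fitted values and the smoother matrix A_lambda."""
    t = np.asarray(t, dtype=float)
    knots = np.asarray(knots, dtype=float)
    Phi = np.hstack([
        np.vander(t, degree + 1, increasing=True),                 # 1, t, ..., t^k
        np.maximum(t[:, None] - knots[None, :], 0.0) ** degree,    # (t - tau_r)_+^k
    ])
    # D: 0 for the k + 1 polynomial coefficients, 1 for the K truncated-power ones
    D = np.diag(np.r_[np.zeros(degree + 1), np.ones(knots.size)])
    A = Phi @ np.linalg.solve(Phi.T @ Phi + lam * D, Phi.T)
    return A @ np.asarray(y, dtype=float), A

# Illustrative data (not from the study)
rng = np.random.default_rng(3)
t = np.linspace(0.0, 1.0, 100)
y = np.sin(2 * np.pi * t) + rng.normal(0.0, 0.2, t.size)
knots = np.linspace(0.0, 1.0, 22)[1:-1]      # 20 interior knots
f_hat, A = penalized_spline(t, y, knots, lam=1e-3)
```

Because only the truncated-power coefficients are penalized, any cubic polynomial is fitted exactly for every $\lambda$, and the effective degrees of freedom $\operatorname{tr}(A_\lambda)$ decrease from $k + 1 + K$ toward $k + 1$ as $\lambda$ grows.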

2.5. Smoothing Parameter Estimation

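In this paper, the smoothing parameter $\lambda$ is selected by generalized cross-validation (GCV), as suggested by Wahba [35] and Craven and Wahba [36]. The best smoothing parameter is the value of $\lambda$ that minimizes the GCV function, a smoothness selection criterion [37], which is calculated as follows:

$$\mathrm{GCV}(\lambda) = \frac{n^{-1} \left\|(I - A_\lambda)\mathbf{y}\right\|^2}{\left[1 - n^{-1}\operatorname{tr}(A_\lambda)\right]^2}, \tag{25}$$

where the smoother matrix $A_\lambda$ is $X(X^T X + \lambda G)^{-1} X^T$ for the smoothing spline, $N(N^T N + \lambda \Omega_N)^{-1} N^T$ for the natural cubic spline, $B(B^T B + \lambda \Omega_B)^{-1} B^T$ for B-splines, and $\Phi(\Phi^T \Phi + \lambda D)^{-1} \Phi^T$ for the penalized spline.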
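GCV can be minimized by a simple grid search. The sketch below, again in Python with NumPy, computes the GCV score from a smoother matrix and applies it to a cubic penalized spline over a logarithmic grid of $\lambda$ values. The grid, the knots, and the helper names are assumptions for illustration; the same `gcv_score` function would accept the smoother matrix of any of the four methods.

```python
import numpy as np

def gcv_score(y, A):
    """GCV(lambda) = n^{-1} ||(I - A) y||^2 / (1 - tr(A) / n)^2."""
    y = np.asarray(y, dtype=float)
    n = y.size
    resid = y - A @ y
    return (resid @ resid / n) / (1.0 - np.trace(A) / n) ** 2

def ps_smoother_matrix(t, knots, lam, degree=3):
    """Smoother matrix of a penalized spline (truncated power basis, penalty D)."""
    t = np.asarray(t, dtype=float)
    knots = np.asarray(knots, dtype=float)
    Phi = np.hstack([np.vander(t, degree + 1, increasing=True),
                     np.maximum(t[:, None] - knots[None, :], 0.0) ** degree])
    D = np.diag(np.r_[np.zeros(degree + 1), np.ones(knots.size)])
    return Phi @ np.linalg.solve(Phi.T @ Phi + lam * D, Phi.T)

def select_lambda(t, y, knots, grid):
    """Grid value of lambda with the smallest GCV score, plus all scores."""
    scores = np.array([gcv_score(y, ps_smoother_matrix(t, knots, lam)) for lam in grid])
    return grid[int(np.argmin(scores))], scores

# Illustrative data and grid (assumptions, not the study's settings)
rng = np.random.default_rng(4)
t = np.linspace(0.0, 1.0, 120)
y = np.sin(2 * np.pi * t) + rng.normal(0.0, 0.3, t.size)
knots = np.linspace(0.0, 1.0, 22)[1:-1]
grid = 10.0 ** np.arange(-6, 3)
best_lam, scores = select_lambda(t, y, knots, grid)
```

A finer grid or a one-dimensional optimizer over $\log \lambda$ can refine the choice; the grid search keeps the example transparent.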

3. Simulation Data and Results

In the simulation study, we compared the performance of the smoothing spline (SS), natural cubic spline (NS), B-splines (BS), and penalized spline (PS) methods in estimating the dependent variable. The independent variable is a time index, and the dependent variable follows either cyclic patterns or nonlinear forms. The data were generated with 1000 replications [38,39,40] for sample sizes of 50, 100, 150, and 200. The analysis was performed using R, a language and environment for statistical computing [41], with the splines package [42] and the SemiPar package [43].

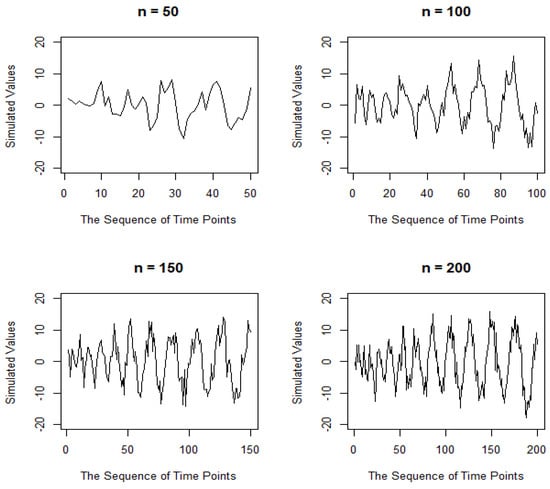

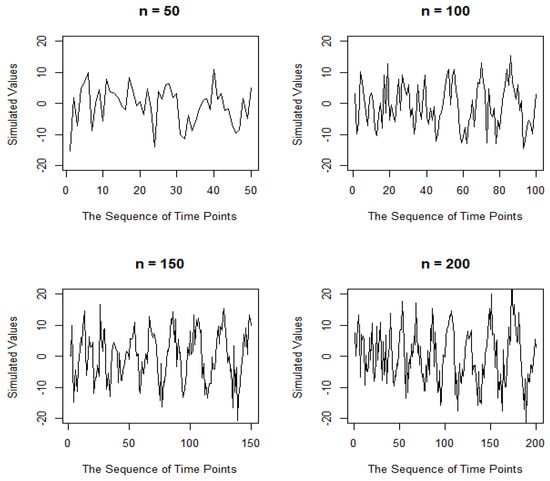

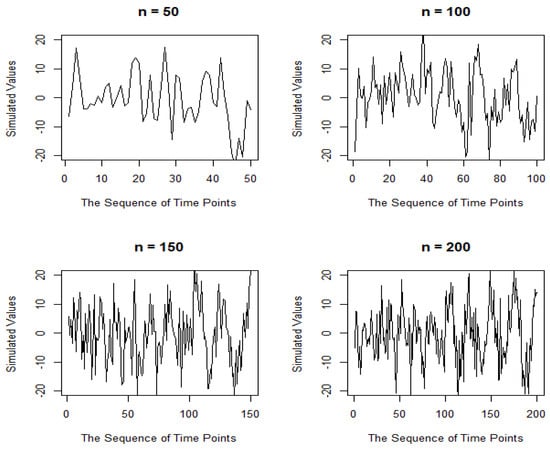

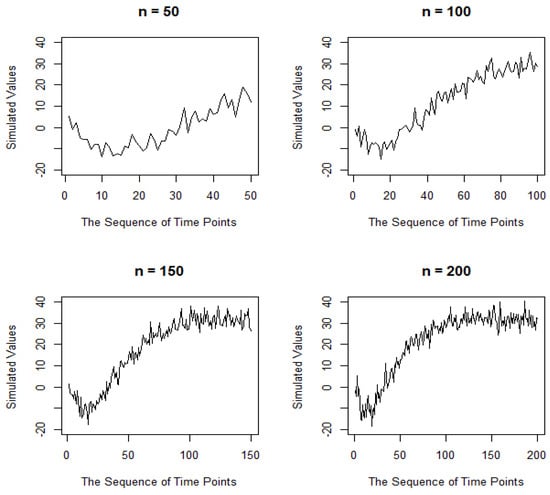

The following function simulates the cyclic patterns of the time series data:

where $\varepsilon_i$ is a normally distributed error term with mean zero and standard deviation 1, 3, 5, or 7, as shown in Figure 1, Figure 2, Figure 3 and Figure 4.

Figure 1.

The time series plot of cyclic patterns with a standard deviation of one for several sample sizes.

Figure 2.

The time series plot of cyclic patterns with a standard deviation of three for several sample sizes.

Figure 3.

The time series plot of cyclic patterns with a standard deviation of five for several sample sizes.

Figure 4.

The time series plot of cyclic patterns with a standard deviation of seven for several sample sizes.

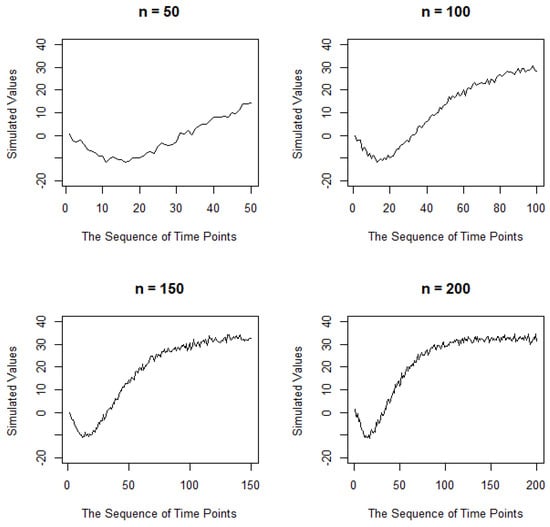

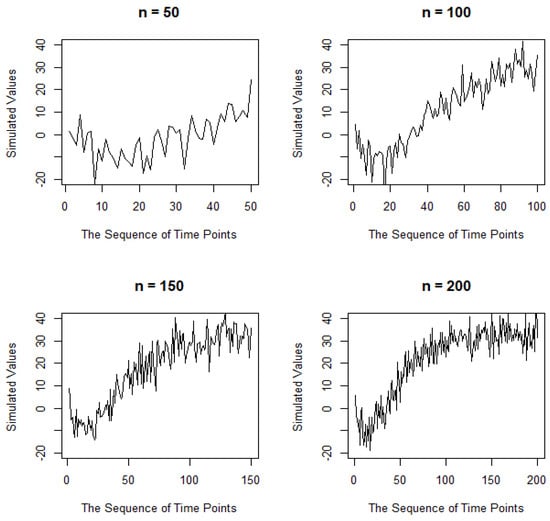

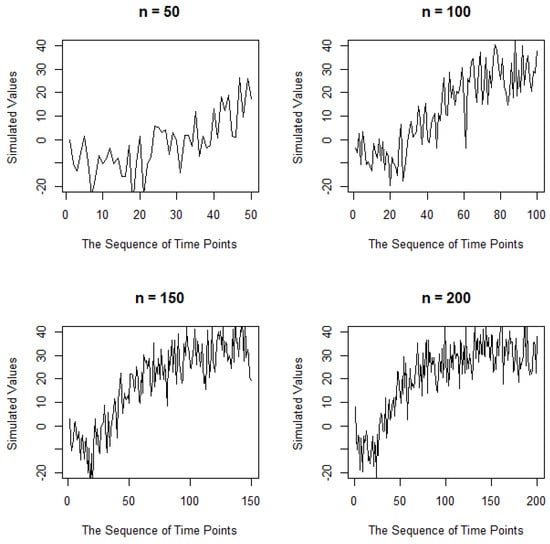

For the nonlinear forms, the dependent variable is simulated from the following function:

where $\varepsilon_i$ is a normally distributed error term with mean zero and standard deviation 1, 3, 5, or 7, as shown in Figure 5, Figure 6, Figure 7 and Figure 8.

Figure 5.

The time series plot of nonlinear forms with a standard deviation of one for several sample sizes.

Figure 6.

The time series plot of nonlinear forms with a standard deviation of three for several sample sizes.

Figure 7.

The time series plot of nonlinear forms with a standard deviation of five for several sample sizes.

Figure 8.

The time series plot of nonlinear forms with a standard deviation of seven for several sample sizes.

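The performance of estimation is compared by the average mean square error (AMSE) as follows:

$$\mathrm{AMSE} = \frac{1}{M} \sum_{m=1}^{M} \mathrm{MSE}_m,$$

where $M$ is the number of replications and $\mathrm{MSE}_m$ is the MSE of the $m$th replication. The mean square error (MSE) is computed by

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2,$$

where $y_i$ is the dependent variable and $\hat{y}_i$ is the estimated dependent variable from the nonparametric regression methods.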
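The AMSE computation can be sketched as a loop over replications. In the Python/NumPy code below, the data generator and the fitting function are passed in as callables; the sine-based generator and the cubic polynomial fit are hypothetical stand-ins, because the study's simulation functions and spline fits are not reproduced here.

```python
import numpy as np

def mse(y, y_hat):
    """Mean square error between observed and estimated values."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    return float(np.mean((y - y_hat) ** 2))

def amse(simulate, fit, n_rep=1000, seed=0):
    """Average of the per-replication MSEs over n_rep simulated data sets."""
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(n_rep):
        t, y = simulate(rng)
        total += mse(y, fit(t, y))
    return total / n_rep

# Hypothetical stand-ins, not the study's simulation function or estimators
def simulate_cycle(rng, n=100, sd=1.0):
    t = np.arange(1, n + 1, dtype=float)
    return t, 10.0 * np.sin(2 * np.pi * t / 12.0) + rng.normal(0.0, sd, n)

def cubic_fit(t, y):
    return np.polyval(np.polyfit(t, y, 3), t)

value = amse(simulate_cycle, cubic_fit, n_rep=100)
```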

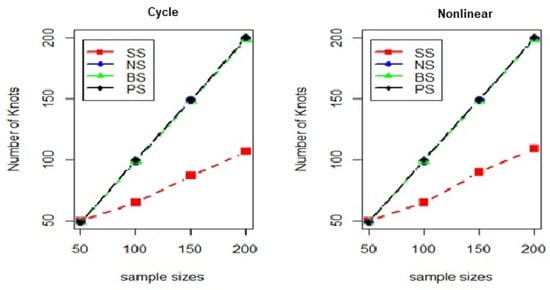

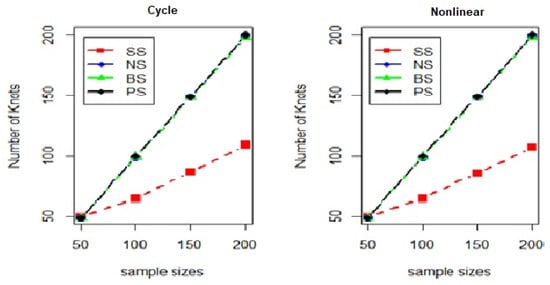

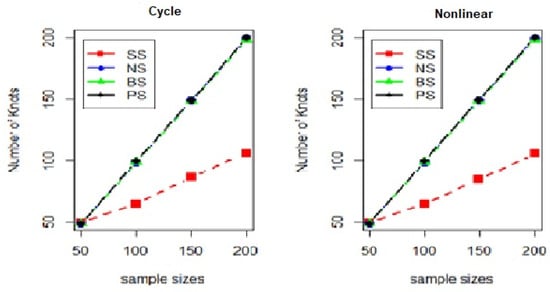

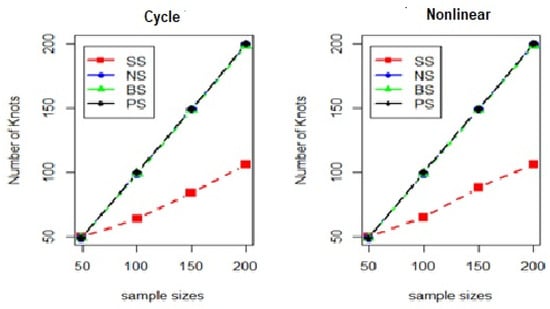

The simulation results, consisting of the average mean square error (AMSE) and the mean number of knots for the smoothing spline (SS), natural cubic spline (NS), B-splines (BS), and penalized spline (PS) under error standard deviations of 1, 3, 5, and 7, are presented in Table 1, Table 2, Table 3 and Table 4. The numbers of knots for each method and sample size are also plotted in Figure 9, Figure 10, Figure 11 and Figure 12.

Table 1.

The average mean square error (AMSE) and the mean number of knots (Knot) for a standard deviation of one.

Table 2.

The average mean square error (AMSE) and the mean number of knots (Knot) for a standard deviation of three.

Table 3.

The average mean square error (AMSE) and the mean number of knots (Knot) for a standard deviation of five.

Table 4.

The average mean square error (AMSE) and the mean number of knots (Knot) for a standard deviation of seven.

Figure 9.

Plots of the number of knots for the cyclic and nonlinear forms with a standard deviation of one.

Figure 10.

Plots of the number of knots for the cyclic and nonlinear forms with a standard deviation of three.

Figure 11.

Plots of the number of knots for the cyclic and nonlinear forms with a standard deviation of five.

Figure 12.

Plots of the number of knots for the cyclic and nonlinear forms with a standard deviation of seven.

From Table 1, Table 2, Table 3 and Table 4, the AMSE for the cyclic data differs only slightly from that for the nonlinear data, particularly for the natural cubic spline, B-splines, and penalized spline methods. However, the AMSE for the smoothing spline is higher than that for the other methods, and it uses fewer knots, as shown in Figure 9, Figure 10, Figure 11 and Figure 12. The number of knots for the various methods tends to approach the sample size. The AMSE increases as the standard deviation rises, reflecting the effect of noise on the fitting performance, whereas increasing the sample size did not noticeably change the estimation results. The results also show that the natural cubic spline consistently yields the lowest AMSE among the nonparametric regression models.

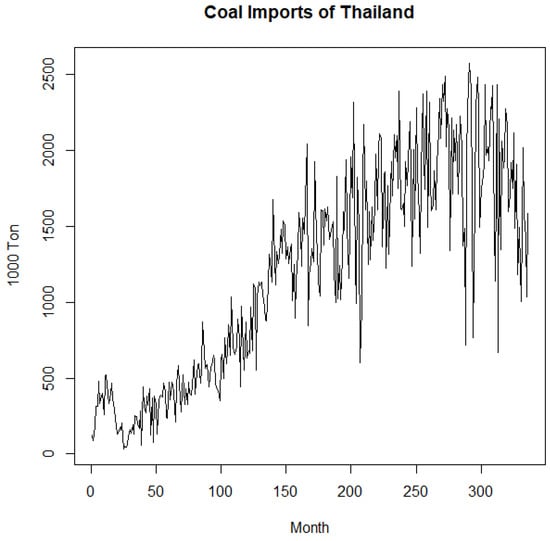

4. Application with Real Data

Coal imports have played a significant role in Thailand’s energy sector, contributing to energy security, baseload power generation, cost-effectiveness, industrial use, and overall economic development. In recent years, estimating and forecasting coal imports has gained renewed attention as Thailand reassesses its energy strategies in light of environmental considerations. This study examines a dataset of Thailand’s coal imports consisting of 336 monthly records (in 1000 tons) from January 1996 to December 2023, sourced from https://www.eppo.go.th/index.php/th/energy-information/static-energy/coal-lignite (accessed on 1 October 2024). The dataset exhibits cyclic patterns, with a seasonal component and nonlinear trends, as shown in Figure 13.

Figure 13.

The time series plot of Thailand’s coal imports.

The empirical analysis applies four nonparametric regression methods to estimate the smoothing function for Thailand’s coal imports. The independent variable is the sequence of 336 months, and the dependent variable is the monthly coal import volume (in thousands of tons). The mean square error (MSE) measures the differences between actual and estimated values, whereas the mean absolute percentage error (MAPE) expresses prediction accuracy in percentage terms; together they are used to evaluate the precision of both estimation and forecasting. They are calculated as follows:

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2$$

and

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left|\frac{y_i - \hat{y}_i}{y_i}\right|,$$

where $y_i$ and $\hat{y}_i$ are the actual and estimated (or forecast) values and $n$ is the number of periods evaluated.
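The two accuracy measures are straightforward to compute. This Python/NumPy sketch assumes the conventional percentage form of MAPE (multiplied by 100) and nonzero actual values; the three-month figures are made up for illustration and are not Thailand’s coal import data.

```python
import numpy as np

def mse(actual, predicted):
    """Mean square error of estimated or forecast values."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean((actual - predicted) ** 2))

def mape(actual, predicted):
    """Mean absolute percentage error in percent; actual values must be nonzero."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(100.0 * np.mean(np.abs((actual - predicted) / actual)))

# Hypothetical three-month example (thousands of tons), not the study's data
actual = [2000.0, 2500.0, 1600.0]
forecast = [1900.0, 2600.0, 1600.0]
print(mse(actual, forecast), mape(actual, forecast))
```

Because MAPE divides by the actual values, it is scale-free and comparable across series, whereas MSE keeps the squared units of the data and weights large errors more heavily.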

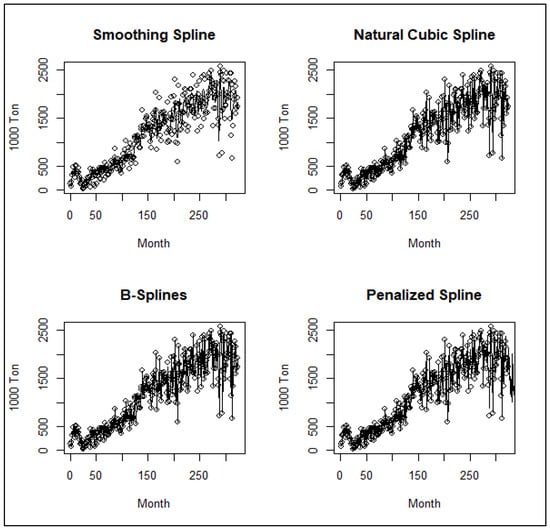

The fitted smoothing spline, natural cubic spline, B-spline, and penalized spline models, from which the MSEs and knots are obtained, are displayed in Figure 14.

Figure 14.

The fitted nonparametric regression model of Thailand’s coal imports.

As Figure 14 shows, the fitted curves are visually similar, especially those of the natural cubic spline, B-splines, and penalized spline, which makes it difficult to identify the best-performing method by inspection. The MSEs are therefore used to identify the best-performing method, with the results presented in Table 5.

Table 5.

The mean square errors (MSEs) and knots of the nonparametric regression methods.

Table 5 presents the optimal knots and MSE values for the smoothing spline, natural cubic spline, B-spline, and penalized spline methods. The natural cubic spline yielded the lowest MSE, indicating that it is the most accurate estimation method for this dataset.

Following the estimation, these nonparametric regression models are used to forecast future values for the next 12 months, with MAPE calculated to assess the accuracy over the given period. Table 6 displays the actual data, predicted values, and MAPE for all four methods.

Table 6.

The volume of coal imports per month, forecasting values for 12 months, and MAPE.

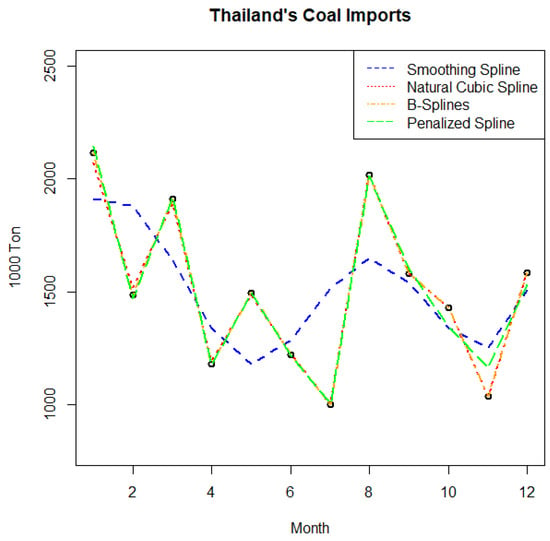

Table 6 shows that the B-splines method yielded the lowest MAPE, at 0.0027. While the natural cubic spline proved to be the most suitable method for estimating real data, B-splines outperformed it in forecasting future values, as shown in Figure 15.

Figure 15.

Plot of actual data (o) and forecasts for 12 future periods.

Figure 15 compares the four nonparametric regression methods (smoothing spline, natural cubic spline, B-splines, and penalized spline) in forecasting Thailand’s coal imports over 12 months. The B-splines and penalized spline methods both provide the closest fit to the actual data points, while the natural cubic spline also performs well, though with a smoother curve. In contrast, the smoothing spline shows larger variation and deviates from the other methods, particularly around months 7 and 9. Overall, the B-splines and penalized spline methods offer the best fit for forecasting coal imports.

5. Discussion

The simulation results in Table 1, Table 2, Table 3 and Table 4 show that the natural cubic spline outperforms the other nonparametric regression methods across all sample sizes and standard deviations. In addition, based on the AMSE, estimation is more accurate for the nonlinear forms than for the cyclic patterns. Increasing the sample size and standard deviation did not change the best-performing method, as the nonparametric methods allow flexible interpolation and extrapolation through knots, making them versatile for various applications. Nonparametric regression is particularly useful when linearity is not a reasonable assumption for the data.

Regarding the impact of knots in the real dataset, the four nonparametric regression methods use an increasing number of knots when forecasting future data, as seen in Table 7. However, increasing the number of knots does not necessarily yield the best method, and the time spent finding optimal knots may not always be justified. This study suggests that B-splines are more effective for forecasting future values, while smoothing splines, natural cubic splines, and penalized splines rely on the same knot approach described in [44]. Knots are specific points in the independent variable space where the relationship between the independent and dependent variables may shift. They are commonly used in spline-based nonparametric regression methods, such as cubic splines or piecewise linear regression, where they improve model flexibility and accuracy.

Table 7.

The number of knots, mean square errors (MSEs), and mean absolute percentage errors (MAPE) for Thailand’s coal imports.

6. Conclusions

The significance of this study lies in its comparison of popular nonparametric regression methods, namely smoothing splines, natural cubic splines, B-splines, and penalized splines, on both simulated data and a real-world dataset. Cyclic patterns and nonlinear forms were simulated across several standard deviations and sample sizes. The natural cubic spline consistently achieved the lowest AMSE for parameter estimation, demonstrating its effectiveness for time series data. The real dataset of Thailand’s coal imports produced fitted model results similar to those obtained from the simulated data. However, as Mineo et al. [45] noted, B-splines are particularly suitable for forecasting future values, and they gave the lowest MAPE in this study. Despite these advantages, nonparametric regression presents challenges, including the risk of overfitting, the need for larger sample sizes, and increased computational complexity. Ultimately, the choice between parametric and nonparametric regression methods depends on the characteristics of the data and the goals of the analysis.

Future research could focus on increasing the number of knots to improve model accuracy and exploring other nonparametric regression techniques, such as kernel smoothing or local polynomial regression. These methods could be tested on datasets with varying complexities. It will also be essential to consider computational efficiency for larger datasets and to adopt a broader range of error metrics, such as RMSE or MAE, to evaluate forecasting accuracy more comprehensively.

Funding

This work was financially supported by King Mongkut’s Institute of Technology Ladkrabang Research Fund (Grant number: 2566-02-05-005).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Ernst, A.F.; Alber, C.J. Regression assumptions in clinical psychology research practice-a systematic review of common misconceptions. PeerJ 2017, 5, e3323. [Google Scholar] [CrossRef]

- Flatt, C.; Jacobs, R.L. Principle assumptions of regression analysis: Testing, techniques, and statistical reporting of imperfect data sets. Adv. Dev. Hum. Resour. 2019, 21, 484–502. [Google Scholar] [CrossRef]

- Amodia, S.; Aria, M.; D’Ambrosio, A. On concurvity in nonlinear and nonparametric regression models. Statistica 2014, 74, 85–98. [Google Scholar]

- Salibian-Barrera, M. Robust nonparametric regression: Review and practical considerations. Econom. Stat. 2023, 26, 1–28. [Google Scholar] [CrossRef]

- EL-Morshedy, M.; Eliwa, M.S.; Altun, E. Discrete Burr-Hatke Distribution with properties, estimation methods and regression model. IEEE Access 2022, 8, 74359–74370. [Google Scholar] [CrossRef]

- Gal, Y.; Zhu, X.; Zhang, J. Testing symmetry of model errors for nonparametric regressions by using correlation coefficient. Commun. Stat. Simul. Comput. 2022, 51, 1436–1453. [Google Scholar]

- Cizek, P.; Sadikoglu, S. Robust nonparametric regression: A Review. WIREs Comput. Stat. 2022, 12, 1–16. [Google Scholar]

- Ahamada, I.; Flachaire, E. Non-Parametric Econometrics; Oxford University Press: Oxford, UK, 2010; pp. 19–20. [Google Scholar]

- Mammen, E.; Rothe, C.; Scienle, M. Nonparametric regression with nonparametrically generated covariates. Ann. Stat. 2012, 40, 1132–1170. [Google Scholar] [CrossRef]

- Ghosh, S. Kernel Smoothing: Principles, Methods and Application; John Wiley & Sons Ltd.: Hoboken, NJ, USA, 2018; pp. 19–20. [Google Scholar]

- Yuan, Y.; Zhu, H.; Lin, W.; Marron, J.S. Local polynomial regression for symmetric positive definite matrices. J. R. Stat. Soc. B Stat. Method 2012, 74, 697–719. [Google Scholar] [CrossRef]

- Qi, X.; Wang, H.; Pan, X.; Chu, J.; Chiam, K. Prediction of interfaces of geological formations using the multivariate adaptive regression spline method. Undergr. Space 2021, 6, 252–266. [Google Scholar] [CrossRef]

- Wang, Y. Smoothing Splines Methods and Applications; CRC Press: Boca Raton, FL, USA; Taylor & Francis Group: Boca Raton, FL, USA, 2011; pp. 11–12. [Google Scholar]

- Zhou, T.; Elliott, M.R.; Little, J.A. Penalized spline of propensity methods for treatment comparison. J. Am. Stat. Assoc. 2019, 114, 1–19. [Google Scholar] [CrossRef]

- Chen, B.; Hong, Y. Testing for smooth structural changes in time series models via nonparametric regression. Econometrica 2012, 80, 1157–1183. [Google Scholar]

- Demir, S.; Toktamis, O. On the adaptive Nadaraya-Watson kernel regression estimators. Hacet. J. Math. Stat. 2010, 39, 429–437. [Google Scholar]

- Shang, Z.; Cheng, G. Computational limits of a distributed algorithm for smoothing spline. J. Mach. Learn. Res. 2017, 18, 1–37. [Google Scholar]

- Feng, P.; Qian, J. Forecasting the yield curve using a dynamic natural cubic spline model. Econ. Lett. 2018, 168, 73–76. [Google Scholar] [CrossRef]

- Than, D.V.; Tjahjowidodo, T. A direct method to solve optimal knots of B-splines curve: An application for non-uniform B-splines curves fitting. PLoS ONE 2017, 12, e0173857. [Google Scholar]

- Xiao, L. Asymptotic theory of penalized spline. Electron. J. Stat. 2019, 13, 747–794. [Google Scholar] [CrossRef]

- Adams, S.O.; Asemota, O.J. The efficiency of the proposed smoothing methods over classical cubic smoothing spline regression model with autocorrelated residual. J. Math. Stat. Stud. 2023, 4, 26–40.

- Sriliana, I.; Budiantara, I.N.; Ratnasari, V. The performance of mixed truncated spline-local linear nonparametric regression model for longitudinal data. MethodsX 2024, 12, 102652.

- Gascoigne, C.; Smith, T. Penalized smoothing splines resolve the curvature identifiability problem in age-period-cohort models with unequal intervals. Stat. Med. 2023, 42, 1888–1908.

- Klankaew, P.; Pochai, N. A numerical groundwater quality assessment model using the cubic spline method. IAENG Int. J. Appl. Math. 2024, 54, 111–116.

- Jiang, X.; Lin, Y. Reparameterization of B-spline surface and its application in ship hull modeling. Ocean Eng. 2023, 286, 115535.

- Tan, K.S.; Zhang, J. Flexible weather index insurance design with penalized splines. N. Am. Actuar. J. 2024, 28, 1–26.

- Eubank, R.L. Spline Smoothing and Nonparametric Regression. J. R. Stat. Soc. A Stat. Soc. 1989, 152, 119–120.

- Eubank, R.L. Nonparametric Regression and Spline Smoothing, 2nd ed.; Marcel Dekker: New York, NY, USA, 1999; pp. 27–28.

- Green, P.J.; Silverman, B.W. Nonparametric Regression and Generalized Linear Models: A Roughness Penalty Approach; Chapman and Hall: London, UK, 1994; pp. 19–20.

- Wu, H.; Zhang, J.T. Nonparametric Regression Methods for Longitudinal Data Analysis: Mixed-Effects Modeling Approaches; John Wiley & Sons: Hoboken, NJ, USA, 2006; pp. 50–52.

- Hastie, T.; Tibshirani, R. Generalized Additive Models; Chapman and Hall: London, UK, 1990; pp. 27–28.

- Wood, S.N. Generalized Additive Models: An Introduction with R; Chapman & Hall/CRC: Boca Raton, FL, USA, 2006; pp. 204–206.

- Liu, X.; Wang, X.; Wu, Z.; Zhang, D.; Liu, X. Extending Ball B-splines by B-splines. Comput. Aided Geom. Des. 2020, 82, 101926.

- Ruppert, D.; Wand, M.P.; Carroll, R.J. Semiparametric Regression; Cambridge University Press: Cambridge, UK, 2003; pp. 65–67.

- Wahba, G. Spline Models for Observational Data; SIAM: Philadelphia, PA, USA, 1990; pp. 45–50.

- Craven, P.; Wahba, G. Smoothing noisy data with spline functions: Estimating the correct degree of smoothing by the method of generalized cross-validation. Numer. Math. 1979, 31, 377–403.

- Li, A.; Cao, J. Automatic search intervals for the smoothing parameter in penalized splines. Stat. Comput. 2023, 33, 1–18.

- Altukhaes, W.D.; Roozbeh, M.; Mohamed, N.A. Robust Liu estimator used to combat some challenges in partially linear regression model by improving LTS algorithm using semidefinite programming. Mathematics 2024, 12, 2787.

- Abonazel, M.R.; Awwad, F.A.; Eldin, E.T.; Kibria, B.M.G.; Khattab, I.G. Developing a two-parameter Liu estimator for the COM–Poisson regression model: Application and simulation. Front. Appl. Math. Stat. 2023, 9, 956963.

- Lukman, A.F.; Ayinde, K.; Kun, S.S.; Adewuyi, E.T. A modified new two-parameter estimator in a linear regression model. Model. Simul. Eng. 2019, 2019, 6342702.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2024; Available online: https://www.R-project.org/ (accessed on 14 June 2024).

- R Core Team. Splines Package. Available online: https://stat.ethz.ch/R-manual/R-devel/library/splines/html/ (accessed on 14 March 2024).

- Ruppert, D.; Wand, M.P.; Carroll, R.J. SemiPar Package. Available online: https://CRAN.R-project.org/package=SemiPar (accessed on 16 September 2024).

- Partyka, A.W. Organ surface reconstruction using B-splines and Hu moments. Acta Polytech. Hung. 2014, 10, 151–161.

- Mineo, E.; Alencar, A.P.; Moura, M.; Fabris, A.E. Forecasting the term structure of interest rates with dynamic constrained smoothing B-splines. J. Risk Financ. Manag. 2020, 13, 65.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).