Artificial Intelligence in Finance: From Market Prediction to Macroeconomic and Firm-Level Forecasting

Abstract

1. Introduction

Methods of Literature Search

2. Model Families and Learning Components

2.1. Regularized & Baseline Linear Models

2.2. Trees and Ensembles

1. Bootstrap Aggregation (Bagging): Each tree is grown on a bootstrap sample of the training data (drawn with replacement), increasing model diversity.

2. Random Feature Selection: At each split, only a random subset of predictors is considered, which decorrelates the trees, reducing variance and improving generalization.

- Robustness to Overfitting: Averaging over many trees reduces variance while maintaining predictive power [7].

- Nonlinear Modeling Capability: RF captures complex, nonlinear relationships without strong parametric assumptions [3].

- Variable Importance Measures: RF provides internal estimates of feature relevance, enhancing interpretability in economic and financial settings.

- Black-Box Nature: Interpretability remains limited compared to linear econometric models [6].

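- Bias in Extrapolations: Because each tree predicts an average of training targets, RF cannot produce values outside the range seen in training and performs poorly on inputs far from the training data, making it less suitable for structural counterfactual analysis.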

- Class Imbalance: In financial forecasting tasks with skewed datasets, RF can be biased toward majority classes, requiring resampling or weighting strategies [23].

- Computational Demands: Large forests can be resource-intensive, though parallelization mitigates this problem.
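The two randomization mechanisms described in this section, bootstrap aggregation and per-split random feature selection, can be sketched in a few lines. This is a minimal illustration rather than a full random forest: the helper names (`bootstrap_indices`, `feature_subset`, `bagged_mean`) are hypothetical, and the "model" fitted on each resample is simply a sample mean standing in for a tree.

```python
import random


def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices with replacement (one bootstrap replicate)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def feature_subset(n_features, k, rng):
    """Pick k distinct candidate features to consider at a single split."""
    return sorted(rng.sample(range(n_features), k))


def bagged_mean(values, n_models, rng):
    """Average n_models stand-in 'models', each the mean of one bootstrap sample."""
    preds = []
    for _ in range(n_models):
        idx = bootstrap_indices(len(values), rng)
        preds.append(sum(values[i] for i in idx) / len(idx))
    return sum(preds) / len(preds)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
idx = bootstrap_indices(10, rng)                    # may contain repeated rows
feats = feature_subset(n_features=9, k=3, rng=rng)  # 3 of 9 predictors
values = [1.0, 2.0, 3.0, 4.0, 5.0]
ensemble_pred = bagged_mean(values, n_models=50, rng=rng)
```

In a real forest each resample would train a decision tree, and `feature_subset` would be called afresh at every split; averaging the resulting trees is what reduces variance.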

2.3. Neural Architecture

- Activation Functions: evolving from sigmoid to ReLU and its variants to mitigate vanishing gradients [27].

- Regularization Techniques: integration of hidden-layer pruning via group Lasso to enforce sparsity and improve efficiency [9].

- Metaheuristic Optimization: incorporating swarm intelligence or evolutionary algorithms to optimize weights and architectures [25].

- Hybrid Forecasting: combining feedforward neural structures with econometric models for financial time series [20].
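As an illustration of the group-Lasso idea mentioned above, the sketch below computes a penalty that sums the Euclidean norms of weight groups. The grouping used here (one group per hidden unit, containing that unit's outgoing weights) and the names `group_lasso_penalty`, `outgoing`, and `lam` are assumptions for illustration; the cited work may define groups differently.

```python
import math


def group_lasso_penalty(groups, lam):
    """Return lam * sum over groups of the L2 norm of each group's weights."""
    return lam * sum(math.sqrt(sum(w * w for w in g)) for g in groups)


# Hypothetical outgoing weights for three hidden units (one list per unit).
outgoing = [[3.0, 4.0], [0.0, 0.0], [1.0, 0.0]]

penalty = group_lasso_penalty(outgoing, lam=0.1)  # 0.1 * (5 + 0 + 1)

# A unit whose whole group has been driven to zero can be pruned.
prunable = [j for j, g in enumerate(outgoing) if all(w == 0.0 for w in g)]
```

Because the norm is taken per group rather than per weight, the penalty tends to zero out entire hidden units at once, which is what makes pruning possible.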

Attention and Transformer Architectures

2.4. Learning Components

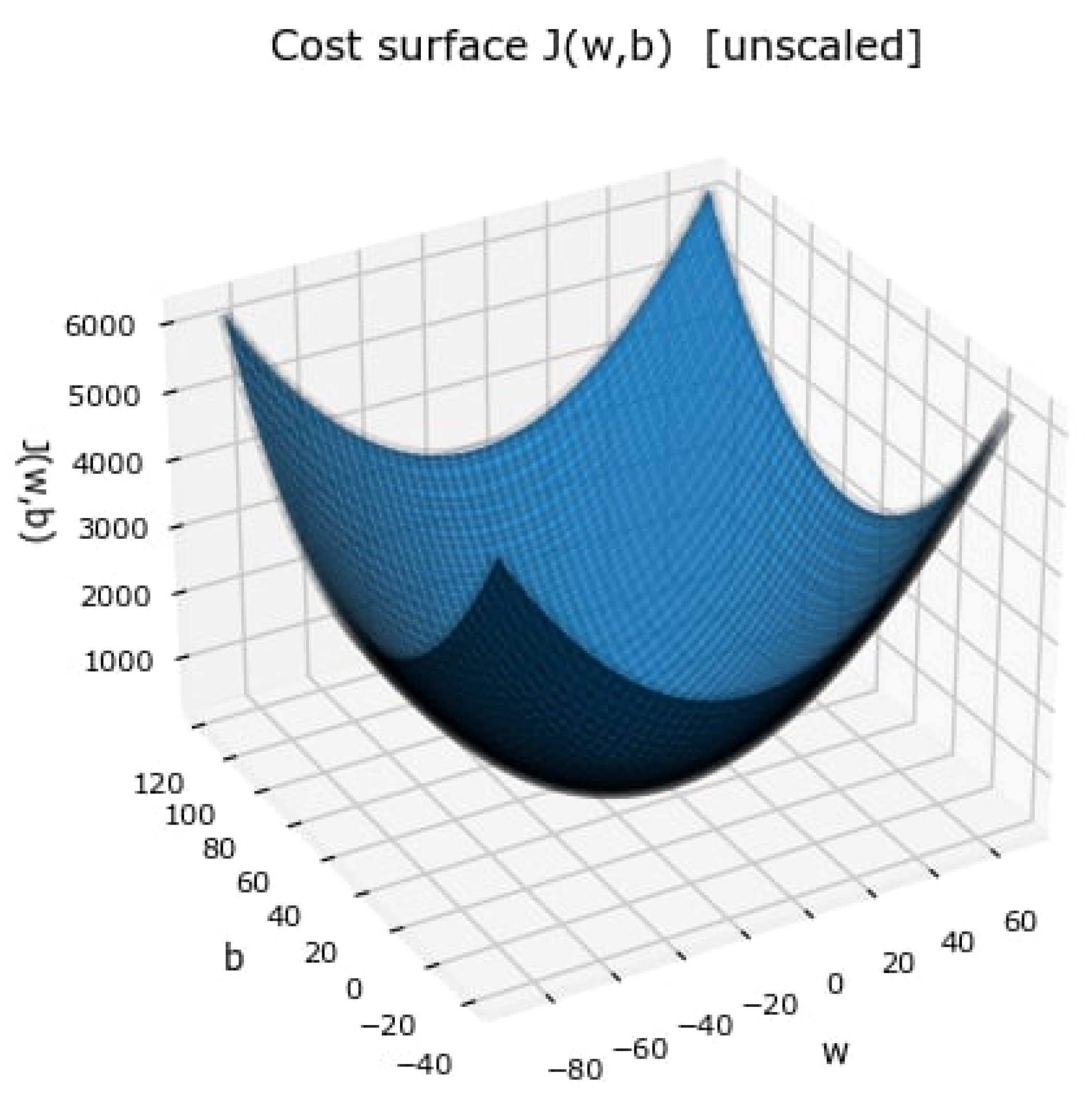

2.4.1. Cost Functions

- $m$ is the number of training examples.

- $f(x^{(i)})$ is the prediction for input $x^{(i)}$.

- $y^{(i)}$ is the true target value.

- The squared term $(f(x^{(i)}) - y^{(i)})^2$ represents the error for each example.

- The cost is averaged over the dataset (division by $m$), normalizing it across datasets of different sizes.

- The factor of 2 in the denominator cancels the exponent when differentiating, which simplifies the derivatives when applying gradient descent later.
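The role of the factor of 2 can be made explicit with a short derivation. The notation is an assumption following common convention: $f_{w,b}$ denotes the model with parameters $w$ and $b$, and $w_j$ is any single weight.

$$
J(w, b) = \frac{1}{2m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right)^2
$$

$$
\frac{\partial J}{\partial w_j}
= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( f_{w,b}(x^{(i)}) - y^{(i)} \right) \frac{\partial f_{w,b}(x^{(i)})}{\partial w_j}
= \frac{1}{m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right) \frac{\partial f_{w,b}(x^{(i)})}{\partial w_j}
$$

The 2 produced by the power rule cancels the $\frac{1}{2}$, leaving a gradient with no stray constant. The minimizer is unchanged, since scaling a cost by a positive constant does not move its minimum.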

2.4.2. Loss Functions

- Loss is the measure of error for a single training example.

- Cost aggregates the loss across all training examples, often as an average.
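The distinction between loss and cost can be shown with a small sketch. It assumes a squared-error loss and the conventional one-half scaling in the cost; the function names and the toy numbers are illustrative only.

```python
def squared_loss(prediction, target):
    """Loss: the error for a single training example."""
    return (prediction - target) ** 2


def cost(predictions, targets):
    """Cost: the loss averaged over all examples, with the usual 1/2 factor."""
    m = len(targets)
    total = sum(squared_loss(p, t) for p, t in zip(predictions, targets))
    return total / (2 * m)


preds = [2.0, 4.0, 7.0]
targets = [1.0, 4.0, 5.0]

per_example = [squared_loss(p, t) for p, t in zip(preds, targets)]
total_cost = cost(preds, targets)  # (1 + 0 + 4) / (2 * 3)
```

The per-example losses show which observations drive the error, while the single cost value is what the optimizer minimizes.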

2.4.3. Optimization (Gradient Descent)

- $J$ is the cost function.

- $\frac{\partial J}{\partial w}$ and $\frac{\partial J}{\partial b}$ are the partial derivatives (the gradients).

- $\alpha$ is the learning rate, a scalar controlling the step size.
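A minimal sketch of batch gradient descent follows, assuming a one-feature linear model and the mean-squared-error cost with the one-half factor. The names `w`, `b`, and `alpha` (weight, bias, learning rate) and the toy data are assumptions for illustration.

```python
def gradient_descent(xs, ys, alpha=0.1, steps=1000):
    """Fit y ~ w * x + b by repeatedly stepping against the cost gradient."""
    w, b = 0.0, 0.0
    m = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / m  # dJ/dw
        grad_b = sum(errors) / m                             # dJ/db
        # Update both parameters simultaneously, scaled by the learning rate.
        w -= alpha * grad_w
        b -= alpha * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1, no noise
w, b = gradient_descent(xs, ys)
```

On this noise-free data the parameters approach `w = 2` and `b = 1`. A learning rate that is too large would make the iterates diverge; one that is too small would need many more steps.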

2.4.4. Linear Activation

- $z = w^\top x + b$ is the pre-activation (weighted sum).

- $a = z$ is the activation, which in the linear case is simply the identity function.

- The neuron outputs a continuous value, suitable for predicting numerical targets.

- The cost function (mean squared error) directly evaluates how close the linear activation outputs are to the actual target values.

- Lack of expressiveness. If all layers in a neural network use purely linear activations, the composition of functions collapses into a single linear transformation. For example, two stacked linear layers give $W_2 (W_1 x + b_1) + b_2 = (W_2 W_1) x + (W_2 b_1 + b_2)$, which is still linear in $x$. Thus, adding more layers provides no additional representational power.

- Inability to model complex functions. Many real-world problems involve nonlinear patterns (classification, signal recognition, image tasks). A network of linear activations cannot approximate such functions. This motivates the use of nonlinear activations such as sigmoid, tanh, or ReLU.
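The collapse of stacked linear layers can be checked numerically. The sketch below uses small hand-written matrix helpers and arbitrary illustrative weights (`W1`, `b1`, `W2`, `b2` are assumptions, not values from the paper).

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A by matrix B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def add(u, v):
    """Add two vectors elementwise."""
    return [a + b for a, b in zip(u, v)]


W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]  # layer 1: 2 inputs -> 3 units
b1 = [0.5, -0.5, 1.0]
W2 = [[2.0, 0.0, 1.0]]                      # layer 2: 3 units -> 1 output
b2 = [0.25]
x = [1.5, -2.0]

# Two linear layers applied in sequence.
two_layer = add(matvec(W2, add(matvec(W1, x), b1)), b2)

# The equivalent single layer: W = W2 W1, b = W2 b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)
one_layer = add(matvec(W, x), b)
```

Both computations give the same output for any input, which is why depth adds nothing without a nonlinear activation between layers.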

2.4.5. Sigmoid Activation

- $z$ is the linear combination of inputs and parameters.

- $a = \sigma(z) = \frac{1}{1 + e^{-z}}$ is the activated output.

1. Probabilistic Interpretation: Outputs lie in $(0, 1)$, naturally mapping to probabilities.

2. Non-linearity: Introduces the ability to learn nonlinear relationships beyond linear models.

3. Universality: As shown by universal approximation theorems, networks with sigmoid activations can approximate any measurable function to any desired degree of accuracy given sufficiently many hidden units.

4. Ease of Implementation: Historically, sigmoid was widely adopted due to its mathematical simplicity and interpretability.

- Vanishing Gradients: For large $|z|$, the gradient approaches zero, which slows down or prevents learning in deep architectures.

- Non-zero-centered outputs: Since values lie in $(0, 1)$, gradients during optimization may propagate inefficiently.

- Slower convergence compared to alternatives such as ReLU or tanh, especially in multi-layer networks.

- LSTM networks for stock forecasting employ sigmoid gates (input, forget, and output) to regulate information flow, crucial in sequential modeling of financial time series [34].

- Feedforward forecasting models integrate sigmoid functions at output layers to transform weighted inputs into directional probabilities of market movement [34].

- Hybrid forecasting models also rely on sigmoid-based layers in combination with other methods, ensuring probabilistic classification outputs.
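The sigmoid's output range and its vanishing gradient can be seen directly in a short sketch. The function names are illustrative; the derivative identity $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ is standard.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sigmoid_grad(z):
    """Derivative of the sigmoid: s * (1 - s), which peaks at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


# Gradient shrinks rapidly as the pre-activation moves away from zero.
grads = {z: sigmoid_grad(z) for z in (0.0, 2.0, 5.0, 10.0)}
```

The gradient is at most 0.25 (at `z = 0`) and falls below `1e-4` by `z = 10`, so products of such factors across many layers shrink quickly. This is the vanishing-gradient effect noted above.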

3. Signals and Feature Sets in the Literature

3.1. Macroeconomic Indicators

3.2. Financial Market Signals

3.3. Company-Level and Operational Data

3.4. Feature Engineering Patterns

3.5. Data Quality and Leakage Risks

3.6. Summary

4. Task-Centric Synthesis and Practical Guidance

- Use returns for directional or classification tasks.

- Use levels or growth rates for magnitude forecasting (GDP, revenue, etc.).

- Cross-entropy or AUC for directional accuracy.

- RMSE, MAE, or MAPE for continuous prediction.

- Include regularization (ridge/lasso/elastic-net) to stabilize estimation.

- Level 1: Linear/logistic regression, to understand gradient descent and overfitting.

- Level 2: Regularized linear models, to control complexity in high-dimensional data.

- Level 3: Tree ensembles (RF, GBM), to capture nonlinearities and interactions.

- Markets & firms: rolling or expanding windows.

- Macroeconomics: vintage or pseudo-real-time evaluation.

- Build features only from information available at or before the forecast date.

- Use purged or embargoed splits for overlapping horizons.

- Report both accuracy and probability calibration.

- Run ablations against strong ensemble baselines to test if added complexity pays off.
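The rolling and expanding window schemes above, together with a gap between training and test data, can be sketched as a simple index generator. This is a simplified stand-in for purged or embargoed splitting: it only drops a fixed number of observations between the end of training and the start of testing. The function name and its parameters (`train_size`, `test_size`, `embargo`, `expanding`) are hypothetical.

```python
def walk_forward_splits(n_obs, train_size, test_size, embargo=0, expanding=False):
    """Yield (train_indices, test_indices) pairs in time order.

    embargo: observations skipped between the last training index and the
    first test index, so overlapping-horizon labels do not leak.
    expanding: if True, training always starts at index 0.
    """
    train_end = train_size
    while train_end + embargo + test_size <= n_obs:
        train_start = 0 if expanding else train_end - train_size
        test_start = train_end + embargo
        yield (list(range(train_start, train_end)),
               list(range(test_start, test_start + test_size)))
        train_end += test_size  # roll the origin forward by one test block


splits = list(walk_forward_splits(n_obs=12, train_size=5, test_size=2, embargo=1))
expanding_splits = list(
    walk_forward_splits(n_obs=12, train_size=5, test_size=2, embargo=1, expanding=True)
)
```

Every training index precedes every test index in each fold, so features and labels used for fitting never come from after the forecast date. For a forecast horizon of `h` periods, an embargo of at least `h - 1` observations is a common starting point, though the right value depends on how labels overlap.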

| Forecasting Task | Appropriate Metrics | Notes/Caveats |
|---|---|---|
| Directional classification | Log-loss (cross-entropy), Brier score, calibration curves | Penalize overconfidence; check probability calibration |
| Magnitude regression | Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), Mean Absolute Percentage Error (MAPE, with caution) | MAPE unstable near zero values; MAE/RMSE generally preferred |
| Portfolio/decision tasks | Utility-adjusted or cost-sensitive metrics (profit-and-loss, Sharpe ratio, downside risk) | Evaluation should reflect economic usefulness rather than purely statistical fit |

4.1. Financial Markets

4.2. Macroeconomics

4.3. Company-Level Planning

4.4. Cross-Cutting Guidance

- Directional classification (e.g., predicting up/down market moves) is best evaluated with log-loss, Brier score, or calibration curves, since these capture not only classification accuracy but also the reliability of predicted probabilities.

- Magnitude regression (e.g., forecasting GDP growth, inflation, or asset returns) is typically assessed using MAE and RMSE. MAPE is sometimes applied for interpretability, but its instability when targets are near zero makes it less reliable in macroeconometric or financial settings.

- Portfolio or decision-driven tasks (e.g., trading strategies or asset allocation) require utility-adjusted or cost-sensitive losses, such as realized profit-and-loss, Sharpe ratio, or downside risk measures. These metrics ensure that evaluation reflects the economic value of predictions, not only their statistical accuracy.
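The statistical metrics named above are short enough to write out directly. The sketch below is a plain-Python illustration with made-up numbers; it shows how log-loss penalizes overconfident errors and how MAPE inflates when a target is close to zero. Function names and the `eps` clipping constant are assumptions.

```python
import math


def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped away from 0 and 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)


def brier_score(y_true, p_pred):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, p_pred)) / len(y_true)


def mae(y_true, y_pred):
    return sum(abs(y - f) for y, f in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    return math.sqrt(sum((y - f) ** 2 for y, f in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error; undefined when any target is zero."""
    return 100.0 * sum(abs((y - f) / y) for y, f in zip(y_true, y_pred)) / len(y_true)


# The same wrong call, made with high versus moderate confidence.
confident_wrong = log_loss([1], [0.01])
hedged_wrong = log_loss([1], [0.4])

# Two forecasts with identical absolute error (0.1), one target near zero.
actual = [0.01, 2.0]
forecast = [0.11, 2.1]
abs_err = mae(actual, forecast)   # about 0.1
pct_err = mape(actual, forecast)  # dominated by the near-zero target
```

Here the absolute error is the same for both observations, yet MAPE exceeds 500% because the first target is 0.01. This is the instability that motivates preferring MAE or RMSE for growth rates and returns.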

5. Challenges and Open Problems

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ARIMA | Autoregressive Integrated Moving Average |
| AUC | Area Under the Curve |
| BOJ | Bank of Japan |
| CPI | Consumer Price Index |
| ECB | European Central Bank |
| ENS | Ensemble Methods |
| FNN | Feedforward Neural Network |
| GDP | Gross Domestic Product |
| IMF | International Monetary Fund |
| ISM | Institute for Supply Management |
| KPIs | Key Performance Indicators |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| MASE | Mean Absolute Scaled Error |
| ML | Machine Learning |
| NN | Neural Network |
| OLS | Ordinary Least Squares |
| PMI | Purchasing Managers’ Index |
| ReLU | Rectified Linear Unit |
| RF | Random Forest |
| RMSE | Root Mean Squared Error |
| RNN | Recurrent Neural Network |
| SGD | Stochastic Gradient Descent |
| SiLU | Sigmoid Linear Unit |
| sMAPE | Symmetric Mean Absolute Percentage Error |
| SVM | Support Vector Machine |

References

- Maehashi, K.; Shintani, M. Macroeconomic Forecasting Using Factor Models and Machine Learning: An Application to Japan. J. Jpn. Int. Econ. 2020, 58, 101104.

- Muskaan, M.; Sarangi, P.K. A Literature Review on Machine Learning Applications in Financial Forecasting. JTMGE 2020, 11, 23–27.

- Masini, R.P.; Medeiros, M.C.; Mendes, E.F. Machine Learning Advances for Time Series Forecasting. arXiv 2021, arXiv:2012.12802.

- Crone, S.F.; Hibon, M.; Nikolopoulos, K. Advances in Forecasting with Neural Networks? Empirical Evidence from the NN3 Competition on Time Series Prediction. Int. J. Forecast. 2011, 27, 635–660.

- Fama, E.F. Efficient Capital Markets: A Review of Theory and Empirical Work. J. Financ. 1970, 25, 383–417.

- Long, X.; Kampouridis, M.; Jarchi, D. An In-Depth Investigation of Genetic Programming and Nine Other Machine Learning Algorithms in a Financial Forecasting Problem. In Proceedings of the 2022 IEEE Congress on Evolutionary Computation (CEC), Padua, Italy, 18–23 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–8.

- Rayner, B.; Bolhuis, M. Deus Ex Machina? A Framework for Macro Forecasting with Machine Learning. IMF Work. Pap. 2020, 2020, 25.

- Coulombe, P.G. The Macroeconomy as a Random Forest. arXiv 2020, arXiv:2006.12724.

- Smalter Hall, A. Machine Learning Approaches to Macroeconomic Forecasting. Econ. Rev. 2018.

- Elliott, G.; Gargano, A.; Timmermann, A. Complete Subset Regressions. J. Econom. 2013, 177, 357–373.

- Lanbouri, Z.; Achchab, S. Stock Market Prediction on High Frequency Data Using Long-Short Term Memory. Procedia Comput. Sci. 2020, 175, 603–608.

- Borovkova, S.; Tsiamas, I. An Ensemble of LSTM Neural Networks for High-frequency Stock Market Classification. J. Forecast. 2019, 38, 600–619.

- Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) Network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Wang, Z.; Kong, F.; Feng, S.; Wang, M.; Yang, X.; Zhao, H.; Wang, D.; Zhang, Y. Is Mamba Effective for Time Series Forecasting? arXiv 2024, arXiv:2403.11144.

- Liang, A.; Jiang, X.; Sun, Y.; Shi, X.; Li, K. Bi-Mamba+: Bidirectional Mamba for Time Series Forecasting. arXiv 2024, arXiv:2404.15772.

- Li, Q.; Qin, J.; Cui, D.; Sun, D.; Wang, D. CMMamba: Channel Mixing Mamba for Time Series Forecasting. J. Big Data 2024, 11, 153.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Comput. Surv. 2022, 54, 1–41.

- Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation Functions: Comparison of Trends in Practice and Research for Deep Learning. In Proceedings of the 2nd International Conference on Computational Sciences and Technology, Jamshoro, Pakistan, 17–19 December 2018.

- Patel, J.; Shah, S.; Thakkar, P.; Kotecha, K. Predicting Stock Market Index Using Fusion of Machine Learning Techniques. Expert Syst. Appl. 2015, 42, 2162–2172.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Paruchuri, H. Conceptualization of Machine Learning in Economic Forecasting. Asian Bus. Rev. 2021, 11, 51–58.

- Wasserbacher, H.; Spindler, M. Machine Learning for Financial Forecasting, Planning and Analysis: Recent Developments and Pitfalls. Digit. Financ. 2022, 4, 63–88.

- Arpit, D.; Jastrzębski, S.; Ballas, N.; Krueger, D.; Bengio, E.; Kanwal, M.S.; Maharaj, T.; Fischer, A.; Courville, A.; Bengio, Y.; et al. A Closer Look at Memorization in Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017.

- Stempien, D.; Slepaczuk, R. Hybrid Models for Financial Forecasting: Combining Econometric, Machine Learning, and Deep Learning Models. arXiv 2025, arXiv:2505.19617.

- Gallego-García, S.; García-García, M. Predictive Sales and Operations Planning Based on a Statistical Treatment of Demand to Increase Efficiency: A Supply Chain Simulation Case Study. Appl. Sci. 2020, 11, 233.

- Fan, J.; Xue, L.; Zou, H. Strong Oracle Optimality of Folded Concave Penalized Estimation. Ann. Stat. 2014, 42, 819–849.

- Mienye, I.D.; Swart, T.G.; Obaido, G. Recurrent Neural Networks: A Comprehensive Review of Architectures, Variants, and Applications. Information 2024, 15, 517.

- Wallbridge, J. Transformers for Limit Order Books. arXiv 2020, arXiv:2003.00130.

- Berti, L.; Kasneci, G. TLOB: A Novel Transformer Model with Dual Attention for Price Trend Prediction with Limit Order Book Data. arXiv 2025, arXiv:2502.15757.

- Araci, D. FinBERT: Financial Sentiment Analysis with Pre-Trained Language Models. arXiv 2019, arXiv:1908.10063.

- Lim, B.; Arik, S.O.; Loeff, N.; Pfister, T. Temporal Fusion Transformers for Interpretable Multi-Horizon Time Series Forecasting. arXiv 2019, arXiv:1912.09363.

- Yang, J.; Li, P.; Cui, Y.; Han, X.; Zhou, M. Multi-Sensor Temporal Fusion Transformer for Stock Performance Prediction: An Adaptive Sharpe Ratio Approach. Sensors 2025, 25, 976.

- Kamalov, F.; Gurrib, I.; Rajab, K. Financial Forecasting with Machine Learning: Price Vs Return. J. Comput. Sci. 2021, 17, 251–264.

- Alemu, H.Z.; Wu, W.; Zhao, J. Feedforward Neural Networks with a Hidden Layer Regularization Method. Symmetry 2018, 10, 525.

- Buckmann, M.; Joseph, A. An Interpretable Machine Learning Workflow with an Application to Economic Forecasting. SSRN J. 2022.

- Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Ann. Stat. 2001, 29, 1189–1232.

- Yoon, J. Forecasting of Real GDP Growth Using Machine Learning Models: Gradient Boosting and Random Forest Approach. Comput. Econ. 2021, 57, 247–265.

- Agrawal, A.; Gans, J.; Goldfarb, A. The Economics of Artificial Intelligence: An Agenda; University of Chicago Press: Chicago, IL, USA, 2019; ISBN 978-0-226-61333-8.

- Ojha, V.K.; Abraham, A.; Snášel, V. Metaheuristic Design of Feedforward Neural Networks: A Review of Two Decades of Research. Eng. Appl. Artif. Intell. 2017, 60, 97–116.

- Fildes, R.; Stekler, H. The State of Macroeconomic Forecasting. J. Macroecon. 2002, 24, 435–468.

- Guo, H. Predicting High-Frequency Stock Market Trends with LSTM Networks and Technical Indicators. AEMPS 2024, 139, 235–244.

- Jegadeesh, N.; Titman, S. Returns to Buying Winners and Selling Losers: Implications for Stock Market Efficiency. J. Financ. 1993, 48, 65–91.

- Asness, C.S.; Moskowitz, T.J.; Pedersen, L.H. Value and Momentum Everywhere. J. Financ. 2013, 68, 929–985.

- Andersen, T.G.; Bollerslev, T.; Diebold, F.X.; Labys, P. Modeling and Forecasting Realized Volatility. SSRN J. 2001.

- López de Prado, M.M. Advances in Financial Machine Learning; Wiley: Hoboken, NJ, USA, 2018; ISBN 978-1-119-48208-6.

- Bailey, D.H.; López de Prado, M. The Deflated Sharpe Ratio: Correcting for Selection Bias, Backtest Overfitting, and Non-Normality. JPM 2014, 40, 94–107.

- Sirignano, J.; Cont, R. Universal Features of Price Formation in Financial Markets: Perspectives from Deep Learning. SSRN J. 2018.

- Zhang, Z.; Zohren, S.; Roberts, S. DeepLOB: Deep Convolutional Neural Networks for Limit Order Books. arXiv 2018.

- Giannone, D.; Reichlin, L.; Small, D. Nowcasting: The Real-Time Informational Content of Macroeconomic Data. J. Monet. Econ. 2008, 55, 665–676.

- Croushore, D.; Stark, T. A Real-Time Data Set for Macroeconomists. J. Econom. 2001, 105, 111–130.

- Ghysels, E.; Sinko, A.; Valkanov, R.I. MIDAS Regressions: Further Results and New Directions. SSRN J. 2006.

| Domain/Data Type | Recommended Protocol(s) | Key Considerations |
|---|---|---|
| Financial markets (high-frequency, daily prices, order book data) | Rolling windows with walk-forward evaluation; apply embargo periods to prevent look-ahead bias | Market data are highly non-stationary; overlapping information can cause leakage; evaluation should mimic real-time trading. |
| Macroeconomic indicators (GDP, CPI, unemployment, PMI) | Expanding windows using only information available at each point in time; ideally use vintage/real-time datasets | Data revisions and publication lags must be respected; forecasts should only use contemporaneously available data. |
| Firm-level data (sales, earnings, planning variables) | Rolling-origin evaluation (train on early history, then move forward step by step); sometimes expanding windows if longer history is available | Firm-level datasets are often short and subject to structural breaks; align validation with the decision horizon (monthly, quarterly). |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Popa, F.G.; Muresan, V. Artificial Intelligence in Finance: From Market Prediction to Macroeconomic and Firm-Level Forecasting. AI 2025, 6, 295. https://doi.org/10.3390/ai6110295
