Abstract

One of the most popular cure rate models in the literature is the Berkson and Gage mixture model. A characteristic of this model is that it considers the cure to be a latent event. However, there are situations in which the cure is well known, and this information must be considered in the analysis. In this context, this paper proposes a mixture model that accommodates both latent and non-latent cure fractions. More specifically, the proposal is to extend the Berkson and Gage mixture model to include the knowledge of the cure. A simulation study was conducted to investigate the asymptotic properties of maximum likelihood estimators. Finally, the proposed model is illustrated through an application to credit risk modeling.

MSC:

62N01

1. Introduction

Statistical techniques for censored data have been extensively studied in the literature. A common assumption underlying these models is that each individual in the study will eventually experience the event of interest if followed long enough. However, this assumption does not hold in many real-world scenarios, including biomedical, financial, demographic, criminological, and engineering research, in which some individuals will never experience the event. Such individuals are typically referred to as cured, non-susceptible, immune, or long-term survivors, and their survival times are considered infinite. The remaining individuals are classified as susceptible.

Models for analyzing data with a proportion of cured individuals are often called cure models or cure rate models. According to [1], in practical terms such a model can be used, for example, to model data on various types of cancer for which a significant proportion of patients are cured.

Since the seminal mixture model proposed by [2], which assumes that the population under study is a combination of cured and susceptible individuals, several approaches have been developed to accommodate the presence of a cured fraction. These include promotion time and frailty models. Promotion time models treat the time-to-event as a result of the first occurrence among a set of latent failures. These models are useful in contexts where multiple failure mechanisms are present [3]. Extensions of this modeling approach have been studied in [4,5,6]. Frailty models incorporate unobserved heterogeneity between individuals by modeling vulnerability to the event of interest as a latent variable (frailty). These models can be extended to accommodate cure fractions, as presented in [7,8]. Models that incorporate long-term survivors offer an advantage over standard survival techniques, as they allow for the simultaneous estimation of parameters associated with both the susceptible and cured subpopulations [9,10].

Among the aforementioned models, the most popular cure fraction model is the Berkson and Gage mixture model [2]. This model assumes the existence of heterogeneity in the population under study. Consequently, the modeling is based on the mixture of two distributions: one representing the distribution of failure or survival times of susceptible individuals and the other corresponding to a degenerate distribution (which allows for, in principle, infinite survival times) for cured individuals.

Let $T$ be a non-negative random variable denoting the survival time of the entire population. Under the mixture assumption, the Berkson and Gage [2] model has the form

$$S(t) = p + (1 - p)\,S_0(t), \tag{1}$$

where $p$ is the proportion of cured individuals and $S_0(t)$ denotes the survival function of the susceptible group.
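As a minimal sketch, the Berkson and Gage mixture survival function, commonly written as S(t) = p + (1 - p) S_0(t), can be evaluated numerically to see the plateau at the cure proportion. The Weibull form chosen for the susceptible group, the function names, and the parameter values are illustrative assumptions and are not taken from the paper.

```python
import math

def weibull_survival(t, shape, scale):
    """Susceptible survival S_0(t) = exp(-(t/scale)^shape) (assumed parameterization)."""
    return math.exp(-((t / scale) ** shape))

def mixture_survival(t, p, shape, scale):
    """Berkson-Gage population survival: p + (1 - p) * S_0(t)."""
    return p + (1.0 - p) * weibull_survival(t, shape, scale)

# Illustrative values only (hypothetical, not from the paper).
p, shape, scale = 0.3, 1.5, 10.0
for t in (0.0, 5.0, 20.0, 200.0):
    # The curve starts at 1 and levels off at the cure proportion p.
    print(t, round(mixture_survival(t, p, shape, scale), 4))
```

The curve never drops below p, which is how a cured fraction shows up in a Kaplan-Meier-type plot.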

However, the model (1) may not be suitable for some problems since it treats the cure as a latent event. In fact, there are situations in which the cure is known, i.e., situations in which it is known that the censoring occurred due to the individual’s cure. One example arises in credit risk modeling, where the variable of interest is the time to default (i.e., delay in loan repayment): a customer who fully repays the loan is a known cured case, and this information should be incorporated into the analysis.

In this context, the main contribution of this work is to develop a model that accommodates both latent and non-latent cure fractions. More specifically, the proposal is to extend the Berkson and Gage mixture model (1) to incorporate known cure information. The model proposed in this paper is illustrated using artificial data of customers who have taken out loans from a financial institution. A credit risk score derived from the proposed model is then used to classify customers according to their risk of default.

This manuscript is organized as follows: Section 2 introduces the model formulation and the procedures for estimating the model parameters using the maximum likelihood method. Section 3 presents a simulation study conducted to investigate whether the usual asymptotic properties of maximum likelihood estimators hold and illustrates the proposed model using artificial data. Finally, concluding remarks are provided in Section 4.

2. Materials and Methods

2.1. Model Formulation

The model proposed in this paper is formulated considering that an individual observed in the sample belongs to one of three distinct subpopulations: susceptible (non-cured) individuals, non-susceptible individuals who are known to be cured (non-latent cure), and non-susceptible individuals whose cure status is unknown (latent cure).

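The knowledge of the cure of an individual can be represented by a random variable $K$ following a Bernoulli distribution with success probability $\theta$. In addition, given $K = 0$, the latent cure variable, $C$, follows a Bernoulli distribution with success probability $\pi$. Thus,

$$P(K = k) = \theta^{k}(1 - \theta)^{1 - k}, \quad k \in \{0, 1\}, \tag{2}$$

and

$$P(C = c \mid K = 0) = \pi^{c}(1 - \pi)^{1 - c}, \quad c \in \{0, 1\}. \tag{3}$$

Since $P(K = 1) = \theta$, $P(C = 1 \mid K = 0) = \pi$, and $P(C = 1 \mid K = 1) = 1$, the probability of an individual being cured is given by

$$p = P(C = 1) = \theta + (1 - \theta)\,\pi. \tag{4}$$

This model proposes a mixture of distributions for individuals who are not known to be cured, i.e., given $K = 0$, $T$ equals $Y$ with probability $1 - \pi$ and $Z$ with probability $\pi$. Here, $T$ is a random variable that represents the time to failure, $Y$ is a continuous random variable that represents the time to failure of non-cured individuals, and $Z$ is a variable degenerate at infinity (i.e., $P(Z = \infty) = 1$) that represents the time to failure of cured individuals.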

Given the value of $K$, the survival function in mixture form is given by

$$S(t \mid K = 0) = \pi + (1 - \pi)\,S_0(t) \tag{5}$$

and

$$S(t \mid K = 1) = 1. \tag{6}$$

Thus, from (5) and (6), we have that the survival function and the probability density function of the entire population are given, respectively, by

$$S(t) = \theta + (1 - \theta)\left[\pi + (1 - \pi)\,S_0(t)\right] \tag{7}$$

and

$$f(t) = (1 - \theta)(1 - \pi)\,f_0(t), \tag{8}$$

where $\theta$ is the proportion of individuals who are known to be cured, $\pi$ is the proportion of cured individuals among those not known to be cured, and $S_0(t)$ and $f_0(t)$ are, respectively, the survival and probability density functions of susceptible individuals.

Note that if the proportion of known cures is zero ($\theta = 0$), model (7) reduces to the Berkson and Gage [2] mixture model (1). In addition, this model can also be characterized as a cure rate mixture model with competing risks [11], where the non-latent cure is considered a competing cause, and its time is assumed to be a degenerate variable at infinity.
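The three-subpopulation construction can be sketched in a few lines. The notation here is an assumption made for illustration: `theta` is the probability that the cure is known, `pi` is the probability of latent cure among individuals not known to be cured, and `s0`/`f0` are the survival and density functions of susceptible individuals (a unit exponential is used purely as a placeholder).

```python
import math

def cure_probability(theta, pi):
    """Total cure probability: known cures plus latent cures among the rest."""
    return theta + (1.0 - theta) * pi

def population_survival(t, theta, pi, s0):
    """S(t) = theta + (1 - theta) * [pi + (1 - pi) * S_0(t)]."""
    return theta + (1.0 - theta) * (pi + (1.0 - pi) * s0(t))

def population_density(t, theta, pi, f0):
    """f(t) = (1 - theta) * (1 - pi) * f_0(t): only susceptibles ever fail."""
    return (1.0 - theta) * (1.0 - pi) * f0(t)

# Placeholder susceptible distribution (hypothetical): unit exponential.
s0 = lambda t: math.exp(-t)
f0 = lambda t: math.exp(-t)

theta, pi = 0.2, 0.25
p = cure_probability(theta, pi)
print(p, population_survival(1.0, theta, pi, s0))
# With theta = 0 the survival function has the Berkson-Gage form pi + (1 - pi) * S_0(t).
print(population_survival(1.0, 0.0, pi, s0))
```

Algebraically, the population survival equals p + (1 - p) S_0(t) with p the total cure probability, so the plateau of the curve is the overall cured fraction.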

2.2. Likelihood Function

Assuming a non-informative right-censoring mechanism, the response of the individual i observed in the sample can be represented by the term (, , ), where is the observed time of the i-th individual in the sample and and are their respective indicators of censorship and knowledge of the cure, . Here,

and

The contribution of the i-th individual to the likelihood function is given by , if it is known that individual i is cured in ; , if it is unknown that individual i is cured and the observation is not censored; and , if it is unknown that individual i is cured and the observation is censored. That is, for an observed value of , the contribution to the likelihood function is

Thus, the likelihood function is given by

where , and are the parameters to be estimated. Here, and are, respectively, the survival and probability density functions of susceptible (non-cured) individuals, and is the vector of times observed in the sample with their respective indicators of censorship and knowledge of cure .
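The three cases described above can be turned into a log-likelihood along the following lines. This is a sketch under stated assumptions rather than the paper's exact expression: `theta` denotes the known-cure probability, `pi` the latent-cure probability among individuals not known to be cured, `delta = 1` marks an observed failure, `known = 1` marks a known cure, and susceptible times are taken to be Weibull with S_0(t) = exp(-(t/scale)^shape). A known cure contributes `theta`, an observed failure contributes (1 - theta)(1 - pi) f_0(t), and a censored time with unknown cure status contributes (1 - theta)[pi + (1 - pi) S_0(t)].

```python
import math

def log_likelihood(theta, pi, shape, scale, times, delta, known):
    """Log-likelihood of the sketch model (assumed form, see lead-in)."""
    ll = 0.0
    for t, d, k in zip(times, delta, known):
        s0 = math.exp(-((t / scale) ** shape))                    # susceptible survival
        f0 = (shape / scale) * (t / scale) ** (shape - 1.0) * s0  # susceptible density
        if k == 1:
            ll += math.log(theta)                                 # cure is known
        elif d == 1:
            ll += math.log((1.0 - theta) * (1.0 - pi) * f0)       # observed failure
        else:
            ll += math.log((1.0 - theta) * (pi + (1.0 - pi) * s0))  # censored, cure unknown
    return ll

# Tiny hypothetical sample: one failure, one censored time, one known cure.
times, delta, known = [2.0, 5.0, 60.0], [1, 0, 0], [0, 0, 1]
print(log_likelihood(0.3, 0.2, 1.0, 1.0, times, delta, known))
```

Under this form the observed time of a known cure does not enter the likelihood, which matches the idea that its failure time is degenerate at infinity.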

Note that when the distribution of susceptible individuals, , is identifiable, the likelihood function (11) is identifiable as well, except in cases where no observations with known cure status are present in the sample. In fact, when , the likelihood function (11) results in , which is not identifiable (the non-identifiability arises due to the permutation of the values of and ). In such scenarios, where no known cures are observed, the proposed model is not recommended, and it is more appropriate to consider the Berkson and Gage mixture model (1).

2.3. Regression Model

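In this work, the proposal is to incorporate the covariates into the model through the probability of cure $p$, as presented in (4). Thus, considering the logit link function, the regression model is expressed by $\log\{p_i/(1 - p_i)\} = \mathbf{x}_i^{\top}\boldsymbol{\beta}$, which results in

$$p_i = \frac{\exp(\mathbf{x}_i^{\top}\boldsymbol{\beta})}{1 + \exp(\mathbf{x}_i^{\top}\boldsymbol{\beta})}. \tag{12}$$

In (12), $\mathbf{x}_i$ is a vector of $k$ explanatory variables and $\boldsymbol{\beta}$ is its respective vector of regression coefficients.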

The distribution of the random variable T was reparametrized in order to ensure that one of its new parameters represents the probability of cure. The proposed reparameterization is given by

which results in

In the new parameterization proposed in (13), is a parameter that corresponds to the probability of cure and represents the ratio between the odds of the known cure and the proportion of the latent cure. Large values of indicate a greater known cure in relation to latent cure.
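One reading of this reparameterization can be checked numerically. The sketch below assumes that `theta` is the known-cure probability, `pi` is the latent-cure probability among individuals not known to be cured, `p = theta + (1 - theta) * pi` is the cure probability, and the ratio parameter is `phi = [theta / (1 - theta)] / pi`. Solving these two equations gives `theta = phi * p / (1 + phi)` and `pi = p / (1 + phi * (1 - p))`. The symbol names and the exact definition of `phi` are assumptions based on the verbal description, not a transcription of (13) and (14).

```python
import math

def to_p_phi(theta, pi):
    """Map (theta, pi) to (cure probability p, odds-ratio-type parameter phi)."""
    p = theta + (1.0 - theta) * pi
    phi = (theta / (1.0 - theta)) / pi
    return p, phi

def to_theta_pi(p, phi):
    """Inverse map under the assumed definition of phi."""
    theta = phi * p / (1.0 + phi)
    pi = p / (1.0 + phi * (1.0 - p))
    return theta, pi

def cure_probability_logit(x, beta):
    """Logit link: p = 1 / (1 + exp(-x'beta))."""
    eta = sum(b * xj for b, xj in zip(beta, x))
    return 1.0 / (1.0 + math.exp(-eta))

p, phi = to_p_phi(0.2, 0.25)
print(p, phi)
print(to_theta_pi(p, phi))  # recovers the original (theta, pi) up to rounding

# Hypothetical covariates and coefficients, then back to (theta, pi) for a fixed phi.
p_i = cure_probability_logit([1.0, 0.5], [0.4, -1.0])
print(to_theta_pi(p_i, 2.0))
```

A convenient property of this form is that any `p` in (0, 1) and any `phi > 0` map to valid probabilities `theta` and `pi`, so the regression on `p` needs no extra constraints.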

From (11), (12) and (14), the likelihood function can be rewritten as

In (15), , and and are the survival and probability density functions of susceptible individuals, respectively. Here, is the vector of times observed in the sample with their respective indicators of censorship and knowledge of the cure , is the vector of parameters of the distribution of susceptible individuals, and are the regression coefficients associated with the observed explanatory variables , .

Applying the logarithm to the likelihood function (15), we get

where c is a constant that does not depend on , and .

The likelihood equation is given by

Thus, the value , which satisfies Equation (17), is the maximum likelihood estimator of the model parameters, which under appropriate regularity conditions has asymptotically a multivariate normal distribution with mean and variance and covariance matrix given by

The maximum likelihood estimate and the observed information matrix can be obtained numerically with a Newton–Raphson-type optimization algorithm, which also provides an accurate numerical approximation of this matrix. From these results, it is possible to construct confidence intervals for the parameters and carry out significance tests on the model covariates.

3. Results and Discussions

3.1. Simulation Study

This section describes a simulation study conducted to investigate whether the usual asymptotic properties of maximum likelihood estimators are present. The performance of the proposed model was also evaluated in the presence of censored data.

The study of the proposed model was conducted with data simulated in R, version 4.3.3 [12]. The simulations performed in this work considered in the model a dichotomous covariate, , generated from a Bernoulli distribution with a probability of success and a numerical covariate, , with a standard normal distribution. These covariates were included in the probability of cure (4) taking into account a logit link function, i.e., , where and . For a fixed value of , the values of and are given by (14).

This simulation study considered that the time to failure (U) of susceptible individuals follows a Weibull distribution with shape parameter and scale parameter . The Weibull distribution was chosen due to its popularity in modeling survival data. In addition, the time to censoring (V) follows a Weibull distribution with the same shape parameter and scale parameter , where q denotes the proportion of censorship. This result is based on the fact that when and . The survival times and their respective censoring indicators can be obtained following the steps of Algorithm 1.
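The link between the censoring proportion and the scale of the censoring distribution can be illustrated as follows. The sketch assumes the parameterization S(t) = exp(-(t/scale)^shape) and interprets q as P(V < U); under these assumptions, two independent Weibull variables with a common shape satisfy P(V < U) = q when scale_V = scale_U * ((1 - q) / q)^(1/shape). The function name and the numerical values are hypothetical.

```python
import random

def censoring_scale(scale_u, shape, q):
    """Scale of V such that P(V < U) = q for Weibull U, V with common shape."""
    return scale_u * ((1.0 - q) / q) ** (1.0 / shape)

rng = random.Random(2025)
shape, scale_u, q = 1.5, 10.0, 0.3
scale_v = censoring_scale(scale_u, shape, q)

# Monte Carlo check: random.weibullvariate takes (scale, shape) in that order.
n = 100_000
censored = sum(
    rng.weibullvariate(scale_v, shape) < rng.weibullvariate(scale_u, shape)
    for _ in range(n)
)
print(scale_v, censored / n)  # the empirical proportion should be close to q
```

The formula follows because U^shape and V^shape are exponential, and the probability that one exponential variable is smaller than another is the ratio of its rate to the sum of the rates.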

Algorithm 1: Obtaining the survival time of the proposed model

Note 1: In the case of no censoring (q = 0), the value of in Step 6 can be specified in a way that . For example, .

Note 2: Step 9 implies that the censored times of non-susceptible individuals whose cure is not known will be equal to .
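A data-generating scheme in the spirit of the simulation design can be sketched as below. It is not a transcription of Algorithm 1: the order of the steps, the choice of recording the censoring time for known cures, and all names and values are assumptions made for illustration. Each individual is first assigned a known cure with probability `theta`, then a latent cure with probability `pi`, and otherwise receives a Weibull failure time that may be right-censored.

```python
import random

def censoring_scale(scale_u, shape, q):
    """Scale of the censoring Weibull giving P(V < U) = q (common shape assumed)."""
    return scale_u * ((1.0 - q) / q) ** (1.0 / shape)

def simulate_subject(rng, theta, pi, shape, scale_u, scale_v):
    """Return (time, delta, known) for one individual."""
    v = rng.weibullvariate(scale_v, shape)       # censoring time
    if rng.random() < theta:                     # known (non-latent) cure
        return v, 0, 1                           # recorded time is an assumption
    if rng.random() < pi:                        # latent cure: never fails
        return v, 0, 0                           # always censored at v
    u = rng.weibullvariate(scale_u, shape)       # failure time of a susceptible
    return (u, 1, 0) if u <= v else (v, 0, 0)

rng = random.Random(1)
theta, pi, shape, scale_u, q = 0.2, 0.25, 1.5, 10.0, 0.3
scale_v = censoring_scale(scale_u, shape, q)
sample = [simulate_subject(rng, theta, pi, shape, scale_u, scale_v) for _ in range(50_000)]

known_rate = sum(k for _, _, k in sample) / len(sample)
failure_rate = sum(d for _, d, _ in sample) / len(sample)
print(known_rate, failure_rate)
```

With these settings the share of known cures should be near `theta`, and the share of observed failures near (1 - theta)(1 - pi)(1 - q).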

The survival time samples were simulated considering the values , , , and several values of and . The values of and were defined in order to vary the proportions of known and latent cures ( and , respectively). A total of 5 simulation scenarios were considered, as shown in Table 1.

Table 1.

Scenarios used in the simulations.

The mean estimates, the mean square error (MSE), and the coverage probability (CP) of the estimators were obtained from 1000 Monte Carlo replicates, considering sample sizes n = 50, 100, 200, and 500 and censoring percentages equal to 0%, 10%, and 30% (i.e., censoring susceptible individuals).
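The three Monte Carlo summaries can be computed as in the following sketch, which takes the estimates and confidence limits from each replicate as given. The function name and the toy numbers are hypothetical and serve only to show the definitions.

```python
def summarize(estimates, lowers, uppers, true_value):
    """Mean of estimates, mean square error, and coverage probability."""
    n = len(estimates)
    mean = sum(estimates) / n
    mse = sum((e - true_value) ** 2 for e in estimates) / n
    # Coverage: share of replicates whose interval contains the true value.
    cp = sum(lo <= true_value <= hi for lo, hi in zip(lowers, uppers)) / n
    return mean, mse, cp

# Toy input: three replicates of an estimator of a parameter equal to 1.0.
estimates = [0.9, 1.1, 1.3]
lowers = [0.7, 0.9, 1.1]
uppers = [1.1, 1.3, 1.5]
mean, mse, cp = summarize(estimates, lowers, uppers, true_value=1.0)
print(mean, mse, cp)
```

In the toy input, two of the three intervals contain the true value, so the coverage is 2/3.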

For the construction of confidence intervals (CI) for the calculation of the CP, a confidence level of 95% was considered. In addition, since and the parameters and of the Weibull distribution are positive, a logarithmic transformation was applied for constructing the CIs of these parameters.
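The paper does not spell out the interval formula, so the following is a sketch of one standard way to build a confidence interval for a positive parameter on the log scale. It uses the delta method, under which the standard error of the log of the estimate is approximately the standard error divided by the estimate, and then exponentiates the limits. The function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist

def log_wald_ci(estimate, std_error, level=0.95):
    """Wald interval built on the log scale and mapped back (delta method)."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)   # standard normal quantile
    half_width = z * std_error / estimate         # approximate SE of log(estimate) times z
    return estimate * math.exp(-half_width), estimate * math.exp(half_width)

lower, upper = log_wald_ci(estimate=1.2, std_error=0.4)
print(lower, upper)  # both limits are positive by construction
```

Unlike the untransformed interval, the lower limit can never be negative, which is the motivation for the transformation when the parameter space is the positive half-line.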

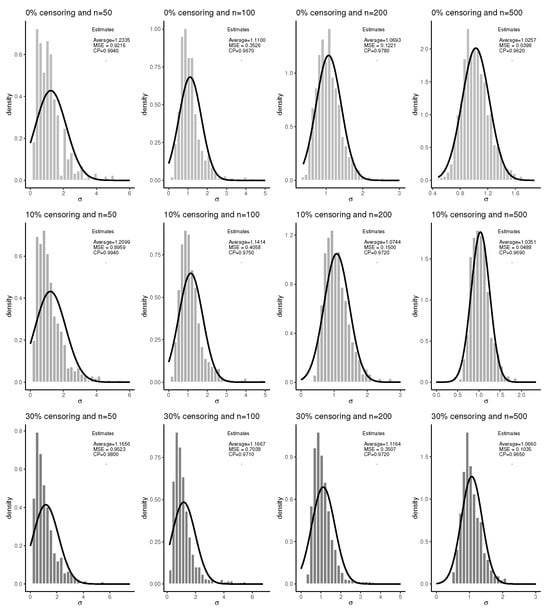

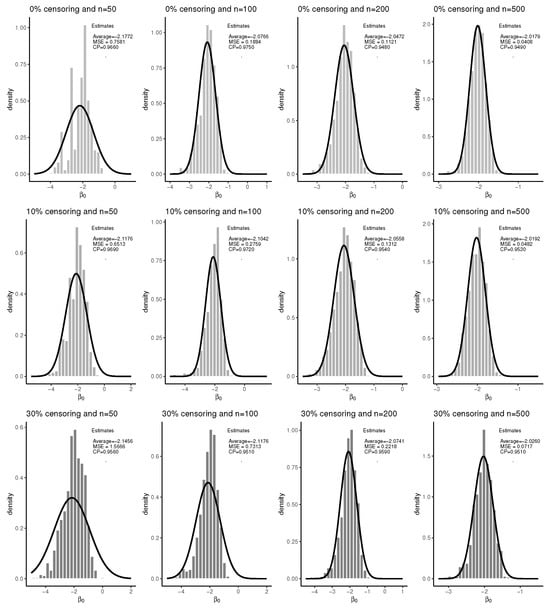

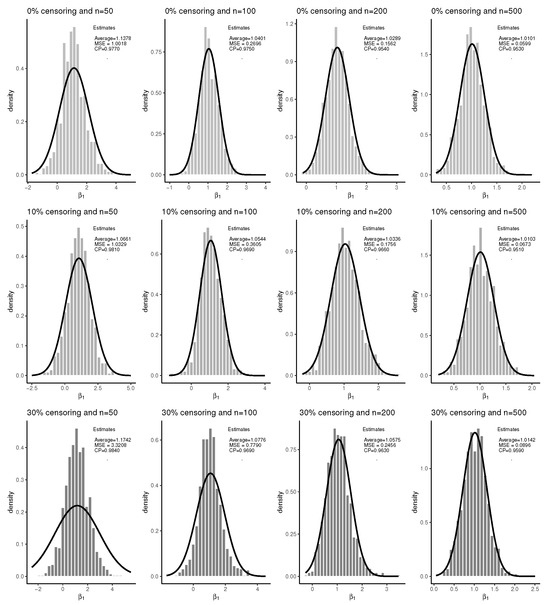

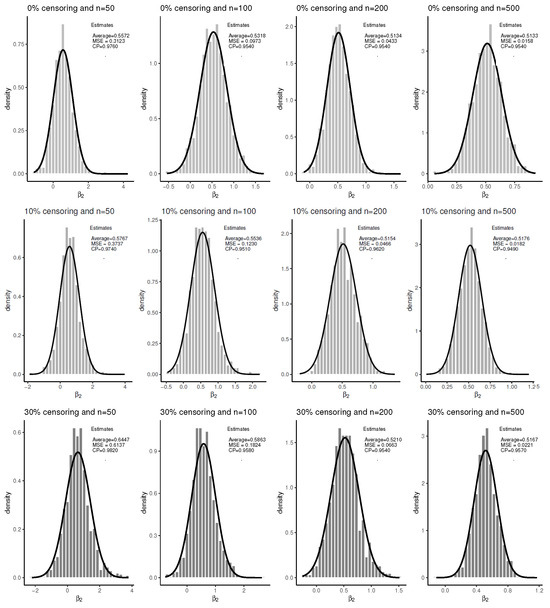

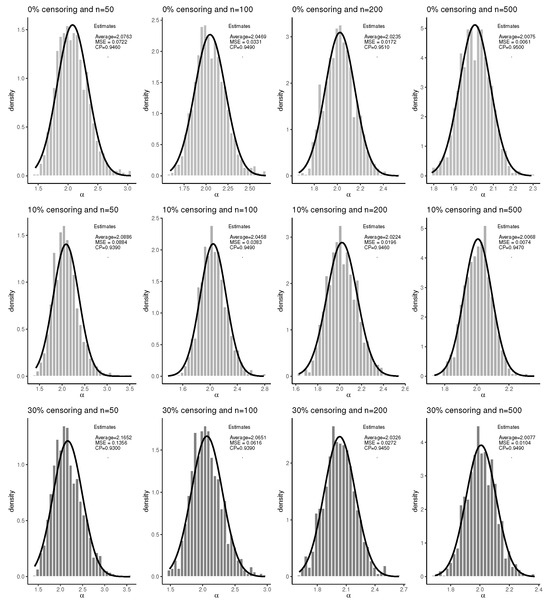

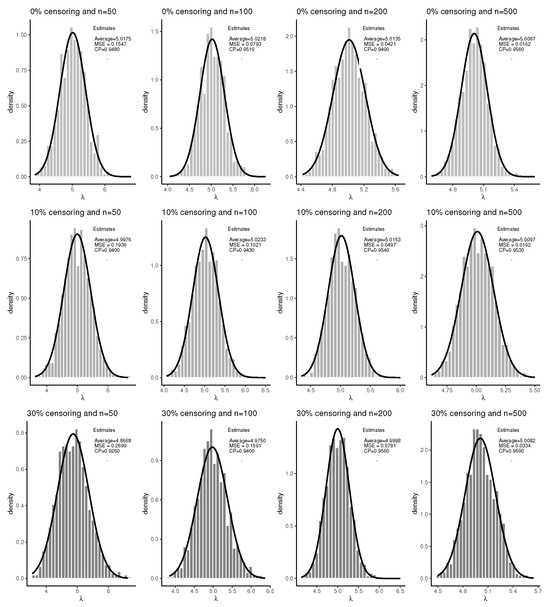

The results for Scenario 2, shown in Figures 1–6 as histograms of the estimates with superimposed normal curves, together with the mean, mean square error (MSE), and coverage probability (CP) values, provide evidence of the asymptotic normality of the estimators, regardless of the censoring percentage.

Figure 1.

Empirical distribution of the estimates of from 1000 Monte Carlo replications with the fitted normal curve (Scenario 2).

Figure 2.

Empirical distribution of the estimates of from 1000 Monte Carlo replications with the fitted normal curve (Scenario 2).

Figure 3.

Empirical distribution of the estimates of from 1000 Monte Carlo replications with the fitted normal curve (Scenario 2).

Figure 4.

Empirical distribution of the estimates of from 1000 Monte Carlo replications with the fitted normal curve (Scenario 2).

Figure 5.

Empirical distribution of the estimates of from 1000 Monte Carlo replications with the fitted normal curve (Scenario 2).

Figure 6.

Empirical distribution of the estimates of from 1000 Monte Carlo replications with the fitted normal curve (Scenario 2).

When the censoring percentage is equal to zero, the estimators have excellent statistical properties. The parameter estimates exhibit small bias and low variance, indicating consistency. In addition, the CPs remain close to the nominal confidence level, regardless of the parameter and sample size. The largest difference observed was 0.0440 (0.9940–0.9500), for the parameter with a sample size of n = 50.

In the presence of censored observations, there is an increase in the deviations of the estimates from the true parameter values, and this effect is more pronounced as the percentage of censoring increases. Consequently, the probability of coverage tends to move away from the nominal confidence level. However, this behavior is to be expected in censored contexts. It is important to note that as the sample size increases, even under high censoring percentages, the estimators once again show desirable properties. For example, for n = 500 and 30% censoring, the biggest difference observed was 0.0660 (1.066–1.000) in the average, 0.1035 in the MSE, and 0.0150 (0.9650–0.9500) in the CP, all three associated with the parameter .

Finally, the results showed evidence of the asymptotic normality of the estimators for all parameters and censorship percentages, as evidenced by the fit of the normal curves superimposed on the histograms, which improves with increasing sample size. This behavior justifies the use of normal approximations for constructing confidence intervals and testing hypotheses about the model parameters, even in scenarios with censoring. The results of the other scenarios were similar to those observed in Scenario 2. The numerical results for these scenarios are shown in Table 2.

Table 2.

Average of estimates, MSE, and probability of coverage (CP) of parameters considering the simulation scenarios and different sample sizes and censoring percentages.

3.2. An Illustrative Example

This section presents an illustration of the application of the proposed model to an artificial dataset of clients who take a loan at a financial institution. The decision to use artificial data for this illustration is due to the high commercial value associated with credit risk data and also due to legal factors that prevent the disclosure of sensitive customer information. Given these limitations, the existing literature was consulted to identify the variables most frequently used to classify low- and high-risk applicants in credit risk analysis. Based on this review, the following explanatory variables were selected: (1) credit limit, the maximum amount of credit a lender authorizes for each customer (log scale); (2) gender (female and male); (3) social class, coded into five levels (class A to class E); (4) marital status, coded into three levels (single, married, and widowed/separated); and (5) age in years.

Based on these variables, a simulated database was created with 1000 observations, also including the time (in months) until the occurrence of default. Credit risk studies generally report high censoring rates, which result in a low number of registered defaults. Accordingly, this study adopted a censoring rate of 50% to approximate the real data reported in studies such as [13]. This strategy allowed the simulated database to represent more faithfully the characteristics observed in real credit risk analysis scenarios.

In this study, the explanatory variables were generated as follows: credit limit by a lognormal distribution, gender by a Bernoulli distribution, social classification by a multinomial distribution with five categories, marital status by a multinomial distribution with three categories, and age by a Poisson distribution. Survival times were generated using Algorithm 1 described in Section 3.1. In addition, the time values were truncated at t = 60. In these cases, the indicators of censorship and knowledge of cure were defined as and , respectively. This procedure was adopted to represent loans with terms up to 60 months. Here, indicates that default will not occur (an individual who is known to be cured) either because the customer has paid off the loan early or because the customer has completed the entire loan period without missing a payment.

The simulated dataset is provided in Table S1 of the Supplementary Materials. The sample exhibited a censoring rate of 54.4%, of which 44.0% correspond to individuals known to be cured.

The proposed model was fitted to the application data, considering that the time to default of susceptible individuals follows a Weibull distribution with a shape parameter and a scale parameter, and the covariates were included in the probability of cure (4) through a logit link function. The maximum likelihood point estimates of the parameters, with their respective confidence intervals, are shown in Table 3.

Table 3.

Point and interval estimates of the parameters of the proposed model.

The results in Table 3 indicate that, except for age, all covariates significantly influence the cure at the 5% significance level. The probability of cure for each client was calculated using (12), based on the obtained estimates and considering all covariates. This probability was used to classify clients as having a low or high risk of default. The cutoff point considered was 0.456; that is, a customer is classified as high risk if their estimated probability of cure is below 0.456 and as low risk if it is above this value.

The definition of the cut-off point took into account the proportion of defaulting customers in the sample. In the observed sample, a customer is considered to be in default when . This cutoff point resulted in correct predictions, sensitivity (probability of the model classifying a good customer as low risk) and specificity (probability of the model classifying a defaulting customer as having a high risk) of 70.3%, 56.4%, and 82.0%, respectively, indicating a good classification of customers, particularly the defaulting ones. In fact, the cut-off point can be adjusted to increase or decrease sensitivity or specificity in order to control the error that the financial institution considers to be the most critical.
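The classification rule and the three performance measures can be sketched as follows, using the definitions given in the text: sensitivity is the share of good (non-defaulting) customers classified as low risk, and specificity is the share of defaulting customers classified as high risk. Treating a cure probability exactly equal to the cutoff as low risk is an assumption, and the toy probabilities are hypothetical.

```python
def classification_summary(cure_probs, defaulted, cutoff):
    """Return (accuracy, sensitivity, specificity) for a cure-probability cutoff."""
    low_risk = [p >= cutoff for p in cure_probs]   # boundary handling is an assumption
    good = [not d for d in defaulted]
    good_low = sum(l and g for l, g in zip(low_risk, good))               # good, low risk
    bad_high = sum((not l) and (not g) for l, g in zip(low_risk, good))   # default, high risk
    n_good = sum(good)
    n_bad = len(good) - n_good
    accuracy = (good_low + bad_high) / len(good)
    return accuracy, good_low / n_good, bad_high / n_bad

# Toy data: six customers, the last three defaulted.
cure_probs = [0.9, 0.6, 0.3, 0.2, 0.5, 0.4]
defaulted = [False, False, False, True, True, True]
accuracy, sensitivity, specificity = classification_summary(cure_probs, defaulted, 0.456)
print(accuracy, sensitivity, specificity)
```

Raising the cutoff classifies more customers as high risk, which increases specificity at the expense of sensitivity, and lowering it does the opposite.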

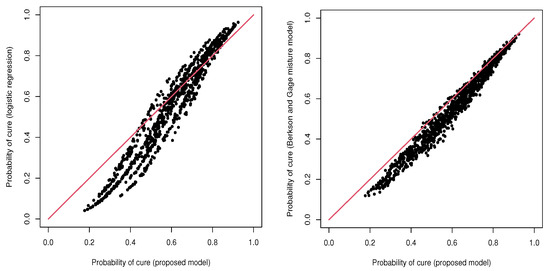

For benchmarking purposes, a logistic regression model and the Weibull Berkson and Gage mixture model were used to classify customers in this data set. The results of the logistic regression (correct predictions of 71.7%, sensitivity of 56.4%, and specificity of 82.4%) and the Berkson and Gage model (correct predictions of 70.2%, sensitivity of 54.4% and specificity of 83.5%) were similar to those of the proposed model. Figure 7 shows the probabilities of cure estimated by the proposed model, logistic regression, and Berkson and Gage mixture model, indicating agreement among these three methodologies.

Figure 7.

Probabilities of cure estimated by the proposed model, logistic regression model, and Berkson and Gage mixture model.

The results show that the model proposed in this study achieved performance similar to that of the benchmark models. Under a naive comparison, the overall accuracy rate of the proposed model was 70.3%, while the logistic regression and the Berkson and Gage mixture models achieved rates of 71.7% and 70.2%, respectively.

However, it is important to note that, despite the similarity in results to the logistic regression model, the proposed model also accounts for the time until default for susceptible clients. Moreover, the logistic model is not fully appropriate in this context, as there are latent cured (non-defaulting) clients for whom it is not known with certainty whether they are truly cured. In addition, as expected, the Weibull mixture model underestimates the cure probabilities. This occurs because the Berkson and Gage model ignores the presence of known cures, potentially considering some clients who are clearly cured as non-cured individuals.

4. Conclusions

This work presents an extension of the Berkson and Gage mixture model [2] that accommodates both latent and non-latent cure fractions. This model can be viewed as a cure rate mixture model with competing risks, considering the non-latent cure as a competing cause.

The proposed model was reparameterized in terms of the cure probability, with a regression structure attached to this parameter. Furthermore, a simulation study was conducted considering a Weibull distribution for the time to the event of susceptible (non-cured) individuals. The results of these simulated data provide evidence of the asymptotic properties of the estimators.

The proposed model is illustrated using a synthetic dataset of customers who have taken out loans from a financial institution, and its performance is compared with that of logistic regression and the standard Berkson and Gage mixture model. The results show that the proposed model not only achieves competitive accuracy but also offers important conceptual and practical advantages, since the logistic model ignores the time-to-event distribution and the Berkson and Gage model neglects known cure information when such data are available.

It is important to note that any other probability distribution could be used to model the time to event of susceptible individuals. Moreover, a regression structure, such as a proportional hazards or accelerated failure time model, can also be employed to incorporate covariates into the modeling of this time. In addition, future works could consider the implementation of informative censoring mechanisms, which are commonly encountered in credit risk modeling.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/stats8030082/s1, Table S1. Artificial dataset of 1000 clients who take a loan in a financial institution.

Author Contributions

Conceptualization, E.Y.N., F.M.A. and M.R.P.C.; methodology, E.Y.N., F.M.A. and M.R.P.C.; software, E.Y.N., F.M.A. and M.R.P.C.; validation, E.Y.N., F.M.A. and M.R.P.C.; formal analysis, E.Y.N., F.M.A. and M.R.P.C.; investigation, E.Y.N., F.M.A. and M.R.P.C.; resources, E.Y.N., F.M.A. and M.R.P.C.; data curation, E.Y.N. and M.R.P.C.; writing—original draft preparation, E.Y.N., F.M.A. and M.R.P.C.; writing—review and editing, E.Y.N., F.M.A. and M.R.P.C.; visualization, E.Y.N., F.M.A. and M.R.P.C.; supervision, E.Y.N.; project administration, E.Y.N.; funding acquisition, E.Y.N. and F.M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Coordenação de Aperfeiçoamento de Pessoal de Nível Superior—Brazil (CAPES)—Finance Code 001, National Council for Scientific and Technological Development (CNPq), Editais de Auxílio Financeiro DPI/DPG/UnB, DPI/DPG/BCE/UnB, and PPGEST/UnB.

Data Availability Statement

The data presented in this study are available in the Supplementary Materials. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Chen, M.H.; Ibrahim, J.G.; Sinha, D. A new Bayesian model for survival data with a surviving fraction. J. Am. Stat. Assoc. 1999, 94, 909–919.

2. Berkson, J.; Gage, R.P. Survival curve for cancer patients following treatment. J. Am. Stat. Assoc. 1952, 47, 501–515.

3. Yakovlev, A.Y.; Tsodikov, A.D. Stochastic Models of Tumor Latency and Their Biostatistical Applications; World Scientific: Singapore, 1996.

4. Oliveira, M.R.; Moreira, F.; Louzada, F. The zero-inflated promotion cure rate model applied to financial data on time-to-default. Cogent Econ. Financ. 2017, 5, 1395950.

5. Chen, T.; Du, P. Promotion time cure rate model with nonparametric form of covariate effects. Stat. Med. 2018, 37, 1625–1635.

6. Gómez, Y.M.; Gallardo, D.I.; Bourguignon, M.; Bertolli, E.; Calsavara, V.F. A general class of promotion time cure rate models with a new biological interpretation. Lifetime Data Anal. 2023, 29, 66–86.

7. Leão, J.; Leiva, V.; Saulo, H.; Tomazella, V. Incorporation of frailties into a cure rate regression model and its diagnostics and application to melanoma data. Stat. Med. 2018, 37, 4421–4440.

8. Cancho, V.G.; Barriga, G.; Leão, J.; Saulo, H. Survival model induced by discrete frailty for modeling of lifetime data with long-term survivors and change-point. Commun. Stat.-Theory Methods 2021, 50, 1161–1172.

9. Maller, R.A.; Zhou, X. Survival Analysis with Long-Term Survivors; Wiley: Hoboken, NJ, USA, 1996.

10. Rodrigues, J.; Cancho, V.G.; de Castro, M.; Louzada-Neto, F. On the unification of long-term survival models. Stat. Probab. Lett. 2009, 79, 753–759.

11. Larson, M.G.; Dinse, G.E. A Mixture Model for the Regression Analysis of Competing Risks Data. J. R. Stat. Soc. Ser. C (Appl. Stat.) 1985, 34, 201–211.

12. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2024; Available online: https://www.R-project.org/ (accessed on 1 September 2025).

13. Dirick, L.; Claeskens, G.; Baesens, B. Time to default in credit scoring using survival analysis: A benchmark study. J. Oper. Res. Soc. 2017, 68, 652–665.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).