Abstract

Linking methods are widely used in the social sciences to compare groups regarding the mean and the standard deviation of a factor variable. This article compares robust Haberman linking (HL) and invariance alignment (IA) for factor models with dichotomous and continuous items, using the $L_{0.5}$ and $L_0$ loss functions. Simulation studies demonstrate that HL outperforms IA when item intercepts, rather than item difficulties as in the original HL approach, are used for linking. The results regarding the choice of loss function were mixed: $L_0$ showed superior performance in the simulation study with continuous items, while $L_{0.5}$ performed better in the study with dichotomous items.

1. Introduction

When comparing multiple groups in confirmatory factor analysis (CFA; [1]) or item response theory (IRT; [2,3]) models with respect to a factor variable, certain identification assumptions are required. A common approach assumes that item parameters remain consistent across groups, a property referred to as measurement invariance [4,5]. This principle is frequently discussed in the social sciences [5,6]. In the IRT literature, the lack of invariance is called differential item functioning (DIF; [7,8,9,10,11]).

To address violations of measurement invariance, the invariance alignment (IA) method [12,13,14,15], also known as alignment optimization [16,17], has been proposed for comparing groups in unidimensional factor models. The IA method aims to maximize the number of item parameters that are (approximately) invariant while permitting limited deviations. This approach enhances the robustness of group comparisons in the presence of measurement invariance violations. The IA method is frequently applied in social sciences for analyzing questionnaire data [18,19,20,21,22,23,24,25].

The Haberman linking (HL; [26]) method, one of several linking methods [27,28,29], was developed for group comparisons in IRT models. While it is widely applied in educational testing, it remains largely unfamiliar to practitioners in other fields (but see [30,31,32,33,34] for exceptions). This difference is also reflected in the citation counts of the original sources of IA and HL. The original IA article of Asparouhov and Muthén (2014, Structural Equation Modeling [12]) has been cited 849 times according to Google Scholar, 440 times according to Web of Science, and 392 times according to CrossRef (as of 22 November 2024). In contrast, the original HL article of Haberman (2009, ETS Research Report [26]) has only 80 citations according to Google Scholar and 32 citations according to CrossRef (as of 22 November 2024).

Notably, HL appears to share substantial similarities with IA. As noted in [35], “Matthias von Davier, in particular, also highlighted how the alignment optimization [i.e., invariance alignment] method is very similar to the simultaneous test-linking approach proposed by Haberman (2009)”.

This article compares HL with the IA method. Specifically, a robust version of HL, termed robust HL, is contrasted with IA in the presence of fixed differential item functioning (DIF) effects that follow a sparsity structure; that is, only a subset (i.e., the minority) of item parameters exhibit DIF. This article builds on the author's previous work in a precursor article [36], published in 2020 in the journal Stats. To facilitate the comparison between the HL and IA linking methods, the robust loss functions $L_{0.5}$ and $L_0$ are employed in both HL and IA. A recently proposed differentiable approximation of the $L_0$ loss function is applied to HL and IA for the first time. As in [36], HL was modified to enable linking based on item intercepts instead of item difficulties.

The findings of the two simulation studies in this article show that the modified HL specification with linking based on item intercepts closely resembles IA and, in several contexts, outperforms it. Moreover, in contrast to previous work [36], the simulation studies in this article involved only three groups rather than a larger number of groups, and they manipulated the DIF effect size and the number of items to investigate the generalizability of the findings from the comparison of HL and IA more thoroughly. Previous work pointed out that the performance of different loss functions strongly depends on the DIF effect size, with more robust (i.e., less biased) results typically obtained for larger DIF effects. In addition, more robust results can be expected with a larger number of items.

The structure of the article is as follows. Section 2 reviews unidimensional factor models for dichotomous and continuous items. The robust $L_p$ and $L_0$ loss functions are discussed in Section 3. Descriptions of IA and HL with robust loss functions are provided in Section 4 and Section 5, respectively. Section 6 presents results from a simulation study with dichotomous items, while Section 7 discusses findings from a simulation study using continuous items. Finally, Section 8 concludes the article with a discussion.

2. Unidimensional Factor Model

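This section discusses unidimensional factor models for dichotomous items in Section 2.1 and for continuous items in Section 2.2. Let $\boldsymbol{X}_g = (X_{g1}, \ldots, X_{gI})$ denote the vector of $I$ items in group $g = 1, \ldots, G$. The vector of items is related to a normally distributed factor variable $\theta$, commonly referred to as the trait or ability variable in IRT. It is assumed that $\theta$ is normally distributed with mean $\mu_g$ and standard deviation (SD) $\sigma_g$ in group $g$. For identification reasons, we assume that $\mu_1 = 0$ and $\sigma_1 = 1$ hold in the first group. In the unidimensional factor models, items are assumed to be conditionally independent given the latent variable $\theta$. This implies that

$$f_g(\boldsymbol{x} \mid \theta) = \prod_{i=1}^{I} f_{gi}(x_i \mid \theta), \tag{1}$$

where $f_g$ and $f_{gi}$ denote the joint and the item-specific conditional probability or density functions in group $g$.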

Equation (1) represents the local independence assumption in IRT for dichotomous items, and it implies uncorrelated residual variables in the factor model for continuous items.

2.1. Dichotomous Items

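We now describe the unidimensional factor model for dichotomous items $X_{gi} \in \{0, 1\}$. The function $P_{gi}(\theta) = P(X_{gi} = 1 \mid \theta)$ for item $i$ in group $g$ is referred to as the item response function (IRF). The IRF of the two-parameter logistic (2PL) model [37] is defined as

$$P(X_{gi} = 1 \mid \theta) = \Psi\big(a_{gi}(\theta - b_{gi})\big), \tag{2}$$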

where $a_{gi}$ and $b_{gi}$ denote the item discrimination and the item difficulty, respectively, and $\Psi(x) = [1 + \exp(-x)]^{-1}$ is the logistic distribution function.

The 2PL model in (2) can be equivalently expressed with item intercepts $\nu_{gi}$ instead of item difficulties $b_{gi}$. The 2PL model is then parametrized as

$$P(X_{gi} = 1 \mid \theta) = \Psi(\nu_{gi} + a_{gi}\theta), \tag{3}$$

where $\nu_{gi} = -a_{gi} b_{gi}$ or, equivalently, $b_{gi} = -\nu_{gi}/a_{gi}$.

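The parameters of the 2PL model can be consistently estimated using marginal maximum likelihood estimation (MML; [38,39,40]). When estimating the 2PL model (2) separately in group $g$, the identification constraints $\mu_g = 0$ and $\sigma_g = 1$ must be applied. The identified item parameters $a_{gi}^\ast$ and $b_{gi}^\ast$ in this specification are given as

$$a_{gi}^\ast = \sigma_g a_{gi} \quad \text{and} \quad b_{gi}^\ast = \frac{b_{gi} - \mu_g}{\sigma_g}. \tag{4}$$

Accordingly, the identified item intercepts are $\nu_{gi}^\ast = -a_{gi}^\ast b_{gi}^\ast = \nu_{gi} + a_{gi}\mu_g$. Estimates of these identified parameters are denoted by $\hat a_{gi}$, $\hat b_{gi}$, and $\hat\nu_{gi}$ in the following.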
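As a small numerical check of the identification relations for a separate calibration, namely $a_{gi}^\ast = \sigma_g a_{gi}$, $b_{gi}^\ast = (b_{gi} - \mu_g)/\sigma_g$, and $\nu_{gi}^\ast = -a_{gi}^\ast b_{gi}^\ast$, the following Python sketch evaluates the 2PL IRF for one item in three ways. The item and distribution parameters and the helper names `logistic` and `identified_2pl` are hypothetical and only serve this illustration.

```python
import math


def logistic(x):
    """Logistic distribution function Psi(x) = 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def identified_2pl(a, b, mu, sigma):
    """Identified 2PL parameters when a group is calibrated with mean 0 and SD 1."""
    a_star = sigma * a
    b_star = (b - mu) / sigma
    nu_star = -a_star * b_star  # intercept parametrization: nu = -a * b
    return a_star, b_star, nu_star


# Hypothetical item (a, b) and group distribution (mu, sigma)
a, b, mu, sigma = 1.2, 0.5, 0.3, 1.4
a_star, b_star, nu_star = identified_2pl(a, b, mu, sigma)

theta = 0.8
z = (theta - mu) / sigma                        # standardized trait in the separate calibration
p_original = logistic(a * (theta - b))          # IRF with group-specific parameters
p_difficulty = logistic(a_star * (z - b_star))  # IRF with identified difficulty
p_intercept = logistic(nu_star + a_star * z)    # IRF with identified intercept
print(p_original, p_difficulty, p_intercept)
```

The three probabilities coincide: a separate calibration only absorbs the group distribution into the identified item parameters, and linking is the step that later separates the two again.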

2.2. Continuous Items

The unidimensional factor model for continuous items in CFA is defined as

$$X_{gi} = \nu_{gi} + a_{gi}\theta + e_{gi}, \tag{5}$$

where $\nu_{gi}$ is the item intercept and $a_{gi}$ is the item discrimination. The residual variables $e_{gi}$ are uncorrelated with the factor variable $\theta$.

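The parameters of the unidimensional factor model (5) can be estimated with maximum likelihood [1,41]. When estimating the unidimensional model (5) separately in group $g$, the identification constraints $\mu_g = 0$ and $\sigma_g = 1$ must again be applied. Under this specification, the identified item parameters $a_{gi}^\ast$ and $\nu_{gi}^\ast$ are expressed as

$$a_{gi}^\ast = \sigma_g a_{gi} \quad \text{and} \quad \nu_{gi}^\ast = \nu_{gi} + a_{gi}\mu_g. \tag{6}$$

As for dichotomous items, item difficulties can be defined as $b_{gi} = -\nu_{gi}/a_{gi}$, with identified values $b_{gi}^\ast = -\nu_{gi}^\ast/a_{gi}^\ast = (b_{gi} - \mu_g)/\sigma_g$.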
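The same logic can be checked for continuous items. The sketch below uses hypothetical parameter values and hypothetical helper names. It computes the identified parameters $a_{gi}^\ast = \sigma_g a_{gi}$ and $\nu_{gi}^\ast = \nu_{gi} + a_{gi}\mu_g$ and confirms that the model-implied item means and covariances do not change when the group is calibrated with $\mu_g = 0$ and $\sigma_g = 1$. It also checks the implied difficulties, assuming they are defined as $b_{gi} = -\nu_{gi}/a_{gi}$ as in the 2PL case.

```python
def identified_cfa(nu, a, mu, sigma):
    """Identified intercepts and discriminations (group calibrated with mean 0, SD 1)."""
    a_star = [sigma * ai for ai in a]
    nu_star = [ni + ai * mu for ni, ai in zip(nu, a)]
    return nu_star, a_star


def implied_moments(nu, a, psi, mu, sigma):
    """Model-implied means and covariances of X_i = nu_i + a_i * theta + e_i."""
    n = len(nu)
    means = [nu[i] + a[i] * mu for i in range(n)]
    cov = [[a[i] * a[j] * sigma ** 2 + (psi[i] if i == j else 0.0) for j in range(n)]
           for i in range(n)]
    return means, cov


# Hypothetical item parameters and residual variances psi for one group
nu, a, psi = [0.0, 0.2, -0.1], [1.0, 0.8, 1.3], [1.0, 1.0, 1.0]
mu, sigma = 0.3, 1.5

nu_star, a_star = identified_cfa(nu, a, mu, sigma)
means_g, cov_g = implied_moments(nu, a, psi, mu, sigma)
means_id, cov_id = implied_moments(nu_star, a_star, psi, 0.0, 1.0)

# Identified difficulties -nu*/a* versus (b - mu)/sigma with b = -nu/a
b_star = [-ni / ai for ni, ai in zip(nu_star, a_star)]
b_check = [(-ni / ai - mu) / sigma for ni, ai in zip(nu, a)]
print(means_g, means_id)
```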

3. $L_p$ and $L_0$ Loss Functions

In this paper, DIF effects are treated as outliers that should be excluded from group comparisons [42,43,44,45,46,47,48]. Group differences are calculated using robust location measures, which are estimated via robust loss functions [49,50,51].

A flexible class of robust loss functions is given by the $L_p$ loss function (with $p > 0$; see [36,52,53,54,55]), defined as

$$\rho_p(x) = |x|^p. \tag{7}$$

The case $p = 2$ (i.e., the $L_2$ loss function) corresponds to the widely known square loss function, while $p = 1$ (i.e., the $L_1$ loss function) corresponds to median regression [56,57]. However, the $L_p$ loss function is not differentiable at $x = 0$ for $p \le 1$. To address this, a differentiable approximation of $\rho_p$ is given by

$$\rho_{p,\varepsilon}(x) = (x^2 + \varepsilon)^{p/2}, \tag{8}$$

where $\varepsilon > 0$ is a tuning parameter that controls the approximation error of $\rho_{p,\varepsilon}$ relative to $\rho_p$ [12]. In practice, values such as (see [12] for and ) or [36,58] have been effectively applied in various contexts.
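A minimal Python sketch of the exact $L_p$ loss $\rho_p(x) = |x|^p$ and its differentiable approximation $\rho_{p,\varepsilon}(x) = (x^2 + \varepsilon)^{p/2}$ follows. The value $\varepsilon = 0.01$ used below is an assumption for illustration only.

```python
def loss_lp(x, p):
    """Exact L_p loss |x|^p with p > 0."""
    return abs(x) ** p


def loss_lp_approx(x, p, eps):
    """Differentiable approximation (x^2 + eps)^(p/2) of the L_p loss."""
    return (x * x + eps) ** (p / 2)


for x in (0.0, 0.05, 0.5, 2.0):
    exact = loss_lp(x, p=0.5)
    approx = loss_lp_approx(x, p=0.5, eps=0.01)
    print(f"x = {x:4.2f}: exact = {exact:.4f}, approximation = {approx:.4f}")
```

The approximation error is largest at $x = 0$, where $\rho_{p,\varepsilon}(0) = \varepsilon^{p/2}$ instead of 0, and it becomes small for larger $|x|$. With $p = 2$ and $\varepsilon = 0$, the square loss is recovered exactly.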

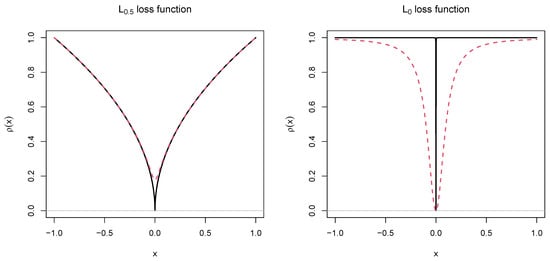

The left panel in Figure 1 depicts the exact $L_{0.5}$ loss function $\rho_{0.5}$ as a black solid line and its approximation $\rho_{0.5,\varepsilon}$ as a red dashed line. The two functions are nearly identical except near the nondifferentiable point $x = 0$.

Figure 1.

$L_{0.5}$ loss function (left panel) and $L_0$ loss function (right panel). The exact functions are displayed as black solid lines, while their differentiable approximations are displayed as red dashed lines.

As an alternative to the $L_p$ loss function, the $L_0$ loss function [59,60] is defined as

$$\rho_0(x) = \mathbb{1}(x \neq 0), \tag{9}$$

where $\mathbb{1}$ denotes the indicator function. This loss function takes the value 0 for $x = 0$ and 1 for $x \neq 0$, effectively counting the number of nonzero deviations. The $L_0$ loss function is particularly well suited for scenarios involving partial invariance, where only a small number of DIF effects deviate from zero. An approximation of this loss function is given by (see [61])

$$\rho_{0,\varepsilon}(x) = \frac{x^2}{x^2 + \varepsilon}, \tag{10}$$

where $\varepsilon > 0$ is a tuning parameter. The choice has been shown to perform well across various settings [62,63].
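The $L_0$ loss and a smooth approximation of the form $\rho_{0,\varepsilon}(x) = x^2/(x^2 + \varepsilon)$ can be sketched in the same way. The DIF values and $\varepsilon = 0.01$ below are hypothetical choices for illustration.

```python
def loss_l0(x):
    """Exact L_0 loss: 1 for a nonzero deviation, 0 otherwise."""
    return 0.0 if x == 0 else 1.0


def loss_l0_approx(x, eps):
    """Smooth approximation x^2 / (x^2 + eps) of the L_0 loss (eps > 0)."""
    return x * x / (x * x + eps)


# Hypothetical DIF effects of six items
dif = [0.0, 0.0, 0.6, -0.6, 0.0, 0.02]
count_exact = sum(loss_l0(d) for d in dif)
count_approx = sum(loss_l0_approx(d, eps=0.01) for d in dif)
print(count_exact, round(count_approx, 3))
```

The exact loss counts all three nonzero deviations, whereas the approximation counts the two large DIF effects almost fully and adds only a small amount for the near-zero deviation of 0.02.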

In the author's previous work [36], the $L_0$ loss function was approximated using the $L_p$ loss function with a very small value of $p$. However, in our experience based on simulation studies involving structural equation models [62], the approximation (10) outperforms the differentiable approximation of the $L_p$ loss function in (8) in terms of the precision of parameter estimates. Consequently, this article focuses solely on the approximation (10) of the $L_0$ loss function.

The right panel in Figure 1 depicts the exact $L_0$ loss function $\rho_0$ as a black solid line and its approximation $\rho_{0,\varepsilon}$ as a red dashed line. Note that $\rho_0$ is even discontinuous at $x = 0$, and there are moderate deviations of the approximation $\rho_{0,\varepsilon}$ from the exact loss function $\rho_0$.

The loss function with , such as , is preferred over in situations involving asymmetric error distributions (e.g., outliers). For instance, all outlying DIF effects in one group might be either positive or negative, creating a scenario of unbalanced DIF. In such cases, the loss function has been found to perform inadequately.

4. Invariance Alignment

In this section, we review the estimation of the IA method proposed by Asparouhov and Muthén [12,14]. The IA method has been discussed for continuous items [12], dichotomous items [14], and polytomous items [64].

The originally proposed IA method estimates group means $\mu_g$ and group SDs $\sigma_g$ in a single step. However, it has been shown that the optimization can be split into two successive steps, as in HL [36]: first, the $\sigma_g$ are estimated, and then the $\mu_g$. The IA method relies on estimated item discriminations $\hat a_{gi}$ (or the log-transformed item discriminations $\log \hat a_{gi}$) and item intercepts $\hat\nu_{gi}$ ($i = 1, \ldots, I$; $g = 1, \ldots, G$).

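First, group SDs $\sigma_g$ can be obtained by minimizing the optimization function

$$F_1(\boldsymbol{\sigma}) = \sum_{i=1}^{I} \sum_{g<h} \rho\left(\frac{\hat a_{gi}}{\sigma_g} - \frac{\hat a_{hi}}{\sigma_h}\right), \tag{11}$$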

where $\rho$ represents the $L_{0.5}$ or $L_0$ loss function. Note that the original IA method also involves weights for pairs of groups $g$ and $h$, which account for different sample sizes in the optimization function. We omit these weights here because they become irrelevant when all groups have equal sample sizes.

For identification purposes, one can either set $\sigma_1 = 1$ or apply the constraint $\prod_{g=1}^{G} \sigma_g = 1$; the latter constraint is implemented in the popular Mplus software [65].

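As an alternative to the optimization function in (11), the log-transformed group SDs $s_g = \log \sigma_g$ can be estimated based on log-transformed item discriminations by minimizing

$$F_2(\boldsymbol{s}) = \sum_{i=1}^{I} \sum_{g<h} \rho\big(\log \hat a_{gi} - s_g - \log \hat a_{hi} + s_h\big), \tag{12}$$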

where the group SDs are then estimated as $\hat\sigma_g = \exp(\hat s_g)$.
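To make the first IA step concrete, the following Python sketch minimizes the optimization function (12) based on log-transformed item discriminations for $G = 3$ groups with $s_1 = 0$. It assumes error-free identified discriminations $\sigma_g a_i$ with one hypothetical outlying discrimination in the third group, the approximation $(x^2 + \varepsilon)^{p/2}$ with $p = 0.5$ and an assumed $\varepsilon = 0.01$, and a simple coarse-to-fine grid search (`grid_min_2d`, a hypothetical helper) in place of the gradient-based optimizers used in practice. It is not meant to reproduce the sirt implementation.

```python
import itertools
import math


def rho(x, p=0.5, eps=0.01):
    """Differentiable approximation (x^2 + eps)^(p/2) of the L_p loss."""
    return (x * x + eps) ** (p / 2)


def ia_sd_objective(s, log_a, p=0.5, eps=0.01):
    """Optimization function (12): sum over items i and group pairs g < h."""
    total = 0.0
    for g, h in itertools.combinations(range(len(log_a)), 2):
        for la_g, la_h in zip(log_a[g], log_a[h]):
            total += rho(la_g - s[g] - la_h + s[h], p, eps)
    return total


def grid_min_2d(f, half_width=2.0, n=41, rounds=4):
    """Coarse-to-fine grid search over two parameters, zooming in each round."""
    cx = cy = 0.0
    for _ in range(rounds):
        step = 2 * half_width / (n - 1)
        grid = [(cx - half_width + j * step, cy - half_width + k * step)
                for j in range(n) for k in range(n)]
        cx, cy = min(grid, key=lambda xy: f(*xy))
        half_width = 2 * step
    return cx, cy


# Hypothetical data: G = 3 groups, I = 6 items, no sampling error
a_base = [1.0, 0.8, 1.2, 1.5, 0.9, 1.1]
sigma_true = [1.0, 1.5, 0.8]
log_a = [[math.log(sg * ai) for ai in a_base] for sg in sigma_true]
log_a[2][0] += math.log(1.8)  # one outlying (DIF) discrimination in group 3

# Identification: s_1 = log(sigma_1) = 0; s_2 and s_3 are estimated
s2, s3 = grid_min_2d(lambda u, v: ia_sd_objective([0.0, u, v], log_a))
sigma_hat = [1.0, math.exp(s2), math.exp(s3)]
print([round(x, 3) for x in sigma_hat])
```

Passing `p=2` to `ia_sd_objective` turns the criterion into a least-squares criterion, which makes it easy to see how much more strongly the outlying discrimination then pulls the estimate of $\sigma_3$.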

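In the second step, group means $\mu_g$ are estimated based on the previously estimated group SDs $\hat\sigma_g$ by minimizing

$$F_3(\boldsymbol{\mu}) = \sum_{i=1}^{I} \sum_{g<h} \rho\left(\hat\nu_{gi} - \frac{\hat a_{gi}}{\hat\sigma_g}\mu_g - \hat\nu_{hi} + \frac{\hat a_{hi}}{\hat\sigma_h}\mu_h\right). \tag{13}$$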

The originally proposed IA method uses the $L_{0.5}$ loss function, that is, the power $p = 0.5$.
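The second IA step can be sketched analogously by minimizing the optimization function (13) over $\mu_2$ and $\mu_3$ with $\mu_1 = 0$. In this hypothetical, error-free example, one uniform DIF effect of 0.6 is added to an intercept in group 2, the data-generating SDs stand in for first-step estimates, and $\varepsilon = 0.01$ is assumed. The grid search is again only an illustrative stand-in for a proper optimizer.

```python
import itertools


def rho(x, p=0.5, eps=0.01):
    """Differentiable approximation (x^2 + eps)^(p/2) of the L_p loss."""
    return (x * x + eps) ** (p / 2)


def ia_mean_objective(mu, nu_hat, a_hat, sigma_hat, p=0.5, eps=0.01):
    """Optimization function (13) for group means, given estimated SDs."""
    total = 0.0
    for g, h in itertools.combinations(range(len(nu_hat)), 2):
        for i in range(len(nu_hat[g])):
            r = (nu_hat[g][i] - a_hat[g][i] / sigma_hat[g] * mu[g]
                 - nu_hat[h][i] + a_hat[h][i] / sigma_hat[h] * mu[h])
            total += rho(r, p, eps)
    return total


def grid_min_2d(f, half_width=2.0, n=41, rounds=4):
    """Coarse-to-fine grid search over two parameters, zooming in each round."""
    cx = cy = 0.0
    for _ in range(rounds):
        step = 2 * half_width / (n - 1)
        grid = [(cx - half_width + j * step, cy - half_width + k * step)
                for j in range(n) for k in range(n)]
        cx, cy = min(grid, key=lambda xy: f(*xy))
        half_width = 2 * step
    return cx, cy


# Hypothetical data-generating values for G = 3 groups and I = 6 items
a_base = [1.0, 0.8, 1.2, 1.5, 0.9, 1.1]
nu_base = [0.2, -0.5, 0.0, 0.4, -0.3, 0.1]
mu_true, sigma_true = [0.0, 0.3, -0.5], [1.0, 1.5, 0.8]

# Identified parameters: a*_gi = sigma_g * a_i and nu*_gi = nu_i + a_i * mu_g
a_hat = [[sg * ai for ai in a_base] for sg in sigma_true]
nu_hat = [[ni + ai * mg for ni, ai in zip(nu_base, a_base)] for mg in mu_true]
nu_hat[1][1] += 0.6  # one uniform DIF effect in group 2

# The data-generating SDs stand in for the first-step estimates
mu2, mu3 = grid_min_2d(
    lambda u, v: ia_mean_objective([0.0, u, v], nu_hat, a_hat, sigma_true))
print(round(mu2, 3), round(mu3, 3))
```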

In the Mplus implementation of the loss function with and , the default tuning parameter in the differentiable approximation of the loss function is [36,63,65]. However, selecting may be beneficial in many contexts [63,66]. The choice in the loss function was found to yield the best performance [66].

5. Haberman Linking

The originally proposed HL method [26] estimates group means $\mu_g$ and group SDs $\sigma_g$ based on log-transformed item discriminations $\log \hat a_{gi}$ and item difficulties $\hat b_{gi}$.

In the first step, the log-transformed group SDs $s_g = \log \sigma_g$ ($g = 1, \ldots, G$) are estimated. In the second step, the group means $\mu_g$ are estimated. For identification purposes, the constraints $s_1 = 0$ (implying $\sigma_1 = 1$) and $\mu_1 = 0$ are imposed.

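The log-transformed SDs $s_g$ and the log-transformed joint item discriminations $\alpha_i$, where $a_i = \exp(\alpha_i)$ for $i = 1, \ldots, I$, are obtained by minimizing the following optimization function:

$$H_1(\boldsymbol{s}, \boldsymbol{\alpha}) = \sum_{g=1}^{G} \sum_{i=1}^{I} \rho\big(\log \hat a_{gi} - s_g - \alpha_i\big), \tag{14}$$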

where $\rho$ is the $L_{0.5}$ or $L_0$ loss function. The original HL method uses the $L_2$ loss function [26]. However, because the distribution parameters should be estimated consistently without being influenced by outlying DIF effects, this article employs differentiable approximations of the robust loss functions $L_{0.5}$ and $L_0$.
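Unlike IA, the first HL step estimates joint log-transformed discriminations $\alpha_i$ together with the $s_g$. The following Python sketch only evaluates the optimization function (14), rather than minimizing it. With hypothetical error-free data and one outlying discrimination, it shows that the data-generating values attain a smaller criterion value than shifted values, and that under the square loss ($p = 2$, $\varepsilon = 0$) only the outlying discrimination contributes. The data values, $\varepsilon = 0.01$, and the function names are assumptions.

```python
import math


def rho(x, p=0.5, eps=0.01):
    """Differentiable approximation (x^2 + eps)^(p/2) of the L_p loss."""
    return (x * x + eps) ** (p / 2)


def hl_sd_objective(s, alpha, log_a, p=0.5, eps=0.01):
    """Optimization function (14): residuals log(a_gi) - s_g - alpha_i."""
    return sum(rho(log_a[g][i] - s[g] - alpha[i], p, eps)
               for g in range(len(log_a)) for i in range(len(alpha)))


# Hypothetical data: identified discriminations sigma_g * a_i and one DIF effect
a_base = [1.0, 0.8, 1.2, 1.5, 0.9, 1.1]
sigma_true = [1.0, 1.5, 0.8]
log_a = [[math.log(sg * ai) for ai in a_base] for sg in sigma_true]
log_a[2][0] += math.log(1.8)

s_true = [math.log(sg) for sg in sigma_true]  # s_1 = 0 fixes the scale
alpha_true = [math.log(ai) for ai in a_base]  # joint log discriminations

f_true = hl_sd_objective(s_true, alpha_true, log_a)
f_shifted = hl_sd_objective([s_true[0], s_true[1] + 0.1, s_true[2]], alpha_true, log_a)
print(f_true < f_shifted)
```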

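Subsequently, group means $\mu_g$ and joint item difficulties $b_i$ are determined by minimizing

$$H_2(\boldsymbol{\mu}, \boldsymbol{b}) = \sum_{g=1}^{G} \sum_{i=1}^{I} \rho\big(\hat\sigma_g \hat b_{gi} + \mu_g - b_i\big), \tag{15}$$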

where the estimated SDs $\hat\sigma_g$ from the first step are inserted. As with the optimization function (14), the original HL method uses the $L_2$ loss function.

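As an alternative to the optimization function in (15), a modified HL optimization function uses item intercepts $\hat\nu_{gi}$ instead of item difficulties $\hat b_{gi}$ (see also [36]). This modification is motivated by the role of item intercepts in IA. In this adaptation of HL, the group means $\mu_g$ and joint item intercepts $\nu_i$ are determined by minimizing

$$H_3(\boldsymbol{\mu}, \boldsymbol{\nu}) = \sum_{g=1}^{G} \sum_{i=1}^{I} \rho\left(\hat\nu_{gi} - \frac{\hat a_{gi}}{\hat\sigma_g}\mu_g - \nu_i\right). \tag{16}$$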
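One way to see how the two second-step HL criteria differ is to look at a single residual. Assuming that an item in group $g$ has a uniform DIF effect $\delta$ in its intercept, the intercept-based residual in (16) equals $\delta$, while the difficulty-based residual in (15) equals $-\delta/a_i$, so the same intercept DIF is rescaled by the item discrimination. All values in the following Python sketch are hypothetical.

```python
def resid_difficulty(b_hat, sigma_hat, mu, b_joint):
    """Residual in (15): sigma_g * b_hat_gi + mu_g - b_i."""
    return sigma_hat * b_hat + mu - b_joint


def resid_intercept(nu_hat, a_hat, sigma_hat, mu, nu_joint):
    """Residual in (16): nu_hat_gi - (a_hat_gi / sigma_g) * mu_g - nu_i."""
    return nu_hat - a_hat / sigma_hat * mu - nu_joint


# One item in group g with a uniform DIF effect delta in its intercept
a, nu, mu, sigma, delta = 0.8, -0.4, 0.3, 1.5, 0.5
a_hat = sigma * a                  # identified discrimination
nu_hat = nu + delta + a * mu       # identified intercept including DIF
b_hat = -nu_hat / a_hat            # identified difficulty
b_joint = -nu / a                  # DIF-free joint difficulty

r15 = resid_difficulty(b_hat, sigma, mu, b_joint)
r16 = resid_intercept(nu_hat, a_hat, sigma, mu, nu)
print(round(r15, 4), round(r16, 4))  # r15 equals -delta / a, r16 equals delta
```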

Research comparing the performance of the optimization functions (15) and (16) in HL is lacking, particularly research comparing HL based on these optimization functions with IA. As an exception, the author's precursor article [36] included these two specifications, but they were analyzed only for a moderate or large number of groups, with a fixed DIF effect size and a fixed number of items. This article aims to provide additional insights into the performance of these two specifications for a smaller number of groups and a broader range of data-generating models.

6. Simulation Study 1: Dichotomous Items

6.1. Method

The 2PL model for groups was used as the IRF in the data-generating model. The latent factor variable was normally distributed within each group, with group means , , and . The SDs were , , and .

The simulation study was conducted for $I = 10$ and $I = 20$ items. Group-specific parameters $a_{gi}$ and $b_{gi}$ for items $i = 1, \ldots, I$ and groups $g = 1, 2, 3$ were based on fixed base item parameters, with DIF effects remaining constant across replications within a simulation condition. We now describe the item parameter choice in the $I = 10$ item condition. For 10 items, the base item discriminations were all set to 1.00. The base item difficulties were set to 0.21, −1.47, 0.09, 0.55, −0.67, 0.77, 0.99, −1.75, 0.10, and 1.17, resulting in a mean item difficulty of 0.000 and an SD of 0.999. For the $I = 20$ condition, the item parameters were duplicated. The base item parameters are also available at https://osf.io/kcnyu (accessed on 22 November 2024).
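The reported summary statistics of the base item difficulties can be recomputed from the listed values with Python's standard `statistics` module, where `stdev` uses the denominator $n - 1$. Because the listed difficulties are rounded to two decimals, the recomputed mean is about $-0.001$, which agrees with the reported 0.000 up to rounding.

```python
import statistics

# Base item difficulties of the I = 10 condition (rounded values as listed)
b_base = [0.21, -1.47, 0.09, 0.55, -0.67, 0.77, 0.99, -1.75, 0.10, 1.17]
b_mean = statistics.mean(b_base)
b_sd = statistics.stdev(b_base)  # sample SD with denominator n - 1
print(round(b_mean, 3), round(b_sd, 3))
```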

Fixed uniform DIF effects were assumed; that is, DIF could occur in item difficulties, while item discriminations were not prone to DIF. Specifically, group-specific item difficulties were simulated as $b_{gi} = b_i + c_{gi}\delta$, where the factor $c_{gi}$ was chosen as either 1 or −1 for DIF items, or 0 for DIF-free items. In the first group, the factor was 1 for the first item and −1 for the second item, while all other items did not exhibit DIF. In the second group, DIF effects were simulated for the third and fourth items with a factor of 1. In the third group, DIF effects were simulated for the fifth and sixth items with a factor of −1. Note that the DIF effects cancel out in the first group but are all positive in the second group and all negative in the third group, resulting in unbalanced DIF. The DIF effect size $\delta$ was set to 0, 0.3, and 0.6, representing no DIF, small DIF, and large DIF, respectively.
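The DIF design of the $I = 10$ condition can be written down explicitly. The following Python sketch assumes the additive form $b_{gi} = b_i + c_{gi}\delta$ for the group-specific difficulties (the names `dif_factors` and `group_difficulties` are hypothetical) and checks that the net DIF is zero in the first group, positive in the second, and negative in the third.

```python
def dif_factors(n_items=10):
    """DIF factors c_gi for the three groups (six DIF items, as in the design)."""
    c = [[0] * n_items for _ in range(3)]
    c[0][0], c[0][1] = 1, -1    # group 1: DIF effects cancel out
    c[1][2], c[1][3] = 1, 1     # group 2: positive DIF (unbalanced)
    c[2][4], c[2][5] = -1, -1   # group 3: negative DIF (unbalanced)
    return c


def group_difficulties(b_base, delta):
    """Group-specific difficulties b_gi = b_i + c_gi * delta (assumed additive form)."""
    c = dif_factors(len(b_base))
    return [[b + c_g * delta for b, c_g in zip(b_base, c[g])] for g in range(3)]


b_base = [0.21, -1.47, 0.09, 0.55, -0.67, 0.77, 0.99, -1.75, 0.10, 1.17]
b_groups = group_difficulties(b_base, delta=0.6)  # large DIF condition
net_dif = [sum(bg) - sum(b_base) for bg in b_groups]
print([round(x, 2) for x in net_dif])
```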

The sample sizes per group were selected as , 500, 1000, and 2000 to represent typical sample sizes in small- to large-scale testing applications of the 2PL model [67,68].

In each of the 4 (sample size N) × 3 (DIF effect size $\delta$) × 2 (number of items I) = 24 cells of the simulation, 3000 replications were conducted. The 2PL model was first estimated separately in each of the three groups. Linking was then performed with four specifications. First, IA was carried out using two approaches: the original method based on untransformed item discriminations ([12]; method IA1, using optimization functions (11) and (13)) and an alternative method based on log-transformed item discriminations, similar to the approach used in HL (method IA2, using optimization functions (12) and (13)). In addition, we used the originally proposed HL ([26]; method HL1, using optimization functions (14) and (15)), which relies on item difficulties, and an HL implementation based on item intercepts (method HL2, using optimization functions (14) and (16)). These four specifications were combined with both the $L_{0.5}$ (i.e., $p = 0.5$) and $L_0$ (i.e., $p = 0$) loss functions, resulting in a total of eight linking methods. The loss function was not considered, as it has been shown in [36] to produce biased parameter estimates in IA and HL when unbalanced DIF is present. Furthermore, the simulated DIF effects were asymmetric, rendering the loss function ineffective in providing nearly unbiased linking estimates. For identification purposes, the distribution parameters in the first group were fixed at $\mu_1 = 0$ and $\sigma_1 = 1$, while $\mu_g$ and $\sigma_g$ were freely estimated for the second and third groups ($g = 2, 3$).

We evaluated the bias and the root mean square error (RMSE) of the estimates $\hat\mu_g$ and $\hat\sigma_g$. Additionally, a relative RMSE was calculated, with IA1 using the $L_{0.5}$ loss function (i.e., $p = 0.5$) serving as the reference method (set to a relative RMSE of 100). To summarize the results across groups, the average absolute bias and average relative RMSE were computed for both the $\hat\mu_g$ and $\hat\sigma_g$ estimates across the second and third groups.
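The outcome measures can be sketched as follows, assuming the usual definitions: the bias is the mean estimate across replications minus the true value, the RMSE is the square root of the mean squared deviation from the true value, the relative RMSE is 100 times the ratio of a method's RMSE to the RMSE of the reference method, and the average absolute bias is the mean of the absolute biases over groups 2 and 3. The replication values below are hypothetical.

```python
import math


def bias(estimates, true_value):
    """Mean estimate across replications minus the true value."""
    return sum(estimates) / len(estimates) - true_value


def rmse(estimates, true_value):
    """Root mean square error across replications."""
    return math.sqrt(sum((e - true_value) ** 2 for e in estimates) / len(estimates))


def relative_rmse(estimates, reference_estimates, true_value):
    """RMSE relative to the reference method, scaled so that the reference equals 100."""
    return 100 * rmse(estimates, true_value) / rmse(reference_estimates, true_value)


def average_absolute_bias(biases):
    """Average of absolute biases across groups (here: groups 2 and 3)."""
    return sum(abs(b) for b in biases) / len(biases)


# Hypothetical estimates of mu_2 (true value 0.3) in four replications
true_mu2 = 0.3
reference = [0.25, 0.35, 0.32, 0.28]   # e.g., IA1 with p = 0.5
competitor = [0.27, 0.33, 0.31, 0.29]  # e.g., another linking method
print(round(bias(competitor, true_mu2), 4),
      round(relative_rmse(competitor, reference, true_mu2), 1))
```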

All analyses for this simulation study were carried out with the statistical software R (Version 4.4.1 [69]). The 2PL model was estimated with the sirt::rasch.mml2() function from the R package sirt (Version 4.2-89 [70]). HL and IA were estimated with the sirt::linking.haberman.lq() and sirt::invariance.alignment() functions from the same R package, respectively. Replication material for this simulation study can be accessed at https://osf.io/kcnyu (accessed on 22 November 2024).

6.2. Results

Table 1 summarizes the average absolute bias and average relative RMSE for the estimated group means $\hat\mu_g$. When $\delta = 0$ (no DIF), all methods exhibited minimal bias, with values near 0.00 for larger sample sizes N. As $\delta$ increased, bias grew across all methods. IA1, IA2, and HL2 performed similarly in terms of bias, while HL1 performed worst.

Table 1.

Simulation Study 1 (dichotomous items): Average absolute bias and average relative root mean square error (RMSE) for estimated group means $\hat\mu_g$ as a function of the DIF effect size $\delta$, number of items I, and sample size N.

In terms of RMSE, IA2 closely resembled IA1 for and . HL1 consistently showed the highest RMSE, although HL2 outperformed IA1 and IA2 in certain conditions with larger sample sizes. Increasing the number of items from 10 to 20 slightly reduced bias; however, bias converged to zero as sample sizes increased.

Regarding the loss function, $L_0$ resulted in less bias than $L_{0.5}$, but estimates based on $L_0$ were more variable, as reflected in higher RMSE values in most conditions. Overall, the HL2 method, as an alternative to HL1, was competitive with IA1 for estimating group means. HL2 offered particular advantages with large DIF effects (i.e., for ).

Table 2 presents the average absolute bias and average relative RMSE for the estimated SDs $\hat\sigma_g$. Across all conditions, bias was small for all methods, with slightly better performance observed at larger values of I and N. This outcome was expected, as DIF was introduced only in item difficulties, not in item discriminations.

Table 2.

Simulation Study 1 (dichotomous items): Average absolute bias and average relative root mean square error (RMSE) for estimated group standard deviations $\hat\sigma_g$ as a function of the DIF effect size $\delta$, number of items I, and sample size N.

RMSE values were generally higher for the HL methods than for the IA methods; however, these differences diminished as the sample size N increased. The $L_{0.5}$ loss function outperformed the $L_0$ loss function in terms of relative RMSE.

Overall, HL2 performed similarly to, if not better than, IA1 for dichotomous items. Only a few conditions favored $L_0$ over $L_{0.5}$.

7. Simulation Study 2: Continuous Items

7.1. Method

In this second simulation study, HL and IA were compared for continuous items. The data-generating model followed the simulation from Asparouhov and Muthén [12]. Continuous item responses were simulated using a unidimensional factor model with groups. The normally distributed factor variable was measured by either or normally distributed items.

The group means of the factor variable were set to 0, 0.3, and 1.0, while the group SDs were set to 1, 1.5, and 1.2, respectively. Item responses were generated with uniform DIF and nonuniform DIF. The following describes the item parameters in the condition with items. Each group had one noninvariant item intercept, , and one noninvariant item discrimination, . For all groups, invariant item discriminations and residual variances of the indicator variables were set to (), and invariant item intercepts were set to . The noninvariant item parameters in the first group were and . The noninvariant item parameters in the second group were and . The noninvariant item parameters in the third group were and . In the item condition, the item parameters were doubled.

The sample sizes , 500, 1000, and 2000 were selected to reflect typical applications of the IA method [15,16].

In each of the 4 (sample size N) × 2 (number of items I) = 8 cells of the simulation, 3000 replications were performed. In the first step, the unidimensional factor model was estimated using the R (Version 4.4.1 [69]) function sirt::invariance_alignment_cfa_config() from the R package sirt (Version 4.2-89 [70]). Subsequently, the linking methods HL1, HL2, IA1, and IA2, as described in Simulation Study 1 (see Section 6.1), were applied with the powers $p = 0.5$ and $p = 0$ in the loss function. Consistent with the previous simulation study, the average absolute bias and average relative RMSE were used as outcome measures, and IA1 with $p = 0.5$ again served as the reference method for calculating the relative RMSE. As in Simulation Study 1, the HL and IA methods were implemented using the sirt::linking.haberman.lq() and sirt::invariance.alignment() functions, respectively. Replication material for this simulation study is available at https://osf.io/kcnyu (accessed on 22 November 2024).

7.2. Results

Table 3 presents the average absolute bias and average relative RMSE for the estimated group means ($\hat\mu_g$) and group standard deviations ($\hat\sigma_g$). The bias of both estimates decreased as the sample size N increased. For sample sizes of at least 1000, the bias across all methods became negligible (i.e., 0.01 or lower), particularly under . The HL2 method consistently outperformed HL1, especially at smaller N and I, demonstrating the advantage of using item intercepts over item difficulties in HL. Additionally, HL2 outperformed both IA1 and IA2 across all conditions of this simulation study for and . Lower RMSE values were observed for $L_0$ compared to $L_{0.5}$ across the four linking methods in many scenarios. The $L_0$ loss function performed less favorably than the $L_{0.5}$ loss function only at small sample sizes.

Table 3.

Simulation Study 2 (continuous items): Average absolute bias and average relative root mean square error (RMSE) for estimated group means $\hat\mu_g$ and estimated group standard deviations $\hat\sigma_g$ as a function of the number of items I and sample size N.

8. Discussion

In this paper, we compared the linking methods robust HL and IA for the $L_{0.5}$ (i.e., $p = 0.5$) and $L_0$ (i.e., $p = 0$) loss functions. Robust HL performed similarly to, if not better than, IA when it was applied in a variant relying on item intercepts instead of item difficulties. Importantly, the originally proposed HL, which relies on item difficulties, was clearly inferior to IA. The findings for the choice between $L_{0.5}$ and $L_0$ were mixed. In the first simulation study, involving dichotomous items, RMSE values were larger for the $L_0$ loss function. In contrast, RMSE values were smaller for $L_0$ in the second simulation study, involving continuous items. However, in both studies, the bias in estimated group means and SDs was smaller for $L_0$ than for $L_{0.5}$, indicating that the lower bias of $L_0$ can come at the cost of more variable parameter estimates.

As with any simulation study, our study also has several limitations. First, future studies might focus on comparing HL and IA for polytomous item responses. Second, we applied HL and IA to two-parameter models. The linking methods could also be applied in estimation settings for one-parameter models, namely, the Rasch model in IRT for dichotomous items [71] or the tau-equivalent measurement model for continuous items [72]. Alternatively, the item discriminations could be assumed to be invariant across groups, while item intercepts are freely estimated in the first step. In the second step, only item intercepts are linked, reducing the uncertainty due to estimated item discriminations during linking. Third, linking errors and total error estimation [73] could be developed and evaluated for IA.

In contrast to IA, HL additionally estimates joint item parameters, and both simulation studies revealed that these additional parameters did not cause efficiency losses in the estimated group means and SDs. Hence, HL might be preferred over IA for statistical reasons. HL also has the advantage over IA that joint item parameters (i.e., parameters that can be interpreted as the common item parameters in a partial invariance situation) are estimated simultaneously. In contrast, IA requires a subsequent estimation step to determine joint item parameter estimates and DIF effects.

Finally, if robust HL or IA methods are utilized in the linking procedure, items with large DIF effects are essentially excluded from group comparisons. This presupposes that the construct being measured does not change when these items are eliminated from comparisons involving the factor variable, which implies that these DIF items are deemed construct-irrelevant. However, it might be dangerous to treat DIF items with robust linking functions in group comparisons if they are essentially part of the construct (i.e., they are construct-relevant; see [74,75,76,77,78], but see [79,80] for alternative views).

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This article only uses simulated datasets. Replication material for creating the simulated datasets in the simulation studies can be found at https://osf.io/kcnyu (accessed on 22 November 2024).

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| 2PL | Two-parameter logistic |
| CFA | Confirmatory factor analysis |
| DIF | Differential item functioning |
| HL | Haberman linking |
| IA | Invariance alignment |
| IRF | Item response function |
| IRT | Item response theory |
| MML | Marginal maximum likelihood |
| RMSE | Root mean square error |
| SD | Standard deviation |

References

- Bartholomew, D.J.; Knott, M.; Moustaki, I. Latent Variable Models and Factor Analysis: A Unified Approach; Wiley: New York, NY, USA, 2011. [Google Scholar] [CrossRef]

- Bock, R.D.; Gibbons, R.D. Item Response Theory; Wiley: Hoboken, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Chen, Y.; Li, X.; Liu, J.; Ying, Z. Item response theory—A statistical framework for educational and psychological measurement. arXiv 2021, arXiv:2108.08604. [Google Scholar] [CrossRef]

- Meredith, W. Measurement invariance, factor analysis and factorial invariance. Psychometrika 1993, 58, 525–543. [Google Scholar] [CrossRef]

- Millsap, R.E. Statistical Approaches to Measurement Invariance; Routledge: New York, NY, USA, 2011. [Google Scholar] [CrossRef]

- Mellenbergh, G.J. Item bias and item response theory. Int. J. Educ. Res. 1989, 13, 127–143. [Google Scholar] [CrossRef]

- Bauer, D.J. Enhancing measurement validity in diverse populations: Modern approaches to evaluating differential item functioning. Brit. J. Math. Stat. Psychol. 2023, 76, 435–461. [Google Scholar] [CrossRef]

- Holland, P.W.; Wainer, H. (Eds.) Differential Item Functioning: Theory and Practice; Lawrence Erlbaum: Hillsdale, NJ, USA, 1993. [Google Scholar] [CrossRef]

- Penfield, R.D.; Camilli, G. Differential item functioning and item bias. In Handbook of Statistics; Volume 26: Psychometrics; Rao, C.R., Sinharay, S., Eds.; Elsevier: Amsterdam, The Netherlands, 2007; pp. 125–167. [Google Scholar] [CrossRef]

- Thissen, D. A review of some of the history of factorial invariance and differential item functioning. Multivar. Behav. Res. 2024. epub ahead of print. [Google Scholar] [CrossRef]

- Wells, C.S. Assessing Measurement Invariance for Applied Research; Cambridge University Press: Cambridge, UK, 2021. [Google Scholar] [CrossRef]

- Asparouhov, T.; Muthén, B. Multiple-group factor analysis alignment. Struct. Equ. Model. 2014, 21, 495–508. [Google Scholar] [CrossRef]

- Asparouhov, T.; Muthén, B. Penalized structural equation models. Struct. Equ. Model. 2024, 31, 429–454. [Google Scholar] [CrossRef]

- Muthén, B.; Asparouhov, T. IRT studies of many groups: The alignment method. Front. Psychol. 2014, 5, 978. [Google Scholar] [CrossRef] [PubMed]

- Muthén, B.; Asparouhov, T. Recent methods for the study of measurement invariance with many groups: Alignment and random effects. Sociol. Methods Res. 2018, 47, 637–664. [Google Scholar] [CrossRef]

- Cieciuch, J.; Davidov, E.; Schmidt, P. Alignment optimization. Estimation of the most trustworthy means in cross-cultural studies even in the presence of noninvariance. In Cross-Cultural Analysis: Methods and Applications; Davidov, E., Schmidt, P., Billiet, J., Eds.; Routledge: Oxfordshire, UK, 2018; pp. 571–592.

- Pokropek, A.; Davidov, E.; Schmidt, P. A Monte Carlo simulation study to assess the appropriateness of traditional and newer approaches to test for measurement invariance. Struct. Equ. Model. 2019, 26, 724–744.

- Fischer, R.; Karl, J.A. A primer to (cross-cultural) multi-group invariance testing possibilities in R. Front. Psychol. 2019, 10, 1507.

- Han, H. Using measurement alignment in research on adolescence involving multiple groups: A brief tutorial with R. J. Res. Adolesc. 2024, 34, 235–242.

- Lai, M.H.C. Adjusting for measurement noninvariance with alignment in growth modeling. Multivar. Behav. Res. 2023, 58, 30–47.

- Leitgöb, H.; Seddig, D.; Asparouhov, T.; Behr, D.; Davidov, E.; De Roover, K.; Jak, S.; Meitinger, K.; Menold, N.; Muthén, B.; et al. Measurement invariance in the social sciences: Historical development, methodological challenges, state of the art, and future perspectives. Soc. Sci. Res. 2023, 110, 102805.

- Luong, R.; Flake, J.K. Measurement invariance testing using confirmatory factor analysis and alignment optimization: A tutorial for transparent analysis planning and reporting. Psychol. Methods 2023, 28, 905–924.

- Sideridis, G.; Alghamdi, M.H. Bullying in middle school: Evidence for a multidimensional structure and measurement invariance across gender. Children 2023, 10, 873.

- Tsaousis, I.; Jaffari, F.M. Identifying bias in social and health research: Measurement invariance and latent mean differences using the alignment approach. Mathematics 2023, 11, 4007.

- Wurster, S. Measurement invariance of non-cognitive measures in TIMSS across countries and across time. An application and comparison of multigroup confirmatory factor analysis, Bayesian approximate measurement invariance and alignment optimization approach. Stud. Educ. Eval. 2022, 73, 101143.

- Haberman, S.J. Linking Parameter Estimates Derived from an Item Response Model Through Separate Calibrations; (Research Report No. RR-09-40); Educational Testing Service: Princeton, NJ, USA, 2009.

- Kolen, M.J.; Brennan, R.L. Test Equating, Scaling, and Linking; Springer: New York, NY, USA, 2014.

- Lee, W.C.; Lee, G. IRT linking and equating. In The Wiley Handbook of Psychometric Testing: A Multidisciplinary Reference on Survey, Scale and Test; Irwing, P., Booth, T., Hughes, D.J., Eds.; Wiley: New York, NY, USA, 2018; pp. 639–673.

- Sansivieri, V.; Wiberg, M.; Matteucci, M. A review of test equating methods with a special focus on IRT-based approaches. Statistica 2017, 77, 329–352.

- Höft, L.; Bernholt, S. Longitudinal couplings between interest and conceptual understanding in secondary school chemistry: An activity-based perspective. Int. J. Sci. Educ. 2019, 41, 607–627.

- Liu, G.; Kim, H.J.; Lee, W.C.; Kim, Y. Comparison of Simultaneous Linking and Separate Calibration with Stocking-Lord Method; (CASMA Research Report Number 57); Center for Advanced Studies in Measurement and Assessment, University of Iowa: Iowa City, IA, USA, 2024; Available online: https://tinyurl.com/2bj6pbwn (accessed on 20 December 2024).

- Moehring, A.; Schroeders, U.; Wilhelm, O. Knowledge is power for medical assistants: Crystallized and fluid intelligence as predictors of vocational knowledge. Front. Psychol. 2018, 9, 28.

- Neuenschwander, M.P.; Mayland, C.; Niederbacher, E.; Garrote, A. Modifying biased teacher expectations in mathematics and German: A teacher intervention study. Learn. Individ. Differ. 2021, 87, 101995.

- Sewasew, D.; Schroeders, U.; Schiefer, I.M.; Weirich, S.; Artelt, C. Development of sex differences in math achievement, self-concept, and interest from grade 5 to 7. Contemp. Educ. Psychol. 2018, 54, 55–65.

- Avvisati, F.; Le Donné, N.; Paccagnella, M. A meeting report: Cross-cultural comparability of questionnaire measures in large-scale international surveys. Meas. Instrum. Soc. Sci. 2019, 1, 8.

- Robitzsch, A. Lp loss functions in invariance alignment and Haberman linking with few or many groups. Stats 2020, 3, 246–283.

- Birnbaum, A. Some latent trait models and their use in inferring an examinee’s ability. In Statistical Theories of Mental Test Scores; Lord, F.M., Novick, M.R., Eds.; Addison-Wesley: Reading, MA, USA, 1968; pp. 397–479.

- Aitkin, M. Expectation maximization algorithm and extensions. In Handbook of Item Response Theory, Volume 2: Statistical Tools; van der Linden, W.J., Ed.; CRC Press: Boca Raton, FL, USA, 2016; pp. 217–236.

- Bock, R.D.; Aitkin, M. Marginal maximum likelihood estimation of item parameters: Application of an EM algorithm. Psychometrika 1981, 46, 443–459.

- Glas, C.A.W. Maximum-likelihood estimation. In Handbook of Item Response Theory, Volume 2: Statistical Tools; van der Linden, W.J., Ed.; CRC Press: Boca Raton, FL, USA, 2016; pp. 197–216.

- Basilevsky, A.T. Statistical Factor Analysis and Related Methods: Theory and Applications; Wiley: New York, NY, USA, 2009.

- De Boeck, P. Random item IRT models. Psychometrika 2008, 73, 533–559.

- Halpin, P.F. Differential item functioning via robust scaling. Psychometrika 2024, 89, 796–821.

- Magis, D.; De Boeck, P. Identification of differential item functioning in multiple-group settings: A multivariate outlier detection approach. Multivar. Behav. Res. 2011, 46, 733–755.

- Robitzsch, A. Robust and nonrobust linking of two groups for the Rasch model with balanced and unbalanced random DIF: A comparative simulation study and the simultaneous assessment of standard errors and linking errors with resampling techniques. Symmetry 2021, 13, 2198.

- Robitzsch, A. Comparing robust linking and regularized estimation for linking two groups in the 1PL and 2PL models in the presence of sparse uniform differential item functioning. Stats 2023, 6, 192–208.

- Tutz, G.; Schauberger, G. A penalty approach to differential item functioning in Rasch models. Psychometrika 2015, 80, 21–43.

- Wang, W.; Liu, Y.; Liu, H. Testing differential item functioning without predefined anchor items using robust regression. J. Educ. Behav. Stat. 2022, 47, 666–692.

- Huber, P.J.; Ronchetti, E.M. Robust Statistics; Wiley: New York, NY, USA, 2009.

- Maronna, R.A.; Martin, R.D.; Yohai, V.J. Robust Statistics: Theory and Methods; Wiley: New York, NY, USA, 2006.

- Wilcox, R.R.; Keselman, H.J. Modern robust data analysis methods: Measures of central tendency. Psychol. Methods 2003, 8, 254–274.

- Giacalone, M.; Panarello, D.; Mattera, R. Multicollinearity in regression: An efficiency comparison between Lp-norm and least squares estimators. Qual. Quant. 2018, 52, 1831–1859.

- Lipovetsky, S. Optimal Lp-metric for minimizing powered deviations in regression. J. Mod. Appl. Stat. Methods 2007, 6, 20.

- Livadiotis, G. General fitting methods based on Lq norms and their optimization. Stats 2020, 3, 16–31.

- Sposito, V.A. On unbiased Lp regression estimators. J. Am. Stat. Assoc. 1982, 77, 652–653.

- Koenker, R.; Hallock, K.F. Quantile regression. J. Econ. Perspect. 2001, 15, 143–156.

- Koenker, R. Quantile regression: 40 years on. Annu. Rev. Econ. 2017, 9, 155–176.

- Robitzsch, A. Implementation aspects in regularized structural equation models. Algorithms 2023, 16, 446.

- Oelker, M.R.; Pößnecker, W.; Tutz, G. Selection and fusion of categorical predictors with L0-type penalties. Stat. Model. 2015, 15, 389–410.

- Oelker, M.R.; Tutz, G. A uniform framework for the combination of penalties in generalized structured models. Adv. Data Anal. Classif. 2017, 11, 97–120.

- O’Neill, M.; Burke, K. Variable selection using a smooth information criterion for distributional regression models. Stat. Comput. 2023, 33, 71.

- Robitzsch, A. L0 and Lp loss functions in model-robust estimation of structural equation models. Psych 2023, 5, 1122–1139.

- Robitzsch, A. Examining differences of invariance alignment in the Mplus software and the R package sirt. Mathematics 2024, 12, 770.

- Mansolf, M.; Vreeker, A.; Reise, S.P.; Freimer, N.B.; Glahn, D.C.; Gur, R.E.; Moore, T.M.; Pato, C.N.; Pato, M.T.; Palotie, A.; et al. Extensions of multiple-group item response theory alignment: Application to psychiatric phenotypes in an international genomics consortium. Educ. Psychol. Meas. 2020, 80, 870–909.

- Muthén, L.; Muthén, B. Mplus User’s Guide, Version 8.11; Muthén & Muthén: Los Angeles, CA, USA, 2024. Available online: https://www.statmodel.com/ (accessed on 22 November 2024).

- Robitzsch, A. Implementation aspects in invariance alignment. Stats 2023, 6, 1160–1178.

- Lietz, P.; Cresswell, J.C.; Rust, K.F.; Adams, R.J. (Eds.) Implementation of Large-Scale Education Assessments; Wiley: New York, NY, USA, 2017.

- Rutkowski, L.; von Davier, M.; Rutkowski, D. (Eds.) A Handbook of International Large-scale Assessment: Background, Technical Issues, and Methods of Data Analysis; Chapman Hall/CRC Press: London, UK, 2013.

- R Core Team. R: A Language and Environment for Statistical Computing; R Core Team: Vienna, Austria, 2024; Available online: https://www.R-project.org (accessed on 15 June 2024).

- Robitzsch, A. sirt: Supplementary Item Response Theory Models, 2024. R Package Version 4.2-89. Available online: https://github.com/alexanderrobitzsch/sirt (accessed on 13 November 2024).

- Rasch, G. Probabilistic Models for Some Intelligence and Attainment Tests; Danish Institute for Educational Research: Copenhagen, Denmark, 1960.

- Raykov, T.; Marcoulides, G.A. Introduction to Psychometric Theory; Routledge: London, UK, 2011.

- Monseur, C.; Berezner, A. The computation of equating errors in international surveys in education. J. Appl. Meas. 2007, 8, 323–335. Available online: https://bit.ly/2WDPeqD (accessed on 22 November 2024).

- Camilli, G. The case against item bias detection techniques based on internal criteria: Do item bias procedures obscure test fairness issues? In Differential Item Functioning: Theory and Practice; Holland, P.W., Wainer, H., Eds.; Erlbaum: Hillsdale, NJ, USA, 1993; pp. 397–417.

- Funder, D.C.; Gardiner, G. MIsgivings about measurement invariance. Eur. J. Pers. 2024, 38, 889–895.

- Robitzsch, A.; Lüdtke, O. Why full, partial, or approximate measurement invariance are not a prerequisite for meaningful and valid group comparisons. Struct. Equ. Model. 2023, 30, 859–870.

- Shealy, R.; Stout, W. A model-based standardization approach that separates true bias/DIF from group ability differences and detects test bias/DTF as well as item bias/DIF. Psychometrika 1993, 58, 159–194.

- Welzel, C.; Inglehart, R.F. Misconceptions of measurement equivalence: Time for a paradigm shift. Comp. Political Stud. 2016, 49, 1068–1094.

- Fischer, R.; Rudnev, M. From MIsgivings to MIse-en-scène: The role of invariance in personality science. Eur. J. Pers. 2024. Epub ahead of print.

- Meuleman, B.; Żółtak, T.; Pokropek, A.; Davidov, E.; Muthén, B.; Oberski, D.L.; Billiet, J.; Schmidt, P. Why measurement invariance is important in comparative research. A response to Welzel et al. (2021). Sociol. Methods Res. 2023, 52, 1401–1419.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).