Abstract

This paper introduces the EGTL (extract, generate, transfer, load) model, a theoretical framework designed to enhance the traditional ETL processes by integrating a novel ‘generate’ step utilizing generative artificial intelligence (GenAI). This enhancement optimizes data extraction and processing, presenting a high-level solution architecture that includes innovative data storage concepts: the Fusion and Alliance stores. The Fusion store acts as a virtual space for immediate data cleaning and profiling post-extraction, facilitated by GenAI, while the Alliance store serves as a collaborative data warehouse for both business users and AI processes. EGTL was developed to facilitate advanced data handling and integration within digital ecosystems. This study defines the EGTL solution design, setting the groundwork for future practical implementations and exploring the integration of best practices from data engineering, including DataOps principles and data mesh architecture. This research underscores how EGTL can improve the data engineering pipeline, illustrating the interactions between its components. The EGTL model was tested in the prototype web-based Hyperloop Decision-Making Ecosystem with tasks ranging from data extraction to code generation. Experiments demonstrated an overall success rate of 93% across five difficulty levels. Additionally, the study highlights key risks associated with EGTL implementation and offers comprehensive mitigation strategies.

1. Introduction

In the field of data engineering, efficient data handling and extraction using ETL processes form the cornerstone of managing complex, multivariate systems. Widely used in data engineering practices, traditional ETL processes face challenges posed by the increasing volume, velocity, and variety of data in the current big data landscape [1]. The advent of generative artificial intelligence (GenAI) technologies offers promising advantages to augment ETL frameworks and processes, yet the integration of GenAI remains underexplored.

The aim of this research is to propose the EGTL model—a theoretical approach designed to integrate GenAI within the ETL process, thereby enhancing its efficiency and effectiveness. This paper does not seek to empirically validate the model but rather to lay the foundational concepts and design, which will be subject to future empirical testing within the scope of subsequent research phases.

The primary research goal is to design a solution architecture for enhancing the ETL process with GenAI to illustrate how different components interact, correlate, and support each other.

By incorporating a “generate” step into the traditional ETL process, the EGTL model leverages GenAI for enhanced data handling and extraction. This step serves as a crucible for innovation to improve the data processing life cycle, allowing for dynamic data generation, cleaning, and profiling before the data progresses through the pipeline. This research answers the following questions:

- How to improve the ETL process?

- How does the E(G)TL model enhance data handling and extraction in multivariate systems compared to a traditional ETL processes?

- In what ways can GenAI within the E(G)TL framework improve data quality and extract new insights from data?

- How can the E(G)TL Model be tailored to support a decentralized data architecture data mesh while adhering to DataOps principles, enhancing decision-making ecosystems?

Central to the proposed model are the Fusion and Alliance stores. The Fusion store acts as a virtual melting pot where the data undergo cleaning and profiling immediately after extraction, facilitated by GenAI technologies. Meanwhile, the Alliance store serves as a collaborative data warehouse, supporting both business users and GenAI in a symbiotic data processing environment.

The EGTL model is envisioned as a blueprint for the reality of modern data engineering by allowing more structured, flexible, and coherent use of GenAI in data processing. It is aligned with the best practices highlighted within the growing field of DataOps, which is a set of principles rather than a specific tool or software. This study not only explores the theoretical underpinnings of the EGTL model but also glimpses into some practical applications. While the application of EGTL is designed for use in digital ecosystem, a use case is explored in the context of data optimization within the Hyperloop technology decision-making ecosystem.

By reimagining the ETL process through the lens of GenAI, this research contributes to the evolving discourse on data engineering, offering a comprehensive high-level framework for the efficient handling and extraction of data in multivariate systems. Exploration of the EGTL model aims to guide decision-making processes and foster innovation in the integration of AI technologies within data engineering pipelines corresponding with DataOps principles and data mesh architecture.

The EGTL model is designed with the modern IT code of ethics supporting diverse, inclusive descriptors and terminology to ensure that the model is free from any sort of stereotypes, slang, bias, or culture assumptions.

2. Materials and Methods

The EGTL model is aimed to process large amounts of structured and unstructured data, operate in the scope of relational and non-relational data, build simulations, and to provide analytics to the end business users. A high-level solution architecture must be designed to include all possible (known and unknown) data requirements for complex and interconnecting systems. It supports data quality of the given product, improves user experience, and helps to choose appropriate development and data engineering tools. Big data products rely heavily on the collection, processing, integration, and analysis of vast amounts of data from various sources. Orchestration of the data lifecycle by the data engineering field plays a critical role in ensuring that these data are accurate, complete, relevant, consistent, accessible, and actionable for decision-making purposes [2].

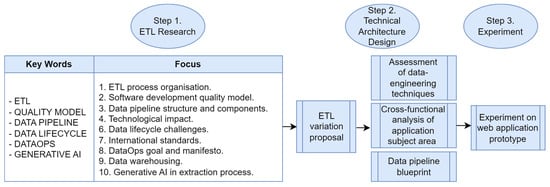

This research methodology begins with a State-of-the-Art ETL (extract, transform, load) of existing scientific publications and industry reports to assess existing practices in data engineering related to ETL. This part of the research focuses on the ETL process organization, software development quality model, data pipeline structure and components, technological impact, data lifecycle challenges, international standards, DataOps goal and manifesto, data warehousing, and GenAI in the extraction process. The research methodology overview is visually presented in Figure 1.

Figure 1.

Research methodology overview.

The second step in this research is to design a high-level solution architecture based on the best data engineering practices. Based on the ETL research phase findings, modifications to the existing ETL process and proposed ETL variations can be applied to enhance the existing process with GenAI.

A high-level solution architecture of the product is necessary to understand what kind of input/source data the developers are dealing with, and what will be the target or highlighting solution expected at the output. Establishing a solution architecture design before drafting data requirements is strategically important and practical for several reasons. This approach ensures that the data requirements are aligned with the overall system’s capabilities, constraints, and objectives. It is a foundational aspect of solution architecture in complex projects.

Establishing a solution architecture first delineates the system’s high-level boundaries and capabilities, ensuring that subsequent data requirements are fully compatible with the system’s technological framework. This initial step is key for understanding the scope of data the system can process, guiding the formulation of data requirements that are not only feasible but also optimized for the chosen architecture. It must be a strategic, compliant, user-focused, and integrated approach to system development [3]. During this step, a cross-functional analysis of the application subject area is conducted. Data-engineering techniques of data pipeline orchestration are assessed to choose the appropriate data integration method organization and composition of data processes. A data pipeline blueprint is arranged as the basis for the solution architecture design in the final stage of research.

The final step of the research methodology involves conducting an experiment on a web application prototype, specifically designed to test the proposed enhancements to the ETL process using GenAI. This step is aimed to support the EGTL technical design conclusion and serve as a bridge between the theoretical design developed in this research and its potential future practical applications. It provides empirical evidence on how the EGTL (extract, generate, transform, load) model operates within a real-world environment. The experiment focuses on integrating GenAI into various stages of data processing within the Hyperloop Decision-Making Ecosystem (HDME), assessing the model’s effectiveness in improving data quality and optimizing tasks such as data extraction, generation, transformation, and analysis.

By implementing this experiment on an actual web application prototype, this research directly explores how GenAI within the EGTL framework can enhance data quality and extract new insights from data. This practical assessment not only aims to validate the theoretical advantages of the model but also to uncover any challenges or limitations associated with applying GenAI for ETL enhancements.

3. Related Works

Extract, transform, load (ETL) processes are key aspects in modern data management, serving as the backend for data warehousing, analytics, and decision making in complex data projects. This chapter provides an analysis of ETL, examining its organization, impact on technology projects, and integration with modern methodologies. It also includes the effect of DataOps and GenAI on ETL. The ETL process is examined both from a data engineering perspective and its implications in the quality of big data product corresponding to data mesh architecture.

The ETL process is bound to the project solution architecture. It is structured into three core stages: extraction, transformation, and loading. Every stage has its own role in the high-level structure that ensures the integrity and usability of data. The extraction stage collects data from diverse sources. The transformation stage “cleans” and converts data into a format suitable for analysis. The loading stage transfers the processed data into a data warehouse.

Ensuring quality in ETL is one of the primary objectives of any data project, as it has a direct effect on the underlying user data. Problems with data quality in ETL might lead to an incorrect delivery of data, analysis, privacy issues, and/or security problems. The Hyperloop Decision-Making Ecosystem, where an EGTL model will be applied and approbated, is a big data software application, which uses various data sources and complex data formats and is what makes monitoring the data quality aspect particularly interesting in the scope of an EGTL compared to the traditional ETL.

Domain ownership, federated governance, data as products, and a self-serve data infrastructure platform to enable platform-wide thinking are the backbone of the data mesh. They offer a decentralized approach to data architecture and organizational design, emphasizing domain-oriented data ownership, self-serve data infrastructure, and product thinking in data management [4]. Under the framework of data mesh, a data product is defined as an independent entity that encompasses all necessary elements for processing and maintaining domain-specific data for analytical or intensive data applications. Data products are structured to provide accessibility to other teams through designated output interfaces.

The software development quality model for ETL embraces the following aspects: accuracy, efficiency, scalability, and maintainability. Adherence to best practices in coding, modular design, and comprehensive testing ensures high-quality ETL processes that are resilient to changes in data sources and business requirements [5].

Weng Yu highlights the importance of understanding the irregular nature of data modes alongside metadata, and the complexity of its representations make it difficult to build them in a unimodal way [6]. The data pipeline structure and its components are also affected by the irregular nature of data modes, as in the case of multimodal and complex data needed for a big data product that has corresponding requirements, e.g., a Hyperloop Decision-Making Ecosystem in the realm of intelligent transportation systems (ITS). A data pipeline in ETL includes data sources, data extraction tools, transformation engines, data loading mechanisms, and a data warehouse. Each component is optimized to handle a specific solution’s tasks, ensuring an efficient flow and transformation of data according to system requirements.

Modern ETL processes significantly impact technology projects by enabling efficient data handling, ensuring data quality, and facilitating informed decision-making. For the ITS realm, and more specifically for the Hyperloop Decision-Making Ecosystem, the implementation of which is part of future studies, ETL processes enable the benefits of predictive analytics, and at some point, real-time data processing by transforming raw data into valuable business insights.

Managing the data lifecycle in ETL involves several challenges, including (1) handling large volumes of data, (2) different data formats, (3) data security, (4) compliance with regulations, and (5) complying with data quality requirements. Addressing these challenges requires robust ETL processes, effective data governance, and adherence to data management best practices.

International standards ISO/IEC 25002:2024 [7], ISO/IEC 25012 [8], and GDPR impact the existing ETL processes. ISO/IEC 25002:2024 ensures quality criteria in software applications and addressing well-known human biases. ISO/IEC 25012 sets standards for the quality of data products. The General Data Protection Regulation (GDPR) [9] provides a set of requirements and regulations for the storage and management of user data. Overall, these standards define data quality requirements and ensure adherence to data privacy and security regulations, which impact the life cycle of big data products.

DataOps is a relevant new field. It was introduced in a 2014 Lenny Liebmann publication on the IBM Big Data and Analytics Hub [10]. More than just a profession, it is a set of principles that big data projects must comply with to streamline the design, implementation, and maintenance of data pipelines in different aspects. In an ETL, DataOps facilitates continuous integration and delivery, enhances collaboration between teams, and promotes agile methodologies, leading to more efficient and reliable data pipelines. Following DataOps principles is necessary to ensure the success of big data projects [11].

The storage needs for a big data project (data warehousing) is a critical component of the ETL process. This enhanced approach to data warehousing is designed to accommodate the dynamic nature of big data, ensuring that storage solutions are not only robust and efficient but also flexible enough to adapt to the evolving needs of data analytics and processing. Modern data warehousing techniques focus on scalability, performance, and integrating diverse data types, including structured and unstructured data [12].

GenAI revolutionizes the extraction stage of the ETL by enabling automated data extraction from unstructured sources, enhancing data quality, and creating synthetic data to augment sparse datasets [13]. This leads to more efficient data processing and broader analytical capabilities.

ETL processes are fundamental in shaping the landscape of web-applications processing big data. They allow organizations to harmonize disparate data sources, making the data more accessible and actionable for business intelligence, analytics, and decision-making. By streamlining the flow of data from its origin to the point of use, ETL processes facilitate improved data quality, reliability, and insights, ultimately shaping how businesses leverage data for strategic advantages [1]. The ETL process will evolve due to the increasing complexity and amount of data being used.

In the context of a big data project, specifications underscore the necessity for integrating cutting-edge technologies and methodologies, in the scope of this paper—DataOps and GenAI, ensure the project’s success. By organizing effectively and adhering to quality standards and international regulations, the project can play a crucial role in extracting and maximizing value from vast datasets, paving the way for innovative solutions and enhanced decision-making processes. Therefore, it is proposed to develop a modified version of the ETL process as a model that can comply with modern tools and principles aiding in development of the big data project.

The main limitation of ETL tools working “outside of the box” is that they were designed to work in “batch” data processing modes: collect data, download them, collect more data, download them, etc. In some situations, batch data loading works well. Cross-Platform Conversion Tools may be difficult to implement for cross-platform data sources, especially where big data collection is used. When the packet includes broken loading data, you need to track the problem, troubleshoot and quickly resend the corrected data [14]. Limitations of classic ETL process applications highlight the necessity for process modifications to leverage all the power of GenAI during all data cycles, to be able to process data of different formats and handle both batch and streaming data.

It is important to highlight the Medallion architecture, a data engineering framework that organizes data into multiple layers or “medals”—Bronze, Silver, and Gold—each representing a different stage of data refinement [15]. In this structure, raw data are initially ingested into the Bronze layer, where it is stored in its most unprocessed form. The data are then cleaned, filtered, and enriched in the Silver layer, making it more structured and reliable. Finally, in the Gold layer, the data undergoes further refinement and aggregation, preparing it for high-quality business analytics, reporting, and advanced use cases such as machine learning.

Delta Lake, often associated with the Medallion architecture, is an open-source storage layer designed to enhance the reliability and performance of data lakes [16]. It offers features like ACID transactions, scalable metadata handling, and the ability to efficiently manage both batch and streaming data. ACID transactions, which stand for atomicity, consistency, isolation, and durability, ensure the reliable processing of database transactions, particularly in relational databases. However, in non-relational or distributed databases, the strict enforcement of these properties can create performance bottlenecks [17].

Moreover, while Delta Lake introduces robustness, it also adds complexity, particularly in managing the transaction log and maintaining ACID properties across distributed systems [16]. The continuous need to update the transaction log and ensure data consistency across large datasets can slow down data processing, especially in real-time scenarios. Additionally, the requirement to store multiple versions of data, driven by features like time travel and versioning, can lead to increased storage costs. [18].

The EGTL model represents a significant advancement in data integration methodologies, establishing itself as an innovative approach within the broader research landscape. Traditional ETL processes have long served as the foundation of data warehousing and business intelligence, primarily focusing on structured data and batch processing. However, the rise of big data [19], the demand for real-time analytics, and the increasing complexity of data sources have revealed the limitations of these traditional approaches [20]. By incorporating GenAI into the data pipeline, the EGTL model addresses these challenges, enhancing data quality, enabling the extraction of novel insights, and offering greater flexibility in data processing. It introduces previously unavailable opportunities, such as generating code based on extracted data and dynamically creating new pages or modules through continuous self-improvement. This approach aligns with emerging trends in data engineering, including the adoption of DataOps and data mesh principles, which prioritize automation, continuous integration, and decentralized data management. The EGTL model’s relevance is further underscored by its ability to bridge the gap between conventional ETL frameworks and modern, AI-driven data environments, making it a valuable contribution to the ongoing evolution of data processing techniques.

4. Comparative Analysis

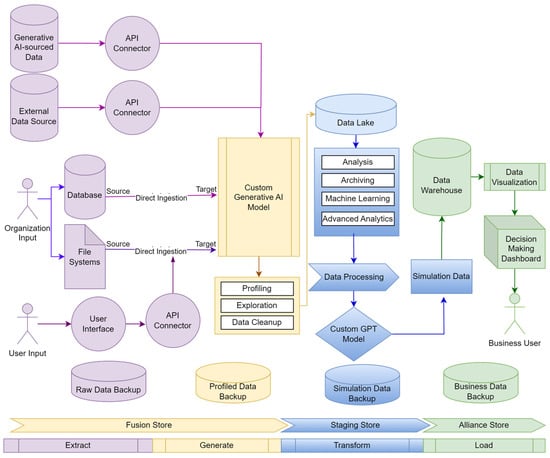

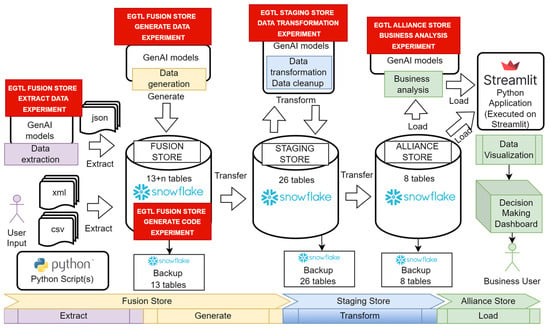

The development of a sophisticated technical framework for the ETL process supported by GenAI is primarily based on a thorough data engineering strategy, aimed at effectively using and capitalizing on data from various sources, being able to support the volume and quality of processed data (Figure 2).

Figure 2.

EGTL model high-level solution architecture. EGTL model’s phases are represented by a distinct color: Extract (color: purple), Generate (color: yellow), Transform (color: blue), Load (color: green). The arrows between the phases represent the flow of data through each stage of the EGTL process, illustrating how data moves within the phase and from one phase to the next.

Existing and widely used data engineering integration process methods are extract, transform, load (ETL) and extract, load, transform (ELT) (Table 1).

Table 1.

Data integration process overview.

This study proposes to use a variation of the ETL process, which is extract, generate, transform, load (EGTL) (Table 1). The “generate” step in EGTL implies an additional focus on data profiling, cleansing, or validation immediately after extraction and before the transformation process, preferably using GenAI practices. In EGTL, the focus of the processes is on the usage of custom GPT models to support all steps in the data integration process. This allows the project to have additional flexibility, utilizing the power of both structured and unstructured data, and at the same time, being able to aggregate data according to data requirements and big data project needs. Big data projects can potentially utilize the power of new disruptive technologies, and it is a matter of time until corresponding frameworks will be introduced and used by the industry. The identified problem in existing ETL processes, particularly in terms of data extraction, lies in handling the increasing volume, velocity, and variety of data sources.

An examination of the documentation from AWS, Google Cloud, Microsoft Azure, and Oracle indicated that conventional ETL systems had difficulty quickly and effectively extracting data from a variety of heterogeneous sources, including both structured and unstructured data. The intricacy of data can cause problems with performance, scalability, and quality, which can affect how quickly and accurately decisions based on data are made. Integrating cutting-edge data extraction technologies, like data virtualization or real-time streaming to improve flexibility and adaptability in a variety of datasets, is a necessary part of modernizing ETL procedures to meet these difficulties.

Utilizing the benefits of GenAI in the EGTL data integration process will include dispersed data into big data projects, which can impact the insights and knowledge generated by a project’s ecosystem. According to the requirements, the end goal of an application is to provide knowledgeable insights for decision making to business users of the system. The data pipeline must allow data flows to be enriched by insights starting from the extraction until the final stage, with delivery to the business users of the ecosystem.

According to [13], GenAI enhances the extract phase through various critical functions (Table 2). These functions, such as data identification and extraction, play a pivotal role in maintaining data quality during pre-processing. By establishing a robust foundation for data integrity, GenAI supports the efficient processing of complex data sources, facilitates advanced analytics, and adds significant value by improving the scalability and adaptability of the extraction process [23]. Additionally, GenAI automates the programming code creation for schema design and table deployment, streamlining database management. This capability improves schema accuracy and reduces manual effort in database setup, deployment, and maintenance. Furthermore, the integration of GenAI aids in managing backups across all stages of the ETL process.

Table 2.

GenAI support for extract phase in ETL [13] (For collecting information for Table 2, the OpenAI GPT-4 model was used [13]. Prompts: “How can Generative Artificial Intelligence support extraction phase of ETL process?” and “Can you please suggest example applications and use cases?”. The output data were refined by the author and included in the submission).

In the EGTL process there are three storage stores designed: Fusion, Staging, and Alliance to customize stages between different business groups (Table 2). While the Staging store is derived from the ETL process [24], the Fusion store is a virtual melting space where data cleaning and profiling is processed immediately after extraction and before loading it to the staging area. The alliance store contains the target data warehouse structures accessed both by business users and GenAI.

Table 3 describes the three distinct storage stores employed in the EGTL process, each fulfilling specialized roles within the data pipeline. The Fusion store acts as a workspace for real-time data cleaning, profiling, and validation immediately after extraction, ensuring that data are prepared for subsequent stages. The Staging store manages operational data, serving as a buffer where complex transformations, such as formatting, enrichment, and applying business logic, take place before the data are moved to the final storage. Lastly, the Alliance store is the target data warehouse where fully processed data are made available to both business users and GenAI models, enabling advanced analytics, insight generation, and supporting key decision-making tasks. Each store plays a crucial role in optimizing data quality, accessibility, and usability throughout the EGTL process.

Table 3.

Stores in EGTL process.

As organizations of various sizes increasingly report data quality issues in traditional ETL systems’ outputs [25], the Alliance store helps mitigate these challenges. According to the benefits outlined in [11] (Table 4), the Alliance store enhances both data management and analytics by automating and optimizing data cleansing and integration processes. This leads to more accurate and efficient data handling, aligning with data mesh principles, which emphasize decentralized data ownership and improved data accessibility across the organization.

Table 4.

Benefits of Alliance store (Proposed by this study).

Fusion and Alliance data stores align with data mesh principles as they offer a decentralized approach to data architecture and organizational design, emphasizing domain-oriented data ownership, self-serve data infrastructure, and product thinking in data management.

The data pipeline process is a subject of the data engineering field. The ecosystem’s high-level solution architecture is designed by arranging EGTL. EGTL as a modified ETL process in data engineering projects ensures data delivery to end users starting from extraction of data, transforming data, and, finally, loading to target data structures. The model is designed to combine, handle, and examine various data sources, transforming them into practical insights for decision making. The high-level architecture is designed to effectively manage the flow of data from many sources including user inputs, file systems, databases, and external sources. Each of these sources provides important information that is crucial for extracting insights for knowledge that can be further processed into decisions as the big data project’s ultimate outcome. In the given solution architecture, business users relate as end users of the ecosystem (Figure 2).

External data sources connect to the data lake via advanced programming interface (API) connectors, enabling the system to handle both streaming and near-real-time data alongside batch loading. This setup allows direct data ingestion using API from on-premises or cloud-based databases and filesystems, such as the Hadoop Distributed File System, which enhances the system’s fault tolerance [26].

To obtain accurate results in data analytics for decision making, the data must meet the following criteria [27]: accuracy, completeness, consistency, and timeliness. The data goal can be achieved by utilizing the artificial intelligence GenAI model (see Table 2) during the extraction and generation phase. Ecosystem operations for these phases are conducted in the Fusion store, which acts as an in-memory virtual data melting space for ensuring data quality before ingesting to the Staging store.

Another factor to be considered is outliers. Outliers are data points that deviate significantly from the average or typical values. Outliers can arise due to errors in data collection or processing, or they can represent accurate yet uncommon measurements. It is advisable to consistently examine for outliers, and occasionally it is advantageous and suitable to eliminate them [28]. The correct identification of outliers during data collection will affect a product’s data quality and thus the quality of information, knowledge, and decisions for the ecosystem’s end user. Outliers also support extracting meaningful insights during data mining according to [29].

A central component of the proposed design is the creation of a data lake, which functions as the primary repository for all incoming data. Based on system requirements, the design can be adapted to incorporate the data lake within the Fusion, Staging, or Alliance stores. The data lake is designed to efficiently manage large volumes of diverse data, storing it in its raw, unprocessed state [30]. It can accommodate a wide range of data types, including user inputs, structured database records, and unstructured data from various sources. User interaction with the data lake occurs through an interface provided by specific applications, allowing access via API through the user interface or external tools.

The data lake’s adaptability and scalability make it an optimal solution for efficiently managing vast amounts of diverse data types, ensuring that valuable information is preserved throughout the entire process.

After the data have been collected in the data lake, the next step is to conduct historical analysis, archiving, analysis, exploration, machine learning and advanced analytics alongside data processing. These steps together are necessary to produce simulation data, which will be loaded into a more organized and structured environment—the data warehouse. At this stage, the data are subjected to additional processing and refinement. The data warehouse is specifically built to enhance query performance, enabling efficient retrieval and pre-processing data for data visualization [31]. This phase of the architecture is key in converting data into a structure that is appropriate for more advanced analytical procedures, resulting in a decision-making ecosystem dashboard. The data warehouse is where data begins to acquire a more significant structure, facilitating intricate inquiries, trend analysis, and predictive modeling. These capabilities are valuable for producing knowledge within the system. Depending on the system requirements, the data warehouse can be adopted to Fusion, Staging, and Alliance stores, or all together. Finally, processed, visualized data are delivered to business users of the ecosystem.

Creating and managing backups is a critical aspect of every stage in the ETL process [32]. This ensures the ability to trace and address failures across any subcomponent within the Hyperloop system. During data extraction, the raw data must be backed up to preserve its integrity. Similarly, during the transformation stage, aggregated data should be securely stored. Finally, simulation data, which supports visualizations and is accessible to business users, must also be backed up to ensure the continuity and availability of critical insights.

A study conducted by D. Fernandes focused on a novel architecture for data management in ITS, emphasizing the use of edge-based data lakes [33]. This architecture addresses challenges with handling high-speed vehicle data and efficiently integrating and processing heterogeneous ITS data. The edge-based approach ensures low-latency processing and effective data integration, essential for real-time decision making in ITS.

The edge-based data lake design is highly applicable to the big data product, in this case, for a decision-making system of the Hyperloop project. It offers a flexible and effective solution for managing various data needs and facilitates improved decision making and operational effectiveness. The architecture’s capacity to handle data at the network edge, minimizing latency and enhancing resource utilization, is well suited to the requirements of a project containing (but not limited to) the use of disruptive technologies.

5. Concept Validation

This chapter outlines the theoretical validation of the EGTL model, utilizing abstract functions and formal notations to ensure the model’s consistency and efficacy. This study applies principles from functional programming and formal systems to define the operations within the EGTL pipeline, providing a theoretical foundation for the processes involved and validated using unit testing processes [34] and the Python programming language Pytest framework [35]. The EGTL model abstraction is defined using a series of functions, each corresponding to a stage in the data handling pipeline. These functions are represented using Greek letters to emphasize their theoretical and abstract nature:

- Ε(D)—Epsilon for extract

- Γ(D)—Gamma for generate

- Τ(D)—Tau for transfer

- Λ(D)—Lambda for load

Extract Function (Ε). The extract function, ϵ, is responsible for retrieving data from various sources and preparing it for further processing according to Figure 2. Formal definition:

where D is the dataset, represents the extraction applied to each data item according to EGTL solution (Figure 2). Source is proposed by this study.

Generate Function (Γ). The generate function, γ, is responsible for applying GenAI-related operations to data according to the EGTL solution (Figure 2)—cleaning, augmentation, error correction, et al. Formal definition:

where D is the dataset, and is related to each data item . Source is proposed by this study.

Transfer Function (Τ). The transfer function, τ, transforms the data according to business logic and prepares it for loading into the storage system according to the EGTL solution (Figure 2). Formal definition:

where D is the dataset, and is the related transformation to each data item . Source is proposed by this study.

Load Function (λ). The load function, λ, is responsible for loading the data into the target data warehouse according to the EGTL solution (Figure 2). Formal definition:

where D is the dataset, and is the related loading processes to each data item . Source is proposed by this study.

The complete EGTL process is represented by combining these functions in a sequential manner, defining the overall data transformation and loading process. Formal definition:

where represents load function (4), represents generate function (2), represents transform function (3), represents extract function (1), and is the dataset. Source is proposed by this study.

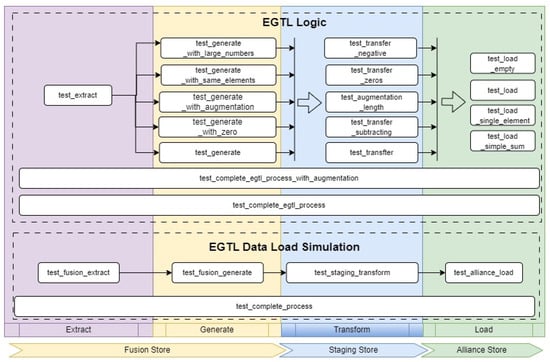

The unit-testing framework strategy (Figure 3) for the EGTL model is structured around two core components. First, it tests the overall EGTL logic to ensure that each phase—extract, generate, transform, and load—operates correctly and consistently across varying data scenarios. Second, the framework tests the EGTL data load process, focusing specifically on how data moves between the Fusion, Staging, and Alliance stores (see Table 3). The unit-testing framework offers several key benefits. It ensures the early detection of bugs and inconsistencies within the EGTL model, reducing the risk of system failures during real-time operations. Furthermore, it allows us to conduct a complete integration test of the proposed solution. Detailed unit tests are included in the Supplementary Materials Section.

Figure 3.

Unit testing framework strategy for EGTL. EGTL model’s phases are represented by a distinct color: Extract (color: purple), Generate (color: yellow), Transform (color: blue), Load (color: green). The arrows between the phases represent the order of test cases execution.

6. Implementation Framework

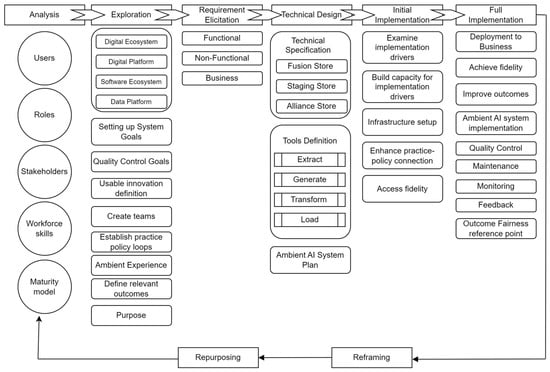

The enhanced extract, generate, transfer, load (EGTL) model implementation framework represents an approach to data handling in the software development lifecycle. Integrating the principles of Kahneman’s method for outcome coding [36], the EGTL framework is designed to optimize the journey from analysis to full implementation within digital ecosystems.

The integration of advanced data processing models necessitates a structured approach that is both comprehensive and adaptable to the evolving requirements of digital ecosystems according to Ron Adner’s research: “the ecosystem is characterized by the alignment structure of the multilateral set of partners that need to interact in order for a focal value proposition to materialize” [37]. This chapter outlines a strategic framework for the implementation of EGTL, which unites the methodical aspects of software development with outcome-oriented strategies drawn from Amos and Kahneman’s methodologies (Figure 4).

Figure 4.

EGTL implementation framework.

The EGTL implementation framework is a methodical approach designed to usher in an advanced paradigm for data handling in digital ecosystems, encapsulating a seven-step process (Table 5) that transitions from a foundational analysis to full-scale deployment. It commences with the Analysis phase, which establishes a comprehensive understanding of user interactions and system capabilities, followed by the Exploration phase that delves into the system’s possibilities and sets innovation benchmarks. The Requirement Elicitation phase meticulously assembles all necessary functional and non-functional criteria, paving the way for the Technical Design phase, where a robust framework with specialized data storing Fusion, Staging, and Alliance is conceptualized. In the EGTL Process Tools Definition phase, the core processes of EGTL are precisely articulated to ensure seamless data flow and enrichment. Acting as a transactor, the Initial Implementation phase focuses on integrating the theoretical model into practical settings, establishing infrastructure and building capacity. The Full Implementation phase then makes sure the EGTL framework comes into full effect within the business environment, emphasizing operational excellence, monitoring, and continuous quality improvement. This structured progression ensures that each step lays the foundation for the next, creating a robust pathway from theory to practice that is both scalable and adaptable to future innovations in data engineering. The Implementation Framework is a lifecycle where each cycle is followed by outcome coding and reframing, repurposing the process depending on the feedback, and monitoring results and outcomes.

Table 5.

EGTL methodological approach.

7. EGTL Experiment

The experiment utilizing the EGTL model was executed on the prototype of the Hyperloop Decision-Making Ecosystem (HDME), a web-based application designed to facilitate decision-making processes within Hyperloop technology projects through the creation of various simulation modeling scenarios. The HDME employs the Snowflake data warehouse for data storage, with distinct schemas established for each EGTL store: FUSION_STORE (26 tables + those generated by GenAI during EGTL process), STAGING_STORE (52 tables), and ALLIANCE_STORE (16 tables). This experimental framework integrated the EGTL model into the foundational data warehouse architecture and processing workflows within HDME. The experimental setup for the EGTL model is illustrated in Figure 5.

Figure 5.

Visualization of EGTL experiment setup in HDME solution. EGTL model’s phases are represented by a distinct color: Extract (color: purple), Generate (color: yellow), Transform (color: blue), Load (color: green). Arrows between the figure components illustrate movement of data. Experiment titles are illustrated by red color.

The execution of the experiments was fully automated using Python scripts, which simulated near-real-time data processing scenarios. A range of GenAI models, including gemini-1.5-flash, gpt-3.5-turbo, gpt-4, and mistral-small, were employed at each stage of the experiment. The HDME interfaced with these GenAI models via API connections, which were also managed through Python scripting.

Five distinct difficulty levels were developed to assess the applicability of GenAI models across the different stores. These levels correspond to varying degrees of data intensity exerted on the HDME system and the associated complexity for the GenAI models. Each successive level introduced increasingly complex tasks. To quantify this complexity, relative data intensity units were established, with each unit corresponding to progressively more intricate prompts given to the GenAI models, thereby demanding higher complexity in the outputs. For example, a data intensity value of two could manage twice the data volume compared to a value of one, contingent on the nature of the source data provided to the GenAI through data frames.

Difficulty level 1 corresponds to a complexity of five relative data intensity units, imposing very low pressure on the HDME system. Difficulty level 2, with 10 relative intensity units, applies low pressure, while difficulty level 3, at 20 units, represents the optimal pressure needed for HDME to generate effective simulation modeling scenarios. As a result, most experiments were conducted within difficulty levels 1 through 3. However, an exception was made for the Alliance store business analysis experiment, which was conducted at difficulty level 5. This higher difficulty level was necessary because the GenAI model had to process entire tables containing comprehensive historical data across eleven Hyperloop criteria. Levels 4 and 5, apart from this case, were primarily used for extreme scenarios aimed at troubleshooting and understanding the limitations of GenAI enhancements within the ETL process.

Data extraction and generation experiments within the EGTL framework were carried out in the Fusion store. The GenAI models were specifically applied during the extract phase to retrieve data for two primary tasks: Hyperloop technical specification details and advancements in Hyperloop technology. The outcomes of these data extraction experiments are presented in Table 6. The variations in average prompt and output sizes across different models and difficulty levels also highlight the impact of model architecture on handling and generating complex data.

Table 6.

Performance metrics of EGTL fusion store data extraction experiment.

The results reveal that all models performed exceptionally well at lower difficulty levels, with a consistent 100% success rate in difficulty levels 1 and 2. However, as the difficulty increased to level 3, the success rates for some models, particularly mistral-small and gpt-3.5-turbo, dropped significantly to 50% and 60%, respectively. Gpt-4 and gemini-1.5-flash exhibited the ability to generate larger output sizes at higher difficulty levels, which could be advantageous in scenarios requiring detailed and comprehensive data processing.

The code generation by GenAI and execution in EGTL was conducted in the Fusion store. The experiment involved three tasks to improve the underlying data architecture in real time mode. GenAI generated a programming code to create new tables for missing tables related to Hyperloop subsystem specifications. The second task for GenAI was to clean up redundant Hyperloop subsystem specifications. In the scope of the third task, GenAI enhanced the existing underlying database system by creating backup tables for those previously created. All three tasks were handled by Python scripts, where one set of scripts was dedicated to code generation on flight and another set of scripts were responsible for capturing SQL queries produced by GenAI and executing them immediately. All experiments were successful, and all produced queries were correct (Table 7). Gemini-1.5-flash demonstrated the best performance of 0.95 s and 2 s for different task complexity levels with the largest output. Gpt-4 and gpt-3.5-turbo models took the longest time execution for code generation tasks.

Table 7.

Performance metrics of EGTL fusion store generate and execute code experiment.

The data generation task involved populating a table related to the Hyperloop system dynamics criterion, tailored to user preferences (e.g., scenarios that are negative or highly positive). For all associated tasks, the GenAI models generated datasets in JSON format, which were then stored in the Fusion store tables. The experiment was deemed complete once these data were successfully transferred to the Staging and Alliance stores.

The overall success rates indicate that the models performed well, particularly at lower difficulty levels, with an average success rate of 92.5% for difficulty level 1 (Table 8). However, as task difficulty increased, the success rates slightly declined, with the lowest average success rate of 78% observed at difficulty level 3. The gpt-3.5-turbo and gpt-4 models showed notable robustness, achieving near-perfect performance even at higher difficulty levels, while the gemini-1.5-flash model demonstrated a more pronounced decline in success rates as complexity increased. The average model work time generally increased with task difficulty, reflecting the additional computational effort required.

Table 8.

Performance metrics of EGTL fusion store generate data experiment.

The EGTL data transformation experiment was conducted in the Staging store. This experiment focused on cleaning and transforming data related to the scalability criterion within the Hyperloop system dynamics, achieving an 88.56% success rate. Initially, “dirty” data were deliberately introduced into the database. The GenAI model then selected the appropriate column from the loaded dataset, normalized the values according to specified requirements, checked for null and negative values, made the necessary corrections, and finally reconstructed the dataset. The processed data were then saved in JSON format.

Table 9 details the performance metrics for the data transformation experiments conducted in the Staging store, focusing on the scalability criterion within the Hyperloop system dynamics. The overall success rate across all models and difficulty levels was 88.56%, indicating a generally effective performance. At difficulty level 1, the success rate was almost perfect at 97.5%, but it slightly decreased as the task difficulty increased, with the lowest success rate of 85% observed at difficulty level 3. The gemini-1.5-flash and gpt-3.5-turbo models maintained consistently high success rates, even at higher difficulty levels, showcasing their strong data transformation capabilities. In contrast, the mistral-small model exhibited a more significant decline in performance at higher difficulty levels, suggesting potential limitations when handling more complex transformations.

Table 9.

Performance metrics of EGTL staging store data transformation experiment.

The average model work time increased with difficulty, as expected, with gpt-4 showing the highest work times, reflecting the more intensive processing required at higher complexity levels. Despite these variations, output correctness remained high across all models, with an average of 98.67%, indicating that the transformations were reliably executed.

The EGTL business analysis experiment was performed in the Alliance store. This experiment involved submitting an entire table containing historical system dynamics data for eleven Hyperloop criteria to the GenAI model. The task assigned to the GenAI was to analyze the provided data and generate actionable insights for the user by returning specific signals. The results of this analysis are detailed in Table 10. Notably, all models achieved a 100% success rate across the board, demonstrating their strong capability to handle complex business analysis tasks. The average model work time varied significantly between models, with mistral-small being the fastest at 0.54 milliseconds, and gpt-3.5-turbo and gpt-4 requiring considerably more time, averaging around 9.87 and 9.74 milliseconds respectively.

Table 10.

Performance metrics of EGTL alliance store business intelligence experiment.

Table 11 provides a comparative summary of the performance metrics across all EGTL experiments, detailing the performance of the different stages. The overall success rate for all experiments was a strong 92.36%, indicating high effectiveness across the EGTL framework. The extraction phase exhibited a success rate of 87.92%, slightly lower than the other phases, which suggests that this stage may present more challenges or complexities, especially as the task difficulty increases. The generation and transformation stages followed closely with success rates of 92.98% and 88.56%, respectively, reflecting a reliable performance, albeit with slight variations that may be attributed to the complexity of tasks and the nature of the data processed.

Table 11.

Summary of all EGTL experiments.

The loading phase achieved a perfect success rate of 100%, underscoring the robustness of the models in analyzing data at this final stage. Across all stages, the average output correctness remained consistently high, close to 100%, indicating that the models were accurate in their outputs regardless of the stage or task complexity. The average model work time was notably higher in the extraction and transformation stages compared to the loading stage, reflecting the increased computational demands during these phases.

To conclude, the EGTL experiment was successfully executed on the Hyperloop Decision-Making Ecosystem (HDME), a web-based prototype designed to streamline decision making in Hyperloop technology projects. The EGTL (extract, generate, transform, load) model was integrated with Snowflake data warehouses, utilizing three specialized stores—Fusion, Staging, and Alliance. Through automated Python scripts, the experiment simulated near-real-time data processing, evaluating several GenAI models, including gemini-1.5-flash, GPT-3.5-turbo, GPT-4, and mistral-small, across five difficulty levels. The results demonstrated a robust performance at lower complexity levels, though success rates decreased as task difficulty intensified. Overall, the experiment highlighted GenAI’s potential to improve data workflows, especially in extraction, transformation, code generation, and business analysis tasks. The HDME prototype, EGTL experiment code, and detailed results are included in the Supplementary Materials Section.

8. Discussion

The integration of GenAI to all stages of the ETL process helps in data identification and extraction, data augmentation, enhancing data quality, pattern recognition, and anomaly detection, automating extraction workflows, real-time data extraction, semantic understanding, and custom extraction for specific domains. It is at a critical change, facing both unprecedented challenges and opportunities, because it possesses risks and benefits of GenAI, the assessment of which will be part of the next study. New roles and professions emerged with the rise of GenAI [38]. The integration of GenAI into existing data pipelines must be conducted responsibly and corresponding with ethical standards and guidelines. By following a structured software development lifecycle and applying outcome coding, the EGTL framework is positioned to improve data handling processes, providing a path for innovation in data engineering products.

Based on the limitations and challenges highlighted in Table 12, the process encounters several significant issues during implementation, primarily revolving around the use of GenAI. One major limitation is the constraint on prompt size, where GenAI models are either programmatically or cost-limited, leading to potential hallucination effects when processing large datasets. This issue is compounded by GenAI’s susceptibility to hallucination, especially when handling large volumes of data or vague prompts, which can result in the generation of incorrect or nonsensical outputs. Additionally, the variability in dataset column sizes and incorrect dataset formats further complicate the process, requiring refined prompt engineering and additional functions to manage these inconsistencies. Costs associated with frequent API calls for GenAI processing, security issues related to blocked API responses due to malicious content, and limitations on the number of requests that can be handled by the GenAI are also noted as challenges. These factors highlight the need for careful model selection, prompt segmentation, and targeted optimizations to mitigate the risks and ensure the reliability of the EGTL process.

Table 12.

EGTL limitations and challenges.

Only the minimum necessary amount of user data for system operations must be processed. The goal of the EGTL model is not to dramatically increase the data involved in the data product. Instead, the goal is to optimize and raise efficiency by providing the maximum number of meaningful insights that result in knowledge at the same time using less resources and processing less data. Missing and incorrect data can be replaced by already available data in GenAI models.

As IT project personnel need adaptation and training to work effectively with new big data tools, the traditional ETL process must also evolve. One key objective of this improvement is to better navigate the complexities of modern data handling and extraction processes. The foundation, basis, and backbone of data engineering in ETL methods increasingly fall short in addressing the multifaceted demands of today’s data-intensive environments. This study has introduced a novel modification to the conventional ETL process, termed EGTL—extract, generate, transfer, load. This innovative approach integrates a pivotal “generate” phase, leveraging GenAI to enhance immediate data cleaning, profiling, and aggregation during the extraction phase. The model proposed to enrich the data quality and utility before it undergoes further transformation. The proposed EGTL model is an independent tool and is not bound to specific brands, products or vendors.

ETL process analysis highlighted challenges, limitations and opportunities in current storage data processing in data engineering. The application of DataOps principles to the current ETL process contributes to the big data project success; therefore, it is vital as a requirement for the successful implementation of big data products that require complex various data sources and formats.

9. Conclusions and Next Steps

This paper proposed a modified variation of the existing ETL data integration process that is named EGTL—extract, generate, transform, load. This method relies on instant data cleaning and profiling before the transformation step in the data pipeline during the generation stage, and this stage operates with the inclusion of GenAI on data aggregation during its extraction and later profiling. EGTL focuses on the use of GenAI custom GPT models to support handling data in all stages of the data lifecycle. EGTL supports the goal of big data product that is focused on providing knowledge as an ultimate outcome of the big data lifecycle, ensuring that a system’s insights for operators and business users will be produced from both structured and unstructured data sources, utilizing various formats.

One of the EGTL model’s key aspects is the alignment with data mesh architecture principles by design. The incorporation of data mesh to EGTL supports enhanced data governance, emphasizing domain-oriented data ownership, self-serve data infrastructure, and product thinking in data management. Together, the EGTL model with data mesh enables a project to handle data more efficiently, foster innovation, and ensure that the data are accessible and useful across different teams and applications. In the given model, the data are treated as a product, emphasizing domain-specific ownership, which improves data quality and relevance.

DataOps principles prioritize agile and lean practices to improve the quality and speed of data analytics, focusing on collaboration, automation, and continuous integration/continuous delivery pipelines for data [10]. Data mesh, alternatively, introduces a decentralized socio-technical approach, organizing data as a product, with domain-oriented, self-serve data infrastructure as a platform to enhance discoverability, accessibility, and security across corporate data stores [6]. Incorporating alternative but not contradictive modern DataOps and data mesh principles contribute to the novelty and uniqueness of the EGTL model in data engineering.

Fusion, Staging, and Alliance stores correspond to EGTL customization in different stages and business groups. High-level user group access is defined for each store, highlighting access for developers, administration, quality-assurance, GenAI models and business users. Big data application business logic is considered to be orchestrated in the data lake where transformation, data processing, and data manipulation alongside machine learning algorithms are conducted. The transformation stage produces simulation data, which is loaded to the data warehouse. Simulation models are processed in the data warehouse and data are visualized, where operators and business users can access decision- making insights using an interactive dashboard. The EGTL model is designed in a way that it can handle various kinds of extraction sources, including but not limited to external databases, a project’s internal databases and file systems, and the data provided by a system’s user uploads using UI.

Based on the results from the experiments conducted within the EGTL framework, several key findings emerged that underscore the effectiveness of integrating GenAI into the data processing pipeline. The experiments demonstrated a high overall success rate across different stages, with the loading phase achieving a perfect success rate of 100%, reflecting the robustness of the EGTL model in completing complex data management tasks. Notably, even at higher difficulty levels, models such as GPT-4 and GPT-3.5-turbo exhibited strong performance, particularly in handling more intricate data transformation tasks.

The conducted code generation experiments demonstrated a high level of success, with all tasks achieving a 100% success rate. The generative AI models efficiently generated programming code for schema creation, unused structure removal, and backup table generation, significantly streamlining real-time system operations. These results validate the capability of the EGTL model to automate complex code generation processes, contributing to DataOps principles on streamlining workflows and data reliability. Furthermore, it supports decentralized data management and facilitates a self-serve infrastructure according to the data mesh.

The experiments validated the feasibility of real-time data processing, especially at lower difficulty levels, where the average model work time of 1.63 s was deemed acceptable for streaming data applications. This indicates that the EGTL model can effectively support near-real-time data processing, a critical capability in modern data-driven environments. Overall, the experiment results affirm that the EGTL model, enhanced by GenAI, can improve data quality, processing efficiency and opening new possibilities for real-time data integration and analysis, positioning it as a valuable advancement in the field of data engineering.

The next step is to propose the solution architecture of the Hyperloop project decision-making ecosystem that is based on a data engineering ETGL model. A case study should be performed on exact big data projects to measure the efficiency and efficacy of EGTL. A comparison of custom GPT model usage in EGTL and use cases, the performance impact, and ethical and security considerations should be analyzed in future research.

A solution architecture high-level design contributed to the need for setting up data requirements for the big data project involving complex various data sources, formats, and storages supported by GenAI.

Limitations. Research does not evaluate ethical or moral considerations for the use of GenAI in the ETL process or the cultural, religious, or political aspects of EGTL model integration. The study might not address industry-specific extraction challenges comprehensively, e.g., unique regional regulatory requirements, proprietary data formats, or the sensitivity level of information being processed and its security.

This study recommends using the proposed EGTL model responsibly and according to the rules, laws, and professional ethics and regulations of the area where it is applied. These limitations highlight the need for continuous research and the adaptation of ETL processes to keep pace with the evolving data landscape. The EGTL model is one aspect that shows how existing ETL processes can be improved given disruptive technologies. Specific user group access for Fusion and Alliance stores must be set based on project requirements and the best security practices and are not in the scope of the research.

Future research should focus on several key areas. First, scalability testing with larger datasets and real-time applications is critical to validate the model’s effectiveness in handling high data loads and streaming environments. Second, case studies applying the EGTL model to web applications should demonstrate its practical utility across diverse digital ecosystems. Additionally, gathering industry feedback through surveys will be essential for informing and guiding future refinements to the EGTL model.

Supplementary Materials

The following supporting information can be downloaded at: https://github.com/pirrencode/tsi_data_load_model/tree/main/tests/unit (accessed on 23 September 2024), test_data_load.py: Test Data Load; test_logic.py: Test Logic; results.md: Test Results. https://github.com/pirrencode/hpl_api (accessed on 23 September 2024), app.py: HDME Web-application and EGTL Experiment. https://github.com/pirrencode/hpl_api/tree/main/egtl_experiment_data (accessed on 23 September 2024), egtl_alliance_store_analytics_results.csv: EGTL Alliance store experiment results; egtl_fusion_store_data_extraction_results.csv: EGTL Fusion store data extraction experiment results; egtl_fusion_store_generate_data_results.csv: EGTL Fusion store data generation experiment results; egtl_fustion_store_generate_code_results.csv: EGTL Fusion store code generation experiment results; egtl_staging_store_data_transformation_result.csv: EGTL Staging store data transformation experiment results; egtl_summary_of_all_experiments_final.csv: EGTL summary results of all experiments.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Material, and further inquiries can be directed to the corresponding authors.

Acknowledgments

Mihails Savrasovs, scientific supervisor. Francesco Maria Turno, researcher, for reviews, help with ideation, and for motivation.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Sabtu, A.; Azmi, N.F.M.; Sjarif, N.N.A.; Ismail, S.A.; Yusop, O.M.; Sarkan, H.; Chuprat, S. The challenges of Extract, Transform and Loading (ETL) system implementation for near real-time environment. In Proceedings of the 2017 International Conference on Research and Innovation in Information, Langkawi, Malaysia, 16–17 July 2017. [Google Scholar]

- Bhattacharjee, A.; Barve, Y.; Khare, S.; Bao, S.; Kang, Z.; Gokhale, A.; Damiano, T. STRATUM: A BigData-as-a-Service for Lifecycle Management of IoT Analytics Applications. In Proceedings of the 2019 IEEE International Conference on Big Data, Los Angeles, CA, USA, 9–12 December 2019. [Google Scholar] [CrossRef]

- Kirsch, L.J.; Beath, C.M. The enactments and consequences of token, shared, and compliant participation in information systems development. Account. Manag. Inf. Technol. 1996, 6, 221–254. [Google Scholar] [CrossRef]

- Data Mesh Architecture. DataMesh-Architecture.com. Available online: https://www.datamesh-architecture.com (accessed on 27 February 2024).

- Theodorou, V.; Abelló, A.; Lehner, W.; Thiele, M. Quality measures for ETL processes: From goals to implementation. Concurr. Comput. Pract. Exp. 2016, 28, 3969–3993. [Google Scholar] [CrossRef]

- Weng, Y. A service components pipeline model based on multi-source data extraction. Signal Process. 2016, 124, 5–12. [Google Scholar] [CrossRef]

- ISO/IEC 25002:2024; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Quality Model Overview and Usage. ISO: Geneva, Switzerland, 2024. Available online: https://www.iso.org/standard/78175.html (accessed on 23 September 2024).

- ISO/IEC 25012:2008; Software Engineering—Software Product Quality Requirements and Evaluation (SQuaRE)—Data Quality Model. ISO: Geneva, Switzerland, 2024. Available online: https://www.iso.org/standard/35736.html (accessed on 23 September 2024).

- General Data Protection Regulation (GDPR). GDPR-info.eu. Available online: https://gdpr-info.eu (accessed on 27 August 2024).

- DataOps Principles. DataOps, 2023. Available online: https://dataopsmanifesto.org/en/ (accessed on 23 September 2024).

- Bloomberg, J. DataOps: What, Why, and How? 2019. Available online: https://www.linkedin.com/pulse/dataops-what-why-how-jason-bloomberg (accessed on 27 February 2024).

- Kimpel, J.F. Critical Success Factors for Data Warehousing: A Classic Answer to a Modern Question. Issues Inf. Syst. 2013, 14, 376–384. [Google Scholar] [CrossRef]

- ChatGPT-4 [Artificial Intelligence Language Model], OpenAI, 2024. Available online: https://openai.com/ (accessed on 23 September 2024).

- Aubakirova, A. Python Tools Evaluation for ETL-Process Development and Maintenance; Transport and Telecommunication Institute: Riga, Latvia, 2019. [Google Scholar]

- Databricks. What Is a Medallion Architecture? 2023. Available online: https://www.databricks.com/glossary/medallion-architecture (accessed on 31 August 2024).

- Foxley-Marrable, M. Medallion Architecture. DataPlatforms.ca. Available online: https://dataplatforms.ca/blog/2024-04-16-Medallion-Architecture/ (accessed on 31 August 2024).

- Das, T.; Boyd, R.; Lee, D.; Jaiswal, V. Delta Lake: Up and Running; O’Reilly Media: Sebastopol, CA, USA, 2021; pp. 75–112. [Google Scholar]

- Armbrust, M. Diving into Delta Lake: Unpacking the Transaction Log. Databricks. Available online: https://www.databricks.com/blog/2019/08/21/diving-into-delta-lake-unpacking-the-transaction-log.html (accessed on 31 August 2023).

- Montecchi, D.; Plati, L. Time series big data: A survey on data stream frameworks, analysis and algorithms. J. Big Data 2023, 10, 60. [Google Scholar] [CrossRef]

- Geisler, S. Real-Time Analytics: Benefits, Limitations, and Tradeoffs. Program. Comput. Softw. 2023, 49, 1–25. [Google Scholar] [CrossRef]

- Amazon, What Is ETL (Extract Transform Load)? 2024. Available online: https://aws.amazon.com/what-is/etl/ (accessed on 27 February 2024).

- What Is ELT? IBM, 2024. Available online: https://www.ibm.com/topics/elt (accessed on 23 September 2024).

- García, S.; Ramírez-Gallego, S.; Luengo, J.; Benítez, J.M.; Herrera, F. Big data preprocessing: Methods and prospects. Big Data Anal. 2016, 1, 9. [Google Scholar] [CrossRef]

- Data Warehouse Architecture (with a Staging Area). Oracle, 2024. Available online: https://docs.oracle.com/cd/B10501_01/server.920/a96520/concept.htm#50822 (accessed on 27 August 2024).

- Anand, N.; Kumar, M. An Overview on Data Quality Issues at Data Staging ETL. In Proceedings of the International Conference on Advances in Computer Science and Application, Lucknow, India, 21–22 June 2013. [Google Scholar]

- Karun, A.K.; Chitharanjan, K. A review on Hadoop—HDFS infrastructure extensions. In Proceedings of the 2013 IEEE Conference on Information & Communication Technologies, Thuckalay, India, 11–12 April 2013. [Google Scholar] [CrossRef]

- Fan, W.; Geerts, F. Foundations of Data Quality Management; Morgan & Claypool Publishers: San Rafael, CA, USA, 2012. [Google Scholar]

- Downey, A.B. Probability and Statistics for Programmers; O’Reilly Media: Sebastopol, CA, USA, 2011; pp. 19–20. [Google Scholar]

- Knorr, E.M. Outliers and Data Mining: Finding Exceptions in Data. Ph.D. Thesis, University of British Columbia, Vancouver, BC, Canada, 2002. [Google Scholar] [CrossRef]

- Giebler, C.; Gröger, C.; Hoos, E.; Schwarz, H.; Mitschang, B. Leveraging the Data Lake: Current State and Challenges. In Big Data Analytics and Knowledge Discovery; Ordonez, C., Song, I.-Y., Anderst-Kotsis, G., Tjoa, A.M., Khalil, I., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11708. [Google Scholar]

- Hassan, C.A.U.; Hammad, M.; Uddin, M.; Iqbal, J.; Sahi, J.; Hussain, S.; Ullah, S.S. Optimizing the Performance of Data Warehouse by Query Cache Mechanism. IEEE Access 2022, 10, 13472–13480. [Google Scholar] [CrossRef]

- Chang, V. Towards a Big Data system disaster recovery in a Private Cloud. Ad Hoc Netw. 2015, 35, 65–82. [Google Scholar] [CrossRef]

- Fernandes, D.; Moura, D.L.L.; Santos, G.; Ramos, G.S.; Queiroz, F.; Aquino, A.L.L. Towards Edge-Based Data Lake Architecture for Intelligent Transportation System. In Proceedings of the MSWiM ’23: Int’l ACM Conference on Modeling Analysis and Simulation of Wireless and Mobile Systems, Montreal, QC, Canada, 30 October–3 November 2023; pp. 1–8. [Google Scholar] [CrossRef]

- Daka, E.; Fraser, G. A Survey on Unit Testing Practices and Problems. In Proceedings of the 2014 IEEE 25th International Symposium on Software Reliability Engineering, Naples, Italy, 3–6 November 2014; pp. 201–211. [Google Scholar] [CrossRef]

- Hunt, J. PyTest Testing Framework. In Advanced Guide to Python 3 Programming; Undergraduate Topics in Computer Science; Springer: Cham, Switzerland, 2019. [Google Scholar] [CrossRef]

- Kahneman, D.; Tversky, A. Choices, Values, and Frames; Cambridge University Press: Cambridge, UK, 2000; pp. 321–323. ISBN 9780521627498. [Google Scholar]

- Adner, R. Ecosystem as structure: An actionable construct for strategy. J. Manag. 2017, 43, 39–58. [Google Scholar] [CrossRef]

- Marr, B. 12 New Jobs in The Generative AI Era. LinkedIn. Available online: https://www.linkedin.com/pulse/12-new-jobs-generative-ai-era-bernard-marr-z7bge (accessed on 9 September 2023).

- Nandi, A. Understanding Gen AI Hallucinations: A Deep Dive into the Phenomenon. AIM Research. Available online: https://aimresearch.co/council-posts/council-post-understanding-gen-ai-hallucinations-a-deep-dive-into-the-phenomenon (accessed on 31 August 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the author. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).