Abstract

Quality assessment in industrial applications is often carried out through visual inspection, usually performed or supported by human domain experts. However, the manual visual inspection of processes and products is error-prone and expensive. It is therefore not surprising that the automation of visual inspection in manufacturing and maintenance is heavily researched and discussed. The use of artificial intelligence as an approach to visual inspection in industrial applications has been considered for decades. Recent successes, driven by advances in deep learning, present a possible paradigm shift and have the potential to facilitate automated visual inspection, even under complex environmental conditions. For this reason, we explore the question of to what extent deep learning is already being used in the field of automated visual inspection and which potential improvements to the state of the art could be realized utilizing concepts from academic research. By conducting an extensive review of the openly accessible literature, we provide an overview of proposed and in-use deep-learning models presented in recent years. Our survey consists of 196 open-access publications, of which 31.7% are manufacturing use cases and 68.3% are maintenance use cases. Furthermore, the survey also shows that the majority of the models currently in use are based on convolutional neural networks, the current de facto standard for image classification, object recognition, or object segmentation tasks. Nevertheless, we see the emergence of vision transformer models that seem to outperform convolutional neural networks but require more resources, which also opens up new research opportunities for the future. Another finding is that in 97% of the publications, the authors use supervised learning techniques to train their models. 
However, with the median dataset size consisting of 2500 samples, deep-learning models cannot be trained from scratch, so it would be beneficial to use other training paradigms, such as self-supervised learning. In addition, we identified a gap of approximately three years between approaches from deep-learning-based computer vision being published and their introduction in industrial visual inspection applications. Based on our findings, we additionally discuss potential future developments in the area of automated visual inspection.

1. Introduction

Industrial production and maintenance are under constant pressure from increasing quality requirements due to rising product demands, changing resources, and cost specifications. In addition, there are constantly changing framework conditions due to new and changing legal requirements, standards, and norms. Finally, the increasing general flow of information via social media and other platforms leads to an increased risk of reputational damage from substandard products. These influences, combined with the fact that quality assurance is still predominantly performed or supported by human inspectors, have led to the need for advances in continuous quality control. Since vision is the predominant conscious sense of humans, most inspection techniques in the past and even today are of a visual nature [1]. However, manual visual inspection (VI) has several drawbacks, which have been studied, for example, by Steger et al., Sheehan et al., and Chiang et al. [2,3,4], specifically including high labor costs, low efficiency, and low real-time performance in the case of fast-moving inspection objects or large surface areas. According to Swain and Guttmann [5], minimal error rates of $10^{-3}$ can be reached for very simple accept/reject tasks. Though highly dependent on the inspection task, Drury and Fox [6] observed error rates of 20% to 30% in more complex VI tasks in their studies. In addition, efficiency and accuracy decrease during human inspection due to fatigue and resulting attention deficits.

As a way to counteract these effects, automation solutions were pushed in the 1980s. The goal was to increase efficiency and performance and reduce costs while minimizing human error. Accordingly, computer vision (CV) methods were introduced to VI, which was initially only relevant for the automation of simple, monotonous tasks. In the beginning, they served more as a support for inspectors [7], but as development progressed, whole tasks were solved without human involvement. This was the beginning of automated visual inspection (AVI).

With deep learning (DL) becoming the technology of choice in CV since 2010, showing better generalization and less sensitivity to application conditions than traditional CV methods, new models have reached performance levels that even surpass those of humans in complex tasks like image classification, object detection, or segmentation [8,9,10]. Accordingly, the development and application of such DL techniques in industrial AVI are reasonable and understandable. In order to provide an overview of the current state of research and development and to understand what the current focus is, we provide answers to the following guiding questions in our study.

- What are the requirements that have to be considered when applying DL-based models to AVI?

- Which AVI use cases are currently being addressed by deep-learning models?

- Are there certain recurring AVI tasks that these use cases can be categorized into?

- What is the data basis for industrial AVI, and are there common benchmark datasets?

- How do DL models perform in these tasks, and which of them can be recommended for certain AVI use cases?

- Are recent state-of-the-art (SOTA) CV DL models used in AVI applications, and if not, is there untapped potential?

From these questions, we derive key insights and challenges for AVI and give an outlook on future research directions that we believe will have a positive impact on the field in industry as well as research. The questions also determine the structure of our study. In Section 2, we describe our literature research process and the constraints we defined. This is followed by Section 3, where we derive requirements for DL-based AVI and categorize the surveyed literature based on the use case as well as on how these use cases are solved to answer questions one to three. Section 4 deals with the evaluation of the industrial approaches and data characteristics, summarizes developments in academic research, and identifies promising models and methods from it that are not yet utilized in application use cases (questions four to six). Section 5 summarizes our survey and gives an outlook on possible future research directions.

2. Methodology of Literature Research

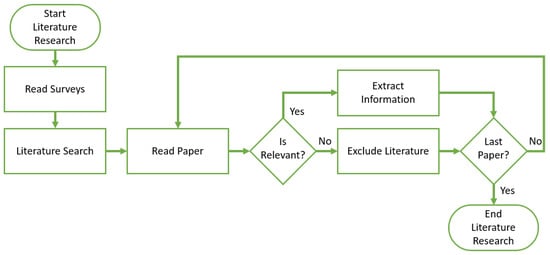

In our work, we analyzed the SOTA by surveying recent papers solving AVI use cases with DL-based approaches. Figure 1 illustrates the literature research methodology employed for our review. We followed the systematic literature research procedure proposed by Vom Brocke et al. [11], starting with the investigation of survey papers on VI with DL to justify the necessity of our research. We used scholarly search engines like Google Scholar, Web of Science (WOS), and Semantic Scholar and found many related survey publications on VI with DL. However, these publications focus on DL-based VI in specific industry sectors or on specific methodologies but not on industrial VI as a whole.

Figure 1.

Flow chart of the literature research process.

Zheng et al. [12], e.g., focused their survey on surface defect detection with DL techniques. The same applies to the surveys by Jenssen et al. [13], Sun et al. [14], Yang et al. [15], Nash et al. [16], Liu et al. [17], and Donato et al. [18], who concentrated on different industry sectors, like steel production, railway applications, power lines, or manufacturing in general. There are also many publications in the areas of civil engineering and structural health monitoring. Ali et al. [19], Ali et al. [20], Hamishebahar et al. [21], and Intisar et al. [22] studied DL-based approaches to crack detection. Chu et al. [23], Qureshi et al. [24], and Ranyal et al. [25] reviewed the general SOTA in the AVI of pavements and roads, while Kim et al. [26] focused more specifically on the detection of potholes. A wider scope is covered by Zhou et al. [27] and Hassani et al. [28], as they consider structural health monitoring as a whole, not just cracks or pavements and roads. Chew et al. [29] highlighted the consideration of falling facade objects in their review, while Luleci et al. [30] emphasized the application of generative adversarial networks (GANs). Mera et al. [31] surveyed the literature on class imbalance for VI. Tao et al. [32] covered a wide range of industrial applications but restricted their survey to unsupervised anomaly detection.

Similarly, Rippel et al. [33] reviewed the literature with a focus on anomaly detection with DL in AVI. In addition to an overview, they also discuss the advantages and disadvantages of the reviewed anomaly detection approaches.

The only survey we found that deals with VI as a whole, i.e., not only one VI use case or industry sector, was written in 1995 by Newmann et al. [34]. However, this work is more than 25 years old, predates DL, and therefore does not cover the new challenges that emerged with the era of digitization.

Given the lack of a domain-overarching perspective on VI, we gathered relevant publications utilizing the WOS online research tool, as it is recognized as the leading scientific citation and analytical platform and lists publications across a wide area of knowledge domains [35]. The research field we are interested in is an intersection of two topics: DL-based CV and AVI in industrial use cases. Thus, we gathered search terms with the intention of covering the most important aspects of each of the two areas. Table 1 lists all defined search terms. We formulated search queries to find publications that contain at least one term from each of the two categories and conducted our literature search on 4 March 2023.

Table 1.

Search terms used for literature research.
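
The query construction described above, which requires at least one term from each of the two categories, can be sketched as a Boolean expression. The following is a minimal sketch only: the search terms are placeholders for illustration and are not the terms listed in Table 1, and the helper names `or_group` and `build_query` are hypothetical.

```python
def or_group(terms):
    """Join terms into a parenthesized OR group, quoting each phrase."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(dl_terms, avi_terms):
    """Require at least one match from each of the two term categories."""
    return or_group(dl_terms) + " AND " + or_group(avi_terms)


# Placeholder terms for illustration only; not the terms listed in Table 1.
dl_terms = ["deep learning", "convolutional neural network"]
avi_terms = ["visual inspection", "defect detection"]

query = build_query(dl_terms, avi_terms)
print(query)
```

Combining the two OR groups with AND is what restricts the results to the intersection of both topics rather than their union.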

Furthermore, we defined additional constraints to refine the results of the query. The considered time range started from 1 January 2010 in order to also include less high-profile publications before the first largely successful CV model “AlexNet” was proposed in 2012. Furthermore, we only considered open-access publications written in English to be transparent and comprehensible in our work by only using references accessible to the scientific community. The query with the aforementioned constraints resulted in 6583 publications. As a next refinement step, we excluded all science categories that are not associated with an industrial context (detailed listing in Table A1). After filtering, we obtained exactly 808 publications for further investigation. For the next research step, we read the publications, and in that process, we defined them as relevant or not relevant based on set constraints. The constraints for a publication being relevant to our survey are manifold and include that the authors described their approach appropriately with information about the task, the method, the used data, and performance. Moreover, we also restricted ourselves to publications that use 2D image data and in which an industrial context is clearly identifiable. The decision to exclusively consider publications that employ AVI on 2D imagery is motivated by the widespread availability and affordability of cameras compared to alternative devices, such as hyperspectral or 3D cameras as well as light detection and ranging (LiDAR) devices.

The requirement of a clearly identifiable industrial context excludes, for example, publications that deal with a medical context, remote sensing, or autonomous driving. Applying all these constraints resulted in a corpus of 196 publications that we investigated further.
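
The effect of the successive filtering steps can be made explicit with a short calculation. The sketch below uses only the three publication counts reported above; the stage labels and the helper `retention` are our own illustrative names.

```python
# Publication counts reported for the literature search.
stages = [
    ("query with constraints", 6583),
    ("after category filtering", 808),
    ("after relevance screening", 196),
]


def retention(stages):
    """Return (label, count, share of previous stage, share of first stage)."""
    first = stages[0][1]
    prev = first
    rows = []
    for label, count in stages:
        rows.append((label, count, count / prev, count / first))
        prev = count
    return rows


rows = retention(stages)
for label, count, step, overall in rows:
    print(f"{label:28s} {count:5d}  step {step:7.2%}  overall {overall:7.2%}")
```

Roughly 12% of the initial results pass the category filter, about a quarter of those pass the relevance screening, and approximately 3% of the initial results remain in the final corpus.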

3. Categorization of Visual Inspection (Tasks)

In this section, we first discuss the term VI in more detail, as it is used in our publication corpus to lay the foundation for our further analysis. Next, we derive a hierarchy, which structures all VI use cases from the gathered literature (Section 3.2). In addition to the VI use case, we also group the publications by the AVI task, with the aim of categorizing them by the methodology that is used to solve them. These are closely related to the CV tasks classification, object detection, and segmentation. These AVI tasks provide the structure for the following Section 3.3.1 to Section 3.3.4. In each subsection, we review and analyze publications that aim to automate the corresponding AVI task using DL-based methods.

In general, VI describes the visual assessment of the state of a manufacturing product or assembly, a vehicle, a building, or infrastructure and its comparison to specified requirements. The publication corpus shows two application contexts in which VI is employed: maintenance and manufacturing. In manufacturing, the inspection is executed, e.g., after critical points during or at the end of manufacturing processes, like the final machining step of a component responsible for key functions of the overall system or the point at which two partial assemblies are combined into one. By doing so, it is confirmed that the finished product meets the predefined quality standards [34,36]. In a maintenance context, See et al. [37] define VI as the periodical monitoring of features that indicate a certain state of the inspected object that impairs its functionality or operational safety and can lead to additional negative impacts like injury, fatality, or the loss of expensive equipment.

3.1. Requirements for Deep-Learning Models in AVI

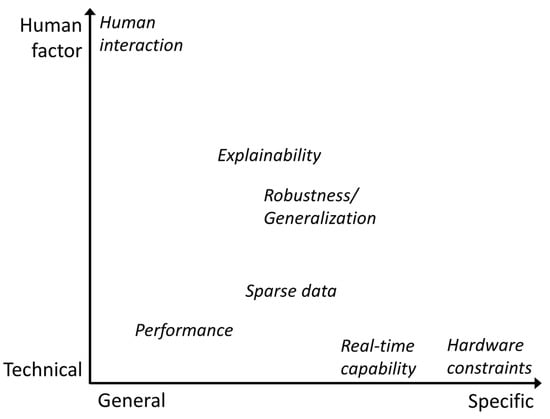

Several requirements have to be considered when introducing DL-based AVI to a previously manual inspection process or even to an already automated process that uses classical CV methods. To answer our first guiding question, “What are the requirements that have to be considered when applying DL-based models to AVI?”, we analyzed our publication corpus with regard to requirements that are either mentioned directly in the text or indirectly through evaluation procedures and reported metrics. These requirements can be grouped along two dimensions: on the one hand, between general and application- or domain-specific requirements and, on the other hand, between hard technical and soft human factors, as visualized in Figure 2. The most general technical challenge is performance.

Figure 2.

AVI requirements grouped by their combined properties with regard to specificity and whether they are technical-factor- or human-factor-driven.

In the case of the automation of previously manual processes, human-level performance is usually used as a reference value, which is intended to guarantee the same level of safety, as mentioned by Brandoli et al. for aircraft maintenance [38]. If the target process is already automated, the DL-based solution needs to prevail against the established solution. Performance can be measured by different metrics, as some processes are more focused on false positives (FPs), like the one investigated by Wang et al. [39], or false negatives (FNs). Therefore, it cannot be considered a purely general requirement, as it is affected by the choice of metric. Real-time capability is a strictly technical challenge, as it can be defined by the number of frames per second (FPS) a model can process but is mostly specific, as it is mainly required when inspecting manufactured goods on a conveyor belt or rails/streets from a fast-moving vehicle for maintenance [39,40,41,42]. Hardware constraints are the most specific and rare technical challenge found in our publication corpus. This usually means that the models have to run on a particular edge device, which is limited in memory, the number of floating-point operations per second (FLOPS), or even the possible computational operations it can perform [43]. Sparse (labeled) data are primarily a technical challenge, where the emphasis is put on the fact that models with more parameters generally perform better but require more data samples to optimize those parameters, as well. The labeling process introduces the human factor into this requirement because a consistent understanding of the boundary between different classes is necessary in order to produce a coherent distribution of labels with as few non-application-related inconsistencies or outliers as possible. This is especially true if there are few samples and if multiple different persons create the labels. 
Models need to perform well with these small labeled industrial datasets [44,45,46,47,48] or, even better, work without labeled data [49,50]. One of the key advantages of DL-based models compared to classic CV methods is their generalization capability, which makes them robust against partly hidden objects, changing lighting conditions, or new damage types. This characteristic is required for many use cases where it is not possible to enforce controlled conditions or have full visibility, such as rail track inspection [42,51,52], and it is an added benefit when a model is able to extrapolate to previously unseen damages [53]. As this requirement is not easily quantifiable and is application-specific to a certain degree, we place it centrally in both dimensions. Part of any industrial transformation process is the people involved, whether they are directly affected as part of the process or indirectly affected through interfaces with the process. To improve the acceptance of change processes, it is necessary to convince domain experts that they can trust the new DL solution, which makes the explainability of model decisions a human-factor requirement. In addition, explainability can also be helpful from a model development perspective to determine the reason for certain model confusions that lead to errors [54].
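
The dependence of the performance requirement on the chosen metric can be illustrated with a small computation. The following minimal sketch uses the standard precision, recall, and F-beta definitions, which are our choice for illustration and are not prescribed by the surveyed publications; the confusion counts are hypothetical.

```python
def precision_recall_fbeta(tp, fp, fn, beta=1.0):
    """Compute precision, recall, and F-beta from confusion counts.

    beta > 1 weights recall more strongly (FNs are costlier),
    beta < 1 weights precision more strongly (FPs are costlier).
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision == 0.0 and recall == 0.0:
        return precision, recall, 0.0
    b2 = beta * beta
    fbeta = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, fbeta


# Hypothetical counts for one inspection model: many FPs, few FNs.
tp, fp, fn = 90, 30, 10

precision, recall, f1 = precision_recall_fbeta(tp, fp, fn, beta=1.0)
_, _, f2 = precision_recall_fbeta(tp, fp, fn, beta=2.0)
_, _, f05 = precision_recall_fbeta(tp, fp, fn, beta=0.5)

print(f"precision={precision:.3f} recall={recall:.3f}")
print(f"F0.5={f05:.3f} F1={f1:.3f} F2={f2:.3f}")
```

For the same predictions, the score is higher when missed defects are weighted more strongly (F2) and lower when false alarms are weighted more strongly (F0.5), so a model can meet the performance requirement of one process and fail that of another.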

3.2. Overview of Visual Inspection Use Cases

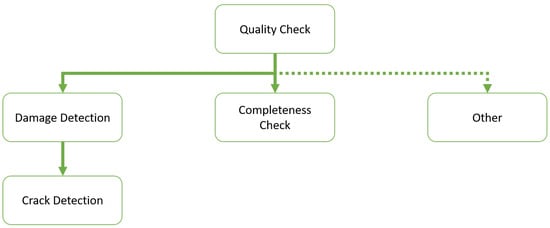

In order to answer our second guiding question, “Which AVI use cases are currently being addressed by DL models?”, we examined the reviewed publications to determine whether it is possible to summarize the solved VI tasks into specific use cases. We identified a hierarchy of VI use cases based on the surveyed literature, which is visualized in Figure 3.

Figure 3.

Hierarchical structure of top-level VI use cases based on the surveyed literature.

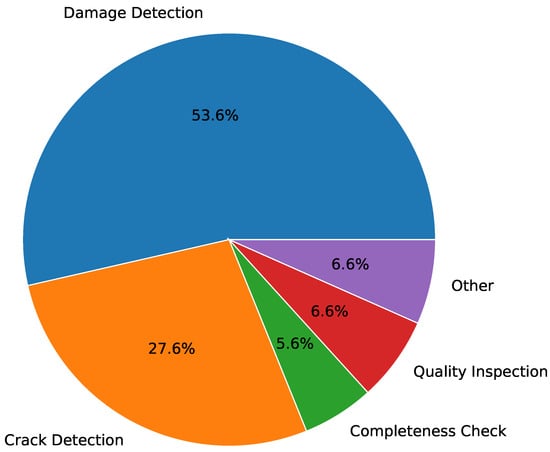

As previously mentioned, VI is getting more challenging due to ever-increasing quality requirements, and all use cases can be considered to be at least quality inspection. In our literature review, quality inspection use cases are those that do not detect defects or missing parts but assess the state of an object. For example, determining the state of woven fabrics or leather quality is a use case we considered to be only quality inspection [55,56]. Damage detection, also referred to as defect detection in the literature, summarizes all VI use cases that classify or detect at least one type of damage. Examples of damage detection use cases are the surface defect detection of internal combustion engine parts [57] and the segmentation of different steel surface defects [58]. Crack detection can be considered a specialization of damage detection and has its own category because of its frequency in the surveyed literature. The crack detection use case deals solely with crack classification, localization, or segmentation. The application context is usually the maintenance of public structures, for example, the detection of pavement cracks [59,60] or concrete cracks [61,62]. In addition to detecting defects, another VI use case is to check whether a part is missing or not. Completeness check summarizes these use cases. A completeness check can be the determination of whether something is missing or, conversely, the determination of whether something is present. For example, O’Byrne et al. [63] proposed a method to detect barnacles on ship hulls, and Chandran et al. [51] proposed a DL approach to detect rail track fasteners for railway maintenance. The last VI use case class, which we defined as other, includes VI use cases that go beyond pure quality inspection and are not of the damage detection or completeness check type. Example use cases are plant disease detection [64,65] and type classification [66]. 
Figure 4 shows the distribution of the VI use cases over the investigated literature. Most publications (53.57%) deal with damage detection use cases. The second most (27.55%) researched VI use case is crack detection, followed by quality inspection (6.63%) as well as other use cases (6.63%), and the least occurring type is completeness check use cases (5.61%).

Figure 4.

Distribution of reviewed publications by VI use cases.
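
The reported shares can be translated back into approximate publication counts. The sketch below assumes that all percentages refer to the full corpus of 196 publications; because the percentages are rounded, the resulting counts are a reconstruction rather than figures stated in the text.

```python
TOTAL_PUBLICATIONS = 196

# Shares of VI use cases in percent, as reported for Figure 4.
shares = {
    "damage detection": 53.57,
    "crack detection": 27.55,
    "quality inspection": 6.63,
    "other": 6.63,
    "completeness check": 5.61,
}

# Reconstruct integer counts from the rounded percentages.
counts = {name: round(TOTAL_PUBLICATIONS * pct / 100) for name, pct in shares.items()}

for name, n in counts.items():
    print(f"{name:20s} {n:4d}")
print("sum:", sum(counts.values()))
```

The reconstructed counts add up to the corpus size, which indicates that the reported percentages are mutually consistent.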

3.3. Overview on How to Solve Automated Visual Inspection with Deep-Learning Models

In the following, we aim to answer our third guiding question, “Are there certain recurring AVI tasks that these use cases can be categorized into?”, by investigating with which DL approach the VI use cases can be solved. For this, we determined four different AVI tasks to categorize the approaches. Each of these tasks aims to answer one or more questions about the inspected object. Binary classification tries to answer the question, Is the inspected object in the desired state? This applies mainly to accept/reject tasks, like separating correctly produced parts from scrap parts, regardless of the type of deficiency. Multi-class classification goes one step further, trying to answer the question, In which state is the inspected object? By additionally identifying the type of deficiency, it is possible to, e.g., distinguish between parts that are irreparably damaged and parts that can still be reworked to pass the requirements or determine the rework steps that are necessary. Localization further answers the question, Where do we find this state on the inspected object? This adds information about the locality of a state of interest, as well as enabling the finding of more than one target. It can be utilized, e.g., to check assemblies for their completeness. The fourth AVI task, multi-class localization, answers the question, Where do we find which state on the inspected object? For example, the state of a bolt can be present, missing, rusty, or cracked. Thus, the set of states is not fixed and depends, among other things, on application-specific conditions, as well as on the object under inspection.

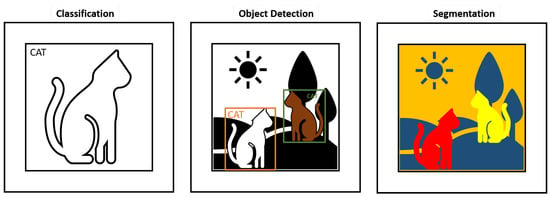

These four AVI tasks are closely related to the three most common CV tasks, image classification, object detection, and segmentation, which are visualized in Figure 5.

Figure 5.

Visualization of the three different CV tasks—classification, object detection with two bounding boxes, and segmentation.

In image classification, the goal is to assign a corresponding label to an image. Object detection is performed by a method or model that searches for objects of interest. Usually, the object is indicated by a rectangular bounding box, and simultaneously, object classification is performed for each object. Unlike pure classification, multiple objects can be detected and classified. Image segmentation is the process of separating every recognizable object into corresponding pixel segments. This means that both classification AVI tasks are performed by image classification models, while both localization tasks are performed by either an object detection model or a segmentation model.
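
The relation between the four AVI tasks and the three CV tasks can be written down as a small lookup. This is a minimal sketch of the categorization described above; the type and function names are hypothetical, and the two Boolean flags are our own way of encoding the questions each task answers.

```python
from enum import Enum


class CVTask(Enum):
    IMAGE_CLASSIFICATION = "image classification"
    OBJECT_DETECTION = "object detection"
    SEGMENTATION = "segmentation"


class AVITask(Enum):
    BINARY_CLASSIFICATION = "binary classification"
    MULTI_CLASS_CLASSIFICATION = "multi-class classification"
    LOCALIZATION = "localization"
    MULTI_CLASS_LOCALIZATION = "multi-class localization"


def avi_task(needs_location: bool, distinguishes_states: bool) -> AVITask:
    """Pick the AVI task from the questions the inspection must answer.

    needs_location: "Where do we find the state?" must be answered.
    distinguishes_states: "Which state?" must be answered, not only
    "Is the object in the desired state?".
    """
    if needs_location:
        if distinguishes_states:
            return AVITask.MULTI_CLASS_LOCALIZATION
        return AVITask.LOCALIZATION
    if distinguishes_states:
        return AVITask.MULTI_CLASS_CLASSIFICATION
    return AVITask.BINARY_CLASSIFICATION


def candidate_cv_tasks(task: AVITask) -> set:
    """Return the CV tasks whose models can address the given AVI task."""
    if task in (AVITask.LOCALIZATION, AVITask.MULTI_CLASS_LOCALIZATION):
        return {CVTask.OBJECT_DETECTION, CVTask.SEGMENTATION}
    return {CVTask.IMAGE_CLASSIFICATION}


# Example: checking whether each bolt is present, missing, rusty, or cracked.
bolt_task = avi_task(needs_location=True, distinguishes_states=True)
print(bolt_task.value, "->", sorted(t.value for t in candidate_cv_tasks(bolt_task)))
```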

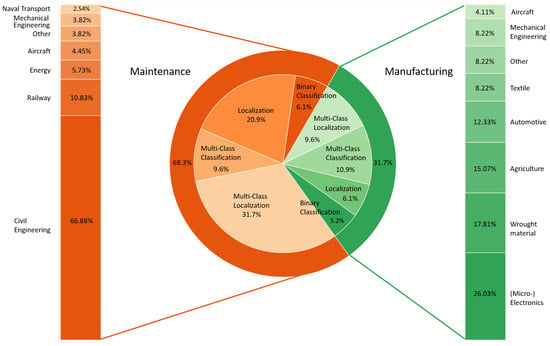

Figure 6 shows the composition of our publication corpus with regard to the application context, industry sector, and AVI task.

Figure 6.

Distribution of reviewed publications by inspection context, VI task, and associated industrial sector.

The number of papers in the maintenance context outweighs the number addressing manufacturing by roughly two to one, as depicted by the outer pie chart in the center. Each of those contexts is associated with several different industrial sectors in which AVI is applied. The share of the industry sectors in each context is plotted on the left- and right-hand sides. The biggest shares in maintenance are held by the fields of civil engineering, railway, energy, and aircraft. These sum up to a total of 87.89% of all maintenance publications. The manufacturing sectors (micro-) electronics, wrought material, agriculture, automotive, and textiles add up to a total of 79.46% of all manufacturing papers. In addition to the industry sectors, we also group the applications per context by the AVI task. The distribution of VI tasks for each industry context is visualized by the inner pie chart. Within maintenance, which accounts for 68.3% of all publications, 77.01% of the publications address basic and multi-class localization tasks. Maintenance publications that address classification tasks make up only 15.7% of the whole corpus. In manufacturing, the VI tasks are spread across classification (16.1% of all publications) and localization (15.7% of all publications). The multi-class variants are clearly more frequent for both, with 9.6% for localization and 10.9% for classification.
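
The percentages in this paragraph refer to two different bases, the whole corpus and the publications of one context. The following sketch converts between them under the assumption that 77.01% refers to the maintenance publications and that 68.3%, 16.1%, and 15.7% refer to the whole corpus; the variable names are our own.

```python
# Shares in percent, taken from the text.
MAINTENANCE_OF_CORPUS = 68.3            # maintenance publications / all publications
LOCALIZATION_OF_MAINTENANCE = 77.01     # localization / maintenance publications
MANUF_CLASSIFICATION_OF_CORPUS = 16.1   # manufacturing classification / all publications
MANUF_LOCALIZATION_OF_CORPUS = 15.7     # manufacturing localization / all publications

# Convert the within-context share into a share of the whole corpus.
maint_localization_of_corpus = MAINTENANCE_OF_CORPUS * LOCALIZATION_OF_MAINTENANCE / 100
maint_classification_of_corpus = MAINTENANCE_OF_CORPUS - maint_localization_of_corpus

# The two manufacturing shares should add up to the manufacturing total.
manufacturing_of_corpus = MANUF_CLASSIFICATION_OF_CORPUS + MANUF_LOCALIZATION_OF_CORPUS

print(f"maintenance localization:   {maint_localization_of_corpus:.1f}% of corpus")
print(f"maintenance classification: {maint_classification_of_corpus:.1f}% of corpus")
print(f"manufacturing total:        {manufacturing_of_corpus:.1f}% of corpus")
```

Under these assumptions, maintenance classification comes out at about 15.7% of the corpus, and the manufacturing shares sum to about 31.8%, which matches the reported 31.7% up to rounding.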

In the following subsections, one for each AVI task, we investigate the collected literature and utilized models. Only the best-performing architecture is mentioned if multiple are utilized. Models that are derived from established base architectures like Residual Networks (ResNet) [67] are still considered to belong to that architecture family unless they are combined or stacked with another architecture. We also subsumed all versions of the “you only look once” (YOLO) architecture [68] under YOLO. Models that are custom designs of the authors and not based on any established architectures are subsumed under the categories multi-layer perceptron (MLP), convolutional neural network (CNN), or Transformer based on their main underlying mechanisms.
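
The grouping rules above can be expressed as a simple name-normalization step. The sketch below is a hypothetical helper and not the procedure used to build the tables: the list of base families is only a subset, the substring matching is a simplification, and the label for combined architectures is an assumption.

```python
# Subset of established base architectures, for illustration only.
BASE_FAMILIES = ["ResNet", "DenseNet", "VGG", "AlexNet", "YOLO"]


def architecture_family(model_name: str, custom_mechanism: str = "CNN") -> str:
    """Map a reported model name to an architecture family label.

    - Variants of one base architecture are subsumed under that family.
    - Combinations of several base architectures keep a combined label
      (assumed labeling; they are not assigned to a single family).
    - Custom designs fall back to their main mechanism
      ("MLP", "CNN", or "Transformer"), supplied by the caller.
    """
    name = model_name.lower()
    matches = [family for family in BASE_FAMILIES if family.lower() in name]
    if len(matches) == 1:
        return matches[0]
    if len(matches) > 1:
        return "+".join(matches)
    return custom_mechanism


print(architecture_family("YOLOv5"))                      # all YOLO versions -> YOLO
print(architecture_family("ResNet-50"))                   # derived model -> ResNet
print(architecture_family("ResNet + DenseNet stack"))     # combined architectures
print(architecture_family("custom 5-layer network", "MLP"))
```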

3.3.1. Visual Inspection via Binary Classification

In the surveyed literature, 21 publications describe a way to approach AVI with binary image classification; these are summarized in Table 2. Following the general trend of VI use cases, damage detection is addressed ten times with binary classification.

Adibhatla et al. [50] used a ResNet, Selmaier et al. [46] used an Xception architecture, and Jian et al. [69] used a DenseNet to classify whether damage is visible or not. Crack detection is addressed seven times with binary classification. In four publications, the authors propose a CNN architecture for AVI crack detection. In two further publications, crack detection was performed with an AlexNet or a Visual Geometry Group (VGG) model. Ali et al. [70] proposed a sliding window vision transformer (ViT) as a binary classifier for crack detection in pavement structures. Binary classification is also utilized for completeness checks and plant disease detection (other). For plant disease detection, Ahmad et al. [64] used an MLP, while O’Byrne et al. [63] used a custom CNN for a completeness check use case.

Table 2.

Overview of VI use cases and models that solve these problems via binary classification.

| VI Use Case | Model | Count | References |
|---|---|---|---|
| Crack Detection | AlexNet | 1 | [71] |
| | CNN | 4 | [72,73,74,75] |
| | VGG | 1 | [76] |
| | ViT | 1 | [70] |
| Damage Detection | AlexNet | 1 | [77] |
| | CNN | 1 | [78] |
| | DenseNet | 3 | [38,69,79] |
| | Ensemble | 1 | [80] |
| | MLP | 1 | [81] |
| | ResNet | 1 | [50] |
| | SVM | 1 | [82] |
| | Xception | 1 | [46] |
| Quality Inspection | AlexNet | 1 | [83] |
| | MLP | 1 | [84] |
| Other | MLP | 1 | [64] |
| Completeness Check | CNN | 1 | [63] |
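
The counts in Table 2 can be cross-checked against the numbers given in the text. The sketch below transcribes the table into a dictionary and aggregates it per use case and per model; the variable names are our own.

```python
from collections import Counter

# Publication counts per use case and model, transcribed from Table 2.
table2 = {
    "Crack Detection": {"AlexNet": 1, "CNN": 4, "VGG": 1, "ViT": 1},
    "Damage Detection": {
        "AlexNet": 1, "CNN": 1, "DenseNet": 3, "Ensemble": 1,
        "MLP": 1, "ResNet": 1, "SVM": 1, "Xception": 1,
    },
    "Quality Inspection": {"AlexNet": 1, "MLP": 1},
    "Other": {"MLP": 1},
    "Completeness Check": {"CNN": 1},
}

# Aggregate per use case and per model family.
per_use_case = {use_case: sum(models.values()) for use_case, models in table2.items()}
per_model = Counter()
for models in table2.values():
    per_model.update(models)

total = sum(per_use_case.values())
print(per_use_case)
print(per_model.most_common(3))
print("total:", total)
```

The totals agree with the text: 21 publications overall, ten for damage detection, and seven for crack detection.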

3.3.2. Visual Inspection via Multi-Class Classification

Table 3 presents an overview of 42 publications that solve various use cases of AVI through multi-class classification and the models that are used to solve them. The models used include popular DL architectures such as AlexNet, CNN, DenseNet, EfficientNet, GAN, MLP, MobileNet, ResNet, single-shot detector (SSD), and VGG. Twenty-one publications describe approaches for damage detection, of which six are based on custom CNNs. Four further approaches are based on ResNet architectures. Kumar et al. [85] proposed an MLP architecture to perform damage detection. An EfficientNet and an SSD were also employed for multi-class damage detection. Five publications cover crack detection use cases. For example, Alqahtani [86] used a CNN, and Elhariri et al. [87] as well as Kim et al. [88] used a VGG. DL models like ResNet, DenseNet, and an ensemble architecture are also proposed by some authors. Completeness checks were performed with the help of a ResNet by Chandran et al. [51] or an SSD, as shown by Yang et al. [89]. In seven publications, the authors used custom CNNs, DenseNet, ResNet, or VGG in quality inspection use cases. Other use cases can likewise be addressed by different DL-based CV models or MLPs.

Table 3.

Overview of VI use cases and models that solve these use cases via multi-class classification.

3.3.3. Visual Inspection via Localization

As previously mentioned, localization is used to detect where an object of interest is located. Table 4 summarizes which VI use cases are addressed with localization and the models used. In a total of 50 publications, localization was employed for AVI. Contrary to classification approaches, crack detection is the most addressed VI use case, with a total of 26 publications investigating it. The most utilized approach for crack detection is the CNN, which was applied in eight publications. Furthermore, in three other publications, extended CNN architectures were used. Kang et al. [120] introduced a CNN with an attention mechanism, and Yuan et al. [121] used a CNN with an encoder–decoder architecture. Andrushia et al. [122] combined a CNN with a long short-term memory cell (LSTM) to process the images recurrently for crack detection. Besides custom CNN approaches, six authors used UNet to detect cracks, mostly in public structures. Damage detection via localization occurred 16 times and was addressed with at least twelve different DL-based models. Three authors decided to approach it with DL-based models of the Transformer family. For example, Wan et al. [123] utilized a Swin-Transformer to localize damages on rail surfaces. Completeness checks can be executed with YOLO and/or region-based convolutional neural networks (RCNNs). Furthermore, YOLO can be used for vibration estimation, as shown by Su et al. [124]. Oishi et al. [125] proposed a Faster RCNN to localize abnormalities on potato plants.

Table 4.

Overview of VI use cases and models that solve these use cases via localization.

3.3.4. Visual Inspection via Multi-Class Localization

The largest share of the reviewed literature used multi-class localization for VI. In 83 of the 196 publications, it is shown how to approach different use cases, like crack or damage detection, with multi-class localization. Table 5 provides a detailed overview. As for the two classification approaches, damage detection is the most investigated VI use case, with 58 publications. Therein, YOLO and Faster RCNNs are the two most used models, with over ten publications. They are followed by CNNs and Mask RCNN models, which are utilized more than five times. FCN, SSD, and UNet can also be used as approaches to multi-class damage detection. Huetten et al. [164] conducted a comparative study of several CNN models highly utilized in AVI and three vision transformer models, namely, detection transformer (DETR), deformable detection transformer (DDETR), and Retina-Swin, on three different damage detection use cases on freight cars. Multi-class localization was used in 15 publications for crack detection. In five publications, the authors performed crack detection with a YOLO model. Crack detection can also be performed with AlexNet, DeepLab, FCN, Mask RCNN, and UNet, which was shown in different publications. In three different publications, the authors show how to conduct quality inspection with YOLO. YOLO can be used in a tobacco use case, as well (other), as shown by Wang et al. [165].

Table 5.

Overview of VI use cases and models that solve these use cases via multi-class localization.

4. Analysis and Discussion

In this section, we focus on answering guiding questions three, “What is the data basis for industrial AVI and are there common benchmark datasets?”; four, “How do deep learning models perform on these tasks and which of them can be recommended for certain AVI use cases?”; and five, “Are recent SOTA CV deep learning models used in AVI application, and if not, is there untapped potential?”. We sought answers to these questions by analyzing the performance of the models as well as comparing the SOTA in DL-based AVI applications and academic CV research. First, we look into the datasets and learning paradigms that are utilized. This is followed by an analysis of the performance of the applied DL models in the AVI tasks they have been categorized into. This section is concluded by summarizing developments in academic research and identifying promising models and methods from it.

4.1. Inspection Context and Industrial Sectors

There are many different industry sectors that apply AVI in maintenance and manufacturing. In maintenance, the clear majority (66.88%) of publications are from the civil engineering sector, while, in manufacturing, a similar total share is distributed more evenly across several sectors, with 26.03% from (micro-)electronics, 17.81% from wrought material production, 15.07% from agriculture, and 12.33% from automotive. This may be due to the fact that many manufacturing applications were already targeted for automation with classic CV, as the environmental conditions that affect image quality, as well as variance, are easier to control, so the expected process improvements were generally lower. Most maintenance use cases are performed outdoors in varying light, weather, and seasonal conditions. Therefore, DL-based CV was needed to address use cases in the civil engineering or railway sector, such as bridge, road, or building facade inspection, as well as rail surface, fastener, or catenary inspection. Looking at the preferred AVI tasks, we see a clear majority of 77.01% for localization in maintenance, while, in manufacturing, the shares of classification (50.47%) and localization (49.53%) are evenly split. The reason for this is that, in maintenance, it is usually necessary to assess a whole system with many different parts, so the need for localization arises, while, in manufacturing, it is easier to limit the inspection to individual parts, which is also possible with classification.

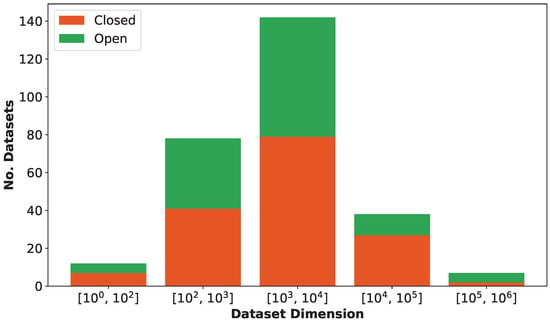

4.2. Datasets and Learning Paradigms

The dataset dimensions vary from 45 images [48] to more than 100,000 images [81,94,183,231], with the average number of images at 14,614 and a median of 1952. Figure 7 visualizes the number of datasets grouped by the decimal power of their dimension, ranging from less than 100 to less than 1,000,000. The two largest groups cover the intervals [101, 1000] and [1001, 10,000], with most of the datasets tending toward the lower boundary of their respective group. These two groups also feature the most open-access datasets: in total, 77/243 (31.69%) of the papers used at least one open-access dataset.

Figure 7.

Distribution of dataset dimensions (number of samples) utilized by publications in our publication corpus.
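The grouping by decimal power used in Figure 7 can be sketched as follows. This is a minimal illustration that assumes upper-inclusive bins (up to 100, 101 to 1000, 1001 to 10,000, and so on); the function names and the example sizes are hypothetical and not taken from the publication corpus.

```python
from collections import Counter


def decade_upper_bound(n_samples: int) -> int:
    """Return the upper bound of the power-of-ten bin that contains n_samples.

    Assumption: bins are upper-inclusive, and everything up to 100 samples
    falls into the smallest bin.
    """
    if n_samples < 1:
        raise ValueError("a dataset needs at least one sample")
    bound = 100
    while n_samples > bound:
        bound *= 10
    return bound


def group_by_decade(dataset_sizes):
    """Count how many datasets fall into each power-of-ten bin."""
    counts = Counter(decade_upper_bound(size) for size in dataset_sizes)
    return dict(sorted(counts.items()))


# Made-up dataset sizes, for illustration only.
example_sizes = [45, 500, 1952, 2500, 120000]
print(group_by_decade(example_sizes))
```

Using integer comparisons instead of a floating-point logarithm avoids rounding issues exactly at the bin boundaries.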

Another important factor in relation to datasets is the uniformity of the distribution of their samples across the classes, often formulated more negatively as class imbalance. Class imbalance should be considered in learning processes since it can result in incorrect classification, detection, or segmentation. Therefore, imbalance in datasets has to be quantified differently across different learning tasks. For classification, each sample is directly associated with only one class. Object detection may feature more than one class per image, several instances of the same class, or no annotated class at all. So, to quantify the imbalance of an object detection dataset, the number of annotated objects, as well as the number of images featuring no objects, is required. Dataset imbalance for segmentation tasks can be quantified in the same way as for object detection, but the most exact measure would be to evaluate the pixel areas covered by each class in relation to the overall pixel area of the dataset, which is only reported by Neven et al. and Li et al. [58,121]. Given the number of classes n, the total number of samples, and the number of samples per class, we propose to quantify the balance in a dataset by Equation (1). The distance between the hypothetical balanced class ratio and the actual ratio between samples of a particular class and the total number of samples is used to quantify the imbalance for this class. The Euclidean distance is chosen as the distance metric for numerical stability reasons. Summing up these distances for each class yields zero for a perfectly balanced dataset and one for a completely imbalanced dataset, so subtracting it from one achieves a metric measuring the balance.
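As Equation (1) is not reproduced in this text, the following is only a sketch of a balance score that is consistent with the verbal description above. It assumes that the per-class distance is the squared difference between the actual class ratio and the balanced ratio 1/n, and that the sum is normalized by its worst-case value (n − 1)/n so that a completely imbalanced dataset yields a sum of one. Both the normalization and the function name `balance_score` are assumptions made for illustration.

```python
def balance_score(samples_per_class):
    """Balance score in [0, 1]: 1 = perfectly balanced, 0 = one class only.

    Assumed form (not confirmed by the source):
        B = 1 - (n / (n - 1)) * sum_i (c_i / N - 1 / n) ** 2
    with n classes, c_i samples in class i, and N samples in total.
    """
    n_classes = len(samples_per_class)
    total = sum(samples_per_class)
    if n_classes < 2 or total <= 0:
        raise ValueError("need at least two classes and one sample")

    # Squared distance between the actual and the balanced class ratio.
    squared_distances = sum(
        (count / total - 1 / n_classes) ** 2 for count in samples_per_class
    )
    # Largest possible sum, reached when all samples belong to one class.
    worst_case = (n_classes - 1) / n_classes
    return 1 - squared_distances / worst_case


print(balance_score([300] * 6))  # six equally sized classes
print(balance_score([100, 0]))   # all samples in a single class
print(balance_score([15, 85]))   # hypothetical 15/85 binary split
```

For object detection and segmentation, the same function could be applied to instance counts or to per-class pixel areas instead of image counts, provided these numbers are reported.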

In total, our publication corpus encompasses 244 results produced on 204 unique datasets. For 47 publications, there is no detailed information on the training data, while 54 do not specify their test data, and in 44 cases, neither training nor testing data are sufficiently described. This means that, despite many authors providing open access to their datasets, very few researchers in the same field make use of them. This may be because the available datasets are deemed insufficient in size, label precision, image quality, or other respects, or because they are not advertised enough and are therefore not recognized by peer researchers. Overall, this makes the results less comparable and harder to reproduce and hinders further development of existing research results by others, which is detrimental to the progress of AVI.

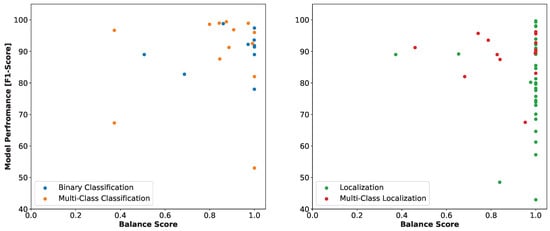

Figure 8 visualizes the relationship between model performance measured by the F1-score and the balance score proposed above. We chose to only include publications in this plot that report the F1-score because it is the only reported metric that deals well with balanced as well as imbalanced datasets. The chart on the left shows a mostly linear correlation between balance and performance in classification tasks, with a few upward and downward outliers. These are predominantly from the multi-class versions. Binary classification has considerably more publications based on entirely balanced datasets.

Figure 8.

Performance of models in classification (left) and localization tasks (right) plotted against the balance score.

The first thing that stands out for the localization tasks depicted in the right graph is the accumulation of (binary- or single-class) localization datasets with a balance score of one. This is primarily attributable to the fact that it is seldom specified whether and how many images without inspection targets are contained in the datasets, specifically for segmentation. The general correlation between class balance and performance is visible as well, but the gradient of an imaginary regression line would be slightly lower compared to classification. This can be explained by the higher resilience of localization models to dataset imbalance based on the property that there are generally fewer areas of interest or foreground in an image than background, and in this task, they have to be explicitly marked.

In our literature review, we found that 14 datasets were utilized more than once. Table 6 lists them in descending order by the number of publications in which they have been used. Most of them are concerned with enforcing a binary differentiation between damaged and intact or background, respectively, via the computer vision tasks classification or segmentation (SDNET 2018, CFD, RSDD, DeepCrack, CrackTree, Özgenel crack dataset, Crack 500, Crack LS 315, Aigle RN). This places them at the bottom end regarding class-induced complexity. In many cases, framing the learning task like this may be the only way of making it manageable at 500 or fewer samples with a certain performance goal in mind. The cost of data acquisition and annotation certainly plays a role, as well. Lastly, the applications all come from a maintenance context, where the basic recognition of damages in the field can be a satisfactory first step, and a more detailed inspection will be performed by the personnel repairing the damage.

Table 6.

Characteristics of benchmark datasets in automated visual inspection in industrial applications. Pixel area percentages are based on [121].

Statements about the imbalance of the segmentation datasets are possible at different levels of detail. Li et al. [121] report the pixel area percentages of cracks and background for DeepCrack, CrackTree 260, Crack LS 315, and Crack 500. The imbalance in these datasets is very pronounced, with the highest balance being 0.35 for Crack 500. The balance reaches as low as 0.32 for Crack LS 315 and CrackTree, which poses a significant challenge compared to anything deemed feasible in classification problems. CFD, RSDD, and Aigle RN do not allow a statement to be made, as there is no information about images without damage, the number of class instances, or the area ratios between classes. The two classification datasets, SDNET 2018 and the Özgenel crack dataset, are far more balanced, with scores of 0.51 and 1.0, and also offer significantly more data samples.

The Severstal and Magnetic Tile Surface segmentation datasets have higher class-induced complexity, with four and six classes compared to only two. The extracted information about them only allows for an instance-based evaluation of balance, which leads to balance scores that are slightly higher compared to the crack segmentation datasets, with 0.37 and 0.40, respectively. They are also both from the production context in quality assurance applications, where the focus may be not only on recognizing any damages but also on categorizing them in more detail to be able to draw conclusions about process parameters and ultimately improve the quality in the future. The NEU surface dataset is also from the steel industry like the Severstal dataset but with a completely balanced class distribution at six total classes, albeit with only 1800 samples at a similar resolution.

GRDDC 2020 and Road Damage Dataset 2018 offer a more nuanced view of road damage with four and eight classes, respectively. Despite their higher number of classes, they are a lot less imbalanced compared to the binary crack segmentation datasets, but this is only partly due to the learning task of object detection being pursued.

A clear recommendation can be made for Crack 500 as a crack segmentation benchmark, as it offers a comparatively high balance score, the most data samples for this specific CV task, and the highest-resolution images, as well. This means it is possible to increase the number of samples even further by subdividing the images, which may be necessary to reduce the computational cost or to use an efficient training batch size, depending on the model used. For crack classification, the Özgenel crack dataset seems to be recommendable, as it is well balanced and has a tolerable size difference from SDNET. In all other areas, a targeted combination of datasets that compensate for each other's weaknesses, such as the NEU surface defect database and the Severstal dataset for steel surface defect detection, appears to be the best solution.
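Subdividing high-resolution images into smaller samples, as mentioned above, can be sketched with a simple tiling scheme. The image size and tile size below are hypothetical, and non-overlapping tiles are only one possible choice; overlapping tiles or random crops are common alternatives.

```python
def tile_boxes(width, height, tile_size):
    """Return (left, top, right, bottom) boxes covering an image without overlap.

    Tiles at the right and bottom borders are clipped to the image size,
    so they may be smaller than tile_size.
    """
    if width <= 0 or height <= 0 or tile_size <= 0:
        raise ValueError("width, height, and tile_size must be positive")
    return [
        (left, top, min(left + tile_size, width), min(top + tile_size, height))
        for top in range(0, height, tile_size)
        for left in range(0, width, tile_size)
    ]


# Hypothetical 2000 x 1500 pixel image split into 500 x 500 pixel tiles.
boxes = tile_boxes(2000, 1500, 500)
print(len(boxes), boxes[0], boxes[-1])
```

The segmentation masks have to be cropped with the same boxes, and tiles that contain no damage change the class balance of the resulting dataset, so the balance should be re-evaluated after tiling.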

The majority of industrial AVI use cases are based on datasets that are, at most, 10% of the size of the Microsoft common objects in context benchmark dataset (MS COCO) or 1% of the size of the ImageNet benchmark dataset. One would therefore expect that most authors resort to methods that do not require labeled training data or strategies that reduce the number of training samples required.

Still, the vast majority of the surveyed literature covers supervised learning applications (97.37%), with only three publications employing unsupervised anomaly detection [48,50,125] and one using semi-supervised learning [130]. Regarding the training processes employed, we can identify three groups. The first group, which accounts for 49.32%, utilizes transfer learning; i.e., the models are initialized with weights from one of the major CV challenges, and in most cases, an implementation from one of the many available open-source repositories is also used. This reduces the training time as well as lowers the number of required samples for a sufficiently large training dataset and thus the effort to create such a dataset. The second group, which makes up 30.59% of the publication corpus, trains their models from scratch. This results in a much larger need for training data and training time but is also the only way if the author creates their own architecture, which 21.0% of the authors did. In the third group, which is made up of the remaining 20.09%, there is no indication in the publication of whether the model/s were pretrained or not. In none of the publications using datasets with more than 50,000 samples did the authors utilize transfer learning. This seems to be a viable approach since the datasets are sufficiently large. At the same time, a comparison with transfer learning approaches would be insightful since the underlying models have been pretrained on much larger datasets. However, it is counterintuitive and incomprehensible that transfer learning from benchmarks, or the addition of samples from an open dataset from the same domain, was not used in 20 of the 68 cases with fewer than 1000 samples. Five of the eight classification models in this group performed below the median compared to other models with their respective architectures, while only five of the twelve localization models are in this performance range. 
So, there is no clear indication of whether transfer learning would have improved performance in these cases. In addition to transfer learning, self-supervised or unsupervised learning could prove useful since they alleviate the effort of labeling.

4.3. Performance Evaluation by AVI Task

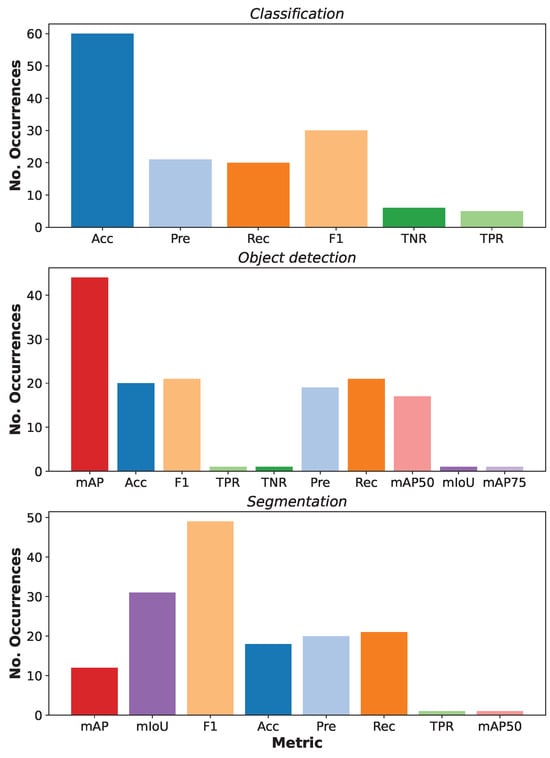

The performance of the DL models utilized is not easily comparable because of the heterogeneity of reported metrics as well as datasets. In total, ten different metrics were reported, namely, accuracy (Acc), precision (Pre), recall (Rec), F1-score (F1), true-negative rate (TNR), true-positive rate (TPR), mean average precision (mAP), mean average precision at 50% as well as 75% intersection over union (mAP50, mAP75), and mean intersection over union (mIoU). Figure 9 shows how often a certain metric was utilized for applications that use classification, object detection, and segmentation (from top to bottom).

Figure 9.

Distribution of metrics used in papers in our publication corpus grouped by CV task.

The first thing that stands out is the fact that accuracy is still the most common metric for classification tasks, despite having low descriptive quality, especially with imbalanced datasets, which are quite common, as stated in Section 4.2. The F1-score, which is the harmonic mean of precision and recall, is the second most common metric. Precision and recall are tied for third place. Among object detection use cases, the mean average precision (mAP) is the most reported metric, probably because it is also the official metric of the MS COCO object detection challenge. The F1-score and recall are also very relevant in object detection tasks, with the second-most occurrences in the surveyed literature, while accuracy is in third place. Segmentation tasks are predominantly evaluated by their F1-score and mean intersection over union (mIoU) between predictions and the ground truth. Recall is in the top three as well for the last CV task.

In the following, we will first analyze the performance of the models employed in the application use cases to derive recommendations for each AVI task based on the most reported metrics. These are accuracy as well as F1-score for (binary and multi-class) classification and mAP as well as F1-score for (binary and multi-class) localization. If precision and recall were reported but the F1-score was not, we calculated it from them and took it into account as well. To be able to provide recommendations for segmentation models as well, we will also look at the mIoU, as this seems to be a metric reported almost exclusively for this CV task. After this, we will also look at the performance on the benchmark datasets from Table 6 and determine whether there are any differences.
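The following sketch illustrates how the F1-score can be derived from reported precision and recall, and why accuracy alone can be misleading on imbalanced data. The confusion counts are invented for illustration, and the function names are hypothetical.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def binary_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall, f1_from_precision_recall(precision, recall)


# Invented example: 1000 images, 50 of them defective. The model finds
# only 10 of the defects and raises 5 false alarms.
accuracy, precision, recall, f1 = binary_metrics(tp=10, fp=5, fn=40, tn=945)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")
```

In this example, the accuracy is above 95% although four out of five defects are missed, whereas the F1-score of roughly 0.31 reflects the weak defect detection.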

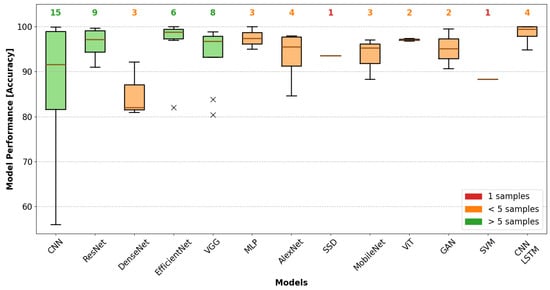

Custom CNN LSTM networks show the best median accuracy, as depicted in Figure 10, but this result has limited significance, with a sample size of four and all results coming from the same paper [74].

Figure 10.

Distribution of model accuracy for all classification models reporting it as a metric in our publication corpus. × marks data points that have a distance of more than 1.5 times the interquartile range to the first or third quartile.

The same applies to MLPs and ViT, which show a higher median accuracy than all other models with two to four occurrences. Despite their good performance, no general recommendation can be given for MLPs because all of them employ hand-engineered input features. EfficientNet, in contrast, is very close performance-wise but was applied to five datasets by four different authors. ResNet and VGG are the best two architectures with considerable sample sizes, namely nine and eight, respectively. While the accuracy distribution for VGG shows less spread, meaning more consistent performance over different tasks, ResNet’s median performance is almost as good. Custom CNNs have the third-worst median accuracy and a very large spread, as well. As this category contains very different architectures, this is to be expected. So, all in all, the best-performing choice for solving classification tasks based on accuracy as the metric from our publication corpus is EfficientNet.
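The × markers in the box plots follow the 1.5 × interquartile range rule stated in the figure captions. A minimal sketch of this rule is given below; the quartile interpolation method (`method="inclusive"`) is an assumption, since the captions do not specify how the quartiles were computed, and the accuracy values are invented.

```python
import statistics


def iqr_outliers(values):
    """Return the values lying more than 1.5 * IQR below Q1 or above Q3."""
    # Assumption: quartiles use linear interpolation over the sample itself.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    return [value for value in values if value < lower_fence or value > upper_fence]


# Invented accuracy values (in percent) for one model family.
accuracies = [90, 91, 92, 93, 94, 95, 60]
print(iqr_outliers(accuracies))
```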

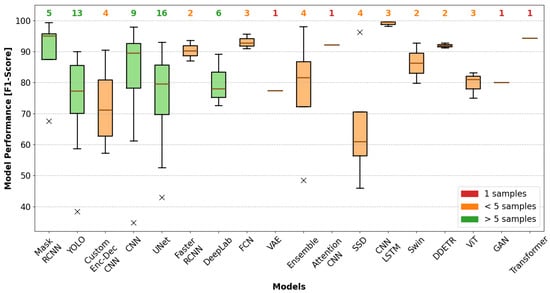

When looking at the classification F1-scores in Figure 11, we generally see similar median values and a higher spread compared to accuracy.

Figure 11.

Distribution of model F1-scores for all classification models reporting it (or precision and recall) as a metric in our publication corpus. × marks data points that have a distance of more than 1.5 times the interquartile range to the first or third quartile.

This is unexpected but could be an indicator that most of the datasets do not show strong imbalances. As for accuracy, EfficientNet has the highest median F1-score, closely followed by ViT. MLPs have the third-highest median F1-score and the third-narrowest interquartile range. ResNet has the fourth-highest median F1-score as well as the third-largest interquartile range, but it is still the best model with at least five occurrences. It is closely followed by custom CNNs and MobileNet with very similar median F1-scores but also the largest spread for the former. VGG-type models show a much weaker performance when evaluated with the F1-score compared to accuracy. Based on our publication corpus, we would recommend using EfficientNet or ResNet models for classification tasks if one does not want to create a custom CNN model or lacks the experience or expertise to do so. ViT looks promising, as well, but has to be investigated more thoroughly to make a general statement.

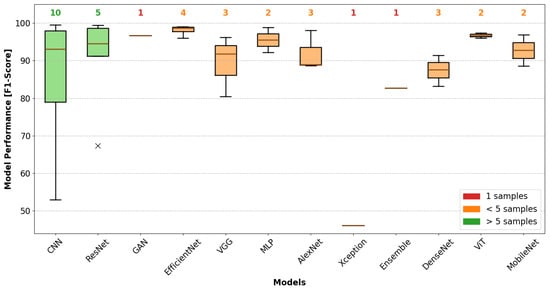

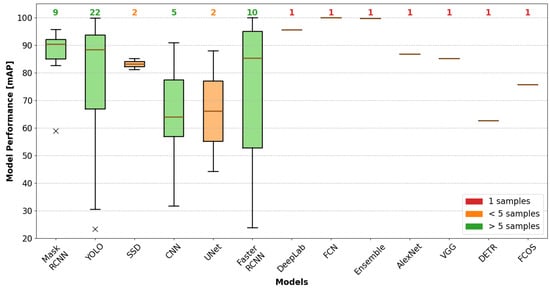

The localization performance represented by mAP is visualized in Figure 12.

Figure 12.

Distribution of model mAP for all localization models reporting it as a metric in our publication corpus. × marks data points that have a distance of more than 1.5 times the interquartile range to the first or third quartile.

Mask RCNN models show the highest median mAP and the lowest interquartile range and spread among models with at least five occurrences. The two other models with a high mAP performance and at least five samples are YOLO and Faster RCNN. Faster RCNNs have a similar median mAP but a much larger interquartile range with a slightly higher min–max spread. As YOLO has more than twice as many occurrences as Faster RCNN, it seems reasonable to say it is the better model, especially the newest versions. It is interesting to see that the spread and interquartile range of Mask RCNN are so much smaller than those of Faster RCNN, as it is actually a Faster RCNN architecture with an added segmentation head. Multi-task training seems to show a benefit regarding better generalization. Custom CNN architectures perform much worse median-wise compared to classification tasks, even though they have a smaller performance spread than YOLO and Faster RCNN. SSD, UNet, DeepLab, FCN, ensemble models, AlexNet, VGG, DETR, and the fully convolutional one-stage object detection model (FCOS) have only two or fewer publications reporting mAP. Except for DETR and FCOS, the performance is within the mAP range of Mask RCNN or even above it, which indicates promise. However, the data basis is insufficient for a valid assessment.

When looking at the localization F1-scores in Figure 13, there are fewer models with only one reported performance and also one more with a sample size of five or above compared to mAP (Mask RCNN, YOLO, UNet, DeepLab, and CNN).

Figure 13.

Distribution of model F1-scores for all localization models reporting it (or precision and recall) as a metric in our publication corpus. × marks data points that have a distance of more than 1.5 times the interquartile range to the first or third quartile.

Mask RCNN has the highest median F1-score as well as the lowest spread among those five and also the second-highest median overall. Custom CNNs show the second-highest median F1-score, as well as a smaller interquartile range and min–max spread, compared to the mAP metric. The next three models, UNet, DeepLab, and YOLO, have median F1-scores within 5%p of each other, at around 78%. UNet shows the highest median of the three, but also the largest interquartile range and min–max spread. YOLO’s median performance is the lowest, with an interquartile range very similar to that of UNet but a slightly smaller min–max range. So, DeepLab seems to be the best of those three models, as it features the second-highest median F1-score and the narrowest spread. Faster RCNN, FCN, attention CNN, CNN LSTM, DDETR, and custom transformer models have between one and three reported performances within the range of the best-performing model, Mask RCNN. Further investigations of those models are needed to gain more general insight into their promising performance.

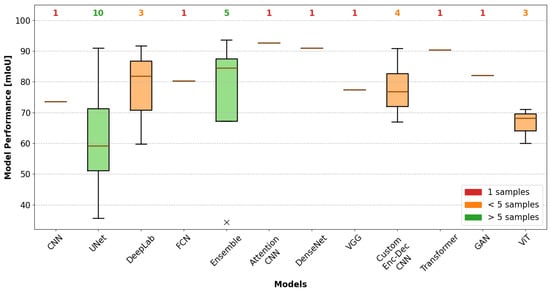

The mIoU boxplots in Figure 14 contain mostly segmentation models, as the mIoU was reported as a metric for other CV tasks fewer than five times.

Figure 14.

Distribution of model mIoU for all localization models reporting it as a metric in our publication corpus. × marks data points that have a distance of more than 1.5 times the interquartile range to the first or third quartile.

The reported values are generally lower than mAP and F1-score due to the higher difficulty of the segmentation task compared to object detection. There are also only five models with more than one sample, which are UNet, ensemble models, DeepLab, ViT, and custom encoder–decoder-CNNs. The best of those five regarding the median mIoU are ensemble models. DeepLab shows the second-highest median mIoU with a slightly larger min–max spread compared to ensemble models. Custom encoder–decoder CNNs achieve a median mIoU 5%p lower but with a slightly lower spread. The UNet model, which occurred the most, also has the worst performance regarding the median, as well as the interquartile range and min–max spread. Of the seven models with only one sample, an attention-enhanced CNN, DenseNet, and a custom transformer yield a performance that is more than 10%p better than the median of DeepLab. Further investigation of these architectures on different datasets seems promising.
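For reference, the mIoU averages the per-class ratio between the intersection and the union of predicted and ground-truth pixels. The sketch below computes it for small label maps given as nested lists. Skipping classes that occur in neither map is one common convention and is an assumption here, as the surveyed publications may handle this case differently.

```python
def mean_iou(prediction, ground_truth, num_classes):
    """Mean intersection over union for two label maps of equal shape."""
    ious = []
    for cls in range(num_classes):
        intersection = 0
        union = 0
        for pred_row, gt_row in zip(prediction, ground_truth):
            for pred_label, gt_label in zip(pred_row, gt_row):
                in_pred = pred_label == cls
                in_gt = gt_label == cls
                intersection += in_pred and in_gt
                union += in_pred or in_gt
        if union:  # assumption: ignore classes absent from both maps
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class occurs in prediction or ground truth")
    return sum(ious) / len(ious)


# Toy example with background (0) and crack (1) pixels.
ground_truth = [[0, 0, 1, 1],
                [0, 0, 1, 1]]
prediction = [[0, 0, 0, 1],
              [0, 0, 1, 1]]
print(mean_iou(prediction, ground_truth, num_classes=2))
```

A single misclassified pixel lowers the IoU of both classes in this example (to 0.8 for the background and 0.75 for the crack class), which illustrates why mIoU values tend to be lower than pixel accuracy for thin structures such as cracks.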

Based on our surveyed literature, we would recommend using Mask RCNN or YOLO models for localization tasks solved with object detection models and DeepLab, Mask RCNN, or UNet for segmentation. Despite a higher time expenditure for implementation and training, the use of customized CNN models can be justified and yield similar performance to the aforementioned models. However, it should be noted that, on the one hand, the necessary expertise must be available and, on the other hand, the amount of data must be sufficient. CNN LSTM, DDETR, custom transformer, and FCN look promising, as well, but have to be investigated more thoroughly to make a more informed statement.

4.4. Comparison with Academic Development in Deep Learning Computer Vision Models

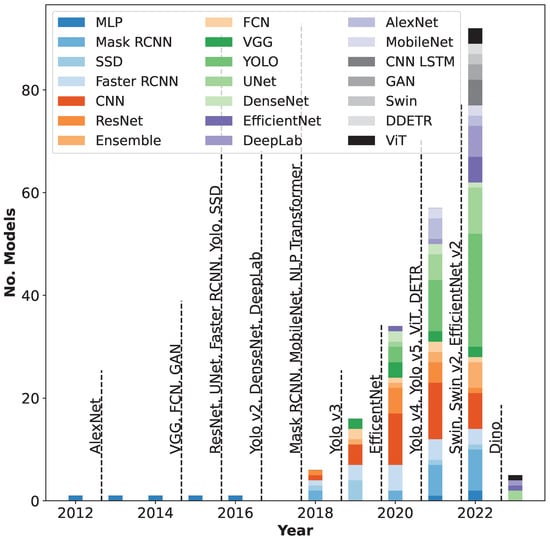

Figure 15 illustrates how often a certain DL model was applied to VI each year between 2012 and 2023 based on our publication corpus. In addition, we highlight the progress of SOTA DL CV models by marking in which year the models were first proposed. There were very few publications from 2012 to 2017, but from 2018 onward, there has been a strong increase every year, reaching a maximum to date of almost 100 publications in 2022.

Figure 15.

Timeline showing the number of occurrences of models in a certain year in our publication corpus from 2012 to 2023. Only models that occurred at least two times in total were included. Vertical dashed lines mark the proposal of models considered to be research milestones.

In 2012, a CNN received significant attention for its classification ability. This CNN is called AlexNet and took first place at the ImageNet Large Scale Visual Recognition Challenge 2012 (ILSVRC 2012) with an error rate 10.9%p lower than the second-place model [249]. Thereafter, the field of CV was dominated by CNN architectures. In the following years, the models became deeper and more complex. In 2014, VGG [250] and FCN [251] were introduced, but with deeper models, the vanishing gradient problem occurs more intensively. One solution was introduced in 2015 with Microsoft’s ResNet, which uses residual connections [67]. Due to the residual connections, the gradient does not decrease arbitrarily. This year also marks the proposal of the one-stage object detection architectures YOLO [68] and SSD [252], which are optimized for inference speed; the third iteration of the two-stage detector (Faster) RCNN [253]; and the segmentation architecture UNet [254]. In 2016, DenseNet was published, and it uses a similar approach to ResNet’s residual connections, called dense connections [255]. In the same year, the second version of YOLO [256] was published, adopting the concept of anchor boxes from SSD and using a new backbone network, called Darknet-19, specifically designed for object detection. In 2017, an RCNN version for instance segmentation, called Mask RCNN [257], as well as the first transformer model in natural language processing [258], were proposed. The third version of YOLO [259], proposed in 2018, adds multi-scale detection in addition to a deeper backbone network to further improve the detection of small objects. In 2019, Google proposed EfficientNets [260], a series of models, where architectural scaling parameters like width, depth, and resolution were not chosen by the authors but determined by a learning-based approach called neural architecture search.
YOLO v4 [261] and v5 [262] were published in very short succession by different authors implementing many similar improvements, like a new data augmentation strategy called mosaic augmentation, where different images are combined for training, the mish activation function [263], and cross-stage partial connections (CSP) [264], to name a few.

Recently, the impressive results of transformer models in natural language processing (NLP) have attracted the attention of CV researchers to adapt them to their domain. Two different approaches have been established: either they are utilized as backbone networks, such as the vision transformer (ViT) [265] or the shifted windows transformer (Swin) [266], or they function as detection heads, such as the detection transformer (DETR) [267] and the dynamic head transformer (DyHead) [268], which work on features extracted by an upstream (CNN) backbone. Nowadays, transformer models outperform convolution-based models on CV benchmark datasets like MS COCO [269] or Pascal visual object classes (VOC) by up to 7.5% in mAP [270].

Another advantage of transformers is that they do not require a lot of labeled data, since they can be pretrained for object detection in a self-supervised manner before being fine-tuned on small labeled datasets. Chen et al. proposed a pretraining task specifically for the DETR model that improves on its supervised performance, while Bar et al. even achieved highly accurate zero-shot performance with the same model [271,272]. Xu et al. developed a student–teacher-based training procedure for object detection that is independent of the model architecture, but transformers show very good performance when trained with it [273]. These aforementioned methods are limited to the task of object detection, but transformers are also able to learn more general representations from self-supervised training that can be adapted to different CV tasks, such as classification, object detection, segmentation, key point detection, or pose estimation. These methods can be grouped into three different categories: masked image modeling (MIM), contrastive learning, and hybrids of those two. In masked image modeling, parts of the input images are masked out, usually with the average color values of the image, and the task is to reconstruct these masked-out sections [274,275]. Contrastive learning tries to achieve similar representations for similar input images. This is achieved by augmenting the input in two different ways and forcing the model to represent both views close to each other, while augmented views of other images are pushed away in the representation space [276,277,278,279,280,281]. Fang et al. combined both paradigms, conditioning their model to reconstruct the masked-out features of a contrastive language–image pretraining (CLIP) model [282] to achieve even better results. In AVI, properties like strong generalization and data efficiency are highly desirable, since labeled data are scarce.
Especially in tasks where errors or damages need to be detected, there is a lack of examples for training. When looking at the timeline in Figure 15, it is noticeable that there is a time gap of two to three years between the invention of a model and its transfer to an AVI application. Consequently, we can argue that the performance in AVI can be improved by the increased application of newer CNN models, such as EfficientNet v1/2 [260,283], FCOS [284], or attention-enhanced CNN models, which showed promise in localization tasks with regard to their F1-score and mIoU. The introduction of vision transformer models such as DETR, Swin v1, or the Retina-Swin [266,267,285] started in 2022, which is already faster than what we observed with most CNN models in the past. Most of these publications yielded results within the top range of their AVI use case, as has been shown in Section 4 [70,123,152,164,187]; therefore, we expect and recommend the acceleration of the application of vision transformers to the domain of AVI, especially of even newer, very parameter-efficient models like DINO and its variants [286,287,288,289]. All publications utilizing transformer models applied them via supervised transfer learning, disregarding their excellent self-supervised learning capabilities with regard to generalization and data efficiency. Exploiting these capabilities could potentially reduce the amount of labeled data required to train them, which ultimately leads to a lower investment of time for labeling and thus lower cost. Nevertheless, it must be noted that transformers need more memory than CNNs; therefore, the benefits may be limited on edge devices. Further research into the model miniaturization of vision transformers may lead to an improvement on this front.

5. Conclusions

In this review, we provide a comprehensive overview of 196 scientific publications published between 2012 and March 2023 dealing with the application of DL-based models for AVI in manufacturing and maintenance.

The publications were categorized and critically evaluated based on six guiding questions, which helped us throughout the literature review. By answering these questions, we can report several findings.

Based on the literature, we derived key requirements for AVI use cases. These requirements were subsequently organized into two distinct dimensions, namely, general versus specific requirements and hard technical versus soft human factors. As a result, we identified several essential aspects that transcend particular use cases and are of broad significance. These encompass performance, explainability, robustness/generalization, the ability to handle sparse data, real-time capability, and adherence to hardware constraints.

The use cases in which DL-based AVI is applied can be structured hierarchically. All of them fall under quality inspection, but they can be subdivided into damage detection, completeness check, and others, the last of which contains use cases like plant disease detection, food contamination detection, and indirect wear and vibration estimation. The damage detection category features one additional sub-use-case that occurs frequently: crack detection.

We analyzed the datasets utilized in AVI regarding their size distributions as well as the uniformity of their class distributions, which we measure with a balance metric that we propose. In many cases, the dataset parameters required to compute it are reported incompletely or imprecisely. This not only limited our analysis but also makes results less comparable between publications, harder to reproduce, and harder for others to build on, which is detrimental to the progress of AVI overall. In addition, out of the wide selection of 77 open datasets provided by authors, we identified 14 that were utilized more than once to benchmark tasks. We therefore encourage AVI researchers to give more detailed dataset descriptions to improve comparability and to use and improve existing open datasets to advance the field as a whole.
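To make concrete why per-class sample counts need to be reported, the following sketch computes one possible class-balance score from them. It assumes normalized Shannon entropy as the measure; this is an illustrative choice and not necessarily the balance metric proposed in this review, and the function name `class_balance` is hypothetical.

```python
import math


def class_balance(class_counts):
    """Normalized Shannon entropy of a class distribution, in [0, 1].

    1.0 means perfectly uniform classes; values near 0 mean that almost
    all samples belong to a single class. (Assumed measure for illustration.)
    """
    k = len(class_counts)
    total = sum(class_counts)
    if k < 2 or total <= 0:
        raise ValueError("need at least two classes and one sample")
    # Classes with zero samples contribute nothing to the entropy.
    entropy = sum(
        (c / total) * math.log(total / c) for c in class_counts if c > 0
    )
    return entropy / math.log(k)
```

Without the number of samples per class, a score of this kind cannot be computed at all, which is the reporting gap described above.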

We found that AVI can be addressed with classification and localization tasks, both of which come in two forms: binary and multi-class. These four AVI tasks are closely related to the three most common CV tasks: classification AVI tasks are performed by image classification models, while localization tasks are performed by either object detection or segmentation models. In maintenance-oriented AVI use cases, localization methods are prevalent, as they are utilized approximately 77.2% of the time, while classification methods are employed in about 22.8% of instances. In contrast, in manufacturing-focused AVI applications, the distribution is more evenly balanced, with localization accounting for 49.2% and classification for 50.8% of use cases. These discrepancies can be attributed to various environmental factors that govern the distinct operational requirements and objectives of maintenance and manufacturing scenarios. Especially for maintenance, we recommend prioritizing localization-capable methods such as YOLO and Mask RCNN, since their ability to precisely localize and delineate objects of interest is crucial to the effectiveness of AVI for maintenance purposes. If the task can be performed through classification, we recommend ResNet and EfficientNet.
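The relation between the four AVI tasks, the three CV tasks, and the recommended model families can be summarized as a small lookup. This is only a sketch: the dictionary layout and the helper name `describe_avi_task` are hypothetical, and treating exactly two classes as the binary form is an assumption.

```python
# CV tasks that can solve each kind of AVI task (as stated in the text above).
CV_TASKS = {
    "classification": ("image classification",),
    "localization": ("object detection", "segmentation"),
}

# Model families recommended in the text above for each kind of AVI task.
SUGGESTED_MODELS = {
    "classification": ("ResNet", "EfficientNet"),
    "localization": ("YOLO", "Mask RCNN"),
}


def describe_avi_task(kind, num_classes):
    """Name the AVI task and list matching CV tasks and suggested models."""
    if kind not in CV_TASKS:
        raise ValueError(f"unknown AVI task kind: {kind!r}")
    if num_classes < 2:
        raise ValueError("an AVI task needs at least two classes")
    # Assumption: two classes (e.g., defect / no defect) is the binary form.
    form = "binary" if num_classes == 2 else "multi-class"
    return {
        "avi_task": f"{form} {kind}",
        "cv_tasks": CV_TASKS[kind],
        "suggested_models": SUGGESTED_MODELS[kind],
    }
```

For example, `describe_avi_task("localization", 5)` describes a multi-class localization task that can be approached with object detection or segmentation models.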

We also compared the SOTA in AVI applications with the advances in academic CV research. We identified a time gap of two to three years between the first proposal of a model and its transfer to an industrial AVI application. The first publications utilizing vision transformer models appeared at the lower bound of this gap in the first quarter of 2022, and more followed over the course of the year. Since their results are in the upper performance range of their respective AVI use cases, and much effort is being put into reducing their hardware requirements, we expect them to become the standard in the future. A more thorough investigation of vision transformers for classification, and of attention-enhanced CNNs, DDETR, and custom transformer models for localization, across a wider range of use cases could show whether these models live up to the potential they have shown in their small number of applications so far.

This review paper is not without limitations, which should be acknowledged in order to fully assess its scope and implications. The first limitation concerns the exclusive use of 2D image data. While this choice was motivated by broad applicability, it inadvertently limits the integration of more sophisticated imaging technologies. Nevertheless, it is important to emphasize that input signals other than traditional RGB images limit the effectiveness of domain adaptation techniques, e.g., transfer learning, due to a more pronounced domain gap. Another limitation of our literature review is the concise treatment of the models and training methods used in the reviewed publications. Given the extensive publication corpus considered, a detailed exposition of each model and training methodology would have distorted the research scope that we wanted to address. It should be noted that all of the included literature sources are open-access, allowing interested readers unrestricted access to the publications themselves, where more detailed descriptions of these aspects can be found.

These limitations provide avenues for future research to delve deeper into the topics addressed herein and extend the knowledge base in the field. Therefore, a future addition to our work will look at other input sensors for AVI. For example, an interesting research question is to what extent approaches used for 2D images can be transferred to 3D images containing depth information or to other sensor outputs, such as point clouds.

Another direction for future research is to investigate the time gap between academic research and industrial applications, which we assume is not limited to AVI. The reasons are probably manifold, but is it possible to reduce the identified gap of two to three years? And what is necessary to reduce the gap?

We expect vision transformers to become the models of choice for AVI applications. However, most publications focused on fine-tuning pretrained models in a supervised manner, ignoring their strong self-supervised learning capabilities. These have been demonstrated on benchmark datasets for specific tasks, such as object detection, as well as for learning more general representations transferable to a multitude of tasks. From our point of view, the transfer of self-supervised learning to AVI applications is a research path worth devoting more attention to in the future, especially because the available datasets for industrial applications are much smaller than the benchmark datasets.

Author Contributions

Conceptualization, N.H. and M.A.G.; Methodology, N.H. and M.A.G.; Resources, T.M.; Data Curation, N.H., M.A.G., K.A. and F.H.; Writing—Original Draft Preparation, N.H., M.A.G., K.A. and F.H.; Writing—Review, R.M. and T.M.; Writing—Editing, N.H. and M.A.G.; Visualization, N.H. and M.A.G.; Supervision, T.M.; Project Administration, R.M.; Funding Acquisition, R.M. and T.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Federal Ministry for Digital and Transport in the program “future rail freight transport” under grant number 53T20011UW.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AVI | Automated visual inspection |
| DL | Deep learning |
| CNN | Convolutional neural network |
| MS COCO | Microsoft common objects in context (object detection) dataset |
| CV | Computer vision |
| DETR | Detection transformer [267] |
| DDETR | Deformable detection transformer [290] |
| FCN | Fully convolutional neural network (semantic segmentation model) [251] |
| FCOS | Fully convolutional one-stage object detection (model) [284] |
| FLOPS | Floating-point operations per second |
| FN(R) | False-negative rate |
| FP(R) | False-positive rate |
| FPS | Frames per second |
| GAN | Generative adversarial network |
| ILSVRC 2012/ImageNet | ImageNet Large Scale Visual Recognition Challenge 2012 |
| LiDAR | Light detection and ranging, 3D laser scanning method |
| LSTM | Long short-term memory cell (recurrent neural network variant) |
| mAP | Mean average precision (common object detection performance metric) |
| MIM | Masked image modeling |
| MLP | Multi-layer perceptron (network) |
| NLP | Natural language processing |
| Pascal VOC | Pascal visual object classes (object detection dataset) |
| ResNet | Residual Network [67] |
| RCNN | Regional convolutional neural network [253] |
| SOTA | State of the art |
| SSD | Single-shot detector [252] |
| SVM | Support vector machine |
| Swin | Shifted windows transformer [266] |
| TN(R) | True-negative rate |
| TP(R) | True-positive rate |
| VI | Visual inspection |
| ViT | Specific architecture of a vision transformer model published in [265] |
| VGG | Visual geometry group (model) [250] |
| WoS | Web of Science |
| YOLO | You only look once (object detection model) [68] |

Appendix A

Table A1. Detailed listing of included and excluded Web of Science categories.

| Web of Science Category | Excluded | Web of Science Category | Excluded |
|---|---|---|---|
| Engineering Electrical Electronic | | Food Science Technology | |
| Instruments Instrumentation | | Mathematics Applied | |
| Computer Science Information Systems | | Medical Informatics | x |
| Engineering Multidisciplinary | | Nanoscience Nanotechnology | |
| Materials Science Multidisciplinary | | Nuclear Science Technology | x |
| Chemistry Analytical | | Oceanography | x |
| Telecommunications | | Operations Research Management Science | x |
| Physics Applied | | Psychology Experimental | x |
| Engineering Civil | | Thermodynamics | x |
| Chemistry Multidisciplinary | | Agricultural Engineering | |
| Imaging Science Photographic Technology | | Agriculture Dairy Animal Science | x |
| Remote Sensing | x | Audiology Speech Language Pathology | x |
| Environmental Sciences | | Behavioral Sciences | x |
| Computer Science Interdisciplinary Applications | | Biochemistry Molecular Biology | x |
| Geosciences Multidisciplinary | x | Ecology | x |
| Construction Building Technology | | Engineering Industrial | |
| Engineering Mechanical | | Health Care Sciences Services | x |
| Multidisciplinary Sciences | | Materials Science Textiles | |
| Radiology Nuclear Medicine Medical Imaging | x | Medicine Research Experimental | x |
| Engineering Biomedical | x | Pathology | x |
| Astronomy Astrophysics | x | Physics Mathematical | x |
| Computer Science Artificial Intelligence | | Physics Multidisciplinary | |
| Mechanics | | Physics Particles Fields | x |
| Transportation Science Technology | | Physiology | x |
| Neurosciences | x | Quantum Science Technology | x |
| Energy Fuels | | Respiratory System | x |
| Acoustics | x | Robotics | |
| Oncology | x | Surgery | x |
| Engineering Manufacturing | | Architecture | |
| Mathematical Computational Biology | x | Chemistry Medicinal | x |
| Mathematics Interdisciplinary Applications | | Dentistry Oral Surgery Medicine | x |
| Metallurgy Metallurgical Engineering | | Dermatology | x |
| Optics | x | Developmental Biology | x |
| Green Sustainable Science Technology | | Engineering Environmental | |
| Biochemical Research Methods | x | Fisheries | x |
| Computer Science Software Engineering | | Forestry | x |
| Automation Control Systems | | Gastroenterology Hepatology | x |
| Computer Science Theory Methods | x | Genetics Heredity | x |
| Computer Science Hardware Architecture | x | Geriatrics Gerontology | x |
| Geography Physical | x | Immunology | x |
| Agriculture Multidisciplinary | | Infectious Diseases | x |
| Chemistry Physical | x | Marine Freshwater Biology | x |
| Engineering Aerospace | | Materials Science Biomaterials | |
| Environmental Studies | x | Medical Laboratory Technology | x |
| Materials Science Composites | | Obstetrics Gynecology | x |
| Medicine General Internal | x | Otorhinolaryngology | x |
| Physics Condensed Matter | x | Paleontology | x |
| Rehabilitation | x | Parasitology | x |
| Biotechnology Applied Microbiology | x | Peripheral Vascular Disease | x |
| Clinical Neurology | x | Pharmacology Pharmacy | x |
| Engineering Ocean | | Physics Fluids Plasmas | x |
| Materials Science Characterization Testing | | Physics Nuclear | x |
| Meteorology Atmospheric Sciences | x | Plant Sciences | x |
| Water Resources | x | Psychiatry | x |
| Geochemistry Geophysics | x | Public Environmental Occupational Health | x |
| Mathematics | | Sport Sciences | x |
| Neuroimaging | x | Transportation | |
| Agronomy | | Tropical Medicine | x |
| Cell Biology | x | Veterinary Sciences | x |
| Engineering Marine | | | |

References

- Drury, C.G.; Watson, J. Good practices in visual inspection. In Human Factors in Aviation Maintenance-Phase Nine, Progress Report, FAA/Human Factors in Aviation Maintenance; 2002.

- Steger, C.; Ulrich, M.; Wiedemann, C. Machine Vision Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Sheehan, J.J.; Drury, C.G. The analysis of industrial inspection. Appl. Ergon. 1971, 2, 74–78.

- Chiang, H.Q.; Hwang, S.L. Human performance in visual inspection and defect diagnosis tasks: A case study. Int. J. Ind. Ergon. 1988, 2, 235–241.