Highlights

What are the main findings?

- SAUCF achieves secure voice-driven mission planning in about 105 s with a 97.5% safety classification accuracy and 0.52 m GPS trajectory precision.

- The decision-tree based framework demonstrated safety compliance across simulation and field trials with continuous human-in-the-loop supervision.

What are the implications of the main findings?

- Eliminates complex mission planning, enabling non-expert agricultural operators to control UAS through natural language commands.

- Provides a validated framework integrating biometric security, LLM-based planning, and operator oversight for safe agricultural UAS deployment.

Abstract

Precision agriculture increasingly recognizes the transformative potential of unmanned aerial systems (UASs) for crop monitoring and field assessment, yet research consistently highlights significant usability barriers as the main constraints to widespread adoption. Complex mission planning processes, including detailed flight plan creation and waypoint management, pose substantial technical challenges that mainly affect non-expert operators. Farmers and their teams generally prefer user-friendly, straightforward tools, as evidenced by the rapid adoption of GPS guidance systems, which underscores the need for simpler mission planning in UAS operations. To enhance accessibility and safety in UAS control, especially for non-expert operators in agriculture and related fields, we propose a Secure Agricultural UAS Control Framework (SAUCF): a comprehensive system for natural-language-driven UAS mission management with integrated dual-factor biometric authentication. The framework converts spoken user instructions into executable flight plans by leveraging a language-model-powered mission planner that interprets transcribed voice commands and generates context-aware operational directives, including takeoff, location monitoring, return-to-home, and landing operations. Mission orchestration is performed through a large language model (LLM) agent, coupled with a human-in-the-loop supervision mechanism that enables operators to review, adjust, or confirm mission plans before deployment. Additionally, SAUCF offers a manual override feature, allowing users to assume direct control or interrupt missions at any stage, ensuring safety and adaptability in dynamic environments. Proof-of-concept demonstrations on a UAS platform with on-board computing validated reliable speech-to-text transcription, biometric verification via voice matching and face authentication, and effective Sim2Real transfer of natural-language-driven mission plans from simulation environments to physical UAS operations. 
Initial evaluations showed that SAUCF reduced mission planning time, minimized command errors, and simplified complex multi-objective workflows compared to traditional waypoint-based tools, though comprehensive field validation remains necessary to confirm these preliminary findings. The integration of natural-language-based interaction, real-time identity verification, human-in-the-loop LLM orchestration, and manual override capabilities allows SAUCF to significantly lower the technical barrier to UAS operation while ensuring mission security, operational reliability, and operator agency in real-world conditions. These findings lay the groundwork for systematic field trials and suggest that prioritizing ease of operation in mission planning can drive broader deployment of UAS technologies.

1. Introduction

Unmanned aerial systems (UASs) are rapidly reshaping precision agriculture by providing high-resolution imagery that enables faster field mapping and timely site-specific decision support. Surveys and empirical studies have shown UASs’ effectiveness in yield prediction, crop monitoring, weed detection, and other precision applications that can reduce input use while maintaining or increasing yields [1,2,3]. However, despite their technical advantages, practical adoption of UASs in agriculture remains constrained by usability and operational barriers. Typical workflows require manual waypoint programming, sensor configuration, and toolchain integration that place high cognitive and technical demands on non-expert operators. At the same time, safety and security concerns, including unauthorized access and privacy risks associated with imaging data, pose legal and operational obstacles that limit routine deployment in agricultural environments [2,4,5]. Recent advances in automatic speech recognition (ASR) provide reliable transcription of spoken directives, while large language model (LLM) based planning frameworks facilitate intent identification and synthesis of high-level missions into executable plans. Frameworks that translate domain-specific language into structured mission plans enable operators to express mission intent in agricultural terms (e.g., “monitor the north field for irrigation stress”) rather than specifying low-level flight parameters. Parallel progress in mission planning frameworks has enabled field mapping from semantic goals to concrete trajectories and sensing actions for UAS missions [6,7,8]. However, despite these advances, current systems do not provide an end-to-end pipeline that allows agricultural operators to issue spoken commands and directly obtain fully specified UAS missions without manual waypoint programming or toolchain configuration. 
This gap motivates our development of a unified, voice-driven, LLM-based mission control system that converts natural agricultural directives into executable plans for the UAS. In this work we present the Secure Agricultural UAS Control Framework (SAUCF), a voice-driven framework that couples LLM-based mission planning with dual-factor biometric authentication and human-in-the-loop supervision to produce secure, verifiable, and operator-authorized missions. SAUCF integrates ASR, intent parsing with LLMs, decision-tree-based mission execution, continuous telemetry and health monitoring, and operator override capability. We validate SAUCF through simulation and simulation-to-reality (sim2real) experiments on UAS platforms, showing that natural-language directives are translated into precise, executable trajectories while preserving operator control and improving situational safety [9,10]. Recent research has demonstrated the feasibility of using large language models to interpret high-level task descriptions and produce robust action plans. LLMs’ few-shot and instruction-following properties facilitate mapping from natural language to structured mission representations, enabling rapid prototyping of voice-driven control systems [7]. Work focusing on system-level resilience and security emphasizes the importance of authentication, anomaly detection, and secure communication channels for real-world deployment of autonomous aerial systems [5,11]. SAUCF extends this direction by explicitly combining biometric authentication and access control with LLM-driven plan generation and validated mission execution for agricultural tasks, including LLM-based safety filtering through few-shot SAFE/UNSAFE command classification. A body of work has explored natural-language interaction for aerial platforms and agricultural robots without centering on security. 
Domain-specific ASR and command grammars can improve command recognition in noisy outdoor settings, and several frameworks demonstrate robust mapping from spoken commands to UAS behaviors in simulation and controlled field trials [8,12]. These approaches highlight the practical gains from language-first interfaces but typically omit integrated authentication or access-control mechanisms that are essential for operational safety in open environments. Bridging sim-to-real gaps is crucial for deploying perception and planning modules trained in simulation into safety-critical agricultural operations. Prior studies in remote sensing and perception report domain-adaptation and digital-twin strategies to improve real-world transfer of models for detection and mapping tasks [3,13]. SAUCF leverages sim-validated mission planning pipelines and real-world telemetry checks to improve trajectory fidelity during sim2real deployment, reducing the risk of unexpected behavior when moving from virtual validation to field operation.

Objectives of SAUCF

To our knowledge, the existing works do not simultaneously integrate: (1) voice-driven, LLM-based mission control for agricultural UASs; (2) dual-factor, biometric operator authentication and access control; and (3) simulation-validated mission execution with continuous telemetry and an explicit human-in-the-loop override. SAUCF unifies these elements. The core objectives of this study are:

- Develop a secure and modular SAUCF architecture that converts spoken instructions into decision-tree-encoded UAS survey missions, thereby reducing operational barriers associated with manual mission setup while enforcing dual-factor biometric authentication.

- Develop and verify an integrated pipeline combining ASR and LLM-based mission planning and mission execution with continuous telemetry, LLM-based safety filtering through few-shot SAFE/UNSAFE command classification, and explicit human-in-the-loop override to ensure secure and reliable mission execution.

- Perform a simulation-backed assessment of sim2real transfer to a field UAS platform, quantifying safety compliance and the applicability of natural-language-to-action pipelines.

2. Methodology

Section 2 presents the systematic design, implementation, and evaluation procedure for the Secure Agricultural UAS Control Framework (SAUCF). The methodology establishes a comprehensive approach for developing a voice-driven, secure, and human-in-the-loop agricultural UAS system that emphasizes operator safety, mission reliability, and ease of use. We first give an architectural overview and then detail each pipeline module, their interfaces, and how they are integrated for safe, voice-driven agricultural missions.

2.1. System Architecture and Design Philosophy

The SAUCF is a modular, human-centered system that transforms natural language voice commands into executable agricultural UAS missions while maintaining stringent security and safety protocols. The framework addresses three critical challenges in agricultural UAS deployment:

- (i) reducing technical complexity for non-expert operators;

- (ii) ensuring secure access control and mission auditability;

- (iii) preserving human authority through comprehensive safety oversight, including ensuring reliable sim-to-real mission execution.

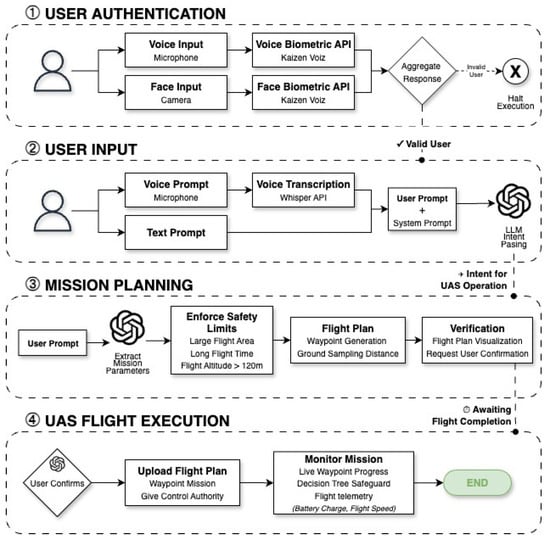

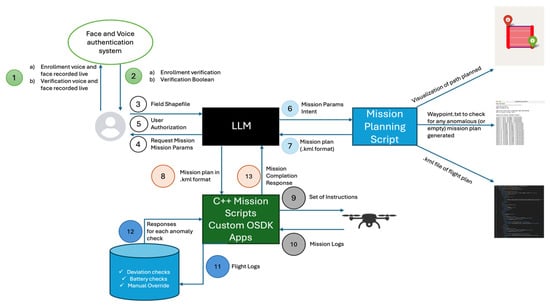

The system architecture, illustrated in Figure 1, consists of six sequential modules operating in a secure pipeline: multi-modal biometric authentication, voice command processing with automatic speech recognition (ASR), natural language intent parsing, automated mission planning, mission visualization and approval, and supervised onboard execution with telemetry monitoring.

Figure 1.

Secure Agricultural UAS Control Framework (SAUCF) Architecture.

2.2. Security and Authentication Framework

SAUCF implements a dual-factor biometric authentication system to address the safety aspects of agricultural UAS operations where unauthorized access can result in crop damage, privacy breaches, and legal liabilities. The framework incorporates voice and face authentication modules provided by Kaizen Voiz (Edison, NJ, USA). These modules are integrated into our system to enable secure operator verification. Our contribution lies in embedding these components within the framework and implementing enrollment and verification functionalities accessible through the system interface.

2.3. Natural Language Processing Pipeline

Natural language removes the need for complex programming or waypoint management expertise and lets operators express missions in agricultural terminology (e.g., “monitor the field”). This improves usability and reduces operator error.

2.3.1. Voice Command Acquisition and Speech Recognition

The first step is voice-based input of mission directives. A user speaks a mission command (e.g., “I want you to perform the mapping mission for me”) into a microphone. The audio is digitized and processed by the Whisper ASR system, an end-to-end encoder-decoder transformer model for speech-to-text conversion [14]. Whisper transcribes the audio stream into text with high accuracy even in varied conditions. In our implementation, Whisper runs on a GPU-equipped machine (the Jetson Nano’s GPU) to allow for low-latency transcription. The integration boundary between ASR and language understanding is the raw text output: once ASR completes, the recognized text is forwarded to the intent-parsing module.

2.3.2. Large Language Model Integration for Intent Parsing

Mission interpretation leverages GPT-4 to convert natural language commands (e.g., “monitor the north field at 50 m altitude”) into structured mission parameters. The LLM integration uses function-calling mechanisms that constrain outputs to predefined agricultural operations. Few-shot prompting keeps the model within the agricultural domain context, guiding it to identify appropriate mission parameters and to reject potentially hazardous commands, which mitigates hallucinated or unsafe flight directives.
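As an illustration of how function calling can constrain LLM output to predefined operations, the following minimal sketch defines a JSON-Schema-style tool description and checks the arguments returned by the model before they reach the planner. The tool name `plan_survey_mission`, its parameter names, and the 5 to 50 m altitude bounds are hypothetical and chosen for illustration only; the actual SAUCF function definitions are not specified here.

```python
import json

# Hypothetical tool definition; names and limits are illustrative assumptions,
# not the function set used by SAUCF.
PLAN_SURVEY_MISSION = {
    "name": "plan_survey_mission",
    "description": "Plan a survey flight over a named field.",
    "parameters": {
        "type": "object",
        "properties": {
            "field_name": {"type": "string"},
            "altitude_m": {"type": "number", "minimum": 5, "maximum": 50},
            "pattern": {"type": "string", "enum": ["lawnmower", "spiral"]},
        },
        "required": ["field_name", "altitude_m", "pattern"],
    },
}


def validate_call(arguments_json, schema=PLAN_SURVEY_MISSION):
    """Check an LLM-produced JSON argument string against the tool schema.

    Returns (ok, errors), where errors is a list of human-readable messages.
    """
    args = json.loads(arguments_json)
    spec = schema["parameters"]
    errors = []
    for name in spec["required"]:
        if name not in args:
            errors.append(f"missing parameter: {name}")
    for name, value in args.items():
        prop = spec["properties"].get(name)
        if prop is None:
            errors.append(f"unexpected parameter: {name}")
        elif prop["type"] == "number":
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                errors.append(f"{name} must be a number")
            elif not prop["minimum"] <= value <= prop["maximum"]:
                errors.append(f"{name} out of range")
        elif prop["type"] == "string":
            if not isinstance(value, str):
                errors.append(f"{name} must be a string")
            elif "enum" in prop and value not in prop["enum"]:
                errors.append(f"{name} must be one of {prop['enum']}")
    return (not errors, errors)
```

Rejecting a call at this stage, rather than inside the planner, keeps out-of-range or malformed parameters from ever being turned into waypoints.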

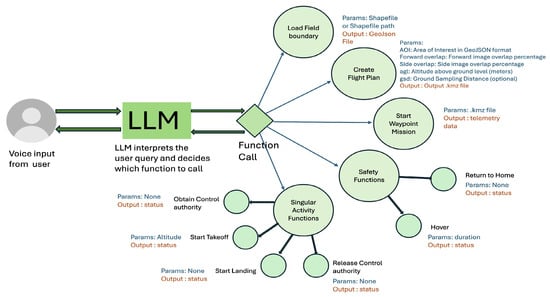

The LLM interacts with operators to confirm operational parameters, obtain field boundaries (coordinates or shapefiles), and specify critical flight variables such as altitude constraints or Ground Sampling Distance (GSD). It also extracts the area and prompts the user if it is not within safe limits. If the area is large (over 4 hectares) or critically large (over 6 hectares), it issues a warning about potential issues like long flight times and asks for confirmation before proceeding. A complete overview of how the LLM uses structured function-calling to translate operator intent into actionable mission commands is shown in Figure 2.

Figure 2.

LLM Function Calling for UAS mission planning and control.
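The area check described above can be sketched as follows. The 4 ha and 6 ha thresholds come from the text; the area computation (a local flat-plane projection followed by the shoelace formula) and the function names are assumptions made for this example and may differ from the deployed implementation.

```python
import math

EARTH_RADIUS_M = 6371000.0
LARGE_HA = 4.0      # "large" threshold stated in the text
CRITICAL_HA = 6.0   # "critically large" threshold stated in the text


def polygon_area_ha(vertices):
    """Approximate area in hectares of a small field polygon.

    vertices: list of (lat, lon) in degrees. The polygon is projected onto a
    local flat plane (equirectangular approximation, adequate for field-sized
    areas), then the shoelace formula is applied.
    """
    lat0 = math.radians(sum(lat for lat, _ in vertices) / len(vertices))
    pts = [
        (EARTH_RADIUS_M * math.radians(lon) * math.cos(lat0),
         EARTH_RADIUS_M * math.radians(lat))
        for lat, lon in vertices
    ]
    twice_area = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0 / 10_000.0  # m^2 -> ha


def area_warning(area_ha):
    """Map an area to the warning level the operator must confirm."""
    if area_ha > CRITICAL_HA:
        return "critical"
    if area_ha > LARGE_HA:
        return "large"
    return "ok"
```

In this sketch, any result other than `"ok"` would trigger the confirmation prompt before planning proceeds.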

2.4. Automated Mission Planning and Path Generation

The framework addresses critical planning challenges in agricultural UAS operations by automating the creation of complex and efficient flight coverage patterns, removing the need for manual waypoint programming. It also incorporates an operator verification step, providing visual confirmation of mission plans before deployment to prevent errors and ensure that all coverage objectives are achieved.

2.4.1. Flight Pattern Optimization

The planning module utilizes the open-source UAS-flight plan Python library (version 0.3.7) [15] to generate efficient coverage patterns from structured mission parameters. The system automatically calculates optimal waypoint sequences using lawnmower grid or spiral patterns over specified polygonal areas, incorporating altitude constraints and image overlap requirements for consistent data collection. The planner outputs GPS waypoint sequences (latitude, longitude, altitude) that ensure complete area coverage while minimizing flight time and battery consumption.
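To make the lawnmower pattern concrete, the sketch below generates a back-and-forth sweep over a rectangular area in local metric coordinates. It does not reproduce the API of the planning library [15]; the function names are hypothetical, and the relation between image footprint, side overlap, and line spacing is the standard one and is assumed here rather than taken from the source.

```python
import math


def line_spacing(footprint_width_m, side_overlap):
    """Distance between adjacent sweep lines for a given side overlap (0..1)."""
    return footprint_width_m * (1.0 - side_overlap)


def lawnmower_waypoints(width_m, height_m, spacing_m, altitude_m):
    """Boustrophedon waypoints (east, north, altitude) covering a rectangle.

    Sweep lines run north-south and alternate direction, so the end of one
    line is adjacent to the start of the next. The last line is clamped to
    the eastern edge so the full width is covered.
    """
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    n_lines = math.ceil(width_m / spacing_m) + 1
    waypoints = []
    for i in range(n_lines):
        x = min(i * spacing_m, width_m)
        ys = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        for y in ys:
            waypoints.append((x, y, altitude_m))
    return waypoints
```

For example, a 100 m by 60 m rectangle with a 50 m footprint and 50% side overlap yields five sweep lines spaced 25 m apart. A real planner would additionally clip the sweep lines to the field polygon and convert the local offsets to latitude and longitude.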

2.4.2. Mission Serialization and Visualization

Computed flight plans are serialized into KML files containing complete mission specifications including waypoints and altitudes. The mission visualization file (.html) provides map-based displays of flight paths overlaid on satellite imagery, enabling operators to verify coverage patterns and identify potential issues before mission deployment. The visualization system allows operators to adjust parameters or abort missions based on visual assessment of automatically generated flight paths.
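A minimal sketch of waypoint serialization to KML and back is given below, using only the Python standard library. The exact KML layout used by SAUCF is not specified, so the single `LineString` placemark, the `relativeToGround` altitude mode, and the function names are assumptions. Note that KML stores coordinates in longitude, latitude, altitude order.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def waypoints_to_kml(waypoints, name="mission"):
    """Serialize (lat, lon, alt_m) waypoints into a KML string."""
    ET.register_namespace("", KML_NS)  # emit KML as the default namespace
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    placemark = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
    ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
    line = ET.SubElement(placemark, f"{{{KML_NS}}}LineString")
    ET.SubElement(line, f"{{{KML_NS}}}altitudeMode").text = "relativeToGround"
    # KML coordinate tuples are lon,lat,alt separated by whitespace.
    ET.SubElement(line, f"{{{KML_NS}}}coordinates").text = " ".join(
        f"{lon:.7f},{lat:.7f},{alt:.1f}" for lat, lon, alt in waypoints
    )
    return ET.tostring(kml, encoding="unicode")


def kml_to_waypoints(kml_text):
    """Parse the first <coordinates> element back into (lat, lon, alt_m)."""
    root = ET.fromstring(kml_text)
    coords = root.find(f".//{{{KML_NS}}}coordinates").text.split()
    waypoints = []
    for item in coords:
        lon, lat, alt = (float(v) for v in item.split(","))
        waypoints.append((lat, lon, alt))
    return waypoints
```

Reading the file back with the same parser that the executor uses is a simple way to confirm that the plan shown to the operator is the plan that will be flown.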

2.5. Onboard Execution and Safety Monitoring

The framework incorporates comprehensive execution monitoring to ensure safe and reliable flight operations under continuous operator oversight. It enables real-time decision-making in response to changing conditions or anomalies, preserves human authority for intervention, and maintains system reliability through constant tracking of critical flight parameters to prevent mission failure or equipment damage.

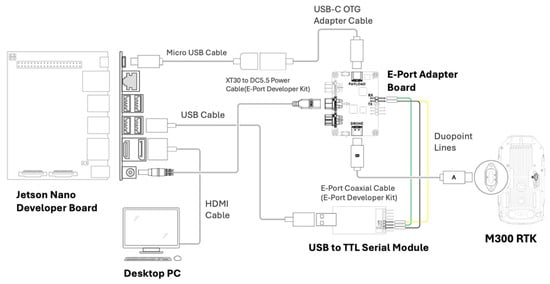

2.5.1. Companion Computer Integration

Mission execution runs on an NVIDIA Jetson Nano (Santa Clara, CA, USA) companion computer integrated with the UAS platform via DJI’s Onboard SDK (OSDK v4.0.0). The Jetson connects to the flight controller through a USB-serial adapter (FT232 chip) via DJI’s E-port development interface, enabling programmatic flight control and real-time mission orchestration (Figure 3). Once the mission is approved, execution proceeds on the UAS: the SDK provides a serial API to the flight controller, allowing the Jetson Nano to execute high-level commands (takeoff, land, waypoint navigation, etc.).

Figure 3.

Jetson Nano integrated with DJI M300 RTK. Source: [16].

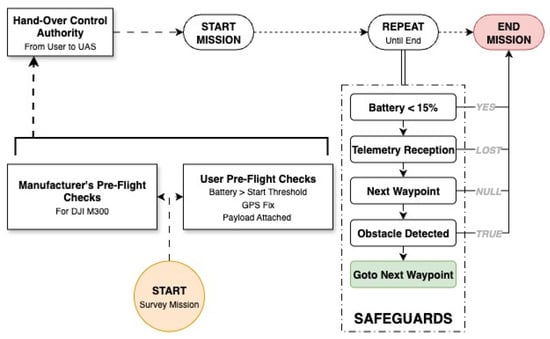

2.5.2. Decision Tree Based Safeguards

Mission execution follows a decision-tree architecture (Figure 4) that sequences on-board actions while enforcing comprehensive safety constraints. Execution begins with explicit operator authorization and pre-flight checks (battery, GPS fix, payload status) and requires human-in-the-loop confirmation of mission parameters before authority is granted. Once cleared, the system performs a monitored takeoff to the operator-specified altitude and iterates through the KML-defined waypoint list generated during planning. At each waypoint the tree enforces telemetry validation, battery thresholds (30% abort threshold), and safety guards (built-in obstacle triggers); the navigation primitive computes local NED offsets from GPS signals and issues offset moves until arrival within a defined tolerance. Navigation failures are treated as recoverable contingencies—reactive subtrees either re-plan, skip the problematic waypoint, or invoke return-to-home/monitored landing sequences depending on the fault condition. Throughout the mission, the framework logs telemetry for audit and post-flight analysis, preserves operator override at all times, and releases control authority only after a monitored landing.

Figure 4.

Decision Tree for mission execution with integrated safeguards and data logging.
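The per-waypoint logic of the decision tree can be illustrated with a short sketch. It computes a flat-earth north-east-down (NED) offset from the current to the target GPS position and selects the next action from the battery level, GPS status, and remaining distance. The 30% battery threshold is taken from the text; the 1.0 m arrival tolerance, the `"hold"` action on GPS loss, the treatment of exactly 30% as an abort, and all function names are assumptions for illustration and do not represent the OSDK API.

```python
import math

EARTH_RADIUS_M = 6371000.0
BATTERY_ABORT_PCT = 30.0    # abort threshold stated in the text
ARRIVAL_TOLERANCE_M = 1.0   # assumed arrival tolerance


def ned_offset(cur_lat, cur_lon, cur_alt, tgt_lat, tgt_lon, tgt_alt):
    """Flat-earth (north, east, down) offset in metres from current to target.

    Valid for the short distances between survey waypoints. "Down" is
    positive towards the ground, so climbing gives a negative value.
    """
    north = math.radians(tgt_lat - cur_lat) * EARTH_RADIUS_M
    east = (math.radians(tgt_lon - cur_lon) * EARTH_RADIUS_M
            * math.cos(math.radians(cur_lat)))
    down = cur_alt - tgt_alt
    return north, east, down


def next_action(battery_pct, gps_ok, offset):
    """Choose the next primitive; safety checks are evaluated first."""
    if battery_pct <= BATTERY_ABORT_PCT:
        return "return_to_home"
    if not gps_ok:
        return "hold"
    north, east, down = offset
    distance = math.sqrt(north ** 2 + east ** 2 + down ** 2)
    if distance <= ARRIVAL_TOLERANCE_M:
        return "next_waypoint"
    return "move_by_offset"
```

Evaluating the battery and GPS guards before the navigation step mirrors the ordering described above, in which safety constraints are enforced at each waypoint before any further motion is commanded.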

2.5.3. Human-in-the-Loop Safety Integration

Safety enforcement maintains operator authority throughout mission execution. The system requires manual confirmation before UAS arming and takeoff and provides continuous telemetry monitoring (GPS position, altitude, battery status, flight mode) via real-time data streams. Emergency manual override capabilities through RC-based controls ensure immediate operator intervention when required.

Real-time telemetry data is transmitted from the Jetson to ground control stations via Wi-Fi, enabling live mission monitoring and immediate response to system anomalies.

All operator interventions and system responses are logged for post-mission analysis and regulatory compliance.

The onboard execution and monitoring system ensures autonomous mission execution by providing reliable waypoint navigation and mission completion under SDK control.

It delivers real-time status updates through continuous telemetry streaming, enabling operator situational awareness throughout the mission. The system includes emergency response capabilities such as automatic return-to-home in the event of system anomalies while also preserving manual override authority through RC-based controls for immediate operator intervention. Additionally, it maintains comprehensive logging of the entire mission, recording both operator interventions and system responses for post-mission analysis and review.

3. Experimental Settings

The SAUCF requires a hybrid hardware-software architecture to effectively meet the demands of real-time processing, including voice recognition, LLM integration, and flight control, which necessitate significant computational resources. This architecture facilitates seamless multi-modal integration by coordinating voice input, visual authentication, and UAS control systems. It ensures safety-critical operations through reliable hardware-software interfaces essential for mission-critical agricultural UAS tasks. Additionally, the framework is designed with extensibility in mind, featuring a modular structure that enables future enhancements and compatibility across different platforms.

3.1. Experimental Environment

The deployed hardware configuration is as follows (Table 1):

Table 1.

Hardware configuration used in the experimental environment.

The software stack runs on Ubuntu 18.04 LTS on the Jetson, and adopts a componentised design that isolates perception, language, planning, and control responsibilities (Table 2):

Table 2.

Software stack used in the SAUCF.

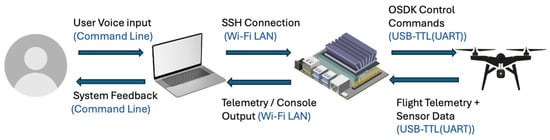

Inter-component communication uses standard, well-understood formats to maximise modularity and testability (Figure 5). These formats are summarised in Table 3:

Figure 5.

Detailed Architecture of System Integration and Communication Protocols.

Table 3.

Inter-component communication formats used within SAUCF.

The overall control, telemetry, and network transmission protocols between the operator, ground system, Jetson companion computer, and the UAS are illustrated in Figure 6.

Figure 6.

UAS Control and Telemetry Data and Network transmission Protocols.

The integrated hardware-software architecture delivers real-time processing capability through GPU-accelerated voice recognition and mission planning, ensuring rapid and efficient operation. It provides reliable flight control via direct integration with commercial UAS platforms using established SDKs while maintaining secure authentication through multi-modal biometric verification with onboard sensors. Designed for flexibility, the extensible framework supports the addition of new sensors, platforms, and agricultural applications. Furthermore, it incorporates custom agricultural functionality through specialized OSDK applications specifically tailored for monitoring and mapping missions in precision agriculture.

3.2. Evaluation Metrics

Mission validation uses the DJI Assistant 2 (Enterprise Edition) simulation environment for initial testing, with simulation outputs integrated through the DJI OSDK parser to validate parsing accuracy and behavioral consistency. Validated mission plans are subsequently executed on the physical UAS in field trials to confirm simulation-to-field alignment. System performance is assessed with the following evaluation criteria:

- Flight-Control Unit Tests: Unit tests were used to validate the correctness of control authority acquisition and release, takeoff, landing, and a complete three-waypoint micro-mission sequence.

- Altitude-Robust Mission Execution: Survey missions were executed at 5 m, 10 m, 20 m, 30 m, and 50 m above ground level to evaluate stability, waypoint accuracy, and safety-guard compliance across varying altitudes.

- Battery-Safety Compliance: Low-battery tests checked whether the system correctly detected when the battery was running low and automatically initiated a return-to-home (RTH) as expected.

- Mission Planning Time: Time elapsed from voice input to deployable mission plan.

- Decision Tree Compliance: Validation of executed behaviors against predefined sequences.

- Operational Safety Classification: Ability of the LLM to correctly classify mission commands as SAFE or UNSAFE before intent parsing.

- Authentication Reliability: Biometric system performance measured via the false acceptance rate (FAR) and false rejection rate (FRR) across modalities.

- Execution Consistency Over Varying Survey Altitudes: GPS trajectory comparison between planned and executed GPS coordinates over varying altitudes.

The evaluation framework ensures operational reliability while maintaining the flexibility required for diverse agricultural mission profiles.

4. Experimental Results and Analysis

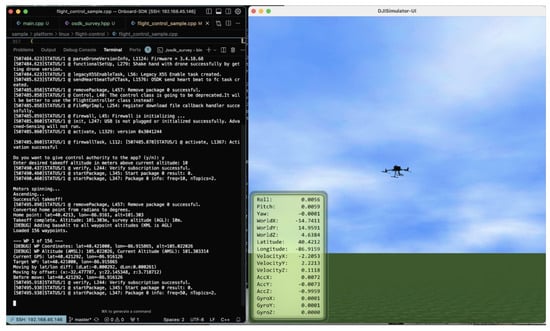

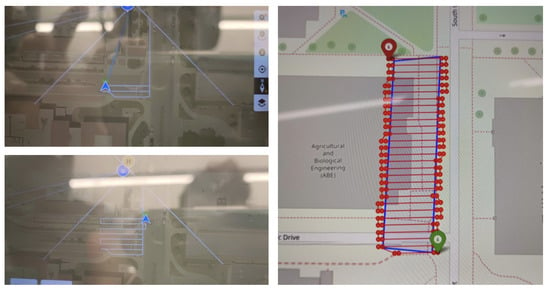

We evaluated the Secure Agricultural UAS Control Framework (SAUCF) against the metrics outlined in Section 3.2. Experimental validation included both simulation trials in DJI Assistant 2 and field deployments (Figure A1) using the DJI Matrice 300 RTK platform (Figure A2). The results are summarized below.

4.1. Unit Testing of Core Flight Actions

We ran a set of unit and integration tests in both the DJI Assistant 2 simulator (Figure A3) and in real field flights to check whether the basic flight actions worked reliably and could support the higher-level LLM-generated missions.

Flight-Control Unit Tests: The system successfully obtained and released control authority in all trials and performed monitored vertical takeoff and autonomous landing without fault.

We tested a small mission with three waypoints to check if the drone followed them in the right order, kept the correct altitude, and reached each point accurately. The drone completed all three segments correctly in every test, showing that the waypoint following works reliably.

Altitude-varied survey missions: Survey flights at 5 m, 10 m, 20 m, 30 m, and 50 m above ground level demonstrated stable path-following and coverage-pattern integrity at all altitudes tested. No altitude-induced failures, oscillations, or significant deviations were observed.

Battery-Safety Compliance: Low-battery tests showed that the system correctly detected when the battery was running low and automatically triggered Return-to-Home (RTH). This confirms that the safety logic responds properly when power is limited.

All unit and integration tests passed in both simulation and field trials, showing that the basic flight functions are reliable and provide a strong foundation for SAUCF’s higher-level mission planning and execution.

4.2. Mission Planning Time

The complete workflow comprised two major timing components: biometric verification and mission planning. Voice verification averaged 17.5 s and face verification averaged 14.7 s, resulting in a total authentication duration of approximately 32.2 s. This ensured secure operator validation before proceeding to mission execution.

Following verification, the mission planning stage—from the initial natural language input to the generation of a deployable waypoint set—required 72.8 s on average in our tests. This included processing the uploaded shapefile, extracting field boundaries, and computing optimized flight paths.

Thus, the overall time from initial command input to finalized waypoint plan was about 105 s (32.2 s for authentication plus 72.8 s for planning), demonstrating the system’s capability to provide secure and operator-in-the-loop agricultural mission planning in near-real time.

4.3. Decision Tree Compliance

All executed missions adhered to the predefined decision-tree structure, with 100% compliance across simulation and field trials. Safety checks (battery, GPS lock, obstacle detection) were consistently enforced at each node, validating the reliability of the execution framework.

4.4. Operational Safety Classification

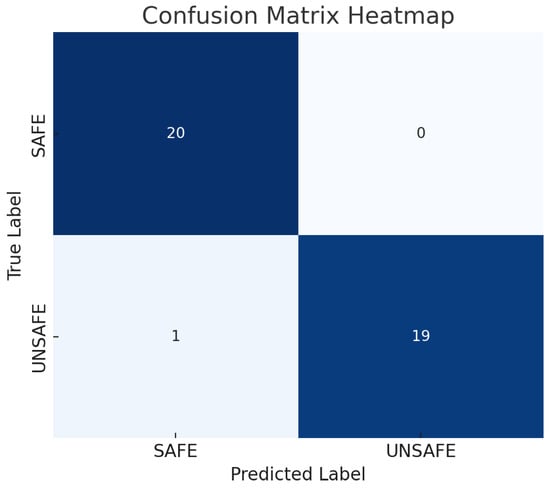

To enhance operational safety, the LLM was configured with a few-shot safety classification layer capable of distinguishing between SAFE and UNSAFE mission commands prior to intent parsing. Across 40 representative commands, the classifier achieved an overall 97.5% accuracy (95.2% precision and 100% recall for SAFE, and 100% precision and 95.0% recall for UNSAFE), as shown in the confusion matrix (Figure 7).

Figure 7.

Confusion matrix for the safety classification layer over a test set of 40 representative commands (20 SAFE, 20 UNSAFE).

This additional security layer prevents unsafe or unauthorized operations by ensuring that the system not only interprets intent correctly but also predicts whether the mission should start at all. In practice, this provides robust protection against potentially harmful or legally restricted UAS deployments, complementing the operator confirmation loop and reinforcing the reliability of natural language mission control.
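The reported metrics can be reproduced from a 2 × 2 confusion matrix. The counts used below (all 20 SAFE commands accepted, 19 of 20 UNSAFE commands rejected, one UNSAFE command accepted) are inferred from the reported accuracy, precision, and recall rather than read from Figure 7, and the helper name is hypothetical.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, and recall for one class treated as positive."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }


# SAFE as the positive class: 20 SAFE accepted, 1 UNSAFE wrongly accepted.
safe = binary_metrics(tp=20, fp=1, fn=0, tn=19)

# UNSAFE as the positive class: 19 UNSAFE rejected, 1 UNSAFE missed.
unsafe = binary_metrics(tp=19, fp=0, fn=1, tn=20)

print(f"accuracy         {safe['accuracy']:.3f}")
print(f"SAFE   precision {safe['precision']:.3f}  recall {safe['recall']:.3f}")
print(f"UNSAFE precision {unsafe['precision']:.3f}  recall {unsafe['recall']:.3f}")
```

Under these counts, the single error is an UNSAFE command classified as SAFE, which is the more consequential error type for a protective filter and is the case the operator confirmation loop is intended to catch.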

4.5. Authentication Reliability

The multi-modal biometric authentication system was evaluated on a cohort of 10 enrolled users and 10 non-enrolled users. As expected, none of the non-enrolled users were granted access (a false acceptance rate, FAR, of 0%), confirming the system’s ability to reject unauthorized attempts. Among the enrolled users, 8 out of 10 were successfully verified, corresponding to an effective acceptance rate of 80% (a false rejection rate, FRR, of 20%).

An interesting observation was that both the voice and face verification modules showed sensitivity to background interference. In several cases, ambient speech or the presence of another person in the camera’s field of view reduced accuracy, leading to false rejections. This highlights the importance of controlled input conditions or the incorporation of noise-robust models to further improve reliability in real-world agricultural environments.
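For reference, the false acceptance rate (FAR) and false rejection rate (FRR) implied by these counts can be computed as below. The sketch assumes one verification attempt per user, which the text does not state explicitly, and the function name is hypothetical.

```python
def far_frr(impostor_accepts, impostor_attempts, genuine_rejects, genuine_attempts):
    """Return (FAR, FRR) as fractions.

    FAR: share of non-enrolled (impostor) attempts that were accepted.
    FRR: share of enrolled (genuine) attempts that were rejected.
    """
    far = impostor_accepts / impostor_attempts
    frr = genuine_rejects / genuine_attempts
    return far, frr


# Counts from the evaluation: 0 of 10 non-enrolled users accepted,
# 2 of 10 enrolled users rejected (assuming one attempt per user).
far, frr = far_frr(impostor_accepts=0, impostor_attempts=10,
                   genuine_rejects=2, genuine_attempts=10)
print(f"FAR = {far:.0%}, FRR = {frr:.0%}")
```

With only ten users per group, these rates are coarse estimates, since a single additional rejection or acceptance shifts either rate by ten percentage points.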

4.6. Flight Trajectory Consistency over Varying Survey Altitudes

GPS trajectory analysis across survey missions flown at 5 m, 10 m, 20 m, 30 m, and 50 m showed that the highest average deviation occurred at the 30 m survey altitude (Table 4). At this altitude, the mission exhibited an average waypoint deviation of 0.52 m with a standard deviation of 0.19 m between planned and executed positions.

Table 4.

Waypoint-level comparison between planned (target) and executed GPS coordinates during field testing for survey mission at altitude 30 m. Deviation is computed using great-circle distance between target and actual coordinates. SAUCF achieves consistent sub-meter accuracy with an average deviation of 0.52 m, and all waypoints fall within a 1.0 m tolerance.
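The deviation metric can be sketched with the haversine form of the great-circle distance. The Earth radius constant, the use of the sample standard deviation, and the function names are assumptions of this example; the source states only that great-circle distance is used.

```python
import math
import statistics

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, assumed


def great_circle_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points (haversine form)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def deviation_stats(planned, executed):
    """Mean, sample standard deviation, and maximum waypoint deviation (m).

    planned, executed: equal-length lists of (lat, lon) in degrees.
    Requires at least two waypoints for the standard deviation.
    """
    deviations = [
        great_circle_m(p[0], p[1], e[0], e[1])
        for p, e in zip(planned, executed)
    ]
    return statistics.mean(deviations), statistics.stdev(deviations), max(deviations)
```

Applied to the planned and executed coordinates of a mission, the returned maximum can be compared directly against the 1.0 m tolerance, and the mean and standard deviation correspond to the summary values reported for each altitude.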

4.7. Summary

Overall, the results demonstrate that SAUCF achieves rapid mission planning, reliable intent parsing, robust biometric authentication, and safe, consistent execution across simulation and real-world environments. These findings validate the framework’s ability to deliver secure, operator-friendly, and mission-reliable agricultural UAS operations.

5. Discussion

The experimental evaluation of the Secure Agricultural UAS Control Framework (SAUCF) demonstrates the effectiveness of combining human-in-the-loop safety, natural language mission specification, and modular authentication into a single cohesive pipeline for agricultural UAS operations. The discussion below interprets the results in the context of system objectives and broader implications for agricultural UAS deployment.

5.1. Mission Planning and Usability

The mission planning efficiency results indicate that the system can generate complete, operator-verified waypoint sets in under two minutes from the initial voice command. This latency is acceptable in agricultural contexts where missions are typically planned in advance, and it represents a practical trade-off between secure authentication, language interpretation, and mission generation. Compared to manual waypoint programming, which can take considerably longer and requires technical expertise, SAUCF significantly reduces operator burden while ensuring secure mission planning. The integration of natural language processing with domain-specific constraints proved effective in preventing unsafe or infeasible missions, directly addressing the first system objective of reducing technical complexity for non-expert operators.

5.2. Reliability of Execution Framework

The decision tree compliance results confirm that the safety-guarded execution architecture consistently enforced all pre-flight and in-flight checks. This is a strong validation of the modular design philosophy, where execution is structured as a sequence of monitored tasks rather than opaque automation. The ability to handle contingencies such as low battery, navigation errors, or obstacle detection reflects robustness and operational reliability. These findings align with prior research emphasizing behavior trees as interpretable and verifiable control frameworks for autonomous robotics.

5.3. Safety in Natural Language Command Processing

The integration of a safety classification layer before intent parsing proved critical in ensuring secure command interpretation. With a 97.5% classification accuracy, the system demonstrated the ability to prevent unsafe or legally restricted missions, thereby addressing the second objective of ensuring secure access control. While the misclassification of one unsafe command indicates room for improvement, the near-perfect recall rate across both SAFE and UNSAFE categories suggests that the classifier performs reliably as a protective filter. This outcome highlights the necessity of safety-aware LLM integration in domains where operator trust and regulatory compliance are paramount.
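To make the relationship between overall accuracy and per-class recall concrete, the following sketch computes both from confusion-matrix counts. The counts in the usage line are an assumption: a single missed unsafe command at 97.5% accuracy is consistent with a 40-command test set, but the 20/20 split between SAFE and UNSAFE commands is hypothetical and is not taken from the reported experiments.

```python
def classification_report(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Summarize a binary safety filter, treating UNSAFE as the positive class.

    tp: unsafe commands correctly blocked
    fn: unsafe commands wrongly let through
    tn: safe commands correctly accepted
    fp: safe commands wrongly blocked
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "unsafe_recall": tp / (tp + fn),
        "safe_recall": tn / (tn + fp),
    }


# Hypothetical split: 20 unsafe and 20 safe commands, one unsafe command missed.
report = classification_report(tp=19, fn=1, tn=20, fp=0)
```

Under this assumed split, the single error lowers only the UNSAFE recall, which is why a missed unsafe command matters more for a protective filter than the headline accuracy suggests.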

5.4. Authentication Performance

The biometric authentication evaluation confirmed the viability of multi-modal verification in agricultural UASs. While the acceptance rate of 80% among enrolled users demonstrates the system’s capacity for secure operator verification, the observed sensitivity to background noise and visual interference underscores an important limitation. In uncontrolled outdoor environments, such factors can reduce the reliability of biometric systems. Future iterations of SAUCF should incorporate noise-robust ASR models and face recognition systems designed for variable illumination and occlusion to improve performance in real-world agricultural settings.

5.5. Trajectory Consistency over Varying Survey Altitudes

The GPS trajectory analysis showed that the largest mean deviation between planned and executed waypoints at any tested altitude was 0.52 m, with a standard deviation of 0.19 m. This level of consistency is within acceptable tolerances for most agricultural monitoring and mapping tasks, where sub-meter accuracy ensures reliable coverage and data collection. Moreover, the close alignment between simulation and field execution validates the fidelity of the simulation-to-reality transfer (Figure A4). Compared to prior agricultural UAS studies reporting deviations above one meter, SAUCF demonstrates improved precision, which enhances both mission reliability and operator trust.
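One way such a deviation statistic could be computed is sketched below. It assumes that planned and executed waypoints are available as matched (latitude, longitude) pairs and uses the haversine great-circle distance on a spherical Earth; the function names and the pairing scheme are assumptions and may differ from the procedure used in the study.

```python
import math
from statistics import mean, stdev

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def deviation_stats(planned, executed):
    """Mean and sample standard deviation of per-waypoint horizontal error.

    planned, executed: equal-length sequences of (lat, lon) tuples, matched
    by index. At least two waypoints are required for the standard deviation.
    """
    errors = [haversine_m(p[0], p[1], e[0], e[1]) for p, e in zip(planned, executed)]
    return mean(errors), stdev(errors)
```

Calling `deviation_stats` once per survey altitude and taking the largest mean would yield a figure comparable in form to the maximum average deviation discussed above.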

5.6. Limitations and Future Work

While SAUCF demonstrates strong performance across planning, authentication, and execution, some limitations remain. First, authentication accuracy could be improved by integrating adaptive multi-modal fusion strategies that dynamically weight biometric modalities (voice and face) based on environmental conditions [17]. Second, the current framework depends on reliable wireless connectivity for real-time telemetry, which may not always be available in remote agricultural environments. Incorporating redundant communication channels or edge-based fallback safety behaviors would enhance resilience [18]. Finally, although the system leverages a large language model for intent parsing, reliance on external APIs introduces potential latency and dependency issues. Future work should investigate lightweight, on-device language models tailored for agricultural UAS command interpretation [19].
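As an illustration of the first point, the sketch below shows one simple form that quality-weighted score fusion could take. Everything in it is an assumption made for illustration: the score and quality ranges, the proportional weighting rule, and the 0.7 threshold are hypothetical and do not describe the authentication APIs used in this work.

```python
def fuse_scores(voice_score: float, face_score: float,
                audio_quality: float, image_quality: float,
                threshold: float = 0.7):
    """Fuse two match scores in [0, 1] using weights proportional to signal quality.

    audio_quality and image_quality are hypothetical estimates in [0, 1],
    for example derived from background-noise level and illumination.
    Returns (accepted, fused_score).
    """
    total = audio_quality + image_quality
    if total == 0:
        return False, 0.0  # neither modality is usable, so reject
    w_voice = audio_quality / total
    w_face = image_quality / total
    fused = w_voice * voice_score + w_face * face_score
    return fused >= threshold, fused


# Noisy field with good lighting: the face score dominates the decision.
accepted, fused = fuse_scores(voice_score=0.4, face_score=0.9,
                              audio_quality=0.2, image_quality=0.8)
```

With these illustrative inputs the weaker voice match is down-weighted and the operator is accepted; swapping the two quality values would let the poor voice score dominate and lead to rejection.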

5.7. Implications

Overall, the results validate the design philosophy of SAUCF: combining secure operator verification, natural language usability, and structured safety enforcement yields a practical and robust agricultural UAS control framework. By lowering barriers for non-expert operators while maintaining security and safety, SAUCF represents a step toward democratizing UAS-based precision agriculture. Its modular architecture and extensibility further position it as a foundation for future enhancements, including integration with advanced sensing modalities, multi-UAS coordination, and adaptive mission planning strategies.

6. Conclusions

This study presented the Secure Agricultural UAS Control Framework (SAUCF), a modular, voice-driven, and human-in-the-loop system for secure and reliable agricultural UAS operations. Through the integration of multi-modal biometric authentication, natural language processing, automated mission planning, and behavior-tree-based execution, SAUCF addresses three critical challenges in agricultural UAS deployment: reducing technical complexity for non-expert operators, ensuring secure access control, and preserving human authority through continuous safety oversight.

Experimental validation demonstrated that the framework achieves near real-time mission planning (under 105 s), high safety compliance through behavior tree enforcement, and reliable intent parsing with a safety-aware LLM classifier. The biometric authentication module effectively secured operator access, while GPS trajectory deviations during waypoint execution remained within acceptable tolerances for typical agricultural monitoring and mapping tasks, where sub-meter precision is sufficient to ensure reliable coverage and data collection. A key contribution of SAUCF lies in its ability to bridge the Sim2Real gap for agricultural UAS missions with a maximum average deviation of 0.52 m. By leveraging simulation-backed validation and telemetry-driven safeguards, the system demonstrated strong alignment between simulated mission plans and physical execution during field trials. Together, these results validate the robustness and practicality of the framework in both simulation and real-world field deployments.

Going forward, further improvements can be made by incorporating noise-robust biometric models, resilient communication mechanisms, and lightweight on-device language models to reduce dependence on cloud APIs. Moreover, extensions to multi-UAS coordination, adaptive mission planning, and integration with advanced sensing modalities can expand the applicability of SAUCF to larger-scale and more complex agricultural missions.

In summary, SAUCF demonstrates that a secure, voice-driven, and operator-friendly UAS control pipeline is not only feasible but also effective in bridging the gap between autonomous flight capabilities and the practical needs of precision agriculture.

Author Contributions

Conceptualization, D.S. and V.A.; methodology, V.A. and N.S.; software, N.S.; validation, D.S. and V.A.; formal analysis, V.A. and N.S.; investigation, D.S.; resources, D.S.; data curation, V.A.; writing—original draft preparation, N.S.; writing—review and editing, V.A. and N.S.; visualization, V.A. and N.S.; supervision, D.S.; project administration, D.S.; funding acquisition, D.S. All authors have read and agreed to the published version of the manuscript.

Funding

The USDA National Institute of Food and Agriculture Hatch project 1012501 provided major funding for the project. We also wish to thank the Purdue-IIT Gandhinagar collaboration program for providing partial funding for the project.

Data Availability Statement

The materials supporting the conclusions of this study, including simulation demonstrations and on-field testing videos, are publicly available through the project webpage at https://nihar1402-iit.github.io/SAUCF-Project-Page/ (accessed on 9 December 2025).

Acknowledgments

We wish to thank Kaizen Voiz (Edison, New Jersey, USA) (https://kaizenvoiz.com/) for providing the biometric voice and face authentication APIs used in this work. During the preparation of this manuscript, the authors used OpenAI’s ChatGPT (GPT-5, 2025) to reframe parts of Sections 1 and 6 and for language refinement in Section 5. The tool was used solely to improve clarity and readability; all ideas and interpretations are those of the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Figure A1. Test area used for experimental evaluation. (Left) Ground view of the site. (Right) Google Earth satellite image with four marked boundary corners used to define the mission area during waypoint planning.

Figure A2. (Left) On-field testing of SAUCF. (Right) DJI Matrice 300 RTK platform and Jetson Nano companion computer used for field trials.

Figure A3. Simulation testing of SAUCF using the DJI Matrice 300 RTK platform and Jetson Nano companion computer on DJI Assistant 2 (Enterprise Series) v2.1.20 simulation software.

Figure A4. Sequential drone motion frames during execution (left) compared with the predetermined mission path (right).

References

1. Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

2. Tsouros, D.C.; Bibi, S.; Sarigiannidis, N. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

3. Hunt, E.R.; Daughtry, C.S.T. What good are unmanned aircraft systems for agricultural remote sensing and precision agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376.

4. Mekdad, Y.; Aris, A.; Babun, L.; Fergougui, A.E.; Conti, M.; Lazzeretti, R.; Uluagac, A.S. A Survey on Security and Privacy Issues of UAVs. Comput. Netw. 2023, 224, 109626.

5. Yang, Z.; Zhang, Y.; Zeng, J.; Yang, Y.; Jia, Y.; Song, H.; Lv, T.; Sun, Q.; An, J. AI-Driven Safety and Security for UAVs: From Machine Learning to Large Language Models. Drones 2025, 9, 392.

6. Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust speech recognition via large-scale weak supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518.

7. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. (NeurIPS) 2020, 33, 1877–1901.

8. Contreras, R.; Ayala, A.; Cruz, F. Unmanned Aerial Vehicle Control through Domain-Based Automatic Speech Recognition. Computers 2020, 9, 75.

9. Fagundes-Júnior, L.A.; Barcelos, C.O.; Silvatti, A.P.; Brandão, A.S. UAV–UGV Formation for Delivery Missions: A Practical Case Study. Drones 2025, 9, 48.

10. Ghilom, M.; Latifi, S. The Role of Machine Learning in Advanced Biometric Systems. Electronics 2024, 13, 2667.

11. Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

12. Zhang, Y.; Yao, S.; Wang, P.; Wu, H.; Xu, Z.; Wang, Y.; Zhang, Y. Building Natural Language Interfaces Using Natural Language Understanding and Generation: A Case Study on Human–Machine Interaction in Agriculture. Appl. Sci. 2022, 12, 11830.

13. Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep Learning for Remote Sensing Image Classification: A Survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1264.

14. OpenAI. Whisper: General-Purpose Speech Recognition Model; GitHub Repository. 2022. Available online: https://github.com/openai/whisper (accessed on 15 June 2025).

15. Adhikari, N. Drone Flight Plan Generator, Version 0.3.6; GitHub: San Francisco, CA, USA, 2025. Available online: https://github.com/hotosm/drone-flightplan (accessed on 15 June 2025).

16. DJI Developers. Jetson Nano Quick Guide. 2023. Available online: https://developer.dji.com/doc/payload-sdk-tutorial/en/quick-start/quick-guide/jetson-nano.html (accessed on 12 September 2025).

17. Zeeshan, N.; Bakyt, M.; Moradpoor, N.; La Spada, L. Continuous Authentication in Resource-Constrained Devices via Biometric and Environmental Fusion. Sensors 2025, 25, 5711.

18. Zhu, H.; Chen, Q.; Zhu, X.; Yao, W.; Chen, X. Edge computing powers aerial swarms in sensing, communication, and planning. Innovation 2023, 4, 100506.

19. Hoffpauir, K.; Simmons, J.; Schmidt, N.; Pittala, R.; Briggs, I.; Makani, S.; Jararweh, Y. A Survey on Edge Intelligence and Lightweight Machine Learning Support for Future Applications and Services. J. Data Inf. Qual. 2023, 15, 1–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).