Abstract

Massive Open Online Courses (MOOCs) have gained increasing popularity in recent years, highlighting the growing importance of effective course recommendation systems (CRS). However, the performance of existing CRS methods is often limited by data sparsity and suffers under cold-start scenarios. One promising solution is to leverage course-level conceptual information as side information to enhance recommendation performance. We propose a general framework for integrating LLM-generated concepts as side information into various classic recommendation algorithms. Our framework supports multiple integration strategies and is evaluated on two real-world MOOC datasets, with particular focus on the cold-start setting. The results show that incorporating LLM-generated concepts consistently improves recommendation quality across diverse models and datasets, demonstrating that automatically generated semantic information can serve as an effective, reusable, and scalable source of side knowledge for educational recommendations. This finding suggests that LLMs can function not merely as content generators but as practical data augmenters, offering a new direction for enhancing robustness and generalizability in course recommendation.

1. Introduction

With the rapid growth of Massive Open Online Courses (MOOCs) as a scalable and accessible alternative to traditional classroom-based education, learning opportunities have become more democratized than ever before. However, the overwhelming number of available courses has made it increasingly difficult for learners to identify those most aligned with their individual goals and interests [1]. To address this challenge, course recommendation systems have emerged as a necessary tool to reduce information overload and assist students in making informed and efficient course selections. Despite their effectiveness, most existing systems rely heavily on historical user–course interactions, which makes them particularly vulnerable to data sparsity and cold-start problems, especially for new users or newly introduced courses [2].

Incorporating side information, such as course concepts or topical knowledge, has been shown to improve both the accuracy and interpretability of recommendation systems, while also helping to mitigate challenges related to data sparsity and cold-start scenarios [3,4,5,6,7]. These approaches have demonstrated strong performance in modeling user preferences when rich auxiliary information is available. However, manually extracting such information is labor-intensive, time-consuming, and difficult to scale. Although some automatic extraction techniques have been explored, they often fall short in terms of quality and reliability [8,9].

Recent developments in educational AI have sparked growing interest in applying large language models (LLMs), such as GPT, to support learning-related tasks [10,11]. Thanks to their broad general knowledge and strong contextual reasoning capabilities, LLMs can summarize content, infer key topics, and generate concept-level representations from unstructured course descriptions. Motivated by these capabilities, our previous work [10,12] explored the use of LLMs to automatically extract core concepts from course materials. Through both qualitative and quantitative analyses, we found that the generated concepts were semantically coherent, aligned closely with course content, and in some cases even surpassed the quality of concepts derived from expert annotations. Despite this promise, LLM-generated concepts remain largely unexplored in the context of recommender systems. Although prior studies have shown that incorporating side information can improve recommendation accuracy, it is still unclear whether automatically generated concepts, rather than manually crafted or domain-specific features, can serve as effective side information in course recommendation, especially in data-sparse or cold-start scenarios. This uncertainty is particularly critical in education, where scalable and transferable sources of side information are often limited. In this work, we take a different perspective: instead of focusing on architectural modifications, we systematically examine whether LLM-generated course concepts can enhance recommendation quality when integrated into classical and modern baselines.
Specifically, we explore multiple fusion strategies to inject these semantic concepts into different families of recommendation models, and we analyze which integration methods yield the largest gains, why they work, and under what conditions they are most effective. By shifting the focus from model redesign to evaluating the utility of LLM-derived concepts as side information, our study provides new empirical evidence on the role of LLMs in educational recommendation and offers practical insights for improving accuracy in both cold-start and sparse-data scenarios. To this end, we raise a central research question: How and to what extent can LLM-generated course concepts, injected as model-agnostic side information via multiple integration strategies, improve recommendation quality across classical and modern recommender systems, particularly under sparsity and cold-start conditions?

In this work, we propose a general and lightweight framework for integrating LLM-generated course concepts as side information into various classic recommendation algorithms. Unlike prior approaches that require model-specific modifications, our framework is architecture-agnostic and supports multiple fusion strategies, allowing seamless adaptation to a wide range of recommender models. Importantly, our use of LLMs is confined to the data preparation stage, which ensures minimal computational overhead and high applicability in real-world settings. To evaluate the effectiveness and generalizability of our approach, we conduct extensive experiments on two publicly available MOOC datasets. We apply our framework to a variety of baseline models and observe consistent performance improvements, particularly in cold-start scenarios where side information is most beneficial. Additionally, we compare the impact of different LLMs on concept generation and downstream recommendation quality, providing further insights into how model choice influences effectiveness. Our results demonstrate that, even without changes to model architectures, LLM-generated concepts can serve as effective side information to enhance recommendation accuracy in a low-cost, scalable manner.

To summarize, our main contributions are as follows:

- We propose a novel data augmentation approach that leverages LLM-generated course concepts as side information to improve recommendation performance. Our method operates solely at the data preparation stage and does not invoke the LLM during model training or inference, ensuring low computational overhead.

- We design a general and flexible integration framework that supports multiple fusion strategies and can be applied across a wide range of recommendation algorithms without modifying their architectures.

- We conduct comprehensive experiments on two publicly available MOOC datasets and demonstrate that our approach consistently improves performance, particularly in cold-start scenarios.

- We perform a comparative analysis of concepts generated by different LLMs and examine their downstream impact on recommendation effectiveness, providing practical insights into model selection and data quality.

2. Related Work

2.1. Course Recommendation

Early developments in course recommendation systems primarily relied on content-based methods and collaborative filtering (CF) approaches [13,14,15,16,17]. For example, Polyzou et al. [18] applied random walk-based techniques to capture sequential dependencies between courses, while Wagner et al. [19] leveraged traditional machine learning to identify course selection patterns and reduce dropout risks. With the rise of deep learning, a number of neural approaches have been proposed to improve recommendation performance. Gong et al. [20] introduced an attentional graph convolutional network to incorporate concept relationships. Zhang et al. [3] and Pardos et al. [21] explored hierarchical and connectionist models for better preference learning. Jiang et al. [22] modeled goal-based recommendation processes, while Yu et al. [23] proposed a hierarchical reinforcement learning framework that expanded course concepts in a multi-stage manner. Gao et al. [5,24] developed relation-graph and sequence-based models to capture complex interactions between students, exercises, and course content. More recently, there has been growing interest in improving explainability in course recommendation. Yang et al. [6] introduced KEAM, which constructs user profiles from course-specific knowledge graphs and leverages them to deliver explainable course recommendations. Building on this, MAECR [25] further incorporates multi-perspective meta-paths and dual-side modeling to capture both student preferences and course suitability.

Despite these advances, course recommendation remains uniquely challenging. Issues such as data sparsity, cold-start scenarios, and the lack of structured, interpretable representations of course content continue to hinder performance and user trust. While knowledge graphs and hand-crafted side information have been explored, they often require manual effort and are difficult to generalize across domains. In particular, few studies have investigated the use of automatically generated side information, such as course concepts produced by LLMs, to enhance course recommendations. These gaps motivate our work.

2.2. Concept Extraction

Identifying key concepts within educational content has long been considered essential for supporting student understanding and guiding course selection. Concept extraction, which involves automatically identifying and representing important knowledge elements within a course, has attracted considerable attention, particularly in the context of MOOCs and digital learning resources. Early efforts in this area employed a variety of semi-supervised [26], embedding-based [8], and graph-driven approaches [27] to extract course concepts. Changuel et al. [28] leveraged prerequisite annotations to construct concept sequences. Yu et al. [29] incorporated external knowledge bases and interactive refinement. While effective to a certain extent, these methods often suffer from scalability issues, over-reliance on textual inputs, limited generalizability, and high computational costs due to complex model designs. More recent work has begun exploring the integration of LLMs for enhancing concept identification [10,11,30]. These models offer the ability to infer semantically coherent and context-aware concepts from raw course descriptions without relying on structured annotations. Our previous research [12] has shown that LLM-generated concepts can achieve high semantic quality and strong alignment with course content.

However, most existing studies treat concept extraction as a standalone task and do not explore how LLM-generated concepts can be used in downstream educational tools. To the best of our knowledge, no prior work has investigated the use of these automatically generated concepts as side information to improve course recommendation systems—a gap we aim to address in this study.

2.3. Large Language Models in Education

LLMs such as GPT, Gemini, and Claude, pre-trained on vast text corpora, exhibit strong general knowledge and reasoning capabilities. These models have achieved remarkable performance in tasks such as machine translation, text summarization, and question answering [12,31]. Their emergence has also opened new opportunities in education, including automated content generation, personalized learning experiences, and enhanced educational tools [32].

A growing body of research has explored the application of LLMs in educational contexts. For instance, GPT has been studied for generating course-aligned concepts to improve interoperability [11], assisting in qualitative codebook development [33], and producing feedback for counselor training [34]. Lohr et al. [35] employed retrieval-augmented generation (RAG) to generate semantically annotated quiz questions tailored to specific courses, while Kieser et al. [36] demonstrated that ChatGPT-4 can simulate realistic student responses for concept inventories, enabling scalable data augmentation in physics education. These studies show that LLMs can generate pedagogically relevant content that supports teaching and assessment. In parallel, several works have begun integrating LLMs into course recommendation systems [37]. Coursera-REC [38] combines LLMs with RAG to generate personalized MOOC recommendations with natural-language explanations. Meanwhile, CR-LCRP [39] utilizes multi-granularity data augmentation within a heterogeneous learner–course network to improve recommendations under sparse conditions.

Despite these advancements, most existing work treats LLMs as generation engines for full recommendations or textual feedback, rather than as tools for producing structured, reusable side information. In particular, the use of LLM-generated course concepts—as semantic signals that can be systematically integrated into conventional recommendation models—remains largely unexplored. Our work fills this gap by leveraging LLMs to generate high-quality concept-level features that are integrated into a recommendation framework, enabling data-efficient enhancement without architectural modification.

2.4. LLM-Based Data Augmentation in Recommender Systems

Data augmentation has become a critical strategy for improving model robustness and mitigating sparsity in recommender systems by enriching training data with additional signals [40,41]. Recently, LLMs have emerged as a promising tool for data augmentation due to their generative capabilities and access to rich contextual knowledge. For example, ColdLLM [42] and LLMRec [43] generate synthetic user–item interactions or expand graph-based relations to strengthen learning under sparse conditions. Wang et al. [44] use LLMs to produce pairwise comparison preferences or aspect-level attributes that enhance downstream models through preference modeling or representation learning. These approaches typically focus on simulating user behaviors or preferences to create additional interaction signals.

While these methods have shown promising results, they predominantly use LLMs to simulate behavior-level data, such as clicks, preferences, or rankings, thereby augmenting interaction information. In contrast, few studies have investigated the use of LLMs to generate structured side information that can be directly injected into recommendation models. Our work addresses this gap by employing LLMs to extract course-level concepts from textual descriptions and using these concepts as semantic features to improve recommendation performance. This approach offers a lightweight and architecture-agnostic form of augmentation that is especially valuable in educational recommendation scenarios.

3. Proposed Framework

In this section, we present a concept-based data augmentation framework designed to enhance course recommendations by incorporating side information automatically generated by large language models (LLMs). The framework is motivated by the observation that concept-level information can provide valuable semantic signals beyond interaction data, especially in scenarios where user behavior is sparse or unavailable. Instead of relying on manually annotated features or domain-specific extraction pipelines, our method utilizes LLMs to infer relevant course concepts from available course-related information. These concepts are then encoded into dense semantic vectors using a pre-trained encoder and injected into various recommendation models through a set of lightweight, architecture-independent integration strategies. The overall framework is designed to be general, efficient, and compatible with a wide range of existing algorithms. This section introduces the core components of our approach, including an overview of the framework, the concept generation process, and the integration mechanisms.

3.1. Overview of the Framework

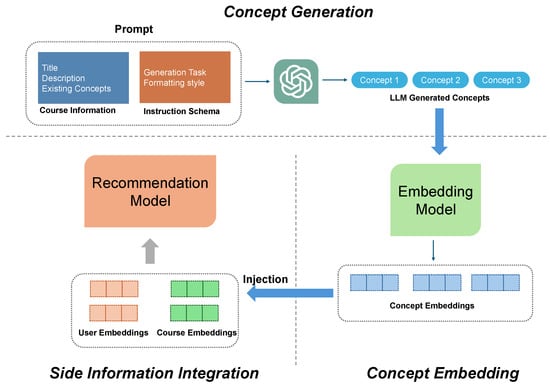

As illustrated in Figure 1, the framework consists of three core components: Concept Generation, Concept Embedding, and Side Information Integration. It is worth noting that the user embeddings shown in Figure 1 are obtained from the users’ historical interaction data. Each baseline recommender model (e.g., MF, NeuMF, LightGCN, FM, ItemKNN) computes these embeddings through its own standard parameterization process. For instance, matrix factorization learns user latent vectors by decomposing the user–item interaction matrix, while graph-based models like LightGCN aggregate neighbor signals to form user representations. In our framework, these user embeddings remain unchanged and serve as the basis for integrating the additional concept-level side information through the strategies described in Section 3.3.

Figure 1. Overview of the proposed framework for integrating LLM-generated course concepts into recommendation models.

In the first stage, LLMs are used to infer a set of relevant concepts for each course based on its available information. These concepts are intended to reflect the key topics or knowledge areas covered by the course. In the second stage, the generated concepts are transformed into semantic vector representations using a pre-trained embedding model, enabling them to capture rich contextual meaning. In the final stage, the resulting concept embeddings are integrated into various baseline recommendation models through multiple model-agnostic fusion strategies, including linear addition, attention-based weighting, gating mechanisms, concatenation, and similarity-based integration. Notably, this integration process does not require any architectural modifications to the underlying models.

An important feature of our framework is that the LLM is used only during the data preparation stage. Once the concepts and their embeddings are obtained, they can be reused across different recommendation algorithms without repeated LLM inference, resulting in low computational overhead and strong scalability. We detail the concept generation and embedding procedure in Section 3.2, and introduce the integration strategies in Section 3.3.
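Because the LLM is only invoked during data preparation, its outputs can be computed once and shared by every downstream model. The minimal Python sketch below illustrates this reuse pattern under stated assumptions: `ConceptCache`, the JSON cache file, and the toy generator are hypothetical stand-ins (the toy generator simply splits a title into words and no real LLM is called), not the authors' implementation.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List


class ConceptCache:
    """Hypothetical store: runs the expensive generator (an LLM call) only on a
    cache miss and persists results to JSON so other models and later runs reuse them."""

    def __init__(self, path: str, generate: Callable[[str], List[str]]) -> None:
        self.path = path
        self.generate = generate  # stand-in for the LLM: course text -> list of concepts
        self.calls = 0            # number of times the generator was actually invoked
        self.store: Dict[str, List[str]] = {}
        if os.path.exists(path):  # reuse results from an earlier run
            with open(path, encoding="utf-8") as fh:
                self.store = json.load(fh)

    def get(self, course_id: str, course_text: str) -> List[str]:
        if course_id not in self.store:
            self.calls += 1
            self.store[course_id] = self.generate(course_text)
            with open(self.path, "w", encoding="utf-8") as fh:
                json.dump(self.store, fh)
        return self.store[course_id]


if __name__ == "__main__":
    def toy_llm(text: str) -> List[str]:
        return [w.lower() for w in text.split()]  # toy stand-in, not a real LLM

    cache = ConceptCache(os.path.join(tempfile.mkdtemp(), "concepts.json"), toy_llm)
    for _model in ("MF", "LightGCN", "FM"):  # several recommenders request the same course
        cache.get("c1", "Linear Algebra")
    print(cache.calls)  # 1
```

Three recommenders requesting the same course trigger a single generator call, and a fresh `ConceptCache` pointed at the same file triggers none. The same pattern applies to caching the concept embeddings.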

3.2. LLM-Based Concept Generation & Embedding

3.2.1. Concept Generation with LLMs

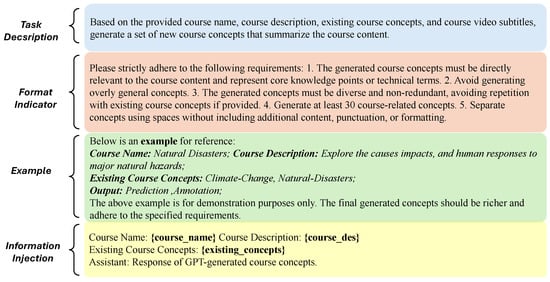

To generate course-level concept information, following our previous work [12], we utilize LLMs to produce structured semantic concepts based on course-related input. This process is guided by a prompt design that systematically instructs the LLM on the generation task. As illustrated in Figure 2, each prompt for both datasets is composed of three components: task description, format indicator, and information injection.

Figure 2. Prompt design for guiding LLM-based course concept generation across different datasets, consisting of task description, format indicator, and information injection.

The task description defines the objective of the generation. In our case, we explicitly specify that the LLM is expected to generate a set of course-relevant knowledge concepts. This instruction helps orient the model toward identifying topical or thematic keywords that capture the core content of the course. The format indicator defines the expected structure of the output, including the number of concepts and the formatting style. Standardizing the output format is crucial for ensuring that the generated results can be parsed and processed consistently. To enforce format compliance, we implement a retry mechanism that automatically re-prompts the LLM if the response deviates from the predefined structure. In our implementation, we require the LLM to generate at least 30 concepts for each course. The information injection component provides course-specific details that serve as context for the generation task, and its content varies by dataset. The MOOCCube dataset contains rich metadata, including the course title, a full course description, and a list of manually annotated concepts; these components are concatenated and provided to the LLM as contextual input. In contrast, the XuetangX dataset contains only course titles, which serve as the sole input. This difference is not a design choice; it reflects the inherent data availability of the two datasets. Formally, let $x_i$ denote the course-related input of course $i$ (e.g., course title, course description), and let $\mathcal{P}(x_i)$ represent the constructed prompt, as illustrated in Figure 2. The LLM processes this prompt to generate course $i$'s concept set $\mathcal{C}_i$ as follows:

$$\mathcal{C}_i = \mathrm{LLM}\big(\mathcal{P}(x_i)\big) = \{k_1, k_2, \ldots, k_{n_i}\},$$

where the output set $\mathcal{C}_i$ is required to contain $n_i \geq 30$ well-structured concept terms, in accordance with the format constraints specified in the prompt template. To maintain generality and avoid fine-tuning, we adopt a one-shot prompting strategy across all settings: the prompt includes a single example from which the model infers the structure of the desired output. This choice balances performance and simplicity while keeping the generation process model-agnostic and scalable. Using this prompt formulation, the LLM produces a rich and diverse set of candidate concepts for each course, forming a semantically meaningful basis for downstream embedding and integration.

To ensure completeness, we briefly clarify how we validated and used the generated concepts. Building on our prior study [12], which systematically evaluated GPT-generated course concepts on the same two datasets (MOOCCube and XuetangX), we rely on previously established evidence that such concepts are semantically coherent, aligned with course topics, and, in several cases, closer to learner-perceived relevance than legacy “ground-truth” tags. Importantly, ref. [12] also demonstrated that even when the input is limited to concise course titles (as in XuetangX), minimal-context prompting still yields consistent and high-quality concept sets. In this work, we intentionally avoid heavy post-processing: apart from format checking and de-duplication, the concepts are used as generated. We do not assume that LLM-generated concepts are clean, perfectly aligned, or low-noise; in fact, large language models may produce substantial numbers of imperfect, off-topic, or hallucinated terms. Human readability alone does not imply semantic correctness or interpretability, and concept-level structures produced by LLMs do not constitute reliable reasoning paths.
Although our experiments show that the aggregate semantic signal can still yield measurable improvements in accuracy, this should not be interpreted as evidence that hallucination is negligible. Rather, the generated concepts in this study function strictly as auxiliary semantic features, whose reliability is further discussed and mitigated through the strategies presented in the section “Hallucination Risks and Mitigation Strategies”. We do not evaluate, claim, or imply the interpretability of LLM-generated concepts.
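The prompt structure and retry loop described above can be sketched as follows. This is an illustrative Python sketch, assuming a generic `llm` callable that maps a prompt string to a response string; the prompt wording, the semicolon-separated output format, and the helper names (`build_prompt`, `parse_concepts`, `generate_concepts`) are hypothetical, and the actual prompts are those shown in Figure 2.

```python
from typing import Callable, List, Optional

MIN_CONCEPTS = 30  # the paper requires at least 30 concepts per course


def build_prompt(course_info: str, example: str) -> str:
    """Assemble the three prompt parts; the wording is illustrative, not the paper's."""
    task = "Task: list the core knowledge concepts covered by the course below."
    fmt = (f"Format: return at least {MIN_CONCEPTS} concepts on one line, separated by "
           "semicolons, with no numbering or extra text.")
    shot = f"Example output: {example}"  # the single one-shot example
    info = f"Course information: {course_info}"  # information injection
    return "\n".join([task, fmt, shot, info])


def parse_concepts(response: str) -> Optional[List[str]]:
    """Return de-duplicated concepts, or None if the response breaks the format."""
    text = response.strip()
    if "\n" in text:  # multi-line answers (e.g. numbered lists) violate the format
        return None
    seen, concepts = set(), []
    for raw in text.split(";"):
        term = raw.strip()
        if term and term.lower() not in seen:  # case-insensitive de-duplication
            seen.add(term.lower())
            concepts.append(term)
    return concepts if len(concepts) >= MIN_CONCEPTS else None


def generate_concepts(llm: Callable[[str], str], course_info: str,
                      example: str, max_retries: int = 3) -> List[str]:
    """Query the LLM and re-prompt until the output passes the format check."""
    prompt = build_prompt(course_info, example)
    for _ in range(max_retries):
        concepts = parse_concepts(llm(prompt))
        if concepts is not None:
            return concepts
    raise RuntimeError(f"no well-formed answer after {max_retries} attempts")
```

A response returned as a numbered multi-line list is rejected by `parse_concepts`, which triggers a re-prompt; duplicate terms are dropped case-insensitively, mirroring the light post-processing (format checking and de-duplication) described above.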

3.2.2. Semantic Embedding of Concepts

Once the concept set has been generated for a given course, each concept needs to be transformed into a numerical vector that can be processed by recommendation models. This step bridges the gap between symbolic natural language concepts and dense feature representations commonly used in machine learning systems. By embedding the concepts into a continuous semantic space, we enable their integration as structured side information across various model architectures. A wide range of techniques has been developed for textual and knowledge-based embeddings. Traditional word-level embeddings such as GloVe [45] and Word2Vec [46] capture co-occurrence statistics from large corpora. Contextual language models, such as BERT [47], provide dynamic representations that are sensitive to input context. Additionally, knowledge graph embedding methods like TransE [48], TransR [49], and Concept2Vec [50] are capable of modeling structured relationships between entities. Beyond these, general-purpose text embedding models such as InferSent [51], Universal Sentence Encoder [52], and Sentence-BERT [53] have demonstrated strong performance in mapping variable-length texts into semantic vectors suitable for downstream applications such as retrieval, classification, and inference tasks.

In this work, we employ the OpenAI text-embedding-3-large model to encode each concept into a fixed-dimensional vector. This model offers a general-purpose semantic encoder that maps input strings, such as words or short phrases, into 3072-dimensional dense embeddings. Specifically, we input the name of each concept into the encoder to obtain a word-level semantic representation. All embeddings are computed using the default settings of the encoder, without any fine-tuning. Prior to encoding, the input text is lowercased and whitespace-normalized, and duplicate concepts within each course are removed. Each processed concept $k$ of course $i$ is then transformed into a 3072-dimensional semantic vector, denoted as:

$$\mathbf{e}_k = f_{\mathrm{enc}}(k) \in \mathbb{R}^{3072},$$

where $f_{\mathrm{enc}}(\cdot)$ represents the embedding function implemented by the text-embedding-3-large encoder model. To obtain a course-level embedding, we aggregate all concept embeddings associated with the course. Let $E_i = \{\mathbf{e}_k \mid k \in \mathcal{C}_i\}$ be the set of embeddings for the concepts in the concept set $\mathcal{C}_i$ of course $i$. We compute the aggregated representation as:

$$\mathbf{s}_i = \mathrm{Pool}(E_i),$$

where $\mathrm{Pool}(\cdot)$ is a pooling function such as element-wise mean, sum, or max. In our main experiments, we adopt mean pooling, $\mathbf{s}_i = \frac{1}{|E_i|}\sum_{\mathbf{e}_k \in E_i} \mathbf{e}_k$, due to its simplicity, effectiveness, and model-agnostic nature. The resulting course-level concept embedding $\mathbf{s}_i$ provides a compact and semantically enriched representation of the course. It can be easily incorporated into various recommendation models as additional side information. All concept embeddings are precomputed and cached, allowing for efficient reuse across models and experimental configurations, thereby supporting scalability and low inference cost. Importantly, since the course-level embedding is derived from a diverse set of semantic concepts, it encapsulates rich topical information that complements interaction-based signals, enabling downstream models to make more informed and context-aware recommendations.
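The preprocessing and pooling steps of this section reduce to a few lines of code. The sketch below assumes a generic `encode` callable; in the paper this role is played by the text-embedding-3-large model, but here a toy 3-dimensional lookup table stands in for it so the example runs offline. The function names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]


def normalize(concept: str) -> str:
    """Lowercase and collapse runs of whitespace before encoding."""
    return " ".join(concept.lower().split())


def course_embedding(concepts: Sequence[str], encode: Callable[[str], Vector],
                     pool: str = "mean") -> Vector:
    """Normalize, de-duplicate, encode each concept, then pool into one course vector."""
    # dict.fromkeys keeps the first occurrence of each normalized concept, in order
    unique = list(dict.fromkeys(normalize(c) for c in concepts if c.strip()))
    if not unique:
        raise ValueError("course has no usable concepts")
    vectors = [encode(c) for c in unique]
    columns = list(zip(*vectors))  # one tuple per embedding dimension
    if pool == "mean":
        return [sum(col) / len(vectors) for col in columns]
    if pool == "sum":
        return [float(sum(col)) for col in columns]
    if pool == "max":
        return [max(col) for col in columns]
    raise ValueError(f"unknown pooling function: {pool}")


if __name__ == "__main__":
    # Toy 3-d "encoder"; in the paper each vector is 3072-d from text-embedding-3-large.
    toy: Dict[str, Vector] = {"graph": [1.0, 0.0, 0.0], "tree": [0.0, 1.0, 0.0]}
    print(course_embedding(["Graph", "graph ", "  Tree"], toy.__getitem__))
    # [0.5, 0.5, 0.0]
```

Mean pooling keeps the course vector on the same scale regardless of how many concepts the LLM returned, whereas sum pooling grows with the concept count; this is one practical reason to prefer the mean when courses receive different numbers of concepts.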

3.3. Side Information Integration Strategies

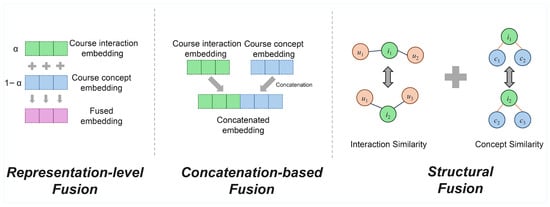

After deriving course-level representations by aggregating LLM-generated concept embeddings, we aim to incorporate this external semantic signal into various components of the recommendation pipeline. To achieve this, we propose a unified and model-agnostic framework for side information integration, specifically tailored to concept-level knowledge in this study. The proposed framework is designed to be modular and extensible, allowing it to seamlessly augment existing recommendation models without requiring architectural modifications. It supports multiple integration strategies, each enabling a different way to inject semantic knowledge into the pipeline while preserving compatibility with standard model structures. Detailed information fusion strategies are shown in Figure 3. We categorize the fusion strategies into three types based on how the concept-level embeddings participate in the recommendation process:

Figure 3. Illustration of the three concept integration strategies: representation-level fusion, concatenation-based fusion, and structural-level fusion.

- Representation-level Fusion, where concept embeddings are combined with course embeddings to generate a new semantic representation used in prediction;

- Concatenation-based Fusion, where concept embeddings are treated as additional input features and concatenated with user and item embeddings before modeling;

- Structural Fusion, where concept information is used to enhance similarity measures or graph structures without directly modifying item representations.

Furthermore, for each baseline model, we adopt multiple fusion variants to assess the effectiveness of different strategies under a unified experimental setting. It is important to note that each fusion category encompasses several concrete implementations (e.g., linear, gated, or attention-based fusion under the representation-level strategy), reflecting the flexibility and extensibility of our integration framework.
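The structural category is detailed later in the paper. As a rough illustration only, the sketch below shows one plausible way concept embeddings could enter an ItemKNN-style neighborhood model: by linearly blending interaction-based cosine similarity with concept-embedding cosine similarity. The blending weight `lam` and all function names are assumptions made for this example, not the paper's specification.

```python
import math
from typing import Dict, List, Set


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two dense vectors (0 if either is all-zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cf_similarity(users_a: Set[str], users_b: Set[str]) -> float:
    """Cosine similarity between two courses' binary user-interaction vectors."""
    if not users_a or not users_b:
        return 0.0
    return len(users_a & users_b) / math.sqrt(len(users_a) * len(users_b))


def fused_similarity(a: str, b: str, course_users: Dict[str, Set[str]],
                     concept_emb: Dict[str, List[float]], lam: float = 0.5) -> float:
    """Hypothetical structural fusion: blend CF and concept-embedding similarity."""
    cf = cf_similarity(course_users.get(a, set()), course_users.get(b, set()))
    return (1.0 - lam) * cf + lam * cosine(concept_emb[a], concept_emb[b])


def knn_score(history: Set[str], target: str, course_users: Dict[str, Set[str]],
              concept_emb: Dict[str, List[float]], lam: float = 0.5) -> float:
    """ItemKNN-style score: sum of fused similarities to the user's past courses."""
    return sum(fused_similarity(h, target, course_users, concept_emb, lam)
               for h in history if h != target)
```

For a newly added course with no interactions, the collaborative term is zero, so with `lam = 0` the course always scores zero; the concept term still gives it a nonzero similarity to semantically related courses in the user's history, which is the kind of cold-start benefit structural fusion targets.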

3.3.1. Representation-Level Fusion

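Representation-level fusion refers to the integration of LLM-derived concept embeddings into the course representation space in a way that directly replaces or transforms the original item embedding used in prediction. Specifically, for a given user $u$ and course $i$, let $\mathbf{p}_u \in \mathbb{R}^d$ denote the user embedding learned from interaction data, $\mathbf{q}_i \in \mathbb{R}^d$ the ID-based course embedding also learned from interaction data, and $\mathbf{s}_i$ the concept-level embedding of course $i$, which is obtained by pooling over all its associated concept vectors in the Concept Embedding component (Section 3.2.2) and projected into the $d$-dimensional latent space when the dimensions differ. A fusion function $\phi(\cdot,\cdot)$ is applied to combine $\mathbf{q}_i$ and $\mathbf{s}_i$, resulting in a fused representation $\tilde{\mathbf{q}}_i = \phi(\mathbf{q}_i, \mathbf{s}_i)$. The final prediction score is then computed as:

$$\hat{y}_{ui} = g\big(\mathbf{p}_u, \tilde{\mathbf{q}}_i\big),$$

where $g(\cdot,\cdot)$ is the model-specific interaction function, such as a dot product or neural scoring module. We explore several instantiations of the fusion function $\phi$, each providing different inductive biases and levels of adaptability. The most straightforward approach is linear fusion, where a scalar weight $\alpha \in [0,1]$ controls the contribution of the two embeddings:

$$\tilde{\mathbf{q}}_i = (1-\alpha)\,\mathbf{q}_i + \alpha\,\mathbf{s}_i.$$

While effective, this method applies the same mixing ratio across all items and dimensions. To allow more expressive control, we adopt a gated fusion mechanism inspired by gated neural networks. A dimension-wise gating vector $\boldsymbol{\gamma}_i$ is computed and applied as:

$$\boldsymbol{\gamma}_i = \sigma\big(\mathbf{W}_g[\mathbf{q}_i;\mathbf{s}_i] + \mathbf{b}_g\big), \qquad \tilde{\mathbf{q}}_i = \boldsymbol{\gamma}_i \odot \mathbf{q}_i + (\mathbf{1}-\boldsymbol{\gamma}_i) \odot \mathbf{s}_i,$$

where $\sigma(\cdot)$ is the sigmoid activation function, $[\cdot\,;\cdot]$ denotes vector concatenation, $\mathbf{W}_g$ and $\mathbf{b}_g$ are learnable parameters, and ⊙ denotes element-wise multiplication. This formulation enables per-dimension control over the contribution of each information source. Furthermore, we implement an attention-based fusion mechanism that dynamically assigns soft weights to the two embeddings $\mathbf{q}_i$ and $\mathbf{s}_i$ defined above. A query vector $\mathbf{h}$ is constructed to attend to the two information sources. Depending on the model architecture, $\mathbf{h}$ can be derived from either the user embedding (e.g., in collaborative models) or the item embedding itself (e.g., in graph-based models). In general, we define it as follows:

$$\mathbf{h} = \mathbf{W}_Q\,\mathbf{c},$$

where $\mathbf{c}$ is a context embedding (e.g., $\mathbf{p}_u$ or $\mathbf{q}_i$) and $\mathbf{W}_Q$ is a trainable parameter matrix. The attention mechanism uses two key–value pairs constructed as follows:

$$\mathbf{k}_1 = \mathbf{W}_K\,\mathbf{q}_i,\quad \mathbf{v}_1 = \mathbf{W}_V\,\mathbf{q}_i,\qquad \mathbf{k}_2 = \mathbf{W}_K\,\mathbf{s}_i,\quad \mathbf{v}_2 = \mathbf{W}_V\,\mathbf{s}_i,$$

where all transformation matrices are learnable. Scaled dot-product attention is then used to compute the weights:

$$\beta_j = \frac{\exp\big(\mathbf{h}^{\top}\mathbf{k}_j/\sqrt{d}\big)}{\sum_{l=1}^{2}\exp\big(\mathbf{h}^{\top}\mathbf{k}_l/\sqrt{d}\big)}, \qquad j \in \{1, 2\}.$$

The final fused course representation is then given by:

$$\tilde{\mathbf{q}}_i = \beta_1\,\mathbf{v}_1 + \beta_2\,\mathbf{v}_2.$$
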
This dynamic fusion strategy enables the model to softly adjust its reliance on structural versus semantic information depending on the interaction context, offering a more flexible and adaptive representation space. Overall, representation-level fusion serves as a general and model-agnostic mechanism that enables seamless semantic enrichment of course embeddings, making it readily applicable to a wide range of recommendation architectures without modifying their interaction functions.
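The three representation-level fusion functions described above can be written compactly. The following pure-Python sketch uses the ID-based course embedding `q`, the concept embedding `s` (assumed already projected to the same dimension as `q`), a scalar weight `alpha`, a gate matrix `W_g` with bias `b_g`, and query/key/value matrices `W_Q`, `W_K`, `W_V`. Parameters are passed in by hand for illustration; in an actual model they would be learned jointly with the recommender, and the helper names are hypothetical.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]


def matvec(W: Mat, x: Vec) -> Vec:
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def linear_fusion(q: Vec, s: Vec, alpha: float) -> Vec:
    """q~ = (1 - alpha) * q + alpha * s: one mixing weight for every dimension."""
    return [(1 - alpha) * qi + alpha * si for qi, si in zip(q, s)]


def gated_fusion(q: Vec, s: Vec, W_g: Mat, b_g: Vec) -> Vec:
    """gamma = sigmoid(W_g [q; s] + b_g); q~ = gamma * q + (1 - gamma) * s element-wise."""
    pre = [z + b for z, b in zip(matvec(W_g, q + s), b_g)]  # q + s concatenates the lists
    gamma = [1.0 / (1.0 + math.exp(-z)) for z in pre]
    return [g * qi + (1 - g) * si for g, qi, si in zip(gamma, q, s)]


def attention_fusion(q: Vec, s: Vec, c: Vec, W_Q: Mat, W_K: Mat, W_V: Mat) -> Vec:
    """Scaled dot-product attention over the two sources {q, s} with query h = W_Q c."""
    h = matvec(W_Q, c)
    keys = [matvec(W_K, q), matvec(W_K, s)]
    values = [matvec(W_V, q), matvec(W_V, s)]
    logits = [dot(h, k) / math.sqrt(len(h)) for k in keys]
    m = max(logits)  # subtract the max for a numerically stable softmax
    exps = [math.exp(z - m) for z in logits]
    beta = [e / sum(exps) for e in exps]
    return [beta[0] * v1 + beta[1] * v2 for v1, v2 in zip(values[0], values[1])]


if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]
    q, s, p_u = [1.0, 0.0], [0.0, 1.0], [0.2, 0.8]
    print(linear_fusion(q, s, alpha=0.25))  # [0.75, 0.25]
    fused = attention_fusion(q, s, c=p_u, W_Q=eye, W_K=eye, W_V=eye)
    print(dot(p_u, fused))  # dot-product interaction between p_u and the fused course vector
```

With an all-zero gate matrix and bias the sigmoid outputs 0.5 in every dimension, so gated fusion reduces to an equal-weight average; likewise, a zero query matrix gives both sources equal attention weight. Training moves the gate and attention weights away from these neutral points per dimension or per context.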

3.3.2. Concatenation-Based Fusion

Concatenation-based fusion incorporates concept-level information by appending it to existing user and item representations prior to prediction. Unlike representation-level fusion, which replaces or transforms the original course embedding, this strategy preserves the base embeddings and treats the concept embedding as an auxiliary feature vector. The combined vector is then passed to the downstream scoring module, such as a factorization machine or multilayer perceptron. This fusion strategy is model-agnostic and applies uniformly across a wide range of recommendation architectures. The core idea is to concatenate the concept-level embedding with either the ID-based course embedding , the user embedding , or both. When the embedding dimensions differ, the concept vector can be projected or preprocessed to align with the target latent space. The fused input can then be formally expressed as:

where the prediction function is model-specific. Beyond continuous embeddings, this strategy also allows concept-level information to be incorporated in structured or categorical formats, particularly in models designed to handle high-dimensional or heterogeneous input features. For instance, factorization-based methods and hybrid recommenders often support flexible feature injection. In this context, we explore several complementary variants that encode concepts as:

- (i) One-hot vectors, where each concept is assigned a unique binary feature and the entire concept set is represented as a sparse binary vector. This enables the model to learn individual interactions between users, items, and specific concepts, and is particularly suited to models that support high-dimensional sparse input, such as factorization machines.

- (ii) Signal-separated inputs, where the concept embedding is not simply concatenated with item embeddings but passed through a separate modeling pathway (e.g., an independent FM component). This allows the model to capture interactions that are specific to the concept signal without conflating it with ID-based features.

- (iii) Cluster-level categorical features, where semantically similar concepts are grouped into clusters and represented as discrete IDs. These cluster IDs are then embedded and concatenated with existing input vectors, allowing the model to capture group-level semantics while reducing dimensionality and noise.

All of these follow the same fusion principle, enriching the input space by directly concatenating semantic features, while offering flexibility in how concept information is encoded. In summary, concatenation-based fusion provides a unified and extensible mechanism for incorporating concept-level signals into recommendation models. By treating semantic knowledge as additional input features, this strategy enables the model to learn more expressive and flexible representations without modifying the interaction structure.
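To make the one-hot and cluster-level variants concrete, the sketch below builds a sparse factorization-machine input of the form [user one-hot | course one-hot | concept multi-hot] and scores it with the standard second-order FM model, using Rendle's linear-time reformulation of the pairwise term. The feature layout, the `concept_to_cluster` mapping, and all function names are illustrative assumptions, not the paper's code; the signal-separated and DNN-based variants are not shown.

```python
import random


def multi_hot(active, size):
    """Sparse binary vector with 1.0 at each active index."""
    x = [0.0] * size
    for i in active:
        x[i] = 1.0
    return x


def build_fm_input(user_id, course_id, concept_ids, n_users, n_courses, n_concepts):
    """Feature layout (assumed): [user one-hot | course one-hot | concept multi-hot]."""
    return (multi_hot([user_id], n_users)
            + multi_hot([course_id], n_courses)
            + multi_hot(concept_ids, n_concepts))


def to_cluster_ids(concept_ids, concept_to_cluster):
    """Cluster-level variant: replace concept IDs by their deduplicated cluster IDs."""
    return sorted({concept_to_cluster[c] for c in concept_ids})


def fm_score(x, w0, w, V):
    """Second-order FM: w0 + <w, x> + sum_{i<j} <v_i, v_j> x_i x_j,
    with the pairwise term computed in O(k * n)."""
    linear = w0 + sum(wi * xi for wi, xi in zip(w, x))
    pairwise = 0.0
    for f in range(len(V[0])):
        s = sum(V[i][f] * x[i] for i in range(len(x)))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(len(x)))
        pairwise += s * s - s_sq
    return linear + 0.5 * pairwise


# Toy usage: 3 users, 4 courses, 5 concepts grouped into 2 clusters.
rng = random.Random(1)
n_users, n_courses, n_concepts, n_clusters, k = 3, 4, 5, 2, 4
concept_to_cluster = {0: 0, 1: 0, 2: 1, 3: 1, 4: 1}

x_onehot = build_fm_input(1, 2, [0, 3], n_users, n_courses, n_concepts)
x_cluster = build_fm_input(1, 2, to_cluster_ids([0, 3], concept_to_cluster),
                           n_users, n_courses, n_clusters)

n_feat = len(x_onehot)
w = [rng.gauss(0.0, 0.1) for _ in range(n_feat)]
V = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_feat)]
score = fm_score(x_onehot, 0.0, w, V)
```

Because every active concept gets its own latent vector, the FM learns user–concept and course–concept interactions directly; the cluster variant trades that granularity for a shorter input (here 9 features instead of 12).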

3.3.3. Structural Fusion

Structure-level fusion introduces concept-level information by enhancing the underlying structures used in recommendation, such as item similarity matrices or graph connectivity. Instead of altering embedding representations, this strategy modifies how information flows between items, thereby capturing semantic relationships not evident from interaction data alone. We begin by constructing a semantic-aware similarity matrix that combines interaction and concept-level semantic information. For any pair of courses, the fused semantic-aware similarity is defined as:

where the interaction-based similarity is derived from user interaction signals (e.g., the cosine similarity between implicit feedback vectors) and the semantic similarity is the cosine similarity between the two courses' concept-level embeddings. A balance parameter controls the relative importance of behavioral versus semantic similarity. The resulting similarity matrix serves as a unified structural representation. In neighborhood-based methods, it can guide similarity-weighted prediction; in graph-based models, it forms the basis of an augmented item–item adjacency matrix that supports more informed message propagation. To preserve sparsity and computational efficiency, we optionally filter out weak semantic connections with a thresholding mechanism. The final adjacency matrix is obtained as follows:

where the threshold is a hyperparameter controlling semantic edge density: a semantic connection is retained only when the concept similarity exceeds it. The resulting matrix then guides message passing or similarity-weighted aggregation in the downstream recommendation model. This enriched structure allows the model to propagate signals through both observed interactions and concept-level semantic relations. As a result, items that share similar topics or knowledge domains become structurally connected, which can improve generalization in sparse or cold-start settings.
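A minimal sketch of the structural fusion step follows, under two assumptions that the text leaves open: the balance parameter `alpha` weights the interaction-based similarity (so `1 - alpha` weights the concept-based one), and semantic edges are added on top of an existing item–item adjacency whenever the concept cosine similarity exceeds the threshold `tau`. The toy vectors and function names are hypothetical.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def hybrid_similarity(inter_vecs, concept_vecs, alpha):
    """S[i][j] = alpha * cos(interaction vectors) + (1 - alpha) * cos(concept vectors)."""
    n = len(inter_vecs)
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                s_int = cosine(inter_vecs[i], inter_vecs[j])
                s_sem = cosine(concept_vecs[i], concept_vecs[j])
                S[i][j] = alpha * s_int + (1.0 - alpha) * s_sem
    return S


def augment_adjacency(A, concept_vecs, tau):
    """Copy A and add an item-item edge wherever concept similarity exceeds tau."""
    n = len(A)
    A_aug = [row[:] for row in A]
    for i in range(n):
        for j in range(n):
            if i != j and cosine(concept_vecs[i], concept_vecs[j]) > tau:
                A_aug[i][j] = 1
    return A_aug


# Toy data: 3 courses; each interaction vector marks which of 3 users took the course.
inter_vecs = [[1, 1, 0], [1, 0, 0], [0, 0, 1]]
concept_vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
A = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]  # no observed item-item edges in this toy

S = hybrid_similarity(inter_vecs, concept_vecs, alpha=0.5)
A_aug = augment_adjacency(A, concept_vecs, tau=0.85)
```

Courses 0 and 1 become connected through their concept similarity (about 0.99), while course 2, which covers different concepts, stays unconnected; raising `tau` makes the added edge set sparser.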

Taken together, we introduce three strategies for integrating concept-level side information: representation-level, concatenation-based, and structure-level fusion. Each strategy operates at a different stage of the modeling pipeline, namely the representation, the input, and the structure, respectively. All are supported under a unified, model-agnostic framework that ensures compatibility across collaborative filtering, factorization-based, and graph-based methods. This flexibility allows the framework to serve as a general optimization layer for diverse recommendation models. Importantly, the use of LLM-derived concept embeddings provides rich semantic signals at low cost, without requiring labeled data or domain-specific engineering, which makes the approach both scalable and practical for real-world educational applications.

4. Experimental Setup

4.1. Datasets

In this work, we focus on course recommendation in a MOOC environment. We evaluate our framework on two publicly available MOOC datasets, MOOCCube [54] and XuetangX [3], which differ in scale and in the richness of their course metadata. This diversity enables us to assess the robustness of our concept-based augmentation approach in both high- and low-resource scenarios.

MOOCCube is a large-scale online education dataset containing 706 real-world MOOC courses, 199,199 users, and 672,853 user–course interaction records. Each course is accompanied by a title, a full course description, and a set of manually annotated concepts, which makes MOOCCube a high-resource dataset suitable for testing the full capacity of our concept generation and integration pipeline. XuetangX, by contrast, is derived from a real-world MOOC platform and includes 82,535 users, 1302 courses, and 458,453 interaction records. However, it provides only course titles, without additional metadata such as descriptions or expert-curated concepts. This makes XuetangX a representative low-resource setting for evaluating how well our method generalizes under limited textual input.

To ensure data quality and consistency, we apply several preprocessing steps to both datasets. Specifically, we remove courses with fewer than 10 user interactions and users with fewer than 5 interactions to stabilize model learning and evaluation. We also exclude courses whose content (e.g., graduation projects or university-specific theses) may introduce semantic ambiguity. The final dataset statistics after filtering are summarized in Table 1.

Because the richness of course metadata differs between the two datasets, we adopt different prompt designs for LLM-based concept generation. For MOOCCube, the prompt includes the course title, description, and annotated concepts as contextual input. For XuetangX, where only the course title is available, the title is the sole input to the LLM. This design allows us to evaluate our concept generation strategy under varying information conditions.
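The interaction filters described above can be sketched as a single pass over (user, course) records. The default thresholds follow the text (courses with at least 10 interactions, users with at least 5); the exclusion set, the function name, and the order of the two filters are assumptions. A single pass can leave some courses below the threshold once users are removed; an iterative variant that repeats both filters until nothing changes is a common alternative, and the text does not state which was used.

```python
from collections import Counter


def filter_interactions(records, min_course=10, min_user=5, excluded=frozenset()):
    """records: list of (user_id, course_id) pairs. Returns the filtered list."""
    # 1) Drop courses flagged as semantically ambiguous (e.g., theses).
    kept = [(u, c) for u, c in records if c not in excluded]
    # 2) Drop courses with fewer than `min_course` interactions.
    course_counts = Counter(c for _, c in kept)
    kept = [(u, c) for u, c in kept if course_counts[c] >= min_course]
    # 3) Drop users with fewer than `min_user` remaining interactions.
    user_counts = Counter(u for u, _ in kept)
    return [(u, c) for u, c in kept if user_counts[u] >= min_user]


# Toy usage with small thresholds so the effect is visible.
records = [("u1", "c1"), ("u2", "c1"), ("u1", "c2"), ("u2", "c2"),
           ("u3", "c3"), ("u3", "c1"), ("u4", "thesis")]
filtered = filter_interactions(records, min_course=2, min_user=2, excluded={"thesis"})
```

In the toy run, the excluded course and the single-interaction course c3 are removed first, after which user u3 falls below the user threshold and is dropped as well.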

Table 1.

Statistics of the two datasets.

4.2. Evaluation Metrics

To evaluate the effectiveness of each model, we use five widely adopted metrics following previous research [3,55,56]: Precision@K, Recall@K, Hit Ratio (HR@K), Normalized Discounted Cumulative Gain (NDCG@K), and Mean Reciprocal Rank (MRR@K) of the Top-K recommendations. For all metrics, higher values indicate better performance.

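Precision@K measures the fraction of recommended items that are relevant, averaged over users:

$$\text{Precision@}K = \frac{1}{|\mathcal{U}|}\sum_{u \in \mathcal{U}} \frac{|R_u^K \cap T_u|}{K}$$

where $R_u^K$ is the Top-K recommendation list for user $u$, $T_u$ is the set of relevant items for $u$ in the test set, and $\mathcal{U}$ is the set of test users.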

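Recall@K is the proportion of relevant items that are retrieved in the Top-K list:

$$\text{Recall@}K = \frac{1}{|\mathcal{U}|}\sum_{u \in \mathcal{U}} \frac{|R_u^K \cap T_u|}{|T_u|}$$

where $R_u^K$ is the Top-K recommendation list for user $u$, $T_u$ is the set of relevant items for $u$ in the test set, and $\mathcal{U}$ is the set of test users. Recall answers the coverage question: how many of a user's relevant items does the list retrieve?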

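Hit Ratio (HR@K) is a recall-based metric that measures the percentage of ground-truth instances that are successfully recommended in the Top-K list:

$$\text{HR@}K = \frac{\sum_{u \in \mathcal{U}} |R_u^K \cap T_u|}{|GT|}$$

where $R_u^K$ and $T_u$ are the Top-K list and the relevant test items of user $u$, $\mathcal{U}$ is the set of test users, and $|GT|$ is the number of ground-truth instances, i.e., the total number of items actually of interest to all users in the test set.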

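Normalized Discounted Cumulative Gain (NDCG@K) measures ranking quality. It normalizes the Discounted Cumulative Gain (DCG) by the DCG of an ideal ranking:

$$\text{DCG@}K = \sum_{p=1}^{K} \frac{rel_p}{\log_2(p+1)}, \qquad \text{NDCG@}K = \frac{\text{DCG@}K}{\text{IDCG@}K}$$

where $p$ is the position of an item in the recommendation list, $rel_p \in \{0, 1\}$ indicates whether the item at position $p$ is relevant, and $\text{IDCG@}K$ is the DCG obtained by an ideal ranking of the relevant items. NDCG@K is averaged over all test users.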

Mean Reciprocal Rank (MRR@K) is the average, over users, of the reciprocal rank of the first relevant item in the Top-K recommendation list. It reflects how early the model retrieves the first relevant item. If no relevant item appears within the Top-K recommendations, the reciprocal rank is set to zero. The metric is computed as:

$$\text{MRR@}K = \frac{1}{|\mathcal{U}|}\sum_{u \in \mathcal{U}} \frac{1}{rank_u}$$

where $rank_u$ denotes the position of the first relevant item in the Top-K recommendation list for user $u$ (with $1/rank_u = 0$ if there is none), and $|\mathcal{U}|$ is the total number of users.
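The five metrics can be computed as in the plain-Python sketch below. It assumes binary relevance (a test item is either relevant or not) and that every test user has at least one relevant item. Precision, Recall, NDCG, and MRR are averaged over users, while HR pools hits over all ground-truth instances, as defined above. Function names and the toy data are illustrative.

```python
import math


def _hits(ranked, relevant, k):
    return len(set(ranked[:k]) & relevant)


def ndcg_at_k(ranked, relevant, k):
    # Position p (1-based) contributes 1 / log2(p + 1); enumerate is 0-based, so use idx + 2.
    dcg = sum(1.0 / math.log2(idx + 2)
              for idx, item in enumerate(ranked[:k]) if item in relevant)
    idcg = sum(1.0 / math.log2(idx + 2) for idx in range(min(len(relevant), k)))
    return dcg / idcg if idcg > 0 else 0.0


def reciprocal_rank_at_k(ranked, relevant, k):
    for pos, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            return 1.0 / pos
    return 0.0  # no relevant item in the Top-K list


def evaluate(rankings, ground_truth, k):
    """rankings: user -> ranked item list; ground_truth: user -> set of relevant test items."""
    users = list(ground_truth)
    n = len(users)
    total_hits = sum(_hits(rankings[u], ground_truth[u], k) for u in users)
    total_gt = sum(len(ground_truth[u]) for u in users)
    return {
        "Precision": sum(_hits(rankings[u], ground_truth[u], k) / k for u in users) / n,
        "Recall": sum(_hits(rankings[u], ground_truth[u], k) / len(ground_truth[u])
                      for u in users) / n,
        "HR": total_hits / total_gt,
        "NDCG": sum(ndcg_at_k(rankings[u], ground_truth[u], k) for u in users) / n,
        "MRR": sum(reciprocal_rank_at_k(rankings[u], ground_truth[u], k) for u in users) / n,
    }


rankings = {"u1": ["a", "b", "c"], "u2": ["d", "e", "f"]}
ground_truth = {"u1": {"b"}, "u2": {"f", "x"}}
metrics = evaluate(rankings, ground_truth, k=3)
```

For this toy input, Precision@3 = 1/3, Recall@3 = 0.75, HR@3 = 2/3, and MRR@3 = 5/12. Note that HR and the user-averaged Recall differ whenever users have different numbers of relevant items.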

4.3. Baselines

To evaluate the effectiveness of our proposed framework for course recommendation, we benchmark it against a set of representative baseline models. Each baseline is evaluated both in its original form and with our LLM-generated concept embeddings integrated, enabling a clear comparison that isolates the contribution of our side-information fusion strategies. We select one representative algorithm from each of six major families of recommendation approaches to ensure a diverse and comprehensive evaluation. The baselines we compare against and extend are:

- Collaborative Filtering: Item-based KNN [57], a classic memory-based collaborative filtering method that scores candidate courses for a user using item–item similarities computed from interaction data.

- Matrix Factorization: MF [58], which learns latent representations of users and items by decomposing the user–item interaction matrix.

- Factorization Machines: LightFM [59], a hybrid recommender system that combines collaborative filtering with content-based techniques. This baseline and MF differ in their loss functions.

- Deep Learning-based Methods: Neural Matrix Factorization (NeuMF) [60], which combines generalized matrix factorization (GMF) and a multilayer perceptron (MLP) to jointly model linear and nonlinear user–item interactions, yielding more expressive preference learning.

- Graph Neural Network-based Models: LightGCN [61], which propagates user and item embeddings over a bipartite interaction graph using simplified graph convolutions to enhance collaborative filtering.

- Knowledge-enhanced Models: KEAM [7], which integrates course knowledge graphs into an autoencoder-based architecture whose intermediate layer is instantiated with concept nodes and course–concept links. While this design yields a structured representation, it must not be interpreted as an explanation mechanism: human-readable nodes are a necessary but by no means sufficient condition for explainability, and concept-layer activations do not represent a faithful reasoning process unless explicitly evaluated. In this work, KEAM is treated purely as a conventional recommender that consumes concept embeddings, and no form of interpretability is attributed to its architecture or outputs.

These baselines span a diverse set of modeling paradigms, covering traditional and deep learning-based approaches as well as models that utilize structured knowledge. For each baseline, we implement two versions: a vanilla model and a version augmented with LLM-generated concept embeddings through our proposed fusion strategies. Importantly, the core architecture of each model remains unchanged; concept information enters only through the fusion points defined in Section 3.3 (course representations, input features, or similarity and graph structure). This design enables a fair, architecture-agnostic evaluation of our framework and demonstrates its flexibility across a wide range of recommendation methods.

4.4. Training Details

All models were implemented using PyTorch 2.1.2 and trained on a single NVIDIA RTX 4060 Ti GPU. For each dataset, we randomly split the user–course interaction records into training, validation, and test sets, following an 80/20 ratio. We ensured that each user retained at least one interaction in either the training or test set to guarantee valid evaluation. Unless otherwise noted, all models use an embedding size of 64 for both users and items. To ensure fair comparisons, we performed hyperparameter tuning for each model and selected the best configuration on the validation set. The optimization was conducted using either the Adam or the SGD optimizer, with the better-performing choice adopted for each baseline. The learning rate and other hyperparameters were selected through grid search. Most models were trained with the Bayesian Personalized Ranking (BPR) loss. For each positive interaction, we sampled one negative course that the user had not interacted with. An exception is the matrix factorization model, which was trained using the mean squared error (MSE) loss with regularization, consistent with its original formulation. Training was run for up to 50 epochs, with early stopping based on the validation hit ratio (HR). The batch size was set to 2048 across all models.
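The BPR objective with one sampled negative per positive interaction, as described above, can be sketched as follows. The per-triple loss is -ln σ(s_ui - s_uj), written here in a numerically stable softplus form. The rejection sampler, the toy dot-product scorer, and all names are assumptions for illustration; L2 regularization and the optimizer step are omitted.

```python
import math
import random


def bpr_loss(score_pos, score_neg):
    """-ln(sigmoid(x)) with x = score_pos - score_neg, computed stably."""
    x = score_pos - score_neg
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def sample_triples(interactions, n_items, rng):
    """For each (user, positive item), draw one item the user has not interacted with."""
    user_pos = {}
    for u, i in interactions:
        user_pos.setdefault(u, set()).add(i)
    triples = []
    for u, i in interactions:
        if len(user_pos[u]) >= n_items:
            raise ValueError(f"user {u} has no unobserved items to sample")
        j = rng.randrange(n_items)
        while j in user_pos[u]:  # rejection sampling
            j = rng.randrange(n_items)
        triples.append((u, i, j))
    return triples


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Toy usage: 2 users, 5 courses, random 4-d embeddings, MF-style dot-product scores.
rng = random.Random(42)
interactions = [(0, 1), (0, 3), (1, 0), (1, 2)]
user_emb = [[rng.gauss(0, 0.1) for _ in range(4)] for _ in range(2)]
item_emb = [[rng.gauss(0, 0.1) for _ in range(4)] for _ in range(5)]
triples = sample_triples(interactions, n_items=5, rng=rng)
loss = sum(bpr_loss(dot(user_emb[u], item_emb[i]), dot(user_emb[u], item_emb[j]))
           for u, i, j in triples) / len(triples)
```

With near-zero initial embeddings the mean loss starts close to ln 2, and training pushes observed courses to score above the sampled unobserved ones.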

To incorporate external semantic knowledge, we used concept-level course embeddings generated by the GPT family of large language models. In particular, the majority of experiments relied on GPT-4.1 to extract and represent course concepts in a high-dimensional semantic space. The resulting 3072-dimensional embeddings were projected into the same 64-dimensional latent space as ID-based embeddings via a learnable transformation layer. This projection enabled the semantic information to be seamlessly fused into various recommendation models under different fusion strategies. All models were trained under a unified protocol to isolate the effects of fusion strategies from other confounding factors.
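The projection from the 3072-dimensional concept embedding space to the 64-dimensional latent space is a learnable linear map; in PyTorch this would typically be `torch.nn.Linear(3072, 64)`. The dependency-free sketch below shows only the forward pass. The Xavier-uniform initialization is an assumption (the text does not specify one), and the input is a random stand-in rather than a real LLM embedding.

```python
import math
import random


class LinearProjection:
    """y = W x + b, mapping in_dim -> out_dim (trained jointly with the recommender in practice)."""

    def __init__(self, in_dim, out_dim, rng):
        limit = math.sqrt(6.0 / (in_dim + out_dim))  # Xavier/Glorot uniform bound
        self.W = [[rng.uniform(-limit, limit) for _ in range(in_dim)]
                  for _ in range(out_dim)]
        self.b = [0.0] * out_dim

    def __call__(self, x):
        if len(x) != len(self.W[0]):
            raise ValueError("input dimension mismatch")
        return [sum(w * xi for w, xi in zip(row, x)) + bi
                for row, bi in zip(self.W, self.b)]


rng = random.Random(0)
proj = LinearProjection(3072, 64, rng)
concept_embedding = [rng.gauss(0.0, 1.0) for _ in range(3072)]  # stand-in for an LLM embedding
course_vec = proj(concept_embedding)  # 64-d, same size as the ID-based embeddings
```

Once projected, the concept vector has the same size as the ID-based course embedding, so it can be fed to any of the fusion strategies in Section 3.3.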

4.5. Model-Specific Integration Settings

We apply the proposed concept-level integration framework to a set of representative recommendation models, each belonging to a different architectural family. For each model, we adopt one or more fusion strategies defined in Section 3.3, including representation-level, concatenation-based, and structure-level fusion. Table 2 summarizes the specific fusion variants adopted for each baseline model. For the Collaborative Filtering (ItemKNN) model, we adopt the structure-level fusion strategy by enhancing the similarity matrix with concept-aware semantic signals. Specifically, we compute a hybrid similarity score as a weighted combination of interaction-based similarity and concept-based similarity, as described in Equation (2). The mixing coefficient is empirically set to 0.3, 0.5, or 0.7, and the best-performing value is selected on the validation set. In the Matrix Factorization (MF) model, we implement both representation-level and concatenation-based fusion. Representation-level fusion includes linear, gated, and attention-based mechanisms, each producing a fused course representation before prediction. Additionally, we include a concatenation variant where the concept embedding is appended to the ID-based embeddings and processed through a neural scoring function. For the Factorization Machines (FM) model, we focus on concatenation-based fusion, aligning with its original formulation that emphasizes direct integration of side information. However, given the high dimensionality of LLM-generated concept embeddings, we employ dimensionality reduction via projection and explore several fusion variants, including one-hot encoding, cluster-level categorical features, signal-separated channels, and DNN-based transformations. These allow the model to ingest semantic features while preserving parameter efficiency. 
The NeuMF model mirrors the fusion design of MF and includes all four representation-level and concatenation-based variants: linear, gated, attention-based, and direct concatenation. The fused input is then propagated through multilayer perceptron layers to learn nonlinear interactions. In LightGCN, we apply all three fusion strategies. Representation-level fusion includes linear, gated, and attention-based mechanisms for embedding refinement. Concatenation-based fusion appends concept embeddings to the ID-based inputs before graph convolution. Structure-level fusion augments the course–course graph with additional edges between conceptually similar items whose concept similarity exceeds a cosine threshold of 0.85. Finally, for KEAM, which builds student profiles from knowledge graphs, we replace the original domain-specific knowledge base with concept graphs constructed from LLM-generated course concepts. This substitution enables KEAM to operate on datasets lacking predefined concept structures (e.g., XuetangX). However, it also introduces additional hallucination risks, since both the LLM-generated concepts and the automatically constructed graphs may contain semantic noise or structural inconsistencies. We therefore treat the resulting concept layer as a flexible semantic enrichment mechanism rather than a validated knowledge graph or an interpretable reasoning substrate. The mitigation strategies outlined in the section Hallucination Risks and Mitigation Strategies describe how these risks can be moderated in practice. The original KEAM model relies heavily on rich course annotations from the dataset, a limitation common to most knowledge graph-based recommenders. By using LLM-generated concepts, we alleviate this dependency and provide a scalable alternative that retains semantic depth while improving generalizability in sparse or low-resource settings.
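For the ItemKNN variant, selecting the mixing coefficient from {0.3, 0.5, 0.7} on the validation set can be sketched as below. The scoring rule (sum of hybrid similarities to the user's training courses), the use of validation HR as the selection criterion (mirroring the early-stopping metric in Section 4.4), the assumption that `alpha` weights the interaction similarity, and the toy matrices are all illustrative assumptions.

```python
def mix(S_int, S_sem, alpha):
    """Hybrid similarity: alpha * interaction + (1 - alpha) * concept (assumed weighting)."""
    return [[alpha * a + (1.0 - alpha) * b for a, b in zip(ra, rb)]
            for ra, rb in zip(S_int, S_sem)]


def recommend(train_items, S, k):
    """Item-based KNN scoring: sum of similarities to the user's training courses."""
    scores = {j: sum(S[i][j] for i in train_items)
              for j in range(len(S)) if j not in train_items}
    return sorted(scores, key=lambda j: (-scores[j], j))[:k]


def hit_ratio(train, valid, S, k):
    hits = sum(len(set(recommend(train[u], S, k)) & valid[u]) for u in valid)
    return hits / sum(len(v) for v in valid.values())


def select_alpha(S_int, S_sem, train, valid, k, grid=(0.3, 0.5, 0.7)):
    """Return the grid value with the best validation HR (the first one on ties)."""
    return max(grid, key=lambda a: hit_ratio(train, valid, mix(S_int, S_sem, a), k))


S_int = [[0.0, 0.2, 0.6], [0.2, 0.0, 0.1], [0.6, 0.1, 0.0]]
S_sem = [[0.0, 0.9, 0.1], [0.9, 0.0, 0.2], [0.1, 0.2, 0.0]]
train = {"u1": {0}}
valid = {"u1": {1}}
best_alpha = select_alpha(S_int, S_sem, train, valid, k=1)
```

In this contrived example the held-out course is close to the training course only in concept space, so the heavily interaction-weighted setting (0.7) misses it; the toy data is not indicative of the paper's results.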

Table 2.

Summary of side information integration strategies applied to each baseline model.

5. Results

5.1. Overall Performance Comparison

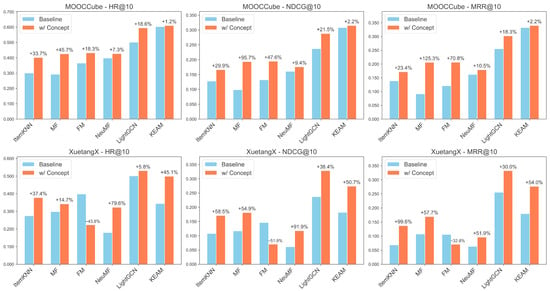

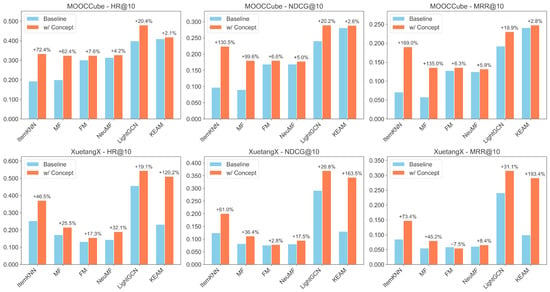

To evaluate the effectiveness of our concept-level augmentation framework, we report the top-performing fusion results for each baseline model across both the MOOCCube and XuetangX datasets. Table 3, Table 4, Table 5 and Table 6 comprehensively summarize the performance comparison of standard baselines and their enhanced versions under different top-K settings (K = 5, 10, 15, 20) on both datasets. Additionally, Figure 4 visualizes the relative gains in HR@10, NDCG@10, and MRR@10 metrics, highlighting the consistent improvements brought by integrating LLM-generated concepts.

Table 3.

Performance of Recommendation Models on MOOCCube Dataset at K = 5 and K = 10.

Table 4.

Performance of Recommendation Models on XuetangX Dataset at K = 5 and K = 10.

Table 5.

Performance of Recommendation Models on MOOCCube Dataset at K = 15 and K = 20.

Table 6.

Performance of Recommendation Models on XuetangX Dataset at K = 15 and K = 20.

Figure 4.

Performance comparison of baseline and concept-augmented models on the MOOCCube and XuetangX datasets. The Y-axis shows the absolute metric values (HR@10, NDCG@10, and MRR@10). The improvement percentages above each pair indicate the relative gains over the baseline.

On the MOOCCube dataset, all baseline models benefit from incorporating the generated concept information, with ItemKNN, MF, and LightGCN showing the largest gains. ItemKNN improves HR@10, NDCG@10, and MRR@10 by +33.7%, +29.9%, and +23.4%, respectively, showing that simple collaborative filtering approaches benefit substantially from enriched semantic signals. MF gains +45.7% in HR@10, +95.7% in NDCG@10, and +125.3% in MRR@10, highlighting how much latent factor-based methods benefit from external semantic enrichment. The improvements in LightGCN (+18.6% in HR@10, +21.5% in NDCG@10, and +18.3% in MRR@10) indicate that even models that already exploit graph structure can integrate external concept information to improve prediction quality.

NeuMF and KEAM also show meaningful gains, supporting the broad applicability of our concept-level augmentation approach. NeuMF, a neural network-based collaborative filtering model, improves HR@10 by +7.3%, NDCG@10 by +9.4%, and MRR@10 by +10.5%. This suggests that deep neural models, which already capture complex nonlinear interactions, can further leverage semantic signals from the generated concepts. KEAM, as a knowledge-enhanced model, already utilizes external knowledge through concept integration; even so, it shows measurable improvements (+1.2% in HR@10 and +2.2% in both NDCG@10 and MRR@10). This indicates that LLM-generated concepts provide complementary signals beyond manually annotated metadata, even when rich information is already available. We note that our evaluation focuses on recommendation performance rather than on interpretability.

On the XuetangX dataset, integrating the LLM-generated concept embeddings improves performance for most baseline models, despite the dataset's limited metadata (only course titles are available). Pronounced relative improvements are observed both in traditional collaborative filtering methods, such as ItemKNN and MF, and in more advanced models, such as LightGCN and KEAM. At HR@10, ItemKNN improves by +37.4%, MF by +14.7%, LightGCN by +5.8%, and KEAM by +45.1%. The improvements at NDCG@10 are even larger: ItemKNN (+58.5%), MF (+54.9%), LightGCN (+38.4%), and KEAM (+50.7%). MRR@10 shows similarly robust gains, further supporting the general effectiveness of our concept-level augmentation strategy.

However, the FM model's performance decreases on all evaluated metrics. We attribute this negative outcome primarily to FM's integration strategy, which relies solely on concatenation-based fusion. Given XuetangX's inherently sparse context (course titles only), directly concatenating multiple concept embeddings may introduce excessive semantic noise and redundancy, impairing FM's ability to model interactions. In contrast, more structured or flexible models, such as LightGCN with its graph-based embeddings and KEAM with its knowledge graph-based representations, are more resilient to noise and better able to exploit the semantic richness of the generated concepts.

The uplift in KEAM is particularly noteworthy: although KEAM is already designed to incorporate structured external knowledge, it still benefits substantially from our concept generation approach on XuetangX. There, KEAM initially lacks the metadata needed to construct a high-quality knowledge graph, which leads to weak baseline performance. After LLM-generated concepts are introduced, KEAM achieves relative improvements of +45.1%, +50.7%, and +54.0% in HR@10, NDCG@10, and MRR@10, respectively. This indicates that our framework helps bridge the semantic gap and improves recommendation performance even when existing metadata is sparse. These results also highlight a critical insight: the choice of integration strategy matters greatly, especially in resource-limited datasets such as XuetangX. Although our augmentation framework generally offers meaningful semantic enrichment, carefully matching the fusion method to the model architecture is essential for realizing its gains.

Another advantage of our framework is its practical efficiency and scalability. Because the LLM-generated concepts are produced once during data preparation, their generation cost is incurred only once, and they are fully reusable across different recommendation models and future runs. Moreover, our integration approach does not require redesigning the underlying model architectures, which makes it easy to adopt in diverse real-world scenarios. This low generation cost and high reusability, together with largely consistent performance gains, make our approach an attractive augmentation strategy for large-scale educational recommendation systems. In summary, these experimental results support both the semantic quality and relevance of the generated concept features and the effectiveness, flexibility, and practical utility of our integration strategies across diverse modeling approaches and metadata availability conditions.

5.2. Effectiveness of Different Integration Strategies

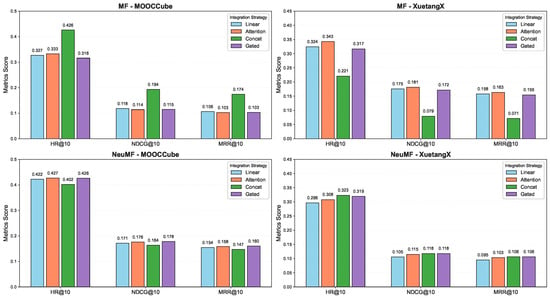

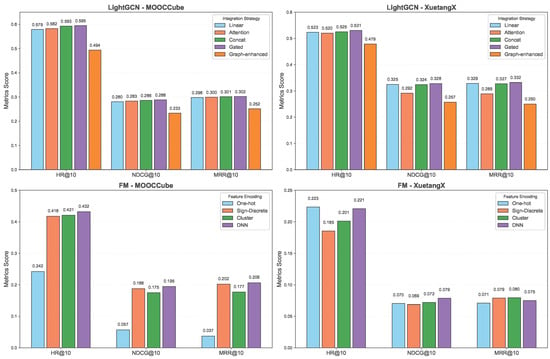

To investigate the effect of integrating LLM-generated course concepts in more depth, we systematically evaluated various integration strategies across four representative recommendation frameworks: Matrix Factorization (MF), Factorization Machines (FM), Neural Matrix Factorization (NeuMF), and Light Graph Convolutional Networks (LightGCN). Specifically, we examined four general strategies, namely Linear, Attention-based, Concatenation (Concat), and Gated fusion, alongside model-specific encodings for FM (One-hot, Sign-Discrete, Cluster, DNN) and a Graph-enhanced integration method for LightGCN. The results at K = 10 are shown in Figure 5 and Figure 6.

Figure 5.

Performance of integration strategies applied to MF and NeuMF models on the MOOCCube and XuetangX datasets, evaluated at K = 10 using HR@10, NDCG@10, and MRR@10.

Figure 6.

Performance of integration strategies applied to FM and LightGCN models on the MOOCCube and XuetangX datasets, evaluated at K = 10 using HR@10, NDCG@10, and MRR@10.

From the integration strategy perspective, clear performance patterns emerged. For simpler matrix-based models such as MF, the Concatenation strategy was particularly effective on the MOOCCube dataset, achieving the highest HR@10 (0.426), NDCG@10 (0.194), and MRR@10 (0.174). This indicates that directly augmenting item embeddings with rich semantic information substantially enhances their representational capacity. Conversely, Attention-based fusion gave stronger results on the XuetangX dataset (HR@10 = 0.343), likely because its adaptive weighting mechanism handles limited semantic context well. Linear and Gated fusions underperformed in the simpler MF architecture, suggesting that they do not adequately model complex semantic interactions in this setting. For FM-based models, neural network-based encoding (FM + DNN), which captures deeper semantic interactions, notably outperformed simpler encodings such as One-hot or Cluster-based methods. On MOOCCube, FM + DNN achieved the best results among the FM variants, with HR@10 (0.432), NDCG@10 (0.195), and MRR@10 (0.206). A similar but smaller advantage emerged on XuetangX, reaffirming that capturing nonlinear semantic interactions through neural architectures notably enhances the FM framework, especially when semantic signals are relatively rich. For more complex architectures such as NeuMF and LightGCN, adaptive integration strategies consistently performed best. NeuMF showed robust gains with Attention and Gated fusion, with Gated fusion achieving a competitive HR@10 (0.426) and the highest MRR@10 (0.160) on MOOCCube. Likewise, LightGCN performed best with Gated fusion (HR@10 = 0.595 on MOOCCube; HR@10 = 0.531 on XuetangX).

Notably, the Graph-enhanced strategy, which integrates concepts directly into the underlying graph structure, markedly underperformed (e.g., HR@10 = 0.494 on MOOCCube). This unexpected finding suggests that directly modifying the graph structure may introduce semantic redundancy or noise that degrades graph embedding quality.

From the dataset perspective, we focused on data sparsity, a common issue in recommendation scenarios. The two datasets differ in the metadata available for concept generation: MOOCCube provides titles, descriptions, and annotated concepts, whereas XuetangX provides only course titles. On MOOCCube, clear performance improvements were observed. For instance, MF's HR@10 improved from a baseline of 0.292 to 0.426 with Concatenation fusion, demonstrating the efficacy of automatically generated concepts in alleviating sparsity. Complex models such as LightGCN also benefited considerably from semantic augmentation, indicating the general robustness of adaptive integration methods. XuetangX posed greater sparsity challenges, since only course titles are available as input for concept generation. Despite this limitation, performance gains were observed, particularly with dynamic fusion strategies; for example, LightGCN's Gated fusion improved HR@10 from a baseline of approximately 0.501 to 0.531. These results underscore the suitability of adaptive embedding fusion methods for exploiting sparse semantic signals.
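As a worked check of how the relative gains reported in Section 5.1 relate to the absolute values above, the relative improvement of an augmented model over its baseline (presumably the quantity shown above each bar pair in Figure 4) is computed as follows, using MF's HR@10 on MOOCCube:

```latex
\Delta_{\text{rel}}
  = \frac{m_{\text{aug}} - m_{\text{base}}}{m_{\text{base}}} \times 100\%
  = \frac{0.426 - 0.292}{0.292} \times 100\%
  \approx 45.9\%
```

The small difference from the reported +45.7% is presumably due to rounding of the three-decimal values.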

From the baseline methodology perspective, we compared our enhanced models against their original counterparts without semantic embeddings. In most settings, models enhanced with LLM-generated concepts outperformed their baselines across multiple metrics. The MF and FM models on MOOCCube showed considerable improvements, underscoring the benefits of semantic enrichment. On XuetangX, dynamic fusion methods (Attention and Gated) also improved substantially over baseline performance, supporting the effectiveness of semantic integration even under severely sparse conditions.

These findings offer several insights. First, simple models such as MF benefit substantially from straightforward concatenation of semantic embeddings, which mitigates their sparsity-related limitations. Second, FM-based frameworks benefit most from deeper, nonlinear semantic interactions, highlighting the value of neural-based encodings. Third, adaptive fusion methods such as Gated and Attention fusion consistently outperform simpler integration approaches in complex architectures such as NeuMF and LightGCN, emphasizing the importance of tailoring the integration method to model complexity. Moreover, the interplay between dataset semantic richness and integration strategy effectiveness was particularly notable: richer contexts such as MOOCCube amplify the effect of semantic embedding integration, while sparse datasets such as XuetangX illustrate the necessity and robustness of adaptive methods. A counterintuitive result was that directly enhancing the graph structure with semantic embeddings (Graph-enhanced fusion) underperformed, pointing to potential semantic redundancy and noise and indicating a need for carefully controlled semantic integration. In summary, our findings provide empirical evidence that integrating LLM-generated semantic embeddings enhances recommendation effectiveness across diverse models and dataset contexts. These insights can inform further work on semantic feature integration and offer practical guidelines for deploying effective recommendation systems in educational settings.

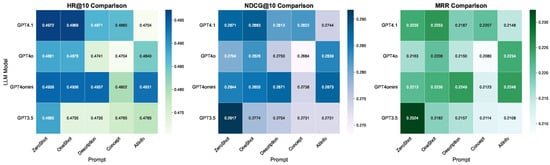

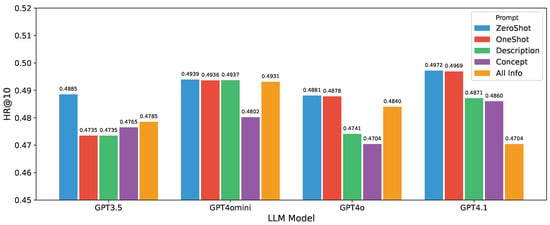

5.3. Influence of Different LLMs

To investigate how the choice of large language model (LLM) and prompt configuration for concept generation affects recommendation, we systematically evaluated 20 LLM + prompt combinations. We paired four LLMs (GPT3.5, GPT4omini, GPT4o, and GPT4.1) with five prompting strategies: ZeroShot, OneShot, Description, Concept, and All Information (AllInfo). These strategies correspond to the P1–P5 templates proposed in prior work [12]. They differ in the amount and type of context provided to the LLM, which lets us assess how incremental context affects generation. ZeroShot uses only the course title, with no examples or additional input. OneShot adds a single in-context example. Description adds the course description. Concept adds the original human-annotated concepts. All Information combines all available metadata into the most complete context. All LLMs were queried with the same task instructions and format templates (Section 3.2), so that generation quality could be compared fairly.

Our goal in this section is not to benchmark the LLMs themselves. Instead, we investigate whether LLM-generated concepts can serve as effective side information and how concept quality affects recommendation performance. To control confounding factors such as tokenizer differences, prompt syntax, and output formatting, all four models come from the same family and span a clear range of reasoning capability and cost. This design helps isolate whether concept quality, rather than architectural or vendor differences, drives the observed gains. Using models from a single provider also ensures consistent prompting and reproducibility under a unified API environment.
Future work will extend this analysis to open-source and domain-specific LLMs (e.g., Llama 3, Mistral, Qwen) to assess cross-model robustness and generalizability.

All experiments in this section were conducted on the MOOCCube dataset. We selected 100 target courses and generated course-level concepts for them with each of the 20 LLM + prompt configurations. To create a realistic evaluation setting, we retained only users who had interacted with at least one of the selected courses. For each user, one interaction was randomly held out for testing. This yielded a test set of 13,153 users and 30,969 interactions. After concept generation, we encoded each course's concept set with the same semantic embedding process described in Section 3.2.2, obtaining a 3072-dimensional embedding vector per course. These embeddings were then projected into a shared latent space and injected into LightGCN through the fusion strategies introduced earlier. This setup lets us directly assess how the choice of LLM and prompt affects both the semantic quality of the generated concepts and the resulting recommendation performance.
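The 4 × 5 experimental grid described above can be sketched as follows. The sketch only assembles the prompt context for each configuration, showing how each prompt type adds course metadata on top of the title. The example text, instruction wording, field names, and helper names are hypothetical placeholders, not the actual templates from Section 3.2. The call to the LLM itself is deliberately omitted.

```python
from itertools import product

LLMS = ["GPT3.5", "GPT4omini", "GPT4o", "GPT4.1"]
PROMPT_TYPES = ["ZeroShot", "OneShot", "Description", "Concept", "AllInfo"]

# Hypothetical in-context example and instruction; the actual templates are in Section 3.2.
EXAMPLE = "Course: Linear Algebra -> Concepts: vector space; matrix; eigenvalue"
INSTRUCTION = "List the key concepts covered by this course."


def build_prompt(prompt_type, course):
    """Assemble the context for one prompt type; richer types add more course metadata."""
    parts = [f"Course title: {course['title']}"]
    if prompt_type == "OneShot":
        parts.insert(0, f"Example: {EXAMPLE}")
    elif prompt_type == "Description":
        parts.append(f"Description: {course['description']}")
    elif prompt_type == "Concept":
        parts.append(f"Annotated concepts: {', '.join(course['concepts'])}")
    elif prompt_type == "AllInfo":
        parts.append(f"Description: {course['description']}")
        parts.append(f"Annotated concepts: {', '.join(course['concepts'])}")
    elif prompt_type != "ZeroShot":
        raise ValueError(f"unknown prompt type: {prompt_type}")
    parts.append(INSTRUCTION)
    return "\n".join(parts)


def experiment_grid(course):
    """Yield (llm, prompt_type, prompt) for all 4 x 5 = 20 configurations."""
    for llm, prompt_type in product(LLMS, PROMPT_TYPES):
        yield llm, prompt_type, build_prompt(prompt_type, course)


course = {
    "title": "Data Structures",
    "description": "Stacks, queues, trees and their applications.",
    "concepts": ["stack", "queue", "binary tree"],
}
configs = list(experiment_grid(course))
print(len(configs))   # 20
print(configs[0][2])  # GPT3.5 + ZeroShot: title and instruction only
```

Keeping the instruction identical across all 20 configurations mirrors the fairness constraint stated above: only the model and the amount of course context vary.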

To understand how LLM and prompt choices affect downstream recommendation quality, we analyze the results from three perspectives: (1) the influence of the LLM, (2) the impact of prompt design, and (3) the overall efficacy of integrating LLM-generated concepts. This lets us evaluate not only raw performance differences but also the underlying patterns and their implications for designing LLM-enhanced educational recommendation systems.

The choice of LLM strongly influences downstream recommendation performance. As shown in Figure 7 and Figure 8, GPT4.1 achieves the best results on all evaluation metrics, with the highest Recall@10 of 0.4972 under the ZeroShot prompt. GPT4omini and GPT4o follow, with best Recall@10 scores of 0.4939 and 0.4881, respectively, while GPT3.5 peaks at 0.4885. The overall ranking of the LLMs remains stable across metrics, indicating that this trend is robust. These findings suggest that more advanced LLMs, particularly GPT4.1, are better at extracting meaningful and generalizable concepts from limited course information, which translates into better user–item matching. Notably, GPT4omini, a lightweight variant, is highly competitive. This indicates that model scale is not the sole determinant of utility and opens up opportunities for applying smaller models in resource-constrained educational settings.
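The metrics reported in this section (HR@10, NDCG@10, MRR@10, and Recall@10) are not redefined here. A minimal sketch of the standard leave-one-out definitions follows, assuming a single held-out course per user. Under that assumption Recall@K coincides with HR@K, which would be consistent with the same 0.4972 score being reported under both names. The function and argument names are hypothetical.

```python
import math


def metrics_at_k(ranked_lists, held_out, k=10):
    """Leave-one-out HR@K, NDCG@K and MRR@K, averaged over users.

    ranked_lists: dict mapping user -> list of course ids, best first
    held_out:     dict mapping user -> the single held-out test course
    """
    hr = ndcg = mrr = 0.0
    for user, target in held_out.items():
        top_k = ranked_lists[user][:k]
        if target in top_k:
            rank = top_k.index(target) + 1        # 1-based position of the hit
            hr += 1.0
            ndcg += 1.0 / math.log2(rank + 1)     # ideal DCG is 1 with one relevant item
            mrr += 1.0 / rank
    n = len(held_out)
    return {"HR": hr / n, "NDCG": ndcg / n, "MRR": mrr / n}


ranked = {"u1": ["c1", "c2", "c3"], "u2": ["c3", "c1", "c2"], "u3": ["c2", "c3", "c1"]}
held = {"u1": "c1", "u2": "c1", "u3": "c9"}
print(metrics_at_k(ranked, held, k=3))
```

In the toy example, two of three users hit (HR = 2/3). Their reciprocal ranks of 1 and 1/2 average to an MRR of 0.5 over all three users. NDCG additionally discounts the second-position hit by 1/log2(3).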

Figure 7.

Heatmap visualization of recommendation performance on the MOOCCube dataset, showing HR@10, NDCG@10, and MRR@10 scores for combinations of four LLMs (GPT3.5, GPT4omini, GPT4o, GPT4.1) and five prompt types (ZeroShot, OneShot, Description, Concept, AllInfo).

Figure 8.

Performance comparison of different LLMs and prompt configurations on the MOOCCube dataset.

Prompt design also plays a critical role in recommendation quality. Averaged across all LLMs, the HR@10 scores per prompt type are: ZeroShot (0.4919), OneShot (0.4880), Description (0.4821), Concept (0.4783), and All Information (0.4815). Surprisingly, the minimal-context prompts (ZeroShot and OneShot) outperform the richer-context prompts (Description, Concept, and All Information) for nearly all LLMs. The trend holds for NDCG@10 and MRR@10 as well, indicating that simpler prompts often yield more effective concepts for downstream recommendation. One possible explanation is that excessive or heterogeneous input, such as full descriptions or noisy human-annotated concepts, introduces redundancy, ambiguity, or distracting signals. Conversely, a concise prompt such as the course title alone may force the LLM to rely on its pre-trained knowledge and abstraction capabilities, producing more transferable and generalized concepts. The best configuration overall, GPT4.1 with the ZeroShot prompt, reaches an HR@10 of 0.4972, and ZeroShot is likewise the best-performing prompt for GPT4o and GPT4omini.

At first glance this seems counterintuitive, since one would expect additional context to help the model generate better outputs. However, the observation is consistent with prior human evaluations in educational scenarios [12], where raters also preferred concepts generated with simpler prompts (e.g., P1, analogous to ZeroShot). Our results echo and extend this insight: less is more, especially when the downstream task requires semantic abstraction and transferability rather than memorization or domain-specific fit. This further suggests that human-judged semantic quality and machine-measured recommendation utility respond similarly to prompting choices, reinforcing the value of prompt minimalism.

Importantly, all 20 LLM + prompt configurations outperform the no-concept baseline, demonstrating that LLM-generated concepts are beneficial across the board. Even the lowest-performing combination, GPT4.1 with the All Information prompt, achieves a Recall@10 of 0.4704, which is still substantially above the LightGCN baseline without concept information. This supports our core hypothesis: LLM-generated concepts, even when prompted with minimal input, can serve as effective side information for personalized recommendation. The consistent lift across models and prompts highlights the robustness of the approach and its potential to scale to other datasets and domains.

In summary, both LLM selection and prompt design strongly influence the quality of the generated concepts and their downstream utility. More capable LLMs such as GPT4.1 tend to yield better results, and prompts with minimal context (e.g., ZeroShot and OneShot) often outperform more elaborate ones. The fact that ZeroShot configurations perform best, mirroring the human preference trends observed in prior work, highlights the non-trivial dynamics of prompting. Finally, every tested combination offers a tangible gain over the baseline, validating the practical value of LLM-generated concepts for personalized course recommendation.

5.4. Cold-Start Scenario Recommendation

Cold-start is one of the core challenges in recommender systems: models struggle to make accurate predictions when users lack sufficient interaction history. In this study, we specifically address the user cold-start problem and investigate whether integrating GPT-generated course concepts can alleviate it. We consider two levels of cold-start. On MOOCCube, we select users with no more than 5 historical interactions (173,249 users). On XuetangX, we select users with no more than 3 interactions (31,269 users). For each user, we hold out one interaction as the test instance. This setup allows us to evaluate the robustness of our framework under both moderate and extreme sparsity. For each baseline, we adopt the best-performing concept fusion strategy identified in the previous experiments.

The cold-start results are presented in Table 7 and Table 8, and Figure 9 illustrates the relative improvements of the recommendation models after incorporating GPT-generated course concepts. We analyze these results from three perspectives: differences between the two datasets, differences across model types, and a comparison with the general (non-cold-start) setting reported in Section 5.1. For convenient comparison with Section 5.1, Table 9 and Table 10 summarize the top-10 results (HR@10, NDCG@10, and MRR@10) of all six baseline models under both the general and cold-start settings. These summary tables give a concise view of how concept integration influences performance at different levels of data sparsity.
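The cold-start protocol above (select users at or below a history threshold, then hold out one random interaction each) can be sketched as follows. The thresholds come from the text. The function name, the seed, and the decision to keep warm users' interactions in the training set are assumptions for illustration; this section does not say how non-cold-start users are handled.

```python
import random
from collections import defaultdict

# Thresholds stated in the text: at most 5 interactions on MOOCCube, at most 3 on XuetangX.
MAX_HISTORY = {"MOOCCube": 5, "XuetangX": 3}


def cold_start_split(interactions, max_history, seed=0):
    """Hold out one random interaction for every user with <= max_history interactions.

    interactions: iterable of (user, course) pairs
    Returns (train, test): train is a list of pairs, test maps cold-start user -> course.
    """
    by_user = defaultdict(list)
    for user, course in interactions:
        by_user[user].append(course)

    rng = random.Random(seed)
    train, test = [], {}
    for user, courses in by_user.items():
        if len(courses) > max_history:
            # Assumption: warm users remain in training to supply collaborative signal.
            train.extend((user, c) for c in courses)
            continue
        held = rng.randrange(len(courses))
        test[user] = courses[held]
        train.extend((user, c) for i, c in enumerate(courses) if i != held)
    return train, test


data = [("u1", "a"), ("u1", "b"), ("u2", "a"), ("u2", "b"), ("u2", "c"), ("u2", "d")]
train, test = cold_start_split(data, MAX_HISTORY["XuetangX"])
print(sorted(test))   # ['u1']: only u1 has <= 3 interactions
```

A user with a single interaction keeps no training history after the hold-out. This is the extreme end of the sparsity range that the concept embeddings are meant to compensate for.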

Table 7.

Cold-Start Recommendation Performance on MOOCCube Dataset at K = 5, 10, 15, and 20.

Table 8.

Cold-Start Recommendation Performance on XuetangX Dataset at K = 5, 10, 15, and 20.

Figure 9.

Performance comparison of baseline and concept-augmented models under the cold-start setting at K = 10. The bars show absolute performance values, while the numbers above indicate the relative improvements (%) compared with the baseline.

Table 9.

Comparison of Top-10 Recommendation Performance between General and Cold-Start Settings on XuetangX Dataset.

Table 10.

Comparison of Top-10 Recommendation Performance between General and Cold-Start Settings on MOOCCube Dataset.

First, we examine how the two datasets differ in cold-start severity and observed gains. MOOCCube represents a moderate cold-start scenario, with users limited to ≤5 interactions, whereas XuetangX is more extreme, with user histories limited to ≤3 interactions. Our framework adapts well to both. On MOOCCube, all models benefit from concept integration, with consistent improvements across metrics and top-K values. Models such as MF and LightGCN achieve substantial gains; for example, MF improves HR@10 from 0.1996 to 0.3242 and MRR@10 from 0.0577 to 0.1356. On the more challenging XuetangX dataset, the improvements remain robust, especially for models that can flexibly leverage semantic information. For instance, LightGCN increases HR@10 from 0.4565 to 0.5437, and KEAM jumps from 0.2317 to 0.5103. These findings highlight the generalization ability of our framework even when only minimal contextual information (e.g., course titles) is available.

Second, we analyze how different model types benefit from LLM-generated concepts in the cold-start scenario. Traditional collaborative filtering models such as ItemCF and MF exhibit the most pronounced relative improvements. For example, ItemCF's NDCG@10 improves by 130.5% on MOOCCube and 61.0% on XuetangX, showing that even simple models can benefit greatly from external semantic signals. Models such as NeuMF and LightGCN show measurable but more moderate improvements, as they already capture complex user–item interactions internally. FM deserves closer attention. In Section 5.1, FM shows stable gains on MOOCCube. In the XuetangX cold-start setting, however, its performance drops after concept embeddings are incorporated (e.g., MRR@10 falls from 0.0590 to 0.0546). This counterintuitive result is likely due to the lack of rich contextual signals in XuetangX and FM's reliance on concatenation-based fusion, which may introduce noise when concept signals are limited or overly redundant.

Third, comparing the cold-start results with those obtained under the general setting in Section 5.1 reveals both similarities and differences. In both cases, concept integration consistently improves performance, confirming the utility of semantic enrichment. However, the gains are often more pronounced under cold-start, particularly for models originally hampered by sparse interaction data. MF, for instance, improves HR@10 by over 60% under cold-start, compared with a smaller gain under the full-data setting. This indicates that the impact of concept information is magnified when behavioral signals are scarce, reinforcing the value of our augmentation strategy in sparse recommendation environments.
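The relative improvements discussed in this section (and plotted in Figure 9) presumably follow the usual definition: the change of the concept-augmented score over the baseline score, expressed as a percentage of the baseline. The sketch below recomputes that quantity from the before/after values quoted in the text. The percentages are derived here, not copied from Tables 7 and 8.

```python
def relative_improvement(baseline, enhanced):
    """Percentage change of the concept-augmented score relative to the baseline score."""
    return (enhanced - baseline) / baseline * 100.0


# (baseline, with concepts) pairs quoted in this section
cases = {
    "MOOCCube MF HR@10": (0.1996, 0.3242),
    "MOOCCube MF MRR@10": (0.0577, 0.1356),
    "XuetangX LightGCN HR@10": (0.4565, 0.5437),
    "XuetangX KEAM (HR@10)": (0.2317, 0.5103),
    "XuetangX FM MRR@10": (0.0590, 0.0546),
}

for name, (base, enhanced) in cases.items():
    print(f"{name}: {relative_improvement(base, enhanced):+.1f}%")
```

Running it gives +62.4% for MF's HR@10 on MOOCCube, which matches the "over 60%" gain cited above. It gives -7.5% for FM's MRR@10 on XuetangX, the one negative case discussed. Relative gains are largest where the baseline score is low (e.g., MF's MRR@10 at +135.0%). This is one reason simple, sparsity-limited models show the most pronounced relative improvements.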