Abstract

To enable fully automated medicine warehousing in intelligent pharmacy systems, disordered, stacked pillboxes must be detected accurately. This paper proposes a high-precision detection algorithm for such scenarios based on an improved YOLOv8 framework. The proposed method integrates a space-to-depth convolution module (SPD-Conv) that replaces conventional strided convolutions and pooling layers, improving the detection of small, low-resolution targets. To further improve detection accuracy, the Bi-Level Routing Attention Vision Transformer (BiFormer) is incorporated as an attention module. In addition, the Circular Smooth Label (CSL) technique is employed to mitigate the boundary discontinuities and periodic anomalies in angle prediction that often arise when detecting rotated objects. Experimental results show that the proposed method achieves a precision of 94.24%, a recall of 90.39%, and a mean average precision (mAP) of 94.16%, improvements of 3.34%, 2.53%, and 3.35%, respectively, over the baseline YOLOv8 model. Moreover, the enhanced model outperforms existing rotated-object detection methods while maintaining real-time inference speed. To facilitate reproducibility and future benchmarking, the full dataset and source code used in this study have been released publicly. Although no standardized benchmark currently exists for pillbox detection, our self-constructed dataset reflects key industrial variations in pillbox size, orientation, and stacking, thereby providing a foundation for future cross-domain validation.

1. Introduction

In recent years, significant advances in computer vision have been driven by the powerful feature representation capabilities and end-to-end learning paradigms enabled by deep learning. As a core task in this field, object detection has evolved from traditional hand-crafted feature approaches to methods dominated by convolutional neural networks (CNNs). This technological shift has not only led to continuous improvements in detection accuracy on benchmark datasets such as ImageNet and COCO, but has also demonstrated substantial practical value in various application domains, including industrial quality inspection, autonomous driving, and medical image analysis.

Deep learning-based object detection methods are generally classified into two categories: two-stage and single-stage algorithms. Two-stage detection methods, such as SPPNet [] and the R-CNN series [,,,], typically employ a cascaded architecture involving candidate region generation, feature extraction, and classification. Although these approaches offer high detection accuracy, they tend to suffer from lower computational efficiency. In contrast, single-stage algorithms—exemplified by RetinaNet [], SSD [] and the YOLO [,,] family—use end-to-end architectures to improve inference speed. However, they often require further optimization to achieve high accuracy, particularly for detecting small objects.

In the context of intelligent pharmacy systems, automated medicine warehousing is increasingly favored over manual operation because of its higher efficiency and lower labor costs []. Although automated devices have proven effective in handling disordered and densely stacked pillboxes, the underlying detection algorithms must meet several technical requirements: high precision, real-time performance, the ability to detect small targets, and robustness to rotation. For example, Ref. [] addressed some of these challenges by reducing the feature downsampling rate of YOLOv4 from 8× to 4× through the introduction of a 128 × 128 feature map. Although this improves small-object detection, it does not adequately address rotational variance. Moreover, when pillboxes are densely stacked, horizontal bounding boxes overlap substantially, which reduces localization accuracy and complicates downstream tasks such as robotic grasping.

In addition, the rapid growth of large-scale image data provides abundant training samples that further improve the performance of deep learning models in complex visual environments.

To address these limitations, this paper proposes an improved YOLOv8-based algorithm to detect small, rotated targets in disordered stacking scenarios. The primary contributions of this work are summarized as follows:

- (i) An improved YOLOv8 detection algorithm is proposed that integrates the SPD-Conv and BiFormer modules. These components improve the model's ability to detect small objects while maintaining high computational efficiency.

- (ii) To address boundary discontinuities and periodic anomalies in angle prediction for rotated targets, the CSL technique is incorporated. By recasting angle regression as classification with a circular, smoothed label distribution, the proposed method improves rotation-angle prediction accuracy for small objects.

- (iii) The proposed algorithm achieves fast and accurate detection of disordered, stacked pillboxes. Validation on a substantial dataset of real-world images collected from production environments confirms the practical applicability of the proposed algorithm.

2. Materials and Methods

2.1. Pillbox Detection Based on YOLOv8

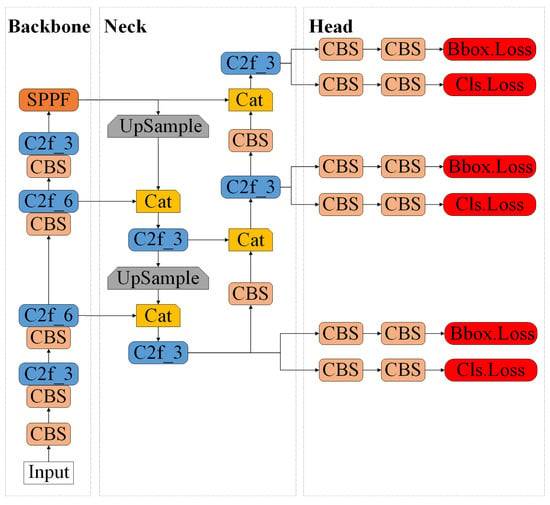

YOLOv8 introduces multiple architectural optimizations over previous YOLO versions across its backbone, neck, and detection head. As illustrated in Figure 1, the backbone performs efficient multiscale feature extraction by combining the Conv + BatchNorm + SiLU (CBS) module, the Cross-Stage Partial Bottleneck with 2 Convolutions (C2f) module, and the Spatial Pyramid Pooling-Fast (SPPF) module. This design yields robust feature representations at several spatial resolutions. The neck adopts a feature pyramid structure built from upsampling and concatenation (Concat) operations. By aggregating features from multiple layers, this hierarchical fusion strategy markedly improves the model's ability to detect objects at different scales. Finally, the head performs both object classification and bounding-box regression: it processes the fused multi-scale features to predict object classes and their locations. The detailed architecture of each functional component is presented in Figure 2. Overall, YOLOv8 is engineered to balance real-time performance with high detection accuracy, making it a strong foundation for further enhancements in complex detection scenarios such as chaotic, stacked, and rotated targets. Such improvements are particularly important for large-scale datasets, which demand robust and accurate detection frameworks.
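To make the multi-scale design concrete, the short sketch below computes the prediction-grid sizes of a standard YOLOv8 detection head. It assumes the common 640 × 640 input and the usual output strides of 8, 16, and 32; these are illustrative defaults, not the input size or configuration reported for this study.

```python
def grid_sizes(input_size: int, strides=(8, 16, 32)) -> dict:
    """Return the side length of each square prediction grid for a given input size."""
    sizes = {}
    for s in strides:
        if input_size % s != 0:
            raise ValueError(f"input size {input_size} is not divisible by stride {s}")
        sizes[s] = input_size // s
    return sizes


def total_predictions(input_size: int, strides=(8, 16, 32)) -> int:
    """The anchor-free YOLOv8 head makes one prediction per grid cell at each level."""
    return sum(n * n for n in grid_sizes(input_size, strides).values())


if __name__ == "__main__":
    print(grid_sizes(640))         # {8: 80, 16: 40, 32: 20}
    print(total_predictions(640))  # 8400
```

Each cell of the stride-8 grid corresponds to an 8 × 8 pixel patch of the input, which is why small pillboxes depend heavily on how much detail survives the downsampling that precedes this level.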

Figure 1.

The YOLOv8 network structure architecture.

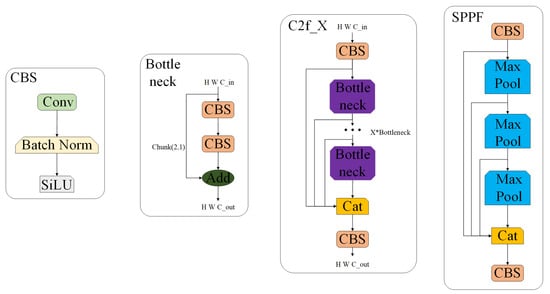

Figure 2.

Structure of each module in YOLOv8.

In the specific domain of pillbox detection, the YOLO family of models has consistently driven technological progress. For example, Ref. [] introduces an innovative approach that combines shallow feature detection with image fusion techniques, based on the YOLO architecture. This method achieves a detection accuracy of 99.02%, which is 2.57% higher than the baseline model, while also reducing the number of parameters by 29%—all without sacrificing real-time performance. Despite these advancements, most existing studies focus primarily on the detection of individually and neatly arranged pillboxes within controlled laboratory settings. Consequently, the generalization of these models remains limited when applied to real-world industrial environments, which are often characterized by small targets, rotational variation, and disordered stacking. These complexities present significant challenges that current models have yet to fully address.

2.2. Small-Target Detection in Pillbox Detection

Small-target detection is concerned with identifying low-resolution, weakly featured objects in images. Its crux is to strengthen the localization and classification of small targets through feature enhancement and context awareness. In pill distribution, pillboxes are archetypal small targets: they are frequently arranged in dense stacks, vary substantially in size, and look highly similar to one another, which causes bounding-box overlap, feature ambiguity, and reduced classification accuracy in conventional detection models []. Researchers have proposed a series of enhancement methods to address these challenges. For example, the YOLOv5-CBE algorithm [] incorporates the CA mechanism into the YOLOv5 backbone to facilitate the extraction of pillbox features and thereby assist in small-target detection. YOLOv5-CBE attains an average precision of 98.7%, with precision and recall 3.0% and 2.6% higher, respectively, than those of YOLOv5s. In addition, Ref. [] proposed a small-target method combining the CRAFT algorithm and the Tesseract-OCR engine. In detection experiments on 202 physical pillboxes, this method achieved 100% accuracy for large pillboxes and 91.09% recognition accuracy for small pillboxes. However, these methods may still encounter difficulties with disordered, stacked pillboxes [].

2.3. Rotating Target Detection in Pillbox Detection

Rotation-aware detection is essential for robotic grasping and industrial sorting. The main challenges include (i) accurate pose estimation, (ii) stable regression of rotated bounding boxes, and (iii) extraction of rotation-invariant (or, where needed, rotation-sensitive) features from small objects. In industrial sorting scenarios, handling and transportation frequently leave pillboxes stacked at multiple angles and in disorder. These conditions challenge traditional horizontal bounding-box detection methods, often producing redundant, overlapping boxes and large deviations in grasping positions. To address these issues, researchers have proposed several enhancement strategies. For example, Ref. [] presents a segmentation algorithm that combines point-cloud preprocessing with region growing to analyze the 3D point cloud of a scene of stacked pillboxes. The method locates each pillbox by computing the center of mass of the point cloud on its upper surface and the surface normal at that point, enabling more accurate and stable grasping of scattered, irregularly stacked pillboxes []. In addition, Ref. [] modifies the YOLOv5 detection head by adding an angle detection branch to improve rotation-angle estimation for sorting boxes. The enhanced YOLOv5 algorithm performs well in robotic retrieval tasks, with maximum positioning errors of 3.9 mm in the x-direction, 4.3 mm in the y-direction, and 2.9 mm in the z-direction. Despite these advances, the generalizability of these methods remains limited, particularly for diverse pillbox types, small-scale targets, and complex, disordered configurations []. Further refinement is required to improve their robustness and adaptability in real-world deployment [].

2.4. Overall Architecture

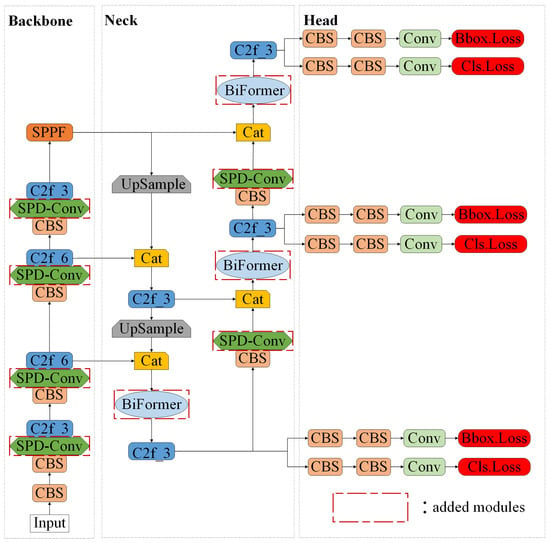

The improved YOLOv8 network architecture proposed in this study preserves the advantages of the original framework's feature pyramid while introducing targeted optimizations for detecting disordered, stacked pillboxes. The work innovates along three core dimensions (feature extraction, attention mechanisms, and the rotational detection paradigm) to construct a detection framework that integrates spatial perception with rotational robustness. As illustrated in Figure 3, the enhanced YOLOv8 network captures fine-grained details and accurately localizes and classifies pillboxes in densely stacked, arbitrarily rotated configurations.

Figure 3.

The improved YOLOv8 network structure diagram.

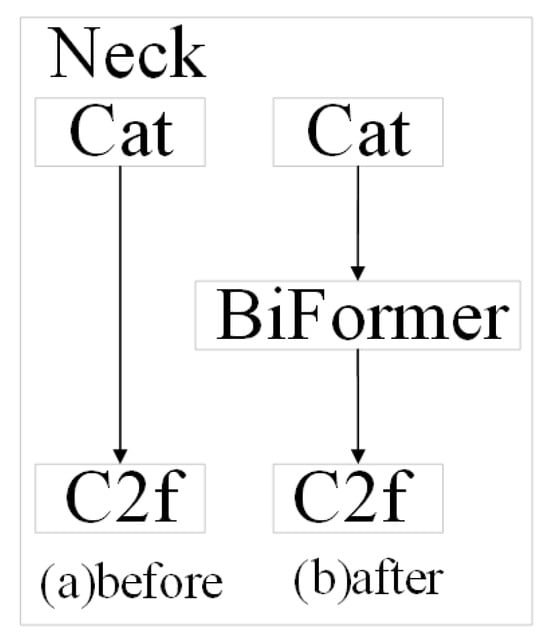

A hierarchical enhancement strategy is adopted to reconfigure feature extraction in both the backbone and the neck of YOLOv8. Although the original CBS module downsamples efficiently, this process often discards critical spatial detail, which is particularly detrimental to the detection of small targets. To mitigate this issue, SPD-Conv is inserted between each CBS module and C2f module, forming a new feature enhancement unit. This addition enables the network to retain rich spatial information, especially in high-resolution feature maps, and substantially reduces the degradation of small-target representations. In the neck, a bi-level routing attention mechanism is introduced to counter attention dispersion during cross-scale feature fusion in the original feature pyramid. Specifically, a BiFormer module is placed after each upsampling node. Its dynamic sparse attention allows the model to concentrate autonomously on salient regions, which is especially beneficial when detecting densely packed, disordered pillboxes []. This enhancement improves both the efficiency and the precision of feature selection. In the head, the original classification and regression branches are retained, and a new angle detection branch refined with the CSL technique is added. This substantially improves the model's capacity to detect small, rotated targets with high angular precision.

2.5. SPD-Conv for Feature Extraction

The detection of small targets remains a critical challenge in computer vision research. To improve the ability of the YOLOv8 network to identify small targets, such as pillboxes, in disordered industrial settings, an SPD-Conv module is introduced. The module is integrated into the backbone and neck of the network to strengthen feature extraction while maintaining computational efficiency. Compared with traditional depthwise convolution, SPD-Conv integrates a spatial attention mechanism, which makes it more adept at capturing fine-grained visual details. Its advantages are as follows:

- (i) Spatial attention: The embedded attention mechanism enables SPD-Conv to focus more precisely on the spatial distribution of visual cues, which facilitates identification of the subtle structural features critical for small-target detection.

- (ii) Parameter efficiency: Compared with standard convolution, SPD-Conv requires fewer parameters while maintaining or even improving performance, which reduces model complexity and memory footprint.

- (iii) Computational efficiency: By reorganizing feature maps and using efficient convolutional operations, SPD-Conv achieves better perceptual capability under similar computational constraints, thereby increasing overall model throughput.

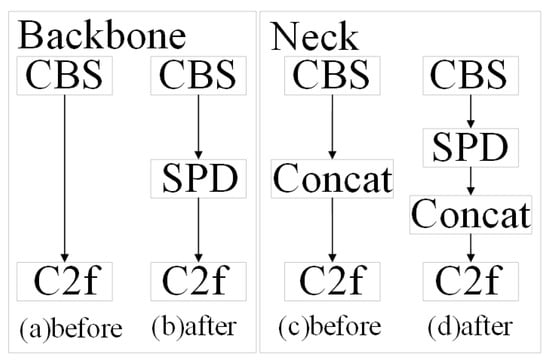

The integration of the SPD-Conv module into the YOLOv8 architecture is illustrated in Figure 4. Specifically, in the backbone network, SPD-Conv is inserted between each CBS layer and C2f module, while in the neck network, SPD-Conv is placed between the CBS layer and the Concat operation. This hierarchical enhancement strategy is designed to improve detection precision and recall for small-scale targets, as later validated in Section 3.

Figure 4.

Specific improvement strategies for the SPD-Conv module.

The SPD-Conv operation has been shown to enhance feature representation, particularly for small-object detection, by reorganizing the spatial structure of the input feature map: it simultaneously increases the number of channels and reduces the spatial resolution, giving a more compact and informative encoding of spatial detail. Specifically, SPD-Conv divides the input feature map into non-overlapping blocks of size $n \times n$ and moves the elements of each block into the channel dimension. This compresses the spatial dimensions (height and width) while proportionally expanding the channel dimension, enhancing the network's capacity to capture the fine-grained information critical for small-target detection. If the original feature map has $C$ channels, applying SPD-Conv with block size $n$ increases the number of channels by a factor of $n^2$, i.e.,

$$X \in \mathbb{R}^{H \times W \times C} \;\longrightarrow\; X' \in \mathbb{R}^{\frac{H}{n} \times \frac{W}{n} \times n^{2} C}.$$
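The rearrangement described above can be sketched in NumPy as follows. This is a minimal illustration, assuming channel-first $(C, H, W)$ arrays and, for brevity, a non-strided 1 × 1 convolution after the rearrangement; the block ordering and the function names are our own choices, not taken from the authors' released code.

```python
import numpy as np


def space_to_depth(x: np.ndarray, n: int) -> np.ndarray:
    """Rearrange a (C, H, W) feature map into (n*n*C, H/n, W/n).

    Every n x n spatial block is moved into the channel dimension, so no
    activation is discarded (unlike strided convolution or pooling).
    """
    c, h, w = x.shape
    if h % n or w % n:
        raise ValueError("H and W must be divisible by the block size n")
    # (C, H/n, n, W/n, n): split rows and columns into (block index, in-block offset)
    x = x.reshape(c, h // n, n, w // n, n)
    # (n, n, C, H/n, W/n): move the two in-block offsets to the front
    x = x.transpose(2, 4, 0, 1, 3)
    # Merge offsets with channels: channel group (a, b) holds x[:, a::n, b::n]
    return x.reshape(n * n * c, h // n, w // n)


def spd_conv_1x1(x: np.ndarray, weight: np.ndarray, n: int = 2) -> np.ndarray:
    """Space-to-depth followed by a non-strided 1x1 convolution.

    weight has shape (C_out, n*n*C); a 1x1 convolution is a per-pixel matrix product.
    """
    s = space_to_depth(x, n)
    return np.einsum("oc,chw->ohw", weight, s)
```

Because the output holds exactly as many values as the input, the layer reduces spatial resolution (by a factor of two per side for $n = 2$) without discarding activations; the learnable convolution that follows decides how to mix the $n^2$ sub-maps.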

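Consider an SPD-Conv block of size $n \times n$: each output location gathers the $n^2$ input pixels of one block into its channels. A $k \times k$ convolution applied after the rearrangement therefore spans $kn \times kn$ pixels of the original feature map, so the receptive field ($RF$) expansion ratio can be approximated as

$$\frac{RF_{\text{SPD}}}{RF_{k}} \approx n \quad (\text{per spatial dimension; } n^{2} \text{ in area}),$$

where $RF_{k}$ denotes the receptive field of a standard $k \times k$ convolutional kernel. Thus, a larger $n$ expands contextual coverage without increasing the convolution kernel size.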

To evaluate representational richness, the feature entropy of the SPD-Conv output can be estimated as

$$H_{\text{SPD}} = -\sum_{i} p_i \log p_i,$$

where $p_i$ represents the normalized activation probabilities. Empirical measurements on the validation set indicate that $H_{\text{SPD}}$ increases approximately logarithmically with $n$, confirming that spatial unfolding enhances feature diversity.

However, increasing $n$ also increases channel redundancy and memory cost by a factor of $O(n^2)$. Small values of $n$ therefore provide the best trade-off between entropy gain and efficiency for small-target detection.

This transformation allows the network to encode more spatial detail per channel while reducing the overall size of the feature map, contributing to both computational efficiency and feature compactness. However, it is essential to adjust the architecture of subsequent layers to accommodate the new channel configuration and altered spatial dimensions. This includes modifications to convolutional layers, normalization modules, and residual connections, where applicable. Careful planning of the post-SPD-Conv structure ensures that the increased richness of spatial information is fully leveraged, and that feature compatibility is maintained throughout the network.
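The channel bookkeeping above, and the $O(n^2)$ growth in cost for larger block sizes, can be checked with simple arithmetic. The sketch below assumes, for illustration only, a 3 × 3 convolution with 64 input and 64 output channels following the SPD layer; these widths are hypothetical and not taken from the paper's configuration.

```python
def conv_params(c_in: int, c_out: int, k: int = 3, bias: bool = False) -> int:
    """Number of weights in a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def spd_conv_params(c_in: int, c_out: int, n: int, k: int = 3) -> int:
    """Weights of the non-strided k x k convolution that follows space-to-depth.

    Space-to-depth multiplies the input channels by n**2, so this cost grows
    as O(n**2) for fixed c_in, c_out, and k.
    """
    return conv_params(n * n * c_in, c_out, k)


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        # prints 36864, 147456, 331776, 589824 for n = 1..4
        print(n, spd_conv_params(64, 64, n))
```

The quadratic growth is why the next layer must be sized for the enlarged channel count, and why large block sizes quickly become expensive.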

2.6. Vision Transformer with Bi-Level Routing Attention

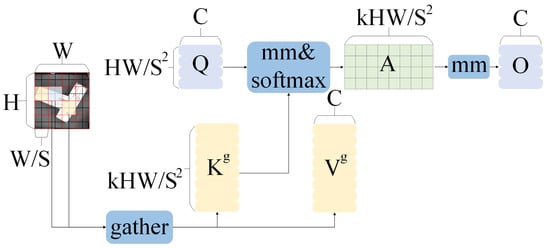

YOLOv8 is a modular, flexible detection model that can adapt to the scale and complexity of pillbox detection tasks. However, its performance remains limited in complex, cluttered scenarios []. To overcome these limitations, the BiFormer network is introduced; it uses a bi-level routing attention mechanism to capture both global and local features effectively. This improves the computational efficiency and expressive capacity of the model and thereby its accuracy in pillbox detection. The schematic of BiFormer is shown in Figure 5, and its operating principle is as follows. Consider an input pillbox feature map $X \in \mathbb{R}^{H \times W \times C}$, where $\mathbb{R}$ denotes the real-valued space and $H$, $W$, and $C$ are the height, width, and number of feature channels, respectively. The map is first divided into $S \times S$ non-overlapping regions, each containing $\frac{HW}{S^2}$ feature vectors, so the feature representation after region division is $X^r \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$. The query ($Q$), key ($K$), and value ($V$) matrices are then computed by linear projections, with $Q, K, V \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$.

Figure 5.

Schematic diagram of BiFormer.

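These matrices are obtained by

$$Q = X^r W^q, \qquad K = X^r W^k, \qquad V = X^r W^v,$$

where $W^q, W^k, W^v \in \mathbb{R}^{C \times C}$ are the projection weights of $Q$, $K$, and $V$, respectively. Region-level queries and keys $Q^r, K^r \in \mathbb{R}^{S^2 \times C}$ are obtained by averaging the tokens within each region, and the region-to-region affinity is

$$A^r = Q^r (K^r)^{\top}.$$

Attention weights are thus computed on coarse-grained region tokens, and only the top-k regions are retained as relevant regions that participate in the fine-grained operation.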

The iterative update of the attention matrix is defined as

and

where $L(\cdot)$ represents the layer normalization operator. This process refines region-level correlations across iterations: $j$ denotes the iteration round, $A^{r,(j)}$ denotes the region affinity matrix updated after $j$ rounds, and $I^{r,(j)}_{i}$ denotes the indices of the top-k regions most relevant to the $i$-th region (row $i$) in the $j$-th round.

Finally, for each token, the keys and values of the top-k most relevant coarse-grained regions are gathered and used in the final fine-grained attention. To enhance locality, a depthwise convolution is applied to the values:

$$K^{g} = \operatorname{gather}(K, I^{r}), \qquad V^{g} = \operatorname{gather}(V, I^{r}),$$

and

$$O = \operatorname{Attention}(Q, K^{g}, V^{g}) + \operatorname{LCE}(V),$$

where $\operatorname{gather}(K, I^{r})$ selects the key tokens of the regions indexed by the routing index $I^{r}$ to form the gathered key matrix $K^{g}$; $\operatorname{gather}(V, I^{r})$ does the same for the value matrix $V$, giving $V^{g}$; $\operatorname{Attention}(\cdot)$ applies token-to-token attention to these matrices to produce the output matrix $O$; and $\operatorname{LCE}(V)$ is the local context enhancement term, i.e., the depthwise convolution applied to $V$.
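The routing steps above can be illustrated with a single-head NumPy sketch. It is a simplified illustration, assuming Q, K, and V are already projected and partitioned into regions, and it omits the LCE depthwise convolution, multi-head splitting, and the iterative refinement; the function names are hypothetical and this is not the reference BiFormer implementation.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def bi_level_routing_attention(q, k, v, topk):
    """Single-head bi-level routing attention without the LCE term.

    q, k, v: arrays of shape (R, T, C), where R = S*S regions and T = HW/S^2
    tokens per region. Returns an array with the same shape as q.
    """
    r, t, c = q.shape
    # 1) Region-level queries and keys: average the tokens inside each region.
    q_r = q.mean(axis=1)                      # (R, C)
    k_r = k.mean(axis=1)                      # (R, C)
    # 2) Region-to-region affinity A^r and top-k routing index I^r.
    a_r = q_r @ k_r.T                          # (R, R)
    idx = np.argsort(-a_r, axis=1)[:, :topk]   # (R, topk)
    # 3) Gather the keys and values of the routed regions for each query region.
    k_g = k[idx].reshape(r, topk * t, c)       # (R, topk*T, C)
    v_g = v[idx].reshape(r, topk * t, c)
    # 4) Token-level scaled dot-product attention over the gathered tokens only.
    scores = q @ k_g.transpose(0, 2, 1) / np.sqrt(c)  # (R, T, topk*T)
    return softmax(scores, axis=-1) @ v_g             # (R, T, C)


def dense_attention(q, k, v):
    """Reference: every token attends to every token (no routing)."""
    r, t, c = q.shape
    qf, kf, vf = (m.reshape(r * t, c) for m in (q, k, v))
    out = softmax(qf @ kf.T / np.sqrt(c), axis=-1) @ vf
    return out.reshape(r, t, c)
```

Setting `topk` equal to the number of regions recovers dense attention, which is a convenient sanity check; smaller `topk` values restrict each query region to its most related regions, which is where the computational savings come from.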

The BiFormer is predicated on the utilization of two hierarchical attention mechanisms, namely local routing and global routing. The local routing mechanism strengthens the model’s sensitivity to small targets and fine-grained details, while the global routing mechanism aids in capturing the overall spatial structure of the image, thereby enhancing detection accuracy under complex scene conditions. The integration of BiFormer into the Neck module of the YOLOv8 architecture enables the effective exploitation of multi-scale feature representations, resulting in notable performance improvements in cluttered and disordered environments. As the core module responsible for multilevel feature aggregation, the Neck network is inherently flexible and well suited for the incorporation of advanced attention mechanisms. This design facilitates improved accuracy in both feature fusion and target localization. The detailed integration strategy is depicted in Figure 6.

Figure 6.

Specific improvement strategies for the BiFormer module.

Empirical results show that the BiFormer has significant advantages in both feature extraction and computational efficiency. These benefits are largely attributed to its query-aware dynamic sparse attention mechanism and its modular, flexible architecture. By adaptively focusing on the most salient regions within the feature space, BiFormer reduces redundant computation while enhancing the accuracy of visual representation. However, it is important to acknowledge the limitations associated with this sparse mechanism, particularly the potential for information loss. Furthermore, the parameter-tuning process of the dual-layer routing architecture introduces increased complexity and warrants further investigation.

Let $S$ denote the number of spatial tokens and $C$ the feature dimension. In standard global attention, the complexity scales as $O(S^2 C)$ because every token interacts with every other token. In contrast, BiFormer restricts computation to the top-$k$ most relevant regions within a bi-level routing structure, reducing the complexity to $O(SkC)$, where $k \ll S$. The relative saving is therefore $1 - k/S$; for example, whenever $k/S < 0.02$, the theoretical FLOPs reduction exceeds 98%. This sparsity translates directly into faster inference and lower GPU memory use, while hierarchical routing preserves global context awareness. Empirical profiling confirms that the BiFormer module reduces overall FLOPs by approximately 43% relative to standard transformer attention at identical resolution.
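Under this simplified cost model the saving reduces to the ratio $1 - k/S$, independent of $C$. The sketch below evaluates it for hypothetical values of $S$ and $k$ chosen only for illustration; it ignores the cost of computing the region-level affinity matrix itself.

```python
def dense_attention_cost(s: int, c: int) -> int:
    """All-pair attention: each of s tokens interacts with all s tokens."""
    return s * s * c


def routed_attention_cost(s: int, k: int, c: int) -> int:
    """Sparse attention in the simplified O(SkC) model used in the text."""
    return s * k * c


def flops_reduction(s: int, k: int, c: int = 1) -> float:
    """Fraction of multiply-accumulates saved; equals 1 - k/s for any c."""
    return 1.0 - routed_attention_cost(s, k, c) / dense_attention_cost(s, c)


if __name__ == "__main__":
    print(f"{flops_reduction(4096, 64):.2%}")  # 98.44%
```

This theoretical ratio for the attention operator alone is much larger than the roughly 43% whole-model reduction reported above, because convolutions and other layers are unaffected by the routing.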

In conclusion, the incorporation of BiFormer into YOLOv8 substantially improves the target detection performance in complex and dynamic environments. Beyond this enhancement, it also offers a compelling paradigm for the broader application of attention mechanisms within advanced visual recognition tasks.

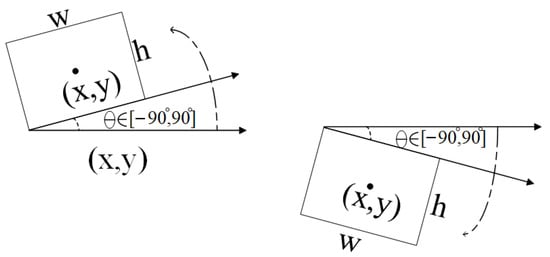

2.7. Rotation Angle Prediction Based on CSL

Three representations of rotated bounding boxes are commonly used in rotated-object detection: the five-parameter representation, the eight-parameter representation, and the position vector representation. This study adopts the five-parameter representation to extend YOLOv8, because it is compatible with the output format of the original model's regression branch and is computationally efficient. The five-parameter representation, $(x, y, w, h, \theta)$, consisting of the center coordinates, width, height, and rotation angle, is illustrated in Figure 7. The angle parameter enables precise prediction of bounding-box orientation and thus flexible, accurate detection of objects in arbitrary directions. This capability is particularly critical for disordered pillbox stacks, where targets may appear at a wide range of orientations.
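For readers implementing the representation, the following NumPy sketch converts a five-parameter box into its four corner points. It assumes $\theta$ is given in degrees and measures the rotation of the width side from the x-axis; angle ranges and sign conventions differ between datasets and libraries, so this convention is an assumption rather than the one used in the authors' code.

```python
import numpy as np


def obb_to_corners(cx, cy, w, h, theta_deg):
    """Convert a five-parameter box (cx, cy, w, h, theta) into its 4 corners.

    theta rotates the width axis away from the x-axis (counter-clockwise in a
    y-up frame; it appears clockwise in y-down image coordinates).
    Returns a (4, 2) array of corner coordinates.
    """
    t = np.deg2rad(theta_deg)
    u = np.array([np.cos(t), np.sin(t)])    # unit vector along the width side
    v = np.array([-np.sin(t), np.cos(t)])   # unit vector along the height side
    center = np.array([cx, cy], dtype=float)
    half_w, half_h = w / 2.0, h / 2.0
    return np.stack([
        center + half_w * u + half_h * v,
        center - half_w * u + half_h * v,
        center - half_w * u - half_h * v,
        center + half_w * u - half_h * v,
    ])
```

Rotating a box by $\theta$ and by $\theta + 180^\circ$ yields the same four corners in a different order, so a single physical box admits several valid parameterizations; this ambiguity underlies the boundary discontinuities discussed in the following paragraphs.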

Figure 7.

Five-parameter representation of the rotating bounding box.

In the context of rotating target detection, the direct application of the five-parameter representation in YOLOv8 may sometimes lead to discontinuities in angle prediction, particularly when the target orientation approaches angular boundaries. These abrupt changes result in instability and inconsistency in angle regression [].

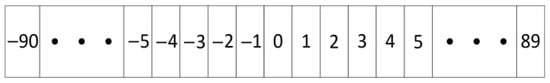

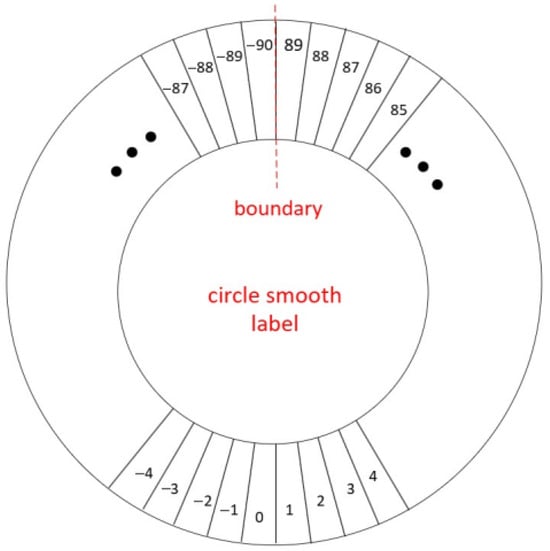

To mitigate this issue, the angle regression problem can be reformulated as a classification problem, leveraging the finite nature of classification outputs to achieve smoother and more stable predictions. In this approach, the entire angular range is discretized, with each degree representing a distinct class. The most basic implementation of this strategy is single-label encoding, in which each angle is assigned to a unique category. An illustration of this label encoding process is presented in Figure 8.

Figure 8.

Single-label coding.

Although converting regression into classification improves stability, discretizing continuous angle values introduces some loss of precision. Within the angular range of the five-parameter representation, each interval of width $\omega$ (default $\omega = 1^\circ$) is treated as a distinct class, which inherently limits the angular resolution of the model. The maximum loss in angular accuracy, $\operatorname{Max}(\text{loss})$, and the expected loss, $E(\text{loss})$, can be calculated as

$$\operatorname{Max}(\text{loss}) = \frac{\omega}{2}$$

and

$$E(\text{loss}) = \int_{0}^{\omega/2} x \cdot \frac{2}{\omega}\, dx = \frac{\omega}{4}.$$
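These two expressions can be checked numerically. The sketch below assumes classification bins centered on integer multiples of $\omega = 1^\circ$ and a dense, evenly spaced sweep of angles; both are illustrative choices.

```python
import numpy as np


def quantize_angle(theta_deg: np.ndarray, omega: float = 1.0) -> np.ndarray:
    """Map each angle to the center of its classification bin of width omega.

    Bins are centered on integer multiples of omega, so the rounding error
    never exceeds omega / 2.
    """
    return np.round(theta_deg / omega) * omega


if __name__ == "__main__":
    theta = np.linspace(-90.0, 90.0, 180_001)   # evenly spaced angles, step 0.001 deg
    err = np.abs(theta - quantize_angle(theta))
    print(err.max(), err.mean())  # approximately 0.5 and 0.25 for omega = 1
```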

With the default $\omega = 1^\circ$, the maximum and expected errors are only $0.5^\circ$ and $0.25^\circ$, so the error introduced by discretized classification is minimal. However, single-label coding has two critical limitations in rotated-object detection:

- 1. In the single-label classification approach, two boundary cases exist (vertical and horizontal), which causes ambiguity when the predicted angle lies near these transitions; in contrast, the regression method confines this issue to the vertical boundary. In both cases, edge swappability remains a source of instability, particularly for near-boundary angle predictions.

- 2. Conventional classification loss functions (for example, cross-entropy) ignore the angular distance between the predicted and true labels. A prediction one class away from the ground-truth angle incurs the same loss as a prediction on the far side of the angular range, even though the former is much closer in a rotational sense. This uniform treatment of all incorrect classes leads to suboptimal learning for angle prediction.

To overcome these issues, the Circular Smooth Label (CSL) approach is adopted. CSL also treats angle prediction as classification but uses a smooth, periodic label encoding. This circular encoding ensures that class labels on either side of the angular boundary are treated as adjacent, thereby preserving continuity and reducing swappability problems. The circular label coding is illustrated in Figure 9 and is defined as

$$\operatorname{CSL}(x) = \begin{cases} g(x), & \theta - r < x < \theta + r, \\ 0, & \text{otherwise}, \end{cases} \qquad g(x) = \exp\!\left(-\frac{(x - \theta)^2}{2\sigma^2}\right),$$

where $\sigma$ controls angular smoothness, $r$ is the window radius, $\theta$ is the angle of the current detection frame, and the difference $x - \theta$ is measured circularly, wrapping around the angular period.

Figure 9.

Circular label coding.
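The encoding can be sketched in NumPy as follows, under stated assumptions: one class per degree over a 180° range, a Gaussian window, and illustrative values $r = 6$ and $\sigma = 4$ that are not taken from the paper. For a 360° range such as (−180°, 180°), set `period=360.0` and `num_bins=360`.

```python
import numpy as np


def csl_encode(theta_deg, num_bins=180, period=180.0, radius=6.0, sigma=4.0):
    """Circular Smooth Label vector for one ground-truth angle.

    Bin i represents the angle -period/2 + i * (period / num_bins). The distance
    to theta wraps around the period, so bins at opposite ends of the range are
    neighbours. A Gaussian of width sigma, truncated at the window radius,
    gives the soft label values.
    """
    omega = period / num_bins
    centers = -period / 2.0 + np.arange(num_bins) * omega
    # Circular difference between each bin center and theta, in [-period/2, period/2).
    d = (centers - theta_deg + period / 2.0) % period - period / 2.0
    label = np.exp(-(d ** 2) / (2.0 * sigma ** 2))
    label[np.abs(d) >= radius] = 0.0  # outside the window: no credit
    return label


def csl_decode(label, num_bins=180, period=180.0):
    """Return the angle of the highest-scoring bin."""
    omega = period / num_bins
    return -period / 2.0 + int(np.argmax(label)) * omega
```

Unlike a one-hot target, the soft label gives partial credit to neighbouring bins, including those across the ±90° boundary, so a prediction one degree off is penalized less than one far away.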

In summary, defining CSL over the range (−180°, 180°) yields a continuous, symmetric angular representation that eliminates boundary discontinuities and simplifies implementation. The Gaussian weighting within the window radius ensures smooth label transitions between adjacent orientations, allowing the detector to learn stable angle features even for densely stacked or partially occluded pillboxes. This directly yields more consistent rotation predictions and provides a mathematically simple, computationally efficient foundation for rotation-aware detection in the improved YOLOv8 framework.

2.8. Theoretical Complexity Summary

To quantitatively assess the efficiency of the proposed modules, the theoretical floating-point operations (FLOPs), parameter count, and memory cost were analyzed. Table 1 summarizes the computational characteristics of each variant relative to the baseline YOLOv8.

Table 1.

Theoretical and empirical complexity comparison of model variants.

As shown in Table 1, the Big-O column represents the asymptotic scaling behavior of each module, whereas the GFLOPs column reports the measured computational cost for a fixed input size. Percentage changes in parameters and memory are computed relative to the baseline YOLOv8. The proposed full model maintains a markedly low computational demand (approximately 4.9 GFLOPs, about 70% lower than the baseline) while achieving superior detection accuracy through theoretically justified architectural improvements.

2.9. Method Stability and Theoretical Justification

While the proposed detection algorithm has demonstrated consistent empirical gains, a theoretical explanation of its mechanisms and anticipated stability is also essential []. The following analysis explains why the improved YOLOv8 framework is expected to remain effective under moderate backbone updates.

- (1) SPD-Conv: spatial unfolding and information retention. SPD-Conv reorganizes local spatial neighborhoods into expanded channel representations without changing the receptive-field topology. This operation increases the effective rank of the local covariance of feature activations, thereby enriching the descriptive capacity for small-scale patterns. Because it does not depend on specific residual-block formulations or kernel sizes, SPD-Conv functions as a plug-in operator compatible with a wide range of convolutional backbones. Its performance depends mainly on the statistical properties of local image patches rather than on YOLO-specific structural details, which confers robustness to future updates [].

- (2) BiFormer: hierarchical sparse routing and signal isolation. BiFormer introduces a coarse-to-fine attention routing mechanism that adaptively filters out irrelevant spatial tokens. Limiting computation to the top-k informative regions increases the effective signal-to-noise ratio of feature aggregation. Because this routing process relies only on the existence of multi-scale feature maps, a standard property across YOLO versions, it remains stable even if the backbone architecture evolves [].

- (3) CSL: continuity in orientation representation. Circular Smooth Label (CSL) transforms angular regression into a smoothed classification space that preserves topological adjacency between labels. Because this change concerns the label representation rather than the model internals, it is independent of any particular detection head configuration. Consequently, CSL ensures continuity in the predicted output and contributes to stability across models.

- (4) Integrated stability perspective. The three modules act at distinct yet complementary levels: local encoding, attention routing, and label representation. None of them alters the global detection objective or training regime, which minimizes coupling to a specific YOLO implementation. Therefore, as YOLO continues to evolve, the proposed modules are expected to provide consistent benefits provided that (i) high-resolution feature maps remain available for SPD-Conv, (ii) multi-scale feature fusion persists for BiFormer, and (iii) classification-style output heads exist for CSL. This modular design addresses concerns about the inherent instability of such modifications and explains, at a theoretical level, why the method's efficacy should extend across future iterations of the YOLO framework.

In summary, the combined use of SPD-Conv, BiFormer sparse routing, and CSL forms a theoretically coherent mechanism for robust pillbox detection: SPD-Conv preserves high-frequency spatial cues essential for small and occluded targets; BiFormer selectively amplifies informative regions through dynamic token routing while maintaining bounded computational growth; and CSL ensures smooth, orientation-consistent gradients for rotated objects. Together, these components create a detection pipeline whose stability is supported both by architectural independence from specific backbones and by the observed low-variance empirical results, indicating strong generalizability under parameter and structural perturbations.
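The coarse-to-fine routing attributed to BiFormer can be sketched for a single attention head. This simplified illustration rests on several assumptions. The feature map is already partitioned into R regions of T tokens each. The learned query/key/value projections and BiFormer's local context enhancement term are omitted. `bi_level_routing_attention` is a hypothetical name, not the reference implementation's API.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def bi_level_routing_attention(q, k, v, topk):
    """Single-head sketch; q, k, v have shape (R, T, D) = (regions, tokens per region, dim)."""
    n_regions, _, dim = q.shape
    # Coarse level: each region is summarized by the mean of its tokens.
    q_region = q.mean(axis=1)                      # (R, D)
    k_region = k.mean(axis=1)                      # (R, D)
    affinity = q_region @ k_region.T               # (R, R) region-to-region scores
    # Keep only the top-k most relevant key regions for each query region.
    routed = np.argsort(-affinity, axis=1)[:, :topk]

    out = np.empty_like(q)
    for r in range(n_regions):
        # Fine level: token attention over the gathered regions only.
        k_sel = k[routed[r]].reshape(-1, dim)      # (topk * T, D)
        v_sel = v[routed[r]].reshape(-1, dim)
        attn = softmax(q[r] @ k_sel.T / np.sqrt(dim))
        out[r] = attn @ v_sel
    return out, routed


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 4, 8))  # 16 regions (e.g. a 4x4 grid), 4 tokens each, dim 8
out, routed = bi_level_routing_attention(x, x, x, topk=4)
print(out.shape, routed.shape)  # (16, 4, 8) (16, 4)
```

When `topk` equals the number of regions, every region is kept and the output reduces to ordinary full attention over all tokens. Smaller values restrict each region to its most relevant neighbors, which is where the computational saving comes from.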

3. Results

3.1. Setup

Dataset: The experimental dataset contains 15,006 real-world images of unordered, rotated pillboxes collected in an industrial pharmacy environment with a Hikvision MV-CE013-50GM camera. Each image contains 1–8 pillboxes with varied orientations and stacking depths. To our knowledge, no public pillbox detection benchmark exists, so this self-constructed dataset is currently the only representative data source for such complex industrial scenes. To promote transparency and enable replication, both the dataset and the source code of the proposed model have been released at https://github.com/2250853131/Pillbox-Detection and https://github.com/2250853131/pillbox_data (accessed on 26 September 2025). The dataset follows the COCO-style annotation format and is split into training, validation, and test subsets at a ratio of 7:2:1.
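A 7:2:1 split of this kind can be sketched as a seeded random permutation of image indices. The seed, the rounding rule (floor for the training and validation subsets, remainder to the test subset), and the helper name `split_indices` are assumptions for illustration. The released data may have been partitioned differently, so the subset sizes printed here follow from this rule, not from the authors' files.

```python
import random


def split_indices(n_images: int, ratios=(0.7, 0.2, 0.1), seed: int = 0):
    """Hypothetical helper: partition image indices into train/val/test subsets.

    Train and val sizes are floored; the test subset receives the remainder,
    so every image is used exactly once.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # deterministic shuffle for reproducibility
    n_train = int(n_images * ratios[0])
    n_val = int(n_images * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


train, val, test = split_indices(15006)
print(len(train), len(val), len(test))  # 10504 3001 1501
```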

Evaluation Metrics: Model performance is evaluated using precision, recall, mean average precision (mAP), model weight size, giga floating-point operations (GFLOPs), and detection time.
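For reference, the metrics are defined as follows:

- Precision is TP/(TP + FP).
- Recall is TP/(TP + FN).
- Average precision (AP) is the area under the precision–recall curve.

With a single pillbox class, mAP at a given IoU threshold reduces to the AP of that class. The sketch below uses all-point interpolation, which is one common convention. It assumes that detections have already been matched to ground-truth boxes at the chosen IoU threshold, since neither the threshold nor the matching rule is specified here.

```python
import numpy as np


def average_precision(scores, is_tp, n_ground_truth):
    """All-point interpolated AP for one class.

    scores: confidence of each detection.
    is_tp: whether each detection was matched to a ground-truth box
           (matching at the chosen IoU threshold is assumed done already).
    """
    order = np.argsort(-np.asarray(scores, dtype=float))  # highest confidence first
    tp = np.asarray(is_tp, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / n_ground_truth               # TP / (TP + FN)
    precision = cum_tp / (cum_tp + cum_fp)         # TP / (TP + FP)

    # Pad the curve, then make precision non-increasing in recall
    # (the precision envelope) before integrating.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    changed = np.where(r[1:] != r[:-1])[0]         # points where recall increases
    return float(np.sum((r[changed + 1] - r[changed]) * p[changed + 1]))


print(round(average_precision([0.9, 0.8, 0.7], [True, False, True], 2), 4))  # 0.8333
```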

Implementation details: Training is performed on NVIDIA RTX 3070 Ti GPUs with the CUDA 11.7 and cuDNN 8.2 acceleration frameworks. Testing is performed on a 12th-generation Intel Core i7-12700H CPU to simulate an industrial deployment environment. Before the experiments, each pillbox image is preprocessed by grayscale conversion combined with hybrid filtering for noise reduction. All rotated bounding-box annotations are converted to a uniform five-parameter format (center coordinates, width, height, and angle). The improved YOLOv8 model integrates the SPD-Conv module to enhance small-target detection and introduces the BiFormer bi-level routing attention mechanism. The loss function jointly optimizes Varifocal Loss and CIoU-DFL. The results of the preprocessing procedure are presented in Figure 10.

Figure 10.

Image pre-processing results.
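The five-parameter conversion, and the circular smooth label that CSL builds from the resulting angle, can be sketched together. Several conventions here are assumptions rather than details given in the text:

- The four corners are ordered around the rectangle.
- The long-edge definition is used (w ≥ h, θ in [0°, 180°) measured from the image x-axis).
- Angles are discretized into 1° bins.
- The window is a Gaussian with σ = 4, truncated beyond a radius of 6 bins.

The function names are hypothetical.

```python
import math

import numpy as np


def corners_to_xywha(corners):
    """Convert four ordered rectangle corners to (cx, cy, w, h, theta).

    Assumed long-edge convention: w >= h, and theta in [0, 180) degrees is the
    angle of the long side measured from the image x-axis.
    """
    pts = np.asarray(corners, dtype=float).reshape(4, 2)
    cx, cy = pts.mean(axis=0)                 # centre = mean of the corners
    edge_a = pts[1] - pts[0]
    edge_b = pts[2] - pts[1]
    len_a, len_b = np.hypot(*edge_a), np.hypot(*edge_b)
    long_edge = edge_a if len_a >= len_b else edge_b
    theta = math.degrees(math.atan2(long_edge[1], long_edge[0])) % 180.0
    return (float(cx), float(cy), float(max(len_a, len_b)),
            float(min(len_a, len_b)), theta)


def csl_label(theta_deg, n_bins=180, sigma=4.0, radius=6):
    """Circular Smooth Label over 1-degree bins (hypothetical parameter values).

    A Gaussian window centred on the true bin is evaluated with a wrapped
    (circular) distance and set to zero beyond `radius` bins.
    """
    bins = np.arange(n_bins)
    center = int(round(theta_deg)) % n_bins
    diff = np.abs(bins - center)
    circ_dist = np.minimum(diff, n_bins - diff)   # bins 0 and n_bins - 1 are neighbours
    label = np.exp(-(circ_dist ** 2) / (2 * sigma ** 2))
    label[circ_dist > radius] = 0.0
    return label


box = corners_to_xywha([(10, 10), (50, 10), (50, 30), (10, 30)])
print(box)  # (30.0, 20.0, 40.0, 20.0, 0.0)

label = csl_label(179.0)
print(label[179], label[0], label[178])  # bins 0 and 178 get equal weight
```

The circular distance wraps around, so a box at 179° receives the same target weight at bin 0 as at bin 178. This is the boundary continuity that CSL is meant to provide.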

3.2. Ablation and Sensitivity Studies

To quantify the contribution of each module and assess the stability of the proposed model, ablation and sensitivity experiments were conducted prior to cross-model comparison.

Four network configurations were trained under identical settings to evaluate the independent and joint contributions of each enhancement module: (i) YOLOv8 + SPD-Conv; (ii) YOLOv8 + BiFormer; (iii) YOLOv8 + CSL; (iv) YOLOv8 + All modules (complete model). Each configuration was trained three times with random initialization, and results are presented as mean ± standard deviation. Table 2 summarizes the performance in terms of precision, recall, mAP, and GFLOPs.

Table 2.

Ablation results of the proposed modules.

These results indicate that each component yields incremental improvement, validating the complementary effects of SPD-Conv (fine-grained spatial feature retention), BiFormer (hierarchical attention), and CSL (rotation angle stability). The complete model achieves the highest accuracy while maintaining low computational complexity and the shortest inference time among all compared models.

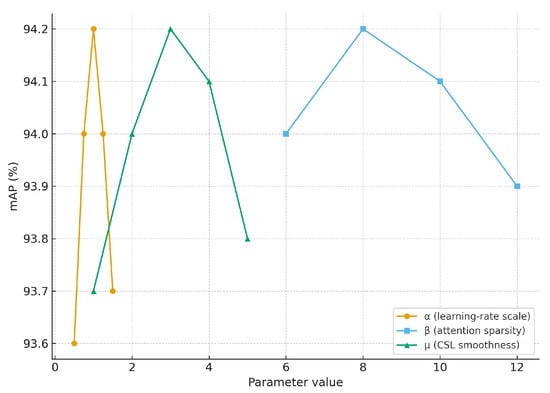

To further evaluate robustness against hyperparameter perturbations, three key coefficients were varied:

- the learning-rate scaling factor (0.5×–1.5×);

- the attention sparsity threshold k in BiFormer's top-k routing (k = 6–12);

- the CSL smoothness factor (1–5).

For each variation, mAP and computational cost were measured across three independent runs. The corresponding sensitivity curves are illustrated in Figure 11.

Figure 11.

Sensitivity curves of mAP with respect to , , and .

The results show that the model exhibits stable performance when , , and , with mAP fluctuations within ±0.25%. Paired t-tests (p > 0.05) confirm that these variations are statistically insignificant, demonstrating strong parameter robustness.

In summary, the ablation and sensitivity analyses demonstrate that each module contributes distinct and complementary improvements, and the integrated model maintains high robustness and generalization under moderate parameter changes.

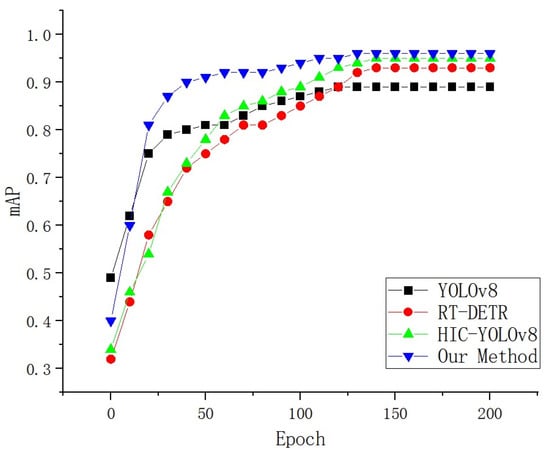
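The paired t-test compares two configurations run under matched conditions. Here the runs are assumed to be paired by run index, e.g., the same seed. With three runs per configuration there are two degrees of freedom. The two-sided critical value of Student's t at α = 0.05 is about 4.303, so |t| below that value corresponds to p > 0.05. The run values below are synthetic and only exercise the function. `scipy.stats.ttest_rel` returns the same statistic together with a p-value.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Paired t statistic for matched measurements a[i], b[i]."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)          # sample standard deviation (n - 1)
    if sd == 0:
        raise ValueError("differences have zero variance")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


# Two-sided critical value of Student's t at alpha = 0.05 with df = 2.
T_CRIT_DF2 = 4.303

# Synthetic per-run mAP values (%), for illustration only.
reference_runs = [80.0, 80.4, 80.2]
perturbed_runs = [79.9, 80.45, 80.1]
t = paired_t_statistic(reference_runs, perturbed_runs)
print(f"t = {t:.3f}, significant at 0.05: {abs(t) > T_CRIT_DF2}")
```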

3.3. Comparison with Advanced Methods

In this section, the original, unmodified YOLOv8 network serves as the baseline for comparison with the improved YOLOv8 network. The improved network is then compared with two recent rotated small-target detection networks, RT-DETR [] and HIC-YOLOv8 []. In RT-DETR [], the encoder adopts a hybrid structure that combines CNN and Transformer components. This gives it a better inductive bias than the encoders of other DETR variants, especially when the sample size is small. RT-DETR also uses PP-HGNetv2 as its backbone, which aggregates features from multiple receptive fields and is well suited to detection tasks, particularly small-sample detection. HIC-YOLOv8 [], in contrast, enhances the original YOLOv8 in three ways. It adds a convolutional block attention module to the backbone network, an involution block between the backbone and neck networks, and an additional prediction head specialized for small objects. Together these changes substantially improve small-target detection performance.

All models were trained and tested on the self-constructed unordered stacked pillbox dataset, and the detection results of the different algorithms are compared in Table 3.

Table 3.

Comparison of detection effects of different algorithms.

The enhanced YOLOv8 network improves on the baseline in several evaluation metrics. Specifically, compared with the original YOLOv8 network, it improves precision, recall, and mAP by 3.34%, 2.53%, and 3.35%, respectively. At the same time, it has a smaller weight size, fewer GFLOPs, and a shorter detection time. These results indicate that the enhanced network improves detection performance while also improving computational efficiency.

Furthermore, in unordered stacked pillbox detection, the enhanced algorithm achieves higher detection accuracy than the recent rotated small-target detection networks RT-DETR and HIC-YOLOv8. Its mAP is 0.75% and 0.48% higher than that of RT-DETR and HIC-YOLOv8, respectively.

Figure 12 compares the mAP curves of four networks: the enhanced YOLOv8, the original YOLOv8, and the recent rotated small-target detection networks RT-DETR and HIC-YOLOv8. During training, the enhanced network converges better and reaches higher accuracy than the original version. Its mAP curve is also superior to those of the two other rotated small-target detection networks.

Figure 12.

mAP curve.

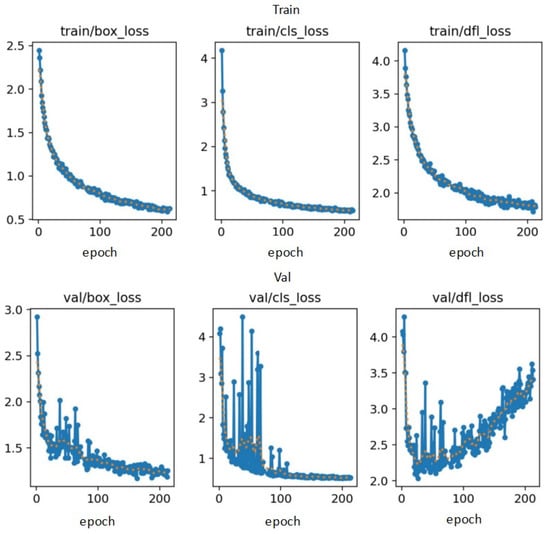

To further validate the effectiveness of the improved method in network optimization, three loss terms are examined. The bounding-box loss measures the difference between predicted and ground-truth boxes, using the intersection over union (IoU) as the criterion for their degree of overlap. It therefore reflects localization accuracy, and minimizing it drives the model to refine box positions. The classification loss quantifies the discrepancy between the predicted and actual categories using cross-entropy. Minimizing it brings the predicted category distribution closer to the true labels and thus improves classification accuracy. Finally, the directional feature point loss is a custom loss function introduced in YOLOv8, which can be expressed by

where denotes the directional feature point loss value, and denote the i-th predicted feature point and the real feature point respectively, and N is the total number of feature points.

The orientation feature point loss measures the difference between predicted and ground-truth feature points, driving the model to learn more accurate object orientations and angles. Figure 13 shows the loss curves separately for training and validation. The horizontal axis denotes the number of training epochs, and the vertical axis denotes the value of each loss function.
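The implementation details state that the box term is CIoU-based. CIoU extends IoU with a normalized center-distance penalty and an aspect-ratio consistency term. The sketch below computes 1 − CIoU for two axis-aligned boxes in (x1, y1, x2, y2) form. How the authors apply the loss to rotated boxes is not described in this section, so the axis-aligned form is an illustrative assumption.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """1 - CIoU for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1
    iou = inter / (pw * ph + tw * th - inter + eps)
    # Squared centre distance over the squared diagonal of the enclosing box.
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1.0 - (iou - rho2 / c2 - alpha * v)


print(round(ciou_loss((0, 0, 10, 10), (20, 0, 30, 10)), 3))  # 1.4
```

The distance penalty gives a useful gradient for non-overlapping boxes: here IoU is 0, yet the loss still grows with the gap between the box centers.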

Figure 13.

The loss curves of our method.

The enhanced YOLOv8 network demonstrates superior performance in terms of precision, recall, and mAP compared to other rotating small-target detection algorithms in the self-constructed unordered stacked pillbox dataset. This enhancement is primarily attributable to the utilization of the advanced feature extraction network, SPD-Conv, which employs a spatial attention mechanism to accentuate the salient regions and de-emphasize the nonsalient regions. The spatial attention map of the feature map is then computed to guide the model to focus on regions that contribute to the classification, detection, and segmentation tasks, allowing the algorithm to better capture the subtle features of small targets. Furthermore, the weights of the improved YOLOv8 network model are the smallest among other rotating small-target detection algorithms, indicating that the improved YOLOv8 network model has superior overall performance, particularly with regard to its exceptional small-target detection capability.

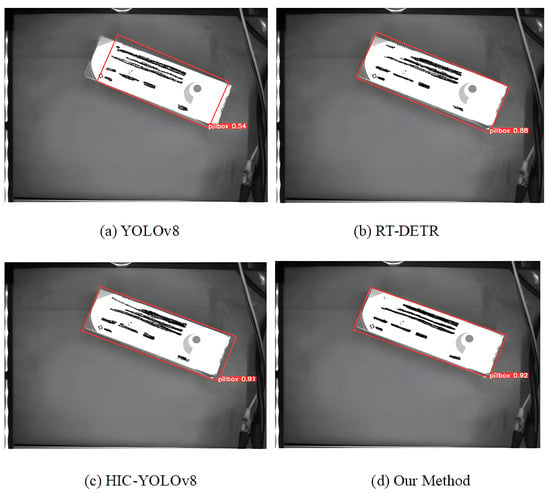

To illustrate the improvement more intuitively, the same image of an unordered stack of pillboxes was processed by each algorithm and the results were compared. The detection results on a real pillbox image are shown in Figure 14. The YOLOv8 algorithm (a) deviates when detecting the pillbox edges, and its localization accuracy is inadequate. The RT-DETR algorithm (b) detects edges somewhat better, but some error remains. The HIC-YOLOv8 algorithm (c) further improves localization and detection accuracy but still falls slightly short. In contrast, the proposed algorithm (d) detects edges more accurately and covers the pillbox area more precisely, giving the best detection result. In summary, the proposed method performs better on the small-target detection task and achieves higher detection and localization accuracy.

Figure 14.

Pillbox small-target detection.

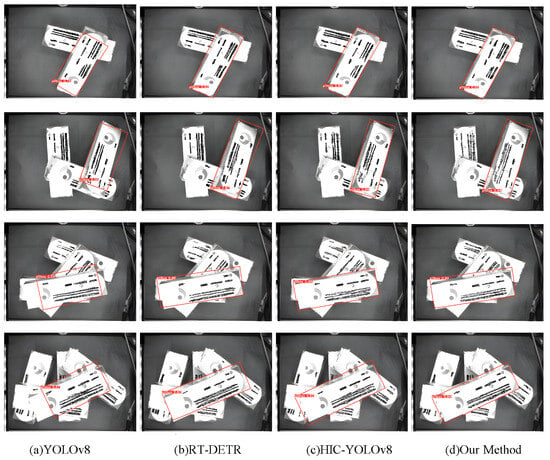

Next, the detection methods are compared on images of disordered stacked pillboxes. Images containing 2, 3, 4, and 5 pillboxes were selected and processed by each method, and the results are shown in Figure 15. The YOLOv8 algorithm (a) deviates in edge detection on unordered stacked images. This lowers its accuracy on the edges of overlapping pillboxes and increases the likelihood of false negatives. The RT-DETR method (b) detects overlapping edge regions better but fails to recognize details within small regions. The HIC-YOLOv8 method (c) substantially improves localization accuracy and edge detection and detects overlapping and rotated pillboxes more effectively. However, a few overlapping pillboxes are still misclassified in dense regions. In contrast, the proposed algorithm (d) performs best in complex scenes, accurately detecting the edges and overlapping regions of individual pillboxes. Overall, the proposed method shows the highest accuracy and robustness in detecting unordered stacked pillboxes.

Figure 15.

Target detection of unordered stacked pillboxes.

3.4. Per-Class Evaluation and Precision–Recall Analysis

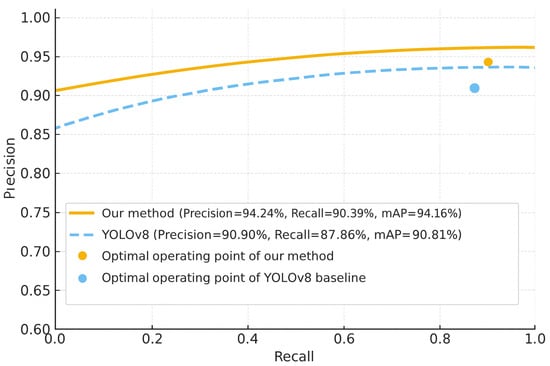

To further evaluate the detection performance and generalizability of the proposed model, a detailed precision–recall (PR) analysis was performed on the single-category dataset of rectangular pillboxes. Because no other pillbox shapes are included, this analysis focuses on how accurately the model identifies pillboxes under the complex stacking, rotation, and partial-occlusion conditions that commonly occur in automated pharmacy environments.

For comparison, the baseline YOLOv8 model and the proposed improved YOLOv8 framework were tested under identical experimental settings. The quantitative results are presented in Figure 16, which summarizes the precision, recall, and mean average precision (mAP) of both methods. The proposed model outperforms the baseline on all three metrics, achieving higher detection accuracy and better robustness on small, densely packed, and rotated targets.

Figure 16.

Precision–Recall (PR) curve of the proposed model and YOLOv8 baseline for pillbox detection.

The proposed method maintains higher precision across the entire recall range, and its PR curve is smoother and more stable than that of the baseline. This indicates that integrating the SPD-Conv, BiFormer, and CSL modules improves feature extraction and orientation sensitivity. In turn, this improves detection reliability and generalization performance in real-world industrial scenarios.

4. Discussion

To address the suboptimal accuracy of stacked pillbox detection, this article proposes a refined YOLOv8 network. It incorporates the SPD-Conv and BiFormer modules and uses the CSL technique to resolve edge exchangeability and angular periodicity. The pillbox images captured by the industrial camera are first preprocessed and labeled, and then detected with the enhanced YOLOv8 network. Experiments on disordered stacks of pillboxes show that the improved strategy substantially improves detection performance. Compared with the original YOLOv8 network, precision, recall, and mAP increase by 3.34%, 2.53%, and 3.35%, respectively. The network also shows the best overall performance among existing rotated small-target detection networks, especially in small-target detection. It meets industrial production requirements for both precision and time efficiency in disordered stacked pillbox detection. The algorithm achieves 94.16% mAP, demonstrating that integrating SPD-Conv, BiFormer, and CSL enhances detection precision and real-time efficiency for intelligent pharmacy applications. These gains in accuracy and efficiency come with several trade-offs. The hierarchical attention and bi-level routing mechanisms increase the structural complexity of the model, making hyperparameter tuning more demanding. Moreover, while SPD-Conv improves small-target representation, it marginally increases GPU memory consumption because of the higher feature dimensionality. These factors imply a trade-off between computational simplicity and detection precision.
Additionally, training stability can be sensitive to dataset imbalance, particularly in scenarios with extreme rotation variance or occlusion. Although YOLO architectures continue to evolve, the proposed modules operate independently of the core detection pipeline, which favors forward compatibility. They can therefore be regarded as modular enhancements that are transferable across backbones. The theoretical analysis in Section 2.9 supports this view and clarifies the mechanisms by which SPD-Conv, BiFormer, and CSL maintain robustness despite structural updates. Future work will quantify this stability through cross-version benchmarking. Even so, the present design already limits dependence on specific YOLO releases, which gives reason to expect the method to remain valid as the underlying detection framework evolves.

Despite these trade-offs, the proposed framework is robust owing to its modular design and dynamic attention mechanisms. The SPD-Conv and BiFormer modules enhance feature discrimination across scales and orientations, which may be beneficial beyond pharmaceutical applications. The architecture is not specific to pillboxes and could be adapted to other industrial and healthcare-related tasks, such as detecting stacked medical supplies, food packages, or logistics parcels. Its ability to capture spatially correlated patterns suggests potential for transfer learning and multi-environment deployment. Future work could explore lightweight transformer alternatives to further reduce computational cost. Self-distillation and semi-supervised learning may improve performance when labeled data are scarce. Incorporating deformable convolutions or hybrid 3D–2D perception could improve geometric awareness in more complex stacking configurations. Finally, cross-modal fusion with depth or thermal sensors could further strengthen robustness under occlusion and low-light conditions. This would provide a path toward real-world autonomous warehouses and data-driven smart pharmacy systems.

5. Conclusions and Recommendations for Future Work

This study presents an improved YOLOv8-based detection algorithm for unordered stacked pillboxes in automated medicine warehousing. The model integrates SPD-Conv for fine-grained spatial representation, BiFormer for hierarchical attention, and CSL for rotation stability. With these modules, it achieves notable improvements in precision, recall, and mAP over the baseline YOLOv8 and contemporary small-object detection networks. The proposed algorithm effectively addresses the challenges of small-target and rotated object detection in dense stacking scenarios. The data-driven framework delivers real-time, high-precision performance suitable for intelligent pharmacy environments. Experimental validation confirms that the model achieves strong robustness and computational efficiency compared with state-of-the-art approaches. Because no public benchmark dataset for pillbox detection is available, the present study is restricted to validation on the self-constructed dataset. Although this limits direct cross-domain comparison, the industrial origin of the dataset enhances its ecological validity. To address this constraint, the large-scale pillbox detection dataset and the corresponding YOLOv8-based implementation have been made openly accessible. Other researchers can use them to reproduce and extend our findings, and they establish a foundation for future benchmarking and cross-domain evaluation once comparable datasets become available. Future efforts should focus on optimizing the model for embedded hardware platforms and expanding the dataset to cover more variants of pharmaceutical packaging. In addition, integrating multimodal sensory inputs and developing explainable AI mechanisms could further enhance interpretability, reliability, and scalability in large-scale industrial applications.

Author Contributions

Conceptualization, J.P. and R.Z.; Methodology, X.W. and H.D.; Software, J.P.; Validation, J.F. and M.W.; Formal analysis, R.Z. and X.W.; Resources, J.F. and H.D.; Data curation, J.F.; Writing—original draft, J.P.; Writing—review & editing, M.W., X.W. and H.D.; Visualization, R.Z.; Supervision, M.W. and H.D.; Funding acquisition, M.W. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

Mincheng Wu declares that this study received funding from the “Pioneer” and “Leading Goose” Research and Development Program of Zhejiang under Grant 2024C01213, and from the National Natural Science Foundation of China under Grants 62203388 and 62572439. Xiang Wu declares that this study received funding from the Fundamental Research Funds for the Provincial Universities of Zhejiang under Grant RF-A2024002.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset and source code presented in this study are openly available at https://github.com/2250853131/Pillbox-Detection and https://github.com/2250853131/pillbox_data.

Conflicts of Interest

Jie Feng was employed by the company China Mobile (Hangzhou) Information Technology Co., Ltd., Hangzhou 311121, China. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

Nomenclature

| Abbreviation | Definition |
| --- | --- |
| SPD-Conv | Space-to-Depth Convolution |
| BiFormer | Bi-Level Routing Attention Transformer |
| CSL | Circular Smooth Label |
| CNN | Convolutional Neural Network |
| mAP | Mean Average Precision |
| FLOPs | Floating Point Operations |
| GFLOPs | Giga Floating Point Operations |
| PP-HGNetv2 | PaddlePaddle High Performance GPU Network v2 |

References

- Jiang, W.; Chen, Z.; Zhang, H.; Li, J. MelSPPNET—A self-explainable recognition model for emerald ash borer vibrational signals. Front. For. Glob. Change 2024, 7, 1239424.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Wang, R.; Jing, Y.; Gu, C.; He, S.; Chen, J. End-to-end multi-target flexible job shop scheduling with deep reinforcement learning. IEEE Internet of Things J. 2024, 12, 4420–4434.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Kijdech, D.; Vongbunyong, S. Manipulation of a Complex Object Using Dual-Arm Robot with Mask R-CNN and Grasping Strategy. J. Intell. Robot. Syst. 2024, 110, 103.

- Liu, L.; Xie, L.; Zhang, P.; Wang, Y.; Tian, H. Method of Dead Standing Tree Detection Based on RetinaNet Object Detection Network. Int. J. New Dev. Eng. Soc. 2024, 8, 110–114.

- Zhou, R.; Peng, H.; Liu, S. Research on Employee Abnormal Behavior Detection Algorithm Based on Improved SSD. Adv. Comput. Signals Syst. 2024, 8, 83–87.

- Hua, L.; Wu, X.; Gu, J. Optimization of intelligent guided vehicle vision navigation based on improved YOLOv2. Rev. Sci. Instrum. 2024, 95, 351–359.

- Xiao, D.; Shan, F.; Li, Z.; Tuan, B.; Liu, X.; Li, X. A Target Detection Model Based on Improved Tiny-Yolov3 Under the Environment of Mining Truck. IEEE Access 2019, 7, 123757–123764.

- Panda, J. Refining Yolov4 for vehicle detection. Int. J. Adv. Res. Eng. Technol. 2020, 11, 409–419.

- Pan, Q.; Liu, Y.; Wei, S. Design of a multi-category drug information integration platform for intelligent pharmacy management: A needs analysis study. Medicine 2024, 103, E37591.

- Jin, Y.; Wu, X.; Dong, H.; Yu, L.; Zhang, W. Helmet wearing detection algorithm based on improved YOLOv4. Comput. Sci. 2021, 48, 268–275.

- Wang, J.; Huang, Y.; Liu, Y. Low contrast stamped dates recognition for pill packaging boxes based on YOLO-SFD and image fusion. Digit. Signal Process. 2024, 153, 104602.

- Sun, W.; Niu, X.; Wu, Z.; Guo, Z. Lightweight Detection and Counting Method for Pill Boxes Using Machine Vision. Electronics 2024, 13, 4953.

- Li, H.; Chen, B.; Qian, L.; Zeng, K. Improvement of YOLOv5 algorithm for detecting counting of pill boxes in drug vending machines. Comput. Eng. Des. 2024, 45, 1572–1579.

- Liu, D.; Zhang, F.; Meng, T.; Ding, Y. Research on Deep Learning-based Drug Name Recognition Technology for Pill Boxes. J. Qingdao Univ. 2021, 34, 29–33+39.

- My, L.N.T.; Le, V.-T.; Vo, T.; Hoang, V.T. A Comprehensive Review of Pill Image Recognition. Comput. Mater. Contin. 2025, 82, 3693–3740.

- Yuan, B.; Lang, Y.; Chen, L.; Li, C. Design of Multi-target Pillbox Gripping System Based on YOLOv5 and U-NET. Packag. Eng. 2024, 45, 141–149.

- Shen, Z.; Chen, W.; Gan, Z. Research on rotating target detection algorithm based on improved YOLOv5 and its application. Packag. Eng. 2023, 44, 229–237.

- Wei, C.; Ni, W.; Qin, Y.; Wu, J.; Zhang, H.; Liu, Q.; Cheng, K.; Bian, H. RiDOP: A Rotation-Invariant Detector with Simple Oriented Proposals in Remote Sensing Images. Remote Sens. 2023, 15, 594.

- Li, H.; Ma, H. MCSC-Net: A Rotated Object Detection Strategy for Densely Arranged Objects. Appl. Soft Comput. 2024, 166, 112181.

- Wang, L.; Shen, Y.; Yang, J.; Zeng, H.; Gao, H. Rotated Points for Object Detection in Remote Sensing Images. IET Image Process. 2024, 18, 1655–1665.

- Zhu, L.; Wang, X.; Ke, Z.; Zhang, W.; Lau, R. BiFormer: Vision Transformer with Bi-Level Routing Attention. arXiv 2023, arXiv:2303.08810.

- Cao, X.; Zhang, Y.; Lang, S.; Gong, Y. Swin-Transformer-Based YOLOv5 for Small-Object Detection in Remote Sensing Images. Sensors 2023, 23, 3634.

- Tsai, C.-Y.; Lin, W.-C. Precise Orientation Estimation for Rotated Object Detection Based on a Unit Vector Coding Approach. Electronics 2024, 13, 4402.

- Yaseen, M. What is YOLOv8: An In-Depth Exploration of the Internal Features of the Next-Generation Object Detector. arXiv 2024, arXiv:2408.15857.

- Li, J.; Wu, J.; Shao, Y. FSNB-YOLOv8: Improvement of Object Detection Model for Surface Defects Inspection in Online Industrial Systems. Appl. Sci. 2024, 14, 7913.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. Appl. Intell. 2025, 159, 296–307.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Tang, S.; Zhang, S.; Fang, Y. HIC-YOLOv5: Improved YOLOv5 For Small Object Detection. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 6614–6619.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).