Abstract

Background: Understanding visual context in images is essential for enhanced Point-of-Interest (POI) recommender systems. Traditional models often rely on global features, overlooking object-level information, which can limit contextual accuracy. Methods: This study introduces micro-level contextual tagging, a method for extracting metadata from individual objects in images, including object type, frequency, and color. This enriched information is used to train WORLDO, a Vision Transformer model designed for multi-task learning. The model performs scene classification, contextual tag prediction, and object presence detection. It is then integrated into a content-based recommender system in which individual feature groups can be enabled or disabled. Results: The model was evaluated on its ability to classify scenes, predict tags, and detect objects within images. Ablation analysis confirmed the complementary role of tag, object, and scene features in representation learning, while benchmarking against CNN architectures showed that the transformer-based model performed better. Additionally, its integration with a POI recommender system demonstrated consistent performance across different feature settings. The recommender system produced relevant suggestions and remained robust even when specific components were disabled. Conclusions: Micro-level contextual tagging enhances the representation of scene context and supports more informative recommendations. WORLDO provides a practical framework for incorporating object-level semantics into POI applications through efficient visual modeling.

1. Introduction

Scene recognition interprets images to assign categories to environments based on their visual attributes, a capability that is vital for Points-of-Interest (POI) services such as recommending places to explore. Standard image classification models, such as Convolutional Neural Networks (CNN) [] and Vision Transformers (ViT) [], achieve high accuracy, yet they tend to emphasize global visual features.

Consequently, these methods often neglect finer-grained contextual details, limiting their ability to capture attributes such as environmental colors and object-level properties within a scene. Meanwhile, object recognition models such as DETR [] effectively detect and localize individual items, but they typically lack a structured way of using these objects to derive deeper contextual meaning.

To overcome these limitations, this paper introduces micro-level contextual tagging, a method for extracting detailed attributes from individual objects within an image. Building on the WORLD model [], this paper enhances the original model and demonstrates its application by integrating it into a content-based POI recommender system.

Unlike the previous Bag-of-Objects (BoO) methodology, which tags scenes based on the objects detected in them, micro-level tagging examines finer attributes of these objects, capturing object-specific colors and encoding properties such as their frequency in an image. This enriched metadata increases the granularity of the context available for understanding and categorizing POI scenes.

Building on this refined tagging mechanism, this paper proposes WORLD with Objects (WORLDO), a novel Transformer-based deep learning model specifically designed to leverage these micro-level contextual attributes for improved image classification and contextual tagging. The model is trained on a dataset generated through micro-level tagging, giving it a more detailed understanding of scene context than conventional methods that rely solely on global visual features.

Finally, to evaluate its practical effectiveness, the proposed model is integrated into a POI-based recommender system. The recommender system’s accuracy and overall performance are benchmarked across different feature configurations, demonstrating the robustness of the proposed strategy within POI-related applications.

This paper’s primary contributions include:

- Introducing micro-level tagging, a method that systematically generates enriched contextual metadata by identifying and annotating detailed attributes of individual objects, such as colors, within images.

- Developing a specialized Transformer-based deep learning model trained on micro-level contextual metadata, enhancing scene recognition with precise contextual understanding beyond standard classification approaches.

- Evaluating the proposed model within a POI-based recommendation system, showcasing its potential and robustness.

The remainder of this paper is organized as follows: Section 2 presents the materials and methods, detailing the modifications made to the original WORLD model and introducing WORLDO, the newly proposed Transformer-based architecture for micro-level contextual tagging. It also describes the datasets used, the data preprocessing pipeline, and the model training process. Section 3 evaluates the performance of the WORLDO model across its three supervised tasks and includes a detailed analysis of results using confusion matrices. Section 4 introduces the content-based recommender system that integrates the WORLDO model and evaluates its effectiveness using Precision@K across multiple configurations. Section 5 concludes the paper by summarizing key findings.

2. Materials and Methods

This study extends our previous work on the WORLD architecture [], introducing several modifications that improve its efficiency and applicability for POI-oriented scene understanding. As noted in that work, WORLD could be developed further by incorporating the colors of objects, and integrating the model with a POI recommender system was suggested as a future application.

The updated model, WORLDO, removes the BERT-based textual branch and relies solely on a Vision Transformer (ViT) for feature extraction. This change reduces computational overhead and simplifies the training pipeline, resulting in a more lightweight architecture.

WORLDO is trained in a multi-task setup with supervision from three tasks: scene classification, contextual tag prediction, and object presence detection. These outputs capture both high-level semantic categories and fine-grained visual attributes, enabling the model to build a rich understanding of image content. This representation is well suited to downstream applications such as POI identification, where recognizing visual patterns in environments can support personalized or context-aware tasks. Finally, the proposed model was integrated into a content-based recommender system that generates recommendations from the visual and contextual understanding learned during multi-task training, allowing it to suggest relevant POIs by leveraging the learned semantic and visual features.

The following sections describe the deep learning architectures involved and detail the data preparation and training process.

2.1. Related Work

POI recommender systems aim to suggest locations that match users’ interests and mobility patterns. Although conceptually similar to conventional recommender systems, POI recommenders must also consider the spatial and physical interactions between users and places. Early research primarily extended classical methods such as collaborative filtering and matrix factorization to location-based social networks (LBSNs). Ye et al. [] integrated LBSN activity data into collaborative filtering, while Cheng et al. [] incorporated geographical and social influence into matrix factorization. Later frameworks modeled more complex spatiotemporal and sequential dependencies: iGSLR [] and LORE [] captured geographical and sequential check-in patterns, GeoSoCa [] combined personalized geographic distributions with social correlations, and Li et al. [,] introduced unified matrix-factorization approaches to learn both intrinsic and extrinsic user interests from observed and potential check-ins.

Subsequent studies enhanced these paradigms through personalized ranking (PRFMC []), category-aware recommendation [], latent geographic representation learning (POI2Vec []), and contextual regularization with graph-based constraints []. While these classical methods improved performance, their linear modeling assumptions limit their ability to capture complex user behaviors and heterogeneous contextual factors. This limitation has driven a shift toward deep learning–based frameworks [,], which can learn nonlinear relationships and richer representations for more accurate POI recommendation.

Building on these developments, recent research has increasingly explored visual and multimodal cues to enrich POI recommendation beyond check-in or textual data. Visual representations can capture environmental context and aesthetic features that strongly influence user preferences but are not reflected in metadata alone. Advances in deep convolutional and transformer-based architectures have enabled high-level semantic understanding from images, opening new directions in scene recognition and image-driven recommendation. Vision Transformers (ViTs) have demonstrated superior scalability and context modeling compared to convolutional networks, effectively capturing both global spatial layouts and object-level relationships within complex scenes [,,]. Parallel work in multimodal recommender systems highlights strategies for integrating heterogeneous signals through modality encoding, feature interaction, feature enhancement, and optimization [].

Despite these advances, image-based POI recommendation remains underexplored, with most studies relying on global visual embeddings rather than explicit object- or tag-level context. The approach proposed in this work, WORLDO, addresses this gap by introducing micro-level contextual tagging within a ViT framework to produce semantically rich visual embeddings for POI recommendation, enabling finer-grained understanding of scene context compared to existing global feature-based methods.

2.2. Transformer Models

Transformers have had a major impact on deep learning, most notably by revolutionizing natural language processing (NLP). Introduced by Vaswani et al. [], Transformers use self-attention to weight the importance of each part of the input dynamically, capturing dependencies across a sequence without recurrent or convolutional structures.
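To make the self-attention mechanism concrete, the following minimal NumPy sketch implements single-head scaled dot-product attention, softmax(QKᵀ / √d_k)·V, as defined by Vaswani et al. The projection matrices `w_q`, `w_k`, `w_v` and the toy dimensions are illustrative assumptions; real Transformers use multiple heads and learned weights.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model) input sequence; w_q, w_k, w_v: (d_model, d_k) projections.
    Returns the attended outputs (seq_len, d_k) and the attention weights (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    # Every position scores every other position, so dependencies are captured
    # across the whole sequence without recurrence or convolution.
    scores = q @ k.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))                      # 5 tokens, model width 8
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)                     # (5, 4) (5, 5)
```

Each row of `attn` is a probability distribution over the input positions, which is what "weighting the importance of input data dynamically" amounts to in practice.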

Building on this success in NLP, researchers adapted the Transformer architecture to computer vision tasks, developing the Vision Transformer (ViT) []. ViT treats images as sequences by dividing them into fixed-size patches, which are linearly embedded and fed into a Transformer encoder. This approach allows the model to use self-attention to capture global contextual relationships across image patches. ViT has demonstrated competitive or superior performance compared to traditional Convolutional Neural Networks (CNNs) in the POI domain [].
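The patching step can be illustrated with a short NumPy sketch. It assumes a 224 × 224 RGB input and 16 × 16 patches (the ViT-Base/16 setting, assumed here rather than stated in the text), which yields a 14 × 14 grid of 196 patches, each flattened to 16 · 16 · 3 = 768 values. The random projection stands in for the learned linear embedding, and the class token and position embeddings used by ViT are omitted.

```python
import numpy as np

def image_to_patches(image: np.ndarray, patch_size: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C).
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    grid_h, grid_w = h // patch_size, w // patch_size
    # (grid_h, p, grid_w, p, C) -> (grid_h, grid_w, p, p, C): group pixels by patch.
    patches = image.reshape(grid_h, patch_size, grid_w, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    # Raster order over the patch grid, each patch flattened into one vector.
    return patches.reshape(grid_h * grid_w, patch_size * patch_size * c)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))                 # one RGB image at 224 x 224
patches = image_to_patches(image)                 # (196, 768)
d_model = 768                                     # hidden width of a ViT-Base encoder
w_embed = rng.normal(scale=0.02, size=(patches.shape[1], d_model))
tokens = patches @ w_embed                        # linear patch embedding, (196, 768)
print(patches.shape, tokens.shape)
```

The resulting sequence of 196 tokens is what the Transformer encoder attends over, in the same way a sentence's word tokens are processed in NLP.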

Transformers have also excelled in object detection. Carion et al. [] introduced the DEtection TRansformer (DETR), which eliminates the need for hand-crafted components such as region proposal networks and post-processing steps like non-maximum suppression. Unlike traditional object detection frameworks such as Faster R-CNN [] and YOLO [], DETR directly predicts object classes and bounding boxes using a transformer encoder–decoder structure. This design enables it to detect multiple objects in parallel while preserving spatial relationships, making it well suited to automated contextual tagging in POI scene classification.

2.3. Proposed Model

This work presents an extension of WORLD, a multimodal neural network designed for multi-task learning that integrates Vision Transformer (ViT) and BERT for visual and textual representation learning, respectively. The original model is capable of performing both multi-label tagging and single-label classification from image and tag inputs.

WORLDO has been streamlined by removing the BERT-based textual branch, resulting in a vision-only model focused entirely on visual content understanding. This change brings two practical advantages. First, removing BERT significantly reduces the computational complexity and memory footprint of the model, making it lighter and more efficient during both training and inference. Second, the model no longer requires tokenized textual input, which simplifies the data preprocessing pipeline and eliminates the dependency on additional natural language components. As a result, WORLDO can be deployed more easily in environments where only visual data is available.

Despite this simplification, the model’s multi-task capacity has been expanded to three distinct prediction tasks: (1) scene classification (Formula (3)), (2) contextual tagging (Formula (4)), and (3) object presence prediction (Formula (5)). Each image is processed by the Vision Transformer, which encodes the input into a high-dimensional visual representation. This representation is projected into a lower-dimensional latent space and passed to the model’s task-specific heads, as summarized in Table 1.

Table 1.

Formulae of WORLDO model.

- Class Head: A fully connected classification layer responsible for predicting a single label from a predefined set of categories, each representing a type of POI image (e.g., amusement park, airport).

- Tags Head: A multi-label prediction head designed to identify all relevant descriptive tags associated with an image (e.g., crowded, colorful_cars).

- Objects Head: A prediction branch introduced to model object presence information derived from structured annotations (e.g., car, person, truck).
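The flow from the shared representation to the three heads can be sketched in NumPy. This is not the authors' implementation: the ViT encoder is replaced by random 768-dimensional stand-in features (768 being the hidden width of a ViT-Base model, assumed here), the 256-dimensional latent size follows Section 2.3.3, and the numbers of scene classes, tags, and object classes are hypothetical. The softmax on the Class Head yields one scene per image, while independent sigmoids on the Tags and Objects Heads let several labels be active at once.

```python
import numpy as np

rng = np.random.default_rng(42)

D_VIT, D_LATENT = 768, 256                    # ViT feature width (assumed), shared latent size
N_CLASSES, N_TAGS, N_OBJECTS = 10, 8, 74      # hypothetical head sizes

def dense(d_in, d_out):
    # Randomly initialised weights and zero bias standing in for trained layers.
    return rng.normal(scale=0.02, size=(d_in, d_out)), np.zeros(d_out)

proj = dense(D_VIT, D_LATENT)                 # projection into the shared latent space
class_head = dense(D_LATENT, N_CLASSES)
tags_head = dense(D_LATENT, N_TAGS)
objects_head = dense(D_LATENT, N_OBJECTS)

def apply_dense(layer, x):
    w, b = layer
    return x @ w + b

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(vit_features):
    """vit_features: (batch, D_VIT) image representations from the backbone."""
    z = apply_dense(proj, vit_features)       # shared (batch, 256) representation
    return {
        "class_probs": softmax(apply_dense(class_head, z)),     # one scene per image
        "tag_probs": sigmoid(apply_dense(tags_head, z)),         # independent tag scores
        "object_probs": sigmoid(apply_dense(objects_head, z)),   # independent object scores
    }

batch = rng.normal(size=(16, D_VIT))          # stand-in for ViT outputs, batch of 16
outputs = forward(batch)
predicted_scene = outputs["class_probs"].argmax(axis=1)
predicted_tags = outputs["tag_probs"] > 0.5   # 0.5 decision threshold is an assumption
```

Because all three heads read the same 256-dimensional vector, gradients from every task shape one shared representation, which is the representation later reused by the recommender.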

2.3.1. Datasets

Like WORLD, WORLDO relies on the Common Objects in Context (COCO) [] dataset for object detection, through a DETR model pretrained on it. COCO is a large and diverse dataset containing over 330,000 images and 1.5 million object instances across 80 object categories, including people, animals, vehicles, household items, and outdoor objects such as traffic lights. As such, it is widely used as a benchmark for object detection tasks.

In addition, the mini-Places [] dataset, a subset of Places2 [], is processed with an extended version of the Bag-of-Objects methodology, originally developed for WORLD, to generate contextual tags and identify objects within images automatically. The complete process is described below. For this study, 80% of the mini-Places dataset is used; the selected classes and the number of training and testing images per class are detailed in Table 2.

Table 2.

Mini-Places dataset (80% subset).

The contextual tags produced by this process form part of a custom dataset created for this experiment, which is used to fine-tune a ViT model pretrained on ImageNet [].

2.3.2. Data Pipeline Preparation

To support the training of the proposed visual multi-task model, a structured data preprocessing pipeline was developed. The pipeline begins by loading a preprocessed JSON file generated by running images through the standard pretrained DETR model (trained on the COCO dataset). Each image from the mini-Places training set is passed through DETR, which predicts object classes together with confidence scores; a confidence threshold is then applied to filter out low-confidence predictions, so that only high-confidence detections are retained. The object classes detected across the dataset are: airplane, apple, backpack, banana, baseball bat, baseball glove, bear, bed, bench, bicycle, bird, boat, book, bottle, bowl, broccoli, bus, cake, car, carrot, cat, cell phone, chair, clock, couch, cow, dining table, dog, donut, elephant, fire hydrant, fork, frisbee, giraffe, hair drier, horse, hot dog, keyboard, kite, knife, laptop, microwave, motorcycle, orange, oven, parking meter, person, pizza, potted plant, refrigerator, remote, sandwich, scissors, sheep, sink, skateboard, skis, snowboard, spoon, sports ball, stop sign, suitcase, surfboard, teddy bear, tennis racket, toaster, toilet, toothbrush, traffic light, train, truck, umbrella, wine glass, zebra. The resulting annotation for each image includes the file name, its associated place category, a list of contextual tags, and a dictionary of detected objects with aggregated class-wise counts and additional properties such as color (when available).
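The filtering and aggregation step can be sketched in plain Python. The input format (a list of detections with `label`, `score`, and an optional `color`), the threshold value of 0.9, the image and place names, and the nested layout of the `objects` entry are all hypothetical; the threshold value is not reported in this section, and the exact JSON layout is shown only in Figure 1. With the Hugging Face Transformers implementation of DETR, the thresholding itself can be delegated to `DetrImageProcessor.post_process_object_detection`, which accepts a `threshold` argument.

```python
import json
from collections import Counter, defaultdict

CONFIDENCE_THRESHOLD = 0.9  # hypothetical value, not taken from the study

def build_record(image_name, place, detections, threshold=CONFIDENCE_THRESHOLD):
    """Aggregate raw detections for one image into a JSON-ready record.

    detections: list of dicts with 'label', 'score' and an optional 'color' key
    (a hypothetical input format). Tags are filled in later by the tagging rules.
    """
    kept = [d for d in detections if d["score"] >= threshold]  # drop low-confidence boxes
    counts = Counter(d["label"] for d in kept)
    colors = defaultdict(Counter)
    for d in kept:
        if d.get("color"):                    # color is only recorded when available
            colors[d["label"]][d["color"]] += 1
    objects = {
        label: {"count": n, "colors": dict(colors[label])} for label, n in counts.items()
    }
    return {"image": image_name, "place": place, "tags": [], "objects": objects}

detections = [
    {"label": "car", "score": 0.98, "color": "red"},
    {"label": "car", "score": 0.95, "color": "white"},
    {"label": "car", "score": 0.93, "color": "red"},
    {"label": "person", "score": 0.97},
    {"label": "truck", "score": 0.42, "color": "blue"},  # below threshold, discarded
]
record = build_record("street_0001.jpg", "street", detections)
print(json.dumps(record, indent=2))
```

Keeping per-color counts alongside the total count is what allows color-aware tags to be derived later without re-running detection.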

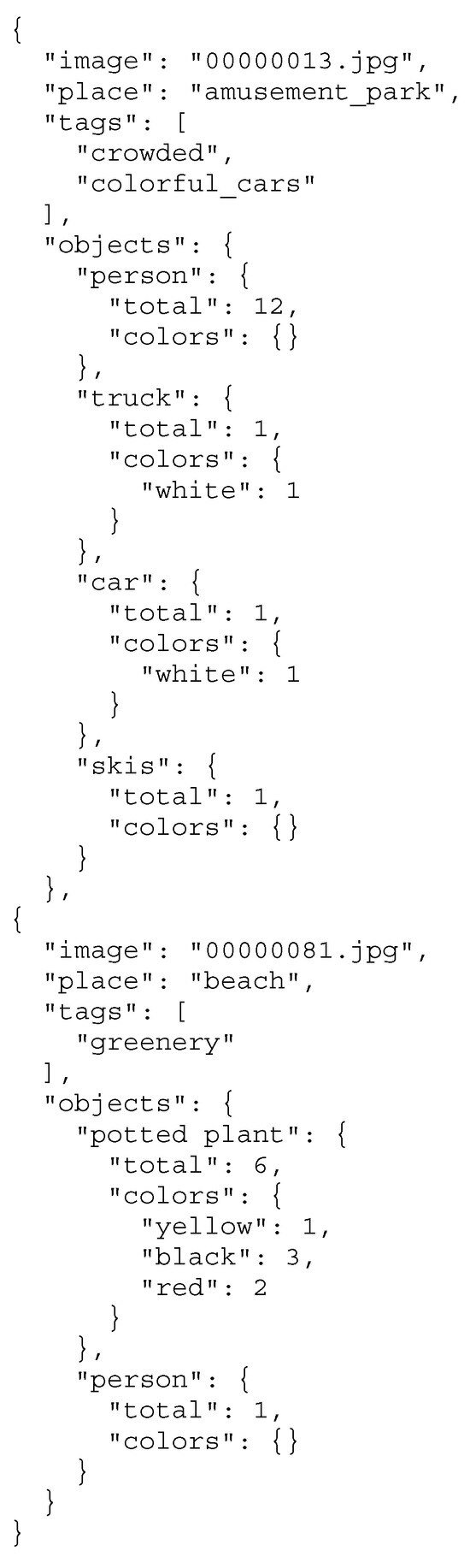

As shown in the sample JSON file in Figure 1, each image record stores the following details:

Figure 1.

Sample JSON file for Model Training.

- Image: the filename of the processed image;

- Place: the class of the processed image, indicating the scene;

- Tags: contextual tags automatically generated by predefined rules applied to the detected objects;

- Objects: a dictionary whose keys are object classes (e.g., “person”, “truck”) and whose values are their respective counts in the image. Objects are further separated by additional attributes (e.g., color), with each variant counted separately.

Detected objects are grouped into categories, each associated with a tag (e.g., “crowded”). A tag is assigned when the count of objects in its category exceeds a category-specific threshold. Table 3 lists the tags, the objects to which each tag applies, and their thresholds.

Table 3.

Object-based thresholds for tagging.

In addition to the threshold-based categories listed in Table 3, several compound and contextual tags are generated dynamically according to specific object co-occurrence rules. The tag “urban-outdoor” is added when transportation objects, people, and at least one umbrella appear in the same scene, indicating a busy outdoor environment. The tag “cozy-indoor” is produced when the combined count of household furniture and appliances reaches four or more, reflecting an interior setting. “House-party” is assigned when five or more people and at least three pieces of furniture are detected, while “techie-setup” appears when two or more gadgets coexist with at least two furniture items. A color-based tag, “colorful_cars”, is added when over 30% of the detected cars differ in color, capturing visual diversity in scenes with cars.
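The co-occurrence rules above translate directly into code. In the sketch below, the object groups (transportation, furniture, appliances, gadgets) are hypothetical stand-ins for the category definitions in Table 3, and “over 30% of the detected cars differ in color” is interpreted, as an assumption, as more than 30% of colored cars having a color other than the most common one.

```python
# Hypothetical object groups; the study's actual categories are defined in Table 3.
TRANSPORT = {"bicycle", "car", "motorcycle", "bus", "train", "truck", "boat"}
FURNITURE = {"chair", "couch", "bed", "dining table"}
APPLIANCES = {"microwave", "oven", "toaster", "sink", "refrigerator"}
GADGETS = {"laptop", "keyboard", "cell phone", "remote"}

def total(counts, group):
    # Sum the counts of all detected objects that belong to a group.
    return sum(counts.get(label, 0) for label in group)

def compound_tags(counts, car_colors):
    """counts: {object: count}; car_colors: {color: number of cars with that color}."""
    tags = []
    if (total(counts, TRANSPORT) >= 1 and counts.get("person", 0) >= 1
            and counts.get("umbrella", 0) >= 1):
        tags.append("urban-outdoor")
    if total(counts, FURNITURE) + total(counts, APPLIANCES) >= 4:
        tags.append("cozy-indoor")
    if counts.get("person", 0) >= 5 and total(counts, FURNITURE) >= 3:
        tags.append("house-party")
    if total(counts, GADGETS) >= 2 and total(counts, FURNITURE) >= 2:
        tags.append("techie-setup")
    colored = sum(car_colors.values())
    if colored:
        # Share of cars whose color differs from the dominant color (assumed reading).
        dominant = max(car_colors.values())
        if (colored - dominant) / colored > 0.30:
            tags.append("colorful_cars")
    return tags

print(compound_tags({"car": 3, "person": 2, "umbrella": 1}, {"red": 2, "white": 1}))
# ['urban-outdoor', 'colorful_cars']
```

Because the rules only read aggregated counts, they can be re-run cheaply over the stored JSON whenever thresholds or groups are revised.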

The pipeline then extracts all unique scene categories and maps them to integer indices, enabling supervised single-label classification through the model’s Class Head. In parallel, it collects all unique contextual tags and object classes, converting them into fixed-length binary vectors that serve as targets for multi-label classification via the Tags Head and Objects Head, respectively.
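The label encoding can be sketched in plain Python. The records and place names below are hypothetical; vocabularies are sorted so that indices are reproducible, and object counts are reduced to presence (1.0) or absence (0.0), matching the object presence task. In PyTorch, the class index would feed a cross-entropy loss and the two float vectors a binary cross-entropy loss.

```python
def build_vocab(values):
    # Sorted so that indices are deterministic across runs.
    return {v: i for i, v in enumerate(sorted(set(values)))}

def multi_hot(active, vocab):
    # 1.0 at the index of every active label, 0.0 elsewhere.
    vec = [0.0] * len(vocab)
    for item in active:
        vec[vocab[item]] = 1.0
    return vec

records = [  # hypothetical annotation records
    {"place": "kitchen", "tags": ["cozy-indoor"], "objects": {"oven": 1, "chair": 4}},
    {"place": "street", "tags": ["urban-outdoor", "colorful_cars"],
     "objects": {"car": 3, "person": 2, "umbrella": 1}},
    {"place": "kitchen", "tags": [], "objects": {"refrigerator": 1}},
]
class_to_idx = build_vocab(r["place"] for r in records)
tag_to_idx = build_vocab(t for r in records for t in r["tags"])
object_to_idx = build_vocab(o for r in records for o in r["objects"])

# One (class index, tag vector, object-presence vector) triple per image.
targets = [
    (class_to_idx[r["place"]], multi_hot(r["tags"], tag_to_idx),
     multi_hot(r["objects"], object_to_idx))
    for r in records
]
```

Fixed-length vectors let every image in a batch share the same target shape, regardless of how many tags or objects it contains.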

This unified supervision format enables efficient encoding of categorical data and supports scalable, multi-task training. As a result, the model is trained to jointly predict three complementary tasks: the place represented in the image, its descriptive semantic tags, and the objects present within the scene.

2.3.3. Training of the Model

This work aims to demonstrate the feasibility of extending a Vision Transformer (ViT)-based architecture to perform rich multi-task learning over Point-of-Interest (POI) scenes by integrating supervision from scene categories, contextual tags, and object detections. As the primary focus was on evaluating the structure and utility of the proposed model, extensive hyperparameter tuning was not prioritized. Instead, the model was trained until it produced consistent and interpretable outputs across all heads.

The proposed model, WORLDO, was trained using the PyTorch framework (v2.3.1+cu121) with Torchvision (v0.18+cu121) and CUDA (v12.1). Training was performed on a system equipped with an AMD Threadripper 1950X processor, an NVIDIA GTX 1080 Ti GPU, 32 GB of DDR4 RAM (3200 MHz), and a Samsung 960 Pro 512 GB SSD.

To ensure the correct implementation of the ViT backbone, the ViTModel module from the Hugging Face Transformers library [] was used without modifying its core architecture. A linear projection layer was added to map the ViT output into a lower-dimensional latent space suitable for multi-task learning.

WORLDO was trained for ten epochs, a duration determined by hardware constraints. Each training sample consisted of an input image along with its corresponding supervision signals: a scene class label, a set of contextual tags, and object presence annotations. The ViT backbone encoded each image into a high-level visual representation, which was then projected into a 256-dimensional feature vector. This latent size was chosen to balance representational richness with computational efficiency: preliminary experiments with 128- and 512-dimensional vectors showed only marginal performance differences, while the 512-dimensional variant significantly increased memory consumption, so 256 dimensions were adopted as an effective compromise. The resulting shared representation was then passed to three task-specific heads:

- Tags Head: outputs logits for multiple descriptive tags;

- Class Head: predicts a single scene category;

- Objects Head: performs multi-label prediction for object presence.

Each of these heads computes its own loss using an appropriate objective function. Binary Cross-Entropy Loss with logits is used for the tag and object heads, as both represent independent multi-label classification tasks. The scene classification head uses Cross-Entropy Loss, suitable for single-label classification. The overall loss is calculated as a weighted sum of these three task-specific losses, allowing for joint optimization, which is illustrated by Formula (6).
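Formula (6) combines the three losses as a weighted sum. The NumPy sketch below writes out the same quantities that PyTorch’s `nn.CrossEntropyLoss` and `nn.BCEWithLogitsLoss` compute under their default mean reduction; the task weights are not given in this section, so equal weights of 1.0 are an assumption.

```python
import numpy as np

def bce_with_logits(logits, targets):
    # Numerically stable mean of -[y*log(sigmoid(x)) + (1 - y)*log(1 - sigmoid(x))].
    return float(np.mean(np.maximum(logits, 0) - logits * targets
                         + np.log1p(np.exp(-np.abs(logits)))))

def cross_entropy(logits, labels):
    # Mean negative log-softmax probability of the correct class.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

def multitask_loss(class_logits, class_labels, tag_logits, tag_targets,
                   obj_logits, obj_targets, w_class=1.0, w_tags=1.0, w_obj=1.0):
    # Weighted sum of the three task losses; the weight values are assumptions.
    return (w_class * cross_entropy(class_logits, class_labels)
            + w_tags * bce_with_logits(tag_logits, tag_targets)
            + w_obj * bce_with_logits(obj_logits, obj_targets))

rng = np.random.default_rng(0)
loss = multitask_loss(
    rng.normal(size=(4, 5)), np.array([0, 2, 1, 4]),                            # 5 scene classes
    rng.normal(size=(4, 3)), rng.integers(0, 2, size=(4, 3)).astype(float),     # 3 tags
    rng.normal(size=(4, 6)), rng.integers(0, 2, size=(4, 6)).astype(float),     # 6 objects
)
```

Since a single scalar is back-propagated, the weights control how strongly each task pulls on the shared backbone; raising one weight prioritizes that head at the others’ expense.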

The model parameters were optimized using the AdamW [] optimizer with a learning rate of 0.00005 and a weight decay of 0.01. AdamW, a variant of the Adam optimizer [], was selected due to its proven effectiveness in training transformer-based architectures. Unlike traditional Adam, AdamW decouples weight decay from the gradient update, resulting in more stable training and better generalization, especially important when fine-tuning large pretrained models.
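The difference between decoupled weight decay and an L2 penalty shows up in a single update step. The NumPy sketch below follows the AdamW update rule in the form used by PyTorch’s `torch.optim.AdamW`, with the learning rate and weight decay reported above; β1 = 0.9, β2 = 0.999, and ε = 1e-8 are common defaults assumed here. With a zero loss gradient, AdamW shrinks a weight by exactly lr · λ · θ, whereas Adam with an L2 term passes the penalty through the adaptive normalization, so the shrinkage no longer scales with the weight’s magnitude.

```python
import numpy as np

def adamw_step(theta, grad, m, v, t, lr=5e-5, weight_decay=0.01,
               beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdamW update; returns new (theta, m, v). t is the 1-based step count."""
    # Decoupled weight decay: shrink the weights directly, independently of the gradient.
    theta = theta * (1 - lr * weight_decay)
    # Standard Adam moment estimates, computed from the raw loss gradient only.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v

def adam_l2_step(theta, grad, m, v, t, lr=5e-5, weight_decay=0.01,
                 beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam with an L2 penalty folded into the gradient, for comparison."""
    grad = grad + weight_decay * theta   # the penalty is rescaled by the adaptive denominator
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return theta - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

theta0, zero = np.array([2.0]), np.zeros(1)
decoupled, _, _ = adamw_step(theta0, zero, zero, zero, t=1)
coupled, _, _ = adam_l2_step(theta0, zero, zero, zero, t=1)
print(decoupled, coupled)   # decay of lr*wd*theta = 1e-6 vs. roughly lr = 5e-5
```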

The chosen learning rate of 0.00005 is commonly used in fine-tuning settings, striking a balance between preserving pretrained knowledge and enabling adaptation to downstream tasks. The inclusion of weight decay serves as a regularization mechanism to mitigate overfitting, particularly in the shared transformer backbone and the task-specific heads.

The image resolution was fixed at 224 × 224 pixels to align with the input requirements of the pretrained ViT encoder, ensuring consistent and high-quality visual feature extraction. While larger ViT configurations (e.g., 256 × 256 input) could capture finer spatial details, they substantially increase training time and GPU memory usage. Considering the available hardware resources and the study’s primary goal of evaluating feature representations rather than maximizing accuracy, the 224 × 224 resolution of the pretrained ViT backbone was retained. A batch size of 16 was selected to balance memory limitations with training efficiency, and a fixed random seed of 42 was used to ensure experimental reproducibility across all runs. The source code and dataset generation scripts are provided in the Supplementary Materials.

This training configuration enabled WORLDO to learn a unified, high-level visual representation that supports the simultaneous prediction of semantic tags, scene classes, and object-level information.

With this specific training configuration, WORLDO requires only 338 MB of disk space, compared to WORLD’s 767 MB. Additionally, WORLDO has 86,611,298 trainable parameters, whereas WORLD has 196,279,580. This makes WORLDO less computationally expensive to train and deploy.
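The reported sizes can be related to the parameter counts with simple arithmetic, assuming every parameter is stored as a 32-bit float and ignoring serialization overhead. Under that assumption, WORLDO’s weights take 86,611,298 × 4 = 346,445,192 bytes (about 338,325 KiB) and WORLD’s take 785,118,320 bytes (about 766,717 KiB); the ratio between the two parameter counts (about 0.44) matches the ratio of the reported 338 MB and 767 MB figures.

```python
BYTES_PER_FP32 = 4  # assumption: weights stored as 32-bit floats

def fp32_size(num_params):
    """Raw weight size as (bytes, KiB, MiB), ignoring file-format overhead."""
    n_bytes = num_params * BYTES_PER_FP32
    return n_bytes, n_bytes / 1024, n_bytes / 1024 ** 2

worldo = fp32_size(86_611_298)
world = fp32_size(196_279_580)
print(worldo)
print(world)
print(f"size ratio WORLDO/WORLD: {worldo[0] / world[0]:.2f}")
```

Halving the parameter count roughly halves both storage and the memory needed to hold the weights at inference time, which is the practical basis of the deployment claim.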

2.4. Recommender Systems

Recommender systems are widely used to enhance user experiences by predicting and suggesting relevant content. In the domain of Points-of-Interest (POI) recommendation, these systems guide users toward locations that align with their preferences, behaviors, and contextual needs. POI recommendation methods are broadly categorized into three main approaches: content-based filtering, collaborative filtering, and hybrid approaches. Each method has distinct mechanisms and advantages, and their effectiveness can be significantly enhanced through contextual tagging, which incorporates additional information to refine recommendations.

2.4.1. Content-Based Filtering

Content-based POI recommendation systems suggest locations based on the attributes of previously visited places. These models analyze features such as location type, user preferences, and descriptive tags to identify similarities between different POIs.

Agrawal et al. [] demonstrated such a model, introducing a content-based POI recommendation system that leverages tag embeddings to improve recommendation accuracy. By representing descriptive tags in a continuous vector space, their approach captures semantic similarities between locations, enabling more precise suggestions. For example, if a user frequently visits historical landmarks, the system can recommend similar sites based on their tag embeddings.

While content-based filtering enhances personalization, it faces challenges such as the cold-start problem, where limited user history reduces recommendation effectiveness.

2.4.2. Collaborative Filtering

Collaborative filtering predicts the preferences of a target user from the behavior of other users. The earliest collaborative filtering models computed similarities between users or between items [].

In the context of POIs, collaborative filtering makes recommendations based on user interactions and preferences inferred from other users. By leveraging crowd-sourced behavior, this approach identifies patterns in user visits, ratings, and check-ins to generate recommendations. There are two common forms of collaborative filtering:

- User-based collaborative filtering, which recommends locations based on the preferences of users with similar profiles.

- Item-based collaborative filtering, which suggests locations that share user visit patterns with previously visited POIs.
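The two variants can be contrasted on a toy example. The NumPy sketch below uses a hypothetical binary user–POI check-in matrix and cosine similarity as the similarity measure (one common choice, assumed here), and scores unvisited POIs for a user either from similar users’ visits or from POIs similar to those already visited.

```python
import numpy as np

# Hypothetical check-in matrix: rows are users, columns are POIs (1 = visited).
checkins = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 1],
], dtype=float)

def cosine_matrix(rows):
    """Pairwise cosine similarity between the rows of a matrix."""
    norms = np.linalg.norm(rows, axis=1, keepdims=True)
    unit = rows / np.where(norms == 0, 1.0, norms)   # leave all-zero rows as zeros
    return unit @ unit.T

user_sim = cosine_matrix(checkins)      # user-based: compare users' visit histories
item_sim = cosine_matrix(checkins.T)    # item-based: compare POIs' visitor sets

def user_based_scores(user):
    # Weight every other user's visits by their similarity to the target user.
    weights = user_sim[user].copy()
    weights[user] = 0.0
    scores = weights @ checkins
    scores[checkins[user] > 0] = -np.inf   # do not re-recommend visited POIs
    return scores

def item_based_scores(user):
    # Score each POI by its similarity to the POIs the user has already visited.
    scores = checkins[user] @ item_sim
    scores[checkins[user] > 0] = -np.inf
    return scores

print(int(np.argmax(user_based_scores(0))), int(np.argmax(item_based_scores(0))))  # 2 2
```

Both variants depend entirely on interaction data, which is why a user or POI with no check-ins cannot be scored; this is the cold-start limitation that content-based and hybrid approaches try to avoid.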

2.4.3. Hybrid Approaches

Hybrid recommender systems combine two or more recommendation strategies to leverage their complementary strengths and mitigate individual limitations. This approach aims to enhance recommendation accuracy and address common challenges such as cold-start problems and data sparsity [].

2.4.4. Integrating WORLDO with Recommender System

The developed recommender system uses a content-based filtering approach that combines multiple semantic dimensions derived from the WORLDO model. Specifically, the recommendation process incorporates scene classification, contextual tags, object annotations, and visual embeddings extracted from the Vision Transformer (ViT) backbone. Each POI is represented by combining these features into a unified vector, enabling nuanced recommendations that reflect diverse visual and contextual attributes. This multi-feature design was chosen for its flexibility and interpretability: the system exploits detailed content characteristics while remaining adaptable, since users can toggle individual semantic dimensions (scene, tags, objects). Consequently, the recommender balances precision, personalization, and transparency, and it remains applicable in scenarios with limited or no prior interaction data, because its recommendations depend only on image content.

The system offers two modes, which let users tailor how recommendations are generated. In both modes, POIs are ranked with a content-based approach that uses cosine similarity to compare the features of items in the dataset.

Single-Image Query Mode: In this mode, the system recommends POIs based on a single query image provided by the user. The submitted image is processed by the WORLDO model, whose ViT backbone generates its embedding. This embedding is then compared with those of the POIs in the dataset using cosine similarity, which measures how closely the query embedding aligns with each POI embedding. The top-K most similar POIs are returned, with a higher similarity score indicating a stronger match.

User Profile Mode: This mode recommends POIs based on the user’s preferences inferred from multiple uploaded images. The system builds a user profile by averaging the embeddings of the user’s images. This aggregated profile represents the user’s interests and is compared with the POIs in the dataset using the same cosine similarity approach. The system then returns the top-K POIs whose embeddings are most similar to the user’s profile.

The recommender calculates similarity between the query image (or user profile) and the POIs by first embedding both into a shared feature space. Each POI and the query image are represented as a 256-dimensional vector generated by the WORLDO model, which includes visual and semantic information depending on which features are enabled. The cosine similarity between these vectors is computed using Formula (7):

$$\mathrm{sim}(\mathbf{q}, \mathbf{p}) = \frac{\mathbf{q} \cdot \mathbf{p}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{p} \rVert} \qquad (7)$$

where $\mathbf{q}$ and $\mathbf{p}$ are the feature vectors of the query and a POI, respectively, and $\lVert \mathbf{q} \rVert$ and $\lVert \mathbf{p} \rVert$ are their Euclidean norms. Cosine similarity takes values between −1 and 1, where 1 indicates that the vectors point in the same direction and −1 indicates that they point in opposite directions. For each recommendation, the system selects the POIs with the highest cosine similarity to the query or user profile, providing a ranked list of the most relevant suggestions.
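Both modes reduce to ranking POIs by Formula (7). The NumPy sketch below assumes that the WORLDO feature vectors for the query image(s) and for every POI have already been computed and stacked into arrays; the POI identifiers, the three-dimensional toy vectors, and the value of k are hypothetical.

```python
import numpy as np

def cosine_similarity(query, candidates):
    """Formula (7) between one query vector and each row of `candidates`."""
    q_norm = np.linalg.norm(query)
    c_norms = np.linalg.norm(candidates, axis=1)
    return candidates @ query / (c_norms * q_norm + 1e-12)  # epsilon guards zero vectors

def top_k(query, poi_vectors, poi_ids, k=5):
    """Return the k POIs most similar to the query, best first."""
    sims = cosine_similarity(query, poi_vectors)
    order = np.argsort(-sims)[:k]
    return [(poi_ids[i], float(sims[i])) for i in order]

def single_image_query(image_vector, poi_vectors, poi_ids, k=5):
    # Single-Image Query Mode: the query embedding is used directly.
    return top_k(image_vector, poi_vectors, poi_ids, k)

def user_profile_query(image_vectors, poi_vectors, poi_ids, k=5):
    # User Profile Mode: average the embeddings of all uploaded images.
    profile = np.mean(image_vectors, axis=0)
    return top_k(profile, poi_vectors, poi_ids, k)

poi_ids = ["beach_01", "airport_01", "park_01"]            # hypothetical POIs
poi_vectors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.0, 0.7]])
print(single_image_query(np.array([0.9, 0.1, 0.0]), poi_vectors, poi_ids, k=2))
print(user_profile_query(np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]), poi_vectors, poi_ids, k=1))
```

Averaging the uploaded embeddings keeps the profile in the same space as individual images, so the same ranking function serves both modes.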

The implemented recommender system allows specific semantic and visual features to be enabled or disabled during the recommendation process. This determines which attributes are considered when calculating similarity, making the system adaptable and customizable for different contexts. Any of the following can be turned on or off for recommendations:

- Visual Embedding: The ViT-based visual features are included in the recommendation process by default but can be disabled, in which case the system relies only on semantic features (scene, tags, and objects).

- Scene Classification: This includes the category of the POI (e.g., amusement park, airport, beach). If enabled, the system will incorporate scene class information into the feature vector for similarity calculation. Disabling this feature means the model will ignore the scene category when comparing POIs.

- Tags: Tags are descriptive labels (e.g., crowded, wildlife, colorful_cars) assigned to POIs. These tags are multi-hot encoded and can be used in the recommendation process. If disabled, the system will not consider the tags when comparing POIs.

- Objects: Objects represent the specific items present in the scene, such as cars, people, or plants. Objects, just like tags, are encoded in a multi-hot format. If disabled, object-based information is ignored during similarity computation.
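Feature toggling can be sketched as assembling the similarity vector from only the enabled groups. The text does not specify how the groups are combined, so the NumPy sketch below assumes that each enabled group is L2-normalized and concatenated. Under this assumption the vector grows beyond the 256-dimensional visual embedding whenever semantic groups are enabled, and the same toggles must be applied to the query and to every POI so that the dimensions match. The group sizes in the usage example are hypothetical.

```python
import numpy as np

def build_feature_vector(visual, scene_onehot, tags_multihot, objects_multihot,
                         use_visual=True, use_scene=True, use_tags=True, use_objects=True):
    """Concatenate the enabled feature groups into one vector for similarity search.

    Assumption: groups are L2-normalized and concatenated; the system's actual
    fusion scheme may differ.
    """
    groups = [
        (use_visual, visual),
        (use_scene, scene_onehot),
        (use_tags, tags_multihot),
        (use_objects, objects_multihot),
    ]
    parts = []
    for enabled, vec in groups:
        if not enabled:
            continue
        vec = np.asarray(vec, dtype=float)
        norm = np.linalg.norm(vec)
        # Per-group normalization keeps the long visual block from dominating the
        # short multi-hot blocks; all-zero groups are kept as zeros.
        parts.append(vec / norm if norm > 0 else vec)
    if not parts:
        raise ValueError("at least one feature group must be enabled")
    return np.concatenate(parts)

visual = np.ones(256)            # stand-in visual embedding
scene = np.eye(10)[3]            # one-hot scene class (10 hypothetical classes)
tags = np.zeros(8)               # no tags active (8 hypothetical tags)
objects = np.ones(74)            # multi-hot objects (74 hypothetical classes)
full = build_feature_vector(visual, scene, tags, objects)
semantic_only = build_feature_vector(visual, scene, tags, objects, use_visual=False)
```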

3. Results

The evaluation covers the proposed WORLDO model and its integration with the content-based recommender system. The model was assessed on its three primary tasks: scene classification, contextual tag prediction, and object presence detection. For the multi-label tasks (tag and object prediction), the evaluation focused on the F1-score, which balances precision and recall and thus provides a robust measure of performance. Scene classification performance was evaluated using standard classification accuracy. In addition, the recommender system was evaluated on its ability to generate relevant POI suggestions from the visual and semantic features extracted by WORLDO. Recommendation quality is measured using Precision@K, and further analysis explores the impact of toggling individual features (scene, tags, objects, and visual features) on system performance. The results offer a comprehensive view of the model’s capabilities and limitations, while also highlighting the practical value of integrating multi-task visual understanding into recommendation frameworks.
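The evaluation metrics can be sketched in NumPy. The section does not state how F1 is averaged across labels or how a recommended POI is judged relevant for Precision@K, so the micro-averaging below and the relevance set passed to `precision_at_k` (for example, POIs sharing the query’s scene class) are assumptions.

```python
import numpy as np

def micro_prf(y_true, y_pred):
    """Micro-averaged precision, recall and F1 over all labels of a multi-label task."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)     # predicted and present
    fp = np.sum(~y_true & y_pred)    # predicted but absent
    fn = np.sum(y_true & ~y_pred)    # present but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(precision), float(recall), float(f1)

def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k recommendations that are relevant."""
    top = ranked_ids[:k]
    return sum(1 for item in top if item in relevant_ids) / k

p, r, f1 = micro_prf([[1, 0, 1], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
print(p, r, f1)
print(precision_at_k(["a", "b", "c", "d"], {"a", "c"}, k=2))   # 0.5
```

F1 is the harmonic mean of precision and recall, so a low recall (as for object prediction) pulls the score down even when precision is high.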

3.1. Classification Performance

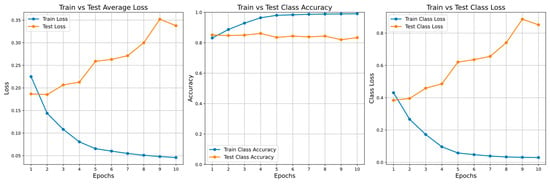

WORLDO was evaluated across its three supervised tasks: scene classification, contextual tag prediction, and object presence detection. Scene classification, being a single-label task, was assessed using standard accuracy metrics. Table 4 and Figure 2 summarize the model’s performance over ten training epochs.

Table 4.

Training accuracy for each epoch.

Figure 2.

Train vs. Test Accuracy charts.

The results show steady improvement during the early epochs, with training classification accuracy increasing from 0.83 in the first epoch to 0.99 by the tenth. Correspondingly, training loss decreased continuously from 0.225 to 0.046. Test accuracy remained within a narrow range (0.82 to 0.86) throughout, indicating good generalization despite a gradual rise in test loss after epoch 5.

While the gap between training and test losses suggests mild overfitting, the model maintained high and consistent classification accuracy across all epochs. Training for ten epochs allowed the training accuracy and loss curves to plateau, indicating convergence on the training set, while test accuracy remained stable over the same period.

3.2. Multi-Label Performance

For the multi-label tasks, the model achieved strong performance on tag prediction and moderate performance on object presence detection. The final F1-scores reached 0.8589 for tag prediction and 0.6638 for object prediction. Tag prediction attained a precision of approximately 0.84 and a recall of 0.87, while object prediction achieved a precision of 0.83 and a recall of 0.56.

These results suggest that WORLDO captures the relationships between objects, contextual attributes, and scene semantics. The relatively high precision on both tasks indicates that the model’s positive predictions are mostly correct, whereas the lower recall in object detection implies that certain small or visually complex objects remain difficult to identify. Overall, the results support the view that micro-level contextual tagging yields rich semantic representations that enhance the model’s ability to understand and classify complex scenes in POI imagery.
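As a quick consistency check, assuming the reported scores are micro-averaged (so that F1 is exactly the harmonic mean of precision P and recall R, which need not hold for macro averages), the rounded values above reproduce the reported F1-scores:

```latex
F_1 = \frac{2PR}{P + R}, \qquad
F_1^{\text{tags}} \approx \frac{2(0.84)(0.87)}{0.84 + 0.87} = \frac{1.4616}{1.71} \approx 0.855, \qquad
F_1^{\text{objects}} \approx \frac{2(0.83)(0.56)}{0.83 + 0.56} = \frac{0.9296}{1.39} \approx 0.669
```

Both agree with the reported 0.8589 and 0.6638 once the two-decimal rounding of P and R is taken into account (for example, P = 0.845 and R = 0.875 give 0.860 for tags, and P = 0.825 and R = 0.555 give 0.664 for objects). The large precision/recall gap for objects is what pulls its F1 well below the tag F1.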

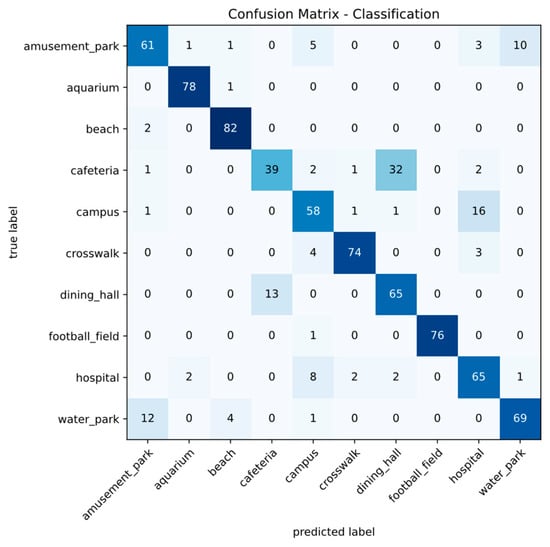

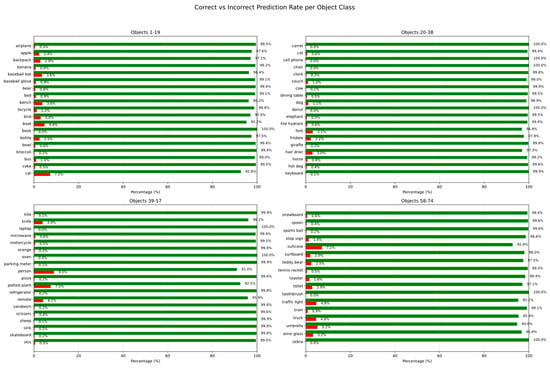

3.3. Error Analysis Using Confusion Matrices

To further analyze WORLDO’s performance, confusion matrices were generated for all three supervised tasks. For scene classification, the confusion matrix (Figure 3) shows strong diagonal dominance across nearly all classes, meaning that most categories were correctly identified. Minor misclassifications occurred primarily between visually similar environments, such as dining hall and cafeteria, suggesting that the model’s errors stem mainly from fine-grained visual similarity rather than from misinterpretation of scene context.

Figure 3.

Classification Confusion Matrix.

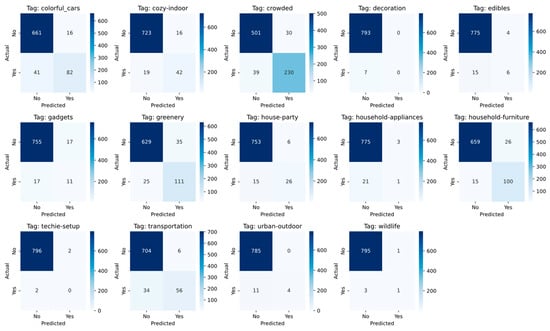

For the multi-label tasks, Figure 4 and Figure 5 illustrate the distribution of true versus predicted labels for tags and objects, respectively. The tag matrices show that the model consistently recognizes common contextual tags (e.g., crowded, transportation, greenery) while under-predicting some less frequent tags. Likewise, object detection results show stable precision across dominant categories but reduced recall for smaller or low-contrast items. These findings are consistent with the quantitative results reported in Sections 3.1 and 3.2 and indicate stable classification behavior and contextual consistency in tag and object recognition.
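For multi-label outputs, a "confusion matrix" is usually a set of per-label 2×2 tables rather than one square matrix. The sketch below builds them with the same [[TN, FP], [FN, TP]] layout that scikit-learn's `multilabel_confusion_matrix` uses, and flags labels that are predicted positive less often than they occur, one simple way to quantify the under-prediction described above. The label names are taken from the tag examples in the text, but the data are invented and the helper names are hypothetical.

```python
from typing import Dict, List, Sequence

Matrix = Sequence[Sequence[int]]


def per_label_confusion(y_true: Matrix, y_pred: Matrix,
                        labels: Sequence[str]) -> Dict[str, List[List[int]]]:
    """Return {label: [[TN, FP], [FN, TP]]}, one 2x2 table per label."""
    result: Dict[str, List[List[int]]] = {}
    for j, name in enumerate(labels):
        tn = fp = fn = tp = 0
        for t_row, p_row in zip(y_true, y_pred):
            t, p = t_row[j], p_row[j]
            if t == 1 and p == 1:
                tp += 1
            elif p == 1:
                fp += 1
            elif t == 1:
                fn += 1
            else:
                tn += 1
        result[name] = [[tn, fp], [fn, tp]]
    return result


def under_predicted(y_true: Matrix, y_pred: Matrix, labels: Sequence[str]) -> List[str]:
    """Labels that the model predicts less often than they actually occur."""
    flagged = []
    for j, name in enumerate(labels):
        actual = sum(row[j] for row in y_true)
        predicted = sum(row[j] for row in y_pred)
        if predicted < actual:
            flagged.append(name)
    return flagged


LABELS = ["crowded", "wildlife", "colorful_cars"]
y_true = [[1, 0, 1], [1, 1, 0], [1, 0, 0], [0, 1, 1]]
y_pred = [[1, 0, 0], [1, 1, 0], [1, 0, 0], [0, 0, 1]]
cm = per_label_confusion(y_true, y_pred, LABELS)
print(cm["crowded"], under_predicted(y_true, y_pred, LABELS))
```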

Figure 4.

Multi-Label Tags Confusion Matrices.

Figure 5.

Multi-Label Objects Bar Chart.

3.4. Model-Level Ablation Study

To assess the contribution of each supervision signal in WORLDO, a model-level ablation study was performed. The experiment isolates the effect of the three learning objectives (scene classification, contextual tag prediction, and object presence detection) by setting the weight of one loss term in Formula (6) to zero at a time, without changing any other parameters.

Four configurations were trained for 10 epochs using the same dataset, splits, hyperparameters, and random seed as in the main experiments:

- A0 (Full): (α, β, γ) = (1, 1, 1)

- A1 (-Objects): (α, β, γ) = (1, 1, 0)

- A2 (-Tags): (α, β, γ) = (0, 1, 1)

- A3 (-Class): (α, β, γ) = (1, 0, 1)
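A sketch of the weighted objective behind these four settings. Formula (6) is not reproduced in this section, so its form here is inferred from the configuration names: α weights the tag loss, β the scene classification loss, and γ the object loss. Using softmax cross-entropy for the scene head and binary cross-entropy for the two multi-hot heads is an assumption, as are all function names.

```python
import math
from typing import Dict, Sequence, Tuple


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for the single-label scene head (log-sum-exp form)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def bce_with_logits(logits: Sequence[float], targets: Sequence[int]) -> float:
    """Mean binary cross-entropy for a multi-hot head (numerically stable form)."""
    total = 0.0
    for z, y in zip(logits, targets):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)


# (alpha, beta, gamma): weights for the tag, scene-class and object losses.
ABLATIONS: Dict[str, Tuple[float, float, float]] = {
    "A0 (Full)": (1.0, 1.0, 1.0),
    "A1 (-Objects)": (1.0, 1.0, 0.0),
    "A2 (-Tags)": (0.0, 1.0, 1.0),
    "A3 (-Class)": (1.0, 0.0, 1.0),
}


def multitask_loss(scene_logits: Sequence[float], scene_target: int,
                   tag_logits: Sequence[float], tag_targets: Sequence[int],
                   obj_logits: Sequence[float], obj_targets: Sequence[int],
                   weights: Tuple[float, float, float]) -> float:
    """Assumed form: L = alpha * L_tag + beta * L_class + gamma * L_obj."""
    alpha, beta, gamma = weights
    l_tag = bce_with_logits(tag_logits, tag_targets)
    l_cls = cross_entropy(scene_logits, scene_target)
    l_obj = bce_with_logits(obj_logits, obj_targets)
    return alpha * l_tag + beta * l_cls + gamma * l_obj


# With all-zero logits, L_tag = L_obj = ln 2 and L_class = ln 4 (four scene classes).
for name, w in ABLATIONS.items():
    loss = multitask_loss([0.0] * 4, 1, [0.0, 0.0], [1, 0], [0.0] * 3, [0, 1, 0], w)
    print(name, round(loss, 4))
```

Zeroing a weight removes that head's gradient from the shared encoder while leaving the head itself in the network, which matches the "disable one loss at a time" protocol described above.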

Each variant was evaluated on scene classification accuracy, macro F1 for tag prediction, and macro F1 for object presence. In addition, a separate Vision Transformer model trained solely for scene classification (without tag or object supervision) was used as a reference to isolate the impact of multi-task learning. Its results are reported in Section 3.5.

As shown in Table 5, removing any supervision signal sharply degrades its corresponding metric, while its effect on the other heads varies by task. The results therefore indicate that the three tasks are partly interdependent.

Table 5.

Model-level ablation results.

Disabling the object loss (A1) greatly reduces the object-head F1, while tag and classification performance is almost unaffected, indicating that object-level supervision primarily supports its own head.

Eliminating the tag loss (A2) causes a drastic drop in tag F1 (to 0.11) and a moderate decline in scene accuracy, suggesting that contextual tag prediction enriches the learned representation and indirectly benefits global scene recognition.

Removing the classification loss (A3) causes scene accuracy to fall to 0.0563, demonstrating that this loss plays a vital role in guiding global feature learning. Tag and object scores, in contrast, remain stable or slightly higher, possibly due to reduced competition in the shared encoder.

Overall, the ablation shows that WORLDO’s multi-task structure provides complementary supervision signals: each objective contributes uniquely to the representation quality, and their joint optimization yields the most balanced and robust performance.

3.5. Comparative Evaluation with CNN Baselines

To further evaluate WORLDO, three CNN models (ResNet50 [], VGG16 [], and EfficientNetV2 []) were fine-tuned under identical conditions and compared against WORLDO (class-only). All were trained for scene classification only, using the same dataset, image resolution (224 × 224), batch size (16), and number of epochs (10) as WORLDO. All models were initialized with ImageNet pre-trained weights and optimized using AdamW (learning rate = 5 × 10⁻⁵, weight decay = 0.01), with a fixed random seed (42) for reproducibility. Performance was evaluated on the test set using classification accuracy and the weighted F1 score, which accounts for potential class imbalance.
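The weighted F1 score averages per-class F1 values, weighting each class by its number of true instances (its support), so larger classes count more than rare ones. The sketch below shows this definition, which matches scikit-learn's `f1_score(y_true, y_pred, average="weighted")`, on invented labels; it illustrates the metric only and is not the authors' evaluation script.

```python
from collections import Counter
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of exactly correct scene predictions."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Per-class F1 averaged with weights equal to each class's support in y_true."""
    support = Counter(y_true)
    total = 0.0
    for cls, n in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / n  # n = tp + fn for this class
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        total += n * f1
    return total / len(y_true)


y_true = [0, 0, 0, 1, 1, 2]
y_pred = [0, 0, 1, 1, 2, 2]
print(round(accuracy(y_true, y_pred), 4), round(weighted_f1(y_true, y_pred), 4))
```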

Since the benchmark dataset did not include tag or object annotations, all CNNs and the WORLDO (class-only) baseline were trained in a single-task configuration. The influence of the auxiliary tag and object prediction heads on representation quality is analyzed separately in the ablation study (Section 3.4).

As shown in Table 6, all CNN baselines achieved comparable performance, with ResNet50 yielding the highest accuracy (0.769) and F1 (0.760) among them. WORLDO trained for single-task scene classification outperformed all CNNs, reaching an accuracy of 0.7937 and an F1 score of 0.791. This result is consistent with the stronger ability of Vision Transformers to capture global spatial dependencies and contextual information compared with convolutional models. When combined with auxiliary tag and object supervision, as shown in the ablation study (Section 3.4), WORLDO’s multi-task design further enriches the learned representation, providing more context-aware embeddings that support downstream recommendation performance.

Table 6.

Comparison of WORLDO against CNN models.

3.6. Recommender System Evaluation

Following the model-level analysis, this section investigates how the learned representations influence POI retrieval performance. The integrated recommender system was evaluated on its ability to retrieve visually and semantically relevant POIs from a separate evaluation dataset. The system follows a content-based recommendation strategy: the cosine similarity between feature embeddings extracted by the WORLDO model is computed to identify and rank related images. For each query, all candidate images in the evaluation set are compared, and the precision of the retrieved results is measured to assess recommendation accuracy.
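A minimal sketch of the ranking step, assuming each POI image has already been mapped to a fused feature vector: every candidate is scored by cosine similarity to the query and the top K are returned. The catalog entries and the optional `exclude` parameter (to skip the query image if it also appears in the catalog) are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], candidates: Dict[str, Sequence[float]],
          k: int = 5, exclude: Optional[str] = None) -> List[Tuple[str, float]]:
    """Score every candidate against the query and return the k most similar."""
    scored = [(poi_id, cosine(query, vec))
              for poi_id, vec in candidates.items() if poi_id != exclude]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


catalog = {
    "beach_01": [1.0, 0.0, 1.0],
    "beach_02": [1.0, 0.2, 0.9],
    "cafe_01": [0.0, 1.0, 0.0],
    "cafe_02": [0.1, 1.0, 0.1],
}
results = top_k([1.0, 0.0, 1.0], catalog, k=2)
avg_sim = sum(score for _, score in results) / len(results)
print([poi_id for poi_id, _ in results], round(avg_sim, 3))
```

The mean of the returned scores is analogous to the average similarity values reported alongside the precision counts.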

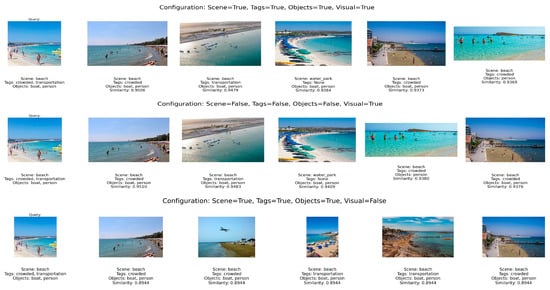

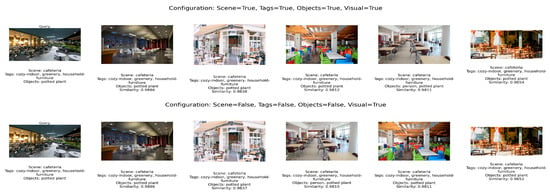

To evaluate the recommender system, three images of different POIs were chosen as queries, and for each query the system returned the five most relevant locations. As illustrated in Figure 6, the first image shows a beach, the second a dining hall, and the third a coffee shop.

Figure 6.

Recommender system testing images.

The selected set of test images was submitted to the recommender system for evaluation. The system was initially configured with all feature components enabled: visual features, scene class, contextual tags, and object presence. To examine the contribution of each feature type, the evaluation was repeated while selectively disabling components: first scene class, then tags, followed by objects.

Table 7 presents the number of relevant POIs retrieved under each configuration, where relevance is based on a shared scene class or overlapping tags or objects. This evaluation follows the principle of Precision@K, which measures how many of the top-K recommended items are relevant to the query (here, K = 5). The values in parentheses represent the average cosine similarity between the query and the recommended items, indicating how closely the retrieved POIs match the input image in terms of visual and semantic similarity.
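Precision@K as described above can be sketched as follows. The relevance rule (same scene class, or at least one shared tag or object) is one reading of the overlap criterion in the text rather than the authors' exact implementation, and `PoiMeta` and all metadata values below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Sequence, Set


@dataclass
class PoiMeta:
    """Hypothetical per-image metadata predicted by the model."""
    scene: str
    tags: Set[str] = field(default_factory=set)
    objects: Set[str] = field(default_factory=set)


def is_relevant(query: PoiMeta, item: PoiMeta) -> bool:
    """Assumed rule: same scene class, or at least one shared tag or object."""
    return (query.scene == item.scene
            or bool(query.tags & item.tags)
            or bool(query.objects & item.objects))


def precision_at_k(query: PoiMeta, ranked: Sequence[PoiMeta], k: int) -> float:
    """Fraction of the top-k recommendations that are relevant to the query."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for item in list(ranked)[:k] if is_relevant(query, item))
    return hits / k


query = PoiMeta("beach", {"crowded"}, {"person", "umbrella"})
ranked = [
    PoiMeta("beach", {"sunny"}, {"umbrella"}),
    PoiMeta("water_park", {"crowded"}, {"slide"}),
    PoiMeta("cafeteria", {"indoor"}, {"person", "table"}),
    PoiMeta("aquarium", {"wildlife"}, {"fish"}),
    PoiMeta("beach", set(), {"boat"}),
]
print(precision_at_k(query, ranked, 5), precision_at_k(query, ranked, 3))
```

Because a single shared object such as "person" is enough to count as relevant under this rule, the criterion is lenient; a stricter variant could require a matching scene class.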

Table 7.

Recommender system Precision@K evaluation approach.

The evaluation results demonstrate that the recommender system performs consistently across all configurations, achieving perfect precision (5/5) for every test image and maintaining high cosine similarity scores above 0.86 in all cases. The minimal variation between configurations indicates that the system’s recommendations remain stable even when specific feature components are disabled. For instance, in the Beach scenario, the cosine similarity values fluctuate only slightly between configurations (0.894–0.943), suggesting that both visual and semantic embeddings capture complementary aspects of scene content. Similarly, Dining Hall and Coffee Shop scenarios exhibit near-identical precision and similarity values across all ablation settings, reflecting the robustness of the learned representations in visually structured environments.

These results suggest that the integrated features learned by the WORLDO model provide a strong and redundant representation of contextual information. Visual embeddings contribute to semantic alignment, while tag and object features reinforce category-level understanding. Even when one feature type is removed, the system retains high retrieval precision, suggesting that the multi-feature embedding space is cohesive and internally consistent. The recommendations generated for the Beach image are shown in Figure 7.

Figure 7.

Recommendation results for the Beach image.

For the Dining Hall and Coffee Shop images, the system maintained perfect performance even when individual components were disabled, including visual features. This suggests that in some scenes, semantic features such as tags, objects, or scene class alone can be sufficient for effective recommendation. Figure 8 presents the recommendations generated by the recommender system based on the input image of the Coffee Shop. The results clearly reflect the influence of the image’s visual features. However, when the system is configured to exclude visual features, the recommendations rely primarily on the contextual elements derived from semantic analysis.

Figure 8.

Recommendations for the Coffee Shop example.

The recommender system was evaluated using a curated dataset of 200 images, collected independently of the training data described in Section 2.3.1. The results suggest that richer visual embeddings contribute to recommendation quality: visual features capture spatial and color cues that are especially valuable for distinguishing visually diverse POIs, such as beaches or amusement parks. When these features are disabled, semantic-only recommendations may achieve similar precision but with reduced contextual understanding, underscoring the complementary role of visual representations in ensuring recommendation relevance.

The evaluation dataset encompasses seven POI categories that provide a representative range of visual and contextual conditions: amusement parks (23 images), aquariums (9), beaches (54), cafeterias (45), university campuses (19), football fields (30), and water parks (20). These categories collectively balance indoor and outdoor scenes, structured and open spaces, and varying levels of visual complexity. Such diversity allows a comprehensive assessment of WORLDO’s ability to retrieve semantically and visually relevant POIs across heterogeneous environments.
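A quick arithmetic check of this breakdown: the category counts sum to 200, matching the dataset size stated above, and the set is moderately imbalanced (beaches make up 27% of the images and aquariums 4.5%, a 6:1 ratio). Even the smallest category contains more than five images, so a top-5 list could in principle be filled with same-category results for any query.

```python
# Category counts as listed in the text.
EVAL_COUNTS = {
    "amusement parks": 23,
    "aquariums": 9,
    "beaches": 54,
    "cafeterias": 45,
    "university campuses": 19,
    "football fields": 30,
    "water parks": 20,
}

total = sum(EVAL_COUNTS.values())
shares = {name: count / total for name, count in EVAL_COUNTS.items()}
largest = max(EVAL_COUNTS, key=EVAL_COUNTS.get)
smallest = min(EVAL_COUNTS, key=EVAL_COUNTS.get)

print(total)  # 200
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:20s} {share:6.1%}")
print(largest, smallest, EVAL_COUNTS[largest] / EVAL_COUNTS[smallest])
```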

Overall, the results show that WORLDO maintains stable performance across different feature configurations, suggesting that each representation (scene, tag, object, and visual embedding) contributes complementary information to the recommendation process. Alignment between visual and semantic features appears particularly strong in complex, open environments such as beaches and amusement parks, while structured scenes such as cafeterias and campuses remain robust under partial feature ablation. Future evaluations using broader and more heterogeneous datasets could further clarify how individual feature modalities interact at scale and contribute to overall recommendation quality.

4. Discussion

This study introduced the WORLDO model, a multi-task model designed for visual scene understanding in the POI domain. WORLDO builds upon the original WORLD architecture by removing the BERT-based textual branch in favor of a streamlined design centered on visual inputs and structured semantic labels, including scene classes, contextual tags, and object presence. As a result of this simplification, the model is less computationally expensive while still maintaining strong performance across all three prediction tasks.

The model was further extended with a content-based recommender system that uses the fused feature embeddings generated by WORLDO to retrieve visually and semantically relevant POIs. The recommender system supports two usage modes, single-image queries and multi-image user profiling, and allows dynamic control over the use of scene, tag, object, and visual features. Experimental results show that WORLDO achieves consistent and robust performance across different feature configurations, with perfect recommendation precision and high cosine similarity scores in all test cases. The ablation study confirmed that the model benefits from multi-task learning: disabling an individual loss sharply degraded the corresponding head and produced smaller, measurable changes in the other heads. Additionally, benchmarking against conventional CNN architectures (ResNet50, VGG16, and EfficientNetV2) indicated a representational advantage for transformer-based embeddings in capturing complex spatial and contextual relationships.

While the model shows promise, several limitations constrain its current generalizability. The evaluation was limited to a dataset of only 200 POI images, which restricts both recommendation diversity and the depth of the evaluation. Furthermore, the system does not incorporate real user interaction data; instead, relevance is estimated through deterministic rules such as scene or tag overlap, which may not reflect actual user preferences.

To address these issues, future improvements will focus on three key areas. First, expanding the dataset is essential to enhance robustness and recommendation diversity. Second, incorporating user behavior data, such as ratings of recommended results, would enable more personalized, hybrid recommendation approaches. Finally, extending the model into a multimodal system trained on image-text pairs, as in CLIP, would allow zero-shot recommendations from natural language queries and improve flexibility in scenarios lacking structured metadata.
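For the multi-image user profiling mode mentioned above, one simple approach, assumed here rather than taken from the paper, is to average the fused vectors of the images a user supplies and rank the catalog against that mean profile. All names and data below are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def mean_vector(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of several equally sized feature vectors."""
    if not vectors:
        raise ValueError("need at least one image")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend_for_profile(profile_vectors: Sequence[Sequence[float]],
                          catalog: Dict[str, Sequence[float]],
                          k: int = 5) -> List[Tuple[str, float]]:
    """Rank catalog items by similarity to the mean of the user's image vectors."""
    profile = mean_vector(profile_vectors)
    scored = [(poi_id, cosine(profile, vec)) for poi_id, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


liked = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
catalog = {"mixed": [1.0, 1.0, 0.0], "only_first": [1.0, 0.0, 0.0],
           "unrelated": [0.0, 0.0, 1.0]}
print(recommend_for_profile(liked, catalog, k=2))
```

Mean pooling is the simplest choice; an alternative would score candidates against each profile image separately and aggregate the similarities, which better preserves users with several distinct interests.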

5. Conclusions

WORLDO presents a capable framework for visual scene understanding and POI recommendation. By focusing on visual and structured semantic features, it offers a computationally efficient approach that achieves strong performance. The integrated recommender system demonstrates how these features can be leveraged to deliver meaningful suggestions without requiring textual descriptions. While some limitations exist, particularly in data scale and personalization, the model offers a solid foundation for future research in visual-based and multimodal recommendation systems.

Supplementary Materials

The following supporting information can be downloaded at: https://github.com/LoPCY7/WORLDO/ (accessed on 10 November 2025), Code for: Generating Dataset; Training WORLDO; Recommender System.

Author Contributions

Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, visualization, and writing—original draft preparation, P.M.; supervision, writing—review and editing, I.D. and H.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

https://huggingface.co/LoPCY7/WORLDO/blob/main/model.pth (accessed on 10 November 2025), Pre-Trained WORLDO model used in this paper. https://github.com/LoPCY7/WORLDO/tree/main/data/dataset (accessed on 10 November 2025), Generated JSON files used for training the model in this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| POI | Points-of-Interest |
| ViT | Vision Transformer |
| WORLD | Weight Optimization for Representation and Labeling Descriptions |
| WORLDO | WORLD with Objects |
| CNN | Convolutional Neural Network |
| DETR | DEtection TRansformer |
| BoO | Bag-of-Objects |
| BERT | Bidirectional Encoder Representations from Transformers |
| COCO | Common Objects in Context |
| JSON | JavaScript Object Notation |

References

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2323.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.H.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations (ICLR 2021), Virtual, 3–7 May 2021.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Messios, P.; Dionysiou, I.; Gjermundrød, H. Automated Contextual Tagging in Points-of-Interest Using Bag-of-Objects. In Proceedings of the Fourth International Conference on Innovations in Computing Research (ICR’25), London, UK, 25–27 August 2025; pp. 62–73.

- Ye, M.; Yin, P.; Lee, W.-C.; Lee, D.-L. Exploiting geographical influence for collaborative point-of-interest recommendation. In Proceedings of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’11), Beijing, China, 25–29 July 2011; p. 325.

- Cheng, C.; Yang, H.; King, I.; Lyu, M. Fused matrix factorization with geographical and social influence in location-based social networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; Volume 1, pp. 17–23.

- Zhang, J.-D.; Chow, C.-Y. iGSLR: Personalized geo-social location recommendation. In Proceedings of the 21st ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems; Association for Computing Machinery: New York, NY, USA, 2013; pp. 334–343.

- Zhang, J.D.; Chow, C.Y.; Li, Y. LORE: Exploiting sequential influence for location recommendations. In Proceedings of the SIGSPATIAL’14: 22nd SIGSPATIAL International Conference on Advances in Geographic Information Systems, Dallas, TX, USA, 4–7 November 2014; pp. 103–112.

- Zhang, J.-D.; Chow, C.-Y. GeoSoCa. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval; Association for Computing Machinery: New York, NY, USA, 2015; pp. 443–452.

- Li, H.; Ge, Y.; Hong, R.; Zhu, H. Point-of-Interest Recommendations. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 975–984.

- Li, H.; Ge, Y.; Lian, D.; Liu, H. Learning User’s Intrinsic and Extrinsic Interests for Point-of-Interest Recommendation: A Unified Approach. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 2117–2123.

- Manotumruksa, J.; Macdonald, C.; Ounis, I. A Personalised Ranking Framework with Multiple Sampling Criteria for Venue Recommendation. In Proceedings of the 2017 ACM Conference on Information and Knowledge Management (CIKM ’17), Singapore, 6–10 November 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1469–1478.

- He, J.; Li, X.; Liao, L. Category-aware Next Point-of-Interest Recommendation via Listwise Bayesian Personalized Ranking. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 1837–1843.

- Feng, S.; Cong, G.; An, B.; Chee, Y.M. POI2Vec: Geographical latent representation for predicting future visitors. In Proceedings of the 31st AAAI Conference on Artificial Intelligence AAAI 2017, San Francisco, CA, USA, 4–9 February 2017; pp. 102–108.

- Han, P.; Li, Z.; Liu, Y.; Zhao, P.; Li, J.; Wang, H.; Shang, S. Contextualized Point-of-Interest Recommendation. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 2484–2490.

- Wu, L.; He, X.; Wang, X.; Zhang, K.; Wang, M. A Survey on Accuracy-oriented Neural Recommendation: From Collaborative Filtering to Information-rich Recommendation. IEEE Trans. Knowl. Data Eng. 2022, 35, 4425–4445.

- Dacrema, M.F.; Cremonesi, P.; Jannach, D. Are we really making much progress? A worrying analysis of recent neural recommendation approaches. In Proceedings of the 13th ACM Conference on Recommender Systems, Copenhagen, Denmark, 16–20 September 2019; pp. 101–109.

- Darcet, T.; Oquab, M.; Mairal, J.; Bojanowski, P. Vision Transformers Need Registers. In Proceedings of the 12th International Conference on Learning Representations ICLR 2024, Vienna, Austria, 7–11 May 2024.

- Lepori, M.; Tartaglini, A.; Vong, W.K.; Serre, T.; Lake, B.M.; Pavlick, E. Beyond the Doors of Perception: Vision Transformers Represent Relations Between Objects. Adv. Neural Inf. Process. Syst. 2024, 37, 131503–131544.

- Guo, H.; Wang, Y.; Ye, Z.; Dai, J.; Xiong, Y. big.LITTLE Vision Transformer for Efficient Visual Recognition. arXiv 2024, arXiv:2410.10267.

- Liu, Q.; Hu, J.; Xiao, Y.; Zhao, X.; Gao, J.; Wang, W.; Li, Q.; Tang, J. Multimodal Recommender Systems: A Survey. ACM Comput. Surv. 2024, 57, 1–17.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5999–6009.

- Messios, P.; Dionysiou, I.; Gjermundrød, H. Comparing Convolutional Neural Networks and Transformers in a Points-of-Interest Experiment. In Proceedings of the Third International Conference on Innovations in Computing Research (ICR’24), Lecture Notes in Networks and Systems; Daimi, K., Al Sadoon, A., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; Volume 1058, pp. 153–162.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision – ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

- Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 Million Image Database for Scene Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1452–1464.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2009, Miami Beach, FL, USA, 20–25 June 2009; pp. 248–255.

- Transformers Hugging Face Library. Available online: https://pypi.org/project/transformers/ (accessed on 30 January 2025).

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019.

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015-Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- Agrawal, S.; Roy, D.; Mitra, M. Tag Embedding Based Personalized Point Of Interest Recommendation System. Inf. Process. Manag. 2021, 58, 102690.

- Su, X.; Khoshgoftaar, T.M. A Survey of Collaborative Filtering Techniques. Adv. Artif. Intell. 2009, 2009, 421425.

- Çano, E.; Morisio, M. Hybrid recommender systems: A systematic literature review. Intell. Data Anal. 2017, 21, 1487–1524.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015-Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; Volume 139, pp. 10096–10106.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).