Abstract

With the development of autonomous technology, research into multimodal human-machine interaction (HMI) for autonomous vehicles (AVs) has attracted extensive attention, especially in automotive wellness. To support the design of HMIs for automotive wellness in AVs, this paper proposes a multimodal design framework. First, three elements of the framework were envisioned based on the typical composition of an interactive system. Second, a five-step process for utilizing the proposed framework was suggested. Third, the framework was applied in a design education course for exemplification. Finally, the AttrakDiff questionnaire was used to evaluate the resulting interactive prototypes with 20 participants who had an affinity for HMI design. The questionnaire responses showed that the overall impression was positive and that the framework can help design students to identify research gaps effectively and expand design concepts in a systematic way. The proposed framework offers a design approach for the development of multimodal HMIs for automotive wellness in AVs.

1. Introduction

With the rapid development of automation technology, the market for autonomous vehicles (AVs) is growing rapidly [1]. At present, assistive driving technology has become relatively mature [2], and could result in extensive changes in people’s mode of travel by reducing the burdens of driving [3,4]. Consequently, non-driving related activities in vehicles may become more diverse [5,6,7]. Recent studies have shown that passengers want to engage in activities that promote health and wellness [3,8]. According to Singleton et al. [9,10], the notion of automotive wellness can be described as maintaining physical health and positive mental wellbeing in vehicles. To date, there have been several explorations aimed at improving different aspects of automotive wellness through the development of various human–machine interaction (HMI) systems. For example, Krome et al. [11] integrated an exercise bike into the car context, using exergame elements to translate traffic jams into fitness behaviors. Bito et al. [12] developed an automatic in-vehicle video system to improve the driving experience by generating highlights of a trip. Deserno et al. [13] embedded medical sensors into a bus to give the vehicle diagnostic functions for stroke prevention. From a social wellness perspective, Lakier et al. [14] proposed a cross-car game concept for semi-autonomous driving.

Recent research projects have also investigated multimodal HMIs to effectively support automotive wellness [15,16]. In particular, many novel designs explored visualizing health information using interactive light [17], digital assistants [18], ambient displays [19], and head-up displays [20] in the AV context. Modalities such as auditory [21,22,23,24,25,26], olfactory [27], gaze [28], and tactile [29] interactions have also been applied to improve driving experiences. Due to the prevalence of visual computing, augmented reality and virtual reality display techniques have also been increasingly utilized to facilitate various daily activities in the car, including entertainment [30], working [31], and calling [32].

In the transition towards automated driving in our society, HMI researchers should focus on how to design and develop AV applications to shed light on future car scenarios [33,34]. Despite the growing research efforts focused on automotive wellness, there is a lack of design frameworks that help designers efficiently find niches in this context and guide them in developing design concepts. Given this gap, this paper aims to develop a multimodal HMI design framework for automotive wellness. Based on a theoretical work by Benyon et al. [35], we argue that subjects of the system (i.e., AVs, passengers), target activities related to wellness, and system interactivity (i.e., system input, system output) should be systematically considered as key elements when developing multimodal HMIs for automotive wellness in AVs. Accordingly, we proposed a design process to help designers adopt these three elements in their practice. We examined the proposed framework in a design education course and used the students’ works to exemplify its usefulness.

2. The Framework Design

2.1. Background

There is a long history of design method exploration in order to inspire designers to carry out interaction design. For example, participatory design methods can directly involve users as partners in the design process [36]. Cultural probes [37] aim to inspire new design ideas, focusing on the creative, engaging, and playful applications of technologies. Ethnography is used to understand the social and situated use of technologies in particular environments [38]. These methods certainly play an important role in inspiration. Nevertheless, one of their weaknesses is that researchers tend to be trapped in a narrow perspective without holistic design considerations when applying these methods.

In addition, a well-established design process can help designers produce results efficiently. For instance, the Double Diamond design model, proposed by the British Design Council, has been widely used in design research and practice [39]. In this model, a linear design process is divided into four parts: discover, define, develop, and deliver. To our knowledge, many variants of the Double Diamond design model have evolved to suit different kinds of design projects [40]. Similarly, in this paper, we hoped to leverage such a linear design process to support the design and development of HMIs for automotive wellness in AVs, which involves the composition of the interactive system.

Benyon et al. [35] used People, Activities, Contexts and Technologies as four elements of a framework for interactive system design. First, people are regarded as the essential part of the interactive system, for which individual differences in physical, psychological, and usage characteristics cannot be ignored. Second, ten important characteristics of activities were listed that need to be considered, especially the content: frequency, working well at both peaks and troughs of working, being interrupted and picked up again with no mistakes, response time of the system, communication and coordination with others, well-defined tasks, safety, dealing with errors, data requirements and input devices, and media. Third, contexts can be distinguished into the organizational context, the social context, and the physical circumstances. Sometimes context can be seen as the surroundings of an activity, and it is responsible for gluing activities together. Fourth, and last but not least, technologies are an essential element of an interactive system. According to Benyon et al. [35], activities and contexts determine requirements for technology development, whereas the deployment of new technologies changes activities and contexts.

While there have been some existing models to support the HMI design for AVs (e.g., [41]), they lacked thorough considerations of improving automotive wellness. Therefore, based on the aforementioned design methods and models, we propose a new design framework and a matched process in this paper.

2.2. Elements of the Framework

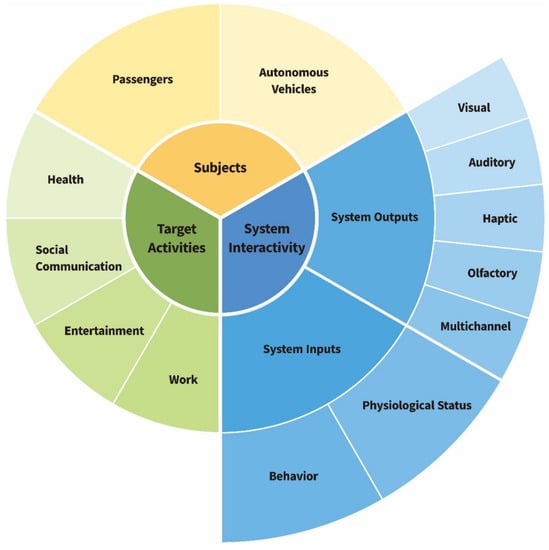

According to Benyon et al. [35], an interactive system consists of the following elements: people, contexts, activities, and technologies. Thus, we mapped the key HMI design considerations of automotive wellness in AVs to these elements to support designers in exploring relevant themes. As shown in Figure 1, a circular model with color-coded elements was proposed, with three main aspects: subjects, target activities, and system interactivity.

Figure 1.

The proposed multimodal HMIs design framework for automotive wellness in AVs.

Specifically, in an AV environment, we deemed passengers as the people and vehicles as the contexts of the interactive system. They were grouped under subjects (in yellow) to represent the information senders (vehicles) and receivers (passengers) of the system.

In addition, we adopted the concept of activities from [3] and dedicated it to in-vehicle activities related to health and wellbeing. Following previous categorizations of in-vehicle activities [42,43], in this study, we summarized target activities (in green) as health, entertainment, work, and social communication. However, the types of target activities might be expanded in the future.

Lastly, we proposed the system interactivity of HMIs, which corresponds to technologies and can be further divided into inputs and outputs from a human–computer interaction perspective. As for system inputs, owing to advances in ubiquitous sensing [13,44,45,46], it is common to track user behaviors and physiological status as inputs for interactive technologies, which may significantly expand the modalities of data for future HMIs. As for system outputs, given the growing consideration of multiple sensory channels in HMI designs [47,48], we envisioned that different modalities (e.g., visual, auditory, haptic, etc.) could be leveraged to provide proper feedback in the interactive system of HMIs.
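As a rough illustration, the three elements could be encoded as a small data model. This is only a sketch: the class and field names (`Subjects`, `SystemInteractivity`, `HMIConcept`), the `is_multimodal` rule, and the example instance are hypothetical and not part of the framework itself; the activity, input, and modality values are taken from the text.

```python
from dataclasses import dataclass
from enum import Enum


class TargetActivity(Enum):
    # The four target activities summarized in the framework.
    HEALTH = "health"
    ENTERTAINMENT = "entertainment"
    WORK = "work"
    SOCIAL_COMMUNICATION = "social communication"


class InputType(Enum):
    # The two kinds of system input named in the text.
    BEHAVIOR = "behavior"
    PHYSIOLOGICAL_STATUS = "physiological status"


class OutputModality(Enum):
    # Modalities mentioned in the paper; the list is open-ended ("etc.").
    VISUAL = "visual"
    AUDITORY = "auditory"
    HAPTIC = "haptic"
    OLFACTORY = "olfactory"


@dataclass(frozen=True)
class Subjects:
    passengers: str       # receivers of information
    vehicle_context: str  # the AV usage scenario (sender of information)


@dataclass(frozen=True)
class SystemInteractivity:
    inputs: frozenset
    outputs: frozenset

    def is_multimodal(self) -> bool:
        # Assumption: "multimodal" here means more than one output modality.
        return len(self.outputs) > 1


@dataclass(frozen=True)
class HMIConcept:
    name: str
    subjects: Subjects
    activity: TargetActivity
    interactivity: SystemInteractivity


# Hypothetical example instance, for illustration only.
example = HMIConcept(
    name="hypothetical concept",
    subjects=Subjects(passengers="commuter", vehicle_context="daily commute"),
    activity=TargetActivity.HEALTH,
    interactivity=SystemInteractivity(
        inputs=frozenset({InputType.PHYSIOLOGICAL_STATUS}),
        outputs=frozenset({OutputModality.VISUAL, OutputModality.AUDITORY}),
    ),
)
print(example.interactivity.is_multimodal())
```

Keeping the elements as separate types mirrors the color-coded grouping of the framework and makes it easy to extend the list of target activities later.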

2.3. The Process for Utilizing the Proposed Framework

Following Morrison et al. [49], we proposed a five-step design process that could support HMI researchers in discovering the research questions, defining target subjects, and developing and delivering design concepts, where each step will be taken as the premise of the next step. These five steps can be described as follows.

- Find research gaps. Researchers need to extract passengers, AVs, target activities, system inputs and system outputs based on desktop research and/or user studies. It is helpful to find gaps by contrasting these elements;

- Specify the subjects. In this step, researchers are required to specify the personal information of passengers and scenarios of vehicle usage;

- Specify target activities. This step helps researchers clarify which aspects of wellness they hope to address through HMI design. Passengers’ demands should be sufficiently considered;

- Specify system interactivity. In this step, system inputs and system outputs need to be considered systematically. Explicitly, researchers are required to compare which combinations of system inputs and system outputs can achieve the target activities more effectively;

- Design HMI for autonomous wellness in AVs based on pre-defined elements. All the elements of the interactive system have been defined in the previous steps. Thus, specific products need to be designed within the limits of these elements.
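The fourth step, comparing combinations of system inputs and outputs, can be sketched as a simple enumeration. The sketch below is illustrative only: the function names, the `max_outputs` limit, and the scoring rule are hypothetical, and in practice the score would come from the designer's judgment or user studies rather than a fixed formula.

```python
from itertools import combinations

# Input kinds and output modalities mentioned in the paper.
INPUTS = ["behavior", "physiological status"]
OUTPUTS = ["visual", "auditory", "haptic", "olfactory"]


def candidate_interactivity(inputs, outputs, max_outputs=2):
    """Enumerate (input, output-set) pairs to compare in step 4."""
    candidates = []
    for n in range(1, max_outputs + 1):
        for outs in combinations(outputs, n):
            for inp in inputs:
                candidates.append((inp, outs))
    return candidates


def rank_candidates(candidates, score):
    """Sort candidates by a designer-supplied score, best first."""
    return sorted(candidates, key=score, reverse=True)


def example_score(candidate):
    # Hypothetical rule: favor behavior input and visual feedback.
    inp, outs = candidate
    return int(inp == "behavior") + int("visual" in outs)


candidates = candidate_interactivity(INPUTS, OUTPUTS)
ranked = rank_candidates(candidates, example_score)
print(len(candidates), ranked[0])
```

Enumerating the combinations explicitly makes it harder to overlook an input and output pairing before the final design step.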

3. Model Application

We applied the proposed framework in a second-year bachelor design course. In this course, we defined the subjects according to the framework. Specifically, passengers were defined as middle-aged commuters with a family of procreation, and the AV context was defined as a 1.5-h one-way commute. Next, we illustrate the applicability of this framework with five student cases from this course.

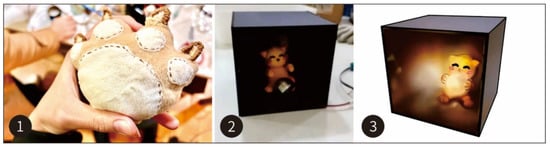

3.1. Social Communication—Behavior—Multichannel Feedback

“Personal Interaction Devices in Car” is a set of interactive devices for parent–child communication in the car, as shown in Figure 2. During the design, this group further defined commuters as commuters who take their children to work. Thus, passengers in this project specifically referred to parents and children commuting together, and AVs referred to the commuting process with children. Regarding target activities, this group chose social communication, aiming to help parents perceive the children’s status in time and to prevent children from feeling worried. Finally, as for system interactivity, this group regarded the children’s patting and pressing of the toy as system inputs, which triggered the tactile feedback (warming) of the toy as well as the visual feedback (light) and auditory feedback (music) of the display device as system outputs.

Figure 2.

Personal Interaction Devices in Car technical prototype ((1) Triggering haptic feedback of the toy by pat and press; (2) the general status of the display device; (3) the indication state of the display device with visual feedback and auditory feedback).

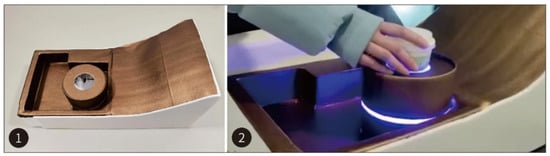

3.2. Social Communication—Behavior—Visual Feedback

The “Time Hobbyhorse” is a device that helps commuter parents and children waiting at home to communicate in real-time and share the journey, as shown in Figure 3. This group further expanded passengers into commuters and children waiting at home. Regarding the aspect of target activities, this group also chose social communication aiming at enhancing the communication between children and parents by presenting the position of parents during commuting to children. Finally, as for system interactivity, this group regarded parents’ position status as system inputs and triggered the visual feedback (rotation of the Time Hobbyhorse and lighting) as system outputs from this behavior.

Figure 3.

Time Hobbyhorse technical prototype ((1) The general status of the Time Hobbyhorse; (2) trigger of visual feedback from the physical situation).

3.3. Entertainment—Behavior—Multichannel Feedback

“Carfee Break” is a device designed to allow passengers in AVs to enjoy a more comfortable rest environment, as shown in Figure 4. In the aspect of target activities, this group chose entertainment aimed at relieving passengers’ fatigue during commuting. Finally, as for system interactivity, this group regarded the behavior of putting hot drinks into the cup holder as system inputs and triggered the visual feedback (lighting) and olfactory feedback (pleasant aroma) as system outputs from this behavior.

Figure 4.

Carfee Break technical prototype ((1) The general status of Carfee Break; (2) trigger visual feedback and olfactory feedback by a physical action).

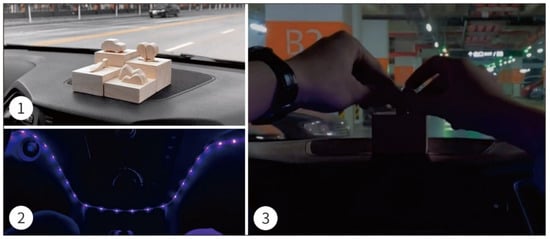

3.4. Entertainment—Explicit Behavior—Visual Feedback

“E-Car” is a modular intelligent manual double-trigger mode switch, as shown in Figure 5. This group expanded AVs into four kinds of modes: Business Mode for commuting, Didi Mode for sharing, Couple Mode for lovers’ travel, and Tourism Mode for traveling. In the aspect of target activities, this group also chose entertainment aimed at improving comfort. Finally, as for system interactivity, this group regarded different gestures for particular modes as system inputs and triggered the visual feedback (lighting) as system outputs from these behaviors.

Figure 5.

E-Car technical prototype ((1) The general status of the E-Car, which includes four modes; (2) the indication state with visual feedback; (3) trigger by a physical action).

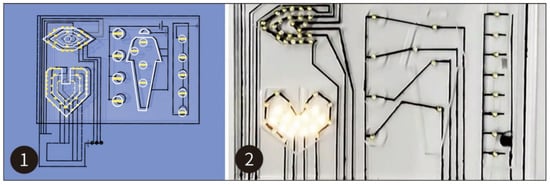

3.5. Health—Physiological Status—Visual Feedback

“Health Tester” is an auxiliary product that can monitor the physical condition of passengers in real time, as shown in Figure 6. Regarding target activities, this group chose health, aiming to help passengers become aware of their health status. Finally, as for system interactivity, this group considered the physiological data of passengers monitored by sensors as system inputs and triggered the visual feedback (lighting) as system outputs from the physiological status. Specifically, the device was divided into four parts: the eyes in the upper left corner corresponded to the passengers’ fatigue; the heart in the lower left corner corresponded to the passengers’ heart and other important body organs; the human figure in the middle represented the muscles in different parts of the body and showed the degree of muscle fatigue; and the bar on the right showed the temperature of the passengers.

Figure 6.

Health Tester technical prototype ((1) The general status of the Health Tester; (2) trigger of visual feedback by physiological status).

4. Evaluation

In order to effectively evaluate these five design concepts, we conducted an evaluation with 20 participants. The main purpose of this test was to examine the attractiveness of these design prototypes, in order to provide evidence of the effectiveness of our proposed framework for multimodal HMI designs.

4.1. Participants

A total of 20 participants (13 males, 7 females) from 23 to 45 years old (M = 29, SD = 7.5) were recruited for this design evaluation. We recruited participants through targeted invitation. All participants had education experience in industrial design or interaction design, and were very familiar with the theories, methodologies, and case studies of HMI. Specifically, four university lecturers in design, four design practitioners with more than three years’ work experience, four Ph.D. candidates in design, and eight postgraduate students in design took part in the test.

4.2. Setup

The test was conducted online. Five video showcases and five design images were prepared to introduce the concepts of each work, respectively. An online survey was also prepared based on the AttrakDiff questionnaire [50], which is helpful to evaluate the attractiveness of products [51]. AttrakDiff has been widely applied for heuristic testing of HMI designs as a single evaluation method [52,53,54,55]. Similarly, we conducted single evaluations on the five designs and adopted the AttrakDiff online tool [56] to analyze our research data.

4.3. Procedure

Before the experiment, we briefly introduced this project and demonstrated the concept and functionality of each prototype through video showcases and design images. Participants were then allowed to ask questions about the prototypes to help them understand the concepts thoroughly. Subsequently, they were invited to fill in the questionnaire.

4.4. Data Collection and Analysis

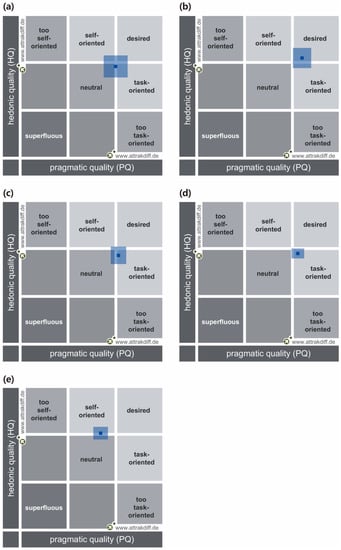

The questionnaire data were collected through a Chinese online survey service provider called Wen Juan Xing, which can provide functions equivalent to Amazon Mechanical Turk [57]. All the obtained data were imported to the AttrakDiff online tool for data analysis, which can automatically calculate the Hedonic Quality (HQ) and the Pragmatic Quality (PQ) of the evaluated concept design, in order to reveal its attractiveness (ATT). Specifically, HQ is an index that describes the originality and beauty of a product, whereas PQ can be used to indicate usability aspects, i.e., efficiency, effectiveness, and learnability [58]. The higher the HQ and PQ values, the higher the ATT of the evaluated product. The values of HQ, PQ, and ATT all range from −3 (negative) to 3 (positive). Based on the online tool, a visual analysis diagram of AttrakDiff can be generated to support the data analysis, in which the vertical axis represents the HQ value (bottom = low extent) and the horizontal axis represents the PQ value (left = low extent) of a specific design. In the diagram, a rectangle is used to show the questionnaire results, where its size is inversely associated with the confidence of the data.
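To make the scoring idea concrete, the following minimal sketch averages item ratings on the −3 to 3 range into a scale score per participant and then summarizes a scale across participants with a mean and an interval half-width. It is an assumption-laden illustration, not the AttrakDiff online tool's actual computation, which is not described here: the function names, the normal-approximation factor `z = 1.96`, and the sample ratings are all hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def scale_score(item_ratings):
    """Average one participant's item ratings for one scale (e.g., PQ)."""
    for rating in item_ratings:
        if not -3 <= rating <= 3:
            raise ValueError(f"rating {rating} is outside the -3..3 range")
    return mean(item_ratings)


def summarize(participant_scores, z=1.96):
    """Return (mean, half-width) across participants.

    The half-width uses a normal approximation (an assumption); a wider
    interval would correspond to a larger rectangle, i.e., less confidence.
    """
    n = len(participant_scores)
    half_width = z * stdev(participant_scores) / sqrt(n)
    return mean(participant_scores), half_width


# Hypothetical ratings from three participants for one scale.
ratings = [
    [1, 2, 3, 0, 1, 2, -2],
    [2, 2, 2, 2, 2, 2, 2],
    [3, 3, 3, 3, 3, 3, 3],
]
scores = [scale_score(r) for r in ratings]
scale_mean, scale_half_width = summarize(scores)
print(scale_mean, scale_half_width)
```

Plotting the PQ summary on the horizontal axis and the HQ summary on the vertical axis, with the half-widths as the rectangle's extent, would approximate the kind of diagram described above.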

4.5. Results

We obtained 20 responses to the 5 concept designs and imported them to AttrakDiff for data analysis. As shown in Figure 7, the values of all students’ designs were close to the “desired” area and their ATT values were greater than 1.0. That is to say, the attractiveness of these prototypes was rated positively.

Figure 7.

AttrakDiff evaluation results of (a) Personal Interaction Devices in Car; (b) Time Hobbyhorse; (c) Carfee Break; (d) E-Car; (e) Health Tester.

Specifically, Figure 7a shows that the HQ value of Personal Interaction Devices in Car is 0.86 (confidence = 0.51) and the PQ value is 1.02 (confidence = 0.47), with an ATT value of 1.32. Additionally, Figure 7b reveals that Time Hobbyhorse achieved higher results, with an HQ value of 1.23 (confidence = 0.42) and a PQ value of 1.43 (confidence = 0.38). The ATT value of this design was as high as 1.85, which indicates its strong attractiveness. By comparison, we found that the concept of Personal Interaction Devices in Car was less desirable than Time Hobbyhorse. This might be because social interactions within vehicles could lead to safety concerns. By enabling lightweight, single-direction communication between passengers and their families at home, Time Hobbyhorse may therefore have been deemed more acceptable and novel by our participants. This finding implies that, when developing multimodal HMIs for automotive wellness, the design framework should also support designers in brainstorming possibilities beyond the context of the vehicle alone.

According to Figure 7c, Carfee Break had an HQ value of 0.75 (confidence = 0.35) and a PQ value of 1.14 (confidence = 0.33), with an overall ATT value of 1.17, indicating its attractiveness. The result of E-Car (Figure 7d) showed that the HQ value was 0.83 (confidence = 0.20) and the PQ value was 1.26 (confidence = 0.26), and the ATT value of this work was 1.16. Both Carfee Break and E-Car had strong task-oriented features, because they selected entertainment as the target activity, aiming to provide passengers with a relaxing ambience.

As shown in Figure 7e, Health Tester was evaluated with an HQ value of 1.08 (confidence = 0.26) and a PQ value of 0.38 (confidence = 0.31), with an ATT value of 1.19. Of all these works, Health Tester was the least task-oriented, which can be attributed to the fact that it focused on helping passengers learn their health conditions within the car, through timely visualization of their physiological data. Therefore, rather than focusing on specific HMI tasks, Health Tester was applied in the context of an ambient display to provide a reminder of unhealthy conditions.
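The values reported above can be gathered into one table to check the summary statements. The short sketch below uses only the HQ, PQ, and ATT numbers given in this section; the variable names are our own, and using the lowest PQ value as a stand-in for "least task-oriented" is a simplifying assumption.

```python
# Reported (HQ, PQ, ATT) values for the five concepts.
RESULTS = {
    "Personal Interaction Devices in Car": (0.86, 1.02, 1.32),
    "Time Hobbyhorse": (1.23, 1.43, 1.85),
    "Carfee Break": (0.75, 1.14, 1.17),
    "E-Car": (0.83, 1.26, 1.16),
    "Health Tester": (1.08, 0.38, 1.19),
}

# Every concept should have an ATT value above 1.0.
all_att_above_one = all(att > 1.0 for _, _, att in RESULTS.values())

# Lowest PQ is used here as a proxy for the least task-oriented concept.
lowest_pq = min(RESULTS, key=lambda name: RESULTS[name][1])

# Highest ATT identifies the most attractive concept.
highest_att = max(RESULTS, key=lambda name: RESULTS[name][2])

print(all_att_above_one, lowest_pq, highest_att)
```

With these numbers, every ATT value exceeds 1.0, Health Tester has the lowest PQ, and Time Hobbyhorse has the highest ATT, which matches the observations in the text.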

5. Discussion and Conclusions

In this paper, we have proposed a multimodal HMI design framework for automotive wellness in AVs based on the notion of Benyon et al. [35]. In this framework, three elements were proposed and divided into five dimensions. Accordingly, we developed a process for utilizing this framework and examined it in a design course to exemplify its effectiveness. We learned that the clearly classified target activities of the framework could support the development of distinctive HMI concepts for automotive wellness. Moreover, we learned that the list of system inputs and system outputs from the framework helped the students turn the concept designs into feasible engineering developments. Based on the application throughout the design course, we also found that one advantage of the linear process for utilizing the proposed framework was its clear guidance for exploring HMI design. All the students’ works were evaluated with the AttrakDiff questionnaire by 20 participants who had design backgrounds. Given the positive AttrakDiff results (all with ATT > 1), the evaluation suggests that the proposed framework and the design process could effectively support students in developing desirable multimodal HMIs for automotive wellness.

Although the proposed framework appeared to be effective in practice, it is not yet sufficiently detailed. Consequently, students may feel that some elements are not clearly defined, which requires us to reflect on the framework. The development of multimodal HMI design for automotive wellness in AVs requires researchers to consider various factors in the interactive system. First, each element needs to be expanded for design ideation. For example, target activities have been summarized as health, entertainment, work, and social communication. However, the actual demands of passengers may become more diverse with increased attention to wellness [59,60]. Thus, the types of target activities should be reclassified and expanded. As for system inputs, they can be connected with specific technologies. For example, it would be worth investigating an index of existing sensors used to monitor different physiological measures [13]. Second, the proposed framework was aimed at relatively simple tasks. A remaining challenge is whether it is still applicable when facing a complex in-vehicle situation with multiple passengers [61,62]. This is one of the directions we need to study next.

Author Contributions

Conceptualization, X.R. and Y.Z.; methodology, Y.Z.; validation, X.R. and Y.Z.; formal analysis, Y.Z.; investigation, X.R. and Y.Z.; resources, X.R.; data curation, Y.Z.; writing—original draft preparation, Y.Z.; writing—review and editing, X.R.; visualization, Y.Z.; supervision, X.R.; project administration, X.R.; funding acquisition, X.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Beijing Institute of Technology Research Fund Program for Young Scholars (XSQD-202018002).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yaqoob, I.; Khan, L.U.; Kazmi, S.A.; Imran, M.; Guizani, N.; Hong, C.S. Autonomous driving cars in smart cities: Recent advances, requirements, and challenges. IEEE Netw. 2019, 34, 174–181.

- Walch, M.; Lange, K.; Baumann, M.; Weber, M. Autonomous Driving: Investigating the Feasibility of Car-Driver Handover Assistance. In Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Nottingham, UK, 1–3 September 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 11–18.

- Pfleging, B.; Rang, M.; Broy, N. Investigating User Needs for Non-Driving-Related Activities during Automated Driving. In Proceedings of the 15th International Conference on Mobile and Ubiquitous Multimedia, Rovaniemi, Finland, 12–15 December 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 91–99.

- Singleton, P.A. Discussing the “positive utilities” of autonomous vehicles: Will travellers really use their time productively? Transp. Rev. 2019, 39, 50–65.

- Tasoudis, S.; Perry, M. Participatory Prototyping to Inform the Development of a Remote UX Design System in the Automotive Domain. Multimodal Technol. Interact. 2018, 2, 74.

- Stevens, G.; Bossauer, P.; Vonholdt, S.; Pakusch, C. Using Time and Space Efficiently in Driverless Cars: Findings of a Co-Design Study. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–14.

- Kun, A.L.; Boll, S.; Schmidt, A. Shifting gears: User interfaces in the age of autonomous driving. IEEE Pervas. Comput. 2016, 15, 32–38.

- Pfleging, B. Automotive User Interfaces for the Support of Non-Driving-Related Activities. Ph.D. Thesis, Universität Stuttgart, Stuttgart, Germany, 2017.

- Lumileds Automotive Wellness: The Growing Importance of Health and Wellbeing on the Road. Available online: https://lumileds.com/company/blog/automotive-wellness-the-growing-importance-of-health-and-wellbeing-on-the-road/ (accessed on 1 June 2022).

- Singleton, P.A.; De Vos, J.; Heinen, E.; Pudāne, B. Potential Health and Well-Being Implications of Autonomous Vehicles. In Advances in Transport Policy and Planning; Elsevier: Amsterdam, The Netherlands, 2020; Volume 5, pp. 163–190.

- Krome, S.; Goddard, W.; Greuter, S.; Walz, S.P.; Gerlicher, A. A Context-Based Design Process for Future Use Cases of Autonomous Driving: Prototyping AutoGym. In Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Nottingham, UK, 1–3 September 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 265–272.

- Bito, K.; Siio, I.; Ishiguro, Y.; Takeda, K. Automatic Generation of Road Trip Summary Video for Reminiscence and Entertainment using Dashcam Video. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Leeds, UK, 9–14 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 181–190.

- Deserno, T.M. Transforming Smart Vehicles and Smart Homes into Private Diagnostic Spaces. In Proceedings of the 2020 2nd Asia Pacific Information Technology Conference, Bali Island, Indonesia, 17–19 January 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 165–171.

- Lakier, M.; Nacke, L.E.; Igarashi, T.; Vogel, D. Cross-Car, Multiplayer Games for Semi-Autonomous Driving. In Proceedings of the Annual Symposium on Computer-Human Interaction in Play, Barcelona, Spain, 22–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 467–480.

- Pieraccini, R.; Dayanidhi, K.; Bloom, J.; Dahan, J.; Phillips, M.; Goodman, B.R.; Prasad, K.V. A multimodal conversational interface for a concept vehicle. New Sch. Psychol. Bull. 2003, 1, 9–24.

- Braun, M.; Broy, N.; Pfleging, B.; Alt, F. Visualizing natural language interaction for conversational in-vehicle information systems to minimize driver distraction. J. Multimodal User 2019, 13, 71–88.

- Löcken, A.; Ihme, K.; Unni, A. Towards Designing Affect-Aware Systems for Mitigating the Effects of In-Vehicle Frustration. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, Oldenburg, Germany, 24–27 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 88–93.

- Alpers, B.S.; Cornn, K.; Feitzinger, L.E.; Khaliq, U.; Park, S.Y.; Beigi, B.; Hills-Bunnell, D.J.; Hyman, T.; Deshpande, K.; Yajima, R.; et al. Capturing Passenger Experience in a Ride-Sharing Autonomous Vehicle: The Role of Digital Assistants in User Interface Design. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Virtual Event, DC, USA, 21–22 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 83–93.

- Riegler, A.; Aksoy, B.; Riener, A.; Holzmann, C. Gaze-based Interaction with Windshield Displays for Automated Driving: Impact of Dwell Time and Feedback Design on Task Performance and Subjective Workload. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Virtual Event, DC, USA, 21–22 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 151–160.

- Riegler, A.; Wintersberger, P.; Riener, A.; Holzmann, C. Investigating User Preferences for Windshield Displays in Automated vehicles. In Proceedings of the 7th ACM International Symposium on Pervasive Displays, Munich, Germany, 6–8 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–7.

- Burnett, G.; Hazzard, A.; Crundall, E.; Crundall, D. Altering Speed Perception through the Subliminal Adaptation of Music within a Vehicle. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Oldenburg, Germany, 24–27 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 164–172.

- Meck, A.; Precht, L. How to Design the Perfect Prompt: A Linguistic Approach to Prompt Design in Automotive Voice Assistants–An Exploratory Study. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Leeds, UK, 9–14 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 237–246.

- Beattie, D.; Baillie, L.; Halvey, M. Exploring How Drivers Perceive Spatial Earcons in Automated Vehicles. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Maui, HI, USA, 11–15 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; Volume 1. Article No. 36.

- Wang, M.; Lee, S.C.; Sanghavi, H.K.; Eskew, M.; Zhou, B.; Jeon, M. In-Vehicle Intelligent Agents in Fully Autonomous Driving: The Effects of Speech Style and Embodiment Together and Separately. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Leeds, UK, 9–14 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 247–254.

- Gang, N.; Sibi, S.; Michon, R.; Mok, B.; Chafe, C.; Ju, W. Don’t Be Alarmed: Sonifying Autonomous Vehicle Perception to Increase Situation Awareness. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Toronto, ON, Canada, 23–25 September 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 237–246.

- Jeon, M. Multimodal Displays for Take-Over in Level 3 Automated Vehicles while Playing a Game. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–6.

- Dmitrenko, D.; Maggioni, E.; Vi, C.T.; Obrist, M. What Did I Sniff? Mapping Scents Onto Driving-Related Messages. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Oldenburg, Germany, 24–27 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 154–163.

- Wang, C.; Krüger, M.; Wiebel-Herboth, C.B. “Watch out!”: Prediction-Level Intervention for Automated Driving. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Virtual Event, DC, USA, 21–22 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 169–180.

- Vo, D.; Brewster, S. Investigating the Effect of Tactile Input and Output Locations for Drivers’ Hands on In-Car Tasks Performance. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Virtual Event, DC, USA, 21–22 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–8. [Google Scholar]

- Paredes, P.E.; Balters, S.; Qian, K.; Murnane, E.L.; Ordóñez, F.; Ju, W.; Landay, J.A. Driving with the fishes: Towards calming and mindful virtual reality experiences for the car. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Singapore, 8–12 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; Volume 2, pp. 1–21. [Google Scholar]

- Janssen, C.P.; Kun, A.L.; Brewster, S.; Boyle, L.N.; Brumby, D.P.; Chuang, L.L. Exploring the Concept of the (Future) Mobile Office. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, Utrecht, The Netherlands, 21–25 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 465–467. [Google Scholar]

- Janssen, C.P.; Kun, A.; van der Meulen, H. Calling while Driving: An Initial Experiment with HoloLens. In Proceedings of the Ninth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Manchester, VT, USA, 26–29 June 2017; Public Policy Center, University of Iowa: Iowa City, IA, USA, 2017. [Google Scholar]

- Janssen, C.P.; Boyle, L.N.; Kun, A.L.; Ju, W.; Chuang, L.L. A hidden Markov framework to capture human–machine interaction in automated vehicles. Int. J. Hum.-Comput. Interact. 2019, 35, 947–955. [Google Scholar] [CrossRef]

- Janssen, C.P.; Iqbal, S.T.; Kun, A.L.; Donker, S.F. Interrupted by my car? Implications of interruption and interleaving research for automated vehicles. Int. J. Hum.-Comput. Stud. 2019, 130, 221–233. [Google Scholar] [CrossRef]

- Benyon, D.; Turner, P.; Turner, S. Designing Interactive Systems: People, Activities, Contexts, Technologies; Pearson Education: London, UK, 2005. [Google Scholar]

- Spinuzzi, C. The methodology of participatory design. Tech. Commun. 2005, 52, 163–174. [Google Scholar]

- Gaver, B.; Dunne, T.; Pacenti, E. Design: Cultural probes. Interactions 1999, 6, 21–29. [Google Scholar] [CrossRef]

- Hughes, J.A.; Randall, D.; Shapiro, D. Faltering from Ethnography to Design. In Proceedings of the 1992 ACM Conference on Computer-Supported Cooperative Work, Toronto, ON, Canada, 1–4 November 1992; Association for Computing Machinery: New York, NY, USA, 1992; pp. 115–122. [Google Scholar]

- Design Council. The “Double Diamond” Design Process Model; Design Council: London, UK, 2005. [Google Scholar]

- Gustafsson, D. Analysing the Double Diamond Design Process through Research & Implementation. Master’s Thesis, Aalto University, Espoo, Finland, 2019. [Google Scholar]

- Rousseau, C.; Bellik, Y.; Vernier, F.; Bazalgette, D. A framework for the intelligent multimodal presentation of information. Signal Process. 2006, 86, 3696–3713. [Google Scholar] [CrossRef]

- Kim, H.S.; Yoon, S.H.; Kim, M.J.; Ji, Y.G. Deriving Future User Experiences in Autonomous Vehicle. In Proceedings of the AutomotiveUI’15: The 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Nottingham, UK, 1–3 September 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 112–117. [Google Scholar]

- Jeon, M.; Riener, A.; Sterkenburg, J.; Lee, J.; Walker, B.N.; Alvarez, I. An international survey on automated and electric vehicles: Austria, Germany, South Korea, and USA. In Proceedings of the International Conference on Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management, Las Vegas, NV, USA, 15 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 579–587. [Google Scholar]

- Leonhardt, S.; Leicht, L.; Teichmann, D. Unobtrusive vital sign monitoring in automotive environments—A review. Sensors 2018, 18, 3080. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Pharr, M.; Salvatore, G.A. Lab-on-skin: A review of flexible and stretchable electronics for wearable health monitoring. ACS Nano 2017, 11, 9614–9635. [Google Scholar] [CrossRef] [PubMed]

- Poslad, S. Ubiquitous Computing: Smart Devices, Environments and Interactions; John Wiley & Sons: Chichester, UK, 2011. [Google Scholar]

- Schifferstein, H.N.; Desmet, P.M. Tools facilitating multi-sensory product design. Des. J. 2008, 11, 137–158. [Google Scholar] [CrossRef]

- Beaudouin-Lafon, M.M. Interfaces Multimodales: Concepts, Modèles et Architectures. Ph.D. Thesis, Université Paris, Paris, France, 30 May 1995. [Google Scholar]

- Morrison, G.R.; Ross, S.J.; Morrison, J.R.; Kalman, H.K. Designing Effective Instruction; John Wiley & Sons: Chichester, UK, 2019. [Google Scholar]

- Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. In Mensch & Computer 2003; Springer: Berlin, Germany, 2003; pp. 187–196. [Google Scholar]

- Hassenzahl, M.; Monk, A. The inference of perceived usability from beauty. Hum.-Comput. Interact. 2010, 25, 235–260. [Google Scholar] [CrossRef]

- Beyer, G.; Alt, F.; Müller, J.; Schmidt, A.; Isakovic, K.; Klose, S.; Schiewe, M.; Haulsen, I. Audience behavior around large interactive cylindrical screens. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 1021–1030. [Google Scholar]

- Hu, J.; Le, D.; Funk, M.; Wang, F.; Rauterberg, M. Attractiveness of an interactive public art installation. In Proceedings of the International Conference on Distributed, Ambient, and Pervasive Interactions, Las Vegas, NV, USA, 21–26 July 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 430–438. [Google Scholar]

- Fastnacht, T.; Fischer, P.T.; Hornecker, E.; Zierold, S.; Aispuro, A.O.; Marschall, J. The hedonic value of Sonnengarten: Touching plants to trigger light. In Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia, Stuttgart, Germany, 26–29 November 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 507–514. [Google Scholar]

- Wang, C.; Terken, J.; Hu, J. “Liking” Other Drivers’ Behavior while Driving. In Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seattle, WA, USA, 17–19 September 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 1–6. [Google Scholar]

- AttrakDiff. Available online: http://www.attrakdiff.de/index-en.html (accessed on 1 September 2022).

- Wu, S.J.; Bai, X.; Fiske, S.T. Admired rich or resented rich? How two cultures vary in envy. J. Cross Cult. Psychol. 2018, 49, 1114–1143. [Google Scholar] [CrossRef]

- Schrepp, M.; Held, T.; Laugwitz, B. The influence of hedonic quality on the attractiveness of user interfaces of business management software. Interact. Comput. 2006, 18, 1055–1069. [Google Scholar] [CrossRef]

- De Vos, J. Towards happy and healthy travellers: A research agenda. J. Transp. Health 2018, 11, 80–85. [Google Scholar] [CrossRef]

- De Vos, J. Analysing the effect of trip satisfaction on satisfaction with the leisure activity at the destination of the trip, in relationship with life satisfaction. Transportation 2019, 46, 623–645. [Google Scholar] [CrossRef]

- Brewster, S.; McGookin, D.; Miller, C. Olfoto: Designing a Smell-Based Interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Montréal, Québec, Canada, 22–27 April 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 653–662. [Google Scholar]

- Fröhlich, P.; Baldauf, M.; Mirnig, A.G. 2nd Workshop on User Interfaces for Public Transport Vehicles: Interacting with Automation. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, Utrecht, The Netherlands, 21–25 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 19–24. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).