Abstract

The progression and adoption of innovative learning methodologies signify that a part of society is open to new technologies and ideas and is thus advancing. The latest innovation in teaching is the use of Augmented Reality (AR). Applications using this technology have been deployed successfully in STEM (Science, Technology, Engineering, and Mathematics) education for delivering the practical and creative parts of teaching. Since a large volume of published studies on AR in education already reports advantages, limitations, effectiveness, and challenges, classifying these projects allows for a review of their success in different educational settings and the discovery of current challenges and future research areas. Due to COVID-19, the landscape of technology-enhanced learning has shifted toward blended learning, personalized learning spaces, and user-centered approaches with safety measures. The main contributions of this paper include a review of the current literature, an investigation of the challenges, the identification of future research areas, and, finally, a report on the development of two case studies that highlight the first steps needed to address these research areas. The result of this research ultimately details the research gap that must be addressed to facilitate real-time touchless hand interaction, kinesthetic learning, and machine learning agents with a remote learning pedagogy.

1. Introduction

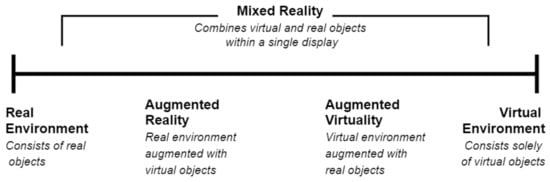

Augmented Reality (AR) allows for the superimposing of computer-generated virtual 3D objects on top of a real environment in real time [1], as illustrated in Figure 1. Learning assisted with AR technology enables ubiquitous [2], collaborative [3], and localized learning [4]. It makes a virtual object appear in real time in a real-world space, which can engage a user in the learning process in ways that other media have not. AR is an emerging technology with high potential for learning, teaching, and creative training [5].

Figure 1.

Milgram’s continuum of physical reality, Augmented Reality, Augmented Virtuality, and Virtual Reality [6].

The fundamental research question this paper seeks to address is the following: given that a large volume of published studies on AR in education reports advantages, limitations, and effectiveness, what are the major challenges and emerging opportunities that can help in adopting new pedagogies, such as kinesthetic and self-directed learning, in resource-constrained environments in AR?

2. Background

AR-based training has advantages over Virtual Reality (VR) approaches, as training takes place in the real world and can have access to real tactile feedback when performing a training task. Other advantages include the instructions and location-dependent information being directly linked and/or attached to physical objects [7].

AR, due to both its novelty and its potential to create innovative and attractive interfaces, can bring a natural enticement to the learning process [8,9]. It can be used with desktops, tablets, smartphones, or Head-Mounted Displays (HMDs). Moreover, it is portable and adaptable to different scenarios, enhancing the learning process in the traditional classroom, the special education classroom, and outside the classroom [10]. The large-scale study of the Ecosystems Augmented Reality Learning System (EARLS) reported the highest ratings on “Usefulness of learning Ecosystems” [11]. Previous research has reviewed different methods of augmenting educational content, testing at different education levels and subject domains [12], game-based learning [13], AR in remote learning [14], and AR in Science, Technology, Engineering, and Mathematics (STEM) through a systematic review [15]; however, it lacks focus on new interaction techniques, the involvement of intelligent agents, and collaboration capabilities [3] in AR applications.

Given recent moves worldwide to explore the use of remote learning, it is now timely to explore the current challenges of this field and the potential future research directions for using AR in education to meet this need. Advancements in machine learning combined with agent-oriented approaches allow for developing rich conversational embodied agents to aid in remote learning. It has long been known that a co-learner agent can greatly help a participant in a learning task [16], and collaborative learning approaches with enhanced emotional abilities have more recently also been shown to increase this effect [17]. Research in 3D Augmented Reality Agents (AURAs) [18] is more limited, though. Thus, this review includes examining the different uses of agents within some of the projects surveyed. To capture the current state-of-the-art related work for this research, five types of AR applications are considered, based on the five directions of AR education defined in [19]. These categories are as follows:

- AR books;

- AR educational games;

- AR discovery-based learning applications;

- AR projects that model real-world objects for interaction;

- AR projects exploring skill-based training.

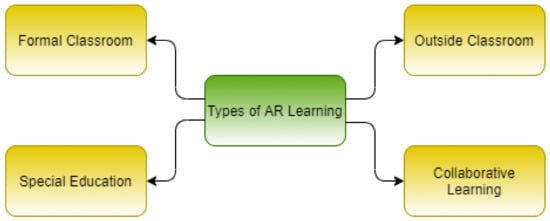

The survey has a narrow focus on the AR-specific learning scenarios outlined in Figure 2. The search did not yield sufficient papers to justify a typical systematic review approach; instead, all papers that met the criteria were included, giving a comprehensive snapshot of the current state of the art in these areas.

Figure 2.

Types of AR learning included in this study.

2.1. AR Learning in Formal Classrooms

In the classroom setting, AR allows students to learn through the combination of both real and computer-generated images [20]. It helps students understand different topics through different scenarios.

2.2. AR Learning in Special Education

AR has the capability to create learning opportunities for children with special needs by overcoming physical barriers; it can bring a high-quality educational experience to students with learning and physical disabilities as well as to the special education classroom, as explained in Section 4.7.

2.3. AR Learning Outside the Classroom

Using an AR smartphone application, the AR learning experience can be extended outside the formal classroom, including to self-assisted learning. AR can create immersive learning opportunities by overlaying digital content in settings that range from field trips to learning in a personalized space.

2.4. AR for Collaborative Learning

If an educator is looking to model scientific practice, AR provides the opportunity to support the multifaceted world of scientific exploration. The need for collaborative learning has increased recently due to the growing demand for remote and independent learning, where students need to connect with classmates and teachers.

In Section 4, current research studies are presented in different subsections according to domains and then educational level, which resulted from surveying and documenting projects in this field discovered through an exhaustive search. These research projects will be further examined in a table listing research objectives, educational levels, subjects they trialed the study with, how they have created their AR applications, and what devices and tracking technologies they used.

This review gives a high-level overview of the different user interface complexity with the corresponding level of possible collaboration with either a human or some form of Artificial Intelligence (AI) construct. This construct can take the form of a simple script, an agent-based system, or a machine learning algorithm.

Section 5 identifies future research areas based on the review in Section 4. This is followed by Section 6, which explores these research gaps through the implementation of two case studies that illustrate the proposed research directions and highlight the current state of the art in AR. Finally, Section 7 outlines the conclusions of this paper.

3. Methodology

The search aimed to cover all the reputable AR studies in education found through IEEE Xplore, the ACM Digital Library, and Google Scholar with the keywords “Augmented Reality” and “Learning” in the title, published after 2000. Given the number of results (395 on IEEE Xplore, 546 in the ACM Digital Library, and 3389 on Google Scholar), this study moves away from the systematic review approach to explore the more technical aspects of AR learning applications. The goal of this study is to explore only those studies that present new and productive applications for learning with different APIs, interaction capacities, libraries, agents, and display devices. Studies conducted at the early level up to 5th grade are considered Primary School, and those from 6th to 8th grade are considered Elementary School. Both Primary and Elementary Schools are considered Early Education in the analysis, while upper classes before undergraduate level are considered secondary level (High School). All classes after secondary level are considered university (Tertiary Education).
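The grade-to-level grouping described above can be written down as a small lookup, which may help when coding studies for the analysis. This is a minimal sketch, not part of the original methodology: the function name `classify_grade` is hypothetical, and the upper bound of grade 12 for secondary education is an assumption, since the text only says "upper classes before undergraduate".

```python
from typing import Tuple

# Assumption: grade 12 is the last pre-university grade. The paper only says
# "upper classes before undergraduate", so this bound is illustrative.
LAST_SECONDARY_GRADE = 12


def classify_grade(grade: int) -> Tuple[str, str]:
    """Return (school type, analysis level) for a numeric school grade."""
    if grade < 0:
        raise ValueError("grade must be non-negative")
    if grade <= 5:
        # Up to 5th grade: Primary School, grouped under Early Education.
        return ("Primary School", "Early Education")
    if grade <= 8:
        # 6th to 8th grade: Elementary School, also Early Education.
        return ("Elementary School", "Early Education")
    if grade <= LAST_SECONDARY_GRADE:
        return ("High School", "Secondary Education")
    # Anything above the assumed last secondary grade counts as university.
    return ("University", "Tertiary Education")
```

For instance, a study run with 7th-grade pupils would be coded as `("Elementary School", "Early Education")` under this scheme.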

Finally, this research only included papers that focus on the four AR learning scenarios shown in Figure 2.

4. Results and Discussion

This section provides a detailed discussion of AR learning studies in different domains and at different educational levels. Furthermore, this discussion is summarized in Table 1 with a short objective, number of participants (subjects), display devices (desktop, handheld (smartphones/tablets), WebAR, HMDs), UI level, collaboration capacity, and agency. The detailed discussion follows.

Table 1.

AR studies with their status of domain, education level, libraries, display devices, user interaction, collaboration capacity and agents.

4.1. Interactive AR Books for Early Classes

AR books are the most adopted learning pedagogy in the field of AR learning [21]. This concept involves converting the traditional books into interactive AR books by overlaying 3D contents. Nguyen et al. reported that the use of AR, regardless of grade level or subject area, allows students to be actively engaged in the learning process [22]. The concept of Augmented Instructions [23] can convert a physical book to virtuality and ARGarden [24] is an interactive flower gardening AR system, creating a positive learning engagement by adding visualization in the learning process.

Similarly, study findings about AR for teaching basic concepts of transportation [25], Toys++ [26], and an AR magical playbook that digitizes traditional storytelling [27] show the role of AR as an engaging factor in the learning process.

Adding further interaction, the inquiry-based AR learning environment AIBLE [28] lets students manipulate virtual representations of the Sun, the Moon, and the Earth, which helped to demonstrate the concept of task mobilization and active learning in AR.

The use of AR to enhance the learner’s interest in the Chinese library classification scheme was supported by using a physical presentation agent [29]. To learn the role of parents in child learning, the concept of an AR picture [30] identified four behavioral patterns: parent as dominator, child as dominator, communicative child–parent pair, and low communicative child–parent pair.

4.2. Interactive Books for Higher Classes

For high school, Liarokapis et al. [31] developed the Multimedia Augmented Reality Interface for E-learning (MARIE) to use the potential of AR by superimposing Virtual Multimedia Content (VMC) information in an AR tabletop setting, enabling the user to interact with VMC composed of three-dimensional objects and animations.

To convert traditional books into interactive AR books, miBook (Multimedia Interactive Book) reflects the development of a new concept of virtual interpretation of conventional textbooks and audio-visual content [32]. This idea of virtual interpretation showed an impact on learning outcomes by adding visualization to a regular textbook. In a similar approach, the ARIES system [33] showed that physical markers have a significant impact on usability and that perceived enjoyment is a much more important factor than perceived usefulness.

To find the effectiveness of AR, the ARCS model (Attention, Relevance, Confidence, Satisfaction) of motivation was applied by Wei et al. [34] using “AR Creative-Classroom” and “AR Creative-Builder”. A pilot study proved that the proposed teaching scheme significantly improved learning motivation and student creativity in creative design courses.

To enhance the reading and writing of physical books, SESIL combines book page recognition and handwriting recognition using the AR camera [35]. It is considered robust and reliable for practical use in education, as it yielded positive results. In addition, Jeonghye Han conducted an exploratory study to empirically examine children’s observations of computer- and robot-mediated AR systems, which reported positive dramatic play and interactive engagement [36]. Mixing interactive concept maps with AR technology, supported by good instructional technique and scaffolds, improved learning outcomes and yielded a new learning pedagogy [37].

4.3. STEM (Science, Technology, Engineering, and Mathematics) Education

One of the first use cases of AR learning in secondary education is STEM subjects. AR allows teachers to incorporate new technology and techniques in the classroom, which is one of the primary scenarios outlined in Section 2.1. STEM is taught in secondary and tertiary level education, which is discussed in the overview of education levels given in Section 5.1. Given the link between STEM and the technologies that enable AR, STEM has naturally become one of the primary domains where AR learning is present, as discussed in Section 5.2. Some of the best examples of AR learning come from use cases in Chemistry. Chen et al. [38] investigated how students interact with AR models as compared to physical models to learn amino acid structures in a 3D environment. Learning chemistry with ARChemist [39] and through gestures, as tested in CHEMOTION [40], provides a virtual interaction with chemicals using hand-tracking technology.

Similarly to Chemistry, one of the first topics to be covered using AR learning is Astronomy. The use of AR to learn the Earth–Sun relationship [41], the Earth–Moon System [42], and the Live Solar System (LSS) [43] helped to enhance meaningful engagement in learning astronomy concepts and conceptual thinking. AR can assist in learning gravity and planetary motion with an interactive simulation, which increased the learning gain significantly and improved the attitudes of the students [44].

Visualization of biological processes within AR allows students to better understand processes that are impossible to observe in real time. Nickels et al. [45] developed the AR framework ProteinScanAR as an assistive tool for engaging lessons on molecular biology topics. Science Center To Go (SCeTGo) [46] investigated teachers’ and students’ acceptance and found the pedagogical efficiency of AR very constructive. As at the high school level, there are many AR studies at the university level, particularly for learning anatomy. For example, refs. [47,48,49,50,51,52] developed AR anatomy learning systems for learning the body from exterior to interior by introducing an innovative, hands-on study of the human musculoskeletal system. In addition, the use of Leap Motion for 3D body anatomy learning was tested, with hand tracking used to interact with 3D models [53,54].

The teaching of engineering subjects is a cornerstone of STEM, and as such, there are multiple examples of AR learning in this area. One overview of these innovations applied 3D web tools in technical and engineering education to support the multidimensional augmentation of teaching materials [55,56] used in technology and design engineering. The Learning Physics through Play Project (LPP) helps students learn physics concepts about force and motion [57], and LightUp [58] is used for learning concepts of electronics such as circuit boards, magnets, and plastic sheets.

By combining modern mobile AR technology and pedagogical inquiry activities, Chang et al. [59] used AR for teaching Nuclear Power Plant activities with more productive digital visualization. Adding more to learning electronics concepts, ElectARmanual [60] and an AR-based flipped learning system [61] helped to achieve better learning outcomes by using the AR guiding mechanism.

Collaboration within an AR environment is an important AR learning scenario, as outlined in Section 5.8. In keeping with the Chemistry theme, one tangible interaction study was conducted using a Tangible User Interface (TUI) called Augmented Chemistry (AC), which reported higher user acceptance when interacting with the 3D models in the lab [62]. The tangible user interface could be one area that helps collaborative learning, but tangible interaction can require additional resources, and in the current COVID-19 crisis, alternative touchless interaction approaches could be a better solution; these are discussed in Section 6.1 and further in Section 6.5 with a Chemistry-related case study. Another prominent example of collaboration, the Situated Multimedia Arts Learning Lab (SMALLab), found extensive evidence of its power as a learning approach in a design experiment with secondary earth science students [63]. AR can also be combined with the Internet of Things (IoT) to improve productivity in Engineering education in different scenarios [64]. Finally, AR as a learning tool in mathematics, tested with Construct 3D [65] and GeoAR [66] to support learning geometry, showed a highly positive impact concerning its educational potential.

Field trips are one example of STEM scenarios that require leaving the formal learning environment, which suits the outdoor AR use case mentioned in Section 2.3. Embodied experiences on field trips for science classrooms with situated simulations obtained valuable and effective results about student engagement and its connection with experiential learning from the curriculum [67].

This potential for kinesthetic learning or hands-on learning by performing tasks (discussed more in Section 6.2) has been adopted for AR technical training for people to learn new maintenance and assembly skills for various industries [68]. For a trainee, interaction with real-world objects and machinery parts while obtaining the virtual information for learning is the actual advantage of using AR for training.

4.4. Language and Vocabulary Learning

The use of AR for learning languages relates to the formal classroom learning described in Section 2.1 and has been tested successfully in different studies. The use of AR flashcards for learning the English alphabet and animals [69] and an AR-based game for Kanji learning [70] showed AR to be a tool for motivation and visual presentation in learning languages. To test ubiquitous games as an approach to language learning, HELLO (Handheld English Language Learning Organization) [71] and another handheld language learning approach [72] showed improved retention of words, which increased student satisfaction and attention [73]. Similarly, TeachAR, which uses Kinect [74], is used for teaching basic English words (colors, shapes, and prepositions), and AR has been used for game-based foreign language learning [75]. The use of Microsoft HoloLens for vocabulary learning, as compared with traditional flashcard-based learning, produced higher productivity and effectiveness in learning outcomes [76].

For language learning in higher classes, the mobile learning tool Explorez [77] has students interact with objects to improve their French language skills; it was rated as “useful” and “motivating for students”.

4.5. Collaborative Learning

The collaborative learning approach, as defined in Section 2.4, provides an opportunity of collaboration: either teacher-to-student or student-to-student. AR as collaborative learning [78] with SMALLab, which is a Student-Centered Learning Environment (SCLE) that uses interactive digital media in a multimodal sensing framework, reported promising results in social and collaboration aspects. Furthermore, in the collaborative learning approach, ref. [79] used ARClassNote, which is an AR application that allows users to save and share handwritten notes over optical see-through HMDs. It makes it easier to communicate between instructors and students by sharing written class materials.

An AR game concept, “Locatory”, was introduced by combining game logic with collaborative gameplay and personalized mobile AR visualization, which provides different perspectives on the interactive 3D visualization for learning the content with AR, and it identified positive experiences [80]. METAL [81] uses LookingGlass and HoloLens devices for collaboration between students and teachers, allowing users to share 3D content between devices. Further identified collaboration opportunities are discussed in Section 5.8, and there is a recommended approach in Section 6.6.

4.6. Environment and History Learning

Taking AR to location-based learning for environment and history, as outlined in Section 2.3, has produced many successful results. A study of the learning environment [82] and location-based experiments [83] reported that AR acts as an engagement factor in learning by overlaying virtual media on the physical environment. Gurjot Singh et al. developed the inquiry-based learning application CI-Spy, which seeks to engage students in history using an AR environment [84]. This enabled a comprehensive understanding of historical inquiry for students by combining AR experiences with strategic learning. Lu et al. chose game-based learning for a marine learning application with interactive storytelling and an interactive game-based test [85]. It helped the students to learn in the virtual context, thus deepening their involvement in the learning experience.

iARBook captures video input and sends it to Vuforia, which processes frames in real time to detect images and match them against the database [86]. Once it recognizes an image, the related scene is rendered over the video frame as a learning object. Considering the goals of learning achievement and attitude, EARLS promotes a more positive learning attitude among students than the Keyboard/Mouse-based Computer-Assisted Instruction (KMCAI) approach [11].
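The recognize-then-render loop described for iARBook can be sketched in a few lines. The sketch below is only an illustration of the control flow under stated assumptions: it does not use the Vuforia API, frames are represented by string fingerprints, recognition is a stand-in exact lookup, and the names `TARGET_DB`, `detect_target`, and `process_stream` are hypothetical.

```python
from typing import Dict, Iterable, Iterator, Optional, Tuple

# Hypothetical target database: image fingerprint -> scene to render.
TARGET_DB: Dict[str, str] = {
    "page-12-volcano": "volcano_3d_scene",
    "page-20-heart": "heart_3d_scene",
}


def detect_target(frame: str, db: Dict[str, str]) -> Optional[str]:
    """Stand-in for image recognition: exact lookup of a frame fingerprint.

    A real tracking SDK would match visual features; this is an assumption
    made only to keep the example self-contained.
    """
    return frame if frame in db else None


def process_stream(
    frames: Iterable[str], db: Dict[str, str]
) -> Iterator[Tuple[str, Optional[str]]]:
    """For each frame, yield the frame and the scene to overlay (or None)."""
    for frame in frames:
        target = detect_target(frame, db)
        # Render the related scene only when a known image is recognized.
        scene = db[target] if target is not None else None
        yield frame, scene
```

Calling `list(process_stream(["blank", "page-20-heart"], TARGET_DB))` pairs the unrecognized frame with `None` and the recognized page with its scene, mirroring the "detect, then render over the video frame" behavior in the text.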

4.7. Special Education

AR learning in special education, as defined in Section 2.2, can increase the learning gain by enhancing the representation of content. To explore AR learning in special education, Luna et al. [87] created 3D Learning Objects using AR for an online learning program for students affected by ADHD (Attention Deficit Hyperactivity Disorder). It was further developed as the AHA project in an extended study as a Web-based AR learning system [88]. Their evaluation study highlighted the potential of AR for interactive learning and for allowing users to become more engaged with learning content [89]. In addition, it provides opportunities for educational engagement and process reiteration for learners.

4.8. MOOC (Massive Open Online Courses)

MOOCs (Massive Open Online Courses), as discussed in Section 2.3, facilitate learning outside the classroom. Their importance has increased recently due to the emergency adoption of remote learning throughout the world during the pandemic. AR has been used in MOOCs to generate interactive and more appealing online content, which helped to increase productivity by improving visualization, supporting individualism, and raising interest [90,91]. As a remote learning environment, a MOOC can lack the hands-on nature of other learning approaches.

4.9. Technical Training

AR has been adopted as a learning tool in skill-based training. In technical training in general, the concept of kinesthetic learning is important for hands-on learning. For example, one integration of Intelligent Tutoring Systems (ITS) with AR trains users to assemble components on a computer motherboard, including identifying individual components and installing them on the motherboard [92]. With this adaptive guidance, the intelligent AR system led to faster performance than an AR training system without intelligent support.

Joanne Yip et al. [93] used AR in a technical training workshop for performing a threading task, which facilitated better learning and helped to improve students’ learning experience and understanding of complex concepts. In Section 6.2, this concept is taken into account along with a focus on adding intelligence to technical skills learning and doing more with a hands-on approach in a resource-constrained environment. These training experiences also require the introduction of authoring tools to allow for the rapid development of customized experiences, which are further discussed in Section 6.

4.10. Authoring Tools

The need for authoring tools is one of the more important areas of work in AR learning and still has open opportunities. There is very little work on authoring tools in AR [94]. An immersive AR authoring tool that allows users to create AR content was an excellent approach to addressing this need [95]. It reduces the workload for the teacher in creating and managing AR learning experiences. This can be directly aided by the introduction of different forms of artificial intelligence; one such approach is discussed in Section 6.3, and the background to this work is discussed in the next section.

4.11. Multi-Agent Systems

In both use cases, Section 2.1 and Section 2.3, incorporating agents can play a significant role. The concept of using interacting intelligent agents in AR can bring more productive results. Self-directed animated agents in AR, such as AR Puppet [96], validated in AR Lego, make autonomous decisions based on their perception of the real environment. A multi-agent system (SRA agent) approach helped to increase motivation, using the principle of “learn by doing” or kinesthetic learning [97].

Kid Space is an advanced, centralized projection device that creates multi-modal interactivity and intelligently projects AR content across surfaces using a visible agent to help with learning by playing [98]. The initial study showed that children were involved actively with the projected character during a math exercise.

FenAR is an AR system following the Problem-Based Learning (PBL) approach [99]. The evaluation of this study indicated that integrating AR with PBL activities improved students’ learning achievement and increased their positive attitudes toward physics subjects. Alexandru Balog [100] examined the role of perceived enjoyment in students’ acceptance of an Augmented Reality Teaching Platform (ARTP) developed using Augmented Reality in School Environments (ARiSE) [101], with test cases in biology and chemistry. This research found perceived usefulness and ease of use to be extrinsic factors and perceived enjoyment to be an intrinsic factor for this new learning environment. This paper recommends an implementation of machine learning agents in Section 6.3.

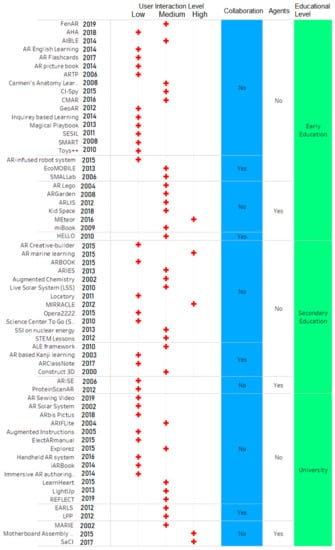

Table 1 shows details of AR learning studies according to their research objectives, educational levels, interaction capacity, collaboration capacity, and agency. “Subs.” means subjects, i.e., the participants of the specific study. Studies that have no evaluation are marked with (-). “Collab.” means collaboration capacity. The user interaction (UI) is categorized as Low, Medium, or High; Low means only placing 3D objects in the real environment, and High means a higher level of interaction such as hand interaction or gestures. The scale ranges from simple marker-based interaction to real-time touchless hand interaction. These findings are further visualized in Figure 3.

Figure 3.

Graphical representation of different AR applications according to their user interaction capacity, collaboration capacity, agents and educational level.

5. Main Insights and Future Research Agenda

Examining current and past projects based on educational level, domain, tracking, collaboration capacity, agents, and interaction level leads naturally to identifying specific future research areas. The AR application design requirements suggested in [116] include flexibility of content so that the teacher can adapt it to the children’s needs, guidance in exploration to maximize learning opportunities in a limited time, and attention to curriculum needs.

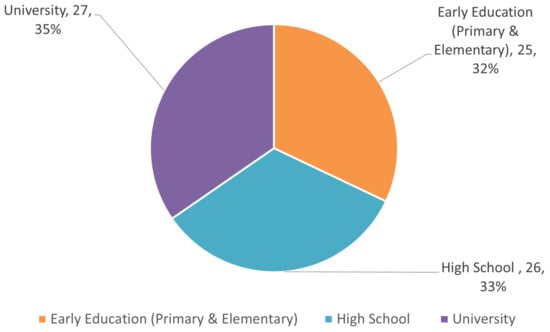

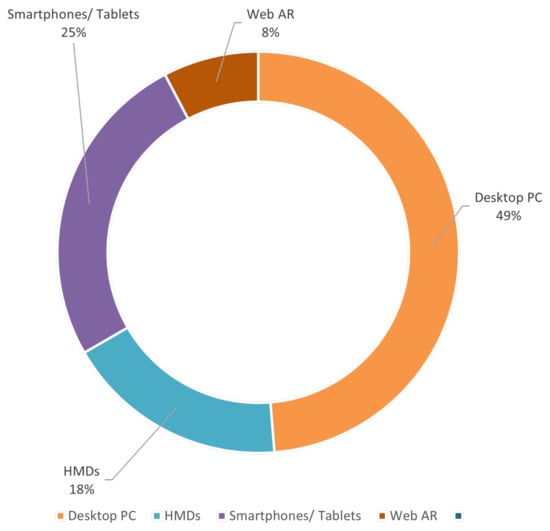

This analysis involves 25 studies from primary and elementary levels, 26 from secondary school levels, and 27 from university levels. In the device-based analysis, a desktop is used in 49% and tablets/smartphones, HMDs, laptops, and WebAR are used in 25%, 18%, and 8%, respectively.
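As a quick arithmetic check on how evenly the surveyed studies are spread across educational levels, the counts above can be turned into shares. This is a minimal sketch that assumes each study is counted under exactly one level, which the text does not state explicitly; the names `STUDY_COUNTS` and `level_shares` are illustrative.

```python
from typing import Dict

# Study counts per educational level, as reported in the text.
STUDY_COUNTS: Dict[str, int] = {
    "Early (Primary and Elementary)": 25,
    "Secondary (High School)": 26,
    "Tertiary (University)": 27,
}


def level_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each level's share of all studies, in percent (one decimal)."""
    total = sum(counts.values())
    return {level: round(100 * n / total, 1) for level, n in counts.items()}


shares = level_shares(STUDY_COUNTS)
for level, pct in shares.items():
    print(f"{level}: {pct}%")
```

Under that assumption the total is 78 studies, and the three levels account for roughly 32.1%, 33.3%, and 34.6% of them, which is consistent with the near-even distribution discussed in Section 5.1.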

5.1. Education Level

The analysis shows that AR has been tested and proved equally effective at three educational levels: early (Primary and Elementary School), secondary (High School), and tertiary education (University), as presented in Section 4. Furthermore, there is a trend toward AR use in medical education [117]; however, there is a lack of focus on technical or vocational evaluation [93] of its use in teaching. Figure 4 shows the distribution based on educational level.

Figure 4.

AR studies distribution according to educational level.

5.2. Domain

In early level education (Primary and Elementary schools), most of the studies use AR for alphabet learning, such as [69], vocabulary learning, or early level science topics, such as [28]. At the secondary level (High School) and tertiary level (University), it has been used as a learning enhancement resource for STEM subjects, as discussed in Section 4.3. STEM has emerging future opportunities in immersive learning technology. For topics or skills training where the actual material is unaffordable or impractical in a class setting, AR technology can be an effective resource for students.

5.3. Experiments Conducted to Evaluate AR Education

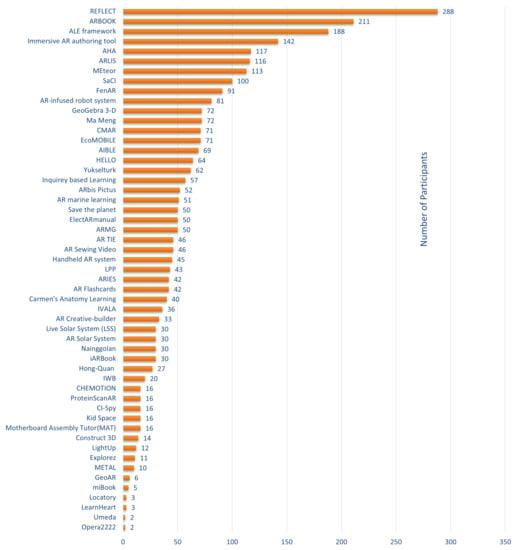

Most of the large-scale studies conducted experiments using a control group and an experimental group. The studies focus on whether AR increases students’ attention, relevance to the study topics, confidence, and satisfaction compared with traditional learning resources. Some studies are evaluated at a large scale, such as EARLS [11]. Figure 5 shows the scale of the experiments used in the evaluation of different studies (EARLS is excluded from the graph as an outlier).

Figure 5.

AR studies analysis according to the scale of experiments (Participants).

5.4. Libraries Used

GPS has been used in location-based learning applications. Vuforia is used as the tracking SDK in most of the studies, for example in [86]. ARKit, ARCore, ARFoundation, MRTK, and AR.js are the other main libraries used for tracking. These are compatible with a new series of high-end devices. Many custom-made solutions are used as well.

5.5. Devices

In previous studies, desktop PCs have been the major device for AR applications, followed by smartphones and HMDs [92]. With saturation, tablets and smartphones are now achieving higher adoption rates due to their portability. Specifically, in Section 4.3, there is a higher possibility of using a smartphone due to the availability of personal devices. Figure 6 shows the distribution based on devices.

Figure 6.

Distribution of display devices used in AR educational applications.

Smartphones and tablets are the most reliable devices for AR in education due to affordability, as the cost of good HMDs is much higher than that of smartphones. Usage is shifting from the legacy desktop toward smartphones and HMDs such as Magic Leap and HoloLens. In Section 6, recommendations are presented using two exemplar case studies: one using a desktop and the other using a smartphone.

5.6. Tracking

Most of the studies have used markers for tracking, implemented with Vuforia as in [86]. Recent studies have started using markerless tracking (plane tracking) with ARCore or ARKit, which allows users to run the application without a specified marker. However, markers remain important in situations that require high accuracy and some form of tangible interaction with virtual objects. Studies involving location-based learning use GPS, such as the field trip study [67]. For devices not supported by ARCore, ARFoundation with the ARCore XR Plugin is adopted to achieve a similar experience. This is important, as AR conducted in the classroom (Section 2.1) requires good lighting conditions, which are not always possible. Some studies focused on hand-tracking technology, using Leapmotion for gestures and Kinect for full-body tracking; these are presented further with recommendations in Section 6.

5.7. User Interaction

Very few studies in AR learning use hand tracking and gesture control. User interaction therefore offers vast opportunities for future applications. The use of Kinect for anatomy learning is a good example [51]. There are opportunities to discover new forms of interaction, to compare gesture-based with real-time hand interaction (adopted in Section 6), and to explore tactile learning. Leapmotion technology has not yet been widely explored in AR learning applications. Machine vision-based hand tracking on smartphones [118,119] is another recent opportunity to explore, although it is still going through testing stages to achieve stability.

5.8. Collaboration

There is still no significant focus on collaboration between students and teachers in an AR learning setting in the previous studies. However, it is a crucial aspect of AR learning, as outlined in Section 2.4. Furthermore, both formal and outside classroom environments can be enhanced by collaborative learning.

A few studies have attempted collaborative learning, such as an interactive simulation for learning astronomy [44], HELLO [71], EARLS [11], ARClassNote [79], and METAL [81]. This will be a critical future research area, as collaboration is an important need for remote learning. Remote learning setups need collaborative learning in which the teacher has access to students and students can collaborate with one another. This approach is further addressed in Section 6.6.

5.9. Agents

Of the studies discussed above, only a few have considered agents (as shown in Figure 3); these are presented in Section 4.11, such as Kid Space [98,99] for problem-based learning. Unity Machine Learning Agents are relatively new and have not been effectively implemented in any AR studies for education, as discussed in detail in Section 6.3. Experiments are required to demonstrate this logical next step in developing AR learning applications, where agents can enhance the learning process and hence its outcomes.

6. Highlighting Future Directions Using Prototype Case Studies

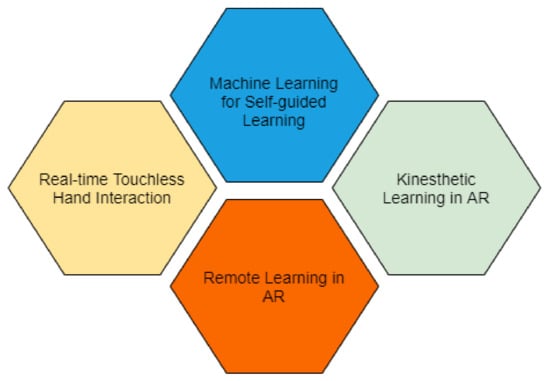

Figure 3 shows an evident lack of “High” level interaction and “Agency”. Based on this, the present section highlights future directions by creating prototype case study applications that illustrate the research gaps that need to be addressed. These research gaps, derived from Section 5.3 through to Section 5.9, include how to approach real-time touchless hand interaction, kinesthetic learning, machine learning agents, and remote learning components in AR learning applications.

The choice to illustrate these research gaps is influenced by the COVID-19 pandemic, which has disrupted education and forced people to rethink traditional e-learning approaches and devise new ones. This research takes four components into further investigation, as presented in Figure 7.

Figure 7.

Four core components of a future research approach in this area.

The worldwide adoption of the Suspending Classroom without Suspending Learning policy [120] has created a rush toward learning technologies, with a main focus on learning within the personal space. Furthermore, the spread of the virus through touch has created demand for touchless or contactless technologies, which are essential in digital transformation, including AR educational applications. Therefore, the future of this research leans toward finding new types of user interfaces, integrating agents to create more productive learning contexts, and developing new formats of learning pedagogies.

6.1. Real-Time Touchless Hand Interaction (Avoid Touching Devices)

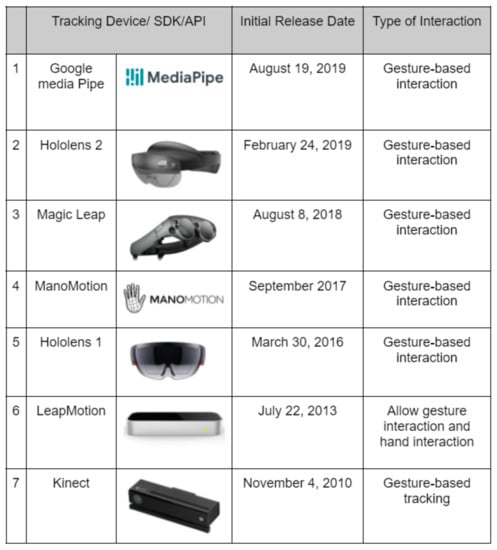

In previous studies, interaction in AR has been tested using tracking markers, voice, and gestures [74], as well as in other case studies presented in Section 4.3, but there is no research on real-time hand interaction, which can help to implement a practical kinesthetic learning approach. These research gaps tie into the discussions of the future direction of tracking in Section 5.6 and of user interaction in Section 5.7. In touchless hand interaction, there is nothing to hold in your hands, no buttons to push, and no need for a mouse, keyboard, or touch on the display screen. Interaction with the digital elements relies entirely on depth-sensing cameras, motion-sensing cameras, infrared technology, and machine learning algorithms.

Research studies using Kinect and Leapmotion have tested gesture-based touchless interaction with learning objects. Hololens provides gesture-based interaction with the 3D environment and has been used for vocabulary learning [76]. Kinect and Leapmotion work only with desktop systems, and Hololens is too costly to serve as a personalized learning solution. Learning spaces are shifting toward more personalized, affordable, and portable solutions, so smartphones can be the top priority for learning technology. The recent development of Google Mediapipe [121] and Manomotion for hand tracking on smartphones has opened new opportunities for touchless interaction on affordable devices. Touchless interaction through hand tracking is of two types: interaction with gestures and real-time hand interaction with virtual objects.
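The difference between the two types of touchless interaction can be illustrated with a minimal Python sketch. It is not taken from any of the SDKs named above: the fingertip coordinates are assumed to arrive as normalized 3D points from some hand tracker, and `is_pinch`, `is_touching`, `update_grab`, the `VirtualObject` class, and the 0.05 threshold are all hypothetical names and values chosen for illustration. A gesture is a discrete classification of the hand pose, whereas real-time hand interaction continuously couples the hand position to a virtual object.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]  # assumed normalized (x, y, z) fingertip position


@dataclass
class VirtualObject:
    """A virtual object approximated by a bounding sphere (illustrative only)."""
    center: Point
    radius: float


def is_pinch(thumb_tip: Point, index_tip: Point, threshold: float = 0.05) -> bool:
    """Gesture-based interaction: classify the pose as a discrete 'pinch' event."""
    return math.dist(thumb_tip, index_tip) < threshold


def is_touching(fingertip: Point, obj: VirtualObject) -> bool:
    """Real-time interaction: test whether a point lies inside the object's sphere."""
    return math.dist(fingertip, obj.center) <= obj.radius


def update_grab(obj: VirtualObject, thumb_tip: Point, index_tip: Point,
                threshold: float = 0.05) -> bool:
    """Move the object with the hand while it is pinched; called once per frame."""
    pinch_point = tuple((t + i) / 2 for t, i in zip(thumb_tip, index_tip))
    if is_pinch(thumb_tip, index_tip, threshold) and is_touching(pinch_point, obj):
        obj.center = pinch_point  # the object follows the hand in real time
        return True
    return False
```

In this sketch a gesture-only application would stop at `is_pinch` and trigger a predefined action, while a real-time application would call `update_grab` every frame so that the object tracks the hand.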

Figure 8 shows the different touchless interaction APIs and devices that give the user gesture-based interaction in an augmented environment. This demonstrates the current state of the art in devices, which was discussed previously in Section 5.7.

Figure 8.

Devices/APIs used in AR for interaction to allow hand tracking and gestures.

6.2. Kinesthetic Learning

Kinesthetic learning is a form of learning that allows the user to “learn by doing the task” [122] instead of reading, listening, or watching. In STEM subjects specifically, this learning approach is required to help students learn the concepts, as the distance learning mode poses many challenges for both learners and instructors. This is especially true for learners during COVID-19, when access to hands-on experiences is sometimes not possible due to social distancing requirements. When considering remote learning for technical and scientific topics, hands-on work is preferred for acquiring better knowledge; a kinesthetic learning approach with touchless hand interaction can bring this capability to AR learning. It can have a positive impact on learning and skills acquisition when integrated effectively. To proceed with the kinesthetic learning approach, real-time hand interaction with 3D objects is needed.

6.3. Machine Learning Agents for Self-Guided Learning

Learning is currently shifting toward independent or self-guided learning. Machine learning and artificial intelligence play a significant role in achieving this goal in e-learning solutions. As discussed in Section 5.9, machine learning agents can transform the future of learning if implemented intelligently in AR applications. In addition, artificial intelligence and human interaction can play a role by utilizing the collected data. To integrate machine learning agents into the proposed research, two possible use cases are taken into account:

- End User Trainer

- Self-Assessment

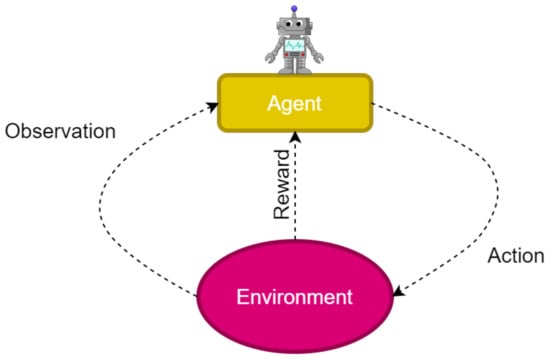

This research uses machine learning agents in Unity, which follow the reinforcement learning concept explained in Figure 9.

Figure 9.

Process flow of machine learning agent in the AR application, following the reinforcement learning.
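The observe, act, and reward loop of reinforcement learning can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the training setup used in the case studies: it substitutes tabular Q-learning for the neural-network trainers of Unity ML-Agents, and the three-part assembly order, the reward values of +1 and -1, and all function names are hypothetical.

```python
import random

PARTS = ["cpu", "ram", "gpu"]  # hypothetical correct assembly order


def step(state: int, action: int):
    """Toy environment: state = number of parts already placed.

    Placing the correct next part advances the state with reward +1;
    any other part leaves the state unchanged with reward -1.
    """
    if action == state:
        return state + 1, 1.0
    return state, -1.0


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    n = len(PARTS)
    q = [[0.0] * n for _ in range(n + 1)]  # q[state][action]; last row is terminal
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap the episode length
            if state == n:
                break
            if rng.random() < epsilon:
                action = rng.randrange(n)  # explore
            else:
                action = max(range(n), key=lambda a: q[state][a])  # exploit
            next_state, reward = step(state, action)
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Read the learned part order off the Q-table."""
    n = len(PARTS)
    return [PARTS[max(range(n), key=lambda a: q[s][a])] for s in range(n)]
```

Calling `greedy_policy(train())` returns the part that the trained agent would place at each step, which is the kind of learned behavior a trainer agent could demonstrate to a user.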

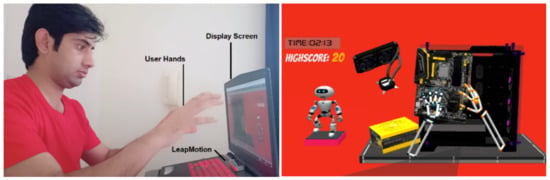

6.4. First Case Study—Learning PC Assembling

Following the recommendations made in Section 6, a practical approach is adopted to implement this concept. This first case study focuses on learning PC assembly for high school students as part of necessary computer science education. It builds on the motherboard assembly learning case study [92] presented in Section 4 by adding an interaction hand to interact with the virtual PC, thus allowing hands-on learning in the virtual environment. The technical components are the Unity 3D engine, Unity machine learning agents (ML-Agents), the Leapmotion hand-tracking SDK, the Leapmotion device, a hand interaction SDK, and 3D models.

Figure 10 demonstrates touchless hand interaction on a desktop PC using the Leapmotion device. This PC assembly case study follows a kinesthetic learning approach by letting the user perform PC assembly tasks on 3D PC parts through Leapmotion hand tracking.

Figure 10.

Touchless hand interaction for learning PC assembly using Leapmotion hand tracking.

This study uses real-time hand interaction and practices the “learning by doing” approach. It is developed for a Windows desktop PC, as the Leapmotion device is incompatible with Android devices. The virtual robot shown in Figure 10 serves as an engaging factor by providing different gestures as the user completes tasks. The ML-Agents are used to train the Neural Network (NN) model, which is later integrated into the application in two roles: first, as a user trainer based on the pre-trained models; second, as an aid to self-assessment in the testing phase, when users themselves interact with the system to virtually disassemble the PC.

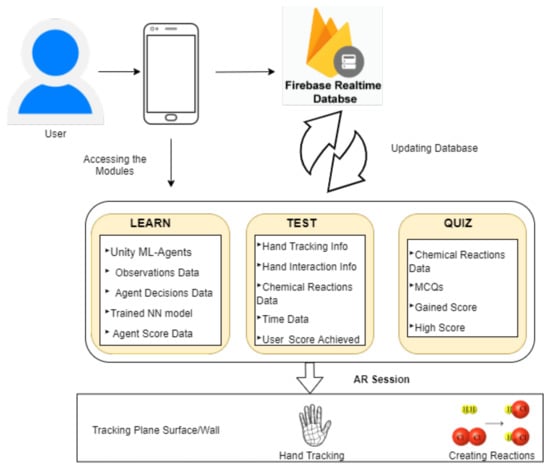

6.5. Second Case Study—Learning Chemical Reactions

Hand tracking on smartphones, which moves away from the desktop environment and HMDs, is a long-awaited technology that is now possible with Google Mediapipe and Manomotion using neural networks and machine learning algorithms. The virtual chemistry lab concept of this second case study is influenced by [62] and the STEM-related case studies presented in Section 4.7. Moving the display device from a desktop to a smartphone, this case study was implemented using the latest advances in the vision-based Manomotion SDK with ARFoundation and the ARCore XR Plugin. Manomotion provides real-time 2D and 3D hand tracking on the smartphone with minimal computing power and without any external hardware. The architecture diagram and learning flow are shown in Figure 11.

Figure 11.

Architecture diagram and working flow of recommended approach in second case study.

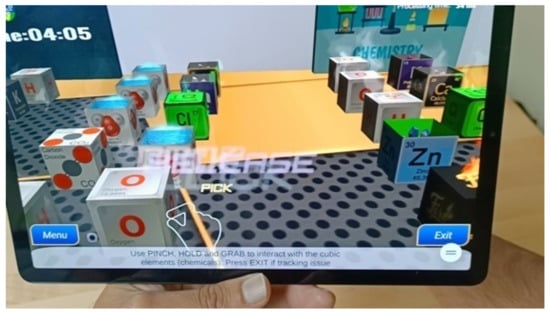

Figure 12 shows hand tracking on a smartphone and touchless hand interaction, which allows the user to create chemical reactions by interacting with cubic elements. The depth camera, a custom-made hand, and the defined gestures of Manomotion are combined to implement real-time hand interaction with the virtual objects (chemicals/elements). Real-time hand interaction is natural and is a great solution for health-centric digital interaction. In this case study, machine learning agents are used to implement the user trainer and self-assessment learning scenarios following the flow explained in Figure 9.

Figure 12.

Touchless hand interaction for learning chemical reactions using hand tracking (real-time hand interaction) in smartphones.

The ML agent acts as a trainer for users by following previously trained models that help users learn the chemical reactions and then create the same reactions in the next module, where agents help with self-assessment. To train the agents, data on user hand interaction with the models are collected as behaviors, and success in creating reactions is used to assign rewards. The trained Neural Network (NN) models are integrated into the AR application in Unity, where they work as a trainer module for users before they learn by interacting with 3D objects.

As explained in Figure 11, users will start with the LEARN module, where machine learning agents will help users learn to create chemical reactions using the previously trained NN model. In the next step, the process moves into the TEST module, where hand tracking is enabled using Manomotion technology and allows users to create chemical reactions using hand interaction. After completing the TEST module, the user enters the QUIZ module, which has all assessment-related MCQs that complete a learning cycle from assisted learning to hands-on learning and assessment.
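The LEARN, TEST, and QUIZ sequence described above behaves like a small state machine. The following Python sketch is one possible way to model it and is not the Unity implementation of the case study; the `LearningCycle` class, the `DONE` state, and the `quiz_score` helper are hypothetical names introduced here for illustration.

```python
from enum import Enum


class Module(Enum):
    LEARN = "learn"  # agent-assisted demonstration
    TEST = "test"    # hands-on practice with hand tracking
    QUIZ = "quiz"    # multiple-choice assessment
    DONE = "done"    # hypothetical end state of one learning cycle


# Fixed order of the modules within one learning cycle.
NEXT = {
    Module.LEARN: Module.TEST,
    Module.TEST: Module.QUIZ,
    Module.QUIZ: Module.DONE,
}


class LearningCycle:
    """Tracks which module the user is in and which ones were completed."""

    def __init__(self):
        self.current = Module.LEARN
        self.history = []

    def complete_current(self) -> Module:
        """Mark the current module as finished and advance to the next one."""
        if self.current is Module.DONE:
            raise RuntimeError("learning cycle already finished")
        self.history.append(self.current)
        self.current = NEXT[self.current]
        return self.current


def quiz_score(answers, answer_key) -> float:
    """Fraction of multiple-choice answers that match the answer key."""
    if not answer_key or len(answers) != len(answer_key):
        raise ValueError("answers and answer_key must be non-empty and equal in length")
    correct = sum(a == k for a, k in zip(answers, answer_key))
    return correct / len(answer_key)
```

Keeping the transition table separate from the class makes it straightforward to add or reorder modules, for example to repeat the TEST module after a low quiz score.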

6.6. Empowering Remote Learning in AR

The landscape of education has changed dramatically since the COVID-19 pandemic started, which prompted a shift toward blended learning. Through AR applications, learning becomes dynamic and user-centered. All of the previous studies in AR were implemented in the context of the classroom environment; AR has not yet been explored on personal devices outside the classroom with an organized system such as ARETE [123]. The shift in the current learning scenario has created a need to implement new types of learning pedagogies that use the collaboration factor, as mentioned in Section 5.8. In addition, the concept of remote learning has been adopted by integrating a Firebase database. The case study in Section 6.5 is developed for smartphones and involves remote user authentication and post-test learning assessment integrated with a Google Firebase database, which helps to control and access the learning outcomes of the users.

This approach allows a remote evaluation to be conducted, as explained in [124] for VR applications for skill training. This design would start to address the research gap highlighted in Section 5.3. The application can be extended into a collaboration and authoring tool between students and the instructor, and it further allows for large-scale remote evaluation with hundreds if not thousands of participants. COVID-19 has led to a global acceleration in remote learning adoption as the realization sets in that this is the ‘new normal’ [125].
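A minimal Python sketch can illustrate how post-test results from many remote participants might be recorded and summarized. It makes no Firebase calls: a plain dictionary stands in for the remote database, and the record fields (`user_id`, `module`, `score`, `max_score`) and the function names are assumptions made for this example rather than the schema used in the case study.

```python
import json
from statistics import mean


def make_record(user_id: str, module: str, score: int, max_score: int) -> dict:
    """Build a JSON-serializable assessment record (hypothetical schema)."""
    if max_score <= 0 or not 0 <= score <= max_score:
        raise ValueError("score must lie between 0 and a positive max_score")
    return {"user_id": user_id, "module": module,
            "score": score, "max_score": max_score}


def store(db: dict, record: dict) -> None:
    """Append the record under its user; `db` stands in for a remote database."""
    db.setdefault(record["user_id"], []).append(record)


def summarize(db: dict) -> dict:
    """Aggregate outcomes across all participants for a remote evaluation."""
    per_user = [mean(r["score"] / r["max_score"] for r in records)
                for records in db.values()]
    return {
        "participants": len(per_user),
        "mean_score": mean(per_user) if per_user else None,
    }


if __name__ == "__main__":
    db = {}
    store(db, make_record("student-1", "QUIZ", 8, 10))
    store(db, make_record("student-2", "QUIZ", 6, 10))
    print(json.dumps(summarize(db)))
```

Because each record is keyed by an authenticated user, the same summary function would scale from a handful of students to the large participant numbers mentioned above, subject to the limits of whatever database actually backs it.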

6.7. Limitations

The main limitation of the study is that it does not follow a systematic review approach, because intelligent agents and kinesthetic learning using hand-tracking technology are not widely adopted yet. Hence, this study considers only those AR studies that present new ideas in interaction and in enhancing learning capacity. Secondly, we use only “Augmented Reality”, and not “Mixed Reality”, as a keyword to find the research items, because this study is specifically planned for AR only.

AR has been applied in education for visualization, annotation, and storytelling in STEM and early education. Still, there is a lack of intelligent agents, hand tracking, and especially real-time hand interaction, which is needed for personalized and hands-on learning of STEM subjects in resource-constrained environments. Very few studies have performed evaluations at a large scale [11]. Despite the listed advantages, certain drawbacks should be considered when building educational solutions with AR. Some teachers may not be able to put these new technologies into practice due to a lack of necessary skills. There is a need for instructors who are willing to engage with new technologies and for educational institutions to adapt their infrastructure to applications of AR in the classroom. Hardware availability is a further limitation on the uptake of AR in schools. Still, it is time for governments and policymakers to consider investing in AR devices, given their long-term impact on knowledge retention and students’ enhanced engagement with educational content and activities.

7. Conclusions

From STEM to foreign language learning, immersive technologies have proven to be effective and result-oriented tools in creating more interactive learning environments. In particular, AR enables more sophisticated, interactive, and discovery-based forms of learning. Of course, the portability and compatibility of the contents between devices matter; however, practically, it is impossible to provide the AR contents with the same quality on all devices.

The next logical step in AR-based learning, identified from reviewing the current literature, is the development of applications that can enable personalized learning materials for both students and teachers. Future technology challenges are user acceptance, proving its educational effect, and further development of the frameworks used to build these innovative applications. As the cost of hardware and software decreases, AR technology will become more affordable, thus allowing it to become widespread at all educational levels. Based on previous studies and current progress in technology, AR can produce supportive results in STEM education by reducing the cognitive load in learning. Virtual lab-based practical learning, where augmented objects can fill the need for physical material, allows the user to “learn by doing” where learning material in physical form is unavailable.

The investigated future research areas have been demonstrated in two STEM-related case studies, which will be advanced in future work and evaluated in terms of usability. Hand interaction technology combined with kinesthetic learning pedagogy can be integrated with multi-sensory haptic feedback to support our efforts toward realism and to enter the metaverse as a collaborative learning approach.

Author Contributions

Conceptualization, M.Z.I. and A.G.C.; methodology, M.Z.I.; software, M.Z.I.; formal analysis, M.Z.I.; investigation, M.Z.I.; resources, A.G.C.; data curation, M.Z.I.; writing—original draft preparation, M.Z.I.; writing—review and editing, A.G.C. and E.M.; visualization, M.Z.I.; supervision, A.G.C.; project administration, A.G.C.; funding acquisition, A.G.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by University College Dublin, Ireland and Beijing-Dublin International College (BDIC), Beijing. The APC was funded by BDIC.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mangina, E. 3D learning objects for augmented/virtual reality educational ecosystems. In Proceedings of the 2017 23rd International Conference on Virtual System & Multimedia (VSMM), Dublin, Ireland, 31 October–2 November 2017; IEEE: New York, NY, USA, 2017; pp. 1–6.

- Cárdenas-Robledo, L.A.; Peña-Ayala, A. Ubiquitous learning: A systematic review. Telemat. Inform. 2018, 35, 1097–1132.

- Marques, B.; Silva, S.S.; Alves, J.; Araujo, T.; Dias, P.M.; Santos, B.S. A conceptual model and taxonomy for collaborative augmented reality. IEEE Trans. Vis. Comput. Graph. 2021, 14, 1–18.

- Martins, N.C.; Marques, B.; Alves, J.; Araújo, T.; Dias, P.; Santos, B.S. Augmented reality situated visualization in decision-making. Multimed. Tools Appl. 2022, 81, 14749–14772.

- Avila-Garzon, C.; Bacca-Acosta, J.; Duarte, J.; Betancourt, J. Augmented Reality in Education: An Overview of Twenty-Five Years of Research. Contemp. Educ. Technol. 2021, 13, 302.

- Hughes, C.E.; Stapleton, C.B.; Hughes, D.E.; Smith, E.M. Mixed reality in education, entertainment, and training. IEEE Comput. Graph. Appl. 2005, 25, 24–30.

- Georgiou, Y.; Kyza, E.A. Relations between student motivation, immersion and learning outcomes in location-based augmented reality settings. Comput. Hum. Behav. 2018, 89, 173–181.

- Mystakidis, S.; Christopoulos, A.; Pellas, N. A systematic mapping review of augmented reality applications to support STEM learning in higher education. Educ. Inf. Technol. 2021, 13, 1–15.

- Akçayır, M.; Akçayır, G. Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educ. Res. Rev. 2017, 20, 1–11.

- Antonioli, M.; Blake, C.; Sparks, K. Augmented Reality Applications in Education. J. Technol. Stud. 2014, 40.

- Hsiao, K.F.; Chen, N.S.; Huang, S.Y. Learning while exercising for science education in augmented reality among adolescents. Interact. Learn. Environ. 2012, 20, 331–349.

- Sirakaya, M.; Alsancak Sirakaya, D. Trends in Educational Augmented Reality Studies: A Systematic Review. Malays. Online J. Educ. Technol. 2018, 6, 60–74.

- Pellas, N.; Fotaris, P.; Kazanidis, I.; Wells, D. Augmenting the learning experience in primary and secondary school education: A systematic review of recent trends in augmented reality game-based learning. Virtual Real. 2019, 23, 329–346.

- Nesenbergs, K.; Abolins, V.; Ormanis, J.; Mednis, A. Use of augmented and Virtual Reality in remote higher education: A systematic umbrella review. Educ. Sci. 2021, 11, 8.

- Sırakaya, M.; Alsancak Sırakaya, D. Augmented reality in STEM education: A systematic review. Interact. Learn. Environ. 2020, 1–14.

- Ju, W.; Nickell, S.; Eng, K.; Nass, C. Influence of colearner agent behavior on learner performance and attitudes. In Proceedings of the CHI’05 Extended Abstracts on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; pp. 1509–1512.

- Maldonado, H.; Lee, J.E.R.; Brave, S.; Nass, C.; Nakajima, H.; Yamada, R.; Iwamura, K.; Morishima, Y. We learn better together: Enhancing elearning with emotional characters. In Computer Supported Collaborative Learning 2005: The Next 10 Years! Routledge: London, UK, 2017; pp. 408–417.

- Campbell, A.G.; Stafford, J.W.; Holz, T.; O’Hare, G.M. Why, when and how to use augmented reality agents (AuRAs). Virtual Real. 2014, 18, 139–159.

- Yuen, S.C.Y.; Yaoyuneyong, G.; Johnson, E. Augmented reality: An overview and five directions for AR in education. J. Educ. Technol. Dev. Exch. (JETDE) 2011, 4, 11.

- Chang, G.; Morreale, P.; Medicherla, P. Applications of augmented reality systems in education. In Proceedings of the Society for Information Technology & Teacher Education International Conference, California, CA, USA, 3 January–29 March 2010; Association for the Advancement of Computing in Education (AACE): Chesapeake, VA, USA, 2010; pp. 1380–1385.

- Koutromanos, G.; Mavromatidou, E. Augmented Reality Books: What Student Teachers Believe About Their Use in Teaching. In Research on E-Learning and ICT in Education; Springer: Berlin, Germany, 2021; pp. 75–91.

- Nguyen, V.T.; Jung, K.; Dang, T. Creating virtual reality and augmented reality development in classroom: Is it a hype? In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), San Diego, CA, USA, 9–11 December 2019; IEEE: New York, NY, USA, 2019; pp. 212–2125.

- Asai, K.; Kobayashi, H.; Kondo, T. Augmented instructions—A fusion of augmented reality and printed learning materials. In Proceedings of the 2005 Fifth IEEE International Conference on Advanced Learning Technologies, Kaohsiung, Taiwan, 5–8 July 2005; IEEE: New York, NY, USA, 2005; pp. 213–215.

- Oh, S.; Woo, W. ARGarden: Augmented edutainment system with a learning companion. In Transactions on Edutainment I; Springer: Berlin, Germany, 2008; pp. 40–50.

- Freitas, R.; Campos, P. SMART: A SysteM of Augmented Reality for Teaching 2nd grade students. In Proceedings of the 22nd British HCI Group Annual Conference on People and Computers: Culture, Creativity, Interaction-Volume 2, Liverpool, UK, 1–5 September 2008; BCS Learning & Development Ltd.: Swindon, UK, 2008; pp. 27–30.

- Simeone, L.; Iaconesi, S. Toys++ ar embodied agents as tools to learn by building. In Proceedings of the 2010 10th IEEE International Conference on Advanced Learning Technologies, Sousse, Tunisia, 5–7 July 2010; IEEE: New York, NY, USA, 2010; pp. 649–650.

- Tomi, A.B.; Rambli, D.R.A. An interactive mobile augmented reality magical playbook: Learning number with the thirsty crow. Procedia Comput. Sci. 2013, 25, 123–130.

- Fleck, S.; Simon, G.; Bastien, J.C. [Poster] AIBLE: An inquiry-based augmented reality environment for teaching astronomical phenomena. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality-Media, Art, Social Science, Humanities and Design (ISMAR-MASH’D), Munich, Germany, 10–12 September 2014; IEEE: New York, NY, USA, 2014; pp. 65–66.

- Chen, C.M.; Tsai, Y.N. Interactive augmented reality system for enhancing library instruction in elementary schools. Comput. Educ. 2012, 59, 638–652.

- Cheng, K.H.; Tsai, C.C. Children and parents’ reading of an augmented reality picture book: Analyses of behavioral patterns and cognitive attainment. Comput. Educ. 2014, 72, 302–312.

- Liarokapis, F.; Petridis, P.; Lister, P.F.; White, M. Multimedia augmented reality interface for e-learning (MARIE). World Trans. Eng. Technol. Educ. 2002, 1, 173–176.

- Dias, A. Technology enhanced learning and augmented reality: An application on multimedia interactive books. Int. Bus. Econ. Rev. 2009, 1, 103–110.

- Wojciechowski, R.; Cellary, W. Evaluation of learners’ attitude toward learning in ARIES augmented reality environments. Comput. Educ. 2013, 68, 570–585.

- Wei, X.; Weng, D.; Liu, Y.; Wang, Y. Teaching based on augmented reality for a technical creative design course. Comput. Educ. 2015, 81, 221–234.

- Margetis, G.; Koutlemanis, P.; Zabulis, X.; Antona, M.; Stephanidis, C. A smart environment for augmented learning through physical books. In Proceedings of the 2011 IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011; IEEE: New York, NY, USA, 2011; pp. 1–6.

- Han, J.; Jo, M.; Hyun, E.; So, H.J. Examining Young Children’s Perception toward Augmented Reality-Infused Dramatic Play. Educ. Technol. Res. Dev. 2015, 63, 455–474.

- Chen, C.H.; Chou, Y.Y.; Huang, C.Y. An Augmented-Reality-Based Concept Map to Support Mobile Learning for Science. Asia-Pac. Educ. Res. 2016, 25, 567–578.

- Chen, Y.C. A study of comparing the use of augmented reality and physical models in chemistry education. In Proceedings of the 2006 ACM International Conference on Virtual Reality Continuum and Its Applications, Hong Kong, China, 14 June–17 April 2006; ACM: New York, NY, USA, 2006; pp. 369–372.

- Woźniak, M.P.; Lewczuk, A.; Adamkiewicz, K.; Józiewicz, J.; Malaya, M.; Ladonski, P. ARchemist: Aiding Experimental Chemistry Education Using Augmented Reality Technology. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–6.

- Al-Khalifa, H.S. CHEMOTION: A gesture based chemistry virtual laboratory with leap motion. Comput. Appl. Eng. Educ. 2017, 25, 961–976.

- Shelton, B.E.; Hedley, N.R. Using augmented reality for teaching earth-sun relationships to undergraduate geography students. In Proceedings of the First IEEE International Workshop Augmented Reality Toolkit, Darmstadt, Germany, 29 September 2002; IEEE: New York, NY, USA, 2002; Volume 8.

- Lindner, C.; Rienow, A.; Jürgens, C. Augmented Reality applications as digital experiments for education—An example in the Earth-Moon System. Acta Astronaut. 2019, 161, 66–74.

- Sin, A.K.; Zaman, H.B. Live Solar System (LSS): Evaluation of an Augmented Reality book-based educational tool. In Proceedings of the 2010 International Symposium on Information Technology, Tokyo, Japan, 26–29 October 2010; IEEE: New York, NY, USA, 2010; Volume 1, pp. 1–6.

- Lindgren, R.; Tscholl, M.; Wang, S.; Johnson, E. Enhancing learning and engagement through embodied interaction within a mixed reality simulation. Comput. Educ. 2016, 95, 174–187.

- Nickels, S.; Sminia, H.; Mueller, S.C.; Kools, B.; Dehof, A.K.; Lenhof, H.P.; Hildebrandt, A. ProteinScanAR-An augmented reality web application for high school education in biomolecular life sciences. In Proceedings of the 2012 16th International Conference on Information Visualisation, Montpellier, France, 11–13 July 2012; IEEE: New York, NY, USA, 2012; pp. 578–583.

- Buchholz, H.; Brosda, C.; Wetzel, R. Science center to go: A mixed reality learning environment of miniature exhibits. In Proceedings of the “Learning with ATLAS@ CERN” Workshops Inspiring Science Learning EPINOIA, Rethymno, Greece, 25–31 July 2010; pp. 85–96.

- Juan, C.; Beatrice, F.; Cano, J. An augmented reality system for learning the interior of the human body. In Proceedings of the 2008 Eighth IEEE International Conference on Advanced Learning Technologies, Santander, Spain, 1–5 July 2008; IEEE: New York, NY, USA, 2008; pp. 186–188.

- Barrow, J.; Forker, C.; Sands, A.; O’Hare, D.; Hurst, W. Augmented reality for enhancing life science education. In Proceedings of the 2019 VISUAL, Rome, Italy, 30 June–4 July 2019; pp. 7–12.

- Blum, T.; Kleeberger, V.; Bichlmeier, C.; Navab, N. mirracle: An augmented reality magic mirror system for anatomy education. In Proceedings of the 2012 IEEE Virtual Reality Workshops (VRW), Costa Mesa, CA, USA, 4–8 March 2012; IEEE: New York, NY, USA, 2012; pp. 115–116.

- Ma, M.; Fallavollita, P.; Seelbach, I.; Von Der Heide, A.M.; Euler, E.; Waschke, J.; Navab, N. Personalized augmented reality for anatomy education. Clin. Anat. 2016, 29, 446–453.

- Meng, M.; Fallavollita, P.; Blum, T.; Eck, U.; Sandor, C.; Weidert, S.; Waschke, J.; Navab, N. Kinect for interactive AR anatomy learning. In Proceedings of the 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Adelaide, SA, Australia, 1–4 October 2013; IEEE: New York, NY, USA, 2013; pp. 277–278.

- Barmaki, R.; Yu, K.; Pearlman, R.; Shingles, R.; Bork, F.; Osgood, G.M.; Navab, N. Enhancement of Anatomical Education Using Augmented Reality: An Empirical Study of Body Painting. Anat. Sci. Educ. 2019, 12, 599–609.

- Nainggolan, F.L.; Siregar, B.; Fahmi, F. Anatomy learning system on human skeleton using Leap Motion Controller. In Proceedings of the 2016 3rd International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 15–17 August 2016; IEEE: New York, NY, USA, 2016; pp. 465–470.

- Umeda, R.; Seif, M.A.; Higa, H.; Kuniyoshi, Y. A medical training system using augmented reality. In Proceedings of the 2017 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 24–26 November 2017; IEEE: New York, NY, USA, 2017; pp. 146–149.

- Liarokapis, F.; Mourkoussis, N.; White, M.; Darcy, J.; Sifniotis, M.; Petridis, P.; Basu, A.; Lister, P.F. Web3D and augmented reality to support engineering education. World Trans. Eng. Technol. Educ. 2004, 3, 11–14.

- Thornton, T.; Ernst, J.V.; Clark, A.C. Augmented Reality as a Visual and Spatial Learning Tool in Technology Education. Technol. Eng. Teach. 2012, 71, 18–21.

- Enyedy, N.; Danish, J.A.; Delacruz, G.; Kumar, M. Learning physics through play in an augmented reality environment. Int. J. Comput.-Support. Collab. Learn. 2012, 7, 347–378.

- Chan, J.; Pondicherry, T.; Blikstein, P. LightUp: An augmented, learning platform for electronics. In Proceedings of the 2013 12th International Conference on Interaction Design and Children, New York, NY, USA, 24–27 June 2013.

- Chang, H.Y.; Wu, H.K.; Hsu, Y.S. Integrating a mobile augmented reality activity to contextualize student learning of a socioscientific issue. Br. J. Educ. Technol. 2013, 44, E95–E99.

- Martín-Gutiérrez, J.; Fabiani, P.; Benesova, W.; Meneses, M.D.; Mora, C.E. Augmented reality to promote collaborative and autonomous learning in higher education. Comput. Hum. Behav. 2015, 51, 752–761.

- Chang, S.C.; Hwang, G.J. Impacts of an augmented reality-based flipped learning guiding approach on students’ scientific project performance and perceptions. Comput. Educ. 2018, 125, 226–239.

- Fjeld, M.; Voegtli, B.M. Augmented chemistry: An interactive educational workbench. In Proceedings of the 2002 International Symposium on Mixed and Augmented Reality, Darmstadt, Germany, 30 September–1 October 2002; IEEE: New York, NY, USA, 2002; pp. 259–321. [Google Scholar]

- Birchfield, D.; Megowan-Romanowicz, C. Earth science learning in SMALLab: A design experiment for mixed reality. Int. J. Comput.-Support. Collab. Learn. 2009, 4, 403–421. [Google Scholar] [CrossRef]

- Probst, A.; Ebner, M. Introducing Augmented Reality at Secondary Colleges of Engineering. In DS 93: Proceedings of the 20th International Conference on Engineering and Product Design Education (E&PDE 2018), Dyson School of Engineering, Imperial College, London, UK, 6–7 September 2018; The Design Society: Glasgow, UK, 2018; pp. 714–719. [Google Scholar]

- Kaufmann, H. Collaborative Augmented Reality in Education; Institute of Software Technology and Interactive Systems, Vienna University of Technology: Vienna, Austria, 2003. [Google Scholar]

- Kirner, T.G.; Reis, F.M.V.; Kirner, C. Development of an interactive book with augmented reality for teaching and learning geometric shapes. In Proceedings of the 7th Iberian Conference on Information Systems and Technologies (CISTI 2012), Madrid, Spain, 20–23 June 2012; IEEE: New York, NY, USA, 2012; pp. 1–6. [Google Scholar]

- Liestøl, G.; Smørdal, O.; Erstad, O. STEM—Learning by means of mobile augmented reality. Exploring the potential of augmenting classroom learning with situated simulations and practical activities on location. In Edulearn15 Proceedings; The International Academy of Technology, Education and Development: Valencia, Spain, 2015. [Google Scholar]

- Webel, S.; Bockholt, U.; Engelke, T.; Gavish, N.; Olbrich, M.; Preusche, C. An augmented reality training platform for assembly and maintenance skills. Robot. Auton. Syst. 2013, 61, 398–403. [Google Scholar] [CrossRef]

- Safar, A.H.; Al-Jafar, A.A.; Al-Yousefi, Z.H. The Effectiveness of Using Augmented Reality Apps in Teaching the English Alphabet to Kindergarten Children: A Case Study in the State of Kuwait. Eurasia J. Math. Sci. Technol. Educ. 2017, 13, 417–440. [Google Scholar] [CrossRef]

- Wagner, D.; Barakonyi, I. Augmented reality kanji learning. In Proceedings of the 2nd IEEE/ACM International Symposium on Mixed and Augmented Reality, Tokyo, Japan, 10 October 2003; IEEE Computer Society: Washington, DC, USA, 2003; p. 335. [Google Scholar]

- Liu, T.Y.; Chu, Y.L. Using ubiquitous games in an English listening and speaking course: Impact on learning outcomes and motivation. Comput. Educ. 2010, 55, 630–643. [Google Scholar] [CrossRef]

- Santos, M.E.C.; Taketomi, T.; Yamamoto, G.; Rodrigo, M.M.T.; Sandor, C.; Kato, H. Augmented reality as multimedia: The case for situated vocabulary learning. Res. Pract. Technol. Enhanc. Learn. 2016, 11, 4. [Google Scholar] [CrossRef]

- Radu, I. Why should my students use AR? A comparative review of the educational impacts of augmented-reality. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; IEEE: New York, NY, USA, 2012; pp. 313–314. [Google Scholar]

- Dalim, C.S.C.; Dey, A.; Piumsomboon, T.; Billinghurst, M.; Sunar, S. TeachAR: An interactive augmented reality tool for teaching basic English to non-native children. In Proceedings of the 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Merida, Mexico, 19–23 September 2016; IEEE: New York, NY, USA, 2016; pp. 82–86. [Google Scholar]

- Yukselturk, E.; Altıok, S.; Başer, Z. Using game-based learning with kinect technology in foreign language education course. J. Educ. Technol. Soc. 2018, 21, 159–173. [Google Scholar]

- Ibrahim, A.; Huynh, B.; Downey, J.; Höllerer, T.; Chun, D.; O’Donovan, J. ARbis Pictus: A study of vocabulary learning with augmented reality. IEEE Trans. Vis. Comput. Graph. 2018, 24, 2867–2874. [Google Scholar] [CrossRef] [PubMed]

- Perry, B. Gamifying French Language Learning: A case study examining a quest-based, augmented reality mobile learning-tool. Procedia-Soc. Behav. Sci. 2015, 174, 2308–2315. [Google Scholar] [CrossRef]

- Birchfield, D.; Ciufo, T.; Minyard, G. SMALLab: A mediated platform for education. In Proceedings of the ACM SIGGRAPH 2006 Educators Program, Boston, MA, USA, 30 July–3 August 2006; ACM: New York, NY, USA, 2006; p. 33. [Google Scholar]

- Choi, J.; Yoon, B.; Jung, C.; Woo, W. ARClassNote: Augmented Reality Based Remote Education Solution with Tag Recognition and Shared Hand-Written Note. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; IEEE: New York, NY, USA, 2017; pp. 303–309. [Google Scholar]

- Specht, M.; Ternier, S.; Greller, W. Dimensions of mobile augmented reality for learning: A first inventory. J. Res. Educ. Technol. 2011, 7, 117–127. [Google Scholar]

- Pan, X.; Zheng, M.; Xu, X.; Campbell, A.G. Knowing Your Student: Targeted Teaching Decision Support Through Asymmetric Mixed Reality Collaborative Learning. IEEE Access 2021, 9, 164742–164751. [Google Scholar] [CrossRef]

- Chiang, T.H.; Yang, S.J.; Hwang, G.J. Students’ online interactive patterns in augmented reality-based inquiry activities. Comput. Educ. 2014, 78, 97–108. [Google Scholar] [CrossRef]

- Kamarainen, A.M.; Metcalf, S.; Grotzer, T.; Browne, A.; Mazzuca, D.; Tutwiler, M.S.; Dede, C. EcoMOBILE: Integrating augmented reality and probeware with environmental education field trips. Comput. Educ. 2013, 68, 545–556. [Google Scholar] [CrossRef]

- Singh, G.; Bowman, D.A.; Hicks, D.; Cline, D.; Ogle, J.T.; Johnson, A.; Zlokas, R.; Tucker, T.; Ragan, E.D. CI-spy: Designing a mobile augmented reality system for scaffolding historical inquiry learning. In Proceedings of the 2015 IEEE International Symposium on Mixed and Augmented Reality-Media, Art, Social Science, Humanities and Design, Fukuoka, Japan, 29 September–3 October 2015; IEEE: New York, NY, USA, 2015; pp. 9–14. [Google Scholar]

- Lu, S.J.; Liu, Y.C. Integrating augmented reality technology to enhance children’s learning in marine education. Environ. Educ. Res. 2015, 21, 525–541. [Google Scholar] [CrossRef]

- Bazzaza, M.W.; Al Delail, B.; Zemerly, M.J.; Ng, J.W. iARBook: An immersive augmented reality system for education. In Proceedings of the 2014 International Conference on Teaching, Assessment and Learning (TALE), Wellington, New Zealand, 8–10 December 2014; IEEE: New York, NY, USA, 2014; pp. 495–498. [Google Scholar]

- Luna, J.; Treacy, R.; Hasegawa, T.; Campbell, A.; Mangina, E. Words Worth Learning-Augmented Literacy Content for ADHD Students. In Proceedings of the 2018 IEEE Games, Entertainment, Media Conference (GEM), Galway, Ireland, 15–17 August 2018; IEEE: New York, NY, USA, 2018; pp. 1–9. [Google Scholar]

- Mangina, E.; Chiazzese, G.; Hasegawa, T. AHA: ADHD Augmented (Learning Environment). In Proceedings of the 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wollongong, NSW, Australia, 4–7 December 2018; IEEE: New York, NY, USA, 2018; pp. 774–777. [Google Scholar]

- Chiazzese, G.; Mangina, E.; Chifari, A.; Merlo, G.; Treacy, R.; Tosto, C. The AHA Project: An Evidence-Based Augmented Reality Intervention for the Improvement of Reading and Spelling Skills in Children with ADHD. In Proceedings of the International Conference on Games and Learning Alliance, Palermo, Italy, 5–7 December 2018; Springer: Berlin, Germany, 2018; pp. 436–439. [Google Scholar]

- Chauhan, J.; Taneja, S.; Goel, A. Enhancing MOOC with augmented reality, adaptive learning and gamification. In Proceedings of the 2015 IEEE 3rd International Conference on MOOCs, Innovation and Technology in Education (MITE), Amritsar, India, 1–2 October 2015; IEEE: New York, NY, USA, 2015; pp. 348–353. [Google Scholar]

- Campbell, A.G.; Santiago, K.; Hoo, D.; Mangina, E. Future mixed reality educational spaces. In Proceedings of the 2016 Future Technologies Conference (FTC), San Francisco, CA, USA, 6–7 December 2016; IEEE: New York, NY, USA, 2016; pp. 1088–1093. [Google Scholar]

- Westerfield, G.; Mitrovic, A.; Billinghurst, M. Intelligent Augmented Reality Training for Motherboard Assembly. Int. J. Artif. Intell. Educ. 2015, 25, 157–172. [Google Scholar] [CrossRef]

- Yip, J.; Wong, S.H.; Yick, K.L.; Chan, K.; Wong, K.H. Improving quality of teaching and learning in classes by using augmented reality video. Comput. Educ. 2019, 128, 88–101. [Google Scholar] [CrossRef]

- Dengel, A.; Iqbal, M.Z.; Grafe, S.; Mangina, E. A Review on Augmented Reality Authoring Toolkits for Education. Front. Virtual Real. 2022, 3, 798032. [Google Scholar] [CrossRef]

- Jee, H.K.; Lim, S.; Youn, J.; Lee, J. An augmented reality-based authoring tool for E-learning applications. Multimed. Tools Appl. 2014, 68, 225–235. [Google Scholar] [CrossRef]

- Barakonyi, I.; Psik, T.; Schmalstieg, D. Agents that talk and hit back: Animated agents in augmented reality. In Proceedings of the 3rd IEEE/ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; IEEE Computer Society: Washington, DC, USA, 2004; pp. 141–150. [Google Scholar]

- Chamba-Eras, L.; Aguilar, J. Augmented Reality in a Smart Classroom—Case Study: SaCI. IEEE Rev. Iberoam. Tecnol. Del Aprendiz. 2017, 12, 165–172. [Google Scholar] [CrossRef]

- Anderson, G.J.; Panneer, S.; Shi, M.; Marshall, C.S.; Agrawal, A.; Chierichetti, R.; Raffa, G.; Sherry, J.; Loi, D.; Durham, L.M. Kid Space: Interactive Learning in a Smart Environment. In Proceedings of the Group Interaction Frontiers in Technology, Boulder, CO, USA, 16 October 2018; ACM: New York, NY, USA, 2018; p. 8. [Google Scholar]

- Fidan, M.; Tuncel, M. Integrating augmented reality into problem based learning: The effects on learning achievement and attitude in physics education. Comput. Educ. 2019, 142, 103635. [Google Scholar]

- Balog, A.; Pribeanu, C. The role of perceived enjoyment in the students’ acceptance of an augmented reality teaching platform: A structural equation modelling approach. Stud. Inform. Control. 2010, 19, 319–330. [Google Scholar] [CrossRef]

- Bogen, M.; Wind, J.; Giuliano, A. ARiSE—Augmented reality in school environments. In Proceedings of the European Conference on Technology Enhanced Learning, Crete, Greece, 1–2 October 2006; Springer: Berlin, Germany, 2006; pp. 709–714. [Google Scholar]

- Scaravetti, D.; Doroszewski, D. Augmented Reality experiment in higher education, for complex system appropriation in mechanical design. Procedia CIRP 2019, 84, 197–202. [Google Scholar] [CrossRef]

- Brom, C.; Sisler, V.; Slavik, R. Implementing digital game-based learning in schools: Augmented learning environment of ‘Europe 2045’. Multimed. Syst. 2010, 16, 23–41. [Google Scholar] [CrossRef]

- Kiourexidou, M.; Natsis, K.; Bamidis, P.; Antonopoulos, N.; Papathanasiou, E.; Sgantzos, M.; Veglis, A. Augmented reality for the study of human heart anatomy. Int. J. Electron. Commun. Comput. Eng. 2015, 6, 658. [Google Scholar]

- Zhou, X.; Tang, L.; Lin, D.; Han, W. Virtual & augmented reality for biological microscope in experiment education. Virtual Real. Intell. Hardw. 2020, 2, 316–329. [Google Scholar]

- von Atzigen, M.; Liebmann, F.; Hoch, A.; Bauer, D.E.; Snedeker, J.G.; Farshad, M.; Fürnstahl, P. HoloYolo: A proof-of-concept study for marker-less surgical navigation of spinal rod implants with augmented reality and on-device machine learning. Int. J. Med. Robot. Comput. Assist. Surg. 2021, 17, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Rehman, I.U.; Ullah, S. Gestures and marker based low-cost interactive writing board for primary education. Multimed. Tools Appl. 2022, 81, 1337–1356. [Google Scholar] [CrossRef]

- Little, W.B.; Dezdrobitu, C.; Conan, A.; Artemiou, E. Is augmented reality the new way for teaching and learning veterinary cardiac anatomy? Med. Sci. Educ. 2021, 31, 723–732. [Google Scholar] [CrossRef] [PubMed]

- Rebollo, C.; Remolar, I.; Rossano, V.; Lanzilotti, R. Multimedia augmented reality game for learning math. Multimed. Tools Appl. 2022, 81, 14851–14868. [Google Scholar] [CrossRef]