Abstract

In recent years, plant diseases have posed a continuous threat to agriculture and caused substantial economic losses. Early detection and classification can limit the spread of disease and help to improve yield. Meanwhile, deep learning has emerged as a significant approach to detecting and classifying images. However, classification with deep learning mainly relies on large datasets to prevent overfitting. The Automatic Segmentation and Hyper Parameter Optimization Artificial Rabbits Algorithm (AS-HPOARA) is developed to overcome these issues and to improve plant leaf disease classification. The Plant Village dataset is used to assess the proposed AS-HPOARA approach. Z-score normalization is performed to normalize the images using the dataset’s mean and standard deviation. Three augmentation techniques are used in this work to balance the training images: rotation, scaling, and translation. Applying image augmentation before classification reduces overfitting and improves the classification accuracy. A Modified UNet, which employs a larger number of fully connected layers to better represent deeply buried characteristics, is used for segmentation. Classification is performed by the HPO-based ARA, which converts images from one domain to another in a paired manner, so that the training data are increased and the statistical bias is eliminated to improve the classification accuracy. The model complexity is minimized by tuning the hyperparameters, which reduces overfitting. Accuracy, precision, recall, and F1 score are used to analyze AS-HPOARA’s performance. Compared to the existing CGAN-DenseNet121 and RAHC_GAN, the reported results show that AS-HPOARA reaches a high accuracy of 99.7% for ten classes.

1. Introduction

Agriculture is considered a significant source of income in African and Asian countries [1]. In precision agriculture, plant disease detection and classification play significant roles in producing more yield for farmers and helping to improve their standard of living [2]. Detecting and classifying plant diseases at an early stage can minimize the economic losses of farmers and the nation [3]. Tomatoes rank second in popularity worldwide, but they are susceptible to diseases and pests during their growth, which seriously affects crop yield and quality [4]. Among these, leaf diseases deserve particular attention, since leaves carry out photosynthesis [5]. Tomatoes are generally affected by various diseases such as leaf curl, spots, mosaic, bacterial wilt, early blight, and fruit canker [6] under extreme weather conditions and environmental factors [7]. Identifying and classifying leaf diseases at their early stages improves the yield of tomatoes and minimizes losses [8]. On the other hand, poor prediction and classification of plant leaf disease leads to the overuse of pesticides, which affects plant growth and severely impacts crops [9]. Moreover, detecting plant disease with the naked eye is difficult for farmers and time-consuming, which results in a degradation in the quality of plants [10].

Approaches based on Artificial Intelligence (AI) can minimize errors while classifying the images of diseased plant leaves [11]. In recent years, approaches based on deep learning techniques have helped researchers who work in the agricultural domain to classify images of disease-affected leaves [12]. Practical classification can be achieved using effective image augmentation, where the images are augmented and new training samples are created from the existing ones [13]. Augmentation methods generate converted versions of the images from the dataset to improve diversity [14]. However, traditional augmentation approaches face challenges and provide a higher misclassification ratio [15]. Using deep learning approaches for classification offers better results than machine learning approaches [16,17]. In current research, standard image augmentation techniques such as shift, zoom, and rotation are used to generate new images from original datasets and increase the number of images. Still, they are unable to decrease misclassifications [18,19].

Additionally, the methods currently in use based on tomato leaf disease are ineffective, resulting in misclassification with a lower accuracy [20]. The aforementioned problem is the impetus for this study’s effort to create a successful augmentation strategy for enhancing classification performance. According to this research, AS-HPOARA improves classification accuracy by enhancing the image with a pixel-by-pixel residual method. Additionally, the proposed method tends to learn and anticipate the ideal size and form of each pixel in the leaf image.

The key contributions are as follows:

The data were initially gathered from the PlantVillage dataset, where rotation, scaling, and translation were employed to balance the training images and z-score normalization was used to normalize the images.

Deep learning data segmentation based on Modified UNet was created for producing effective augmented images at different resolutions. Modified UNet employs a more significant number of fully connected layers to generate a better representation of deeply buried characteristics.

In addition, the plant leaf images were classified using the augmented images created from the source images. The classification was carried out using HPO-based ARA, where the training data were enhanced and the statistical bias was eliminated to raise the classification accuracy in order to convert the images from one domain to another in a paired manner.

Additionally, the model complexity was reduced by adjusting the hyperparameters that minimize overfitting. Lastly, plant leaf diseases were categorized using the HPOARA system.

This research is arranged as follows: Section 2 provides related works about data augmentation and classification techniques developed for plant disease detection. A detailed explanation of AS-HPOARA is provided in Section 3, whereas the outcomes of the AS-HPOARA are specified in Section 4. Further, conclusions are made in Section 5.

2. Related Work

Wu [21] used GAN-based data augmentation to improve the classification of tomato leaf diseases. A Deep Convolutional GAN (DCGAN) was used to generate augmented images, and GoogleNet was used to predict diseases. The DCGAN was tuned via the learning rate, batch size, and momentum to produce more realistic and diverse data. However, the use of noise-to-image GANs, which rendered images of healthy leaves as diseased leaves, led to unbalanced performance.

Abbas [22] described a deep learning method that produced synthetic images of plant leaves using Conditional GAN (C-GAN). An additional identification of tomato diseases was performed using the generated synthetic images. Additionally, the DenseNet121 was trained to classify tomato leaf diseases using fake and real images. However, based on only appearance, the C-GAN could not identify different disease stages.

Sasikala Vallabhajosyula et al. [23] presented a Deep Ensemble Neural Network (DENN) based on transfer learning to detect plant leaf disease. By tuning the hyperparameters, the authors aimed to enhance the classification using DENN and transfer learning. With the aid of transfer learning, these models were capable of extracting discriminating features. The suggested algorithms accurately classified plant leaf diseases by extracting the distinguishing characteristics from leaves. The work was motivated by the observation that manual identification of plant diseases by plant pathologists is difficult, time-consuming, and unreliable.

A unique, 14-layered deep convolutional neural network (14-DCNN) was presented by J. Arun Pandian et al. [24] to identify plant leaf diseases from leaves. Several open datasets were combined to form a new dataset. The dataset’s class sizes were balanced using data augmentation techniques. One thousand training epochs of the suggested DCNN model were conducted in an environment with multiple graphics processing units (GPUs). The most appropriate hyperparameter values were chosen randomly using the coarse-to-fine searching strategy to enhance the proposed DCNN model’s training efficacy. Additional data were needed for the DCNN’s training procedure to be effective.

The fine-grained-GAN was introduced by Zhou [25] to perform local spot area data augmentation, with the aim of improving the identification of grape leaf spots. It used hierarchical mask generation to increase the ability of spot feature representation. An improved Faster R-CNN and the fine-grained-GAN were integrated with a fixed-size bounding box to reduce computations and prevent the classifier’s scale variability. However, the technique was only appropriate for finding visible leaf spots.

A collaborative framework of diminished features and effective feature selection for cucumber leaf disease identification was offered by Jaweria Kianat et al. [26]. This study proposed a hybrid structure built on feature fusion and selection algorithms that used three fundamental phases to categorize cucumber disease. At last, a collection of classifiers was used to categorize the most discriminant traits. During the serial-based fusion stage, better features over a threshold were chosen. However, it was time-consuming, challenging, prone to mistakes, and deceptive.

To expand the data on tomato leaves and identify diseases, Deng [27] built the RAHC_GAN. To adjust the size of the actual disease region and enhance the intra-class data, hidden parameters were included on the input side of the generator. Additionally, a residual attention block was included to help the network focus on the disease region. Next, a multi-scale discriminator was employed to enhance the texture of the newly produced images. However, the GAN introduced variability while identifying the images, which reduced the overall effectiveness.

Multi-objective image segmentation was used to show tea leaf disease identification by Somnath Mukhopadhyay et al. [28]. The Non-dominated Sorting Genetic Algorithm (NSGA-II) for image clustering was suggested for finding the disease area in tea leaves. Next, the tea leaves’ corresponding feature reduction and disease identification were accomplished using PCA and a multi-class SVM. To fully assist farmers, the suggested system recognized five different diseases from the input and offered relevant actions to be performed. A higher accuracy was achieved by this method; however, it was very time-consuming.

Plant disease classification using ARO with an improved deep learning model was demonstrated by K. Jayaprakash and Dr. S. P. Balamurugan [29]. The developed AROIDL-PDC technique aims to identify and classify various plant diseases. The AROIDL-PDC technique employs a median filtering (MF) strategy during preprocessing. An upgraded version of the MobileNeXt algorithm was also used for feature extraction. The procedure of hyperparameter tuning was then carried out using the ARO approach. Finally, the logistic regression (LR) classifier categorized plant diseases. Several simulations were run to show how the AROIDL-PDC technique performed better.

Deep neural networks with transfer learning were used to predict rice leaf diseases [30]. In this study, a pre-trained InceptionResNetV2 model stored the learned information as weights, which were then transferred to the feature extraction process using the transfer learning approach. Deep learning was enhanced to increase the accuracy in classifying the many diseases affecting rice leaves. Running the simple CNN model for 15 epochs with various hyperparameters improved the accuracy to 84.75%.

3. Proposed AS-HPOARA Method

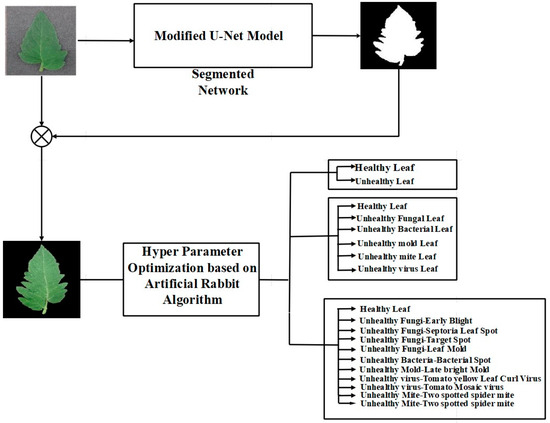

This study aimed to enhance the classification of plant diseases using AS-HPOARA-based data augmentation. The AS-HPOARA method was separated into two parts: the generation of synthetic images using AS-HPOARA and the discriminator-based classification of images of plant diseases. Figure 1 presents a block diagram for the entire AS-HPOARA technique. The generator module received the input images and added a label and an appropriate quantity of noise to produce the pixel variations. The Gaussian Noise data augmentation tool added Gaussian noise to the training images. The sigma value was directly related to the size of the Gaussian Noise effect.

Figure 1.

Block diagram for the overall AS-HPOARA method.
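The Gaussian noise step described above can be sketched in a few lines. This is a minimal illustration, assuming images are stored as floating-point arrays scaled to [0, 1]; the function name `add_gaussian_noise` and the sigma values are hypothetical and are not taken from the authors' implementation.

```python
import numpy as np

def add_gaussian_noise(image, sigma, rng=None):
    """Return a noisy copy of `image` (floats in [0, 1]).

    `sigma` is the standard deviation of the zero-mean noise, so a larger
    sigma produces a stronger noise effect.
    """
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(loc=0.0, scale=sigma, size=image.shape)
    # Clip so the result stays a valid image.
    return np.clip(image + noise, 0.0, 1.0)

rng = np.random.default_rng(0)
leaf = rng.random((64, 64, 3))          # stand-in for a leaf image
mild = add_gaussian_noise(leaf, sigma=0.01, rng=rng)
strong = add_gaussian_noise(leaf, sigma=0.10, rng=rng)
```

The mean absolute difference between `strong` and `leaf` is larger than that between `mild` and `leaf`, which reflects the direct relation between sigma and the size of the noise effect.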

Additionally, AS-HPOARA produced enhanced images, which improved accuracy. Three types of classification were performed in this work: (a) binary classification of healthy and diseased leaves, (b) six-class classification of healthy leaves and five groups of diseased leaves, and (c) ten-class classification with one healthy class and nine disease classes. All the images were divided into ten classes, of which one was healthy and the other nine were unhealthy. The unhealthy classes were grouped into five subgroups (namely bacterial, viral, fungal, mold, and mite disease). Sample tomato leaf images for the healthy and unhealthy classes, together with leaf masks from the Plant Village dataset, are shown in Figure 2.

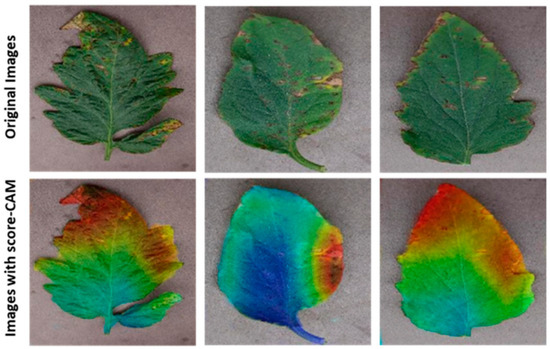

The Plant Village dataset, which contains leaf images and accompanying segmented leaf masks, was used for this investigation. The optimum segmentation network for separating the leaves from the background was investigated using Modified UNet segmentation algorithms. The Score-CAM visualization, which has proven to be quite trustworthy in diverse applications [31], was employed to further validate the use of the segmented leaves in the classification.

3.1. Dataset Acquisition

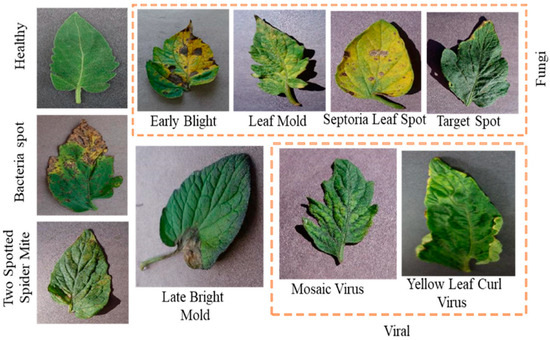

The data utilized in this study to evaluate the AS-HPOARA approach were obtained from the publicly accessible tomato PlantVillage dataset [32]. The dataset contains 18,161 leaf images in ten classes, of which nine are disease classes and the remaining one is the healthy class. The ten classes are Tomato Healthy (TH), Tomato Mosaic Virus (TMV), Tomato Early Blight (TEB), Tomato Late Blight (TLB), Tomato Bacterial Spot (TBS), Tomato Leaf Mold (TLM), Tomato Septoria Leaf Spot (TSLS), Tomato Target Spot (TTS), Tomato Yellow Leaf Curl Virus (TYLCV), and Tomato Two-Spotted Spider Mite (TTSSM). The PlantVillage dataset can be used for plant disease identification, including determining a plant’s species and any diseases it may carry. Disease control procedures can waste time and money and result in additional plant losses if the disease and its causative agent are not correctly identified [33]. Therefore, an accurate diagnosis is essential. Plant pathologists frequently have to rely on symptoms to pinpoint a disease. Six augmentation methods (scaling, rotation, noise injection, gamma correction, image flipping, and PCA color augmentation) were used to create this dataset [34]. These methods produced a diversified dataset with various background conditions. Figure 2 displays sample images for the healthy and unhealthy classes together with leaf masks. Additionally, Table 1 provides a detailed breakdown of the number of images in the dataset, which is helpful for classification tasks.

Figure 2.

Model images of healthy and unhealthy leaves.

Table 1.

Overall images of the Plant Village dataset.

The images obtained from the tomato PlantVillage dataset were resampled to a common size to improve the classifier’s computational efficiency. The class imbalance problem was avoided by using 300 randomly chosen images from each class of the dataset, giving 3000 real images over the 10 classes [35]. About 12,000 images were generated in the final augmentation stage using AS-HPOARA and combined with the real images in the dataset. Thus, a total of 15,000 images were used for the classification, and the dataset was divided into training, validation, and testing sets in the ratio of 70:15:15.
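As a worked illustration of the 70:15:15 division, the sketch below shuffles image indices and cuts them into three disjoint subsets. The helper name `split_indices` and the fixed seed are assumptions made for the example; the text does not state how the authors performed the split.

```python
import random

def split_indices(n_items, ratios=(0.70, 0.15, 0.15), seed=0):
    """Shuffle indices 0..n_items-1 and cut them into train/val/test lists."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_train = int(round(ratios[0] * n_items))
    n_val = int(round(ratios[1] * n_items))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]      # whatever remains
    return train, val, test

train_idx, val_idx, test_idx = split_indices(15000)
print(len(train_idx), len(val_idx), len(test_idx))  # 10500 2250 2250
```

For the 15,000 images reported above, this corresponds to 10,500 training, 2250 validation, and 2250 testing images.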

3.2. Preprocessing

Z-score normalization was performed to normalize the images using the mean and standard deviation of the dataset’s images [36]. To accommodate the Modified UNet segmentation model, the images were resized to 256 × 256 and 224 × 224.
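Z-score normalization subtracts the dataset mean and divides by the dataset standard deviation. The sketch below assumes a batch stored as an array of shape (N, H, W, C) and computes the statistics per color channel; the per-channel choice and the small `eps` guard are assumptions for illustration, not details given in the text.

```python
import numpy as np

def zscore_normalize(images, eps=1e-8):
    """Normalize a batch of images of shape (N, H, W, C) channel by channel.

    Returns the normalized batch together with the mean and standard
    deviation, so the same statistics can be reused on unseen images.
    """
    mean = images.mean(axis=(0, 1, 2), keepdims=True)
    std = images.std(axis=(0, 1, 2), keepdims=True)
    return (images - mean) / (std + eps), mean, std

rng = np.random.default_rng(0)
batch = rng.random((10, 16, 16, 3)) * 255.0   # stand-in for leaf images
normalized, mean, std = zscore_normalize(batch)
```

After this step each channel of `normalized` has approximately zero mean and unit standard deviation.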

3.3. Augmentation

Training on an unbalanced dataset, in which the categories do not contain the same number of images, introduces bias. Three augmentation techniques were used in the present investigation to balance the training images:

Rotation: Randomly rotating a source image by a certain number of degrees, either clockwise or anticlockwise, alters the position of an object in the frame. As part of the image augmentation procedure, the images were rotated by between 5 and 15 degrees in the clockwise and anticlockwise directions.

Scaling: Scaling means increasing or decreasing an image’s frame size. In this augmentation technique, the scaled size of an image is selected at random within a given range. In this work, image magnifications of 2.5% to 10% were used. This augmentation technique also has applications in object detection tasks, for example.

Translation: Translation shifts an image along the horizontal and vertical axes, so that the leaf appears at a different position in the frame. The images were translated by 5% to 20% in the horizontal and vertical directions.
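The three augmentations can be combined in a single affine warp. The following is a minimal sketch using inverse mapping with nearest-neighbour sampling; the function names, the zero fill for pixels that fall outside the source image, and the reading of "magnification" as upscaling by 2.5% to 10% are assumptions made for illustration.

```python
import numpy as np

def affine_augment(image, angle_deg=0.0, scale=1.0, shift_frac=(0.0, 0.0)):
    """Rotate, scale and translate an (H, W) or (H, W, C) image about its center.

    `shift_frac` is (vertical, horizontal) translation as a fraction of the
    image height and width. Pixels mapped from outside the source are zero.
    """
    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Inverse mapping: undo the translation, then the rotation and scaling.
    y = ys - cy - shift_frac[0] * h
    x = xs - cx - shift_frac[1] * w
    src_x = (cos_t * x + sin_t * y) / scale + cx
    src_y = (-sin_t * x + cos_t * y) / scale + cy
    sy = np.rint(src_y).astype(int)
    sx = np.rint(src_x).astype(int)
    valid = (sy >= 0) & (sy < h) & (sx >= 0) & (sx < w)
    out = np.zeros_like(image)
    out[valid] = image[sy[valid], sx[valid]]
    return out

def random_augment(image, rng):
    """Sample parameters from the ranges stated in the text and apply them."""
    angle = rng.uniform(5.0, 15.0) * rng.choice([-1.0, 1.0])
    scale = 1.0 + rng.uniform(0.025, 0.10)
    shift = rng.uniform(0.05, 0.20, size=2) * rng.choice([-1.0, 1.0], size=2)
    return affine_augment(image, angle, scale, tuple(shift))

rng = np.random.default_rng(0)
leaf = rng.random((64, 64, 3))
augmented = random_augment(leaf, rng)
```

Sampling a fresh set of parameters for every call yields a different variant of the same source image each time, which is how a minority class can be topped up to the size of the others.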

3.4. Segmentation

There are numerous segmentation models using U-nets in research [37]. In the current research, variations of the Modified U-Net [38] were examined to select the one that performed the best.

The structure of the Modified U-Net is revealed in Figure 3.

Figure 3.

Architecture of Modified U-Net model.

The Modified U-Net, a variant of the U-Net model with minor differences in the decoding section, was used. In each encoding block, a pair of consecutive 3 × 3 convolutional layers was followed by a down-sampling max pooling layer with a stride of 2. All the convolutional layers used batch normalization and ReLU activation. At the last layer, a 1 × 1 convolution mapped the output of the final decoding block to feature maps, and a pixel-by-pixel SoftMax then assigned every pixel to one of two classes, leaf or background.
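The final layer described above can be illustrated without a deep learning framework. The sketch below applies a 1 × 1 convolution, which is a per-pixel linear map over channels, followed by a pixel-wise softmax over two classes. The shapes, the random weights, and the class order (0 for background, 1 for leaf) are hypothetical and only serve the illustration.

```python
import numpy as np

def conv1x1(features, weights, bias):
    """1x1 convolution: features (C_in, H, W) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", weights, features) + bias[:, None, None]

def pixelwise_softmax(logits):
    """Softmax over the class axis of a (num_classes, H, W) array."""
    shifted = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=0, keepdims=True)

rng = np.random.default_rng(0)
decoder_out = rng.standard_normal((16, 32, 32))   # stand-in for the last decoder block
weights = rng.standard_normal((2, 16))            # two output classes
bias = np.zeros(2)

probs = pixelwise_softmax(conv1x1(decoder_out, weights, bias))
mask = probs.argmax(axis=0)                       # 0 = background, 1 = leaf (assumed order)
```

Each pixel of `probs` sums to one across the two classes, and `mask` is the resulting binary segmentation.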

3.4.1. Hyperparameter Optimization Based Classification

The classification performance was improved by using the Artificial Rabbits Algorithm (ARA) to find suitable hyperparameters (hyperparameter optimization). Optimizing the hyperparameters regulates the learning behavior of the constructed models. If the hyperparameters are not appropriately tuned, the learned model parameters produce unsatisfactory results, since they do not minimize the loss function. Hyperparameter optimization was therefore performed to achieve the best classification results. This work used hyperparameter-optimization-based ARA for classification [39]. The steps below describe the search processes of the ARA approach.

3.4.2. Detour Foraging (Exploration)

When foraging, rabbits prefer to wander to distant regions where other rabbits are and neglect what is nearby, in line with an old Chinese proverb [40]: “A rabbit does not eat grass near its own nest.” This behavior is called detour foraging, and its mathematical model is given in Equations (1)–(5),

where the terms denote, in order, rounding to the nearest integer, a function that returns a random permutation of integers, random numbers, and a variable drawn from the standard normal distribution.
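For orientation, detour foraging is commonly written as follows in the artificial rabbits optimization literature. All symbols in this block are assumptions taken from that literature rather than from the authors' own Equations (1)–(5), so the notation and numbering may differ: $x_i(t)$ is the position of rabbit $i$ at iteration $t$, $v_i(t+1)$ its candidate position, $T$ the maximum number of iterations, $d$ the problem dimension, $r_1, r_2, r_3$ random numbers in $(0, 1)$, and $n_1$ a standard normal variable.

```latex
\begin{align}
v_i(t+1) &= x_j(t) + R \cdot \bigl(x_i(t) - x_j(t)\bigr)
            + \operatorname{round}\bigl(0.5\,(0.05 + r_1)\bigr) \cdot n_1,
            \qquad j \neq i \\
R &= L \cdot c \\
L &= \Bigl(e - e^{\left(\frac{t-1}{T}\right)^{2}}\Bigr) \cdot \sin(2\pi r_2) \\
c(k) &= \begin{cases} 1, & k = g(l) \\ 0, & \text{otherwise} \end{cases}
        \qquad k = 1,\dots,d, \quad l = 1,\dots,\lceil r_3 \cdot d \rceil \\
g &= \operatorname{randperm}(d), \qquad n_1 \sim N(0, 1)
\end{align}
```

Here $R$ is the running operator: $L$ sets the step length and the binary vector $c$ selects a random subset of dimensions to perturb.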

3.4.3. Transition from Exploration to Exploitation

In ARA, rabbits are more likely to perform continuous detour foraging in the early stages of the iteration, while they frequently adopt random hiding in the later stages of the search. This transition from exploration to exploitation is driven by the rabbit’s energy, which decreases over time. Equation (6) defines this energy factor, which is used to achieve a balanced ratio between exploitation and exploration.
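A commonly cited form of the energy factor in the artificial rabbits optimization literature is A(t) = 4(1 − t/T) ln(1/r), where T is the maximum number of iterations and r is a random number in (0, 1); detour foraging is chosen when A(t) > 1 and random hiding otherwise. The sketch below assumes that form, which may differ from the authors' Equation (6), and estimates how often exploration is selected early and late in the search.

```python
import math
import random

def energy_factor(t, max_iter, r):
    """Assumed energy factor A(t) = 4 * (1 - t / T) * ln(1 / r), with r in (0, 1]."""
    return 4.0 * (1.0 - t / max_iter) * math.log(1.0 / r)

def exploration_rate(t, max_iter, n_samples=1000, seed=0):
    """Fraction of random draws for which A(t) > 1 (detour foraging is chosen)."""
    rng = random.Random(seed)
    # 1 - random() lies in (0, 1], which avoids a division by zero.
    hits = sum(energy_factor(t, max_iter, 1.0 - rng.random()) > 1.0
               for _ in range(n_samples))
    return hits / n_samples

early = exploration_rate(t=10, max_iter=100)
late = exploration_rate(t=90, max_iter=100)
```

Under this assumed form, `early` is much larger than `late`, which matches the described shift from detour foraging to random hiding as the iterations progress.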

3.4.4. Random Hiding (Exploitation)

Predators commonly pursue and attack rabbits. To survive, rabbits dig several burrows around the nest for shelter. In ARA [41], a rabbit builds D burrows along the dimensions of the search space in every iteration and then chooses one at random to hide in, which reduces the likelihood of being caught. The mathematical model of this behavior is given in Equations (7)–(11).

In these equations, one term denotes a randomly selected burrow of a given rabbit and another denotes the hiding parameter. The generated burrows are used for hiding. The equations also involve two random numbers between 0 and 1 and a variable that follows a standard normal distribution [42].
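The three search phases can be put together in a compact optimizer. The sketch below follows the general structure found in the artificial rabbits optimization literature, not the authors' exact Equations (1)–(11): the energy factor, the running operator, the use of a standard normal draw in the hiding parameter, and the greedy replacement rule are all assumptions. The objective `stub_validation_loss` is a hypothetical stand-in for training a classifier and returning its validation loss for a given (log10 learning rate, dropout) pair.

```python
import numpy as np

def ara_minimize(objective, lower, upper, n_rabbits=20, max_iter=100, seed=0):
    """Minimize `objective` over a box with an artificial-rabbits-style search."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pos = rng.uniform(lower, upper, size=(n_rabbits, dim))
    fit = np.array([objective(p) for p in pos])
    for t in range(1, max_iter + 1):
        for i in range(n_rabbits):
            # Energy factor: large early (exploration), small late (exploitation).
            energy = 4.0 * (1.0 - t / max_iter) * np.log(1.0 / (1.0 - rng.random()))
            # Running operator: step length times a random subset of dimensions.
            step_len = (np.e - np.exp(((t - 1) / max_iter) ** 2)) \
                * np.sin(2.0 * np.pi * rng.random())
            c = np.zeros(dim)
            n_active = max(1, int(np.ceil(rng.random() * dim)))
            c[rng.permutation(dim)[:n_active]] = 1.0
            run = step_len * c
            if energy > 1.0:
                # Detour foraging: move relative to another, randomly chosen rabbit.
                j = int(rng.choice([k for k in range(n_rabbits) if k != i]))
                jump = np.round(0.5 * (0.05 + rng.random())) * rng.standard_normal()
                cand = pos[j] + run * (pos[i] - pos[j]) + jump
            else:
                # Random hiding: move towards one randomly selected burrow.
                hide = (max_iter - t + 1) / max_iter * rng.standard_normal()
                g = np.zeros(dim)
                g[rng.integers(dim)] = 1.0
                burrow = pos[i] + hide * g * pos[i]
                cand = pos[i] + run * (rng.random() * burrow - pos[i])
            cand = np.clip(cand, lower, upper)
            cand_fit = objective(cand)
            if cand_fit < fit[i]:          # keep the candidate only if it improves
                pos[i], fit[i] = cand, cand_fit
    best = int(np.argmin(fit))
    return pos[best].copy(), float(fit[best])

def stub_validation_loss(params):
    """Hypothetical loss surface with its minimum at log10(lr) = -3, dropout = 0.3."""
    log_lr, dropout = params
    return (log_lr + 3.0) ** 2 + (dropout - 0.3) ** 2

best_params, best_loss = ara_minimize(
    stub_validation_loss, lower=[-5.0, 0.0], upper=[-1.0, 0.8], max_iter=50)
```

In a real hyperparameter search the objective would train and validate the classifier for each candidate, so the population size and iteration budget directly determine the number of training runs.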

4. Results and Discussion

The proposed technique was implemented in Python 3.7 on a system with 8 GB of RAM and an i5 processor. This section presents the results of the AS-HPOARA method, which is proposed to improve the classification of tomato leaf diseases through AS-HPOARA-based data augmentation and classification.

4.1. Leaf Segmentation Analysis

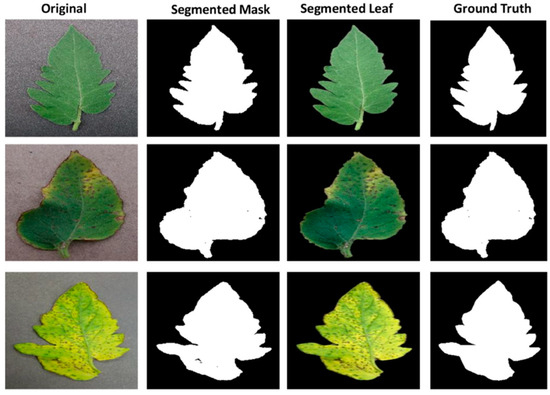

To obtain the best performance measures and choose the optimal tomato leaf segmentation model, three loss functions were used in the present investigation: Negative Log-Likelihood (NLL) loss, Binary Cross-Entropy (BCE) loss, and Mean-Squared Error (MSE) loss. Additionally, as described in several recent publications, an early stopping criterion of five epochs with no improvement in validation loss was adopted. Table 2 compares the performance of the segmentation model with the NLL, BCE, and MSE loss functions. The Modified UNet with NLL loss performed well in both quantitative and qualitative terms when segmenting the leaf region over the entire set of images, with a test loss of 0.0076, a test accuracy of 98.66, and a dice score of 98.73 for the segmentation of tomato leaves.
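The three loss functions and the dice score can be written compactly for a binary leaf mask. The definitions below are standard textbook forms given as a minimal sketch; the array shapes, the class order, and the smoothing constant `eps` are assumptions, and the code is not the authors' training code.

```python
import numpy as np

def bce_loss(prob, target, eps=1e-7):
    """Binary cross-entropy between leaf probabilities and a 0/1 mask."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

def mse_loss(prob, target):
    """Mean-squared error between leaf probabilities and a 0/1 mask."""
    return float(np.mean((prob - target) ** 2))

def nll_loss(log_probs, target):
    """Negative log-likelihood; log_probs is (2, H, W), target is (H, W) ints."""
    picked = np.take_along_axis(log_probs, target[None, ...], axis=0)
    return float(-picked.mean())

def dice_score(pred_mask, target, eps=1e-7):
    """Dice coefficient between two binary masks (1.0 means perfect overlap)."""
    inter = np.sum(pred_mask * target)
    return float((2.0 * inter + eps) / (pred_mask.sum() + target.sum() + eps))

rng = np.random.default_rng(0)
target = (rng.random((32, 32)) > 0.5).astype(int)
prob_leaf = rng.uniform(0.05, 0.95, size=(32, 32))
log_probs = np.log(np.stack([1.0 - prob_leaf, prob_leaf]))  # class 0 = background

losses = {
    "nll": nll_loss(log_probs, target),
    "bce": bce_loss(prob_leaf, target),
    "mse": mse_loss(prob_leaf, target),
}
dice = dice_score((prob_leaf > 0.5).astype(int), target)
```

For two classes with a softmax output, the NLL and BCE values coincide, so differences between them in practice come from how the network output is parameterized rather than from the loss definition itself.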

Table 2.

Loss function analysis of modified UNet.

The Modified U-net model was applied to the specified dataset to find the top-performing leaf segmentation model. Five-fold cross-validation was used, in which 70% of the leaf images and their corresponding ground truth masks were randomly selected for training, 15% for testing, and the remaining 15% for validation. The test set’s class distribution resembled that of the training set. To avoid overfitting, 90% of the training portion (which comprised 70% of the dataset) was used for training, while the remaining 10% was used for validation. The entire training and inference pipelines were evaluated using k-fold cross-validation with k = 2, 3, 4, and 5. Table 3 shows the k-fold validation for the ten classes with the NLL loss function, which provided better results than the BCE and MSE loss functions.

Table 3.

K-fold validation for 10-classes with NLL loss function.
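The k-fold procedure can be sketched with a small index generator. The helper name `k_fold_indices`, the shuffling seed, and the example dataset size are assumptions for illustration; the text does not describe how the authors partitioned the folds.

```python
import random

def k_fold_indices(n_items, k, seed=0):
    """Yield (train_indices, held_out_indices) for each of the k folds."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # k nearly equal parts
    for i in range(k):
        held_out = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, held_out

for k in (2, 3, 4, 5):
    sizes = [len(held_out) for _, held_out in k_fold_indices(3000, k)]
    print(k, sizes)
```

Every image is held out exactly once per value of k, so the reported per-fold results together cover the whole dataset.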

4.2. Leaf Disease Classification Analysis

In order to classify the segmented portions of tomato leaf disease, the research looked at a deep learning framework based on a CNN designated as Modified UNet. In this investigation, three distinct categorization trials were run. Table 4 gives an outline of the classification and segmentation trials’ variables.

Table 4.

Ratings of training variables used for segmentation and classification.

An overview of the dataset training, the outcomes in terms of effectiveness, and a comparative evaluation are provided in the sections that follow. The testing time per image, that is, the time each network took to classify or segment an input image, was also compared between the segmentation and classification networks. The Modified UNet surpassed the other trained models among the networks trained on leaf images with and without segmentation for the 2-, 6-, and 10-class problems. As the Modified UNet model was scaled, the increased depth, width, and resolution of the network caused an increase in the testing time. According to the authors’ testing of the various Modified UNet versions, the performance improved whenever the network size increased. However, the enlarged version of the Modified UNet did not improve much as the classification task became more complex.

Figure 4 clearly shows that adding more parameters resulted in better network performances for the 2, 6, and 10-class tasks. For the Plant Village dataset, the Modified U-net using the NLL loss function produced the segmented leaf pictures shown in Figure 4, along with some sample images, related ground truth masks, and test tomato leaf images.

Figure 4.

Samples of tomato leaf images.

4.3. Performance Evaluation of AS-HPOARA

The performance of the proposed AS-HPOARA was assessed by considering the following cases:

Case 1: The binary classes, such as healthy and unhealthy leaves, were considered for analysis.

Case 2: The six different classes were healthy tomato, tomato septoria leaf spot, tomato bacterial spot, tomato late blight, tomato target spot, and tomato yellow leaf curl virus.

Case 3: In this case, all the ten classes obtained from the dataset were considered.

The performance of AS-HPOARA without data augmentation for all three cases is given in Table 5. While considering the loss function, NLL outperformed the other two loss functions (BCE and MSE), which is clearly mentioned in Table 2. Therefore, Table 6 provides the performance analyses of the different classes for the NLL loss function.

Table 5.

Performance analysis of classifiers without data augmentation for NLL loss function.

Table 6.

Performance analysis of classifiers with AS-HPOARA for NLL loss function.
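Accuracy, precision, recall, and F1 score can all be derived from a confusion matrix. The sketch below uses macro-averaging over classes, which is an assumption, since the text does not state how the per-class values were averaged; the labels in the example are made up.

```python
import numpy as np

def classification_metrics(y_true, y_pred, num_classes):
    """Return accuracy and macro-averaged precision, recall and F1 score."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1                      # rows: true class, columns: predicted
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)
    actual = cm.sum(axis=1)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2.0 * precision * recall, denom,
                   out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision": float(precision.mean()),
        "recall": float(recall.mean()),
        "f1": float(f1.mean()),
    }

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
metrics = classification_metrics(y_true, y_pred, num_classes=3)
```

In this toy example four of the six predictions are correct, so the accuracy is 4/6, while the macro recall is 2/3 because each class contributes equally regardless of its size.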

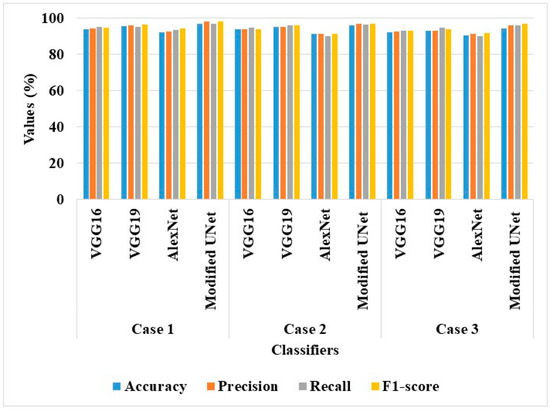

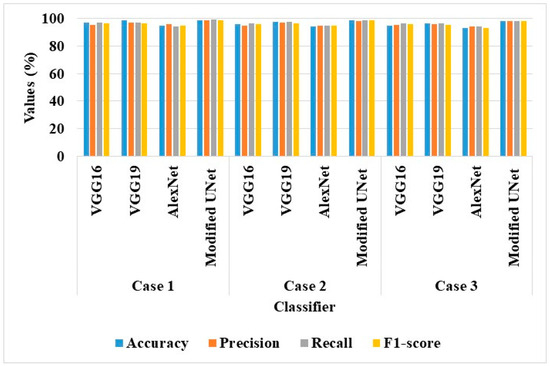

The effectiveness of AS-HPOARA was assessed for the aforementioned scenarios using a variety of classifiers, including VGG16, VGG19, and AlexNet. In this section, the classifiers were evaluated both with and without AS-HPOARA. Figure 5 provides a graphical representation of the results.

Figure 5.

Graphical results of classifiers without AS-HPOARA.

According to the analysis, in all three scenarios, the Modified UNet without AS-HPOARA offered a greater level of classification accuracy than VGG16, VGG19, and AlexNet. For instance, the Modified UNet achieved an accuracy of 97.11% in case 1, compared to VGG16’s 94.08%, VGG19’s 95.79%, and AlexNet’s 92.36%. The Modified UNet classified better because of its large number of fully connected layers, which helped it to achieve a richer representation of deeply buried characteristics.

Table 6 shows the suggested AS-HPOARA-based data augmentation and performance analysis of the various classifiers (VGG16, VGG19, AlexNet, and Modified UNet).

The investigation led to the conclusion that the combination of AS-HPOARA and Modified UNet performed better than the other classifiers. For instance, AS-HPOARA achieved an accuracy of 99.08% for case 1, compared to VGG16’s 96.93%, VGG19’s 98.82%, and AlexNet’s 95.08%. Additionally, AS-HPOARA’s accuracy was greater than that of the other classifiers in each of the three scenarios. The discriminator received as input the augmented images of the various resolutions produced by the AS-HPOARA generator. Consequently, the progressive training of AS-HPOARA was employed to improve the classification of tomato leaf diseases. Figure 6 displays the graphical outcomes of the classifiers using AS-HPOARA.

Figure 6.

Graphical results of classifiers with AS-HPOARA.

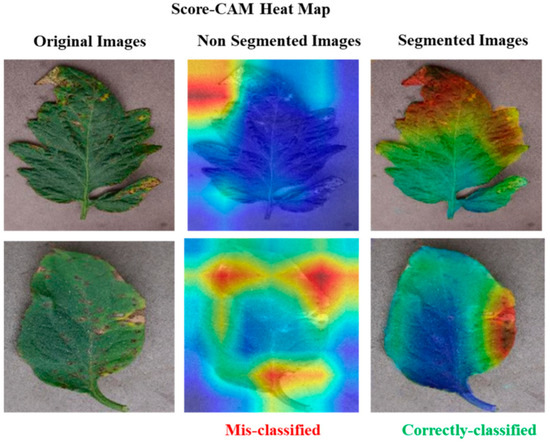

4.4. Visualization Using Score-Cam

This study used visualization techniques to examine the reliability of the trained networks. Score-CAM maps were examined for five categories of images that were incorrectly classified as healthy or unhealthy. Heat maps for the segmented tomato leaf images were used for the ten-class problem to show the affected regions clearly. The networks learned from the segmented leaf images, which increased the reliability of their decisions. This helped to counter the criticism that CNNs lack credibility and draw their decisions from irrelevant regions. Segmentation also aided the classification, as the network learned from the region of interest, and this reliable learning supported accurate classification. The use of segmented leaf images in the classification was further validated by the Score-CAM visualization procedure, which has been found to be dependable in various applications. Figure 7 displays the heat maps of the segmented leaves and the original tomato leaf samples, generated in Python, while Figure 8 shows the Score-CAM visualization of classified portions.

Figure 7.

Score-CAM visualization of classified images.

Figure 8.

Score-CAM visualization of classified portions.

Score-CAM [40] is a recently proposed visualization technique that was utilized in this study because of its promising results among the many visualization techniques that are now accessible, including Smooth Grad, Grad-CAM, Grad-CAM++, and Score-CAM. Each heat map’s weight was determined by its forward passing score on the target class, and the final result was produced by linearly combining the weights and activation maps. By deriving the weight of each activation map from its forward passing score on the target class, Score-CAM eliminated the dependence on gradients. If it can be confirmed that the network always bases its decisions on the leaf area, it can assist users in understanding how the network makes decisions and increase end-user trust.
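The weighting scheme described above can be sketched independently of any particular network. In the example below each activation map is normalized to [0, 1], used to mask the input, and scored by a forward pass; the scores are turned into weights with a softmax and the maps are combined linearly. The callable `score_fn` stands in for a trained classifier's target-class score and is hypothetical, as are the toy image and activation maps; the maps are assumed to be already upsampled to the image size.

```python
import numpy as np

def score_cam(image, activation_maps, score_fn):
    """Gradient-free class activation map in the spirit of Score-CAM.

    image: (H, W) or (H, W, C); activation_maps: (K, H, W);
    score_fn: callable returning the target-class score for an image.
    """
    scores = []
    for amap in activation_maps:
        span = amap.max() - amap.min()
        mask = (amap - amap.min()) / span if span > 0 else np.zeros_like(amap)
        masked = image * (mask[..., None] if image.ndim == 3 else mask)
        scores.append(score_fn(masked))    # forward pass on the masked input
    scores = np.asarray(scores, dtype=float)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()               # softmax over the per-map scores
    cam = np.maximum(np.tensordot(weights, activation_maps, axes=1), 0.0)
    return cam / cam.max() if cam.max() > 0 else cam

# Toy setup: the "classifier" responds to brightness in the top-left quadrant.
image = np.ones((8, 8))
map_a = np.zeros((8, 8)); map_a[:4, :4] = 1.0    # highlights the top-left quadrant
map_b = np.zeros((8, 8)); map_b[4:, 4:] = 1.0    # highlights the bottom-right quadrant
heat = score_cam(image, np.stack([map_a, map_b]),
                 score_fn=lambda img: float(img[:4, :4].mean()))
```

Because the map covering the top-left quadrant raises the stand-in score, it receives the larger weight and that quadrant dominates `heat`, with no gradient computation involved.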

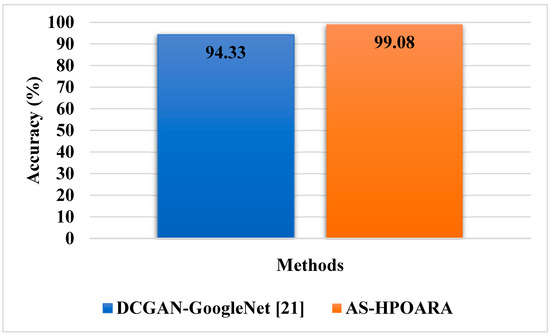

4.5. Comparative Analysis

In this sub-section, existing methodologies such as Deep Convolutional GAN (DCGAN)-GoogleNet [21] and Conditional GAN (CGAN)-DenseNet121 [22] were utilized to assess the effectiveness of the proposed AS-HPOARA. The DCGAN-GoogleNet was assessed using case 1, and the CGAN-DenseNet121 was evaluated using case 3. The comparison of AS-HPOARA based on case 1 and case 3 is represented in Table 7 and Table 8, respectively. Moreover, the graphical representation for classification accuracy compared with the existing DCGAN-GoogleNet and CGAN-DenseNet121 is presented in Figure 9.

Table 7.

Comparative analysis of AS-HPOARA for Case 1.

Table 8.

Comparative analysis of AS-HPOARA for Case 3.

Figure 9.

Graphical comparisons of accuracy for AS-HPOARA [21].

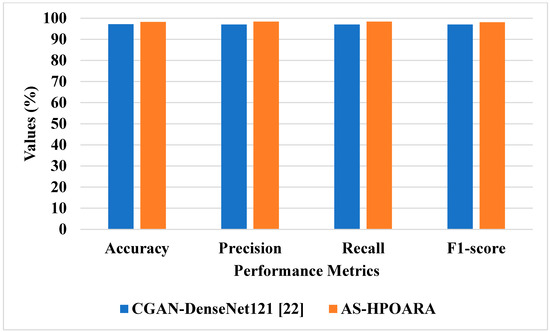

The graphical comparison of AS-HPOARA and the existing CGAN-DenseNet121 is shown in Figure 10. The overall results indicated that the proposed strategy outperformed the existing methodologies on all metrics. For instance, the classification accuracy of the proposed AS-HPOARA for case 1 was 99.08%, compared to 94.33% for DCGAN-GoogleNet [21]. Similarly, for case 3, the proposed approach had a classification accuracy of 98.7%, compared to 97.11% for the existing CGAN-DenseNet121 [22]. Owing to its capacity to perform pixel-wise distribution to calculate the optimal shape for every pixel of the leaf image, the proposed approach produced superior results and improved disease classification. Table 9 shows a comparative analysis with the existing RAHC_GAN [27] in terms of accuracy.

Figure 10.

Graphical comparisons of AS-HPOARA and existing CGAN-DenseNet121 [22].

Table 9.

Comparative analysis of AS-HPOARA with existing RAHC_GAN.

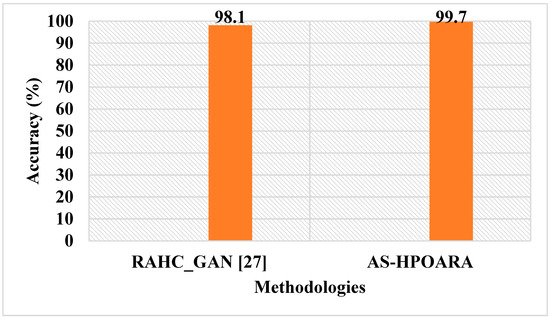

Table 9 clearly shows that the proposed AS-HPOARA achieved an accuracy of 99.7%, which was better than the existing RAHC_GAN [27], which had a 98.1% accuracy. A graphical comparison of the AS-HPOARA and the existing RAHC_GAN [27] is shown in Figure 11.

Figure 11.

Graphical comparisons of AS-HPOARA and existing RAHC_GAN [27].
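The accuracy values quoted in this subsection can be put on a common footing with a short calculation. The following is a minimal sketch, not part of the original evaluation: it takes only the accuracies stated in the text and derives the gain in percentage points, together with the relative reduction in misclassified samples. The second quantity is an illustrative assumption introduced here, and the function name `accuracy_gain` is hypothetical.

```python
# Accuracies (%) quoted in the text: (proposed AS-HPOARA, existing method).
reported = {
    "DCGAN-GoogleNet [21]": (99.08, 94.33),
    "CGAN-DenseNet121 [22]": (98.7, 97.11),
    "RAHC_GAN [27]": (99.7, 98.1),
}


def accuracy_gain(proposed, baseline):
    """Return (gain in percentage points, relative error reduction in %).

    The relative error reduction is an illustrative measure added for this
    sketch; it is not a metric reported in the paper.
    """
    gain = proposed - baseline
    # Share of the baseline's misclassifications that the proposed method removes.
    error_reduction = 100.0 * gain / (100.0 - baseline)
    return round(gain, 2), round(error_reduction, 1)


for method, (proposed, baseline) in reported.items():
    gain, reduction = accuracy_gain(proposed, baseline)
    print(f"vs {method}: +{gain} points, {reduction}% fewer errors")
```

Expressing the difference relative to the baseline error rate makes comparisons near 100% accuracy easier to read, since a gain of 1.6 points over a 98.1% baseline removes most of the remaining errors.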

4.6. Discussion

This research compared the proposed approach with three existing techniques: DCGAN-GoogleNet [21], CGAN-DenseNet121 [22], and RAHC_GAN [27]. The existing DCGAN-GoogleNet [21] was analyzed using five classes (healthy tomato, tomato late blight, tomato septoria leaf spot, tomato target spot, and tomato yellow leaf curl virus), while for the existing CGAN-DenseNet121 [22], all ten classes obtained from the dataset were considered. The Automatic Segmentation and Hyper Parameter Optimization based Artificial Rabbits Algorithm (AS-HPOARA) was developed to improve the classification of plant leaf diseases. The proposed AS-HPOARA method was evaluated using the PlantVillage dataset. The images were normalized using the dataset’s mean and standard deviation via z-score normalization. The training images were balanced using three augmentation techniques: rotation, scaling, and translation. Image augmentation before classification reduced overfitting and increased the classification accuracy. The HPO-based ARA performed the classification to convert the images from one domain to another in a paired manner. The performance of AS-HPOARA was evaluated using accuracy, precision, recall, and F1 score.

From the result analysis, the accuracy of AS-HPOARA for case 1 was 99.08%, compared to 94.33% for the existing DCGAN-GoogleNet [21]. Traditional augmentation only modifies the location and orientation of an image, so little new information is learned and the improvement in accuracy is limited. The existing CGAN-DenseNet121 [22] obtained an accuracy of 97.11%, a precision of 97%, a recall of 97%, and an F1 score of 97%. In this case, the proposed AS-HPOARA achieved a better accuracy (98.7%), precision (98.52%), recall (98.58%), and F1 score (98.27%). The existing RAHC_GAN [27] obtained an accuracy of 98.1%, which was lower than the 99.7% accuracy achieved by the proposed AS-HPOARA.
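For reference, the four evaluation metrics named above can be derived from a multi-class confusion matrix. The following is a minimal sketch under stated assumptions: macro-averaging over classes is assumed (the averaging scheme is not specified in this section), the function name `classification_metrics` is hypothetical, and the two-class matrix is toy data rather than a result from this study.

```python
def classification_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] is the number of samples whose true class is i and
    whose predicted class is j. Macro-averaging is an assumption of this
    sketch, not a detail taken from the paper.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))

    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1_scores.append(f1)

    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


# Toy two-class confusion matrix (hypothetical values, for illustration only).
toy = [[8, 2],
       [1, 9]]
metrics = classification_metrics(toy)
print({name: round(value, 4) for name, value in metrics.items()})
```

With macro-averaging, every class contributes equally regardless of how many test images it has, which is one reasonable choice when the training set has been balanced by augmentation.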

5. Conclusions

Deep learning techniques play a vital role in the automated classification of leaf diseases. However, overfitting and insufficient training data complicate the current methods for detecting and classifying diseased leaves. The PlantVillage dataset was used to assess the proposed AS-HPOARA approach. Z-score normalization was performed using the dataset’s mean and standard deviation to normalize the images. Three augmentation techniques were employed in this study to balance the training images: rotation, scaling, and translation. Since Modified UNet uses more fully connected layers to better represent deeply buried features, it was considered for segmentation. In order to translate the images from one domain to another in a paired fashion and assess the uncertainty with the resulting images, the classification was completed using HPO-based ARA. According to the experimental findings, the proposed AS-HPOARA offered superior classification outcomes to the existing DCGAN-GoogleNet and CGAN-DenseNet121. With classification accuracies of 99.08% for case 1 and 98.7% for case 3, the proposed AS-HPOARA exceeded these earlier methods. The proposed AS-HPOARA also achieved an accuracy of 99.7%, while the existing RAHC_GAN achieved a lower accuracy of 98.1%. Additionally, investigating CNNs with non-linear feature extraction layers may be beneficial for further improving the results. In the future, this research will be extended by analyzing various meta-heuristic algorithms to improve the accuracy of leaf disease classification.

Author Contributions

All authors contributed equally to the conceptualization, formal analysis, investigation, methodology, and writing and editing of the original draft. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deanship of Scientific Research at King Khalid University through large group Research Project under grant number RGP.2/146/44.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The datasets used during the current study are available from the corresponding author on reasonable request.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through large group Research Project under grant number RGP.2/146/44.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Algani, Y.M.A.; Caro, O.J.M.; Bravo, L.M.R.; Kaur, C.; Al Ansari, M.S.; Bala, B.K. Leaf disease identification and classification using optimized deep learning. Meas. Sens. 2023, 25, 100643.

2. Gao, L.; Lin, X. Fully automatic segmentation method for medicinal plant leaf images in complex background. Comput. Electron. Agric. 2019, 164, 104924.

3. Zhou, J.; Fu, X.; Zhou, S.; Zhou, J.; Ye, H.; Nguyen, H.T. Automated segmentation of soybean plants from 3D point cloud using machine learning. Comput. Electron. Agric. 2019, 162, 143–153.

4. Yadav, S.; Sengar, N.; Singh, A.; Singh, A.; Dutta, M.K. Identification of disease using deep learning and evaluation of bacteriosis in peach leaf. Ecol. Inform. 2021, 61, 101247.

5. Andrushia, A.D.; Neebha, T.M.; Patricia, A.T.; Umadevi, S.; Anand, N.; Varshney, A. Image-based disease classification in grape leaves using convolutional capsule network. Soft Comput. 2022, 27, 1457–1470.

6. Zhang, K.; Wu, Q.; Chen, Y. Detecting soybean leaf disease from synthetic image using multi-feature fusion faster R-CNN. Comput. Electron. Agric. 2021, 183, 106064.

7. Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

8. Nanehkaran, Y.A.; Zhang, D.; Chen, J.; Tian, Y.; Al-Nabhan, N. Recognition of plant leaf diseases based on computer vision. J. Ambient. Intell. Humaniz. Comput. 2020.

9. Jiang, F.; Lu, Y.; Chen, Y.; Cai, D.; Li, G. Image recognition of four rice leaf diseases based on deep learning and support vector machine. Comput. Electron. Agric. 2020, 179, 105824.

10. Singh, V.; Misra, A. Detection of plant leaf diseases using image segmentation and soft computing techniques. Inf. Process. Agric. 2017, 4, 41–49.

11. Wang, C.; Du, P.; Wu, H.; Li, J.; Zhao, C.; Zhu, H. A cucumber leaf disease severity classification method based on the fusion of DeepLabV3+ and U-Net. Comput. Electron. Agric. 2021, 189, 106373.

12. Adeel, A.; Khan, M.A.; Sharif, M.; Azam, F.; Shah, J.H.; Umer, T.; Wan, S. Diagnosis and recognition of grape leaf diseases: An automated system based on a novel saliency approach and canonical correlation analysis based multiple features fusion. Sustain. Comput. Inform. Syst. 2019, 24, 100349.

13. Singh, V. Sunflower leaf diseases detection using image segmentation based on particle swarm optimization. Artif. Intell. Agric. 2019, 3, 62–68.

14. Mishra, M.; Choudhury, P.; Pati, B. Modified ride-NN optimizer for the IoT based plant disease detection. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 691–703.

15. Sunil, C.K.; Jaidhar, C.D.; Patil, N. Cardamom plant disease detection approach using EfficientNetV2. IEEE Access 2021, 10, 789–804.

16. Ngugi, L.C.; Abdelwahab, M.; Abo-Zahhad, M. Tomato leaf segmentation algorithms for mobile phone applications using deep learning. Comput. Electron. Agric. 2020, 178, 105788.

17. Sun, Y.; Jiang, Z.; Zhang, L.; Dong, W.; Rao, Y. SLIC_SVM based leaf diseases saliency map extraction of tea plant. Comput. Electron. Agric. 2019, 157, 102–109.

18. Ahmad, N.; Asif, H.M.S.; Saleem, G.; Younus, M.U.; Anwar, S.; Anjum, M.R. Leaf image-based plant disease identification using color and texture features. Wirel. Pers. Commun. 2021, 121, 1139–1168.

19. Khan, M.A.; Akram, T.; Sharif, M.; Javed, K.; Raza, M.; Saba, T. An automated system for cucumber leaf diseased spot detection and classification using improved saliency method and deep features selection. Multimedia Tools Appl. 2020, 79, 18627–18656.

20. Cap, Q.H.; Uga, H.; Kagiwada, S.; Iyatomi, H. Leafgan: An effective data augmentation method for practical plant disease diagnosis. IEEE Trans. Autom. Sci. Eng. 2020, 19, 1258–1267.

21. Wu, Q.; Chen, Y.; Meng, J. DCGAN-Based Data Augmentation for Tomato Leaf Disease Identification. IEEE Access 2020, 8, 98716–98728.

22. Abbas, A.; Jain, S.; Gour, M.; Vankudothu, S. Tomato plant disease detection using transfer learning with C-GAN synthetic images. Comput. Electron. Agric. 2021, 187, 106279.

23. Vallabhajosyula, S.; Sistla, V.; Kolli, V.K.K. Transfer learning-based deep ensemble neural network for plant leaf disease detection. J. Plant Dis. Prot. 2022, 129, 545–558.

24. Pandian, J.A.; Kumar, V.D.; Geman, O.; Hnatiuc, M.; Arif, M.; Kanchanadevi, K. Plant Disease Detection Using Deep Convolutional Neural Network. Appl. Sci. 2022, 12, 6982.

25. Zhou, C.; Zhang, Z.; Zhou, S.; Xing, J.; Wu, Q.; Song, J. Grape leaf spot identification under limited samples by fine grained-GAN. IEEE Access 2021, 9, 100480–100489.

26. Kianat, J.; Khan, M.A.; Sharif, M.; Akram, T.; Rehman, A.; Saba, T. A joint framework of feature reduction and robust feature selection for cucumber leaf diseases recognition. Optik 2021, 240, 166566.

27. Deng, H.; Luo, D.; Chang, Z.; Li, H.; Yang, X. RAHC_GAN: A Data Augmentation Method for Tomato Leaf Disease Recognition. Symmetry 2021, 13, 1597.

28. Mukhopadhyay, S.; Paul, M.; Pal, R.; De, D. Tea leaf disease detection using multi-objective image segmentation. Multimedia Tools Appl. 2021, 80, 753–771.

29. Jayaprakash, K.; Balamurugan, S.P. Artificial Rabbit Optimization with Improved Deep Learning Model for Plant Disease Classification. In Proceedings of the 2023 5th International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 23–25 January 2023; pp. 1109–1114.

30. Krishnamoorthy, N.; Prasad, L.N.; Kumar, C.P.; Subedi, B.; Abraha, H.B.; Sathishkumar, V.E. Rice leaf diseases prediction using deep neural networks with transfer learning. Environ. Res. 2021, 198, 111275.

31. Wang, H.; Wang, Z.; Du, M.; Yang, F.; Zhang, Z.; Ding, S.; Mardziel, P.; Hu, X. Score-CAM: Score-weighted visual explanations for convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 24–25.

32. Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using Deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

33. Trivedi, V.K.; Shukla, P.K.; Pandey, A. Process of Recognition of Plant Diseases by Using Hue Histogram, K-Means Clustering and Forward-Propagation Deep Neural Networks. In Proceedings of the Third Doctoral Symposium on Computational Intelligence: DoSCI 2022, Lucknow, India, 10 November 2022; Springer Nature: Singapore, 2022; pp. 11–26.

34. Trivedi, V.K.; Shukla, P.; Pandey, A. Plant leaves disease classification using bayesian regularization back propagation deep neural network. J. Phys. Conf. Ser. 2021, 1998, 012025.

35. Agarwal, M.; Gupta, S.K.; Biswas, K.K. Development of Efficient CNN model for Tomato crop disease identification. Sustain. Comput. Inform. Syst. 2020, 28, 100407.

36. Kalaivani, S.; Shantharajah, S.P.; Padma, T. Agricultural leaf blight disease segmentation using indices based histogram intensity segmentation approach. Multimedia Tools Appl. 2020, 79, 9145–9159.

37. Tassis, L.M.; de Souza, J.E.T.; Krohling, R.A. A deep learning approach combining instance and semantic segmentation to identify diseases and pests of coffee leaves from in-field images. Comput. Electron. Agric. 2021, 186, 106191.

38. Manjunath, R.; Kwadiki, K. Modified U-NET on CT images for automatic segmentation of liver and its tumor. Biomed. Eng. Adv. 2022, 4, 100043.

39. Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2022, 114, 105082.

40. Elshahed, M.; Tolba, M.A.; El-Rifaie, A.M.; Ginidi, A.; Shaheen, A.; Mohamed, S.A. An Artificial Rabbits’ Optimization to Allocate PVSTATCOM for Ancillary Service Provision in Distribution Systems. Mathematics 2023, 11, 339.

41. Kumar, V.S.; Jaganathan, M.; Viswanathan, A.; Umamaheswari, M.; Vignesh, J. Rice leaf disease detection based on bidirectional feature attention pyramid network with YOLO v5 model. Environ. Res. Commun. 2023, 5, 065014.

42. Upadhyay, S.K.; Kumar, A. A novel approach for rice plant diseases classification with deep convolutional neural network. Int. J. Inf. Technol. 2021, 14, 185–199.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).