Abstract

The Superb Fairy-wren Optimization Algorithm (SFOA) is a meta-heuristic algorithm inspired by the behavior of the superb fairy-wren. However, the conventional SFOA tends to converge to local optima and exhibits limited convergence accuracy when addressing complex optimization problems. To overcome these drawbacks, this study proposes an Improved Superb Fairy-wren Optimization Algorithm (ISFOA). The ISFOA incorporates four strategies—Chebyshev chaotic mapping, an adaptive weighting factor, Cauchy–Gaussian mutation, and t-distribution perturbation—to enhance the algorithm’s ability to balance global exploration and local exploitation. An ablation study using the CEC 2021 test suite was performed to evaluate the individual contribution of each strategy. Moreover, to comprehensively assess the performance of ISFOA, a comparative analysis was carried out against eight other meta-heuristic algorithms on both the CEC2005 and CEC2021 benchmark function sets. Additionally, the practical applicability of ISFOA was examined by comparing it with eight other optimization algorithms across seven engineering design problems. The comprehensive experimental results indicate that ISFOA outperforms the original SFOA and other compared algorithms in terms of robustness and convergence accuracy, thereby offering an efficient and reliable approach for solving complex optimization problems.

1. Introduction

The advance of global industrialization has given rise to increasingly large-scale and complex problems in areas such as industrial production, logistics management, and energy scheduling. Traditional optimization methods often face computational bottlenecks when addressing such complex, high-dimensional, nonlinear, and multi-constrained problems [1]. Metaheuristic optimization algorithms [2] are a class of intelligent optimization techniques that do not rely on specific mathematical properties of the objective function, such as differentiability or convexity. Owing to their strong global search capability and high adaptability, they have shown significant promise in solving diverse complex optimization problems [3] and are widely applied across many engineering and scientific fields.

In recent years, researchers have proposed numerous metaheuristic algorithms, including the Newton-Raphson-Based Optimizer (NRBO) [4], Artemisinin Optimization (AO) [5], Ivy Optimization Algorithm (IVY) [6], Tianji’s Horse Racing Optimization (THRO) [7], Projection-Iterative-Methods-based Optimizer (PIMO) [8], Holistic Swarm Optimization (HSO) [9], Artificial Lemming Algorithm (ALA) [10], Genetic Algorithm (GA) [11], Chaos Game Optimization (CGO) [12], Gradient-Based Optimizer (GBO) [13], Slime Mold Algorithm (SMA) [14], Marine Predators Algorithm (MPA) [15], Polar Lights Optimization (PLO) [16], Parrot Optimizer (PO) [17], Black-winged Kite Algorithm (BKA) [18], GOOSE algorithm (GOOSE) [19], Hippopotamus Optimization Algorithm (HO) [20], PID-based Search Algorithm (PIA) [21], Fully Informed Search Algorithm (FISA) [22], Sinh Cosh Optimizer (SCHO) [23], Enhanced Snake Optimizer Algorithm (ESOA) [24], and Tyrannosaurus Optimization Algorithm (TOA) [25], among many others. However, no universally applicable optimization algorithm that effectively solves all engineering and numerical optimization problems has yet been developed [26]. Researchers have therefore sought to improve existing metaheuristic algorithms by integrating specific strategies into variants that enhance performance, robustness, and adaptability to particular problem categories, which has considerably broadened the range of available metaheuristics. For example, Qiao et al. [27] proposed an Improved Red-Billed Blue Magpie Optimization (IRBMO) algorithm that integrates Logistic-Tent chaotic initialization, a dynamic balance factor, and dual-mode perturbation. These strategies enhanced population diversity, balanced the search capability, and alleviated the premature convergence and performance degradation of the original algorithm in high-dimensional optimization. Lv et al. [28] proposed a Multi-Strategy Improved Dung Beetle Optimizer (MIDBO) incorporating improved chaotic initialization, a nonlinear dynamic balance factor, and multi-population differential co-evolution, which enhanced population diversity, balanced exploration and exploitation, and mitigated the original algorithm’s tendency toward premature convergence and insufficient accuracy on complex optimization problems. Chen et al. [29] proposed the Butterfly Search and Triangular Walk Crested Porcupine Optimizer (BTCPO), which addressed the shortcomings of the original Crested Porcupine Optimizer in convergence speed and local exploitation accuracy, achieved a dynamic balance between exploration and exploitation, and showed significant advantages on both benchmark functions and engineering design problems.

The Superb Fairy-wren Optimization Algorithm (SFOA) is a metaheuristic inspired by the social behavior of the superb fairy-wren [30]. Its multi-phase search framework, modeled on these complex social behaviors, shows considerable potential for solving complex problems, but the original algorithm is constrained by several structural limitations. Its reliance on a fixed weighting factor prevents an adaptive balance between exploration and exploitation; its simple random population initialization often yields insufficient diversity; and its escape mechanism is inefficient on complex multimodal problems. Together, these shortcomings make the algorithm susceptible to local optima and reduce solution accuracy. This study therefore selects SFOA as a basis for enhancement, aiming to mitigate these structural weaknesses through targeted modifications and thereby develop a more robust and effective optimizer. To this end, this paper proposes an Improved Superb Fairy-wren Optimization Algorithm (ISFOA), which integrates four strategies (Chebyshev chaotic mapping, an adaptive weighting factor, Cauchy–Gaussian mutation, and t-distribution perturbation) to better balance global exploration and local exploitation. An ablation study on the CEC 2021 benchmark assesses the contribution of each strategy. ISFOA is further compared with eight other metaheuristic algorithms on the CEC 2005 and CEC 2021 benchmark suites, and its practical performance is examined on seven engineering design problems against the same eight optimizers.
The main contributions of this paper are as follows:

(1) The Improved Superb Fairy-wren Optimization Algorithm (ISFOA) is proposed, which integrates four strategies: Chebyshev chaotic mapping, an adaptive weighting factor, Cauchy–Gaussian mutation, and t-distribution perturbation.

(2) The Chebyshev chaotic mapping strategy is introduced to enhance the traversal and uniformity of the initial population distribution in the solution space.

(3) An adaptive weighting factor is designed to balance exploration and exploitation throughout the entire iterative process, which the fixed weight of the original SFOA cannot achieve.

(4) The Cauchy–Gaussian mutation strategy is incorporated to improve population diversity.

(5) The t-distribution perturbation strategy is further introduced to enhance the robustness and solution quality of ISFOA, as well as its ability to balance global exploration and local exploitation.

(6) Ablation and comparative experiments are conducted between ISFOA and several classical and newly proposed metaheuristic algorithms on different benchmark sets. Results demonstrate that ISFOA exhibits significant advantages in robustness, convergence accuracy, and other performance metrics.

(7) The effectiveness of ISFOA is also validated on real-world engineering problems.

The structure of this paper is as follows: Section 1 presents the introduction; Section 2 describes the Superb Fairy-wren Optimization Algorithm (SFOA); Section 3 details the improvement strategies applied to SFOA; Section 4 presents ablation and comparative experiments between ISFOA and other metaheuristic algorithms on different benchmark sets; Section 5 validates the performance of ISFOA on practical engineering problems; finally, conclusions and discussions are provided in Section 6.

2. Superb Fairy-Wren Optimization Algorithm

The Superb Fairy-wren Optimization Algorithm is a meta-heuristic algorithm that emulates the natural behaviors of the superb fairy-wren—including foraging, breeding, chick-rearing, and predator evasion—to search for optimal solutions to complex problems.

2.1. Population Initialization

Like other meta-heuristic optimization algorithms, SFOA initializes its population randomly:

$$x_{i,j} = lb_j + r \times (ub_j - lb_j)$$

where $x_{i,j}$ represents the position of the $i$-th individual in the $j$-th dimension; $ub_j$ and $lb_j$ denote the upper and lower bounds of the search space in that dimension; and $r$ denotes a random number within the range [0, 1].
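
For illustration, this random initialization takes only a few lines of Python. It is a minimal sketch rather than the authors' MATLAB code; the function name `random_init` and the per-dimension bound lists `lb` and `ub` are assumptions.

```python
import random

def random_init(n, lb, ub, seed=None):
    """Uniform random initialization: x_ij = lb_j + r * (ub_j - lb_j), with r ~ U[0, 1)."""
    rng = random.Random(seed)
    return [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(len(lb))]
            for _ in range(n)]

# Example: 30 individuals in the 10-dimensional box [-100, 100]^10
pop = random_init(30, [-100.0] * 10, [100.0] * 10, seed=1)
```

Passing a `seed` makes the population reproducible across independent runs, which is convenient when repeating the 30-run experiments.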

2.2. Young Bird Growth Stage

During the Young Bird Growth Stage, the position of each individual in the population is updated by simulating the growth dynamics of nestlings. SFOA models the process of individual position updates in the solution space: individuals continuously learn from extensive experience and rapidly adjust their positions throughout this growth stage.

where denotes the position of the i-th individual in the j-th dimension at time step t + 1; denotes the position of the i-th individual in the j-th dimension at time step t; denotes the proportional coefficient for juveniles.

2.3. Breeding and Feeding Stage

When environmental risk factors are low and the population is dominated by adult birds, the algorithm transitions into the Breeding and Feeding Stage. To assess the level of environmental danger, SFOA first calculates the environmental risk value using Equation (4), which simulates risk fluctuations. When the computed risk value s is sufficiently small (s < 20 in Algorithm 1), the environment is considered relatively safe, and the algorithm proceeds to the Breeding and Feeding Stage.

where denotes the position of the current optimal individual; denotes the weighting factor, which is set to 0.8 in the original algorithm; denotes the environmental danger factor; denotes the process of the gradual expansion of the activity range during the rotational tutoring among birds; denotes the current number of function evaluations; denotes the maximum number of function evaluations.

2.4. Avoiding Natural Enemies Stage

In the Avoiding Natural Enemies Stage, SFOA updates individual positions by simulating the defensive responses of prey to predation. When an individual is detected by a predator, it performs rapid displacements and high-frequency perturbations to evade tracking. At the same time, it emits warning signals that trigger adaptive responses from other members of the population. Together, these modeled behaviors form the basis of the position-update mechanism in this stage.

where denotes the random step size generated by Lévy flight; denotes the escape distance coordination factor.

3. Improved Superb Fairy-Wren Optimization Algorithm

3.1. Chebyshev Chaotic Map

To address the problem that the population initialization method in the standard SFOA tends to produce uneven population distribution and inadequate exploration of the solution space, this study employs a Chebyshev chaotic map to generate the initial population. This approach enhances the ergodicity and distribution uniformity of the population across the solution space, thereby improving the algorithm’s global exploration capability in the early search stages and increasing its computational efficiency. The working principle of the Chebyshev chaotic mapping strategy is described as follows:

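$$x_{t+1} = \cos\left(a \cdot \arccos(x_t)\right), \quad x_t \in [-1, 1]$$

where $a$ denotes the initialization coefficient (the order of the map); $x_t$ denotes the value of the map at the $t$-th iteration; and $x_{t+1}$ denotes its value at the $(t+1)$-th iteration.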
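
A minimal Python sketch of Chebyshev-map initialization follows. The value of the coefficient a, the way the chaotic values are mapped into the search bounds, and how each sequence is seeded are not stated here, so the sketch assumes a = 4, one chaotic sequence per dimension started from a random point in (−1, 1), and a linear map from [−1, 1] to [lb_j, ub_j]. All function names are hypothetical.

```python
import math
import random

def chebyshev_sequence(x0, a, length):
    """Iterate the Chebyshev map x_{t+1} = cos(a * arccos(x_t)); values stay in [-1, 1]."""
    seq = []
    x = x0
    for _ in range(length):
        x = math.cos(a * math.acos(x))
        seq.append(x)
    return seq

def chebyshev_init(n, lb, ub, a=4, seed=None):
    """Chaotic initialization (assumed scheme): one Chebyshev sequence per dimension,
    linearly mapped from [-1, 1] to [lb_j, ub_j]."""
    rng = random.Random(seed)
    dim = len(lb)
    pop = [[0.0] * dim for _ in range(n)]
    for j in range(dim):
        # Random start inside (-1, 1), away from the endpoints (x = 1 is a fixed point of the map).
        seq = chebyshev_sequence(rng.uniform(-0.99, 0.99), a, n)
        for i, c in enumerate(seq):
            pop[i][j] = lb[j] + (c + 1.0) / 2.0 * (ub[j] - lb[j])
    return pop
```

For a = 2 the map reduces to the polynomial 2x² − 1, so starting at 0.5 it produces −0.5 and then stays there, a quick sanity check of the iteration.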

3.2. Adaptive Weighting Factor

In the breeding and brooding phase of SFOA, the weighting coefficient C is a key parameter that controls the degree to which individuals learn from the optimal one. In the original SFOA, C is fixed at 0.8, which results in inflexible search behavior and disrupts the balance between exploration and exploitation. To overcome this limitation, ISFOA replaces it with an adaptive weight C(t) bounded within [0.1, 1]. Setting the upper bound $C_{max} = 1$ allows the algorithm to closely follow the global optimum in early iterations, facilitating broad exploration; setting the lower bound $C_{min} = 0.1$ ensures that, in later stages, the search shifts toward local refinement while preserving a baseline of global guidance. The weight decays nonlinearly from 1 to 0.1, driving a smooth adaptive search process. This modification not only better simulates biological behavior but also meets the optimization-theoretic requirement for a dynamic equilibrium between exploration and exploitation.

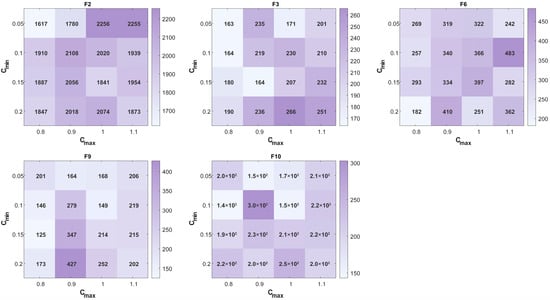

To assess the sensitivity of the adaptive weighting factor, we selected five representative functions (F2, F3, F6, F9, F10) from the CEC 2021 benchmark and tested 16 parameter combinations formed by varying the lower bound $C_{min}$ ∈ {0.05, 0.1, 0.15, 0.2} and the upper bound $C_{max}$ ∈ {0.8, 0.9, 1.0, 1.1}.

The results, visualized in a series of heatmaps (Figure 1), show that ISFOA maintains stable, high performance across diverse optimization problems within the tested parameter range. Specifically, a contiguous region of darker shading, indicating better performance, surrounds the chosen setting $(C_{min}, C_{max}) = (0.1, 1.0)$. This pattern suggests that performance remains robust under slight parameter variations, i.e., it has low sensitivity around this configuration.

The adaptive weighting factor operates on the following principle:

where $C_{min}$ denotes the minimum value of the weight, set to 0.1 in this study; $C_{max}$ denotes the maximum value of the weight, set to 1 in this study; $t$ denotes the current time step; and $T$ denotes the maximum number of time steps.
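
The exact nonlinear form of the weight update (Equation (9) in Algorithm 1) is not reproduced in this section, so the sketch below substitutes an assumed quadratic decay that satisfies the stated endpoints, C = C_max at t = 0 and C = C_min at t = T. It is a stand-in for illustration, not the paper's formula. It also enumerates the 16 (C_min, C_max) combinations of the sensitivity study described above; the names `adaptive_weight` and `GRID` are hypothetical.

```python
from itertools import product

def adaptive_weight(t, T, c_min=0.1, c_max=1.0):
    """Hypothetical nonlinear decay from c_max (t = 0) to c_min (t = T).
    The quadratic shape is an assumption, not the paper's Equation (9)."""
    frac = min(max(t / T, 0.0), 1.0)  # clamp progress to [0, 1]
    return c_min + (c_max - c_min) * (1.0 - frac) ** 2

# The 16 (C_min, C_max) combinations examined in the sensitivity study.
GRID = list(product([0.05, 0.1, 0.15, 0.2], [0.8, 0.9, 1.0, 1.1]))
```

Because the decay is quadratic, the weight drops quickly in early iterations and flattens near C_min; other monotone schedules with the same endpoints would fit the description equally well.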

3.3. Cauchy–Gaussian Mutation

Population diversity is a critical factor influencing the performance of optimization algorithms. During the search process, its decline often leads to premature convergence to local optima. To address this, the paper introduces a Cauchy–Gaussian hybrid mutation strategy to perturb individuals. This approach combines the heavy-tailed properties of the Cauchy distribution, which facilitate escaping local optima, with the local search properties of the Gaussian distribution, thereby effectively maintaining population diversity and promoting global convergence. The principle of the Cauchy–Gaussian mutation strategy is described as follows:

where denotes the Cauchy–Gaussian mutated individual; ; ; denotes the standard deviation; denotes a random variable following the Cauchy distribution; denotes a random variable following the Gaussian distribution.
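
The sketch below shows one widely used form of Cauchy–Gaussian hybrid mutation applied to the best individual. The exact coefficients of the paper's equation are not given in this section, so the sketch assumes λ1 = 1 − (t/T)² for the Cauchy term, λ2 = (t/T)² for the Gaussian term, a fixed σ, and greedy replacement of the best individual only when the mutant improves fitness. These choices and all names are assumptions.

```python
import math
import random

def cauchy_gaussian_mutation(best, t, T, sigma=1.0, rng=random):
    """Assumed form: u_j = b_j * (1 + lam1 * Cauchy(0, sigma) + lam2 * N(0, sigma)),
    with lam1 = 1 - (t/T)^2 (Cauchy-dominated early) and lam2 = (t/T)^2 (Gaussian-dominated late)."""
    lam2 = (t / T) ** 2
    lam1 = 1.0 - lam2
    mutant = []
    for b in best:
        cauchy = sigma * math.tan(math.pi * (rng.random() - 0.5))  # Cauchy draw with scale sigma
        gauss = rng.gauss(0.0, sigma)                               # Gaussian draw with std sigma
        mutant.append(b * (1.0 + lam1 * cauchy + lam2 * gauss))
    return mutant

def greedy_replace(best, f_best, objective, t, T, sigma=1.0, rng=random):
    """Keep the mutant only if it lowers the objective (minimization)."""
    candidate = cauchy_gaussian_mutation(best, t, T, sigma, rng)
    f_candidate = objective(candidate)
    if f_candidate < f_best:
        return candidate, f_candidate
    return best, f_best
```

Early on, the heavy-tailed Cauchy term dominates and occasionally produces large jumps; as t approaches T, the Gaussian term takes over and the perturbations concentrate near the current best.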

Figure 1.

Heat map of adaptive weighting factor.


3.4. T-Distribution Perturbation

To enhance the robustness, solution quality, and exploration–exploitation balance of the SFOA, a t-distribution perturbation strategy is introduced following the individual position update. This strategy integrates the strengths of both Gaussian and Cauchy mutations, promoting a dynamic search trade-off and alleviating the tendency to converge prematurely or stagnate in local optima during later iterations. Specifically, the iteration number serves as the degrees of freedom of the t-distribution, moderating the magnitude of perturbation. In early iterations, the perturbation resembles a Cauchy distribution, promoting global exploration; in later stages, it approximates a Gaussian distribution, favoring local refinement. This design effectively prevents premature convergence and enhances the overall search efficiency of the algorithm.

The t-distribution perturbation strategy operates as follows:

where denotes the t-distribution with degrees of freedom being the number of iterations.
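
A minimal sketch of t-distribution perturbation, assuming the common form x′ = x + x · t(iter) with the iteration counter as the degrees of freedom; the update form, the function names, and the absence of a selection step are assumptions. Student-t samples use the standard construction Z / sqrt(V/ν), where Z is standard normal and V is chi-square with ν degrees of freedom (drawn here as a Gamma variate with shape ν/2 and scale 2).

```python
import math
import random

def student_t(df, rng=random):
    """Draw from Student's t with df degrees of freedom as Z / sqrt(V / df), V ~ chi2(df)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2.0, 2.0)  # chi-square(df) == Gamma(shape=df/2, scale=2)
    return z / math.sqrt(v / df)

def t_perturb(x, iteration, rng=random):
    """Assumed update x'_j = x_j + x_j * t(iteration); iteration must be >= 1."""
    return [xj + xj * student_t(iteration, rng) for xj in x]
```

At iteration 1 the draw is standard Cauchy (heavy-tailed), and as the iteration count grows it approaches a standard normal, which matches the exploration-to-exploitation shift described in this section.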

This study proposes a multi-strategy enhancement of the Superb Fairy-wren Optimization Algorithm (SFOA) designed to address specific limitations at distinct stages of the search process. The core improvement lies not in a simple combination of four strategies but in a temporally structured, functionally complementary framework in which the strategies interact synergistically. The framework integrates: (1) a Chebyshev chaotic map that generates a uniformly distributed initial population, establishing a robust foundation for global exploration; (2) an adaptive weighting factor that dynamically regulates the trade-off between exploration and exploitation throughout the iterative process; (3) a Cauchy–Gaussian hybrid mutation introduced in the mid-phase, which combines the global exploration capability of the Cauchy operator with the local refinement strength of the Gaussian operator to sustain population diversity and counteract premature convergence; and (4) a t-distribution perturbation operator applied in later iterations, which provides a smooth transition from the exploration-oriented Cauchy pattern to the local-focused Gaussian pattern, thereby enhancing the quality and robustness of the final convergence. The systematic ablation experiments support the effectiveness of this coordinated, phased combination: the complete ISFOA outperforms every variant lacking a single component, providing evidence for the rationale and practical utility of the approach in improving convergence accuracy, speed, and stability together.

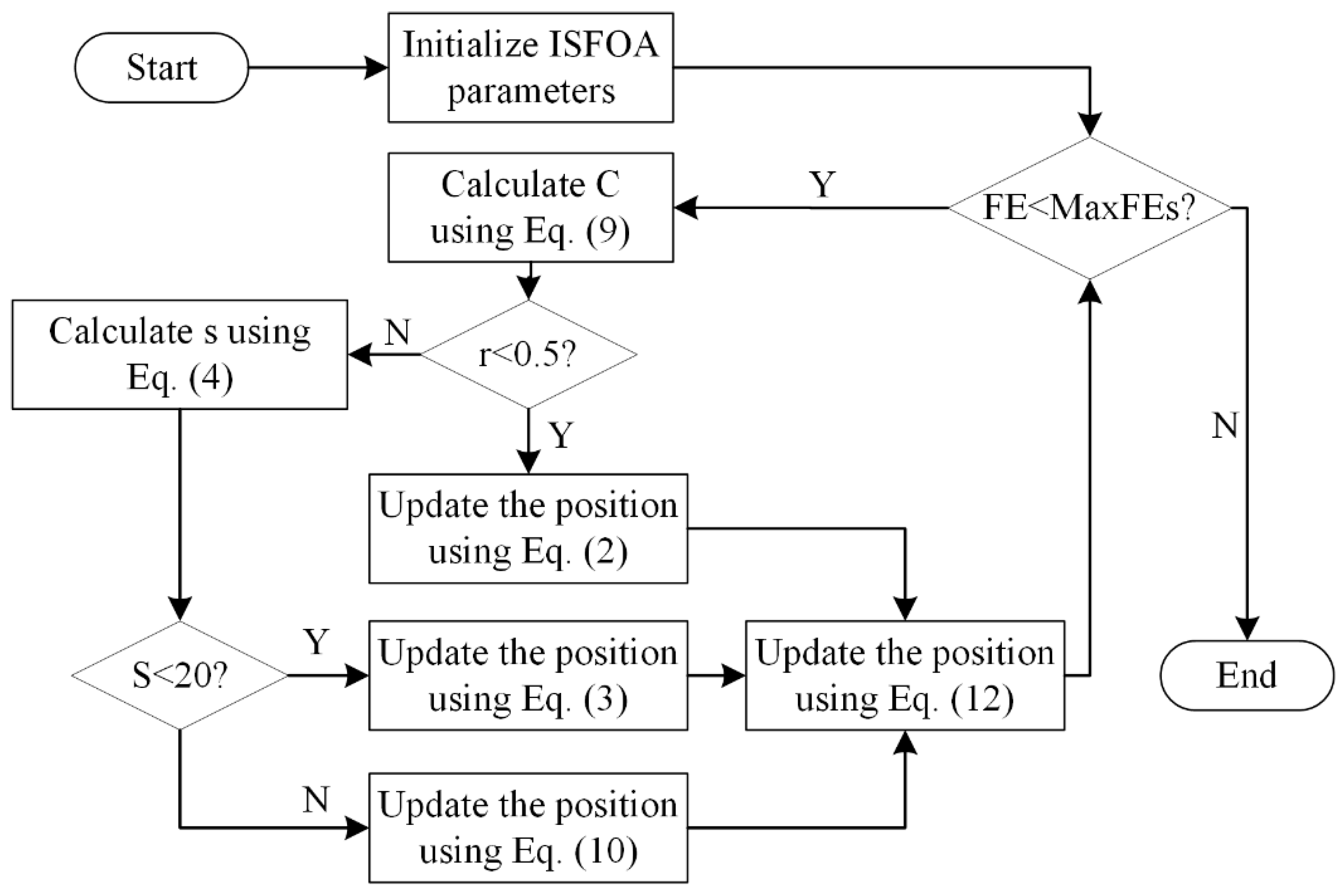

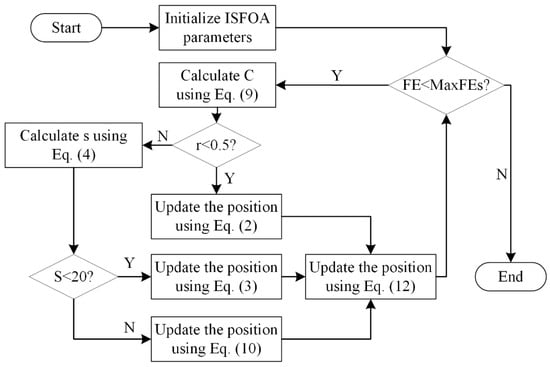

3.5. The Flowchart for ISFOA

To show the steps of ISFOA more clearly, its pseudocode and flowchart are given in Algorithm 1 and Figure 2, respectively.

| Algorithm 1 Pseudocode of ISFOA |
|---|
| 1. Input: number of candidate solutions N, dimension D, maximum number of fitness evaluations MaxFEs |
| 2. Output: the best candidate solution |
| 3. Generate the initial population using Equation (8) |
| 4. While FEs < MaxFEs |
| 5. Calculate C using Equation (9) |
| 6. For i = 1:N |
| 7. If r > 0.5 |
| 8. Update the position using Equation (2) |
| 9. Else |
| 10. Calculate s using Equation (4) |
| 11. If s < 20 |
| 12. Update the position using Equation (3) |
| 13. Else |
| 14. Update the position using Equation (10) |
| 15. End if |
| 16. End if |
| 17. End for |
| 18. Evaluate the fitness of each candidate solution |
| 19. FEs = FEs + N |
| 20. End while |
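
The control flow of Algorithm 1 can be sketched in Python as below. Equations (2)–(4) and (8)–(10) are not reproduced in this section, so every update rule is passed in as a callable; these parameter names and signatures are hypothetical. The sketch also evaluates the initial population once and counts those evaluations toward the budget, and it tracks the best-so-far solution after each evaluation step, neither of which Algorithm 1 spells out. The Cauchy–Gaussian mutation and t-distribution perturbation would be applied after the position-update loop; they are omitted because Algorithm 1 as listed does not place them.

```python
import random

def isfoa_skeleton(objective, init_population, weight, young_update,
                   breeding_update, escape_update, risk_value, max_fes, rng=random):
    """Control flow of Algorithm 1; all update rules are supplied as callables."""
    pop = init_population()                       # step 3 (Equation (8))
    fit = [objective(x) for x in pop]
    fes = len(pop)                                # assumption: initial evaluations count
    best_i = min(range(len(pop)), key=lambda k: fit[k])
    best, best_f = list(pop[best_i]), fit[best_i]
    while fes < max_fes:                          # step 4
        c = weight(fes, max_fes)                  # step 5 (Equation (9))
        for i in range(len(pop)):                 # step 6
            if rng.random() > 0.5:                # step 7
                pop[i] = young_update(pop[i])     # step 8 (Equation (2))
            else:
                s = risk_value(fes, max_fes)      # step 10 (Equation (4))
                if s < 20:                        # step 11
                    pop[i] = breeding_update(pop[i], best, c)  # step 12 (Equation (3))
                else:
                    pop[i] = escape_update(pop[i], best)       # step 14 (Equation (10))
        fit = [objective(x) for x in pop]         # step 18
        fes += len(pop)                           # step 19
        for x, f in zip(pop, fit):                # keep the best-so-far solution
            if f < best_f:
                best, best_f = list(x), f
    return best, best_f
```

With toy operators (for example, halving a position as the "young" update and moving halfway toward the best as the "breeding" update) the skeleton runs end to end on a sphere function, which is enough to check the branching and the evaluation budget.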

Figure 2.

The flowchart of the Improved Superb Fairy-wren Optimization Algorithm.

3.6. The Time Complexity of ISFOA

For an optimization problem with population size N and dimension D, given a maximum iteration count T, the overall time complexity of the original SFOA is O(N × D × T). ISFOA incorporates four enhancement strategies. First, the Chebyshev chaotic map replaces random initialization; it requires O(N × D) operations only once and therefore contributes negligibly to the per-iteration complexity. Second, the adaptive weighting strategy dynamically updates the weight C during the breeding and brooding stage, adding about 4 × N × D operations per iteration. Third, the Cauchy–Gaussian mutation perturbs the best individual, introducing roughly 5 × D operations per iteration. Finally, the t-distribution perturbation acts on the whole population after the position updates, adding about 2 × N × D operations per iteration.

Overall, the time complexity of ISFOA remains O(N × D × T): the added strategies increase per-iteration computation only by constant factors and do not change the asymptotic order. In the experiments reported in Section 4, ISFOA typically reaches better solutions in fewer iterations than SFOA and the other compared algorithms, which suggests that the gains in convergence speed and solution quality outweigh this overhead and that ISFOA achieves a favorable trade-off between computational cost and performance on complex problems.
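
Collecting the operation counts above into a single expression makes the constant-factor argument explicit. Here $c_0$ is a placeholder constant for the per-individual, per-dimension work of the original SFOA updates (an assumption; its value is not given), and the cost of fitness evaluations is ignored, as in the analysis above. Since $5D \le 5ND$ for $N \ge 1$:

$$
\text{Cost}_{\text{ISFOA}} \approx \underbrace{N D}_{\text{Chebyshev init}} + T\left(c_0 N D + 4 N D + 5 D + 2 N D\right) \le N D + (c_0 + 11)\, N D\, T = O(N \times D \times T)
$$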

4. Performance Evaluation of the Improved Superb Fairy-Wren Optimization Algorithm

All experiments in this section were conducted on a conventional personal computer running MATLAB R2023a, configured with a Windows 11 (64-bit) OS, an Intel(R) Core(TM) i7-6700HQ CPU, and 24 GB of RAM. The parameters of the comparison algorithms are shown in Table 1.

Table 1.

Parameters of the comparison algorithms.

4.1. Test Function Configuration

To evaluate the performance of ISFOA, ablation studies and comparative experiments were conducted using benchmark functions from the CEC2005 [31] and CEC2021 [32] test suites, as detailed in Table 2 and Table 3. The CEC2005 benchmark comprises 23 functions, categorized into unimodal (F1–F5), multimodal (F6–F13), and fixed-dimensional multimodal (F14–F23) types, which together assess convergence accuracy, convergence speed, and the ability to avoid local optima. The CEC2021 benchmark includes 10 functions, divided into basic (F1–F6), hybrid (F7–F8), and composite (F9–F10) categories, which further evaluate the algorithm’s robustness and global search capability in complex solution spaces.

Table 2.

Test functions of CEC2005.

Table 3.

Test functions of CEC2021.

4.2. Ablation Experiment

The experimental settings in the ablation study are consistent with those in the comparative experiments: the population size is set to N = 30, the maximum number of iterations is 1000, and each algorithm is independently run 30 times to obtain statistical results.

To assess the individual contributions of the four strategies to the overall performance of ISFOA, an ablation study was conducted using the CEC 2021 benchmark functions; the variants are listed in Table 4. ISFOA1 omits the Chebyshev chaotic map, reverting to random initialization; ISFOA2 removes the adaptive weighting factor, employing a fixed weight (C = 0.8); ISFOA3 excludes the Cauchy–Gaussian mutation strategy; and ISFOA4 omits the t-distribution perturbation strategy. The results in Table 5 isolate the effect of each core strategy. They show that the t-distribution perturbation contributes most to the algorithm’s ability to explore complex search spaces; the adaptive weighting factor plays a key role in multimodal optimization; the Cauchy–Gaussian mutation effectively maintains population diversity; and the Chebyshev chaotic map yields a more uniform initial population distribution. The complete ISFOA outperforms all ablation variants in convergence accuracy, stability, and robustness, confirming the effectiveness of the multi-strategy cooperative design.

Table 4.

Various SFOA variants with different strategies.

Table 5.

Results of the ablation experiment.

Furthermore, we conducted the Wilcoxon rank-sum test on ISFOA and its variants, as shown in Table 6. The Wilcoxon rank-sum test is a nonparametric statistical test used to determine whether two independent samples differ significantly. The significance level was set at 0.05: a p-value above 0.05 indicates no statistically significant difference, whereas a p-value below 0.05 indicates a significant performance difference. In this table, the symbols “+”, “−”, and “=” represent cases where ISFOA performs significantly better, significantly worse, or shows no significant difference, respectively.
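
The “+/−/=” labeling can be reproduced with a two-sided Wilcoxon rank-sum test. The sketch below is a self-contained version that uses the normal approximation with a tie correction (ties are common here because several algorithms reach the optimum exactly); in practice a library routine such as MATLAB's `ranksum` would normally be used. Deciding the direction of a significant difference from the sign of the rank statistic (smaller values are better, as in minimization) is an assumption; the paper may instead compare mean values. The function names are hypothetical.

```python
import math

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test, normal approximation with tie correction.
    Returns (z, p); z < 0 means sample `a` tends to have smaller values than `b`."""
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0            # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n + 1) / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0.0:                                # every value tied: no evidence of a difference
        return 0.0, 1.0
    z = (r1 - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))        # two-sided p-value
    return z, p

def compare(isfoa_runs, other_runs, alpha=0.05):
    """'+' if ISFOA is significantly better (smaller values), '-' if significantly worse, '=' otherwise."""
    z, p = rank_sum_test(isfoa_runs, other_runs)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"
```

For example, if both algorithms reach the optimum 0 in all 30 runs, every value is tied and `compare` returns "=", which is how a "no significant difference" entry arises on functions where several methods converge exactly.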

Table 6.

Results of the Wilcoxon rank-sum test for the ISFOA ablation experiment.

The results indicate that all four enhancement strategies integrated into the ISFOA positively impact the performance of the original SFOA, though their scope and degree of significance vary. Specifically, the Chebyshev chaotic mapping strategy performs comparably to the original random initialization on most test functions, yet it demonstrates distinct advantages on the composite function F10, providing a better initial population distribution for handling complex problems. The adaptive weighting factor shows widespread and consistent performance gains, leading to significant improvements across all test functions. The Cauchy–Gaussian mutation strategy provides clear and targeted enhancements for optimizing hybrid and composite functions. The t-distribution perturbation strategy yields significant performance improvements on all functions.

4.3. Comparative Experimental Study on the CEC 2005

To evaluate the optimization performance of ISFOA, comparative experiments were conducted on the CEC 2005 benchmark test suite. The algorithms selected for comparison are SFOA, Particle Swarm Optimization (PSO) [33], Grey Wolf Optimizer (GWO) [34], Sparrow Search Algorithm (SSA) [35], Rime Optimization Algorithm (RIME) [36], Coati Optimization Algorithm (COA) [37], Subtraction-Average-Based Optimizer (SABO) [38], and Dung Beetle Optimizer (DBO) [39], eight established metaheuristic optimizers. The results show that the multi-strategy ISFOA significantly outperforms the original SFOA and the other mainstream algorithms in solution accuracy, convergence speed, and robustness on this benchmark.

The numerical results for the CEC 2005 functions are presented in Table 7. A synthesis of outcomes from both the CEC 2005 and CEC 2021 suites allows a performance analysis along two core dimensions: solution accuracy and robustness. In terms of solution accuracy, ISFOA shows a marked advantage on the vast majority of test functions. After 30 independent runs, its best, average, and median values consistently reach or closely approach the theoretical global optimum across unimodal, multimodal, and fixed-dimensional functions. Particularly on high-dimensional or highly multimodal complex functions, ISFOA reliably finds solutions of superior quality, with errors often orders of magnitude lower than those of other algorithms. Regarding algorithm robustness, ISFOA exhibits strong stability. The standard deviation of its results is generally very low, reaching zero for some functions, which indicates minimal sensitivity to the randomness of initialization and reliable convergence in every trial. In contrast, the performance of the other compared algorithms fluctuates more considerably. Their best and average values deviate significantly from the theoretical optimum, accompanied by higher standard deviations, reflecting less stable performance and lower success rates. The high accuracy and robustness of ISFOA validate that the proposed multi-strategy enhancements effectively improve its overall capability to handle diverse search landscapes.
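
The statistics discussed above (best, average, median, and standard deviation over 30 independent runs) can be computed as follows. The sketch assumes minimization and the sample (n − 1) standard deviation, which is also MATLAB's default for `std`; the function name is hypothetical.

```python
import statistics

def summarize(final_values):
    """Best, mean, median and sample standard deviation of final objective values (minimization)."""
    return {
        "best": min(final_values),
        "mean": statistics.mean(final_values),
        "median": statistics.median(final_values),
        "std": statistics.stdev(final_values),  # n - 1 normalization
    }
```

A standard deviation of exactly zero, as reported for some functions, simply means that all 30 runs returned the same final value.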

Table 7.

Results on the CEC2005 test functions.

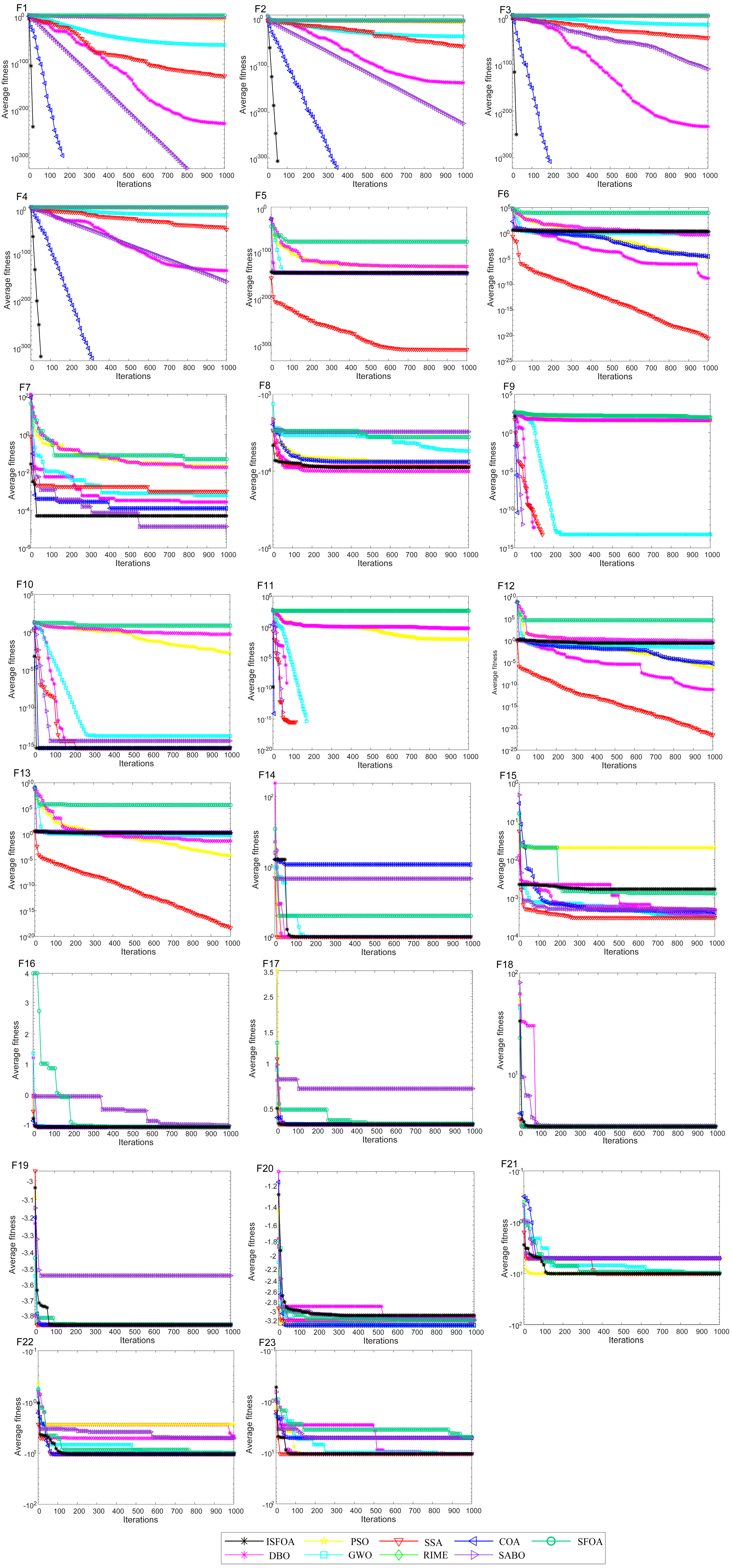

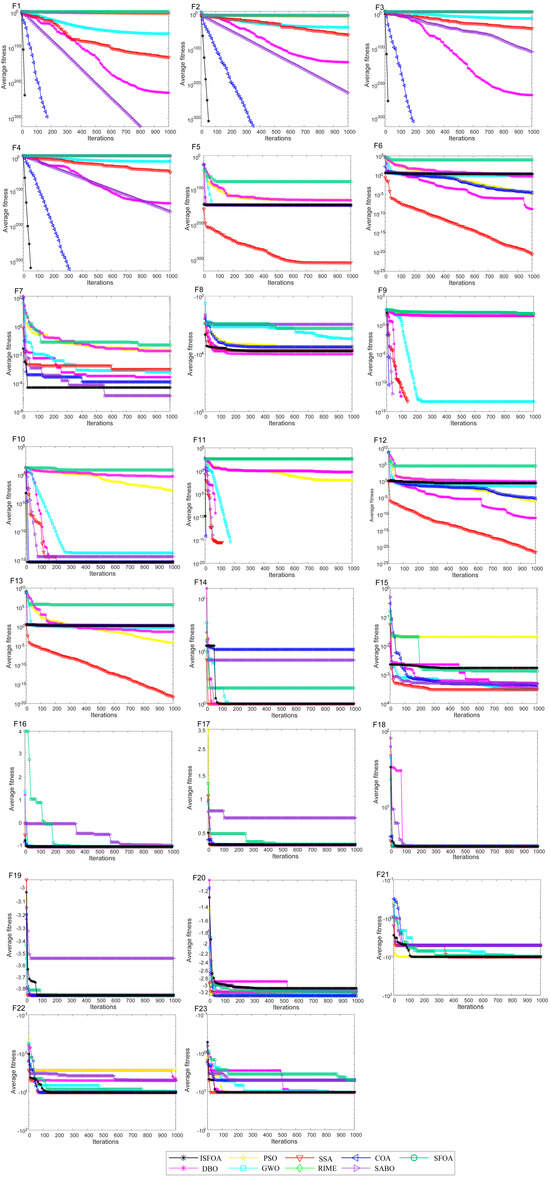

The convergence curves on the CEC 2005 test set are shown in Figure 3. Compared to SFOA, PSO, GWO, SSA, RIME, COA, SABO, and DBO, ISFOA demonstrates superior convergence behavior on most functions. On unimodal functions, its convergence curve descends steeply from the early iterations and stabilizes rapidly near the theoretical optimum, verifying the efficiency of its improved initialization and adaptive mechanisms in simpler search spaces. On multimodal functions, ISFOA’s curve decreases steadily in a “step-wise” manner, effectively escaping local optima. In contrast, algorithms like SFOA and PSO often stagnate prematurely. On fixed-dimensional multimodal functions, ISFOA also converges quickly and stably, with a smooth curve and final accuracy markedly better than that of other algorithms, some of which exhibit slow convergence, oscillatory behavior, or premature stagnation.

Figure 3.

Convergence curves on CEC2005.

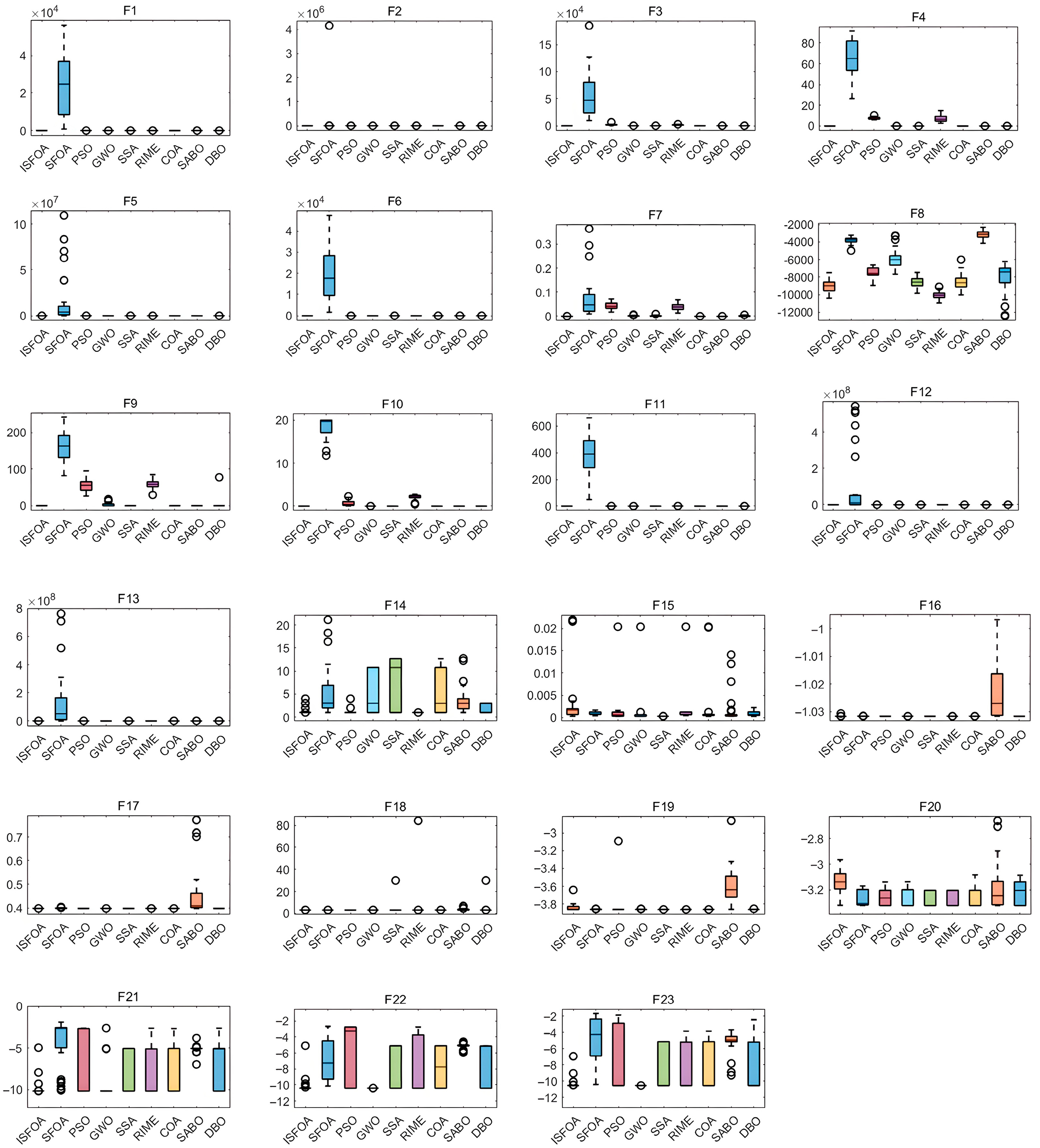

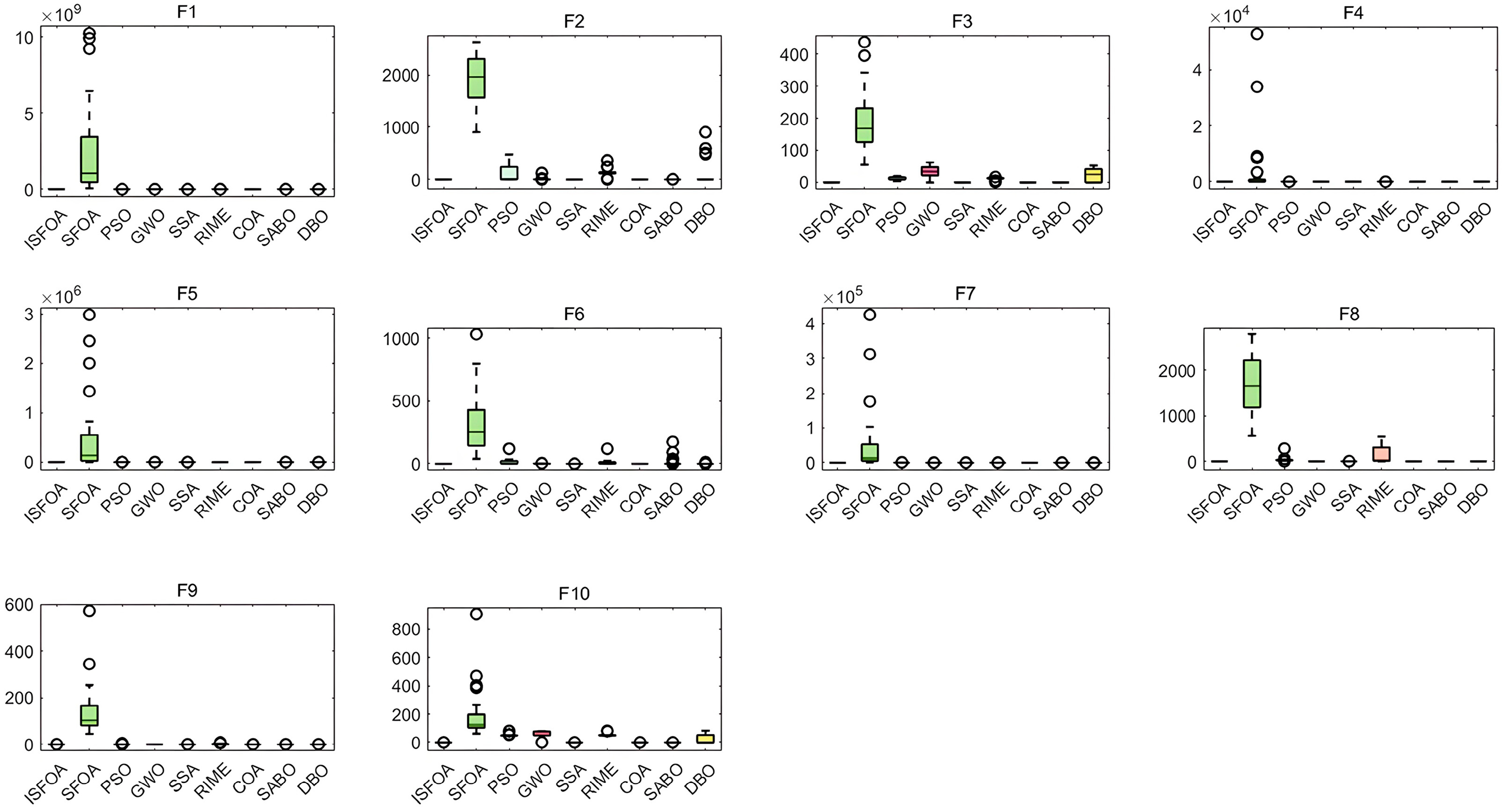

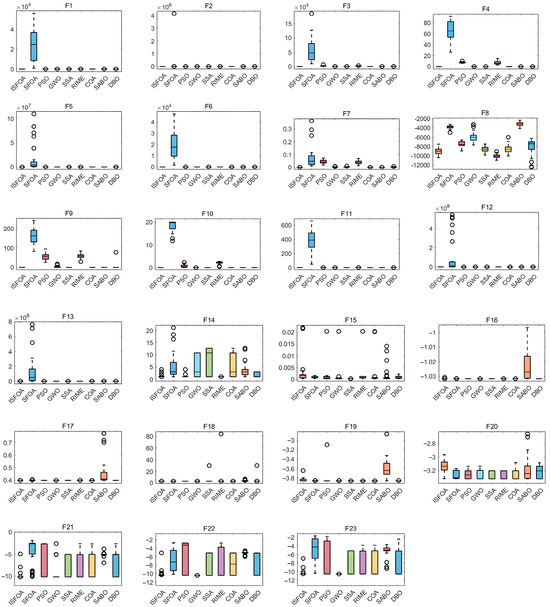

The box plots for the CEC 2005 test set are shown in Figure 4, summarizing the stability and distribution of result quality for each algorithm over 30 independent runs. ISFOA’s box plots show a clear advantage on most functions. For functions where the theoretical optimum is attainable, ISFOA’s box collapses to a single line at the optimum with no outliers, indicating that every run converged to the optimum. For more challenging functions, its box is typically the shortest and positioned closest to the optimum. In contrast, the box plots of the original SFOA and the other algorithms commonly display elongated boxes, medians far from the optimum, and numerous outliers, reflecting high result variability, unstable performance, and sensitivity to initial conditions or random factors.

Figure 4.

Box plots on CEC2005.

Table 8 presents the results of the Wilcoxon rank-sum test (significance level α = 0.05), where the symbols “+”, “−”, and “=” denote that ISFOA performs significantly better, significantly worse, or not significantly different, compared to each algorithm, respectively.

Table 8.

Results of the Wilcoxon rank-sum test for ISFOA on the CEC2005.

The results show that ISFOA achieves a statistically significant advantage over PSO, GWO, SSA, and RIME on all 23 test functions. Against SABO and DBO, ISFOA also demonstrates strong competitiveness, securing a significant advantage in 22 out of 23 comparisons. When compared with COA, ISFOA performs significantly better on 16 functions, with no significant difference observed on the remaining 7.

In summary, across all benchmark comparisons, the number of cases in which ISFOA holds a significant advantage far exceeds those with no significant difference. This statistically confirms that ISFOA achieves an overall performance improvement in terms of solution accuracy, robustness, and convergence, demonstrating clear comprehensive advantages over the state-of-the-art algorithms included in this study.

4.4. Comparative Experimental Study on the CEC 2021

The results for the CEC 2021 test functions are presented in Table 9. ISFOA demonstrates significant advantages in solution accuracy, stability, and robustness.

Table 9.

Results on the CEC2021 test functions.

On the basic functions (F1–F6), ISFOA consistently attains the theoretical optimum of 0, indicating precise convergence to the global optimum in all 30 independent runs. In contrast, algorithms such as SFOA and PSO produce results that are several orders of magnitude worse, highlighting ISFOA’s superior accuracy and reliability on these functions. On the hybrid functions (F7–F8), ISFOA maintains an extremely high convergence success rate, with both its best and mean values at zero and a near-zero standard deviation, demonstrating its efficacy on complex, multi-modal landscapes. On the composite functions (F9–F10), ISFOA remains stable and consistently locates the theoretical optimum, whereas other algorithms exhibit performance degradation, with some failing to approach the optimal solution effectively. This further confirms ISFOA’s strong global exploration capability and its ability to escape local optima.

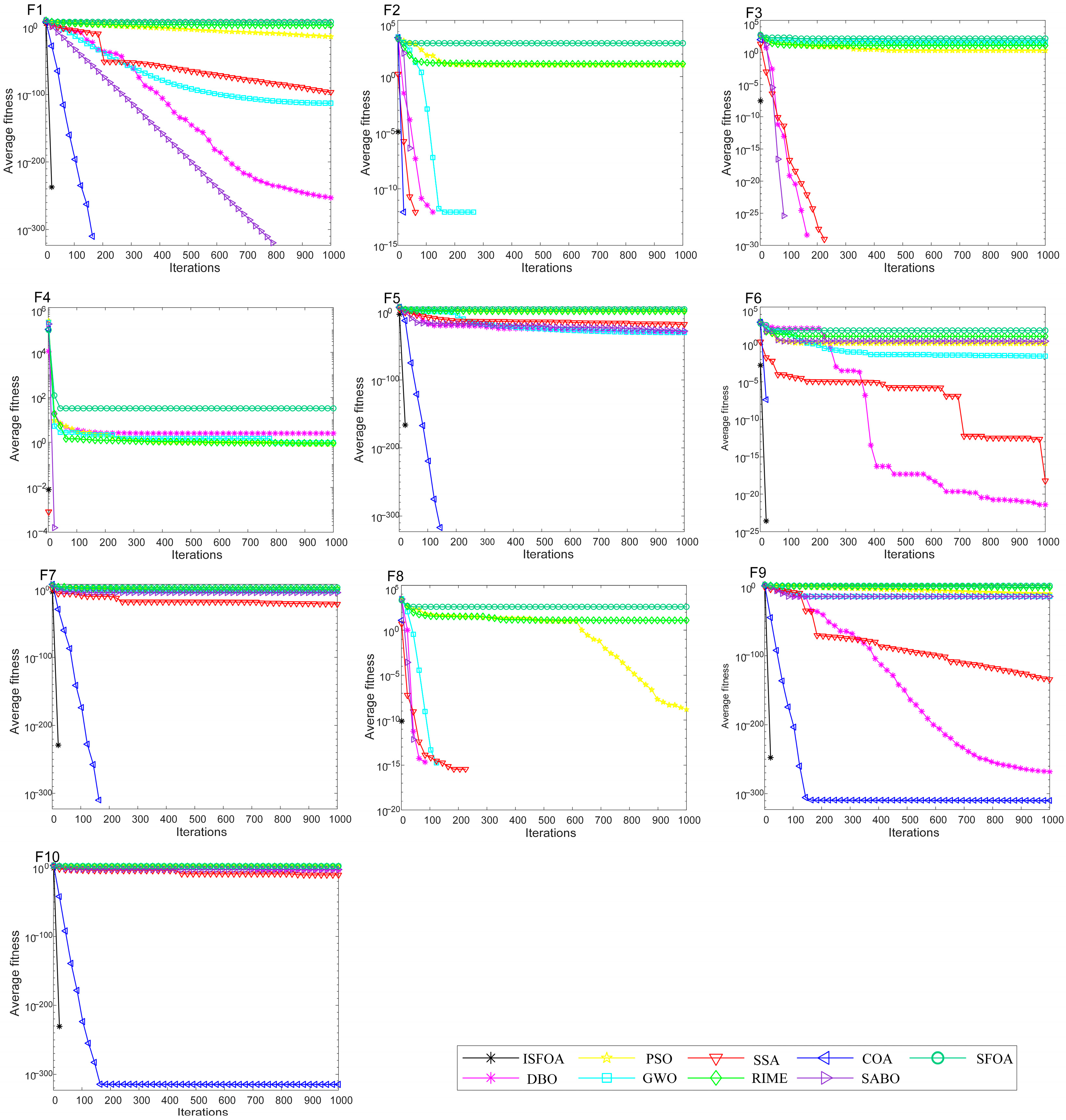

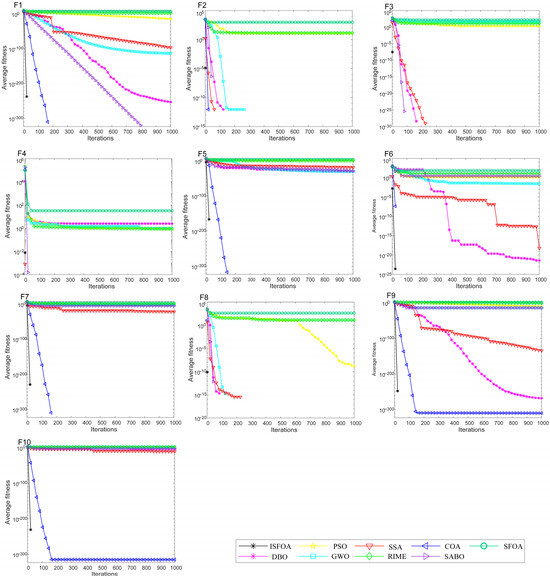

The convergence curves for CEC 2021 are shown in Figure 5. They visually illustrate ISFOA’s significant advantage, with convergence behavior adapting to function complexity. On basic functions, ISFOA’s curve descends steeply and stabilizes rapidly at the optimum without oscillation. On hybrid functions, the curves show excellent adaptability across search regions, converging smoothly without the plateaus or fluctuations seen in other algorithms. On the most challenging composite functions, ISFOA exhibits a steady, step-wise decline, consistently escaping local optima. In contrast, comparison algorithms such as SFOA and PSO often stagnate prematurely. This demonstrates the effectiveness of ISFOA in maintaining population diversity and ensuring global convergence.

Figure 5.

Convergence curves on CEC2021.

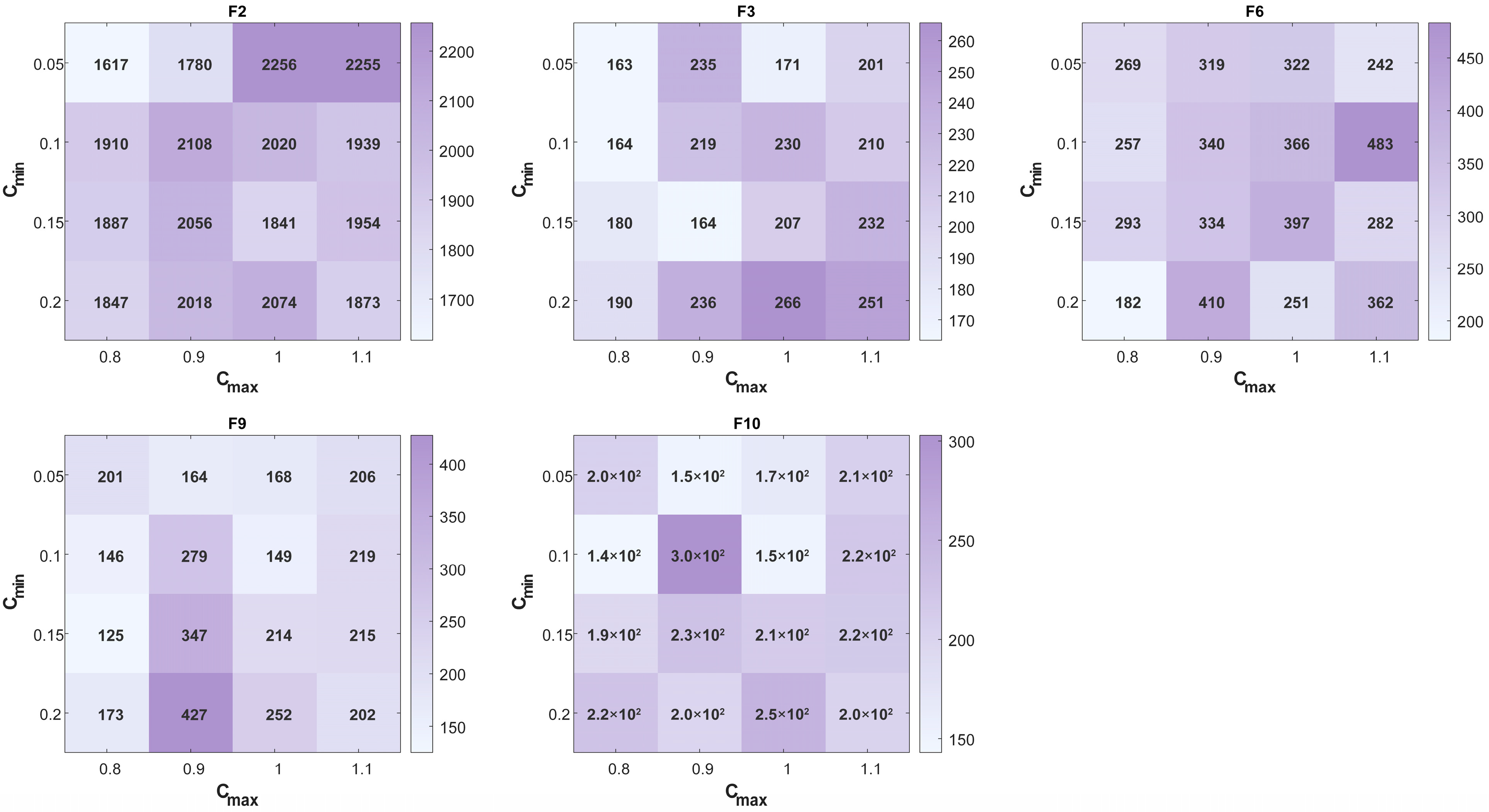

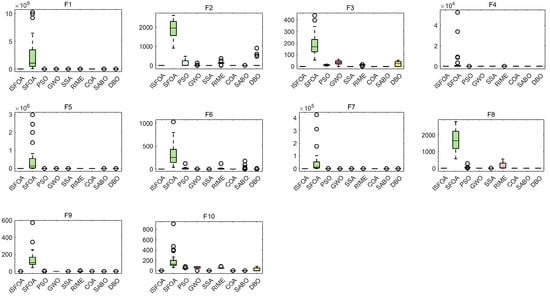

The box plots for CEC 2021 are shown in Figure 6 and further confirm ISFOA’s stability and robustness. Across all ten functions, ISFOA’s box plots compare favorably with those of the other algorithms. On functions where the theoretical optimum is attained, ISFOA’s box collapses to a single line at the optimum with no outliers. Even on the composition functions, where other algorithms struggle, ISFOA’s box is the shortest and its median is closest to the optimum, indicating highly concentrated, high-quality solutions. The other algorithms, by contrast, typically exhibit elongated boxes, medians far from the optimum, long whiskers, and numerous outliers. This reflects large run-to-run fluctuation, unstable performance, and high sensitivity to initial conditions.

Figure 6.

Box plots on CEC2021.
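The box-plot reading above (a box collapsing to a single line, long whiskers, outliers) follows from how the box statistics are computed over the 30 final values of each algorithm. The sketch below assumes the common Tukey convention (whiskers reach the most extreme values within 1.5 × IQR of the quartiles, which is also matplotlib's default) and linearly interpolated quartiles. The plotting settings used for Figure 6 are not stated, and the function name `box_stats` is hypothetical.

```python
from statistics import quantiles

def box_stats(values, whisker=1.5):
    """Tukey box-plot statistics for the final objective values of repeated runs."""
    # 'inclusive' = linear interpolation between order statistics
    q1, med, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - whisker * iqr, q3 + whisker * iqr
    inside = [v for v in values if lo_fence <= v <= hi_fence]
    return {
        "q1": q1, "median": med, "q3": q3,
        "whisker_low": min(inside),    # lowest value not flagged as an outlier
        "whisker_high": max(inside),   # highest value not flagged as an outlier
        "outliers": [v for v in values if v < lo_fence or v > hi_fence],
    }
```

If all 30 runs return the same value, then q1 = q3, the IQR is zero and no outliers are reported. This is exactly the "single line at the optimum" pattern described for ISFOA.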

The results of the Wilcoxon rank-sum test are presented in Table 10. The significance level was set to 0.05. A p-value greater than 0.05 indicates no statistically significant difference, whereas a p-value below 0.05 indicates a significant performance difference. In this table, the symbols “+”, “−”, and “=” indicate that ISFOA performs significantly better than, significantly worse than, or not significantly differently from the compared algorithm, respectively.
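The “+ / − / =” labels can be derived from the 30 final values of ISFOA and of a rival algorithm on one function. The sketch below is illustrative only. It assumes the two-sided test with the large-sample normal approximation and no tie correction, the same approximation used by SciPy's `scipy.stats.ranksums`. It also assumes minimization, so "better" means tending toward smaller values. The implementation actually used for Table 10 is not stated, and all function names here are hypothetical.

```python
import math

def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # extend over the run of values tied with values[order[start]]
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks

def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction).
    Returns (z, p); z < 0 means x tends to take smaller values than y."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                       # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    return z, math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value

def compare(isfoa_runs, rival_runs, alpha=0.05):
    """'+', '-' or '=' for ISFOA against a rival on a minimization problem."""
    z, p = rank_sum_test(isfoa_runs, rival_runs)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"
```

When both algorithms return identical values in every run (as described for F8), all ranks tie, z = 0 and p = 1. The result is therefore “=” regardless of how good the shared value is.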

Table 10.

Results of the Wilcoxon rank-sum test for ISFOA on CEC2021.

Specifically, compared with the original SFOA, ISFOA is significantly better on all 10 test functions, which supports the effectiveness of the proposed improvement strategies. Against PSO and RIME, ISFOA is likewise significantly better on every test function, highlighting its competitiveness in solving optimization problems. Compared with GWO and DBO, ISFOA is significantly better on 9 functions, with comparable performance only on F8; this is likely because the structure of F8 allows GWO, like ISFOA, to converge to the global optimum. Against SSA and SABO, ISFOA is significantly better on six and seven functions, respectively, with no significant difference on the remaining functions. Compared with COA, ISFOA performs better on F9 and F10, indicating greater competitiveness on composition functions.

In summary, the CEC2021 results indicate that the overall performance of ISFOA is superior to that of all compared algorithms: the original SFOA, PSO, GWO, SSA, RIME, COA, SABO, and DBO.

5. Engineering Applications of ISFOA

To assess the performance of ISFOA on practical constrained engineering optimization problems, seven typical problems, listed in Table 11, were solved using ISFOA and various other metaheuristic algorithms. Constrained engineering optimization is generally challenging because it frequently involves multiple highly nonlinear constraints. In this study, constraints are handled with the penalty function method. Infeasible solutions receive a sufficiently large penalty, which converts the original constrained problem into an unconstrained one. Table 12 reports the best value, mean value, standard deviation, and corresponding ranking obtained by each algorithm over 30 independent runs.
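The exact penalty form is not given in this section. As a minimal sketch, assume a static quadratic penalty F(x) = f(x) + λ·Σ max(0, g_i(x))² with λ = 10⁶ (an assumed value). The sketch applies it to the tension/compression spring design problem, one of the problems named in the conclusions, using its standard formulation. The variable vector is x = (d, D, N): wire diameter, mean coil diameter and number of active coils. Each constraint has the form g_i(x) ≤ 0. The variable bounds (0.05 ≤ d ≤ 2, 0.25 ≤ D ≤ 1.3, 2 ≤ N ≤ 15) are assumed to be enforced separately by the optimizer, for example by clipping. Function names are hypothetical.

```python
def spring_objective(x):
    """Spring weight (N + 2) * D * d^2 for x = (d, D, N)."""
    d, D, N = x  # wire diameter, mean coil diameter, number of active coils
    return (N + 2) * D * d ** 2

def spring_constraints(x):
    """Standard constraint values g_i(x); a design is feasible when every g_i <= 0."""
    d, D, N = x
    return [
        1 - D ** 3 * N / (71785 * d ** 4),                      # minimum deflection
        (4 * D ** 2 - d * D) / (12566 * (D * d ** 3 - d ** 4))  # shear stress (assumes D != d)
        + 1 / (5108 * d ** 2) - 1,
        1 - 140.45 * d / (D ** 2 * N),                          # surge frequency
        (d + D) / 1.5 - 1,                                      # outside diameter limit
    ]

def penalized(x, penalty=1e6):
    """Static quadratic penalty: f(x) + penalty * sum(max(0, g_i(x))^2)."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_objective(x) + penalty * violation
```

Feasible designs keep their true objective value, while any violation is amplified by λ. An unconstrained optimizer can then minimize `penalized` directly inside the box bounds.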

Table 11.

The real-world constrained engineering optimization problems.

Table 12.

Test results for the engineering problems.

The results in Table 12 show that ISFOA is highly effective on the selected engineering optimization problems. It outperforms all other compared algorithms on six of the seven problems; on problem P5, its result is only slightly less competitive than that of DBO. Overall, ISFOA handles constrained optimization in practical engineering contexts well, and its results are clearly superior to those of the other algorithms evaluated.
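The statistics behind Table 12 (best, mean and standard deviation over 30 runs, plus a ranking) can be tabulated as sketched below. The ranking rule is not stated in this section. The sketch assumes ranking by mean value, with the standard deviation as tie-breaker, and uses the sample standard deviation; both choices are assumptions. The function names are hypothetical.

```python
from statistics import mean, stdev

def summarize(runs):
    """Best, mean and sample standard deviation of final objective values (minimization)."""
    return {"best": min(runs), "mean": mean(runs), "std": stdev(runs)}

def rank_by_mean(results):
    """results: algorithm name -> list of final objective values from independent runs.
    Assumed rule: lower mean ranks first; ties broken by lower standard deviation."""
    stats = {name: summarize(runs) for name, runs in results.items()}
    order = sorted(stats, key=lambda name: (stats[name]["mean"], stats[name]["std"]))
    ranks = {name: position + 1 for position, name in enumerate(order)}
    return ranks, stats
```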

6. Conclusions

This study proposes an Improved Superb Fairy-wren Optimization Algorithm (ISFOA), which enhances the original Superb Fairy-wren Optimization Algorithm by integrating four strategies: Chebyshev chaotic mapping, an adaptive weighting factor, Cauchy–Gaussian mutation, and t-distribution perturbation. Together, these improvements strengthen the algorithm’s ability to balance global exploration and local exploitation, maintain population diversity, and achieve higher convergence accuracy.

Ablation studies confirm that each strategy contributes to the overall performance. The Chebyshev chaotic map produces a more uniform initial population distribution. The adaptive weighting factor dynamically balances exploration and exploitation during multimodal optimization. Cauchy–Gaussian mutation effectively preserves population diversity, while t-distribution perturbation provides a robust mechanism for escaping local optima in later iterations, which is particularly useful in complex search spaces.
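The exact update equations used by ISFOA are defined earlier in the paper, and the sketch below does not reproduce them. It is a hedged illustration of forms commonly used for these strategies in the improved-metaheuristic literature, each of which is an assumption here. Initialization uses a Chebyshev map x_{k+1} = cos(a·arccos x_k) with a = 4, mapped from [−1, 1] to the search bounds. The Cauchy–Gaussian mutation acts around the best solution with weights λ1 = 1 − (t/T)² and λ2 = (t/T)². The t-distribution perturbation x' = x + x·t(ν) uses degrees of freedom ν equal to the iteration count. The adaptive weighting factor is omitted because its form is not stated in this section. All function names are hypothetical.

```python
import math
import random

def chebyshev_population(pop_size, dim, lb, ub, rng, a=4):
    """Initialize a population from one Chebyshev chaotic sequence
    x_{k+1} = cos(a * arccos(x_k)), mapped from [-1, 1] to [lb, ub]."""
    x = rng.uniform(-0.99, 0.99)  # random start inside (-1, 1)
    population = []
    for _ in range(pop_size):
        individual = []
        for j in range(dim):
            x = math.cos(a * math.acos(x))
            individual.append(lb[j] + (x + 1) / 2 * (ub[j] - lb[j]))
        population.append(individual)
    return population

def cauchy_gaussian_mutation(best, t, t_max, rng):
    """Perturb the best solution; Cauchy noise dominates early, Gaussian noise late."""
    lam1 = 1 - (t / t_max) ** 2   # Cauchy weight: heavy tails favor exploration
    lam2 = (t / t_max) ** 2       # Gaussian weight: small steps favor exploitation
    mutant = []
    for b in best:
        cauchy = math.tan(math.pi * (rng.random() - 0.5))  # standard Cauchy sample
        gauss = rng.gauss(0.0, 1.0)                          # standard normal sample
        mutant.append(b * (1 + lam1 * cauchy + lam2 * gauss))
    return mutant

def student_t(df, rng):
    """Student's t sample: Z / sqrt(V / df), with V ~ chi-square(df) = Gamma(df/2, scale 2)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2, 2.0)
    return z / math.sqrt(v / df)

def t_perturbation(x, iteration, rng):
    """x' = x + x * t(iteration): df = 1 is Cauchy-like, large df is near-Gaussian."""
    return [xi + xi * student_t(iteration, rng) for xi in x]

def clip(x, lb, ub):
    """Project a candidate back into the box bounds."""
    return [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lb, ub)]

# Example usage on a 5-dimensional box
rng = random.Random(42)
lb, ub = [-100.0] * 5, [100.0] * 5
population = chebyshev_population(30, 5, lb, ub, rng)
best = population[0]
mutant = clip(cauchy_gaussian_mutation(best, t=10, t_max=500, rng=rng), lb, ub)
perturbed = clip(t_perturbation(best, iteration=10, rng=rng), lb, ub)
```

In a typical design, each mutated or perturbed candidate replaces the current solution only if it improves the objective (greedy selection). The gradual shift from Cauchy-like to Gaussian-like noise is what moves the search from exploration toward exploitation.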

Experimental evaluations on the CEC2005 and CEC2021 benchmark suites show that ISFOA outperforms the original SFOA and several mainstream metaheuristic algorithms, including DBO, COA, GWO, PSO, SSA, RIME, and SABO, in terms of the mean, best, and standard deviation of the results. This demonstrates ISFOA’s superior solution accuracy, robustness, and convergence speed, and the Wilcoxon rank-sum test confirms that most of these differences are statistically significant. Moreover, when applied to seven real-world constrained engineering problems (e.g., welded beam design, tension/compression spring design, and pressure vessel design), ISFOA also performs well in both solution quality and stability, underscoring its practical utility.

Despite its strong overall performance, ISFOA has limitations. Its convergence precision on certain test functions could still be improved, and incorporating multiple strategies increases its computational complexity. Future work will therefore focus on the following: (1) optimizing the adaptive parameter adjustment mechanism; (2) developing hybrid models by combining ISFOA with other intelligent algorithms; (3) extending its application to high-dimensional, multi-objective, and large-scale optimization problems, as well as to dynamic systems such as fault diagnosis and remaining useful life prediction; and (4) validating its competitiveness in more complex engineering scenarios, including UAV path planning, feature selection, and hyperparameter optimization. These efforts aim to advance the practical application of metaheuristic algorithms in broader domains.

Author Contributions

Conceptualization, Y.C. (Yachao Cao) and H.L.; methodology, Y.C. (Yachao Cao); software, H.L.; validation, Y.C. (Yachao Cao), Z.W. and Q.Z.; formal analysis, Y.C. (Yachao Cao); investigation, Y.C. (Yachao Cao); resources, Q.Z.; data curation, Y.C. (Yachao Cao); writing—original draft preparation, H.L.; writing—review and editing, H.L.; visualization, Y.C. (Yachao Cao); supervision, Y.C. (Yanping Cui); project administration, Z.W.; funding acquisition, Y.C. (Yanping Cui). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Defense Science and Technology Industry Bureau Vehicle Power Special Project (VTDP-3202); Central Guidance on Local Science and Technology Development Fund of Hebei Province (254Z1904G); Hebei Science and Technology Innovation Project (2025004); Research Project of Hebei Education Department (CXY2025041); Shijiazhuang Science and Technology Bureau Basic Research Project of Shijiazhuang University in Hebei Province (241791157A).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code supporting the findings of this study is openly available in the GitHub repository at: https://github.com/Lv1118/algorithm, accessed on 9 January 2026.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Akinola, O.O.; Ezugwu, A.E.; Agushaka, J.O.; Zitar, R.A.; Abualigah, L. Correction to: Multiclass feature selection with metaheuristic optimization algorithms: A review. Neural Comput. Appl. 2023, 35, 5593.

- Tang, J.; Liu, G.; Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Selvarajan, S. A comprehensive study on modern optimization techniques for engineering applications. Artif. Intell. Rev. 2024, 57, 194.

- Sowmya, R.; Premkumar, M.; Jangir, P. Newton-Raphson-based optimizer: A new population-based metaheuristic algorithm for continuous optimization problems. Eng. Appl. Artif. Intell. 2024, 128, 107532.

- Yuan, C.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Wu, Z.; Chen, H. Artemisinin optimization based on malaria therapy: Algorithm and applications to medical image segmentation. Displays 2024, 84, 102740.

- Ghasemi, M.; Zare, M.; Trojovský, P.; Rao, R.V.; Trojovská, E.; Kandasamy, V. Optimization based on the smart behavior of plants with its engineering applications: Ivy algorithm. Knowl.-Based Syst. 2024, 295, 111850.

- Wang, L.; Du, H.; Zhang, Z.; Hu, G.; Mirjalili, S.; Khodadadi, N.; Hussien, A.G.; Liao, Y.; Zhao, W. Tianji’s horse racing optimization (THRO): A new metaheuristic inspired by ancient wisdom and its engineering optimization applications. Artif. Intell. Rev. 2025, 58, 282.

- Yu, D.; Ji, Y.; Xia, Y. Projection-Iterative-Methods-based Optimizer: A novel metaheuristic algorithm for continuous optimization problems and feature selection. Knowl.-Based Syst. 2025, 326, 113978.

- Akbari, E.; Rahimnejad, A.; Gadsden, S.A. Holistic swarm optimization: A novel metaphor-less algorithm guided by whole population information for addressing exploration-exploitation dilemma. Comput. Methods Appl. Mech. Eng. 2025, 445, 118208.

- Xiao, Y.; Cui, H.; Khurma, R.A.; Castillo, P.A. Artificial lemming algorithm: A novel bionic meta-heuristic technique for solving real-world engineering optimization problems. Artif. Intell. Rev. 2025, 58, 84.

- Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1992.

- Talatahari, S.; Azizi, M. Chaos game optimization: A novel metaheuristic algorithm. Artif. Intell. Rev. 2021, 54, 917–1004.

- Ahmadianfar, I.; Bozorg-Haddad, O.; Chu, X. Gradient-based optimizer: A new metaheuristic optimization algorithm. Inf. Sci. 2020, 540, 131–159.

- Chen, H.; Li, C.; Mafarja, M.; Heidari, A.A.; Chen, Y.; Cai, Z. Slime mould algorithm: A comprehensive review of recent variants and applications. Int. J. Syst. Sci. 2023, 54, 204–235.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Huang, T.; Huang, F.; Qin, Z.; Pan, J. Correction to: An improved polar lights optimization algorithm for global optimization and engineering applications. Sci. Rep. 2025, 15, 16469.

- Lian, J.; Hui, G.; Ma, L.; Zhu, T.; Wu, X.; Heidari, A.A.; Chen, Y.; Chen, H. Parrot optimizer: Algorithm and applications to medical problems. Comput. Biol. Med. 2024, 172, 108064.

- Wang, J.; Wang, W.C.; Hu, X.X.; Qiu, L.; Zang, H.F. Black-winged kite algorithm: A nature-inspired metaheuristic for solving benchmark functions and engineering problems. Artif. Intell. Rev. 2024, 57, 98.

- Hamad, R.K.; Rashid, T.A. GOOSE algorithm: A powerful optimization tool for real-world engineering challenges and beyond. Evol. Syst. 2024, 15, 1249–1274.

- Amiri, M.H.; Mehrabi Hashjin, N.; Montazeri, M.; Mirjalili, S.; Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 2024, 14, 5032.

- Gao, Y. PID-based search algorithm: A novel metaheuristic algorithm based on PID algorithm. Expert Syst. Appl. 2023, 232, 120886.

- Ghasemi, M.; Rahimnejad, A.; Akbari, E.; Rao, R.V.; Trojovský, P.; Trojovská, E.; Gadsden, S.A. A new metaphor-less simple algorithm based on Rao algorithms: A Fully Informed Search Algorithm (FISA). PeerJ Comput. Sci. 2023, 9, e1431.

- Bai, J.; Li, Y.; Zheng, M.; Khatir, S.; Benaissa, B.; Abualigah, L.; Wahab, M.A. A sinh cosh optimizer. Knowl.-Based Syst. 2023, 282, 111081.

- Yao, L.; Yuan, P.; Tsai, C.Y.; Zhang, T.; Lu, Y.; Ding, S. ESO: An enhanced snake optimizer for real-world engineering problems. Expert Syst. Appl. 2023, 230, 120594.

- Sahu, V.S.D.M.; Samal, P.; Panigrahi, C.K. Tyrannosaurus optimization algorithm: A new nature-inspired metaheuristic algorithm for solving optimal control problems. e-Prime Adv. Electr. Eng. Electron. Energy 2023, 5, 100243.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Qiao, Y.; Han, Z.; Fu, H.; Gao, Y. An Improved Red-Billed Blue Magpie Algorithm and Its Application to Constrained Optimization Problems. Biomimetics 2025, 10, 788.

- Lv, W.; He, Y.; Yang, Y.; Ma, X.; Chen, J.; Zhang, Y. Improving the Dung Beetle Optimizer with Multiple Strategies: An Application to Complex Engineering Problems. Biomimetics 2025, 10, 717.

- Chen, B.; Chen, Y.; Cao, L.; Chen, C.; Yue, Y. An Improved Crested Porcupine Optimization Algorithm Incorporating Butterfly Search and Triangular Walk Strategies. Biomimetics 2025, 10, 766.

- Jia, H.; Zhou, X.; Zhang, J.; Mirjalili, S. Superb Fairy-wren Optimization Algorithm: A novel metaheuristic algorithm for solving feature selection problems. Clust. Comput. 2025, 28, 246.

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.-P.; Auger, A.; Tiwari, S. Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization; Technical Report; KanGAL Report Number 2005005; Nanyang Technological University: Singapore; Kanpur Genetic Algorithms Laboratory, Indian Institute of Technology: Kanpur, India, 2005.

- Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2014 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization; Technical Report; School of Electrical Engineering, Zhengzhou University: Zhengzhou, China, 2014.

- Gad, A.G. Correction to: Particle swarm optimization algorithm and its applications: A systematic review. Arch. Comput. Methods Eng. 2023, 30, 3471.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Su, H.; Zhao, D.; Heidari, A.A.; Liu, L.; Zhang, X.; Mafarja, M.; Chen, H. RIME: A physics-based optimization. Neurocomputing 2023, 532, 183–214.

- Dehghani, M.; Montazeri, Z.; Trojovská, E.; Trojovský, P. Coati Optimization Algorithm: A new bio-inspired metaheuristic algorithm for solving optimization problems. Knowl.-Based Syst. 2023, 259, 110011.

- Trojovský, P.; Dehghani, M. Subtraction-average-based optimizer: A new swarm-inspired metaheuristic algorithm for solving optimization problems. Biomimetics 2023, 8, 149.

- Xue, J.; Shen, B. Dung beetle optimizer: A new metaheuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.