Abstract

Fine-grained image recognition is one of the key tasks in computer vision. However, owing to subtle inter-class differences and large intra-class variations, it still faces severe challenges. Conventional approaches often struggle with background interference and the degradation of fine-grained features. To address these issues, we draw inspiration from the human visual system, which adeptly focuses on discriminative regions, and propose a bio-inspired gradient-aware attention mechanism. Our method explicitly models gradient information to guide attention, mimicking biological edge sensitivity, thereby improving the joint modeling of global structures and local details. Experiments on the CUB-200-2011, iNaturalist 2018, NABirds and Stanford Cars datasets demonstrate the effectiveness of our method, which achieves Top-1 accuracies of 92.9%, 90.5%, 93.1% and 95.1%, respectively.

1. Introduction

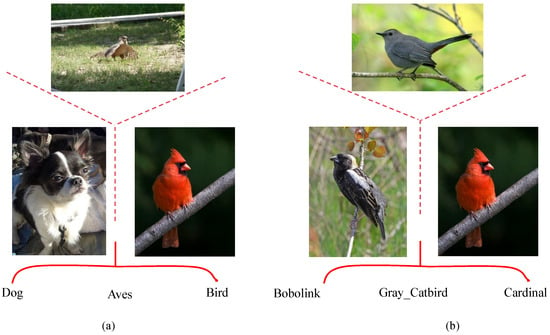

Fine-grained image recognition is vital for applications ranging from ecological monitoring to intelligent retail. As shown in Figure 1, it involves discriminating between subordinate categories under the same superordinate class (e.g., bird species) and is characterized by two principal challenges: inter-class differences can be extremely subtle, relying on fine local cues like edges and textures, while intra-class variations can be substantial due to factors such as pose, occlusion, and illumination, making it difficult to learn representations that are both discriminative and robust.

Figure 1.

Illustration of (a) coarse-grained classification, where inter-class differences are large and easy to separate, and (b) fine-grained classification, where categories differ only in subtle local attributes.

Recent research on fine-grained visual recognition has mainly developed in two directions. The first emphasizes localizing and cropping discriminative regions. These methods typically rely on rigid rectangular proposals to detect and crop salient regions [1]. However, such boxes are often overly large or imprecise, which introduces background noise, and they fail to capture structural relationships among regions. The second direction leverages attention mechanisms to extract discriminative information directly from the full image. For instance, TransFG [2] employs counterfactual attention to suppress background interference and improve region localization. MetaFormer [3] enriches discriminative semantics by injecting auxiliary meta-information (such as geographical, attribute, or textual cues) into visual tokens. Dual-Fusion [4] enhances representations through cross-layer feature fusion without heavy annotation overhead. MPSA [5] introduces partial sampling attention for multi-scale representation learning, while HERBS [6] incorporates high-temperature refinement and background suppression modules to strengthen discriminative features and reduce background noise in multi-granularity learning.

Despite these advances in modeling discriminative regions, two underlying issues remain largely unaddressed. First, progressive feature aggregation in Transformer architectures tends to weaken high-frequency components such as edge and texture gradients, causing a loss of the fine local details that are critical for discriminating highly similar categories. Second, although discriminative regions can be localized, there is no explicit mechanism for continuously and reliably incorporating these high-frequency structural cues into the global representation. The lack of such a systematic propagation pathway restricts the model’s ability to balance discriminability and robustness.

Research [7] has demonstrated that in the hierarchical processing of the human visual cortex, gradients serve as fundamental and powerful low-level visual features, while attention optimizes information processing by enhancing neural signals associated with task-relevant gradient information. In the Feature Integration Theory [8], a classic theory in cognitive science and computational neuroscience, visual processing is divided into two stages. In the preattentive stage, the visual system automatically processes basic features of the entire scene in parallel, such as color, orientation, motion, and spatial frequency (the basis of gradients). These features are registered in specific “feature maps.” In the feature integration stage, attention binds these separate features together to form the objects we perceive. Edges and contours in an image correspond to areas of high activity in the orientation feature maps; these high-gradient regions generate strong signals during the preattentive stage and become candidate foci of attention.

We draw inspiration from the above theory and propose a gradient-aware spatial attention framework for fine-grained image recognition. Our core idea is to directionally recalibrate spatial attention using explicit gradient information and encode the fine gradient representation into a compact gradient token, which is then injected into the Transformer. This makes it possible to jointly model local high-frequency details and global semantics. The main contributions are:

- We propose an efficient method for constructing gradient-aware spatial weight maps, which encodes directional high-frequency cues at a very low computational cost by fusing one-dimensional attention computed along the height and width directions.

- We propose a strategy of injecting compact gradient tokens into the Transformer, which enables the model to explicitly utilize high-frequency local information while maintaining the global semantic modeling capability.

- The proposed method is evaluated on four widely used fine-grained benchmarks: CUB-200-2011, iNaturalist 2018, NABirds, and Stanford Cars, achieving top-1 accuracies of 92.9%, 90.5%, 93.1%, and 95.1%, respectively, demonstrating its effectiveness.

2. Related Work

2.1. Fine-Grained Image Recognition

Fine-grained image recognition addresses the problem of modeling subtle appearance differences between closely related categories, with the aim of accurately distinguishing visually similar subordinate categories.

Early approaches [9,10,11] typically relied on strongly supervised annotations such as part keypoints, bounding boxes, or segmentation masks to guide models toward discriminative regions. While effective at enhancing local feature discriminability, these methods suffer from high annotation cost and poor scalability. Subsequent research [12,13,14] therefore explored self-supervised, weakly supervised, or structured network designs that aim to automatically discover discriminative parts and strengthen local representations. In addition, auxiliary modalities such as textual cues or spatio-temporal/geographic priors [15] have been leveraged to enrich representations and suppress background noise. Although these auxiliary modalities can improve robustness, they depend on the availability and reliability of external sources, and effectively incorporating such priors while preserving generalization remains challenging.

In fine-grained image recognition, discriminative features between different categories often reside in extremely subtle local differences. Introducing explicit priors, such as shape structure, object contours, and multi-scale texture patterns, has become a key strategy for enhancing model discriminability. Khan et al. [16] propose a Region of Interest (ROI)-based object recognition model to address limitations in existing methods by efficiently identifying objects at any image location while handling challenges like overlapping objects and noisy backgrounds. Cross-X [17] facilitates multi-scale feature correspondence by establishing associations across different images and network layers, explicitly encouraging the model to capture discriminative cues ranging from local details to global patterns. Han et al. [18] propose an end-to-end Part-based Convolutional Neural Network (P-CNN) that integrates channel attention, automatic part localization, and multi-stream classification with a novel Duplex Focal Loss to achieve effective fine-grained visual categorization. These methods, by embedding strong priors closely aligned with task requirements, perform exceptionally well in scenarios with limited data or well-defined prior knowledge. However, their performance heavily depends on the quality and completeness of the incorporated priors, which, to some extent, constrains their generalization capability.

2.2. Attention Mechanism

The core idea of the attention mechanism is to enable models to dynamically focus on the most important parts of the input. It originated in sequence-to-sequence learning for machine translation, first as the additive attention of Bahdanau et al. [19] and the multiplicative attention of Luong et al. [20]. These early mechanisms computed association weights between hidden states to align the source sequence, shifting information processing from static encoding to dynamic focusing and laying the groundwork for handling long sequences and complex dependencies. Subsequently, Vaswani et al. [15] introduced the Transformer architecture in 2017, which relies entirely on self-attention and dispenses with recurrent and convolutional structures. Its core operation, scaled dot-product attention, uses queries, keys and values to compute global dependencies in parallel, significantly improving training efficiency and long-range modeling capability. The success of the Transformer established attention as a fundamental component of deep learning and catalyzed the wave of pre-trained models such as BERT [10] and GPT [21].

Ref. [22] first applied the standard Transformer directly to image recognition, introducing the Vision Transformer (ViT), which achieves performance comparable to or exceeding that of CNNs on multiple benchmarks. Relative to CNNs, ViT imposes fewer image-specific inductive biases and therefore offers greater modeling flexibility, but this advantage typically comes at the cost of requiring large-scale training data to learn effective visual representations. Although Transformers have succeeded in vision tasks, their strong emphasis on global context can weaken the representation of fine detail: high-frequency details may be progressively attenuated through successive self-attention layers, reducing sensitivity to subtle differences. A variety of remedies have been explored: replacing self-attention with pure MLPs or simplified token-mixing operators [23]; compressing token counts via token selection, pruning, or hierarchical downsampling [2]; and introducing bidirectional cross-attention, cross-layer attention, or frequency-domain modeling to better balance low-frequency semantics and high-frequency textures [24,25]. Ref. [26] proposes a hybrid learning framework that combines dynamic physical models with data-driven quadratic neural networks and a bidirectional LSTM, substantially improving interpretability, credibility and reliability over traditional models. Ref. [27] proposes a SimAM-Point module, which applies a neuroscience-inspired, parameter-free 3D attention mechanism to point cloud feature extraction; by optimizing an energy function, it computes channel-wise and spatial attention without additional parameters, enhancing feature discriminability while maintaining computational efficiency. Nevertheless, most of these solutions do not explicitly model high-frequency information, or they struggle to achieve a good trade-off between detail preservation and computational efficiency.

3. Materials and Methods

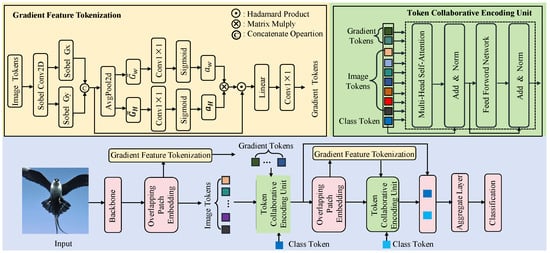

Figure 2 illustrates the overall architecture of our gradient-aware spatial attention framework for fine-grained recognition. An input image is first encoded by a backbone into feature maps, which are then converted into overlapping patch embeddings to produce spatially contiguous image tokens. To recover the high-frequency cues that are degraded in fine-grained tasks, we propose the Gradient Feature Tokenization (GFT) module. In this module, Sobel filters compute horizontal and vertical gradient maps for each channel of the token grid, and learnable one-dimensional directional attention is applied along the height and width axes. The two directional weight vectors are combined by an outer product into a 2D spatial weighting map. This map re-weights the gradient features element-wise (Hadamard product), and the result is projected into gradient tokens that match the image-token dimensionality. Image tokens, gradient tokens, and a learnable class token are fed into the Token Collaborative Encoding Unit (TCEU), where multi-head self-attention enables cross-token interaction to enhance fine-detail representation. The TCEU runs in two stages. Stage one refines the initial tokens. Stage two introduces a new class token, concatenates it with the stage-one outputs, recomputes the gradient tokens, and applies attention for further refinement. Finally, the class tokens from both stages are fused in the Aggregation Layer to produce the final prediction.

Figure 2.

Overview of the proposed Gradient-Aware Spatial Attention for Fine-Grained Image Recognition. The lower part shows the network pipeline. The upper part is divided into two sections: the left section highlights the Gradient Feature Extraction module, while the right section illustrates the Token Collaborative Encoding Unit.

3.1. Image Feature Tokenization

To convert a two-dimensional image into a sequence suitable for a Transformer, we start from the input image $I \in \mathbb{R}^{H \times W \times 3}$, where $H$ and $W$ denote the image spatial dimensions and the last dimension corresponds to the three RGB channels. A backbone convolutional network $\mathcal{F}_{\mathrm{bb}}(\cdot)$ is first used to extract intermediate feature maps:

$$M = \mathcal{F}_{\mathrm{bb}}(I) \in \mathbb{R}^{B \times C_m \times H_m \times W_m},$$

where $M$ denotes the feature map, $B$ is the batch size, $C_m$ is the number of channels, and $H_m$, $W_m$ are the spatial resolutions of the feature map. The initial feature extraction stage of the backbone employs three consecutive convolutional layers followed by Mobile Inverted Bottleneck Convolution blocks with squeeze-and-excitation modules [28].

To convert the continuous feature map $M$ into image tokens, we adopt the Overlapping Patch Embedding (OPE) operator [29]. This operator reduces the spatial dimensions via padded convolutional layers whose kernels cover overlapping neighborhoods, and projects local regions into a high-dimensional embedding space, yielding a sequence of visual tokens:

$$Y = \mathrm{OPE}(M) \in \mathbb{R}^{B \times N \times C},$$

where $N = H_p \times W_p$ is the total number of image tokens, $H_p$ and $W_p$ are the height and width of the embedded token grid, and $C$ denotes the embedding dimension. This design preserves fine-grained local information while facilitating attention-based modeling in subsequent Transformer layers.
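To show how the token count $N$ follows from the embedding convolution, the output grid of a padded, strided convolution can be computed as below. This is a small sketch rather than the authors' configuration. The text does not state the OPE kernel, stride or padding, so the defaults here (kernel 3, stride 2, padding 1) and the 28 × 28 input are hypothetical placeholders. The function names are also illustrative. Patches overlap whenever the kernel is larger than the stride.

```python
def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a strided, zero-padded convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def overlapping_patch_grid(h_m: int, w_m: int, kernel: int = 3,
                           stride: int = 2, padding: int = 1) -> tuple[int, int, int]:
    """Return (H_p, W_p, N) for a hypothetical overlapping patch embedding.

    Patches overlap because kernel > stride, so neighboring tokens share pixels.
    """
    if kernel <= stride:
        raise ValueError("kernel must exceed stride for overlapping patches")
    h_p = conv_out_size(h_m, kernel, stride, padding)
    w_p = conv_out_size(w_m, kernel, stride, padding)
    return h_p, w_p, h_p * w_p


# Example with a hypothetical 28 x 28 backbone feature map.
print(overlapping_patch_grid(28, 28))  # (14, 14, 196)
```

Each token covers a kernel-sized window but the grid advances by the stride. Adjacent tokens therefore share border pixels, which is what lets the embedding preserve local continuity.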

3.2. Gradient Feature Tokenization

3.2.1. Gradient Feature Extraction

To counter the degradation of gradient (high-frequency) features that vision Transformers suffer in fine-grained tasks, we propose the Gradient Feature Tokenization module to enhance fine-grained representation. Built on top of the overlapping patch embedding, this module introduces explicit gradient extraction and directional attention modeling. It then projects the weighted gradient features back into token space as additional inputs to the Transformer. The procedure is as follows.

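Let the output of the overlapping patch embedding be $Y \in \mathbb{R}^{B \times N \times C}$, where $B$ is the batch size, $N = H_p \times W_p$ is the sequence length and $C$ is the channel dimension. First, reshape $Y$ to the spatial form $Y_s \in \mathbb{R}^{B \times C \times H_p \times W_p}$.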

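To capture per-channel local directional gradient information, we apply Sobel operators via convolution along the horizontal and vertical directions. The standard $3 \times 3$ Sobel kernels are defined as

$$K_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}, \qquad K_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}.$$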

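Using grouped convolution with the number of groups equal to $C$ (one filter per channel) and padding that preserves the spatial size, we convolve $Y_s$ with each kernel to obtain the horizontal and vertical gradient maps:

$$G_x = K_x \ast Y_s, \qquad G_y = K_y \ast Y_s, \qquad G_x,\, G_y \in \mathbb{R}^{B \times C \times H_p \times W_p},$$

where $\ast$ denotes the channel-wise (depthwise) convolution.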

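Concatenating $G_x$ and $G_y$ along the channel dimension yields the joint gradient representation:

$$G = \mathrm{Concat}(G_x, G_y) \in \mathbb{R}^{B \times 2C \times H_p \times W_p}.$$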
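The gradient-extraction step can be sketched in NumPy as follows. This is a minimal illustration, not the authors' PyTorch implementation. The helper names (`depthwise_conv2d`, `gradient_features`) are hypothetical, and the sketch assumes the standard 3 × 3 Sobel kernels with zero padding. It applies the same kernel to every channel independently, which mirrors a grouped convolution with groups equal to the channel count.

```python
import numpy as np

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) derivatives.
K_X = np.array([[-1.0, 0.0, 1.0],
                [-2.0, 0.0, 2.0],
                [-1.0, 0.0, 1.0]])
K_Y = K_X.T  # [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def depthwise_conv2d(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply one k x k kernel independently to every channel of x (B, C, H, W).

    Equivalent to a grouped convolution with groups = C and the same kernel
    replicated per channel. Zero padding of k // 2 keeps H and W unchanged.
    As in deep-learning frameworks, this is a cross-correlation (no flip).
    """
    k = kernel.shape[0]
    pad = k // 2
    h, w = x.shape[2], x.shape[3]
    xp = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
    out = np.zeros(x.shape, dtype=np.float64)
    for i in range(k):
        for j in range(k):
            out += kernel[i, j] * xp[:, :, i:i + h, j:j + w]
    return out


def gradient_features(y_s: np.ndarray) -> np.ndarray:
    """Return G = Concat(G_x, G_y) with shape (B, 2C, H_p, W_p)."""
    g_x = depthwise_conv2d(y_s, K_X)
    g_y = depthwise_conv2d(y_s, K_Y)
    return np.concatenate([g_x, g_y], axis=1)


# A vertical step edge: zeros on the left, ones from column 3 onward.
y_s = np.zeros((1, 1, 5, 5))
y_s[..., 3:] = 1.0
g = gradient_features(y_s)
print(g.shape)        # (1, 2, 5, 5)
print(g[0, 0, 2, 2])  # 4.0  (strong horizontal gradient next to the edge)
print(g[0, 1, 2, 2])  # 0.0  (no vertical gradient)
```

The first $C$ output channels hold the horizontal response and the last $C$ hold the vertical response. This layout matches the $2C$-channel representation $G$. Values at the image border are affected by the zero padding.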

3.2.2. Directional Attention Construction

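To further mine the global spatial response patterns of the gradient features, we perform adaptive average pooling along the width and height axes to obtain orthogonal global statistics:

$$z^h = \mathrm{AvgPool}_W(G) \in \mathbb{R}^{B \times 2C \times H_p \times 1}, \qquad z^w = \mathrm{AvgPool}_H(G) \in \mathbb{R}^{B \times 2C \times 1 \times W_p},$$

that is, $z^h_{b,c,i} = \frac{1}{W_p}\sum_{j} G_{b,c,i,j}$ and $z^w_{b,c,j} = \frac{1}{H_p}\sum_{i} G_{b,c,i,j}$.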

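We apply one-dimensional convolutions (kernel size $k$) to the pooled statistics $z^h$ (height direction) and $z^w$ (width direction), followed by a Sigmoid activation, to produce directional attention weights:

$$a^h = \sigma\big(\mathrm{Conv1D}_h(z^h)\big), \qquad a^w = \sigma\big(\mathrm{Conv1D}_w(z^w)\big),$$

where $\sigma(\cdot)$ denotes the Sigmoid function.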
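A NumPy sketch of the directional attention follows. It is hedged in several ways. Adaptive average pooling to a single column or row is written as a mean over that axis. The text does not say whether the 1D convolution runs along the spatial axis or the channel axis, so the sketch assumes the spatial axis and a single kernel shared by all channels. The kernel length of 3 and the names `conv1d_same` and `directional_attention` are hypothetical.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def conv1d_same(z: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Cross-correlate the last axis of z with an odd-length 1D kernel.

    Zero padding keeps the length unchanged. The same kernel is shared by all
    channels here, which is an assumption made for brevity.
    """
    k = weights.shape[0]
    pad = k // 2
    n = z.shape[-1]
    zp = np.pad(z, [(0, 0)] * (z.ndim - 1) + [(pad, pad)])
    out = np.zeros(z.shape, dtype=np.float64)
    for i in range(k):
        out += weights[i] * zp[..., i:i + n]
    return out


def directional_attention(g: np.ndarray, w_h: np.ndarray, w_w: np.ndarray):
    """g: (B, 2C, H_p, W_p) -> a_h: (B, 2C, H_p), a_w: (B, 2C, W_p)."""
    z_h = g.mean(axis=3)  # adaptive average pooling over the width axis
    z_w = g.mean(axis=2)  # adaptive average pooling over the height axis
    a_h = sigmoid(conv1d_same(z_h, w_h))
    a_w = sigmoid(conv1d_same(z_w, w_w))
    return a_h, a_w


rng = np.random.default_rng(0)
g = rng.standard_normal((2, 8, 6, 5))
a_h, a_w = directional_attention(g, rng.standard_normal(3), rng.standard_normal(3))
print(a_h.shape, a_w.shape)  # (2, 8, 6) (2, 8, 5)
```

The Sigmoid keeps every directional weight in $(0, 1)$. The later combination step can therefore only scale gradient responses down relative to one another, and it never flips their sign.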

3.2.3. Attention Reconstruction and Gradient Weighting

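The directional attentions $a^h$ and $a^w$ are reconstructed into a two-dimensional spatial attention map via an outer product:

$$A = a^h \otimes a^w \in \mathbb{R}^{B \times 2C \times H_p \times W_p}, \qquad A_{b,c,i,j} = a^h_{b,c,i}\, a^w_{b,c,j}.$$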

The attention map $A$ is applied element-wise to the original gradient features $G$ to realize spatially adaptive enhancement:

$$\tilde{G} = A \odot G,$$

where ⊙ denotes the Hadamard product. This process emphasizes discriminative edges and texture regions, improving fine-detail representation.
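The reconstruction and weighting steps reduce to broadcasting in NumPy. The following sketch is illustrative only, and the function name `reweight_gradients` is hypothetical. It builds the per-channel outer product and applies it element-wise. It also checks the broadcast form against an explicit `einsum` outer product.

```python
import numpy as np


def reweight_gradients(g: np.ndarray, a_h: np.ndarray, a_w: np.ndarray):
    """Build A from the two directional vectors and apply it to G.

    g: (B, 2C, H_p, W_p); a_h: (B, 2C, H_p); a_w: (B, 2C, W_p).
    A[b, c, i, j] = a_h[b, c, i] * a_w[b, c, j], i.e. one outer product per
    (sample, channel), realized here with broadcasting.
    """
    a = a_h[:, :, :, None] * a_w[:, :, None, :]
    return a, a * g  # a * g is the Hadamard (element-wise) product A ⊙ G


rng = np.random.default_rng(1)
g = rng.standard_normal((2, 4, 6, 5))
a_h = rng.uniform(size=(2, 4, 6))
a_w = rng.uniform(size=(2, 4, 5))
a, g_tilde = reweight_gradients(g, a_h, a_w)
# Broadcasting matches an explicit per-channel outer product.
print(np.allclose(a, np.einsum("bci,bcj->bcij", a_h, a_w)))  # True
```

Because $A$ is rank-one per channel, only $H_p + W_p$ weights are produced per channel rather than $H_p \times W_p$. This is one reason such factorized spatial attention is inexpensive.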

3.2.4. Gradient Token Generation

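Flatten the weighted gradient features $\tilde{G}$ into a sequential representation:

$$T_g = \mathrm{Flatten}(\tilde{G}) \in \mathbb{R}^{B \times N \times 2C}, \qquad N = H_p W_p.$$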

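To align with the dimensionality of the original image tokens, apply a linear projection to each spatial position’s $2C$-dimensional gradient vector in $T_g$:

$$\hat{T}_g = T_g W_g + b_g \in \mathbb{R}^{B \times N \times C}, \qquad W_g \in \mathbb{R}^{2C \times C},\; b_g \in \mathbb{R}^{C}.$$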

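A lightweight convolutional layer is then applied to the projected sequence $\hat{T}_g$ to refine the channel distribution, yielding the final gradient tokens:

$$X_g = \mathrm{Conv}(\hat{T}_g) \in \mathbb{R}^{B \times N_g \times C},$$

where $N_g$ is the number of gradient tokens (12 by default; see Section 4.5.2).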

The resulting augmented output serves as input to the Token Collaborative Encoding Unit and participates in self-attention and feed-forward computations. By explicitly modeling gradient information and directional attention and integrating them as token embeddings into the vision Transformer, this mechanism refines sensitivity to local spatial structure and strengthens representations of edges and textures.
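The flatten, project and refine pipeline can be sketched as follows. Here $N_g$ denotes the number of gradient tokens (12 by default per Section 4.5.2). This is a hedged illustration. The text does not specify how the $N$ spatial positions become $N_g$ tokens, so the sketch assumes the "lightweight convolution" is a kernel-size-1 Conv1d that treats token positions as input channels. The name `gradient_tokens` and the weight `w_tok` are hypothetical.

```python
import numpy as np


def gradient_tokens(g_tilde, w_g, b_g, w_tok):
    """Turn weighted gradient maps into N_g gradient tokens.

    g_tilde: (B, 2C, H_p, W_p)  weighted gradient features
    w_g:     (2C, C), b_g: (C,)  per-position linear projection
    w_tok:   (N_g, N)            hypothetical token-reduction weights
    """
    b, c2, h, w = g_tilde.shape
    t = g_tilde.reshape(b, c2, h * w).transpose(0, 2, 1)  # flatten: (B, N, 2C)
    t = t @ w_g + b_g                                   # project: (B, N, C)
    # Assumed form of the "lightweight convolution": a kernel-size-1 Conv1d
    # that treats the N positions as input channels and emits N_g tokens.
    return np.einsum("gn,bnc->bgc", w_tok, t)           # (B, N_g, C)


rng = np.random.default_rng(2)
B, C, H_p, W_p, N_g = 2, 4, 5, 6, 12
g_tilde = rng.standard_normal((B, 2 * C, H_p, W_p))
x_g = gradient_tokens(g_tilde,
                      rng.standard_normal((2 * C, C)),
                      rng.standard_normal(C),
                      rng.standard_normal((N_g, H_p * W_p)))
print(x_g.shape)  # (2, 12, 4)
```

Row-major flattening orders positions as $i \cdot W_p + j$. This is the same order used when image tokens are reshaped back to a grid, so gradient and image features stay spatially aligned before the reduction.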

3.3. Token Collaborative Encoding Unit

To discriminate between visually similar categories, our model integrates complementary information from three sources: global semantics from a class token, local details from image patches, and structural cues from gradients. To this end, we introduce the Token Collaborative Encoding Unit (TCEU). It fuses these sources by first concatenating the learnable classification token $x_{\mathrm{cls}}$, the gradient-enhanced tokens $X_g$ and the original image tokens $Y$ into a unified sequence. This integrated representation lets the model aggregate high-level semantics together with fine-grained structural details for more robust recognition. The added gradient tokens also give self-attention a complementary input from which to build a more comprehensive and discriminative representation.

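Concretely, the learnable classification token $x_{\mathrm{cls}} \in \mathbb{R}^{B \times 1 \times C}$, the gradient-enhanced tokens $X_g \in \mathbb{R}^{B \times N_g \times C}$ and the original image tokens $Y \in \mathbb{R}^{B \times N \times C}$ are concatenated along the token axis to form the initial input:

$$Z_0 = [\,x_{\mathrm{cls}};\, X_g;\, Y\,] \in \mathbb{R}^{B \times (1 + N_g + N) \times C},$$

where $N_g$ is the number of gradient tokens.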

The fused sequence $Z_0$ is then fed into $L$ standard Transformer layers, each comprising multi-head self-attention (MHSA) and a position-wise feed-forward network (MLP). To ensure stable gradient flow and accelerate convergence, Layer Normalization (LN) [30] is applied before the attention and feed-forward sublayers (pre-normalization). Specifically, the computation within each layer is:

$$Z'_l = Z_{l-1} + \mathrm{MHSA}\big(\mathrm{LN}(Z_{l-1})\big), \qquad Z_l = Z'_l + \mathrm{MLP}\big(\mathrm{LN}(Z'_l)\big), \qquad l = 1, \dots, L.$$
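The input construction and the pre-normalization residual structure can be sketched in NumPy as below. This is a minimal illustration under stated assumptions. `initial_sequence` and `pre_norm_layer` are hypothetical helpers. LayerNorm omits its learnable scale and shift. The attention and MLP sublayers are passed in as placeholder functions rather than real MHSA and feed-forward blocks.

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """LayerNorm over the last axis (learnable scale and shift omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def initial_sequence(x_cls, x_g, y):
    """Z_0 = [x_cls; X_g; Y] along the token axis: (B, 1 + N_g + N, C)."""
    return np.concatenate([x_cls, x_g, y], axis=1)


def pre_norm_layer(z, attn, mlp):
    """One pre-LN layer; attn and mlp are any (B, T, C) -> (B, T, C) maps."""
    z = z + attn(layer_norm(z))  # Z'_l = Z_{l-1} + MHSA(LN(Z_{l-1}))
    z = z + mlp(layer_norm(z))   # Z_l  = Z'_l + MLP(LN(Z'_l))
    return z


rng = np.random.default_rng(3)
B, C, N_g, N = 2, 8, 12, 20
z0 = initial_sequence(rng.standard_normal((B, 1, C)),
                      rng.standard_normal((B, N_g, C)),
                      rng.standard_normal((B, N, C)))
z1 = pre_norm_layer(z0, attn=lambda x: 0.1 * x, mlp=np.tanh)
print(z0.shape, z1.shape)  # (2, 33, 8) (2, 33, 8)
```

Normalization sits inside each residual branch, so the identity path from $Z_{l-1}$ to $Z_l$ is never rescaled. That is the usual argument for why pre-normalization keeps gradients well behaved in deep stacks.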

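Within each MHSA sublayer, the sequence is projected to query, key and value matrices $Q, K, V \in \mathbb{R}^{(N + 1 + N_g) \times d}$ (per head), where $N$ denotes the number of image tokens and $1 + N_g$ accounts for one class token plus $N_g$ gradient tokens. The self-attention operation incorporates a relative positional bias $P$ to encode spatial relationships [23]:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\!\left(\frac{QK^{\top}}{\sqrt{d}} + P\right)V,$$

where $d$ is the per-head dimension and $P \in \mathbb{R}^{(N+1+N_g) \times (N+1+N_g)}$.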
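To make the role of the additive bias concrete, here is a single-head NumPy sketch of $\mathrm{Softmax}(QK^\top/\sqrt{d} + P)V$. It is illustrative only. The function name `biased_attention` is hypothetical, and multiple heads and the learned projections are omitted. The bias is taken as an arbitrary $T \times T$ matrix rather than gathered from a learnable relative-position table, because the text does not specify how class and gradient tokens are assigned relative positions.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def biased_attention(q, k, v, bias):
    """Single-head Softmax(Q K^T / sqrt(d) + P) V.

    q, k, v: (T, d) with T = N + 1 + N_g tokens; bias P: (T, T) additive logits.
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d) + bias
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


T, d = 5, 4
rng = np.random.default_rng(4)
v = rng.standard_normal((T, d))
zeros = np.zeros((T, d))
# With Q = K = 0 the logits come only from the bias: a large bias on key 2
# makes every query attend (almost) exclusively to token 2.
bias = np.zeros((T, T))
bias[:, 2] = 20.0
out, w = biased_attention(zeros, zeros, v, bias)
print(np.allclose(out, v[2], atol=1e-6))  # True
```

The bias is added to the logits before the Softmax. A large entry in $P$ can therefore steer attention even when query-key similarity is uninformative, as the example shows.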

By jointly fusing semantic, gradient and appearance cues, the Token Collaborative Encoding Unit strengthens the model’s ability to focus on discriminative features, which is particularly important for fine-grained image recognition.

The interaction between the gradient tokens and the class token is not mediated by an explicit, standalone cross-attention mechanism. Instead, it arises implicitly and dynamically within the Token Collaborative Encoding Unit through standard multi-head self-attention (MHSA). After all tokens are concatenated into a unified sequence, MHSA allows every token to attend to every other token. In this process, the class token can selectively attend to and absorb informative structural cues from the gradient tokens, while the gradient tokens in turn receive global contextual guidance from the class token.

4. Results and Discussion

4.1. Datasets

We evaluate the proposed method on four widely used fine-grained visual recognition benchmark datasets (see Table 1): iNaturalist 2018 [31], CUB-200-2011 [32], NABirds [33] and Stanford Cars [34]. iNaturalist 2018 contains hundreds of thousands of real-world images covering plants, animals and fungi. It poses significant challenges due to severe class imbalance and domain shifts. CUB-200-2011 targets bird species recognition and exhibits high inter-class similarity as well as substantial intra-class variations caused by illumination, pose and viewpoint changes. NABirds covers more bird categories than CUB-200-2011, organized in a hierarchical taxonomy, and adds difficulty through seasonal appearance changes. Stanford Cars focuses on fine-grained vehicle model recognition, where subtle visual differences among hundreds of car models demand strong discriminative capability. For all datasets, we strictly follow the official training/testing splits.

Table 1.

The Details of Datasets We Used and the Corresponding Learning Rates.

4.2. Experimental Details

The backbone network was initialized with weights pretrained on iNaturalist 2021, and all input images were resized to a fixed resolution. Experiments were implemented in PyTorch 2.0 and run on two NVIDIA RTX 4090 GPUs. We optimize with AdamW (weight decay 0.05) and a cosine annealing learning-rate schedule. Initial learning rates were chosen per dataset based on preliminary validation and are listed in Table 1. iNaturalist 2018, CUB-200-2011 and NABirds share one initial learning rate, while Stanford Cars uses another. To stabilize early training, we use a linear warm-up of 20 epochs for CUB-200-2011 and 5 epochs for the other datasets. Standard data augmentations are applied (random crop, horizontal flip, color jitter), together with stochastic depth regularization with a maximum drop rate of 0.1. Models were trained for 300 epochs with a batch size of 16. Training was distributed across processes with PyTorch’s DistributedDataParallel. To ensure reproducibility while keeping the data stream diverse across processes, each process is seeded with a global base seed combined with its unique rank. These settings were selected to improve generalization and mitigate overfitting while accommodating dataset-specific characteristics.
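The learning-rate schedule and per-process seeding described above can be sketched as follows. This is a hedged, framework-free illustration. The text does not say whether warm-up is applied per epoch or per iteration, what the final learning rate is, or how the base seed and rank are combined. The epoch-level ramp, `min_lr = 0`, and `seed = base_seed + rank` are therefore all assumptions, and the helper names and example values are hypothetical.

```python
import math
import random


def lr_at_epoch(epoch: int, base_lr: float, warmup_epochs: int,
                total_epochs: int = 300, min_lr: float = 0.0) -> float:
    """Linear warm-up followed by cosine annealing (epoch-level granularity)."""
    if epoch < warmup_epochs:
        # Ramp linearly up to base_lr over the warm-up epochs.
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def seed_for_rank(base_seed: int, rank: int) -> int:
    """Derive a distinct, reproducible seed for each DDP process."""
    seed = base_seed + rank
    random.seed(seed)  # in a PyTorch job, also pass seed to torch.manual_seed
    return seed


# Hypothetical values: base_lr = 1.0, 5 warm-up epochs (20 for CUB-200-2011).
# Epoch index 300 marks the end of the cosine schedule.
print([round(lr_at_epoch(e, 1.0, 5), 3) for e in (0, 4, 5, 300)])  # [0.2, 1.0, 1.0, 0.0]
print(seed_for_rank(42, rank=1))  # 43
```

Adding the rank keeps seeds distinct across processes while remaining deterministic for a fixed base seed. Other deterministic combinations would serve the same purpose.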

4.3. Comparison with SOTA Methods

4.3.1. iNaturalist 2018

Table 2 compares our gradient-aware spatial attention network with representative methods on iNaturalist 2018. MetaFormer [3] enhances semantic discrimination via joint spatio-temporal tokens. Our approach instead explicitly encodes high-frequency details as gradient tokens and fuses them with the class and spatio-temporal tokens through self-attention. This explicit modeling improves the localization of fine-grained cues and suppresses background noise. On iNaturalist 2018, our method attains a Top-1 accuracy of 89.9%, demonstrating the benefit of incorporating gradient information.

Table 2.

Comparison Results on iNaturalist 2018.

4.3.2. CUB-200-2011

As shown in Table 3, our gradient-aware spatial attention network attains 92.9% accuracy on CUB-200-2011, outperforming TransFG [2] by more than 1.2 percentage points. MetaFormer [3] relies on auxiliary metadata, and methods such as IELT [11] use independent region heads that may break spatial consistency. We instead introduce the Gradient Feature Tokenization (GFT) module to embed gradient-derived high-frequency cues into token embeddings. This explicit gradient modeling improves sensitivity to subtle texture differences and yields substantial gains in fine-grained recognition.

Table 3.

Comparison Results on CUB-200-2011.

4.3.3. NABirds

As shown in Table 4, the proposed model achieves a Top-1 accuracy of 93.1% on the NABirds dataset, substantially outperforming TransIFC [47], which attains 91.0%. TransIFC focuses solely on discriminative regions and may overlook important contextual information. Our model, by contrast, retains the complete image token sequence while incorporating gradient information, which yields richer feature representations. Compared with MPSA [5], which employs a local region sampling strategy, the proposed method integrates gradient-based features into the token sequence. It thereby balances global structural cues and local fine-grained details, yielding superior performance.

Table 4.

Comparison Results on NABirds.

4.3.4. Stanford Cars

Table 5 reports results on Stanford Cars, where our method achieves a Top-1 accuracy of 95.1%. To better capture fine-grained textures (e.g., body finishes, emblems, and minor ornaments), the Gradient Feature Tokenization (GFT) module encodes gradient-derived high-frequency cues into tokens aligned with the image embeddings. The GFT preserves spatial structure while strengthening discriminative detail, yielding consistent gains in challenging fine-grained scenarios.

Table 5.

Comparison Results on Stanford Cars.

4.4. Visualization Analysis

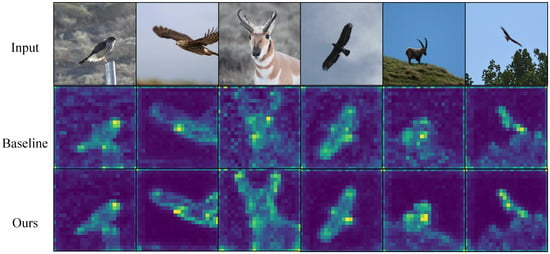

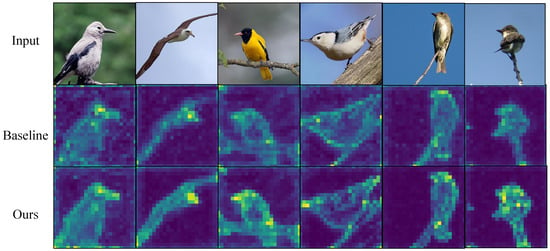

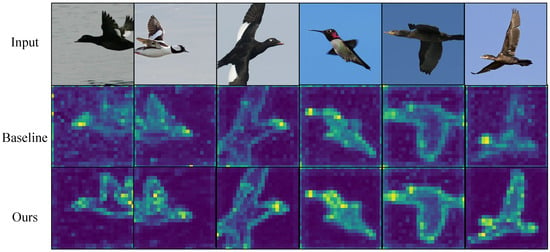

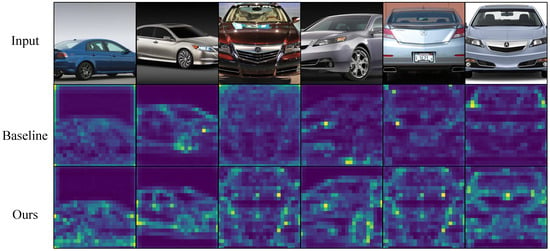

Figure 3, Figure 4, Figure 5 and Figure 6 present attention maps on iNaturalist 2018, NABirds, CUB-200-2011 and Stanford Cars, respectively. In each figure, the first row shows input images, the second row shows maps from the comparative model [3], and the third row shows maps from our gradient-aware spatial attention. The comparative model attends to background or dispersed regions, whereas our method concentrates on foreground edges, textures and discriminative parts. These results suggest that gradient tokens supply effective high-frequency cues, improving the localization and discrimination of subtle features.

Figure 3.

Visualization on iNaturalist 2018. Our method emphasizes species-specific cues despite large intra-class diversity and clutter.

Figure 4.

Visualization on NABirds. Compared with the baseline, our model keeps stable attention on birds across complex scenes and poses.

Figure 5.

Visualization on CUB-200-2011. Our method focuses on key body parts (e.g., head, wings) while reducing background distraction.

Figure 6.

Visualization on Stanford Cars. The model consistently attends to key components (e.g., headlights, wheels), capturing fine shape differences.

4.5. Ablation Study

4.5.1. Effectiveness of the Proposed Module

The core component of the proposed gradient-aware spatial attention framework for fine-grained image recognition is the Gradient Feature Tokenization module. This module explicitly encodes image gradient cues—such as edges and subtle textures—into gradient tokens to enhance standard visual representations.

As shown in Table 6, integrating gradient tokens consistently improves performance across multiple fine-grained recognition datasets. On the iNaturalist 2018 dataset, performance increases by 1.2%, demonstrating the effectiveness of gradient-aware modeling in complex real-world biodiversity scenarios characterized by significant intra-class variation. Notably, on the challenging NABirds dataset, our method achieves state-of-the-art performance, surpassing previous leading approaches. These results indicate that the Gradient Feature Tokenization module not only provides complementary high-frequency structural cues but also exhibits strong generalization ability in diverse and realistic fine-grained recognition tasks.

Table 6.

Ablation Studies of the Proposed Gradient Feature Tokenization Modules.

4.5.2. Impact of the Number of Gradient Tokens

As shown in Table 7, we investigate the effect of varying the number of gradient tokens in the Gradient Feature Tokenization module on model performance. On the CUB-200-2011 dataset, the best performance is achieved when the number of gradient tokens is set to 12, yielding the optimal balance between accuracy and efficiency. Therefore, this configuration is adopted as the default setting. Similar trends are observed across other datasets, indicating that using 12 gradient tokens provides stable and generalizable performance in diverse fine-grained image recognition tasks.

Table 7.

Effect of gradient token count on Gradient-Aware Spatial Attention for Fine-Grained Image Recognition accuracy.

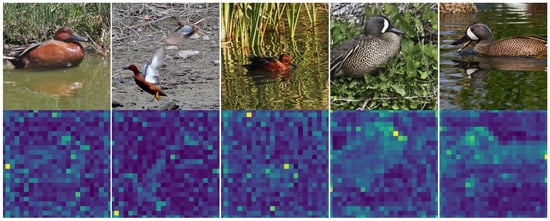

4.5.3. Analysis of Hard Cases

To thoroughly evaluate the robustness and failure modes of our model, we systematically analyzed hard cases and visualized their attention maps. As shown in Figure 7, these challenging samples primarily exhibit two patterns. The first is severe occlusion of critical discriminative regions (e.g., bird heads obscured by foliage), which prevents the model from capturing decisive features. The second is strong semantic interference from background elements, which causes the model’s attention to drift. These findings expose inherent limitations of the current gradient-aware mechanism. Future work will explore multimodal feature fusion to address a broader range of challenging cases.

Figure 7.

Hard cases on NABirds dataset.

4.5.4. Model Complexity Analysis

To further validate the efficiency of our model, Table 8 compares its computational cost with several recent works. Our model requires 52.7 GFLOPs, notably fewer than most contemporary models. It has 88.1 M parameters, slightly more than MetaFormer. Overall, these results indicate a favorable trade-off between performance and computational complexity.

Table 8.

Comparison of the properties of the proposed model with the existing state-of-the-art models.

4.5.5. Sensitivity Analysis of Sobel Kernel Size

To determine the optimal configuration of the gradient-aware module, we conducted a sensitivity analysis on the size of the Sobel operator. Table 9 compares the performance achieved with three kernel sizes, and the smallest kernel yields the best performance on this benchmark. We posit that the smaller kernel excels at capturing the fine-grained, high-frequency details most pertinent to our task. Larger kernels offer a broader receptive field but may over-smooth these localized and critical features, leading to a noticeable performance drop.
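The section does not say how the larger Sobel kernels were constructed. One common construction for larger Sobel apertures (OpenCV, for example, builds its larger Sobel kernels this way) combines binomial smoothing in one direction with a binomially widened central difference in the other. The sketch below illustrates that construction under this assumption. The function names are hypothetical, and the sketch is not a description of the kernels used in Table 9.

```python
import math
import numpy as np


def binomial_row(n: int) -> np.ndarray:
    """Row n of Pascal's triangle, used as a 1D smoothing filter."""
    return np.array([math.comb(n, i) for i in range(n + 1)], dtype=np.float64)


def sobel_kernels(k: int):
    """Return (K_x, K_y) for an odd aperture k >= 3.

    K_x = outer(smoothing, derivative): binomial smoothing across rows and a
    central difference [-1, 0, 1] widened by binomial smoothing across columns.
    """
    if k < 3 or k % 2 == 0:
        raise ValueError("k must be an odd integer >= 3")
    smooth = binomial_row(k - 1)
    deriv = np.convolve([-1.0, 0.0, 1.0], binomial_row(k - 3))
    k_x = np.outer(smooth, deriv)
    return k_x, k_x.T


k3_x, _ = sobel_kernels(3)
print(k3_x.astype(int).tolist())     # [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
k5_x, _ = sobel_kernels(5)
print(k5_x[2].astype(int).tolist())  # [-6, -12, 0, 12, 6]
```

The smoothing term grows with the aperture. This is consistent with the hypothesis above that larger kernels blur the localized, high-frequency structure that fine-grained recognition depends on.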

Table 9.

Sensitivity Analysis of Sobel Kernel Size on the NABirds Dataset.

5. Conclusions

To address the insufficient exploitation of high-frequency details and the lack of effective local–global integration in fine-grained image recognition, this paper proposes a gradient-aware spatial attention framework. By explicitly modeling gradient signals, the method constructs a gradient-aware spatial attention mechanism that enables collaborative modeling of local discriminative details and global semantics. Experimental results demonstrate that the proposed approach improves accuracy and robustness across multiple fine-grained benchmark datasets, particularly under challenging conditions such as background clutter and limited training samples. This work explores a novel pathway for efficiently incorporating explicit visual priors into Transformer architectures, enhancing the model’s sensitivity to subtle structural differences and contributing to the interpretability of vision Transformers. The proposed paradigm provides insights for future research on multi-scale local prior modeling and hybrid attention mechanism design. In future work, we plan to integrate classic high-frequency detail enhancement techniques, such as Laplacian filtering, wavelet transforms and dedicated edge enhancement modules.

Furthermore, we point out that the method’s performance may be challenged in scenarios with: (1) extremely low-resolution inputs where gradient information becomes unreliable, (2) cases where critical discriminative features reside in color or spectral properties rather than structural patterns, and (3) situations with severe occlusion that obscures most structural details. Systematically studying these boundary conditions through more challenging test cases represents an important direction for future work.

The proposed gradient-aware spatial attention framework, validated on fine-grained image recognition tasks, shows potential for other application domains that require discriminating subtle structural differences. In medical imaging, it could enhance the identification of fine edges and texture patterns and improve the recognition of pathological cellular structures in histopathological slides. In industrial automated inspection, it could be deployed to detect microscopic defects such as hairline cracks on metal surfaces, micro-scratches on optical lenses, or faint anomalies in textiles. Adapting the framework to these domains by retraining on domain-specific data is a natural direction for future research.

Author Contributions

B.M.: conceptualization, methodology, data curation, project administration, writing, review; J.L.: methodology, supervision, writing, review; W.Z.: methodology, supervision, writing, review; Z.J.: methodology, supervision, writing, review; X.S.: supervision, review; B.J.: methodology, supervision, editing, funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the High-quality Development Special Project of the Ministry of Industry and Information Technology under Grant TC220H05V-W06; the Joint Fund of Henan Province Science and Technology R&D Program under Grants 245200810007, 225200810117, and 235200810035; and the Scientific and Technological Research Project of Henan Academy of Sciences under Grant 20252320005.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source code is available at: https://github.com/bingbeu/GASA.git (accessed on 10 December 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zheng, H.; Fu, J.; Zha, Z.J.; Luo, J. Learning deep bilinear transformation for fine-grained image representation. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://arxiv.org/abs/1911.03621 (accessed on 10 December 2025).

- He, J.; Chen, J.N.; Liu, S.; Kortylewski, A.; Yang, C.; Bai, Y.; Wang, C. TransFG: A transformer architecture for fine-grained recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 852–860.

- Diao, Q.; Jiang, Y.; Wen, B.; Sun, J.; Yuan, Z. MetaFormer: A unified meta framework for fine-grained recognition. arXiv 2022, arXiv:2203.02751.

- Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Learning Gabor texture features for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 1621–1631.

- Wang, J.; Xu, Q.; Jiang, B.; Luo, B.; Tang, J. Multi-Granularity Part Sampling Attention for Fine-Grained Visual Classification. IEEE Trans. Image Process. 2024, 33, 4529–4542.

- Chou, P.Y.; Kao, Y.Y.; Lin, C.H. Fine-grained visual classification with high-temperature refinement and background suppression. arXiv 2023, arXiv:2303.06442.

- Moran, J.; Desimone, R. Selective attention gates visual processing in the extrastriate cortex. Science 1985, 229, 782–784.

- Treisman, A.M.; Gelade, G. A feature-integration theory of attention. Cogn. Psychol. 1980, 12, 97–136.

- He, X.; Peng, Y. Fine-grained image classification via combining vision and language. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5994–6002.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019.

- Xu, Q.; Wang, J.; Jiang, B.; Luo, B. Fine-grained visual classification via internal ensemble learning transformer. IEEE Trans. Multimed. 2023, 25, 9015–9028.

- Chu, G.; Potetz, B.; Wang, W.; Howard, A.; Song, Y.; Brucher, F.; Leung, T.; Adam, H. Geo-aware networks for fine-grained recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

- Ding, Y.; Ma, Z.; Wen, S.; Xie, J.; Chang, D.; Si, Z.; Wu, M.; Ling, H. AP-CNN: Weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 2021, 30, 2826–2836.

- Do, T.; Tran, H.; Tjiputra, E.; Tran, Q.D.; Nguyen, A. Fine-grained visual classification using self assessment classifier. In Proceedings of the 2024 IEEE Conference on Artificial Intelligence (CAI), Singapore, 25–27 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 597–602.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. Available online: https://arxiv.org/abs/1706.03762 (accessed on 10 December 2025).

- Khan, R.; Raisa, T.F.; Debnath, R. An efficient contour based fine-grained algorithm for multi category object detection. J. Image Graph. 2018, 6, 127–136.

- Luo, W.; Yang, X.; Mo, X.; Lu, Y.; Davis, L.S.; Li, J.; Yang, J.; Lim, S.N. Cross-X learning for fine-grained visual categorization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8242–8251.

- Han, J.; Yao, X.; Cheng, G.; Feng, X.; Xu, D. P-CNN: Part-Based Convolutional Neural Networks for Fine-Grained Visual Categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 579–590.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Luong, M.T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. arXiv 2015, arXiv:1508.04025.

- Radford, A.; Narasimhan, K. Improving Language Understanding by Generative Pre-Training. OpenAI Technical Report, 2018.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Zhu, H.; Ke, W.; Li, D.; Liu, J.; Tian, L.; Shan, Y. Dual cross-attention learning for fine-grained visual categorization and object re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4692–4702.

- Tang, Y.; Han, K.; Guo, J.; Xu, C.; Li, Y.; Xu, C.; Wang, Y. An image patch is a wave: Phase-aware vision MLP. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10935–10944.

- Keshun, Y.; Yingkui, G.; Yanghui, L.; Yajun, W. A novel physical constraint-guided quadratic neural networks for interpretable bearing fault diagnosis under zero-fault sample. Nondestruct. Test. Eval. 2025, 1–31.

- Chen, Z.; Yang, J.; Chen, L.; Li, F.; Feng, Z.; Jia, L.; Li, P. RailVoxelDet: A Lightweight 3-D Object Detection Method for Railway Transportation Driven by Onboard LiDAR Data. IEEE Internet Things J. 2025, 12, 37175–37189.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. PVT v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Van Horn, G.; Mac Aodha, O.; Song, Y.; Cui, Y.; Sun, C.; Shepard, A.; Adam, H.; Perona, P.; Belongie, S. The iNaturalist species classification and detection dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8769–8778.

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset; Technical Report CNS-TR-2011-001; California Institute of Technology: Pasadena, CA, USA, 2011.

- Van Horn, G.; Branson, S.; Farrell, R.; Haber, S.; Barry, J.; Ipeirotis, P.; Perona, P.; Belongie, S. Building a bird recognition app and large scale dataset with citizen scientists: The fine print in fine-grained dataset collection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 595–604.

- Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3D object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 554–561.

- Park, S.; Hong, Y.; Heo, B.; Yun, S.; Choi, J.Y. The majority can help the minority: Context-rich minority oversampling for long-tailed classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6887–6896.

- Touvron, H.; Cord, M.; El-Nouby, A.; Verbeek, J.; Jégou, H. Three things everyone should know about vision transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 497–515.

- Cui, J.; Zhong, Z.; Tian, Z.; Liu, S.; Yu, B.; Jia, J. Generalized parametric contrastive learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 7463–7474.

- Liu, J.; Huang, X.; Zheng, J.; Liu, Y.; Li, H. MixMAE: Mixed and masked autoencoder for efficient pretraining of hierarchical vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6252–6261.

- Mac Aodha, O.; Cole, E.; Perona, P. Presence-only geographical priors for fine-grained image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9596–9606.

- Touvron, H.; Cord, M.; Sablayrolles, A.; Synnaeve, G.; Jégou, H. Going deeper with image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 32–42.

- Tian, C.; Wang, W.; Zhu, X.; Dai, J.; Qiao, Y. VL-LTR: Learning class-wise visual-linguistic representation for long-tailed visual recognition. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 73–91.

- Kim, D.; Heo, B.; Han, D. DenseNets reloaded: Paradigm shift beyond ResNets and ViTs. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 395–415.

- Girdhar, R.; Singh, M.; Ravi, N.; Van Der Maaten, L.; Joulin, A.; Misra, I. Omnivore: A single model for many visual modalities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16102–16112.

- Singh, M.; Gustafson, L.; Adcock, A.; Reis, V.d.F.; Gedik, B.; Kosaraju, R.P.; Mahajan, D.; Girshick, R.; Dollár, P.; van der Maaten, L. Revisiting weakly supervised pre-training of visual perception models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 804–814.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

- Ryali, C.; Hu, Y.T.; Bolya, D.; Wei, C.; Fan, H.; Huang, P.Y.; Aggarwal, V.; Chowdhury, A.; Poursaeed, O.; Hoffman, J.; et al. Hiera: A hierarchical vision transformer without the bells-and-whistles. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; PMLR: Birmingham, UK, 2023; pp. 29441–29454.

- Liu, H.; Zhang, C.; Deng, Y.; Xie, B.; Liu, T.; Li, Y.F. TransIFC: Invariant cues-aware feature concentration learning for efficient fine-grained bird image classification. IEEE Trans. Multimed. 2023, 27, 1677–1690.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks; IEEE Computer Society: Washington, DC, USA, 2016.

- Chang, D.; Tong, Y.; Du, R.; Hospedales, T.; Song, Y.-Z.; Ma, Z. An Erudite Fine-Grained Visual Classification Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Liu, M.; Zhang, C.; Bai, H.; Zhang, R.; Zhao, Y. Cross-part learning for fine-grained image classification. IEEE Trans. Image Process. 2021, 31, 748–758.

- Zhao, P.; Yang, S.; Ding, W.; Liu, R.; Xin, W.; Liu, X.; Miao, Q. Learning Multi-Scale Attention Network for Fine-Grained Visual Classification. J. Inf. Intell. 2025, 3, 492–503.

- Rao, Y.; Chen, G.; Lu, J.; Zhou, J. Counterfactual attention learning for fine-grained visual categorization and re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1025–1034.

- Tang, Z.; Yang, H.; Chen, C.Y.C. Weakly supervised posture mining for fine-grained classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23735–23744.

- Kim, S.; Nam, J.; Ko, B.C. ViT-NeT: Interpretable vision transformers with neural tree decoder. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; PMLR: Birmingham, UK, 2022; pp. 11162–11172.

- Jiang, X.; Tang, H.; Gao, J.; Du, X.; He, S.; Li, Z. Delving into multimodal prompting for fine-grained visual classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 2570–2578.

- Zhang, Z.C.; Chen, Z.D.; Wang, Y.; Luo, X.; Xu, X.S. A vision transformer for fine-grained classification by reducing noise and enhancing discriminative information. Pattern Recognit. 2024, 145, 109979.

- Bi, Q.; Zhou, B.; Ji, W.; Xia, G.S. Universal Fine-grained Visual Categorization by Concept Guided Learning. IEEE Trans. Image Process. 2025, 34, 394–409.

- Zhao, Y.; Yan, K.; Huang, F.; Li, J. Graph-based high-order relation discovery for fine-grained recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15079–15088.

- Song, Y.; Sebe, N.; Wang, W. On the Eigenvalues of Global Covariance Pooling for Fine-Grained Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3554–3566.

- Du, R.; Xie, J.; Ma, Z.; Chang, D.; Song, Y.Z.; Guo, J. Progressive learning of category-consistent multi-granularity features for fine-grained visual classification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9521–9535.

- Ke, X.; Cai, Y.; Chen, B.; Liu, H.; Guo, W. Granularity-aware distillation and structure modeling region proposal network for fine-grained image classification. Pattern Recognit. 2023, 137, 109305.

- Shi, Y.; Hong, Q.; Yan, Y.; Li, J. LDH-ViT: Fine-grained visual classification through local concealment and feature selection. Pattern Recognit. 2025, 161, 111224.

- Imran, A.; Athitsos, V. Domain adaptive transfer learning on visual attention aware data augmentation for fine-grained visual categorization. In Proceedings of the Advances in Visual Computing: 15th International Symposium, ISVC 2020, San Diego, CA, USA, 5–7 October 2020; Proceedings, Part II 15. Springer: Berlin/Heidelberg, Germany, 2020; pp. 53–65.

- Yao, H.; Miao, Q.; Zhao, P.; Li, C.; Li, X.; Feng, G.; Liu, R. Exploration of class center for fine-grained visual classification. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9954–9966.

- Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. MetaFormer is actually what you need for vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10819–10829.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).