Abstract

Table detection is a preliminary step in extracting reliable information from tables in scanned document images. We present CasTabDetectoRS, a novel end-to-end trainable table detection framework that operates on Cascade Mask R-CNN and incorporates a Recursive Feature Pyramid network and Switchable Atrous Convolution into the existing backbone architecture. By utilizing a comparatively lightweight ResNet-50 backbone, this paper demonstrates that superior results are attainable without relying on pre- and post-processing methods, heavier backbone networks (ResNet-101, ResNeXt-152), or memory-intensive deformable convolutions. We evaluate the proposed approach on five different publicly available table detection datasets. Our CasTabDetectoRS outperforms the previous state-of-the-art results on four datasets (ICDAR-19, TableBank, UNLV, and Marmot) and accomplishes comparable results on ICDAR-17 POD. Compared with previous state-of-the-art results, we obtain a significant relative error reduction of , , , and on the datasets of ICDAR-19, TableBank, UNLV, and Marmot, respectively. Furthermore, this paper sets a new benchmark by performing exhaustive cross-dataset evaluations to exhibit the generalization capabilities of the proposed method.

1. Introduction

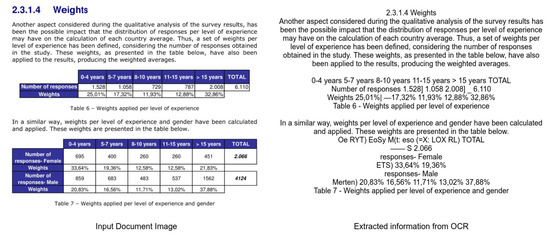

The process of digitizing documents has received significant attention in various domains, such as the industrial, academic, and commercial sectors. Digitization facilitates extracting information without manual intervention. Apart from text, documents contain graphical page objects, such as tables, figures, and formulas [1,2]. Although modern Optical Character Recognition (OCR) systems [3,4,5] can extract information from scanned documents, they fail to interpret information from graphical page objects [6,7,8,9]. Figure 1 exhibits the problem of extracting tabular information from a document by applying the open-source Tesseract OCR [10]. Even this state-of-the-art OCR system fails to parse information from tables in document images. Therefore, accurate table detection systems for document images are essential for complete table analysis.

Figure 1.

Illustration of the need for table detection before extracting information from document images. We apply the open-source Tesseract OCR [10] to a document image containing two tables. Although it extracts the textual content, the OCR system fails to interpret the information in the tables.

Accurate table detection in document images is still an open problem in the research community [8,11,12,13,14]. The high intra-class variance (arbitrary layouts of tables, varying presence of ruling lines) and low inter-class variance (figures, charts, and algorithms with horizontal and vertical lines that look like tables) make classifying and localizing tables in document images even more challenging. Owing to these intricacies, methods based on custom heuristics fail to produce robust solutions [15,16].

Prior works have tackled the challenges of table detection by leveraging meta-data or utilizing morphological information from tables. However, these methods are vulnerable in the case of scanned document images [17,18]. Later, deep learning-based approaches to table detection in document images have shown remarkable improvement over the past few years [8]. Intuitively, table detection has been formulated as an object detection problem [7,19,20,21], in which the table is the target object in a document image rather than in a natural scene image. Consequently, the rapid progress in object detection algorithms has led to extraordinary improvements in state-of-the-art table detection systems [11,12,13,20]. However, prior approaches struggle to predict precise tabular boundaries across different datasets. Moreover, they either rely on external pre-/post-processing methods to further refine their predictions [11,13] or incorporate memory-intensive deformable convolutions [12,20]. Furthermore, prior state-of-the-art methods relied on heavy, high-resolution backbones, such as ResNeXt-101 [22] and HRNet [23], which make training expensive.

To tackle the aforementioned issues in existing approaches, we present CasTabDetectoRS, a novel end-to-end trainable object detection pipeline that incorporates Recursive Feature Pyramids (RFP) and Switchable Atrous Convolutions (SAC) [24] into Cascade Mask R-CNN [25] to detect tables in document images. Furthermore, this paper empirically establishes that generic and robust table detection systems can be built without depending on pre-/post-processing methods or heavy backbone networks.

To summarize, the main contributions of this work are as follows:

- We present CasTabDetectoRS, a novel deep learning-based table detection approach that operates on Cascade Mask R-CNN equipped with a recursive feature pyramid and switchable atrous convolution.

- We show experimentally that custom heuristics and heavier backbone networks are not required to achieve superior table detection results in scanned document images.

- We accomplish state-of-the-art results on four publicly available table detection datasets: ICDAR-19, TableBank, Marmot, and UNLV.

- We demonstrate the generalization capabilities of the proposed CasTabDetectoRS through exhaustive cross-dataset evaluation.

The remainder of this paper is structured as follows. Section 2 categorizes the prior literature into rule-based, learning-based, and object detection-based methods. Section 3 describes the proposed table detection pipeline, covering all the essential modules: RFP (Section 3.1), SAC (Section 3.2), and Cascade Mask R-CNN (Section 3.3). Section 4 presents a comprehensive overview of the employed datasets, experimental details, and evaluation criteria, along with quantitative and qualitative analysis, followed by a comparison with previous state-of-the-art results and a cross-dataset evaluation. Section 5 concludes the paper and outlines possible future directions.

2. Related Work

The problem of table detection in documents has been investigated over the past few decades [16,26]. Early researchers employed rule-based systems to detect tables [16,26,27,28,29]. Afterwards, researchers exploited statistical learning, mainly machine learning-based approaches, which were eventually replaced by deep learning-based methods [7,8,11,12,19,20,30,31,32,33,34].

2.1. Rule-Based Methods

To the best of our knowledge, Itonori et al. [26] addressed the problem of table detection in document images by employing a rule-based method. Their approach leveraged the arrangement of text blocks and the position of ruling lines to detect tables in documents. Chandran and Kasturi [27] proposed another method that operates on ruling lines to detect tables. Similarly, Pyreddy and Croft [35] published a heuristics-based table detection method that first identifies structural elements in a document and then filters the tables.

Researchers have defined tabular layouts and grammars to detect tables in documents [29,36]. The correlation of white spaces and vertical connected component analysis has been employed to predict tables [37]. Pivk et al. [36] proposed another method that transforms tables present in HTML documents into a logical structure. Shigarov et al. [18] capitalized on the meta-data from PDF files and treated each word as a block of text. Their method reconstructed the tabular boundaries by leveraging the bounding box of each word.

We refer readers to References [15,16,38,39,40] for a thorough understanding of these rule-based methods. Although prior rule-based systems detect tables in documents with limited layout patterns, they rely on manual intervention to find optimal rules. Furthermore, they fail to produce generic solutions.

2.2. Learning-Based Methods

As in computer vision, the domain of table analysis has experienced notable progress after incorporating learning-based methods. Initially, researchers investigated machine learning-based methods to resolve table detection in document images. Kieninger and Dengel [41] applied unsupervised learning to improve table detection in documents. Later, Cesarini et al. [42] employed a supervised learning-based system to find tables in documents. Their system converts a document into an MXY tree representation and then predicts tables by searching for blocks that are surrounded by ruling lines. Kasar et al. [43] proposed a combination of an SVM classifier and custom heuristics to resolve table detection in documents. Researchers have also explored Hidden Markov Models (HMMs) to localize tabular areas in documents [44,45]. Even though machine learning-based approaches advanced research on table detection in documents, they require external meta-data to make reliable predictions. Moreover, they fail to provide generic solutions for document images.

As in computer vision, deep learning has made a remarkable impact on table analysis in document images [2,8]. To the best of our knowledge, Hao et al. [46] introduced the idea of using a Convolutional Neural Network (CNN) to identify spatial features from document images. The authors merged these features with extracted meta-data to predict tables in PDF documents.

Although researchers have employed Fully Convolutional Network (FCN) [47,48] and Graph Neural Network (GNN) [34,49] to perform table detection in document images, object detection-based approaches [7,8,11,12,19,20,30,31,32,33,34] have delivered state-of-the-art results.

2.3. Table Detection as an Object Detection Problem

There has been a direct relationship between the progress of object detection networks in computer vision and that of table detection in document images [8]. Gilani et al. [19] formulated table detection as an object detection problem by applying Faster R-CNN [50] to detect tables in document images. Their work employed distance transform methods to modify the pixels of raw document images before feeding them to Faster R-CNN.

Later, Schreiber et al. [7] presented another method that exploits Faster R-CNN [50] with pre-trained base networks (ZFNet [51] and VGG-16 [52]) to detect tables in document images. Furthermore, Siddiqui et al. [20] published another Faster R-CNN-based method equipped with deformable convolutions [53] to detect tables with arbitrary layouts. Moreover, in Reference [33], the authors combined Faster R-CNN with a corner locating approach to improve the predicted tabular boundaries in document images.

Saha et al. [54] empirically established that Mask R-CNN [55] produces better results than Faster R-CNN [50] in detecting tables, figures, and formulas. Zhong et al. [56] reached a similar conclusion by applying Mask R-CNN to localize tables. Moreover, YOLO [57], SSD [58], and RetinaNet [59] have been employed to exhibit the benefits of closed-domain fine-tuning for table detection in document images.

Recently, researchers have incorporated novel object detection algorithms, such as Cascade Mask R-CNN [25] and Hybrid Task Cascade (HTC) [60], to improve the performance of table detection systems in document images [11,12,13,14]. Although these methods have advanced the state of the art, there is significant room for improvement in localizing accurate tabular boundaries in scanned document images. Furthermore, existing table detection methods either rely on heavier backbones or incorporate memory-intensive deformable convolutions. In contrast, this paper shows that state-of-the-art results on table detection in scanned document images can be achieved by combining a relatively small backbone network with recursive feature pyramid networks and switchable atrous convolutions.

3. Method

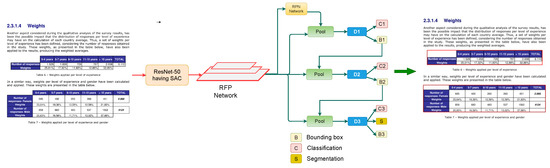

The presented approach incorporates RFP and SAC into a Cascade Mask R-CNN to detect tables in scanned document images, as shown in Figure 2. Section 3.1 discusses the RFP module, whereas Section 3.2 describes the SAC module. Section 3.3 describes the employed Cascade Mask R-CNN, along with a complete description of the proposed pipeline.

Figure 2.

Presented table detection framework consisting of Cascade Mask R-CNN, incorporating RFP and SAC in backbone network (ResNet-50). The modules RFP and SAC are illustrated in separate figures.

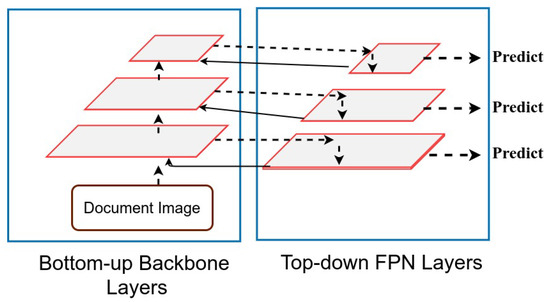

3.1. Recursive Feature Pyramids

Instead of traditional Feature Pyramid Networks (FPN) [61], our table detection framework incorporates Recursive Feature Pyramids (RFP) [24] to improve the processing of feature maps. To describe the conventional FPN, let $\mathbf{B}_j$ denote the j-th stage of a bottom-up backbone network, and let $\mathbf{F}_j$ denote the j-th top-down FPN operation. A backbone network equipped with FPN produces a set of feature maps $\{f_j \mid j = 1, \ldots, S\}$, where S is the number of stages. For instance, Figure 3 shows a backbone network with S = 3 stages. The output feature $f_j$ is given by:

$$ f_j = \mathbf{F}_j\left(f_{j+1}, x_j\right), \qquad x_j = \mathbf{B}_j\left(x_{j-1}\right), $$

where j iterates over 1, …, S, $x_0$ represents the input image, and $f_{S+1}$ is set to 0. In the case of RFP, feedback connections are added to the conventional FPN, as illustrated in Figure 3 with solid black arrows. Letting $\mathbf{R}_j$ denote the feature transformations applied before the feedback connections are joined from the FPN back to the bottom-up backbone, the output feature of RFP is defined in Reference [24] as:

$$ f_j = \mathbf{F}_j\left(f_{j+1}, x_j\right), \qquad x_j = \mathbf{B}_j\left(x_{j-1}, \mathbf{R}_j(f_j)\right), $$

where j = 1, …, S. The feedback connections make the FPN a recursive function. Unrolling the RFP into a sequential network gives:

$$ f_j^t = \mathbf{F}_j^t\left(f_{j+1}^t, x_j^t\right), \qquad x_j^t = \mathbf{B}_j^t\left(x_{j-1}^t, \mathbf{R}_j^t\left(f_j^{t-1}\right)\right), $$

where t = 1, …, U, and U is the number of unrolled steps. The superscript t denotes the operations and features at unrolled step t. We empirically set U = 2 in our experiments. For a comprehensive explanation of the RFP module, please refer to Reference [24].
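To make the data flow of the unrolled RFP concrete, the toy sketch below runs the recursion x_j^t = B_j(x_{j-1}^t, R_j(f_j^{t-1})) and f_j^t = F_j(f_{j+1}^t, x_j^t) with scalar "features". The stand-in functions for B, F, and R are hypothetical and chosen only so the arithmetic is easy to follow; in the real network they are convolutional stages and transformations. The sketch also assumes f_j^0 = 0 (so the first step reduces to a plain FPN) and reuses the same functions at every unrolled step.

```python
from typing import Callable, List


def rfp_unrolled(x0: float, S: int, U: int,
                 B: Callable[[int, float, float], float],
                 F: Callable[[int, float, float], float],
                 R: Callable[[int, float], float]) -> List[float]:
    """Return [f_1, ..., f_S] after U unrolled RFP steps (toy scalar version)."""
    f_prev = [0.0] * (S + 2)  # f_j^{t-1}; assumed to be zero before the first step
    for _t in range(1, U + 1):
        # Bottom-up pass: stage j also receives the transformed feedback R_j(f_j^{t-1}).
        x = [x0] + [0.0] * S
        for j in range(1, S + 1):
            x[j] = B(j, x[j - 1], R(j, f_prev[j]))
        # Top-down FPN pass, with f_{S+1} = 0.
        f = [0.0] * (S + 2)
        for j in range(S, 0, -1):
            f[j] = F(j, f[j + 1], x[j])
        f_prev = f
    return f_prev[1:S + 1]


# Hypothetical stand-ins for the backbone stage, FPN operation, and feedback transform.
def B(j: int, x_prev: float, feedback: float) -> float:
    return x_prev + feedback + 1.0


def F(j: int, f_next: float, x_j: float) -> float:
    return f_next + x_j


def R(j: int, f_j: float) -> float:
    return f_j


print(rfp_unrolled(0.0, S=3, U=1, B=B, F=F, R=R))  # [6.0, 5.0, 3.0]
print(rfp_unrolled(0.0, S=3, U=2, B=B, F=F, R=R))  # [37.0, 30.0, 17.0]
```

With U = 1 the function behaves like a conventional FPN; with U = 2 (the setting used in our experiments) the second bottom-up pass sees the first pass's pyramid features, which is why every output changes.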

Figure 3.

Design of the Recursive Feature Pyramid module. The Recursive Feature Pyramid includes feedback connections, highlighted with solid lines. The top-down FPN layers send feedback to the bottom-up backbone layers, so that the backbone inspects the image twice.

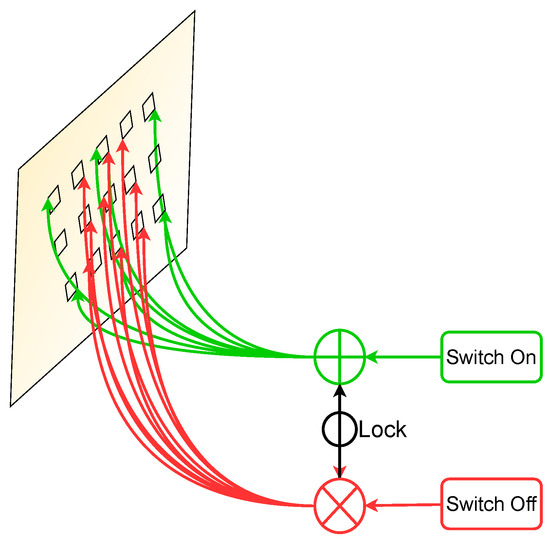

3.2. Switchable Atrous Convolution

We replace the conventional convolutions in the ResNet [62] backbone network and the FPN with SAC. Atrous convolution, also referred to as dilated convolution [63], enlarges the effective receptive field by introducing an atrous rate. With an atrous rate l, l − 1 zeros are inserted between consecutive filter values. Consequently, a k × k kernel enlarges to an effective size of $k_e \times k_e$, where $k_e = k + (k - 1)(l - 1)$, without any change in the number of network parameters. Figure 4 depicts an example of a 3 × 3 atrous convolution with an atrous rate of 1 (displayed in red) and an atrous rate of 2 (displayed in green).
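The zero-insertion described above can be checked on a single kernel row. The minimal sketch below (helper names are illustrative, not from any library) inserts l − 1 zeros between consecutive weights and computes the effective extent k_e = k + (k − 1)(l − 1); a 2D kernel is dilated the same way along each axis.

```python
def dilate_kernel(kernel, rate):
    """Insert (rate - 1) zeros between consecutive values of a 1-D kernel."""
    dilated = []
    for i, value in enumerate(kernel):
        dilated.append(value)
        if i < len(kernel) - 1:
            dilated.extend([0] * (rate - 1))
    return dilated


def effective_kernel_size(k, rate):
    """Effective extent k_e = k + (k - 1)(rate - 1) of a dilated k-tap kernel."""
    return k + (k - 1) * (rate - 1)


w = [1, 2, 3]                         # one row of a 3 x 3 kernel
print(dilate_kernel(w, 1))            # [1, 2, 3]
print(dilate_kernel(w, 2))            # [1, 0, 2, 0, 3]
print(effective_kernel_size(3, 3))    # 7
```

The number of non-zero (trainable) weights stays at k regardless of the rate, which is why the receptive field grows without adding parameters.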

Figure 4.

Illustration of Switchable Atrous Convolution. The red symbol ⨂ depicts atrous convolutions with an atrous rate of 1, whereas the green symbol ⨁ denotes an atrous rate of 2 in a 3 × 3 convolutional layer.

To convert a convolutional layer into SAC, we denote the basic atrous convolution operation by Conv, which takes an input i, weights w, and an atrous rate l, and outputs y:

$$ y = \mathrm{Conv}(i, w, l) $$

Following SAC as described in Reference [24], a standard convolutional layer is converted into:

$$ \mathrm{Conv}(i, w, 1) \;\longrightarrow\; S(i)\cdot\mathrm{Conv}(i, w, 1) + \big(1 - S(i)\big)\cdot\mathrm{Conv}(i, w + \Delta w, l) $$

where S(·) is the switch function, implemented as an average pooling layer with a 5 × 5 kernel followed by a 1 × 1 convolutional layer. The symbol Δw denotes a trainable weight, and l is a hyper-parameter. Owing to the switch function, our backbone network adapts to tables at arbitrary scales, removing the need for deformable convolutions [53]. We empirically set the atrous rate l to 3 in our experiments. Moreover, we adopt the locking mechanism [24], setting one weight to w and the other to w + Δw, in order to exploit the backbone network pre-trained on the MS-COCO dataset [64]. Initially, Δw is set to 0, and w is set according to the pre-trained weights. We refer readers to Reference [24] for a detailed explanation of SAC.
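For readers who prefer code, the following is a minimal PyTorch sketch of a switchable atrous convolution computing S(i)·Conv(i, w, 1) + (1 − S(i))·Conv(i, w + Δw, l), with a 5 × 5 average-pooling plus 1 × 1 convolution switch and Δw initialized to 0. It is an illustrative simplification, not the DetectoRS or MMDetection implementation: it assumes stride 1, no bias, no extra global-context modules, and an unconstrained switch output, and it uses a random initialization where the real backbone would load pre-trained weights.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SwitchableAtrousConv2d(nn.Module):
    """Sketch of SAC: S(i) * Conv(i, w, 1) + (1 - S(i)) * Conv(i, w + dw, l).

    Simplifying assumptions: stride 1, no bias, no global-context modules,
    and the switch output is not constrained to [0, 1].
    """

    def __init__(self, in_ch: int, out_ch: int, kernel_size: int = 3, atrous_rate: int = 3):
        super().__init__()
        self.rate = atrous_rate
        self.pad = kernel_size // 2
        # Shared weight w (random here; a real backbone would load pre-trained values).
        self.weight = nn.Parameter(torch.empty(out_ch, in_ch, kernel_size, kernel_size))
        nn.init.kaiming_uniform_(self.weight, a=5 ** 0.5)
        # Locking mechanism: the large-rate branch uses w + dw, with dw starting at 0.
        self.delta_w = nn.Parameter(torch.zeros_like(self.weight))
        # Switch S: 5x5 average pooling followed by a 1x1 convolution to one channel.
        self.switch = nn.Sequential(
            nn.AvgPool2d(kernel_size=5, stride=1, padding=2),
            nn.Conv2d(in_ch, 1, kernel_size=1),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        s = self.switch(x)  # (N, 1, H, W), broadcast over output channels
        small = F.conv2d(x, self.weight, padding=self.pad, dilation=1)
        # Padding scaled by the rate keeps the spatial size unchanged for odd kernels.
        large = F.conv2d(x, self.weight + self.delta_w,
                         padding=self.pad * self.rate, dilation=self.rate)
        return s * small + (1 - s) * large


sac = SwitchableAtrousConv2d(in_ch=4, out_ch=8, atrous_rate=3)
y = sac(torch.randn(2, 4, 32, 32))
print(y.shape)  # torch.Size([2, 8, 32, 32])
```

Because both branches share w (up to Δw), a pre-trained convolution can be dropped in as w, and the switch learns per-location how much of the larger receptive field to use.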

3.3. Cascade Mask R-CNN

To investigate the effectiveness of Recursive Feature Pyramid (RFP) and Switchable Atrous Convolution (SAC) modules on the task of table detection in scanned document images, we fuse these components into a cascade Mask R-CNN. The cascade Mask R-CNN is a direct combination of Mask R-CNN [55] and a recently proposed Cascade R-CNN [25].

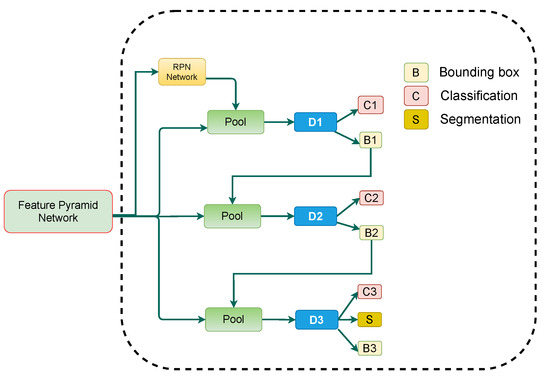

As depicted in Figure 5, the architecture of our Cascade Mask R-CNN closely follows the cascaded architecture introduced in Reference [25], with the addition of a segmentation branch at the final network head [55]. The proposed CasTabDetectoRS consists of three detectors operating at increasing IoU (Intersection over Union) thresholds of 0.5, 0.6, and 0.7, respectively. Region of Interest (ROI) pooling takes the learned proposals from the Region Proposal Network (RPN) and propagates the extracted ROI features to a series of network heads. The first network head receives the ROI features and performs classification and regression. The output of each detector serves as the input for the subsequent detector. Therefore, the predictions of the later stages are refined and less prone to false positives. Furthermore, each regressor is trained on the localization distribution produced by the previous regressor instead of the initial proposal distribution. This enables a network head operating at a higher IoU threshold to predict better-localized bounding boxes. In the final stage of the cascade, along with regression and classification, the network performs segmentation to further refine the final predictions.
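The toy sketch below illustrates why a cascade can use rising IoU thresholds (0.5, 0.6, 0.7): each stage labels a box against its own threshold and then hands a refined box to the next stage. The `refine` function is a hypothetical stand-in for a learned box regressor (it simply moves every coordinate halfway toward the ground truth); it is not the network's actual regression head.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def refine(box: Box, gt: Box, step: float = 0.5) -> Box:
    """Hypothetical regressor: move each coordinate a fraction `step` toward the ground truth."""
    return tuple(b + step * (g - b) for b, g in zip(box, gt))


def run_cascade(proposal: Box, gt: Box,
                thresholds=(0.5, 0.6, 0.7)) -> List[Tuple[float, float, bool]]:
    """Per stage: (threshold, IoU of the incoming box, labeled positive?)."""
    box, history = proposal, []
    for thr in thresholds:
        overlap = iou(box, gt)
        history.append((thr, round(overlap, 3), overlap >= thr))
        box = refine(box, gt)  # the next stage receives the refined box
    return history


gt, proposal = (0, 0, 100, 100), (30, 0, 130, 100)
print(run_cascade(proposal, gt))  # [(0.5, 0.538, True), (0.6, 0.739, True), (0.7, 0.86, True)]
```

The raw proposal (IoU ≈ 0.538) would be a negative for a single detector trained at 0.7, but after two refinement steps it becomes a valid positive for the last stage, which is the intuition behind training later heads at higher thresholds.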

Figure 5.

Architecture of the Cascade Mask R-CNN module employed in the proposed pipeline. The dotted boundary outlines the two-stage detection phase of Cascade Mask R-CNN.

As illustrated in Figure 2, the proposed CasTabDetectoRS employs ResNet-50 [62] as the backbone network. The lightweight ResNet-50 backbone equipped with SAC generates feature maps from the input scanned document image. The extracted feature maps are passed to the RFP, which refines the features by leveraging feedback connections. Subsequently, these refined features are passed to the RPN, which estimates candidate regions of interest. In the first stage of the cascade, the network head takes the proposals from the RPN and the feature maps from the FPN module and performs regression and classification with an IoU threshold of 0.5. The subsequent stages of Cascade Mask R-CNN further refine the predicted bounding boxes with increasing IoU thresholds. Analogous to Reference [55], the network in the final cascaded stage segments the object within each bounding box, along with classification and regression.

4. Experimental Results

4.1. Datasets

4.1.1. ICDAR-17 POD

The Page Object Detection (POD) competition [1] was organized at ICDAR 2017 and yielded the ICDAR-2017 POD dataset. The dataset contains bounding box annotations for tables, formulas, and figures. Of the 2417 images in the dataset, 1600 images are used to fine-tune our network, and 817 images are used as the test set. Since previous methods [12,20,30] have reported results at varying IoU thresholds, we present our results with IoU thresholds ranging from 0.5 to 0.9 to allow a direct comparison with prior methods. A couple of samples from this dataset are illustrated in Figure 6.

Figure 6.

Sample document images from the ICDAR-17 POD dataset [1]. The red boundary represents the tabular area in document images.

4.1.2. ICDAR-19

Another competition, on Table Detection and Recognition (cTDaR) [65], was organized at ICDAR 2019. For the table detection task (Track A), two new datasets (historical and modern) were introduced in the competition. The historical dataset comprises handwritten accounting ledgers and train timetables, whereas the modern dataset consists of scientific papers, forms, and commercial documents. To allow a direct comparison against the prior state-of-the-art [11], we report results on the modern dataset with IoU thresholds ranging from 0.5 to 0.9. Figure 7 depicts a pair of instances from this dataset.

Figure 7.

Sample document images from the ICDAR 19 Track A (Modern) dataset [65]. The red boundary highlights the tabular area in document images.

4.1.3. TableBank

Currently, TableBank [66] is one of the largest publicly available datasets for table detection in document images. The dataset comprises 417K annotated document images obtained by crawling documents from the arXiv database. Note that we take 1500 images from each of the Word and LaTeX splits and 3000 images from the Word + LaTeX split, which allows a straightforward comparison with earlier state-of-the-art results [11]. For a visual aid, a couple of samples from this dataset are shown in Figure 8.

Figure 8.

Sample document images from the TableBank dataset [66]. The red boundary outlines the tabular area in document images.

4.1.4. UNLV

The UNLV [67] dataset comprises scanned document images collected from commercial documents, research papers, and magazines. The dataset has around 10K images, of which only 427 contain tables. Since prior state-of-the-art methods [20] used only the images containing tables, we follow the identical split for direct comparison. Figure 9 depicts a pair of document images from the UNLV dataset.

Figure 9.

Sample document images from the UNLV dataset [67]. The red boundary marks the tabular area in document images.

4.1.5. Marmot

Marmot [68] was formerly one of the most widely used datasets in the table detection community. This dataset was published by the Institute of Computer Science and Technology (Peking University) and contains samples collected from Chinese and English conference papers. The dataset consists of 2K images with an almost 1:1 ratio of positive to negative samples. For direct comparison with previous work [20], we use the cleaned version of the dataset provided by Reference [7] and do not include any sample of the dataset in the training set. A couple of instances from the Marmot dataset are shown in Figure 10.

Figure 10.

Sample document images from the Marmot dataset [68]. The red boundary denotes the tabular area in document images.

4.2. Implementation Details

We implement CasTabDetectoRS in PyTorch by leveraging the MMDetection framework [69]. Our table detection method operates on a ResNet-50 backbone network [62] pre-trained on ImageNet [70]. Furthermore, we convert all the conventional convolutions in the bottom-up backbone network to SAC. We closely follow the experimental configuration of Cascade Mask R-CNN [25] for training. All input document images are resized to a maximum size of 1200 × 800 while preserving the original aspect ratio. We train all models for 14 epochs with an initial learning rate of 0.0025, which decays by a factor of 0.1 after the sixth and tenth epochs. We set the IoU thresholds of the three R-CNN stages to 0.5, 0.6, and 0.7, respectively. We use a single anchor scale of 8, and the anchor ratios are set to [0.5, 1.0, 2.0]. We train all the models with a batch size of 1 on an NVIDIA GeForce RTX 1080 Ti GPU with 12 GB memory (Santa Clara, CA, USA).
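Two of the settings above reduce to simple arithmetic, sketched here in plain Python. The step schedule counts epochs from 0, so epochs 0 to 5 use the base rate. The resize helper assumes the keep-ratio rule common in detection toolkits: "1200 × 800" caps the long side at 1200 and the short side at 800, and the scale is the tighter of the two limits. Both helper names are hypothetical, and the sketch omits any learning-rate warm-up the actual configuration may use.

```python
def lr_at_epoch(epoch: int, base_lr: float = 0.0025,
                milestones=(6, 10), gamma: float = 0.1) -> float:
    """Step decay: multiply by gamma once for every milestone already reached (0-indexed epochs)."""
    return base_lr * gamma ** sum(epoch >= m for m in milestones)


def keep_ratio_size(width: int, height: int, max_long: int = 1200, max_short: int = 800):
    """Rescale so the long side <= max_long and the short side <= max_short, keeping the aspect ratio."""
    scale = min(max_long / max(width, height), max_short / min(width, height))
    return round(width * scale), round(height * scale)


for epoch in range(14):
    print(epoch, lr_at_epoch(epoch))

print(keep_ratio_size(1000, 2000))  # (600, 1200): the long side is the binding limit
print(keep_ratio_size(1600, 1000))  # (1200, 750)
```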

4.3. Evaluation Protocol

Analogous to prior table detection methods on scanned document images [7,8,11,12,19,20,30,31,32,33], we assess the performance of our CasTabDetectoRS in terms of precision, recall, and F1-score. We report the IoU threshold values along with the achieved results for direct comparison with existing approaches.

4.3.1. Precision

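Precision [71] is the ratio of true positive samples to all predicted samples. Mathematically, it is calculated as:

$$ \mathrm{Precision} = \frac{TP}{TP + FP} $$

where TP and FP denote the numbers of true positives and false positives, respectively.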

4.3.2. Recall

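Recall [71] is defined as the ratio of true positives to all positive samples in the ground truth. It is calculated as:

$$ \mathrm{Recall} = \frac{TP}{TP + FN} $$

where TP denotes true positives and FN denotes false negatives (ground-truth tables that are not detected).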

4.3.3. F1-Score

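The F1-score [71] is defined as the harmonic mean of precision and recall. Mathematically, it is given by:

$$ \mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} $$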
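A minimal sketch of the three metrics computed from detection counts. The counts used below are hypothetical and only illustrate the arithmetic; they are not results from this paper.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN), F1 = harmonic mean of the two."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts, for illustration only.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.9 0.75 0.818
```

The harmonic mean is pulled toward the smaller of the two values, so a detector cannot reach a high F1-score by trading all its recall for precision or vice versa.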

4.3.4. Intersection over Union

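Intersection over Union (IoU) [72] measures the overlap between a predicted region and the ground-truth region as the ratio of their intersection area to their union area. The formula for the calculation of IoU is:

$$ \mathrm{IoU} = \frac{\lvert P \cap G \rvert}{\lvert P \cup G \rvert} $$

where P is the predicted bounding box and G is the ground-truth bounding box.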
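The sketch below shows how IoU ties the box-level predictions to the precision and recall counts at a given threshold. The matching rule (greedy one-to-one assignment in descending IoU order) is an assumption made for illustration; the exact protocol depends on each benchmark's official evaluation script.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: List[Box], gts: List[Box], thr: float) -> Tuple[int, int, int]:
    """Greedy one-to-one matching by descending IoU (assumed protocol); returns (TP, FP, FN)."""
    pairs = sorted(
        ((iou(p, g), i, j) for i, p in enumerate(preds) for j, g in enumerate(gts)),
        reverse=True,
    )
    matched_p, matched_g = set(), set()
    for overlap, i, j in pairs:
        if overlap < thr:
            break  # all remaining pairs overlap even less
        if i not in matched_p and j not in matched_g:
            matched_p.add(i)
            matched_g.add(j)
    tp = len(matched_p)
    return tp, len(preds) - tp, len(gts) - tp


gts = [(0, 0, 100, 100), (0, 200, 100, 260)]
preds = [(10, 0, 110, 100), (300, 300, 350, 350)]
print(round(iou(preds[0], gts[0]), 3))     # 0.818
print(count_matches(preds, gts, thr=0.5))  # (1, 1, 1)
print(count_matches(preds, gts, thr=0.9))  # (0, 2, 2)
```

Raising the threshold from 0.5 to 0.9 turns the slightly shifted prediction from a true positive into both a false positive and a missed table, which is why results at higher IoU thresholds are a stricter test of boundary localization.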

4.4. Result and Discussion

To evaluate the performance of the proposed CasTabDetectoRS, we report the results on five different publicly available table detection datasets. This section presents a comprehensive quantitative and qualitative analysis of our presented approach on all the datasets.

4.4.1. ICDAR-17 POD

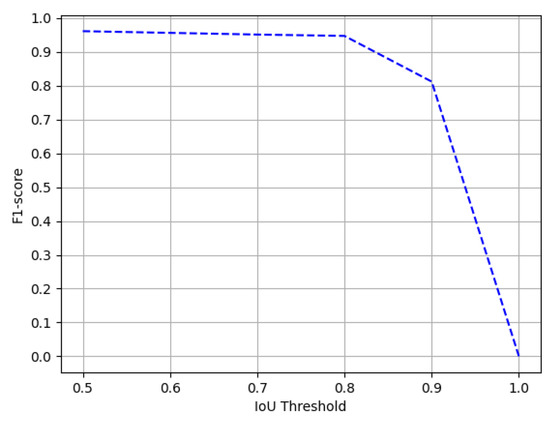

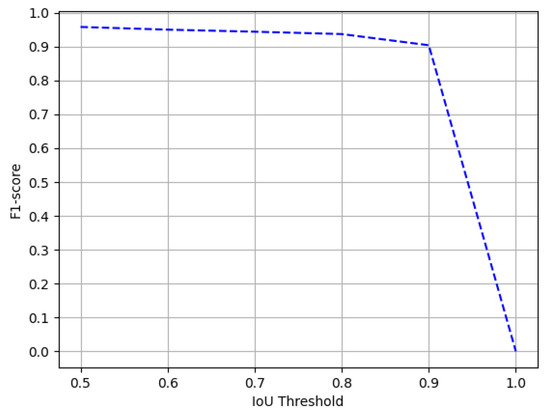

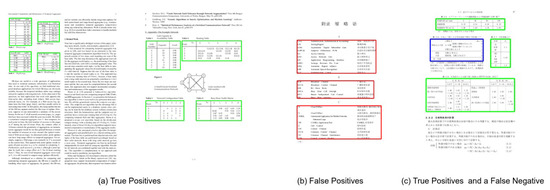

The ICDAR-17 POD challenge dataset consists of 817 images with 317 tables in the test set. For direct comparison with previous entries in the competition [1] and previous state-of-the-art results, we report results at IoU thresholds of 0.6 and 0.8. Table 1 summarizes the results achieved by our model. At an IoU threshold of 0.6, our CasTabDetectoRS achieves a precision of , recall of , and F1-score of . When the IoU threshold is increased from 0.6 to 0.8, the performance of our network drops only slightly, with a precision of , recall of , and F1-score of . Furthermore, Figure 11 illustrates the effect of various IoU thresholds on our table detection system. The qualitative performance of our proposed method on the ICDAR-17 POD dataset is highlighted in Figure 12. An analysis of the incorrect results reveals that errors arise when the network fails to localize precise tabular boundaries or produces false positives.

Table 1.

Performance comparison between the proposed CasTabDetectoRS and previous state-of-the-art results on table detection dataset of ICDAR-17 POD. Best results are highlighted in the table.

Figure 11.

Performance evaluation of our CasTabDetectoRS in terms of F1-score over the varying IoU thresholds ranging from 0.5 to 1.0 on the ICDAR-2017-POD table detection dataset.

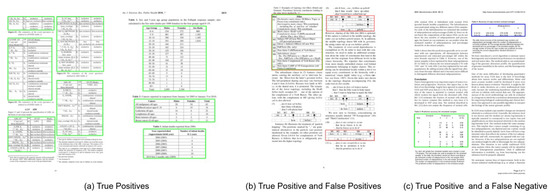

Figure 12.

CasTabDetectoRS results on the ICDAR-2017 POD table detection dataset. Green represents true positive, red denotes false positive, and blue color highlights false negative. In this figure, (a) represents a couple of samples containing true positives, (b) highlights true positive and false positives, and (c) depicts a true positive and a false negative.

Comparison with State-of-the-Art Approaches

Table 1 shows that our network achieves results comparable to existing state-of-the-art approaches on the ICDAR-17 POD dataset. It is important to emphasize that the methods introduced in References [1,20] either rely on a heavy backbone with memory-intensive deformable convolutions [53] or depend on multiple pre- and post-processing methods to achieve their results. In contrast, our CasTabDetectoRS operates on a lighter-weight ResNet-50 backbone with switchable atrous convolutions.

Furthermore, it is worth noting that the system [54] that produced state-of-the-art results on this dataset learns to classify tables, figures, and equations. By leveraging information about other graphical page objects, such as figures and equations, that system reduces the misclassification of tables. In contrast, the proposed system is trained only on table annotations and has no knowledge of other, similar graphical page objects. Because tables and the other graphical page objects in this dataset have low inter-class variance, our network produces more false positives and does not surpass the state-of-the-art results on this dataset.

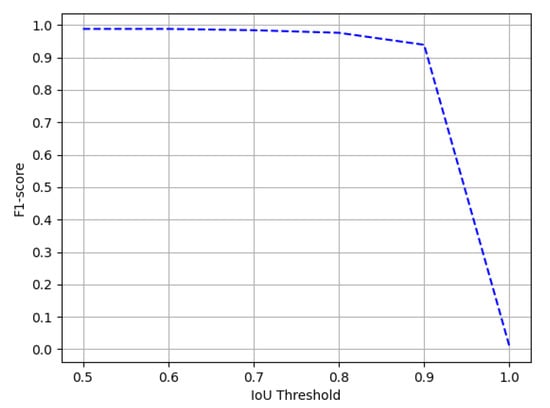

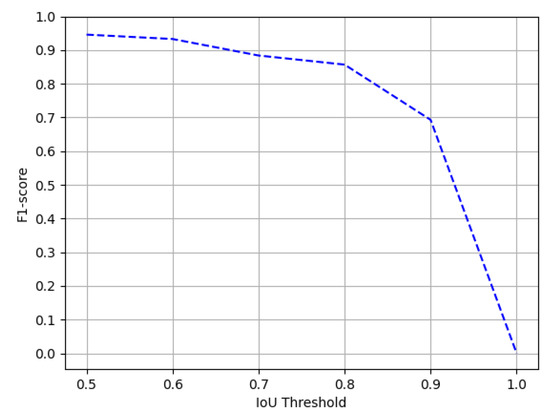

4.4.2. ICDAR-19

In this paper, ICDAR-19 refers to the Modern Track A part of the table detection dataset introduced in the table detection competition at ICDAR 2019 [65]. To draw strict comparisons with the competition participants and existing state-of-the-art results, we evaluate our proposed method at the higher IoU thresholds of 0.8 and 0.9. Table 2 presents the quantitative analysis of our proposed method, whereas Figure 13 illustrates the F1-score of our table detection method at various IoU thresholds. The qualitative analysis is demonstrated in Figure 14. After analyzing the false positives produced by our network, we observe that the ground truth of the ICDAR-19 dataset leaves some tables in the modern document images unlabeled. One instance of such a scenario is shown in Figure 14b.

Table 2.

Performance comparison between the proposed CasTabDetectoRS and previous state-of-the-art results on the dataset of ICDAR 19 Track A (Modern). Best results are highlighted in the table.

Figure 13.

Performance evaluation of our CasTabDetectoRS in terms of F1-score over the varying IoU thresholds ranging from 0.5 to 1.0 on the ICDAR-2019 Track A (Modern) dataset.

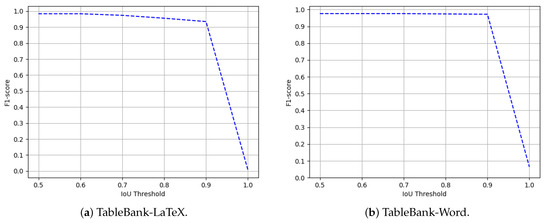

Figure 14.

CasTabDetectoRS results on the table detection dataset of ICDAR-2019 Track A (Modern). Green represents true positive, whereas red denotes false positive. In this figure, (a) highlights a couple of samples containing true positives, whereas (b) represents a true positive and a false positive.

Comparison with State-of-the-Art Approaches

Along with our results on the ICDAR-19 dataset, Table 2 compares the performance of our CasTabDetectoRS with prior state-of-the-art approaches. Our cascade network equipped with RFP and SAC surpasses the previous state-of-the-art results by a significant margin. We accomplish a precision of 0.964, recall of 0.988, and an F1-score of 0.976 at an IoU threshold of 0.8. When the IoU threshold is increased to 0.9, the proposed table detection method achieves a precision of 0.928, recall of 0.951, and F1-score of 0.939. The large gap between the F1-score of our method and the best previously reported F1-score demonstrates the superiority of our CasTabDetectoRS.
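As a quick consistency check, the reported ICDAR-19 F1-scores can be recomputed from the reported precision and recall with the harmonic-mean formula; both values agree to three decimals.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Precision/recall reported for ICDAR-19 Track A (Modern) at IoU 0.8 and 0.9.
for iou_thr, p, r in [(0.8, 0.964, 0.988), (0.9, 0.928, 0.951)]:
    print(iou_thr, round(f1_score(p, r), 3))
# 0.8 0.976
# 0.9 0.939
```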

4.4.3. TableBank

We evaluate the performance of the proposed method on all three splits of the TableBank dataset [66]. To establish a straightforward comparison with the recent state-of-the-art results [11] on TableBank, we report results at an IoU threshold of 0.5. Furthermore, owing to the superior predictions of our proposed method, we also present results at a higher IoU threshold of 0.9. Table 3 summarizes the performance of our CasTabDetectoRS on the TableBank-LaTeX, TableBank-Word, and TableBank-Both splits. Along with the quantitative results, we show the F1-score of the proposed system as the IoU threshold increases from 0.5 to 1.0. Figure 15 depicts the drop in performance on the TableBank-LaTeX and TableBank-Word splits, whereas Figure 16 depicts a couple of true positives and one instance each of a false positive and a false negative. Figure 17 presents the F1-score on the TableBank-Both split.

Table 3.

Performance comparison between the proposed CasTabDetectoRS and previous state-of-the-art results on various splits of the TableBank dataset. The double horizontal lines separate the different splits. Best results are highlighted in the table.

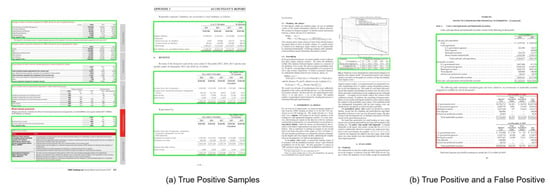

Figure 15.

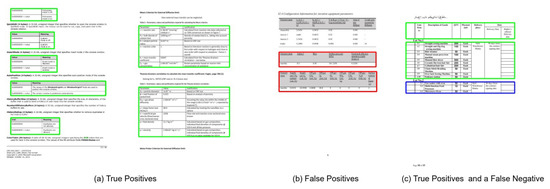

Performance evaluation of our CasTabDetectoRS in terms of F1-score over the varying IoU thresholds ranging from 0.5 to 1.0 on the TableBank-LaTeX and TableBank-Word datasets.

Figure 16.

CasTabDetectoRS results on the TableBank dataset. Green represents true positive, red denotes false positive, and blue color highlights false negative. In this figure, (a) represents a couple of samples containing true positives, (b) illustrates false positives, and (c) depicts true positives and false negatives.

Figure 17.

Performance evaluation of our CasTabDetectoRS in terms of F1-score over the varying IoU thresholds ranging from 0.5 to 1.0 on the TableBank-Both dataset.

Comparison with State-of-the-Art Approaches

Table 3 compares existing state-of-the-art table detection methods with our proposed approach. Our CasTabDetectoRS surpasses the previous baseline and state-of-the-art methods on all three splits of the TableBank dataset. On the TableBank-LaTeX split, we achieve F1-scores of 0.984 and 0.935 at IoU thresholds of 0.5 and 0.9, respectively. Similarly, we obtain F1-scores of 0.976 and 0.972 at IoU thresholds of 0.5 and 0.9, respectively, on the TableBank-Word split. Moreover, we attain F1-scores of 0.978 and 0.957 at IoU thresholds of 0.5 and 0.9, respectively, on TableBank-Both (Word + LaTeX).

4.4.4. Marmot

The Marmot dataset consists of 1967 document images comprising 1348 tables. Since prior state-of-the-art approaches [12,20] evaluated on Marmot using a model trained on the ICDAR-17 dataset, we follow the same protocol to allow a direct comparison. Table 4 presents the quantitative results of our proposed method, whereas Figure 18 shows how the F1-score of our CasTabDetectoRS changes as the IoU threshold increases from 0.5 to 1.0. Figure 19 gives a qualitative assessment of our table detection system on the Marmot dataset with samples of true positives, false positives, and a false negative.

Table 4.

Performance comparison between the proposed CasTabDetectoRS and previous state-of-the-art results on the Marmot dataset. Best results are highlighted in the table.

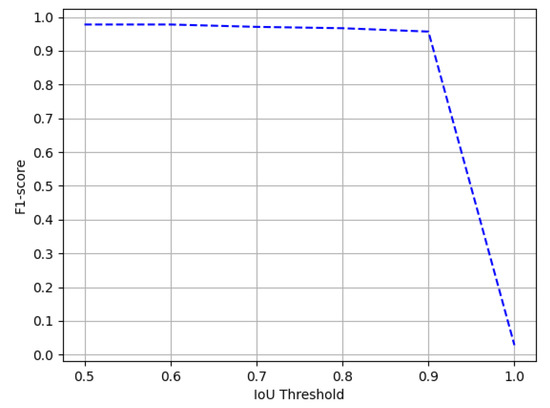

Figure 18.

Performance evaluation of our CasTabDetectoRS in terms of F1-score over the varying IoU thresholds ranging from 0.5 to 1.0 on the Marmot dataset.

Figure 19.

CasTabDetectoRS results on the Marmot dataset. Green represents true positive, red denotes false positive, and blue color highlights false negative. In this figure, (a) exhibits a couple of samples containing true positives, (b) illustrates false positives, and (c) depicts true positives and false negatives.

Comparison with State-of-the-Art Approaches

Table 4 compares the results achieved by our CasTabDetectoRS on the Marmot dataset with the previous state-of-the-art results. Our proposed method outperforms the previous results with F1-scores of 0.958 and 0.904 at IoU thresholds of 0.5 and 0.9, respectively.

4.4.5. UNLV

The UNLV dataset comprises 424 document images containing a total of 558 tables. We also evaluate our method on the UNLV dataset to make our assessment complete. For direct comparison with prior works [12,19] on this dataset, we report results at IoU thresholds of 0.5 and 0.6, as summarized in Table 5. Moreover, Figure 20 shows how the performance of the system deteriorates as the IoU threshold increases from 0.5 to 1.0. For the qualitative analysis on the UNLV dataset, examples of true positives, false positives, and a false negative are illustrated in Figure 21.

Table 5.

Performance comparison between the proposed CasTabDetectoRS and previous state-of-the-art results on the UNLV dataset. Best results are highlighted in the table.

Figure 20.

Performance evaluation of our CasTabDetectoRS in terms of F1-score over the varying IoU thresholds ranging from 0.5 to 1.0 on the UNLV dataset.

Figure 21.

CasTabDetectoRS results on the UNLV dataset. Green represents true positive, red denotes false positive, and blue color highlights false negative. In this figure, (a) shows a couple of samples containing true positives, (b) shows a true positive and a false positive, and (c) depicts true positives and false negatives.

Comparison with State-of-the-Art Approaches

Table 5 summarizes the performance comparison between the proposed method and previous approaches on the UNLV dataset. Our proposed system outperforms earlier methods with F1-scores of 0.946 and 0.933 at IoU thresholds of 0.5 and 0.6, respectively.

4.4.6. Cross-Datasets Evaluation

Deep learning-based table detection methods are currently preferred over rule-based methods because they generalize better across diverse datasets. To investigate how well our proposed CasTabDetectoRS generalizes across datasets, we perform a cross-dataset evaluation in which four of our state-of-the-art table detection models, each trained on a different dataset, are evaluated on five different datasets. Table 6 summarizes all the results.

Table 6.

Examining the generalization capabilities of the proposed CasTabDetectoRS through cross-dataset evaluation.

With the table detection model trained on the TableBank-LaTeX dataset, we achieve strong results on ICDAR-17, TableBank-Word, Marmot, and UNLV, though not on ICDAR-19, and obtain an average F1-score of 0.865. Manual inspection shows that the system produces several false positives because the document images in ICDAR-19 differ considerably from those in TableBank-LaTeX. The model trained on the ICDAR-17 dataset yields an average F1-score of 0.812, owing to its poor results on the ICDAR-19 and UNLV datasets. The network trained on the ICDAR-19 dataset generalizes best, achieving an average F1-score of 0.924. Although the UNLV dataset is small (424 document images), the model trained on it produces the second-best results, with an average F1-score of 0.897.
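The per-model averages above can be computed from a source-by-target matrix of F1-scores. The sketch below assumes such a matrix stored as nested dictionaries; this paragraph does not state whether each average includes the in-domain score (training and testing on the same dataset), so the hypothetical `exclude_source` flag makes that choice explicit. The dataset names and numbers in the example are placeholders, not values from Table 6.

```python
from statistics import mean
from typing import Dict

# scores[source][target]: F1 of the model trained on `source`, evaluated on `target`.
Scores = Dict[str, Dict[str, float]]


def average_cross_f1(scores: Scores, exclude_source: bool = False) -> Dict[str, float]:
    """Mean F1 of each trained model over its evaluation datasets.

    exclude_source=True drops the in-domain score (train == test), which
    isolates out-of-domain generalization.
    """
    averages = {}
    for source, row in scores.items():
        values = [f1 for target, f1 in row.items()
                  if not (exclude_source and target == source)]
        if values:
            averages[source] = mean(values)
    return averages


def best_generalizing(scores: Scores, exclude_source: bool = False) -> str:
    """Training dataset whose model attains the highest average F1."""
    averages = average_cross_f1(scores, exclude_source)
    return max(averages, key=averages.get)


# Placeholder numbers for illustration only; they are NOT the values from Table 6.
example = {
    "dataset_A": {"dataset_A": 0.95, "dataset_B": 0.80, "dataset_C": 0.60},
    "dataset_B": {"dataset_A": 0.85, "dataset_B": 0.90, "dataset_C": 0.85},
}
print(average_cross_f1(example))
print(best_generalizing(example, exclude_source=True))
```

Reporting both variants side by side makes it clear whether a high average comes from strong in-domain accuracy or from genuine transfer to unseen datasets.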

Manual inspection of the cross-dataset results reveals that other graphical page objects [2] are sometimes misinterpreted as tables. Nevertheless, the results show that our proposed CasTabDetectoRS achieves state-of-the-art results on a given dataset and generalizes well to the other datasets. Such well-generalized table detection systems for scanned document images are needed in several domains [8].

5. Conclusions and Future Work

This paper presents CasTabDetectoRS, a novel table detection framework for scanned document images, which combines Cascade Mask R-CNN with a Recursive Feature Pyramid (RFP) network and Switchable Atrous Convolution (SAC). The proposed CasTabDetectoRS achieves state-of-the-art performance on four table detection datasets (ICDAR-19 [65], TableBank [66], UNLV [67], and Marmot [68]) and comparable results on the ICDAR-17 POD [1] dataset.

Compared directly with the previous state-of-the-art results on the ICDAR-19 Track A (Modern) dataset, we reduce the relative error by and in terms of F1-score at IoU thresholds of 0.8 and 0.9, respectively. On the TableBank-LaTeX and TableBank-Word splits, we reduce the relative error by on each split. On TableBank-Both, we reduce the relative error by . Similarly, on the Marmot dataset [68], we observe a reduction, whereas the system achieves a relative error reduction of on the UNLV dataset [67]. Furthermore, this paper empirically establishes that, instead of incorporating heavy backbone networks [11,12] and memory-intensive deformable convolutions [20], state-of-the-art results are achievable with a relatively lightweight backbone network (ResNet-50) combined with SAC. Moreover, this paper demonstrates the generalization capabilities of the proposed CasTabDetectoRS through extensive cross-dataset evaluations. Notably, our proposed network requires 9.9 gigabytes of VRAM (Video Random Access Memory) and runs inference at 10.8 frames per second. We cannot compare this network complexity with prior state-of-the-art methods in this domain because they have not reported their network complexity or inference time.
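The relative error reduction can be made concrete under one common convention, assumed here rather than stated in the text: the error is taken as 1 - F1, and the reduction is the fraction of the previous method's error that the new method removes, (E_prev - E_new) / E_prev. The sketch also converts the reported throughput of 10.8 frames per second into an average per-image time (1000 / 10.8, about 92.6 ms). The function names and example F1 values are hypothetical.

```python
def relative_error_reduction(f1_previous: float, f1_new: float) -> float:
    """Fraction of the previous error (assumed to be 1 - F1) removed by the new method."""
    error_previous = 1.0 - f1_previous
    error_new = 1.0 - f1_new
    if error_previous <= 0:
        raise ValueError("previous F1-score must be below 1.0")
    return (error_previous - error_new) / error_previous


def latency_ms(frames_per_second: float) -> float:
    """Average per-image inference time in milliseconds for a given throughput."""
    return 1000.0 / frames_per_second


# Hypothetical F1-scores for illustration, NOT taken from the paper's tables.
print(f"Relative error reduction: {relative_error_reduction(0.90, 0.95):.1%}")
# Throughput reported in the conclusions.
print(f"Average inference time: {latency_ms(10.8):.1f} ms per image")
```

Expressing gains as relative error reduction makes improvements near the top of the F1 scale easier to compare: moving from 0.90 to 0.95 halves the remaining error even though the absolute gain is only 0.05.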

In future work, we plan to extend the proposed framework to the even more challenging task of table structure recognition in scanned document images. We hope that our cross-dataset evaluation will serve as a benchmark for future studies of table detection methods. Furthermore, the backbone network and the region proposal network of the proposed pipeline could be enhanced by exploiting attention mechanisms [73,74].

Author Contributions

Writing—original draft preparation, K.A.H.; writing—review and editing, K.A.H., M.Z.A.; supervision and project administration, M.L., A.P., D.S. All authors have read and agreed to the submitted version of the manuscript.

Funding

The work leading to this publication has been partially funded by the European project INFINITY under Grant Agreement ID 883293.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gao, L.; Yi, X.; Jiang, Z.; Hao, L.; Tang, Z. ICDAR2017 competition on page object detection. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1417–1422. [Google Scholar]

- Bhatt, J.; Hashmi, K.A.; Afzal, M.Z.; Stricker, D. A Survey of Graphical Page Object Detection with Deep Neural Networks. Appl. Sci. 2021, 11, 5344. [Google Scholar] [CrossRef]

- Zhao, Z.; Jiang, M.; Guo, S.; Wang, Z.; Chao, F.; Tan, K.C. Improving deep learning based optical character recognition via neural architecture search. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, 19–24 July 2020; pp. 1–7. [Google Scholar]

- Hashmi, K.A.; Ponnappa, R.B.; Bukhari, S.S.; Jenckel, M.; Dengel, A. Feedback Learning: Automating the Process of Correcting and Completing the Extracted Information. In Proceedings of the International Conference on Document Analysis and Recognition Workshops (ICDARW), Sydney, Australia, 20–25 September 2019; Volume 5, pp. 116–121. [Google Scholar]

- van Strien, D.; Beelen, K.; Ardanuy, M.C.; Hosseini, K.; McGillivray, B.; Colavizza, G. Assessing the Impact of OCR Quality on Downstream NLP Tasks. In Proceedings of the ICAART (1), Valletta, Malta, 22–24 February 2020; pp. 484–496. [Google Scholar]

- Kieninger, T.G. Table structure recognition based on robust block segmentation. In Document Recognition V; Electronic Imaging: San Jose, CA, USA, 1998; Volume 3305, pp. 22–32. [Google Scholar]

- Schreiber, S.; Agne, S.; Wolf, I.; Dengel, A.; Ahmed, S. DeepDeSRT: Deep learning for detection and structure recognition of tables in document images. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1162–1167. [Google Scholar]

- Hashmi, K.A.; Liwicki, M.; Stricker, D.; Afzal, M.A.; Afzal, M.A.; Afzal, M.Z. Current Status and Performance Analysis of Table Recognition in Document Images with Deep Neural Networks. IEEE Access 2021, 9, 87663–87685. [Google Scholar] [CrossRef]

- Hashmi, K.A.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. Cascade Network with Deformable Composite Backbone for Formula Detection in Scanned Document Images. Appl. Sci. 2021, 11, 7610. [Google Scholar] [CrossRef]

- Smith, R. An overview of the Tesseract OCR engine. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Brazil, 23–26 September 2007; Volume 2, pp. 629–633. [Google Scholar]

- Prasad, D.; Gadpal, A.; Kapadni, K.; Visave, M.; Sultanpure, K. CascadeTabNet: An approach for end to end table detection and structure recognition from image-based documents. In Proceedings of the IEEE/CVF Conference Computer Vision Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 572–573. [Google Scholar]

- Agarwal, M.; Mondal, A.; Jawahar, C. CDeC-Net: Composite Deformable Cascade Network for Table Detection in Document Images. arXiv 2020, arXiv:2008.10831. [Google Scholar]

- Zheng, X.; Burdick, D.; Popa, L.; Zhong, X.; Wang, N.X.R. Global table extractor (GTE): A framework for joint table identification and cell structure recognition using visual context. In Proceedings of the IEEE/CVF Winter Conference Applied Computer Vision, Virtual (Online), 5–9 January 2021; pp. 697–706. [Google Scholar]

- Afzal, M.Z.; Hashmi, K.; Liwicki, M.; Stricker, D.; Nazir, D.; Pagani, A. HybridTabNet: Towards Better Table Detection in Scanned Document Images. Appl. Sci. 2021, 11, 8396. [Google Scholar]

- Coüasnon, B.; Lemaitre, A. Handbook of Document Image Processing and Recognition, Chapter Recognition of Tables and Forms; Doermann, D., Tombre, K., Eds.; Springer: London, UK, 2014; pp. 647–677. [Google Scholar]

- Zanibbi, R.; Blostein, D.; Cordy, J.R. A survey of table recognition. Doc. Anal. Recognit. 2004, 7, 1–16. [Google Scholar] [CrossRef]

- Kieninger, T.; Dengel, A. Applying the T-RECS table recognition system to the business letter domain. In Proceedings of the 6th International Conference on Document Analysis and Recognition, Seattle, WA, USA, 10–13 September 2001; pp. 518–522. [Google Scholar]

- Shigarov, A.; Mikhailov, A.; Altaev, A. Configurable table structure recognition in untagged PDF documents. In Proceedings of the 2016 ACM Symposium Document Engineering, Vienna, Austria, 13–16 September 2016; pp. 119–122. [Google Scholar]

- Gilani, A.; Qasim, S.R.; Malik, I.; Shafait, F. Table detection using deep learning. In Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 771–776. [Google Scholar]

- Siddiqui, S.A.; Malik, M.I.; Agne, S.; Dengel, A.; Ahmed, S. DeCNT: Deep deformable CNN for table detection. IEEE Access 2018, 6, 74151–74161. [Google Scholar] [CrossRef]

- Hashmi, K.A.; Stricker, D.; Liwicki, M.; Afzal, M.N.; Afzal, M.Z. Guided Table Structure Recognition through Anchor Optimization. arXiv 2021, arXiv:2104.10538. [Google Scholar]

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. arXiv 2016, arXiv:1611.05431. [Google Scholar]

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364. [Google Scholar]

- Qiao, S.; Chen, L.C.; Yuille, A. DetectoRS: Detecting objects with recursive feature pyramid and switchable atrous convolution. arXiv 2020, arXiv:2006.02334. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162. [Google Scholar]

- Itonori, K. Table structure recognition based on textblock arrangement and ruled line position. In Proceedings of the 2nd International Conference on Document Analysis and Recognition (ICDAR’93), Tsukuba City, Japan, 20–22 October 1993; pp. 765–768. [Google Scholar]

- Chandran, S.; Kasturi, R. Structural recognition of tabulated data. In Proceedings of the 2nd International Conference on Document Analysis and Recognition (ICDAR’93), Tsukuba City, Japan, 20–22 October 1993; pp. 516–519. [Google Scholar]

- Hirayama, Y. A method for table structure analysis using DP matching. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–15 August 1995; Volume 2, pp. 583–586. [Google Scholar]

- Green, E.; Krishnamoorthy, M. Recognition of tables using table grammars. In Proceedings of the 4th Annual Symposium Document Analysis Information Retrieval, Desert Inn Hotel, Las Vegas, NV, USA, 24–26 April 1995; pp. 261–278. [Google Scholar]

- Huang, Y.; Yan, Q.; Li, Y.; Chen, Y.; Wang, X.; Gao, L.; Tang, Z. A YOLO-based table detection method. In Proceedings of the International Conference Document Analysis Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 813–818. [Google Scholar]

- Casado-García, Á.; Domínguez, C.; Heras, J.; Mata, E.; Pascual, V. The benefits of close-domain fine-tuning for table detection in document images. In International Workshop Document Analysis System; Springer: Cham, Switzerland, 2020; pp. 199–215. [Google Scholar]

- Arif, S.; Shafait, F. Table detection in document images using foreground and background features. In Proceedings of the Digital Image Computing: Techniques Applied (DICTA), Canberra, Australia, 10–13 December 2018; pp. 1–8. [Google Scholar]

- Sun, N.; Zhu, Y.; Hu, X. Faster R-CNN based table detection combining corner locating. In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1314–1319. [Google Scholar]

- Qasim, S.R.; Mahmood, H.; Shafait, F. Rethinking table recognition using graph neural networks. In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 142–147. [Google Scholar]

- Pyreddy, P.; Croft, W.B. Tintin: A system for retrieval in text tables. In Proceedings of the 2nd ACM International Conference Digit Libraries, Ottawa, ON, Canada, 14–16 June 1997; pp. 193–200. [Google Scholar]

- Pivk, A.; Cimiano, P.; Sure, Y.; Gams, M.; Rajkovič, V.; Studer, R. Transforming arbitrary tables into logical form with TARTAR. Data Knowl. Eng. 2007, 3, 567–595. [Google Scholar] [CrossRef]

- Hu, J.; Kashi, R.S.; Lopresti, D.P.; Wilfong, G. Medium-independent table detection. In Document Recognition Retrieval VII; International Society for Optics and Photonics: Bellingham, WA, USA, 1999; Volume 3967, pp. 291–302. [Google Scholar]

- e Silva, A.C.; Jorge, A.M.; Torgo, L. Design of an end-to-end method to extract information from tables. Int. Doc. Anal. Recognit. (IJDAR) 2006, 8, 144–171. [Google Scholar] [CrossRef]

- Khusro, S.; Latif, A.; Ullah, I. On methods and tools of table detection, extraction and annotation in PDF documents. J. Information Sci. 2015, 41, 41–57. [Google Scholar] [CrossRef]

- Embley, D.W.; Hurst, M.; Lopresti, D.; Nagy, G. Table-processing paradigms: A research survey. Int. Doc. Anal. Recognit. (IJDAR) 2006, 8, 66–86. [Google Scholar] [CrossRef]

- Kieninger, T.; Dengel, A. The T-RECS table recognition and analysis system. In International Workshop on Document Analysis System; Springer: Seattle, WA, USA, 1998; pp. 255–270. [Google Scholar]

- Cesarini, F.; Marinai, S.; Sarti, L.; Soda, G. Trainable table location in document images. In Proceedings of the Object Recognition Supported User Interaction Service Robots, International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; Volume 3, pp. 236–240. [Google Scholar]

- Kasar, T.; Barlas, P.; Adam, S.; Chatelain, C.; Paquet, T. Learning to detect tables in scanned document images using line information. In Proceedings of the 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1185–1189. [Google Scholar]

- e Silva, A.C. Learning rich hidden Markov models in document analysis: Table location. In Proceedings of the 10th International Conference on Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; pp. 843–847. [Google Scholar]

- Silva, A. Parts That Add Up to a Whole: A Framework for the Analysis of Tables; Edinburgh University: Edinburgh, UK, 2010. [Google Scholar]

- Hao, L.; Gao, L.; Yi, X.; Tang, Z. A table detection method for pdf documents based on convolutional neural networks. In Proceedings of the 12th IAPR Workshop Document Analysis System (DAS), Santorini, Greece, 11–14 April 2016; pp. 287–292. [Google Scholar]

- Kavasidis, I.; Palazzo, S.; Spampinato, C.; Pino, C.; Giordano, D.; Giuffrida, D.; Messina, P. A saliency-based convolutional neural network for table and chart detection in digitized documents. arXiv 2018, arXiv:1804.06236. [Google Scholar]

- Paliwal, S.S.; Vishwanath, D.; Rahul, R.; Sharma, M.; Vig, L. TableNet: Deep learning model for end-to-end table detection and tabular data extraction from scanned document images. In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 128–133. [Google Scholar]

- Holeček, M.; Hoskovec, A.; Baudiš, P.; Klinger, P. Table understanding in structured documents. In Proceedings of the International Conference on Document Analysis and Recognition Workshops (ICDARW), Sydney, Australia, 20–25 September 2019; Volume 5, pp. 158–164. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497. [Google Scholar] [CrossRef] [Green Version]

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773. [Google Scholar]

- Saha, R.; Mondal, A.; Jawahar, C. Graphical object detection in document images. In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 51–58. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Zhong, X.; ShafieiBavani, E.; Yepes, A.J. Image-based table recognition: Data, model, and evaluation. arXiv 2019, arXiv:1911.10683. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Chen, K.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Shi, J.; Ouyang, W.; et al. Hybrid task cascade for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4974–4983. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Analysis Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common objects in context (2014). arXiv 2019, arXiv:1405.0312. [Google Scholar]

- Gao, L.; Huang, Y.; Déjean, H.; Meunier, J.L.; Yan, Q.; Fang, Y.; Kleber, F.; Lang, E. ICDAR 2019 competition on table detection and recognition (cTDaR). In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1510–1515. [Google Scholar]

- Li, M.; Cui, L.; Huang, S.; Wei, F.; Zhou, M.; Li, Z. TableBank: Table benchmark for image-based table detection and recognition. In Proceedings of the 12th Language Resource Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 1918–1925. [Google Scholar]

- Shahab, A.; Shafait, F.; Kieninger, T.; Dengel, A. An open approach towards the benchmarking of table structure recognition systems. In Proceedings of the 9th IAPR International Workshop Document Analysis System, Boston, MA, USA, 9–10 June 2010; pp. 113–120. [Google Scholar]

- Fang, J.; Tao, X.; Tang, Z.; Qiu, R.; Liu, Y. Dataset, ground-truth and performance metrics for table detection evaluation. In Proceedings of the 10th IAPR International Workshop Document Analysis System, Gold Coast, Australia, 27–29 March 2012; pp. 445–449. [Google Scholar]

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061. [Google Scholar]

- Blaschko, M.B.; Lampert, C.H. Learning to localize objects with structured output regression. In Proceedings of the European Conference Computer Vision, Marseille, France, 12–18 October 2008; pp. 2–15. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).