Abstract

Due to the distinctive attributes of underwater environments, underwater images frequently encounter challenges such as low contrast, color distortion, and noise. Current underwater image enhancement techniques often suffer from limited generalization, preventing them from effectively adapting to a variety of underwater images taken in different underwater environments. To address these issues, we introduce a diffusion model-based underwater image enhancement method using an adversarial learning strategy, referred to as adversarial learning diffusion underwater image enhancement (ALDiff-UIE). The generator systematically eliminates noise through a diffusion model, progressively aligning the distribution of the degraded underwater image with that of a clear underwater image, while the discriminator helps the generator produce clear, high-quality underwater images by identifying discrepancies and pushing the generator to refine its outputs. Moreover, we propose a multi-scale dynamic-windowed attention mechanism to effectively fuse global and local features, optimizing the process of capturing and integrating information. Qualitative and quantitative experiments on four benchmark datasets—UIEB, U45, SUIM, and LSUI—demonstrate that ALDiff-UIE increases the average PCQI by approximately 12.8% and UIQM by about 15.6%. The results indicate that our method outperforms several mainstream approaches in terms of both visual quality and quantitative metrics, showcasing its effectiveness in enhancing underwater images.

1. Introduction

During human exploration of the oceans, the quality of underwater images directly affects the accuracy with which both humans and machines compute, recognize, analyze, and interpret visual data. However, because light is absorbed at wavelength-dependent rates and is refracted and scattered by the water, underwater images generally suffer from color distortion, blurred details, and other degradations [1]. Underwater image enhancement technology is key to solving these problems and is of great significance to the fields of underwater archaeology, marine biology research, and marine resource development [2,3]. Compared to other image enhancement tasks, the complexity and diversity of underwater environments present a unique set of challenges for underwater image enhancement. Specifically, the attenuation of light in water is closely related to its wavelength; within the visible spectrum, the longer the wavelength, the faster the attenuation. Thus, red light, which has the longest wavelength, attenuates the fastest, while blue and green light attenuate more slowly, causing the color distortion commonly observed in underwater images. Moreover, suspended particles in water can cause both forward and backward scattering, resulting in blurriness, reduced contrast, and increased noise in underwater images. Image enhancement technologies are effective in mitigating light attenuation and color distortion in underwater environments, thereby improving the quality of images captured by underwater robots or detection equipment and enhancing the accuracy of image analysis.

In recent years, significant transformations in underwater image enhancement have been driven by remarkable advancements in computer vision technology. Deep learning methods, with their data-driven strategies, autonomously learn complex image features and relationships, making them highly effective for underwater image enhancement. Generative adversarial networks (GANs) [4] produce notably clearer and more realistic images, making them the preferred model for underwater image enhancement tasks in recent years. GANs leverage their adversarial mechanism to learn the image distribution of the target domain, making them well-suited for image enhancement tasks in various underwater environments. However, GANs tend to optimize overall image quality, often overlooking small-scale features (such as fine textures and edges), resulting in less realistic local details [5]. In contrast, diffusion models excel at modeling probability distributions, allowing them to progressively denoise and restore image details [6]. They show significant advantages in reconstructing fine-grained details and preserving natural textures but struggle with global consistency, such as overall color balance and lighting correction, which limits their effectiveness in underwater image enhancement. Underwater images often require enhancements to both local details and global features. Therefore, we propose a diffusion model-based underwater image enhancement method using an adversarial learning strategy. It leverages the complementary strengths of GANs and diffusion models to enhance the quality and stability of generated images, addressing some of their individual limitations. The main contributions of this paper are summarized as follows:

- We introduce an adversarial diffusion model for underwater image enhancement, named ALDiff-UIE. It employs a diffusion model as the generator to produce high-quality images through its iterative denoising steps and utilizes a discriminator to evaluate and provide adversarial feedback to refine the generated images. The diffusion model guides the generation of local details, while feedback from the discriminator enhances global features, resulting in more globally consistent and visually realistic generated images.

- We develop a multi-scale dynamic-windowed attention mechanism, designed to effectively extract features at multiple scales and implement self-attention within windows along both vertical and horizontal dimensions. This mechanism incorporates an agent strategy that optimizes the process of capturing and integrating information while reducing computational complexity.

- The comprehensive experimental results demonstrate that the proposed method significantly enhances image details and contrast. Across various standard evaluation metrics, the proposed method shows substantial performance improvement, further validating its effectiveness in the field of underwater image enhancement.

This paper is organized as follows: Section 2 discusses related work on underwater image enhancement methods, Section 3 presents the ALDiff-UIE method, and Section 4 reports experiments and results demonstrating the effectiveness of the proposed method. Sections 5 and 6 summarize the strengths and weaknesses of the proposed algorithm and provide an outlook for future research work.

2. Related Work

In recent years, the rapid development of underwater exploration has driven researchers to actively engage in the study of underwater image enhancement. The main goal of underwater image enhancement is to improve the visual quality of degraded underwater images, providing more accurate and reliable data for subsequent observations and analysis. Currently, underwater image enhancement methods can be categorized into traditional methods and data-driven methods [7].

2.1. Traditional Methods

Traditional methods primarily rely on physical models or image processing techniques, utilizing prior knowledge or assumptions to enhance images. These methods can be broadly categorized into physical model-based methods and non-model-based methods.

Physical model-based methods rely on underwater image formation models, requiring the inverse operation of these models to restore clear images. For instance, Berman et al. [8] considered multiple spectral profiles of different water types and estimated the attenuation ratios of the blue–red and blue–green color channels, reducing the underwater image enhancement problem to a single-image dehazing task. Muniraj et al. [9] proposed an underwater image enhancement method by combining a color constancy framework and dehazing, in which the transmission map is estimated by analyzing the intensity differences between each channel. Li et al. [10] proposed a method for underwater image enhancement, PWGAN (physics-based WaterGAN), which utilizes both the scattering coefficient of underwater images and the depth information from clear images to fine-tune the outputs of two generative adversarial networks, effectively reducing color distortion and image blurring while restoring the details of the underwater objects. Most physical model-based methods rely on estimating background light and depth in underwater images. However, these methods are effective only in specific scenes, because the physical quantities and priors they depend on cannot be accurately estimated in more complex underwater environments, which limits the generalizability of these approaches.
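To make "inverting the image formation model" concrete, the following minimal sketch uses the widely used simplified model I = J·t + B·(1 − t) with per-channel transmission t = exp(−β·d). It is an illustration only: the attenuation coefficients, background light, depth, and the helper names `degrade` and `restore` are assumptions chosen for this example and are not taken from any of the cited methods.

```python
import numpy as np

def degrade(J, beta, B, depth):
    """Simplified formation model: I = J * t + B * (1 - t), with t = exp(-beta * depth)."""
    t = np.exp(-beta * depth)              # per-channel transmission, shape (3,)
    return J * t + B * (1.0 - t), t

def restore(I, t, B, t_min=0.1):
    """Invert the model for J; t is clamped so that noise is not amplified without bound."""
    t = np.maximum(t, t_min)
    return (I - B * (1.0 - t)) / t

rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 4, 3))  # hypothetical clear RGB image in [0, 1]
beta = np.array([0.60, 0.12, 0.08])        # assumed coefficients: red attenuates fastest
B = np.array([0.05, 0.35, 0.45])           # assumed blue-green background light
I, t = degrade(J, beta, B, depth=3.0)
J_hat = restore(I, t, B)                   # exact here because t, B are known
```

In practice neither the transmission nor the background light is known and both must be estimated from the degraded image, which is where the limitations discussed above arise.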

Non-physical model-based methods enhance underwater images by directly modifying pixels using techniques that do not take the underwater image formation model into account. For instance, Li et al. [11] introduced a contrast enhancement algorithm based on prior histogram distributions. Xu et al. [12] presented a weak-light image enhancement fusion framework that combines multi-scale fusion with dual-model global stretching. Some studies are based on the Retinex theory. For example, Zhang et al. [13] developed a multi-scale Retinex algorithm that combines bilateral and trilateral filtering for more precise image processing, and Zhuang et al. [14] developed an underwater image enhancement method based on Bayesian Retinex, incorporating multi-order gradient priors for reflectance and illumination. Because non-physical model-based methods operate directly on pixel values, they are simple, low-cost, and easy to implement; however, they ignore the optical properties of underwater imaging, so the enhanced images are often over- or under-corrected.

2.2. Data-Driven Methods

In recent years, data-driven deep learning methods have gained significant traction in the field of underwater image enhancement, owing to their powerful feature-learning capabilities. These methods have shown great promise in advancing the state-of-the-art in underwater image enhancement. Deep learning-based underwater image enhancement methods can be broadly divided into four main categories, as follows: CNN-based methods, GAN-based methods, Transformer-based methods, and diffusion model-based methods.

CNN-based methods: Wu et al. [15] designed a two-stage underwater image convolutional neural network based on structural decomposition, which enhances the texture information of the image by decomposing the underwater image into a high-frequency image and a low-frequency image. Jiang et al. [16] adopted a divide-and-conquer strategy, comprising a strong prior phase that decomposes complex underwater degradation into sub-problems and a fine-grained phase that employs a multi-branch color enhancement module and a pixel attention module to improve color accuracy and detail perception. Li et al. [17] proposed UWCNN, a lightweight CNN with a jointly optimized multinomial loss based on a priori knowledge of the underwater scene. Khandouzi et al. [18] proposed a coarse-to-fine end-to-end underwater image enhancement method that combines classical image processing with lightweight deep networks and achieves effective enhancement through a global-local dual-branch CNN, improved histogram equalization, and an attention module.

GAN-based methods: These methods build an adversarial neural network comprising generators and discriminators, generating images through the competition between these components. Liu et al. [19] integrated features across different scales, enhancing the model’s capability to improve image quality. Islam et al. [20] employed an objective function aimed at capturing global content, style, color, and local texture comprehensively, facilitating a more thorough feature capture of the image. Zhang et al. [21] designed a layered, attention-intensive aggregation in the encoder and introduced inter-block serial connections to better adapt to complex underwater scenes. Chaurasia et al. [22] treated underwater image enhancement as an image-to-image translation task, and customized the objective functions to achieve effective conversion of color, content, and style. Guo et al. [23] proposed a Transformer-based generative adversarial network with a two-branch discriminator, generating more realistic colors while preserving image content.

Transformer-based methods: Transformer is a deep learning architecture based on self-attention mechanisms. Ren et al. [24] enhanced the U-Net architecture by embedding the Swin Transformer, improving the model’s ability to capture global dependencies and boosting its performance in underwater image enhancement tasks. Peng et al. [25] proposed a U-shaped Transformer and introduced a loss function that combines various color spaces. Khan et al. [26] proposed a multi-domain query cascaded Transformer network that integrates local transmission features and global illumination characteristics. Shen et al. [27] proposed a dual-attention Transformer that integrates channel self-attention and pixel self-attention mechanisms. This design significantly enhances underwater image processing by effectively capturing features and contextual information.

Diffusion model-based methods: Originally proposed by Sohl-Dickstein et al. [28] in 2015, the diffusion model has regained researchers’ attention in recent years with the introduction of the denoising diffusion probabilistic model (DDPM) [29] for image generation tasks. Unlike the encoding and generation processes of other generative models, DDPM breaks these processes into multiple steps, enabling precise approximation of small variations using a normal distribution. Lu et al. [30] proposed an improved network structure based on DDPM, introducing a dual U-net architecture for the image enhancement task, which effectively facilitates the transformation between two image data distributions. Tang et al. [31] introduced a conditional diffusion framework for underwater image enhancement, along with a lightweight Transformer-based neural network as a denoising network, which not only enhances image quality effectively but also reduces the runtime of the denoising process. Shi et al. [32] proposed a content-preserving diffusion model, utilizing the difference between original images and noisy images as input. Zhao et al. [33] proposed a physics-aware diffusion model that effectively utilizes physical information to guide the diffusion process.

Despite significant advancements in underwater image enhancement, a notable gap remains in effectively addressing both local and global image features simultaneously. Traditional methods, including both physical and non-physical approaches, face challenges in adapting to the complex underwater environment due to their reliance on prior knowledge and limited assumptions. While deep learning techniques, such as GANs and diffusion models, have shown promise, GANs prioritize global image quality at the cost of fine details, whereas diffusion models excel at recovering local features but struggle to maintain global consistency. Therefore, there is a clear need for a method that combines the strengths of GANs and diffusion models while incorporating advanced attention mechanisms to achieve more precise enhancement.

3. Proposed Method

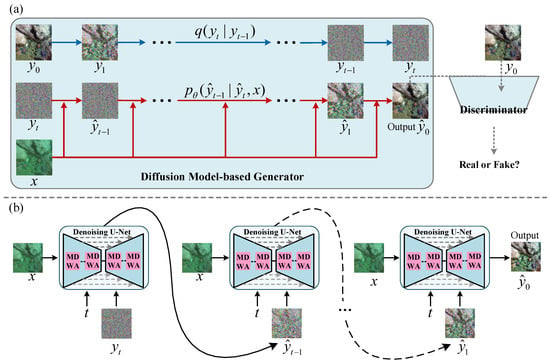

To obtain high-quality underwater images, we propose an underwater image enhancement method, named ALDiff-UIE. As shown in Figure 1a, the proposed method comprises two core components, that is, a diffusion model-based generator and a PatchGAN-based discriminator, trained in a competitive framework. The generator attempts to produce data, while the discriminator attempts to distinguish between real and generated data. The diffusion model is based on probabilistic modeling, where noise is incrementally added to the data during the forward process and then removed step-by-step in the reverse process to generate new data.

Figure 1.

The diagram of the proposed method. (a) Provides an overview of the ALDiff-UIE framework, which consists of a diffusion model-based generator and a discriminator trained in a competitive framework. The generator utilizes a diffusion model that incrementally adds noise to the image in the forward process and removes it step-by-step in the reverse process to generate a new image. (b) Depicts the inference process of the proposed method, detailing how the framework produces enhanced underwater images. MDWA refers to the multi-scale dynamic-windowed attention module.

The generator employs the denoising process of the diffusion model to eliminate noise and enhance image details, with its inference process illustrated in Figure 1b. However, the randomness inherent in the generation process of diffusion models poses significant challenges for underwater image enhancement tasks. Therefore, during the denoising process, we use degraded underwater images to guide the model in generating enhanced images whose high-level semantic information is similar to that of the degraded images, achieving finer control. In contrast to traditional generators, our generator begins with Gaussian noise and gradually introduces structure and patterns until the generated data aligns with the desired distribution. This gradual process helps prevent issues related to mode collapse.

3.1. Diffusion Model-Based Generator

The generator employs a diffusion model that incrementally adds noise to the data in the forward process and removes it step-by-step in the reverse process to generate new data. The forward process is a Markov chain in which Gaussian noise is gradually added to the input data over several time steps, driving the distribution of the data to converge towards a standard Gaussian distribution. This step is predefined and does not require learning. In contrast, the denoising process trains a neural network in a learnable manner, using pure noise as input and the degraded underwater image as a condition, enabling the model to progressively recover the distribution of the clear underwater image.

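The core strategy of the generator is to learn a specific inverse process, denoted as $p_\theta$, without altering the forward diffusion process $q$. The forward Markovian diffusion process $q$, which involves the stepwise addition of Gaussian noise, can be mathematically expressed as follows:

$$q(y_t \mid y_{t-1}) = \mathcal{N}\left(y_t;\ \sqrt{1-\beta_t}\, y_{t-1},\ \beta_t \mathbf{I}\right),$$

where $y_{t-1}$ represents the image at time step $t-1$, $y_t$ is the noisy image at time step $t$, $\beta_t$ is a variance, and $\mathbf{I}$ is the identity matrix. Given the initial image $y_0$, the distribution of $y_t$ can be expressed as follows:

$$q(y_t \mid y_0) = \mathcal{N}\left(y_t;\ \sqrt{\bar{\alpha}_t}\, y_0,\ (1-\bar{\alpha}_t)\, \mathbf{I}\right),$$

where $\bar{\alpha}_t = \prod_{i=1}^{t} (1-\beta_i)$. The process proceeds iteratively, adding noise at each time step until the image distribution converges to a standard Gaussian distribution after $T$ steps.

In the reverse process, we use the degraded underwater image $x$ to estimate the conditional distributions $p_\theta(y_{t-1} \mid y_t, x)$. The inference process can be modeled as a reverse Markovian process, aiming to recover the target image $y_0$ from the Gaussian noise $y_T$, i.e.,

$$p_\theta(y_{0:T} \mid x) = p(y_T) \prod_{t=1}^{T} p_\theta(y_{t-1} \mid y_t, x), \qquad p_\theta(y_{t-1} \mid y_t, x) = \mathcal{N}\left(y_{t-1};\ \mu_\theta(x, y_t, t),\ \sigma_t^2\, \mathbf{I}\right).$$

Among them, the mean $\mu_\theta(x, y_t, t)$ can be denoted as the combination of $y_t$ and a noise $\epsilon_\theta(x, y_t, t)$ predicted by a neural network. We adopt a U-Net architecture integrated with a multi-scale dynamic-windowed attention module as the denoising network.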
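The closed-form forward distribution means a noisy image at any step can be drawn directly from the clean image without simulating the whole chain. The sketch below illustrates this under stated assumptions: the clean image is written `y0`, the schedule is a linear variance schedule with T = 1000 (a common default, not a value reported in this paper), and `make_schedule` and `q_sample` are hypothetical helper names.

```python
import numpy as np

def make_schedule(T=1000, beta_start=1e-4, beta_end=2e-2):
    """Assumed linear variance schedule; returns beta_t and the cumulative product abar_t."""
    betas = np.linspace(beta_start, beta_end, T)
    alpha_bar = np.cumprod(1.0 - betas)
    return betas, alpha_bar

def q_sample(y0, t, alpha_bar, rng):
    """Draw y_t ~ N(sqrt(abar_t) * y0, (1 - abar_t) * I); t is 1-indexed."""
    eps = rng.standard_normal(y0.shape)
    a = alpha_bar[t - 1]
    return np.sqrt(a) * y0 + np.sqrt(1.0 - a) * eps, eps

rng = np.random.default_rng(0)
betas, alpha_bar = make_schedule()
y0 = rng.uniform(-1.0, 1.0, size=(8, 8, 3))        # hypothetical clean image in [-1, 1]
y_early, _ = q_sample(y0, 1, alpha_bar, rng)       # almost identical to y0
y_late, eps = q_sample(y0, 1000, alpha_bar, rng)   # almost pure Gaussian noise
```

At the last step the cumulative product is close to zero, so the sample is dominated by the noise term, which matches the convergence to a standard Gaussian described above.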

3.1.1. Multi-Scale Dynamic-Windowed Attention Module

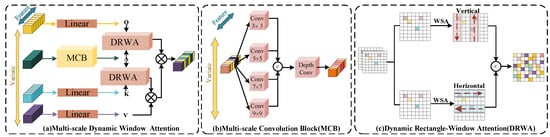

Underwater images often exhibit distinct challenges due to complex environmental conditions, such as water turbidity, light absorption, and scattering effects. These factors create unique visual properties, such as cross-scale similarities (where similar features appear at multiple scales) and anisotropy (directional variation in features, such as water texture or object edges). These characteristics demand specific considerations when extracting features for tasks like underwater image enhancement. In this part, we introduce a multi-scale dynamic-windowed attention module to address these challenges, as illustrated in Figure 2.

Figure 2.

The diagram of the multi-scale dynamic-windowed attention module. (a) Multi-scale dynamic-windowed attention, (b) multi-scale convolution block (MCB), (c) dynamic rectangle-windowed attention (DRWA).

To effectively capture cross-scale similarities, we employ the multi-scale convolution block to extract local features. Specifically, the input feature $X \in \mathbb{R}^{H \times W \times C}$ is partitioned into four parts, each maintaining $C/4$ channels, resulting in partitioned feature maps $X_1, X_2, X_3, X_4$. Subsequently, convolution kernels of varying sizes ($k_1, k_2, k_3, k_4$) are applied to the partitioned feature maps to obtain a series of feature maps rich in spatial information. This design not only introduces local inductive bias but also comprehensively captures spatial information at different scales. These feature maps are then concatenated along the channel dimension to obtain an agent feature token rich in multi-scale information:

$$A = \mathrm{Concat}\left(\mathrm{Conv}_{k_1}(X_1),\ \mathrm{Conv}_{k_2}(X_2),\ \mathrm{Conv}_{k_3}(X_3),\ \mathrm{Conv}_{k_4}(X_4)\right),$$

where $\mathrm{Conv}_{k_i}$ denotes a convolution with kernel size $k_i$. This token not only preserves the local details but also integrates global contextual information across different scales, thereby providing a rich feature representation for subsequent processing.
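The split, filter, and concatenate pattern of the multi-scale convolution block can be sketched as follows. This is a structural illustration, not the module itself: the kernel sizes (1, 3, 5, 7) are assumed, fixed averaging filters stand in for learned convolutions, and `box_filter` and `multi_scale_block` are hypothetical names.

```python
import numpy as np

def box_filter(x, k):
    """Same-size k x k averaging filter on each channel of x with shape (H, W, C); k is odd."""
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)), mode="edge")
    H, W, _ = x.shape
    out = np.zeros_like(x, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += xp[dy:dy + H, dx:dx + W, :]
    return out / (k * k)

def multi_scale_block(x, kernel_sizes=(1, 3, 5, 7)):
    """Split channels into equal groups, filter each group at its own scale, concatenate."""
    parts = np.split(x, len(kernel_sizes), axis=-1)
    feats = [box_filter(part, k) for part, k in zip(parts, kernel_sizes)]
    return np.concatenate(feats, axis=-1)

x = np.random.default_rng(0).standard_normal((16, 16, 8))
a = multi_scale_block(x)   # same shape as x; each channel group sees a different receptive field
```

Because each kernel only touches a quarter of the channels, the block covers several receptive-field sizes at roughly the cost of a single convolution over all channels.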

To effectively address anisotropy caused by factors such as light and water flow, we introduce a dynamic rectangular window attention mechanism. For input features of spatial size $H \times W$, this mechanism divides them into a series of non-overlapping rectangular windows $R_i$, each with a height of $h$ and a width of $w$, where $i$ denotes the index of the $i$-th window, resulting in a total of $\frac{H \times W}{h \times w}$ windows. Subsequently, we split the feature maps into two groups along the channel dimension and apply attention mechanisms with windows of different directions to each group, which allows us to establish window-based dependencies over a larger range within specific dimensions. Afterward, the two groups of features are reconnected along the channel dimension and interact with the value tokens to aggregate key information.
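The window partition described above reduces to a reshape and a transpose. The sketch below splits a feature map into two channel groups and partitions one with wide windows and the other with tall windows; the window sizes and the function name `window_partition` are illustrative assumptions, and the attention computed inside each window is omitted.

```python
import numpy as np

def window_partition(x, h, w):
    """Split (H, W, C) into non-overlapping h x w windows, returning (num_windows, h * w, C)."""
    H, W, C = x.shape
    assert H % h == 0 and W % w == 0, "window size must tile the feature map"
    x = x.reshape(H // h, h, W // w, w, C)
    x = x.transpose(0, 2, 1, 3, 4)          # (rows of windows, cols of windows, h, w, C)
    return x.reshape(-1, h * w, C)

x = np.arange(8 * 8 * 4, dtype=float).reshape(8, 8, 4)
x_hor, x_ver = np.split(x, 2, axis=-1)         # two channel groups
win_hor = window_partition(x_hor, h=2, w=8)    # wide windows: long range along the width
win_ver = window_partition(x_ver, h=8, w=2)    # tall windows: long range along the height
```

Both partitions yield (H·W)/(h·w) windows of h·w tokens each, so attention inside a window costs the same in either orientation while covering a different direction.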

Specifically, the feature token A serves as an agent for the query token Q, indirectly interacting with the key (K) and value (V) tokens to aggregate key information. Finally, the processed information is passed back to the query token Q to further enrich its information content. This indirect interaction mode not only substantially reduces the computational burden but also enhances the efficiency of information integration. The interaction process is realized through the following formula:
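The two-step interaction described above (agents first read from keys and values, then queries read from the agents) can be sketched with plain scaled dot-product softmax attention. This is a minimal sketch under that assumption; any bias terms, multi-head splitting, or windowing used in the actual module are omitted, and `agent_attention` is a hypothetical name.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def agent_attention(Q, K, V, A):
    """Q, K, V: (N, d) tokens; A: (n, d) agent tokens with n much smaller than N."""
    d = Q.shape[-1]
    # Step 1: the agent tokens aggregate information from the keys and values.
    V_A = softmax(A @ K.T / np.sqrt(d)) @ V          # (n, d)
    # Step 2: the aggregated information is passed back to the queries.
    return softmax(Q @ A.T / np.sqrt(d)) @ V_A       # (N, d)

rng = np.random.default_rng(0)
N, n, d = 64, 8, 16
Q, K, V = (rng.standard_normal((N, d)) for _ in range(3))
A = rng.standard_normal((n, d))
out = agent_attention(Q, K, V, A)
```

Both matrix products involve an N × n or n × N score matrix, so the cost grows as O(N·n·d) rather than the O(N²·d) of full self-attention, which is the source of the reduced computational burden.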

3.1.2. Objective

To enhance local-scale detail recovery and stabilize adversarial training, we adopt a patch-based discriminator D. During training only, PatchGAN partitions each generated image into small patches (e.g., pixels) and classifies each as real or fake. The adversarial loss function is given as follows:

The corresponding optimization objective for the diffusion model can be expressed as follows:

The comprehensive goal of the proposed model can be expressed as follows:

This design compels the network to preserve realism in every region, ensuring the effective removal of localized underwater haze or chromatic aberrations without sacrificing edge sharpness. Compared with a full-image discriminator, the network structure of PatchGAN is lighter and less computationally intensive, which helps to maintain training stability and suppress excessive smoothing and mode collapse during the gradual denoising of the diffusion model. Algorithm 1 gives the complete training and inference process for ALDiff-UIE.
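One plausible way to combine a noise-prediction objective with a patch-wise adversarial term is sketched below. It is an assumption-laden illustration rather than the loss used in this work: the mean squared error on the noise, the binary cross-entropy GAN loss, the weight `lam`, and all function names are choices made for the example.

```python
import numpy as np

def noise_prediction_loss(eps, eps_pred):
    """Assumed diffusion objective: mean squared error between true and predicted noise."""
    return float(np.mean((eps - eps_pred) ** 2))

def patch_bce(logits, is_real):
    """Binary cross-entropy averaged over a grid of per-patch discriminator logits."""
    target = 1.0 if is_real else 0.0
    # Stable form of BCE-with-logits: max(z, 0) - z * target + log(1 + exp(-|z|)).
    loss = np.maximum(logits, 0.0) - logits * target + np.log1p(np.exp(-np.abs(logits)))
    return float(np.mean(loss))

def generator_loss(eps, eps_pred, fake_logits, lam=0.1):
    """Denoising term plus an adversarial term that rewards fooling every patch."""
    return noise_prediction_loss(eps, eps_pred) + lam * patch_bce(fake_logits, is_real=True)

def discriminator_loss(real_logits, fake_logits):
    """Each patch of a real image should score high, each patch of a generated one low."""
    return patch_bce(real_logits, True) + patch_bce(fake_logits, False)

undecided = np.zeros((30, 30))        # a hypothetical 30 x 30 grid of patch logits
g = generator_loss(np.ones(4), np.ones(4), undecided)
d = discriminator_loss(np.full((30, 30), 10.0), np.full((30, 30), -10.0))
```

Averaging over the logit grid is what makes the feedback local: a single unrealistic region raises the loss even when the rest of the image looks convincing.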

Algorithm 1. ALDiff-UIE Training and Inference

Require: Paired dataset of degraded and clear underwater images, noise schedule

Ensure: Trained generator and discriminator
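As a rough picture of the inference stage, the sketch below runs standard conditional ancestral sampling: start from Gaussian noise and repeatedly remove the predicted noise while conditioning on the degraded image. The step variance is assumed to equal the schedule variance, the schedule length is arbitrary, and `make_oracle` is a toy stand-in for the trained denoising network that treats the condition as the clean image purely so the loop can be checked.

```python
import numpy as np

def sample(x_cond, eps_model, betas, rng):
    """Conditional ancestral sampling from y_T ~ N(0, I) down to y_0."""
    alphas = 1.0 - betas
    alpha_bar = np.cumprod(alphas)
    y = rng.standard_normal(x_cond.shape)
    for t in range(len(betas), 0, -1):                  # t = T, ..., 1
        a, ab, b = alphas[t - 1], alpha_bar[t - 1], betas[t - 1]
        eps_hat = eps_model(x_cond, y, t)               # predicted noise
        mean = (y - b / np.sqrt(1.0 - ab) * eps_hat) / np.sqrt(a)
        noise = rng.standard_normal(y.shape) if t > 1 else 0.0   # no noise on the last step
        y = mean + np.sqrt(b) * noise                   # assumed variance sigma_t^2 = beta_t
    return y

def make_oracle(betas):
    """Toy noise predictor (not a trained network) that pretends y_0 equals the condition."""
    alpha_bar = np.cumprod(1.0 - betas)
    def eps_model(x_cond, y_t, t):
        ab = alpha_bar[t - 1]
        return (y_t - np.sqrt(ab) * x_cond) / np.sqrt(1.0 - ab)
    return eps_model

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 2e-2, 50)
x = rng.uniform(-1.0, 1.0, size=(8, 8, 3))             # hypothetical conditioning image
y = sample(x, make_oracle(betas), betas, rng)
```

With a real denoising network, `eps_model` would be the conditioned U-Net and the output would be the enhanced image rather than a copy of the condition.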

4. Experiments

To verify the effectiveness of the proposed method, comprehensive experiments were conducted in this section. Specifically, both qualitative and quantitative experiments were performed, comparing our method with eight state-of-the-art methods. Qualitative evaluation refers to judging the naturalness and visual details of the enhancement effect through visual comparisons (e.g., subjective scoring or side-by-side comparisons). Quantitative evaluation relies on objective metrics (e.g., UCIQE [34], PCQI [35], entropy [36], etc.) to measure the degree of improvement in image quality. Ablation studies were conducted to gain insights into the contribution of each component. The following sections provide detailed implementation information and a thorough analysis of the experimental results.

4.1. Implementation Details

The proposed model was trained using the underwater image enhancement benchmark (UIEB) dataset [37], which comprises 890 pairs of clear and degraded underwater images. To ensure the effectiveness of the model’s training, we selected 800 pairs of images for training, with the remaining 90 pairs reserved for testing. The training was conducted on an NVIDIA™ GeForce RTX 3090 platform, using the PyTorch [38] framework. Model parameter optimization was carried out with the Adam optimizer, a learning rate of , and a batch size of four for each training iteration. The entire training process consisted of 300 iterations.

To thoroughly assess the generalization capabilities of the proposed model, we conducted a comparative analysis between the proposed method and eight different approaches, as follows: Shallow [39], MLFCGAN [19], MetaUE [40], FUnIE-GAN [20], UIESS [41], DM [31], LiteEnhanceNet [42], and FiveA [16]. This analysis was performed across four datasets, namely, U45 [43], SUIM [44], UIEB [37], and LSUI [25], ensuring a comprehensive and objective evaluation. U45 [43], specifically designed by Li et al. for testing, contains images with color casts, low contrast, and a fog-like effect simulating underwater degradation, providing a realistic representation of underwater environments. SUIM [44] includes a wide variety of underwater images across eight categories, such as fish, coral reefs, plants, sunken ships/ruins, humans, robots, seabed/sand, and water backgrounds. LSUI [25] serves as a large-scale underwater image repository, comprising 4279 underwater images along with corresponding high-quality reference images, offering ample validation resources for assessing the model performance.

For quantitative evaluation, we use widely recognized objective quality assessment metrics to assess images, which provide precise evaluation functions for aspects such as color, detail, and contrast. UIQM [45], a comprehensive evaluation metric, is a linear combination of three metrics, namely, UICM, UISM, and UIConM. A higher UIQM value indicates better image quality. UCIQE [34] assesses images in the CIELAB color space, with a higher UCIQE value signifying a better balance in chromaticity, contrast, and saturation enhancement. PCQI [35] quantifies image distortion within individual blocks by evaluating the average intensity, signal strength, and signal structure. A higher PCQI value indicates better image quality. The gradient-based contrast evaluation index [46] reflects the contrast of the image, with higher values indicating greater contrast. Entropy [36] quantifies the information content of an image, with higher values indicating greater information richness.
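Of these metrics, entropy is the simplest to state exactly: it is the Shannon entropy of the gray-level histogram. The sketch below assumes an 8-bit grayscale input and a base-2 logarithm; `image_entropy` is a hypothetical helper name, and the evaluation code used for the reported results may differ in details such as color handling.

```python
import numpy as np

def image_entropy(gray_u8):
    """Shannon entropy in bits of the gray-level histogram of an 8-bit image."""
    hist = np.bincount(gray_u8.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    p = p[p > 0]                      # empty bins contribute nothing
    return float(-(p * np.log2(p)).sum())

flat = np.full((16, 16), 128, dtype=np.uint8)            # one gray level only
ramp = np.arange(256, dtype=np.uint8).reshape(16, 16)    # every gray level exactly once
e_flat = image_entropy(flat)    # no information: 0 bits
e_ramp = image_entropy(ramp)    # uniform histogram: the 8-bit maximum of 8 bits
```

The two extremes bracket the metric: a washed-out, low-contrast image concentrates its histogram in few bins and scores low, while a well-spread histogram approaches 8 bits.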

In ablation experiments, since the UIEB dataset provides reference images, we utilize full-reference image quality assessment metrics, specifically the peak signal-to-noise ratio (PSNR) [47] and the structural similarity index (SSIM) [48], to quantify the performance of each component. PSNR measures the difference between the enhanced image and the reference image, with a higher value indicating a smaller difference and better image quality. SSIM evaluates the similarity between the enhanced image and the reference image, with a higher SSIM value indicating higher structural similarity. Additionally, we utilize CIEDE2000 [49] for color quality evaluation, considering factors such as the human eye’s sensitivity to different color stimuli and the scope of color difference calculations. CIEDE2000 provides a more accurate color quality assessment, with lower values indicating higher color quality.
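For reference, PSNR follows directly from the mean squared error between the enhanced and reference images. The sketch below assumes 8-bit images with a peak value of 255; `psnr` is a hypothetical helper name, and SSIM and CIEDE2000 are omitted because they are considerably longer to implement.

```python
import numpy as np

def psnr(ref, test, max_val=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the two images are identical."""
    mse = np.mean((ref.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))

ref = np.full((4, 4), 100, dtype=np.uint8)
off_by_one = ref + 1                        # every pixel differs by one gray level
p_same = psnr(ref, ref)                     # identical images
p_close = psnr(ref, off_by_one)             # MSE of 1, i.e. 20 * log10(255) dB
```

Casting to floating point before subtracting matters: differences of unsigned 8-bit arrays would otherwise wrap around and corrupt the error.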

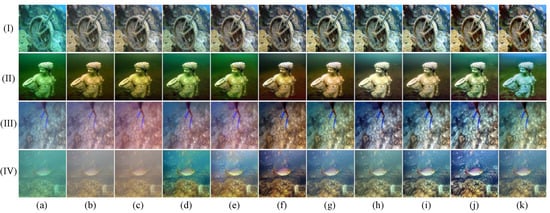

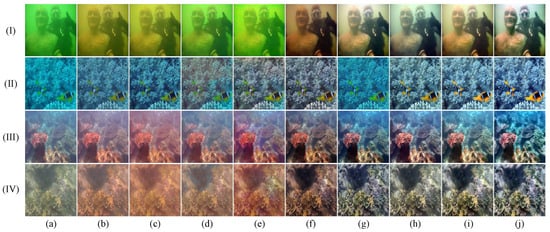

4.2. Qualitative Comparisons

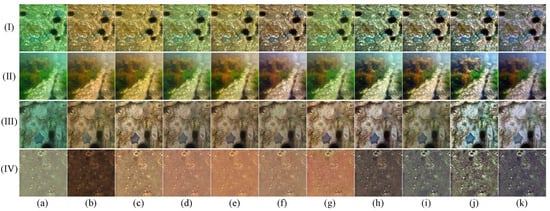

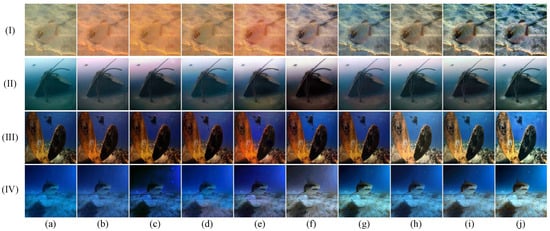

In this section, we conduct a thorough qualitative comparison utilizing publicly available underwater image datasets to evaluate the generalization performance of the proposed method in real-world scenarios. The comparative results, shown in Figure 3, Figure 4, Figure 5 and Figure 6, clearly highlight the distinct performances of various methods across the UIEB, U45, LSUI, and SUIM datasets.

Figure 3.

Qualitative comparisons for samples from the UIEB dataset: I–IV represents the four images in the UIEB dataset. From left to right are (a) raw underwater images, the results of (b) shallow [39], (c) MLFCGAN [19], (d) MetaUE [40], (e) FUnIE [20], (f) UIESS [41], (g) DM [31], (h) LiteEnhanceNet [42], (i) FiveA [16], (j) the proposed method, and (k) reference images.

Figure 4.

Qualitative comparisons for samples from the U45 dataset: I–IV represents the four images in the U45 dataset. From left to right are (a) raw underwater images, the results of (b) shallow [39], (c) MLFCGAN [19], (d) MetaUE [40], (e) FUnIE [20], (f) UIESS [41], (g) DM [31], (h) LiteEnhanceNet [42], (i) FiveA [16], and (j) the proposed method.

Figure 5.

Qualitative comparisons for samples from the LSUI dataset: I–IV represents the four images in the LSUI dataset. From left to right are (a) raw underwater images, the results of (b) shallow [39], (c) MLFCGAN [19], (d) MetaUE [40], (e) FUnIE [20], (f) UIESS [41], (g) DM [31], (h) LiteEnhanceNet [42], (i) FiveA [16], (j) the proposed method, and (k) reference images.

Figure 6.

Qualitative comparisons for samples from the SUIM dataset: I–IV represents the four images in the SUIM dataset. From left to right are (a) raw underwater images, the results of (b) shallow [39], (c) MLFCGAN [19], (d) MetaUE [40], (e) FUnIE [20], (f) UIESS [41], (g) DM [31], (h) LiteEnhanceNet [42], (i) FiveA [16], and (j) the proposed method.

As shown in Figure 3, in cases (I), (III), and (IV), our method produces more realistic colors and richer details compared to other approaches. In case (II), the FiveA method shows a certain advantage in color fidelity, yet our method preserves a greater amount of image details.

As depicted in Figure 4, in scenes (I) and (II), our method preserves rich color information and effectively maintains image details, while the other methods exhibit varying degrees of color deviation. In scene (III), although the LiteEnhanceNet method achieves more natural color reproduction, our method demonstrates a significant advantage in detail recovery. In scene (IV), both the DM method and our method achieve accurate color restoration in underwater environments, but our method renders finer, sharper details.

As can be seen from Figure 5, in scene (I), while both LiteEnhanceNet and our method render natural colors, our method demonstrates a notable advantage in contrast enhancement and detail presentation. In scenes (II), (III), and (IV), our method not only accurately restores the images’ colors but also delivers superior clarity and contrast.

As illustrated in Figure 6, scenes (I), (II), and (IV) demonstrate the superior ability of the proposed method to accurately restore colors, effectively mitigating color deviations compared to other methods. However, in scene (III), although LiteEnhanceNet, FiveA, and our method all produce natural colors, a closer inspection reveals that LiteEnhanceNet is overexposed, while FiveA falls short of our method in terms of detail precision.

4.3. Quantitative Comparisons

In this section, we conduct a comprehensive quantitative comparison between the proposed method and several state-of-the-art image enhancement methods. Table 1 provides evaluation results for each enhanced image shown in Figure 3 and Figure 4. It is evident that our method outperforms others across three evaluation metrics, namely, UCIQE, PCQI, and entropy. Notably, in scenario (II) of Figure 3, DM achieved the highest UCIQE score, yet its enhanced images exhibited a significant red color cast. In scenario (II) of Figure 3 and scenario (IV) of Figure 4, FiveA achieved higher entropy scores; however, the details of its enhanced results were relatively blurred.

Table 2 reports the quantitative evaluation results for the enhanced images in Figure 5 and Figure 6, further supporting the overall superiority of our method. While other methods occasionally outperform ours on specific metrics in certain scenes, these gains are often accompanied by issues such as color casts. For instance, in scene (II) of Figure 6, FUnIE and MLFcGAN obtain the best UCIQE scores, yet the enhanced images of both methods suffer from a red color bias. In scene (IV), although FiveA achieves a higher entropy score, its resulting image exhibits a clear blue bias. It must be emphasized that while existing no-reference image quality assessment metrics provide essential benchmarks for evaluating image quality, studies [50,51] have demonstrated that these metrics may be biased towards certain image characteristics. Consequently, relying solely on quantitative evaluations is insufficient for a comprehensive and accurate assessment of model quality, and qualitative and quantitative analyses must be combined for a more holistic evaluation.
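To make the entropy metric in Tables 1 and 2 concrete, the following is a minimal sketch that assumes the common histogram-based definition, i.e., the Shannon entropy $H = -\sum_i p_i \log_2 p_i$ of the gray-level distribution. The function name `shannon_entropy` and the flat-list input format are illustrative assumptions, not the evaluation code used in this paper.

```python
import math
from collections import Counter
from typing import Iterable


def shannon_entropy(gray_levels: Iterable[int]) -> float:
    """Shannon entropy (in bits) of a flat sequence of gray levels.

    Assumption: the image has already been converted to a single
    channel and flattened into one sequence of integer intensities.
    """
    counts = Counter(gray_levels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("cannot compute the entropy of an empty image")
    # p_i is the fraction of pixels at gray level i; unused levels
    # contribute nothing and never appear in `counts`.
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical toy inputs: a constant image and a two-level image.
flat_image = [128] * 16
two_level_image = [0, 255] * 8
print(shannon_entropy(flat_image), shannon_entropy(two_level_image))
```

Under this definition a constant image carries 0 bits and an 8-bit image with a perfectly uniform histogram reaches the maximum of 8 bits. A high score only indicates a spread-out histogram, which is consistent with the observation above that a method can score well on entropy while still producing blurred details or a color cast.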

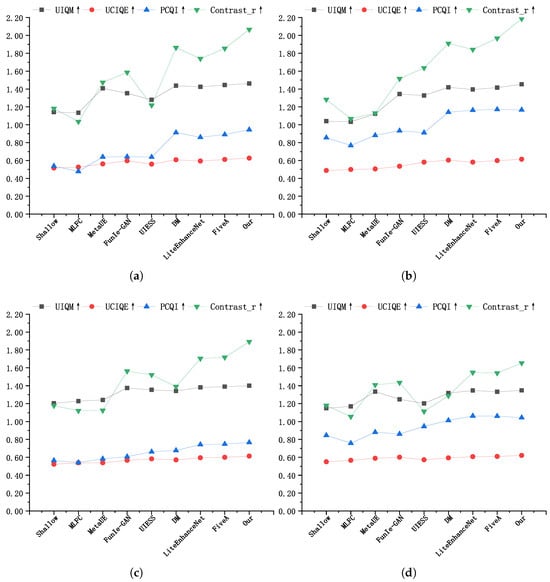

Additionally, Figure 7 presents the average values of UIQM, UCIQE, PCQI, and other metrics on the UIEB, U45, LSUI, and SUIM datasets. These plots show that the proposed method outperforms the other methods across all evaluated metrics. The results indicate that the proposed method enhances images well across various underwater environments, supporting its effectiveness and generalizability.

Figure 7.

Average quantitative values on different datasets. (a) Average quantitative values on the UIEB dataset. (b) Average quantitative values on the U45 dataset. (c) Average quantitative values on the LSUI dataset. (d) Average quantitative values on the SUIM dataset. ↑ indicates that higher is better.

To evaluate the complexity of our proposed method, we conducted experiments on the UIEB dataset, following the experimental conditions outlined in the implementation details. As shown in Table 3, our method does not outperform existing approaches in terms of model size or latency. This is primarily because we incorporate a multi-stage reverse-diffusion module and discriminator with inter-stage feature interactions and attention weighting, which balances global structure restoration and local detail refinement. We also employ multiple reconstruction and adversarial losses and perform additional intermediate-feature fusion and enhancement during inference to better restore texture and color. Although these designs improve image quality, they introduce numerous convolutional layers and attention operations, which increase both the parameter count and inference time.

Table 3.

Comparison of the number of parameters and the single image testing time of each method.

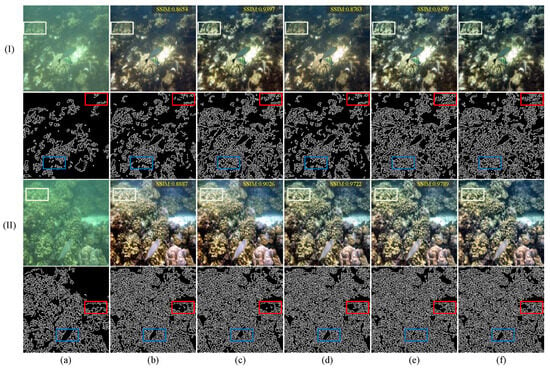
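The two quantities compared in Table 3, parameter count and single-image testing time, can be measured along the following lines. This is a hedged sketch using only the Python standard library; `count_parameters`, `measure_latency`, and the toy workload are hypothetical names introduced for illustration, and the warm-up and repeat counts are assumptions rather than the protocol used in this paper.

```python
import statistics
import time
from math import prod
from typing import Callable, Iterable, Sequence


def count_parameters(shapes: Iterable[Sequence[int]]) -> int:
    """Total number of scalar weights, given the shape of each weight tensor."""
    return sum(prod(shape) for shape in shapes)


def measure_latency(run_once: Callable[[], object],
                    warmup: int = 3,
                    repeats: int = 10) -> float:
    """Median wall-clock time in seconds of a single call to `run_once`.

    Warm-up calls are discarded so that one-off costs (caching, lazy
    initialisation) do not distort the timed runs.
    """
    for _ in range(warmup):
        run_once()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Hypothetical example: one 3x3 convolution with 3 input and 64 output
# channels plus its bias, and a stand-in workload instead of a real model.
print(count_parameters([(64, 3, 3, 3), (64,)]))
print(measure_latency(lambda: sum(range(10_000))))
```

For a model running on a GPU, the timed callable would also need to wait for the device to finish (for example by synchronizing before reading the clock), otherwise only the kernel launch time is measured. For diffusion-based methods, `run_once` should cover the full sequence of reverse-diffusion steps for one image, since that loop dominates the inference time discussed above.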

4.4. Ablation Study

In this section, we design an ablation experiment to verify the effectiveness of the individual components within the proposed model. We use Palette [52] as the baseline model for our experiments, and the specific ablation experiments are as follows:

- woMDWA: Removing the multi-scale dynamic-windowed attention mechanism from the proposed model to investigate its key role in the image enhancement task.

- woDIS: Removing the discriminator from the proposed model to evaluate its impact on the overall model performance.
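The two ablated variants differ from the full model by a single switch each, which can be expressed as a small configuration table. The sketch below is illustrative only: the class `AblationVariant` and the flag names `use_mdwa` and `use_discriminator` are hypothetical and do not come from the authors' code. The Palette baseline [52] is a separate model and is therefore not represented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AblationVariant:
    """One row of the ablation table: which components are switched on."""
    name: str
    use_mdwa: bool            # multi-scale dynamic-windowed attention
    use_discriminator: bool   # adversarial branch


# Each ablated variant removes exactly one component from the full model.
VARIANTS = {
    v.name: v
    for v in (
        AblationVariant("woMDWA", use_mdwa=False, use_discriminator=True),
        AblationVariant("woDIS", use_mdwa=True, use_discriminator=False),
        AblationVariant("full", use_mdwa=True, use_discriminator=True),
    )
}

for variant in VARIANTS.values():
    print(variant)
```

Changing one flag at a time keeps each comparison attributable to a single component, which is what allows the differences in Figures 8 and 9 to be read as the contribution of the attention mechanism or the discriminator alone.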

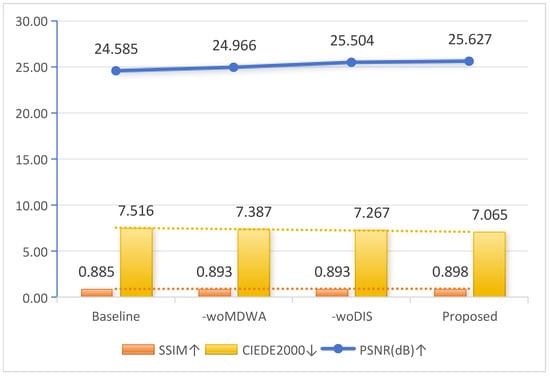

Figure 8 presents the results of the ablation study visually, showing the impact of each component on the final enhancement outcome. White boxes mark identical key regions in the color images, facilitating side-by-side comparison of color restoration and contrast. Red boxes enclose the corresponding areas in the Canny edge maps, highlighting how each variant preserves primary structural edges (e.g., coral ridges, fish contours). Blue boxes indicate areas of fine-grained texture. Comparative analysis suggests that the discriminator ensures a balanced overall color distribution, while the multi-scale dynamic-windowed attention mechanism directs focus to target regions. The Canny-based edge maps further confirm that our complete method not only retains the main edges (red boxes) but also uncovers richer peripheral textures (blue boxes). Moreover, Figure 9 displays the average evaluation results of the different variants on the UIEB dataset. The proposed method achieves the best performance across all evaluation metrics, demonstrating the effectiveness of each component.

Figure 8.

Visual comparison of the ablation study sampled from the UIEB dataset. (I) and (II) represent two images from the UIEB dataset. White, red, and blue rectangles are used to mark key areas in the image. From left to right are (a) raw underwater images, the results of (b) Baseline [52], (c) woMDWA, (d) woDIS, and (e) the proposed method, and (f) reference images.

Figure 9.

Ablation study of different components on the UIEB dataset. ↑ indicates higher is better, ↓ indicates lower is better.
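The edge maps in Figure 8 are produced with the Canny detector. As a rough, dependency-free illustration of how an edge map exposes retained structure, the sketch below thresholds the Sobel gradient magnitude. This is a deliberately simplified stand-in: it omits the Gaussian smoothing, non-maximum suppression, and hysteresis steps of Canny, and the function name, input format, and threshold are assumptions made for illustration.

```python
from typing import List


def sobel_edge_map(gray: List[List[int]], threshold: float) -> List[List[int]]:
    """Binary edge map from thresholded Sobel gradient magnitude.

    Simplified stand-in for Canny; border pixels are left as non-edges.
    `gray` is a list of rows of integer intensities.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


# Hypothetical 5x5 test image with a vertical step edge between columns 1 and 2.
step_image = [[0, 0, 255, 255, 255] for _ in range(5)]
for row in sobel_edge_map(step_image, threshold=100):
    print(row)
```

Applying the same detector and threshold to the output of every variant is what makes the boxed regions comparable: any difference in the number or continuity of edge pixels then reflects the enhancement result rather than the edge extractor.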

5. Discussion

ALDiff-UIE introduces, for the first time, a combination of progressive detail reconstruction via diffusion models and adversarial learning, yielding significant gains in detail-recovery metrics such as PCQI while maintaining color consistency. Its multi-scale dynamic-windowed attention module surpasses fixed-window and single-scale attention mechanisms in capturing anisotropic and cross-scale textures.

Despite its leading performance in underwater image enhancement, ALDiff-UIE exhibits three primary limitations. First, its generalization to unseen water types (e.g., highly turbid river water or deep-sea environments) remains limited; future work may incorporate domain-adaptive or unsupervised fine-tuning strategies to improve adaptability across diverse water conditions. Second, no-reference metrics such as UCIQE and PCQI may not align with subjective human perception in all scenarios, so adopting more robust perceptual-quality indicators or conducting large-scale subjective evaluations could yield more reliable assessments. Third, the combination of multi-stage reverse diffusion, multi-scale dynamic-windowed attention, and a PatchGAN discriminator substantially increases parameter count and inference cost, hindering real-time deployment in resource-constrained settings. Thus, future research will explore lightweight architectures, dynamic inference strategies, and more efficient unsupervised adaptation methods to enhance the model’s utility and robustness.

6. Conclusions

In this paper, we propose ALDiff-UIE, an underwater image enhancement method that integrates adversarial learning with diffusion modeling and incorporates a multi-scale dynamic-windowed attention mechanism to effectively capture cross-scale similarity and anisotropy in underwater images. Qualitative and quantitative experiments on four benchmark datasets (UIEB, U45, SUIM, and LSUI) demonstrate that ALDiff-UIE increases the average PCQI by approximately 12.8% and UIQM by about 15.6%. It also significantly outperforms existing methods on several no-reference metrics, including UCIQE and entropy (p < 0.01), and visual comparisons reveal more natural color reproduction and richer detail. Despite these improvements, ALDiff-UIE remains computationally intensive, with notable inference latency, and its performance degrades in extremely turbid or low-light conditions. In future work, we will compress the model and accelerate inference through structured pruning, low-bit quantization, and knowledge distillation to meet the demand for real-time deployment on embedded and mobile devices. We will also introduce unsupervised or semi-supervised domain-adaptive fine-tuning strategies to improve generalization across water environments such as highly turbid rivers and low-light deep-sea scenes.

Author Contributions

Conceptualization, X.D.; Methodology, Y.S.; Writing—original draft, X.C.; Writing—review & editing, Y.W.; Funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (62402085, 61972062, 62306060); the Liaoning Doctoral Research Start-up Fund (2023-BS-078); the Dalian Youth Science and Technology Star Project (2023RQ023); and the Liaoning Basic Research Project (2023JH2/101300191).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data underlying the results presented in this paper are available in the UIEB dataset [37], U45 dataset [43], SUIM dataset [44], and LSUI dataset [25].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alsakar, Y.M.; Sakr, N.A.; El-Sappagh, S.; Abuhmed, T.; Elmogy, M. Underwater image restoration and enhancement: A comprehensive review of recent trends, challenges, and applications. Vis. Comput. 2025, 41, 3735–3783.

- Raveendran, S.; Patil, M.D.; Birajdar, G.K. Underwater image enhancement: A comprehensive review, recent trends, challenges and applications. Artif. Intell. Rev. 2021, 54, 5413–5467.

- Cong, X.; Zhao, Y.; Gui, J.; Hou, J.; Tao, D. A comprehensive survey on underwater image enhancement based on deep learning. arXiv 2024, arXiv:2405.19684.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the 28th International Conference on Neural Information Processing Systems (NIPS’14), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Yang, L.; Zhang, Z.; Song, Y.; Hong, S.; Xu, R.; Zhao, Y.; Zhang, W.; Cui, B.; Yang, M.H. Diffusion models: A comprehensive survey of methods and applications. ACM Comput. Surv. 2023, 56, 1–39.

- Zhou, J.; Yang, T.; Zhang, W. Underwater vision enhancement technologies: A comprehensive review, challenges, and recent trends. Appl. Intell. 2023, 53, 3594–3621.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2822–2837.

- Muniraj, M.; Dhandapani, V. Underwater image enhancement by combining color constancy and dehazing based on depth estimation. Neurocomputing 2021, 460, 211–230.

- Li, Z. Physics-based Underwater Image Enhancement. In Proceedings of the 2023 IEEE 6th International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 23–25 September 2023; pp. 1020–1024.

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Xu, Y.; Yang, C.; Sun, B.; Yan, X.; Chen, M. A novel multi-scale fusion framework for detail-preserving low-light image enhancement. Inf. Sci. 2021, 548, 378–397.

- Zhang, S.; Wang, T.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 2017, 245, 1–9.

- Zhuang, P.; Li, C.; Wu, J. Bayesian retinex underwater image enhancement. Eng. Appl. Artif. Intell. 2021, 101, 104171.

- Wu, S.; Luo, T.; Jiang, G.; Yu, M.; Xu, H.; Zhu, Z.; Song, Y. A two-stage underwater enhancement network based on structure decomposition and characteristics of underwater imaging. IEEE J. Ocean. Eng. 2021, 46, 1213–1227.

- Jiang, J.; Ye, T.; Bai, J.; Chen, S.; Chai, W.; Jun, S.; Liu, Y.; Chen, E. Five A+ Network: You Only Need 9K Parameters for Underwater Image Enhancement. arXiv 2023, arXiv:2305.08824.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Khandouzi, A.; Ezoji, M. Coarse-to-fine underwater image enhancement with lightweight CNN and attention-based refinement. J. Vis. Commun. Image Represent. 2024, 99, 104068.

- Liu, X.; Gao, Z.; Chen, B.M. MLFcGAN: Multilevel feature fusion-based conditional GAN for underwater image color correction. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1488–1492.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Zhang, D.; Wu, C.; Zhou, J.; Zhang, W.; Li, C.; Lin, Z. Hierarchical attention aggregation with multi-resolution feature learning for GAN-based underwater image enhancement. Eng. Appl. Artif. Intell. 2023, 125, 106743.

- Chaurasia, D.; Chhikara, P. Sea-Pix-GAN: Underwater image enhancement using adversarial neural network. J. Vis. Commun. Image Represent. 2024, 98, 104021.

- Gao, Z.; Yang, J.; Zhang, L.; Jiang, F.; Jiao, X. TEGAN: Transformer Embedded Generative Adversarial Network for Underwater Image Enhancement. Cogn. Comput. 2024, 16, 191–214.

- Ren, T.; Xu, H.; Jiang, G.; Yu, M.; Zhang, X.; Wang, B.; Luo, T. Reinforced swin-convs transformer for simultaneous underwater sensing scene image enhancement and super-resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4209616.

- Peng, L.; Zhu, C.; Bian, L. U-shape transformer for underwater image enhancement. IEEE Trans. Image Process. 2023, 32, 3066–3079.

- Khan, R.; Mishra, P.; Mehta, N.; Phutke, S.S.; Vipparthi, S.K.; Nandi, S.; Murala, S. Spectroformer: Multi-Domain Query Cascaded Transformer Network for Underwater Image Enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 1454–1463.

- Shen, Z.; Xu, H.; Luo, T.; Song, Y.; He, Z. UDAformer: Underwater image enhancement based on dual attention transformer. Comput. Graph. 2023, 111, 77–88.

- Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 2256–2265.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Lu, S.; Guan, F.; Zhang, H.; Lai, H. Underwater image enhancement method based on denoising diffusion probabilistic model. J. Vis. Commun. Image Represent. 2023, 96, 103926.

- Tang, Y.; Kawasaki, H.; Iwaguchi, T. Underwater Image Enhancement by Transformer-based Diffusion Model with Non-uniform Sampling for Skip Strategy. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5419–5427.

- Shi, X.; Wang, Y.G. CPDM: Content-Preserving Diffusion Model for Underwater Image Enhancement. arXiv 2024, arXiv:2401.15649.

- Zhao, C.; Dong, C.; Cai, W. Learning A Physical-aware Diffusion Model Based on Transformer for Underwater Image Enhancement. arXiv 2024, arXiv:2403.01497.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Wang, S.; Ma, K.; Yeganeh, H.; Wang, Z.; Lin, W. A patch-structure representation method for quality assessment of contrast changed images. IEEE Signal Process. Lett. 2015, 22, 2387–2390.

- Tsai, D.Y.; Lee, Y.; Matsuyama, E. Information entropy measure for evaluation of image quality. J. Digit. Imaging 2008, 21, 338–347.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

- Fu, X.; Cao, X. Underwater image enhancement with global–local networks and compressed-histogram equalization. Signal Process. Image Commun. 2020, 86, 115892.

- Zhang, Z.; Yan, H.; Tang, K.; Duan, Y. MetaUE: Model-based Meta-learning for Underwater Image Enhancement. arXiv 2023, arXiv:2303.06543.

- Chen, Y.W.; Pei, S.C. Domain Adaptation for Underwater Image Enhancement via Content and Style Separation. IEEE Access 2022, 10, 90523–90534.

- Zhang, S.; Zhao, S.; An, D.; Li, D.; Zhao, R. LiteEnhanceNet: A lightweight network for real-time single underwater image enhancement. Expert Syst. Appl. 2024, 240, 122546.

- Li, H.; Li, J.; Wang, W. A fusion adversarial underwater image enhancement network with a public test dataset. arXiv 2019, arXiv:1906.06819.

- Islam, M.J.; Edge, C.; Xiao, Y.; Luo, P.; Mehtaz, M.; Morse, C.; Enan, S.S.; Sattar, J. Semantic segmentation of underwater imagery: Dataset and benchmark. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1769–1776.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Hautiere, N.; Tarel, J.P.; Aubert, D.; Dumont, E. Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. 2008, 27, 87–95.

- Korhonen, J.; You, J. Peak signal-to-noise ratio revisited: Is simple beautiful? In Proceedings of the 2012 Fourth International Workshop on Quality of Multimedia Experience, Melbourne, VIC, Australia, 5–7 July 2012; pp. 37–38.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yang, Y.; Ming, J.; Yu, N. Color image quality assessment based on CIEDE2000. Adv. Multimed. 2012, 2012, 273723.

- Guo, C.; Wu, R.; Jin, X.; Han, L.; Zhang, W.; Chai, Z.; Li, C. Underwater ranker: Learn which is better and how to be better. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 702–709.

- Huang, S.; Wang, K.; Liu, H.; Chen, J.; Li, Y. Contrastive semi-supervised learning for underwater image restoration via reliable bank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18145–18155.

- Saharia, C.; Chan, W.; Chang, H.; Lee, C.; Ho, J.; Salimans, T.; Fleet, D.; Norouzi, M. Palette: Image-to-image diffusion models. In Proceedings of the ACM SIGGRAPH 2022 Conference Proceedings, Vancouver, BC, Canada, 7–11 August 2022; pp. 1–10.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).