Abstract

A major computational bottleneck in classifying large-scale hyperspectral images (HSI) is the mandatory data decompression prior to processing. Compressed-domain computing offers a solution by enabling deep learning on partially compressed data. However, existing compressed-domain methods are predominantly tailored for the Discrete Cosine Transform (DCT) used in natural images, while HSIs are typically compressed using the Discrete Wavelet Transform (DWT). The fundamental structural mismatch between the block-based DCT and the hierarchical DWT sub-bands presents two core challenges: how to extract features from multiple wavelet sub-bands, and how to fuse these features effectively? To address these issues, we propose a novel framework that extracts and fuses features from different DWT sub-bands directly. We design a multi-branch feature extractor with sub-band feature alignment loss that processes functionally different sub-bands in parallel, preserving the independence of each frequency feature. We then employ a sub-band cross-attention mechanism that inverts the typical attention paradigm by using the sparse, high-frequency detail sub-bands as queries to adaptively select and enhance salient features from the dense, information-rich low-frequency sub-bands. This enables a targeted fusion of global context and fine-grained structural information without data reconstruction. Experiments on three benchmark datasets demonstrate that our method achieves classification accuracy comparable to state-of-the-art spatial-domain approaches while eliminating at least 56% of the decompression overhead.

1. Introduction

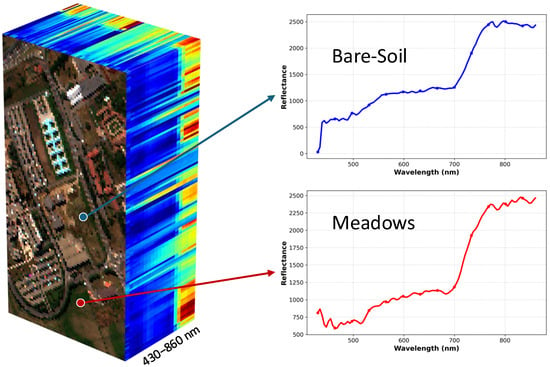

Hyperspectral imaging (HSI) acquires information across a wide range of the electromagnetic spectrum, extending well beyond the visible light captured by standard RGB cameras. Unlike conventional imagery, HSI captures hundreds of contiguous spectral bands with high spectral resolution, typically covering wavelengths from 400 to 2500 nm. This fine-grained spectral sampling enables the precise identification of materials and surface characteristics by analyzing their unique spectral signatures. As illustrated in Figure 1, the two classes (Bare-Soil and Meadows) exhibit distinct differences in the spectral dimension. For this reason, HSI plays a pivotal role in fields such as precision agriculture, environmental monitoring, and mineral exploration.

Figure 1.

Visualization of the Pavia University HSI. The left panel depicts the three-dimensional data cube, and the right panel displays the continuous spectral signatures extracted from distinct pixels, where the blue and red curves represent the Bare-Soil and Meadows classes, respectively.

Despite its advantages, the high spectral resolution of HSI results in substantial data volumes, necessitating efficient compression for transmission and storage. Currently, the vast majority of remote sensing satellites employ Discrete Wavelet Transform (DWT)-based compression standards to overcome bandwidth limitations. For instance, satellites such as Yaogan 6, Ziyuan 3, and Mapping Satellite-1 utilize the Joint Photographic Experts Group 2000 (JPEG2000) standard [1,2,3]. However, the subsequent decompression process creates a computational bottleneck for large-scale data analysis. Assuming that decompressing a single 200-band HSI takes approximately 10 s, processing 10,000 such samples would require nearly 28 h. This latency underscores the necessity for compressed-domain computing methods capable of processing data directly in its compressed form without full reconstruction.

Compressed-domain computing has emerged as a potential solution to this efficiency challenge. While this paradigm has been explored in natural image processing, existing approaches primarily focus on the JPEG standard, which is based on the Discrete Cosine Transform (DCT) [4,5,6]. Unfortunately, these methods are not directly transferable to HSI due to the structural disparity between transform domains. The DCT domain utilizes a block-based structure with intermixed spatial and frequency components, whereas the DWT domain features a hierarchical, multi-resolution sub-band structure. Existing DCT-based architectures, which often apply uniform projections to blocks, fail to accommodate the frequency independence and multi-scale nature inherent in the DWT structure.

Consequently, a fundamental research objective arises: How can we formulate a unified framework for HSI classification that operates efficiently and effectively within the DWT compressed domain?

Establishing such a framework presents two primary challenges derived from the specific data structure of DWT sub-bands. First, unlike the single tensor input in pixel-domain networks, DWT decomposes images into multiple sub-bands (e.g., LL, LH, HL, HH) with varying resolutions, necessitating a multi-branch architecture to extract features in parallel. Second, the semantic information is distributed across different frequency bands; thus, effectively fusing high-frequency details with low-frequency approximations is critical to prevent information loss during the classification process.

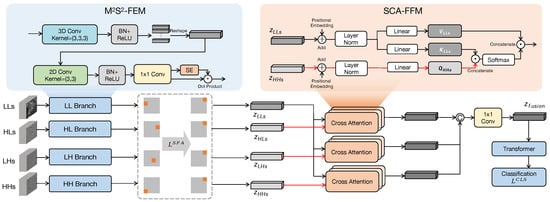

To address these challenges, we propose a novel compressed-domain classification framework named WaveHSI. To handle the multi-branch input, we design a Multi-Branch Multi-Scale Spatial-Spectral Feature Extraction Module (M2S2-FEM) with a Sub-band Feature Alignment (SFA) loss that processes wavelet sub-bands directly. Furthermore, to resolve the feature fusion challenge, we introduce a Sub-band Cross-attention Feature Fusion Module (SCA-FFM), which leverages high-frequency components to refine the semantic representation of low-frequency features.

The main contributions of this paper are summarized as follows:

- We explore a novel feature learning paradigm for the hierarchical DWT structure. By proposing the M2S2-FEM with a specialized alignment loss, we demonstrate that robust spatial–spectral representations can be learned directly from decoupled wavelet sub-bands, bypassing the inverse wavelet transform.

- We investigate the interaction mechanism between varying frequency layers. To this end, we design the SCA-FFM, which reveals that leveraging high-frequency details to guide the refinement of low-frequency features via cross-attention effectively mitigates the information loss in deep networks.

- We present a high-efficiency framework that achieves a superior trade-off between accuracy and speed. Comprehensive experiments on three benchmark datasets validate that our method reduces computational overhead, offering a promising solution for time-critical remote sensing applications.

2. Background and Related Works

2.1. Wavelet Compression Method

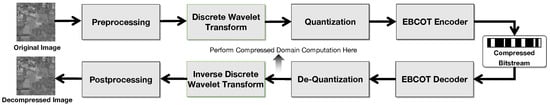

Many HSI compression algorithms utilize DWT, with JPEG2000 being the most prominent example. Based on JPEG2000, this paper explores how to implement deep learning computations directly in the compressed-domain. As shown in Figure 2, the JPEG2000 compression process comprises the following steps: (1) Preprocessing: including image tiling, DC shifting, and component transformation, which provide a more suitable data representation for subsequent wavelet transform and encoding processes. (2) DWT: This is the core step of the JPEG2000 algorithm, decomposing the image into four frequency sub-bands that capture both high-frequency and low-frequency information of the image. (3) Quantization of wavelet coefficients: This lossy step discards certain data to reduce the overall file size. (4) Encoding of quantized wavelet coefficients using Embedded Block Coding with Optimized Truncation (EBCOT) to obtain a compressed bitstream. EBCOT is an entropy encoding technique used to further compress image data and remove redundancy.

Figure 2.

JPEG2000 compression and decompression process.

Throughout this process, the computational complexity of the wavelet transform is $O(N^2)$, where N is the image side length [7]. This quadratic complexity causes significant computational delays for large-scale HSI, with delays increasing with the number of hyperspectral bands and wavelet decomposition levels. For large amounts of high-resolution hyperspectral data, omitting the inverse wavelet transform step can save considerable time. Beyond computational efficiency, the wavelet domain offers inherent advantages for feature representation. Distinct regions of the electromagnetic spectrum manifest unique frequency characteristics; for instance, the subtle contrast variations found in near-infrared bands correspond to specific high- or low-frequency components. These features often exhibit superior discriminability in the frequency domain compared to the spatial domain, thereby validating the rationale for performing analysis directly on wavelet coefficients. Moreover, JPEG2000 provides lossless wavelet transform capabilities, allowing the transformed image to retain all information. The multi-resolution representation provided by multi-level wavelet transform enables easy access to different detail levels of the image, which are prerequisites for feasible compressed-domain computation. Therefore, following previous works [8,9,10], this paper defines the compressed-domain as the multi-level wavelet domain data obtained after EBCOT decoding and before inverse wavelet transform. We propose a method to directly input this data into neural networks without fully reconstructing the image.

2.2. DCT Compressed-Domain Computing

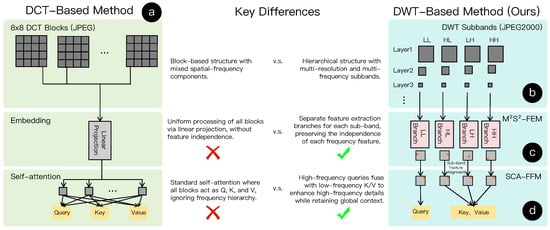

While this paper focuses on wavelet-based compression, the paradigm of compressed-domain computing has matured significantly within the context of DCT-based JPEG images. As illustrated in Figure 3a, the standard DCT compression pipeline partitions an image into independent blocks. Within each block, spatial and frequency information are intermixed, and existing methods typically apply uniform projections to these blocks to extract features.

Figure 3.

Structural comparison between DCT-based and DWT-based compressed-domain paradigms. (a) DCT-based methods typically process blocks with mixed spatial-frequency components using uniform projections. (b) In contrast, the DWT domain exhibits a hierarchical structure with distinct frequency sub-bands, which existing block-based methods fail to address. (c,d) illustrate the proposed sub-band independent processing and feature alignment strategies.

Gueguen et al. [4] pioneered this direction by modifying ResNet to accept DCT coefficients directly from the decoded blocks. By bypassing the computationally expensive inverse DCT and RGB conversion steps, they achieved significant acceleration in image classification. Subsequently, Park et al. [5] identified a natural alignment between the DCT blocks and the patch embedding mechanism of Vision Transformers. They proposed treating flattened DCT blocks as input tokens, allowing the network to learn directly from frequency representations without resizing artifacts. To further optimize the trade-off between accuracy and efficiency, Li et al. [6] introduced a learnable frequency selection mechanism (DCFormer) to filter out redundant high-frequency channels within the DCT blocks.

However, these successes in the DCT domain do not readily translate to HSI processing. As shown in the comparison between Figure 3a,b, there is a fundamental structural disparity. DCT methods rely on the independence of local blocks, whereas the DWT standard (JPEG2000) used in HSI features a global, hierarchical multi-resolution structure where frequency sub-bands are explicitly separated. This mismatch necessitates the development of specialized DWT-based frameworks, as discussed in the following section.

2.3. DWT Compressed-Domain Computing

Extensive explorations into the JPEG2000 compressed-domain have been conducted prior to this work. As early as 2005, Fehmi Chebil et al. [10] proposed a method for directly editing images in the JPEG2000 compressed-domain. By manipulating wavelet coefficients, they enabled common image editing operations such as brightness adjustment, contrast adjustment, image overlay, and linear filtering, thereby improving editing efficiency. When processing high-resolution images, this method enhanced editing performance by more than 10 times.

Fan Zhao et al. [11] proposed an adaptive blind watermarking algorithm based on the JPEG2000 compressed-domain. This algorithm fully utilizes the rich bitstream syntax of the JPEG2000 standard and enables copyright protection for JPEG2000 images by embedding watermarks in the compressed-domain. In 2023, Bisen et al. [12] proposed a method for directly extracting text and non-text regions within the JPEG2000 compressed-domain. This method utilizes JPEG2000 characteristics (such as resolution and bit depth) to efficiently separate text and non-text regions, and extracts layout information through the maximally stable extremal regions algorithm, thus saving computing resources and improving processing efficiency.

Li et al. [13] proposed an analysis workflow for whole slide image processing based on the compressed-domain. By utilizing the compressed-domain pyramid magnification structure and compressed-domain features obtained from the original bitstream, they achieved efficient analysis of whole slide image classification models. This method reduces computing resource consumption while maintaining model accuracy comparable to that of the original workflow.

In the remote sensing field, Akshara et al. [8] pioneered compressed-domain work on RGB remote sensing images. Their approach uses transposed convolutional layers to approximate higher-resolution wavelet sub-bands from the coarsest-resolution wavelet sub-bands, then employs multiple convolutional layers to extract features from high-resolution wavelet sub-bands, and finally uses a fully connected layer for classification. Although using a simple network structure, they demonstrated that compressed-domain computation can significantly reduce computing time and storage requirements while maintaining high classification accuracy when processing large-scale remote sensing image data.

Table 1 categorizes existing compressed-domain methods by their transform domain (DCT vs. DWT). Although these excellent methods have achieved promising performance on natural images and RGB remote sensing classification, no method for HSI classification tasks has been proposed, and this paper aims to fill this gap.

Table 1.

Comparison of compressed-domain computing methods.

3. Preliminaries: Wavelet Transform Based Data Structure

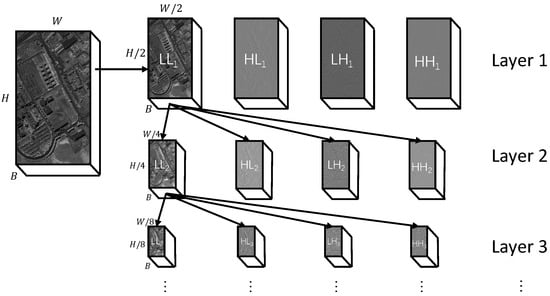

To clarify the input data format for our proposed method, this section details the structure of HSI data in the wavelet domain. This structure results from the 2D DWT used in compression standards like JPEG2000, where each HSI band is recursively decomposed. The process begins by transforming the initial data ($LL_0$) into a low-frequency sub-band ($LL_1$) and three high-frequency sub-bands ($HL_1$, $LH_1$, $HH_1$). The recursive relationship is defined as:

$$(LL_k, HL_k, LH_k, HH_k) = \mathrm{DWT}_k(LL_{k-1}),$$

where $\mathrm{DWT}_k$ is the k-th level DWT. The dimensions of the sub-bands at level k are $\frac{H}{2^k} \times \frac{W}{2^k}$, where $H \times W$ is the size of the original band. We use the same notations for the sub-bands as Akshara et al. [8] did.
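The recursion can be illustrated with a minimal, dependency-free sketch. It uses the orthonormal Haar wavelet purely as a stand-in (JPEG2000 itself relies on the 5/3 or 9/7 filters), assumes even height and width at every level, and the function names `haar_dwt2` and `multilevel_dwt` are hypothetical. Which of the two mixed sub-bands is labeled HL and which LH varies between references; the labeling below is one common convention.

```python
def haar_dwt2(band):
    """One level of a 2D Haar DWT on a list of rows with even height and width."""
    ll, hl, lh, hh = [], [], [], []
    for i in range(0, len(band), 2):
        rows = ([], [], [], [])
        for j in range(0, len(band[0]), 2):
            a, b = band[i][j], band[i][j + 1]          # top-left, top-right
            c, d = band[i + 1][j], band[i + 1][j + 1]  # bottom-left, bottom-right
            rows[0].append((a + b + c + d) / 2.0)  # LL: low-pass in both directions
            rows[1].append((a - b + c - d) / 2.0)  # HL: high-pass along rows
            rows[2].append((a + b - c - d) / 2.0)  # LH: high-pass along columns
            rows[3].append((a - b - c + d) / 2.0)  # HH: high-pass in both directions
        for sub_band, row in zip((ll, hl, lh, hh), rows):
            sub_band.append(row)
    return ll, hl, lh, hh


def multilevel_dwt(band, levels=3):
    """Recursively decompose the LL sub-band; returns one dict of sub-bands per level."""
    pyramid, ll = [], band
    for _ in range(levels):
        ll, hl, lh, hh = haar_dwt2(ll)
        pyramid.append({"LL": ll, "HL": hl, "LH": lh, "HH": hh})
    return pyramid


if __name__ == "__main__":
    band = [[float(r * 8 + c) for c in range(8)] for r in range(8)]  # toy 8x8 band
    for k, level in enumerate(multilevel_dwt(band), start=1):
        print(f"level {k}: LL is {len(level['LL'])}x{len(level['LL'][0])}")
```

Only the LL sub-band is passed on to the next level, which is what produces the three-scale pyramid used as network input.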

For instance, performing a 3-level decomposition on a single band with initial dimensions of produces the following: the first level generates four sub-bands () of size ; the sub-band is then decomposed into four smaller sub-bands () of size ; finally, the sub-band is decomposed into sub-bands () of size .

As calculated above, a 4th level would reduce the LL band to approximately . This resolution is insufficient for the subsequent convolutional or attention-based modules to capture meaningful spatial context. Therefore, in this work, we utilize 3 levels to maintain a resolution of , achieving an optimal balance between frequency domain separation and spatial semantic preservation. The resulting data structure consists of three different scales, as summarized in Figure 4.
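The resolution argument can be checked with a few lines of arithmetic. The sketch below assumes that each level halves both dimensions with ceiling division (a common convention for odd sizes) and uses a hypothetical 128 × 128 band; neither the helper name `subband_sizes` nor the input size comes from the paper.

```python
def subband_sizes(height, width, levels):
    """Return (level, sub-band height, sub-band width) for each decomposition level."""
    sizes = []
    h, w = height, width
    for k in range(1, levels + 1):
        # Each level halves both dimensions; ceiling division handles odd sizes.
        h, w = (h + 1) // 2, (w + 1) // 2
        sizes.append((k, h, w))
    return sizes


if __name__ == "__main__":
    for level, h, w in subband_sizes(128, 128, levels=4):
        print(f"level {level}: {h} x {w}")
```

Under these assumptions, the spatial extent shrinks by a factor of two per level, so a fourth level leaves only a small fraction of the original resolution for the downstream modules.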

Figure 4.

Illustration of wavelet transform based data structure.

This wavelet domain structure provides the foundation for our method. Its multi-scale nature motivates the architecture of our M2S2-FEM module. The functional separation between the information-rich LL sub-bands and the detail-oriented HL, LH, HH sub-bands justifies a multi-branch processing strategy and the cross-attention fusion in our SCA-FFM. By operating directly on these coefficients instead of the reconstructed image, our method bypasses the computationally expensive inverse transform, enabling compressed-domain computing.

4. Methods

This section introduces the workflow of the WaveHSI method proposed in this paper. Section 4.1 provides an overall introduction to the entire pipeline. Section 4.2 elaborates on the specific designs of the two modules: M2S2-FEM and SCA-FFM. Section 4.3 presents the training strategy, including a sub-band random masking strategy proposed to prevent overfitting, as well as a detailed introduction to the loss functions.

4.1. Overview

After obtaining the compressed-domain data in the form described in Section 3, the WaveHSI implementation proceeds through the following four steps:

- (1) Data preprocessing: The HSI classification task is pixel-level, requiring a prediction for each pixel. Since the resolution of DWT-domain data is not spatially aligned with the original image, all sub-bands are first upsampled to the spatial size of the original data using bilinear interpolation. Then, to reduce the input dimension and improve computational speed, Principal Component Analysis (PCA) is applied to reduce the spectral dimension of each sub-band. To expand the contextual spatial information of input pixels, patches are extracted with each pixel as the center and r as the radius, and the patch is used as the input instead of a single pixel.

- (2) Shallow feature extraction: Since LL, HL, LH, and HH represent features in different directions, each sub-band type needs to be processed separately. As shown in Figure 5, we designed M2S2-FEM to extract shallow features from the four sub-bands. This module has four branches with the same network structure. Each branch extracts spectral features through 3D convolution and then spatial features through 2D convolution, obtaining shallow features from the four sub-bands. To ensure the alignment of features across different branches, we employ the SFA loss. This loss uses the spatial layout as an intermediary, compelling the channels in each sub-band feature to exhibit specific spatial activation patterns.

Figure 5.

Illustration of M2S2-FEM and SCA-FFM.

- (3) Deep feature fusion: LL represents low-frequency features that retain most of the information and are of the highest importance for classification, while the high-frequency features play auxiliary roles. To fully fuse the information from low-frequency and high-frequency features, we propose SCA-FFM. This module uses the features of HL, LH, and HH as queries, and the features of LL as keys and values to compute attention, obtaining high-level fused features.

- (4) Classification: Following the traditional vision transformer-based classification process, we pass the fused features into the transformer encoder, obtain the classification token after a series of nonlinear transformations, and finally obtain the classification result through a linear layer.
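The spatial part of the data preprocessing step can be sketched without any dependencies. The corner-aligned bilinear mapping and the zero padding at image borders are assumptions (the text specifies neither), the PCA step is omitted, and `bilinear_upsample` and `extract_patch` are hypothetical helper names.

```python
def bilinear_upsample(grid, out_h, out_w):
    """Resize a 2D list to out_h x out_w with corner-aligned bilinear interpolation."""
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional input row.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = grid[y0][x0] * (1 - dx) + grid[y0][x1] * dx
            bottom = grid[y1][x0] * (1 - dx) + grid[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def extract_patch(image, row, col, r):
    """Return the (2r+1) x (2r+1) patch centred on (row, col), zero-padded at borders."""
    h, w = len(image), len(image[0])
    patch = []
    for i in range(row - r, row + r + 1):
        patch_row = []
        for j in range(col - r, col + r + 1):
            inside = 0 <= i < h and 0 <= j < w
            patch_row.append(image[i][j] if inside else 0.0)
        patch.append(patch_row)
    return patch


if __name__ == "__main__":
    sub_band = [[0.0, 1.0], [2.0, 3.0]]          # toy 2x2 sub-band
    upsampled = bilinear_upsample(sub_band, 3, 3)  # back to a toy 3x3 "original" size
    patch = extract_patch(upsampled, 1, 1, r=6)
    print(len(patch), len(patch[0]))               # side length is 2r + 1
```

With r = 6, the side length 2r + 1 gives the 13 × 13 patches reported in Section 5.2.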

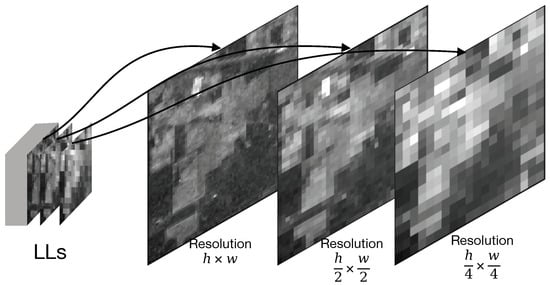

4.2. Model Architecture

4.2.1. Multi-Branch Multi-Scale Spatial–Spectral Feature Extraction Module

The M2S2-FEM module is designed to effectively extract multi-scale spatial–spectral features directly from distinct sub-bands generated by the DWT, addressing the challenge of capturing hierarchical context from wavelet coefficients. To achieve this, the module's input is constructed through a specialized channel concatenation strategy. As illustrated in Figure 6, patches from different decomposition levels corresponding to the same spatial location are arranged adjacently along the channel dimension. This organization allows subsequent convolutional kernels to simultaneously process features from multiple resolutions, facilitating hierarchical correlation learning. This approach directly leverages the multi-scale outputs of the wavelet domain, unlike methods such as spatial pyramid pooling [14] that generate scale invariance through pooling operations.

Figure 6.

Input data of the M2S2-FEM (taking LLs of three-level wavelet decomposition as an example).
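The channel concatenation strategy can be sketched as follows. The sketch assumes that the patches of each level have already been upsampled to a common patch size and that levels are stacked in order from level 1 to level 3; the ordering, the four channels per level, and the helper name `concat_levels` are illustrative assumptions.

```python
def concat_levels(patches_per_level):
    """Stack per-level patches, each shaped [C][P][P], along the channel axis."""
    stacked = []
    for patch in patches_per_level:  # assumed order: level 1, level 2, level 3
        stacked.extend(patch)
    return stacked


def constant_patch(channels, size, value):
    """Build a toy [channels][size][size] patch filled with a constant."""
    return [[[value] * size for _ in range(size)] for _ in range(channels)]


if __name__ == "__main__":
    # Toy LL patches from three levels, each with 4 channels and a 13x13 window.
    ll_levels = [constant_patch(4, 13, float(k)) for k in (1, 2, 3)]
    branch_input = concat_levels(ll_levels)
    print(len(branch_input), len(branch_input[0]), len(branch_input[0][0]))
```

The same construction would be repeated for the HL, LH, and HH sub-bands, giving one multi-scale input per branch.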

Inspired by the Mixture of Experts (MoE) architecture [15,16] in the field of large language models, we employ a multi-branch architecture with four parallel streams to process this structured multi-scale input, each dedicated to a specific sub-band type (LLs, HLs, LHs, and HHs). Each branch utilizes 3D convolution to extract initial spatial–spectral features, with kernels operating across both spatial dimensions and the concatenated scale-channel dimension to capture local patterns and spectral-scale correlations. This is followed by depthwise separable convolution, which first isolates spatial pattern learning through depthwise convolution and then efficiently combines channel information via pointwise convolution, enhancing spatial texture extraction while reducing computational overhead. To further refine feature representations, a Squeeze-and-Excitation (SE) block is incorporated in each branch to adaptively recalibrate channel importance, amplifying informative features while suppressing noise. The outputs of this process are the refined feature maps for each sub-band group: $F_{LL}$, $F_{HL}$, $F_{LH}$, and $F_{HH}$.
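The channel recalibration performed by an SE block can be sketched in plain Python. This is a generic SE formulation (squeeze by global average pooling, excitation by two fully connected layers, then channel-wise scaling) with biases omitted for brevity; the weight matrices `w1` and `w2` are hypothetical stand-ins for learned parameters, and the paper's exact layer sizes are not reproduced here.

```python
import math


def se_recalibrate(feature, w1, w2):
    """feature: [C][H][W]; w1: [C_r][C]; w2: [C][C_r]. Returns (scaled feature, gates)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]
    # Excitation: bottleneck layer with ReLU, then a layer with sigmoid gates in (0, 1).
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]
    # Scale: multiply every value of a channel by that channel's gate.
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(feature, gates)]
    return scaled, gates


if __name__ == "__main__":
    feature = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]  # 2 channels, 2x2
    w1 = [[0.5, -0.5]]    # reduce 2 channels to 1 hidden unit
    w2 = [[1.0], [-1.0]]  # expand back to 2 channel gates
    _, gates = se_recalibrate(feature, w1, w2)
    print(gates)
```

Channels whose gate is close to 1 pass through almost unchanged, while channels with a small gate are attenuated.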

The LLs, HLs, LHs, and HHs branches extract features in parallel. However, this independent extraction design lacks an explicit constraint to ensure that the output features from the four branches are spatially aligned with each other. To reinforce spatial consistency and to enhance the model's representation capability for subtle local objects, we introduce the SFA loss. Inspired by Liu et al. [17], its core idea is to leverage a spatial consistency prior by explicitly binding the feature channels of each branch to fixed spatial regions. By forcing all branches to adhere to the same spatial alignment rule, we indirectly achieve alignment across the different branches. The detailed calculation process is presented in Section 4.3.2.

4.2.2. Sub-Band Cross-Attention Feature Fusion Module

Compared with existing feature fusion methods [18,19], our main challenge lies in performing feature fusion across multiple wavelet sub-bands ($F_{LL}$, $F_{HL}$, $F_{LH}$, $F_{HH}$) after feature extraction by the M2S2-FEM. Simple concatenation can be suboptimal as it treats low-frequency and high-frequency sub-bands equally, potentially combining noise with signal. Similar to many multimodal studies focusing on cross-attention-based feature fusion [20,21], we propose SCA-FFM to address this issue. This module uses a cross-attention mechanism for adaptive fusion where high-frequency features query low-frequency features to integrate details with the primary signal.

Before the cross-attention step, each sub-band feature set passes through a pooling layer that applies adaptive average pooling and max pooling in parallel. This extracts both global and local context from the feature maps. Average pooling provides feature summaries, while max pooling extracts features with the highest activation. Using both provides the attention mechanism with information about general statistics and prominent features.
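The parallel pooling step can be illustrated with a small sketch. It pools each channel down to a single value, which is only one possible output size for adaptive pooling, and it returns the two descriptors separately because the text does not state how they are combined; `dual_pool` is a hypothetical name.

```python
def dual_pool(feature):
    """feature: [C][H][W]. Returns per-channel (average, maximum) descriptors."""
    # Average pooling summarizes the overall activation level of each channel.
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]
    # Max pooling keeps the single strongest activation of each channel.
    mx = [max(max(row) for row in ch) for ch in feature]
    return avg, mx


if __name__ == "__main__":
    feature = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [0.0, 8.0]]]
    avg, mx = dual_pool(feature)
    print(avg, mx)
```

In the second toy channel, the average is small while the maximum is large, which is the kind of isolated, prominent response that average pooling alone would dilute.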

The central component of SCA-FFM is its cross-attention mechanism, where features from high-frequency sub-bands ($F_{HL}$, $F_{LH}$, $F_{HH}$) serve as queries, while features from the low-frequency sub-band ($F_{LL}$) serve as both keys and values. This configuration allows high-frequency features to attend to low-frequency features, weighting low-frequency information based on its relevance to high-frequency details. The computation is performed using a multi-head attention structure to capture relationships in different representation subspaces.

The attention mechanism output is then processed by 1 × 1 convolution to project features into a new dimension, followed by a transformer encoding layer to further refine the features, producing the module’s final output. The goal of this module is to generate a unified feature representation by combining information from low- and high-frequency sub-bands through a data-driven attention process.
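The query/key/value assignment can be made concrete with a single-head sketch of scaled dot-product cross-attention. The learned projections, the multi-head split, the 1 × 1 convolution, and the transformer layer are all omitted, and concatenating the HL, LH, and HH tokens into one query set is an assumption about how the three high-frequency inputs are combined.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """queries: [Nq][d]; keys: [Nk][d]; values: [Nk][dv]. Returns [Nq][dv]."""
    d = len(queries[0])
    outputs = []
    for q in queries:
        # Similarity between one high-frequency query and every low-frequency key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the low-frequency values.
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    f_ll = [[1.0, 0.0], [0.0, 1.0]]           # low-frequency tokens: keys and values
    f_hl, f_lh, f_hh = [[2.0, 0.0]], [[0.0, 2.0]], [[0.0, 0.0]]
    queries = f_hl + f_lh + f_hh                # high-frequency tokens act as queries
    fused = cross_attention(queries, f_ll, f_ll)
    print(len(fused), len(fused[0]))
```

Each output row is a mixture of low-frequency tokens, so the fused representation stays in the LL feature space while the mixing weights are set by the high-frequency details.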

4.3. Training Strategy

4.3.1. Sub-Band Random Masking Strategy

To force the network to learn cross-modal complementary feature representations and reduce dependence on a single sub-band, we designed a sub-band random masking strategy during the training phase. Specifically, after convolution and before the SE attention, random masking M is applied to sub-band features (LLs/HLs/LHs/HHs):

where ⊙ denotes element-wise multiplication, and the mask matrix M satisfies:

where p is a hyperparameter. The value of p is discussed in Section 6.1.4.

The sub-band random masking strategy not only enhances cross-modal compensation learning but also trains the channel attention module’s ability to filter important features, ensuring the network has sufficient generalization ability and preventing overfitting.
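A minimal sketch of the masking step is given below. It assumes that each element of M is drawn independently, that p is the probability of setting an element to zero, and that no rescaling is applied afterwards; the paper's exact mask definition may differ (for example, in granularity), and `random_mask` is a hypothetical name.

```python
import random


def random_mask(feature, p, rng):
    """Zero each element of a 2D feature map independently with probability p."""
    mask = [[0.0 if rng.random() < p else 1.0 for _ in row] for row in feature]
    # Element-wise product of the feature map and the binary mask.
    masked = [[v * m for v, m in zip(f_row, m_row)] for f_row, m_row in zip(feature, mask)]
    return masked, mask


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the toy run is repeatable
    feature = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    masked, mask = random_mask(feature, p=0.3, rng=rng)
    print(mask)
    print(masked)
```

As with dropout, masking of this kind would only be applied during training; at inference time the features pass through unchanged.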

4.3.2. Loss Function

Two loss functions are employed in the proposed method: the SFA loss, denoted as $\mathcal{L}_{SFA}$, and the classification (CLS) loss, denoted as $\mathcal{L}_{CLS}$.

The SFA loss is applied independently to each of the four feature maps output by the M2S2-FEM module ($F_{LL}$, $F_{HL}$, $F_{LH}$, $F_{HH}$). For any given branch's output feature map $F \in \mathbb{R}^{D \times H \times W}$, where D is the number of channels and $H \times W$ is the spatial resolution, our grouping strategy is as follows: we first divide the D feature channels into $HW$ groups, with each group containing $D/(HW)$ channels. We then assign a fixed, artificial "region label" to all channels within the j-th group (where $j = 1, \ldots, HW$). This label j corresponds to the j-th pixel location in the $H \times W$ spatial grid. We enforce this alignment using a cross-entropy loss function, formulated as

$$\mathcal{L}_{SFA} = \frac{1}{D} \sum_{k=1}^{D} \mathrm{CE}\left(\mathrm{flatten}(F_k),\, y_k\right),$$

where $F_k$ is the feature map of the k-th channel. $\mathrm{flatten}(F_k)$ represents the flattened feature map, which can be converted into an $HW$-dimensional probability vector (optionally via Softmax) representing the channel's activation distribution across all spatial locations. $y_k$ is the ground-truth region label (j) assigned to the k-th channel, represented as a one-hot vector with a "1" only at the j-th position. This loss function compels the activation energy of the k-th channel (belonging to the j-th group) to concentrate primarily on the j-th spatial pixel location. By applying this simultaneously to all four sub-band branches, we ensure that the low-frequency global features ($F_{LL}$) and the high-frequency detail features ($F_{HL}$, $F_{LH}$, $F_{HH}$) are aligned before entering the SCA-FFM.
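The grouping strategy and the per-channel cross-entropy can be sketched as follows. The sketch assumes that the number of channels is divisible by the number of spatial locations, that channels are assigned to groups in consecutive blocks, that a softmax is applied to the flattened map, and that the loss is averaged over channels; these are illustrative choices, and `sfa_loss` is a hypothetical name.

```python
import math


def sfa_loss(feature):
    """feature: [D][H][W], with D divisible by H*W. Mean cross-entropy over channels."""
    num_channels = len(feature)
    num_locations = len(feature[0]) * len(feature[0][0])
    assert num_channels % num_locations == 0, "channels must split evenly into groups"
    per_group = num_channels // num_locations
    total = 0.0
    for k, channel in enumerate(feature):
        flat = [v for row in channel for v in row]  # flatten H x W into one vector
        target = k // per_group                     # region label of channel k (0-based)
        # Cross-entropy with a one-hot target: log-sum-exp minus the target logit.
        m = max(flat)
        log_z = m + math.log(sum(math.exp(v - m) for v in flat))
        total += log_z - flat[target]
    return total / num_channels


if __name__ == "__main__":
    # Two channels on a 1x2 grid: channel 0 is bound to location 0, channel 1 to location 1.
    aligned = [[[10.0, -10.0]], [[-10.0, 10.0]]]
    uniform = [[[0.0, 0.0]], [[0.0, 0.0]]]
    print(sfa_loss(aligned), sfa_loss(uniform))
```

The loss is close to zero when each channel's activation is concentrated on its assigned location and grows as the activation spreads out or moves elsewhere.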

In the classification head, we employ the standard cross-entropy loss $\mathcal{L}_{CLS}$, which measures the discrepancy between the model's predicted probability and the true label distribution:

$$\mathcal{L}_{CLS} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{C} y_{ij} \log \hat{y}_{ij},$$

where N is the batch size, C is the number of classes, $y_{ij}$ is the true label for the i-th sample belonging to the j-th class, and $\hat{y}_{ij}$ is the corresponding predicted probability.

Our final objective function, $\mathcal{L}_{total}$, is a weighted sum of $\mathcal{L}_{CLS}$ and the SFA losses applied to each of the four sub-band branches from the M2S2-FEM:

$$\mathcal{L}_{total} = \mathcal{L}_{CLS} + \lambda \sum_{s \in \{LL, HL, LH, HH\}} \mathcal{L}_{SFA}^{(s)},$$

where $\lambda$ is a hyperparameter that balances the contribution of the classification task and the auxiliary feature alignment task. During training, we select the model that achieves the smallest total loss as the best model.
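The weighted sum can be sketched numerically. The sketch assumes that the four branch losses are summed (rather than averaged) before weighting and uses a balancing weight of 1e-2, the value adopted in Section 6.1.2; the function names and the toy loss values are hypothetical.

```python
import math


def cross_entropy(probs, labels):
    """probs: [N][C] predicted probabilities; labels: [N] integer class indices."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def total_loss(cls_loss, sfa_losses, lam=1e-2):
    """Classification loss plus the weighted sum of per-branch SFA losses."""
    return cls_loss + lam * sum(sfa_losses)


if __name__ == "__main__":
    probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]  # toy batch of 2 samples, 3 classes
    labels = [0, 1]
    cls = cross_entropy(probs, labels)
    sfa = {"LL": 0.9, "HL": 1.1, "LH": 1.0, "HH": 1.2}  # toy per-branch SFA losses
    print(total_loss(cls, sfa.values()))
```

Because the alignment term is scaled by a small weight, the classification loss dominates the objective and the SFA loss acts as an auxiliary regularizer.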

5. Experimental Setup

5.1. Datasets

- Indian Pines: Acquired by NASA's Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over northwestern Indiana, this dataset contains 145 × 145 pixel imagery with 200 spectral bands (400–2500 nm) after preprocessing. The scene was captured in June and predominantly features farmland and woodlands, with 16 land-cover categories. Ground truth annotations are available through Purdue University's MultiSpec platform.

- Pavia University: This dataset comprises an HSI captured by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor over Pavia, Italy. It consists of 610 × 340 pixels with 103 spectral bands (430–860 nm) and a spatial resolution of 1.3 m. The image is divided into 9 classes: asphalt, meadows, gravel, trees, painted metal sheets, bare soil, bitumen, self-blocking bricks, and shadows.

- Kennedy Space Center: Acquired by NASA's AVIRIS sensor over Kennedy Space Center, Florida, on 23 March 1996, this dataset has an 18 m spatial resolution from an altitude of 20 km. Of the 224 spectral bands (10 nm width, center wavelengths from 400 to 2500 nm), 176 are retained after excluding water absorption and low signal-to-noise ratio bands. The dataset encompasses 13 land cover classes, developed to reflect discernible functional types, with classification challenges due to similar spectral signatures among certain vegetation.

As shown in Table 2, the dataset splitting ratios (training:validation:testing) were set to 10%:1%:89% for the Indian Pines and Kennedy Space Center datasets, and 5%:0.5%:94.5% for the Pavia University dataset. A random sampling strategy was adopted to select samples for model training, validation, and testing, and these ratios are consistent with the work of Li et al. [22] to ensure the comparability of experimental results.

Table 2.

Number of training, validation, and testing samples for Indian Pines, Pavia University, and Kennedy Space Center datasets.
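A random split with these ratios can be sketched as follows. The sketch shuffles all labeled samples together and rounds the subset sizes to the nearest integer; whether the original sampling is performed per class and how fractional counts are rounded are not stated, so both are assumptions, as is the helper name `split_indices`.

```python
import random


def split_indices(num_samples, train_ratio, val_ratio, seed=0):
    """Randomly partition sample indices into training, validation, and testing sets."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # seeded for a repeatable split
    n_train = round(num_samples * train_ratio)
    n_val = round(num_samples * val_ratio)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]      # everything left over is used for testing
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_indices(1000, train_ratio=0.10, val_ratio=0.01)
    print(len(train), len(val), len(test))
```

Changing the seed gives a different random partition, which is how repeated independent runs can be drawn from the same labeled pool.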

5.2. Implementation Details

All experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 4090D GPU, implemented using the PyTorch framework (version 2.5.1). We set the radius r of the patches to 6, resulting in a patch size of 13, and this configuration has been validated as effective in GraphGST [23]. We utilized the Adam optimizer with a fixed learning rate of . The batch size was set to 64, and each model was trained for 100 epochs. To mitigate the impact of randomness on the comparative experiments in Section 6.2, we performed 10 independent runs for each method on every dataset. The experimental results are reported as the “mean ± standard deviation”.

5.3. Evaluation Metrics

In this paper, the classification performance is evaluated using three widely adopted accuracy metrics: Overall Accuracy (OA), which represents the proportion of correctly classified pixels among all pixels; Average Accuracy (AA), which is the average of the classification accuracies for each class; and Kappa Coefficient (KA), which accounts for the agreement between the classified results and the reference data while considering the possibility of random chance. Additionally, the decode time is employed to measure the efficiency of the inverse discrete wavelet transform decode process. The inference time refers to the time taken for model inference.
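The three accuracy metrics follow directly from a confusion matrix, as the sketch below shows. It uses the standard definitions of OA, AA, and Cohen's kappa; the function name `classification_metrics` and the toy matrix are illustrative only.

```python
def classification_metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    # OA: fraction of all samples on the diagonal.
    oa = sum(confusion[i][i] for i in range(n)) / total
    # AA: mean of per-class recall, skipping classes without reference samples.
    recalls = [confusion[i][i] / row_sums[i] for i in range(n) if row_sums[i] > 0]
    aa = sum(recalls) / len(recalls)
    # Kappa: agreement corrected for the agreement expected by chance.
    expected = sum(row_sums[i] * col_sums[i] for i in range(n)) / (total * total)
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa


if __name__ == "__main__":
    toy_confusion = [[8, 2], [1, 9]]  # 2 classes, 10 reference samples each
    oa, aa, kappa = classification_metrics(toy_confusion)
    print(f"OA={oa:.2f} AA={aa:.2f} Kappa={kappa:.2f}")
```

OA and AA diverge when classes are imbalanced, which is why both are reported alongside the chance-corrected kappa.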

5.4. Baselines

We selected 12 methods published between 2004 and 2024 as baselines. They are categorized as follows: Traditional Machine Learning Methods: Support Vector Machine (SVM) (2004) [24], Random Forest (RF) (2005) [25]. Deep Learning-Based Methods: ContextNet (2017) [26], RSSAN (2020) [27], SSTN (2022) [28], SSAN (2020) [29], SSSAN (2022) [30], SSAtt (2021) [31], A2S2KResNet (2021) [32], CVSSN (2023) [22], IGroupSS-Mamba (2024) [33], GraphGST (2024) [23].

6. Results and Analysis

6.1. Ablation Studies

6.1.1. Layers in Wavelet Transform

In the JPEG2000 algorithm, the number of wavelet decomposition layers in the compression process is a hyperparameter, which is typically set to 3–6 layers. As introduced in Section 3, when the number of wavelet decomposition layers exceeds 3, the resolution becomes too small to extract effective semantic information. Additionally, to simplify the computation, we set the number of wavelet decomposition layers to 3 and investigate the experimental effects of decomposition at different levels. L1, L2, L3, and FULL represent the first layer, second layer, third layer, and full decompression, respectively.

As shown in Table 3, the performance of using L2 or L1 alone is better than that of using L3 alone. This is because L3, as the bottommost layer of wavelet decomposition, has its feature map size reduced significantly after multiple downsampling steps, resulting in the loss of a large amount of detailed information (such as key discriminative features like edges and textures) during compression, which limits the feature expression capability. The performance of using multiple layers, such as L3+2 or L3+2+1, is better than using a single layer. The reason is that multi-layer wavelet decomposition corresponds to feature spaces of different scales: L3 retains global contour information, L2 contains medium-scale details, and L1 focuses on local fine-grained features. The fusion of multiple layers can complement the semantic information of different levels, enrich the hierarchical structure of feature representation, and thereby enhance the model’s discriminative ability for complex scenes. In general, using L3+2+1 can achieve relatively good performance. Therefore, in subsequent accuracy comparison experiments, we will adopt L3+2+1.

Table 3.

Analysis of Different Decompression Layers. The best results are highlighted in bold.

In terms of time reduction, the more times the inverse DWT is performed, the more decoding time is consumed. On the three datasets, compared with full decompression, using only L3 requires no decoding time. The decoding time for using only L2 and using L3+2 is reduced by 83%. The decoding time for using only L1 and using L3+2+1 is reduced by 56%. This means that our compressed-domain computing method can save at least 56% of the decompression time while achieving good accuracy.
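The relationship between the chosen layer and the number of inverse DWT steps can be sketched as follows. This is a minimal illustration, not the decoder used in the experiments: it swaps in a simple Haar wavelet (JPEG2000 itself uses the 5/3 or 9/7 filters), works on a toy 8 × 8 array, and all function names are hypothetical.

```python
def haar_dwt2(img):
    """One level of 2D Haar analysis on an array with even height and width."""
    ll, hl, lh, hh = [], [], [], []
    for r in range(0, len(img), 2):
        rows = ([], [], [], [])
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 2)  # LL: low-pass in both directions
            rows[1].append((a - b + d - e) / 2)  # HL: high-pass horizontally
            rows[2].append((a + b - d - e) / 2)  # LH: high-pass vertically
            rows[3].append((a - b - d + e) / 2)  # HH: high-pass in both directions
        for band, row in zip((ll, hl, lh, hh), rows):
            band.append(row)
    return ll, (hl, lh, hh)


def haar_idwt2(ll, details):
    """Invert one level of haar_dwt2, doubling both spatial dimensions."""
    hl, lh, hh = details
    h, w = len(ll), len(ll[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for r in range(h):
        for c in range(w):
            s, x, y, z = ll[r][c], hl[r][c], lh[r][c], hh[r][c]
            out[2 * r][2 * c] = (s + x + y + z) / 2
            out[2 * r][2 * c + 1] = (s - x + y - z) / 2
            out[2 * r + 1][2 * c] = (s + x - y - z) / 2
            out[2 * r + 1][2 * c + 1] = (s - x - y + z) / 2
    return out


def decompose(img, levels=3):
    """Return the coarsest LL band and the detail sub-bands, coarsest first."""
    details = []
    ll = img
    for _ in range(levels):
        ll, d = haar_dwt2(ll)
        details.insert(0, d)
    return ll, details


def partial_decode(ll_coarse, details, inverse_steps):
    """Run only `inverse_steps` inverse transforms, starting from the coarsest layer."""
    ll = ll_coarse
    for d in details[:inverse_steps]:
        ll = haar_idwt2(ll, d)
    return ll


image = [[float((r * 8 + c) % 7) for c in range(8)] for r in range(8)]
ll3, details = decompose(image, levels=3)
for name, steps in (("L3", 0), ("L2", 1), ("L1", 2), ("FULL", 3)):
    ll = partial_decode(ll3, details, steps)
    print(name, "inverse DWT steps:", steps, "size:", len(ll), "x", len(ll[0]))
```

In this sketch, L3 needs no inverse step, L2 needs one, L1 needs two, and FULL needs three, which mirrors the ordering of decoding costs reported above. The measured percentages depend on the actual codec and data, so the sketch makes no claim about them.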

6.1.2. Sub-Band Feature Alignment

To validate the effectiveness of the proposed SFA loss and determine the optimal hyperparameter settings, we conducted two ablation studies. We first investigate the impact of the balancing parameter in the SFA loss function; the results are presented in Table 4. We observe a clear trend: classification performance initially improves as the balancing parameter increases, reaches its peak, and then declines. Specifically, when the parameter is set to $1 \times 10^{-2}$, the model achieves the highest scores across all three key metrics: KA (99.39%), OA (99.54%), and AA (99.07%). This indicates that a moderate weighting of the SFA loss is most beneficial for feature alignment. Values that are too small ($1 \times 10^{-4}$, $1 \times 10^{-3}$) may lead to insufficient alignment, while values that are too large ($1 \times 10^{-1}$, 1) could disrupt the primary classification task, resulting in a performance drop. Therefore, we set the balancing parameter to $1 \times 10^{-2}$ for all subsequent experiments.

Table 4.

The impact of the balancing parameter value on accuracy. The best results are highlighted in bold.

We then performed an ablation study to directly assess the contribution of the SFA loss itself. Table 5 compares the classification performance of our model with and without the SFA loss. The results demonstrate that incorporating the SFA loss consistently enhances the model’s feature representation capabilities, leading to superior classification accuracy. This confirms that the SFA loss effectively aligns features from different sub-bands, reducing intra-class variance and enhancing inter-class discriminability, which ultimately boosts the overall classification performance.

Table 5.

Ablation study on SFA loss. The best results are highlighted in bold.

6.1.3. Number of Cross-Attention Blocks

To investigate the effectiveness of the cross-attention mechanism in feature fusion and determine the optimal configuration of its block quantity, we gradually increased the number of cross-attention blocks from 1 to 6 and applied them to different feature combinations of wavelet transform layers.

As shown in Table 6, both the number of cross-attention blocks and the combination of input features play a critical role in model performance. Under both the L3+2 and L3+2+1 configurations, performance first increases and then decreases as the number of cross-attention blocks grows. Specifically, as the number of blocks increases from 1 to 5, model performance improves steadily and peaks at 5 blocks. When the number of blocks increases to 6, all performance metrics show a significant decline. This indicates that 5 cross-attention blocks achieve the best balance between capturing and fusing multi-scale features and avoiding overfitting, whereas additional blocks may introduce redundant parameters that impair the model’s generalization ability.

Table 6.

Analysis of the number of cross-attention blocks. The best results are highlighted in bold.
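For readers who want a concrete picture of what stacking cross-attention blocks means here, the following is a minimal single-head sketch in which high-frequency tokens act as queries and low-frequency tokens act as keys and values, as described in the abstract. It is an illustration under strong assumptions: there are no learned projections, normalization layers, or feed-forward sub-layers, the residual connection is assumed, and the function names are hypothetical rather than taken from the proposed model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys_values):
    """Single-head cross-attention without learned projections (assumption).

    queries:     list of d-dim vectors (high-frequency tokens)
    keys_values: list of d-dim vectors (low-frequency tokens), used as keys and values
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    fused, weights = [], []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys_values]
        attn = softmax(scores)
        context = [sum(a * v[j] for a, v in zip(attn, keys_values)) for j in range(d)]
        # Residual connection: the query keeps its own detail and adds selected context.
        fused.append([qi + ci for qi, ci in zip(q, context)])
        weights.append(attn)
    return fused, weights


def stacked_cross_attention(queries, keys_values, num_blocks=5):
    """Apply `num_blocks` cross-attention blocks in sequence."""
    x = queries
    for _ in range(num_blocks):
        x, _weights = cross_attention(x, keys_values)
    return x


high_freq = [[1.0, 0.0], [0.0, 1.0]]              # toy high-frequency tokens
low_freq = [[1.0, 1.0], [0.5, -0.5], [0.0, 2.0]]  # toy low-frequency tokens
fused = stacked_cross_attention(high_freq, low_freq, num_blocks=5)
print(len(fused), "fused tokens of dimension", len(fused[0]))
```

The output keeps one fused token per query, so the high-frequency branch determines where the model looks while the low-frequency branch supplies the content that is mixed in.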

6.1.4. Effectiveness of Different Sub-Band Random Masking Strategies

To verify the effectiveness of the wavelet sub-band-based random masking regularization method proposed in this paper and explore the impact of different masking strategies on model performance, we designed a series of ablation experiments. The experiments used a model without any masking strategy (no mask) as the baseline, testing different mask ratios and masking sub-bands. For mask ratios, we compared two different intensities: 10% (0.1) and 1% (0.01). For masking regions, we designed two strategies: one applies masking only to high-frequency sub-bands (HL, LH, HH) to preserve the complete structural information of the low-frequency approximation sub-band (LL); the other applies masking to all four sub-bands (LL, HL, LH, HH).

As shown in Table 7, the experimental results demonstrate the effectiveness of the random masking strategy in improving model performance. Among all experimental configurations, applying a 0.1 mask ratio to all four sub-bands (LL, HL, LH, HH) achieved optimal performance. Particularly when using L3+2+1, this strategy’s KA, OA, and AA reached 98.80%, 98.95%, and 99.09% respectively, comprehensively surpassing the baseline model without masking and all other masking strategies. Further analysis revealed that the 0.1 mask ratio significantly outperformed 0.01, as the latter failed to provide effective regularization due to insufficient perturbation. Interestingly, although preserving LL sub-band information is effective, applying appropriate masking to the LL sub-band can yield better results. This indicates that slightly perturbing low-frequency approximation information can prompt the model to rely not only on the signal’s macro structure but also to more effectively learn and fuse detailed and texture features contained in high-frequency sub-bands, thereby obtaining stronger generalization ability and robustness.

Table 7.

Analysis of different masking strategies. The best results are highlighted in bold.
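The two masking strategies compared above can be sketched as follows. This is an illustrative assumption about how such masking might be applied: coefficients are masked element-wise by setting them to zero, each sub-band is represented as a flat list, and the names `mask_subbands`, `HIGH_FREQ`, and `ALL_BANDS` are hypothetical. The granularity used in the actual experiments (per coefficient, per patch, or per channel) is not specified in this section.

```python
import random

HIGH_FREQ = ("HL", "LH", "HH")        # strategy 1: leave LL untouched
ALL_BANDS = ("LL", "HL", "LH", "HH")  # strategy 2: mask every sub-band


def mask_subbands(subbands, ratio, targets, rng):
    """Return a copy of `subbands` with a fraction `ratio` of the coefficients
    in each targeted sub-band set to zero. The input is not modified."""
    masked = {}
    for name, coeffs in subbands.items():
        flat = list(coeffs)
        if name in targets:
            k = round(ratio * len(flat))
            for idx in rng.sample(range(len(flat)), k):
                flat[idx] = 0.0
        masked[name] = flat
    return masked


rng = random.Random(0)
bands = {name: [1.0] * 100 for name in ALL_BANDS}  # toy coefficients
high_only = mask_subbands(bands, 0.1, HIGH_FREQ, rng)
all_four = mask_subbands(bands, 0.1, ALL_BANDS, rng)
print({name: values.count(0.0) for name, values in high_only.items()})
print({name: values.count(0.0) for name, values in all_four.items()})
```

Masking would typically be applied only during training, so that the regularization does not perturb the coefficients seen at inference time.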

6.2. Comparative Analysis of Classification Accuracy

WaveHSI not only saves decompression time but also achieves excellent accuracy. As shown in Tables 8–10, our proposed method delivers strong HSI classification performance across all three datasets.

Table 8.

Classification Results for the Indian Pines Dataset (%). The 1st, 2nd, and 3rd best results are in bold, underlined, and italic, respectively.

Table 9.

Classification Results for the Pavia University Dataset (%). The 1st, 2nd, and 3rd best results are in bold, underlined, and italic, respectively.

Table 10.

Classification Results for the Kennedy Space Center Dataset (%). The 1st, 2nd, and 3rd best results are in bold, underlined, and italic, respectively.

For the Indian Pines dataset, our method achieves an OA of 98.60%, an AA of 98.22%, and a Kappa of 98.41%. In terms of statistical stability, the standard deviation of its OA is lower than that of baselines such as IGroupSS-Mamba (Table 8), indicating more consistent convergence. Regarding specific classes, our method reaches 100.00% accuracy on “Corn” and performs effectively on spatially scattered categories, achieving 97.66% on “Soybean-notill” and 99.11% on “Soybean-mintill”.

On the Pavia University dataset, our method records the highest performance metrics, with an OA of 99.77% and an AA of 99.63%. The stability of the approach is evidenced by the minimal variance in OA (Table 9). Class-wise analysis shows that our method classifies “Bare-soil” with 100.00% accuracy. Furthermore, it handles the challenging “Gravel” class well, achieving 99.95% accuracy, whereas competitive models such as GraphGST drop to 91.29%.

Regarding the Kennedy Space Center dataset, our method attains an OA of 98.43% and an AA of 97.65%. Statistically, the proposed method demonstrates improved robustness, with a lower standard deviation in OA than CVSSN, which fluctuates more (Table 10). While GraphGST exhibits performance degradation on specific classes such as “Slash-pine” (0.22%), our method maintains consistent accuracy across all categories, recording 99.77% on “Slash-pine” and 98.51% on “Oak/B-hammock”.
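The three metrics reported in these comparisons (OA, AA, and the Kappa coefficient) can all be derived from a confusion matrix using their standard definitions. The sketch below uses a small hypothetical two-class matrix purely for illustration; it does not reproduce any result from the tables.

```python
def classification_metrics(cm):
    """Compute (OA, AA, Kappa) from a confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(k)]
    row_tot = [sum(row) for row in cm]                               # samples per true class
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]    # samples per predicted class

    oa = sum(diag) / n                                   # fraction of correct predictions
    aa = sum(diag[i] / row_tot[i] for i in range(k)) / k  # mean of per-class accuracies
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / (n * n)  # chance agreement
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


# Hypothetical confusion matrix: 50 samples per class, 10 errors in the second class.
oa, aa, kappa = classification_metrics([[50, 0], [10, 40]])
print(f"OA={oa:.2%}  AA={aa:.2%}  Kappa={kappa:.2%}")
```

OA weights every sample equally, AA weights every class equally, and Kappa discounts the agreement expected by chance, which is why the three values can differ on imbalanced datasets.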

6.3. Efficiency Analysis

To evaluate the computational efficiency of the proposed framework, we compared the decoding time, inference time, and total processing time against two state-of-the-art baselines: IGroupSS-Mamba and GraphGST. The quantitative results on three datasets with varying spatial dimensions are summarized in Table 11.

Table 11.

Comparison of computational efficiency on three datasets (Unit: milliseconds). The total time is the sum of decode time and inference time. The best results are highlighted in bold.

As observed, while our method (using L3+2+1) exhibits higher inference latency than the lightweight GraphGST model, it demonstrates a substantial advantage in decoding efficiency. On smaller datasets such as Indian Pines and Pavia University, the total processing time is dominated by the inference stage, resulting in no significant speedup. However, the benefits of compressed-domain computing become prominent on the larger Kennedy Space Center dataset. In this scenario, our method achieves the lowest total time of 516.6 ms, significantly outperforming IGroupSS-Mamba (3193.5 ms) and GraphGST (1383.6 ms). This efficiency gain is primarily attributed to skipping part of the computationally expensive inverse DWT process, validating the scalability and potential of our method for large-scale hyperspectral data processing.

7. Discussion

7.1. Trade-Off Between Accuracy and Efficiency

The experimental results reveal a nuanced trade-off mechanism within the proposed WaveHSI framework, manifesting across two dimensions: data scale and decomposition depth.

Firstly, from the perspective of data scale, Table 11 indicates a scale-dependent performance characteristic. On smaller datasets such as Indian Pines, the reduction in total processing time is marginal, as the computational overhead of standard decompression is negligible compared to model inference. However, the efficiency advantage becomes increasingly pronounced on the larger Kennedy Space Center dataset, where our method achieves the minimum total time. This underscores the core value of compressed-domain computing: as the spatial resolution of sensors scales, the conventional “decode-then-process” paradigm faces severe bottlenecks, whereas our method bypasses much of the expensive inverse DWT.

Secondly, the multi-resolution nature of wavelets offers an intrinsic flexibility to fine-tune this trade-off by selecting different decoding layers. As analyzed in Table 3, while the full fusion strategy (L3+2+1) yields the highest classification accuracy, intermediate configurations like L3+2 or using the L2 sub-band alone present compelling alternatives for time-sensitive applications. For instance, on the Kennedy Space Center dataset, adopting the L3+2 configuration reduces the decoding time by approximately 76% (173.9 ms → 41.1 ms) compared to L3+2+1, with only a negligible drop in Overall Accuracy (99.50% → 99.25%). This implies that our framework can essentially operate in different modes—ranging from a “high-precision mode” (L3+2+1) to a “high-speed mode” (L3+2)—allowing for adaptive deployment based on the specific latency constraints and computational resources of the target platform (e.g., on-orbit satellites vs. ground stations).
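The mode selection described above can be sketched as a simple lookup driven by a decoding-time budget. The decoding times are the Kennedy Space Center figures quoted in the text, and L3 is taken as 0 ms because it requires no inverse DWT (Section 6.1.1). The budget values, the function names, and the rule of always picking the most accurate mode that fits are illustrative assumptions, not part of the proposed framework.

```python
# (configuration, decoding time in ms), ordered from most to least accurate.
MODES = [("L3+2+1", 173.9), ("L3+2", 41.1), ("L3", 0.0)]


def reduction_percent(before_ms, after_ms):
    """Relative reduction in time, as a percentage of the original time."""
    return 100.0 * (before_ms - after_ms) / before_ms


def select_mode(decode_budget_ms, modes=MODES):
    """Return the most accurate configuration whose decoding time fits the budget."""
    for name, decode_ms in modes:
        if decode_ms <= decode_budget_ms:
            return name
    raise ValueError("no configuration fits a negative budget")


print(f"L3+2 vs L3+2+1: {reduction_percent(173.9, 41.1):.1f}% less decoding time")
for budget in (200.0, 50.0, 10.0):  # hypothetical latency budgets in ms
    print(f"budget {budget:>5.1f} ms -> {select_mode(budget)}")
```

A real deployment would also need to account for inference time, which Section 6.3 shows can dominate the total on smaller scenes.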

7.2. Limitations and Future Work

Despite the significant gains in decoding efficiency and classification accuracy, we acknowledge a limitation regarding pure inference latency. As indicated in the efficiency analysis, our method is computationally heavier during the inference phase than lightweight graph-based models (e.g., GraphGST). This latency is largely attributed to the complex architecture of the -FEM and the quadratic complexity of the stacked cross-attention blocks, which prioritize feature interaction depth over calculation speed. This presents a clear direction for our future research, which will focus on making the model more lightweight. We intend to explore network pruning and knowledge distillation techniques to streamline the -FEM structure. The objective is to retain the rich feature representation capability of the current model while significantly reducing the parameter count, so that both decoding and inference are optimized for real-time applications.

8. Conclusions

In this paper, we pioneered the exploration of HSI classification directly within the compressed domain, offering a feasible scheme to overcome the computational bottleneck of data decompression. By operating on DWT coefficients, the proposed framework bypasses the redundancy of full image reconstruction. Specifically, we designed the -FEM to extract hierarchical spatial–spectral features from wavelet sub-bands, and the SCA-FFM to synergize low-frequency global context with high-frequency textural details. Empirical evidence across three benchmarks demonstrates that our method eliminates at least 56% of the decompression overhead while achieving classification accuracy comparable to state-of-the-art methods that rely on fully decompressed data. We believe that this work establishes a baseline for future research into efficient, end-to-end compressed-domain hyperspectral analysis.

Author Contributions

Conceptualization, X.L. and B.S.; methodology, B.S.; software, B.S.; validation, X.L.; writing—original draft preparation, B.S.; writing—review and editing, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shandong Provincial Natural Science Foundation, grant number ZR2024MF048.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in the Hyperspectral Remote Sensing Scenes repository at https://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 6 January 2025).

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive comments and suggestions that helped improve the quality of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| HSI | Hyperspectral Image |
| DCT | Discrete Cosine Transform |
| DWT | Discrete Wavelet Transform |
| EBCOT | Embedded Block Coding with Optimized Truncation |
| JPEG | Joint Photographic Experts Group |
| JPEG2000 | Joint Photographic Experts Group 2000 |
| LL | low-frequency sub-band |
| HL, LH, HH | high-frequency sub-bands |
| MOE | Mixture of Experts |
| -FEM | Multi-branch Multi-scale Spatial–Spectral Feature Extraction Module |
| SCA-FFM | Sub-band Cross-attention Feature Fusion |
| SFA | Sub-band Feature Alignment |
| KA | Kappa coefficient |
| OA | Overall Accuracy |
| AA | Average Accuracy |
| SE | Squeeze-and-Excitation |
| RF | Random Forest |
| SVM | Support Vector Machine |
| L1, L2, L3 | 1st, 2nd, and 3rd Wavelet Decomposition Levels |
| L3+2 | Combination of L3 and L2 |
| L3+2+1 | Combination of L3, L2 and L1 |
| FULL | full decompression |

References

- UCLouvain; OpenJPEG Contributors. OpenJPEG: JPEG 2000 Reference Software. Open-Source Implementation Officially Recognized by ISO/IEC and ITU-T in July 2015. Available online: https://www.openjpeg.org/ (accessed on 15 January 2025).

- Chen, Z.; Li, Q.; Xia, Y. Performance analysis of AVS2 for remote sensing image compression. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; pp. 1–4.

- Yu, X.; Zhao, J.; Zhu, T.; Lan, Q.; Gao, L.; Fan, L. Analysis of JPEG2000 compression quality of optical satellite images. In Proceedings of the 2022 2nd Asia-Pacific Conference on Communications Technology and Computer Science (ACCTCS), Shenyang, China, 25–27 February 2022; pp. 500–503.

- Gueguen, L.; Sergeev, A.; Kadlec, B.; Liu, R.; Yosinski, J. Faster neural networks straight from jpeg. Adv. Neural Inf. Process. Syst. 2018, 31, 3933–3944.

- Park, J.; Johnson, J. Rgb no more: Minimally-decoded jpeg vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22334–22346.

- Li, X.; Zhang, Y.; Yuan, J.; Lu, H.; Zhu, Y. Discrete cosin transformer: Image modeling from frequency domain. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 5468–5478.

- Cheng, K.O.; Law, N.F.; Siu, W.C. Fast extraction of wavelet-based features from JPEG images for joint retrieval with JPEG2000 images. Pattern Recognit. 2010, 43, 3314–3323.

- Byju, A.P.; Sumbul, G.; Demir, B.; Bruzzone, L. Remote-sensing image scene classification with deep neural networks in JPEG 2000 compressed domain. IEEE Trans. Geosci. Remote Sens. 2020, 59, 3458–3472.

- Byju, A.P.; Demir, B.; Bruzzone, L. A progressive content-based image retrieval in JPEG 2000 compressed remote sensing archives. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5739–5751.

- Chebil, F.; Miled, M.H.; Islam, A.; Willner, K. Compressed domain editing of jpeg2000 images. IEEE Trans. Consum. Electron. 2005, 51, 710–717.

- Zhao, F.; Liu, G.; Ren, F. Adaptive blind watermarking for JPEG2000 compression domain. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–5.

- Bisen, T.; Javed, M.; Nagabhushan, P.; Watanabe, O. Segmentation-less extraction of text and non-text regions from jpeg 2000 compressed document images through partial and intelligent decompression. IEEE Access 2023, 11, 20673–20687.

- Li, Z.; Li, B.; Eliceiri, K.W.; Narayanan, V. Computationally efficient adaptive decompression for whole slide image processing. Biomed. Opt. Express 2023, 14, 667–686.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Zhang, Y.; Cai, R.; Chen, T.; Zhang, G.; Zhang, H.; Chen, P.Y.; Chang, S.; Wang, Z.; Liu, S. Robust Mixture-of-Expert Training for Convolutional Neural Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 90–101.

- Dai, D.; Deng, C.; Zhao, C.; Xu, R.X.; Gao, H.; Chen, D.; Li, J.; Zeng, W.; Yu, X.; Wu, Y.; et al. DeepSeekMoE: Towards Ultimate Expert Specialization in Mixture-of-Experts Language Models. arXiv 2024.

- Liu, Y.; Cai, Y.; Jia, Q.; Qiu, B.; Wang, W.; Pu, N. Novel Class Discovery for Ultra-Fine-Grained Visual Categorization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 17679–17688.

- Yuan, Z.; Ding, X.; Xia, X.; He, Y.; Fang, H.; Yang, B.; Fu, W. Structure-Aware Progressive Multi-Modal Fusion Network for RGB-T Crack Segmentation. J. Imaging 2025, 11, 384.

- Chen, C.; Liu, X.; Zhou, M.; Li, Z.; Du, Z.; Lin, Y. Lightweight and Real-Time Driver Fatigue Detection Based on MG-YOLOv8 with Facial Multi-Feature Fusion. J. Imaging 2025, 11, 385.

- Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W. ICAFusion: Iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 2024, 145, 109913.

- Wei, X.; Zhang, T.; Li, Y.; Zhang, Y.; Wu, F. Multi-modality cross attention network for image and sentence matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 10941–10950.

- Li, M.; Liu, Y.; Xue, G.; Huang, Y.; Yang, G. Exploring the relationship between center and neighborhoods: Central vector oriented self-similarity network for hyperspectral image classification. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 1979–1993.

- Jiang, M.; Su, Y.; Gao, L.; Plaza, A.; Zhao, X.L.; Sun, X.; Liu, G. GraphGST: Graph Generative Structure-Aware Transformer for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5534815.

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

- Rigatti, S.J. Random forest. J. Insur. Med. 2017, 47, 31–39.

- Lee, H.; Kwon, H. Going deeper with contextual CNN for hyperspectral image classification. IEEE Trans. Image Process. 2017, 26, 4843–4855.

- Zhu, M.; Jiao, L.; Liu, F.; Yang, S.; Wang, J. Residual spectral–spatial attention network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 449–462.

- Zhong, Z.; Li, Y.; Ma, L.; Li, J.; Zheng, W.S. Spectral–spatial transformer network for hyperspectral image classification: A factorized architecture search framework. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5514715.

- Sun, H.; Zheng, X.; Lu, X.; Wu, S. Spectral–spatial attention network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 58, 3232–3245.

- Zhang, X.; Sun, G.; Jia, X.; Wu, L.; Zhang, A.; Ren, J.; Fu, H.; Yao, Y. Spectral–Spatial Self-Attention Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5512115.

- Hang, R.; Li, Z.; Liu, Q.; Ghamisi, P.; Bhattacharyya, S.S. Hyperspectral image classification with attention-aided CNNs. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2281–2293.

- Roy, S.K.; Manna, S.; Song, T.; Bruzzone, L. Attention-based adaptive spectral–spatial kernel ResNet for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 7831–7843.

- He, Y.; Tu, B.; Jiang, P.; Liu, B.; Li, J.; Plaza, A. IGroupSS-Mamba: Interval group spatial-spectral mamba for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5538817.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).