Abstract

Color difference models (CDMs) are essential for accurate color reproduction in image processing. While CDMs aim to reflect perceived color differences (CDs) from psychophysical data, they remain largely untested in wide color gamut (WCG) and high dynamic range (HDR) contexts, which are underrepresented in current datasets. This gap highlights the need to validate CDMs across WCG and HDR. Moreover, the non-geodesic structure of perceptual color space necessitates datasets covering CDs of various magnitudes, while most existing datasets emphasize only small and threshold CDs. To address this, we collected a new dataset encompassing a broad range of CDs in WCG and HDR contexts and developed a novel CDM fitted to these data. Benchmarking various CDMs using STRESS and significant error fractions on both new and established datasets reveals that CAM16-UCS with power correction is the most versatile model, delivering strong average performance across WCG colors up to 1611 cd/m2. However, even the best CDM fails to achieve the desired accuracy limits and yields significant errors. CAM16-UCS, though promising, requires further refinement, particularly in its power correction component to better capture the non-geodesic structure of perceptual color space.

1. Introduction

With rapid advances in high dynamic range (HDR) display technology, which now achieves luminance levels exceeding 1000 cd/m2 in accordance with new HDR standards [1,2] that also incorporate the wide color gamut (WCG) defined in the earlier BT.2020 standard [3], tools for precise assessment of color reproduction have become essential. Color difference models (CDMs) play a crucial role by estimating perceived color differences (CDs) between colors. Mathematically, a CDM can be expressed as a function $d = f(c_1, c_2, p)$, where $d$ is the perceived difference between colors $c_1$ and $c_2$, and $p$ represents the observation parameters. A basic CDM uses the Euclidean distance in a uniform color space (UCS), $d = \lVert T(c_1) - T(c_2) \rVert_2$, where $T$ is the transformation from the colorimetric color space to the particular UCS. A UCS can serve not only as a CDM but also as a space for performing color transformations. The performance of image processing algorithms has been shown to depend significantly on the chosen color space (CS) or CDM in applications such as tone mapping [4], color correction [5], and image recognition [6].

CDMs are developed and validated using datasets comprising color pairs and their perceived differences, as determined through psychophysical experiments. While various CDMs have been proposed for HDR contexts [7,8,9,10,11], HDR- and WCG-specific experimental data to evaluate these models are still sparse. Existing datasets, often collected based on textile and printed samples [12,13,14,15], lack WCG coverage, and recent monitor-based datasets are confined to luminance levels up to 300 cd/m2 [16,17]. Thus, dedicated experimental setups are necessary to acquire data covering both HDR and WCG ranges.

There are various types of experiments to assess CDs of different magnitudes: threshold CD (TCD), or just noticeable difference (JND); small CD (SCD); and large CD (LCD, exceeding 5–8 JNDs). Measuring CDs across these ranges is critical because perceptual color space is non-geodesic: the cumulative JNDs along the shortest path (geodesic) between two colors can exceed the direct CD between the endpoints [18,19,20]. This discrepancy challenges traditional UCS models that apply a Euclidean metric across CD magnitudes. Consequently, UCSs specifically designed for LCD or SCD applications have been developed based on the CIECAM02 model [21], and a generalized approach using power functions (with exponents $\gamma < 1$) has been applied to current UCS models [22], including the state-of-the-art CAM16-UCS [23].

A recent review by Luo et al. [24] evaluated psychophysical datasets on CDs from over 20 studies, benchmarking CDMs across these datasets. For decades, such datasets have played a central role in the iterative development of CDMs, forming the foundation for refining gold-standard models widely used in color evaluation. This progress has, in turn, driven advancements in applied algorithms. While HDR datasets [17] (up to 1128 cd/m2) and WCG datasets [25] (covering the BT.2020 gamut) exist, the review acknowledges a significant gap: the absence of datasets encompassing both HDR and WCG. Additionally, existing HDR and WCG datasets are limited in their coverage of large CDs. Large CDs are particularly important in display manufacturing, where defective pixels significantly affect perceived color [26]. Addressing these gaps is critical for further advancing UCS development, necessitating new datasets that cover WCG, HDR, and large CD ranges. To this end, we obtained a new color difference dataset spanning threshold, small, and large CDs in HDR and WCG. Using this dataset, we developed an analytically fitted CDM and benchmarked several CDMs with STRESS and significant error fraction metrics across both new and established datasets, providing a robust foundation for future UCS development.

2. Materials and Methods

2.1. Developed Experiment Setup

To obtain psychophysical data across HDR and WCG color spaces at various color difference (CD) scales, we developed a custom experimental setup centered around a purpose-built stimulus generator. Since no commercially available device met the required HDR and WCG specifications, we collaborated with Visionica Ltd. (Moscow, Russia) (http://www.visionica.biz/, accessed 8 November 2024) to design a stimulus generator, named VCD. VCD provides four independent stimuli, each comprising several dozen LEDs per primary color, ensuring both high luminance (up to 5000 cd/m2) and uniformity of the light field.

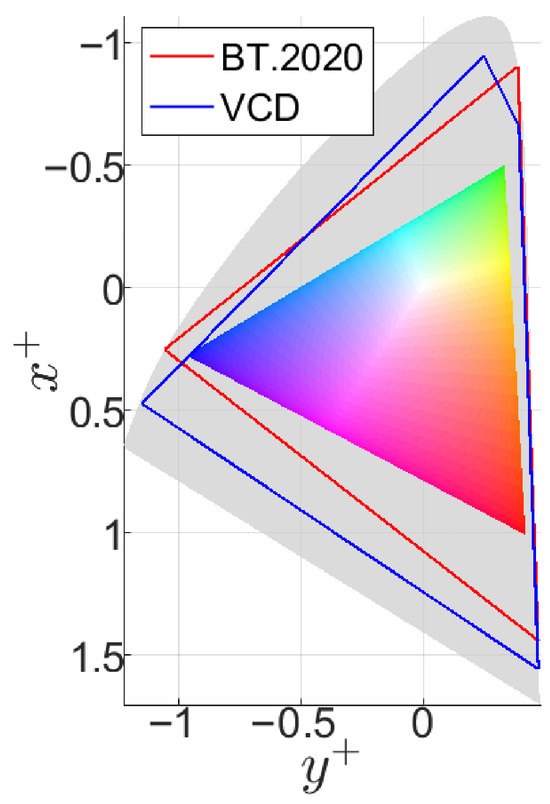

Our goal was to cover as much of the BT.2020 gamut as possible using the VCD. While the BT.2020 [1] gamut triangle is theoretically defined, its primaries, located on the spectral locus, can only be practically achieved using lasers. With LEDs, it is feasible to achieve two out of three primaries with adequate luminance levels, but achieving a high-saturation green LED with sufficient luminance remains a challenge. This issue, known as the “green gap” [27], arises from the physical limitations of current LED technology. To address this, we incorporated two green LEDs with different spectral power distributions, resulting in a total of four LEDs in the VCD. This configuration expanded the device’s color gamut (see Figure 1). Adding more LEDs would provide only marginal gains in gamut coverage while significantly complicating the device design.

Figure 1.

The VCD gamut (blue), BT.2020 (red), human visual gamut (gray), and sRGB gamut (colored) shown on the proLab chromaticity diagram.

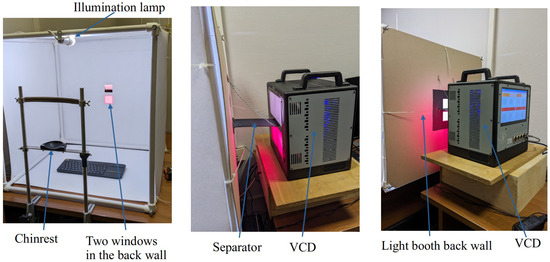

The full experimental setup included a light booth with two rear windows (for different scenarios), an illuminator, and the custom stimulus generator discussed above. Figure 2 shows views of the setup.

Figure 2.

Front and side views of the experiment setup.

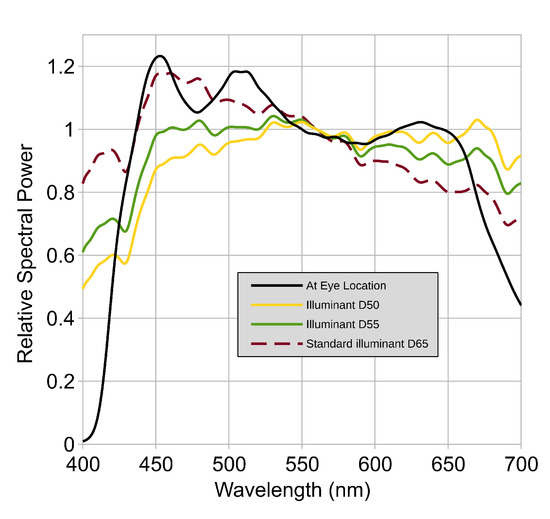

The light booth, a 70 × 70 × 70 cm cube with five white paper walls, was top-lit by a Remez 7W 5700K LED lamp (color rendering index: 98.5 and correlated color temperature: 5553 K), providing 100 cd/m2 at the center of the back wall and ensuring uniform lighting. Spectral measurements were conducted using an X-Rite I1Pro spectrophotometer (3.3 nm resolution) with ArgyllCMS software (version 2.1.2) (http://argyllcms.com/, accessed 8 November 2024). Figure 3 shows the spectral power distributions (SPDs) inside the booth alongside CIE D50, D55, and D65 illuminants.

Figure 3.

Spectral power distributions of the illumination inside the booth compared to CIE D50, D55, and D65 illuminants.

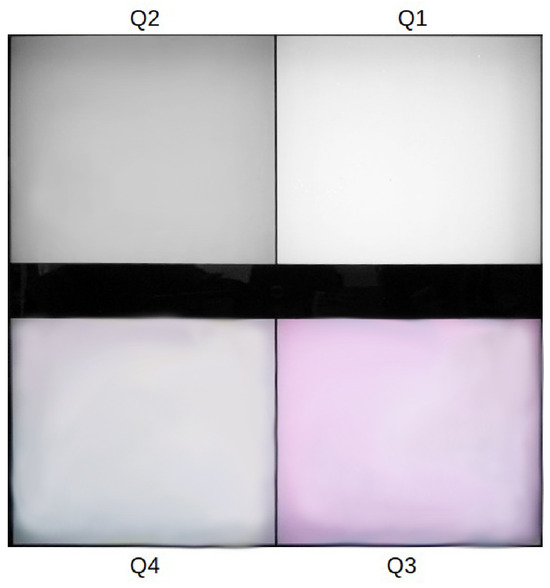

The VCD generator produced four independently controlled stimuli (10 × 10 cm quadrants). A 1 mm vertical separator divided the left and right stimuli, and a 2 cm gap separated the upper and lower pairs; see Figure 4. The VCD was positioned 17 cm from the booth’s back wall, and a black separator was placed between the viewing windows. Observers sat 80 cm from the windows, which yields an angular stimulus size of 3.58° for the 5 cm windows used in the SCD and LCD measurements.

Figure 4.

Stimuli setup for SCD experiments. Quadrants: Q1, upper right; Q2, upper left; Q3, lower right; and Q4, lower left.

For TCD measurements, a 28 mm round hole in the back wall provided a 2° angular stimulus size; see Figure 5.

Figure 5.

Interior of the light booth for TCD measurements.

For SCD and LCD measurements, two 5 × 5 cm rectangular windows separated by 2 cm were used. The chinrest was adjusted to block the observer’s view of gaps between the VCD quadrants.
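The stated angular sizes can be checked with the full visual angle subtended by an aperture of width s at distance D, 2·atan(s / 2D). The sketch below assumes, as our reading of the setup, that the 3.58° value refers to the 5 cm viewing windows and the 2° value to the 28 mm hole, both viewed from 80 cm; the function name is illustrative.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full angle (degrees) subtended by an aperture of width size_cm centred on the line of sight."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


if __name__ == "__main__":
    print(round(visual_angle_deg(5.0, 80.0), 2))  # 5 cm SCD/LCD window at 80 cm
    print(round(visual_angle_deg(2.8, 80.0), 2))  # 28 mm TCD hole at 80 cm
```

The window gives about 3.58° and the hole about 2.0°, matching the values quoted above to within rounding.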

2.1.1. VCD Stimulus Generator Characterization

The VCD stimulus generator produced four independently controlled stimuli, each in a separate quadrant (Figure 4). Each quadrant featured a board with four primary LEDs (see Section 2.1.2) controlled with 16-bit precision, with settings managed remotely from a computer.

Uniformity testing via photographs revealed a 15% luminance drop along the left edge of Q1 near Q2, consistent across quadrants and imperceptible to the human eye. This variation was negligible for SCD and LCD measurements (see Section 2.2.2) but became significant for TCD if colors were compared side by side. Therefore, we employed an alternate procedure for TCD, where colors were flashed alternately within the same quadrant (see Section 2.2.1).

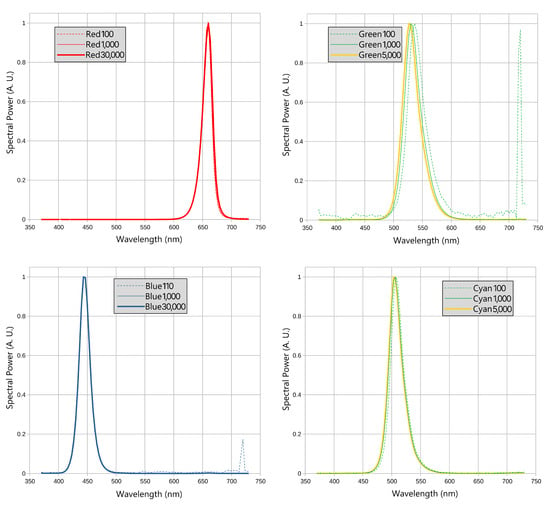

2.1.2. Primaries Spectra

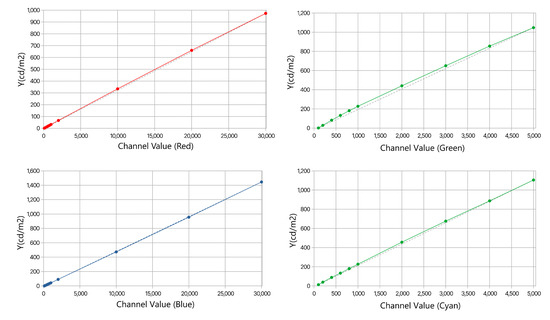

The VCD featured four primary channels, red (R), green (G), blue (B), and cyan (C), each controlled independently via a 16-bit bus, allowing channel values from 0 to 65,535. Spectral power distributions (SPDs) were measured for various channel values in Q1 (Figure 6): a setting of 30,000 yielded a luminance of about 1000 cd/m2 for R and 1400 cd/m2 for B, and a setting of 5000 yielded about 1000 cd/m2 for G and 1100 cd/m2 for C. Minor SPD shifts due to LED heating, most notable in the green channel, were consistent across quadrants and were accounted for in the VCD control software. Luminance linearity was assessed by measuring primary outputs at various channel values, and the measured dependence was approximated by a parabolic function to ensure accurate control (Figure 7).

Figure 6.

Spectral power distributions of Q1 primaries R, G, B, and C.

Figure 7.

Luminance vs. channel value for Q1 primaries (left to right, top to bottom: R, G, B, and C). Dots illustrate measurements; dashed lines represent ideal linearity; and solid lines, parabolic approximation.
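A minimal NumPy sketch of the parabolic luminance model and of its inversion, that is, finding the channel value that produces a requested luminance. The calibration points are synthetic placeholders rather than VCD measurements, the helper names are hypothetical, and the text does not state how the control software actually inverts the fit.

```python
import numpy as np


def fit_parabola(channel_values, luminances):
    """Least-squares fit of L(v) = a*v**2 + b*v + c; returns the coefficients (a, b, c)."""
    return np.polyfit(channel_values, luminances, deg=2)


def channel_for_luminance(coeffs, target, v_max=65535):
    """Smallest channel value in [0, v_max] whose fitted luminance equals target."""
    a, b, c = coeffs
    roots = np.roots([a, b, c - target])
    real = roots[np.isclose(roots.imag, 0.0)].real   # keep real roots only
    valid = real[(real >= 0.0) & (real <= v_max)]    # keep roots inside the 16-bit range
    if valid.size == 0:
        raise ValueError("target luminance is outside the fitted range")
    return float(valid.min())


if __name__ == "__main__":
    # Synthetic calibration data (placeholder values): a slightly super-linear response.
    v = np.linspace(0, 30000, 7)
    lum = 1e-7 * v**2 + 0.03 * v
    coeffs = fit_parabola(v, lum)
    v500 = channel_for_luminance(coeffs, 500.0)
    print(v500, np.polyval(coeffs, v500))
```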

To verify calibration, we compared the chromaticity coordinates of the target colors that the VCD intended to produce with the actual colors formed, which were measured using an X-Rite I1Pro spectrophotometer in its colorimeter mode. Approximately 40 different xyY values, evenly distributed across the chromaticity diagram at two luminance levels (300 and 1000 cd/m2), were tested. Chromaticity errors were calculated as the distance between the measured and target colors on the CIE 1964 xy chromaticity diagram for the 10° observer. The chromaticity error was found to depend on both the y and Y coordinates, ranging from 0.007 (for y < 0.5 at 1000 cd/m2) to 0.038 (for y ≥ 0.6 at 300 cd/m2), with a mean error of 0.017, which is considered small.
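The chromaticity error used in this check takes only a few lines to compute. The sketch assumes the spectrophotometer reports CIE 1964 (10°) XYZ tristimulus values, which are converted to xy before the Euclidean distance to the target is taken; the function names and sample numbers are illustrative.

```python
import math


def xy_from_XYZ(X, Y, Z):
    """Chromaticity coordinates (x, y) from tristimulus values."""
    s = X + Y + Z
    return X / s, Y / s


def chromaticity_error(target_xy, measured_xy):
    """Euclidean distance between target and measured points on the xy diagram."""
    return math.dist(target_xy, measured_xy)


def mean_chromaticity_error(targets_xy, measured_XYZ):
    """Mean error over paired lists of target xy values and measured XYZ triples."""
    errors = [chromaticity_error(t, xy_from_XYZ(*m)) for t, m in zip(targets_xy, measured_XYZ)]
    return sum(errors) / len(errors)
```

For example, a target at (0.300, 0.300) measured at (0.303, 0.304) gives an error of 0.005.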

2.2. Experimental Procedures

2.2.1. TCD Measurements

TCD measurements were conducted using the “strict substitution” technique [28,29]. In this method, a color center (reference color, Stimulus A) is displayed, and a test color (Stimulus B) is shifted in a chosen direction within the color space away from the color center. Each stimulus is shown for 1.5 s in a periodic, instantaneous alternation.

Observers are given unlimited time to detect any difference; if none is observed, they increase the shift of Stimulus B by pressing a button. The just noticeable difference (JND) is the smallest shift at which the observer perceives a distinction between the two stimuli.

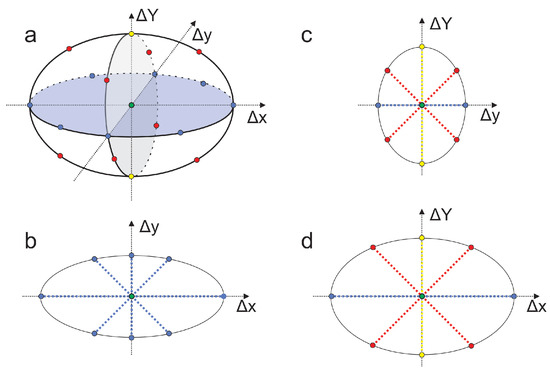

Stimulus B is shifted in discrete steps along specific directions in CIE xyY space. Sampling the threshold surface smoothly would require measurements in many directions, which was not feasible. Instead, we approximated the surface with 18 directions: 8 at fixed luminance (chromaticity-only shifts), 2 luminance-only shifts, and 8 combining chromaticity and luminance shifts (details in Figure 8).

Figure 8.

Arrangement of 18 measurement directions around the color center in xyY space. Blue points indicate chromaticity-only shifts ($\Delta Y = 0$), yellow points show luminance-only shifts ($\Delta x = \Delta y = 0$), and red points represent combined chromaticity and luminance shifts. (a) 3D view; (b) section showing chromaticity-only shifts; (c) section with the x-coordinate fixed ($\Delta x = 0$); (d) section with the y-coordinate fixed ($\Delta y = 0$).
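The text specifies 8 chromaticity-only directions, 2 luminance-only directions, and 8 combined ones, and the sections of Figure 8 suggest that the combined directions lie in the planes where x or y is held at the color center. The sketch below builds one such layout in a normalized (Δx, Δy, ΔY) space. The 45° azimuth spacing, the 45° elevation of the combined directions, and the per-axis step sizes are our assumptions, not values reported in the study.

```python
import math


def measurement_directions():
    """Hypothetical layout of the 18 unit directions in a normalised (dx, dy, dY) space."""
    dirs = []
    for k in range(8):  # 8 chromaticity-only directions, 45 degrees apart (dY = 0)
        t = math.radians(45 * k)
        dirs.append((math.cos(t), math.sin(t), 0.0))
    dirs += [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0)]  # 2 luminance-only directions
    s = 1.0 / math.sqrt(2.0)
    for sy in (1.0, -1.0):  # 8 combined directions lying in the dx = 0 and dy = 0 planes
        dirs += [(s, 0.0, sy * s), (-s, 0.0, sy * s), (0.0, s, sy * s), (0.0, -s, sy * s)]
    return dirs


def shifted_stimulus(center_xyY, direction, n_steps, step_xyY):
    """Stimulus B after n_steps discrete steps; step_xyY holds hypothetical per-axis step sizes."""
    return tuple(c + n_steps * st * d for c, d, st in zip(center_xyY, direction, step_xyY))
```

Normalizing each axis by its own step size keeps the directions comparable even though Δx, Δy, and ΔY have very different units.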

2.2.2. SCD and LCD Measurements

SCD and LCD were measured using the “gray-scale method”, which matches color differences to a gray reference scale [25,30,31,32]. Observers viewed two pairs of colors: a reference gray pair with a known difference (3 JND for SCD, 12 JND for LCD) and a test pair.

The stimulus arrangement is shown in Figure 4. The reference gray pair (Q1 and Q2) had a fixed difference, while both stimuli of the test pair (Q3 and Q4) initially displayed the color center. Observers incrementally adjusted Q3 along one of the 18 preset directions (Figure 8) until its perceived difference from Q4 matched that of the gray reference pair. Upon matching, the color center and the selected shift were recorded.

The reference pair had chromaticity coordinates corresponding to D65. One sample of the reference pair was fixed at GS-1, and the other was GS-3 for SCD or GS-5 for LCD measurements (see Table 1). To improve the observers’ brightness adaptation, the gray-scale luminances were increased 20-fold, giving a reference white luminance of 2000 cd/m2, as detailed in Table 1, where $L^*$ denotes the CIELAB lightness.
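Because the gray-scale samples are specified by CIELAB lightness, their absolute luminance follows from the inverse CIE lightness function scaled by the reference white. The sketch below shows this conversion for a 2000 cd/m2 white. The L* values in the usage check are arbitrary examples rather than the Table 1 entries, and the function names are hypothetical.

```python
KAPPA = 24389.0 / 27.0  # CIE constant, about 903.3


def relative_luminance_from_lightness(L_star):
    """Inverse of CIELAB lightness: returns Y / Y_n for a given L*."""
    if L_star > 8.0:  # 8 = kappa * epsilon, where the cube-root and linear segments meet
        return ((L_star + 16.0) / 116.0) ** 3
    return L_star / KAPPA


def gray_scale_luminance(L_star, white_luminance=2000.0):
    """Absolute luminance (cd/m2) of a gray sample with lightness L* under the given reference white."""
    return white_luminance * relative_luminance_from_lightness(L_star)
```

Raising the white from 100 to 2000 cd/m2 multiplies every sample luminance by 20 while leaving the L* values, and hence the gray-scale steps, unchanged.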

Table 1.

Gray-scale parameters from [25] and in our experiments.

2.2.3. Operating Conditions

Human color perception is strongly influenced by viewing conditions. For instance, the perceived magnitude of color differences depends on the chromatic adaptation of the human visual system to the dominant illumination [33,34]. Chromatic adaptation transforms (CATs) describe these dependencies [23,35] and are often integrated into UCSs [23,36]. Developing such models requires specialized datasets [37,38]. However, no single dataset can cover all possible scenarios, because this would require sampling a highly multidimensional parameter space. Consequently, individual studies typically focus on specific subsets of this space. In our work, we addressed a four-dimensional space comprising three color coordinates and the magnitude of the color difference. Datasets targeting other regions of the parameter space are still required to further improve these models.

We maintained consistent viewing conditions throughout the experiment. Table 2 summarizes potential biases introduced by the experimental setup and observers, as well as the measures taken to mitigate them. Despite these efforts, some residual biases may remain. They fall into two types: biases that are consistent across all measurements and biases that vary between measurements. To assess the impact of the first type, we verified that our measurements agree with previous datasets and evaluated their consistency, as described in Section 3.1. For the second type, we checked whether such biases degraded model performance: the CDM optimized on the training subset of our data achieved comparable accuracy on the validation subset, as detailed in Section 3.2. Together, these two independent checks indicate that our data collection was not substantially affected by large biases.

Table 2.

Potential biases and the measures taken to address them.

2.3. Collected Data

We collected comprehensive data on human color perception covering threshold, small, and large color differences (CDs) across 136 color centers and 2702 color pairs:

- TCDs were measured in 109 color centers (2046 pairs, 17 participants);

- SCDs were measured in 18 color centers (342 pairs, 9 participants);

- LCDs were measured in 17 color centers (314 pairs, 9 participants).

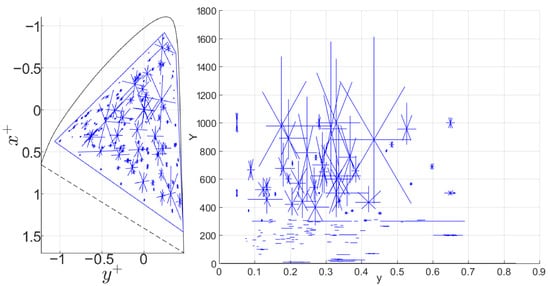

Each measurement captured three-dimensional data, forming ellipsoids in WCG and HDR regions, with color center luminances up to 1000 cd/m2 and a maximum luminance of 1611 cd/m2. Figure 9 shows the chromaticity and luminance distribution of the collected data. The convex hull of the collected color pairs forms a polygon covering 80% of the BT.2020 (or BT.2100 [1]) area on the proLab chromaticity diagram [36]. ProLab is a UCS based on a 3D projective transformation of CIE XYZ, with chromaticity coordinates analogous to CIE xy, namely $a/L$ and $b/L$, where $L$, $a$, and $b$ are coordinates in proLab.
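The coverage figure is the area of the convex hull of the measured colors, clipped to the gamut triangle, divided by the area of the triangle. The dependency-free sketch below computes this ratio. It assumes that the points and triangle vertices have already been converted to proLab chromaticity coordinates (the conversion is not shown). Andrew's monotone-chain hull and Sutherland-Hodgman clipping are our implementation choices.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Shoelace formula (absolute area)."""
    n = len(poly)
    s = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1] for i in range(n))
    return abs(s) / 2.0


def clip_to_convex(subject, clip_poly):
    """Sutherland-Hodgman clipping of `subject` by a convex, counter-clockwise `clip_poly`."""
    output = list(subject)
    for i in range(len(clip_poly)):
        a, b = clip_poly[i], clip_poly[(i + 1) % len(clip_poly)]
        inp, output = output, []
        if not inp:
            break
        s = inp[-1]
        for e in inp:
            e_in, s_in = cross(a, b, e) >= 0, cross(a, b, s) >= 0
            if e_in != s_in:  # edge s->e crosses the clip line: add the intersection point
                d1 = (e[0] - s[0], e[1] - s[1])
                d2 = (b[0] - a[0], b[1] - a[1])
                t = ((a[0] - s[0]) * d2[1] - (a[1] - s[1]) * d2[0]) / (d1[0] * d2[1] - d1[1] * d2[0])
                output.append((s[0] + t * d1[0], s[1] + t * d1[1]))
            if e_in:
                output.append(e)
            s = e
    return output


def gamut_coverage(points, gamut_triangle):
    """Fraction of the gamut triangle's area covered by the convex hull of `points`."""
    tri = list(gamut_triangle)
    if cross(*tri) < 0:  # enforce counter-clockwise orientation
        tri.reverse()
    hull = convex_hull(points)
    if len(hull) < 3:
        return 0.0
    return polygon_area(clip_to_convex(hull, tri)) / polygon_area(tri)
```

Clipping before taking the area matters because measured colors outside the reference triangle would otherwise inflate the coverage above what the triangle can contain.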

Figure 9.

Collected data shown on the proLab chromaticity diagram (left) and corresponding luminance Y in cd/m2 (right). The centers of the blue segment intersections indicate the centers of the measured ellipses, while the segments themselves represent the measured color differences.

The color centers were selected using two approaches. First, we measured color differences at the color centers recommended by the CIE [39] to validate our setup against previously published data. Second, we randomly selected color centers from a uniform distribution in proLab at luminance levels from 0 to 1000 cd/m2, excluding colors inside the sRGB gamut at luminances up to 300 cd/m2. Consequently, in the standard luminance range we focused on highly saturated colors, whereas in the HDR range we explored the full saturation range.
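The second selection strategy is a form of rejection sampling. The sketch below illustrates it under two simplifications of our own. First, it samples uniformly inside the BT.2020 triangle in CIE 1931 xy, standing in for proLab-uniform sampling within the VCD gamut. Second, it treats "inside sRGB up to 300 cd/m2" as a chromaticity test combined with Y ≤ 300 cd/m2. The primaries are the standard BT.2020 and sRGB chromaticities; the function names are hypothetical.

```python
import random

BT2020_XY = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]
SRGB_XY = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]


def _cross(o, a, b):
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_triangle(p, tri):
    """True if p lies inside or on the boundary of triangle tri (either orientation)."""
    a, b, c = tri
    d1, d2, d3 = _cross(a, b, p), _cross(b, c, p), _cross(c, a, p)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def sample_in_triangle(tri, rng):
    """Uniform sample inside a triangle by folding the unit square."""
    (ax, ay), (bx, by), (cx, cy) = tri
    r1, r2 = rng.random(), rng.random()
    if r1 + r2 > 1.0:
        r1, r2 = 1.0 - r1, 1.0 - r2
    return ax + r1 * (bx - ax) + r2 * (cx - ax), ay + r1 * (by - ay) + r2 * (cy - ay)


def sample_color_centers(n, y_max=1000.0, sdr_limit=300.0, seed=0):
    """Draw n (x, y, Y) centers, rejecting sRGB chromaticities at Y <= sdr_limit."""
    rng = random.Random(seed)
    centers = []
    while len(centers) < n:
        x, y = sample_in_triangle(BT2020_XY, rng)
        Y = rng.uniform(0.0, y_max)
        if in_triangle((x, y), SRGB_XY) and Y <= sdr_limit:
            continue  # reject: region already covered by existing sRGB/SDR datasets
        centers.append((x, y, Y))
    return centers
```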

CDs were measured in 18 directions in space, including 8 in the chromaticity plane (Figure 8). In each session, the following steps were taken:

- A total of 8 pairs were measured with $\Delta x$ and $\Delta y$ shifts (fixed $Y$);

- If the observer was not overly fatigued, we measured 2 pairs with positive/negative $\Delta Y$ (fixed $x$, $y$) and 8 pairs with combined $\Delta x$, $\Delta y$, and $\Delta Y$ shifts.

A total of 231 measurement sessions were conducted, each with an observer focusing on one color center (data in certain directions were occasionally omitted if the observer reached the device’s gamut limits):

- A total of 8 directions were measured in 150 sessions (19 had partial data due to gamut limits);

- A total of 18 directions were measured in 81 sessions (3 had partial data due to gamut limits).

The order of color centers and measurement directions was randomized per observer. Each color pair within a direction was measured four times to minimize variability (following CIE recommendations [40]), using medians for analysis.

We recruited 22 observers with normal or corrected vision, who passed the Color Assessment and Diagnosis test within age-appropriate thresholds. All participants received instructions and provided informed consent.

2.4. Available Datasets

The widely used COMBVD dataset [41] includes small CDs (under 5 CIELAB units) and consists of four sub-datasets: BFD-P [12] with 2779 pairs, Witt [13] with 418 pairs, Leeds [14] with 307 pairs, and RIT-DuPont [15] with 312 pairs. We used this dataset to validate our own data and for CDM benchmarking. Additionally, we used the Size-dependent Color Threshold (SDCTh) dataset [42], which includes 80 pairs across 10 color centers, each measured by 10–13 observers to obtain 8 median pairs per center for stimuli with a radius of 72 arcmin. Both datasets cover the sRGB gamut and are limited to a luminance of 100 cd/m2 (standard dynamic range, SDR). HDR and WCG datasets exist [17,25] but are not publicly available and were therefore not used here.

Table 3 summarizes the viewing conditions for each dataset (illuminant, adapting luminance $L_A$, luminance factor of the neutral background $Y_b$, and surround). Our booth lighting was set to 100 cd/m2, i.e., $L_W = 100$ cd/m2. Since stimuli were displayed against a white background, $Y_b = 100$, resulting in $L_A = L_W Y_b / 100 = 100$ cd/m2.
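To make these viewing conditions concrete, the sketch below evaluates the luminance-dependent viewing parameters that CIECAM02 and CAM16 derive from $L_A$ and $Y_b$. It assumes that $L_A = L_W Y_b / Y_w$ with $Y_w = 100$, applied to a 100 cd/m2 white and a white background. Surround-dependent factors are omitted because they do not enter these quantities, and the function names are hypothetical.

```python
def adapting_luminance(L_W, Y_b, Y_w=100.0):
    """L_A = L_W * Y_b / Y_w (one common convention for the adapting field luminance)."""
    return L_W * Y_b / Y_w


def cam16_viewing_parameters(L_A, Y_b, Y_w=100.0):
    """Luminance-level adaptation and background parameters shared by CIECAM02 and CAM16."""
    k = 1.0 / (5.0 * L_A + 1.0)
    F_L = 0.2 * k**4 * (5.0 * L_A) + 0.1 * (1.0 - k**4) ** 2 * (5.0 * L_A) ** (1.0 / 3.0)
    n = Y_b / Y_w                 # background induction factor
    z = 1.48 + n**0.5             # base exponential nonlinearity
    N_bb = 0.725 * n**-0.2        # brightness and chromatic induction factors (N_bb = N_cb)
    return {"F_L": F_L, "n": n, "z": z, "N_bb": N_bb, "N_cb": N_bb}


if __name__ == "__main__":
    L_A = adapting_luminance(100.0, 100.0)  # booth white 100 cd/m2, white background
    print(L_A, cam16_viewing_parameters(L_A, 100.0))
```

Under these assumptions, F_L is about 0.794, z = 2.48, and N_bb = 0.725.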

Table 3.

Viewing condition parameters for experimental CD datasets.

2.5. Quality Measures of Color Difference Models

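The similarity between predicted CDs and reference measurements from human studies is commonly evaluated using the STRESS measure [43,44]. Let $\Delta V = (\Delta V_1, \ldots, \Delta V_n)$ represent the vector of experimental CDs for $n$ color pairs from a psychophysical dataset, and let $\Delta E = (\Delta E_1, \ldots, \Delta E_n)$ represent the vector of CDs for the same color pairs computed using a given CDM. The STRESS metric, $\mathrm{STRESS}(\Delta E, \Delta V)$, is defined as

$$\mathrm{STRESS}(\Delta E, \Delta V) = \sqrt{\frac{\sum_{i=1}^{n} \left(F \Delta E_i - \Delta V_i\right)^2}{\sum_{i=1}^{n} \Delta V_i^2}}, \qquad (1)$$

where $F$ is a scaling factor used for normalization:

$$F = \frac{\sum_{i=1}^{n} \Delta E_i \, \Delta V_i}{\sum_{i=1}^{n} \Delta E_i^2}. \qquad (2)$$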

STRESS values range from 0 (perfect agreement) to 1 (maximum discrepancy). For context, the state-of-the-art CDM, CIEDE2000, achieves a “good” STRESS value of 0.292 on the COMBVD dataset, on which it was trained. However, this value depends on the dataset’s internal consistency and noise. The best STRESS attainable on the new dataset, given its consistency, is estimated in Section 3.1. In contrast, the perceptually nonuniform CIE XYZ color space achieves a STRESS value of 0.692 on the same dataset, providing a reference for poor performance.
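A minimal NumPy sketch of STRESS. It assumes the form in which the computed CDs ΔE are rescaled by F = Σ ΔE_i ΔV_i / Σ ΔE_i² before being compared with the experimental CDs ΔV. The function names are illustrative.

```python
import numpy as np


def scaling_factor(dE, dV):
    """F mapping model CDs onto the experimental scale (least squares through the origin)."""
    dE, dV = np.asarray(dE, float), np.asarray(dV, float)
    return float(dE @ dV / (dE @ dE))


def stress(dE, dV):
    """STRESS between model CDs dE and experimental CDs dV; 0 = perfect, 1 = worst."""
    dE, dV = np.asarray(dE, float), np.asarray(dV, float)
    F = scaling_factor(dE, dV)
    return float(np.sqrt(np.sum((F * dE - dV) ** 2) / np.sum(dV**2)))
```

Because F is the least-squares scale, STRESS equals sqrt(1 − cos²θ), where θ is the angle between the vectors ΔE and ΔV. This is why the measure is bounded by 0 and 1 and unaffected by a global rescaling of the model's units.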

Various modifications have been proposed for handling multiple datasets [23,45]. Recently, a modified STRESS version, STRESSgroup, was introduced to ensure that all color pairs are accounted for with equal importance and to adjust for CD scale variations across datasets [46].

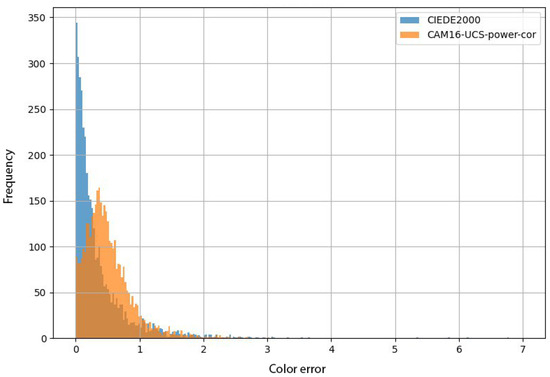

While STRESS evaluates the average error, it is equally important to assess significant errors, because a CDM serves as a measurement tool for evaluating other algorithms, such as those used in image processing. To address this, we propose analyzing the error distribution and focusing on errors exceeding a threshold $\varepsilon$, which we define as unacceptable. Errors below 1 JND are imperceptible, so a threshold $\varepsilon < 1$ JND would be unreasonable. We selected $\varepsilon = 2$ JND, as errors above 2 JND form the heavy tail of the error distribution for current state-of-the-art CDMs (see Figure 10).

Figure 10.

Histogram of errors for the current state-of-the-art CDMs (CIEDE2000 and CAM16-UCS-PC) on the COMBVD dataset. The x-axis represents the magnitude of the CDM error, while the y-axis indicates the number of these errors.

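To quantify significant errors, we propose calculating the fraction of errors exceeding 2 JND:

$$\mathrm{FEM}_{2\mathrm{JND}}(\Delta E, \Delta V) = \frac{1}{n} \left| \left\{ i : \left| F \Delta E_i - \Delta V_i \right| > 2~\mathrm{JND} \right\} \right|, \qquad (3)$$

where $F$ is defined as in Equation (2).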
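A matching sketch of FEM2JND, the fraction of color pairs whose rescaled error exceeds 2 JND. It assumes that the experimental CDs ΔV are expressed in JND units, so the threshold is 2.0, and it reuses the same scaling factor F as STRESS. The function name is hypothetical.

```python
import numpy as np


def fem_2jnd(dE, dV, jnd=1.0, k=2.0):
    """Fraction of pairs with |F*dE_i - dV_i| > k * jnd, with F as in STRESS."""
    dE, dV = np.asarray(dE, float), np.asarray(dV, float)
    F = dE @ dV / (dE @ dE)
    errors = np.abs(F * dE - dV)
    return float(np.mean(errors > k * jnd))
```

Unlike STRESS, this count ignores how large the outliers are and reports only how often the model errs perceptibly beyond the chosen tolerance.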

3. Results

3.1. Comparison of the Obtained and Previously Published Data

Establishing the consistency of our experimental data with previously published data is crucial for validating our dataset. We compared our data with the COMBVD dataset [41] (see Section 2.4), which includes SCDs in the sRGB and SDR range. We focused on sub-datasets obtained under the D65 illuminant, excluding the BFD-P sub-dataset derived under the M and C illuminants.

Our consistency evaluation method leverages the fact that human color perception can be described by a continuously differentiable function without non-physiological discontinuities. This concept implies that, within a small region of color space, perceived color differences can be approximated by a linear model. By constructing a locally linear model from data in a small color space region, we can evaluate the consistency of our data with previously published datasets.

Let us consider a dataset of color pairs and their corresponding CDs. The i-th color pair in the dataset is represented as

To define the distance between two color pairs, we first establish distances for “codirectional” and “opposite” pairs in terms of the color ordering we have chosen:

where d is a distance measure, with CIEDE2000 chosen as our metric.

The distance between color pairs is defined as

It should be noted that this distance measure may not satisfy all properties of a metric. To identify regions of high data density in color space, we constructed a complete weighted graph from the set of color pairs in the datasets, calculating all possible distances between them. We then retained only the edges with weights below a certain threshold value .
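The text does not spell out how the codirectional and opposite distances combine the endpoint distances. One plausible reading, used here purely for illustration, sums the two endpoint distances for each ordering and takes the smaller sum as the pair distance. This makes the distance independent of the order in which each pair's colors are listed. The sketch uses Euclidean distance as a placeholder for CIEDE2000 and then keeps only edges lighter than a threshold tau. The combination rule and the function names are assumptions.

```python
import math
from itertools import combinations


def euclidean(c1, c2):
    """Placeholder colour-difference function; CIEDE2000 would be passed in its place."""
    return math.dist(c1, c2)


def pair_distance(p, q, d=euclidean):
    """Assumed rule: smaller of the codirectional and opposite endpoint-distance sums."""
    (p1, p2), (q1, q2) = p, q
    codirectional = d(p1, q1) + d(p2, q2)  # first-to-first, second-to-second
    opposite = d(p1, q2) + d(p2, q1)       # order of one pair swapped
    return min(codirectional, opposite)


def threshold_graph(pairs, tau, d=euclidean):
    """Adjacency sets of the graph keeping only edges with weight below tau."""
    adjacency = {i: set() for i in range(len(pairs))}
    for i, j in combinations(range(len(pairs)), 2):
        if pair_distance(pairs[i], pairs[j], d) < tau:
            adjacency[i].add(j)
            adjacency[j].add(i)
    return adjacency
```

Taking a minimum over two orderings is also one way the measure can violate the triangle inequality, consistent with the remark that it may not be a true metric.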

To identify clusters of closely positioned color pairs containing pairs, we ranked the graph’s vertices in descending order based on their degree, i.e., the number of edges connecting each vertex to its neighbors.

The following algorithm was employed for the extraction of clusters from the graph :

- Initialize .

- If the degree of the i-th vertex , form a cluster of size consisting of the i-th vertex and its connected vertices.

- Remove the edges connecting each vertex in cluster to other vertices within the same cluster, and update .

- Repeat steps 2–4 for .
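The extraction steps above can be sketched as a greedy pass over the adjacency sets of the thresholded graph. Some details are not fully specified in the text, so this sketch assumes the following:

- vertices are ranked once by initial degree;
- a cluster is formed from the current vertex plus k − 1 of its remaining neighbors, taken in order of highest degree first;
- a neighbor is skipped if it already shares a cluster with a chosen member, which enforces that two color pairs share at most one cluster.

The function name and tie-breaking rules are hypothetical.

```python
from itertools import combinations


def extract_clusters(adjacency, k):
    """Greedy clusters of size k from a symmetric adjacency dict {vertex: set(neighbours)}."""
    adj = {v: set(nb) for v, nb in adjacency.items()}             # work on a copy of the graph
    order = sorted(adj, key=lambda v: len(adj[v]), reverse=True)  # rank by initial degree
    together = set()                                              # vertex pairs already sharing a cluster
    clusters = []
    for v in order:
        if len(adj[v]) < k - 1:                                   # not enough remaining neighbours
            continue
        members = [v]
        for u in sorted(adj[v], key=lambda u: len(adj[u]), reverse=True):
            if all(frozenset((u, m)) not in together for m in members):
                members.append(u)
                if len(members) == k:
                    break
        if len(members) < k:
            continue
        clusters.append(tuple(members))
        for a, b in combinations(members, 2):                     # remove intra-cluster edges
            together.add(frozenset((a, b)))
            adj[a].discard(b)
            adj[b].discard(a)
    return clusters
```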

Within this cluster detection framework, a color pair can belong to multiple clusters, but any two color pairs share at most one cluster. The sub-datasets within each cluster may vary in size.

To estimate internal consistency, we set and ; then, we optimized the linear model on each cluster. Since we assumed that the units of perceptual distance may differ across datasets by a proportionality coefficient, we employed STRESSgroup [46] as the loss function.

First, we calculated the internal consistency of the COMBVD-D65 dataset as a reference. The results of the linear model optimization are presented in Table 4 for all five clusters we detected. The dataset consistency is represented by the highest value, .

Table 4.

Results of linear model optimization for COMBVD-D65 clusters.

Next, we evaluated the consistency between our data and the COMBVD-D65 dataset. The results of the linear model optimization for each detected cluster are shown in Table 5. The highest consistency value for our data with COMBVD-D65 is . While this is slightly worse than the internal consistency of COMBVD-D65, it remains comparable. Thus, we conclude that our data are consistent with COMBVD-D65, at least regarding the CD scale factor. We will address the issue of data consistency on an absolute scale in Section 3.3.

Table 5.

Consistency scores of COMBVD-D65 sub-datasets and our data. For each detected cluster, the number of points from each dataset (size) and the metric for the correspondence of cluster points to the constructed local linear model are provided.

3.2. Fitting the Color Difference Model to the Data: Optimally Fitted Model

We propose a new flexible parametric model that implements a semi-metric in color space, together with an analytical method for finding its optimal parameters.

Our approach built on the CAM16-UCS model with power law correction:

We enhanced this model with an additional trainable factor for improved accuracy. The model is defined in the following linear space:

where , and are CAM16-UCS coordinates of a color pair, and . Here,

where is a basis function dictionary listed in Appendix A, and is the vector of CDM parameters.

This model is a semi-metric, as it does not satisfy the triangle inequality, similar to the “gold standard” CIEDE2000 formula.

To train the model, we optimized the parameter vector to minimize STRESS on the dataset:

where

are vectors of experimental distances and model base matrix, respectively, and n represents the number of color pairs in the dataset.

This formulation results in a quadratic fractional programming problem:

where

This problem can be solved analytically, avoiding numerical optimization methods. To solve (13) analytically, we first regularize matrices A and B:

Using the Cholesky decomposition, we find an upper triangular matrix R such that . The matrix V of eigenvectors of is

The optimal solution corresponds to one of the columns of :

The optimization of CDM parameters is typically performed using numerical methods, which are computationally intensive and do not guarantee the discovery of a global minimum for non-convex objectives like STRESS. In contrast, the proposed analytical optimization approach ensures that the global minimum of STRESS is achieved while maintaining low computational complexity (approximately 0.12 s on an Intel Core i7-8550U).
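The following example illustrates why STRESS minimization admits a closed-form solution. Consider a model whose predictions are linear in the parameters, ΔE = Φw, where Φ is the n × m matrix of basis-function values. Substituting the optimal F gives STRESS² = 1 − (wᵀAw) / (‖ΔV‖² wᵀBw), with A = Φᵀ ΔV ΔVᵀ Φ and B = ΦᵀΦ. Minimizing STRESS is therefore equivalent to maximizing a generalized Rayleigh quotient. The NumPy sketch below follows the regularize, Cholesky, and eigendecomposition steps under this assumption. It does not reproduce the paper's exact matrices, and the regularization constant is an arbitrary choice.

```python
import numpy as np


def stress(dE, dV):
    """STRESS with the optimal scaling factor F applied to the model CDs."""
    F = dE @ dV / (dE @ dE)
    return float(np.sqrt(np.sum((F * dE - dV) ** 2) / np.sum(dV**2)))


def fit_min_stress(Phi, dV, eps=1e-10):
    """Parameters w minimising STRESS(Phi @ w, dV) via a generalised eigenproblem."""
    a = Phi.T @ dV
    m = Phi.shape[1]
    A = np.outer(a, a) + eps * np.eye(m)   # numerator:   (w^T a)^2 = w^T A w
    B = Phi.T @ Phi + eps * np.eye(m)      # denominator: ||Phi w||^2 = w^T B w
    R = np.linalg.cholesky(B).T            # upper triangular R with B = R^T R
    R_inv = np.linalg.inv(R)
    C = R_inv.T @ A @ R_inv                # reduced symmetric problem C v = lambda v
    _, V = np.linalg.eigh(C)
    candidates = R_inv @ V                 # each column w = R^{-1} v is a candidate solution
    scores = [stress(Phi @ w, dV) for w in candidates.T]
    return candidates[:, int(np.argmin(scores))]
```

Because A has rank one in this linear setting, the maximizer is proportional to B⁻¹ΦᵀΔV, the ordinary least-squares solution. Comparing the two solutions is a convenient sanity check.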

The model was trained on a combined dataset comprising COMBVD, SDCTh, and our data, split into 75% for training and 25% for testing. Using the analytical optimization Equations (15)–(17), the model achieved a STRESS value of 0.390 on the training set and 0.393 on the test set. The close agreement between these values indicates that the model is not overfitted.

3.3. Benchmarking Color Difference Models

We used the optimally fitted model to analyze our dataset. Given the similar STRESS values on the previously published datasets and on ours (see Table 6 and Table 7), we conclude that the datasets are consistent in terms of absolute CD scale. To evaluate the statistical uncertainty of the obtained STRESS and FEM2JND values, we constructed confidence intervals at a 95% confidence level, corresponding to 1.96 standard deviations. For STRESS, bootstrapping [47] was applied, while the Wald confidence interval was used for FEM2JND [48].
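A sketch of the two interval constructions. As the text suggests, it assumes a symmetric interval of ±1.96 standard deviations around the point estimate, with the standard deviation of STRESS estimated from bootstrap resamples of color pairs. The number of replicates and the seed are arbitrary choices, and the inputs must be NumPy arrays so that they can be indexed by the resampled indices.

```python
import numpy as np

Z95 = 1.96  # two-sided 95% normal quantile, as in the text


def stress(dE, dV):
    """STRESS with the optimal scaling factor F applied to the model CDs."""
    F = dE @ dV / (dE @ dE)
    return float(np.sqrt(np.sum((F * dE - dV) ** 2) / np.sum(dV**2)))


def bootstrap_stress_ci(dE, dV, n_boot=1000, seed=0):
    """Estimate +/- 1.96 bootstrap standard deviations of STRESS."""
    rng = np.random.default_rng(seed)
    n = len(dV)
    estimate = stress(dE, dV)
    samples = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample colour pairs with replacement
        samples.append(stress(dE[idx], dV[idx]))
    sd = float(np.std(samples, ddof=1))
    return estimate - Z95 * sd, estimate + Z95 * sd


def wald_ci(fraction, n):
    """Wald interval for a binomial proportion such as FEM2JND over n pairs."""
    half = Z95 * np.sqrt(fraction * (1.0 - fraction) / n)
    return fraction - half, fraction + half
```

The Wald interval degenerates to zero width when the observed fraction is exactly 0 or 1, so it is most informative for intermediate error fractions.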

Table 6.

Performance of CDMs on previously published datasets (lower values indicate better consistency).

Table 7.

Performance of CDMs on the dataset we collected (lower values indicate better consistency).

We benchmarked the following CDMs on psychophysical datasets. CAM16-UCS, introduced in 2016 [23], is the current state-of-the-art UCS [24]. We also evaluated CAM16-UCS with power law correction (see Equation (8)), which accounts for the non-geodesic properties of human color space. Other UCS models designed for WCG and HDR include ICaCb [8], ICTCp [9], and Jzazbz [10]. In addition, we assessed Oklab [49], which is promising for LCD, and proLab [36], which offers good geometric properties. Finally, we considered the CIEDE2000 formula [41], the state of the art for SCD.

The results confirmed that CAM16-UCS with power correction (CAM16-UCS-PC) is the most versatile model, demonstrating the best performance on the combined dataset across both the sRGB/SDR and HDR/WCG domains (see Table 8, where lower STRESS values indicate better consistency). However, CAM16-UCS-PC is not without limitations. Its accuracy could potentially improve by at least 1.3 times, as suggested by the optimally fitted model. For the combined dataset, the standard deviation of STRESS is 0.013 for CAM16-UCS-PC and 0.041 for the optimally fitted model, so the difference between the mean values exceeds the standard deviation. Moreover, despite an acceptable average error, CAM16-UCS-PC shows a notable proportion of significant errors, measured as the fraction of errors above 2 JND (Table 9). A comparison between CAM16-UCS and CAM16-UCS-PC reveals that the power correction increases the number of significant errors by a factor of 1.8. Thus, although CAM16-UCS-PC performs well on average, it still produces a considerable number of significant errors.

Table 8.

Performance of CDMs on the combined dataset: previously published and ours (lower values indicate better consistency).

Table 9.

Fraction of significant errors (FEM2JND) of CDMs on the combined dataset: previously published and ours (lower values indicate fewer significant errors).

A universal model is yet to be developed, but CAM16-UCS is a promising foundation, with power correction being a key area for improvement in CAM16-UCS-PC.

4. Conclusions

We collected a new experimental dataset on perceived color differences spanning three scales (threshold, small, and large). The dataset covers a wide color gamut (80% of BT.2020) at high luminance levels (up to 1611 cd/m2) using a custom stimulus generator. Measurements were conducted with 22 observers, yielding color difference data for 2605 color pairs.

To ensure dataset consistency, we introduced a method leveraging the continuous nature of human color perception. This involved fitting linear models to small psychophysical data clusters and evaluating them using the STRESS metric. Our analysis confirmed consistency between our dataset and established datasets.

We further improved on the power-corrected CAM16-UCS (CAM16-UCS-PC) model by introducing a novel, optimally fitted color difference model. This refinement employed an analytical optimization approach that efficiently minimizes non-convex objectives, such as STRESS, without the risk of converging to local minima. Our results demonstrate that existing models, including CAM16-UCS-PC, have not yet reached the theoretical accuracy limit, as evidenced by the performance of the optimally fitted model on the combined dataset of new and established data.

Benchmarking across multiple datasets revealed that CAM16-UCS-PC is currently the most versatile model, achieving the best STRESS performance for sRGB, WCG, SDR, and HDR domains. However, the model is not without limitations. In traditional domains (SDR and sRGB), CAM16-UCS-PC exhibits fewer significant errors than CAM16-UCS. Conversely, in HDR-WCG domains, the number of significant errors for CAM16-UCS-PC is 1.8 times higher than for CAM16-UCS without power correction.

These effects may be attributable to the fact that neither model was trained on HDR or WCG data. Although CAM16-UCS-PC introduces power correction to account for the non-geodesic nature of color space, it generalizes worse than the base CAM16-UCS to these new data, producing more significant errors.

Our findings suggest that incorporating our dataset into the development of UCSs could improve future models. However, the root causes of these discrepancies remain unclear and require further investigation. Additional measurements, particularly in regions with the greatest errors, are necessary to improve model accuracy and better understand the observed effects.

Author Contributions

Conceptualization, I.N.; methodology, I.N.; software, V.K., M.K., and A.S.; validation, I.K. and O.B.; formal analysis, V.K. and O.B.; investigation, S.G.; resources, I.N.; data curation, S.G.; writing—original draft preparation, M.K. and A.S.; writing—review and editing, O.B. and A.S.; visualization, I.K.; supervision, I.N.; project administration, S.G. and M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by project FA2019101041 at Saint Petersburg State University.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Ethics Board of the Institute for Information Transmission Problems of the Russian Academy of Sciences (Ethics Committee Conclusion dated 13 March 2023; approval code EC-2023/5).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author, because the data are shared by several organizations and each request for their transfer is decided individually.

Acknowledgments

The authors thank Alexander Belokopytov and Pavel Maksimov for their support with the experimental work, and Dmitry Nikolaev for his valuable discussions and insightful comments on this study.

Conflicts of Interest

Author Olga Basova was employed by the company Smart Engines Service LLC. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CDM | Color difference model |
| CD | Color difference |
| UCS | Uniform color space |
| WCG | Wide color gamut |
| HDR | High dynamic range |
| TCD | Threshold color difference |
| JND | Just noticeable difference |
| SCD | Small color difference |
| LCD | Large color difference |
| LED | Light emitting diode |
| SPD | Spectral power distribution |
| GS | Gray scale |
| SDR | Standard dynamic range |

Appendix A. Basis Functions and Their Values for Optimally Fitted

The values of the basis functions are shown in Table A1.

Table A1.

Values of the basis functions.


| Value | Value | Value | Value |
|---|---|---|---|
| −0.0029 | 2.976 × 10⁻⁶ | −0.0002 | −0.0166 |
| −0.0057 | −1.8581 × | 0.0117 | −2.5185 × |
| −0.004 | 3.9624 × | 4.8434 × | 0.028 |
| 0.0009 | −4.9423 × | 2.406 × | 3.8023 × |
| 0.0023 | 3.0614 × | 3.3194 × | −7.4377 × |
| 0.0066 | −8.5736 × | 0.007 | −0.0003 |
| −0.0008 | 2.8571 × | 5.5821 × | −0.0289 |
| −7.1602 × | −1.7522 × | −0.0036 | 3.0788 × |
| −0.0034 | 5.5611 × | 4.657 × | −0.0304 |
| 2.2075 × | 8.8041 × | −0.0001 | −0.0002 |
| −0.0035 | 0.0096 | −0.0002 | 4.6816 × |
| −3.9465 × | −4.5735 × | 0.002 | −1.1926 × |
| −0.0084 | −0.0034 | 4.3452 × | 0.0207 |
| −1.881 × | −8.2306 × | 0.0242 | 3.1525 × |
| −4.2926 × | 1.5887 × | 1.9099 × | −0.0113 |
| 5.8133 × | −4.6291 × | −0.0002 | −8.7425 × |
| −1.7872 × | 0.0017 | 6.9818 × | 1.7498 × |
| 0.0006 | −3.2055 × | −0.003 | 6.1974 × |
| −3.8521 × | −0.0089 | 0.0002 | 0.0016 |
| 4.0407 × | 4.5402 × | −0.0001 | −1.3631 × |
| −6.9548 × | 9.0806 × | −0.0002 | 0.0055 |
| 4.3857 × | 3.8185 × | −2.5034 × | 4.3339 × |
| −5.5443 × | 0.0106 | 9.7498 × | 0.0001 |

Appendix B. Formula for

In this section, we provide the formulas for the uniform color space [10].

where the variables X, Y, and Z represent the input CIE XYZ tristimulus values. and are pre-adjusted values of X and Y, respectively, used to eliminate hue deviations before optimizing the model for improved perceptual uniformity. , and S denote the cone primary responses, while , , and are dynamically transformed cone responses. The constants are defined as follows: , , , , , , , , and 1.6295499532821566 × .
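The equations and most constant values of this appendix did not survive in the text above. As a sketch, assuming the section describes Jzazbz exactly as published by Safdar et al. [10] (the surviving constant 1.6295499532821566 matches the mantissa of that model's d₀), the forward transform and its color difference ΔE_z can be written as follows. The constants and matrices below are the published Jzazbz values, not values read from this manuscript, so they should be checked against [10] before use; function names are illustrative.

```python
import math
from typing import Sequence, Tuple

# Published Jzazbz constants (Safdar et al. 2017); assumed to be the values
# elided from this appendix.
B, G = 1.15, 0.66
C1, C2, C3 = 3424 / 2**12, 2413 / 2**7, 2392 / 2**7
N, P = 2610 / 2**14, 1.7 * 2523 / 2**5
D, D0 = -0.56, 1.6295499532821566e-11

XYZ_TO_LMS = (
    (0.41478972, 0.579999, 0.0146480),
    (-0.2015100, 1.120649, 0.0531008),
    (-0.0166008, 0.264800, 0.6684799),
)
LMS_TO_IAB = (
    (0.5, 0.5, 0.0),
    (3.524000, -4.066708, 0.542708),
    (0.199076, 1.096799, -1.295875),
)


def _mat_vec(m, v) -> Tuple[float, float, float]:
    return tuple(sum(mij * vj for mij, vj in zip(row, v)) for row in m)


def _pq(x: float) -> float:
    # PQ-style compression of an absolute cone signal (cd/m^2 scale).
    # Negative inputs are clipped to 0 (an assumption of this sketch).
    xn = (max(x, 0.0) / 10000.0) ** N
    return ((C1 + C2 * xn) / (1.0 + C3 * xn)) ** P


def xyz_to_jzazbz(x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Absolute D65 XYZ (Y in cd/m^2) -> (Jz, az, bz)."""
    xp = B * x - (B - 1.0) * z  # pre-adjusted X'
    yp = G * y - (G - 1.0) * x  # pre-adjusted Y'
    lms = _mat_vec(XYZ_TO_LMS, (xp, yp, z))
    iz, az, bz = _mat_vec(LMS_TO_IAB, [_pq(c) for c in lms])
    jz = (1.0 + D) * iz / (1.0 + D * iz) - D0
    return jz, az, bz


def delta_e_z(p: Sequence[float], q: Sequence[float]) -> float:
    """dEz = sqrt(dJz^2 + dCz^2 + dHz^2), with dHz = 2 sqrt(Cz1 Cz2) sin(dh/2)."""
    (j1, a1, b1), (j2, a2, b2) = p, q
    c1, c2 = math.hypot(a1, b1), math.hypot(a2, b2)
    dh = math.atan2(b2, a2) - math.atan2(b1, a1)
    dhz = 2.0 * math.sqrt(c1 * c2) * math.sin(dh / 2.0)
    return math.sqrt((j2 - j1) ** 2 + (c2 - c1) ** 2 + dhz**2)
```

Note that d₀ is what makes Jz vanish for black, and that ΔE_z written in lightness, chroma, and hue terms is algebraically identical to the Euclidean distance in (Jz, az, bz).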

Appendix C. Formulas for CAM16-UCS and CAM16-UCS-PC

This section outlines the formulas for CAM16-UCS and CAM16-UCS with power-law correction [23].

The initial step involves calculating values and parameters that are independent of the input sample:

where , , and represent the adopted white in the test illuminant, and , , and are sensor response signals.

where D is the degree of adaptation, is the luminance of the test adapting field (cd/m2), and F is a parameter related to the influence of the environment on color perception.

If D exceeds 1 or is less than 0, it is constrained to 1 or 0, respectively.

where .

where is the achromatic response.
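The clipping rule for D stated above can be made concrete. The sketch assumes the standard CAM16/CIECAM02 expression for the degree of adaptation, D = F[1 − (1/3.6)·exp((−L_A − 42)/92)], because the equation itself is not reproduced in the text; the function name and the surround table are illustrative.

```python
import math

# Surround factor F for the usual viewing conditions (CIECAM02/CAM16 convention).
SURROUND_F = {"average": 1.0, "dim": 0.9, "dark": 0.8}


def degree_of_adaptation(l_a: float, f: float = SURROUND_F["average"]) -> float:
    """D = F * [1 - (1/3.6) * exp((-L_A - 42) / 92)], clipped to [0, 1].

    l_a: luminance of the test adapting field in cd/m^2.
    f:   surround factor F.
    """
    d = f * (1.0 - (1.0 / 3.6) * math.exp((-l_a - 42.0) / 92.0))
    return min(max(d, 0.0), 1.0)
```

With the standard surround values D never exceeds F, so the upper clip only matters when a non-standard F above 1 is supplied.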

Further calculation steps are as follows:

If is negative,

where is the postadaptation cone response, resulting in dynamic range compression. and are computed similarly.
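A minimal sketch of this step, assuming the published CAM16 forms (the equations are not reproduced above): the luminance-level adaptation factor F_L computed from L_A with k = 1/(5L_A + 1), and the post-adaptation compression of one cone signal, including the sign-mirrored branch used when the signal is negative. Function names are hypothetical.

```python
import math


def luminance_adaptation_factor(l_a: float) -> float:
    """F_L = 0.2 k^4 (5 L_A) + 0.1 (1 - k^4)^2 (5 L_A)^(1/3), k = 1 / (5 L_A + 1)."""
    k = 1.0 / (5.0 * l_a + 1.0)
    return 0.2 * k**4 * (5.0 * l_a) + 0.1 * (1.0 - k**4) ** 2 * (5.0 * l_a) ** (1.0 / 3.0)


def compress(x: float, f_l: float) -> float:
    """Post-adaptation response of one cone signal (R, G or B after adaptation).

    Negative inputs use the mirrored branch: -400 t / (t + 27.13) + 0.1,
    with t computed from |x|.
    """
    t = (f_l * abs(x) / 100.0) ** 0.42
    return math.copysign(400.0 * t / (t + 27.13), x) + 0.1
```

The hyperbolic form saturates below about 400.1 for very large inputs, which is the dynamic-range compression referred to above.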

where a represents the Redness–Greenness component, b the Yellowness–Blueness component, and h the hue angle.

Using the unique hue data in Table A2, we set if ; otherwise, . We choose an appropriate i (i = 1, 2, 3, or 4) such that .

where is the eccentricity factor.

where H is the hue quadrature.

where A is the achromatic response.

Table A2.

Unique hue data for calculation of the hue quadrature.


|  | Red | Yellow | Green | Blue | Red |
|---|---|---|---|---|---|
| i | 1 | 2 | 3 | 4 | 5 |
| Hue angle (°) | 20.14 | 90.00 | 164.25 | 237.53 | 380.14 |
| Eccentricity factor | 0.8 | 0.7 | 1.0 | 1.2 | 0.8 |
| Hue quadrature | 0.0 | 100.0 | 200.0 | 300.0 | 400.0 |
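The opponent signals, hue angle, eccentricity factor, and hue quadrature can be sketched with the unique hue data of Table A2. The formulas for a, b, and e_t are the published CAM16 ones, assumed here because the equations are not reproduced in the text; the interval search covers i = 1 to 4, so every hue angle in [0°, 360°) falls into exactly one bracket. Function names are illustrative.

```python
import math
from typing import Tuple

# Unique hue data from Table A2: (hue angle, eccentricity, hue quadrature).
UNIQUE_HUES = [
    (20.14, 0.8, 0.0),     # red
    (90.00, 0.7, 100.0),   # yellow
    (164.25, 1.0, 200.0),  # green
    (237.53, 1.2, 300.0),  # blue
    (380.14, 0.8, 400.0),  # red, wrapped past 360 degrees
]


def opponent(r_a: float, g_a: float, b_a: float) -> Tuple[float, float, float]:
    """Redness-greenness a, yellowness-blueness b, and hue angle h in [0, 360)."""
    a = r_a - 12.0 * g_a / 11.0 + b_a / 11.0
    b = (r_a + g_a - 2.0 * b_a) / 9.0
    h = math.degrees(math.atan2(b, a)) % 360.0
    return a, b, h


def eccentricity(h_deg: float) -> float:
    """e_t = 1/4 * [cos(h * pi / 180 + 2) + 3.8]."""
    return 0.25 * (math.cos(math.radians(h_deg) + 2.0) + 3.8)


def hue_quadrature(h_deg: float) -> float:
    """Hue quadrature H in [0, 400) from a hue angle h in degrees."""
    hp = h_deg + 360.0 if h_deg < UNIQUE_HUES[0][0] else h_deg
    for (h_i, e_i, big_h_i), (h_n, e_n, _) in zip(UNIQUE_HUES, UNIQUE_HUES[1:]):
        if h_i <= hp < h_n:
            left = (hp - h_i) / e_i
            return big_h_i + 100.0 * left / (left + (h_n - hp) / e_n)
    raise ValueError("hue angle must lie in [0, 360)")
```

For example, h = 90° maps to H = 100 (unique yellow), while h = 0° falls between unique blue and unique red and yields H between 300 and 400.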

The correlate of lightness J is calculated as

where Q is the correlate of brightness.

The last step is the calculation of chroma (C), colorfulness (M), and saturation (s):
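The final correlates can be sketched with the published CAM16 formulas, assumed here because the equations are not reproduced in the text. The inputs are the intermediate quantities from the earlier steps: the achromatic responses A and A_w, the viewing-condition constants c, z, N_c, N_cb, n, and F_L, the eccentricity factor e_t, the opponent signals a and b, and the compressed cone responses. The keyword names are hypothetical.

```python
import math
from typing import Tuple


def appearance_correlates(
    *, A: float, A_w: float, c: float, z: float, F_L: float, N_c: float,
    N_cb: float, n: float, e_t: float, a: float, b: float,
    R_a: float, G_a: float, B_a: float
) -> Tuple[float, float, float, float, float]:
    """Return lightness J, brightness Q, chroma C, colorfulness M, saturation s."""
    J = 100.0 * (A / A_w) ** (c * z)
    Q = (4.0 / c) * math.sqrt(J / 100.0) * (A_w + 4.0) * F_L ** 0.25
    # Temporary quantity t combines eccentricity and opponent magnitude.
    t = (50000.0 / 13.0 * N_c * N_cb * e_t * math.hypot(a, b)) / (
        R_a + G_a + 21.0 * B_a / 20.0
    )
    C = t ** 0.9 * math.sqrt(J / 100.0) * (1.64 - 0.29 ** n) ** 0.73
    M = C * F_L ** 0.25
    s = 100.0 * math.sqrt(M / Q)
    return J, Q, C, M, s
```

An achromatic stimulus (a = b = 0) gives C = M = s = 0, and a stimulus whose achromatic response equals that of the white gives J = 100.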

The CAM16-UCS is defined as

The color difference between two colors is determined by the Euclidean distance in CAM16-UCS:

The use of the power correction function can further improve CAM16-UCS:
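The UCS mapping and both difference formulas can be sketched as follows. The CAM16-UCS constants (c1 = 0.007, c2 = 0.0228, K_L = 1) are the published ones from Li et al. [23]. The power-correction coefficients default to 1.41 and 0.63, the values we understand to be given for CAM16-UCS in [23]; because the equation above is not reproduced in the text, treat them as assumptions to be checked against the source. Function names are illustrative.

```python
import math
from typing import Sequence, Tuple


def cam16_ucs(J: float, M: float, h_deg: float) -> Tuple[float, float, float]:
    """Map CAM16 lightness J, colorfulness M, and hue angle h to (J', a', b')."""
    jp = 1.7 * J / (1.0 + 0.007 * J)           # (1 + 100 c1) J / (1 + c1 J)
    mp = math.log(1.0 + 0.0228 * M) / 0.0228   # ln(1 + c2 M) / c2
    h = math.radians(h_deg)
    return jp, mp * math.cos(h), mp * math.sin(h)


def delta_e_cam16_ucs(p: Sequence[float], q: Sequence[float]) -> float:
    """Euclidean distance in CAM16-UCS (K_L = 1)."""
    return math.dist(p, q)


def delta_e_cam16_ucs_pc(
    p: Sequence[float], q: Sequence[float], k: float = 1.41, power: float = 0.63
) -> float:
    """Power-corrected difference k * dE**power (default k, power are assumed)."""
    return k * delta_e_cam16_ucs(p, q) ** power
```

Because the exponent is below 1, the correction compresses large differences relative to small ones, which is how it attempts to account for the non-geodesic structure of perceptual color space.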

References

- ITU-R. BT.2100-2: Image Parameter Values for High Dynamic Range Television for Use in Production and International Programme Exchange; ITU Radiocommunication Sector: Geneva, Switzerland, 2018.

- ITU-R. BT.2390-11: High Dynamic Range Television for Production and International Programme Exchange; ITU Radiocommunication Sector: Geneva, Switzerland, 2023.

- ITU-R. BT.2020-2: Parameter Values for Ultra-High Definition Television Systems for Production and International Programme Exchange; ITU Radiocommunication Sector: Geneva, Switzerland, 2015.

- Xu, L.; Zhao, B.; Luo, M.R. Colour gamut mapping between small and large colour gamuts: Part I. gamut compression. Opt. Express 2018, 26, 11481–11495.

- Kucuk, A.; Finlayson, G.D.; Mantiuk, R.; Ashraf, M. Performance Comparison of Classical Methods and Neural Networks for Colour Correction. J. Imaging 2023, 9, 214.

- Ghanem, S.; Holliman, J.H. Impact of color space and color resolution on vehicle recognition models. J. Imaging 2024, 10, 155.

- Fairchild, M.D.; Wyble, D.R. hdr-CIELAB and hdr-IPT: Simple models for describing the color of high-dynamic-range and wide-color-gamut images. In Proceedings of the Color and Imaging Conference, San Antonio, TX, USA, 8–12 November 2010; Society for Imaging Science and Technology: Springfield, VA, USA, 2010; Volume 2010, pp. 322–326.

- Froehlich, J.; Kunkel, T.; Atkins, R.; Pytlarz, J.; Daly, S.; Schilling, A.; Eberhardt, B. Encoding color difference signals for high dynamic range and wide gamut imagery. In Proceedings of the Color and Imaging Conference, Darmstadt, Germany, 19–23 October 2015; Society for Imaging Science and Technology: Springfield, VA, USA, 2015; Volume 2015, pp. 240–247.

- Dolby. What is ICTCP—Introduction? White Paper, Version 7.1; Technical Report; Dolby: San Francisco, CA, USA, 2016.

- Safdar, M.; Cui, G.; Kim, Y.J.; Luo, M.R. Perceptually uniform color space for image signals including high dynamic range and wide gamut. Opt. Express 2017, 25, 15131–15151.

- Huang, Y.; Xu, H.; Zhang, Y.; Hu, B.; Deng, J.; Li, L. Towards perceptual uniformity and HDR-WCG image processing: A projection-based color space. Opt. Express 2024, 32, 30742–30755.

- Luo, M.R.; Rigg, B. BFD (l: C) colour-difference formula. Part 1—Development of the formula. J. Soc. Dyers Colour. 1987, 103, 86–94.

- Witt, K. Geometric relations between scales of small colour differences. Color Res. Appl. 1999, 24, 78–92.

- Kim, D.H.; Nobbs, J.H. New weighting functions for the weighted CIELAB colour difference formula. In AIC Colour 97, Proceedings of the 8th Congress of the International Colour Association, Kyoto, Japan, 25–30 May 1997; Color Science Association of Japan: Kyoto, Japan, 1997; Volume 97, pp. 446–449.

- Berns, R.S.; Alman, D.H.; Reniff, L.; Snyder, G.D.; Balonon-Rosen, M.R. Visual determination of suprathreshold color-difference tolerances using probit analysis. Color Res. Appl. 1991, 16, 297–316.

- Xu, Q.; Shi, K.; Luo, M.R. Parametric effects in color-difference evaluation. Opt. Express 2022, 30, 33302–33319.

- Xu, Q.; Cui, G.; Safdar, M.; Xu, L.; Luo, M.R.; Zhao, B. Assessing Colour Differences under a Wide Range of Luminance Levels Using Surface and Display Colours. In Proceedings of the Color and Imaging Conference, Paris, France, 21–25 October 2019; Society for Imaging Science and Technology: Springfield, VA, USA, 2019; Volume 2019, pp. 355–359.

- Judd, D.B. Ideal color space: Curvature of color space and its implications for industrial color tolerances. Palette 1968, 29, 4–25.

- Izmailov, C.A.; Sokolov, E.N. Spherical model of color and brightness discrimination. Psychol. Sci. 1991, 2, 249–260.

- Bujack, R.; Teti, E.; Miller, J.; Caffrey, E.; Turton, T.L. The non-Riemannian nature of perceptual color space. Proc. Natl. Acad. Sci. USA 2022, 119, e2119753119.

- Luo, M.R.; Li, C. CIECAM02 and its recent developments. In Advanced Color Image Processing and Analysis; Springer: New York, NY, USA, 2013; pp. 19–58.

- Huang, M.; Cui, G.; Melgosa, M.; Sánchez-Marañón, M.; Li, C.; Luo, M.R.; Liu, H. Power functions improving the performance of color-difference formulas. Opt. Express 2015, 23, 597–610.

- Li, C.; Li, Z.; Wang, Z.; Xu, Y.; Luo, M.R.; Cui, G.; Melgosa, M.; Brill, M.H.; Pointer, M. Comprehensive color solutions: CAM16, CAT16, and CAM16-UCS. Color Res. Appl. 2017, 42, 703–718.

- Luo, M.R.; Xu, Q.; Pointer, M.; Melgosa, M.; Cui, G.; Li, C.; Xiao, K.; Huang, M. A comprehensive test of colour-difference formulae and uniform colour spaces using available visual datasets. Color Res. Appl. 2023, 48, 267–282.

- Xu, Q.; Zhao, B.; Cui, G.; Luo, M.R. Testing uniform colour spaces using colour differences of a wide colour gamut. Opt. Express 2021, 29, 7778–7793.

- Basova, O.; Gladilin, S.; Grigoryev, A.; Nikolaev, D. Two calibration models for compensation of the individual elements properties of self-emitting displays. Comput. Opt. 2022, 46, 335–344.

- der Maur, M.A.; Pecchia, A.; Penazzi, G.; Rodrigues, W.; Di Carlo, A. Unraveling the “Green Gap” problem: The role of random alloy fluctuations in InGaN/GaN light emitting diodes. arXiv 2015, arXiv:1510.07831.

- Wyszecki, G.; Stiles, W.S. Color Science: Concepts and Methods, Quantitative Data and Formulae; John Wiley & Sons: Hoboken, NJ, USA, 2000; Volume 40.

- Danilova, M.V.; Mollon, J.D. Bongard and Smirnov on the tetrachromacy of extra-foveal vision. Vis. Res. 2022, 195, 107952.

- Pytlarz, J.A.; Pieri, E.G. How close is close enough? In Proceedings of the International Broadcasting Convention (IBC 2017), Amsterdam, The Netherlands, 13–17 September 2017; pp. 1–9.

- Huang, M.; Liu, H.; Cui, G.; Luo, M.R.; Melgosa, M. Evaluation of threshold color differences using printed samples. J. Opt. Soc. Am. A 2012, 29, 883–891.

- Wyszecki, G.; Fielder, G.H. New Color-Matching Ellipses. J. Opt. Soc. Am. 1971, 61, 1135–1152.

- Fairchild, M.D. Color Appearance Models, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2005.

- Susstrunk, S.E.; Holm, J.M.; Finlayson, G.D. Chromatic adaptation performance of different RGB sensors. In Proceedings of the IS&T/SPIE Electronic Imaging, San Jose, CA, USA, 20–26 January 2001; SPIE: Bellingham, WA, USA, 2001; Volume 4300.

- Moroney, N.; Fairchild, M.D.; Hunt, R.W.; Li, C.; Luo, M.R.; Newman, T. The CIECAM02 Color Appearance Model. In Proceedings of the Color and Imaging Conference, Scottsdale, AZ, USA, 12–15 November 2002; Imaging Science and Technology: Springfield, VA, USA; Volume 10, pp. 23–27.

- Konovalenko, I.A.; Smagina, A.A.; Nikolaev, D.P.; Nikolaev, P.P. ProLab: A Perceptually Uniform Projective Color Coordinate System. IEEE Access 2021, 9, 133023–133042.

- Luo, M.R.; Clarke, A.A.; Rhodes, P.A.; Schappo, A.; Scrivener, S.A.; Tait, C.J. Quantifying Colour Appearance. Part I. LUTCHI Colour Appearance Data. Color Res. Appl. 1991, 16, 166–180.

- Braun, K.M.; Fairchild, M.D. Psychophysical Generation of Matching Images for Cross-Media Color Reproduction. J. Soc. Inf. Disp. 2000, 8, 33–44.

- Robertson, A.R. CIE guidelines for coordinated research on colour-difference evaluation. Color Res. Appl. 1978, 3, 149–151.

- Witt, K. CIE Guidelines for Coordinated Future Work on Industrial Colour-Difference Evaluation. Color Res. Appl. 1995, 20, 399–403.

- Luo, M.R.; Cui, G.; Rigg, B. The development of the CIE 2000 colour-difference formula: CIEDE2000. Color Res. Appl. 2001, 26, 340–350.

- Danilova, M.V. Colour sensitivity as a function of size: Psychophysically measured discrimination ellipses. In Proceedings of the 27th International Colour Vision Society Meeting, Ljubljana, Slovenia, 5–9 July 2024.

- Kruskal, J.B. Multidimensional scaling by optimizing goodness of fit to a nonmetric hypothesis. Psychometrika 1964, 29, 1–27.

- García, P.A.; Huertas, R.; Melgosa, M.; Cui, G. Measurement of the relationship between perceived and computed color differences. J. Opt. Soc. Am. A 2007, 24, 1823–1829.

- Melgosa, M.; Huertas, R.; Berns, R.S. Performance of recent advanced color-difference formulas using the standardized residual sum of squares index. J. Opt. Soc. Am. A 2008, 25, 1828–1834.

- Nikolaev, D.P.; Basova, O.A.; Usaev, G.R.; Tchobanou, M.K.; Bozhkova, V.P. Detection and correction of errors in psychophysical color difference Munsell Re-renotation dataset. In Proceedings of the London Imaging Meeting, London, UK, 28–30 June 2023; Society for Imaging Science and Technology: Springfield, VA, USA, 2023; pp. 40–44.

- Horowitz, J.L. Bootstrap methods in econometrics. Annu. Rev. Econ. 2019, 11, 193–224.

- Wallis, S. Binomial confidence intervals and contingency tests: Mathematical fundamentals and the evaluation of alternative methods. J. Quant. Linguist. 2013, 20, 178–208.

- Ottosson, B. A Perceptual Color Space for Image Processing. 2020. Available online: https://bottosson.github.io/posts/oklab/ (accessed on 8 November 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).