Abstract

Colorectal cancer is a major public health issue, causing significant morbidity and mortality worldwide. Treatment for colorectal cancer often has a significant impact on patients’ quality of life, which can vary over time and across individuals. The application of artificial intelligence and machine learning techniques has great potential for optimizing patient outcomes by providing valuable insights. In this paper, we propose a multimodal machine learning framework for the prediction of quality of life indicators in colorectal cancer patients at various temporal stages, leveraging both clinical data and computed tomography scan images. Additionally, we identify key predictive factors for each quality of life indicator, thereby enabling clinicians to make more informed treatment decisions and ultimately enhance patient outcomes. Our approach integrates data from multiple sources, enhancing the performance of our predictive models. The analysis demonstrates a notable improvement in accuracy for some indicators, with results for the Wexner score increasing from 24% to 48% and for the Anorectal Ultrasound score from 88% to 96% after integrating data from different modalities. These results highlight the potential of multimodal learning to provide valuable insights and improve patient care in real-world applications.

1. Introduction

Colorectal Cancer (CRC) is the third most common cancer worldwide. In 2020, an estimated 19.3 million new cancer cases were reported, with breast cancer leading at 11.7%, lung cancer at 11.4%, and CRC at 10.0% [1]. CRC can occur in the colon or rectum and typically begins as a benign polyp, which can develop into a malignant tumor over time. Traditional treatments for CRC, including surgery, radiation, and chemotherapy, have significantly improved survival rates. However, these treatments often result in surgical trauma or damage to the pelvic nerve plexus, leading to dysfunctions that can greatly impact the quality of life (QoL) of patients [2].

The healthcare industry is facing an increasing demand for services, resulting in a massive amount of data generated by hospitals daily. These data, often overlooked due to the busy nature of healthcare professionals, hold immense potential for insights and optimization when analyzed using artificial intelligence (AI) [3]. AI’s application in healthcare has the potential to revolutionize the industry by enabling disease detection without relying on expert input and providing predictive insights into patient conditions. This could significantly enhance the healthcare system and inform decision-making within the medical domain.

AI is transforming the healthcare industry by improving patient outcomes and operational efficiency. AI algorithms can analyze vast amounts of medical data and images to assist in disease diagnosis [4] and treatment planning, predict future health conditions and disease risk, assist in the discovery of new drugs, improve medical imaging accuracy [5], manage electronic health records, and provide real-time decision support to healthcare providers.

Among the many AI-driven approaches, deep learning techniques like convolutional neural networks (CNNs) have shown particular promise in medical image analysis. CNN-based models, such as U-Net [6], have become foundational for tasks requiring pixel-wise predictions, such as image segmentation and density map regression. Density map regression, which involves predicting continuous values over an image, has been effectively used in medical imaging for tasks like organ [7,8] and tumor [9,10] volume estimation, where precise localization and counting are critical.

Recent years have witnessed a surge in research articles focusing on early detection [11] and prognostic outcomes of colorectal cancer [12], driven by advancements in artificial intelligence and machine learning. A particular area of interest, made tractable by the predictive capabilities of machine learning algorithms, is the impact of curative resection on a patient's QoL.

Multimodal machine learning [13], which integrates data from multiple sources, can be extremely valuable in critical fields such as finance [14], security [15], and, most notably for this work, healthcare [16]. In healthcare, this approach enhances our understanding of a patient's health by incorporating diverse information such as clinical, genetic, and imaging data. This leads to more accurate diagnoses and personalized treatment plans. In addition, multimodal machine learning can facilitate the analysis of large and complex datasets, which are increasingly common in healthcare due to the widespread adoption of electronic health records. It can also enable the development of automated systems for detecting patterns and identifying important trends, saving time and improving efficiency. By improving the quality of healthcare, multimodal machine learning can make advanced care accessible to a broader range of patients.

This paper aims to predict various factors influencing CRC patients’ QoL by employing a multimodal machine learning framework that integrates diverse data sources. This study focuses on indicators such as the Global Quality of Life Index (GQOLI2), Wexner Score, Low Anterior Resection Syndrome (LARS) Score, and Anorectal Ultrasound (AUR) Score [17]. These indicators collectively provide a detailed picture of the patient’s post-treatment life and are crucial for guiding clinical decisions that could significantly enhance patient outcomes and overall well-being.

2. Motivation and Quality of Life Indicators in Colorectal Cancer

Colorectal cancer management has evolved significantly in recent years, with a growing recognition that patient care extends beyond survival rates alone. Quality of life has become a crucial focus in cancer care [18], reflecting a shift toward more holistic and patient-centered approaches. This emphasis on quality of life is motivated by the understanding that a patient’s overall well-being profoundly influences treatment outcomes, recovery processes, and long-term satisfaction with care [19].

Despite advancements in CRC treatment modalities, patients frequently encounter substantial physical and psychological challenges post-treatment. These challenges underscore the need for comprehensive, proactive approaches to assessing and managing quality of life in CRC care. Key motivations for this study include the following:

- Limitations of Current QoL Assessments: Traditional methods, typically involving post-surgical questionnaires, often provide delayed insights that fail to inform real-time clinical decision-making, limiting the ability to preemptively address potential QoL issues.

- Gap in Predictive Care Models: There is a lack of robust predictive models to anticipate QoL outcomes before surgical interventions, which could significantly improve treatment planning and patient preparation.

- Untapped Potential of Healthcare Data: The vast amount of healthcare data in CRC management remains largely underutilized for predictive and personalized care approaches.

- Need for Personalized Treatment Strategies: Each CRC patient’s journey is unique, yet current treatment protocols lack the granularity to address individual QoL concerns effectively.

- Improving Informed Consent and Patient Expectations: More accurate predictions of post-treatment QoL could significantly enhance the informed consent process, helping to set realistic expectations and improve patient satisfaction.

- Enhancing Surgical Decision-Making: Predictive tools for QoL outcomes would enable surgeons and oncologists to make more informed decisions about surgical approaches and post-operative care strategies.

In addressing these motivations, this study utilizes the following key quality of life indicators to quantify and monitor the impact of colorectal cancer treatments:

- GQOLI2: A broad measure of a patient’s subjective well-being across multiple life domains, offering insights into psychosocial adaptation post-treatment.

- Wexner Score: Assesses the severity of fecal incontinence, a common issue following colorectal cancer treatment, crucial for understanding its social and psychological impact.

- LARS Score: Quantifies bowel dysfunction symptoms experienced by patients after anterior resection, providing insights into functional recovery and needed interventions.

- AUR Score: Evaluates structural outcomes post-treatment using anorectal ultrasonography, linking physical changes to their impact on quality of life.

By incorporating these indicators into a multimodal machine learning framework, this study seeks to identify key predictive factors for each quality of life indicator, thereby enabling clinicians to make more informed treatment decisions and develop personalized care strategies.

3. Related Works

In recent years, the medical field has greatly benefited from advancements in AI, optimizing both time and cost in various domains, including patient diagnosis and expert decision-making support. This progress has significantly impacted medicine, with one notable area being the prediction of QoL after CRC resection. This section explores and synthesizes the current state of the art in this field (Table 1).

Table 1.

Related works.

Breukink et al. [20] conducted a prospective study to evaluate the QoL in rectal cancer patients who received laparoscopic total mesorectal excision surgery over a one-year period. Using the European Organization for Research and Treatment of Cancer Quality of Life Questionnaire (EORTC QLQ), data were collected at 3, 6, and 12 months post-surgery. The assessment included five functional scales (physical, role, cognitive, emotional, and social) and three symptom scales (fatigue, pain, and nausea/vomiting), along with several single-item symptoms common among colorectal cancer patients, such as dyspnea, loss of appetite, insomnia, constipation, and diarrhea. This study found an overall improvement in QoL one year after surgery, although there was a notable decline in specific areas, particularly in physical and sexual functioning.

Gray et al. [21] investigated factors associated with CRC that could potentially explain variations in QoL after diagnosis. Utilizing the EORTC QLQ questionnaire and multiple linear regression models, this study aimed to identify key predictors of QoL in CRC patients. The findings indicated that gender, an unmodifiable factor, significantly affects QoL, with females generally experiencing poorer outcomes than males. In contrast, modifiable factors such as social functioning, fatigue, dyspnea, and depression were strong predictors of diminished QoL. The multiple linear regression model developed for predicting global QoL demonstrated robust performance, achieving an R-squared score of 0.574 using these identified factors.

Xu et al. [22] highlight the significance of CRC as the third leading cause of tumor mortality, with a 5-year survival rate of approximately 40%, mostly due to postoperative recurrence and metastasis. Surgical treatment with adjuvant therapy is the main clinical approach for CRC. However, the high recurrence rate of 80% three years after surgery negatively impacts patients' lives. The study therefore investigates the feasibility of machine learning for predicting CRC recurrence after surgery. Four machine learning algorithms, namely Logistic Regression, Decision Tree, Gradient Boosting, and LightGBM, were implemented. The results reveal that the Gradient Boosting and LightGBM models outperform the other two algorithms. The study also identifies age and chemotherapy as the most influential risk factors for tumor recurrence.

Valeikaite et al. [23] analyzed predictors of long-term QoL in 88 CRC patients who received curative operations in Lithuania. QoL was assessed pre-surgery and at 1, 24, and 72 months post-surgery using standardized questionnaires. Statistical analyses included non-parametric tests and multivariate logistic regression models. This study found a 67% 6-year survival rate, with positive long-term outcomes in CRC treatment and stable QoL observed two years post-surgery. Emotional and social deficits emerged as the main issues for patients, while age and radiotherapy were associated with decreased long-term QoL. This study suggests that interventions targeting these factors could help improve long-term QoL outcomes.

Lu et al. [24] conducted a review aimed at evaluating studies that identify patients at risk of short-term mortality, to improve QoL and reduce cancer recurrence. The researchers analyzed 15 articles from various databases, finding that 12 of these studies had a high risk of bias due to small sample sizes or reliance on single metrics. The reviewed articles covered multiple types of cancer and varied widely in terms of predictors and sample sizes used. Common data pre-processing techniques included discretization, normalization, and handling of missing data, while feature selection methods included recursive feature selection, parameter-increasing selection, and forward-step selection. This study highlights limitations in the existing research, particularly regarding sample size and methodological consistency, and provides insights into the techniques employed for data analysis.

Sim et al. [25] evaluated the role of health-related quality of life (HRQoL) in predicting 5-year survival among lung cancer patients. The study compared two feature sets: one containing only clinical and sociodemographic variables, and another that additionally incorporated HRQoL factors, motivated by the health challenges reported by many lung cancer survivors. The second feature set, which included HRQoL factors, achieved higher predictive performance, suggesting that QoL plays an important role in determining patient survival.

Savic et al. [26] explored the variability in QoL among cancer patients, focusing on predicting QoL specifically for breast and prostate cancer patients. The study employed two datasets and compared two approaches: centralized learning, wherein data are combined before model training, and federated learning, which allows for machine learning models to be trained collaboratively without direct data sharing.

Karil et al. [30] developed a machine learning model to predict declines in HRQoL between 12 and 60 months post-tumor resection. Using the Quality of Life Questionnaire-Core 30 (QLQ-C30) to assess HRQoL, the model’s target class was binary, indicating the presence or absence of HRQoL decline. Selected features included patient demographics (age, gender, relationship status), tumor characteristics (lateralization, histological diagnosis, extent of resection), and prior treatments such as radiotherapy and chemotherapy. Although the model achieved promising results, the authors highlighted limitations due to the small dataset size and recommended further data collection to improve the model’s generalizability.

The studies reviewed in this section highlight AI’s potential to improve QoL predictions following CRC resection. While statistical analysis and machine learning/deep learning models have shown promise in forecasting QoL and tumor recurrence, there remains significant room for improvement. More comprehensive studies utilizing advanced models could yield deeper insights into the factors influencing patient QoL after CRC surgery.

Most techniques in the literature rely on a single modality, such as questionnaires or imaging data, which can limit predictive accuracy because each model draws on only one source of information.

In contrast to previous studies that focus on either clinical or imaging data in isolation, our research introduces a multimodal approach that integrates both types of data. This combined approach seeks to capture synergistic insights often missed in single-modality studies, potentially enabling more accurate predictions of post-treatment QoL outcomes for CRC patients.

4. Methodology

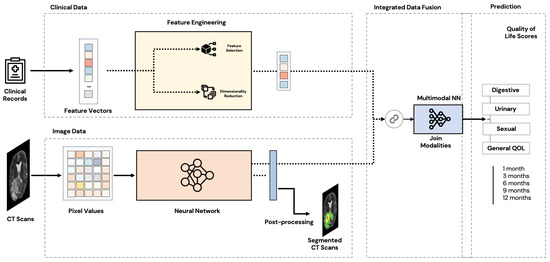

Figure 1 illustrates the proposed general multimodal architecture.

Figure 1.

General multimodal architecture.

4.1. Data Collection and Pre-Processing

The data used in this study are multimodal, comprising clinical data with several features and segmented CT scans for the corresponding patients. The dataset contains information on 100 CRC patients.

4.1.1. Data Collection

Data were sourced from CHU Hassan II University Hospital (Fès), Morocco, under ethical approval and with full anonymization to ensure patient privacy. The dataset includes clinical data and CT scan images from 100 CRC patients, recorded at multiple time points: pre-surgery, immediately post-surgery, and at 3, 6, and 12 months post-surgery.

- Clinical Data: Collected from electronic health records, the clinical dataset contains 49 features, including patient demographic information (age, gender), medical history (e.g., hypertension, diabetes, smoking status), treatment details (surgery type, chemotherapy, radiotherapy), and post-surgical outcomes (complications, recurrence rates). The longitudinal follow-up allowed us to track changes in the quality of life indicators such as the Wexner Score, LARS Score, and AUR Score over time.

- Imaging Data: The CT scan images, collected at each follow-up point, provide detailed views of the patients’ anatomy. These images focus on tumor morphology and location. Tumor segmentation masks were manually annotated by medical professionals, highlighting the regions of interest for our analysis.

Our study uses a longitudinal dataset with a one-year follow-up period for each patient, which constrains the achievable sample size. The extended follow-up is nevertheless crucial for accurately assessing post-surgical quality of life outcomes in CRC patients, as many complications and changes in QoL indicators manifest over time.

The sample of 100 patients strikes a balance between the depth of data collected and the breadth of the cohort. Each patient in the study participated in multiple assessments over the course of a year, including:

- Pre-surgical baseline measurements;

- Immediate post-surgical evaluations;

- Regular follow-up assessments at 3, 6, and 12 months post-surgery.

This intensive data collection process yielded a rich, multi-timepoint dataset for each patient, allowing us to capture the dynamic nature of QoL changes over time. The comprehensive nature of these data, while limiting the total number of patients we could include within the study timeframe, provides valuable insights into the trajectory of QoL indicators. This approach enhances the statistical power of our analyses, partially mitigating the limitations of a smaller sample size.

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the Faculty of Medicine and Pharmacy of Fez (protocol code N 27/20, September 2020). All patients provided written informed consent. To protect patient privacy, all data were de-identified before analysis.

4.1.2. Clinical Data Acquisition

This dataset comprises real-world data from 100 CRC patients, with up to 49 features and, depending on the analysis focus, up to 106 target variables. Table 2 provides a detailed description of each variable.

Table 2.

Clinical data dictionary.

4.1.3. Pre-Processing

Data cleaning removes noise that could cause models to fail, such as missing values and outliers; clinically important fields were reviewed carefully by medical experts. The process included the following steps:

- Handling missing data by dropping columns with a high proportion of missing values and imputing the remaining gaps using the K-Nearest Neighbor algorithm [32].

- Handling outliers by correcting erroneous records with the help of experts.

- Discretizing continuous variables based on established thresholds to evaluate QoL.

- Balancing data by using the Synthetic Minority Oversampling Technique (SMOTE) to generate synthetic samples, and Random Oversampling for targets with too few minority samples.

- Scaling data to a common range so that features with large numeric ranges do not dominate distance- or gradient-based models.

- Encoding labels as consecutive integers starting from 0 rather than 1, as some algorithms require.
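The cleaning steps above can be sketched with pandas, scikit-learn, and NumPy. This is a minimal illustration under stated assumptions, not the study's pipeline: the 40% missingness threshold and `n_neighbors` are hypothetical, the clinical features are assumed to be numerically encoded already, and the LARS cut-offs (0-20 no LARS, 21-29 minor, 30-42 major) are the commonly used ones rather than thresholds confirmed by this paper. SMOTE is written out by hand to show its interpolation step; in practice `imblearn.over_sampling.SMOTE` and `RandomOverSampler` from the imbalanced-learn library provide tested implementations.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer
from sklearn.preprocessing import StandardScaler


def preprocess(df: pd.DataFrame, max_missing: float = 0.4, n_neighbors: int = 5) -> pd.DataFrame:
    """Drop sparse columns, impute the remaining gaps with k-NN, then standardize."""
    # Keep only columns whose fraction of missing values is at most the (hypothetical) threshold.
    keep = df.columns[df.isna().mean() <= max_missing]
    df = df[keep]
    # Fill each missing entry from the k most similar patients (NaN-aware Euclidean distance).
    imputed = KNNImputer(n_neighbors=n_neighbors).fit_transform(df)
    # Rescale every feature to zero mean and unit variance.
    scaled = StandardScaler().fit_transform(imputed)
    return pd.DataFrame(scaled, columns=keep, index=df.index)


def discretize_lars(scores: pd.Series) -> np.ndarray:
    """Map raw LARS scores (0-42) to 0-based classes: 0 = no, 1 = minor, 2 = major LARS."""
    # labels=False returns integer bin codes starting at 0, which doubles as label encoding.
    return pd.cut(scores, bins=[-1, 20, 29, 42], labels=False).to_numpy()


def smote(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Create n_new synthetic minority samples by interpolating toward k nearest neighbours."""
    rng = np.random.default_rng(seed)
    # Pairwise distances within the minority class; self-matches are excluded via inf.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(d, axis=1)[:, :k]
    # Pick a random base sample and one of its k neighbours for every new point.
    base = rng.integers(0, len(X_min), size=n_new)
    nn = neighbours[base, rng.integers(0, k, size=n_new)]
    # Place the synthetic point at a random position on the segment between them.
    gap = rng.random((n_new, 1))
    return X_min[base] + gap * (X_min[nn] - X_min[base])
```

Because each synthetic sample lies on a segment between two real minority samples, SMOTE needs at least two minority samples per class; targets with fewer samples fall back to random duplication, which matches the role the text gives to Random Oversampling.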

4.1.4. Feature Engineering

To address the high number of features in the dataset, we used two approaches. First, we employed various feature selection algorithms, including backward and forward selection, as well as domain-specific algorithms optimized for medical classification tasks. Second, we used principal component analysis (PCA) to combine features into components while retaining as much of the explained variance as possible. The feature selection algorithms iteratively add or remove features based on their contribution to the model, whereas PCA reduces dimensionality by projecting the features onto a smaller set of components. Forward selection starts from an empty set and adds features one at a time until no further improvement is observed or a maximum number of features is reached; backward selection starts from the full set and eliminates features one at a time until the target number of features remains. Additionally, we used various optimization algorithms [33,34,35,36] to optimize the feature selection process.
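Forward selection, backward selection, and variance-based PCA can be sketched with scikit-learn's `SequentialFeatureSelector` and `PCA`. The data below are synthetic (generated by `make_classification`) and use 20 features instead of 49 only to keep backward elimination fast; the logistic-regression estimator, the target of 8 features, F1 scoring, and the 95% variance threshold are illustrative assumptions, not the study's settings. The optimization-based selectors [33,34,35,36] are not shown.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the clinical matrix; NOT the study data.
X, y = make_classification(n_samples=100, n_features=20, n_informative=6, random_state=0)


def select(direction: str, n_features: int = 8):
    """Greedy wrapper selection: 'forward' adds features, 'backward' removes them."""
    sfs = SequentialFeatureSelector(
        LogisticRegression(max_iter=1000),
        n_features_to_select=n_features,
        direction=direction,
        scoring="f1",  # each candidate subset is scored by cross-validated F1
        cv=5,
    )
    sfs.fit(X, y)
    return sfs.get_support(indices=True)  # column indices of the kept features


forward_idx = select("forward")
backward_idx = select("backward")

# PCA keeps the smallest number of components explaining >= 95% of the variance.
pca = PCA(n_components=0.95, svd_solver="full").fit(X)
X_pca = pca.transform(X)
print(forward_idx, backward_idx, X_pca.shape)
```

The two selection directions need not return the same subset, since each greedy path commits to early choices; PCA, by contrast, keeps all original features but mixes them into components, which makes individual predictors harder to interpret clinically.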

4.1.5. Imaging Data Acquisition

The imaging data in the dataset consist of CT scans of the patients, which were collected using various imaging techniques. The CT scans provide detailed information on the structure and morphology of the tumor, as well as its location and extent.

While various imaging modalities have been used in CRC research, including MRI and PET scans, we focus on CT images for several reasons. CT scans offer high spatial resolution, are widely available, and provide detailed information about both soft tissues and bony structures. Furthermore, CT is the standard imaging modality for staging and follow-up in CRC patients.

4.1.6. Pre-Processing

First, we resized the images and cropped uninformative black areas using contour detection. Then, we applied Contrast Limited Adaptive Histogram Equalization (CLAHE) to improve contrast in the noisy images, and generated binary segmentation masks from the red contours drawn by the experts by filtering out all non-red pixels.
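The cropping and mask-generation steps can be sketched with NumPy and SciPy. Two simplifications are assumptions of this sketch: black borders are cropped with a bounding box of non-dark pixels rather than full contour detection, and "red" is defined by hypothetical RGB thresholds. The closed red outline is filled with `scipy.ndimage.binary_fill_holes` so the mask covers the whole annotated region. CLAHE is omitted here; OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` followed by `.apply()` on an 8-bit grayscale image is one common implementation.

```python
import numpy as np
from scipy import ndimage


def crop_black_borders(img: np.ndarray, thresh: int = 10) -> np.ndarray:
    """Crop an (H, W) or (H, W, 3) image to the bounding box of pixels brighter than thresh."""
    fg = img.max(axis=-1) > thresh if img.ndim == 3 else img > thresh
    rows, cols = np.where(fg)
    if rows.size == 0:  # fully black image: nothing to crop
        return img
    return img[rows.min(): rows.max() + 1, cols.min(): cols.max() + 1]


def red_contour_mask(rgb: np.ndarray, min_red: int = 150, max_other: int = 80) -> np.ndarray:
    """Keep strongly red pixels (the expert contour) and fill the enclosed region."""
    r, g, b = (rgb[..., i].astype(int) for i in range(3))
    # Grayscale CT pixels have r == g == b, so only the colored annotation passes.
    contour = (r >= min_red) & (g <= max_other) & (b <= max_other)
    return ndimage.binary_fill_holes(contour)
```

In use, the same crop should be applied to the image and its mask so that the two stay aligned before resizing.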

4.2. Clinical Data Analysis

4.2.1. Digestive Troubles Analysis

Table 3 shows the performance results of various machine learning models on predicting digestive troubles, as measured by the F1-score and accuracy metrics. The results are presented for different time periods, ranging from 1 month to 9 months after surgery, and for two different targets: LARS score and Wexner score. The models used include Random Forest, LightGBM, Logistic Regression, Decision Tree, K-Nearest Neighbors, and Gradient Boosting.

Table 3.

Digestive troubles.

Based on the selected features, the AUR score depends mainly on the type of surgery in the early months and partly on the tumor field in later months. In later months, the LARS score is heavily affected by the field previously occupied by the tumor, and the Wexner score is likewise strongly affected by the tumor field.
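A comparison of classifiers by F1-score and accuracy, as reported in Table 3, can be sketched with scikit-learn's `cross_validate`. This is an illustration on synthetic, imbalanced data, not a reproduction of the study: LightGBM is omitted to keep the sketch dependent on scikit-learn only, and the 5-fold stratified split and default hyperparameters are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic, imbalanced stand-in (about 70/30); NOT the study data.
X, y = make_classification(n_samples=100, n_features=12, n_informative=4,
                           weights=[0.7, 0.3], random_state=0)

models = {
    "Random Forest": RandomForestClassifier(random_state=0),
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "K-Nearest Neighbors": make_pipeline(StandardScaler(), KNeighborsClassifier()),
    "Gradient Boosting": GradientBoostingClassifier(random_state=0),
}
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

results = {}
for name, model in models.items():
    scores = cross_validate(model, X, y, cv=cv, scoring=["f1", "accuracy"])
    results[name] = (scores["test_f1"].mean(), scores["test_accuracy"].mean())

# Rank models by mean F1 on the positive class.
for name, (f1, acc) in sorted(results.items(), key=lambda kv: -kv[1][0]):
    print(f"{name:20s} F1={f1:.2f} accuracy={acc:.0%}")
```

If oversampling such as SMOTE is part of the workflow, it should be fit inside each training fold (for example with `imblearn.pipeline.Pipeline`) so that synthetic samples never leak into the validation folds.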

4.2.2. Urinary Troubles Analysis

Table 4 presents the results of different machine learning models trained to predict urinary troubles in patients at different time points. The target variables are the UI score and the AUR score. The models used include XGB (eXtreme Gradient Boosting), Random Forest, Decision Tree, and Gradient Boosting.

Table 4.

Urinary troubles.

Model performance, based on the F1-score, ranged from 0.21 to 0.91, with accuracy spanning 70% to 97%. Selected features varied across models and included factors such as gender, surgery type, surgical approach, distance, rectal/colonic location, complete clinical response (CCR), syndrome R, endoscopy position (endo), position (TDM/TAP), protocol, anastomosis type, adjuvant chemotherapy (CMTadjuvante), extradigestive tumor presence, and colotomy IGD.
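The wide gap between F1-scores (as low as 0.21) and accuracies (at least 70%) is consistent with imbalanced targets, where a model can reach high accuracy by favoring the majority class. The toy example below uses made-up labels (30 patients, 3 positive), not the study data, to show how the two metrics diverge.

```python
from sklearn.metrics import accuracy_score, f1_score

y_true = [0] * 27 + [1] * 3

# Model A always predicts the majority class "no trouble".
pred_a = [0] * 30
# Model B finds 2 of the 3 positives at the cost of one false positive.
pred_b = [0] * 26 + [1] + [1, 1, 0]

acc_a = accuracy_score(y_true, pred_a)                     # 27/30 = 0.90
f1_a = f1_score(y_true, pred_a, pos_label=1, zero_division=0)  # no true positives -> 0.0
acc_b = accuracy_score(y_true, pred_b)                     # 28/30 ~ 0.93
f1_b = f1_score(y_true, pred_b, pos_label=1)               # precision = recall = 2/3 -> F1 = 2/3
print(acc_a, f1_a, acc_b, f1_b)
```

Accuracy barely moves between the two models, while the F1-score for the positive class separates a useless predictor from a useful one, which is why F1 is the more informative metric for these targets.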

4.2.3. Sexual Troubles Analysis

This section presents the results of various models applied to different sexual troubles (dyspareunia and erection troubles) with different time intervals (3 months, 6 months, 9 months, and 12 months) and their corresponding features selected by the models.

For dyspareunia (Table 5), model performance varies with the time interval. The Bagging model achieved an F1-score for the positive class (F1-1) of 0.18 and 63% accuracy at the 3-month interval with only two selected features (Constipation and Fistula). In contrast, the XGB model achieved an F1-1 score of 0.88 and 97% accuracy at the 12-month interval, also with only two selected features (Age and Gender). This suggests that the choice of model, the selected features, and the prediction horizon all significantly affect predictive performance.

Table 5.

Sexual troubles—1.

For erection troubles (Table 6), the models predicted the troubles with high accuracy and F1-1 scores. The K-Nearest Neighbor model achieved the highest F1-1 score of 0.91 and 97% accuracy at the 3-month interval with six selected features (Gender, Diabetes, Tobacco, syd r, Constipation, and envah). At the 6-month interval, the Random Forest model achieved the highest F1-1 score of 0.93 and 97% accuracy with nine selected features (Cardiopathy, Occlusion, Distance, TR:circumference, envah, resecability, CCR, reservoir, and CMTadjuvante). Decision Tree models performed well at the 9- and 12-month intervals, with F1-1 scores of 0.82 and 0.88, respectively, using only a few selected features.

Table 6.

Sexual troubles—2.

4.2.4. General Quality of Life Analysis

Table 7 shows the different models that were trained to predict the General QoL at different time intervals (1, 3, 6, 9, and 12 months) using different sets of features. The models used for the prediction are AdaBoost [37], XGB [38], and Gradient Boosting [39].

Table 7.

Results—general quality of life.

When we examine the selected features for different time intervals, we notice that specific features play important roles in predicting quality of life at different times. For example, when forecasting QoL at 1 month, features like Age, Gender, Cardiopathy, and Operated are significant. In contrast, when predicting QoL at 6 months, features like Extradig Tumor, pos(TDM/TAP), Anastomosis type, ileotsomie, and CMTadjuvante become important.

During the early months, age and gender emerge as key factors in determining GQoL. In later months, GQoL is influenced more by factors related to the tumor field and the treatments administered to the patient.

4.3. Imaging Data Segmentation

Medical image segmentation is vital in healthcare because it enables precise delineation and analysis of anatomical structures and pathological regions within medical images.

To extract relevant features from CT images for QoL prediction, we employed a two-step process. First, we used a pre-trained segmentation model to isolate the regions of interest (ROIs) in the CT scans, which focuses the analysis on the most relevant anatomical structures. Second, we extracted high-level features from these ROIs. These features were then combined with clinical data in our multimodal framework to predict QoL indicators.
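The two-step process can be sketched as ROI feature extraction followed by concatenation with the clinical vector (early fusion). This is a hedged illustration: the paper does not specify which high-level features were extracted or how they were fused, so the hand-crafted descriptors here (relative area, normalized centroid, mean and standard deviation of intensity inside the mask) and the function names `roi_features` and `early_fusion` are hypothetical stand-ins, for example for embeddings taken from a segmentation network.

```python
import numpy as np


def roi_features(ct: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Hypothetical hand-crafted descriptors of the segmented region of a 2D slice."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:  # no ROI found: return a neutral vector of the same length
        return np.zeros(5)
    inside = ct[mask.astype(bool)]
    area = ys.size / mask.size                    # fraction of the slice covered by the ROI
    cy = ys.mean() / mask.shape[0]                # normalized centroid (row)
    cx = xs.mean() / mask.shape[1]                # normalized centroid (column)
    return np.array([area, cy, cx, inside.mean(), inside.std()])


def early_fusion(clinical: np.ndarray, ct: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Concatenate a patient's clinical feature vector with its imaging descriptors."""
    return np.concatenate([clinical, roi_features(ct, mask)])
```

Concatenation keeps a single downstream classifier; the imaging features should be scaled consistently with the clinical ones before training.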

We apply and explore the effectiveness of UNET models and their variants in accurately delineating anatomical structures and pathological regions within medical images.

4.3.1. Segmentation Models

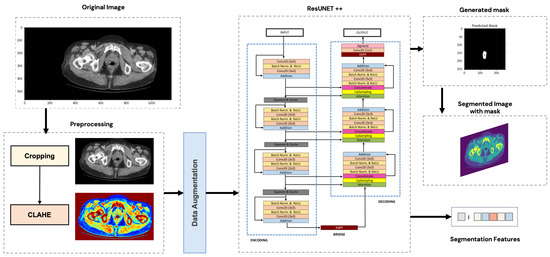

This section briefly outlines the architecture of each segmentation model. The general segmentation approach is illustrated in Figure 2.

Figure 2.

General segmentation approach.

U-NET [6]

The U-NET architecture has a distinctive U-shaped design consisting of two main paths: a contracting (encoding) path and an expanding (decoding) path. The encoder is a standard convolutional neural network of repeated convolutions with ReLU activations, interleaved with max-pooling operations, that produces a feature map. At each level, the encoder reduces the spatial dimensions and increases the number of channels, capturing progressively more contextual information.

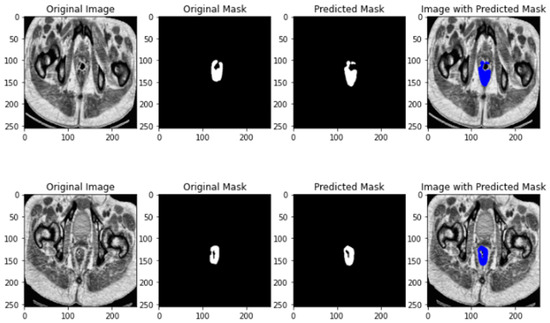

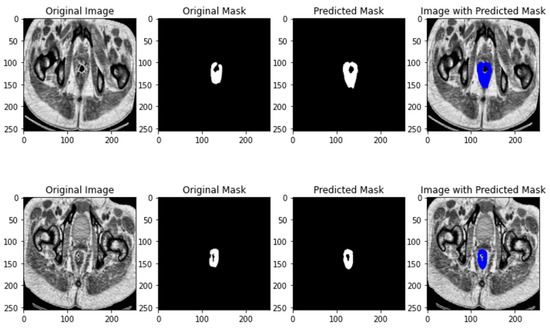

The results of U-NET segmentation are depicted in Figure 3.

Figure 3.

U-NET segmentation results.

The feature map from the encoder is then passed through the decoder path, which uses up-convolutions followed by convolutions with ReLU activations. Each decoder block concatenates its upsampled feature map with the corresponding encoder feature map through a skip connection, progressively increasing the spatial dimensions and reducing the number of channels. The final output is a per-pixel probability map from which the segmentation mask of the input image is obtained.
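The encoder-decoder data flow described above can be traced with a toy NumPy sketch. It only illustrates how shapes change and where the skip connection concatenates; it is not a trainable U-Net. The assumptions are: random 1x1 convolutions stand in for the learned 3x3 convolution blocks, nearest-neighbour upsampling stands in for the learned up-convolution, and all operations preserve spatial size (the original U-Net uses unpadded convolutions and crops the encoder maps before concatenation).

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1x1_relu(x: np.ndarray, out_ch: int) -> np.ndarray:
    """1x1 convolution with random (untrained) weights followed by ReLU; x is (C, H, W)."""
    w = rng.standard_normal((out_ch, x.shape[0])) / np.sqrt(x.shape[0])
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max-pooling on a (C, H, W) map with even H and W: halves the spatial size."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling, a fixed stand-in for a learned up-convolution."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


x = rng.standard_normal((1, 64, 64))                  # one-channel 64x64 input slice
e1 = conv1x1_relu(x, 16)                              # encoder level 1: (16, 64, 64)
e2 = conv1x1_relu(max_pool2x2(e1), 32)                # level 2 / bottleneck: (32, 32, 32)
d1 = conv1x1_relu(upsample2x(e2), 16)                 # decoder: back to (16, 64, 64)
d1 = conv1x1_relu(np.concatenate([d1, e1], axis=0), 16)  # skip: concat to (32, 64, 64), fuse to 16
w_out = rng.standard_normal((1, 16)) / 4.0
prob = 1.0 / (1.0 + np.exp(-np.einsum("oc,chw->ohw", w_out, d1)))  # per-pixel probability
mask = prob[0] > 0.5                                  # thresholded segmentation mask
```

The concatenation is what lets the decoder reuse the fine spatial detail of `e1`, which would otherwise be lost after pooling.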

U-NET++ [40]

Segmenting cancer lesions, and medical images in general, demands a higher level of accuracy than segmenting natural images. Marginal segmentation errors can be critical for patients because they can heavily influence the clinician's decision, especially if the segmentation marks tissue around a cancerous region as invasive when it is not; such errors also lower the model's credibility from a clinical perspective. Recovering fine details is therefore crucial in the medical domain.

To obtain more accurate representations, we implemented U-Net++. U-Net++ keeps the U-shaped layout of U-Net but replaces its plain skip connections, which fast-forward encoder feature maps directly to the decoder, with nested, dense skip pathways. These pathways refine the encoder feature maps through intermediate convolution blocks before fusing them with the corresponding decoder layer, which helps capture fine details. This is the main difference from the U-Net architecture.
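The nested skip pathways can be written compactly, following the notation of the U-Net++ paper [40]. Here $x^{i,j}$ is the output of the node at downsampling level $i$ and position $j$ along the skip pathway, $\mathcal{H}(\cdot)$ is a convolution followed by an activation, $\mathcal{U}(\cdot)$ is upsampling, and $[\cdot]$ is concatenation:

$$
x^{i,j} =
\begin{cases}
\mathcal{H}\left(x^{i-1,j}\right), & j = 0 \\[4pt]
\mathcal{H}\left(\left[\left[x^{i,k}\right]_{k=0}^{j-1},\ \mathcal{U}\left(x^{i+1,j-1}\right)\right]\right), & j > 0
\end{cases}
$$

Nodes with $j = 0$ form the ordinary encoder backbone. Every node with $j > 0$ receives all preceding outputs on its own level plus an upsampled output from the level below, so a plain U-Net skip connection corresponds to the special case where the decoder node at level $i$ receives only $x^{i,0}$.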

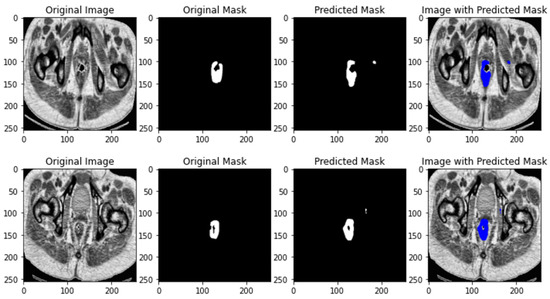

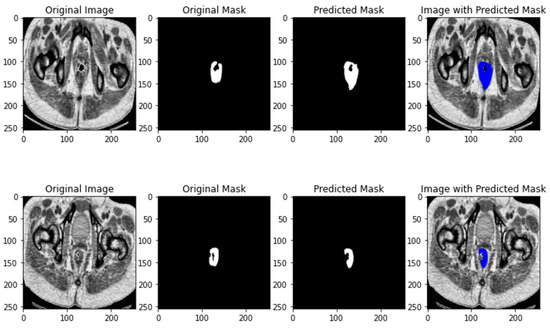

The results of U-NET++ segmentation are depicted in Figure 4.

Figure 4.

U-NET++ segmentation results.

ResUNET [41]

As its name suggests, ResUNET is a residual U-NET. It was designed to achieve high performance with fewer parameters than existing architectures, improving on the U-NET family by bringing the concept of deep residual networks into the U-NET design. Residual blocks make it possible to build deeper networks while mitigating the vanishing-gradient problem.
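The residual unit in its standard formulation makes the gradient argument concrete. With input $\mathbf{x}_l$ to unit $l$, residual function $\mathcal{F}$ with weights $\mathcal{W}_l$, shortcut mapping $h$, and activation $f$:

$$
\mathbf{y}_l = h(\mathbf{x}_l) + \mathcal{F}(\mathbf{x}_l, \mathcal{W}_l), \qquad \mathbf{x}_{l+1} = f(\mathbf{y}_l)
$$

When $h$ is the identity, $\partial \mathbf{y}_l / \partial \mathbf{x}_l = I + \partial \mathcal{F} / \partial \mathbf{x}_l$, so part of the gradient flows through the shortcut unchanged regardless of how small $\partial \mathcal{F} / \partial \mathbf{x}_l$ becomes. This direct path is what allows deeper encoders and decoders to train reliably.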

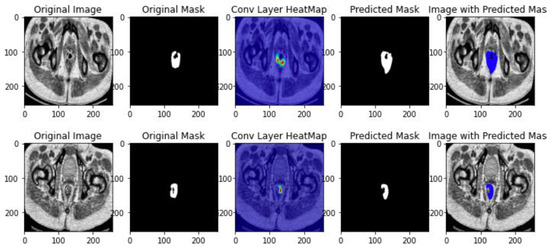

The results of ResUNET segmentation are depicted in Figure 5.

Figure 5.

ResUNET segmentation results.

ResUNET++ [42]

This architecture addresses the problem of small image datasets, which is common in the medical domain because of patient privacy constraints. In addition to residual blocks, ResUNET++ uses squeeze-and-excitation blocks, which increase sensitivity to relevant features and suppress less useful ones; atrous spatial pyramid pooling (ASPP), which acts as a bridge between the encoder and decoder to capture multi-scale information; and attention blocks, which determine which parts of the network require more attention. This architecture was reported to improve significantly over the existing ResUNET architecture [42].
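The squeeze-and-excitation step mentioned above can be sketched in NumPy. This follows the generic SE formulation (global average pooling, a two-layer bottleneck with reduction ratio r, and a sigmoid gate per channel); the exact ResUNET++ configuration is not specified here, and the weights are random placeholders rather than trained parameters.

```python
import numpy as np


def squeeze_excite(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Recalibrate the channels of a (C, H, W) feature map.

    w1: (C // r, C) reduction weights; w2: (C, C // r) expansion weights.
    """
    z = x.mean(axis=(1, 2))              # squeeze: global average pool -> (C,)
    s = np.maximum(w1 @ z, 0.0)          # excitation step 1: fully connected + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ s)))  # step 2: fully connected + sigmoid -> gates in (0, 1)
    return x * s[:, None, None]          # scale: reweight each channel by its gate


# Usage with C = 8 channels and reduction ratio r = 4 (illustrative values).
rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 16, 16))
out = squeeze_excite(feat, rng.standard_normal((2, 8)), rng.standard_normal((8, 2)))
```

Because every gate lies between 0 and 1, the block can only attenuate channels relative to one another; informative channels keep gates near 1 while others are suppressed.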

Figure 6.

ResUNET++ segmentation results.

Figure 7.

ResUNET++ class activation map.

4.3.2. Segmentation Quality and Validation

Table 8 presents the results of a segmentation experiment using four different models: UNET, UNET++, ResUNET, and ResUNET++. The evaluation was conducted using two metrics, namely DICE and IOU.

Table 8.

Segmentation results.

- DICE Coefficient (Sørensen-Dice Index)

The DICE coefficient measures the overlap between two sets and is commonly used in image segmentation tasks. The formula is

$$\mathrm{DICE}(A, B) = \frac{2\,|A \cap B|}{|A| + |B|}$$

where

- A is the set of predicted labels (e.g., the predicted segmentation);

- B is the set of ground truth labels (e.g., the actual segmentation);

- $|A \cap B|$ is the size of the intersection of the predicted and ground truth labels (i.e., the common area);

- $|A|$ and $|B|$ are the cardinalities (sizes) of sets A and B, respectively.

The DICE coefficient ranges between 0 and 1:

- A DICE coefficient of 1 indicates perfect overlap;

- A DICE coefficient of 0 indicates no overlap.

- Intersection over Union (IOU)

The IOU, also known as the Jaccard Index, is another metric for evaluating the overlap between two sets. The formula is

$$\mathrm{IOU}(A, B) = \frac{|A \cap B|}{|A \cup B|}$$

where

- A is the predicted segmentation;

- B is the ground truth segmentation;

- $A \cap B$ is the intersection of sets A and B;

- $A \cup B$ is the union of sets A and B, whose size can be calculated as $|A \cup B| = |A| + |B| - |A \cap B|$.

Like DICE, IoU also ranges between 0 and 1:

- An IoU of 1 means perfect overlap;

- An IoU of 0 means no overlap.
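Under the same assumption (binary masks as flat 0/1 sequences), IOU can be sketched as below; the size of the union is obtained by inclusion-exclusion rather than counted separately. The empty-mask convention is again an assumption.

```python
from typing import Sequence


def iou(pred: Sequence[int], truth: Sequence[int]) -> float:
    """IOU = |A ∩ B| / |A ∪ B| for binary masks given as flat 0/1 sequences."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    inter = sum(1 for p, t in zip(pred, truth) if p and t)  # |A ∩ B|
    size_a = sum(1 for p in pred if p)                      # |A|
    size_b = sum(1 for t in truth if t)                     # |B|
    union = size_a + size_b - inter                         # |A ∪ B| by inclusion-exclusion
    if union == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return inter / union


# Same toy masks: 2 shared pixels, union of 3 + 3 - 2 = 4 pixels
example = iou([1, 1, 1, 0, 0, 0], [0, 1, 1, 1, 0, 0])  # 2 / 4 = 0.5
```

On the same masks DICE is 2/3 while IOU is 0.5: IOU penalizes the non-overlapping pixels more heavily, which is why it is always the lower of the two scores for imperfect segmentations.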

The results show that the ResUNET++ model achieved the highest performance with a DICE score of 76% and an IOU score of 61%. UNET and ResUNET also produced relatively high scores, with DICE scores of 74% and 72%, respectively, and IOU scores of 58% and 56%, respectively. UNET++ had the lowest scores with a DICE score of 67% and an IOU score of 51%.
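For a single mask, DICE and IOU are linked. Writing $I = |A \cap B|$ and $S = |A| + |B|$, we have $\mathrm{DICE} = 2I/S$ and $\mathrm{IOU} = I/(S - I)$, so $\mathrm{IOU} = \mathrm{DICE}/(2 - \mathrm{DICE})$. The short check below applies this identity to the averaged scores reported above. Because those scores are averaged over test images and rounded, the identity is only expected to hold approximately; the one-percentage-point tolerance is our assumption, not a property of the experiment.

```python
def dice_to_iou(d: float) -> float:
    """Per-mask identity: IOU = DICE / (2 - DICE)."""
    return d / (2.0 - d)


def iou_to_dice(j: float) -> float:
    """Inverse identity: DICE = 2 * IOU / (1 + IOU)."""
    return 2.0 * j / (1.0 + j)


# Averaged scores reported in the text, as fractions: (DICE, IOU)
reported = {
    "ResUNET++": (0.76, 0.61),
    "UNET": (0.74, 0.58),
    "ResUNET": (0.72, 0.56),
    "UNET++": (0.67, 0.51),
}

for model, (d, j) in reported.items():
    print(f"{model}: DICE {d:.2f} -> implied IOU {dice_to_iou(d):.3f} (reported {j:.2f})")
```

Because the mapping is monotonic, DICE and IOU always rank models in the same order for a single mask, which is consistent with both metrics placing ResUNET++ first here.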

These results suggest that ResUNET++ is the most effective segmentation model in this experiment, as it achieved the highest scores on both metrics. Notably, the standard UNET and ResUNET models also performed relatively well, indicating that they remain viable options for segmentation tasks.

4.4. Multimodal Data Integration

Multimodal data integration is a critical process in healthcare analytics [43]; it involves the systematic combination of data from various sources or modalities. This integration is particularly significant for CRC, where the available data span a broad spectrum of types, including clinical metrics, imaging scans, and patient-reported outcomes. Each modality offers unique insights into the patient’s condition; combined, they provide a comprehensive, multi-dimensional view of patient health that is greater than the sum of its parts.

The integration of these diverse data types serves several crucial functions. By correlating features across modalities, clinicians can enhance diagnosis and prognosis, identify patterns and markers that may be less apparent when considering each data type in isolation, and tailor treatments to individual patients based on a more complete understanding of their specific disease characteristics and predicted recovery trajectories. Additionally, for CRC patients, the period following surgery is fraught with uncertainties concerning recovery and QoL. Multimodal integration allows for more accurate predictions of these outcomes, aiding in better post-operative care and monitoring.

Thus, multimodal data integration not only enriches the data landscape but also enhances the actionable insights that can be derived from it, ultimately contributing to improved patient care and treatment outcomes in CRC.

Building on these principles, our study introduces a novel multimodal machine learning framework that merges PCA-reduced image features with clinical data. Principal component analysis (PCA) reduces the dimensionality of the imaging data by projecting it onto a small number of components that retain most of its variance. These components are then combined with detailed clinical information into a unified dataset that feeds into a deep learning model. This approach exploits the complementary strengths of each data modality while keeping data volume and computational cost manageable, with the aim of more accurate and robust predictions.
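The following is a minimal, library-free sketch of the idea described above: fit PCA on training image features, project a new patient's image features onto the top-k components, and append that patient's clinical variables. Several details are assumptions rather than facts from this study: the image features are taken as already extracted fixed-length vectors, fusion is plain concatenation, PCA is computed by power iteration with deflation, and the toy data and clinical values (e.g., age and a binary indicator) are hypothetical. In practice a library routine such as scikit-learn's `PCA` would normally be used.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def pca_fit(X: List[Vector], k: int, iters: int = 200, seed: int = 0) -> Tuple[Vector, List[Vector]]:
    """Fit PCA on training rows X; return (column means, top-k unit principal axes).
    Uses power iteration with deflation on the sample covariance matrix."""
    n, d = len(X), len(X[0])
    if n < 2:
        raise ValueError("need at least two samples")
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mean[j] for j in range(d)] for row in X]
    cov = [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    axes: List[Vector] = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        norm = math.sqrt(_dot(v, v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [_dot(row, v) for row in cov]
            norm = math.sqrt(_dot(w, w))
            if norm == 0.0:  # no variance left in this subspace
                break
            v = [x / norm for x in w]
        lam = _dot(v, [_dot(row, v) for row in cov])  # Rayleigh quotient = eigenvalue
        axes.append(v)
        # Deflation: subtract lam * v v^T so the next pass finds the next component
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mean, axes


def pca_transform(x: Vector, mean: Vector, axes: List[Vector]) -> Vector:
    centered = [a - m for a, m in zip(x, mean)]
    return [_dot(centered, ax) for ax in axes]


def fuse(image_features: Vector, clinical: Vector, mean: Vector, axes: List[Vector]) -> Vector:
    # Feature-level fusion (assumed): PCA scores of the image features, then clinical variables
    return pca_transform(image_features, mean, axes) + list(clinical)


# Toy training data: 4 patients x 2 image features lying on the line y = 2x
X_train = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
mean, axes = pca_fit(X_train, k=1)
fused = fuse([3.0, 6.0], [65.0, 1.0], mean, axes)  # clinical values are hypothetical
```

Fitting the PCA only on the training split, then reusing the same `mean` and `axes` for validation and test patients, avoids leaking test information into the reduced features.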

4.5. Results and Discussion

The results in Table 9 demonstrate the potential benefits of multimodal learning. For the GQOL12 target variable, there was no improvement in test accuracy when using a multimodal approach with joint fusion compared to a monomodal approach. However, for the Wexner Score 3, LARS Score 9, and AUR Score 9 target variables, the joint fusion approach outperformed the monomodal approach in terms of test accuracy.

Table 9.

Multimodal vs. monomodal results.

This indicates that combining information from multiple modalities can improve performance. For instance, the joint fusion approach raised the test accuracy for the AUR Score 9 target variable from 88% to 96%. This gain is particularly important because accurately predicting the AUR score can help clinicians make informed decisions about patient care.

Overall, the results suggest that multimodal learning can provide valuable insights by leveraging complementary information from multiple sources, and these improvements could have a meaningful impact in real-world applications.

4.5.1. Implications

The accuracy improvements observed for several QoL indicators may be attributed to the complementary effect of combining clinical data with CT image features in our multimodal approach. This integration allows our model to capture a more comprehensive picture of each patient’s condition, incorporating both observable clinical factors and underlying anatomical information.

By fusing these diverse data sources, our model can detect complex interactions between clinical variables and structural characteristics visible in CT scans. For instance, the relationship between tumor location, surrounding tissue composition, and post-operative functional outcomes may be more accurately captured, leading to improved predictions across multiple QoL domains. This approach also helps mitigate issues of data sparsity often encountered with clinical data alone, as imaging data provide a consistent set of features across all patients, potentially filling in information gaps.

The predictive model offers significant potential for improving post-operative management and long-term follow-up care in CRC patients. By providing more accurate forecasts of various QoL indicators, healthcare providers can tailor their care strategies to each patient’s specific needs and risks.

In the immediate post-operative period, the model’s predictions can guide the development of personalized rehabilitation programs. Patients predicted to have a higher risk of specific dysfunctions might be enrolled in more intensive therapy programs earlier in their recovery. For long-term follow-up care, the model enables a more proactive and personalized approach. By identifying patients at higher risk of experiencing poor QoL outcomes in specific domains, healthcare teams can implement targeted surveillance and intervention strategies.

4.5.2. Limitations

Our study has several limitations that should be considered when interpreting the results. Our cohort of 100 patients is relatively small for machine learning applications; this reflects a trade-off between sample size and data depth. The longitudinal design, with a one-year follow-up period and multiple assessment points per patient, provides rich time-series data that capture the dynamic nature of QoL changes. This depth partially offsets the small sample size, although the limited cohort may still restrict the generalizability of our findings to broader patient populations.

All patients were recruited from a single institution, which may introduce bias and limit the generalizability of our results to other healthcare settings or geographical regions. Multi-center studies would be valuable to validate our findings across diverse patient populations and healthcare systems. The retrospective nature of our study may introduce potential biases, particularly in terms of data completeness and quality. While we made every effort to ensure data integrity, prospective studies would be beneficial to further validate our multimodal approach.

Despite these limitations, our study provides valuable insights into the potential of multimodal machine learning for predicting QoL in CRC patients. Future research should aim to address these limitations through larger, multi-center prospective studies, incorporation of diverse imaging modalities, and continued refinement of model interpretability.

5. Conclusions

In the context of colorectal cancer management, the paradigm has increasingly shifted from a sole emphasis on survivability to a more holistic consideration of post-treatment QoL. Tumor resection, while a common therapeutic intervention, frequently does not yield significant improvements in patients’ QoL. This limitation has driven the research community to employ QoL questionnaires to capture patient outcomes over various time intervals. However, the absence of predictive models has constrained these assessments to largely descriptive analyses, which provide a generalized understanding of QoL trajectories without addressing specific health-related dimensions.

Furthermore, the existing literature predominantly focuses on descriptive statistics pertaining to global QoL measures, thereby neglecting the nuanced dimensions of health-related QoL, such as urinary, sexual, and functional outcomes. To address these gaps, our research introduces a novel system that utilizes empirical data to estimate multiple dimensions of health-related QoL prior to resection surgery. Notably, our approach includes the evaluation of urinary, sexual, and functional domains, offering a more granular analysis of anticipated patient outcomes. Additionally, we developed a segmentation model using image data from CT scans to accurately delineate affected anatomical regions, thereby enhancing the precision of our predictions.

This study contributes to the existing body of knowledge by providing a more comprehensive and multidimensional assessment of health-related QoL in colorectal cancer patients. Integrating real-world clinical data with image-based segmentation improved the accuracy of several of our predictions. The findings have important implications for clinical practice, enabling healthcare providers to tailor treatment strategies more effectively to individual patient profiles. Ultimately, our work could help improve the QoL of patients with colorectal cancer, thereby improving their overall well-being and reducing the burden of disease.

Future research could focus on expanding datasets to include more diverse patient populations across multiple institutions, improving model generalizability. Additionally, incorporating advanced deep learning architectures such as attention mechanisms or transformer-based models could enhance prediction accuracy by focusing on the most relevant features in clinical, imaging, and textual data [44].

Explainability [45] is another key area for improvement. Developing more interpretable models would help clinicians better understand the predictions and foster trust in AI-assisted decision-making. Lastly, future work could explore personalized intervention strategies based on model predictions, enabling early identification of at-risk patients and tailoring post-surgical care to improve outcomes.

Author Contributions

Conceptualization, M.R., M.M. and K.A.; validation, M.R., M.M. and K.A.; resources, I.T.; data curation, A.A.-A.; writing, M.R. and M.M.; supervision, S.Y., A.A.-A. and I.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the Faculty of Medicine and Pharmacy of Fez (protocol code N 27/20, September 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 2021, 71, 209–249.

- Jansen, L.; Koch, L.; Brenner, H.; Arndt, V. Quality of life among long-term (⩾5 years) colorectal cancer survivors–systematic review. Eur. J. Cancer 2010, 46, 2879–2888.

- Saraswat, D.; Bhattacharya, P.; Verma, A.; Prasad, V.K.; Tanwar, S.; Sharma, G.; Bokoro, P.N.; Sharma, R. Explainable AI for healthcare 5.0: Opportunities and challenges. IEEE Access 2022, 10, 84486–84517.

- Chtouki, K.; Rhanoui, M.; Mikram, M.; Yousfi, S.; Amazian, K. Supervised Machine Learning for Breast Cancer Risk Factors Analysis and Survival Prediction. In Proceedings of the International Conference On Big Data and Internet of Things, Tangier, Morocco, 25–27 October 2023; Springer: Berlin/Heidelberg, Germany; pp. 59–71.

- Mikram, M.; Moujahdi, C.; Rhanoui, M.; Meddad, M.; Khallout, A. Hybrid deep learning models for diabetic retinopathy classification. In Proceedings of the International Conference On Big Data and Internet of Things, Rabat, Morocco, 17–18 March 2022; Springer: Berlin/Heidelberg, Germany; pp. 167–178.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany; pp. 234–241.

- Fu, Y.; Lei, Y.; Wang, T.; Curran, W.J.; Liu, T.; Yang, X. A review of deep learning based methods for medical image multi-organ segmentation. Phys. Medica 2021, 85, 107–122.

- Choi, M.S.; Choi, B.S.; Chung, S.Y.; Kim, N.; Chun, J.; Kim, Y.B.; Chang, J.S.; Kim, J.S. Clinical evaluation of atlas-and deep learning-based automatic segmentation of multiple organs and clinical target volumes for breast cancer. Radiother. Oncol. 2020, 153, 139–145.

- Xie, Y.; Xing, F.; Shi, X.; Kong, X.; Su, H.; Yang, L. Efficient and robust cell detection: A structured regression approach. Med. Image Anal. 2018, 44, 245–254.

- Mahmood, F.; Borders, D.; Chen, R.J.; McKay, G.N.; Salimian, K.J.; Baras, A.; Durr, N.J. Deep adversarial training for multi-organ nuclei segmentation in histopathology images. IEEE Trans. Med. Imaging 2019, 39, 3257–3267.

- Takamatsu, M.; Yamamoto, N.; Kawachi, H.; Chino, A.; Saito, S.; Ueno, M.; Ishikawa, Y.; Takazawa, Y.; Takeuchi, K. Prediction of early colorectal cancer metastasis by machine learning using digital slide images. Comput. Methods Programs Biomed. 2019, 178, 155–161.

- Bychkov, D.; Linder, N.; Turkki, R.; Nordling, S.; Kovanen, P.E.; Verrill, C.; Walliander, M.; Lundin, M.; Haglund, C.; Lundin, J. Deep learning based tissue analysis predicts outcome in colorectal cancer. Sci. Rep. 2018, 8, 3395.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Lee, S.I.; Yoo, S.J. Multimodal deep learning for finance: Integrating and forecasting international stock markets. J. Supercomput. 2020, 76, 8294–8312.

- Bousnina, N.; Ghouzali, S.; Mikram, M.; Abdul, W. DTCWT-DCT watermarking method for multimodal biometric authentication. In Proceedings of the 2nd International Conference on Networking, Information Systems & Security, Riyadh, Saudi Arabia, 1–3 May 2019; pp. 1–7.

- Acosta, J.N.; Falcone, G.J.; Rajpurkar, P.; Topol, E.J. Multimodal biomedical AI. Nat. Med. 2022, 28, 1773–1784.

- Borgaonkar, M.; Irvine, E. Quality of life measurement in gastrointestinal and liver disorders. Gut 2000, 47, 444–454.

- Lewandowska, A.; Rudzki, G.; Lewandowski, T.; Próchnicki, M.; Rudzki, S.; Laskowska, B.; Brudniak, J. Quality of life of cancer patients treated with chemotherapy. Int. J. Environ. Res. Public Health 2020, 17, 6938.

- Velikova, G.; Booth, L.; Smith, A.B.; Brown, P.M.; Lynch, P.; Brown, J.M.; Selby, P.J. Measuring quality of life in routine oncology practice improves communication and patient well-being: A randomized controlled trial. J. Clin. Oncol. 2004, 22, 714–724.

- Breukink, S.; Van der Zaag-Loonen, H.; Bouma, E.; Pierie, J.; Hoff, C.; Wiggers, T.; Meijerink, W. Prospective evaluation of quality of life and sexual functioning after laparoscopic total mesorectal excision. Dis. Colon Rectum 2007, 50, 147–155.

- Gray, N.M.; Hall, S.J.; Browne, S.; Macleod, U.; Mitchell, E.; Lee, A.J.; Johnston, M.; Wyke, S.; Samuel, L.; Weller, D.; et al. Modifiable and fixed factors predicting quality of life in people with colorectal cancer. Br. J. Cancer 2011, 104, 1697–1703.

- Xu, Y.; Ju, L.; Tong, J.; Zhou, C.M.; Yang, J.J. Machine learning algorithms for predicting the recurrence of stage IV colorectal cancer after tumor resection. Sci. Rep. 2020, 10, 2519.

- Valeikaite-Tauginiene, G.; Kraujelyte, A.; Poskus, E.; Jotautas, V.; Saladzinskas, Z.; Tamelis, A.; Lizdenis, P.; Dulskas, A.; Samalavicius, N.E.; Strupas, K.; et al. Predictors of quality of life six years after curative colorectal cancer surgery: Results of the prospective multicenter study. Medicina 2022, 58, 482.

- Lu, S.C.; Xu, C.; Nguyen, C.H.; Geng, Y.; Pfob, A.; Sidey-Gibbons, C. Machine Learning–Based Short-Term Mortality Prediction Models for Patients With Cancer Using Electronic Health Record Data: Systematic Review and Critical Appraisal. JMIR Med. Inform. 2022, 10, e33182.

- Sim, J.A.; Kim, Y.; Kim, J.H.; Lee, J.M.; Kim, M.S.; Shim, Y.M.; Zo, J.I.; Yun, Y.H. The major effects of health-related quality of life on 5-year survival prediction among lung cancer survivors: Applications of machine learning. Sci. Rep. 2020, 10, 10693.

- Savić, M.; Kurbalija, V.; Ilić, M.; Ivanović, M.; Jakovetić, D.; Valachis, A.; Autexier, S.; Rust, J.; Kosmidis, T. Analysis of Machine Learning Models Predicting Quality of Life for Cancer Patients. In Proceedings of the 13th International Conference on Management of Digital EcoSystems, Virtual Event, 1–3 November 2021; pp. 35–42.

- Nuutinen, M.; Korhonen, S.; Hiltunen, A.M.; Haavisto, I.; Poikonen-Saksela, P.; Mattson, J.; Kondylakis, H.; Mazzocco, K.; Pat-Horenczyk, R.; Sousa, B.; et al. Impact of machine learning assistance on the quality of life prediction for breast cancer patients. In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies, Virtual Event, 9–11 February 2022; pp. 344–352.

- Zhou, C.; Hu, J.; Wang, Y.; Ji, M.H.; Tong, J.; Yang, J.J.; Xia, H. A machine learning-based predictor for the identification of the recurrence of patients with gastric cancer after operation. Sci. Rep. 2021, 11, 1571.

- Ting, W.C.; Chang, H.R.; Chang, C.C.; Lu, C.J. Developing a novel machine learning-based classification scheme for predicting SPCs in colorectal cancer survivors. Appl. Sci. 2020, 10, 1355.

- Karri, R.; Chen, Y.P.P.; Drummond, K.J. Using machine learning to predict health-related quality of life outcomes in patients with low grade glioma, meningioma, and acoustic neuroma. PLoS ONE 2022, 17, e0267931.

- Trebeschi, S.; van Griethuysen, J.J.; Lambregts, D.M.; Lahaye, M.J.; Parmar, C.; Bakers, F.C.; Peters, N.H.; Beets-Tan, R.G.; Aerts, H.J. Deep learning for fully-automated localization and segmentation of rectal cancer on multiparametric MR. Sci. Rep. 2017, 7, 5301.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

- Whitley, D. A genetic algorithm tutorial. Stat. Comput. 1994, 4, 65–85.

- Yang, X.S. Flower pollination algorithm for global optimization. In Proceedings of the International Conference on Unconventional Computing and Natural Computation, Orléans, France, 3–7 September 2012; Springer: Berlin/Heidelberg, Germany; pp. 240–249.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Schapire, R.E. Explaining adaboost. In Empirical Inference: Festschrift in Honor of Vladimir N. Vapnik; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–52.

- Chen, T. Xgboost: Extreme gradient boosting. R Package Version 0.4-2 2015, 1.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 1189–1232.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11.

- Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

- Jha, D.; Smedsrud, P.H.; Riegler, M.A.; Johansen, D.; De Lange, T.; Halvorsen, P.; Johansen, H.D. Resunet++: An advanced architecture for medical image segmentation. In Proceedings of the 2019 IEEE International Symposium on Multimedia (ISM), San Diego, CA, USA, 9–11 December 2019; pp. 225–2255.

- Bardak, B.; Tan, M. Improving clinical outcome predictions using convolution over medical entities with multimodal learning. Artif. Intell. Med. 2021, 117, 102112.

- Zekaoui, N.E.; Yousfi, S.; Mikram, M.; Rhanoui, M. Enhancing Large Language Models’ Utility for Medical Question-Answering: A Patient Health Question Summarization Approach. In Proceedings of the 2023 14th International Conference on Intelligent Systems: Theories and Applications (SITA), Casablanca, Morocco, 22–23 November 2023; pp. 1–8.

- Tjoa, E.; Guan, C. A survey on explainable artificial intelligence (xai): Toward medical xai. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4793–4813.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).