Abstract

The article presents University of Idaho-Physical Rehabilitation Movement Data (UI-PRMD), a publicly available data set of movements related to common exercises performed by patients in physical rehabilitation programs. For the data collection, 10 healthy subjects performed 10 repetitions of different physical therapy movements, which were captured with a Vicon optical tracker and a Microsoft Kinect sensor. The data include the positions and angles of full-body joints. The objective of the data set is to provide a basis for mathematical modeling of therapy movements, as well as for establishing performance metrics for evaluating how consistently patients execute the prescribed rehabilitation exercises.

Dataset License

UI-PRMD data set is made available under the Open Data Commons Public Domain Dedication and License v1.0 whose full text can be found at: http://opendatacommons.org/licenses/pddl/1.0.

1. Summary

Patient participation in physical therapy and rehabilitation programs is an important step in the recovery process for various musculoskeletal conditions. A home exercise program (HEP), in which patients perform a set of recommended physical exercises at home, is often a substantial component of a patient’s rehabilitation treatment. Despite the enormous expenses incurred by therapy programs for both healthcare providers and patients [1], HEP treatment does not necessarily help the patient reach full functional recovery [2]. Reports in the literature indicate that one of the main barriers to successful HEP implementation is patient noncompliance with the prescribed exercise plan [3]. The low adherence rates are attributed primarily to the lack of supervision and monitoring of patient performance by a rehabilitation professional in the outpatient setting. In addition, psychological issues associated with fear of painful movements, fear of re-injury, and anxiety about increased pain have also been reported as important barriers to adherence in unsupervised exercise programs [4,5]. Consequently, a body of work has concentrated on developing tools in support of HEP, such as robotic assistive devices [6], exoskeletons, haptic devices, and virtual gaming environments [7].

The authors of this article are currently participating in a research project focused on the development of a novel therapy-supporting tool for automated monitoring and evaluation of HEP episodes based on machine learning algorithms. The goal of the project is to employ a Microsoft Kinect sensor [8] for capturing body movements during therapy sessions, and to automatically evaluate patient performance and adherence to the recommended exercises. To achieve this goal, the project encompasses several specific objectives: developing a methodology for mathematical modeling of patient movements, defining performance metrics, and creating a set of therapy movements. The authors have developed a preliminary model of human motions using a machine learning method based on a neural network architecture consisting of auto-encoder and mixture density sub-nets [9], and they have proposed a taxonomy of performance metrics for evaluation of therapy movements [10]. The publications [9,10] do not use the UI-PRMD set presented here. In the absence of a data set of therapy movements, these articles use data related to general human movements from the University of Texas at Dallas Multi-Modal Human Action Dataset (UTD-MHAD) [11] to demonstrate the proposed concepts.

Machine learning methods use observed or measured data of a system or process to establish the relationship between its input and output parameters. These methods typically require vast amounts of data for learning the relationships among the system parameters. For many problems in machine learning, the performance of the algorithms and their ability to extract relevant and useful information depend strongly on the quantity and quality of the available data. In recent years, the machine learning research community has become increasingly aware that appropriate data sets for a specific problem are essential both for improving the performance of existing algorithms and for developing and evaluating new ones. Moreover, companies that possess large databases are often considered to have a competitive advantage and the capacity to achieve better results than other companies working on the same problem. Consequently, a great deal of recent research effort has been devoted to creating data sets for various problems in machine learning [12].

Our main motivation for creating the presented data set was the identified lack of publicly available comprehensive data sets of physical therapy movements. Currently, there are a large number of publicly available data sets related to general human movements [13] that are extensively used for tasks such as action recognition, gesture recognition, pose estimation, and fall detection. Many of these data sets employ optical motion capture systems for recording the movements, e.g., CMU Multi-Modal Activity (CMU-MMAC) [14] and Berkeley MHAD (Multi-Modal Human Action Dataset) [15]. Likewise, numerous data sets of general human movements have been created by using the Microsoft Kinect sensor, such as the MSR (Microsoft Research) Action3D data set [16] and the previously mentioned UTD-MHAD set [11].

The existing data sets of therapy movements, however, are limited either in the scope of the movements or in the provided data format. One such example is the HPTE (home-based physical therapy exercises) data set created by Ar and Akgul [17]. The set contains eight shoulder and knee exercise movements performed six times by five subjects, recorded with a Kinect camera. One major limitation of the HPTE data set is that only the video and depth streams from the Kinect sensor are provided. The data set does not provide the corresponding body joint positions or angles; although the joint information can be extracted from the video and depth frames, doing so is not straightforward and requires implementing an image processing method. The EmoPain data set [4] was designed with an emphasis on studying pain-related emotions in physical rehabilitation, and contains high-resolution face videos, audio files, full-body joint motions, and electromyographic (EMG) signals from back muscles. A group of 22 patients and 28 healthy control subjects performed seven exercises typically undertaken by patients with chronic lower back pain. Another data set, presented in the work of Nishiwaki et al. [18], is restricted to three lower-limb exercises performed by nine subjects, with the activity of four leg muscles recorded by EMG electrodes. In addition, several related data sets focus on physical activity monitoring (e.g., with wearable heart rate monitors and inertial measurement units [19]), and are typically applied for recognizing or classifying the type of activity from the collected data.

The UI-PRMD set presented in this work includes 10 movements that are commonly completed by patients in physical therapy programs. A sample of 10 healthy individuals repeated each movement 10 times in front of two motion capture systems: a Vicon optical tracker and a Kinect camera. The movement data were collected in the Integrated Sports Medicine Movement Analysis Laboratory (ISMMAL) in the Department of Movement Sciences at the University of Idaho. The movement data have been categorized, organized, and posted on a dedicated web site for free public access. Publicly posting the UI-PRMD set may allow it to serve as a benchmark for comparing future research in physical therapy, and may help streamline the process of establishing consistent metrics for evaluating patient progress in rehabilitation.

The presented data set does not consider how a particular type or level of injury would affect the selected movements. As stated earlier, the goal of the research at this stage is to employ the data for mathematical modeling of rehabilitation movements in general, and for assessing the deviation of motion trajectories from the derived movement models.

The organization and format of the data, as well as the movement types and file nomenclature, are presented in Section 2. Section 3 describes the methods for recording the data, and provides information on the order of the joint positions and angular displacements for the two sensory systems. Section 4 presents concluding remarks on the contribution and limitations of the data set.

2. Data Description

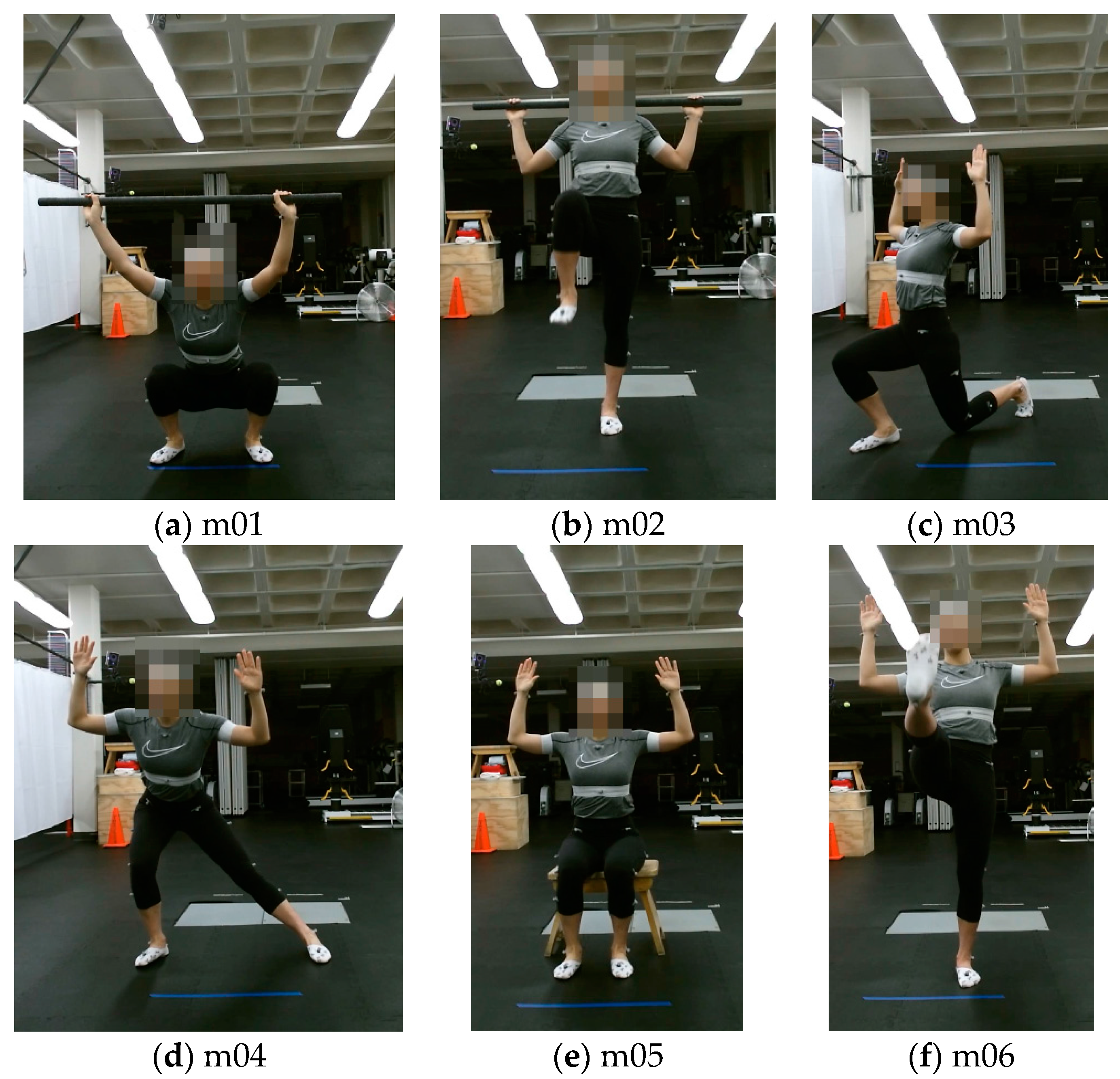

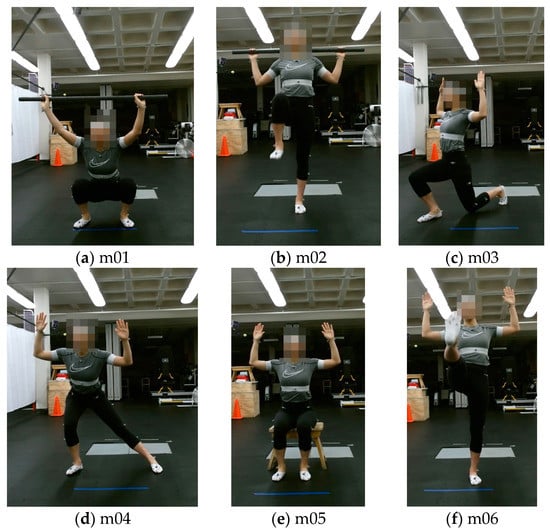

The UI-PRMD data set consists of joint angle and position measurements of 10 subjects performing movements that are common in physical rehabilitation exercises. We selected 10 different movements, which are listed and described in Table 1. Examples of the 10 movements are displayed in Figure 1. During the data collection, each movement was first demonstrated to a subject by one of the authors of the study, after which the subject was asked to perform multiple repetitions of the movement. Each subject performed 10 repetitions of each of the 10 movements. The subjects were not asked to hold the body posture for a period of time at the end of the repetitions.

Table 1.

Movement order and a brief description.

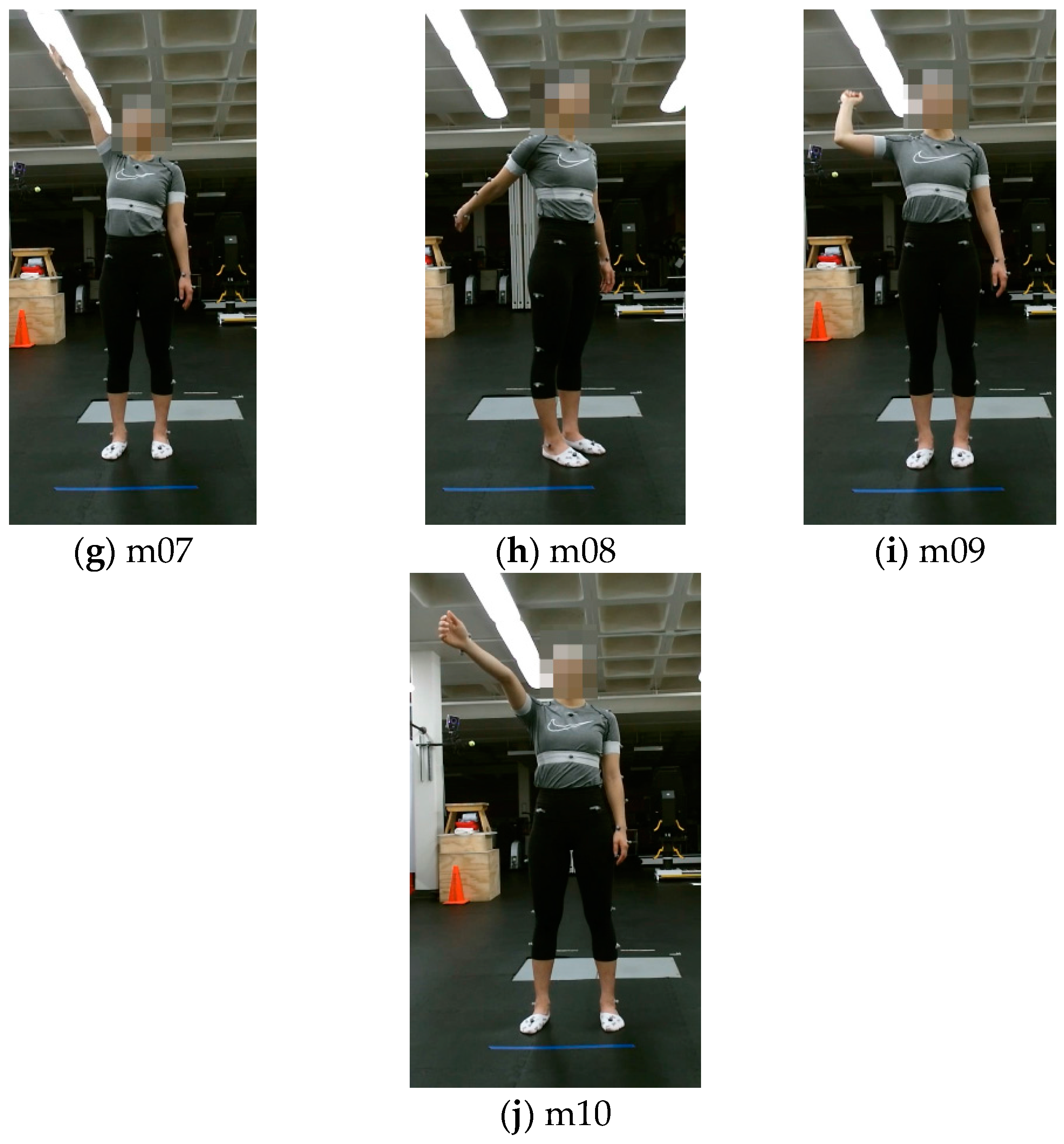

Figure 1.

Examples of the 10 movements: (a) Deep squat (m01); (b) Hurdle step (m02); (c) Inline lunge (m03); (d) Side lunge (m04); (e) Sit to stand (m05); (f) Standing active straight leg raise (m06); (g) Standing shoulder abduction (m07); (h) Standing shoulder extension (m08); (i) Standing shoulder internal-external rotation (m09); (j) Standing shoulder scaption (m10).

One motivation for selecting these movements is the body of work in the literature that, similarly to our research goal, dealt with modeling and evaluation of rehabilitation exercises. For instance, Lin and Kulic [20] employed a data set consisting of deep squat, sit to stand, knee flexion, hip flexion, and straight leg raise movements to develop a machine learning method for automated segmentation of the repetitions in each exercise. Komatireddy et al. [21] proposed an approach for evaluating the consistency of completing the following physical therapy exercises: deep squat, inline lunge, sitting knee extension, and standing knee extension. Similar movements were employed in other related research in the published literature [17,18]. An additional motivation is that these movements are commonly used by clinicians as part of rehabilitation programs or physical examinations in numerous situations, such as post-surgery recovery, upper body conditions (e.g., rotator cuff tendinopathy), and lower body conditions (e.g., patellar tendinopathy) [22,23]. On the other hand, the choice of movements in the presented data set was not intended to address rehabilitation for specific types of medical or musculoskeletal conditions. Furthermore, the subjects’ performance was not expected to correspond to a perfect movement, e.g., from a sport perspective. Rather, the goal was to collect motion trajectories performed in a consistent manner by the group of healthy subjects, and to utilize the data for mathematical modeling and analysis of the movements.

The nomenclature of the files in the data set includes the following information:

• Movement number _ subject number _ positions/angles.

For example, the data instance ‘m04_s06_positions’ pertains to the 4th movement in Table 1 (i.e., side lunge) performed by the 6th subject, and it consists of the Cartesian position coordinates of the body joints, expressed in millimeters. Similarly, ‘m08_s02_angles’ corresponds to the recorded angular displacements for the second subject while performing the standing shoulder extension movement, expressed in degrees. The joint order and further description of the position and angular measurements are provided in the next section, which is dedicated to the description of the data recording method.

The data are provided as ASCII text (txt) files, with a comma delimiter separating the data values.
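As a minimal sketch of reading one of these files, the Python functions below parse comma-delimited text into a list of frames using only the standard library. Beyond what the text states, they assume that each line holds one time frame, that there is no header row, and that every line has the same number of values; the names `parse_ui_prmd_lines` and `load_ui_prmd_file` are hypothetical.

```python
import csv
from pathlib import Path
from typing import Iterable, List


def parse_ui_prmd_lines(lines: Iterable[str]) -> List[List[float]]:
    """Parse comma-delimited text into frames (rows) of float values.

    Assumes one time frame per row and no header line; blank lines are skipped.
    """
    frames: List[List[float]] = []
    for row in csv.reader(lines):
        cells = [cell.strip() for cell in row if cell.strip()]
        if cells:
            frames.append([float(cell) for cell in cells])
    # Every frame should carry the same number of joint values.
    widths = {len(frame) for frame in frames}
    if len(widths) > 1:
        raise ValueError(f"inconsistent row widths: {sorted(widths)}")
    return frames


def load_ui_prmd_file(path: str) -> List[List[float]]:
    """Read one UI-PRMD text file from disk (row layout assumed as above)."""
    with Path(path).open(newline="") as fh:
        return parse_ui_prmd_lines(fh)
```

If NumPy is available, `numpy.loadtxt(path, delimiter=",")` would be a shorter alternative under the same assumptions.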

The data are organized into two folders, ‘Vicon’ and ‘Kinect’, each containing the measurements acquired by the respective sensory system. Each folder contains two subfolders, ‘Positions’ and ‘Angles’, which hold the files with the respective measurements.
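Under this folder layout, a helper that builds the path of a full (unsegmented) recording could look like the sketch below. The root directory name, the two-digit zero padding (inferred from names such as ‘m04_s06_positions’), and the `.txt` extension are assumptions, and `movement_file_path` is a hypothetical name.

```python
from pathlib import Path

SYSTEMS = ("Vicon", "Kinect")
# Maps the data type used in file names to its (assumed) subfolder name.
KINDS = {"positions": "Positions", "angles": "Angles"}


def movement_file_path(root: str, system: str, movement: int, subject: int,
                       kind: str) -> Path:
    """Build <root>/<system>/<Positions|Angles>/mXX_sYY_<kind>.txt (assumed layout)."""
    if system not in SYSTEMS:
        raise ValueError(f"system must be one of {SYSTEMS}")
    if kind not in KINDS:
        raise ValueError(f"kind must be one of {tuple(KINDS)}")
    if not (1 <= movement <= 10 and 1 <= subject <= 10):
        raise ValueError("movement and subject numbers run from 1 to 10")
    name = f"m{movement:02d}_s{subject:02d}_{kind}.txt"
    return Path(root) / system / KINDS[kind] / name
```

Because some Vicon files are missing from the data set (see the end of this section), a caller should check `path.exists()` before loading.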

Further, because each movement consists of 10 episodes, or repetitions of the same movement, the data are also provided in a segmented form, where each file comprises the measurements for one episode of one of the movements. The corresponding data are provided in the ‘Segmented Movements’ folder. The following file nomenclature is used for the segmented movements:

• Movement number _ subject number _ episode number _ positions/angles.

For example, the file ‘m04_s06_e10_angles’ contains the angular joint measurements for the 10th episode of the 4th movement performed by the 6th subject.

In addition, the data set provides examples of the movements performed in an incorrect, or non-optimal, manner. In Table 1, the rightmost column provides explanations of non-optimal performance for each movement. For instance, incorrect ways to perform the deep squat movement can include: upper torso not being kept vertical during the squat, knees not being aligned and kept parallel, noticeable or excessive trunk flexion, or noted loss of balance, among others. The rationale for the inclusion of non-optimal performance is that the correctly performed movements are associated with examples of movements that are demonstrated to a patient by a rehabilitation professional, and therefore they can be utilized to develop a mathematical model of the movement. In contrast, patients with musculoskeletal injury or constraints are assumed to be unable to, at least initially, perform the exercise movement in a correct or optimal manner that is generally accepted as necessary for efficient movement to reduce injury risk associated with physical activity [24]. Consequently, the consistency of the incorrectly performed movements can be evaluated in relation to the derived mathematical model of the correct movements, and a performance score can be communicated to the patient by an automated system for movement analysis. In other words, the non-optimal portion of the data can serve as a testing set, and it can be used for validation of the formulated mathematical models for the correct portion of the movements.

In creating the set of incorrect movements, the subjects performed 10 episodes of each of the 10 movements in an arbitrary suboptimal manner. Note that the subjects were asked neither to simulate a patient with a specific injury nor to perform the incorrect exercises at a certain level of a specific injury. The goal was to execute a set of non-optimal movements that represent deviations from the correctly executed movements. This portion of the data is stored in a folder named ‘Incorrect Movements’. Analogously to the described taxonomy for the correct movements, the data are classified based on the sensory system used (Vicon or Kinect), and on whether the movements are available in their entirety or segmented into 10 episodes. The file nomenclature for the incorrect movements follows the same order as for the correct movements, and in addition, all file names end with the suffix ‘_inc’, denoting ‘incorrect’. For instance, the file ‘m05_s04_e03_angles_inc’ corresponds to the third incorrect episode of the sit to stand movement performed by the fourth subject. One of our next goals is to manually label the incorrect movements based on the extent of inconsistency in performing the exercises.
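The naming patterns described in this section (full recordings, segmented episodes, and the ‘_inc’ suffix) can be parsed with one regular expression. The sketch below assumes that movement, subject, and episode numbers are always two digits, as in every example given; the `.txt` extension is treated as optional, and `parse_recording_name` and `RecordingName` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches e.g. 'm04_s06_positions', 'm04_s06_e10_angles', 'm05_s04_e03_angles_inc'.
_NAME = re.compile(
    r"^m(?P<movement>\d{2})_s(?P<subject>\d{2})"
    r"(?:_e(?P<episode>\d{2}))?"
    r"_(?P<kind>positions|angles)"
    r"(?P<inc>_inc)?(?:\.txt)?$"
)


@dataclass(frozen=True)
class RecordingName:
    movement: int
    subject: int
    episode: Optional[int]  # None for full (unsegmented) recordings
    kind: str               # 'positions' or 'angles'
    incorrect: bool         # True when the name carries the '_inc' suffix


def parse_recording_name(name: str) -> RecordingName:
    match = _NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized UI-PRMD file name: {name!r}")
    episode = match.group("episode")
    return RecordingName(
        movement=int(match.group("movement")),
        subject=int(match.group("subject")),
        episode=int(episode) if episode is not None else None,
        kind=match.group("kind"),
        incorrect=match.group("inc") is not None,
    )
```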

The Vicon files ‘m03_s03_positions’ and ‘m03_s03_angles’, related to the inline lunge movement performed by the third subject, are missing from the data set because the marker measurements were absent during data collection. The corresponding data recorded with the Kinect sensor for the same movement are available in the data set.

3. Methods

The data were collected using two types of sensory systems: a Vicon optical tracking system, and a Microsoft Kinect camera.

The Vicon optical tracker [25] is a highly accurate system designed for human motion capture and analysis. The system employs eight high-speed, high-resolution cameras to track a set of retroreflective markers. With the markers attached at strategic locations on a human body, the system calculates the marker positions from the data acquired by the cameras, and uses this information to retrieve the orientations of the individual body parts.

The Kinect sensor [8] consists of a color camera and an infrared camera, which are used to simultaneously acquire image and range data from the environment. The sensor was initially designed as a natural user interface for gaming on Microsoft’s Xbox console; however, due to its popularity among researchers, hobbyists, and industry, Microsoft offered it as a stand-alone unit for Windows operating systems and released a software development kit (SDK). The SDK provides libraries for accessing the raw RGB and depth streams, miscellaneous data processing code, and, most importantly for this study, a skeletal tracker with real-time motion capture capability. In skeletal mode, the Kinect sensor can track the movements of up to 6 people and 25 skeletal joints per person.

The movements performed by the study participants were acquired simultaneously with the Vicon and Kinect systems. The software programs Nexus 2 and Brekel were employed for recording the movements with the Vicon and Kinect systems, respectively. The frame rate of the motion capture was 100 Hz for Vicon and 30 Hz for Kinect. For both systems, the Cartesian positions of the joints are expressed in millimeters, and the joint angles are expressed in degrees. The values in the data set are presented as recorded. The only preprocessing operation corrected large jumps in the Kinect angle measurements: because the angles were limited to the (−180°, +180°) range, a value that exceeded one limit continued from the opposite limit, producing an artificial jump of nearly 360°. No other data processing was performed.
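The wrap-around correction described above is a form of phase unwrapping in degrees. The authors do not specify their exact procedure, so the following is only a sketch of the standard technique: whenever two consecutive samples differ by more than 180°, all later samples are shifted by ±360°. `unwrap_degrees` is a hypothetical name, and it operates on a single angle channel (one column of an angles file).

```python
from typing import List, Sequence


def unwrap_degrees(angles: Sequence[float], period: float = 360.0) -> List[float]:
    """Remove artificial jumps caused by wrapping at the +/-180 degree limits.

    When consecutive samples differ by more than half a period, the running
    offset changes by one period, so every later sample is shifted by it.
    """
    if not angles:
        return []
    half = period / 2.0
    unwrapped = [float(angles[0])]
    offset = 0.0
    for prev, curr in zip(angles, angles[1:]):
        delta = curr - prev
        if delta > half:      # e.g. -179 -> 178: wrapped past -180
            offset -= period
        elif delta < -half:   # e.g. 179 -> -178: wrapped past +180
            offset += period
        unwrapped.append(curr + offset)
    return unwrapped
```

For example, the sequence 170°, 179°, −178°, −170° becomes 170°, 179°, 182°, 190°. NumPy provides the same operation through `numpy.unwrap`, which in recent versions accepts `period=360`.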

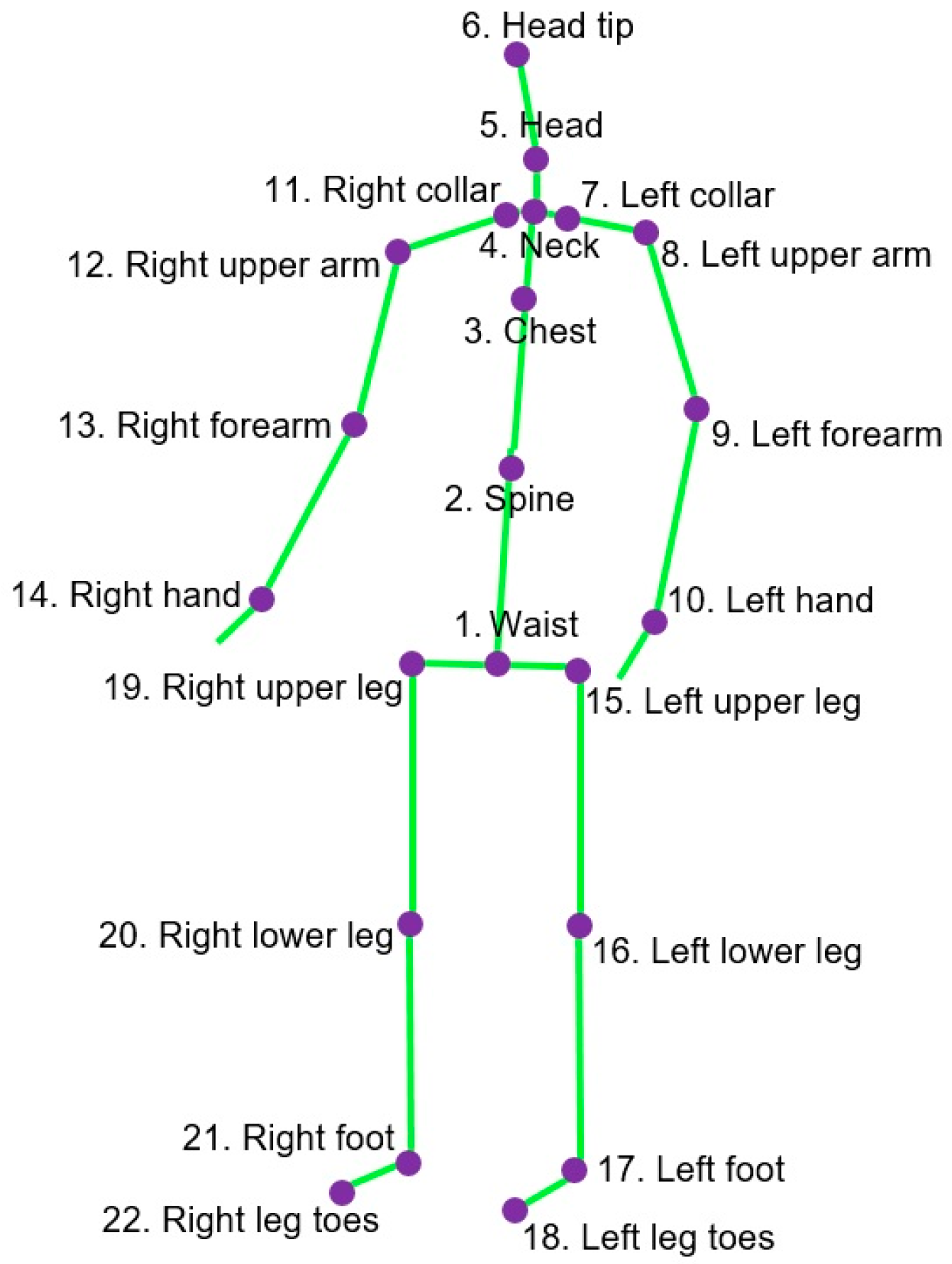

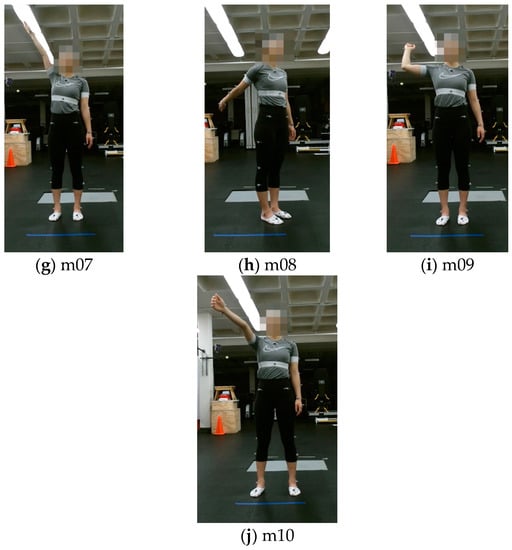

The joints in the skeletal model recorded with the Kinect sensor are shown in Figure 2. The data include motion measurements for 22 joints. The positions of the fingers are not included because they are not relevant for assessing correct performance of the movements in the study. The order of the joint measurements in the data set is displayed in the figure: the first three measurements pertain to the waist joint, the next three values to the spine, and so on. The values for the waist joint are given in absolute coordinates with respect to the coordinate frame of the Kinect sensor, and the values for the other 21 joints are given in relative coordinates with respect to the parent joint in the skeletal model. For instance, the position and orientation of the left forearm are given relative to the position and orientation of the left upper arm.

Figure 2.

Joints in the skeletal model of Kinect-recorded data.
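Given the ordering described above, each row of a Kinect file can be split into 22 consecutive triplets, with the waist first. The sketch below assumes one frame per row, and the function name is hypothetical. The same split applies to the Vicon angle files, whose 117 values per frame (see Figure 4) correspond to 39 triplets.

```python
from typing import List, Sequence

KINECT_JOINT_COUNT = 22  # joints per frame in the Kinect files, per the text


def frame_to_joints(frame: Sequence[float],
                    n_joints: int = KINECT_JOINT_COUNT) -> List[List[float]]:
    """Split one flat frame into per-joint triplets.

    Triplet 0 is the waist (absolute, Kinect frame); the remaining triplets
    follow the joint order of Figure 2 and are relative to each parent joint.
    """
    if len(frame) != 3 * n_joints:
        raise ValueError(f"expected {3 * n_joints} values, got {len(frame)}")
    return [list(frame[3 * j: 3 * j + 3]) for j in range(n_joints)]
```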

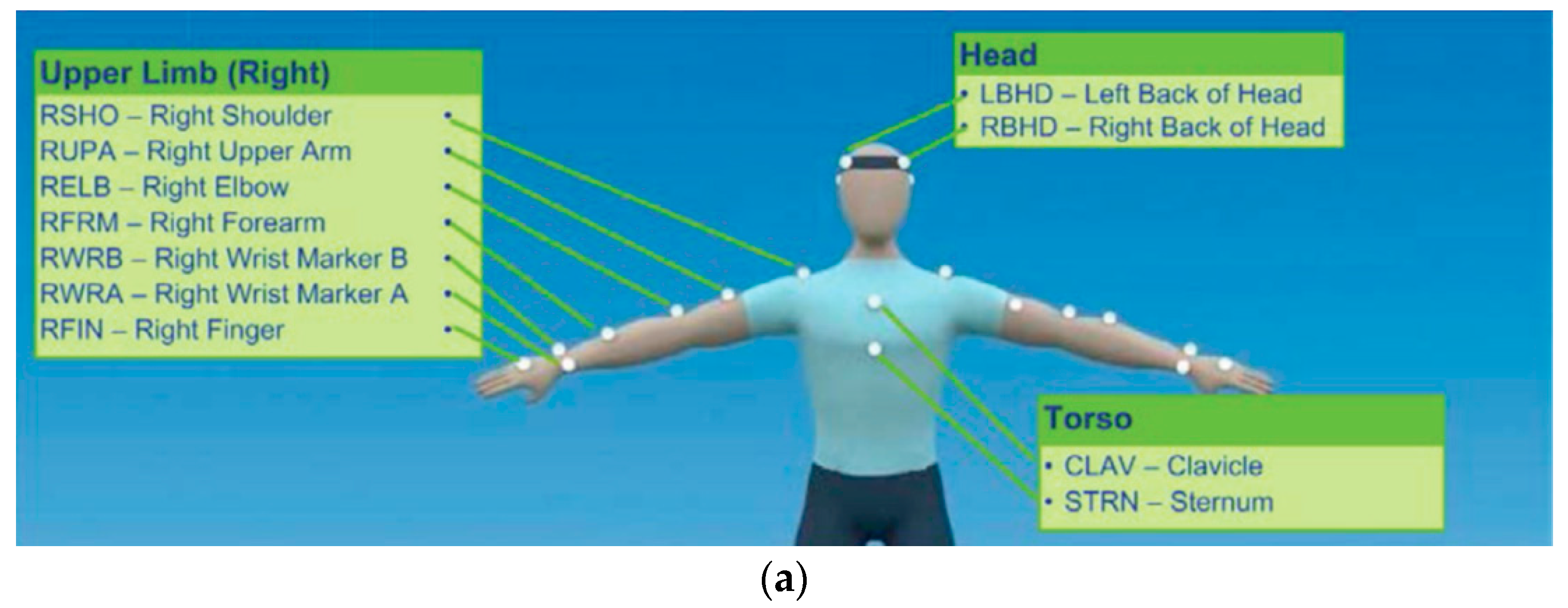

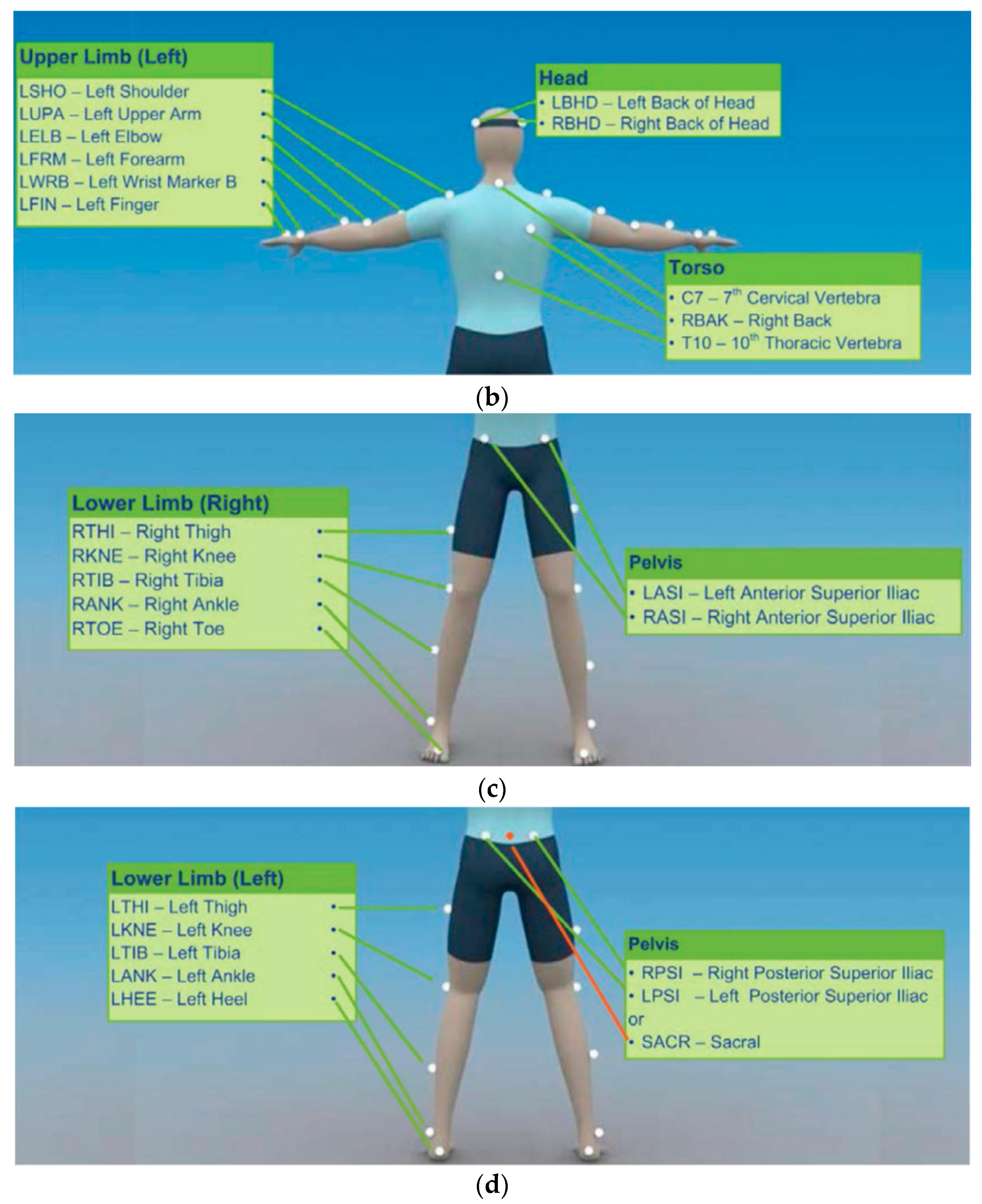

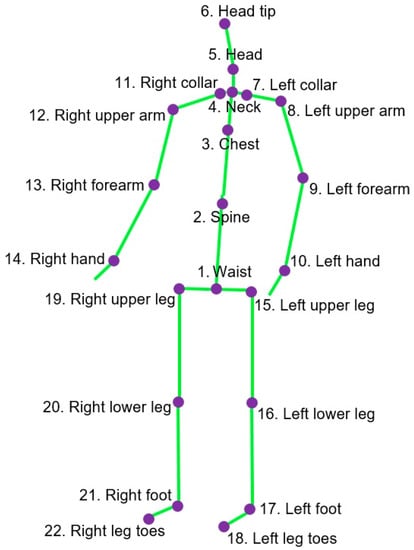

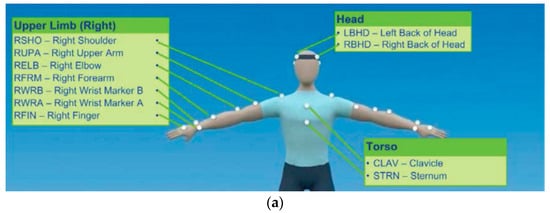

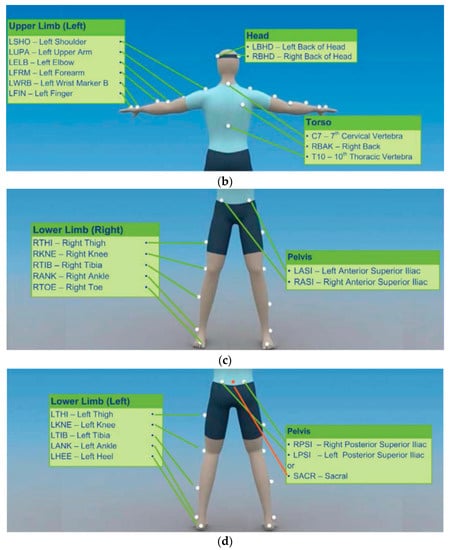

For tracking and sensing the demonstrated movements with the Vicon system, a total of 39 reflective markers were attached to the subjects’ bodies. The marker locations [26] are shown in Figure 3.

Figure 3.

Locations on the body for attaching the Vicon markers. (a) Front view of the upper body; (b) Back view of the upper body; (c) Front view of the lower body; (d) Back view of the lower body. The pictures are taken from [26]. Copyright©: 2016 Vicon Motion Systems Limited.

The order of measurements for the Vicon and Kinect systems is presented in Table 2. For both systems, the joints whose measurements are absolute are given with respect to the coordinate system of the sensory system, and are indicated in parentheses in the table. For the remaining joints, the measurements are relative and are given with respect to the parent joint in the skeletal model. For both sensors, the angles of all joints are represented by a YXZ triplet of Euler angles.

Table 2.

Order of positions and angles in the data set for the Vicon and Kinect systems.

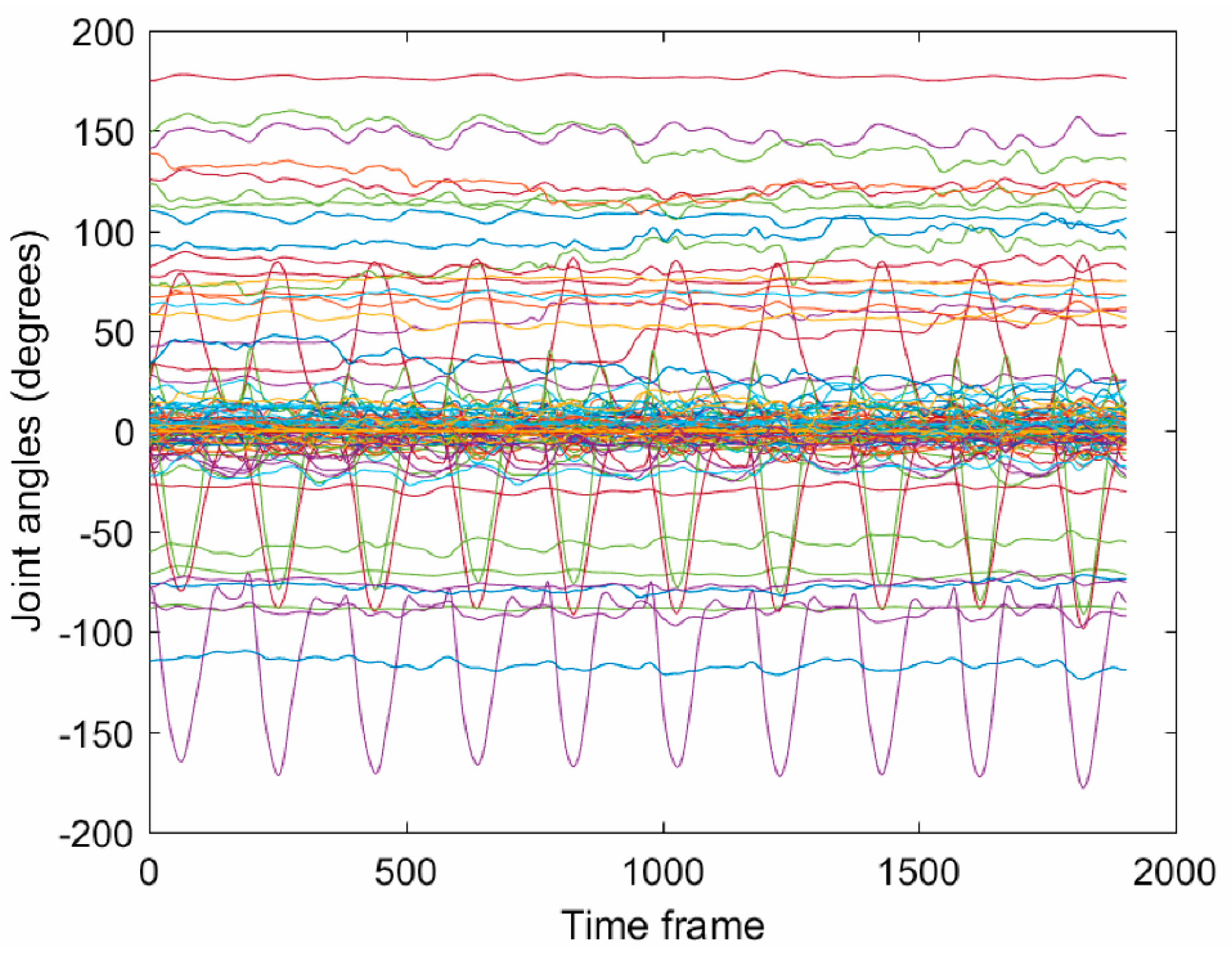

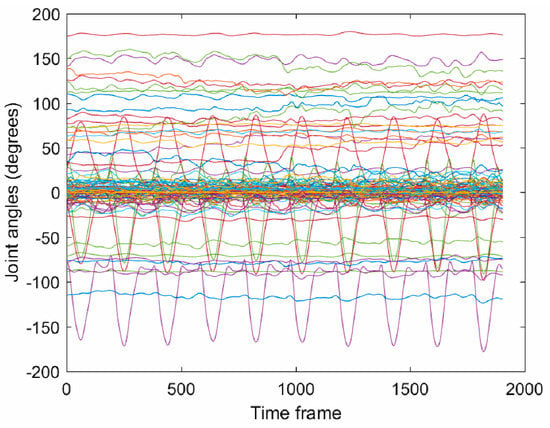
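To turn relative measurements into absolute ones, each joint’s pose must be composed with that of its parent. The sketch below assumes that the YXZ triplet denotes an intrinsic rotation sequence $R = R_y(\alpha)\,R_x(\beta)\,R_z(\gamma)$ with the angles stored in Y, X, Z order, and that a child’s relative position is expressed in its parent’s local frame. The text does not pin down either convention, so both should be verified against the data before use; all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]


def _matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def _matvec(a: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def rotation_yxz(y_deg: float, x_deg: float, z_deg: float) -> Matrix:
    """R = Ry(y) @ Rx(x) @ Rz(z): intrinsic Y, then X, then Z (assumed order)."""
    cy, sy = math.cos(math.radians(y_deg)), math.sin(math.radians(y_deg))
    cx, sx = math.cos(math.radians(x_deg)), math.sin(math.radians(x_deg))
    cz, sz = math.cos(math.radians(z_deg)), math.sin(math.radians(z_deg))
    ry = [[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]]
    rx = [[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]]
    rz = [[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]]
    return _matmul(_matmul(ry, rx), rz)


def child_pose(parent_pos: Sequence[float], parent_rot: Matrix,
               rel_pos: Sequence[float],
               rel_angles_yxz: Sequence[float]) -> Tuple[Vector, Matrix]:
    """Compose a relative joint pose with its parent's absolute pose.

    Assumes the relative offset is expressed in the parent's local frame.
    Returns the child's absolute position and absolute rotation matrix.
    """
    offset = _matvec(parent_rot, rel_pos)
    abs_pos = [p + o for p, o in zip(parent_pos, offset)]
    abs_rot = _matmul(parent_rot, rotation_yxz(*rel_angles_yxz))
    return abs_pos, abs_rot
```

Applying `child_pose` from the absolute root joint down the skeletal tree would yield absolute poses for all joints; the parent of each joint would have to be read from the skeletal model in Figure 2 or Table 2.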

A sample of the Vicon data from the ‘m06_s01_angles’ file, related to the standing active straight leg raise movement, is shown in Figure 4. All angular coordinates for the 39 joints are given in degrees. Only a few joints, those of the legs, show significant displacements, whereas the other body joints remain almost constant.

Figure 4.

Recorded joint angles with the Vicon motion capture system for one subject showing the 10 episodes of the standing active straight leg raise movement. The figure displays the angular displacements corresponding to the 117-dimensional data for approximately 1900 time frames (i.e., 19 s).

The demographic information of the ten subjects who participated in the data collection is provided in Table 3. The average age of the subjects was 29.3 years, with a standard deviation of 5.85 years. As stated before, all subjects were in good health. The subjects were either graduate students or faculty at the University of Idaho, as indicated in the table.

Table 3.

Demographic information for the subjects.

Although the dominant side of each subject is listed in Table 3, some subjects did not consistently perform the exercises with their dominant hand or leg. Table 4 lists the hand or leg with which each subject performed each movement, where R and L stand for right and left, respectively. The deep squat and sit to stand movements are not listed in the table because they do not depend on the subjects’ dominant side.

Table 4.

Hand or leg used by each subject in performing the 10 movements.

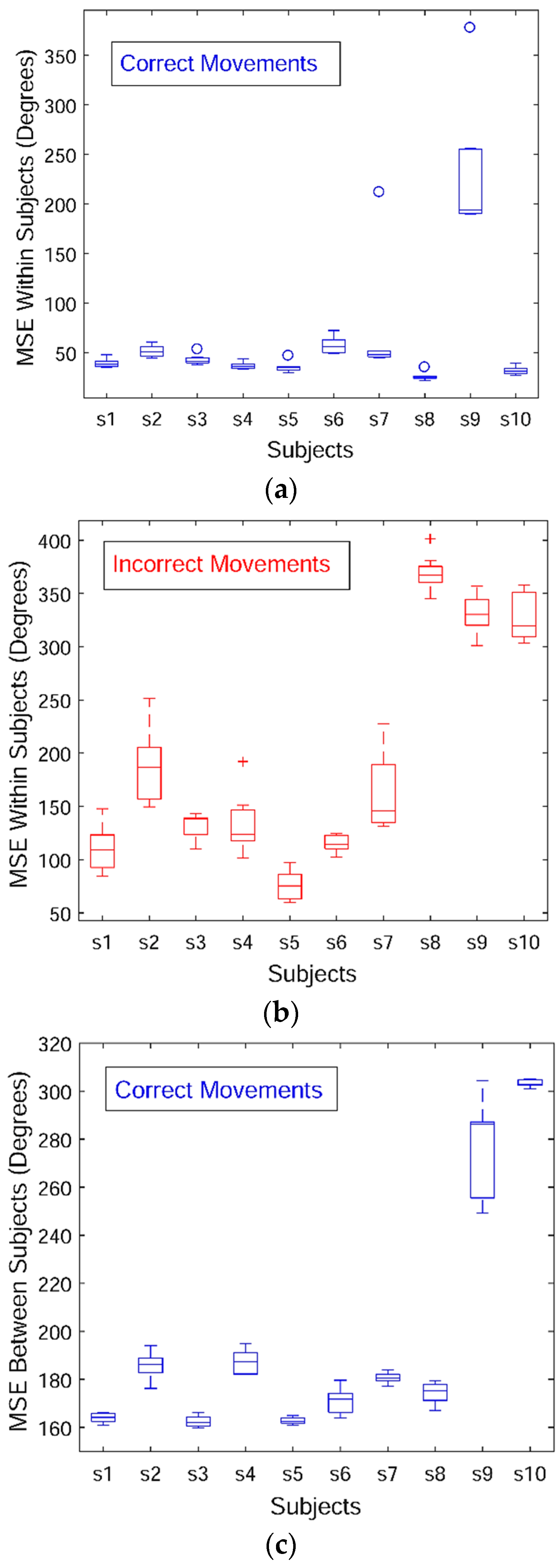

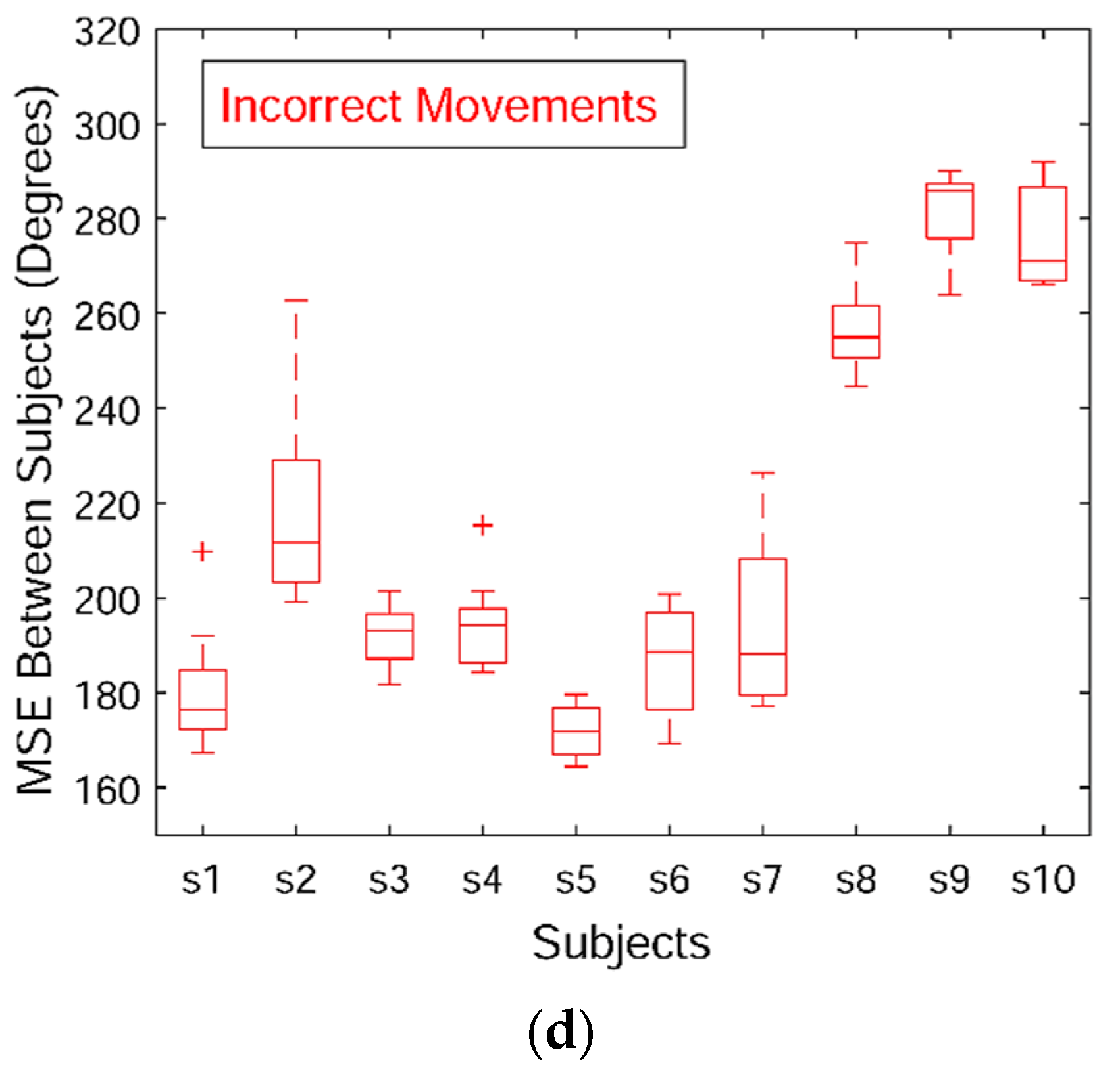

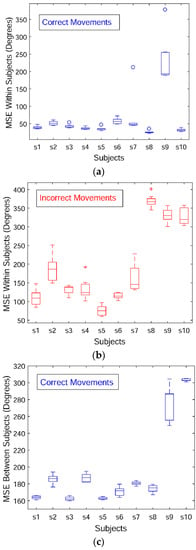

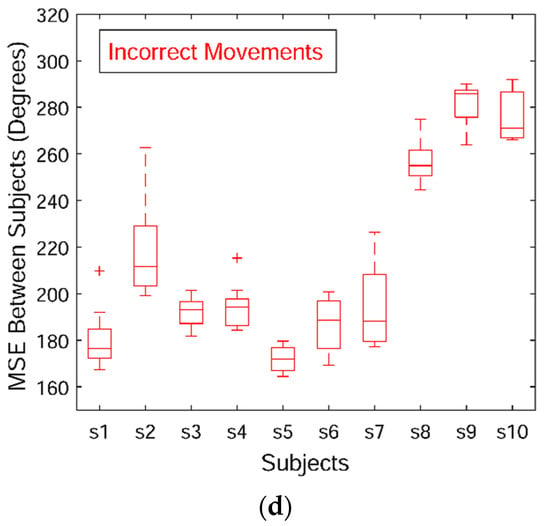

Next, the variability across the movement sequences in the data set is briefly discussed, using the skeletal angular data acquired with the Vicon system for the deep squat exercise (movement m01). Mean-square deviation (also known as mean-square error, or MSE) is selected as the statistic for comparing the variability in performance within and between subjects. For calculating the MSE, the movement episodes were first scaled via cubic interpolation to a common length equal to the average number of time steps over all 100 episodes of m01, which was 240. The MSE was then calculated and normalized by dividing the total deviation by the number of time steps (i.e., 240) and the number of dimensions (117 for the Vicon system). The results are shown as box plots in Figure 5. Figure 5a presents the deviation of the correct movements within subjects. Most of the subjects were consistent in their performance, except for Subject 9, who completed the repetitions of the deep squat exercise in a less uniform manner. Figure 5b displays the within-subject deviation of the incorrect sequences with respect to the correct movements performed by the same subject; here, the last three subjects produced larger variability between their correct and incorrect movements. Analogously, Figure 5c depicts the variation between subjects, where the deviation of each correct movement is calculated with respect to all correct movements collected from all 10 subjects. Besides Subject 9, the movements of Subject 10 also show a greater disparity relative to the other subjects, although this subject performed the repetitions consistently according to Figure 5a. Figure 5d shows the deviation of each incorrect movement with respect to all correct movements.

Figure 5.

Mean-square deviation for the angular displacements of the deep squat movement across the 10 subjects. (a) Within-subject variance for the correct movements; (b) Within-subject variance for the incorrect movements; (c) Between-subject variance for the correct movements; (d) Between-subject variance for the incorrect movements.
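The normalized deviation used for Figure 5 can be written as

$$\mathrm{MSE}(X, Y) = \frac{1}{T\,D}\sum_{t=1}^{T}\sum_{d=1}^{D}\left(x_{t,d} - y_{t,d}\right)^2,$$

with $T = 240$ time steps and $D = 117$ dimensions for the Vicon deep squat data. The Python sketch below resamples an episode to a common length and then evaluates this quantity. The text says only "cubic interpolation", so the Catmull-Rom cubic used here is a stand-in that may differ slightly from the authors’ interpolant; the function names are hypothetical, and episodes are assumed to be stored as T × D lists of frames.

```python
import math
from typing import List, Sequence

Frames = Sequence[Sequence[float]]


def resample_cubic(frames: Frames, length: int) -> List[List[float]]:
    """Resample a (T x D) sequence to `length` frames with Catmull-Rom cubics."""
    n = len(frames)
    if n < 2 or length < 2:
        raise ValueError("need at least two input and two output frames")
    dims = len(frames[0])
    out: List[List[float]] = []
    for i in range(length):
        u = i * (n - 1) / (length - 1)      # position on the original time axis
        k = min(int(math.floor(u)), n - 2)  # left sample of the active segment
        t = u - k                           # fractional offset within it
        i0, i1, i2, i3 = max(k - 1, 0), k, k + 1, min(k + 2, n - 1)
        row = []
        for d in range(dims):
            p0, p1, p2, p3 = (frames[i0][d], frames[i1][d],
                              frames[i2][d], frames[i3][d])
            row.append(0.5 * (2 * p1 + (p2 - p0) * t
                              + (2 * p0 - 5 * p1 + 4 * p2 - p3) * t ** 2
                              + (3 * p1 - p0 - 3 * p2 + p3) * t ** 3))
        out.append(row)
    return out


def normalized_mse(a: Frames, b: Frames) -> float:
    """Total squared deviation divided by (time steps x dimensions)."""
    if len(a) != len(b) or len(a[0]) != len(b[0]):
        raise ValueError("sequences must have the same shape")
    total = sum((x - y) ** 2 for fa, fb in zip(a, b) for x, y in zip(fa, fb))
    return total / (len(a) * len(a[0]))
```

For m01, each episode would first be passed through `resample_cubic(episode, 240)`, and pairs of resampled episodes would then be compared with `normalized_mse`.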

Table 5 contains the means and standard deviations of the between-subject MSE of the angular Vicon measurements for all 10 movements. For each movement, two rows are provided, corresponding to the correct movements (denoted with a ‘-c’ suffix) and the incorrect movements (denoted with an ‘-i’ suffix). Subjects who used a different hand or leg for a particular movement than the majority of the other subjects (as indicated in Table 4) were excluded and are marked NA in the table, because their movement data deviate significantly from those of the other subjects. Our intent is to provide preliminary statistical information on the variability of the movement data; as part of our future work, we will investigate other metrics for characterizing the consistency of the subjects’ performance.

Table 5.

Mean-square deviation related to between-subject measurements of the correct and incorrect movements. The values represent the mean and the standard deviation (in parentheses) of the MSE per subject. The rows with the values for the correct sequences have a ‘-c’ suffix, and the corresponding rows for the incorrect movements have an ‘-i’ suffix. In addition, the rows for the incorrect movements are shaded. NA values indicate subjects who used a different hand or leg for an exercise than the rest of the subjects.
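Table 5 reports, for each subject, the mean and standard deviation of per-episode MSE values. Assuming those per-episode values have already been computed (for example, as the average normalized MSE of an episode against all correct episodes of all subjects, which is our reading of the procedure rather than a stated detail), the summary step could be sketched as follows. Whether the sample or population standard deviation was used is not stated; the sketch uses the sample version (`statistics.stdev`), and `summarize_per_subject` is a hypothetical name.

```python
import statistics
from typing import Dict, Optional, Sequence, Tuple


def summarize_per_subject(
    episode_mse: Dict[int, Sequence[float]],
    excluded: Sequence[int] = (),
) -> Dict[int, Optional[Tuple[float, float]]]:
    """Mean and sample standard deviation of per-episode MSE for each subject.

    Subjects listed in `excluded` (e.g., those who used the other hand or leg)
    are reported as None, mirroring the NA entries of Table 5. Each included
    subject needs at least two episode values for the standard deviation.
    """
    summary: Dict[int, Optional[Tuple[float, float]]] = {}
    for subject, values in sorted(episode_mse.items()):
        if subject in excluded:
            summary[subject] = None
            continue
        summary[subject] = (statistics.mean(values), statistics.stdev(values))
    return summary
```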

The research project related to the data collection was approved by the Institutional Review Board at the University of Idaho on 26 April 2017 under the identification code IRB 16-124. Written informed consent for participation in the research study, using a form approved by the board, was obtained from all participants in the study.

4. Conclusions

In summary, the contribution of this paper is the presented data set of movements related to physical therapy exercises. The set of 10 exercises performed by 10 healthy subjects and recorded with two motion capture systems is described. Instances of the movements performed in an incorrect manner are also provided, and can potentially be utilized for evaluation of data modeling methods. The presented data set has several limitations. In particular, all the movements were performed by healthy subjects; ideally, at least the incorrect movements would have been performed by patients. In addition, the selected movements are general, and are not associated with a particular condition or group of patients. Finally, for the purpose of modeling the movement data with machine learning methods, the data set is small; a larger data set with more subjects and movement types would be preferable.

Acknowledgments

This work was supported by the Center for Modeling Complex Interactions through NIH Award #P20GM104420 with additional support from the University of Idaho. The authors would like to acknowledge and thank Youngmin Chun for his help with collecting the data, and Christian Williams for his help with organizing the data and segmenting the movements. The authors would also like to thank the volunteers who performed the movements for the data set.

Author Contributions

Russell Baker and David Paul conceived and designed the movements for the data set. Hyung-pil Jun and Russell Baker collected the data. Aleksandar Vakanski analyzed and processed the data for public access. Aleksandar Vakanski wrote the manuscript. David Paul, Russell Baker, and Hyung-pil Jun participated in the writing, reviewing, and approval of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Machlin, S.R.; Chevan, J.; Yu, W.W.; Zodet, M.W. Determinants of utilization and expenditures for episodes of ambulatory physical therapy among adults. Phys. Ther. 2011, 91, 1018–1029.

- Hush, J.M.; Cameron, K.; Mackey, M. Patient satisfaction with musculoskeletal physical therapy care: A systematic review. Phys. Ther. 2011, 91, 25–36.

- Jack, K.; McLean, S.M.; Moffett, J.K.; Gardiner, E. Barriers to treatment adherence in physiotherapy outpatient clinics: A systematic review. Man. Ther. 2010, 15, 220–228.

- Aung, M.S.H.; Kaltwang, S.; Romera-Paredes, B.; Martinez, B.; Singh, A.; Cella, M.; Valstar, M.; Meng, H.; Kemp, A.; Shafizadeh, M.; et al. The automatic detection of chronic pain-related expression: Requirements, challenges, and the multimodal EmoPain dataset. IEEE Trans. Affect. Comput. 2016, 7, 435–451.

- Hsu, C.-J.; Meierbachtol, A.; George, S.Z.; Chmielewski, T.L. Fear of reinjury in athletes: Implications and rehabilitation. Phys. Ther. 2017, 9, 162–167.

- Maciejasz, P.; Eschweiler, J.; Gerlach-Hahn, K.; Jansen-Troy, A.; Leonhardt, S. A survey on robotic devices for upper limb rehabilitation. J. NeuroEng. Rehabil. 2014, 11, 3.

- Gauthier, L.V.; Kane, C.; Borstad, A.; Strah, N.; Uswatte, G.; Taub, E.; Morris, D.; Hall, A.; Arakelian, M.; Mark, V. Video game rehabilitation for outpatient stroke (VIGoROUS): Protocol for a multicenter comparative effectiveness trial of inhome gamified constraint-induced movement therapy for rehabilitation of chronic upper extremity hemiparesis. BMC Neurol. 2017, 17, 109.

- Kinect Sensor for Xbox One, Microsoft Devices. Available online: https://www.microsoft.com/en-us/store/d/kinect-sensor-for-xbox-one/91hq5578vksc (accessed on 16 October 2017).

- Vakanski, A.; Ferguson, J.M.; Lee, S. Mathematical modeling and evaluation of human motions in physical therapy using mixture density neural networks. Physiother. Rehabil. 2016, 1, 118.

- Vakanski, A.; Ferguson, J.M.; Lee, S. Metrics for performance evaluation of patient exercises during physical therapy. Int. J. Phys. Med. Rehabil. 2017, 5, 3.

- UTD-MHAD (University of Texas at Dallas Multimodal Human Action Dataset). Available online: http://www.utdallas.edu/~kehtar/UTD-MHAD.html (accessed on 10 August 2017).

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Han, F.; Reily, B.; Hoff, W.; Zhang, H. Space-time representation of people based on 3D skeletal data: A review. Comput. Vis. Image Underst. 2017, 158, 85–105.

- CMU Multi-Modal Activity Database. NSF EEEC-0540865. Available online: http://kitchen.cs.cmu.edu (accessed on 10 August 2017).

- Ofli, F.; Chaudhry, R.; Kurillo, G.; Vidal, R.; Bajcsy, R. Berkeley MHAD: A comprehensive multimodal human action database. In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Tampa, FL, USA, 15–17 January 2013.

- Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Mining actionlet ensemble for action recognition with depth camera. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Ar, I.; Akgul, Y.S. A computerized recognition system for home-based physiotherapy exercises using an RGBD camera. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 1160–1171.

- Nishiwaki, G.A.; Uragbe, Y.; Tanaka, K. EMG analysis of lower muscles in three different squat exercises. J. Jpn. Ther. Assoc. 2006, 9, 21–26.

- Reiss, A.; Stricker, D. Creating and benchmarking a new dataset for physical activity monitoring. In Proceedings of the 5th International Conference on Pervasive Technologies Related to Assistive Environments, Heraklion, Greece, 6–8 June 2012.

- Lin, J.F.-S.; Kulic, D. Online segmentation of human motions for automated rehabilitation exercise analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 168–180.

- Komatireddy, R.; Chokshi, A.; Basnett, J.; Casale, M.; Goble, D.; Shubert, T. Quality and quantity of rehabilitation exercises delivered by a 3-D motion controlled camera: A pilot study. Int. J. Phys. Med. Rehabil. 2016, 2, 214.

- Sahrmann, S. Diagnosis and Treatment of Movement Impairment Syndromes; Mosby: St. Louis, MO, USA, 2008.

- Ludewig, P.M.; Lawrence, R.L.; Braman, J.P. What’s in a name? Using movement system diagnoses versus pathoanatomic diagnoses. J. Orthop. Sports Phys. Ther. 2013, 43, 280–283.

- Cook, G. Movement: Functional Movement Systems: Screening, Assessment, and Corrective Strategies; On Target Publication: Aptos, CA, USA, 2010.

- Vicon Products, Vicon Devices. Available online: https://www.vicon.com/products (accessed on 16 October 2017).

- Plug-In Gait Reference Guide, Vicon Motion Systems; Vicon Industries Inc.: Hauppauge, New York, USA, 2016.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).