Abstract

Dementia and heart failure are growing global health issues, exacerbated by aging populations and disparities in care access. Diagnosing these conditions often requires advanced equipment or tests with limited availability. A reliable tool distinguishing between the two conditions is essential, enabling more accurate diagnoses and reducing misclassifications and inappropriate referrals. This study proposes a novel measurement, the optimized weighted objective distance (OWOD), a modified version of the weighted objective distance, for the classification of dementia and heart failure. The OWOD is designed to enhance model generalization through a data-driven approach. By enhancing objective class generalization, applying multi-feature distance normalization, and identifying the most significant features for classification—together with newly integrated blood biomarker features—the OWOD could strengthen the classification of dementia and heart failure. A combination of risk factors and proposed blood biomarkers (derived from 10,000 electronic health records at Chiang Rai Prachanukroh Hospital, Chiang Rai, Thailand), comprising 20 features, demonstrated the best OWOD classification performance. For model evaluation, the proposed OWOD-based classification method attained an accuracy of 95.45%, a precision of 96.14%, a recall of 94.70%, an F1-score of 95.42%, and an area under the receiver operating characteristic curve of 97.10%, surpassing the results obtained using other machine learning-based classification models (gradient boosting, decision tree, neural network, and support vector machine).

1. Introduction

The proportion of older adults is increasing in every country, possibly due to improvements in education, nutrition, and healthcare. As a result, a new life-course model has been introduced, encompassing early life (younger than 45 years), midlife (45–65 years), and later life (older than 65 years) [1]. Dementia and heart failure are two of the most commonly diagnosed chronic conditions in older adults, frequently co-occurring due to shared risk factors and interconnected biological mechanisms [2,3,4]. With increasing age, individuals are more likely to develop conditions such as high blood pressure, diabetes, high cholesterol, and obesity, all of which are known to increase the risk of heart disease and neurodegenerative diseases [5]. There is a clear physiological link between these two disorders. For example, heart failure can reduce blood flow to the brain, which may accelerate cognitive deterioration. Dementia is also associated with systemic inflammation and metabolic disturbances, which adversely affect cardiovascular function [4]. This two-way interaction highlights the importance of maintaining both cognitive and cardiovascular health in older adults. Considering these comorbidities together can support more accurate diagnosis, improved treatment outcomes, and a higher quality of life for older adults.

Dementia is a growing public concern, impacting the social and economic sectors in terms of medical and social care costs. The global societal cost of dementia was estimated at USD 1.3 trillion in 2019, with costs expected to surpass USD 2.8 trillion by 2030. Additionally, in 2019, the impact on families and caregivers was found to be significant, with an average of 5 h per day spent caring for individuals with dementia. Women were especially impacted; the majority of dementia patients who passed away were female, accounting for up to 65% of cases. Currently, there is no cure for dementia; the primary goal of care is early diagnosis, which is key to optimal management [6]. The increase in the older adult population has given rise to a greater number of dementia prevalence studies [7] that utilize digital healthcare with appropriate technology [8,9,10] and various features [11].

Heart failure is defined as a condition in which the heart has a reduced ability to pump or fill with blood or exhibits structural abnormalities that result in inadequate cardiac output. Heart failure has been described as a global pandemic, affecting approximately 64.3 million people worldwide as of 2017. Its prevalence is expected to rise, primarily because individuals live longer after diagnosis owing to lifesaving interventions and general improvements in life expectancy. The financial burden is also substantial: in the U.S. alone, the cost of heart failure was estimated at USD 30.7 billion in 2012, and projections suggest that costs could rise by 127% to USD 69.8 billion by 2030 [12]. Given the high prevalence and severe health consequences of heart failure, numerous studies have focused on identifying its risk factors and developing effective prevention strategies.

Biomarkers are widely used for dementia detection. These include cerebrospinal fluid biomarkers, blood-based biomarkers, neuroimaging biomarkers, and genetic biomarkers. Given the high cost of positron emission tomography/magnetic resonance imaging (PET/MRI), blood-based biomarkers offer a practical alternative for detecting dementia. They are also minimally invasive and widely accessible, making them suitable for large-scale and repeated assessments. Dementia and heart failure share several risk factors, including body weight, blood cholesterol levels, hypertension, serum lipids, and diabetes [5,6]. Leveraging minimally invasive and affordable methods enhances accessibility and equity, particularly in resource-limited settings. However, using such biomarkers from electronic health records (EHRs) remains challenging and requires comprehensive data integration and validation.

Digital healthcare technology is being rapidly implemented among older adults, as it improves monitoring, communication, and health data collection. Currently, healthcare knowledge-based systems, which support personalized care for older adults and their healthcare providers, are widely accessible through smart devices, such as smartphones and smart watches. In addition, innovations in healthcare and medical support are required to enhance personalized healthcare and increase life expectancy. Machine learning (ML) methods have emerged as powerful tools in medical research. In dementia studies, ML methods have been applied in both preclinical and clinical investigations. Examples include studies using various cognitive tests for dementia [13], a deep learning framework to identify dementia from DNA datasets [14], gradient boosting (GB) for dementia classification based on human genetics [15], analysis of the dementia-related gene APOE4 [16], ensemble classifiers for multi-class classification using the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset [17], and dementia classification based on PET/MRI [18,19,20], with most datasets derived from the ADNI. ML methods have also been used in the classification of heart failure, such as support vector machines (SVMs) [21,22,23], decision trees (DTs) [24], GB [25], extreme gradient boosting (XGB) [26], and deep learning, across datasets of varying types and sizes. However, ML approaches often require large datasets to perform effectively, and performance can degrade when data are limited. Furthermore, ML methods may struggle with interpretability, which is a key consideration in medical diagnostics. To address these limitations, it is essential to explore an alternative approach that could enhance classification performance while maintaining interpretability.

Distance measurement methods, such as the weighted objective distance (WOD), have been successfully applied in the classification of various diseases, including hypertension [27] and type 2 diabetes [28], which are risk factors for dementia and heart failure [1,29,30,31]. However, the WOD is limited by its reliance on predefined thresholds and fixed values tied to specific diseases, leading to model generalization issues. To address these limitations, this study introduces the optimized weighted objective distance (OWOD). The OWOD enhances the original WOD approach by incorporating an objective function that derives data representations directly from the dataset. It identifies the target values of both dementia and heart failure risk factors and calculates the WOD accordingly. Thus, this study proposes a binary method based on distance measurement for the classification of dementia and heart failure using blood biomarkers and established risk factors from EHRs. The OWOD is expected to strengthen the binary classification of dementia and heart failure by enhancing objective class generalization, applying multi-feature distance normalization, and identifying the most significant features for classification.

2. Literature Review

Numerous studies have investigated various aspects of dementia and heart failure. These studies can be categorized into three groups, which are discussed in this section.

2.1. Feature Studies of Dementia and Heart Failure

Several studies have focused on the risk factors for both dementia and heart failure. Dementia risk factor studies can be divided into three groups. The first group examines associations between dementia development and clinical parameters that can be obtained from routine physical check-ups and blood tests, including hypertension [29,30,31], body weight [32], blood cholesterol levels [33], serum lipids [34], and diabetes [1]. The second group comprises preclinical studies, such as speech assessment [35,36], lifestyle activity [37], handwriting assessment [38,39], sleep disturbances [40,41], sex differences [42], physical assessment, risk score models [43,44,45], and hearing loss [1]. The third group includes clinical brain imaging studies using PET/MRI [19].

Similarly, heart failure studies can be categorized into three groups. The first group identifies risk factors such as age [46,47], sex [46,47], hypertension [46], hypercholesterolemia [47], low-density lipoprotein cholesterol (LDL-C) [46], diabetes mellitus [46], overweight/obesity [47], elevated body mass index (BMI) [46], smoking [46], low physical activity [46], family history of premature cardiovascular disease (CVD) [47], chronic kidney disease [47], and lipid biomarkers [47]. Key risk factors for heart failure, such as obesity, hypercholesterolemia, hypertension, lipid biomarkers, diabetes, sex, and age, are also strongly associated with dementia [12,29,30,31,46,47]. The second group includes studies on preclinical heart failure, such as its incidence in healthy community individuals based on health status records collected during visits, including age, BMI, systolic blood pressure (SBP), diastolic blood pressure (DBP), heart rate, diabetes, hypertension, obesity, hyperlipidemia, and echocardiography [48]. Some studies assess the risk of heart failure based on preclinical information [47]. The third group comprises studies on the clinical identification and prevention of heart failure, such as heart disease identification using ML methods, including SVMs [21,22,23], DT [24], GB [25], and XGB [26].

While the reviewed studies provide valuable insights across the dementia and heart failure risk continuum—from risk factor identification to preclinical and clinical assessments—several limitations and potential biases warrant consideration. Risk factor studies often rely on observational or retrospective data, which may be subject to confounding variables and lack causal inference. Preclinical studies may introduce cultural or educational biases, limiting generalizability across diverse populations. Furthermore, risk score models in the case of dementia, although practical, can oversimplify complex disease pathways and may not fully capture interactions between multiple features. Clinical studies using advanced equipment and laboratory tests are typically conducted in well-resourced settings, potentially excluding underrepresented populations and limiting real-world applicability. These limitations underscore the need for integrative, accessible, and representative approaches in the classification of dementia and heart failure, balancing precision with inclusivity.

Although dementia and heart failure often co-occur and share multiple risk factors, their distinct clinical manifestations and biomarker profiles justify the use of a binary classification model. Developing such a model is challenging, as it must differentiate between two interrelated conditions with overlapping etiologies yet diverging diagnostic and therapeutic pathways—particularly in cases where early symptoms are ambiguous. The dementia and heart failure risk factors identified in previous studies are shown in Table 1.

Table 1.

Summary of dementia and heart failure risk factors identified in previous studies.

This study proposes new features and a new method for classifying dementia and heart failure, with blood biomarkers mainly used to construct the binary classification model. Two groups of blood-oriented features were used. The first group consisted of features associated with the development of dementia and heart failure in existing works, termed related risk factors (R). The second group consisted of blood biomarker features that are also obtainable from blood testing but have no established association with either condition; these were newly proposed in this study and termed potential features (P). The classification model was therefore developed from both existing risk factors and a new set of blood biomarker-oriented features. Integrating the new features was expected to sharpen the boundary between the classes in the dataset.

2.2. ML-Based Classifications for Dementia and Heart Failure

Several ML models have been proposed for constructing classification models from different types of data. For dementia studies, the models can be categorized into two groups. First are the models that use imaging datasets for classification [18,19,20,49,50,51,52]. The images are usually obtained from advanced laboratories and tend to have high resolutions, necessitating sophisticated models, such as deep learning-based models. The limitations of imaging-based studies include the need for advanced instrumentation and a sufficiently large sample size to ensure reliable results—factors that are often difficult to achieve in practical healthcare settings. Second are the models that use nonimaging datasets for classification [21,22,23,27,28,43,53]. Such studies utilize a range of biomarkers, such as demographic and psychiatric information [43], DNA methylation profiles [14], genetic data from ADNI-1 [15], and medical attributes [27,28,54].

For heart failure studies, SVMs have been widely adopted across different datasets, achieving prediction accuracies between 92.37% and 98.47% depending on preprocessing techniques, such as principal component analysis or hybrid feature selection [21,22,23]. DT, while simpler, has still proven effective, with an accuracy of 93.00% on Kaggle heart failure data [24]. More recent approaches have processed physiological signals, such as electrocardiograms, through recurrent neural networks and deep learning models, achieving accuracies of 99.86% [53] and 97.93% [51], respectively. These results suggest that time-series and image-based representations of cardiac signals can substantially enhance the accuracy and robustness of heart failure classification models.

Despite the high comorbidity rate of heart failure and dementia, few studies have addressed their joint classification. Previous work by the team of the corresponding author explored both binary and multi-class classification models. For binary classification, an extra trees model with data balancing achieved 89.11% accuracy on a small dataset (4297 records) [55], increasing to 96.47% on a larger dataset (14,763 records) using a data enrichment framework [54]. For multi-class classification, GB with 59 features across more than 16,000 records classified heart failure, aortic stenosis, and dementia with 83.81% accuracy [27]. XGB with 108 augmented features on 26,474 records achieved 91.42% accuracy [26]. These studies highlight the importance of large-scale, feature-rich data and robust ML algorithms for classification problems. A persistent challenge in applying ML to medical/healthcare data is the insufficiency of clinical data, as many datasets are incomplete or imbalanced, limiting the model’s ability to generalize and increasing the risk of overfitting, particularly in complex classification tasks. In addition, the complexity and limited interpretability of such models necessitate the development of more transparent methods, such as distance measurements.

2.3. Classification with Distance Measurements

Classification using distance measurements is expected to outperform ML-based models when clinical data are limited or incomplete, because it relies on direct comparisons between feature vectors, making it more robust, less prone to overfitting, and more interpretable. This is especially the case when the feature space is well defined and clinically meaningful. Classification methods using distance measurements have been introduced and widely applied in various domains over the decades. These include Euclidean distance [56], Manhattan distance [57], distance measures in fuzzy c-means algorithms [58], and objective distance [59,60,61]. Recently, the WOD [27] was proposed to support personalized care for older adults with hypertension. This method measures the distance between an individual’s current health status and their defined level of hypertension and then generates personalized feedback based on the distances obtained. Additionally, the average weighted objective distance (AWOD) [28] has been applied to predict type 2 diabetes. Both the WOD and AWOD build on the concept of objective distance, demonstrating their potential for measuring the distance between an individual’s status and expected health goals. The WOD uses weighting factors derived from information gain to prioritize features in distance calculations. The AWOD builds on this framework by focusing on the average distances between features and is particularly effective for binary classification problems. However, both methods have limitations: distances produced by the WOD are often difficult to compare across features because of its reliance on static weighting, while the AWOD, despite improving on the WOD, may overlook complex interactions between risk factors. These limitations highlight a critical gap in effectively modeling the influence of diverse health conditions.
To address these challenges, this study proposes the OWOD, which modifies the distance metric for each feature, enhances comparability, and employs a refined objective function derived directly from the dataset of dementia and heart failure. This new distance aims to improve the performance of the classification model and promote model generalization.

2.4. The Proposed Study

The proposed OWOD introduces an optimized target value, defined as the point of maximum divergence between class histograms. This value represents the most discriminative threshold reflecting the class boundaries and is used to determine the optimal decision point for classification. Compared with the WOD and AWOD, the OWOD normalizes each feature’s distance values so that they are comparable across features. It also replaces the predefined, standard values used by the earlier distances with an objective function that derives target values directly from the dataset, thereby enhancing generalization.

Similar to the WOD and AWOD, the OWOD derives its weighting factors from information gain. Entropy quantifies the level of uncertainty or disorder within data, and information gain, derived from entropy, measures the reduction in this uncertainty (or impurity) achieved by partitioning the data on an attribute. In classification problems, entropy is primarily used to identify the irrelevant attributes of a dataset [62]; accordingly, information gain is one of the most popular methods for feature selection [63,64]. For example, information gain has been used in a novel feature selection method for text classification [65], where it reduces the dimensionality of document features to improve classification performance. It has also been applied as a weight coefficient to enhance the effectiveness of classification algorithms [66]. Weighting attributes according to their importance can improve classification accuracy [67].

For the current study, the classification model using the OWOD was constructed with an equal number of dementia and heart failure records, ensuring both conditions were treated as equally important. This approach addressed the limitations of previous measurements, including the WOD and AWOD, by refining distance calculations and employing a more effective objective function derived from the dataset. The risk factors and additional blood biomarker features were employed to sharpen the boundary between the two dataset classes: patients with dementia and patients with heart failure. It is hypothesized that new blood biomarker features and the OWOD can promote generalization and provide better classification performance compared with other ML-based binary classification models.

3. Research Methodology

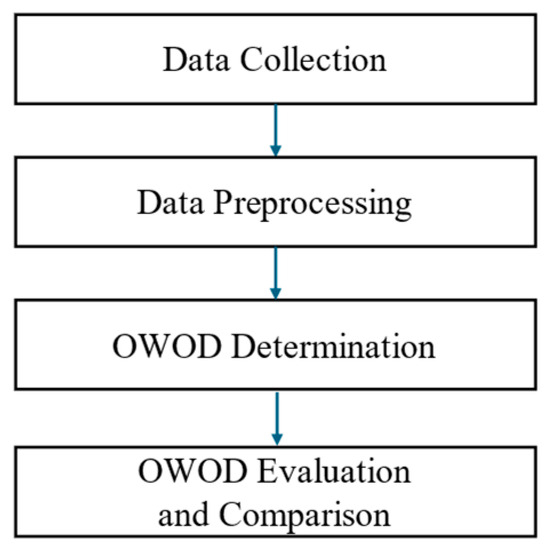

This study consisted of four main procedures, as shown in Figure 1.

Figure 1.

Research methodology consisting of four main processes: data collection, data preprocessing, optimized weighted objective distance (OWOD) determination, and OWOD evaluation and comparison.

The details of each procedure are discussed in the following sections.

3.1. Data Collection

The dataset used in this study consists of EHRs from Chiang Rai Prachanukroh Hospital, Chiang Rai, Thailand, spanning 2016 to 2022. It includes the blood test results and clinical records of individuals aged 60 years and over, comprising a total of 12,222 records (dementia = 5563; heart failure = 6659). No individual was diagnosed with both diseases simultaneously. Table 2 shows the features used.

Table 2.

Description of the features used in the current study.

As previously discussed (and shown in Table 2), the features used in this study were divided into two groups: R and P. The R group (including body weight, blood cholesterol, and blood pressure) was used because these features are associated with dementia and heart failure in existing research. The P group consisted of additional features obtained through blood tests. Physical activity, smoking history, and alcohol history were not considered in this study owing to insufficient data.

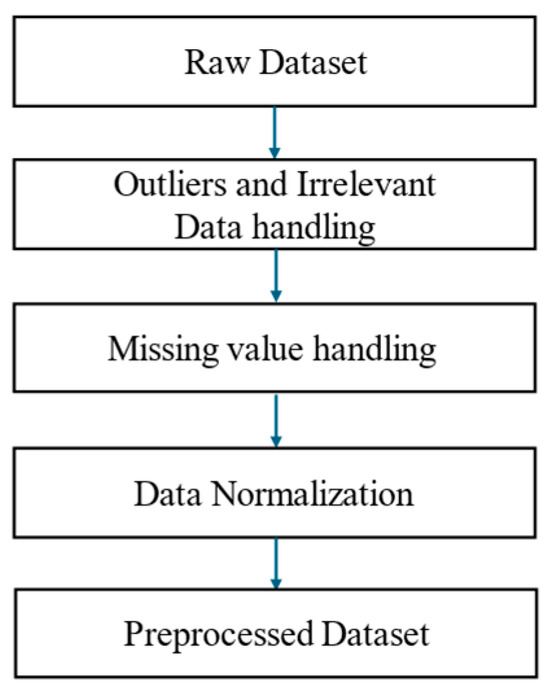

3.2. Data Preprocessing

The dataset originally consisted of 12,222 records, including 5563 dementia records and 6659 heart failure records. The raw dataset subsequently went through the preprocessing procedure shown in Figure 2. Following this, the final dataset was balanced to include 10,000 records, with 5000 dementia and 5000 heart failure cases.

Figure 2.

Data preprocessing workflow comprising outlier and irrelevant data handling, missing value imputation, and data normalization.

Prior to normalization, erroneous or invalid entries were addressed by handling outliers and irrelevant data. This included filtering or correcting values that deviated significantly from the expected distribution or were implausible because of data entry errors (e.g., extremely low or high body weights). Missing values were imputed with the mode, that is, the most frequent value observed in each feature’s distribution. Data normalization was then applied to ensure consistency and reliability before OWOD determination. This step adjusted the raw data to a standard scale, typically by standardizing values to a mean of 0 and a standard deviation of 1, or by scaling them to a defined range. This preprocessing produced a consistent final dataset suitable for subsequent analysis.
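The two scaling options mentioned above can be sketched as follows. The text does not state which one was applied, so both are shown; the function names and the cholesterol values are hypothetical, and NumPy's population standard deviation is assumed.

```python
import numpy as np


def standardize(x: np.ndarray) -> np.ndarray:
    """Z-score scaling to mean 0 and (population) standard deviation 1."""
    std = x.std()
    if std == 0:
        return np.zeros_like(x, dtype=float)  # constant feature: no spread to scale
    return (x - x.mean()) / std


def min_max_scale(x: np.ndarray, lo: float = 0.0, hi: float = 1.0) -> np.ndarray:
    """Linearly rescale values into the range [lo, hi]."""
    x_min, x_max = x.min(), x.max()
    if x_max == x_min:
        return np.full_like(x, lo, dtype=float)  # constant feature maps to lo
    return lo + (x - x_min) * (hi - lo) / (x_max - x_min)


cholesterol = np.array([150.0, 180.0, 210.0, 240.0])  # hypothetical values
print(standardize(cholesterol))
print(min_max_scale(cholesterol))
```

Either scaling puts features with different units on a comparable footing before distances are computed.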

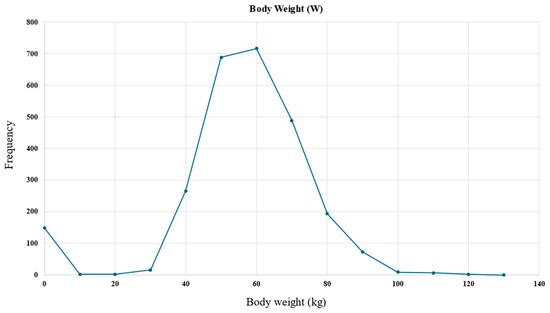

An example histogram of the body weight feature is presented in Figure 3, illustrating how histograms were used in the data preprocessing step.

Figure 3.

Body weight feature histogram used in the data preprocessing step to handle outliers and missing values.

As Figure 3 shows, weight values in the 0–40 kg range were erroneous entries. To address this, values falling outside the expected distribution range were filtered out. Missing body weight entries were imputed with the most frequently occurring value, 60 kg.
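The outlier filtering and mode imputation for body weight can be sketched with pandas as below. This is a minimal sketch under stated assumptions: the 40 kg lower bound follows the erroneous 0–40 kg entries in Figure 3, the 150 kg upper bound is a hypothetical choice, out-of-range records are dropped while missing values are kept for imputation, and the sample values are invented for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical plausibility range: the lower bound mirrors the erroneous
# 0-40 kg entries seen in Figure 3; the upper bound is an assumption.
WEIGHT_MIN_KG = 40.0
WEIGHT_MAX_KG = 150.0


def clean_body_weight(weights: pd.Series) -> pd.Series:
    """Drop implausible weights, then impute missing ones with the mode."""
    # Keep missing entries (to impute later) and values inside the range.
    keep = weights.isna() | weights.between(WEIGHT_MIN_KG, WEIGHT_MAX_KG)
    filtered = weights[keep]
    # Mode of the remaining valid values (NaN is ignored by default).
    mode_value = filtered.mode().iloc[0]
    return filtered.fillna(mode_value)


raw = pd.Series([60.0, 15.0, 72.0, np.nan, 60.0, 55.0, 60.0])
print(clean_body_weight(raw).tolist())  # -> [60.0, 72.0, 60.0, 60.0, 55.0, 60.0]
```

Computing the mode after filtering keeps erroneous entries from influencing the imputed value.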

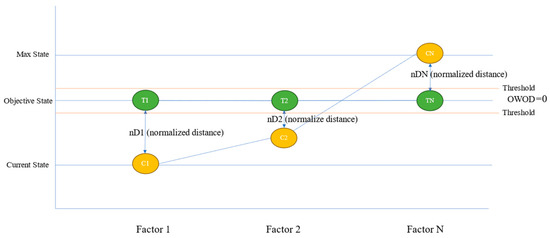

3.3. The OWOD Concept

The OWOD method is based on the distance between the current and objective states, with weights derived from information gain. The weights reflect each feature’s influence on the model and represent the diverse health conditions of individuals, as well as the general diagnostic procedures used by healthcare professionals. This concept is illustrated in Figure 4.

Figure 4.

The concept of the optimized weighted objective distance (OWOD), a weighted distance-based measure between current and objective states, reflecting the influence of each feature at the individual level.

Figure 4 shows that three main steps are required for OWOD determination. The first step involves determining the objective value for the distance calculation. The objective value ($T_j$) is the state level of each feature ($F_j$) that represents the group of dementia patients in the dataset. The current value ($C_j$) is the current state of each feature. The acceptable value ($A_j$) is the health status level of each feature that is acceptable for an individual. All three values are used to determine the OWOD.

3.4. OWOD Determination

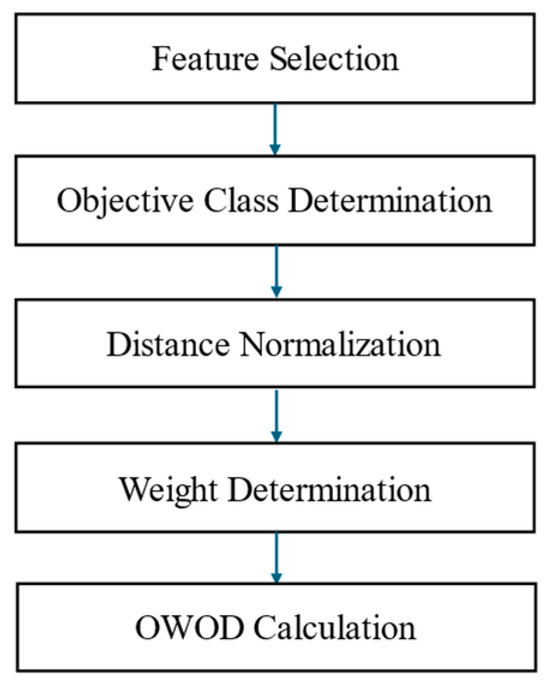

The OWOD determination process is illustrated in Figure 5.

Figure 5.

The optimized weighted objective distance (OWOD) determination process comprising feature selection, objective class determination, distance normalization, weight determination, and OWOD calculation.

The OWOD algorithm consists of five processes: (1) feature selection, which determines the features used in the calculation; (2) objective class determination, which derives the objective (target) value of each feature from the dataset; (3) distance normalization, which calculates the normalized distance between the current and objective states; (4) weight determination, which computes the weight of each feature from entropy and information gain; and (5) OWOD calculation.

3.4.1. Feature Selection

This study used mixed selections of R and P features, testing sets of 8, 12, 16, and 20 features to identify the best-performing feature combination. These incremental set sizes were chosen to investigate how feature dimensionality influences OWOD model performance and to examine the trade-off between feature richness and overall classification performance.

3.4.2. Objective Class Determination

To calculate the OWOD, the objective class was determined. A histogram was used to find the data distribution of the objective class. The histogram was calculated using Equation (1).

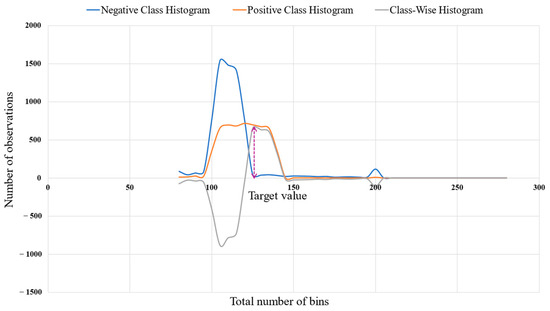

where $n$ refers to the total number of observations, $k$ refers to the total number of bins, and $m_i$ refers to the histogram data, i.e., the number of observations in bin $i$. The target value was defined as the feature value at which the positive-class histogram exceeds the negative-class histogram by the largest margin, that is, the point of maximum positive difference between the two class histograms. This objective value was determined from the class-wise histogram distributions presented in Figure 6.

Figure 6.

Determination of the target value (dotted arrow) for each feature based on class-wise histogram distributions, illustrating the distributional differences between positive and negative classes.

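The target value of each feature was calculated using Equation (2):

$$b^{*} = \arg\max_{b \in \{1, \dots, k\}} \left( m^{+}_{j,b} - m^{-}_{j,b} \right), \qquad T_j = x_{j,b^{*}} \tag{2}$$

where $m^{+}_{j,b}$ and $m^{-}_{j,b}$ are the positive- and negative-class histogram counts of feature $j$ in bin $b$, $x_{j,b}$ is the feature value represented by bin $b$, and $T_j$ denotes the target value of feature $j$, corresponding to the point of maximum difference between the positive and negative class distributions.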
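A minimal sketch of the target-value search for one feature follows. It assumes NumPy, shared bin edges for both classes, counts normalized by class size (equivalent to raw counts for the balanced dataset), and the bin center as the reported target value; the default of 20 bins and the toy data are hypothetical.

```python
import numpy as np


def target_value(pos: np.ndarray, neg: np.ndarray, bins: int = 20) -> float:
    """Bin centre where the positive-class histogram most exceeds the negative one."""
    # Shared bin edges so that the two class histograms are comparable bin by bin.
    edges = np.histogram_bin_edges(np.concatenate([pos, neg]), bins=bins)
    h_pos, _ = np.histogram(pos, bins=edges)
    h_neg, _ = np.histogram(neg, bins=edges)
    # Class-size normalisation; for balanced classes this equals comparing raw counts.
    diff = h_pos / len(pos) - h_neg / len(neg)
    k = int(np.argmax(diff))  # bin with the maximum positive difference
    return float((edges[k] + edges[k + 1]) / 2)


# Toy data: positive class (dementia) vs negative class (heart failure).
dementia = np.array([1.0, 2.0, 2.0, 2.0, 3.0])
heart_failure = np.array([3.0, 4.0, 4.0, 4.0, 5.0])
print(target_value(dementia, heart_failure, bins=4))  # -> 2.5
```

The number of bins controls how finely the boundary is located; too few bins blur it, and too many make the histogram difference noisy.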

3.4.3. Distance Normalization

The distance between the current and objective states of each feature was calculated using Equation (3):

$$d_{TC,j} = \sqrt{(T_j - C_j)^2} \tag{3}$$

where $T_j$ refers to the target value of attribute $j$, $C_j$ refers to the current state value of attribute $j$, and $d_{TC,j}$ refers to the distance between the target and current states. Similarly, the distance between the target and acceptable states was calculated using Equation (4):

$$d_{TA,j} = \sqrt{(T_j - A_j)^2} \tag{4}$$

where $A_j$ refers to the acceptable state value of attribute $j$ and $d_{TA,j}$ refers to the distance between the target and acceptable states.

Data from multiple features were combined in the calculation. Because distance was the key measurement and the features are measured on different scales, unnormalized distances could bias the final OWOD calculation; normalization was therefore applied. The normalized distance between the target and current states was calculated using Equation (5):

$$nd_{TC,j} = \frac{d_{TC,j}}{A_j} \tag{5}$$

where $d_{TC,j}$ is the distance from Equation (3) and $A_j$ is the acceptable value, which also serves as the maximum value. The normalized distance between the target and acceptable states was calculated using Equation (6):

$$nd_{TA,j} = \frac{d_{TA,j}}{A_j} \tag{6}$$

where $d_{TA,j}$ is the distance from Equation (4).

The normalized distance ratios used for the weight calculation express each normalized distance as a share of the total normalized distance. The ratio for the target and current states is given by Equation (7):

$$r_{TC,j} = \frac{nd_{TC,j}}{nd_{TC,j} + nd_{TA,j}} \tag{7}$$

and the ratio for the target and acceptable states is given by Equation (8):

$$r_{TA,j} = \frac{nd_{TA,j}}{nd_{TC,j} + nd_{TA,j}} \tag{8}$$
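Equations (3) to (8) for a single feature can be sketched in plain Python as follows. As in Algorithm 1, the square root of a squared difference reduces to an absolute difference. The example values (a target of 200, current value of 240, and acceptable value of 180) are hypothetical, the acceptable value is assumed to be non-zero, and returning zero ratios when both distances vanish is an assumption not covered by the text.

```python
import math


def distance_ratios(target: float, current: float, acceptable: float):
    """Return (nd_tc, nd_ta, r_tc, r_ta) for one feature of one individual."""
    # Equations (3)-(4): sqrt((T - C)^2) is simply |T - C|.
    d_tc = math.sqrt((target - current) ** 2)
    d_ta = math.sqrt((target - acceptable) ** 2)
    # Equations (5)-(6): divide by the acceptable value (assumed non-zero).
    nd_tc = d_tc / acceptable
    nd_ta = d_ta / acceptable
    # Equations (7)-(8): each normalised distance as a share of their sum.
    total = nd_tc + nd_ta
    if total == 0:
        return nd_tc, nd_ta, 0.0, 0.0  # assumption: no distance, no ratio
    return nd_tc, nd_ta, nd_tc / total, nd_ta / total


# Hypothetical cholesterol values: target 200, current 240, acceptable 180.
nd_tc, nd_ta, r_tc, r_ta = distance_ratios(200.0, 240.0, 180.0)
print(round(r_tc, 3), round(r_ta, 3))  # -> 0.667 0.333
```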

3.4.4. Weight Determination

To determine the entropy of the target class with respect to all attributes, an equal proportion of the target class was first determined using Equation (9), yielding equal proportions of the positive and negative classes. In Equation (9), $P_{+}$ is the equal proportion for the positive class (+) with respect to all attributes, $P_{-}$ is the equal proportion for the negative class (−) with respect to all attributes, $N$ is the total number of attributes, and $K$ is the total number of target classes.

Next, the fraction of each target class was determined using Equation (10). This value represents the chance of belonging to the positive or negative class with respect to all attributes. In Equation (10), $f_{+}$ represents the fraction of the positive class (+) and $f_{-}$ represents the fraction of the negative class (−); the two fractions are equal.

The weight determination relies on entropy and information gain.

- Entropy.

Shannon entropy, a measure from information theory, quantifies the average level of information, surprise, or uncertainty associated with the possible outcomes of a random variable. Entropy ($E$) was calculated using Equation (11):

$$E(S) = -\sum_{i=1}^{c} p_i \log_2 p_i \tag{11}$$

where $c$ is the number of classes, $p_i$ is the proportion of the samples in $S$ belonging to class $i$, and $\log_2$ is the base-2 logarithm.

- Information Gain.

Information gain is the reduction in entropy obtained by partitioning a set on an attribute; the attribute that produces the highest value is the optimal candidate for the split. The information gain ($IG$) was calculated using Equation (12):

$$IG(T, a) = E(T) - E(T \mid a) \tag{12}$$

where $T$ is a random variable and $E(T \mid a)$ is the entropy of $T$ given the value of an attribute $a$.

The split information value is a non-negative number that describes the potential worth of splitting a branch from a node. It is the intrinsic value of the random variable and is used to remove bias from the information gain ratio calculation. The split information value ($SI$) was calculated using Equation (13):

$$SI(X) = -\sum_{i=1}^{n} \frac{N(t_i)}{N(t)} \log_2 \frac{N(t_i)}{N(t)} \tag{13}$$

where $X$ is a discrete random variable with possible values $x_1, \dots, x_n$, $N(t_i)$ is the number of events in which $x_i$ occurs, and $N(t)$ is the total number of events in the event set $t$.

The information gain ratio (GR), the ratio of the information gain to the split information value for the variable X, was calculated using Equation (14):

$$GR(X) = \frac{IG(Y, X)}{SI(X)} \qquad (14)$$
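Equations (12) to (14) follow the familiar C4.5 definitions. The sketch below is a generic, textbook implementation on categorical data, not a reproduction of the authors' code; the toy attribute values and labels are illustrative assumptions. It shows why the split information is needed: an attribute with a unique value per sample attains maximal information gain, but its large intrinsic value halves its gain ratio.

```python
import math
from collections import Counter


def entropy(items):
    """Base-2 entropy of the empirical distribution of `items`."""
    n = len(items)
    return -sum((c / n) * math.log2(c / n) for c in Counter(items).values())


def information_gain(values, labels):
    """Entropy of the labels minus their conditional entropy given the attribute."""
    n = len(labels)
    conditional = 0.0
    for v, count in Counter(values).items():
        subset = [y for x, y in zip(values, labels) if x == v]
        conditional += (count / n) * entropy(subset)
    return entropy(labels) - conditional


def split_information(values):
    """Intrinsic value of the attribute: entropy of its own value distribution."""
    return entropy(values)


def gain_ratio(values, labels):
    """Information gain divided by split information (0 if the attribute is constant)."""
    si = split_information(values)
    return information_gain(values, labels) / si if si > 0 else 0.0


if __name__ == "__main__":
    labels = ["D", "D", "HF", "HF"]
    print(gain_ratio(["hi", "hi", "lo", "lo"], labels))  # two-valued attribute
    print(gain_ratio(["a", "b", "c", "d"], labels))      # one value per sample
```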

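The weight information (W) was calculated using Equation (15),

where the entropy–gain ratio of each attribute is its entropy from Equation (11) divided by the gain from Equation (14), and the weight information of the attribute is this ratio divided by the sum of the ratios over all attributes, as in Algorithm 1.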

3.4.5. OWOD Calculation

To determine the OWOD of all attributes, the WOD of each attribute was first determined using Equation (16), in which the weight information of the attribute multiplies the absolute difference between its normalized current and acceptable distances (see Algorithm 1).

After obtaining the WOD of each attribute for each person, the normalized WOD of each attribute was determined using Equation (17),

where the maximum and minimum are taken over the WODs of all attributes (min–max normalization).

Therefore, the OWOD of all attributes for each person was determined using Equation (18), which averages the normalized WODs of all attributes for the ith individual.

To identify the class based on the obtained OWOD, the following condition was applied, as shown in Equation (19):

where the cut-off OWOD is calculated by applying the same procedure to the threshold values of all features in place of their current values. The threshold of each feature is defined as a deviation of 5% above and below its target value. An individual whose OWOD does not exceed the cut-off OWOD is assigned to the positive class; otherwise, the individual is assigned to the negative class. In this study, the negative class was heart failure, and the positive class was dementia.
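The decision rule of Equation (19), as applied in the worked example (0.48 ≤ 0.56 gives dementia), can be sketched as follows. The ±5% band helper is an assumption about how the feature thresholds are formed from the target levels, and both function names are hypothetical.

```python
def threshold_band(target, deviation=0.05):
    """Hypothetical helper: thresholds at `deviation` below and above a target level."""
    return target * (1 - deviation), target * (1 + deviation)


def classify(owod_value, cutoff):
    """Positive class (dementia) when the OWOD does not exceed the cut-off OWOD,
    negative class (heart failure) otherwise."""
    return "dementia" if owod_value <= cutoff else "heart failure"


if __name__ == "__main__":
    print(threshold_band(130))     # band around the FBS target level of 130
    print(classify(0.48, 0.56))    # Person No. 1 in the worked example -> dementia
```

Because the comparison uses ≤, an individual exactly at the cut-off is assigned to the positive class.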

3.4.6. OWOD Algorithm

The algorithm used to perform the OWOD calculation is presented in the pseudocode shown in Algorithm 1 as follows:

| Algorithm 1: OWOD calculation |
|---|
| Input: list of selected features (F) |
| Output: table of computed OWOD values |
| 1: Initialize storage for results |
| 2: For each sample S in the dataset do |
| 3: For each feature F do |
| 4: Retrieve C (current level), T (target level), and A (acceptable level) |
| 5: Compute Euclidean distances: dTC ← √((T − C)²), dTA ← √((T − A)²) |
| 6: Compute normalized distances: ndTC ← dTC/A, ndTA ← dTA/A |
| 7: Compute normalized distance ratios: rTC ← ndTC/(ndTC + ndTA), rTA ← ndTA/(ndTC + ndTA) |
| 8: Compute entropy: EP ← calculate entropy (rTC, rTA) |
| 9: End for |
| 10: Compute information gain and gain score over all features: IG ← calculate information gain (EP), G ← calculate gain (IG) |
| 11: Compute entropy–gain ratios and weights: rEG ← EP/G for each feature, SrEG ← sum (rEG), W ← rEG/SrEG |
| 12: Compute distance differences and weighted distances for each feature: DF ← abs(ndTC − ndTA), WD ← W × DF |
| 13: Normalize: MxWD ← max (WD), MnWD ← min (WD), nWD ← (WD − MnWD)/(MxWD − MnWD) |
| 14: Compute OWOD: OWOD ← average (nWD) |
| 15: Store S, F, and OWOD values |
| 16: End for |
| 17: Return OWOD result table |
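The per-sample steps of Algorithm 1 can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the gain score G is taken to be the information gain itself (as in the worked example, where 0.16 is used directly), the target entropy is 1 bit for two equal classes, acceptable levels are assumed to be non-zero, and the fallbacks for zero distances or identical WODs are hypothetical choices that the pseudocode does not specify.

```python
import math
from dataclasses import dataclass


@dataclass
class FeatureLevels:
    current: float     # C: the individual's measured value
    target: float      # T: target level from the class-wise histograms
    acceptable: float  # A: acceptable level (assumed non-zero)


def entropy(probs):
    """Base-2 Shannon entropy; zero probabilities contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def owod(features):
    """Return the OWOD of one sample, given a list of FeatureLevels."""
    nd_tc, nd_ta, ep = [], [], []
    for f in features:
        d_tc = abs(f.target - f.current)       # sqrt((T - C)^2) equals |T - C|
        d_ta = abs(f.target - f.acceptable)    # sqrt((T - A)^2) equals |T - A|
        n_tc, n_ta = d_tc / f.acceptable, d_ta / f.acceptable
        total = n_tc + n_ta
        # Hypothetical fallback: both distances zero -> treat as maximally uncertain.
        r_tc, r_ta = (n_tc / total, n_ta / total) if total > 0 else (0.5, 0.5)
        nd_tc.append(n_tc)
        nd_ta.append(n_ta)
        ep.append(entropy([r_tc, r_ta]))

    # Information gain: 1-bit target entropy minus the mean feature entropy.
    gain = 1.0 - sum(ep) / len(ep)
    # Entropy-gain ratios; since the gain is shared by all features it cancels in W.
    r_eg = [e / gain for e in ep] if gain > 0 else list(ep)
    s_reg = sum(r_eg)
    weights = [r / s_reg for r in r_eg] if s_reg > 0 else [0.0] * len(r_eg)

    # Weighted distance differences, min-max normalized, then averaged.
    wd = [w * abs(a - b) for w, a, b in zip(weights, nd_tc, nd_ta)]
    lo, hi = min(wd), max(wd)
    if hi == lo:
        nwd = [0.0] * len(wd)   # hypothetical choice: no contrast between features
    else:
        nwd = [(x - lo) / (hi - lo) for x in wd]
    return sum(nwd) / len(nwd)


if __name__ == "__main__":
    sample = [
        FeatureLevels(current=10, target=20, acceptable=40),  # illustrative values
        FeatureLevels(current=20, target=20, acceptable=40),  # at its target level
    ]
    print(owod(sample))
```

In this toy example the second feature sits exactly at its target, so its entropy and weight are 0 and it contributes nothing; the script prints 0.5. Because the WODs are min-max normalized before averaging, multiplying all normalized distances by 100 (the percentage conversion mentioned in the worked example) would leave the OWOD unchanged.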

3.4.7. Sample Calculation

This section demonstrates a sample calculation of the OWOD for classifying a potential group of people with dementia and a group with heart failure. To compute the OWOD, both the target and acceptable levels for each feature are applied. The target level represents the desired or optimal value for each feature; the acceptable level indicates the range of values considered tolerable. The acceptable level for each feature is defined as the standard deviation from the average value of the feature.

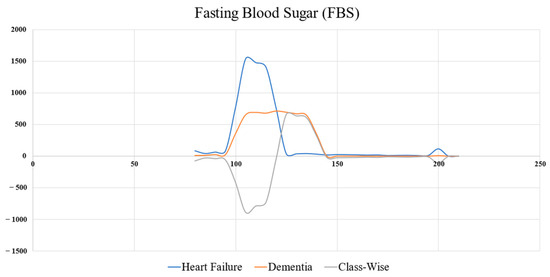

The objective function obtains the boundary between the two classes from the histogram of each feature for the objective class and for the other class; the target level is calculated by subtracting the two class histograms (grey line in Figure 7), and the peak of the grey line indicates the target value.

Figure 7.

Target value determination of FBS, which is derived from a class-wise histogram distribution between the positive and negative classes.
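A minimal sketch of the histogram-subtraction idea behind Figure 7: both classes are binned on a shared grid, each histogram is converted to class-wise fractions (an assumption, so that unequal class sizes do not dominate), the negative-class histogram is subtracted from the positive-class (objective) histogram, and the centre of the bin with the largest difference is returned as the target level. The bin width and the function name `target_level` are illustrative assumptions; Equation (2) itself is not reproduced here.

```python
from collections import Counter


def target_level(positive_values, negative_values, bin_width):
    """Centre of the bin where the positive-class histogram most exceeds the
    negative-class histogram (ties resolved toward the lowest bin)."""
    origin = min(min(positive_values), min(negative_values))

    def fractions(values):
        counts = Counter(int((v - origin) // bin_width) for v in values)
        return {b: c / len(values) for b, c in counts.items()}

    pos, neg = fractions(positive_values), fractions(negative_values)
    bins = sorted(set(pos) | set(neg))
    best = max(bins, key=lambda b: pos.get(b, 0.0) - neg.get(b, 0.0))
    return origin + (best + 0.5) * bin_width
```

For example, `target_level([1, 2, 2, 3], [5, 6, 6, 7], 1)` returns 2.5, the centre of the bin where the first class is most concentrated relative to the second.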

As illustrated in Figure 7, the target level of fasting blood sugar (FBS) can be determined using Equation (2), giving a value of 130. Accordingly, with a target level of 130, the acceptable level of FBS is 280. Further examples of target and acceptable levels are shown in Table 3, and Table 4 shows sample records used to calculate the OWOD.

Table 3.

Sample target and acceptable values.

Table 4.

Examples of the dataset.

The classification of dementia and heart failure using the proposed measurement can be illustrated with the data of Person No. 1, whose current health status is shown in Table 4. The target and acceptable levels of all features are shown in Table 3. The sample attributes comprise eight features. The target groups are an objective class (OC) group and a non-objective class (NC) group: OC refers to the positive class (with dementia) and NC to the negative class (with heart failure). Following Algorithm 1, the OWOD calculation for identifying Person No. 1 is demonstrated below.

To determine the entropy of the target group with respect to all features, an equal proportion of the target group was first determined using Equation (9). With eight features and two target groups, the equal proportion of both the OC group and the NC group is 4 (8/2).

Next, the fraction of the target group with respect to all features was determined using Equation (10). The fractions of the OC group and the NC group with respect to all features are both 0.5 (4/8).

The entropy of the target group with respect to all features was then determined using Equation (11) and is equal to 1.

To determine the entropy of each feature, an example of the entropy calculation for the weight factor is presented.

First, the current distance and the acceptable distance of the weight factor were determined using Equations (3) and (4), respectively. Under condition (i), given the current, target, and acceptable levels of the weight factor, these distances are equal to 10 and 35, respectively.

Because each feature has a different range, both distances must be normalized and converted to percentages using Equations (5) and (6), respectively.

Next, the proportions of the acceptable distance and the current distance were calculated using Equations (7) and (8), respectively. Their values are 0.22 and 0.78.

These two proportions give the fractions of the OC group and the NC group for the weight factor.

The entropy of the weight factor was then determined using Equation (11) and is equal to 0.76.

Similarly, the entropies of SBP, DBP, FBS, TGS, TC, HDL, and LDL are equal to 0.92, 0.86, 0.34, 0.99, 0.87, 0.99, and 1.00, respectively.

To determine the information gain of the target group with respect to all features, the entropy of all features was calculated using Equation (12) and is equal to 0.84.

Hence, the information gain of the target group with respect to all features is equal to 0.16 (1 − 0.84).

To determine the weight of each feature, the weight calculation for the weight factor is presented as an example. The entropy–gain ratio of the weight factor is 0.76/0.16 = 4.75. The ratios of the remaining seven features were obtained in the same way.

The weight of the weight factor was then determined using Equation (15) and is equal to 0.11. The weights of the remaining features were obtained in the same way.

To determine the OWOD of all features, the WOD of the weight factor was calculated using Equation (16) and is equal to 3.15. The WODs of the remaining features were obtained in the same way, the smallest being equal to 0.

Among the WOD values of the eight features, the maximum is 3.15 (the weight factor) and the minimum is 0. The normalized WOD of the weight factor, determined using Equation (17), is therefore equal to 1.00, that is, (3.15 − 0)/(3.15 − 0). The normalized WODs of the remaining features were obtained in the same way.

Therefore, the OWOD of Person No. 1 over all eight features is equal to 0.48.

Before the class of Person No. 1 could be identified, the cut-off OWOD was calculated with the same algorithm by replacing the current value of each feature with its threshold value. The resulting cut-off OWOD is 0.56, so Person No. 1 was identified as belonging to the positive class (0.48 ≤ 0.56), that is, the dementia group.
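The weighting steps of the worked example can be re-traced from the reported entropies with a short script. It assumes, as the numbers imply, that the entropy of all features in Equation (12) is the mean of the eight feature entropies (6.73/8 ≈ 0.84), and it rounds to two decimals where the worked example does. It also makes visible that, because the information gain is shared by all features, each weight equals the feature's entropy divided by the sum of all feature entropies.

```python
import math

# Feature entropies reported for Person No. 1 (eight features).
entropies = {
    "weight": 0.76, "SBP": 0.92, "DBP": 0.86, "FBS": 0.34,
    "TGS": 0.99, "TC": 0.87, "HDL": 0.99, "LDL": 1.00,
}

# Entropy of the weight factor from its distances of 10 and 35.
r1, r2 = 10 / 45, 35 / 45
weight_entropy = -(r1 * math.log2(r1) + r2 * math.log2(r2))  # about 0.764

target_entropy = 1.0  # two equally proportioned groups
mean_entropy = round(sum(entropies.values()) / len(entropies), 2)  # 0.84
info_gain = round(target_entropy - mean_entropy, 2)  # 0.16

# Entropy-gain ratios (weight factor: 0.76 / 0.16 = 4.75) and normalized weights.
ratios = {k: e / info_gain for k, e in entropies.items()}
total = sum(ratios.values())
weights = {k: r / total for k, r in ratios.items()}

if __name__ == "__main__":
    print(round(weight_entropy, 2), mean_entropy, info_gain)
    print(round(ratios["weight"], 2), round(weights["weight"], 2))
```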

3.4.8. OWOD Evaluation and Comparison

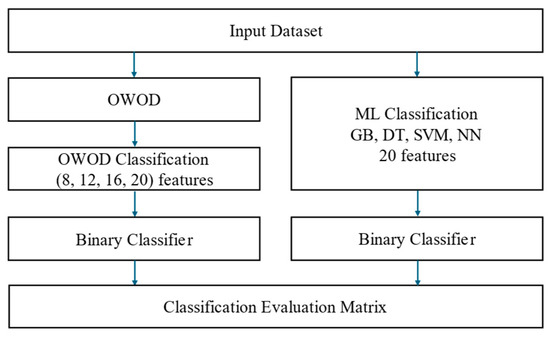

This section presents the OWOD evaluation process, as illustrated in Figure 8. The OWOD method is compared across different numbers of selected features and against other ML classification models.

Figure 8.

The optimized weighted objective distance (OWOD) evaluation framework for determining the optimal number of features used in constructing the OWOD and comparing classification performance with other machine learning (ML) models.

The impact of increasing the number of features (8, 12, 16, 20) was investigated to assess how varying levels of feature dimensionality influence the performance of the OWOD model. The best-performing OWOD model, identified based on the optimal number of features, was compared with several ML models, including GB, DT, neural network (NN), and SVM. For training and testing processes, model performance was assessed using standard evaluation metrics: accuracy, precision, recall, F1-score, and area under the receiver operating characteristic curve (AUC-ROC). A 5-fold cross-validation was applied to the training dataset (80%) to ensure robust validation, and the results were compared with the testing performance on the remaining 20% of the data.
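The data-splitting protocol (an 80/20 hold-out split with 5-fold cross-validation on the training portion) can be sketched with the standard library alone. The shuffle seed and the use of a plain, non-stratified split are assumptions; the source does not state whether the split was stratified by class, and a stratified variant would keep the dementia/heart failure ratio equal across folds.

```python
import random


def holdout_split(n_records, test_fraction=0.2, seed=42):
    """Shuffle record indices and return (train, test) index lists."""
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)
    n_test = round(n_records * test_fraction)
    return indices[n_test:], indices[:n_test]


def k_folds(indices, k=5):
    """Yield (train, validation) index lists; each index is validated exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, folds[i]


if __name__ == "__main__":
    train_idx, test_idx = holdout_split(10_000)
    print(len(train_idx), len(test_idx))  # 8000 2000
    for train, val in k_folds(train_idx):
        print(len(train), len(val))      # 6400 1600 for each fold
```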

The OWOD algorithm builds upon the previously established WOD method, which requires predefined feature ranges and target values that must be manually specified based on expert knowledge. The OWOD eliminates this requirement by deriving the feature ranges and target values directly from the dataset through an objective function. This data-driven approach makes the OWOD particularly well suited for classifying dementia and heart failure, for which biomarkers and their optimal ranges are often poorly established. Consequently, the WOD could not be applied for direct comparison with the OWOD in this context.

4. Results and Discussion

This section presents the results of the proposed measurement for classifying groups of patients with dementia and heart failure. The input data used for training and validation consisted of 8000 records containing risk factor and blood biomarker features for both groups. These records were used to calculate the OWOD values and to train the ML models. The evaluation was performed on 2000 unseen records to assess the classification performance of both the OWOD approach and the ML models.

4.1. Optimal Feature Dimension Results

Table 5 presents the results of the OWOD classification model using varying numbers of selected features, based on 8000 sample records representing 80% of the dataset.

Table 5.

Comparison of optimized weighted objective distance classifications by feature selection number.

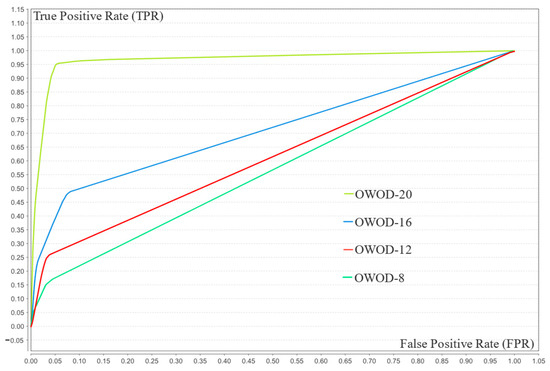

As seen in Table 5, the model’s performance improved as the number of features increased. With eight features, performance was limited by low recall (15.68%) and F1-score (26.44%), indicating poor sensitivity. As the number increased to 12 and 16, recall and F1-score improved notably, reflecting a better balance across metrics. With 20 features, all metrics reached their highest values: accuracy (94.95%), precision (95.64%), recall (94.20%), F1-score (94.91%), and AUC (96.60%). This indicates that higher feature dimensionality substantially improves the model’s effectiveness, particularly in detecting positive (dementia) cases. The ROC curves are compared in Figure 9.

Figure 9.

Receiver operating characteristic (ROC) chart of optimized weighted objective distance (OWOD) 8–20 features comparing the OWOD with 8 features (green), 12 features (red), 16 features (blue), and 20 features (lime).

The OWOD configuration with 20 features achieved the highest and most stable true positive rate (TPR) across all false positive rate (FPR) values, suggesting that, among the configurations tested, 20 features offered the best balance between model complexity and performance.
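Because a lower OWOD indicates the positive (dementia) class, the ROC analysis must rank individuals by decreasing OWOD rather than increasing. A minimal sketch of the AUC under that convention, using the pairwise (Mann–Whitney) formulation with ties counted as one half, is shown below; the function name and toy values are illustrative. The O(n·m) pairwise loop is for clarity only; a rank-based implementation scales better to thousands of records.

```python
def auc_from_owod(owod_values, labels):
    """AUC = P(OWOD of a random positive < OWOD of a random negative).

    labels: 1 for the positive class (dementia), 0 for the negative class
    (heart failure). Ties contribute one half.
    """
    pos = [s for s, y in zip(owod_values, labels) if y == 1]
    neg = [s for s, y in zip(owod_values, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p < n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    scores = [0.2, 0.3, 0.5, 0.4, 0.6, 0.7]
    labels = [1, 1, 1, 0, 0, 0]
    print(auc_from_owod(scores, labels))
```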

4.2. OWOD Classification Results

Table 6 shows the results of the OWOD classification using 20 features. These are labeled as “dementia” for positive class groups and “heart failure” for negative groups.

Table 6.

Optimized weighted objective distance (OWOD) classification results.

These results show the OWOD value and weight information for each person. The weight information indicates the degree to which each feature contributes to the target conditions. Weight information with a value of 0 denotes an insignificant feature, indicating that the feature is controlled within the acceptable level; such features were eliminated to enhance classification performance. Weight information with a value greater than 0 denotes a significant feature affecting dementia development.

In addition, information gain computed from the reduction in entropy was used to assign weights to each feature. The weighted features represent the priority of each feature in the classification. The OWOD aggregated over all features can then be used to classify groups: older adults whose OWOD does not exceed the cut-off OWOD can be considered as belonging to the positive group (dementia), and those whose OWOD exceeds it as belonging to the negative group (heart failure). Thus, Person No. 1, with an OWOD of 0.4051, not exceeding the cut-off, was classified as belonging to the positive group.

Confusion Matrix Evaluation

The classification performance of the OWOD was also evaluated using a confusion matrix, as shown in Table 7. The classified class was derived from the proposed measurement, and the actual class was obtained from the doctor’s decision record. TP denotes true positive, representing older adults correctly classified as belonging to the OC group. TN denotes true negative, representing those correctly classified as belonging to the NC group. FP denotes a false positive, representing those incorrectly predicted as being in the OC group. FN denotes a false negative, representing those incorrectly predicted as being in the NC group.

Table 7.

Confusion matrix of the proposed optimized weighted objective distance.

The confusion matrix of the proposed OWOD for the 8000 records shows that 7596 individuals were correctly classified (an accuracy of 94.95%). This suggests that prioritizing features by weights derived from information gain, and thereby eliminating features that do not influence the classification, is feasible. In total, 404 individuals were misclassified. Variability in the measurement of particular features may account for these errors. For instance, blood pressure was recorded manually, so the circumstances of measurement could affect its accuracy; recent caffeine use or smoking can raise SBP readings above baseline. These findings illustrate how misclassifications can arise and emphasize the difficulty of classifying cases with overlapping risk factors, especially when the input features lack adequate discriminatory power.
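The metrics reported throughout this section follow directly from the four confusion-matrix cells. The sketch below computes them; the cell counts in the example are hypothetical (chosen only so that 7596 of 8000 records are correct, as stated above) and are not the values in Table 7.

```python
def metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall (sensitivity), and F1-score from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical cell counts: 3768 + 3828 = 7596 correct, 172 + 232 = 404 incorrect.
    print(metrics(tp=3768, fp=172, fn=232, tn=3828))
```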

4.3. Model Validation Results

This study used five-fold cross-validation to verify the OWOD model. A comparison of the OWOD and ML classification models for the validation process is shown in Table 8.

Table 8.

Comparison of the validation results of the optimized weighted objective distance (OWOD) and other machine learning models.

The validation results demonstrate the superior performance of the OWOD compared with traditional and ensemble ML methods using 20 features. The OWOD achieved the highest overall performance across all key metrics, with an accuracy of 94.95% ± 0.96, a precision of 95.64% ± 0.95, a recall of 94.20% ± 1.11, an F1-score of 94.91% ± 0.48, and an AUC-ROC of 96.60% ± 0.013. GB, while relatively precise (91.34% ± 1.16), underperformed in recall and F1-score, indicating limitations in sensitivity. The DT, SVM, and NN models exhibited even lower recall and AUC-ROC values, suggesting a weaker ability to detect positive dementia cases accurately. These findings underscore the robustness and effectiveness of the OWOD, particularly its capacity to maintain high discrimination power, which is critical for reliable medical classification.

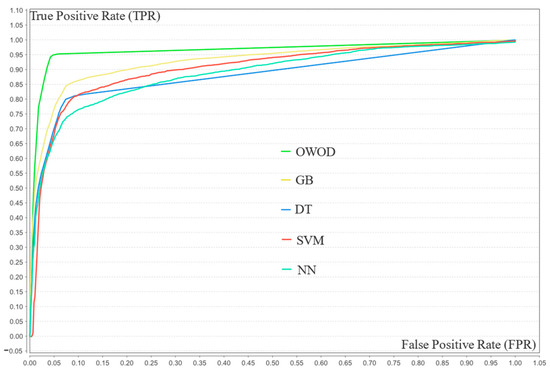

Figure 10 presents the ROC curves comparing the classification performance of five models—OWOD, GB, NN, DT, and SVM—using 20 features. The OWOD (green curve) outperformed all other methods with a consistently higher true positive rate (TPR) across nearly all false positive rates (FPRs), approaching a near-perfect classification boundary. The traditional ML models, particularly SVM and DT, demonstrated lower TPRs at equivalent FPRs, suggesting a reduced ability to distinguish between classes. This indicates that the OWOD offers a more effective solution for accurate classification in this context.

Figure 10.

Receiver operating characteristic (ROC) curve comparison of the validation results of the optimized weighted objective distance (OWOD) and other machine learning models.

To ensure fair and consistent model comparisons, each method was implemented with carefully selected hyperparameters and evaluated using five-fold cross-validation. The hyperparameter details of both the ML and OWOD classifications are presented in Table 9. These configurations were selected by grid search to ensure reliable and reproducible performance evaluation across models.

Table 9.

Summary of the hyperparameters of the machine learning classifications.

4.4. Statistical Significance Comparison

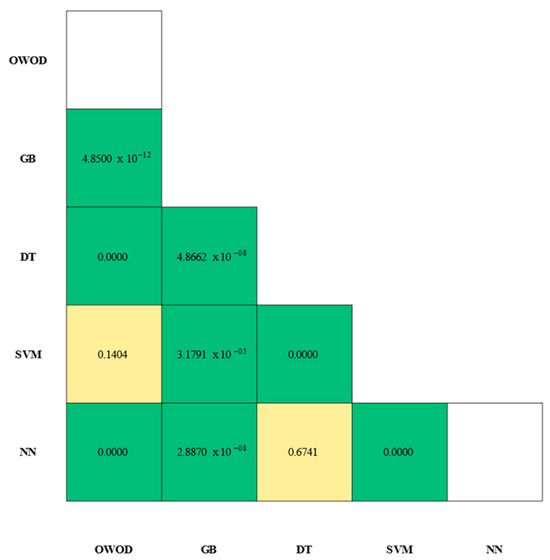

Statistical validation of model performance was carried out by comparing the classifiers in pairs. McNemar’s test was applied to the paired predictions of all models. The significance matrix is illustrated in Figure 11.

Figure 11.

Statistical significance matrix from McNemar’s tests comparing model classifier pairs. Each cell represents the p-value of the comparison between two models. Green cells indicate statistically significant differences (p < 0.01), whereas yellow cells indicate non-significant differences (p ≥ 0.01).

The results showed a statistically significant difference between the OWOD and GB, with the OWOD ranking first (OWOD AUC = 96.60%; GB AUC = 92.90%), and GB’s performance differed significantly from that of all other models. In contrast, the OWOD–SVM and DT–NN pairs showed some overlap in classification performance.
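McNemar’s test uses only the discordant pairs: b, the records that classifier A gets right and classifier B gets wrong, and c, the reverse. The sketch below implements the common chi-square form with Edwards’ continuity correction and one degree of freedom, whose upper tail equals erfc(√(χ²/2)). Whether the authors used this form or the exact binomial variant is not stated, so this is an assumption.

```python
import math


def mcnemar(y_true, pred_a, pred_b, continuity=True):
    """McNemar's chi-square test (1 degree of freedom) on paired predictions.

    Returns (statistic, p_value).
    """
    b = sum(1 for y, a, p in zip(y_true, pred_a, pred_b) if a == y and p != y)
    c = sum(1 for y, a, p in zip(y_true, pred_a, pred_b) if a != y and p == y)
    if b + c == 0:
        return 0.0, 1.0  # identical error patterns: no evidence of a difference
    diff = abs(b - c) - (1 if continuity else 0)
    stat = diff ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(stat / 2))  # chi-square(1) upper tail
    return stat, p_value


if __name__ == "__main__":
    y = [1] * 40
    model_a = [1] * 30 + [0] * 10   # correct on the first 30 records
    model_b = [0] * 30 + [1] * 10   # correct on the last 10 records
    print(mcnemar(y, model_a, model_b))
```

With 30 versus 10 discordant records, χ² = 9.025 and p < 0.01, which would correspond to a significant (green) cell in a matrix like Figure 11.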

4.5. Model Comparison and Discussion

A performance comparison of the OWOD and other ML-based methods is presented in Table 10. These results were obtained from the evaluation using 20% unseen data.

Table 10.

Comparison of the testing results of the optimized weighted objective distance (OWOD) and other machine learning models.


| Classification Method | No. of Features | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | AUC-ROC (%) |
|---|---|---|---|---|---|---|
| OWOD | 20 | 95.45 | 96.14 | 94.70 | 95.42 | 97.10 |
| Gradient boosting (GB) | 20 | 88.20 | 90.90 | 84.90 | 87.80 | 92.40 |
| Decision tree (DT) | 20 | 86.20 | 91.04 | 80.30 | 85.33 | 87.30 |
| Support vector machine (SVM) | 20 | 84.40 | 84.89 | 83.70 | 84.29 | 90.10 |
| Neural network (NN) | 20 | 83.75 | 88.14 | 78.00 | 82.76 | 88.80 |

The testing results confirm the superior classification performance of the OWOD compared with conventional ML algorithms. The OWOD achieved the highest scores across all evaluation metrics, with an accuracy of 95.45%, a precision of 96.14%, a recall of 94.70%, an F1-score of 95.42%, and an AUC-ROC of 97.10%. This reflects the ability of the OWOD to identify both positive and negative classes accurately while maintaining a good balance between precision and recall (sensitivity). GB demonstrated reasonably strong precision (90.90%) but lower recall (84.90%) and F1-score (87.80%), indicating a greater likelihood of missing positive (dementia) cases. Traditional models such as DT, SVM, and NN exhibited even lower recall and AUC-ROC values, indicating limited effectiveness in differentiating between classes. These findings demonstrate that the OWOD delivers high classification performance and generalizes well to previously unseen data.

Evaluation of Model Performance

The results from both the validation and testing phases demonstrated the superior performance and robustness of the OWOD classification model over conventional ensemble and traditional ML models. During validation, the OWOD achieved the highest metrics across all performance indicators (accuracy 94.95% ± 0.96; precision 95.64% ± 0.95; recall 94.20% ± 1.11; F1-score 94.91% ± 0.48; AUC-ROC 96.60% ± 0.013). The OWOD maintained top-tier performance in the testing phase with an accuracy of 95.45%, a precision of 96.14%, a recall of 94.70%, an F1-score of 95.42%, and an AUC-ROC of 97.10%, demonstrating excellent generalization to unseen data.

While ensemble methods such as GB are known for improving accuracy by combining multiple weak learners, the OWOD surpassed GB in all metrics. This suggests that the OWOD’s class-wise feature interpretation and target-based thresholding mechanism enable a more refined and interpretable classification boundary. Despite leveraging ensemble principles, GB showed relatively lower recall (85.26% ± 1.38 in validation; 84.90% in testing), indicating potential underperformance in identifying TP cases—an important consideration in health-related applications where sensitivity is crucial.

Traditional classifiers such as DT, SVM, and NN exhibited further limitations, with lower recall, F1-score, and AUC-ROC values in both validation and testing. While computationally efficient, these models often struggle with complex decision boundaries and noisy/overlapping feature distributions. The OWOD incorporates a distribution-based entropy framework and utilizes optimal thresholds derived from class-wise histograms, allowing for the capture of fine-grained patterns that rigid or margin-based decision mechanisms in DT, SVM, and NN may overlook. In this context, the OWOD outperformed ensemble and traditional classifiers in predictive accuracy and robustness.

It can therefore be concluded that the proposed OWOD has greater potential for classifying groups of individuals with dementia and heart failure than the other ML classification methods tested. The hypothesis of this study, that the proposed measurement can improve classification performance, is thus supported. In addition, the OWOD can handle complex attributes with multiple conditions during the attribute weighting process, which further enhances performance.

4.6. Suggestions and Future Study

This study demonstrated the effectiveness of the proposed OWOD classification method in classifying individuals with dementia and heart failure using blood biomarker features. The model achieved strong performance across all evaluation metrics, underscoring its potential as a practical, noninvasive, and widely accessible decision support tool. To support clinical adoption, future research should focus on validating the model across diverse populations and healthcare settings, as well as exploring additional blood-based biomarkers to further enhance performance. While these findings are promising, the exclusion of individuals diagnosed with both dementia and heart failure in order to maintain clear class boundaries is a limitation and may reduce applicability in real-world settings. Incorporating more granular clinical information and multi-label classification approaches would allow for more sophisticated modeling and improved classification performance among complex patient populations.

The OWOD offers clear interpretability, as its decisions are based on the distance from target feature values, allowing clinicians to understand the contribution of each factor. Additionally, after classification, the OWOD identifies each individual’s significant features, which healthcare providers could use to offer personalized recommendations. These features could also guide lifestyle changes on a broad scale. As a result, older adults could better monitor their health status, which may help prevent dementia or heart failure. Potential barriers to real-world implementation include validation across diverse populations, seamless integration with existing EHR systems, and acceptance by clinicians and patients.

5. Conclusions

This study proposes the OWOD as a novel measurement to distinguish groups of patients with dementia and heart failure. The OWOD is a modification of the original WOD that uses entropy-based feature weighting and an objective function derived from class-wise histogram differences, promoting a data-driven approach. The feature weights indicate each feature’s priority in the classification and allow features that do not influence the classification to be eliminated. This study evaluated the proposed OWOD using 20 features and 10,000 EHRs and compared it with other ML classification models, including GB, DT, SVM, and NN. The OWOD outperformed the other ML models in both validation and testing in terms of accuracy, precision, recall, F1-score, and AUC-ROC. Future studies should include more multi-site datasets and refine features to ensure generalization and practical applicability.

Author Contributions

Conceptualization, V.N., S.C., G.H. and P.T.; methodology, V.N., S.C. and P.T.; software, V.N.; validation, V.N. and S.C.; formal analysis, G.H. and P.T.; resources, S.C.; data curation, V.N.; visualization, V.N.; writing—original draft preparation, V.N.; writing—review and editing, S.C. and P.T.; supervision, S.C. and P.T.; project administration, P.T.; funding acquisition, G.H. and P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This study is supported in part by the Program Management Unit for Human Resources & Institutional Development, Research and Innovation (PMU-B), contract number: B04G640071; and in part by the European Union (EU) NextGenerationEU through the National Recovery and Resilience Plan, Bulgaria, project number: BG-RRP-2.013-0001.

Institutional Review Board Statement

This study received a certificate of exemption from the Mae Fah Luang University Ethics Committee on Human Research (protocol number EC 21150-13, dated 30 September 2021), as well as a certificate of ethical approval from Chiangrai Prachanukroh Hospital (protocol number EC CRH 112/64 In, dated 7 February 2022).

Informed Consent Statement

Not applicable.

Data Availability Statement

Access to the dataset is currently restricted, as the study is still ongoing.

Acknowledgments

The authors would like to express their gratitude to Chiangrai Prachanukroh Hospital, Chiang Rai, Thailand, for the data and valuable assistance.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Livingston, G.; Huntley, J.; Liu, K.Y.; Costafreda, S.G.; Selbæk, G.; Alladi, S.; Ames, D.; Banerjee, S.; Burns, A.; Brayne, C.; et al. Dementia prevention, intervention, and care: 2024 report of the Lancet standing commission. Lancet 2024, 404, 572–628. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Gao, D.; Liang, J.; Ji, M.; Zhang, W.; Pan, Y.; Zheng, F.; Xie, W. The role of life’s crucial 9 in cardiovascular disease incidence and dynamic transitions to dementia. Commun. Med. 2025, 5, 223. [Google Scholar] [CrossRef] [PubMed]

- Ye, S.; Huynh, Q.; Potter, E.L. Cognitive dysfunction in heart failure: Pathophysiology and implications for patient management. Comorbidities 2022, 19, 303–315. [Google Scholar] [CrossRef]

- Liu, J.; Xiao, G.; Liang, Y.; He, S.; Lyu, M.; Zhu, Y. Heart–brain interaction in cardiogenic dementia: Pathophysiology and therapeutic potential. Front. Cardiovasc. Med. 2024, 11, 1304864. [Google Scholar] [CrossRef]

- Khan, S.S.; Matsushita, K.; Sang, Y.; Ballew, S.H.; Grams, M.E.; Surapaneni, A.; Blaha, M.J. Development and validation of the American Heart Association’s PREVENT equations. Circulation 2024, 149, 430–449. [Google Scholar] [CrossRef] [PubMed]

- World Health Organization. Dementia. WHO Fact Sheets. 31 March 2025. Available online: https://www.who.int/news-room/fact-sheets/detail/dementia (accessed on 8 September 2025).

- GBD 2019 Dementia Forecasting Collaborators. Estimation of the global prevalence of dementia in 2019 and forecasted prevalence in 2050: An analysis for the Global Burden of Disease Study 2019. Lancet Public Health 2022, 7, e105–e125. [Google Scholar] [CrossRef]

- Sohn, M.; Yang, J.; Sohn, J.; Lee, J. Digital healthcare for dementia and cognitive impairment: A scoping review. Int. J. Nurs. Stud. 2023, 140, 104413. [Google Scholar] [CrossRef]

- Stoppa, E.; Di Donato, G.W.; Parde, N.; Santambrogio, M.D. Computer-aided dementia detection: How informative are your features? In Proceedings of the IEEE 7th Forum on Research and Technologies for Society and Industry Innovation (RTSI), Paris, France, 24–26 August 2022. [Google Scholar] [CrossRef]

- Chaiyo, Y.; Temdee, P. A comparison of machine learning methods with feature extraction for classification of patients with dementia risk. In Proceedings of the Joint International Conference on Digital Arts, Media and Technology with ECTI Northern Section Conference on Electrical, Electronics, Computer and Telecommunications Engineering (ECTI DAMT & NCON), Chiang Rai, Thailand, 26–28 January 2022. [Google Scholar] [CrossRef]

- Hu, S.; Yang, C.; Luo, H. Current trends in blood biomarker detection and imaging for Alzheimer’s disease. Biosens. Bioelectron. 2022, 210, 114278. [Google Scholar] [CrossRef]

- Savarese, G.; Becher, P.M.; Lund, L.H.; Seferovic, P.; Rosano, G.M.C.; Coats, A.J.S. Global burden of heart failure: A comprehensive and updated review of epidemiology. Cardiovasc. Res. 2022, 118, 3272–3287. [Google Scholar] [CrossRef]

- Li, R.; Wang, X.; Lawler, K.; Garg, S.; Bai, Q.; Alty, J. Applications of artificial intelligence to aid early detection of dementia: A scoping review on current capabilities and future directions. J. Biomed. Inform. 2022, 127, 104030. [Google Scholar] [CrossRef] [PubMed]

- Mahendran, N.; Durai Raj Vincent, P.M. A deep learning framework with an embedded-based feature selection approach for the early detection of Alzheimer’s disease. Comput. Biol. Med. 2022, 141, 105056. [Google Scholar] [CrossRef]

- Ahmed, H.; Soliman, H.; Elmogy, M. Early detection of Alzheimer’s disease using single nucleotide polymorphisms analysis based on gradient boosting tree. Comput. Biol. Med. 2022, 146, 105622. [Google Scholar] [CrossRef]

- Park, J.E.; Kim, H.J.; Kim, Y.E.; Jang, H.; Cho, S.H.; Kim, S.J.; Na, D.L.; Won, H.; Ki, C.; Seo, S.W. Analysis of dementia-related gene variants in APOE ε4 noncarrying Korean patients with early-onset Alzheimer’s disease. Neurobiol. Aging 2020, 85, 155.e5–155.e8. [Google Scholar] [CrossRef]

- El-Sappagh, S.; Ali, F.; Abuhmed, T.; Singh, J.; Alonso, J.M. Automatic detection of Alzheimer’s disease progression: An efficient information fusion approach with heterogeneous ensemble classifiers. Neurocomputing 2022, 512, 203–224. [Google Scholar] [CrossRef]

- Shanmugam, J.V.; Duraisamy, B.; Simon, B.C.; Bhaskaran, P. Alzheimer’s disease classification using pre-trained deep networks. Biomed. Signal Process. Control 2022, 71, 103217. [Google Scholar] [CrossRef]

- Di Benedetto, M.; Carrara, F.; Tafuri, B.; Nigro, S.; De Blasi, R.; Falchi, F.; Gennaro, C.; Gigli, G.; Logroscino, G.; Amato, G. Deep networks for behavioral variant frontotemporal dementia identification from multiple acquisition sources. Comput. Biol. Med. 2022, 148, 105937. [Google Scholar] [CrossRef] [PubMed]

- Rallabandi, V.P.S.; Seetharaman, K. Deep learning-based classification of healthy aging controls, mild cognitive impairment and Alzheimer’s disease using fusion of MRI-PET imaging. Biomed. Signal Process. Control 2023, 80, 104312. [Google Scholar] [CrossRef]

- Li, J.P.; Haq, A.U.; Din, S.U.; Khan, J.; Khan, A.; Saboor, A. heart disease identification method using machine learning classification in e-healthcare. IEEE Access 2020, 8, 107562–107582. [Google Scholar] [CrossRef]

- Ahmad, A.A.; Polat, H. Prediction of heart disease based on machine learning using jellyfish optimization algorithm. Diagnostics 2023, 13, 2392.

- Aslam, M.U.; Xu, S.; Hussain, S.; Waqas, M.; Abiodun, N.L. Machine learning based classification of valvular heart disease using cardiovascular risk factors. Sci. Rep. 2024, 14, 24396.

- Chulde-Fernández, B.; Enríquez-Ortega, D.; Tirado-Espín, A.; Vizcaíno-Imacaña, P.; Guevara, C.; Navas, P.; Villalba-Meneses, F.; Cadena-Morejon, C.; Almeida-Galarraga, D.; Acosta-Vargas, P. Classification of heart failure using machine learning: A comparative study. Life 2025, 15, 496.

- Yongcharoenchaiyasit, K.; Arwatchananukul, S.; Temdee, P.; Prasad, R. Gradient boosting-based model for elderly heart failure, aortic stenosis, and dementia classification. IEEE Access 2023, 11, 48677–48696.

- Yongcharoenchaiyasit, K.; Arwatchananukul, S.; Hristov, G.; Temdee, P. Enhanced multi-model machine learning-based dementia detection using a data enrichment framework: Leveraging the blessing of dimensionality. Bioengineering 2025, 12, 592.

- Chaising, S.; Temdee, P.; Prasad, R. Weighted objective distance for the classification of elderly people with hypertension. Knowl.-Based Syst. 2020, 210, 106441.

- Nuankaew, P.; Chaising, S.; Temdee, P. Average weighted objective distance-based method for type 2 diabetes prediction. IEEE Access 2021, 9, 49018–49031.

- Daugherty, A.M. Hypertension-related risk for dementia: A summary review with future directions. Semin. Cell Dev. Biol. 2021, 116, 82–89.

- Brown, J.; Xiong, X.; Wu, J.; Li, M.; Lu, Z.K. CO76 Hypertension and the risk of dementia among medicare beneficiaries in the U.S. Value Health 2022, 25, S318.

- Campbell, G.; Jha, A. Dementia risk and hypertension: A literature review. J. Neurol. Sci. 2019, 405, 146.

- Park, K.; Nam, G.; Han, K.; Hwang, H. Body weight variability and the risk of dementia in patients with type 2 diabetes mellitus: A nationwide cohort study in Korea. Diabetes Res. Clin. Pract. 2022, 190, 110015.

- Iwagami, M.; Qizilbash, N.; Gregson, J.; Douglas, I.; Johnson, M.; Pearce, N.; Evans, S.; Pocock, S. Blood cholesterol and risk of dementia in more than 1.8 million people over two decades: A retrospective cohort study. Lancet Healthy Longev. 2021, 2, e498–e506.

- Gong, J.; Harris, K.; Peters, S.A.E.; Woodward, M. Serum lipid traits and the risk of dementia: A cohort study of 254,575 women and 214,891 men in the UK Biobank. Lancet 2022, 54, 101695.

- Bertini, F.; Allevi, D.; Lutero, G.; Calzà, L.; Montesi, D. An automatic Alzheimer’s disease classifier based on spontaneous spoken English. Comput. Speech Lang. 2022, 72, 101298.

- Milana, S. Dementia classification using attention mechanism on audio data. In Proceedings of the 2023 IEEE 21st World Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 19–21 January 2023.

- Meng, X.; Fang, S.; Zhang, S.; Li, H.; Ma, D.; Ye, Y.; Su, J.; Sun, J. Multidomain lifestyle interventions for cognition and the risk of dementia: A systematic review and meta-analysis. Int. J. Nurs. Stud. 2022, 130, 104236.

- Cilia, N.D.; De Gregorio, G.; De Stefano, C.; Fontanella, F.; Marcelli, A.; Parziale, A. Diagnosing Alzheimer’s disease from on-line handwriting: A novel dataset and performance benchmarking. Eng. Appl. Artif. Intell. 2022, 111, 104822.

- Masuo, A.; Ito, Y.; Kanaiwa, T.; Naito, K.; Sakuma, T.; Kato, S. Dementia screening based on SVM using qualitative drawing error of clock drawing test. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022.

- Wong, R.; Lovier, M.A. Sleep disturbances and dementia risk in older adults: Findings from 10 years of national U.S. prospective data. Am. J. Prev. Med. 2023, 64, 781–787.

- Khosroazad, S.; Abedi, A.; Hayes, M.J. Sleep signal analysis for early detection of Alzheimer’s disease and related dementia (ADRD). IEEE J. Biomed. Health Inform. 2023, 27, 3235391.

- Chiu, S.Y.; Wyman-Chick, K.A.; Ferman, T.J.; Bayram, E.; Holden, S.K.; Choudhury, P.; Armstrong, M.J. Sex differences in dementia with Lewy bodies: Focused review of available evidence and future directions. Park. Relat. Disord. 2023, 107, 105285.

- Alvi, A.M.; Siuly, S.; Wang, H.; Wang, K.; Whittaker, F. A deep learning based framework for diagnosis of mild cognitive impairment. Knowl.-Based Syst. 2022, 248, 108815.

- Lin, H.; Tsuji, T.; Kondo, K.; Imanaka, Y. Development of a risk score for the prediction of incident dementia in older adults using a frailty index and health checkup data: The JAGES longitudinal study. Prev. Med. 2018, 112, 88–96.

- Zhang, Y.; Xu, W.; Zhang, W.; Wang, H.; Ou, Y.; Qu, Y.; Shen, X.; Chen, S.; Wu, K.; Zhao, Q.; et al. Modifiable risk factors for incident dementia and cognitive impairment: An umbrella review of evidence. J. Affect. Disord. 2022, 314, 160–167.

- Sigala, E.G.; Vaina, S.; Chrysohoou, C.; Dri, E.; Damigou, E.; Tatakis, F.P.; Sakalidis, A.; Barkas, F.; Liberopoulos, E.; Sfikakis, P.P.; et al. Sex-related differences in the 20-year incidence of CVD and its risk factors: The ATTICA study (2002–2022). Am. J. Prev. Cardiol. 2024, 19, 100709.

- Goldsborough III, E.; Osuji, N.; Blaha, M.J. Assessment of cardiovascular disease risk: A 2022 update. Endocrinol. Metab. Clin. N. Am. 2022, 51, 483–509.

- Young, K.A.; Scott, C.G.; Rodeheffer, R.J.; Chen, H.H. Incidence of preclinical heart failure in a community population. J. Am. Heart Assoc. 2022, 11, e025519.

- Sevilla-Salcedo, C.; Imani, V.; Olmos, P.M.; Gómez-Verdejo, V.; Tohka, J. Multi-task longitudinal forecasting with missing values on Alzheimer’s disease. Comput. Methods Programs Biomed. 2022, 226, 107056.

- Kherchouche, A.; Ben-Ahmed, O.; Guillevin, C.; Tremblais, B.; Julian, A.; Fernandez-Maloigne, C.; Guillevin, R. Attention-guided neural network for early dementia detection using MRS data. Comput. Med. Imag. Graph. 2022, 99, 102074.

- Ahmad, M.; Ahmed, A.; Hashim, H.; Farsi, M.; Mahmoud, N. Enhancing heart disease diagnosis using ECG signal reconstruction and deep transfer learning classification with optional SVM integration. Diagnostics 2025, 15, 1501.

- Menagadevi, M.; Mangai, S.; Madian, N.; Thiyagarajan, D. Automated prediction system for Alzheimer detection based on deep residual autoencoder and support vector machine. Optik 2023, 272, 170212.

- Darmawahyuni, A.; Firdaus, S.N.; Tutuko, B.; Yuwandini, M.; Rachmatullah, M.N. Congestive heart failure waveform classification based on short time-step analysis with recurrent network. Informat. Med. Unlocked 2020, 21, 100441.

- Chaiyo, Y.; Rueangsirarak, W.; Hristov, G.; Temdee, P. Improving early detection of dementia: Extra trees-based classification model using inter-relation-based features and K-means synthetic minority oversampling technique. Big Data Cogn. Comput. 2025, 9, 148.

- Phanbua, P.; Arwatchananukul, S.; Temdee, P. Classification model of dementia and heart failure in older adults using extra trees and oversampling-based technique. In Proceedings of the 2025 Joint International Conference on Digital Arts, Media and Technology with ECTI Northern Section Conference on Electrical, Electronics, Computer and Telecommunications Engineering (ECTI DAMT & NCON), Nan, Thailand, 29 January–1 February 2025.

- Dhar, A.; Dash, N.; Roy, K. Classification of text documents through distance measurement: An experiment with multi-domain Bangla text documents. In Proceedings of the 2017 3rd International Conference on Advances in Computing, Communication & Automation (ICACCA) (Fall), Dehradun, India, 15–16 September 2017.

- Greche, L.; Jazouli, M.; Es-Sbai, N.; Majda, A.; Zarghili, A. Comparison between Euclidean and Manhattan distance measure for facial expressions classification. In Proceedings of the 2017 International Conference on Wireless Technologies, Embedded and Intelligent Systems (WITS), Fez, Morocco, 19–20 April 2017.

- Han, J.; Park, D.C.; Woo, D.M.; Min, S.Y. Comparison of distance measures on Fuzzy c-means algorithm for image classification problem. AASRI Procedia 2013, 4, 50–56.

- Chaichumpa, S.; Temdee, P. Assessment of student competency for personalised online learning using objective distance. Int. J. Innov. Technol. Explor. Eng. 2018, 23, 19–36.

- Chaising, S.; Temdee, P. Determining recommendations for preventing elderly people from cardiovascular disease complication using objective distance. In Proceedings of the 2018 Global Wireless Summit (GWS), Chiang Rai, Thailand, 25–28 November 2018.

- Chaising, S.; Prasad, R.; Temdee, P. Personalized recommendation method for preventing elderly people from cardiovascular disease complication using integrated objective distance. Wirel. Pers. Commun. 2019, 117, 215–233.

- Alhaj, T.A.; Siraj, M.M.; Zainal, A.; Elshoush, H.T.; Elhaj, F. Feature selection using information gain for improved structural-based alert correlation. PLoS ONE 2016, 11, e0166017.

- Pereira, R.B.; Carvalho, A.P.D.; Zadrozny, B.; Merschmann, L.H.D.C. Information gain feature selection for multi-label classification. J. Inf. Data Manag. 2015, 6, 48–58.

- Azhagusundari, B.; Thanamani, A.S. Feature selection based on information gain. Int. J. Innov. Technol. Explor. Eng. 2013, 2, 18–21.

- Patil, L.H.; Atique, M. A novel feature selection based on information gain using WordNet. In Proceedings of the 2013 International Conference on Information and Network Security (ICINS 2013), London, UK, 7–9 October 2013.

- Baobao, B.; Jinsheng, M.; Minru, S. An enhancement of K-Nearest Neighbor algorithm using information gain and extension relativity. In Proceedings of the 2008 International Conference on Condition Monitoring and Diagnosis, Beijing, China, 21–24 April 2008.

- Gupta, M. Dynamic k-NN with attribute weighting for automatic web page classification (Dk-NNwAW). Int. J. Comput. Appl. 2012, 58, 34–40.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).