1. Introduction

Bowel sounds (BS) reflect contractile activity of the gastrointestinal (GI) tract and are routinely assessed during abdominal examination. Although BS are important in clinical practice, their interpretation remains largely subjective and lacks a standardized method of measurement [1]. Traditional stethoscope-based auscultation lacks consistency and is prone to substantial observer-dependent variability [2]. Recent progress in acoustic sensors and signal processing has made it possible to turn BS analysis into a quantitative and reliable diagnostic method [3,4].

Phonoenterography (PEG) captures detailed acoustic signatures of intestinal movement using surface microphones [5]. PEG offers a non-invasive and cost-effective way to assess GI motility compared with invasive procedures or imaging-based diagnostics [6,7]. However, PEG signals are difficult to interpret because they are acoustically complex and no standard classification scheme exists. Manual annotation is time-consuming and requires specialized expertise, which limits scalability [8,9].

Recent improvements in wearable acoustic sensors have made it possible to monitor PEG in real time [

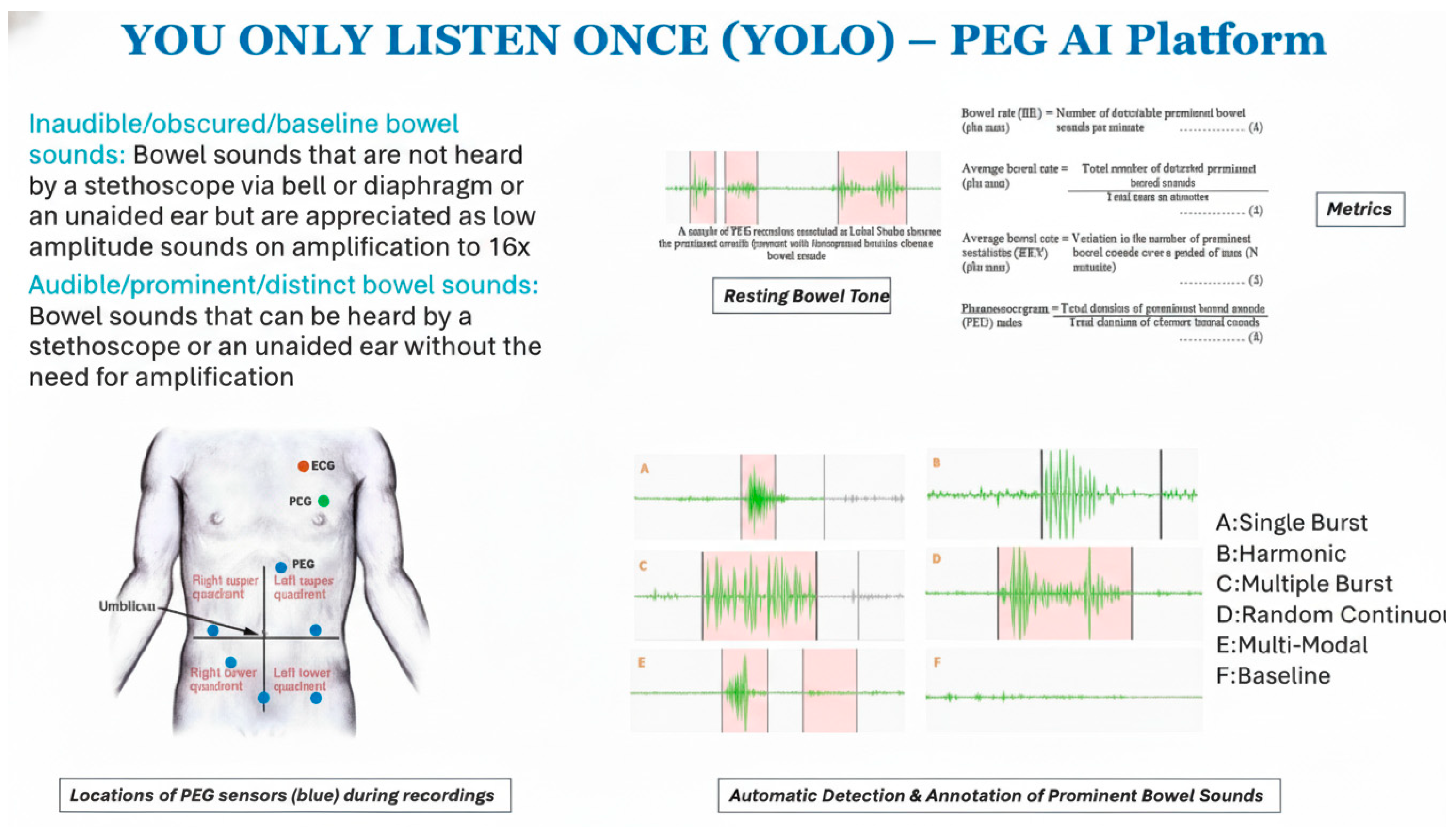

10]. This fits with our long-term goal of being able to check the gastrointestinal system while on the road. Our group previously developed a supervised deep learning model based on the You Only Listen Once (YOLO) architecture for automatic detection of prominent bowel sounds, achieving high accuracy in healthy subjects [

11]. While this demonstrated the feasibility of PEG-based acoustic detection, the requirement for thousands of expert-labeled annotations limited scalability and broader clinical applicability.

To address this challenge, unsupervised approaches are needed to phenotype bowel sounds directly from unlabeled recordings. Unsupervised YOLO-inspired pipelines can autonomously discover latent acoustic structures and scale to large datasets without requiring expert labels. This makes them better suited for clinical translation, where rapid and annotation-free deployment is essential. Moreover, such methods enable identification of previously uncharacterized bowel sound morphologies and can integrate seamlessly into continuous monitoring systems. Machine learning applications in gastrointestinal healthcare are expanding rapidly, affirming our emphasis on unsupervised acoustic phenotyping for prospective clinical implementation [12].

AI and machine learning techniques are becoming more common for studying physiological signals, especially in cardiology and pulmonology [13,14,15]. These advancements suggest analogous applications in gastroenterology. Previous research in acoustic clustering has demonstrated that dimensionality reduction approaches, such as UMAP (uniform manifold approximation and projection), when combined with clustering models like K-Means or DBSCAN, can reveal latent structures in biological data [16,17,18]. We extend this framework to bowel sound analysis by employing an extensive feature extraction and clustering methodology specifically designed for PEG signals [19]. Recent perspectives in digital gastroenterology have emphasized the importance of progressing PEG from proof-of-concept studies toward true clinical deployment. Redij et al. [19] reviewed the integration of PEG with emerging microwave sensors and artificial intelligence, outlining how these technologies could enable noninvasive, continuous, and wireless monitoring of gastrointestinal motility. Their work highlighted both the translational promise of PEG-AI pipelines and the current barriers, including noise resilience, lack of annotation-free algorithms, and limited validation in realistic clinical settings. This study expands on our previous supervised YOLO-based PEG research [11] by presenting an unsupervised, annotation-free pipeline that not only clusters prominent bowel sound events into reproducible phenotypes but also models their temporal dynamics. Our results represent a tangible progression from the conceptual framework established by Redij et al. [19], advancing PEG-AI analysis toward scaled clinical applications.

The present work introduces an unsupervised YOLO-inspired pipeline for automatic annotation, clustering, and temporal modeling of bowel sounds from high-resolution PEG recordings. Specifically, we (i) automatically segment and annotate prominent bowel sound events without manual labeling, (ii) cluster these events into reproducible and interpretable acoustic phenotypes, and (iii) characterize sequential dynamics through dwell times, inter-event gaps, and transition probabilities. This study provides a scalable, annotation-free framework with direct translational potential for gastrointestinal diagnostics and continuous monitoring.

2. Materials and Methods

2.1. Data Collection

We collected 110 high-quality PEG recordings from eight healthy volunteers (5 male, 3 female; age range: 22–35 years) in a controlled, acoustically shielded environment after Mayo Clinic IRB approval. The recordings were obtained using an Eko DUO

® digital stethoscope placed at the left upper quadrant (LUQ) and right lower quadrant (RLQ) in a controlled low-noise environment. The subjects underwent both fasting and postprandial recordings to capture physiological variability in bowel motility [

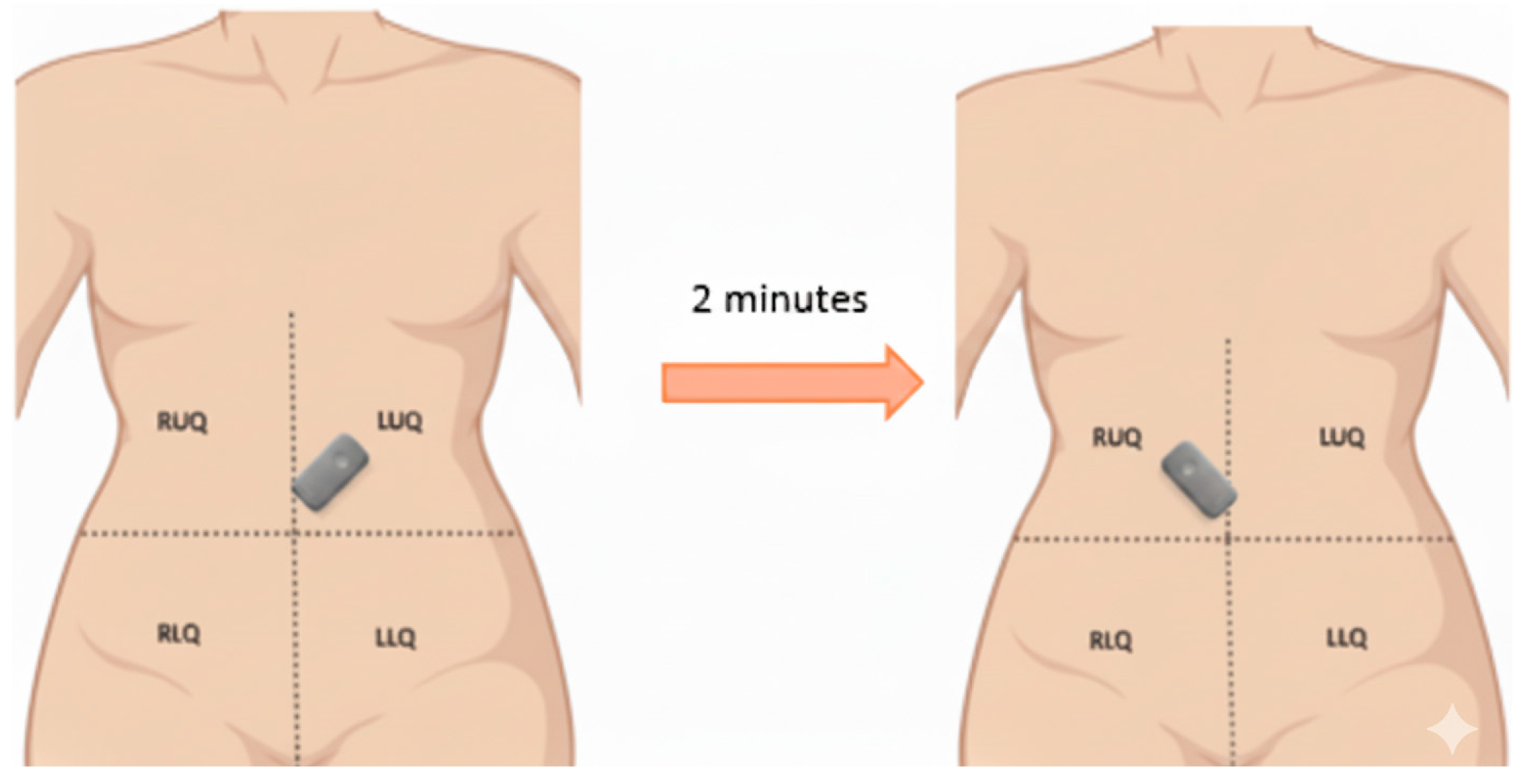

11]. No participants had a history of gastrointestinal disorders. Microelectromechanical (MEMS) microphones were affixed to the lower-right abdominal quadrant using skin-safe adhesive to ensure contact and reduce motion artifacts shown in

Figure 1. Each recording was 2 min long, sampled at 44.1 kHz with 16-bit resolution. Audio data were normalized for amplitude and pre-screened to remove segments with clipping or excessive ambient noise. No filtering, such as bandpass or denoising, was applied to preserve raw acoustic features.

2.2. Manual Annotation and Automatic Segmentation

Manual annotation of bowel sound events followed the protocol detailed in our prior study [11]. Recordings from eight healthy adults (5 female, 3 male) were obtained using an Eko DUO® digital stethoscope (Eko Health, Emeryville, CA 94608, USA) in a low-noise environment. Annotation was conducted in Label Studio v1.7 by trained research personnel experienced in PEG waveform interpretation. Annotators inspected both waveform and spectrogram views to label the onset of prominent bowel sound events. Labels were resolved by consensus rather than assigned independently by multiple raters; hence, formal inter-rater statistics (e.g., Cohen’s κ) were not applicable.

The automated detector correctly identified 6314 of 6435 manually labeled events within a ±100 ms tolerance window, yielding a match-rate (recall) of 98.1%, precision of 98.4%, and F1 = 98.25%. This high correspondence confirms the reliability of the consensus-based annotation protocol and supports the validity of the automatic detection pipeline.
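The tolerance-window comparison described above can be illustrated with a minimal sketch. It assumes a greedy one-to-one matching of detected onsets to manually labeled onsets; the function name `match_events` and the matching strategy are illustrative assumptions, not the procedure confirmed by the study.

```python
def match_events(detected, reference, tol=0.100):
    """Greedy one-to-one matching of detected onsets to reference onsets.

    Times are in seconds; `tol` is the +/- tolerance window (100 ms here).
    The greedy nearest-neighbour strategy is an assumption for illustration.
    """
    detected = sorted(detected)
    reference = sorted(reference)
    used = [False] * len(detected)
    tp = 0
    for r in reference:
        best, best_dist = None, tol
        for i, d in enumerate(detected):
            # Pick the closest unused detection that lies within the tolerance.
            if not used[i] and abs(d - r) <= best_dist:
                best, best_dist = i, abs(d - r)
        if best is not None:
            used[best] = True
            tp += 1
    fp = len(detected) - tp   # detections with no matching label
    fn = len(reference) - tp  # labels with no matching detection
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy example: two of three detections fall within 100 ms of a label.
precision, recall, f1 = match_events([0.50, 1.52, 3.00], [0.45, 1.50, 2.50])
```

Under this definition, the reported recall corresponds to 6314/6435 ≈ 0.981.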

Prominent bowel sound (PBS) segments were automatically detected using an energy-based peak detection algorithm tailored for PEG data. A short-time energy envelope was computed, and local maxima exceeding a dynamic threshold (mean + 2 × SD) were selected. Each detected peak was isolated using a 200 ms window (100 ms before and after the peak). Overlapping or closely spaced events (<100 ms apart) were discarded to reduce ambiguity.
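The detection steps above can be sketched as follows. This is a simplified illustration under stated assumptions: the short-time energy is computed over non-overlapping 10 ms frames (the frame length is not given in the text), and both members of a closely spaced pair are discarded. The function name `detect_prominent_events` is hypothetical.

```python
import numpy as np


def detect_prominent_events(signal, sr, frame_ms=10.0,
                            half_window_ms=100.0, min_sep_ms=100.0):
    """Return (start, end) sample indices of 200 ms windows around energy peaks."""
    signal = np.asarray(signal, dtype=float)
    frame = max(1, int(sr * frame_ms / 1000))
    n_frames = len(signal) // frame
    # Short-time energy: mean squared amplitude per frame (assumed framing).
    energy = (signal[: n_frames * frame].reshape(n_frames, frame) ** 2).mean(axis=1)
    # Dynamic threshold: mean + 2 x SD of the energy envelope.
    threshold = energy.mean() + 2.0 * energy.std()
    # Local maxima of the envelope that exceed the threshold.
    idx = np.arange(1, n_frames - 1)
    is_peak = ((energy[idx] > energy[idx - 1])
               & (energy[idx] >= energy[idx + 1])
               & (energy[idx] > threshold))
    peak_samples = idx[is_peak] * frame + frame // 2
    # Discard peaks that lie closer than min_sep_ms to a neighbouring peak.
    min_sep = int(sr * min_sep_ms / 1000)
    keep = np.ones(len(peak_samples), dtype=bool)
    gaps = np.diff(peak_samples)
    keep[:-1] &= gaps >= min_sep
    keep[1:] &= gaps >= min_sep
    # Isolate each remaining peak with 100 ms before and after it.
    half = int(sr * half_window_ms / 1000)
    return [(int(p - half), int(p + half)) for p in peak_samples[keep]
            if p - half >= 0 and p + half <= len(signal)]


# Toy signal: quiet baseline with two short bursts at 0.5 s and 1.5 s.
sr = 1000
toy = np.full(2000, 0.001)
toy[500:510] = 1.0
toy[1500:1510] = 1.0
events = detect_prominent_events(toy, sr)
```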

2.3. Feature Extraction

Every segment was transformed into a 279-dimensional audio feature vector. First, 20 Mel-frequency cepstral coefficients (MFCCs) were calculated using a frame length of 25 ms with a 10 ms overlap, yielding 13 frames per 200 ms segment. These were flattened into 260 coefficients (20 × 13). We then computed 13 Δ-MFCCs, indicative of temporal gradients, and six scalar spectral descriptors: centroid, bandwidth, flatness, roll-off, RMS energy, and spectral entropy. All features were concatenated (260 + 13 + 6 = 279) and standardized using z-scores so that each dimension contributed comparably to the clustering.
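The dimensional bookkeeping of the feature vector can be made concrete with a short sketch. It assumes the MFCC matrix, the 13 Δ-MFCC values, and the six scalar descriptors have already been computed by an audio library; the helper names `assemble_feature_vector` and `zscore` are hypothetical, and random numbers stand in for real features.

```python
import numpy as np

N_MFCC, N_FRAMES, N_DELTA, N_SCALAR = 20, 13, 13, 6


def assemble_feature_vector(mfcc, delta, scalars):
    """Concatenate per-segment features into one 279-dimensional vector."""
    mfcc = np.asarray(mfcc, dtype=float)
    delta = np.asarray(delta, dtype=float)
    scalars = np.asarray(scalars, dtype=float)
    if mfcc.shape != (N_MFCC, N_FRAMES):
        raise ValueError("expected a 20 x 13 MFCC matrix")
    if delta.shape != (N_DELTA,) or scalars.shape != (N_SCALAR,):
        raise ValueError("expected 13 delta values and 6 scalar descriptors")
    # 20 * 13 = 260 flattened MFCCs, then 13 deltas, then 6 scalars.
    return np.concatenate([mfcc.ravel(), delta, scalars])


def zscore(features, eps=1e-12):
    """Standardize each column to zero mean and unit variance."""
    features = np.asarray(features, dtype=float)
    mu = features.mean(axis=0)
    sd = features.std(axis=0)
    # Leave constant columns unscaled to avoid division by zero.
    return (features - mu) / np.where(sd > eps, sd, 1.0)


# Placeholder features for 50 segments (random values, for shape checking only).
rng = np.random.default_rng(0)
X = np.stack([
    assemble_feature_vector(rng.normal(size=(N_MFCC, N_FRAMES)),
                            rng.normal(size=N_DELTA),
                            rng.normal(size=N_SCALAR))
    for _ in range(50)
])
Z = zscore(X)
```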

2.4. Dimensionality Reduction

To reduce computation and mitigate noise, we first applied principal component analysis (PCA), retaining the top 30 components, which represented over 95% of the dataset’s variance. The components were subsequently mapped into a 10-dimensional manifold with UMAP, a nonlinear method intended to preserve both local relationships and the overall structure of the data. The UMAP parameters (n_neighbors = 35, min_dist = 0.01, metric = ‘cosine’) were refined by grid search to maximize the silhouette score and reduce cluster overlap. This configuration maintained both the local and global manifold structures needed to differentiate subtle acoustic patterns. To assess the robustness of the UMAP embedding, a grid-based sensitivity analysis was conducted by varying n_neighbors (15–50) and min_dist (0.001–0.1) while maintaining the cosine distance metric. The resulting silhouette scores ranged from 0.55 to 0.60, and the Adjusted Rand Index (ARI) values relative to the baseline configuration (n_neighbors = 35, min_dist = 0.01) were consistently above 0.90, confirming that cluster assignments were highly reproducible. These results, summarized in Table S1, indicate that small perturbations in UMAP hyperparameters did not materially alter cluster boundaries or class proportions, supporting the robustness of the five-cluster manifold.
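The PCA step can be illustrated with a minimal NumPy sketch that projects standardized features onto the leading components and reports the fraction of variance they retain. The helper `pca_reduce` and the synthetic low-rank data are assumptions for illustration; the UMAP stage is not reproduced here.

```python
import numpy as np


def pca_reduce(X, n_components):
    """Project X onto its top principal components via SVD.

    Returns the component scores and the fraction of total variance retained.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # centre each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S ** 2 / np.sum(S ** 2)          # variance ratio per component
    scores = Xc @ Vt[:n_components].T
    return scores, float(explained[:n_components].sum())


# Synthetic example: 40 observed features driven by 5 latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 5))
data = latent @ rng.normal(size=(5, 40)) + 0.01 * rng.normal(size=(200, 40))
scores, retained = pca_reduce(data, n_components=5)
```

In the study's setting, the analogous call would keep 30 components and check that the retained fraction exceeds 0.95.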

2.5. Clustering Algorithms

We evaluated three unsupervised learning methods using the 10-dimensional acoustic feature space generated by UMAP. The K-Means algorithm was employed with five clusters, initialized twenty times to mitigate the risk of suboptimal local solutions. Agglomerative clustering followed a bottom-up approach, using Ward’s linkage criterion to iteratively merge groups while minimizing within-cluster variability. Lastly, spectral clustering transformed the similarity matrix into a lower-dimensional space via graph Laplacian decomposition, after which K-Means was applied to uncover potential cluster structures. With these techniques, we developed a novel unsupervised framework, the You Only Listen Once (YOLO) platform for automated PEG analytics, to both detect and characterize prominent bowel sounds in a way that can aid the detection and diagnosis of various bowel diseases.
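The role of the repeated initializations can be shown with a compact sketch of Lloyd's K-Means algorithm run from several random starts, keeping the solution with the lowest within-cluster sum of squares. This is an illustrative NumPy implementation with uniform random initialization, not the library routine used in the study; the function names are hypothetical.

```python
import numpy as np


def kmeans_once(X, k, rng, n_iter=100):
    """One run of Lloyd's algorithm from a random initialization."""
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Recompute each centre; keep the old one if a cluster becomes empty.
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = dists.argmin(axis=1)
    inertia = float(dists[np.arange(len(X)), labels].sum())
    return labels, centers, inertia


def kmeans(X, k=5, n_init=20, seed=0):
    """Run K-Means n_init times and keep the lowest-inertia solution."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    runs = [kmeans_once(X, k, rng) for _ in range(n_init)]
    return min(runs, key=lambda run: run[2])


# Toy data: three well-separated 2-D blobs.
rng = np.random.default_rng(1)
blobs = np.vstack([rng.normal(c, 0.5, size=(30, 2))
                   for c in [(0, 0), (10, 0), (0, 10)]])
labels, centers, inertia = kmeans(blobs, k=3, n_init=20, seed=0)
```

Because the best of twenty runs is kept, the final inertia can never be worse than that of the first run alone, which is the motivation for multiple initializations.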

2.6. Evaluation Metrics

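Model performance was assessed using three standard unsupervised clustering metrics:

Silhouette score:

$$ s = \frac{b - a}{\max(a, b)} $$

where $a$ is the average distance to points within the same cluster and $b$ is the average distance to points in the nearest neighboring cluster. Values range from −1 to +1, with higher scores indicating better clustering [20].

Calinski–Harabasz (CH) index:

$$ \mathrm{CH} = \frac{\operatorname{tr}(B_k)}{\operatorname{tr}(W_k)} \cdot \frac{N - k}{k - 1} $$

where $\operatorname{tr}(B_k)$ is the trace of the between-cluster dispersion matrix, $\operatorname{tr}(W_k)$ is the trace of the within-cluster dispersion matrix, $N$ is the number of samples, and $k$ is the number of clusters [21]. Higher CH values suggest better-defined clusters.

Davies–Bouldin (DB) index:

$$ \mathrm{DB} = \frac{1}{k} \sum_{i=1}^{k} \max_{j \neq i} R_{ij} $$

where $R_{ij}$ is the ratio of within-cluster scatter to between-cluster separation for clusters $i$ and $j$ [22]. Lower DB values indicate better-separated clusters.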

These metrics allow for consistent evaluation of clustering performance across models without relying on manual labels.
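As an illustration of how such a metric is obtained without labels, the silhouette score can be computed directly from its definition: for each point, the mean distance to its own cluster is compared with the mean distance to the nearest other cluster. The sketch below assumes Euclidean distance and at least two clusters; the function name `mean_silhouette` is hypothetical.

```python
import numpy as np


def mean_silhouette(X, labels):
    """Mean silhouette score using Euclidean distance (requires >= 2 clusters)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # Pairwise Euclidean distance matrix.
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    clusters = np.unique(labels)
    scores = np.zeros(len(X))
    for i in range(len(X)):
        same = labels == labels[i]
        n_same = int(same.sum())
        if n_same == 1:
            continue  # singleton cluster: score 0 by convention
        # a: mean distance to the other members of the same cluster.
        a = D[i, same].sum() / (n_same - 1)
        # b: mean distance to the nearest neighbouring cluster.
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        denom = max(a, b)
        scores[i] = (b - a) / denom if denom > 0 else 0.0
    return float(scores.mean())


# Two tight pairs far apart: a good labeling scores high, a bad one scores low.
points = [[0, 0], [0, 1], [10, 0], [10, 1]]
good = mean_silhouette(points, [0, 0, 1, 1])
bad = mean_silhouette(points, [0, 1, 0, 1])
```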

2.7. Temporal Modeling of Cluster Sequences

To analyze the sequential organization of bowel sounds, we modeled the time-ordered prominent events as a first-order Markov process with K = 5 states. A first-order Markov formulation was employed to model short-term transitions between acoustic states, where each subsequent state depends only on the current state. This approach was chosen for interpretability and stability given the limited sample size (110 recordings from 8 participants). Each transition represents localized motility activity within sub-second intervals (e.g., contraction bursts or resonant harmonics), providing a robust approximation of short-range motility dynamics.

2.7.1. Event Sequences

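Each detected prominent event was denoted as $e_n$, representing the $n$-th event within a recording. An event was defined as

$$ e_n = \left(t_n^{\mathrm{start}},\; t_n^{\mathrm{end}},\; c_n\right) $$

where

$t_n^{\mathrm{start}}$: the start time of the event (seconds);

$t_n^{\mathrm{end}}$: the end time of the event (seconds);

$c_n$: the cluster label assigned to the event (one of the $K = 5$ states).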

The events were ordered chronologically by their start time within each file, forming a temporal sequence of cluster states for each recording.

2.7.2. Transition Probabilities

A transition probability describes the likelihood that one state is followed directly by another. For each file, we counted consecutive transitions $c_n \to c_{n+1}$ between the cluster labels of successive events and pooled them across all files. The transition count matrix was defined as

$$ C = \left[C_{ij}\right]_{i,j=1}^{K} $$

where $C_{ij}$ is the number of transitions from state $i$ to state $j$. The transition probability matrix was then obtained by row normalization:

$$ P_{ij} = \frac{C_{ij}}{\sum_{j'=1}^{K} C_{ij'} + \varepsilon} $$

Here, $\varepsilon$ is a small constant added to the denominator to avoid division by zero when no outgoing transitions are present from a given state. Its value is negligible and does not influence the computed probabilities [8,23,24].
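The counting and row normalization can be sketched in a few lines. The example assumes integer cluster labels starting at 0 and a stabilizing constant of 1e-12; both are illustrative choices, since the value used in the study is not stated here, and the function name `transition_matrix` is hypothetical.

```python
import numpy as np


def transition_matrix(sequences, n_states=5, eps=1e-12):
    """Pool first-order transition counts over files and row-normalize.

    `sequences` holds one chronologically ordered label sequence per recording.
    `eps` (assumed value) guards against division by zero for unused states.
    """
    counts = np.zeros((n_states, n_states))
    for seq in sequences:
        # Transitions are counted within a file only, never across files.
        for cur, nxt in zip(seq[:-1], seq[1:]):
            counts[cur, nxt] += 1
    probs = counts / (counts.sum(axis=1, keepdims=True) + eps)
    return counts, probs


# Toy example with two recordings and four possible states (state 3 unused).
counts, probs = transition_matrix([[0, 1, 1, 2], [2, 0, 1]], n_states=4)
```

Rows for states with no outgoing transitions remain all zeros rather than raising an error, which is the purpose of the added constant.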

2.7.3. Dwell Times

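The dwell time measures how long an event persists in a given state. For each event, the dwell time is the difference between its end and start times:

$$ d_n = t_n^{\mathrm{end}} - t_n^{\mathrm{start}} $$

For each state $i$, the mean dwell time and its variability were computed as

$$ \bar{d}_i = \frac{1}{N_i} \sum_{n:\, c_n = i} d_n, \qquad \sigma_i = \sqrt{\frac{1}{N_i - 1} \sum_{n:\, c_n = i} \left(d_n - \bar{d}_i\right)^2} $$

where $N_i$ is the number of events in state $i$ (those whose cluster label satisfies $c_n = i$). Here, the overline in $\bar{d}_i$ denotes the average dwell time for state $i$, and $\sigma_i$ is the corresponding standard deviation [25,26].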
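A minimal sketch of the per-state dwell statistics follows, assuming each event is a `(start, end, label)` tuple in seconds and using the sample standard deviation; both the data layout and the choice of sample rather than population standard deviation are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean, stdev


def dwell_stats(events):
    """Return {label: (n_events, mean_dwell, sd_dwell)} for (start, end, label) events."""
    by_state = defaultdict(list)
    for start, end, label in events:
        by_state[label].append(end - start)  # dwell time of this event
    return {
        label: (len(d), mean(d), stdev(d) if len(d) > 1 else 0.0)
        for label, d in by_state.items()
    }


# Toy example: two events in state 0 (1 s and 2 s) and one in state 1 (0.5 s).
stats = dwell_stats([(0.0, 1.0, 0), (2.0, 4.0, 0), (5.0, 5.5, 1)])
```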

2.7.4. Inter-Event Gaps

The inter-event gap represents the silent interval between the end of one event and the start of the next event in the same file:

$$ g_n = t_{n+1}^{\mathrm{start}} - t_n^{\mathrm{end}} $$

We reported the overall mean gap $\bar{g}$ and standard deviation $\sigma_g$ across all files as measures of temporal spacing, consistent with prior studies that analyzed bowel sound durations and inter-event intervals in both adult and neonatal monitoring [27,28].
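The gap computation can be sketched as below, assuming each recording is a list of `(start, end, label)` tuples in seconds; the data layout and the function name `inter_event_gaps` are illustrative assumptions. Gaps are taken only between consecutive events of the same file and then pooled.

```python
from statistics import mean, stdev


def inter_event_gaps(files):
    """Pool gaps (next start minus current end) over per-recording event lists."""
    gaps = []
    for events in files:
        ordered = sorted(events, key=lambda e: e[0])  # chronological order
        for prev, nxt in zip(ordered[:-1], ordered[1:]):
            gaps.append(nxt[0] - prev[1])
    return gaps


# Toy example: three events in one recording and two in another.
recordings = [
    [(0.0, 0.2, 0), (1.0, 1.2, 1), (2.5, 2.7, 0)],
    [(0.5, 0.7, 2), (0.9, 1.1, 2)],
]
gaps = inter_event_gaps(recordings)
mean_gap, sd_gap = mean(gaps), stdev(gaps)
```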

3. Results

A total of 42,975 audio segments were retrieved from the complete dataset. Of them, 6314 were designated as significant bowel sound occurrences by our automated annotation system. To assess clinical validity, we conducted manual annotation of bowel sounds by trained analysts under gastroenterologist supervision. Our automated segmentation algorithm detected 6314 prominent bowel sound (PBS) segments, which closely matched 6435 manually annotated events [

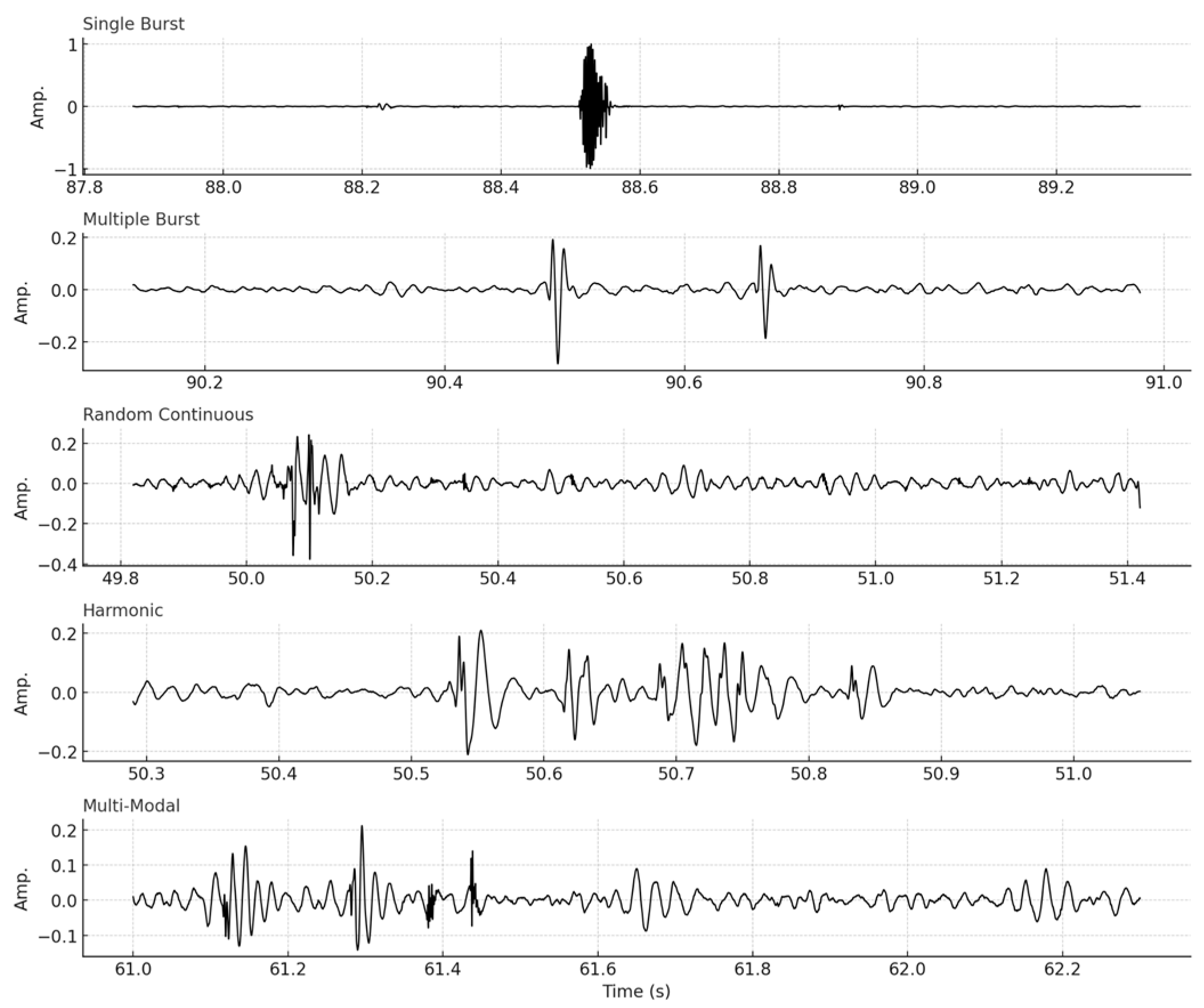

11] yielding a detection match rate of 98.1%. This strong correspondence confirms the reliability of the automated system. Clustering identified five consistent morphologies among patients based on visual examination, characterized as follows:

Single-burst—a concise, discrete, high-energy impulse;

Harmonic—continuous waveforms exhibiting regular oscillations;

Multiple-burst—series of impulse bursts;

Random continuous—irregular high-frequency sequences;

Multi-modal—intricate or overlapping compound patterns.

These five acoustic groups closely resemble previously reported bowel sound categories, including single, harmonic, and combination sounds [

29], with the multi-modal group corresponding to the previously defined combination type. This correspondence suggests that the unsupervised clustering independently recovered the principal acoustic phenotypes described in earlier manually classified studies.

From 110 recordings acquired from healthy volunteers, 6314 prominent bowel sound (PBS) segments were recovered using the unsupervised auto-annotation method. This corresponds to an average yield of roughly 57 PBS events per 2 min recording (about 29 events per minute). Each segment was encoded using a 279-dimensional acoustic feature representation that captured both spectral and temporal characteristics of the sound waveform.

The high-dimensional features were standardized using z-scores and condensed to 30 principal components by PCA. UMAP was later employed to embed the features into a 10-dimensional manifold using cosine distance, 35 neighbors, and a minimum distance of 0.01. This configuration preserved both local neighborhood structures and global separability.

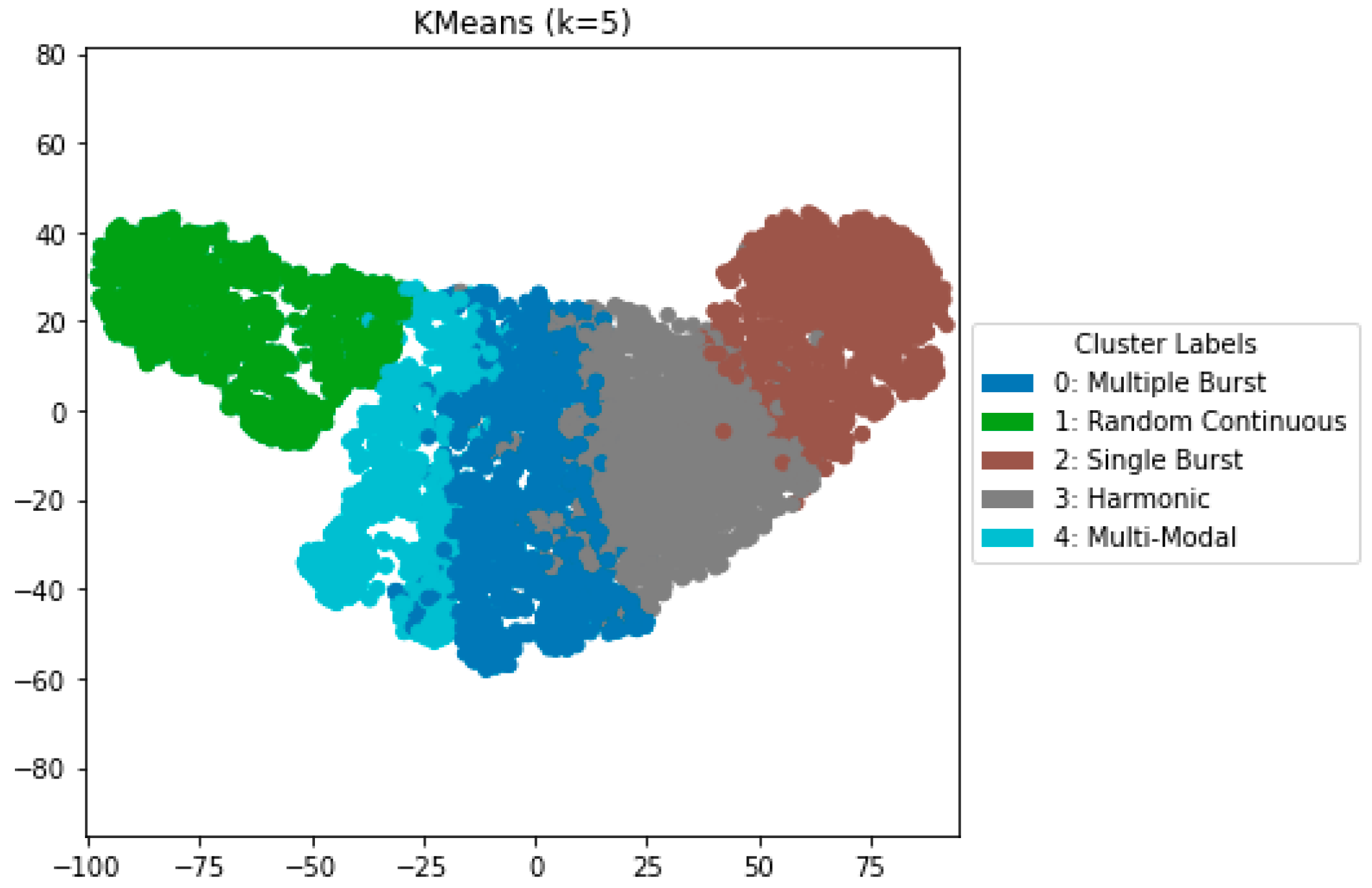

We evaluated three clustering algorithms—K-Means (k = 5), agglomerative clustering, and spectral clustering—employing UMAP-reduced features. K-Means exhibited the best-balanced performance across all clustering metrics, producing five distinct clusters that correspond to standard bowel sound categories: single-burst, multiple-burst, harmonic, random-continuous, and multi-modal. The clusters were confirmed through visual inspection of waveform morphologies and spectral properties.

Agglomerative clustering achieved a somewhat lower silhouette score (0.579), while spectral clustering showed lower cohesion (silhouette = 0.562) and reduced inter-cluster separability.

Figure 2 depicts the UMAP cluster embedding for K-Means clustering (k = 5), highlighting distinctly defined clusters that align with the five acoustic morphologies defined previously. This separation makes it possible to first characterize normal bowel sounds under varying dietary and other healthy conditions, providing a baseline against which diseased bowel states can be compared.

To assess robustness and generalization, we performed leave-one-subject-out cross-validation and observed stable clustering structures and consistent detection patterns across folds. We also evaluated statistical significance using permutation testing (n = 300), where observed silhouette scores significantly exceeded those from randomized cluster assignments (p < 0.0001). Bootstrap analysis (n = 200) confirmed that the five-cluster configuration consistently outperformed k = 3–7 in terms of separation and compactness. We further addressed UMAP reproducibility by fixing random seeds and repeating experiments with varied parameters, observing <5% variability in cluster membership. These results reinforce the robustness and clinical interpretability of our segmentation and clustering pipeline.

Figure 3 displays representative waveforms from each cluster type, illustrating their morphological differences in amplitude and duration. These differences are intended to serve as the foundation for PEG digital fingerprinting to characterize various bowel diseases.

Table 1 summarizes the metrics from cluster evaluation.

To assess the sequential organization of acoustic states, we analyzed the dwell times, inter-event gaps, and transition probabilities derived from the clustered prominent events.

3.1. Dwell Times and Gaps

Table 2 summarizes the number of events, mean dwell times per cluster, and global inter-event gaps. Single-burst events exhibited the longest mean dwell duration (3.61 ± 2.50 s), while random continuous events were the most transient, with a mean dwell of only 0.08 ± 0.02 s. Harmonic and multi-modal states showed intermediate persistence (1.25 ± 0.33 s and 0.25 ± 0.08 s, respectively). Across all clusters, the mean inter-event gap between successive events was approximately 0.90 ± 0.46 s.

3.2. Transition Probabilities

Table 3 presents the first-order Markov transition matrix. Strong diagonal elements (22–28%) indicate substantial self-persistence across all five states. Notably, random continuous and multi-modal states frequently transitioned into each other (25–26%), while multiple-burst and harmonic states often served as connectors to neighboring states. These patterns suggest that transient bursts and harmonic sequences are more likely to precede or follow other acoustic states, while random continuous and single-burst events show greater persistence.

4. Discussion

This study demonstrates the feasibility of automated phenotyping of bowel sounds using unsupervised clustering on high-dimensional acoustic features. Our method successfully extracted and clustered 6314 prominent bowel sound segments from healthy volunteers, revealing five reproducible morphologies that matched known acoustic patterns such as single-burst, harmonic, and multi-modal activities. In contrast to conventional auscultation, which is inherently subjective, our pipeline delivers objective and repeatable bowel sound classifications. While earlier research has often relied on supervised models and manually annotated training sets [7,9,15], our unsupervised methodology circumvents these constraints. By identifying meaningful patterns directly from unlabeled data, our approach enhances scalability and reduces dependence on time-intensive expert labeling. This offers practical advantages for scaling PEG analysis in research and clinical settings.

Our clustering metrics (silhouette = 0.60, Calinski–Harabasz = 19,165, and Davies–Bouldin = 0.68) indicate moderate but robust separability, especially considering the signal complexity and lack of supervision. Similar clustering scores have been reported in unsupervised studies of cardiac and pulmonary acoustic signals [20,22], where inherent biological variability and noise are known challenges. The reproducibility of our five clusters across subjects further supports the hypothesis that bowel sounds have structured acoustic subtypes. The present study was designed as a reproducible baseline for unsupervised bowel sound phenotyping using a small, acoustically homogeneous dataset (110 recordings from eight healthy participants). The clustering framework was selected to maximize stability and interpretability rather than to exhaustively benchmark all available methods. Given the limited dataset size and potential subject-level correlations, a comprehensive ablation study or hyperparameter sweep would be underpowered and could yield misleading variability estimates. Instead, we report the most consistent configuration identified during preliminary testing and document all acoustic feature groups to facilitate reproducibility. Future work will expand comparative analyses to include Gaussian mixture models (GMM) and deep embedded clustering architectures once additional participants and recordings are incorporated under the current IRB protocol.

In this study, the clustering framework was used to examine whether bowel sound events exhibit consistent acoustic groupings in an unsupervised setting. The five resulting clusters—single-burst, multiple-burst, harmonic, random continuous, and multi-modal—are intended as descriptive acoustic phenotypes rather than physiologic or clinical categories. The labels were assigned based on the dominant spectral–temporal patterns observed within each cluster and are meant to provide interpretable terminology for reproducible comparison. We acknowledge that confirming whether these acoustic phenotypes correspond to specific gastrointestinal motility mechanisms or clinical conditions will require orthogonal validation, such as controlled postprandial studies, concurrent motility or pressure measurements, and larger multi-sensor datasets.

The use of 279 acoustic features, PCA compression, and tuned UMAP embedding contributed to effective clustering. While K-Means performed best overall, it also benefited from the careful preprocessing pipeline that emphasized both global and local manifold structures. This methodology allows scalable extension to larger and more diverse datasets without retraining supervised models.

The temporal analysis revealed that bowel sound clusters differ not only acoustically but also in their persistence and transitions. As shown in Table 2, single-burst events exhibited the longest dwell times, suggesting these represent stable, isolated contractions, while random continuous events were highly transient, consistent with short-lived motility bursts. Harmonic and multi-modal states displayed intermediate persistence, potentially reflecting repetitive or overlapping bowel activity. Inter-event gap analysis indicated that successive events were separated by sub-second intervals, supporting the concept of rapid, cyclic motility patterns. As shown in Table 3, the transition matrix further demonstrated that all states exhibited measurable self-persistence, while random continuous and multi-modal states frequently transitioned into each other, suggesting they may act as dynamic connectors between more stable states. The findings highlight that unsupervised clustering can capture not only spectral distinctions but also temporal organization, yielding clinically interpretable motility signatures. The use of a first-order Markov model provides an interpretable framework for characterizing short-term motility transitions, though it does not capture longer-range dependencies such as postprandial cascades. This simplification was appropriate for the current dataset size and recording duration but could be extended in future studies using higher-order or recurrent formulations (e.g., hidden semi-Markov or RNN-based models). Transition matrices were pooled across subjects to summarize population-level organization; qualitative inspection of individual matrices revealed consistent dominant self-transitions and random–multi-modal exchanges, supporting the robustness of the pooled model.

This study aligns with recent trends in unsupervised biomedical signal discovery, where latent acoustic structures are leveraged to inform downstream classification or anomaly detection [18,30]. Our pipeline could enable early detection of GI pathologies, stratify patients by motility patterns, or serve as a foundation for self-supervised representation learning. However, the current dataset includes only healthy volunteers, limiting insight into pathological states.

To our knowledge, this is one of the few works to combine UMAP, high-dimensional PEG features, and clustering to phenotype bowel sounds. As datasets expand to include disease conditions and post-prandial states, future studies can validate these clusters as biomarkers and extend the approach with contrastive learning, attention-based models, or hybrid fusion with physiological metadata.

5. Limitations and Future Work

While this study provides a promising framework for unsupervised phenotyping of bowel sounds, several limitations remain. The dataset comprised recordings from only eight healthy volunteers, which restricts generalizability to broader patient populations.

The results characterize baseline temporal dynamics in healthy bowel sound recordings, providing a reference framework against which pathological deviations may be detected in future work. Residual background noise and subject-to-subject variability remain potential confounders that could affect clustering outcomes.

Each 200 ms segment was divided into 13 short-time frames to compute 20 MFCCs per frame (20 × 13 = 260 coefficients). These were concatenated into a fixed-length feature vector to represent spectral shape without requiring temporal alignment. Although this vectorization disregards intra-frame temporal dynamics, it is suitable for unsupervised clustering of brief, quasi-stationary events. Future work will explore recurrent or attention-based models to explicitly capture temporal dependencies across longer recordings.

The temporal modeling introduced here focused on dwell-time statistics, inter-event gaps, and first-order transition probabilities, providing a foundation for characterizing sequential bowel sound dynamics. Future work may build upon this framework by investigating longer-range dependencies and richer temporal descriptors to further enhance interpretability.

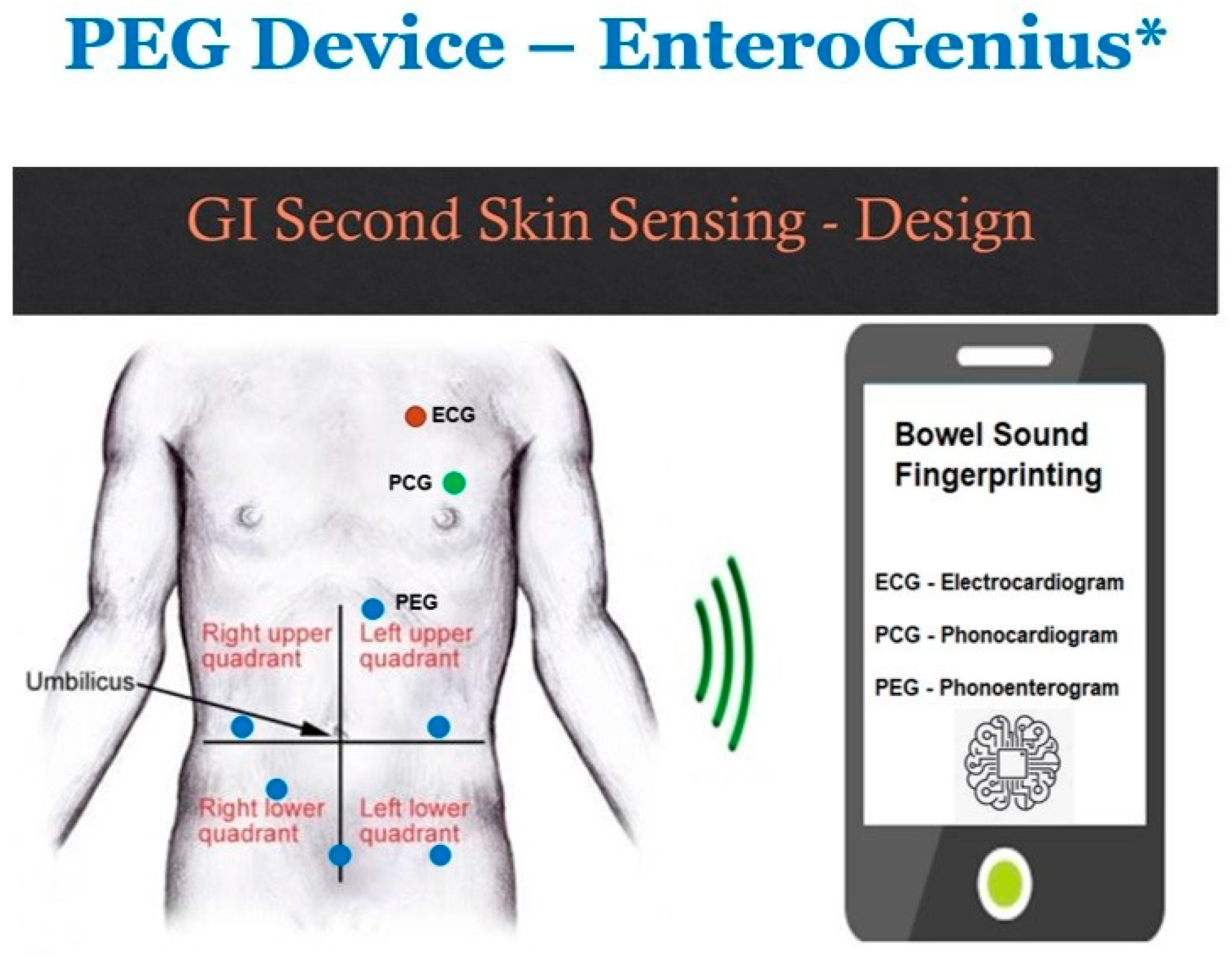

Future research should extend this dataset to include pathological cohorts, such as patients with ileus, bowel obstruction, or inflammatory bowel disease. Additionally, integration with wearable PEG devices such as EnteroGenius* could allow continuous at-home monitoring, enabling real-time detection of clinically relevant bowel sound anomalies.

6. Conclusions

We introduce an unsupervised pipeline capable of automatically detecting and phenotyping bowel sound events from high-resolution PEG recordings, consistently identifying five acoustic patterns without manual labels. By combining clustering with temporal modeling, the framework not only characterizes acoustic variability but also captures sequential dynamics that may reflect underlying gastrointestinal motility. These phenotypes can serve as weak labels for future supervised learning and as potential biomarkers of gastrointestinal dysfunction. From a clinical perspective, the absence of manual annotation makes this approach suitable for translation into bedside monitoring and point-of-care applications, including postoperative ileus, bowel obstruction, irritable bowel syndrome (IBS), and inflammatory bowel disease (IBD).

Author Contributions

G.Y. and S.P.A. defined the project scope, methodology design, and purpose of the study. V.N.I., S.A.H. and V.S.A. provided clinical perspectives and expertise for the study. G.Y. developed models and performed testing and validation. G.Y., J.L., M.N.S., P.E., T.N. and J.J. conducted the literature review and drafted the manuscript. K.G., A.K., D.S., S.R., J.G., G.A.R.P., R.A.A., J.M. and N.A., performed manual annotation of prominent bowel sounds and assisted with manuscript drafting. G.Y., S.S.K. and S.P.A. undertook the proofreading and organization of the manuscript. S.P.A. provided conceptualization of the You Only Listen Once (YOLO) deep learning model concept, supervision, and project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted under Mayo Clinic IRB-approved protocol #22-013060 approved on 01-27-2023.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data used in this study is not for public use under the IRB protocol due to privacy and ethical restrictions.

Acknowledgments

This work was funded by the Mayo Clinic Office of Digital Innovation. The content is solely the responsibility of the authors and does not necessarily represent the official views of Mayo Clinic. This work was also supported by the Digital Engineering and Artificial Intelligence Laboratory (DEAL), Department of Critical Care Medicine, Mayo Clinic, Jacksonville, FL, USA.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Felder, S.; Margel, D.; Murrell, Z.; Fleshner, P. Usefulness of bowel sound auscultation: A prospective evaluation. J. Surg. Educ. 2014, 71, 768–773. [Google Scholar] [CrossRef] [PubMed]

- Breum, B.M.; Rud, B.; Kirkegaard, T.; Nordentoft, T. Accuracy of abdominal auscultation for bowel obstruction. World J. Gastroenterol. 2015, 21, 10018–10024. [Google Scholar] [CrossRef]

- Nowak, J.K.; Nowak, R.; Radzikowski, K.; Grulkowski, I.; Walkowiak, J. Automated bowel sound analysis: An overview. Sensors 2021, 21, 5294. [Google Scholar] [CrossRef]

- Kölle, K.; Aftab, M.F.; Andersson, L.E.; Fougner, A.L.; Stavdahl, Ø. Data-driven filtering of bowel sounds using multivariate empirical mode decomposition. Biomed. Eng. Online 2019, 18, 28. [Google Scholar] [CrossRef]

- Zaborski, D.; Halczak, M.; Grzesiak, W.; Modrzejewski, A. Recording and analysis of bowel sounds. Euroasian J. Hepato-Gastroenterol. 2015, 5, 67–73. [Google Scholar] [CrossRef] [PubMed]

- Drake, M.; Marcon, M.; Langer, J.C. Auscultation of bowel sounds and ultrasound of peristalsis are neither compartmentalized nor correlated. Cureus 2021, 13, e14982. [Google Scholar] [CrossRef]

- Ching, S.S.; Tan, Y.K. Spectral analysis of bowel sounds in intestinal obstruction using an electronic stethoscope. World J. Gastroenterol. 2012, 18, 4585–4592. [Google Scholar] [CrossRef]

- Sitaula, C.; He, J.; Priyadarshi, A.; Tracy, M.; Kavehei, O.; Hinder, M.; Withana, A.; McEwan, A.; Marzbanrad, F. Neonatal bowel sound detection using convolutional neural network and Laplace hidden semi-Markov model. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 1853–1864. [Google Scholar] [CrossRef]

- Matynia, I.; Nowak, R. BowelRCNN: Region-based convolutional neural network system for bowel sound auscultation. arXiv 2025, arXiv:2504.08659.

- Dang, T.; Truong, T.; Nguyen, C.; Listyawan, M.; Sapers, J.; Zhao, S.; Truong, D.; Zhang, J.; Do, T.; Phan, H.; et al. Flexible wearable mechano-acoustic sensors for body-sound monitoring applications. Nanoscale Adv. 2025, 4, 1221–1243.

- Kalahasty, R.; Yerrapragada, G.; Lee, J.; Gopalakrishnan, K.; Kaur, A.; Muddaloor, P.; Sood, D.; Parikh, C.; Gohri, J.; Panjwani, G.A.R.; et al. A Novel You Only Listen Once (YOLO) Deep Learning Model for Automatic Prominent Bowel Sounds Detection: Feasibility Study in Healthy Subjects. Sensors 2025, 25, 4735.

- Ali, H.; Muzammil, A.; Dahiya, D.S.; Ali, F.; Yasin, S.; Hanif, W.; Gangwani, M.K.; Aziz, M.; Khalaf, M.; Basuli, D.; et al. Artificial intelligence in gastrointestinal endoscopy: A comprehensive review. Ann. Gastroenterol. 2024, 37, 133–141.

- Zhang, J.; Li, X.; Guo, J.; Sun, Y. Heart sound classification network based on convolution and transformer. Sensors 2023, 23, 8168.

- Zulfiqar, R.; Majeed, F.; Irfan, R.; Rauf, H.T.; Benkhelifa, E.; Belkacem, A.N. Abnormal respiratory sounds classification using deep CNN through artificial noise addition. Front. Med. 2021, 11, 1545847.

- Deng, M.; Meng, T.; Cao, J.; Wang, S.; Zhang, J.; Fan, H. Heart sound classification based on improved MFCC features and convolutional recurrent neural networks. Neural Netw. 2020, 130, 22–32.

- McInnes, L.; Healy, J.; Melville, J. UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv 2018, arXiv:1802.03426.

- Becht, E.; McInnes, L.; Healy, J.; Dutertre, C.-A.; Kwok, I.W.H.; Ng, L.G.; Ginhoux, F.; Newell, E.W. Dimensionality reduction for visualizing single-cell data using UMAP. Nat. Biotechnol. 2019, 37, 38–44.

- Fernández, M.; Plumbley, M.D. Using UMAP to inspect audio data for unsupervised anomaly detection under domain-shift conditions. arXiv 2021, arXiv:2107.10880.

- Redij, R.; Kaur, A.; Muddaloor, P.; Sethi, A.K.; Aedma, K.; Rajagopal, A.; Gopalakrishnan, K.; Yadav, A.; Damani, D.N.; Arunachalam, S.P. Practicing digital gastroenterology through phonoenterography leveraging artificial intelligence: Future perspectives using microwave systems. Sensors 2023, 23, 2302.

- Batool, F.; Hennig, C. Clustering with the average silhouette width. arXiv 2021.

- Vijay, R.K.; Nanda, S.J.; Sharma, A. A review on clustering algorithms for spatiotemporal seismicity analysis. Artif. Intell. Rev. 2025, 58, 231.

- Frédéric, R.; Rabia, R.; Serge, G. PDBI: A partitioning Davies-Bouldin index for clustering evaluation. Neurocomputing 2023, 528, 178–199.

- Rabiner, L.R. A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition. Proc. IEEE 1989, 77, 257–286.

- Bilmes, J. A Gentle Tutorial of the EM Algorithm and Its Application to Parameter Estimation for Gaussian Mixture and Hidden Markov Models; Technical Report TR-97-021; International Computer Science Institute: Berkeley, CA, USA, 1997.

- Namikawa, T.; Yamaguchi, S.; Fujisawa, K.; Ogawa, M.; Iwabu, J.; Munekage, M.; Uemura, S.; Maeda, H.; Kitagawa, H.; Kobayashi, M.; et al. Real-time bowel sound analysis using newly developed device in patients undergoing gastric surgery for gastric tumor. JGH Open 2021, 5, 454–458.

- Rabiner, L.R.; Juang, B.H. An Introduction to Hidden Markov Models. IEEE ASSP Mag. 1986, 3, 4–16.

- Baronetto, A.; Graf, L.; Fischer, S.; Neurath, M.F.; Amft, O. Multiscale Bowel Sound Event Spotting in Highly Imbalanced Wearable Monitoring Data: Algorithm Development and Validation Study. JMIR AI 2024, 3, e51118.

- Zhou, P.; Lu, M.; Chen, P.; Wang, D.; Jin, Z.; Zhang, L. Feasibility and basic acoustic characteristics of intelligent long-term bowel sound analysis in term neonates. Front. Pediatr. 2022, 10, 1000395.

- Du, X.; Allwood, G.; Webberley, K.M.; Osseiran, A.; Marshall, B.J. Bowel Sounds Identification and Migrating Motor Complex Detection with Low-Cost Piezoelectric Acoustic Sensing Device. Sensors 2018, 18, 4240.

- Chen, X.J.; Collins, L.M.; Patel, P.A.; Karra, P.; Mainsah, B.O. Heart sound analysis in individuals supported with LVAD using unsupervised clustering. Comput. Cardiol. 2020, 47, 80.

- Damani, S.; Damani, D.N.; Redij, R.; Sethi, A.K.; Muddaloor, P.; Kapoor, A.; Rajagopal, A.; Gopalakrishnan, K.; Wang, X.J.; Chedid, V.G.; et al. On The Design of a Novel Phonoenterogram Sensing Device Using AI Assisted Computer-Aided Auscultation. In Frontiers in Biomedical Devices; American Society of Mechanical Engineers: New York, NY, USA, 2023; Volume 86731, p. V001T04A010.

- Damani, D.N.; Damani, S.; Redij, R.; Sethi, A.K.; Muddaloor, P.M.; Kapoor, A.; Rajagopal, A.; Gopalakrishnan, K.; Wang, X.J.; Chedid, V.G.; et al. A Novel Deep Learning Algorithm for Computer-Aided Auscultation of Bowel Sounds Using Phonoenterogram: A Feasibility Study. Biomed. Sci. Instrum. 2023, 59, 70–75.

- Gopalakrishnan, K.; Kalahasty, R.; Damani, S.; Damani, D.N.; Parikh, C.; Sood, D.; Goudel, A.; Aakre, C.; Ryu, A.; Chedid, V.G.; et al. Tu2031 A Novel You Only Listen Once (YOLO) Deep Learning Model For Automated Prominent Bowel Sound Detection: Feasibility in Healthy Subjects. Gastroenterology 2024, 166, S-1500.

- Gopalakrishnan, K.; Asadimanesh, N.; Sood, D.; Mohan, A.; Rapolu, S.; Lee, J.; Patel, B.; Dilmaghani, S.; Arunachalam, S.P. Metrics For Phonoenterogram Analytics Towards AI Powered Diagnosis of Bowel Diseases: Feasibility Meal Study in a Healthy Subject. In Proceedings of the 2025 IEEE International Conference on Electro Information Technology (EIT), Valparaiso, IN, USA, 29–31 May 2025; pp. 196–201.

- Goh, N.; Lim, F.; Kwan, J.M.; Widarsa, J.; Agrawal, K.; Ko, H.; Tsoi, K.K.; Park, S.-M.; Lee, C.H.; Ng, S.K.; et al. Acoustic sensing and analysis of bowel sounds in irritable bowel syndrome—Recent engineering developments and clinical applications. Sens. Actuators A Phys. 2025, 394, 116910.

- Sood, D.; Riaz, Z.M.; Mikkilineni, J.; Ravi, N.N.; Chidipothu, V.; Yerrapragada, G.; Elangovan, P.; Shariff, M.N.; Natarajan, T.; Janarthanan, J.; et al. Prospects of AI-Powered Bowel Sound Analytics for Diagnosis, Characterization, and Treatment Management of Inflammatory Bowel Disease. Med. Sci. 2025, 13, 230.

- Baronetto, A.; Fischer, S.; Neurath, M.F.; Amft, O. Automated inflammatory bowel disease detection using wearable bowel sound event spotting. Front. Digit. Health 2025, 7, 1514757.

- Wang, G.; Chen, Y.; Liu, H.; Yu, X.; Han, Y.; Wang, W.; Kang, H. Differences in intestinal motility during different sleep stages based on long-term bowel sounds. Biomed. Eng. Online 2023, 22, 105.

- Yu, Y.; Zhang, M.; Xie, Z.; Liu, Q. Enhancing bowel sound recognition with self-attention. Sensors 2024, 24, 8780.

- Deng, X.; Xu, Y.; Zou, Y. Numerical Modeling of Bowel Sound Propagation: Impact of Abdominal Tissue Properties. Appl. Sci. 2025, 15, 2929.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).