Abstract

This work presents a hybrid model that integrates a mechanistic multicomponent transport scheme in hollow-fiber membranes with an Adaptive Neuro-Fuzzy Inference System (ANFIS). The physical model incorporates pressure drops on the feed and permeate sides (Hagen–Poiseuille), non-ideal gas behavior (Peng–Robinson equation of state), and temperature-dependent viscosity; species permeances are treated as constant for model validation. After validation, a post-validation parametric exploration of permeance variability is carried out by perturbing the methane (CH4) permeance by one decade up and down. From an initial set of 18 variables, 4 key parameters were selected through rigorous statistical analysis (Pearson correlation, variance inflation factor (VIF), and mean absolute error (MAE)); likewise, other physical criteria have been considered: permeance, retentate volume, retentate pressure, and retentate viscosity. Trained with 70% of the simulated data and validated with the remaining 30%, the model achieves a coefficient of determination (R2) close to 0.999 and a root mean square error (RMSE) below 8 × 10−8 m3/h in predicting the methane volume in the retentate, effectively responding to both steady and dynamic fluctuations. The combination of first-principles modeling and adaptive learning captures both steady-state and dynamic behavior, positioning the approach as a viable tool for real-time analysis and supervisory control in petrochemical membrane operations.

1. Introduction

Daily operation of oil and gas production systems requires critical decisions that directly affect production volumes and associated costs [1]. These decisions, made at different organizational levels, converge in the physical production system. In recent years, the industry has evolved toward deeper technological integration, giving rise to the paradigm known as Industry 4.0. This approach promotes the use of intelligent technologies to improve operational efficiency [2].

In the context of natural gas processing, which consists mainly of methane (CH4), one of the most relevant challenges is the removal of carbon dioxide (CO2), as it reduces the calorific value of the gas and accelerates pipeline corrosion. Membrane-based separation technologies, particularly hollow fiber membrane modules (HFMM), have gained importance due to their energy efficiency, modularity, and compactness. These membranes operate through the principle of selective permeation, leveraging differences in solubility and molecular size to separate CH4 from CO2 without the need for chemical solvents.

Gas separation in petrochemical processes has therefore become an active area of research, with CH4/CO2 separation receiving significant attention in recent decades due to the dual objectives of natural gas purification and carbon emission reduction. From a modeling perspective, multiple approaches have been proposed. Chu et al. [3] developed steady-state mass transfer models, while Gu [4] extended this framework by including non-ideal gas effects, concentration profiles, and pressure drops, achieving accurate prediction of experimental data. Later, Ko [5] introduced a dynamic model that considered transient disturbances in operating conditions, enabling more realistic simulations of industrial processes. However, purely physical or “white-box” models require precise estimation of parameters such as permeability, viscosity, and molar volume, which are not always available and often present high uncertainty, particularly in multicomponent systems.

To alleviate these limitations, artificial intelligence (AI) methods have been explored. Artificial neural networks (ANNs) model complex nonlinearities but lack physical interpretability and may generalize poorly beyond the training domain. This motivates hybrid (“gray-box”) strategies that fuse physics with data-driven inference. Among these, the adaptive neuro-fuzzy inference system (ANFIS) stands out by combining the learning capacity of ANNs with fuzzy rule-based reasoning, improving transparency and adaptability [6].

Recent work signals a shift toward hybrid intelligence in gas separation (see Table 1). In polymeric hollow fibers, a GA-optimized ANFIS—genetic algorithm (GA) tuning and ≈7 Gaussian membership functions—predicted permeate-side CO2 for CH4/CO2 separations with near-perfect fit (R2 ≈ 0.9993; RMSE ≈ 0.0064; Average Absolute Relative Deviation (AARD) ≈ 1.25%) [7]. In mixed-matrix membranes (MMMs) comprising fumed silica (FS), polyhedral oligomeric silsesquioxane (POSS), and polydimethylsiloxane (PDMS), differential evolution (DE)–ANFIS was benchmarked against a Crow Search Algorithm–Least-Squares Support Vector Machine (CSA-LSSVM) for single-gas permeation (H2/CH4/CO2/C3H8): DE-ANFIS reached R2 = 0.9981 (overall), while CSA-LSSVM reported R2 = 0.9946/0.9689 (train/test) with MSE = 0.0003/0.0011 and MAE = 0.0114/0.0257 [8]. For silicoaluminophosphate-34 (SAPO-34) MMMs, clustering-guided ANFIS variants—subtractive clustering (SC) and Fuzzy C-Means (FCM)—as well as genetic programming (GP), reproduced CO2 permeability with AARD < 3% and R2 > 0.995 [9]. In polymethylpentene (PMP) modified with nanoparticles, a multilayer perceptron (MLP) with Bayesian regularization (3–8–1) captured “separation capacity” (CO2 permeability) with R = 0.99477, MAE = 6.87, AARD = 5.46%, MSE = 152.75 [10]. By contrast, an empirical-plus-regression HFMM reported a modest R2 ≈ 0.628 for CO2 enrichment/distribution, underscoring the need to embed dynamic multicomponent transport and deliver real-time, explainable responses beyond black-box regression [11].

Table 1.

Comparable studies.

Table 2 widens the lens to adjacent problems with transferable patterns. In electro-assisted ultrafiltration of water, a Takagi–Sugeno–Kang (TSK) fuzzy model predicted Ni2+ removal with R2 ≥ 0.98 and maximum rejections of ≈60% (polyethersulfone, 5 kDa (PBCCS) at ~3.5–4.5 V) and ≈45% (regenerated cellulose, 5 kDa (PLCCS) at 4 V) [12]. For C3H6/C3H8 adsorption in copper benzene-1,3,5-tricarboxylate (Cu-BTC, HKUST-1), particle swarm optimization (PSO)–ANFIS was compared to ANN; ANN yielded the lower MAE (0.111 vs. 0.421) [13]. CO2 solubility in Polystyrene (PS)/ Poly(vinyl acetate) (PVAc)/ Polybutylene succinate (PBS)/ Poly(butylene succinate-co-adipate)(PBSA) was modeled via genetic programming (GP) with R2 > 0.98 and small average relative deviations (ARDs) (≈0.095%, 0.0503%, 0.0312%, 0.039%; 70/30 split; inputs T[K], P[MPa]) [14]. A computational fluid dynamics (CFD) → ANFIS mapping reproduced bubble-column void fraction: with two inputs and eight membership functions (MFs), it attained R ≈ 0.9999, although adding turbulence reduced performance (Rtest ≈ 0.64) [15]. For Cu/H2O nanofluids in a lid-driven square cavity, a grid-partition ANFIS (3 inputs: x, y, T; 4 MFs/input → 64 rules) achieved R ≈ 0.999 (~65% training; up to ~800 iterations); a compact ant colony optimization (ACO) surrogate reached R≈0.92 [16]. CO2 absorption in a closed-vessel nanofluid absorber was learned by an MLP (6–9–1) trained with the Levenberg–Marquardt algorithm (LM), yielding R = 0.9996, MSE = 2.36 × 10−5, MAE% = 0.326 (N = 165) [17]. In forward osmosis (FO) for textile wastewater, combinations of response surface methodology (RSM), ANN (feed-forward backpropagation with Levenberg–Marquardt, FFBP-LM) and ANFIS (Sugeno; five inputs) predicted/optimized water flux (Jw) and reverse salt flux (Js); the ANN evaluation reported Jw: R2 ≈ 0.798, R ≈ 0.8933, MAD ≈ 0.8120, MSE ≈ 1.4800, RMSE ≈ 1.2170; Js: R2 ≈ 0.7807, R ≈ 0.8836, Mean Absolute Deviation (MAD) ≈ 0.7940, MSE ≈ 1.5220, RMSE ≈ 1.2340; a 70/15/15 protocol achieved R2train ≈ 0.998, R2test ≈ 0.997, RMSEtrain/test ≈ 0.0136/0.0194 [18]. In bubble-column CO2 absorption with SiO2/H2O and Fe3O4/H2O, an ANFIS with Fuzzy C-Means (ANFIS-FCM) (3 rules; hybrid learning) produced RMSE ≈ 0.014–0.019; optimal nanoparticle (NP) loadings were 0.025 wt.% SiO2 and 0.015 wt.% Fe3O4, enhancing the mass-transfer enhancement factor (E) to ≈ 1.43 (+43%) and 1.13 (+13.4%), respectively [19]. For sheet-type water permeation, a probabilistic neural network–group method of data handling (PNN–GMDH) with PSO optimized permeate flux, achieving R2 ≈ 0.983, performance index (PI) = 0.723, and Nash–Sutcliffe efficiency (NSE) ≈ 0.984 [20].

Table 2.

Adjacent studies.

CO2 absorption correlations in nanofluids built with GP and GMDH showed GP outperforming GMDH (R2 = 0.9914, AARD = 3.732%, RMSE = 0.0141 vs. R2 = 0.9726, AARD = 8.1134%, RMSE = 0.0231; n = 230; 80/20; inputs t, P, T, dnp, C wt.%, ρnp) [21]. In metal–organic frameworks (MOFs), a random forest (RF) predicted CH4/CO2 biogas separation (main metrics in Supplementary) [22]. Finally, porous liquids (PLs) show a clear AARD ranking for CO2 solubility: 3.17% (CSA-LSSVM) < 6.64% (MLP) < 8.67% (PSO-ANFIS) < 12.98% (ANFIS) [23].

Against this backdrop, our study integrates a mechanistic, multicomponent HFMM model—accounting for pressure drops, non-ideal gas behavior via the Peng–Robinson equation of state (PR-EOS), and temperature-dependent viscosity—with an ANFIS layer trained on statistically paired, physically meaningful inputs (permeance, retentate volume, retentate pressure, retentate mixture viscosity).

This gray-box synthesis delivers near-perfect agreement (R2 ≈ 0.999; RMSE < 8 × 10−8 m3·h−1) for retentate methane volume while retaining interpretability and enabling real-time use, directly addressing the literature gap on dynamic CH4/CO2 separation in HFMM. In doing so, it reduces parameter uncertainty by coupling first-principles transport with data-driven inference; optimizes membrane design and operating conditions to maximize CH4 purity and flow rate; and provides a robust, interpretable, and adaptive system capable of operating under variable conditions—aligning with the principles of Industry 4.0.

To ensure the clarity and reproducibility of the study, it is essential to detail the methodological sequence followed to construct the hybrid model. The starting point was the development of a mechanistic, multicomponent transport model. To ensure its physical fidelity before any subsequent use, this model was rigorously validated against established experimental data from the literature, using the Ko model [5] as a benchmark.

Once the reliability of the mechanistic model was confirmed, it was employed as a “virtual plant” to generate a comprehensive synthetic dataset, thus overcoming the lack of a dedicated experimental dataset for training. Subsequently, to optimize the quality of the inputs for the intelligent model, this synthetic dataset was subjected to a rigorous variable selection process. Based on the statistical criteria detailed in Section 2.2, the initial set of 18 candidate predictors was reduced to the four most informative and statistically independent ones.

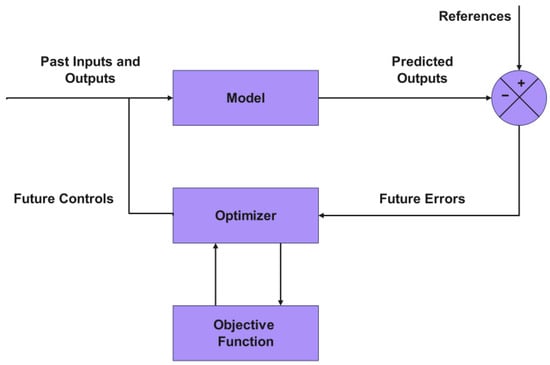

Finally, this refined dataset served as the foundation for training and testing the ANFIS layer. The ultimate purpose of this work is for the developed ANFIS model, thanks to its high precision and speed, to serve in a future stage as the predictive core within an advanced optimization and control strategy, whose fundamental structure is illustrated in Figure 1. In such a scheme, the model would predict the system’s behavior, allowing an optimizer, guided by an objective function (specifically, to maximize the amount of methane obtained in the retentate), to determine the optimal control actions for the process.

Figure 1.

Basic structure of model-based optimization.

This paper is organized as follows. The Section 2 (Materials and Methods) comprises three subsections: Section 2.1 develops the multicomponent HFMM mechanistic model, including temperature-dependent viscosity and non-ideal gas behavior; Section 2.2 presents the statistical scaffold—data normalization, a normalized multiple linear regression baseline, assumption diagnostics, and multicollinearity/variable selection; and Section 2.3 details the ANFIS layer (membership functions, rule base, hybrid training, and the train/validation/test split). Section 3 presents the results, and Section 4 concludes the paper.

2. Materials and Methods

This section details the methodology used to develop a hybrid model that predicts gas separation in an HFMM. The process begins with the construction of a mathematical model based on first principles, which is rigorously validated. Subsequently, this model is used as a “virtual plant” to generate a synthetic dataset. Finally, the generated data are subjected to a thorough statistical analysis to select the most influential variables, which serve as input for training the intelligent ANFIS model. To further enhance reproducibility, Appendix A (Algorithms A1–A5) provides a series of detailed pseudocodes illustrating the implementation of the mechanistic model, data generation, statistical analysis, and the ANFIS training workflows.

2.1. Mathematical Modeling of HFMM

This model aims to describe the behavior of the separation system through mathematical relationships that account for the gas properties and the membrane’s characteristics. The most critical considerations in this model are described as follows:

- The membrane does not deform under the applied pressure. This is a simplifying assumption [3].

- Pressure drop on the permeate and retentate sides can be calculated using the Hagen-Poiseuille equation.

- Permeance of chemical species is considered constant in model validation (see Section 2.1.3).

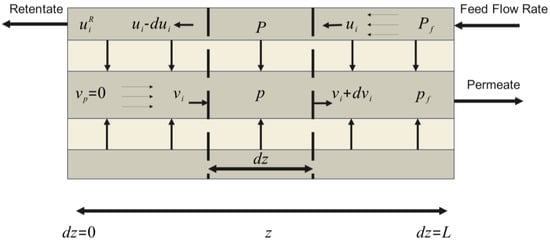

This model is based on a set of differential equations that describe the variation of the component flow along the membrane. Equation (1) describes the rate of change in the molar flow of component i on the retentate side of the membrane in the flow direction. Equation (2) represents the rate of change in the molar flow of component i on the permeate side. Equation (3) details the pressure variation along the membrane on the retentate side, considering the dynamic viscosity of the mixture and membrane geometry. Finally, Equation (4) describes pressure variation on the permeate side; see Figure 2 [3,24].

where is permeance of component i , and denote partial pressures of component in the retentate and permeate section, , is the number of tubes or fibers, is component index, is flow rate on the permeate side and is the flow rate on the retentate side ). The internal diameter of the shell is denoted by , while and refer to the internal and external diameter of the fiber , respectively, is the universal gas constant, is the temperature, and is the dynamic viscosity of the mixture . Superscripts and are employed to denote quantities in the retentate and permeate streams, respectively. These notations are consistently applied to other variables in subsequent sections to facilitate further analyses.

Figure 2.

Countercurrent flow dynamics in an HFMM scheme.

To solve the differential equations, the MATLAB® (version R2025b) function ode15s is used. This function employs an adaptive implicit method based on backward differentiation formulas (BDF). This approach is particularly suitable for stiff systems, as it can handle rapid and slow variations in a stable and efficient manner. First, the model equations and initial conditions were defined (see Table 3 and Table 4). Then, error tolerances and the integration interval were configured. This setup allowed for precise and stable numerical solutions for the system of interest. This model has proven valid with data from studies in [3,25,26].

Table 3.

Initial conditions of the Process.

Table 4.

Initial conditions of chemical species.

2.1.1. Dynamic Determination of Viscosity

According to [27], the viscosity of a pure fluid can be determined using the Chapman-Enskog solution, which is based on the Boltzmann transport equation. This approach assumes that molecular interactions can be approximated by those of Lennard–Jones particles with a potential function. Dynamic viscosity of a gas is calculated using the kinetic theory of gases, which relates this property to temperature , molar mass , collision diameter σ, and collision integral . The pure-component molecular weights used in the Wilke mixing rule are given in Appendix A, Table A1.

Reduced collision integral is calculated by considering the possible trajectories of particles during their approach. An empirical correlation relates to temperature.

where the reduced temperature .

In this expression, is the depth of the Lennard–Jones potential well, and is the Boltzmann constant (1.380649 × 10−23 J/K). The term has units of kelvins, making dimensionless. Physically, represents the ratio of the system temperature to the characteristic energy of intermolecular interactions. The Lennard–Jones parameters adopted for collision-integral evaluations are summarized in Appendix A, Table A3.

According to [28] viscosity of a diluted gas is related to the mole fraction , and the viscosity of the diluted gas component , following Wilke’s equation.

Binary weighting factor expressed as a function of gas viscosity and the molecular weight of component as follows:

2.1.2. Peng–Robinson Equation of State for Non-Ideal Gas Behavior

To accurately represent the non-ideal behavior of gas mixtures within the HFMM, Peng–Robinson (PR) cubic equation of state is implemented in the mathematical model. This approach allows for the calculation of the real molar volume for both the retentate and permeate streams at each spatial position along the membrane, thus providing a more rigorous description of volumetric and transport phenomena, particularly under elevated pressure conditions [29].

The Peng–Robinson equation of state is given by the following:

where is the pressure, is the temperature, is the molar volume, and is the universal gas constant. The parameters and are determined from the critical temperature , critical pressure , and acentric factor of each species.

Computation of the parameters and for Peng–Robinson equation of state in multicomponent mixtures requires the use of appropriate mixing rules, as well as the estimation of temperature-dependent correction factors for each component.

First, for each pure component i, the parameters are defined as follows:

where and are the critical temperature and pressure of component i, respectively. The term introduces temperature dependence, calculated as follows:

For mixtures, global parameters and are obtained by classical mixing rules:

In these equations, and are the mole fractions of components i and j, respectively. Binary interaction parameter is an empirical coefficient used to account for non-ideal interactions between unlike pairs of molecules in the mixture, and it is usually obtained from experimental data or taken as zero in the absence of specific information.

Final values of and are then substituted into the cubic Peng–Robinson equation of state to compute the molar volume or compressibility factor for the mixture under the specified conditions of temperature, pressure, and composition [29].

The Peng–Robinson EoS is widely recognized as a robust model for CH4/CO2 mixtures under the moderate operating conditions (8 atm, 298.15 K) of this study. Its ability to provide an excellent compromise between simplicity and accuracy for CO2-rich systems in the subcritical gas phase, with reported errors for compressibility below 1%, makes it a suitable choice for this application [30]. Furthermore, its successful implementation in recent molecular simulation studies of gas separation in membranes under comparable conditions validates its predictive capabilities [31]. The model’s robustness is also supported by its application in studies under even more demanding conditions of higher pressure [32]. The critical properties and acentric factors employed are listed in Appendix A, Table A2.

2.1.3. Permeance: Baseline Assumption and Post-Validation Exploration

The effective observed in operation can shift with temperature, pressure, and composition (competitive sorption, plasticization), as well as with the architecture/thickness of the selective layer and module non-idealities (pressure drops, film coefficients, non-isothermality). The specialized literature recommends, when reliable parameters are not available, conducting parametric evaluations to bound their impact on module performance and design [33].

At the material/architecture level, hollow-fiber TFC membranes with ultrathin selective layers have reported CO2 permeances on the order of 2.6–2.7 × 103 GPU with CO2/N2 selectivities of ~21; the authors note that thickness estimation assumed constant permeability despite evidence of thickness dependence and operating-condition variability, reinforcing that effective permeance is not static [34].

At the module/process level, performance depends on the integration of the separation unit (areas, stage cut, pressures) and its couplings with other units; these factors modify driving forces and fluxes, explicitly justifying the use of parametric scans when working with tabulated permeances [35].

Regarding constitutive modeling, frameworks that integrate molecular dynamic (MD)+ free-volume theory and pressure/composition-dependent permeability improve agreement with data but require nontrivial parameters; in parallel, non-ideal models (pressure-dependent permeability, substrate resistance, real-gas behavior) have been shown to reduce deviations relative to the ideal approach, supporting parametric evaluation when a full parametrization is not yet available [36].

Adopted protocol. Without introducing a new constitutive model at this stage, a post-validation exploration was carried out by varying only the CH4 permeance in {0.1×, 1×, 10×} relative to its baseline. This approach was chosen as a first-order sensitivity analysis to evaluate the system’s response to perturbations in the less permeable species, a key factor controlling methane purity in the retentate. We acknowledge that a complete analysis would involve co-variation of CO2 permeance to fully map the impact on selectivity, which remains a valuable direction for future studies. The scan reports eighteen outputs spanning: total volumes on each side (retentate and permeate); gas compositions on each side (CO2 and CH4 mole fractions); pressures on each side; component molar flows of CO2 and CH4 on each side; component volumes of CO2 and CH4 on each side; and the mixture viscosity on each side. Together, these metrics quantify the plausible impact on flow splits, compositions, and pressure profiles within a range bounded by the literature.

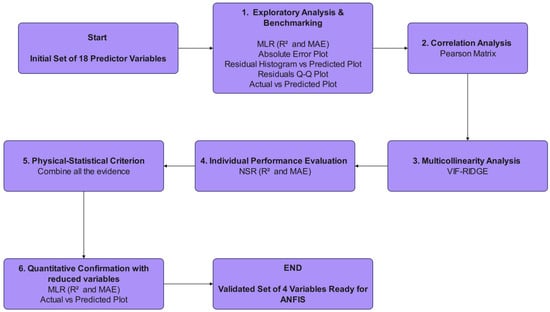

2.2. Statistical Method

This section details the systematic methodology, illustrated in Figure 3, used to reduce the initial set of 18 candidate predictors to a compact, high-quality subset for ANFIS modeling. The workflow is designed to ensure the final variables are not only statistically powerful but also physically meaningful.

Figure 3.

Statistical analysis framework for predictor dimensionality reduction.

The process begins with an Exploratory Analysis and Benchmarking phase, where a multiple linear regression (MLR) model incorporating all 18 variables is constructed. This initial model serves as a baseline, and its performance is evaluated using the coefficient of determination (R2) and mean absolute error (MAE). Its statistical validity is rigorously checked through a series of diagnostic plots, including residual analysis and Q-Q plots, to assess underlying assumptions like homoscedasticity and normality.

Subsequently, a Correlation and Multicollinearity Analysis is performed. A Pearson correlation matrix is generated to identify strong linear relationships between pairs of variables. This is complemented by a more robust multicollinearity assessment using the Variance Inflation Factor with a Ridge penalty (VIF-Ridge), which quantifies how much the variance of an estimated regression coefficient is increased due to collinearity with other predictors.

In parallel, an Individual Performance Evaluation is conducted for each predictor. By fitting a normalized simple linear regression (NSR) for each variable against the target output, we assess their individual predictive contributions, again using R2 and MAE as key metrics.

The core of the selection process is the Physical-Statistical Criterion, where all the evidence is synthesized. The statistical findings—from the benchmark model, correlation matrix, VIF scores, and individual regressions—are combined with physical knowledge of the membrane separation process. This crucial step ensures that the selected variables are not just statistically independent but also mechanistically relevant.

Finally, a Quantitative Confirmation is performed. A new, reduced MLR model is built using only the four selected variables. Its performance is re-evaluated to confirm that the parsimonious model retains high predictive accuracy. This multi-stage filtering workflow systematically eliminates weak and redundant variables, guaranteeing that the final input set for the ANFIS model is both robust and interpretable.

All measurements are arranged into the standard regression form:

where denotes the number of experimental observations (rows) and is the number of initial predictors (). This succinct representation facilitates matrix-based computations and algorithmic efficiency.

To explore the relationships between variables, scatter plots are employed to visualize ordered pairs (, ). It’s not just a graph but a visual representation of the relationship between the two variables. When most points align closely with a straight line, the correlation is classified as linear. Conversely, the correlation is considered nonlinear if the points follow a curved pattern. However, if no discernible pattern is observed among the ordered pairs, it indicates the absence of a relationship between the two variables. To depict the curve or regression line that best fits the distribution of the ordered pairs, Equation (18) is applied [36].

where is the intercept and are the regression coefficients associated with each predictor.

2.2.1. Data Normalization

To mitigate bias from differing variable scales, all features and the target variable are standardized to zero mean and unit variance:

where and are vectors of column-wise means and standard deviations of the predictor across all samples. and are the mean and standard deviation of the dependent variable .

2.2.2. Normalized Multiple Linear Regression

A normalized multiple linear regression model was fitted to the standardized data:

where is the vector of normalized predicted values. is the normalized design matrix (dimensions ), with each column scaled to zero mean and unit variance, and is the vector (dimensions ).

2.2.3. Parameter Estimation via Gradient Descent

The coefficients were optimized by minimizing the mean squared error (MSE) cost function:

Using stochastic gradient descent (SGD) with Learning rate , Iterations = 1000 (ensuring convergence to local minima) and an update rule according to the following:

where the gradient is computed as follows:

Normalized predictions are transformed back to the original scale for direct comparison with experimental data. We evaluate performance with two metrics: the coefficient of determination (R2), defined in Equation (24), and the mean absolute error (MAE), defined in Equation (25). ∈ [0,1] quantifies the fraction of variance in the target explained by the model, values closer to 1 indicate a better fit. For completeness, the Pearson correlation coefficient ∈ [−1, 1] measures the strength and direction of linear association between variables [34].

Normalized simple linear regression is additionally performed for each independent variable , repeating the same procedure and metric calculations to identify the individual contribution of each variable to the model.

2.2.4. Multicollinearity Analysis

Given that collinearity among predictors can inflate the variance of regression coefficients and undermine the numerical stability of the model. This procedure enabled the systematic elimination of highly collinear and weakly predictive variables, reducing the original pool of 18 candidate predictors to the four most informative and mutually independent inputs for ANFIS training.

Pearson’s correlation coefficients are computed according to [37] on the normalized predictor matrix .

With this, it was also possible to construct a correlation matrix which compacts all the into a single object.

Inspecting the correlation matrix quickly flags pairs with near 1, it remains a subjective heuristic and tells us nothing about how much multicollinearity is affecting our coefficient estimates. To quantify that impact, we turn to the variance inflation factor for predictor , quantifying how much the variance of its estimated coefficient is inflated due to multicollinearity. Formally,

According to [38], is the diagonal element of . Values > 10 indicate severe collinearity. However, when there is severe collinearity, the correlation matrix can become close to singular and lead to extreme or undefined values of . To address this problem, we introduce a Ridge penalty in the collinearity diagnosis. Strictly speaking, we define the “penalized” version of the as follows:

Ridge penalty hyperparameter controls the degree of regularization and was selected (λ = 0.03) via literature . is the identity matrix of size , where is the number of original predictors.

2.2.5. Validation of Model Assumptions via Diagnostic Plots

To ensure that the normalized linear regression satisfies its underlying assumptions prior to ANFIS training, the following diagnostic plots are systematically generated:

- Residuals vs. Predicted Values: Plot the residual against the fitted value yk. This visualization is used to assess homoscedasticity—constancy of error variance across the prediction range—and to detect any funnel-shaped or nonlinear patterns that would indicate model misspecification.

- Q-Q-Plot of Residuals: Construct a quantile-quantile plot comparing the empirical quantiles of the residuals to theoretical quantiles of a standard normal distribution. Close adherence of points to the 45° reference line will validate the assumption of approximate Gaussianity of the errors, which is important for subsequent inferential procedures.

- Histogram of Residuals: Generate a normalized histogram of residuals to examine the shape of their distribution—specifically symmetry, peakedness, and tail behavior. This complements the Q-Q-plot by visually confirming the presence (or absence) of skewness or heavy tails.

- Actual vs. Predicted Values: Plot observed responses versus predicted values alongside the identity line . A tight cluster of points around this line will demonstrate high overall predictive accuracy and minimal systematic bias.

- Absolute Error per Sample: Compute and plot the absolute error for each sample index. This plot helps to identify isolated outliers or regions where the model’s performance deteriorates, thereby guiding further data investigation or model refinement.

By applying these five diagnostic plots, one can confirm the validity of homoscedasticity and normality assumptions, quantify overall goodness-of-fit, and detect any influential observations that may warrant additional scrutiny before proceeding with ANFIS modeling.

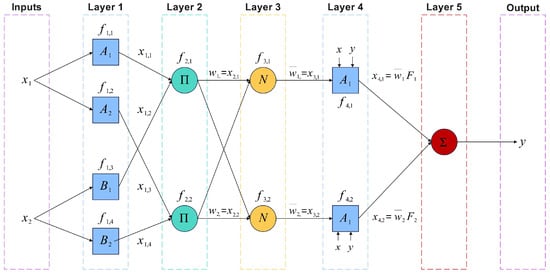

2.3. Intelligent Modeling with ANFIS

The technique implemented in this study is the ANFIS, which integrates two intelligent methods: ANNs and fuzzy logic. This hybrid approach combines the learning capacity of ANNs with the interpretability of fuzzy systems, effectively addressing the limitations inherent to each individual technique as a universal approximator [39,40,41].

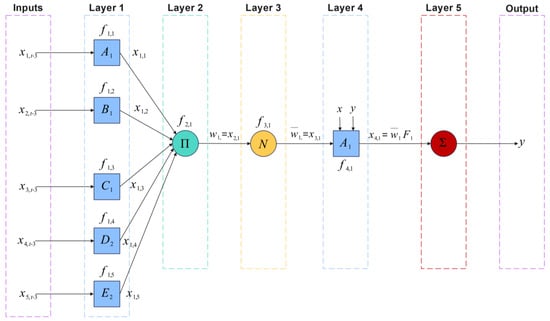

Classical ANFIS architecture, illustrated in Figure 4, consists of five layers:

Figure 4.

Architecture scheme of a two-input ANFIS network.

- Layer 1 (Membership Functions): Each input is fuzzified using membership functions (MFs) that transform crisp inputs into membership degrees ranging from 0 to 1. In this work, different membership functions were tested, such as Gaussian, triangular, sigmoidal, and bell functions. After a comparative analysis, the membership function with the best performance was the sigmoidal one, as presented and discussed later in Table 10. The sigmoidal membership function is defined as follows:

where parameters and control the slope and center of the function, respectively.

- Layer 2 (Rule Firing Strength): Computes the firing strength of each fuzzy rule by aggregating membership degrees of all inputs via product:

where is the number of fuzzy rules and is number of inputs.

- Layer 3 (Normalization): Normalizes firing strengths:

- Layer 4 (Consequents): Each rule computes an output as a linear function of inputs weighted by :

where and are consequent parameters.

- Layer 5 (Output Aggregation): Aggregates all outputs to produce final prediction:

Neural network components enable continuous adjustment of weights and parameters during learning. The training method applied is a hybrid algorithm combining least squares estimation for the consequent parameters and gradient descent for nonlinear membership parameters , and [39,40].

The error function minimized during training is the mean squared error:

where and are actual and predicted outputs respectively. Update rule for any parameter follows gradient descent:

with learning rate controlling step size.

To capture temporal dependencies, input vectors include up to 20 previous samples (lags), generating delayed inputs such as the following:

Data for these delayed inputs are generated from white-box membrane transport model and loaded for ANFIS training. Variable selection is performed as previously described using correlation analysis to ensure relevance and reduce redundancy.

To ensure a robust and interpretable ANFIS model, configuration and training parameters are summarized in Table 5. Model uses four selected input variables—permeance , retentate volume , retentate pressure , and retentate mixture viscosity —with methane volume in retentate as output. Temporal dependencies are incorporated by evaluating up to 20 time lags per input, allowing the model to capture the dynamic behavior of the membrane process. Sigmoidal membership functions are primarily used. Each input variable has one membership function, balancing simplicity and expressive power.

Table 5.

Summary of ANFIS Model Configuration and Training Parameters.

While a greater number of membership functions (MFs) can enhance an ANFIS model’s ability to capture complex nonlinearities, this typically comes at the cost of significantly increased processing time. Our findings indicate that for this application, a model with a minimal set of MFs is not only sufficient but optimal. The marginal gains in accuracy from more complex configurations did not justify the exponential increase in computational demand, rendering them impractical for the real-time estimation of CH4 volume in the membrane retentate. Consequently, our chosen architecture strikes a robust balance between high predictive fidelity and operational efficiency.

Dataset is split into 70% training and 30% testing, maintaining strict separation to validate generalization. Training employs a hybrid method combining least squares estimation for linear consequent parameters and gradient descent for nonlinear membership parameters and . Parameters and are initialized randomly within defined ranges. Training proceeds until the mean squared error (MSE) for a maximum of 50 iterations is reached. Likewise, evaluation time (Eval Time) is computed. Performance metrics (Eval Time, MSE, RMSE, MAE, and ) are computed for both training and testing sets to evaluate overall accuracy. Final model selection is based on the lowest test set MSE among all evaluated lag configurations, ensuring optimal predictive performance. Validation includes graphical comparisons of predicted versus actual values and residual analysis to confirm model reliability. This rigorous framework enables ANFIS to effectively capture the nonlinear and temporal characteristics of the HFMM. We propose implementing a real-time control scheme coupled with bio-inspired algorithms (e.g., PSO, Gray Wolf Optimizer (GWO), and DE) for process tuning and optimization [42].

3. Results

This section presents and analyzes the results obtained from the developed hybrid model, which integrates a mechanistic multicomponent transport model for hollow fiber membranes (HFMM) with an Adaptive Neuro-Fuzzy Inference System (ANFIS). The section is organized into three key subsections to demonstrate the model’s validity, the variable selection methodology, and the predictive performance of the final model.

3.1. Mathematical Modeling of HFMM Results and Validation

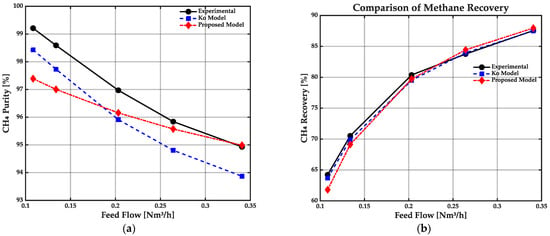

The accuracy and predictive capacity of the proposed mathematical model are first evaluated by comparing its results against experimental data and the reference Ko model, as shown in Figure 5. Comparison focuses on two primary performance indicators for the membrane system: methane purity (Figure 5a) and methane recovery (Figure 5b), both as functions of feed flow rate.

Figure 5.

Validation of the proposed membrane model using experimental results and the Ko model. (a) Methane purity as a function of feed flow rate. (b) Methane recovery as a function of feed flow rate.

As observed in Figure 5a, all models predict a decreasing trend in methane purity with increasing feed flow rate, which is consistent with the expected reduction in separation efficiency at higher feed rates. Notably, the proposed model tracks the experimental data more closely than the Ko model, particularly at intermediate and high feed flow rates. While the Ko model significantly underestimates methane purity under these conditions, the proposed model shows smaller deviations and better alignment with experimental results, indicating a more accurate representation of the underlying transport mechanisms.

In terms of methane recovery (Figure 5b), both models reproduce the expected increasing trend as the feed flow rate rises. However, the proposed model displays improved agreement with experimental data across tested feed rates, especially at low and moderate flow values where the Ko model exhibits more pronounced discrepancies. This consistent agreement highlights the ability of the proposed model to capture both the magnitude and trend of experimental recovery data.

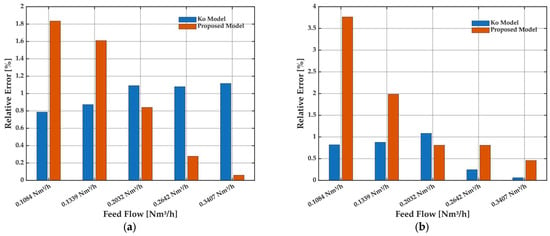

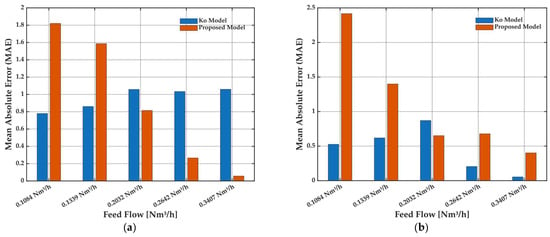

To provide a quantitative assessment of model accuracy, a detailed error analysis was conducted using the relative error and mean absolute error (MAE) metrics for each experimental case (Figure 6 and Figure 7). Relative error distributions for methane purity (Figure 6a) and methane recovery (Figure 6b) show that, although the Ko model achieves lower errors at the lowest feed flow rates, its accuracy diminishes as flow increases. In contrast, the proposed model shows higher errors at low flow but rapidly improves with increasing feed, outperforming the Ko model in the higher range of operational relevance.

Figure 6.

Relative error of the Ko model and the proposed model for the prediction of (a) methane purity and (b) methane recovery as a function of feed flow rate. Results correspond to the same experimental conditions as in Figure 5.

Figure 7.

Mean absolute error (MAE) of the Ko model and the proposed model for the prediction of (a) methane purity and (b) methane recovery as a function of feed flow rate. Results correspond to the same experimental conditions as in Figure 5.

MAE analysis (Figure 7a,b) reinforces this observation: while the Ko model maintains a slight advantage at the lowest feed, the proposed model yields lower MAE values at moderate and high feed flows for both purity and recovery. These results confirm that the proposed model delivers robust and reliable predictions under practical operating conditions, supporting its suitability for process optimization and scale-up in HFMM.

3.2. Statistical Method Results

A comprehensive statistical analysis is carried out to evaluate the predictive performance and interrelationships of all candidate input variables considered for modeling. The objective is to identify informative and least redundant subsets of variables for training a subsequent ANFIS model. Table 6 summarizes the results of individual linear regressions, including coefficient of determination (R2), mean absolute error (MAE) and variance inflation factor .

Table 6.

Initial individual variable regression metrics for model reduction.

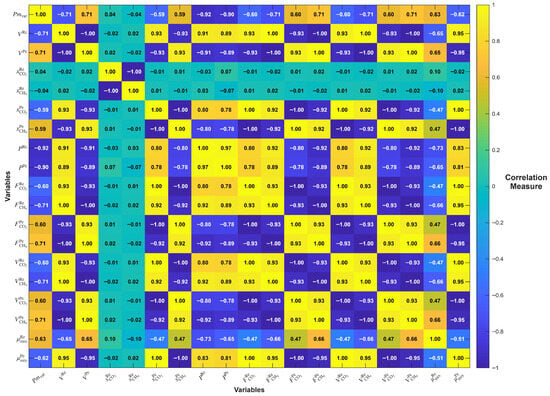

Among evaluated variables, Retentate volume and permeate volume showed near-perfect linearity ( ≈ 0.9998–0.9999) and extremely low error (MAE = 5.47 × 10−8). However, their low values (0.52) and very high pairwise correlation (ρ > 0.93), as depicted in the Pearson correlation matrix (Figure 8), revealed significant redundancy. Including both would likely lead to multicollinearity without improving model expressiveness.

Figure 8.

Pearson correlation matrix between all candidate retentate and permeate variables included in the variable selection procedure. The heatmap indicates the degree of linear relationship between each pair of variables.

Retentate Pressure demonstrated strong predictive ability ( = 0.8356) and moderate (5.62), indicating it brings valuable and relatively unique information. Although its suggests some correlation with other inputs, it remained within an acceptable range for inclusion. On the other hand, permeance had a lower R2 (0.4883) but was favored due to its meaningful physical interpretation, relatively low (3.70), and strong negative correlations with both Retentate Volume and Retentate Pressure (ρ ≈ −0.90), suggesting it captures orthogonal aspects of the system behavior.

Regarding viscosity, Permeate Mix Viscosity achieved a high R2 (0.8992) and low MAE, indicating good predictive potential. However, its strong correlations with other variables (ρ > 0.9) signaled redundancy. Conversely, retentate mix viscosity showed moderate predictive performance ( = 0.4305) and acceptable multicollinearity ( = 2.18) but is retained for its physical relevance and lower correlation with other selected variables (ρ < 0.5), supporting its role in improving model robustness.

The correlation matrix (Figure 8) provides a comprehensive overview of the linear interrelationships among all candidate variables. Several variables exhibited strong pairwise correlations (ρ > 0.93), indicating a high degree of redundancy in the information they convey. Notably, variables such as retentate CH4 flow, permeate CH4 flow, retentate CO2 flow, and permeate CO2 fraction were found to be tightly interrelated with other process variables—particularly retentate volume, permeate volume, and retentate pressure—all of which already captured the principal trends of the system.

Despite achieving near-perfect individual scores in their univariate regressions, these highly correlated variables were not selected for the final model due to their limited marginal contribution to the overall predictive power and their potential to introduce multicollinearity. This decision is further supported by their very low values (typically < 0.60), which mathematically reflect that variance in these predictors can be almost entirely explained by other variables in the model. Including such variables would not only complicate the model unnecessarily but also increase the risk of overfitting and reduce the generalizability of the final predictive tool, particularly in real-world conditions with process noise and variability.

Moreover, from a physical standpoint, flows and fractions are often dependent on the outcomes of pressure, volume, and permeance conditions, and not independent drivers of system behavior. Thus, their high correlation with physically meaningful variables such as retentate pressure and permeance reinforces the decision to exclude them in favor of more mechanistically grounded predictors.

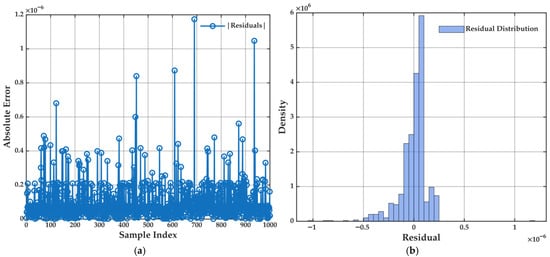

To further validate the reliability of the selected model, residual diagnostics are conducted, as illustrated in Figure 9 and Figure 10. Figure 9a shows the absolute error per sample across the dataset. The majority of errors are small and centered, with some increase observed near sample boundaries. This pattern is consistent with edge effects, where predictive models may exhibit slightly reduced accuracy due to limited data density or extrapolation beyond the core data cloud.

Figure 9.

(a) Absolute error for each sample in the validation dataset for the multiple linear regression model. (b) Histogram of residuals showing the distribution and magnitude of errors across all samples. These visualizations provide a direct assessment of the model’s prediction accuracy and the normality of residuals, which are essential for evaluating regression model assumptions.

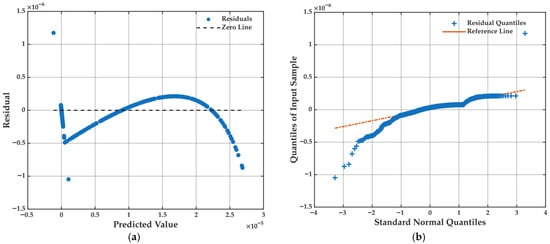

Figure 10.

(a) Residuals as a function of predicted values for a multiple linear regression model, highlighting any trends or heteroscedasticity in model errors. (b) Q-Q plot of residuals, used to assess the normality of errors and the adequacy of linear model assumptions.

Figure 9b presents the histogram of residuals, which exhibits a nearly symmetric bell-shaped distribution, closely approximating normality. This supports the assumption of homoscedastic and unbiased residuals, essential conditions for the validity of linear regression estimators.

Further insight is offered by the residuals vs. predicted plot (Figure 10a), where a slight curvature is observed. While mostly centered around zero, this pattern suggests the presence of mild heteroscedasticity or a weak nonlinear component in data that a linear model may not fully capture. Nevertheless, deviation remains minor and does not compromise the model’s overall fit or its applicability for variable screening.

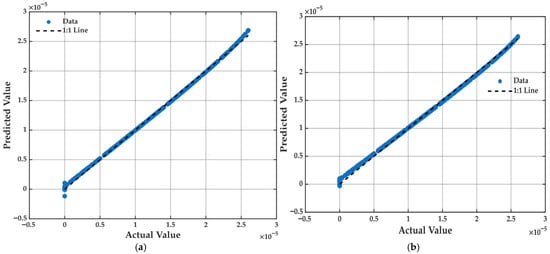

The scatter plot of actual versus predicted values (Figure 11a) provides a visual confirmation of the regression model’s predictive capability. Most data points align closely with the ideal 1:1 reference line, indicating a strong agreement between observed outputs and those estimated by the model. This tight clustering suggests that the regression model effectively captures dominant trends and intrinsic relationships among selected input variables. Only minimal deviations are observed at the extremes, which is typical in physicochemical models where non-linearities and edge-case behaviors may arise due to unmodeled secondary effects.

Figure 11.

Comparison of actual vs. predicted values for the multiple linear regression models. (a) Performance of the model with the initial set of 18 variables. (b) Performance of the reduced model with the 4 selected variables. The dashed line indicates the ideal 1:1 relationship.

Statistical performance of regression model is further substantiated by quantitative metrics presented in Table 7. Model achieved a global coefficient of determination of = 0.999, indicating that 99.9% of the variability in the target variable is explained by selected predictors. Additionally, the normalized mean absolute error (MAE) is 1.292, reflecting a very low average deviation between predicted and actual values relative to the output scale. Together, these results support the robustness and high fidelity of regression models.

Table 7.

Comparison of regression model performance metrics.

To complement the visual and numerical analysis, final regression model’s structure, including linear, interaction, and higher-order terms, is presented in Table 8. This table lists the estimated coefficients , which quantifies the relative influence of each term used in the polynomial regression model. Inclusion of higher-order coefficients enables the model to capture subtle nonlinear dependencies without introducing excessive complexity, while preserving interpretability and numerical stability.

Table 8.

The values of .

Following a comprehensive analysis that considered multiple statistical criteria—including , MAE, , and Pearson correlation coefficients—a reduced set of four variables was selected as optimal inputs for the subsequent ANFIS training process: Permeance, retentate volume, retentate pressure, and retentate mix viscosity. These variables were chosen based on their ability to capture complementary aspects of the process. Permeance represents the intrinsic transport property of the membrane, influenced by temperature, pressure, and composition. Retentate volume and retentate pressure reflect the operational state of the feed side and are strongly tied to driving forces for mass transfer. Retentate Mix Viscosity, while having moderate , was retained for its mechanistic relevance and lower correlation with other selected inputs, thus contributing unique variance to the model. Importantly, the selected variables offer a balanced trade-off: they exhibit high predictive power, low to moderate multicollinearity (as evidenced by acceptable values), and are rooted in well-understood physical mechanisms governing membrane-based gas separation. This selection aligns with the principle of model parsimony—minimizing the number of inputs without compromising accuracy or generalizability.

Several other variables, despite exhibiting excellent individual regression metrics (e.g., Permeate Flow CH4, Retentate Flow CO2, Permeate Fraction CH4), were deliberately excluded due to high pairwise correlations (ρ > 0.90), excessively low values (<0.6), or overlapping information content with already selected predictors. Additionally, some variables lacked direct mechanistic interpretability, which could hinder extrapolation or explainability of the model in broader operating domains.

Nonetheless, it is important to recognize that variable selection is inherently context-dependent. Current selection is optimized for the specific objective of training a robust, interpretable ANFIS model for this dataset and operating window. Future studies may consider evaluating alternative or expanded subsets of input variables—such as combinations including permeate mix viscosity or permeate fraction CH4—to explore whether these configurations offer improved performance, particularly under varying process conditions, multicomponent mixtures, or in the presence of dynamic disturbances.

In summary, the selected reduced-input model demonstrates excellent agreement with observed data (see Table 9), both statistically and physically. This outcome provides a strong foundation for training intelligent models like ANFIS, while avoiding pitfalls of overfitting, multicollinearity, and loss of physical meaning.

Table 9.

Criteria and Rationale for Selecting Input Variables for ANFIS Modeling.

3.3. ANFIS Simulation Results

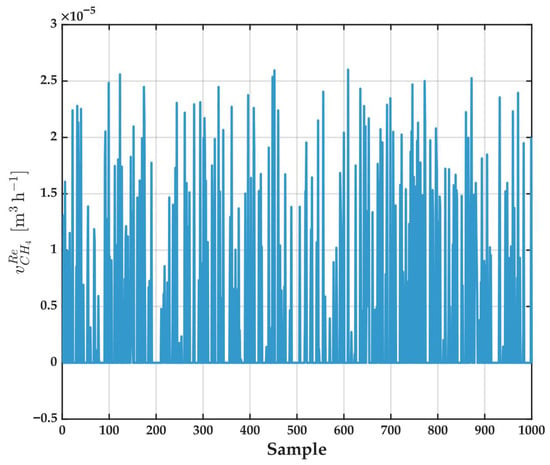

The dataset used for training the ANFIS model is characterized by its high complexity and variability, justifying the choice of an advanced modeling strategy.

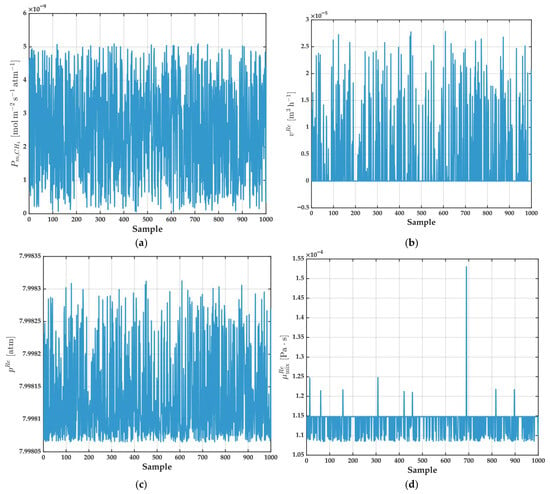

Figure 12 presents the output variable used as the target for ANFIS training: for each sample. Much like several of the input variables, the target output displays a highly scattered profile, characterized by frequent peaks and valleys and a lack of clear global trends across the dataset. Distribution is fairly symmetric, with values spanning a broad range; notably, there are no obvious outliers or missing data, though some points fall close to zero—most likely a result of diversity in simulated operating conditions or presence of measurement noise.

Figure 12.

Experimental values of retentate () for all samples in the dataset. This variable is used as the output (target) in the ANFIS modeling.

This considerable variability in output further increases the challenge for any predictive model, as it must learn to map a set of complexes, nonlinear, and potentially noisy relationships between inputs and target values. When combined with highly variable input variables (as shown in Figure 13), this scattered output underscores the inherent complexity of the modeled gas separation process. Altogether, these figures highlight the necessity for advanced modeling strategies such as ANFIS, which are specifically designed to capture nonlinear dependencies and to provide robust predictions even in the presence of high data dispersion and noise—scenarios where traditional modeling approaches often struggle.

Figure 13.

Input variables used for the ANFIS model: (a) (); (b) (); (c) (); (d) (). Each plot shows the respective variable as a function of sample number for the entire dataset.

Figure 13 provides a comprehensive visualization input variables selected for the ANFIS model: (a) , (b) , (c) , and (d) , each plotted as a function of sample number. This figure serves to illustrate both the dynamic range and variability present in the input dataset used for training and evaluating the ANFIS model.

The input variables depicted in Figure 13 show a combination of highly variable and relatively stable features, reflecting the multifaceted and sometimes unpredictable nature of real-world process data. This diversity underscores the need for robust and flexible modeling strategies—such as ANFIS—that are capable of learning complex, nonlinear, and context-dependent relationships in the presence of noisy or nonstationary inputs.

As shown, the majority of these variables exhibit marked dispersion and apparently random fluctuations throughout the entire sample set, without any discernible temporal trends, periodicities, or seasonality. This high degree of scatter highlights the complexity and nonstationary character of the system, emphasizing the modeling challenge posed by such input data.

Focusing on permeance and retentate volume, both variables display substantial variability and a wide dynamic range. These characteristics may reflect either a broad spectrum of operational scenarios—including changes in membrane properties, feed conditions, or process upsets—or the synthetic or experimental nature of the underlying dataset. The absence of smooth transitions or recurring patterns suggests that the data either samples a wide range of experimental data or intentionally incorporates variability to enhance the generality of the resulting model.

In sharp contrast, retentate pressure remains almost invariant across all samples, presenting only minor fluctuations around a stable mean value. This pattern is typical of processes where pressure is tightly controlled or regulated, ensuring that this variable does not introduce confounding effects in the modeling process. Such stability is often desirable in membrane systems to maintain separation efficiency and process safety.

Finally, retentate mix viscosity displays behavior that is generally stable, with values clustered around a central mean. Nonetheless, several isolated peaks are observed, which could be attributed to specific simulation scenarios, rare measurement anomalies, or occasional transient events in the process. These excursions, though infrequent, might provide a model with valuable information about edge-case behavior or process sensitivity.

Table 10 presents a comparative analysis considering different metrics using several , such as gaussian, sigmoidal, bell, and triangular. Likewise, datasets used are separated into 700 samples for training and 300 samples for validation. Full analysis can be consulted in Appendix A, in Table A4, Table A5 and Table A6. The results obtained show that sigmoidal has better performance. Furthermore, sigmoidal has only two parameters, which allows for a reduction in evaluation time.

Table 10.

Comparative Analysis of Membership Function Performance in an ANFIS Model.

Table 10.

Comparative Analysis of Membership Function Performance in an ANFIS Model.

| Delay | Eval Time (s) | MSETrain | RMSETrain | MAETrain | R2Train | MSETest | RMSETest | MAETest | R2Test | |

|---|---|---|---|---|---|---|---|---|---|---|

| Gaussian | 5 | 3.1722 | 7.4569 × 10−15 | 8.6354 × 10−8 | 4.3155 × 10−8 | 0.9998 | 3.4332 × 10−15 | 5.8594 × 10−8 | 3.6890 × 10−8 | 0.9999 |

| Sigmoidal | 4 | 0.0111 | 6.50 × 10−15 | 8.06 × 10−8 | 4.07 × 10−8 | 0.9998 | 5.83 × 10−15 | 7.64 × 10−8 | 4.39 × 10−8 | 0.9999 |

| Bell | 6 | 4.2737 | 7.2937 × 10−15 | 8.5403 × 10−8 | 4.4189 × 10−8 | 0.9998 | 3.8136 × 10−15 | 6.1755 × 10−8 | 3.9547 × 10−8 | 0.9999 |

| Triangular | 3 | 4.2068 | 5.4029 × 10−11 | 7.3505 × 10−6 | 3.328 × 10−6 | −0.258 | 5.9828 × 10−11 | 7.7348 × 10−6 | 3.5508 × 10−6 | −0.267 |

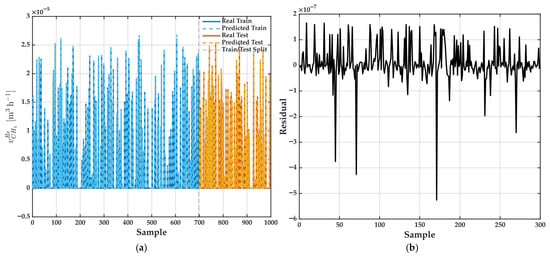

Once sigmoidal is selected, its graphical results are illustrated. Figure 14a presents a comparison between the experimental values and the predictions generated by the best ANFIS model (with = 4) for the across all samples. The used dataset is clearly divided by a vertical dashed line into two partitions: the initial 70% of samples are assigned to training, while the remaining 30% are reserved for testing and model validation. In both regions, model predictions (dashed lines) exhibit a remarkable alignment with actual experimental data (solid lines), capturing with high accuracy not only the general trends but also the intricate details—such as sharp peaks and deep valleys—that characterize the output signal.

Figure 14.

(a) Comparison between experimental (Real Train: solid blue line; Real Test: solid orange line) and predicted values (Predicted Train: dashed blue line; Predicted Test: dashed orange line) of obtained by the best ANFIS model ( = 4). The model is trained using 70% of the data and tested on the remaining 30%. Vertical dashed line indicates train/test split. (b) Residuals (prediction errors) for test set using best ANFIS model ( = 4).

A closer inspection reveals that the ANFIS model preserves this high fidelity even in segments where the process response is particularly volatile or shows abrupt fluctuations. This is especially noteworthy, as such regions typically pose significant challenges for both conventional and data-driven modeling approaches, often resulting in loss of precision or the introduction of systematic errors. Model’s consistent performance throughout both smooth and highly variable intervals suggests that it is not simply memorizing data (overfitting) but rather learning and internalizing the underlying process dynamics.

Moreover, near-perfect overlap between real and predicted curves in both training and testing subsets underscores the model’s ability to generalize to previously unseen data, which is critical for practical applications in real-world systems. This level of predictive accuracy, even under challenging conditions, highlights the effectiveness of the ANFIS approach when dealing with complex systems where interactions among variables are nonlinear, time-dependent, and potentially obscured by noise.

To complement this visual assessment, Figure 14b provides an in-depth analysis of the model’s predictive accuracy by displaying the residuals— pointwise errors between experimental and predicted values—over the entire test set. The vast majority of these residuals remain tightly clustered around zero, with only isolated instances where more substantial errors occur. Lack of persistent trends, recurring spikes, or clusters of large errors throughout the sample range indicates that the model does not introduce bias, nor does it fail systematically under any particular set of operating conditions. Such a balanced distribution of errors is a desirable attribute, as it suggests that the model’s occasional larger deviations are more likely attributable to inherent data noise or rare process events rather than to structural deficiencies in the model itself.

Another important aspect is that, despite the high degree of scatter and the presence of noise in both input and output variables (as illustrated in Figure 12 and Figure 13), the ANFIS model maintains robustness and does not suffer from degradation in predictive quality as data complexity increases. This robustness is particularly valuable in industrial settings, where sensor noise, process disturbances, and changes in operating regimes are common and can easily compromise the performance of less adaptive models.

Across all tested (see Table 11), ANFIS models achieved outstanding accuracy, with R2 values of 0.999 for both subsets and extremely low error metrics (on the order of 10−15 for MSE and less than 10−7 for RMSE). These results reflect both the suitability of selected variables and the ability of ANFIS to model complex, nonlinear processes.

Table 11.

Performance metrics for ANFIS models at different times, . Metrics Eval Time, MSE, RMSE, MAE, R2 for both the training and test datasets. Best result is highlighted in bold.

As increases from 1 to 20, only minor variations are observed in the error metrics. MSE and MAE remain low and relatively stable for between 1 and 9, with a gradual increase in error from = 10 onward. Best predictive performance in the test set is obtained with a of 4, where the lowest MSE and MAE values are recorded. This indicates that incorporating a moderate memory of previous samples enables the model to optimally capture temporal dependencies without introducing unnecessary complexity or overfitting.

To rigorously evaluate the model’s generalization capability, a cross-validation was performed, with the results summarized in Table 12. This analysis confirms the exceptional predictive performance and stability of the ANFIS architecture.

Table 12.

Performance metrics for ANFIS models with different time using cross-validation. Metrics Eval Time, MSE, RMSE, MAE, R2 for both the training and test datasets. The best result is highlighted in bold.

The results demonstrate outstanding and consistent accuracy for models with short time lags. For delays between = 1 and = 10, the models achieved average R2 values of 0.9998 and Mean Square Errors (MSE) on the order of 10−15, indicating high reliability regardless of the data partition.

However, a drastic degradation in performance is observed for lags greater than 10 ( > 10), where the R2 decreases and errors increase by several orders of magnitude. This behavior suggests that an excess of historical information introduces noise, causing model instability and a loss of its generalization capability.

Based on this analysis, the model with a time lag of = 3 is identified as the optimal configuration. It offers the best balance between near-perfect accuracy, demonstrated by the lowest average MSE (7.256 × 10−15) and computational efficiency, validating its robustness for real-time prediction and control applications.

The architecture of the optimal ANFIS model, corresponding to a time lag of = 3, is illustrated in Figure 15. This structure is notable for its parsimony; although neuro-fuzzy systems can house significant complexity, the preceding statistical analysis demonstrated that a model with a single fuzzy rule (Equation (38)) was sufficient to capture the dynamic process with exceptional precision. The rule follows a first-order Sugeno structure, where if the four input variables (Permeance, Retentate Volume, Retentate Pressure, and Retentate Mix Viscosity) meet their respective membership functions, then the output (methane volume in the retentate) is calculated as a linear combination of those inputs. The fact that such a simple architecture achieves such high performance underscores the effectiveness of the variable selection and the synergy between the mechanistic model and the neuro-fuzzy approach.

Figure 15.

Architecture of the single-rule ANFIS model for the optimal time lag (d = 3). This title specifies that the diagram represents the structure of the best-performing model identified through cross-validation, which uses a single fuzzy rule and a time delay of three samples.

To conclusively validate the robustness of this optimal model, Table 13 presents a detailed breakdown of the performance metrics for each of the five folds of the cross-validation. The results demonstrate remarkable stability and consistency. Across all folds, the coefficient of determination (R2) remains above 0.999, while the Mean Squared Error (MSE) and Mean Absolute Error (MAE) stay at extremely low orders of magnitude (10−15 and 10−8, respectively). The minimal deviation in these metrics across the different test subsets confirms that the model’s superior performance is not an artifact of a fortunate data partition but the result of a genuine generalization capability.

Table 13.

Cross-validation performance metrics by fold for the optimal ANFIS model ( = 3).

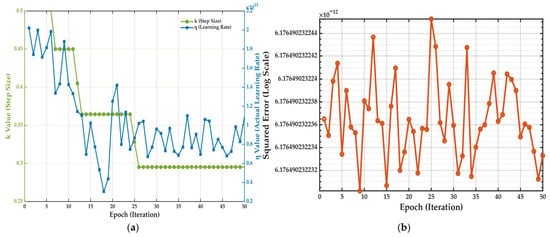

Figure 16a illustrates the evolution of the two main learning algorithm parameters: the step size (k), which is updated using heuristic rules proposed by Jang in [41], and the learning rate (η). Unlike an approach with fixed parameters, a dynamic behavior is observed. The learning rate (η), associated with the gradient descent method for nonlinear parameters, exhibits significant fluctuations throughout the 50 epochs. This indicates that the algorithm actively adjusts the magnitude of the updates to efficiently navigate the error surface. Simultaneously, the step size (k), associated with the adjustment of the linear parameters, decreases in a stepwise fashion, suggesting progressive stabilization as the model approaches the optimal solution.

Figure 16.

(a) Evolution of the adaptive step size (k) and learning rate (η) across 50 training epochs. (b) Squared error per epoch, illustrating the model’s convergence behavior.

Meanwhile, Figure 16b shows the evolution of the squared error per epoch. Notably, the error converges almost instantaneously to an extremely low error valley (on the order of 10−12) without showing a typical steep descent curve. The low-amplitude, high-frequency fluctuations observed from that point onward do not indicate instability but are a direct reflection of the micro-adjustments made by the adaptive learning rate shown in Figure 16a. This behavior demonstrates that after a very rapid initial convergence, the algorithm continues a fine-tuning process to ensure the model settles in a deep and stable error minimum.

Taken together, both graphs confirm the efficiency and sophistication of the hybrid training method. The adaptive mechanism allows for swift convergence and precise parameter adjustment, which justifies the superior performance and robustness of the final selected model.

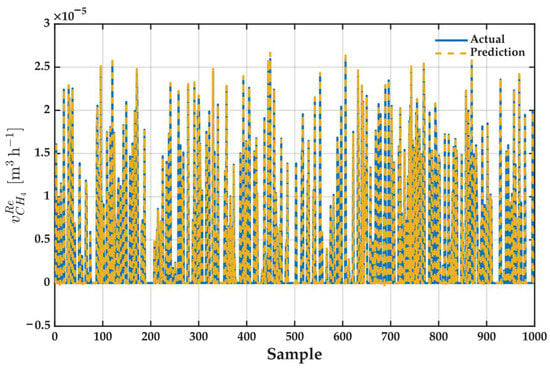

Figure 17 provides a compelling visual validation of the ANFIS model’s performance. It displays a near-perfect superposition of the actual methane volume in the retentate (solid blue line) and the values predicted by the model (dashed yellow line). This exceptional fit is maintained across the entire dataset, capturing with high fidelity not only the general trends but also the abrupt fluctuations and volatility of the process.

Figure 17.

Validation of the Optimal ANFIS Model’s Predictive Performance. The plot shows the superposition of the actual (solid blue) and model-predicted (dashed yellow) values for the methane volume in the retentate across the entire dataset, demonstrating a near-perfect fit.

The model’s ability to precisely track the sharp peaks and valleys demonstrates that it has successfully learned the complex, nonlinear dynamics of the membrane system. This excellent visual agreement is the graphical representation of the quantitative metrics previously reported, such as a coefficient of determination (R2) close to 0.999. In summary, the plot confirms the model’s robustness and high precision.

The viability of a model for real-time applications depends not only on its accuracy but also on its computational efficiency. Therefore, the inference latency of the optimal ANFIS model was evaluated, and the results are presented in Table 14. This analysis quantifies the time required by the model to generate predictions as a function of the number of samples processed simultaneously (in a batch).

Table 14.

Inference Time Analysis for the Optimal ANFIS Model.

For a single sample, the model demonstrates exceptional responsiveness, generating a prediction in just 0.3930 ms. This speed is fundamental for control systems that require immediate decisions. A key finding is the notable gain in efficiency achieved through batch processing; as the number of samples increases, the average inference time per sample decreases drastically. Specifically, when processing a batch of 100 samples, the average time per prediction is reduced to 0.0085 ms, representing an improvement of over 97% compared to individual processing.

It is observed that performance stabilizes for batches of 100 and 500 samples, indicating that the model’s maximum computational efficiency has been reached under these conditions. In addition to speed, the stability of the performance is notable, as the standard deviation significantly decreases for larger batches, which confirms that the prediction times are highly consistent and reliable. Taken together, these results confirm that the ANFIS model is computationally efficient, validating its implementation in real-time monitoring and control applications where response speed is a critical factor.

4. Conclusions

In this work, it has been demonstrated that combining a first-principles transport model with an ANFIS produces a tool that is both powerful and interpretable for modeling CH4/CO2 separation in HFMM. By systematically reducing eighteen candidate predictors to only four—permeance, retentate volume, retentate pressure, and mixture viscosity of the retentate—via Pearson correlation, VIF (with ridge regularization), and normalized regression diagnostics, it is also ensured a parsimonious input set that balances physical insight and statistical rigor. This hybrid framework not only preserves mechanistic transparency but also injects flexibility of data-driven learning, so that the “fuzzy” part of ANFIS remains strictly in the logic layer—never clouding our understanding of underlying physics!

While an initial analysis training on 70% of the simulated dataset and validating on the remaining 30% suggested the best ANFIS configuration was the one with a time lag of = 4, a more robust cross-validation was performed to get a more reliable estimate of the model’s generalization capability. This more rigorous method revealed that the model with a time lag of = 3 was the true optimum, and this final configuration achieved an R2 of 0.999 and an RMSE below 8 × 10−8 m3/h for retentate methane volume prediction. Such near-perfect agreement, across both smooth regimes and abrupt dynamics, confirms that the model is anything but “fuzzy” in its accuracy.

Validity is restricted by the assumptions and the training domain. For validation, permeances were assumed constant; strong dependencies on T, P, or composition (e.g., competitive sorption, plasticization, aging/moisture) or module non-idealities (maldistribution, external film resistances) not explicitly parameterized can degrade accuracy. The hydrodynamics are 1D and laminar (Hagen–Poiseuille) with non-deformable fibers; deviations are expected under transitional/turbulent flow, slip/Knudsen regimes at very low pressure, or fiber compaction at high pressure drop. PR-EOS and Wilke/Chapman–Enskog viscosity models are adequate across broad ranges but may require corrections near critical regions, under incipient condensation, or for strongly associating/heavy/impure mixtures (e.g., C3+, H2S, high humidity). The ANFIS was trained on physics-generated data. While performance was initially evaluated with a 70/30 train/test split, a cross-validation was also implemented to ensure a more reliable estimate of the model’s generalization capability. To this end, future work will focus on the development of a pilot-scale plant to obtain our own experimental data. This will allow for a direct validation of the model’s generalization to real-world conditions and enable further calibration of the intelligent system, bridging the gap between simulation and industrial application.

From an applied standpoint, this hybrid approach fully aligns with Industry 4.0 objectives: it reduces parameter uncertainty, adapts in real time to process disturbances, and serves as an interpretable “soft sensor” for advanced monitoring and control.

In this context, the interpretability of the ANFIS component provides actionable insight for process engineers in two key ways. First, as a decision-support tool, the ANFIS model distills the complex set of differential equations from the mechanistic model into a single, intuitive “IF-THEN” fuzzy rule. This rule acts as a transparent soft sensor, allowing an operator without modeling expertise to gain a qualitative, cause-and-effect understanding of how input variables (such as permeance or retentate pressure) influence the final methane volume, thereby guiding operational adjustments. Second, its simplicity and high computational speed make it the foundation for advanced process optimization. The model is designed to be integrated into an optimization loop coupled with bio-inspired algorithms, using its predictions within a cost function to determine the optimal operating conditions that maximize methane purity or recovery.

That a single membership function per input sufficed to capture complex nonlinearities underscores the wisdom of marrying white-box equations with neuro-fuzzy learning—delivering high precision without unnecessary model bloat. Moreover, this white-box model can be substituted by a data-collection system in physical plants, enabling empirical calibration and adjustment of the Intelligent Model under real operating conditions.

Looking ahead, integrating this ANFIS-enhanced model into multi-objective optimization routines or closed-loop control schemes promises to further boost the efficiency and resilience of gas separation processes. Specifically, and as a key priority for our next investigation, we will conduct the sensitivity analysis on fiber compaction. This analysis will quantify how potential reductions in fiber diameter impact pressure drop and CH4 recovery, thereby directly addressing the model’s utility for industrial scale-up. Future work could also explore online parameter adaptation under real plant noise, extension to multicomponent mixtures beyond CH4/CO2, and pilot-scale validation.

Author Contributions

Conceptualization, B.J.G.-S. and J.A.R.-H. methodology, J.A.R.-H. and B.J.G.-S.; software, B.J.G.-S.; validation, B.J.G.-S. and J.A.R.-H.; writing—original draft preparation, B.J.G.-S. and J.A.R.-H.; writing—review and editing, J.A.R.-H., A.Y.A., M.A.R.C., J.C.G.G., J.-L.R.-L. and F.J.R.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

First author acknowledges SECIHTY (formerly CONHACYT) with scholarship number [999970] for its support through a Ph.D. scholarship. The authors also thank the support to UNACAR and U. de G. to establish the academic collaboration network “Sistemas Avanzados, Inteligentes y Bioinspirados Aplicados a la Ingeniería, Tecnología y Control” for providing the facilities, resources, and collaborative environment to carry out this research project.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| AARD | Average Absolute Relative Deviation |

| ACO | Ant Colony Optimization |

| AI | Artificial Intelligence |

| ANFIS | Adaptive Neuro-Fuzzy Inference System |

| ANN/ANNs | Artificial Neural Network(s) |

| ARD | Average Relative Deviation |

| BDF | Backward Differentiation Formula(s) |

| BR | Bayesian Regularization |

| CFD | Computational Fluid Dynamics |

| CSA-LSSVM | Crow Search Algorithm–Least-Squares Support Vector Machine |

| Cu-BTC | Copper(II) benzene-1,3,5-tricarboxylate (HKUST-1) |

| DE | Differential Evolution |

| dsigmf | Difference-of-Sigmoids Membership Function |

| EUF | Electro-Ultrafiltration (Electro-assisted Ultrafiltration) |

| FCM | Fuzzy C-Means |

| FFBP-LM | Feed-Forward Backpropagation with Levenberg–Marquardt |

| FO | Forward Osmosis |

| FS | Fumed Silica |

| GA | Genetic Algorithm |

| gaussmf | Gaussian membership function |

| GMDH | Group Method of Data Handling |

| GP | Genetic Programming |

| GPU | Gas Permeation Unit |

| GWO | Gray Wolf Optimizer |

| HF | Hollow Fiber |

| HFMM | Hollow Fiber Membrane Module(s) |

| Jw | Water flux |

| Js | Reverse salt flux |

| LM | Levenberg–Marquardt (algorithm) |

| MAE | Mean Absolute Error |

| MAD | Mean Absolute Deviation |

| MD | Molecular dynamic |

| MF/MFs | Membership Function(s) |

| MMM | Mixed-Matrix Membrane |

| MLP | Multilayer Perceptron |

| MOFs | Metal–Organic Frameworks |

| MSE | Mean Square Error |

| NSE | Nash–Sutcliffe Efficiency |

| NP/NPs | Nanoparticle(s) |

| PBCC5 | Polyethersulfone, 5 kDa |

| PBSA | Poly(butylene succinate-co-adipate) |

| PBS | Poly(butylene succinate) |

| PDMS | Polydimethylsiloxane |

| PI | Performance Index |

| PL/PLs | Porous Liquid(s) |

| PLCC5 | Regenerated cellulose, 5 kDa |

| PMP | Polymethylpentene |

| PNN | Probabilistic Neural Network |

| POSS | Polyhedral Oligomeric Silsesquioxane |

| PR | Peng–Robinson |

| PS | Polystyrene |

| PSO | Particle Swarm Optimization |

| PVAc | Poly(vinyl acetate) |

| RF | Random Forest |

| RMSE | Root Mean Square Error |

| RSM | Response Surface Methodology |

| SAPO-34 | Silicoaluminophosphate-34 |

| SC | Subtractive Clustering |

| SGD | Stochastic Gradient Descent |

| TFC | Thin-Film Composite |

| TSK | Takagi–Sugeno–Kang (Sugeno fuzzy model) |

| VIF | Variance Inflation Factor |

Nomenclature

| Latin letters | |||

| PR parameter for pure component i, | |||

| MF slope/shape parameter, | |||

| PR mixture parameter, | |||

| PR parameter for pure component i, | |||

| PR mixture parameter, | |||

| Linear consequent coefficient (rule , input ), | |||

| MF center, | |||

| Consequent offset (rule ), | |||

| Time lag (delay), | |||

| Module (shell) diameter, | |||

| Inner fiber diameter, | |||

| Outer fiber diameter, | |||

| Mean squared error in ANFIS training, | |||

| Molar flow rate of component i, | |||