Abstract

In this paper, we study an iterative method for solving the multiple-set split feasibility problem: find a point in the intersection of a finite family of closed convex sets in one space such that its image under a linear transformation belongs to the intersection of another finite family of closed convex sets in the image space. In our result, we obtain a strongly convergent algorithm by relaxing the closed convex sets to half-spaces, using the projection onto those half-spaces and by introducing the extended form of selecting step sizes used in a relaxed CQ algorithm for solving the split feasibility problem. We also give several numerical examples for illustrating the efficiency and implementation of our algorithm in comparison with existing algorithms in the literature.

Keywords:

multiple-set split feasibility problem; relaxed CQ algorithm; subdifferential; strong convergence; Hilbert space

MSC:

47H09; 47J25; 65K10; 49J52

1. Introduction

1.1. Split Inverse Problem

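The Split Inverse Problem (SIP) is an archetypal model presented in ([1], Section 2), and it is stated as

$$\text{find a point } x^* \in X \text{ that solves IP1 such that } y^* = Ax^* \in Y \text{ solves IP2},$$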

where A is a bounded linear operator from a space X to another space Y, and IP1 and IP2 are two inverse problems posed in X and Y, respectively. Real-world inverse problems can be cast into this framework by making different choices of the spaces X and Y (including the case $X = Y$), and by choosing appropriate inverse problems for IP1 and IP2. For example, image restoration, computed tomography and intensity-modulated radiation therapy (IMRT) treatment planning generate functions that can be transformed to have an SIP model; see [2,3,4,5]. The split feasibility problem [6] and the multiple-set split feasibility problem [7] are the first instances of the SIP, where the two problems IP1 and IP2 are of the Convex Feasibility Problem (CFP) type [8]. In the SIP framework, many authors have studied cases in which IP1 and IP2 are convex feasibility problems, minimization problems, equilibrium problems, fixed point problems, null point problems and so on; see, for example, [2,3,5,9,10,11,12,13,14,15,16,17,18,19,20].

1.2. Split Feasibility Problem and Multiple-Set Split Feasibility Problem

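Let H be a real Hilbert space and let $T : H \to H$ be an operator. We say that T is $\rho$-strongly quasi-nonexpansive, where $\rho \geq 0$, if $\operatorname{Fix} T \neq \emptyset$ and

$$\|Tx - z\|^2 \leq \|x - z\|^2 - \rho \|Tx - x\|^2 \quad \text{for all } x \in H,\ z \in \operatorname{Fix} T. \tag{1}$$

If $\rho = 0$ in (1), then T is called a quasi-nonexpansive operator. If $\rho > 0$ in (1), then we say that T is strongly quasi-nonexpansive. Obviously, a nonexpansive operator having a fixed point is quasi-nonexpansive. If T is quasi-nonexpansive, then FixT is closed and convex. For $\lambda \geq 0$, denote by $T_\lambda := (1 - \lambda) I + \lambda T$ the $\lambda$-relaxation of T, where I is the identity operator and $\lambda$ is called the relaxation parameter. If T is quasi-nonexpansive, then usually one applies a relaxation parameter .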
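To make the notion of a relaxation concrete, the following Python sketch builds the relaxed operator in $\mathbb{R}^n$, assuming the standard definition $T_\lambda = (1-\lambda)I + \lambda T$. The helper names `relax` and `project_box` are hypothetical, and the box projection is only a convenient nonexpansive operator chosen for the demonstration; it is not taken from the paper.

```python
from typing import Callable, List

Vector = List[float]


def relax(T: Callable[[Vector], Vector], lam: float) -> Callable[[Vector], Vector]:
    """Return the lam-relaxation T_lam = (1 - lam) * I + lam * T of T."""
    def T_lam(x: Vector) -> Vector:
        Tx = T(x)
        return [(1.0 - lam) * xi + lam * ti for xi, ti in zip(x, Tx)]
    return T_lam


def project_box(x: Vector, lo: float = -1.0, hi: float = 1.0) -> Vector:
    """Metric projection onto the box [lo, hi]^n (a nonexpansive operator)."""
    return [min(max(xi, lo), hi) for xi in x]


x = [3.0, -0.5]
half = relax(project_box, 0.5)(x)  # midpoint between x and P(x)
full = relax(project_box, 1.0)(x)  # lam = 1 recovers P(x) itself
refl = relax(project_box, 2.0)(x)  # lam = 2 reflects x through P(x)
print(half, full, refl)
```

With $\lambda = 1$ the relaxation coincides with the operator itself, while values below or above 1 under- or over-relax the step from x towards Tx.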

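Let $H_1$ and $H_2$ be real Hilbert spaces and let $A : H_1 \to H_2$ be a bounded linear operator. Let $C_i$ ($i = 1, \ldots, N$) and $Q_j$ ($j = 1, \ldots, M$) be nonempty closed convex subsets of $H_1$ and $H_2$, respectively. The Multiple-Set Split Feasibility Problem (MSSFP), which was introduced by Censor et al. [7], is formulated as finding a point

$$x^* \in \bigcap_{i=1}^{N} C_i \quad \text{such that} \quad Ax^* \in \bigcap_{j=1}^{M} Q_j. \tag{2}$$

Denote by $\Omega$ the set of solutions for (2). The MSSFP (2) with $N = M = 1$ is known as the Split Feasibility Problem (SFP), which is formulated as finding a point

$$x^* \in C \quad \text{such that} \quad Ax^* \in Q, \tag{3}$$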

where C and Q are nonempty closed convex subsets of the real Hilbert spaces $H_1$ and $H_2$, respectively. The SFP was first introduced in 1994 by Censor and Elfving [6] in finite-dimensional Hilbert spaces for modeling inverse problems that arise from phase retrieval and medical image reconstruction. The SFP plays an important role in signal processing, image reconstruction, intensity-modulated radiation therapy, etc. [2,3,5,11]. Several iterative algorithms have been presented to solve the SFP and the MSSFP, provided a solution exists; see, for example, [3,6,21,22,23,24,25,26,27,28,29,30,31,32]. The algorithm proposed by Censor and Elfving [6] for solving the SFP requires computing the inverse of A at each iteration (assuming the inverse exists), which makes the algorithm inapplicable in practice. Most methods employ Landweber operators; see the definition and properties in [33]. In general, all of these methods produce sequences that converge weakly to a solution. Byrne [3] proposed the following iteration for solving the SFP, and called it the CQ-algorithm or the projected Landweber method:

where is arbitrary, is a -relaxation of the Landweber operator V (corresponding to ), i.e.,

where , denotes the adjoint of A, and is the spectral norm of . It is well known that the CQ algorithm (4) does not necessarily converge strongly to the solution of SFP in the infinite-dimensional Hilbert space. An important advantage of the algorithm by Byrne [3,21] is that computation of the inverse of A (matrix inverses) is not necessary. The Landweber operator is used for a more general type of problem called the split common fixed point problem of quasi-nonexpansive operators (see, for example, [10,34,35]), but the implementation of the Landweber-type algorithm generated requires prior knowledge of the operator norm. However, the operator norm is a global invariant and is often difficult to estimate; see, for example, the Theorem of Hendrickx and Olshevsky in [36]. To overcome this difficulty, Lopez et al. [2] introduced a new way of selecting the step sizes for solving the SFP (3) such that the information of the operator norm is not necessary. To be precise, Lopez et al. [2] proposed

where , , and . In addition, the computation of the projection on a closed convex set is not easy. In order to overcome this drawback, Yang [37] considered SFPs in which the involved sets C and Q are given as sub-level sets of convex functions, i.e.,

where and are convex and subdifferentiable functions on and , respectively, and that and are bounded operators (i.e., bounded on bounded sets). It is known that every convex function defined on a finite-dimensional Hilbert space is subdifferentiable and its subdifferential operator is a bounded operator (see [38]). In this situation, the efficiency of the method is extremely affected because, in general, the computation of projections onto such subsets is still very difficult. Motivated by Fukushima’s relaxed projection method in [39], Yang [37] suggested calculating the projection onto a half-space containing the original subset instead of the latter set itself. More precisely, Yang introduced a relaxed algorithm using a half-space relaxation projection method for solving SFP. The proposed algorithm by Yang is given as follows:

where , for each the set is given by

where , and the set is given by

where . Obviously, and are half-spaces and and for every . More importantly, since the projections onto and have closed forms, the relaxed algorithm is easily implemented. The specific form of the metric projections onto and can be found in [38,40,41].
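The closed form mentioned above can be sketched in a few lines of Python. The sketch assumes the usual relaxed half-space $\{x : c(x_n) + \langle \xi_n, x - x_n \rangle \leq 0\}$ with $\xi_n \in \partial c(x_n)$; the function name `project_halfspace` and its argument names are hypothetical, and the unit ball $c(x) = \|x\|^2 - 1$ is used only as a test case.

```python
from typing import List

Vector = List[float]


def project_halfspace(x: Vector, x_n: Vector, c_n: float, xi: Vector) -> Vector:
    """Project x onto {z : c_n + <xi, z - x_n> <= 0}.

    c_n is the value c(x_n) and xi is a subgradient of c at x_n.
    """
    violation = c_n + sum(g * (a - b) for g, a, b in zip(xi, x, x_n))
    norm_sq = sum(g * g for g in xi)
    if violation <= 0.0 or norm_sq == 0.0:
        return list(x)  # x already lies in the half-space
    step = violation / norm_sq
    return [a - step * g for a, g in zip(x, xi)]


# Test case: c(x) = ||x||^2 - 1, linearized at x_n = (2, 0).
x_n = [2.0, 0.0]
c_n = x_n[0] ** 2 + x_n[1] ** 2 - 1.0   # c(x_n) = 3
xi = [2.0 * x_n[0], 2.0 * x_n[1]]       # gradient of c at x_n
p = project_halfspace(x_n, x_n, c_n, xi)
inside = project_halfspace([0.5, 0.5], x_n, c_n, xi)  # point of the unit ball
print(p, inside)
```

The second call illustrates that a point of the original sub-level set is left unchanged, since the half-space contains that set.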

Many methods have been developed for solving the MSSFP (2); see, for example, [7,26,42,43,44,45,46,47,48,49] and references therein. We aim to propose a strongly convergent and efficient algorithm for the MSSFP that is easy to implement. Motivated by Yang [37], we are interested in solving the MSSFP (2) in which the involved sets $C_i$ ($i = 1, \ldots, N$) and $Q_j$ ($j = 1, \ldots, M$) are given as sub-level sets of convex functions, i.e.,

where and are convex functions for all , . We assume that each and are subdifferentiable on and , respectively, and that and are bounded operators (i.e., bounded on bounded sets). In what follows, we define half-spaces at point by

where , and

where .

This paper contributes to the development of algorithms for the MSSFP in the direction of half-space relaxation (assuming and are given as sub-level sets of convex functions (8)) and parallel computation of projections onto the half-spaces (9) and (10) without prior knowledge of the operator norm.

This paper is organized in the following way. In Section 2, we recall some basic and useful facts that will be used in the proof of our results. In Section 3, we introduce the extended form of the way of selecting step sizes used in the relaxed CQ algorithm for solving the SFP by [37] and Lopez et al. [2] to work for the MSSFP framework, and we analyze the strong convergence of our proposed algorithm. In Section 4, we give some numerical examples to discuss the performance of the proposed algorithm. Finally, we give some conclusions.

2. Preliminary

In this section, we recall some basic notions and useful results in a real Hilbert space H that are needed to prove our result. The symbols $\rightharpoonup$ and $\to$ denote weak and strong convergence, respectively.

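Let C be a nonempty closed convex subset of H. The metric projection on C is a mapping $P_C : H \to C$ defined by

$$P_C(x) = \arg\min\{\|y - x\| : y \in C\}, \quad x \in H.$$

Lemma 1.

[50] Let C be a closed convex subset of H. Given $x \in H$ and a point $z \in C$, then $z = P_C(x)$ if and only if

$$\langle x - z, y - z \rangle \leq 0 \quad \text{for all } y \in C.$$

The mapping $T : H \to H$ is firmly nonexpansive if

$$\|Tx - Ty\|^2 \leq \|x - y\|^2 - \|(I - T)x - (I - T)y\|^2 \quad \text{for all } x, y \in H,$$

which is equivalent to

$$\|Tx - Ty\|^2 \leq \langle Tx - Ty, x - y \rangle \quad \text{for all } x, y \in H.$$

If T is firmly nonexpansive, $I - T$ is also firmly nonexpansive. The metric projection $P_C$ on a closed convex subset C of H is firmly nonexpansive.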
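As a numerical sanity check of these two properties, the sketch below projects random points of $\mathbb{R}^3$ onto the closed unit ball and tests the standard inequalities $\langle x - P_C x, y - P_C x \rangle \leq 0$ for $y \in C$ and $\|P_C x - P_C w\|^2 \leq \langle P_C x - P_C w, x - w \rangle$. The choice of the ball, the sample size and all helper names are assumptions made for illustration only.

```python
import math
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(p * q for p, q in zip(a, b))


def sub(a: Vector, b: Vector) -> Vector:
    return [p - q for p, q in zip(a, b)]


def project_ball(x: Vector, radius: float = 1.0) -> Vector:
    """Metric projection onto the closed ball of the given radius."""
    norm = math.sqrt(dot(x, x))
    if norm <= radius:
        return list(x)
    return [radius * xi / norm for xi in x]


rng = random.Random(0)


def rand_vec(scale: float) -> Vector:
    return [rng.uniform(-scale, scale) for _ in range(3)]


tol = 1e-12  # slack for floating-point rounding
ok_characterization = True
ok_firmly_nonexpansive = True
for _ in range(1000):
    x, w = rand_vec(3.0), rand_vec(3.0)
    px, pw = project_ball(x), project_ball(w)
    y = project_ball(rand_vec(3.0))  # an arbitrary point of C
    ok_characterization &= dot(sub(x, px), sub(y, px)) <= tol
    d = sub(px, pw)
    ok_firmly_nonexpansive &= dot(d, d) <= dot(d, sub(x, w)) + tol
print(ok_characterization, ok_firmly_nonexpansive)
```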

Definition 1.

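The subdifferential of a convex function $f : H \to \mathbb{R}$ at $x \in H$, denoted by $\partial f(x)$, is defined by

$$\partial f(x) = \{\xi \in H : f(y) \geq f(x) + \langle \xi, y - x \rangle \ \text{for all } y \in H\}.$$

If $\partial f(x) \neq \emptyset$, f is said to be subdifferentiable at x. If the function f is continuously differentiable, then $\partial f(x) = \{\nabla f(x)\}$, where $\nabla f(x)$ is the gradient of f at x.

Definition 2.

The function $f : H \to \mathbb{R}$ is called weakly lower semi-continuous at $x$ if the weak convergence of a sequence $\{x_n\}$ to $x$ implies

$$f(x) \leq \liminf_{n \to \infty} f(x_n).$$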

A function that is weakly lower semi-continuous at each point of H is called weakly lower semi-continuous on H.

Lemma 2.

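[3,51] Let $H_1$ and $H_2$ be real Hilbert spaces and let $f : H_1 \to \mathbb{R}$ be given by $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$, where Q is a closed convex subset of $H_2$ and $A : H_1 \to H_2$ is a bounded linear operator. Then

- (i) The function f is convex and weakly lower semi-continuous on $H_1$;

- (ii) $\nabla f(x) = A^*(I - P_Q)Ax$ for $x \in H_1$;

- (iii) $\nabla f$ is $\|A\|^2$-Lipschitz, i.e., $\|\nabla f(x) - \nabla f(y)\| \leq \|A\|^2 \|x - y\|$ for all $x, y \in H_1$.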
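The gradient formula of this lemma can be checked numerically in finite dimensions. The following sketch assumes the standard proximity function $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$ with gradient $A^T(I - P_Q)Ax$, takes Q to be the box $[0,1]^3$, and compares the formula with central finite differences. The matrix, the test point and all function names are hypothetical choices for illustration.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Compute A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A: Matrix, y: Vector) -> Vector:
    """Compute A^T y (the adjoint of A applied to y)."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def project_box(y: Vector, lo: float = 0.0, hi: float = 1.0) -> Vector:
    """Metric projection onto the box Q = [lo, hi]^m."""
    return [min(max(v, lo), hi) for v in y]


def residual(A: Matrix, x: Vector) -> Vector:
    """Compute (I - P_Q) A x."""
    Ax = matvec(A, x)
    return [u - p for u, p in zip(Ax, project_box(Ax))]


def f(A: Matrix, x: Vector) -> float:
    return 0.5 * sum(v * v for v in residual(A, x))


def grad_f(A: Matrix, x: Vector) -> Vector:
    return rmatvec(A, residual(A, x))


A = [[2.0, 0.0], [1.0, 1.0], [0.0, -1.0]]
x = [1.0, 0.5]
h = 1e-6
fd_grad = []
for j in range(len(x)):
    xp = [v + (h if k == j else 0.0) for k, v in enumerate(x)]
    xm = [v - (h if k == j else 0.0) for k, v in enumerate(x)]
    fd_grad.append((f(A, xp) - f(A, xm)) / (2.0 * h))
print(f(A, x), grad_f(A, x), fd_grad)
```

Evaluating the gradient needs only products with A and its transpose and one projection onto Q; no matrix inverse is involved.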

Lemma 3.

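[27,52] Let C and Q be closed convex subsets of real Hilbert spaces $H_1$ and $H_2$, respectively, and let $f : H_1 \to \mathbb{R}$ be given by $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$, where $A : H_1 \to H_2$ is a bounded linear operator. Then for $\lambda > 0$ and $\bar{x} \in H_1$ the following statements are equivalent.

- (i) The point $\bar{x}$ solves the SFP (3), i.e., $\bar{x} \in C$ and $A\bar{x} \in Q$;

- (ii) The point $\bar{x}$ is a fixed point of the mapping $P_C(I - \lambda \nabla f)$, i.e., $\bar{x} = P_C(\bar{x} - \lambda \nabla f(\bar{x}))$.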
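The fixed-point characterization can be observed on a toy problem. The sketch below runs a projected-gradient (CQ-type) iteration $x_{n+1} = P_C(x_n - \tau_n \nabla f(x_n))$ with the norm-free step size $\tau_n = \rho f(x_n)/\|\nabla f(x_n)\|^2$ in the spirit of Lopez et al. [2], and then checks that the limit satisfies $x = P_C(x - \lambda \nabla f(x))$. The sets (boxes), the operator $A = (1\ 1)$, the value $\rho = 1$ and all function names are assumptions for illustration; this is not the HSRPA of this paper.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A: Matrix, y: Vector) -> Vector:
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def project_box(v: Vector, lo: float, hi: float) -> Vector:
    return [min(max(t, lo), hi) for t in v]


def grad_and_value(A: Matrix, x: Vector, q_box: Tuple[float, float]):
    """Return (f(x), grad f(x)) for f(x) = 0.5 * ||(I - P_Q) A x||^2."""
    Ax = matvec(A, x)
    r = [a - p for a, p in zip(Ax, project_box(Ax, *q_box))]
    return 0.5 * sum(v * v for v in r), rmatvec(A, r)


def cq_adaptive(A, x0, c_box, q_box, rho=1.0, max_iter=200):
    """CQ-type iteration whose step size needs no estimate of ||A||."""
    x = list(x0)
    for _ in range(max_iter):
        f_val, g = grad_and_value(A, x, q_box)
        g_sq = sum(v * v for v in g)
        if g_sq == 0.0:  # gradient vanishes: A x already lies in Q
            break
        tau = rho * f_val / g_sq
        x = project_box([xi - tau * gi for xi, gi in zip(x, g)], *c_box)
    return x


A = [[1.0, 1.0]]                      # A x = x1 + x2
c_box, q_box = (0.0, 2.0), (3.0, 4.0)  # C = [0,2]^2, Q = [3,4]
x_star = cq_adaptive(A, [0.0, 0.0], c_box, q_box)

# Fixed-point residual of P_C(I - lam * grad f) at the computed point.
lam = 0.5
f_star, g_star = grad_and_value(A, x_star, q_box)
fixed = project_box([xi - lam * gi for xi, gi in zip(x_star, g_star)], *c_box)
fp_residual = max(abs(a - b) for a, b in zip(fixed, x_star))
print(x_star, f_star, fp_residual)
```

On this example the residual $(I - P_Q)Ax_n$ halves at every step, so the iterates approach the point (1.5, 1.5), which lies in C and is mapped into Q.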

Lemma 4.

[53] Let H be a real Hilbert space. Then, for all and , we have

- (i)

- (ii)

- (iii)

Lemma 5.

[54] Let be the sequence of nonnegative numbers such that

where is a sequence of real numbers bounded from above and and . Then it holds that

3. Half-Space Relaxation Projection Algorithm

In this section, we propose an iterative algorithm to solve the MSSFP (2). To make our algorithm more efficient and easier to implement, we assume that the convex sets and are given in the form of (8) and we use projections onto the half-spaces and defined in (9) and (10), respectively, instead of onto and , just as in the relaxed or inexact methods in [5,37,39,55]. Moreover, in order to remove the need for an estimate of the operator norm and to solve the MSSFP when finding the operator norm is not easy, we introduce a new way of selecting the step sizes for solving the MSSFP (2), given as follows for , where and are the half-spaces defined in (9) and (10).

- (i)

- For each and , define

- (ii)

- and are defined as and so where such that for each ,

- (iii)

- For each and , define

From Aubin [51], and are convex, weakly lower semi-continuous and differentiable for each and . Now, using , , , , and given in (i)–(iii) above, and assuming that the solution set of the MSSFP (2) is nonempty, we propose and analyze the strong convergence of our algorithm, called the Half-Space Relaxation Projection Algorithm.

Note that the iterative scheme in Algorithm 1 (HSRPA) is established in a way that and are computed, i.e., the projections and are computed, in a parallel setting under simple assumptions on the step sizes.

Algorithm 1: Half-Space Relaxation Projection Algorithm (HSRPA).

Initialization: Choose u, . Let the positive real constants , and (), and the real sequences , and satisfy the following conditions:

Iterative Step: Proceed with the following computations:

Lemma 6.

If at some iterate n in HSRPA, then is the solution of MSSFP (2).

Proof.

implies

Thus, one can get for all and . Since , we get for all . Combined with the fixed point relation Lemma 3 (ii), we also get that for all . Following the representations of the sets and in (9) and (10) we obtain that for all and for all , and this implies that for all and for all , which completes the proof. ☐

By Lemma 6, we can conclude that the HSRPA terminates at some iterate n when . Otherwise, if the HSRPA does not stop, then we have the following strong convergence theorem for the approximation of the solution of the problem of MSSFP (2).

Theorem 1.

The sequence generated by HSRPA converges strongly to the solution point of MSSFP (2) () where .

Proof.

Using definition of and Lemma 4, we have

Using convexity of , we have

From (16) and (C5), we have

Using (17), Lemma 4 and the definition of , we get

From (18) and the definition of , we get

which shows that is bounded. Consequently, , and are all bounded.

Now,

and

From the definition of , we have

That is,

where

We know that is bounded and so it is bounded below. Hence, is bounded below. Furthermore, using Lemma 5 and (C3), we have

Therefore, is a finite real number and by (C3), we have

Since is bounded, there exists a subsequence of such that for some and

Since is bounded and is finite, we have that is bounded. Also, by (C4), we have and so we have that is bounded.

Observe from (C3) and (C4), we have

Therefore, we obtain from (20) and that

From the definition of , we have

and

Hence,

Now, using (16), we obtain

Again using (C5) together with (30) yields

Hence, in view of (31) and restriction condition (C2), we have

for all .

For each and for each , and are Lipschitz continuous with constant and 1, respectively. Since the sequence is bounded and

we have the sequences and , which are bounded. Hence, we have bounded and hence is bounded. Consequently, from (32), we have

From the definition of , we can have

That is, for all , , we have

Therefore, since is bounded and from the boundedness assumption of the subdifferential operator , the sequence is bounded. In view of this and (35), for all we have

Similarly, from the boundedness of and (35), for all we obtain

Since and using (28), we have and hence .

The weak lower semi-continuity of and (36) implies that

That is, for all .

Likewise, the weak lower semi-continuity of and (37) implies that

That is, for all . Hence, .

Take . Then, we obtain from (26) and Lemma 1 that

Then we have from (25) that

Therefore, and this implies that converges strongly to . This completes the proof. ☐

Remark 1.

- i. When the point u in HSRPA is taken to be 0, from Theorem 1, we see that the limit point of the sequence is the unique minimum norm solution of the MSSFP, i.e.,

- ii. In the algorithm (HSRPA), the stepsize can also be replaced by

where

The proof for the strong convergence of the HSRPA using the stepsize defined in (38) is almost the same as the proof of Theorem 1. To be precise, only slight rearrangement in (14) is required in the proof of Theorem 1.

If , we have the following algorithm as a consequence of HSRPA concerning the SFP (3), assuming that C and Q are given as sub-level sets of convex functions (5), constructing the half-spaces (6) and (7), and defining , , and .

Corollary 1.

Assume that Then, the sequence generated by Algorithm 2 converges strongly to the solution of SFP (3).

Algorithm 2: Algorithm for solving the SFP.

Initialization: Choose u, . Let the positive real constants and , and the real sequences , and satisfy the following conditions:

Iterative Step: Proceed with the following computations:

4. Preliminary Numerical Results and Applications

In this section, we illustrate the numerical performance and applicability of HSRPA by solving some problems. In the first example, we investigate the numerical performance of HSRPA with regard to different choices of the control parameters and . In Example 2, we illustrate the numerical properties of HSRPA in comparison with three other strongly convergent algorithms, namely the gradient projection method (GPM) by Censor et al. ([7], Algorithm 1), the perturbed projection method (PPM) by Censor et al. ([43], Algorithm 5), and the self-adaptive projection method (SAPM) by Zhao and Yang ([46], Algorithm 3.2). As mentioned in Remark 1, the stepsize in HSRPA can be replaced by the stepsize (38). Therefore, in Example 3, we analyze the effect of the two stepsizes in HSRPA for different choices of and . Additionally, we compare HSRPA with the algorithm of Zhao et al. ([48], Algorithm 2.1). Also, a comparison of Algorithm 2 with the strong convergence result for the SFP proposed by Shehu et al. [56] is given in Example 4. Finally, in Section 4.1, we present a sparse signal recovery experiment to illustrate the efficiency of Algorithm 2 by comparing it with the algorithms proposed by Lopez et al. [2] and Yang [37]. The numerical experiments were run on a standard TOSHIBA laptop with an Intel(R) Core(TM) i5-2450M CPU @ 2.5 GHz and 4 GB of memory. The program is implemented in MATLAB R2020a.

Example 1.

Consider MSSFP (2) for , , given by , where is matrix, the closed convex subsets () of are given by

where such that

- for all , where r is a positive real number,

- for each ,

and the closed convex subsets () of are given by

where

such that for each ;

- ,

- ,

- , and , where θ is a nonzero real number.

Notice that, for , , contains infinitely many points for , for , and r is a natural number. Moreover, , and .

We consider for , , , and , where r is a natural number and is the identity matrix. Thus, , and hence the solution set of the MSSFP is .

For each and , the subdifferential is given by

and

Note that the projection

where is obtained by solving the following quadratic programming problem with an inequality constraint

where , , , , . Moreover, the projection for where is obtained by solving the following quadratic programming problem with an inequality constraint

where , , , , for . The problems (39) and (40) can be solved effectively with an appropriate solver in MATLAB. In the following experiments we took

and

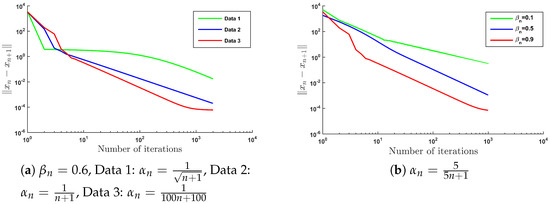

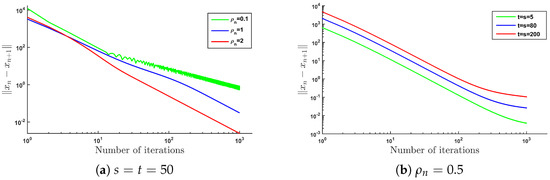

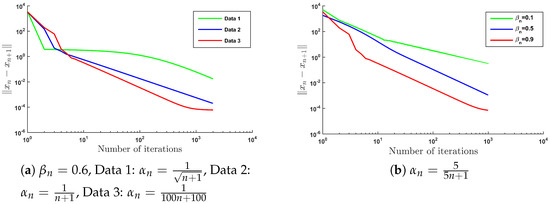

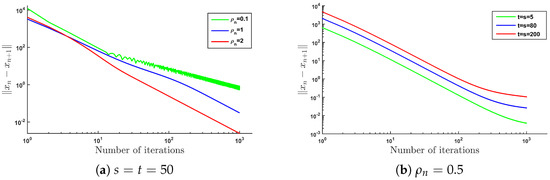

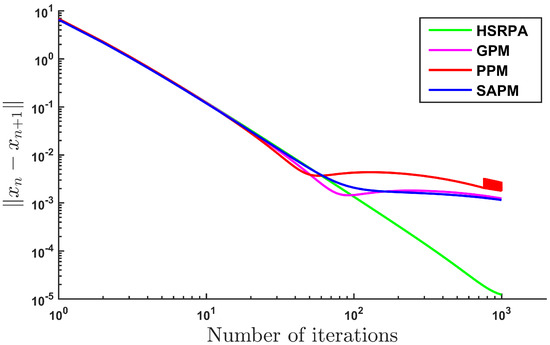

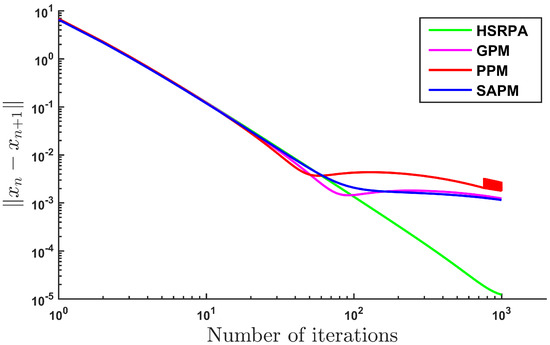

We study the numerical behavior of HSRPA for different parameters , , and for different dimensions , where (i.e., ), , for all are fixed. Notice that for the choice of the parameter is chosen in such a way that and . The numerical results are shown in Figure 1 and Figure 2.

Figure 1.

For , , and for randomly generated starting points and u.

Figure 2.

For , , and for randomly generated starting points and u.

- (i) A sequence in which the terms are very close to zero;

- (ii) A sequence in which the terms are very close to 1;

- (iii) A sequence in which the terms are larger but do not exceed 4.

Example 2.

Comparing HSRPA with GPM, PPM and SAPM.

Consider MSSFP (2) for , , given by , the closed convex subsets () of are given by

where θ is a real constant, is identity matrix and

such that and , and the closed convex subsets () of are given by

where , and for each

For each choice of θ the solution set of the MSSFP is , i.e., .

We choose GPM, PPM and SAPM because the problem under consideration is the same and the approach has some common features with our approach.

GPM: The proposed iterative algorithm for solving the MSSFP reduces to

where , and for , and ω is the spectral radius of .

PPM: The proposed algorithm is obtained by replacing the projections onto the closed convex subsets and in (41) by projections onto the half-spaces and .

SAPM: The proposed iterative algorithm for solving the MSSFP reduces to

where , for , , , , .

In our algorithm we took for each and

and for each

For the purpose of comparison we took the following data:

HSRPA:, for , , , .

GPM:, for , for .

PPM:, for , for .

SAPM:, , for , for .

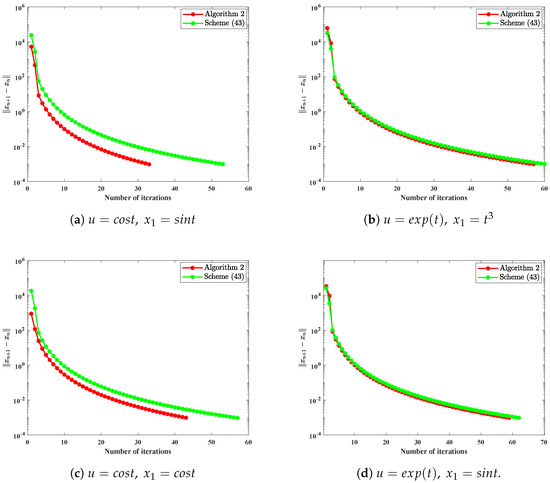

The numerical results are shown in Table 1 and Figure 3. To compare the four algorithms in terms of the number of iterations (Iter(n)) and the execution time in seconds (CPUt(s)), we use the condition that the relative difference is less than ϵ as the stopping criterion in Table 1.

Table 1.

For , and .

Figure 3.

For and starting points (in HSRPA) and .

From the numerical results in Table 1 and Figure 3 of Example 2, we can see that our algorithm (HSRPA) performs better than GPM, PPM and SAPM. To be specific, HSRPA converges faster and requires fewer iterations than GPM, PPM and SAPM. In terms of CPU execution time, our algorithm has performance comparable with that of GPM, PPM and SAPM.

Example 3.

Consider the MSSFP with and () and (), with a different number of feasible sets N and M, and dimensions s and t. We randomly generated the operator , with . In this example, we examine the effect of the different choices of and on the numerical results of the HSRPA using both stepsizes and (given in (38)), and we also compare the HSRPA with the algorithm proposed by Zhao et al. ([48], Algorithm 2.1). For the HSRPA we take , , , , and , . For ([48], Algorithm 2.1), we take , , , , , and , . We use as the stopping criterion. The results are presented in Table 2 below.

Table 2.

Comparative result of the Half-Space Relaxation Projection Algorithm (HSRPA) (with two different stepsizes and ) with ([48], Algorithm 2.1) for different choices of and

Interestingly, it can be observed from Table 2 that, for , HSRPA with the stepsize is faster, requiring fewer iterations and less CPU time, than HSRPA with the stepsize . On the contrary, HSRPA () has better performance for . Moreover, HSRPA with either of the stepsizes converges faster and requires fewer iterations than the compared algorithm ([48], Algorithm 2.1).

Example 4.

Consider the Hilbert space with norm and the inner product given by . The two nonempty, closed and convex sets are and , and the linear operator is given as , i.e., or is the identity. The orthogonal projections onto C and Q have explicit formulas; see, for example, [57]

We consider the following problem

It is clear that Problem (42) has a nonempty solution set Ω since . In this case, the iterative scheme in Algorithm 2 (with ) becomes

where

for

In this example, we compare Algorithm 2 with the strong convergence result of SFP proposed by Shehu et al. [56]. The iterative scheme (27) in [56] for with , and was reduced into the following form

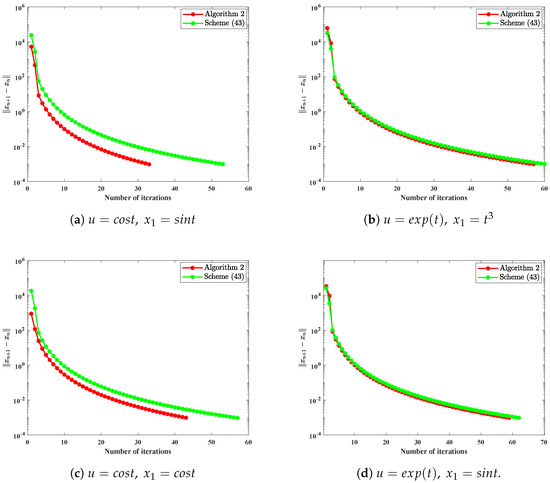

We see here that our iterative scheme can be implemented to solve the problem (42) considered in this example. We use as the stopping criterion for both algorithms, and the outcome of the numerical experiment is reported in Figure 4. It can be observed from Figure 4 that, for different choices of u and , Algorithm 2 requires fewer iterations and less CPU time than the algorithm proposed by Shehu et al. [56].

Figure 4.

Comparison of Algorithm 2 and algorithm by Shehu [56] for different choices of u and .

4.1. Application to Signal Recovery

In this part, we consider the problem of recovering a noisy sparse signal. Compressed sensing can be modeled as the following linear equation:

where is a vector with L nonzero components to be recovered, is the measured data with noise (when , there is no noise in the observed data) and A is a bounded linear observation operator with (). The problem in Equation (44) can be seen as the LASSO problem, which has wide application in signal processing theory [58].

where is a given constant. It can be observed that (45) indicates the potential of finding a sparse solution of the SFP (3) due to the constraint. Thus, we apply Algorithm 2 to solve problem (45).

Let and then the minimization problem (45) can be seen as an SFP (3). Denote the level set by,

where with the convex function .

For a special case where Algorithm 2 converges to the solution of (45) and moreover, since the projection onto the level set has an explicit formula, Algorithm 2 can be easily implemented. In the sequel, we present a sparse signal recovery experiment to illustrate the efficiency of Algorithm 2 by comparing with algorithms proposed by Lopez [2] and Yang [37].
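The explicit projection referred to here can be sketched as follows, assuming the level set is the half-space $\{x : c(x_n) + \langle \xi_n, x - x_n \rangle \leq 0\}$ built from $c(x) = \|x\|_1 - t$ and the subgradient $\xi_n = \operatorname{sign}(x_n)$ (componentwise, with $\operatorname{sign}(0) = 0$). The symbol t for the constraint level and the function names are assumptions made for this illustration.

```python
from typing import List

Vector = List[float]


def l1_subgradient(x: Vector) -> Vector:
    """One subgradient of the l1 norm at x (componentwise sign)."""
    return [1.0 if v > 0 else -1.0 if v < 0 else 0.0 for v in x]


def project_relaxed_l1(y: Vector, x_n: Vector, t: float) -> Vector:
    """Project y onto {z : c(x_n) + <xi_n, z - x_n> <= 0}, c(x) = ||x||_1 - t."""
    xi = l1_subgradient(x_n)
    c_n = sum(abs(v) for v in x_n) - t
    violation = c_n + sum(g * (a - b) for g, a, b in zip(xi, y, x_n))
    norm_sq = sum(g * g for g in xi)
    if violation <= 0.0 or norm_sq == 0.0:
        return list(y)  # y already satisfies the linearized constraint
    step = violation / norm_sq
    return [a - step * g for a, g in zip(y, xi)]


x_n = [3.0, -1.0, 0.0]
p = project_relaxed_l1(x_n, x_n, t=2.0)                   # current iterate
kept = project_relaxed_l1([1.0, 0.0, 0.5], x_n, t=2.0)    # point with l1 norm <= t
print(p, kept)
```

Each projection costs one pass over the vector, which is the practical advantage over projecting onto the $\ell_1$ ball itself.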

The vector x is a sparse signal with L non-zero elements generated from the uniform distribution on the interval . The matrix A is generated from a normal distribution with zero mean and unit variance. The observation b is generated by adding white Gaussian noise with signal-to-noise ratio . The process is started with , and u are randomly generated vectors. The goal is then to recover the sparse signal x by solving (45). The restoration accuracy is measured by the mean squared error as follows:

where is an estimated signal of x, and is a given small constant. We take as the stopping criterion and choose the corresponding parameters for Algorithm 2. We also choose and for the algorithm by Yang [37] and the algorithm by Lopez et al. [2], respectively.
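For reference, the error measure and the stopping test can be sketched as below. The sketch assumes the common normalization $\mathrm{MSE} = \frac{1}{N}\|x^* - x\|^2$, where N is the signal length; the normalization used in the experiments may differ, and the function name `mse` and the tolerance value are hypothetical.

```python
from typing import List

Vector = List[float]


def mse(estimate: Vector, truth: Vector) -> float:
    """Mean squared error (1/N) * ||estimate - truth||^2 (assumed normalization)."""
    if len(estimate) != len(truth):
        raise ValueError("signals must have the same length")
    return sum((a - b) ** 2 for a, b in zip(estimate, truth)) / len(truth)


truth = [1.0, 0.0, 0.0, -2.0]      # toy sparse signal
estimate = [0.5, 0.0, 0.0, -2.0]   # toy recovered signal
error = mse(estimate, truth)
eps = 1e-1                         # hypothetical tolerance
stop = error < eps                 # stopping test of the form MSE < eps
print(error, stop)
```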

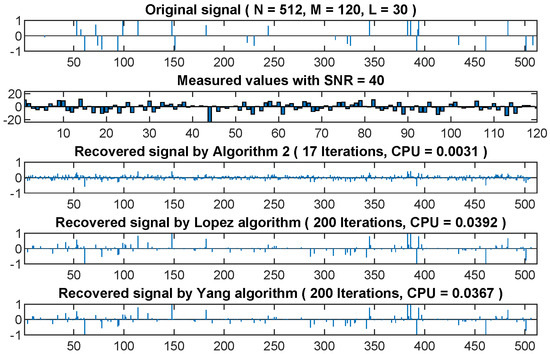

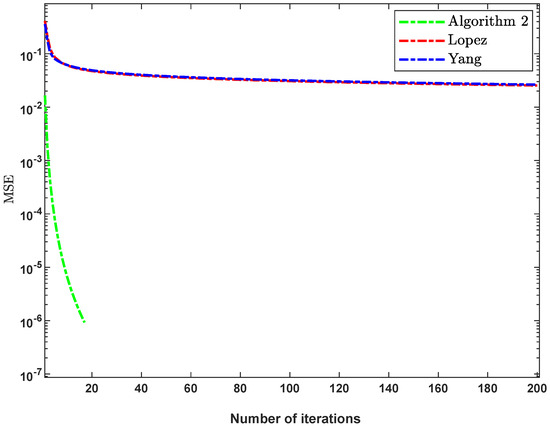

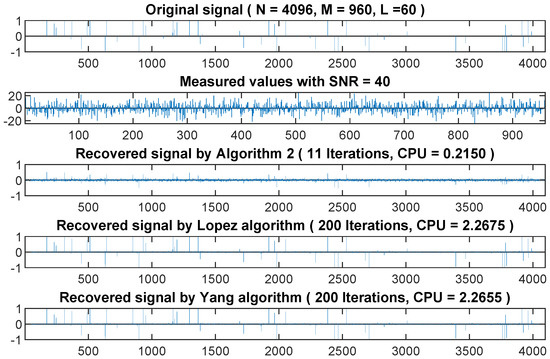

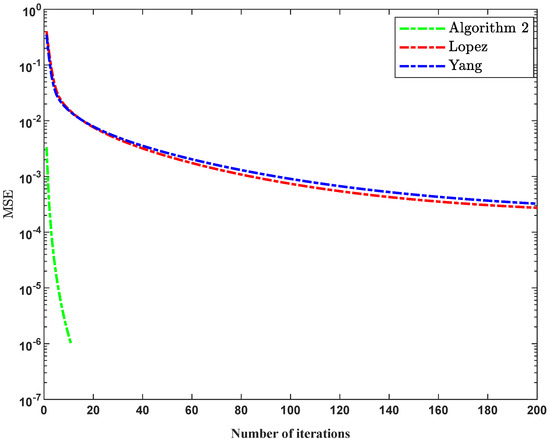

It can be observed from Figure 5, Figure 6, Figure 7 and Figure 8 that the proposed algorithm requires fewer iterations and attains a lower MSE. Therefore, the quality of the signal recovered by the proposed algorithm is better than that of the compared algorithms.

Figure 5.

The original sparse signal versus the recovered sparse signal by Algorithm 2, algorithms by Lopez [2] and Yang [37] when and .

Figure 6.

MSE versus the number of iterations, and the comparison of Algorithm 2 with that of algorithms by Lopez [2] and Yang [37] when and .

Figure 7.

The original sparse signal versus the recovered sparse signal by Algorithm 2, algorithms by Lopez [2] and Yang [37] when and .

Figure 8.

MSE versus the number of iterations, and the comparison of Algorithm 2 with that of algorithms by Lopez [2] and Yang [37] when and .

5. Conclusions

In this paper, we present a strongly convergent iterative algorithm for solving the MSSFP, with a way of selecting the stepsizes such that the implementation of the algorithm does not need any prior information about the operator norm. Preliminary numerical results are reported to illustrate the numerical behavior of our algorithm (HSRPA), and to compare it with well-known algorithms in the literature, including Censor et al. ([7], Algorithm 1), Censor et al. ([43], Algorithm 5), Zhao and Yang ([46], Algorithm 3.2) and Zhao et al. ([48], Algorithm 2.1). The numerical results show that our proposed algorithm is practical and promising for solving the MSSFP. Algorithm 2 is applied to signal recovery. The experimental results show that Algorithm 2 requires fewer iterations than the algorithms proposed by Yang [37], Lopez et al. [2] and Shehu et al. [56].

Author Contributions

Conceptualization, G.H.T. and K.S.; data curation, A.G.G.; formal analysis, G.H.T.; funding acquisition, P.K.; investigation, K.S.; methodology, K.S.; project administration, P.K.; resources, P.K.; software, A.G.G.; supervision, P.K.; validation, G.H.T. and P.K.; visualization, A.G.G.; writing—original draft, G.H.T.; writing—review and editing, A.G.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), Faculty of Science, KMUTT. The first author was supported by the Petchra Pra Jom Klao Ph.D. Research Scholarship from King Mongkut’s University of Technology Thonburi with Grant No. 37/2561.

Acknowledgments

The authors acknowledge the financial support provided by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), KMUTT. Guash Haile Taddele is supported by the Petchra Pra Jom Klao Ph.D. Research Scholarship from King Mongkut’s University of Technology Thonburi (Grant No.37/2561). Moreover, Kanokwan Sitthithakerngkiet was supported by the Faculty of Applied Science, King Mongkut’s University of Technology, North Bangkok. Contract no. 6342101.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323. [Google Scholar] [CrossRef]

- López, G.; Martín-Márquez, V.; Wang, F.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004. [Google Scholar] [CrossRef]

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2003, 20, 103. [Google Scholar] [CrossRef]

- Combettes, P. The convex feasibility problem in image recovery. In Advances in Imaging and Electron Physics; Elsevier: Amsterdam, The Netherlands, 1996; Volume 95, pp. 155–270. [Google Scholar]

- Qu, B.; Xiu, N. A note on the CQ algorithm for the split feasibility problem. Inverse Probl. 2005, 21, 1655. [Google Scholar] [CrossRef]

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239. [Google Scholar] [CrossRef]

- Censor, Y.; Elfving, T.; Kopf, N.; Bortfeld, T. The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 2005, 21, 2071. [Google Scholar] [CrossRef]

- Censor, Y.; Lent, A. Cyclic subgradient projections. Math. Program. 1982, 24, 233–235. [Google Scholar] [CrossRef]

- Byrne, C.; Censor, Y.; Gibali, A.; Reich, S. The split common null point problem. arXiv 2011, arXiv:1108.5953. [Google Scholar]

- Censor, Y.; Segal, A. The split common fixed point problem for directed operators. J. Convex Anal. 2009, 16, 587–600. [Google Scholar]

- Dang, Y.; Gao, Y.; Li, L. Inertial projection algorithms for convex feasibility problem. J. Syst. Eng. Electron. 2012, 23, 734–740. [Google Scholar] [CrossRef]

- He, Z. The split equilibrium problem and its convergence algorithms. J. Inequalities Appl. 2012, 2012, 162. [Google Scholar] [CrossRef]

- Taiwo, A.; Jolaoso, L.O.; Mewomo, O.T. Viscosity approximation method for solving the multiple-set split equality common fixed-point problems for quasi-pseudocontractive mappings in Hilbert spaces. J. Ind. Manag. Optim. 2017, 13. [Google Scholar] [CrossRef]

- Aremu, K.O.; Izuchukwu, C.; Ogwo, G.N.; Mewomo, O.T. Multi-step iterative algorithm for minimization and fixed point problems in p-uniformly convex metric spaces. J. Manag. Optim. 2017, 13. [Google Scholar] [CrossRef]

- Oyewole, O.K.; Abass, H.A.; Mewomo, O.T. A strong convergence algorithm for a fixed point constrained split null point problem. Rend. Circ. Mat. Palermo Ser. 2 2020, 13, 1–20. [Google Scholar]

- Izuchukwu, C.; Ugwunnadi, G.C.; Mewomo, O.T.; Khan, A.R.; Abbas, M. Proximal-type algorithms for split minimization problem in P-uniformly convex metric spaces. Numer. Algorithms 2019, 82, 909–935. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Alakoya, T.O.; Taiwo, A.; Mewomo, O.T. Inertial extragradient method via viscosity approximation approach for solving equilibrium problem in Hilbert space. Optimization 2020, 1–20. [Google Scholar] [CrossRef]

- He, S.; Wu, T.; Cho, Y.J.; Rassias, T.M. Optimal parameter selections for a general Halpern iteration. Numer. Algorithms 2019, 82, 1171–1188. [Google Scholar] [CrossRef]

- Dadashi, V.; Postolache, M. Forward–backward splitting algorithm for fixed point problems and zeros of the sum of monotone operators. Arab. J. Math. 2020, 9, 89–99. [Google Scholar] [CrossRef]

- Yao, Y.; Leng, L.; Postolache, M.; Zheng, X. Mann-type iteration method for solving the split common fixed point problem. J. Nonlinear Convex Anal. 2017, 18, 875–882. [Google Scholar]

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441. [Google Scholar] [CrossRef]

- Dang, Y.; Gao, Y. The strong convergence of a KM–CQ-like algorithm for a split feasibility problem. Inverse Probl. 2010, 27, 015007. [Google Scholar] [CrossRef]

- Jung, J.S. Iterative algorithms based on the hybrid steepest descent method for the split feasibility problem. J. Nonlinear Sci. Appl. 2016, 9, 4214–4225. [Google Scholar] [CrossRef]

- Wang, F.; Xu, H.K. Cyclic algorithms for split feasibility problems in Hilbert spaces. Nonlinear Anal. Theory Methods Appl. 2011, 74, 4105–4111.

- Xu, H.K. An iterative approach to quadratic optimization. J. Optim. Theory Appl. 2003, 116, 659–678.

- Xu, H.K. A variable Krasnosel’skii–Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 2006, 22, 2021.

- Xu, H.K. Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 2010, 26, 105018.

- Yu, X.; Shahzad, N.; Yao, Y. Implicit and explicit algorithms for solving the split feasibility problem. Optim. Lett. 2012, 6, 1447–1462.

- Shehu, Y.; Mewomo, O.T.; Ogbuisi, F.U. Further investigation into approximation of a common solution of fixed point problems and split feasibility problems. Acta Math. Sci. 2016, 36, 913–930.

- Shehu, Y.; Mewomo, O.T. Further investigation into split common fixed point problem for demicontractive operators. Acta Math. Sin. Engl. Ser. 2016, 32, 1357–1376.

- Mewomo, O.T.; Ogbuisi, F.U. Convergence analysis of an iterative method for solving multiple-set split feasibility problems in certain Banach spaces. Quaest. Math. 2018, 41, 129–148.

- Dong, Q.L.; Tang, Y.C.; Cho, Y.J.; Rassias, T.M. “Optimal” choice of the step length of the projection and contraction methods for solving the split feasibility problem. J. Glob. Optim. 2018, 71, 341–360.

- Cegielski, A. Landweber-type operator and its properties. Contemp. Math. 2016, 658, 139–148.

- Cegielski, A. General method for solving the split common fixed point problem. J. Optim. Theory Appl. 2015, 165, 385–404.

- Cegielski, A.; Reich, S.; Zalas, R. Weak, strong and linear convergence of the CQ-method via the regularity of Landweber operators. Optimization 2020, 69, 605–636.

- Hendrickx, J.M.; Olshevsky, A. Matrix p-norms are NP-hard to approximate if p ≠ 1,2,∞. SIAM J. Matrix Anal. Appl. 2010, 31, 2802–2812.

- Yang, Q. The relaxed CQ algorithm solving the split feasibility problem. Inverse Probl. 2004, 20, 1261.

- Bauschke, H.H.; Borwein, J.M. On projection algorithms for solving convex feasibility problems. SIAM Rev. 1996, 38, 367–426.

- Fukushima, M. A relaxed projection method for variational inequalities. Math. Program. 1986, 35, 58–70.

- Alakoya, T.O.; Jolaoso, L.O.; Mewomo, O.T. Modified inertial subgradient extragradient method with self adaptive stepsize for solving monotone variational inequality and fixed point problems. Optimization 2020, 1–30.

- Jolaoso, L.O.; Taiwo, A.; Alakoya, T.O.; Mewomo, O.T. A unified algorithm for solving variational inequality and fixed point problems with application to the split equality problem. Comput. Appl. Math. 2020, 39, 38.

- Buong, N. Iterative algorithms for the multiple-sets split feasibility problem in Hilbert spaces. Numer. Algorithms 2017, 76, 783–798.

- Censor, Y.; Motova, A.; Segal, A. Perturbed projections and subgradient projections for the multiple-sets split feasibility problem. J. Math. Anal. Appl. 2007, 327, 1244–1256.

- Latif, A.; Vahidi, J.; Eslamian, M. Strong convergence for generalized multiple-set split feasibility problem. Filomat 2016, 30, 459–467.

- Masad, E.; Reich, S. A note on the multiple-set split convex feasibility problem in Hilbert space. J. Nonlinear Convex Anal. 2007, 8, 367.

- Zhao, J.; Yang, Q. Self-adaptive projection methods for the multiple-sets split feasibility problem. Inverse Probl. 2011, 27, 035009.

- Zhao, J.; Yang, Q. Several acceleration schemes for solving the multiple-sets split feasibility problem. Linear Algebra Appl. 2012, 437, 1648–1657.

- Zhao, J.; Yang, Q. A simple projection method for solving the multiple-sets split feasibility problem. Inverse Probl. Sci. Eng. 2013, 21, 3537–3546.

- Yao, Y.; Postolache, M.; Zhu, Z. Gradient methods with selection technique for the multiple-sets split feasibility problem. Optimization 2019, 69, 269–281.

- Osilike, M.O.; Isiogugu, F.O. Weak and strong convergence theorems for nonspreading-type mappings in Hilbert spaces. Nonlinear Anal. Theory Methods Appl. 2011, 74, 1814–1822.

- Aubin, J.P. Optima and Equilibria: An Introduction to Nonlinear Analysis; Springer Science & Business Media: Berlin, Germany, 2013; Volume 140.

- Ceng, L.C.; Ansari, Q.H.; Yao, J.C. An extragradient method for solving split feasibility and fixed point problems. Comput. Math. Appl. 2012, 64, 633–642.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: Berlin/Heidelberg, Germany, 2011; Volume 408.

- Maingé, P.E.; Măruşter, Ş. Convergence in norm of modified Krasnoselski–Mann iterations for fixed points of demicontractive mappings. Appl. Math. Comput. 2011, 217, 9864–9874.

- He, B. Inexact implicit methods for monotone general variational inequalities. Math. Program. 1999, 86, 199–217.

- Shehu, Y. Strong convergence result of split feasibility problems in Banach spaces. Filomat 2017, 31, 1559–1571.

- Cegielski, A. Iterative Methods for Fixed Point Problems in Hilbert Spaces; Springer: Berlin/Heidelberg, Germany, 2012; Volume 2057.

- Gibali, A.; Liu, L.W.; Tang, Y.C. Note on the modified relaxation CQ algorithm for the split feasibility problem. Optim. Lett. 2018, 12, 817–830.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).