Abstract

Multilevel thresholding is one of the most crucial aspects of image segmentation. However, its computational complexity increases as the number of thresholds grows. To address this problem, this paper proposes a new multilevel thresholding approach, the Evolutionary Arithmetic Optimization Algorithm (DAOA), based on the Arithmetic Optimization Algorithm (AOA), which is inspired by the basic arithmetic operators of mathematics. DAOA employs the Differential Evolution technique to enhance the local search of AOA. The proposed algorithm is applied to the multilevel thresholding problem using Kapur's entropy as the objective function. DAOA is evaluated on eight standard test images from two groups: natural images and COVID-19 CT images. The peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) are used as standard measures of the accuracy of the segmented images. The efficiency of DAOA is evaluated and compared with other multilevel thresholding methods for 2, 3, 4, 5, and 6 thresholds. According to the experimental results, DAOA outperforms the comparative approaches and produces higher-quality solutions, with better segmented images and higher PSNR and SSIM values. In addition, DAOA ranked first overall in all test cases.

1. Introduction

Thresholding is one of the most widely used image segmentation techniques. It is divided into two types: bi-level and multilevel [1,2]. Multilevel thresholding is used to segment complex images; it uses several thresholds (e.g., tri-level or quad-level thresholding) to divide the pixels into several homogeneous classes according to their gray levels. Bi-level thresholding divides the image into two classes, whereas multilevel thresholding divides it into more than two classes [3,4]. When an image contains only two dominant gray levels, bi-level thresholding yields acceptable results; when it is extended to multilevel thresholding, however, its main drawback is the time-consuming computation [5]. Moreover, bi-level thresholding cannot precisely find the optimal threshold in a complex image because of the slight variation between the target and the background [6,7].

Image segmentation is used in medical imaging, machine vision, and satellite photography [8,9,10]. Its primary aim is to divide an image into regions that are relevant to a specific task. In pattern recognition systems, segmentation is the process of finding and isolating points of interest from the rest of the scene [11,12]. After segmentation, features are extracted from the objects, and the objects are then grouped into specific categories or classes according to the extracted features. In medical applications, segmentation is used to detect organs, such as the brain, heart, lungs, and liver, in CT or MR images [13,14], and to distinguish abnormal tissue, such as a tumor, from healthy tissue. Depending on the application, image segmentation techniques such as image thresholding, edge detection, region growing, stochastic models, artificial neural networks (ANNs), and clustering have all been used [15,16].

The Tsallis, Kapur, and Otsu methods are the most widely used thresholding strategies [17,18]. The Otsu method maximizes the between-class variance function to find the optimal thresholds, while the Kapur method maximizes the posterior entropy of the segmented classes. When an exhaustive search is used, the computational complexity of the Tsallis and Otsu methods grows exponentially as the number of thresholds increases [19]. Over the years, many researchers have tackled image segmentation using a variety of approaches and algorithms [20]. Examples of the optimization algorithms that have been used include the Bat Algorithm (BA) [21], Firefly Algorithm (FA) [22], Genetic Algorithm (GA) [23], Gray Wolf Optimizer (GWO) [24,25], Dragonfly Algorithm (DA) [26], Moth–Flame Optimization Algorithm (MFO) [27], Marine Predators Algorithm (MPA) [28], Arithmetic Optimization Algorithm (AOA) [29], Aquila Optimizer (AO) [30], Krill Herd Optimizer (KHO) [31], Harris Hawks Optimizer (HHO) [32], Red Fox Optimization Algorithm (RFOA) [33], Artificial Bee Colony Algorithm (ABC) [34], and Artificial Ecosystem-based Optimization [35]. Many other optimizers can be found in [36,37].
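To illustrate why exhaustive search becomes impractical, the following minimal Python sketch counts the candidate threshold vectors that an exhaustive search would have to evaluate for an 8-bit image. It assumes $L = 256$ gray levels and $K$ distinct thresholds chosen in increasing order from gray levels 1 to 255, so the count is the binomial coefficient $\binom{255}{K}$; the function name is illustrative only.

```python
from math import comb


def num_threshold_sets(num_thresholds: int, gray_levels: int = 256) -> int:
    """Number of strictly increasing threshold vectors t1 < ... < tK drawn
    from gray levels 1..L-1, i.e. the size of an exhaustive search."""
    return comb(gray_levels - 1, num_thresholds)


for k in range(2, 7):
    print(k, num_threshold_sets(k))
```

For $K = 2$ the count is 32,385, but for $K = 6$ it is 359,895,314,625, before even accounting for the cost of evaluating the objective function for each candidate.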

In [38], the recent Elephant Herding Optimization Algorithm was adapted for multilevel thresholding using Kapur's and Otsu's methods. Its performance was compared with that of four other swarm intelligence algorithms on standard benchmark images, and it outperformed the other methods in the literature and proved more stable. Sahlol et al. [39] introduced an improved hybrid method for COVID-19 images that combines the strengths of convolutional neural networks (CNNs), used to extract features, with the MPA feature selection algorithm, used to choose the most important features. The proposed method outperformed several CNNs and other well-known methods on COVID-19 images.

The multi-verse optimizer (MVO), based on the multi-verse theory, is a recent algorithm for solving real-world multi-parameter optimization problems. A novel parallel multi-verse optimizer (PMVO) with a coordination approach is proposed in [40]. At predefined iterations, the parallel process randomly splits the original solutions into multiple groups and exchanges information among the groups. This significantly improves cooperation among the individual MVO populations and reduces the shortcomings of the original MVO algorithm, such as premature convergence, search stagnation, and easy trapping in local optima. To validate its efficiency, PMVO was compared with other methods on the CEC2013 test suite, and the experimental findings show that it outperforms the other algorithms under consideration. PMVO was also applied to complex multilevel image segmentation problems using minimum cross-entropy thresholding, where it achieved better-quality segmentation than related algorithms.

A modified artificial bee colony optimizer (MABC) for image segmentation is proposed in [41]; it balances exploration and exploitation by using a pool of optimal foraging strategies. The main goal of MABC is to improve the foraging behavior of artificial bees by integrating local search with detailed learning, using a multi-dimensional PSO-based equation. With detailed learning, the bees incorporate knowledge of the global best solution into the solution search equation to improve exploration. At the same time, local search allows the bees to thoroughly exploit the promising region, providing a good balance between exploration and exploitation. Experiments comparing MABC with several popular evolutionary algorithms (EAs) and swarm intelligence (SI) algorithms on a series of benchmarks verified the feasibility and efficacy of the proposed algorithm.

A novel multilevel thresholding algorithm based on the meta-heuristic Krill Herd Optimization (KHO) algorithm is proposed in [42] for solving the image segmentation problem. The optimal threshold values are calculated by using KHO to maximize Kapur's or Otsu's objective function, which reduces the time needed to find the best multilevel thresholds. Various benchmark images are used to illustrate the applicability and numerical performance of KHO-based multilevel thresholding. To demonstrate its superior performance, a detailed comparison was performed with other bio-inspired multilevel thresholding techniques, such as Bacterial Foraging (BF), Particle Swarm Optimization (PSO), Genetic Algorithm (GA), and Moth-Flame Optimization (MFO). The results confirmed that the proposed method outperformed these methods.

A modified version of the Manta Ray Foraging Optimizer (MRFO) algorithm is presented in [43] to deal with global optimization and multilevel image segmentation problems. MRFO is a meta-heuristic technique that simulates the food-searching behavior of manta rays, and its performance is improved by using fractional-order (FO) calculus during the exploitation phase. A variety of natural images were used to assess FO-MRFO, and, according to different performance measures, FO-MRFO outperformed the compared algorithms in both global optimization and image segmentation.

The term “optimization” refers to the process of identifying the best solutions to a problem subject to its constraints [44,45]. In image segmentation, optimization is the process of finding the best threshold values for a given image. Swarm intelligence (SI) algorithms are widely used in multilevel thresholding to determine the optimal threshold values with various objective functions, which overcomes the computational inefficiency of traditional thresholding techniques. The primary motivation of this paper is to find the optimal threshold values for image segmentation problems. A second motivation is that, in some cases, the original AOA becomes trapped in local optima and converges prematurely. Therefore, this paper proposes an improved version of the Arithmetic Optimization Algorithm (AOA), called DAOA, which uses Differential Evolution to tackle these weaknesses and enhance the performance of AOA. The proposed DAOA is evaluated on eight standard test images from different groups: two color images, two gray images, two normal CT images, and two confirmed COVID-19 CT images. The peak signal-to-noise ratio (PSNR), the structural similarity index (SSIM), and the fitness function (Kapur's entropy) are used to assess the accuracy of the segmented images. The efficiency of DAOA is evaluated and compared with other multilevel thresholding methods for 2, 3, 4, 5, and 6 thresholds. According to the experimental results, DAOA outperforms the other approaches and produces higher-quality solutions.
These encouraging findings suggest that the DAOA-based thresholding strategy is a promising and useful approach.

The rest of this paper is organized as follows. Section 2 presents the procedure of the proposed DAOA method. Section 3 presents the definitions and procedures of the image segmentation problem. The experiments and results are given in Section 4. Finally, in Section 5, the conclusions and potential future work directions are given.

2. The Proposed Method

In this section, we present the conventional Arithmetic Optimization Algorithm (AOA), Differential Evolution (DE), and the proposed Evolutionary Arithmetic Optimization Algorithm (DAOA).

2.1. Arithmetic Optimization Algorithm (AOA)

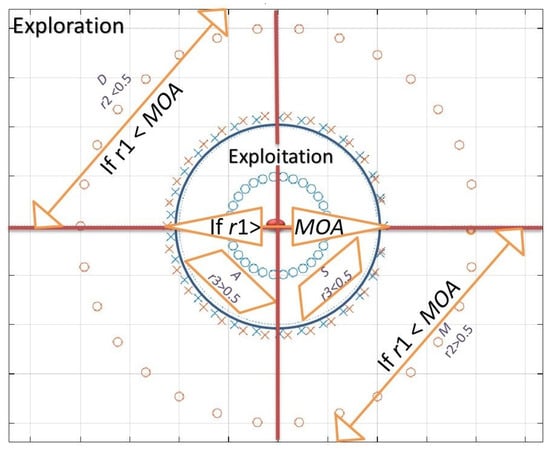

In this section, we describe the exploration and exploitation phases of the original AOA [29], which is inspired by the main arithmetic operators of mathematics (i.e., multiplication (M), division (D), subtraction (S), and addition (A)). The main search phases of AOA are shown in Figure 1 and described in the following subsections.

Figure 1.

The search phases of the Arithmetic Optimization Algorithm.

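Before each search step, the AOA must choose its search process (i.e., exploration or exploitation). This choice is governed by the Math Optimizer Accelerated (MOA) function, a coefficient calculated by Equation (1):

$$\mathrm{MOA}(C_{\mathrm{Iter}}) = \mathrm{Min} + C_{\mathrm{Iter}} \times \left(\frac{\mathrm{Max} - \mathrm{Min}}{M_{\mathrm{Iter}}}\right) \tag{1}$$

where $\mathrm{MOA}(C_{\mathrm{Iter}})$ is the value of the MOA function at the $C_{\mathrm{Iter}}$-th iteration, $C_{\mathrm{Iter}}$ is the current iteration, which lies in $[1, M_{\mathrm{Iter}}]$, $M_{\mathrm{Iter}}$ is the maximum number of iterations, and Min and Max are the minimum and maximum values of the accelerated function, respectively. A random number $r_1 \in [0, 1]$ is compared with MOA: if $r_1 > \mathrm{MOA}$, the exploration phase is performed; otherwise, the exploitation phase is performed.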
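Equation (1) and the phase-selection test can be sketched in Python as follows. The defaults `moa_min = 0.2` and `moa_max = 0.9` are assumptions (values commonly used with AOA), not settings stated in this section, and `choose_phase` is a hypothetical helper name for comparing a random number $r_1$ with MOA.

```python
def moa(c_iter: int, m_iter: int, moa_min: float = 0.2, moa_max: float = 0.9) -> float:
    """Math Optimizer Accelerated value (Eq. 1); grows linearly toward moa_max."""
    return moa_min + c_iter * (moa_max - moa_min) / m_iter


def choose_phase(c_iter: int, m_iter: int, r1: float) -> str:
    """Hypothetical helper: exploration if r1 > MOA, otherwise exploitation."""
    return "exploration" if r1 > moa(c_iter, m_iter) else "exploitation"


print(moa(1, 100), moa(100, 100))
```

Because MOA grows linearly with the iteration counter, a uniform $r_1$ exceeds it (triggering exploration) with high probability early in the run and with low probability near the end.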

2.1.1. Exploration Phase

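The exploration operators of AOA are modeled in Equation (2). The exploration phase, which is performed when $r_1 > \mathrm{MOA}$, uses the D or M operator: the D operator is applied when $r_2 < 0.5$, and the M operator is applied otherwise, where $r_2$ is a random number in $[0, 1]$. The position update is determined as follows:

$$x_{i,j}(C_{\mathrm{Iter}}+1) = \begin{cases} \mathrm{best}(x_j) \div (\mathrm{MOP} + \epsilon) \times \big((UB_j - LB_j) \times \mu + LB_j\big), & r_2 < 0.5 \\ \mathrm{best}(x_j) \times \mathrm{MOP} \times \big((UB_j - LB_j) \times \mu + LB_j\big), & \text{otherwise} \end{cases} \tag{2}$$

where $x_i(C_{\mathrm{Iter}}+1)$ is the $i$-th solution at the next iteration, $x_{i,j}(C_{\mathrm{Iter}})$ is the $j$-th position of the $i$-th solution at the current iteration, and $\mathrm{best}(x_j)$ is the $j$-th position of the best solution obtained so far. $\epsilon$ is a small positive number, $UB_j$ and $LB_j$ are the upper and lower bounds of the $j$-th position, respectively, and $\mu$ is a control parameter (0.5) that tunes the exploration search. The Math Optimizer Probability (MOP) coefficient is calculated as follows:

$$\mathrm{MOP}(C_{\mathrm{Iter}}) = 1 - \frac{C_{\mathrm{Iter}}^{1/\alpha}}{M_{\mathrm{Iter}}^{1/\alpha}} \tag{3}$$

where $\mathrm{MOP}(C_{\mathrm{Iter}})$ is the value of the MOP coefficient at the $C_{\mathrm{Iter}}$-th iteration, $M_{\mathrm{Iter}}$ is the maximum number of iterations, and $\alpha$ is a sensitive parameter (5) that defines the exploitation accuracy over the iterations.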
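A minimal sketch of the exploration update (Equation (2)) together with the MOP coefficient, $\mathrm{MOP} = 1 - C_{\mathrm{Iter}}^{1/\alpha}/M_{\mathrm{Iter}}^{1/\alpha}$, is given below. It assumes per-dimension bound lists `lb` and `ub`, uses $\mu = 0.5$ and $\alpha = 5$ as stated in the text, and clips new positions to the bounds, which is an assumption rather than part of Equation (2). The function is meant to be called only when the exploration condition ($r_1 > \mathrm{MOA}$) holds.

```python
import random


def mop(c_iter, m_iter, alpha=5.0):
    """Math Optimizer Probability: decreases from about 1 toward 0."""
    return 1.0 - c_iter ** (1.0 / alpha) / m_iter ** (1.0 / alpha)


def explore(best, lb, ub, c_iter, m_iter, mu=0.5, alpha=5.0, eps=1e-12, rng=random):
    """AOA exploration around the best solution: D operator if r2 < 0.5,
    otherwise M operator; results are clipped to [lb_j, ub_j] (assumed)."""
    p = mop(c_iter, m_iter, alpha)
    new = []
    for j, b in enumerate(best):
        scale = (ub[j] - lb[j]) * mu + lb[j]
        if rng.random() < 0.5:
            v = b / (p + eps) * scale  # division (D) operator
        else:
            v = b * p * scale          # multiplication (M) operator
        new.append(min(max(v, lb[j]), ub[j]))
    return new


print(explore([100.0, 150.0], [0, 0], [255, 255], 10, 100, rng=random.Random(0)))
```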

2.1.2. Exploitation Phase

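The exploitation phase, which is performed when $r_1 \le \mathrm{MOA}$, uses the S and A operators. The subtraction (S) operator is applied when $r_3 < 0.5$, and the addition (A) operator is applied otherwise, where $r_3$ is a random number in $[0, 1]$, as represented in Equation (4):

$$x_{i,j}(C_{\mathrm{Iter}}+1) = \begin{cases} \mathrm{best}(x_j) - \mathrm{MOP} \times \big((UB_j - LB_j) \times \mu + LB_j\big), & r_3 < 0.5 \\ \mathrm{best}(x_j) + \mathrm{MOP} \times \big((UB_j - LB_j) \times \mu + LB_j\big), & \text{otherwise} \end{cases} \tag{4}$$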
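The exploitation update of Equation (4) can be sketched in the same style. This sketch assumes per-dimension bound lists `lb` and `ub`, $\mu = 0.5$, $\alpha = 5$, and clipping to the bounds (an assumption, not part of Equation (4)); it is meant to be called only when $r_1 \le \mathrm{MOA}$.

```python
import random


def exploit(best, lb, ub, c_iter, m_iter, mu=0.5, alpha=5.0, rng=random):
    """AOA exploitation: S operator if r3 < 0.5, otherwise A operator."""
    p = 1.0 - c_iter ** (1.0 / alpha) / m_iter ** (1.0 / alpha)  # MOP coefficient
    new = []
    for j, b in enumerate(best):
        step = p * ((ub[j] - lb[j]) * mu + lb[j])
        v = b - step if rng.random() < 0.5 else b + step  # subtraction or addition
        new.append(min(max(v, lb[j]), ub[j]))            # keep inside the bounds
    return new


print(exploit([100.0, 150.0], [0, 0], [255, 255], 90, 100, rng=random.Random(0)))
```

Since MOP decreases to 0 at the final iteration, the step shrinks over time; when `c_iter == m_iter`, the update returns the best solution unchanged, which concentrates the late search around the best solution.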

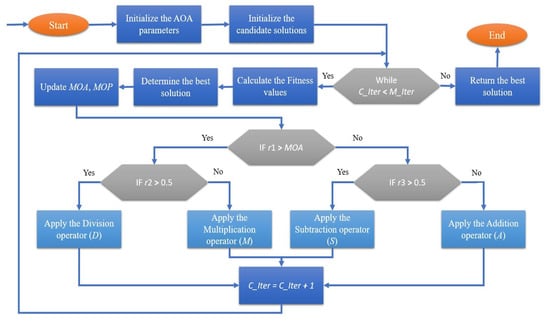

The detailed procedure of AOA is shown in Figure 2.

Figure 2.

Flowchart of the conventional AOA.

2.2. Differential Evolution (DE)

Storn and Price introduced DE in 1997 [46] to solve a wide range of optimization problems. DE stands out for its versatility, quick execution time, rapid convergence, and fast and accurate local operators [47,48]. In DE, the optimization process begins by randomly generating solutions that cover as much of the search space as possible (initialization phase). The solutions are then improved using the mutation and crossover operators, and a new solution is accepted if it has a better objective value. For the current solution $x_i$, the mutation operator is modeled as follows:

$$z_i = x_{r_1} + F \times \big(x_{r_2} - x_{r_3}\big) \tag{5}$$

where $r_1$, $r_2$, and $r_3$ are random indices of distinct solutions different from $i$, and $F > 0$ is the mutation scaling factor.
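The DE/rand/1 mutation of Equation (5) can be sketched as follows. Solutions are plain Python lists, $F = 0.5$ is an illustrative default rather than a value from this section, and the three donor indices are assumed to be distinct and different from $i$, as is usual in DE; this requires a population of at least four solutions.

```python
import random


def mutate(pop, i, F=0.5, rng=random):
    """DE/rand/1 mutation (Eq. 5): z_i = x_r1 + F * (x_r2 - x_r3).
    r1, r2, r3 are distinct indices, all different from i."""
    others = [k for k in range(len(pop)) if k != i]
    r1, r2, r3 = rng.sample(others, 3)
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]


population = [[10.0, 40.0], [20.0, 60.0], [30.0, 90.0], [50.0, 120.0]]
print(mutate(population, 0, rng=random.Random(0)))
```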

For the crossover operator, Equation (6) produces the new solution $v_i$ by mixing the components of the mutant vector $z_i$ and the current solution $x_i$:

$$v_{i,j} = \begin{cases} z_{i,j}, & r_j \le CR \\ x_{i,j}, & \text{otherwise} \end{cases} \tag{6}$$

where $r_j$ is a random number in $[0, 1]$ and $CR$ is the crossover probability.

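DE then selects solutions according to their objective function values: the current solution $x_i$ is replaced by the generated solution $v_i$ if $v_i$ obtains a better fitness value. For a maximization problem, such as the one considered in this paper, the selection is as follows:

$$x_i(t+1) = \begin{cases} v_i, & f(v_i) > f(x_i) \\ x_i(t), & \text{otherwise} \end{cases} \tag{7}$$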
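The crossover of Equation (6) and the greedy selection of Equation (7) are sketched below for a maximization objective. The forced index `j_rand`, which guarantees that at least one component comes from the mutant vector, is standard in binomial DE crossover but is an assumption here, since the text only specifies the crossover probability $CR$; `CR = 0.9` is likewise an illustrative default.

```python
import random


def crossover(x, z, CR=0.9, rng=random):
    """Binomial crossover (Eq. 6): take z_j with probability CR, else x_j.
    The forced index j_rand (assumed) ensures at least one component of z."""
    j_rand = rng.randrange(len(x))
    return [zj if (rng.random() <= CR or j == j_rand) else xj
            for j, (xj, zj) in enumerate(zip(x, z))]


def select(x, v, fitness):
    """Greedy selection (Eq. 7) for maximization: keep v only if it is better."""
    return v if fitness(v) > fitness(x) else x


rng = random.Random(1)
x, z = [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]
v = crossover(x, z, CR=0.5, rng=rng)
print(v, select(x, v, fitness=sum))
```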

2.3. The Proposed DAOA

In this section, the procedure of the proposed Evolutionary Arithmetic Optimization Algorithm (DAOA) is presented as follows.

2.3.1. Initialization Phase

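As in AOA, the optimization procedure of DAOA begins with a set of $N_p$ random candidate solutions, as designated in matrix (8), where each row is one solution with $n$ decision variables (here, the threshold values):

$$X = \begin{bmatrix} x_{1,1} & \cdots & x_{1,j} & \cdots & x_{1,n} \\ x_{2,1} & \cdots & x_{2,j} & \cdots & x_{2,n} \\ \vdots & & \vdots & & \vdots \\ x_{N_p,1} & \cdots & x_{N_p,j} & \cdots & x_{N_p,n} \end{bmatrix} \tag{8}$$

In each iteration, the best solution found so far is taken as the best-obtained solution.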
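For the thresholding problem, each row of $X$ is a vector of threshold values. The sketch below builds such a random population under assumptions not stated in the text: thresholds are distinct integers in $[1, L-1]$, stored in ascending order, with $L = 256$ by default; `init_population` is a hypothetical name.

```python
import random


def init_population(pop_size, num_thresholds, gray_levels=256, seed=None):
    """Random initial matrix X: pop_size rows, each a sorted vector of
    distinct integer thresholds drawn from 1..L-1."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(1, gray_levels), num_thresholds))
            for _ in range(pop_size)]


X = init_population(25, 3, seed=1)
print(X[:3])
```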

2.3.2. Phases of the Proposed DAOA

In this section, the main details and procedures of the proposed Evolutionary Arithmetic Optimization Algorithm (DAOA) are given.

DAOA is introduced mainly to improve the convergence ability of the original AOA, the quality of its solutions, and its ability to avoid local optima. To this end, the DE technique is integrated into the conventional AOA to form DAOA: AOA performs the exploration search, and DE performs the exploitation search. This combination is intended to balance the two search strategies and to help the proposed method avoid local optima.

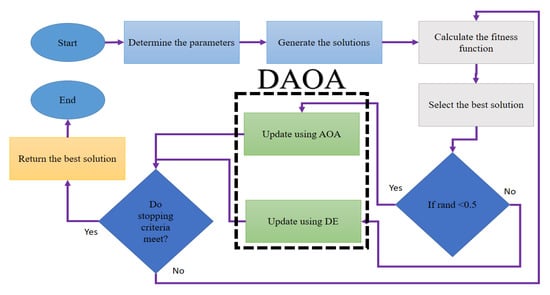

Figure 3 shows the flowchart of the proposed DAOA. The procedure (1) determines the values of the algorithms' parameters, (2) generates candidate solutions, (3) calculates their fitness values, (4) selects the best solution, (5) updates the solutions with AOA if a given condition is true and with DE otherwise, and (6) checks a stopping condition to decide whether to continue the optimization process. The pseudo-code of the DAOA algorithm is given in Algorithm 1.

Algorithm 1.

Pseudo-code of the DAOA algorithm.

Figure 3.

The flowchart of the proposed DAOA.
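The overall DAOA loop can be sketched as follows. This is a hypothetical illustration, not the authors' Algorithm 1: the text does not specify the switching condition in step (5), so the sketch assumes AOA exploration (Equation (2)) when a uniform random number exceeds MOA (Equation (1)) and a DE step (Equations (5) to (7)) otherwise, consistent with AOA exploring and DE exploiting. Scalar bounds, clipping, and the defaults (`moa_min = 0.2`, `moa_max = 0.9`, `F = 0.5`, `CR = 0.9`) are also assumptions. For segmentation, `objective` would be Kapur's entropy evaluated on rounded, sorted thresholds; a toy objective is used here so the example runs on its own.

```python
import random


def daoa(objective, dim, lb, ub, pop_size=25, max_iter=100,
         moa_min=0.2, moa_max=0.9, alpha=5.0, mu=0.5, F=0.5, CR=0.9, seed=None):
    """Hypothetical DAOA sketch: maximize `objective` over the box [lb, ub]^dim.
    Assumed switching rule: AOA exploration if r > MOA, otherwise one DE step."""
    rng = random.Random(seed)
    eps = 1e-12  # small constant in the division operator

    def clip(v):
        return min(max(v, lb), ub)

    # Initialization: random population (matrix X) and its fitness values
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    fit = [objective(x) for x in pop]
    b = max(range(pop_size), key=fit.__getitem__)
    best, best_fit = pop[b][:], fit[b]

    for t in range(1, max_iter + 1):
        moa = moa_min + t * (moa_max - moa_min) / max_iter          # MOA, Eq. (1)
        mop = 1.0 - t ** (1.0 / alpha) / max_iter ** (1.0 / alpha)  # MOP, Eq. (3)
        scale = (ub - lb) * mu + lb
        for i in range(pop_size):
            if rng.random() > moa:
                # AOA exploration around the best solution: D or M operator (Eq. 2)
                cand = [clip(bj / (mop + eps) * scale) if rng.random() < 0.5
                        else clip(bj * mop * scale) for bj in best]
            else:
                # DE step: DE/rand/1 mutation (Eq. 5) + binomial crossover (Eq. 6)
                r1, r2, r3 = rng.sample([k for k in range(pop_size) if k != i], 3)
                z = [pop[r1][j] + F * (pop[r2][j] - pop[r3][j]) for j in range(dim)]
                j_rand = rng.randrange(dim)
                cand = [clip(z[j]) if (rng.random() <= CR or j == j_rand) else pop[i][j]
                        for j in range(dim)]
            f_cand = objective(cand)
            if f_cand > fit[i]:  # greedy selection (Eq. 7)
                pop[i], fit[i] = cand, f_cand
                if f_cand > best_fit:
                    best, best_fit = cand[:], f_cand
    return best, best_fit


# Toy check: maximize -(x - 3)^2 - (y - 3)^2 on [0, 10]^2 (optimum 0 at (3, 3)).
sphere = lambda p: -sum((v - 3.0) ** 2 for v in p)
result = daoa(sphere, dim=2, lb=0.0, ub=10.0, seed=42)
print(result)
```

Greedy replacement keeps each solution's fitness non-decreasing, so the returned best fitness is never worse than that of the best initial solution.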

3. Definitions of the Multilevel Thresholding Image Segmentation Problems

In this section, we describe the multilevel thresholding problem. Let $I$ be a gray or color image that is to be segmented into $K+1$ classes. Segmenting $I$ into $K+1$ classes requires $K$ threshold values, $\{t_1, t_2, \ldots, t_K\}$, and the classes can be expressed as follows [1,7,49]:

$$\begin{aligned} C_0 &= \{ I_{ij} \mid 0 \le I_{ij} < t_1 \},\\ C_1 &= \{ I_{ij} \mid t_1 \le I_{ij} < t_2 \},\\ &\;\;\vdots\\ C_K &= \{ I_{ij} \mid t_K \le I_{ij} \le L-1 \}, \end{aligned} \tag{9}$$

where $L$ is the number of gray levels (so $L-1$ is the highest gray level), $C_k$ is the $k$-th class of image $I$, $t_k$ is the $k$-th threshold, and $I_{ij}$ is the gray level of the pixel at position $(i, j)$. Multilevel thresholding is then formulated in Equation (10) as a maximization problem whose goal is to find the optimal threshold values.

The $K$ optimal threshold values can be expressed as follows:

$$\{t_1^*, t_2^*, \ldots, t_K^*\} = \arg\max_{t_1, \ldots, t_K} F(t_1, t_2, \ldots, t_K) \tag{10}$$

where $F$ is the fitness (objective) function, which is Kapur's entropy in this paper.
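Equation (9) amounts to assigning each pixel the index of the interval its gray level falls into. A minimal sketch, assuming the image is a list of rows of integer gray levels and the thresholds are sorted in ascending order, uses binary search from Python's standard `bisect` module; `segment` is a hypothetical name.

```python
from bisect import bisect_right


def segment(image, thresholds):
    """Class index of every pixel per Eq. (9): class k holds gray levels in
    [t_k, t_{k+1}); a pixel equal to a threshold goes to the upper class."""
    return [[bisect_right(thresholds, px) for px in row] for row in image]


print(segment([[0, 50, 100], [150, 200, 255]], [100, 200]))
# [[0, 0, 1], [1, 2, 2]]
```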

3.1. Fitness Function (Kapur’s Entropy)

Consider a digital image $I$ with $N$ pixels and $L$ gray levels. Using $K$ thresholds, these $L$ gray levels are divided into $K+1$ classes, $C_0, C_1, \ldots, C_K$ [1].

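In the proposed DAOA, Kapur's entropy is used as the objective function for finding the optimal threshold values. For bi-level thresholding with a single threshold $t$, the objective function is given in Equation (11):

$$F(t) = H_0 + H_1, \quad H_0 = -\sum_{i=0}^{t-1} \frac{p_i}{\omega_0} \ln \frac{p_i}{\omega_0}, \quad H_1 = -\sum_{i=t}^{L-1} \frac{p_i}{\omega_1} \ln \frac{p_i}{\omega_1} \tag{11}$$

where $p_i = h_i / N$ is the probability of gray level $i$, $h_i$ is the number of pixels with gray level $i$, and $N$ is the total number of pixels in image $I$. $\omega_0 = \sum_{i=0}^{t-1} p_i$ and $\omega_1 = \sum_{i=t}^{L-1} p_i$ are the class probabilities, and $\omega_0 + \omega_1 = 1$.

For $K$ thresholds, the objective extends to the sum of the entropies of all $K+1$ classes, $F(t_1, \ldots, t_K) = \sum_{k=0}^{K} H_k$, where $H_k$ is computed as in Equation (11) over the gray levels of class $C_k$. The best solution is the one with the highest fitness value.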
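The multilevel Kapur objective can be computed from the gray-level histogram as sketched below, assuming `hist[i]` holds $h_i$, the thresholds are sorted integers, and natural logarithms are used. Empty classes ($\omega_k = 0$) and zero-probability gray levels are skipped, a common convention to avoid $\ln 0$; `kapur_entropy` is a hypothetical name.

```python
import math


def kapur_entropy(hist, thresholds):
    """Sum of class entropies H_0..H_K for sorted thresholds t_1..t_K."""
    n = sum(hist)
    p = [h / n for h in hist]                    # p_i = h_i / N
    edges = [0] + list(thresholds) + [len(hist)]
    total = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        w = sum(p[lo:hi])                        # class probability omega_k
        if w <= 0:
            continue                             # empty class contributes nothing
        total -= sum((pi / w) * math.log(pi / w) for pi in p[lo:hi] if pi > 0)
    return total


hist = [4, 1, 0, 3, 6, 2, 0, 4]  # hypothetical 8-level histogram
best_t = max(range(1, len(hist)), key=lambda t: kapur_entropy(hist, [t]))
print(best_t, kapur_entropy(hist, [best_t]))
```

The exhaustive search over `t` in the usage lines is feasible for a single threshold; for larger $K$, this is the search that DAOA replaces.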

3.2. Performance Measures

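We assess the performance of the proposed DAOA method using three measures: the fitness function value, the Structural Similarity Index (SSIM), and the Peak Signal-to-Noise Ratio (PSNR) [50,51]. SSIM and PSNR are computed as follows:

$$\mathrm{SSIM}(I, I_s) = \frac{(2\mu_I \mu_{I_s} + c_1)(2\sigma_{I,I_s} + c_2)}{(\mu_I^2 + \mu_{I_s}^2 + c_1)(\sigma_I^2 + \sigma_{I_s}^2 + c_2)} \tag{12}$$

where $\mu_I$ and $\mu_{I_s}$ are the mean intensities of the original image $I$ and the segmented image $I_s$, respectively, $\sigma_I^2$ and $\sigma_{I_s}^2$ are their variances, $\sigma_{I,I_s}$ is the covariance of $I$ and $I_s$, and the constants $c_1$ and $c_2$ are equal to 6.5025 and 58.5225, respectively (i.e., $(0.01 \times 255)^2$ and $(0.03 \times 255)^2$) [1].

$$\mathrm{PSNR} = 20 \log_{10}\left(\frac{255}{\mathrm{RMSE}}\right), \qquad \mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^{M}\sum_{j=1}^{N} \big(I(i,j) - I_s(i,j)\big)^2}{M \times N}} \tag{13}$$

where RMSE is the root-mean-squared error over all pixels, $M \times N$ is the size of the image, $I(i,j)$ is the gray value of the pixel at $(i,j)$ in the original image, and $I_s(i,j)$ is the gray value of the corresponding pixel in the segmented image.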
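Both measures can be sketched in plain Python as follows, assuming 8-bit images stored as equal-sized lists of rows. SSIM is often computed over local (e.g., Gaussian-weighted) windows and then averaged; this sketch instead evaluates Equation (12) once over the whole image with population (divide-by-$n$) statistics, which is an assumption.

```python
import math


def psnr(orig, seg, peak=255.0):
    """PSNR (Eq. 13): 20 * log10(peak / RMSE) over all M x N pixels."""
    diffs = [(a - b) ** 2 for ra, rb in zip(orig, seg) for a, b in zip(ra, rb)]
    rmse = math.sqrt(sum(diffs) / len(diffs))
    return math.inf if rmse == 0 else 20 * math.log10(peak / rmse)


def ssim_global(orig, seg, c1=6.5025, c2=58.5225):
    """SSIM (Eq. 12) evaluated once over the whole image (no sliding window)."""
    x = [v for row in orig for v in row]
    y = [v for row in seg for v in row]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


original = [[12, 40, 200], [90, 150, 255]]
segmented = [[0, 0, 200], [100, 100, 255]]  # hypothetical thresholded output
print(psnr(original, segmented), ssim_global(original, segmented))
```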

4. Experiments and Results

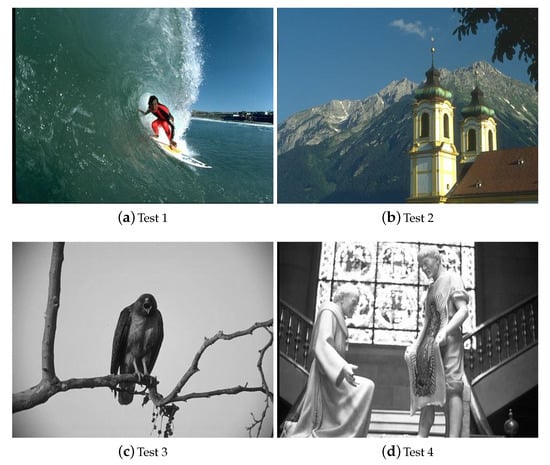

4.1. Benchmark Images

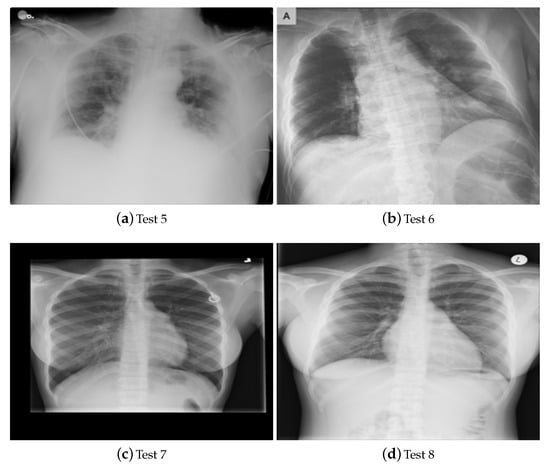

The benchmark images are presented in Figure 4 and Figure 5. Two types of images were used in the experiments: natural images (Figure 4) and medical CT images (Figure 5). Eight images were chosen: two color images from nature (i.e., Test 1 and Test 2), two gray images from nature (i.e., Test 3 and Test 4), two COVID-19 CT images (i.e., Test 5 and Test 6), and two normal CT images (i.e., Test 7 and Test 8). These benchmarks were taken from the Berkeley Segmentation Data Set and BIMCV-COVID19 [52].

Figure 4.

The natural benchmark images used in the experiments.

Figure 5.

The CT benchmark images used in the experiments.

4.2. Comparative Algorithms and Parameter Setting

The proposed DAOA is compared with seven well-known algorithms: Aquila Optimizer (AO) [30], Whale Optimization Algorithm (WOA) [53], Salp Swarm Algorithm (SSA) [54], Arithmetic Optimization Algorithm (AOA) [29], Particle Swarm Optimization (PSO) [55], Marine Predators Algorithm (MPA) [56], and Differential Evolution (DE) [57].

The parameters of these algorithms are set as in their original papers, and the values used are shown in Table 1. These sensitive parameters could be tuned in further investigations to show the effect of each parameter on the performance of the tested methods. All algorithms are implemented in MATLAB R2015a and run on an Intel Core i7 processor (1.80 GHz/2.30 GHz) with 16 GB of RAM. For a systematic comparison, the population size is set to 25 and the maximum number of iterations to 100. Each algorithm is run 30 times independently.
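The reporting protocol (30 independent runs per algorithm, summarized by the maximum, mean, minimum, and standard deviation) can be sketched as below. `run_once` is a hypothetical callable that performs one optimization run with a given seed (e.g., 25 solutions and 100 iterations) and returns a score such as PSNR; `fake_run` is a stand-in that produces synthetic placeholder numbers, not results from this paper. The text does not state whether the sample or population standard deviation was used, so the sample version (`statistics.stdev`) is an assumption.

```python
import random
import statistics


def summarize_runs(run_once, runs=30):
    """Run a stochastic optimizer `runs` times (one seed per run) and return
    the statistics reported in the result tables."""
    scores = [run_once(seed) for seed in range(runs)]
    return {
        "max": max(scores),
        "mean": statistics.mean(scores),
        "min": min(scores),
        "std": statistics.stdev(scores),  # sample standard deviation (assumed)
    }


def fake_run(seed):
    """Hypothetical placeholder for one run; returns a synthetic PSNR-like score."""
    return 20.0 + random.Random(seed).random()


summary = summarize_runs(fake_run)
print(summary)
```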

Table 1.

Parameter settings.

4.3. Performance Evaluation

This section compares the proposed DAOA with the other methods for multilevel thresholding segmentation on eight different images. The following tables report the maximum, mean, minimum, and standard deviation of the PSNR and SSIM values for each test case. In addition, the sum of ranks, the mean rank, and the final ranking obtained with the Friedman ranking test are given to assess whether the improvement of the proposed method is significant [58,59].
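The mean ranks used by the Friedman test can be computed as sketched below: within each test case, algorithms are ranked by score (rank 1 = highest PSNR or SSIM), tied algorithms receive the average of their ranks, and the ranks are averaged across cases; the final ranking orders algorithms by mean rank. The scores in the usage example are hypothetical. Computing the Friedman test statistic and p-value is a separate step (for example, with `scipy.stats.friedmanchisquare`).

```python
def friedman_mean_ranks(scores):
    """scores[case][alg] -> mean rank per algorithm (1 = best, higher is better);
    ties share the average of the tied ranks."""
    algs = list(next(iter(scores.values())).keys())
    totals = {a: 0.0 for a in algs}
    for case in scores.values():
        ordered = sorted(algs, key=lambda a: case[a], reverse=True)
        i = 0
        while i < len(ordered):
            j = i
            while j + 1 < len(ordered) and case[ordered[j + 1]] == case[ordered[i]]:
                j += 1
            avg_rank = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
            for a in ordered[i:j + 1]:
                totals[a] += avg_rank
            i = j + 1
    return {a: totals[a] / len(scores) for a in algs}


scores = {  # hypothetical scores, not from the paper's tables
    "case 1": {"A": 20.1, "B": 19.8, "C": 19.8},
    "case 2": {"A": 22.0, "B": 22.5, "C": 21.0},
}
ranks = friedman_mean_ranks(scores)
final = sorted(ranks, key=ranks.get)
print(ranks, final)
# {'A': 1.5, 'B': 1.75, 'C': 2.75} ['A', 'B', 'C']
```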

The PSNR and SSIM results of Test 1 are given in Table 2 and Table 3. In terms of PSNR, the proposed DAOA obtained the best results in almost all the test cases. For threshold 2, DAOA obtained the best results and ranked first, followed by AOA, SSA, PSO, WOA, MPA, AO, and, finally, DE. For threshold 6, DAOA again ranked first, followed by WOA, PSO, SSA, AOA, AO, SSA, MPA, and DE. Overall, the proposed method obtained the first ranking, followed by AOA, PSO, SSA, WOA, AO, MPA, and DE. These results demonstrate the ability of the proposed DAOA to solve the given problems effectively.

Table 2.

The PSNR results of the test case 1.

Table 3.

The SSIM results of the test case 1.

For threshold 2 in Table 3, DAOA obtained the best SSIM results and ranked first, followed by PSO, SSA, DE, AOA, WOA, MPA, and, finally, AO. For threshold 3, DAOA also ranked first, followed by PSO, SSA, DE, AOA, MPA, WOA, and AO. Overall, the proposed DAOA method obtained the first ranking, followed by PSO, AOA, SSA, DE, WOA, AO, and MPA. These results confirm the effectiveness of the proposed DAOA on this image.

The PSNR and SSIM results of Test 2 are given in Table 4 and Table 5. In terms of PSNR, the proposed DAOA achieved the best results in almost all the test cases. For threshold 4, DAOA ranked first, followed by AO, MPA, AOA, SSA, DE, PSO, and, finally, WOA. For threshold 5, DAOA again ranked first, followed by AO, DE, PSO, WOA, AOA, SSA, and MPA. Overall, the proposed method obtained the first ranking, followed by AO, DE, SSA, PSO, MPA, WOA, and AOA. These results demonstrate the ability of the proposed DAOA to solve the given problems efficiently.

Table 4.

The PSNR results of the test case 2.

Table 5.

The SSIM results of the test case 2.

For threshold 4 in Table 5, DAOA obtained the best SSIM results and ranked first, followed by AO, AOA, MPA, SSA, DE, PSO, and, finally, WOA. For threshold 3, DAOA also ranked first, followed by WOA, DE, AO, PSO, SSA, MPA, and AOA. Overall, the proposed DAOA method obtained the first ranking, followed by AO, MPA, SSA, DE, PSO, WOA, and AOA. These results confirm the performance of the proposed DAOA on this image.

The PSNR and SSIM results of Test 3 are given in Table 6 and Table 7. In terms of PSNR, the proposed DAOA obtained promising results in almost all the test cases. For threshold 5, DAOA ranked first, followed by SSA, AO, PSO, AOA, MPA, and, finally, DE. For threshold 6, DAOA again ranked first, followed by AO, PSO, DE, WOA, AOA, SSA, and MPA. Overall, the proposed method obtained the first ranking, followed by WOA, DE, AO, AOA, MPA, PSO, and SSA. These results show that the proposed DAOA solves the given problems efficiently at different threshold levels.

Table 6.

The PSNR results of the test case 3.

Table 7.

The SSIM results of the test case 3.

For threshold 2 in Table 7, DAOA obtained the best SSIM results and ranked first, followed by MPA, WOA, DE, AO, SSA, AOA, and, finally, PSO. For threshold 5, DAOA also ranked first, followed by PSO, AOA, MPA, WOA, AO, PSO, and DE. Overall, the proposed DAOA method obtained the first ranking, followed by MPA, AO, SSA, AOA, WOA, PSO, and DE. These results confirm the performance of the proposed DAOA and indicate that it can find robust solutions to such problems.

The PSNR and SSIM results of Test 4 are given in Table 8 and Table 9. As shown in Table 8, the proposed DAOA obtained promising PSNR results in almost all the test cases, with the best results for two threshold values (i.e., 3 and 4 levels). For threshold 3, DAOA ranked first, followed by PSO, SSA, WOA, AOA, MPA, DE, and, finally, AO. For threshold 4, DAOA again ranked first, followed by WOA, SSA, AOA, MPA, AO, DE, and AOA. Overall, the proposed method obtained the first ranking, followed by WOA, PSO, SSA, MPA, AO, AOA, and DE. These results show that the proposed DAOA solves the given problems efficiently at different threshold levels.

Table 8.

The PSNR results of the test case 4.

Table 9.

The SSIM results of the test case 4.

Table 9 shows that the proposed DAOA method obtained better SSIM results for Test 4 in almost all the test cases, with the best results for two threshold values (i.e., 2 and 3 levels). For threshold 2, DAOA ranked first, followed by DE, AOA, WOA, MPA, PSO, SSA, and, finally, AOA. For threshold 3, DAOA also ranked first, followed by AOA, AO, PSO, WOA, MPA, SSA, and DE. Overall, the proposed DAOA method obtained the first ranking, followed by PSO, AO, WOA, AOA, MPA, SSA, and DE. These results confirm the performance of the proposed DAOA and indicate that it can find robust solutions to such problems.

Table 10 and Table 11 give the PSNR and SSIM results of Test 5. As shown in Table 10, the proposed DAOA yielded promising PSNR results in almost all test cases, with the best results for two threshold values (i.e., 2 and 5 levels). For threshold 2, DAOA ranked first, ahead of SSA, AOA, MPA, DE, AO, WOA, and PSO. For threshold 5, DAOA again ranked first, followed by AOA, MPA, AO, WOA, PSO, and DE. Overall, DAOA ranked first, followed by SSA, DE, AO, AOA, PSO, MPA, and WOA. These results show that the proposed DAOA solves the given problems efficiently and performs well at various threshold levels.

Table 10.

The PSNR results of the test case 5.

Table 11.

The SSIM results of the test case 5.

In terms of SSIM for Test 5, Table 11 shows that the proposed DAOA method obtained better results in almost all test cases, with the best results for two threshold values (i.e., 2 and 5 levels). For threshold 2, DAOA ranked first, ahead of SSA, AO, MPA, AOA, DE, PSO, and WOA. For threshold 5, DAOA again ranked first, followed by AO, WOA, SSA, AOA, DE, MPA, and PSO. Overall, the proposed DAOA method obtained the first ranking, followed by AO, SSA, WOA, PSO, MPA, DE, and AOA. These results confirm the performance of the proposed DAOA and indicate that it can find robust solutions to such problems.

Table 12 and Table 13 give the PSNR and SSIM results of Test 6. As shown in Table 12, the proposed DAOA yielded promising PSNR results in nearly all test cases, with the best results for two threshold values (i.e., 5 and 6 levels). For threshold 5, DE produced the best results and ranked first, ahead of DAOA, SSA, PSO, AOA, WOA, MPA, and AO. For threshold 6, DAOA ranked first, followed by SSA, WOA, AOA, MPA, DE, AO, and PSO. Overall, DAOA ranked first, followed by AOA, DE, PSO, SSA, WOA, AO, and MPA. These results show that the proposed DAOA solves the given problems efficiently and performs well at various threshold levels.

Table 12.

The PSNR results of the test case 6.

Table 13.

The SSIM results of the test case 6.

In terms of SSIM for Test 6, Table 13 shows that the proposed DAOA method obtained better results in almost all test cases, although it produced the best results for only one threshold value (i.e., 3 levels). For threshold 3, DAOA ranked first, followed by AOA, AO, PSO, MPA, SSA, WOA, and DE. Overall, the proposed DAOA method obtained the first ranking, followed by AOA, SSA, MPA, AO, WOA, PSO, and DE. These results confirm the performance of the proposed DAOA and indicate that it can find robust solutions to such problems.

The PSNR and SSIM results of Test 7 are given in Table 14 and Table 15. As shown in Table 14, the proposed DAOA obtained promising PSNR results in almost all the test cases, with the best results for three threshold values (i.e., 3, 4, and 6 levels). For threshold 3, DAOA ranked first, followed by PSO, WOA, MPA, PSO, SSA, AOA, and, finally, DE. For threshold 6, DAOA again ranked first, followed by MPA, AO, AOA, SSA, PSO, DE, and WOA. Overall, the proposed method obtained the first ranking, followed by MPA, AO, AOA, SSA, PSO, WOA, and DE. These results show that the proposed DAOA solves the given problems efficiently and performs well at various threshold levels.

Table 14.

The PSNR results for Test 7.

Table 15.

The SSIM results for Test 7.

Table 15 shows that the proposed DAOA obtained better SSIM results for Test 7 in almost all test cases, with the best results for two threshold values (i.e., 2 and 4 levels). For threshold 2, the proposed DAOA ranked first among all comparative methods, followed by MPA, PSO, AOA, AO, WOA, SSA, and finally DE. For threshold 4, DAOA ranked first, followed by PSO, SSA, AO, AOA, WOA, DE, and MPA. Overall, the proposed DAOA ranked first, followed by PSO, AOA, AO, MPA, WOA, SSA, and DE. These results confirm the performance of the proposed DAOA and its ability to find reliable solutions to such problems.

Table 16 and Table 17 give the PSNR and SSIM results of Test 8. As shown in Table 16, the proposed DAOA obtained competitive PSNR results in almost all test cases, with the best results for four threshold values (i.e., 2, 3, 4, and 5 levels). For threshold 2, the proposed DAOA ranked first among all comparative methods, followed by DE, AOA, PSO, SSA, AO, MPA, and finally WOA. For threshold 5, DAOA ranked first, followed by AO, DE, PSO, WOA, AOA, MPA, and SSA. Overall, the proposed method ranked first, followed by AO, AOA, PSO, DE, WOA, MPA, and SSA. These results demonstrate that the proposed DAOA solves the given problems efficiently and can operate at various threshold levels.

Table 16.

The PSNR results for Test 8.

Table 17.

The SSIM results for Test 8.

Table 17 shows that the proposed DAOA obtained better SSIM results for Test 8 in almost all test cases, with the best results for two threshold values (i.e., 3 and 5 levels). For threshold 3, the proposed DAOA ranked first among all comparative methods, followed by AOA, SSA, DE, WOA, AO, PSO, and finally MPA. For threshold 5, DAOA ranked first, followed by AO, DE, SSA, PSO, AOA, WOA, and MPA. Overall, the proposed DAOA ranked first, followed by AOA, AO, WOA, SSA, PSO, DE, and MPA. These results confirm the performance of the proposed DAOA and its ability to find reliable solutions to such problems. We also applied the Wilcoxon signed-rank test to assess the significance of the improvement for Test 8 reported in Table 16 and Table 17; the results indicate that the proposed method is more effective than the other methods.
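The significance check above relies on a Wilcoxon test. The sketch below implements the two-sided Wilcoxon signed-rank test for paired samples with a normal approximation and a tie correction in the variance. The paper does not state which paired samples were compared (for example, per-run fitness values of DAOA against one competitor on the same image and threshold level), so the inputs are an assumption, and `wilcoxon_signed_rank` is a hypothetical name.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    Returns (W_plus, W_minus, p_value). Zero differences are discarded and tied
    absolute differences receive average ranks.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in order[i : j + 1]:
            ranks[k] = (i + j) / 2 + 1  # average rank of the tie group
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, w_minus, p_value
```

Because the normal approximation is coarse for small samples, an exact test such as `scipy.stats.wilcoxon` is preferable when only a few paired runs are available.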

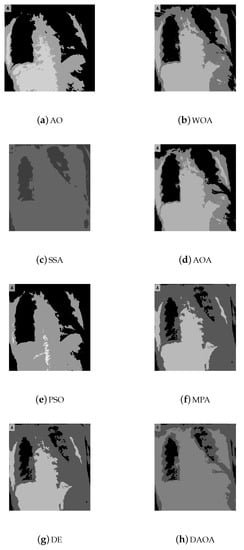

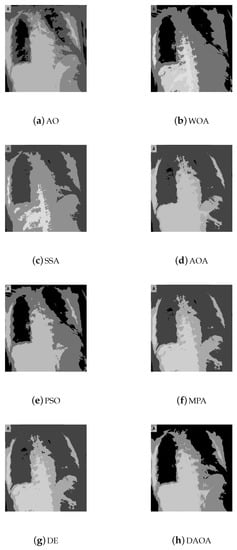

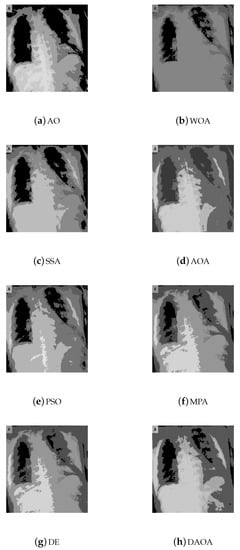

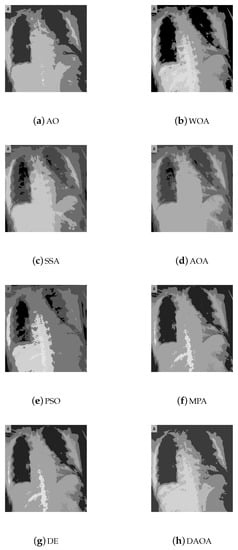

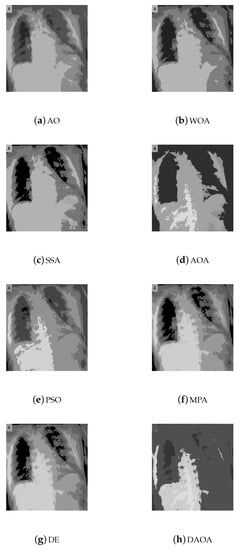

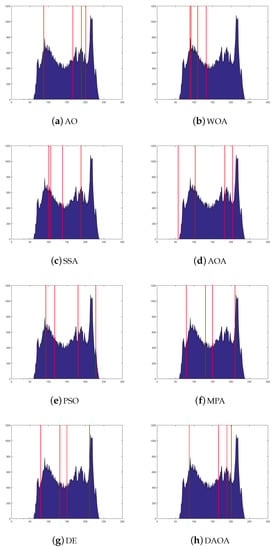

Figure 6, Figure 7, Figure 8, Figure 9 and Figure 10 show the segmented images of Test 8 produced by the proposed DAOA and the other comparative methods when the threshold values are 2, 3, 4, 5, and 6, respectively. These figures show that the proposed DAOA produced good segmentations of the CT COVID-19 medical image under different thresholds. They also indicate that the quality of the segmented images improves as the threshold value increases.
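Producing a segmented image from a threshold vector amounts to assigning each pixel to the class delimited by the thresholds. In this sketch, assumed for illustration, a pixel with intensity p joins class c when exactly c thresholds are less than or equal to p, and every pixel is then painted with the mean intensity of its class. The paper does not specify its own rendering rule, and `apply_thresholds` is a hypothetical helper.

```python
from bisect import bisect_right


def apply_thresholds(image, thresholds):
    """Segment a grayscale image (list of rows) with thresholds t1 < ... < tk.

    Class 0 holds pixels p < t1, class c holds t_c <= p < t_(c+1), and class k
    holds p >= tk. Each pixel is replaced by the rounded mean of its class.
    """
    ts = sorted(thresholds)
    labels = [[bisect_right(ts, p) for p in row] for row in image]
    sums = [0] * (len(ts) + 1)
    counts = [0] * (len(ts) + 1)
    for row, lab_row in zip(image, labels):
        for p, c in zip(row, lab_row):
            sums[c] += p
            counts[c] += 1
    # Empty classes never appear in the output, so their placeholder value is irrelevant.
    means = [round(s / n) if n else 0 for s, n in zip(sums, counts)]
    return [[means[c] for c in lab_row] for lab_row in labels]
```

For example, `apply_thresholds([[10, 20, 100, 110, 200, 210]], [50, 150])` yields three classes painted 15, 105, and 205.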

Figure 6.

The segmented image (Test 8) by the comparative methods when the threshold value is 2.

Figure 7.

The segmented image (Test 8) by the comparative methods when the threshold value is 3.

Figure 8.

The segmented image (Test 8) by the comparative methods when the threshold value is 4.

Figure 9.

The segmented image (Test 8) by the comparative methods when the threshold value is 5.

Figure 10.

The segmented image (Test 8) by the comparative methods when the threshold value is 6.

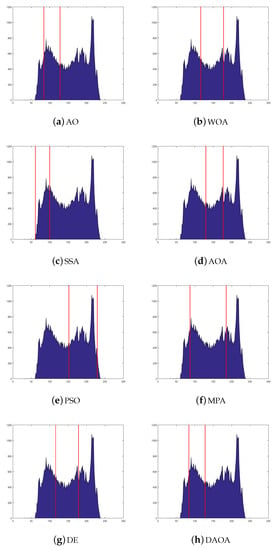

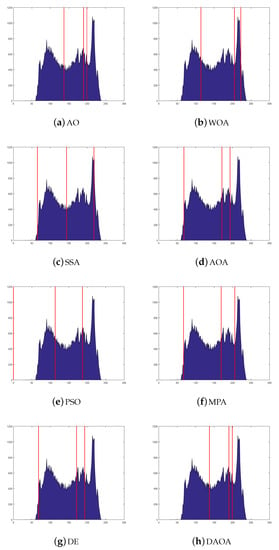

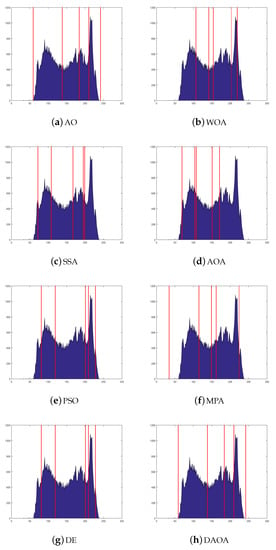

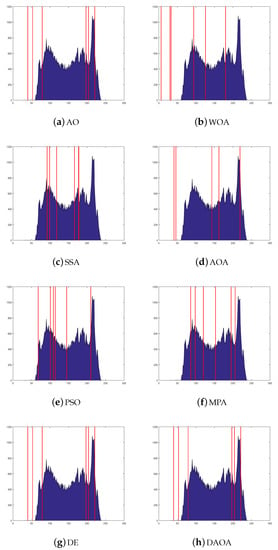

Figure 11, Figure 12, Figure 13, Figure 14 and Figure 15 show the histograms of the Test 8 image together with the best threshold values obtained by the comparative methods for 2, 3, 4, 5, and 6 thresholds, respectively. The X and Y axes present the threshold values and Kapur measure values, respectively. The histogram classes are created uniformly, even in complex cases such as this image, which indicates that the proposed method consistently finds the same threshold values. The complexity differs from case to case because of the various peaks in the pixel distribution, which can produce multiple classes and make the selection of the optimal thresholds more difficult.
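The thresholds in these histograms are selected with Kapur's measure as the fitness. A minimal sketch of Kapur's entropy objective is shown below, assuming integer thresholds t1 < ... < tk that split the gray levels into the classes [0, t1), [t1, t2), ..., [tk, L); the function name is hypothetical. The exhaustive search in the usage lines is only practical for one or two thresholds, which is why metaheuristics such as DAOA are used for larger k.

```python
import math


def kapur_entropy(hist, thresholds):
    """Kapur's entropy (to be maximised) of a gray-level histogram split by thresholds.

    hist[i] is the pixel count of gray level i. The score is the sum, over classes,
    of the Shannon entropy of the within-class normalised probabilities.
    """
    total = sum(hist)
    probs = [h / total for h in hist]
    edges = [0] + sorted(thresholds) + [len(hist)]
    score = 0.0
    for lo, hi in zip(edges, edges[1:]):
        omega = sum(probs[lo:hi])  # class probability
        if omega == 0:
            continue  # an empty class contributes nothing
        score -= sum(p / omega * math.log(p / omega) for p in probs[lo:hi] if p > 0)
    return score


hist = [5, 5, 0, 0, 5, 5]  # toy bimodal histogram with six gray levels
best_t = max(range(1, len(hist)), key=lambda t: kapur_entropy(hist, [t]))
```

In the toy histogram, any single threshold placed inside the empty valley (levels 2 to 4) gives the same maximal score of 2 ln 2.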

Figure 11.

The histogram image (Test 8) by the comparative methods when the threshold value is 2.

Figure 12.

The histogram image (Test 8) by the comparative methods when the threshold value is 3.

Figure 13.

The histogram image (Test 8) by the comparative methods when the threshold value is 4.

Figure 14.

The histogram image (Test 8) by the comparative methods when the threshold value is 5.

Figure 15.

The histogram image (Test 8) by the comparative methods when the threshold value is 6.

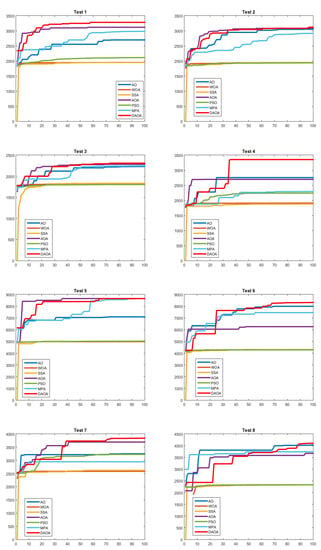

Figure 16 shows the convergence curves of the proposed DAOA and the comparative optimization algorithms for Test 8 when the threshold value is 6; in this case, the proposed DAOA performs better than all the other optimization methods. For almost all the test images, the proposed DAOA achieved faster convergence and more reliable accuracy, reflected in a remarkably smooth convergence curve. The smooth convergence of the proposed method suggests that DAOA is able to avoid local optima, a common problem of optimization algorithms. Overall, the proposed DAOA reached the best solutions in almost all the tested cases compared to the other methods, as illustrated for Test 8 in Figure 16.
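Convergence curves such as the one in Figure 16 usually plot, for each iteration, the best fitness found so far. Assuming that convention (the paper does not describe how its curves were produced), the curve can be derived from the per-iteration best values as in the sketch below; the helper names are hypothetical. Kapur's entropy is maximised, hence `maximize=True` by default.

```python
from itertools import accumulate


def convergence_curve(iteration_best, maximize=True):
    """Running best-so-far fitness, the quantity usually plotted as a convergence curve."""
    pick = max if maximize else min
    return list(accumulate(iteration_best, pick))


def first_hit(curve):
    """0-based iteration at which the curve first reaches its final value."""
    return curve.index(curve[-1])


curve = convergence_curve([1.0, 3.0, 2.0, 5.0, 4.0])  # per-iteration best values
```

Comparing `first_hit` across methods gives a simple numeric counterpart to reading convergence speed off the plot.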

Figure 16.

The convergence behavior of the comparative methods in solving Test 8 when the threshold value is 6.

5. Conclusions and Future Works

Multilevel thresholding is one of the most crucial aspects of image segmentation. However, its computational complexity increases rapidly as the number of thresholds grows. To address this weakness, this paper proposes a new multilevel thresholding approach based on an improved evolutionary optimization method.
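To make this growth concrete: for an 8-bit image, an exhaustive search must examine every increasing threshold vector 1 ≤ t1 < ... < tk ≤ 255, i.e., C(255, k) candidates, each requiring one objective evaluation. The sketch below, assuming 256 gray levels and integer thresholds, counts them with Python's `math.comb`; `candidate_sets` is a hypothetical name.

```python
from math import comb


def candidate_sets(k, levels=256):
    """Number of distinct threshold vectors 1 <= t1 < ... < tk <= levels - 1."""
    return comb(levels - 1, k)


for k in range(2, 7):
    print(k, candidate_sets(k))
```

The count grows from 32,385 candidate sets for k = 2 to 2,731,135 for k = 3 and about 3.6 × 10^11 for k = 6.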

The Arithmetic Optimization Algorithm (AOA) is a recently proposed technique for solving complex optimization problems. This paper proposes an enhanced version of AOA, called DAOA, to solve multilevel thresholding image segmentation problems. DAOA combines the conventional AOA with the Differential Evolution (DE) technique. Its main aim is to improve the local search of AOA and to balance the two search behaviors, exploration and exploitation.
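The two ingredients of DAOA can be sketched as follows. The AOA step follows the published AOA update rules: the accelerated function MOA and the probability MOP (α = 5, μ = 0.499) decide, per coordinate, between exploration by division or multiplication and exploitation by subtraction or addition around the best solution. How DAOA actually couples DE with AOA is not described in this section, so the DE/rand/1/bin step with greedy selection after each AOA sweep, the minimisation setting, the clipping to bounds, and all function and parameter names are assumptions; this is a hypothetical illustration, not the authors' DAOA.

```python
import random


def sphere(x):
    """Toy objective used only to exercise the sketch."""
    return sum(v * v for v in x)


def aoa_de_minimize(f, lb, ub, dim, pop=20, iters=100, seed=0, alpha=5.0, mu=0.499,
                    moa_min=0.2, moa_max=1.0, F=0.5, CR=0.9):
    """Hypothetical AOA + DE hybrid (minimisation); illustrative only."""
    if pop < 4:
        raise ValueError("DE/rand/1 needs at least 4 agents")
    rng = random.Random(seed)
    eps = 1e-12

    def clip(v):
        return min(max(v, lb), ub)

    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop)]
    fit = [f(x) for x in X]
    k = min(range(pop), key=fit.__getitem__)
    best, best_f = X[k][:], fit[k]
    scale = (ub - lb) * mu + lb  # the (UB - LB) * mu + LB term of AOA
    for t in range(1, iters + 1):
        moa = moa_min + t * (moa_max - moa_min) / iters  # math optimizer accelerated
        mop = 1 - t ** (1 / alpha) / iters ** (1 / alpha)  # math optimizer probability
        # AOA phase: every agent is rebuilt around the best solution found so far.
        for i in range(pop):
            new = []
            for j in range(dim):
                r1, r2, r3 = rng.random(), rng.random(), rng.random()
                if r1 > moa:  # exploration: division or multiplication
                    v = best[j] / (mop + eps) * scale if r2 < 0.5 else best[j] * mop * scale
                else:  # exploitation: subtraction or addition
                    v = best[j] - mop * scale if r3 < 0.5 else best[j] + mop * scale
                new.append(clip(v))
            X[i], fit[i] = new, f(new)
        # DE phase (assumed coupling): DE/rand/1/bin with greedy one-to-one selection.
        for i in range(pop):
            a, b, c = rng.sample([j for j in range(pop) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated coordinate
            trial = [
                clip(X[a][j] + F * (X[b][j] - X[c][j]))
                if rng.random() < CR or j == j_rand else X[i][j]
                for j in range(dim)
            ]
            f_trial = f(trial)
            if f_trial <= fit[i]:
                X[i], fit[i] = trial, f_trial
        k = min(range(pop), key=fit.__getitem__)
        if fit[k] < best_f:
            best, best_f = X[k][:], fit[k]
    return best, best_f


best, best_f = aoa_de_minimize(sphere, -10.0, 10.0, dim=5, seed=1)
```

To apply such a sketch to thresholding, one would minimise the negative Kapur entropy over sorted, rounded threshold vectors.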

The proposed DAOA method was applied to the multilevel thresholding problem, using Kapur’s measure between class variance functions as the fitness function. DAOA was evaluated on eight standard test images from two groups: nature images and CT medical images (i.e., COVID-19). The Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index Measure (SSIM) were used to assess the quality of the segmented images. The efficiency of DAOA was compared with that of other multilevel thresholding methods, including the Aquila Optimizer (AO), Whale Optimization Algorithm (WOA), Salp Swarm Algorithm (SSA), Arithmetic Optimization Algorithm (AOA), Particle Swarm Optimization (PSO), Marine Predators Algorithm (MPA), and Differential Evolution (DE). The results were reported for several threshold values (i.e., 2, 3, 4, 5, and 6). According to the experimental results, the proposed DAOA produced higher-quality solutions than the other approaches and achieved better results in almost all the tested cases.

In future work, other fitness functions, evaluation measures, and benchmark images can be used. The conventional Arithmetic Optimization Algorithm can also be further improved with other optimization operators. In addition, the proposed DAOA method can be applied to other problems, such as text clustering, feature selection, photovoltaic parameter estimation, task scheduling in fog and cloud computing, appliance management in smart homes, advanced benchmark functions, text classification, text summarization, data clustering, engineering design problems, industrial problems, image construction, short-term wind speed forecasting, fuel cell modeling, damage identification, software vulnerability prediction, knapsack problems, and others.

Author Contributions

L.A.: data curation, formal analysis, investigation, methodology, resources, software, supervision, validation, visualization, writing—original draft, and writing—review and editing. A.D.: formal analysis, investigation, supervision, writing—original draft, and writing—review and editing. P.S.: investigation, supervision, and writing—review and editing. A.H.G.: investigation, supervision, and writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Abd El Aziz, M.; Ewees, A.A.; Hassanien, A.E. Whale optimization algorithm and moth-flame optimization for multilevel thresholding image segmentation. Expert Syst. Appl. 2017, 83, 242–256.

- Shubham, S.; Bhandari, A.K. A generalized Masi entropy based efficient multilevel thresholding method for color image segmentation. Multimed. Tools Appl. 2019, 78, 17197–17238.

- Abd Elaziz, M.; Lu, S. Many-objectives multilevel thresholding image segmentation using knee evolutionary algorithm. Expert Syst. Appl. 2019, 125, 305–316.

- Pare, S.; Bhandari, A.K.; Kumar, A.; Singh, G.K. An optimal color image multilevel thresholding technique using grey-level co-occurrence matrix. Expert Syst. Appl. 2017, 87, 335–362.

- Bao, X.; Jia, H.; Lang, C. A novel hybrid harris hawks optimization for color image multilevel thresholding segmentation. IEEE Access 2019, 7, 76529–76546.

- Abd Elaziz, M.; Ewees, A.A.; Oliva, D. Hyper-heuristic method for multilevel thresholding image segmentation. Expert Syst. Appl. 2020, 146, 113201.

- Houssein, E.H.; Helmy, B.E.d.; Oliva, D.; Elngar, A.A.; Shaban, H. A novel Black Widow Optimization algorithm for multilevel thresholding image segmentation. Expert Syst. Appl. 2021, 167, 114159.

- Gill, H.S.; Khehra, B.S.; Singh, A.; Kaur, L. Teaching-learning-based optimization algorithm to minimize cross entropy for Selecting multilevel threshold values. Egypt. Inform. J. 2019, 20, 11–25.

- Tan, Z.; Zhang, D. A fuzzy adaptive gravitational search algorithm for two-dimensional multilevel thresholding image segmentation. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 4983–4994.

- Yousri, D.; Abd Elaziz, M.; Abualigah, L.; Oliva, D.; Al-Qaness, M.A.; Ewees, A.A. COVID-19 X-ray images classification based on enhanced fractional-order cuckoo search optimizer using heavy-tailed distributions. Appl. Soft Comput. 2021, 101, 107052.

- Srikanth, R.; Bikshalu, K. Multilevel thresholding image segmentation based on energy curve with harmony Search Algorithm. Ain Shams Eng. J. 2021, 12, 1–20.

- Duan, L.; Yang, S.; Zhang, D. Multilevel thresholding using an improved cuckoo search algorithm for image segmentation. J. Supercomput. 2021, 77, 6734–6753.

- Jia, H.; Lang, C.; Oliva, D.; Song, W.; Peng, X. Hybrid grasshopper optimization algorithm and differential evolution for multilevel satellite image segmentation. Remote Sens. 2019, 11, 1134.

- Khairuzzaman, A.K.M.; Chaudhury, S. Multilevel thresholding using grey wolf optimizer for image segmentation. Expert Syst. Appl. 2017, 86, 64–76.

- He, L.; Huang, S. Modified firefly algorithm based multilevel thresholding for color image segmentation. Neurocomputing 2017, 240, 152–174.

- Li, Y.; Bai, X.; Jiao, L.; Xue, Y. Partitioned-cooperative quantum-behaved particle swarm optimization based on multilevel thresholding applied to medical image segmentation. Appl. Soft Comput. 2017, 56, 345–356.

- Manic, K.S.; Priya, R.K.; Rajinikanth, V. Image multithresholding based on Kapur/Tsallis entropy and firefly algorithm. Indian J. Sci. Technol. 2016, 9, 89949.

- Bhandari, A.K.; Kumar, A.; Singh, G.K. Modified artificial bee colony based computationally efficient multilevel thresholding for satellite image segmentation using Kapur’s, Otsu and Tsallis functions. Expert Syst. Appl. 2015, 42, 1573–1601.

- Liang, H.; Jia, H.; Xing, Z.; Ma, J.; Peng, X. Modified grasshopper algorithm-based multilevel thresholding for color image segmentation. IEEE Access 2019, 7, 11258–11295.

- Abualigah, L. Group search optimizer: A nature-inspired meta-heuristic optimization algorithm with its results, variants, and applications. Neural Comput. Appl. 2020, 33, 2949–2972.

- Alsalibi, B.; Abualigah, L.; Khader, A.T. A novel bat algorithm with dynamic membrane structure for optimization problems. Appl. Intell. 2021, 51, 1992–2017.

- Ewees, A.A.; Abualigah, L.; Yousri, D.; Algamal, Z.Y.; Al-qaness, M.A.; Ibrahim, R.A.; Abd Elaziz, M. Improved Slime Mould Algorithm based on Firefly Algorithm for feature selection: A case study on QSAR model. Eng. Comput. 2021, 1–15.

- Şahin, C.B.; Dinler, Ö.B.; Abualigah, L. Prediction of software vulnerability based deep symbiotic genetic algorithms: Phenotyping of dominant-features. Appl. Intell. 2021, 1–17.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Safaldin, M.; Otair, M.; Abualigah, L. Improved binary gray wolf optimizer and SVM for intrusion detection system in wireless sensor networks. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 1559–1576.

- Alshinwan, M.; Abualigah, L.; Shehab, M.; Abd Elaziz, M.; Khasawneh, A.M.; Alabool, H.; Al Hamad, H. Dragonfly algorithm: A comprehensive survey of its results, variants, and applications. Multimed. Tools Appl. 2021, 80, 14979–15016.

- Shehab, M.; Abualigah, L.; Al Hamad, H.; Alabool, H.; Alshinwan, M.; Khasawneh, A.M. Moth–flame optimization algorithm: Variants and applications. Neural Comput. Appl. 2020, 32, 9859–9884.

- Al-Qaness, M.A.; Ewees, A.A.; Fan, H.; Abualigah, L.; Abd Elaziz, M. Marine predators algorithm for forecasting confirmed cases of COVID-19 in Italy, USA, Iran and Korea. Int. J. Environ. Res. Public Health 2020, 17, 3520.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Gandomi, A.H.; Alavi, A.H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 4831–4845.

- Jouhari, H.; Lei, D.; Al-qaness, M.A.; Elaziz, M.A.; Damaševičius, R.; Korytkowski, M.; Ewees, A.A. Modified Harris Hawks Optimizer for Solving Machine Scheduling Problems. Symmetry 2020, 12, 1460.

- Połap, D.; Woźniak, M. Red fox optimization algorithm. Expert Syst. Appl. 2021, 166, 114107.

- Mernik, M.; Liu, S.H.; Karaboga, D.; Črepinšek, M. On clarifying misconceptions when comparing variants of the artificial bee colony algorithm by offering a new implementation. Inf. Sci. 2015, 291, 115–127.

- Sahlol, A.T.; Abd Elaziz, M.; Tariq Jamal, A.; Damaševičius, R.; Farouk Hassan, O. A novel method for detection of tuberculosis in chest radiographs using artificial ecosystem-based optimisation of deep neural network features. Symmetry 2020, 12, 1146.

- Abualigah, L.; Diabat, A. Advances in sine cosine algorithm: A comprehensive survey. Artif. Intell. Rev. 2021, 54, 2567–2608.

- Abualigah, L.; Diabat, A. A comprehensive survey of the Grasshopper optimization algorithm: Results, variants, and applications. Neural Comput. Appl. 2020, 32, 15533–15556.

- Tuba, E.; Alihodzic, A.; Tuba, M. Multilevel image thresholding using elephant herding optimization algorithm. In Proceedings of the 2017 14th International Conference on Engineering of Modern Electric Systems (EMES), Oradea, Romania, 1–2 June 2017; pp. 240–243.

- Sahlol, A.T.; Yousri, D.; Ewees, A.A.; Al-Qaness, M.A.; Damasevicius, R.; Abd Elaziz, M. COVID-19 image classification using deep features and fractional-order marine predators algorithm. Sci. Rep. 2020, 10, 1–15.

- Wang, X.; Pan, J.S.; Chu, S.C. A parallel multi-verse optimizer for application in multilevel image segmentation. IEEE Access 2020, 8, 32018–32030.

- Gao, Y.; Li, X.; Dong, M.; Li, H.P. An enhanced artificial bee colony optimizer and its application to multi-level threshold image segmentation. J. Cent. South Univ. 2018, 25, 107–120.

- Resma, K.B.; Nair, M.S. Multilevel thresholding for image segmentation using Krill Herd Optimization algorithm. J. King Saud. Univ.-Comput. Inf. Sci. 2018, 33, 528–541.

- Abd Elaziz, M.; Yousri, D.; Al-qaness, M.A.; AbdelAty, A.M.; Radwan, A.G.; Ewees, A.A. A Grunwald–Letnikov based Manta ray foraging optimizer for global optimization and image segmentation. Eng. Appl. Artif. Intell. 2021, 98, 104105.

- Sörensen, K. Metaheuristics—The metaphor exposed. Int. Trans. Oper. Res. 2015, 22, 3–18.

- García-Martínez, C.; Gutiérrez, P.D.; Molina, D.; Lozano, M.; Herrera, F. Since CEC 2005 competition on real-parameter optimisation: A decade of research, progress and comparative analysis’s weakness. Soft Comput. 2017, 21, 5573–5583.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Tanabe, R.; Fukunaga, A. Success-history based parameter adaptation for differential evolution. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 71–78.

- Črepinšek, M.; Liu, S.H.; Mernik, M. Replication and comparison of computational experiments in applied evolutionary computing: Common pitfalls and guidelines to avoid them. Appl. Soft Comput. 2014, 19, 161–170.

- Maitra, M.; Chatterjee, A. A hybrid cooperative–comprehensive learning based PSO algorithm for image segmentation using multilevel thresholding. Expert Syst. Appl. 2008, 34, 1341–1350.

- Yin, P.Y. Multilevel minimum cross entropy threshold selection based on particle swarm optimization. Appl. Math. Comput. 2007, 184, 503–513.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sumari, P.; Syed, S.J.; Abualigah, L. A Novel Deep Learning Pipeline Architecture based on CNN to Detect Covid-19 in Chest X-ray Images. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 2001–2011.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the MHS’95. Proceedings of the Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 1995; pp. 39–43.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Price, K.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- Abualigah, L.M.Q. Feature Selection and Enhanced Krill Herd Algorithm for Text Document Clustering; Springer: Berlin/Heidelberg, Germany, 2019.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).