Abstract

High-precision control often involves many control parameters that are interrelated and difficult to tune directly. Because the control effect of a system is hard to express as a mathematical formula, it is also hard to optimize with intelligent algorithms. To solve this problem, we propose an improved sinusoidal gray wolf optimization algorithm (ISGWO). In this algorithm, a particle boundary-crossing handling mechanism based on the idea of symmetry is introduced to retain as much of the position information of the optimal individual as possible and to improve the search accuracy of the algorithm. In addition, a differential cross-perturbation strategy is adopted to help the algorithm jump out of local optima in time, which enhances the exploitation capability of ISGWO. Meanwhile, the position-update formula based on the improved sinusoidal algorithm better balances the exploitation and exploration of ISGWO. The ISGWO algorithm is compared with three improved gray wolf algorithms on the CEC2017 test set as well as on a synchronization controller. The experimental results show that the ISGWO algorithm has better selectivity, speed and robustness.

1. Introduction

Controller parameter tuning [1] is a major challenge in system control, following controller design. Usually, the designer relies on experience or a few numerical trials to determine appropriate parameters. In fact, some adjustable parameters in the controller have a great influence on the final control effect, but their optimal values are difficult to find analytically. Moreover, when multiple parameters affect a system simultaneously, a direct solution is almost impossible. Therefore, it is necessary to design an optimization algorithm that is both fast and accurate in order to find the optimal parameters for a control system that changes in real time.

Compared with other optimization problems, the optimization objective of a control system is difficult to convert directly into a mathematical expression. Therefore, borrowing from the concept of optimal control, dynamic characteristics such as the overshoot and response time of the system are expressed through performance indexes that measure the control effect. At the same time, because the optimization target and the performance index are complex, classical gradient-based algorithms take a long time and are easily trapped in local optima of the objective function, so the global optimum cannot be found. To overcome these limitations of gradient-based methods, meta-heuristic algorithms are introduced. Meta-heuristic algorithms can be roughly divided into two categories: (1) algorithms based on a single solution, such as the simulated annealing algorithm [2], in which the solution is progressively improved by simulating the temperature change in a hot object, and (2) population-based metaheuristic algorithms such as the Black Widow Optimization Algorithm (BWO) [3], Seagull Optimization Algorithm (SOA) [4], Butterfly Optimization Algorithm (BOA) [5], Artificial Fishing Swarms Algorithm [6], Beluga Whale Algorithm [7] and African Vulture Optimization Algorithm (AVOA) [8]. Population-based algorithms exhibit intelligence through group cooperation. The gray wolf optimization algorithm (GWO) [9] is a population-based meta-heuristic algorithm first proposed by Seyedali Mirjalili in 2014. It searches for the optimal solution by simulating the social hierarchy and predatory behavior of gray wolf populations. It has the advantages of fast convergence speed, high convergence accuracy and a simple implementation process, and has been widely studied.
It has been widely applied in the fields of stress distribution [10], blood pressure monitoring [11], fault diagnosis [12], power systems [13], wind prediction [14], etc., and has achieved remarkable results.

GWO’s optimization results in diverse fields demonstrate the algorithm’s versatility and scalability across problems of varying dimensions and objectives. The control parameter optimization problem contains many hidden constraints, which makes it challenging for classical metaheuristic algorithms such as simulated annealing (SA) and the genetic algorithm (GA) to identify suitable parameters and achieve good results. Meanwhile, algorithms with relatively high computational time complexity and low accuracy, such as the Artificial Bee Colony Algorithm (ABC) [15], the Differential Evolutionary Algorithm (DE) [16] and the Particle Swarm Algorithm (PSO) [17], incur high costs because the control problem requires a short computational response time. GWO’s global search capability is a key advantage: through group intelligence and competition, the algorithm maintains diversity in the search space and avoids local optima, which supports a high level of accuracy in the global search.

However, in practical applications, because the optimization objectives of the control parameter optimization problem are complex and variable, the traditional GWO algorithm adapts poorly to complex environmental factors and constraints and easily falls into local optima, which degrades the search accuracy. To address the local-optimum problem, researchers usually improve the GWO algorithm in one of the following two ways:

(1) Optimize the position-update formula or form a new combined algorithm by introducing a simple adaptive mechanism into the GWO algorithm.

Xu et al. [18] developed a stochastic convergence factor, and Zhang et al. [19] assessed the performance of various adaptive convergence functions and identified the most suitable convergence function for the Gray Wolf algorithm. Liu et al. [20] introduced a nonlinear adaptive convergence factor strategy that balanced the algorithm’s global and local search capabilities, and Zheng et al. [21] applied tent chaotic mappings, which improved the diversity of the initial populations, thereby enhancing the algorithm’s global search capacity. Chuen et al. [22] designed a population initialization strategy based on SPM chaotic mapping. Madhiarasan et al. [23] partitioned the population into three groups and optimized the algorithm parameters, thereby boosting the convergence rate. Di et al. [24] proposed an adaptive search algorithm, which enhanced the algorithm’s global search capability and optimization search precision. To avoid the problem of random and blind searching in the iterative computation process, the chaotic mapping and the reverse learning mechanism are introduced into the improved optimization algorithm [25].

(2) Optimization by mixing the optimal search strategies and ideas of different algorithms.

Saurav et al. [24] integrated the reverse learning approach with the GWO algorithm, markedly enhancing the diversity of the initial population and improving the algorithm’s precision. Liu et al. [20] incorporated Powell’s algorithm into the gray wolf algorithm, leveraging its robust local search capability to compensate for insufficient precision in late convergence. Deng et al. [26] introduced a damage repair operation in the large-scale domain search algorithm in the GA algorithm to address the deficiency in global search capability while further enhancing local search capability. Fariborz et al. [27] incorporated a hybrid optimization algorithm, which facilitated information exchange between individuals and addressed the issue of slow convergence in the late stage. Wang et al. [28] incorporated the somersault foraging strategy into the GWO algorithm to enhance population diversity, thereby preventing the algorithm from becoming trapped in a local optimum. She et al. [29] incorporated a dimension learning strategy, which augmented the algorithm’s global search capability. Li et al. [30] improved the algorithm by integrating Lévy flight and random walk strategies, which augmented the algorithm’s local optimization capability and convergence speed. Liang et al. [31] combined the PSO algorithm with the GWO algorithm, which resulted in an enhanced local search capability and accelerated convergence speed. Huang et al. [32] improved the position-update formula by borrowing the individual-best idea of the particle swarm algorithm, which improved the algorithm’s ability to solve the model. Zhang et al. [33] introduced individual coding and the POX crossover operation to overcome local stagnation and enhance global search ability. Considering that there are numerous parameters to be optimized in ADRC, an improved moth-flame optimization (MFO) was proposed to obtain ADRC parameters [34].

Although the above methods provide two ways to address the problem, a single strategy may not be sufficiently stable, and combined algorithms may degrade the optimization search to some extent because of their high time and space complexity when evaluating the optimization objective and assigning parameters. To address this problem, an improved sinusoidal gray wolf optimization algorithm (ISGWO), which combines an improved sinusoidal algorithm with differential cross-perturbation and symmetric particle boundary-crossing handling, is proposed and applied to the optimization of control parameters.

The main contributions of this paper are as follows:

(1) A novel position-update formulation based on an improved sinusoidal algorithm is used to balance the global exploration and local convergence of the algorithm;

(2) The differential cross-perturbation strategy helps to improve the ability of the algorithm to escape from local optima;

(3) The particle boundary-crossing handling mechanism based on the symmetry idea helps the algorithm retain the best individual information and improve the convergence accuracy;

(4) The effectiveness of the ISGWO algorithm is verified through extensive statistical experiments, including CEC 2017 benchmarking and a control parameter optimization problem.

This paper is organized as follows. Section 2 gives the optimization object and parameter selection. Section 3 presents the basic mathematical model of GWO. Section 4 details the design and construction of ISGWO. Section 5 verifies the effectiveness of the ISGWO algorithm through simulation. Section 6 summarizes the conclusions of this paper.

2. Optimization Object and Parameter Selection

2.1. Optimization Objects

According to the literature [35], in the synchronous control of dual motors, the state-space equation of the control object can be established as Equation (1). Global backstepping sliding mode angular velocity synchronous control is adopted for synchronous operation, and the controller is shown in Equation (2). To save space, the detailed derivation is omitted in this paper.

where represents the output angle of the motor; represents the output angular speed of the motor; is the current loop feedback coefficient; is the speed loop feedback system; is the speed regulator proportionality coefficient; is the coefficient of pulse-width modulation; is the motor torque coefficient; , is the resistance and inductance of the armature circuit, where can be neglected in modeling; is the rotational inertia of the motor; and is the friction coefficient.

where ; ; ; ; is the expected value of ; ; are non-negative control parameters.

Several parameters in the controller affect the final control effect. To achieve the optimal control effect efficiently and accurately, the parameters need to be screened for parameter optimization.

2.2. Parameter Selection

Taguchi’s method [36] is a widely used local optimization method that can comprehensively consider the degree of influence of multiple parameters on the optimization objective, with high efficiency and low computational cost. Four adjustable parameters , and in the controller u are selected as alternative optimization factors, and the constraint ranges are given in Table 1 by combining the system stability conditions and the device technology level.

Table 1.

Optimization factors and their constraint ranges.

Within this range, according to the general principle of Taguchi’s method for selecting the level values of optimization factors, four level values are selected equidistantly within the range of values, named level values I, II, III and IV, and the number of levels of the parameters to be optimized is shown in Table 2.

Table 2.

Number of parameter levels to be optimized.

Considering the stability, speed and low energy consumption of the control algorithm, , and are selected as the optimization objectives. is often used for given signal tracking problems. When targeting , it seeks both dynamic quality and steady-state performance. With as the optimization goal, it emphasizes the dynamic quality, expects to optimize the time, and needs to complete the turnaround under the time-optimal condition under huge load consumption. When targeting , it emphasizes steady-state performance, expecting a minimum control cost from initial state to final state.

A sensitivity analysis of the main parameters is carried out in Simulink to obtain the Pearson correlation coefficient r of each parameter with respect to each optimization target. Then, according to the weight of each optimization target in the motor design, the sensitivity of a given parameter with respect to the optimization targets is obtained.

The Pearson correlation coefficient r and the sensitivity are defined as

where is the optimization target value corresponding to the control parameter ; is the average value of the control parameter ; is the average value of the optimization target value ; is the Pearson correlation coefficient of each optimization target; and is the weight coefficient of each optimization target. In this paper, the main premise is to maintain speed while guaranteeing stability, and only then to consider low energy consumption. Based on the performance indexes of the step response, six indexes are selected as the measurement standards: overshoot, delay time, rise time, peak time, adjustment time and error band. J1 reflects five of them (delay time, rise time, peak time, adjustment time and error band), J2 reflects four (delay time, rise time, peak time and adjustment time), and J3 reflects only the overshoot. Therefore, the weighting parameters in Equation (5) are: , , .
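The sensitivity computation described above can be illustrated with a short sketch. The Pearson coefficient is the standard textbook definition; the form of the sensitivity (a weighted sum of the absolute correlation coefficients over the optimization targets) is an assumption made here for illustration, and the function names `pearson_r` and `sensitivity` are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Standard Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def sensitivity(param_levels, target_values, weights):
    """Assumed form: weighted sum of |r| over the optimization targets.

    param_levels  : level values of one control parameter
    target_values : one list of measured target values per optimization target
    weights       : one weight coefficient per optimization target
    """
    return sum(
        w * abs(pearson_r(param_levels, ys))
        for w, ys in zip(weights, target_values)
    )
```

With this form, a parameter that correlates strongly (positively or negatively) with heavily weighted targets receives a high sensitivity, which matches the way the most sensitive parameter is then chosen for optimization.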

The Pearson correlation coefficients as well as the sensitivities of each control parameter were obtained in the single margin mode of operation as shown in Table 3.

Table 3.

Summary of correlation coefficients and sensitivities of control parameters.

Therefore, the most sensitive control parameter was selected as the optimization parameter.

2.3. Optimization Algorithm Objective Function Selection

In the dual-motor synchronization control process, the state-space equation of the control object is established. Global backstepping sliding mode angular velocity synchronization control is used to synchronize the operation, and the controller is as shown in Equation (2).

Commonly used integral-type performance metrics include shortest-time control, least-fuel control and minimum-energy control [37]. ITAE (integral of time-weighted absolute error) control minimizes the performance metric $J = \int_0^{\infty} t\,|e(t)|\,dt$, where $|e(t)|$ is the absolute value of the system error. Under this metric, the unavoidable initial error is weighted lightly and later errors are weighted increasingly heavily, which gives the system a fast yet smooth dynamic response. However, the ITAE performance index cannot be solved analytically, and an optimization algorithm is needed to find the parameters that minimize it.
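As a minimal sketch of how such an index can be evaluated numerically from a simulated error trace, the following function approximates the ITAE integral with the trapezoidal rule over a finite horizon. The function name `itae` and the sampled-signal interface are assumptions for illustration; in the paper the error comes from a Simulink model.

```python
import math


def itae(times, errors):
    """Approximate J = integral of t * |e(t)| dt using the trapezoidal rule."""
    total = 0.0
    for k in range(1, len(times)):
        dt = times[k] - times[k - 1]
        f_prev = times[k - 1] * abs(errors[k - 1])
        f_curr = times[k] * abs(errors[k])
        total += 0.5 * (f_prev + f_curr) * dt
    return total


# Illustrative error signal e(t) = exp(-t), for which the exact integral
# over [0, infinity) equals 1.
times = [k * 0.001 for k in range(20001)]
errors = [math.exp(-t) for t in times]
j_value = itae(times, errors)
```

An optimizer such as GWO would treat the value returned by `itae` as the fitness of a candidate parameter set.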

3. Basic Gray Wolf Optimization Algorithm

Under the harsh environment of nature, creatures, even if they do not have the high intelligence of human beings, have shown amazing group intelligence through continuous adaptation and cooperation under the incentive of the same goal, i.e., food. Based on the tightly organized system of wolf packs and their subtle collaborative hunting methods, a new group intelligence algorithm, the Gray Wolf Optimization Algorithm, is proposed in the literature.

The gray wolf population has a strict hierarchy, as shown in the diagram: the head wolf at the top of the pyramid is called $\alpha$, and is responsible for making decisions about hunting behavior, habitat, food allocation and other functions. Wolf $\alpha$ is not necessarily the strongest wolf, but is the best leader and manager. The second tier of the pyramid is called $\beta$, and is the replacement for $\alpha$ when $\alpha$ is missing from the peak. On the third level is $\delta$, which takes orders from $\alpha$ and $\beta$. Older (less well-adapted) $\alpha$ and $\beta$ are also relegated to the $\delta$ level. The lowest level, $\omega$, is responsible for balancing relationships within the population.

The GWO algorithm simulates the hierarchy and hunting behavior of gray wolves in nature. The entire wolf pack is divided into four groups. The first three groups are, in order, the three best adapted, and they guide the other wolves ($\omega$) to search towards the target. During the optimization process, the wolves update the positions of $\alpha$, $\beta$, $\delta$ and $\omega$. Equation (6) denotes the distance between individuals, and Equation (7) denotes the updating method of individual gray wolves:

where $\vec{D}$ denotes the distance of an individual from the food; $t$ denotes the number of the current iteration; and $\vec{A}$ and $\vec{C}$ are coefficient vectors. When $|\vec{A}| > 1$, the gray wolf pack expands its search range to find better food, which corresponds to global search; when $|\vec{A}| < 1$, the gray wolf pack narrows its encirclement, which corresponds to local search. The convergence factor $a$ decreases linearly from 2 to 0 with the number of iterations. $\vec{X}_p$ is the position of the prey and $\vec{X}$ denotes the position vector of the gray wolf.

When the gray wolves judge the position of the prey, the head wolf $\alpha$ leads $\beta$ and $\delta$ to guide the pack to surround the prey. Because $\alpha$, $\beta$ and $\delta$ are the closest to the prey, the positions of these three wolves are used to judge the approximate position of the prey and gradually approach it; the mathematical description of this is as follows:

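where $\vec{X}_\alpha$ denotes the current position of $\alpha$, $\vec{X}_\beta$ denotes the current position of $\beta$ and $\vec{X}_\delta$ denotes the current position of $\delta$. $\vec{C}_1$, $\vec{C}_2$ and $\vec{C}_3$ denote random vectors, and $\vec{X}$ denotes the current gray wolf position vector. These equations define the step length and direction of an $\omega$ wolf towards $\alpha$, $\beta$ and $\delta$, respectively.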

After the fitness ranking, gray wolf individuals outside the top three randomly update their positions near the prey (i.e., the optimal solution) under the guidance of the current best three wolves. The basic principle is to sum three position difference vectors and add random quantities to avoid falling into a local optimum. The position-update formula is shown below:
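The basic GWO loop described in this section can be sketched as follows. This is a simplified illustration based on the standard GWO formulation (distance $D = |C \cdot X_{leader} - X|$, candidate $X_{leader} - A \cdot D$, new position equal to the mean of the three candidates), not the authors' MATLAB implementation. The function name `gwo_minimize`, the default population size, the scalar bounds and the simple clamping of out-of-range values are all assumptions.

```python
import random


def gwo_minimize(objective, dim, lower, upper, n_wolves=20, max_iter=200, seed=0):
    """Minimal basic GWO for minimization within the box [lower, upper]^dim."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_wolves)]
    for t in range(max_iter):
        # Rank the pack: alpha, beta and delta are the three best wolves.
        wolves.sort(key=objective)
        leaders = [list(w) for w in wolves[:3]]
        a = 2.0 * (1.0 - t / max_iter)  # convergence factor: linear 2 -> 0
        new_wolves = []
        for x in wolves:
            new_x = []
            for j in range(dim):
                candidates = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a      # |A| > 1 explores
                    C = 2.0 * rng.random()
                    D = abs(C * leader[j] - x[j])       # distance to leader
                    candidates.append(leader[j] - A * D)
                value = sum(candidates) / 3.0           # mean of X1, X2, X3
                new_x.append(min(max(value, lower), upper))  # simple clamp
            new_wolves.append(new_x)
        wolves = new_wolves
    return min(wolves, key=objective)


def sphere(x):
    """Illustrative test objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best = gwo_minimize(sphere, dim=3, lower=-10.0, upper=10.0)
```

The clamp used here corresponds to the "absorption boundary" handling discussed later in the paper; it is used only to keep the sketch short.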

4. Improved Gray Wolf Algorithm Guided by the Sinusoidal Algorithm

4.1. Improved Sinusoidal Algorithm

The improved sine algorithm strategy is inspired by several SCA-related algorithms, such as the sine-cosine algorithm, the sine algorithm, the exponential sine-cosine algorithm and the improved sine-cosine algorithm. These algorithms use the sinusoidal function to perform an iterative search for the optimum and have a strong global search capability. Meanwhile, a cosine function is used to improve the convergence factor so that the algorithm can search the local area thoroughly and achieve a good balance between global search and local exploitation. The improved vector A formula is shown below:

where is a random number on the interval .

A nonlinear decreasing mode is used to set the value of the convergence factor and a cosine function between 0 and is used to determine the change in value of :

where denotes the current iteration number and denotes the maximum iteration number.
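To illustrate the difference between a linear and a cosine-shaped convergence factor, the sketch below compares the two schedules. The upper limit of the cosine argument is not recoverable from the text, so the choice of $\pi/2$ (which makes the factor fall from 2 to 0) is an assumption, as are the function names `linear_a` and `cosine_a`.

```python
import math


def linear_a(t, t_max, a_max=2.0):
    """Convergence factor of the basic GWO: linear decrease from a_max to 0."""
    return a_max * (1.0 - t / t_max)


def cosine_a(t, t_max, a_max=2.0):
    """Assumed nonlinear schedule: a_max * cos((pi / 2) * t / t_max)."""
    return a_max * math.cos(0.5 * math.pi * t / t_max)
```

Under this assumption the cosine schedule stays above the linear one for most of the run, so the pack explores for longer in the early iterations, and then drops quickly towards 0 near the end, which favors local search in the late stage.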

The basic GWO algorithm updates gray wolf positions by calculating the average of the three best gray wolf positions. When the fitness values of the best gray wolves differ greatly, this position updating method takes a longer time to gather the wolves near the optimal point. To further speed up the local search of the algorithm, a weight factor can be designed using the fitness values of the first three gray wolves. Wolves with higher fitness should occupy higher weights accordingly.

Among them, the fitness value of a given gray wolf individual is the value of the control index after the operation of the system with changed individual parameters.

Therefore, the new updated position formula is obtained as follows:
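The idea of weighting the three leaders by their fitness can be sketched as follows. Because the fitness here is a control index to be minimized, the sketch assumes that a smaller value means a better wolf and uses normalized inverse fitness as the weight; this particular weighting rule and the names `leader_weights` and `weighted_position` are assumptions, not the paper's formula.

```python
def leader_weights(f_alpha, f_beta, f_delta, eps=1e-12):
    """Assumed rule: weights proportional to 1 / fitness (positive fitness)."""
    inverse = [1.0 / (f + eps) for f in (f_alpha, f_beta, f_delta)]
    total = sum(inverse)
    return [v / total for v in inverse]


def weighted_position(x1, x2, x3, weights):
    """Weighted combination of the three leader-guided candidate positions."""
    w1, w2, w3 = weights
    return [w1 * a + w2 * b + w3 * c for a, b, c in zip(x1, x2, x3)]
```

When the three leaders have equal fitness, the weights reduce to one third each and the update coincides with the plain average used by the basic GWO.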

4.2. Differential Cross-Perturbation

As the number of iterations of the GWO algorithm increases, the algorithm’s optimization search gradually converges, and it is difficult to jump out of the local optimum once it converges to it. To enhance the ability of the algorithm to jump out of the local optimum, a perturbation mechanism can be added to the population update of the algorithm. In this paper, we design a method that mimics the cross-perturbation of genetic algorithms for enhancing the population diversity of the algorithm at the later stage of the optimality search, to create favorable conditions for escaping from local convergence. Drawing on the crossover operation of the genetic algorithm, the three worst-adapted gray wolf individuals are perturbed as follows:

where denotes the current moment, denotes the inter-individual crossover operator function and is a random variable between 0 and 1.

To avoid falling into a local optimum solution, a moderate perturbation of the gray wolf individuals is required. However, perturbing all individuals will result in a longer processing time of the algorithm and affect the search speed. Therefore, we choose to perturb the three gray wolf individuals with the worst fitness. By retaining the dominant individuals among the gray wolves and performing random jumps globally, the potential optimal points can be searched.

When the worst-adapted individuals are crossed with gray wolves and , respectively, over a particular segment of the gene sequence, the individuals themselves change, and the gray wolf population ultimately evolves in the direction of decreasing fitness value.

When the gray wolf individual is one-dimensional, crossover operations are not possible, so special definitions are required.

Although the differential perturbation mutation strategy can enhance the algorithm’s ability to search globally and jump out of the local optimum, there is no guarantee that the new individual obtained after the mutation perturbation has a better fitness value than the original individual. Therefore, a greedy rule is applied after the mutation perturbation update: the fitness values of the old and new positions are compared to decide whether the position should be updated. The greedy rule is shown in Equation (23), where denotes the fitness value of position .
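The combination of crossover-style perturbation and the greedy rule can be sketched as below. The exact crossover operator of the paper is not recoverable from the text, so this sketch assumes a single-point crossover between each of the three worst wolves and one of the three best wolves, and a random blend for the one-dimensional special case. The names `crossover` and `perturb_worst_three` are hypothetical, and fitness is assumed to be minimized.

```python
import random


def crossover(worst, leader, rng):
    """Assumed operator: single-point crossover; random blend if dim == 1."""
    dim = len(worst)
    if dim == 1:
        r = rng.random()
        return [r * leader[0] + (1.0 - r) * worst[0]]
    cut = rng.randrange(1, dim)  # cut point in 1 .. dim - 1
    return leader[:cut] + worst[cut:]


def perturb_worst_three(wolves, objective, rng):
    """Perturb the three worst wolves and keep a trial only if it is better."""
    ranked = sorted(wolves, key=objective)
    leaders, worst = ranked[:3], ranked[-3:]
    for k, (w, leader) in enumerate(zip(worst, leaders)):
        trial = crossover(w, leader, rng)
        if objective(trial) < objective(w):  # greedy rule
            ranked[len(ranked) - 3 + k] = trial
    return ranked
```

Because only three individuals are perturbed and each trial costs one extra fitness evaluation, the overhead per iteration stays small, which is the motivation given in the text for not perturbing the whole pack.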

4.3. Particle Transgression Handling Strategy Based on Symmetry Idea

In the process of solving problems, the positions of the particles change constantly as the algorithm iterates, and it is inevitable that some particles will jump out of the feasible domain. If this is not dealt with, it will affect the convergence speed and accuracy of the algorithm. Usually there are three ways to deal with this problem: (1) “Reflection Boundary Method”: the direction of the individual’s velocity is reversed and its magnitude is unchanged; (2) “Invisible Boundary Method”: the out-of-bounds individual is discarded and does not participate in the subsequent optimization; (3) “Absorption Boundary Method”: the boundary value is taken directly when the particle oversteps the boundary. The literature [38] shows that all three methods cause a loss of population diversity in the wolf pack and affect the convergence accuracy of the particles. In order to improve the convergence accuracy of the algorithm, a symmetry-based individual boundary-crossing processing strategy is proposed, which is based on the principle of geometric symmetry: when an individual crosses the boundary, the boundary is used as the axis of symmetry (center of symmetry), the individual is transformed to the symmetric position as (or) and the distance from the individual to the boundary is computed as (or) and then processed in different situations.
For instance, when an individual crosses the boundary upper limit and , the position of the individual after the process is the symmetric position plus the product of a random number and distance; when the individual crosses the upper boundary and , the position of the processed individual is the symmetric position minus the product of a random number and distance; when the individual crosses the lower boundary limit and , the position of the processed individual is the symmetric position minus the product of a random number and distance; when the individual crosses the lower boundary limit and , the position of the processed individual is the symmetric position plus the product of a random number and distance. The specific transformation process is shown in Equations (24) and (25), as shown below:

(1) :

(2) :

Because Equations (24) and (25) involve random numbers, individuals processed in this way do not gather on the boundary. The random numbers ensure that out-of-bounds individuals that occupy the same position in this iteration are mapped to different positions in the next iteration. This increases the diversity of the positions of the processed individuals and therefore the diversity of the population, which helps the algorithm find the optimal solution of the problem and improves its convergence accuracy.
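A one-dimensional sketch of symmetry-based boundary handling is given below. It is a simplified variant: the conditions that distinguish the four cases in the text are not recoverable, so this version mirrors the individual about the crossed bound and then moves it back towards that bound by a random fraction of the overshoot distance, with a uniform resample as a fallback when the mirrored point itself lies outside the range. The function name `symmetric_repair` and the fallback are assumptions.

```python
import random


def symmetric_repair(x, lower, upper, rng):
    """Map an out-of-range coordinate back into [lower, upper]."""
    if lower <= x <= upper:
        return x
    crossed_upper = x > upper
    bound = upper if crossed_upper else lower
    distance = abs(x - bound)        # overshoot beyond the crossed bound
    mirrored = 2.0 * bound - x       # symmetric position about that bound
    if lower <= mirrored <= upper:
        step = rng.random() * distance
        # Move from the mirrored point towards the crossed bound, so the
        # result lies between the mirrored point and the bound.
        return mirrored + step if crossed_upper else mirrored - step
    # Overshoot larger than the range width: resample uniformly (assumption).
    return rng.uniform(lower, upper)
```

Unlike clamping, two individuals that overshoot by the same amount are unlikely to land on the same point, which is the diversity argument made in the text.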

4.4. Flow Chart

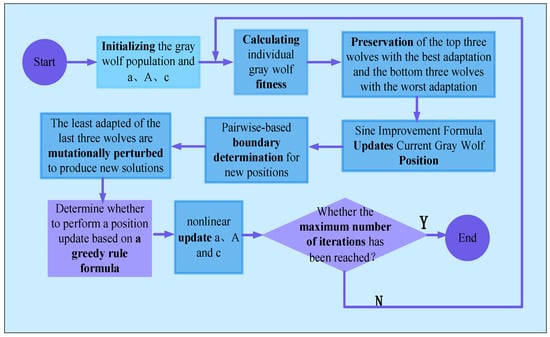

First, the gray wolf population and the key parameters a, A and c are initialized. The fitness value of each individual is calculated according to the fitness function. Then, the three individuals with the highest fitness values are selected. Based on these three individuals, the improved sinusoidal position-update formula is applied to update the positions of the other individuals. Next, each updated position is checked against the boundary, and any out-of-bounds particle is mapped back using the symmetry-based strategy. To prevent the algorithm from falling into a local optimum, differential cross-perturbation is applied to the worst three individuals, and the greedy rule determines whether each perturbed position is accepted. Finally, the parameters (a, A, c) are updated nonlinearly. The specific process is shown in Figure 1.

Figure 1.

Algorithm Flow Chart.

5. Simulation Analysis

5.1. Experimental Environment and Parameter Settings

In this experiment, the test environment is a 64-bit Windows 10 operating system, and the algorithms are executed in MATLAB R2016a. The processor is an Intel(R) Core(TM) i7-8550U and the RAM is 8192 MB. To demonstrate the feasibility and accuracy of the ISGWO algorithm and to keep the comparison fair, the same test methods and test data are used for all algorithms in the simulation experiments. The population size is set to 100, the maximum number of iterations is 5000, and all experiments are run independently 20 times to reduce the influence of randomness on the results. The mean, standard deviation and average time of the experimental results are recorded.

5.2. Test Functions

In order to verify the applicability, effectiveness and efficiency of the ISGWO algorithm, 29 test functions in the CEC2017 test set are selected in this paper, and the specific information of the test functions is shown in Table 4. Among them, F1–F2 are single-peak functions, F3–F9 are simple multi-peak functions, F10–F19 are hybrid functions and F20–F29 are composite functions.

Table 4.

Test function of CEC2017.

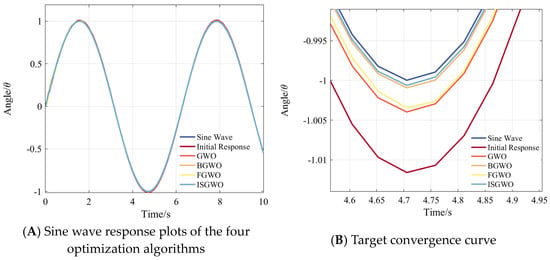

5.3. Analysis of Experimental Data

To verify whether the ISGWO algorithm offers better optimization search performance and is sufficiently competitive among optimization algorithms, this paper selects GWO, BGWO and FGWO for comparative experiments. For each algorithm and each test function, the mean, standard deviation and time are recorded, as summarized in Table 5. At the same time, the convergence curve of each test function is plotted for convergence analysis; for reasons of space, only a selection is shown, starting with Figure 2.

Table 5.

Experimental comparison of 4 different algorithms.

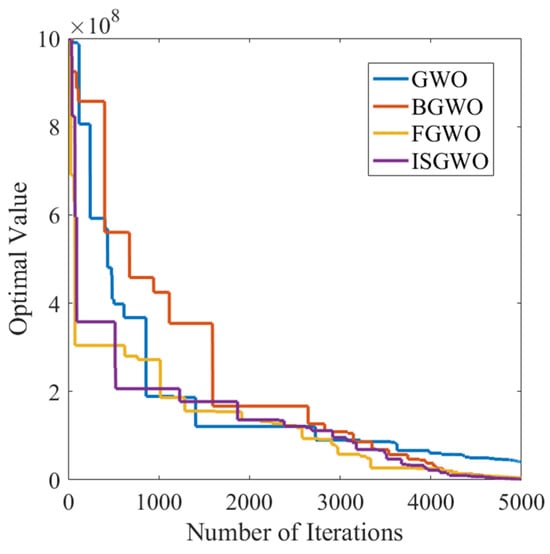

Figure 2.

Convergence curve of F1.

Since a single-peak function has a unique global optimal solution, the results on F1–F2 can be used to assess the convergence of each tested algorithm. Of the four compared algorithms, ISGWO has the best optimal-solution search capability for the F2 function and the smallest standard deviation.

Simple multi-modal functions contain multiple peaks and therefore test the algorithm’s ability to perform a global search. The statistical analysis of these test functions allows us to determine the accuracy of the algorithm. For simple multi-modal functions, the difference between the solution accuracies of the different algorithms is very small, averaging only one order of magnitude. Among the three evaluation metrics, ISGWO is in the leading position in terms of optimization accuracy and computation time in the test.

To test the optimization ability of the algorithms in large-scale and difficult environments, we will analyze the algorithmic accuracy for hybrid functions F5–F13 and combinatorial functions F14–F20. For hybrid functions, ISGWO shows good search ability and algorithmic stability. In F5–F13, the average solution accuracy of ISGWO in the F5 and F7 functions is closest to the theoretical optimal solution, showing the ability to find the optimal solution under large-scale complex conditions. The analysis results of the F16 function show that ISGWO outperforms the other algorithms in the three aspects of the mean, standard deviation and computational speed, demonstrating excellent robustness and stability in the hybrid test function.

Finally, the accuracy values of the complex functions F21–F29 show that, as the difficulty of the test environment increases, the difference in search accuracy among the compared algorithms gradually decreases. For the F24 function, the mean and standard deviation of the ISGWO algorithm match the best values of the other algorithms. For the F21, F25, F26 and F28 functions, the small standard deviations indicate that the ISGWO algorithm solves these functions effectively and stably. Moreover, ISGWO outperforms the other algorithms in all aspects of control parameter tuning.

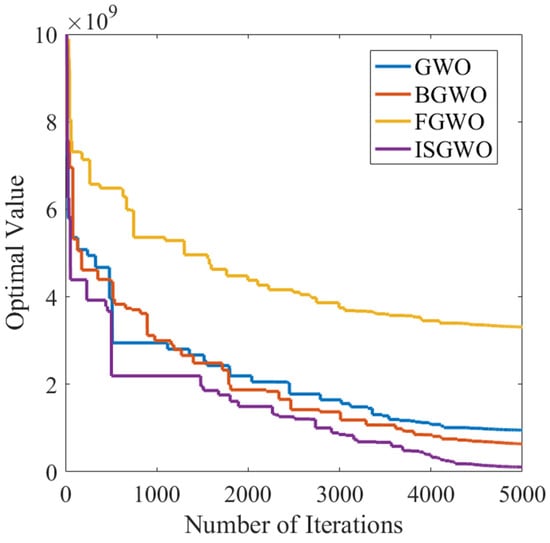

Combined with the convergence diagram, it can be seen that for the unimodal test function F1, the ISGWO algorithm not only has the fastest global convergence speed but also the smallest convergence value. This fully shows that ISGWO algorithm performs better in this kind of test function than other algorithms.

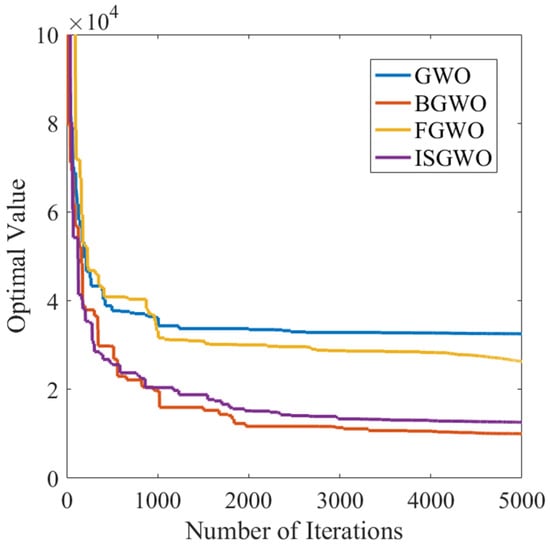

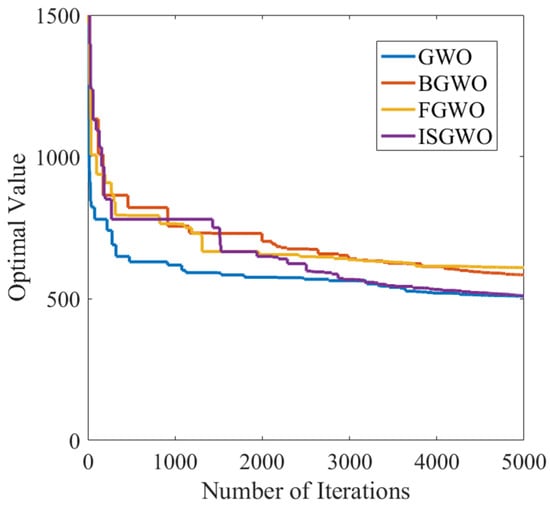

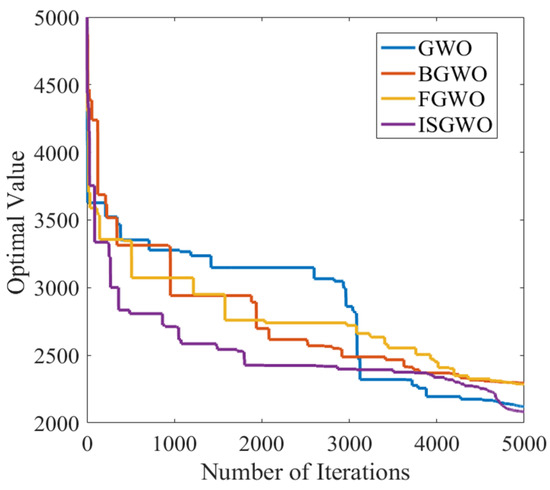

ISGWO also maintains the fastest convergence speed and a small convergence value when optimizing the multi-peak test functions, represented by the F3 test function in Figure 3. The F4 test function in Figure 4 shows the ability of the ISGWO algorithm to find the optimum through strong local search in the later stage. In addition, because the optimization object in this paper is a complex system, the performance of ISGWO on hybrid and composite functions is more important.

Figure 3.

Convergence curve of F3.

Figure 4.

Convergence curve of F4.

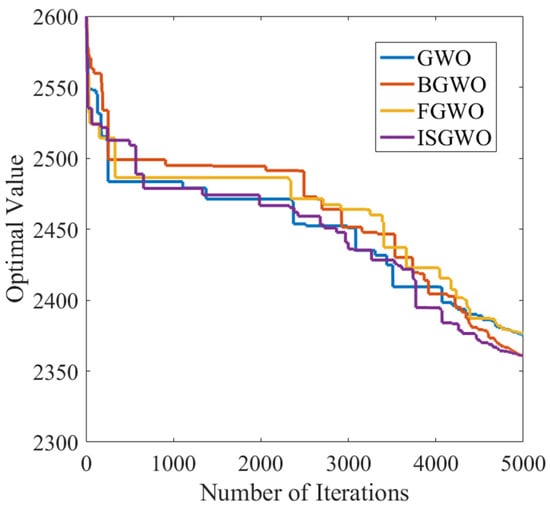

In the optimization of the mixed function F12 (Figure 5), ISGWO converges quickly: it leads within the first 10 iterations and remains far ahead at 800 iterations. In the optimization of test function F16 (Figure 6), ISGWO avoids local optima and finds a solution with a smaller fitness value while still converging quickly.

Figure 5.

Convergence curve of F12.

Figure 6.

Convergence curve of F16.

Finally, the convergence curve of F21 in Figure 7 shows that ISGWO has a significant advantage in coordinating global and local search. ISGWO therefore combines well-balanced global exploration with strong local exploitation when optimizing mixed and combined functions. Taken together, the convergence analysis of these four kinds of test functions shows that ISGWO converges stably and has good global search ability.

Figure 7.

Convergence curve of F21.
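For readers who want to reproduce curves of this kind, the sketch below records a best-so-far convergence curve for the standard GWO update (leaders alpha, beta and delta, with the coefficient `a` decreasing linearly from 2 toward 0). It is not ISGWO: the sinusoidal position update, the symmetry-based boundary handling and the differential cross-perturbation are omitted, and out-of-range positions are simply clamped. The `sphere` objective and all parameter values are assumptions chosen for illustration.

```python
import random


def sphere(x):
    """Stand-in objective (assumption); not one of the CEC2017 functions."""
    return sum(v * v for v in x)


def gwo(objective, dim=5, bounds=(-10.0, 10.0), wolves=20,
        iterations=100, seed=1):
    """Baseline GWO; returns the best-so-far fitness at each iteration."""
    rng = random.Random(seed)
    lo, hi = bounds
    pack = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(wolves)]
    best_so_far = float("inf")
    curve = []
    for t in range(iterations):
        # Rank the pack and copy the three leaders.
        ranked = sorted(pack, key=objective)
        alpha, beta, delta = (list(w) for w in ranked[:3])
        best_so_far = min(best_so_far, objective(alpha))
        curve.append(best_so_far)
        a = 2.0 * (1.0 - t / iterations)  # decreases linearly from 2 toward 0
        new_pack = []
        for wolf in pack:
            new_wolf = []
            for d in range(dim):
                pulls = []
                for leader in (alpha, beta, delta):
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    distance = abs(C * leader[d] - wolf[d])
                    pulls.append(leader[d] - A * distance)
                value = sum(pulls) / 3.0
                # Plain clamping, not the paper's symmetry-based handling.
                new_wolf.append(min(max(value, lo), hi))
            new_pack.append(new_wolf)
        pack = new_pack
    return curve


curve = gwo(sphere)
print(f"first = {curve[0]:.3e}, last = {curve[-1]:.3e}")
```

Plotting `curve` against the iteration index on a logarithmic vertical axis gives a convergence diagram; overlaying the curves of several algorithms run with the same budget allows the kind of comparison made in the text.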

5.4. Comprehensive Evaluation of Algorithms

According to CEC2017, the evaluation criteria are divided into three parts:

1. The 25 points for the first part come from the sum of the mean error values over all test functions. The mean error of each function is averaged over all runs, and the sum gives the combined error, from which the score for the first part is obtained.

2. The 25 points for the second part come from the ranking of the different algorithms on each test function. The rankings are combined into a composite rank, from which the score for the second part is obtained.

3. The 50 points for the third part come from the runtime of the different algorithms on each test function. The runtime rankings are combined into a composite, from which the score for the third part is obtained.

4. Finally, the three scores above are added together to give the combined score.
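The three-part scheme above can be sketched as follows. The normalization is an assumption: each part is taken to follow the form used in the CEC2017 competition scoring, score = (1 − (S − S_min)/S) × weight, where S is an algorithm's aggregate for that part (smaller is better) and S_min is the best aggregate among the compared algorithms. Only the weights 25, 25 and 50 come from the text; the function names and the sample aggregates for the placeholder algorithms "A" and "B" are hypothetical. Whether the third part uses raw runtimes or runtime rankings is not clear from the text, so the sketch accepts whichever aggregate is supplied.

```python
def part_score(aggregate, weight):
    """Score one criterion; aggregates must be positive, smaller is better."""
    best = min(aggregate.values())
    return {name: (1.0 - (value - best) / value) * weight
            for name, value in aggregate.items()}


def combined_score(errors, ranks, runtimes, weights=(25.0, 25.0, 50.0)):
    """Add the three part scores for every algorithm."""
    parts = [part_score(agg, w)
             for agg, w in zip((errors, ranks, runtimes), weights)]
    return {name: sum(part[name] for part in parts) for name in errors}


# Hypothetical aggregates, for illustration only.
scores = combined_score(
    errors={"A": 2.0, "B": 4.0},
    ranks={"A": 3.0, "B": 3.0},
    runtimes={"A": 10.0, "B": 5.0},
)
print(scores)
```

Under this form the best algorithm in each part receives the full weight, and the others are scaled by the ratio of the best aggregate to their own, so an algorithm that is accurate but slow can still lose overall because runtime carries half of the total.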

Table 6 shows that BGWO performs best in accuracy but worst in speed, whereas FGWO excels in speed but lacks precision. In contrast, the proposed ISGWO balances accuracy and speed, and its performance in each respect is of the same order of magnitude as that of the best algorithm.

Table 6.

Combined scores of the four algorithms.

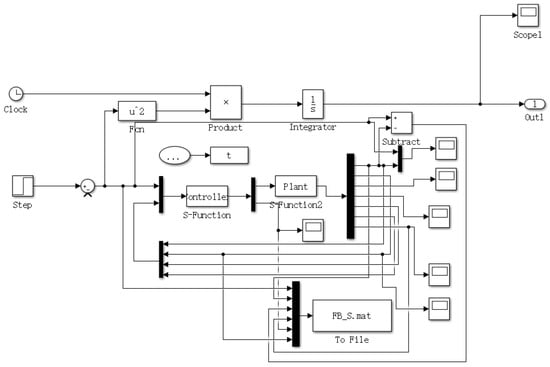

5.5. Optimization Analysis of Servo System Parameters

Although ISGWO performs well on the test functions, its performance in real systems is more important. Following [36], a control system is established as the optimization object; the system parameters are listed in Table 7. The overall structure of the control system and the optimization function, built in Simulink, is shown in Figure 8.

Table 7.

Control parameter list.

Figure 8.

Program chart.

Each set of optimization parameters is treated as an individual in the population. The number of individuals in the population is uniformly 1 and the maximum number of iterations is 500.

Under the step input, each of the four optimization algorithms runs 500 iterations for each of the four parameters. The initial and optimized parameter values are shown in Table 8.
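To make the role of the optimizer concrete, the sketch below shows how a candidate parameter set can be turned into a scalar cost by simulating a step response. Everything in it is an assumption made for illustration: the plant (unit inertia with viscous friction 0.5), the PD control law, the two gains `kp` and `kd`, and the ITAE index (time-weighted integral of the absolute error). The paper's Simulink model, its four tuned parameters and its actual cost function are not reproduced here.

```python
def itae_cost(kp, kd, t_end=5.0, dt=0.001):
    """Simulate a unit-step response and return the ITAE performance index."""
    position, velocity, cost = 0.0, 0.0, 0.0
    steps = round(t_end / dt)
    for k in range(steps):
        t = k * dt
        error = 1.0 - position               # unit step reference
        u = kp * error - kd * velocity       # PD law with velocity feedback
        acceleration = u - 0.5 * velocity    # hypothetical plant dynamics
        velocity += acceleration * dt        # semi-implicit Euler integration
        position += velocity * dt
        cost += t * abs(error) * dt          # accumulate time-weighted error
    return cost


well_damped = itae_cost(kp=20.0, kd=8.0)
oscillatory = itae_cost(kp=20.0, kd=0.5)
print(f"well damped: {well_damped:.4f}, oscillatory: {oscillatory:.4f}")
```

An optimizer such as GWO would call a function like `itae_cost` once per individual per iteration and keep the parameter set with the smallest value, which is why a cheap simulation and a smooth cost matter for tuning.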

Table 8.

Optimization results of the four optimization algorithms (step input).

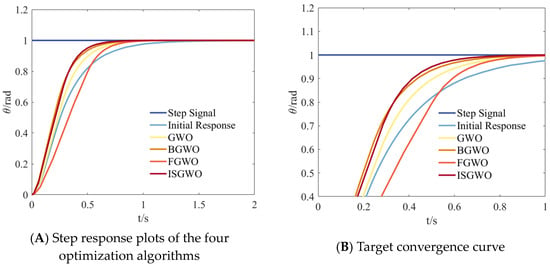

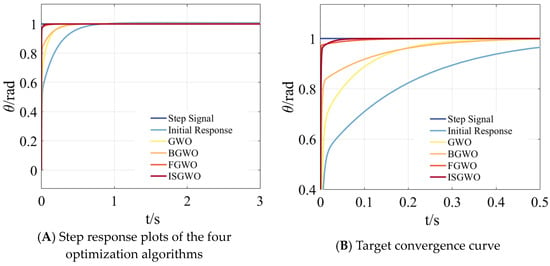

To make the optimization more intuitive, the step response generated based on the optimized parameters is shown in Figure 9.

Figure 9.

Parameter Optimization Comparison (Step Response).

In Figure 9A, compared with the initial response, every optimization algorithm produces a faster step response. FGWO has a slower initial response, whereas ISGWO performs best in all aspects and achieves smooth, fast control of the system, meeting the optimization requirements.
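Qualitative statements such as "faster" and "smoother" can be backed by standard step-response metrics. The sketch below computes percentage overshoot, 10–90% rise time and 2% settling time from a sampled response. The helper names and the analytic second-order test signal (damping ratio 0.5, natural frequency 2 rad/s) are assumptions for illustration and are not taken from the paper's servo model.

```python
import math


def step_metrics(times, response, target=1.0, band=0.02):
    """Return overshoot (%), 10-90% rise time and settling time.

    Assumes a positive target and a response that reaches 90% of it.
    """
    peak = max(response)
    overshoot = max(0.0, (peak - target) / target * 100.0)
    t10 = next(t for t, y in zip(times, response) if y >= 0.1 * target)
    t90 = next(t for t, y in zip(times, response) if y >= 0.9 * target)
    settling = 0.0
    for t, y in zip(times, response):
        if abs(y - target) > band * target:
            settling = t  # last instant outside the tolerance band
    return {"overshoot_pct": overshoot,
            "rise_time": t90 - t10,
            "settling_time": settling}


def second_order_step(t, zeta=0.5, wn=2.0):
    """Analytic unit-step response of an underdamped second-order system."""
    wd = wn * math.sqrt(1.0 - zeta ** 2)
    decay = math.exp(-zeta * wn * t)
    ratio = zeta / math.sqrt(1.0 - zeta ** 2)
    return 1.0 - decay * (math.cos(wd * t) + ratio * math.sin(wd * t))


times = [k * 0.001 for k in range(10001)]  # 0 to 10 s
response = [second_order_step(t) for t in times]
metrics = step_metrics(times, response)
print(metrics)
```

Applying the same function to the responses obtained with each algorithm's optimized parameters would give a numerical basis for the comparison drawn from the figure.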

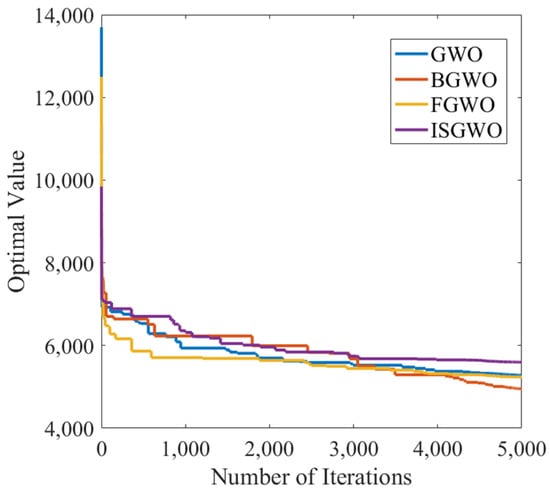

Figure 10 shows that the convergence curve of GWO decreases in steps, FGWO reaches convergence faster but at a larger convergence value, and BGWO converges more slowly and also has a larger final convergence value. The algorithm in this paper has the fastest convergence speed and the best convergence value.

Figure 10.

Parameter Optimization Target convergence curve (Step Response).

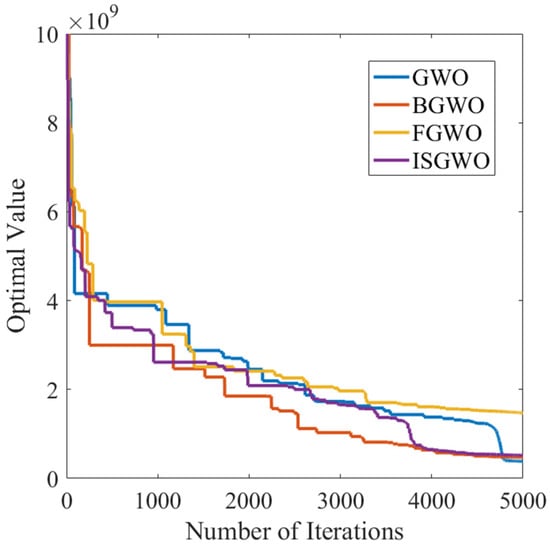

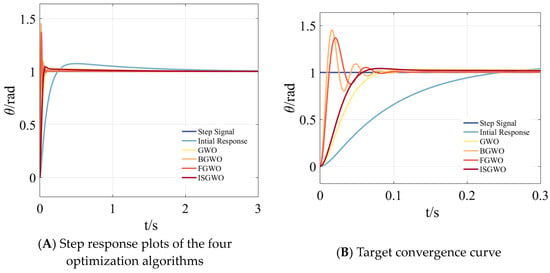

In Figure 11A, compared with the initial response, every optimization algorithm produces a faster step response. GWO has a slower initial response; FGWO has the fastest response but converges slowly; ISGWO converges fastest and meets the optimization requirements.

Figure 11.

Parameter Optimization Comparison (Step Response).

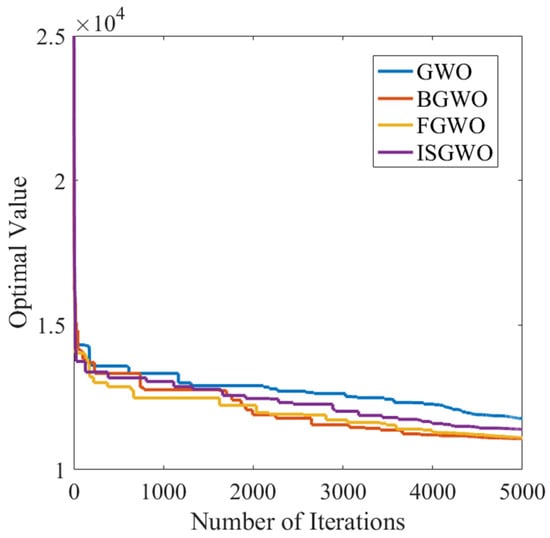

As can be seen from Figure 12, GWO has the slowest convergence speed; FGWO converges faster, but its convergence value is large; BGWO converges more slowly, but its final convergence value is small. The algorithm in this paper has the fastest convergence speed.

Figure 12.

Parameter Optimization Target convergence curve (Step Response).

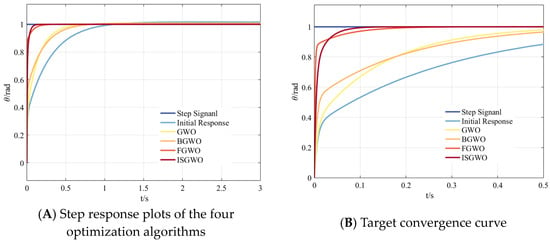

In Figure 13A, compared with the initial response, every optimization algorithm produces a faster step response. The initial response of GWO is slower, although still improved. FGWO has the fastest response, but its curve is not smooth enough, whereas ISGWO converges fastest and meets the optimization requirements.

Figure 13.

Parameter Optimization Comparison (Step Response).

As shown in Figure 14, FGWO can reach convergence faster, while BGWO has a slower convergence speed, but the final convergence value is small. The convergence rate of the algorithm in this paper is the fastest, and although the convergence value is large, it is maintained at a reasonable level.

Figure 14.

Parameter Optimization Target convergence curve (Step Response).

In Figure 15, compared with the initial response, each optimization algorithm can produce a faster step response, while the initial response speed of the GWO algorithm is slower, and FGWO has the fastest response speed but larger fluctuation. As shown in Figure 15B, ISGWO can achieve the fastest convergence and small overshoot, which meets the optimization requirements.

Figure 15.

Target convergence curve (Step Response).

It can be seen from Figure 16 that GWO has the fastest convergence speed, but the convergence value is larger. FGWO has the slowest convergence speed, but the convergence value is small. The algorithm proposed in this paper has the fastest convergence speed and a smaller final value.

Figure 16.

Parameter Optimization Target convergence curve (Step Response).

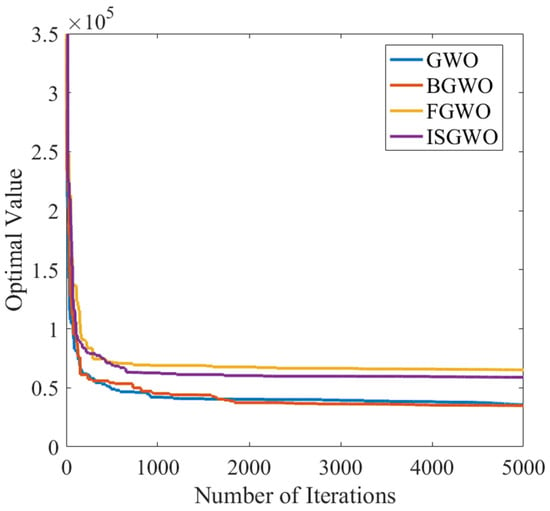

Under the sinusoidal input, each of the four optimization algorithms runs 500 iterations for each of the four parameters. The initial and optimized parameter values are shown in Table 9.

Table 9.

Optimization results of the four optimization algorithms (sinusoidal input).

In order to better verify the superiority of the ISGWO algorithm in parameter optimization, we change the input to a sinusoidal input.

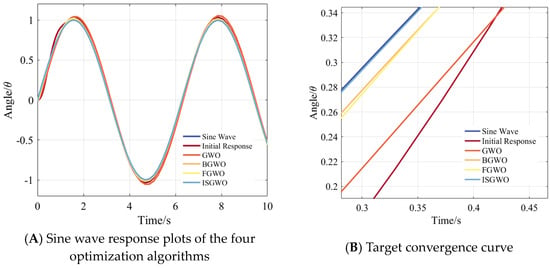

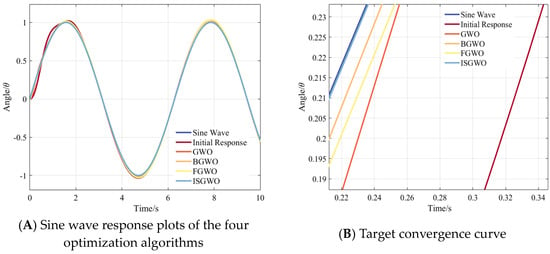

In Figure 17A, compared with the initial response, every optimization algorithm tracks the input sinusoid better. FGWO and ISGWO perform similarly, and ISGWO has the smallest stabilization error, which satisfies the optimization requirements.

Figure 17.

Parameter Optimization Comparison (Sine Wave Response).
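For sinusoidal inputs, the comparison rests on how closely the output follows the reference once transients have died out. The text does not state how the stabilization error is computed, so the sketch below shows one plausible measure: the RMS and peak tracking error over the last few periods of the reference. The function name, the 1 Hz reference and the attenuated output are assumptions for illustration only.

```python
import math


def steady_state_tracking_error(times, reference, output, period, n_periods=2):
    """Return (RMS, peak) of the tracking error over the final n_periods."""
    start = times[-1] - n_periods * period
    errors = [r - y
              for t, r, y in zip(times, reference, output) if t > start]
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    peak = max(abs(e) for e in errors)
    return rms, peak


# Illustrative signals: a 1 Hz reference and an output with 10% attenuation.
times = [k * 0.001 for k in range(5001)]  # 0 to 5 s
reference = [math.sin(2.0 * math.pi * t) for t in times]
output = [0.9 * r for r in reference]

rms, peak = steady_state_tracking_error(times, reference, output, period=1.0)
print(f"RMS error = {rms:.4f}, peak error = {peak:.4f}")
```

Restricting the window to whole periods at the end of the simulation keeps the start-up transient out of the measure, so the number reflects the tracking quality rather than the initial response.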

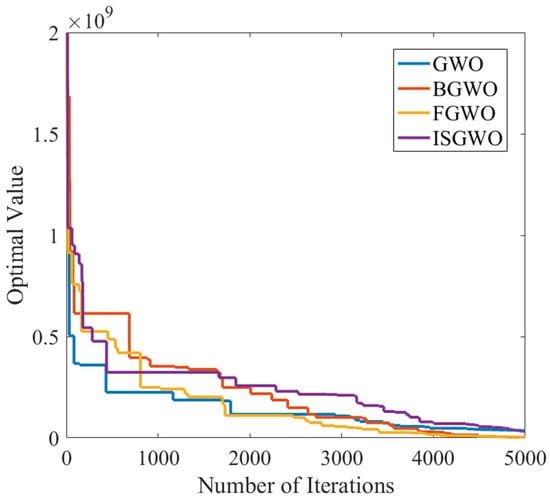

From Figure 18, it can be seen that GWO has the fastest convergence speed, but its final convergence value is larger; FGWO reaches convergence faster, but its convergence value is also larger. The algorithm in this paper has the smallest final convergence value and reaches a good convergence value in fewer iterations.

Figure 18.

Parameter Optimization Target convergence curve (Sine Wave Response).

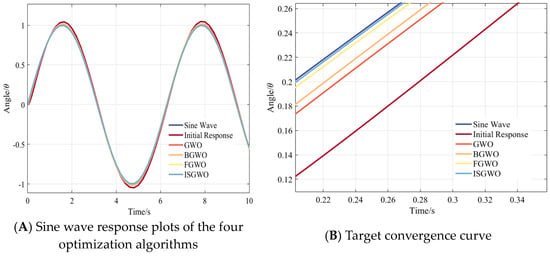

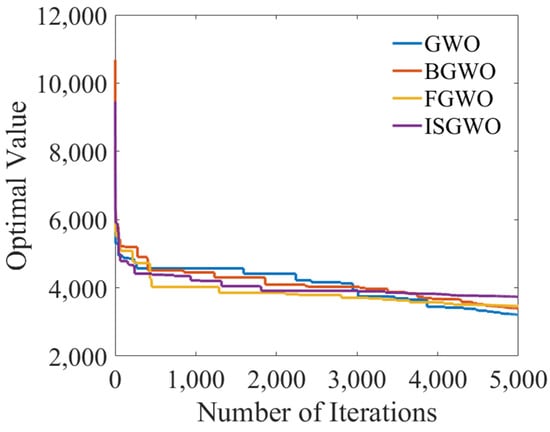

Similarly, we changed the input to a sinusoidal input to further verify the superiority of the ISGWO algorithm. In Figure 19A, the stabilization error of every optimization algorithm is reduced compared with the initial response; among them, ISGWO has the smallest stabilization error and converges faster, meeting the optimization requirements.

Figure 19.

Parameter Optimization Comparison (Sine Wave Response).

From Figure 20, it can be seen that GWO has the slowest convergence speed and the largest convergence value, and FGWO has a slower convergence speed but a smaller convergence value. The algorithm in this paper not only has the fastest convergence speed, but also the final convergence value is not much different from the minimum convergence value.

Figure 20.

Parameter Optimization Target convergence curve (Sine Wave Response).

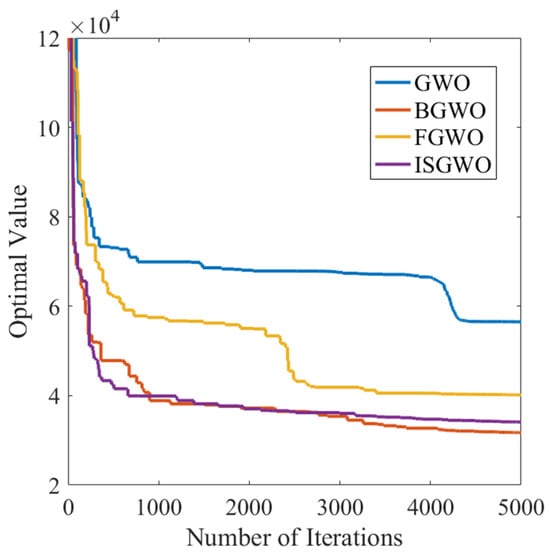

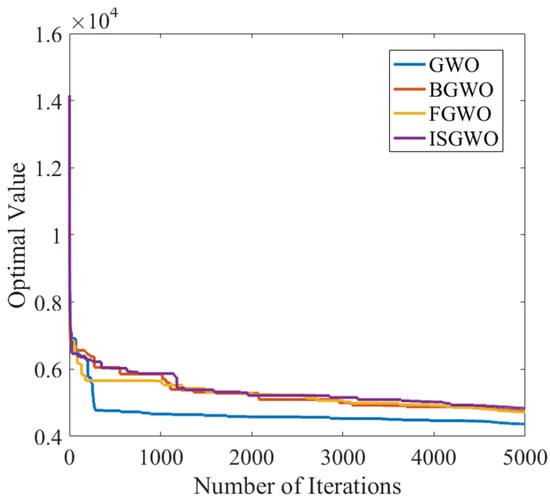

After changing the input to a sinusoidal input, we can conclude from Figure 21A that every optimization algorithm fits the input curve better than the initial response, and that GWO has a slower but improved initial response. ISGWO achieves the fastest convergence speed and the smallest stabilization error, so its response fits the input curve almost exactly and meets the optimization requirements.

Figure 21.

Parameter Optimization Comparison (Sine Wave Response).

As shown in Figure 22, GWO has the fastest convergence speed, but its late convergence is unstable, while BGWO converges more slowly but reaches a smaller final convergence value. The algorithm in this paper has the fastest convergence speed, and its convergence value reaches a small level within a small number of iterations.

Figure 22.

Parameter Optimization Target convergence curve (Sine Wave Response).

After replacing the input with a sinusoidal input, Figure 23A shows that every optimization algorithm tracks the input curve better than the initial response. The GWO algorithm fluctuates more, and the stabilization error of BGWO is larger. As shown in Figure 23B, ISGWO has the smallest stabilization error and less fluctuation, which satisfies the optimization requirements.

Figure 23.

Target convergence curve (Sine Wave Response).

From Figure 24, it can be seen that GWO and FGWO have the slowest convergence speeds, although their final convergence values are smaller. The algorithm proposed in this paper has the fastest convergence speed, and although its final convergence value is larger, it reaches an acceptable convergence value in fewer iterations. Across the different types of input, the algorithm in this paper converges faster, does not easily fall into local optima, and finds control parameters with a better control effect in a complex control environment.

Figure 24.

Parameter Optimization Target convergence curve (Sine Wave Response).

6. Conclusions

To optimize the synchronous control parameters of permanent magnet synchronous motors, we propose an improved sinusoidal gray wolf optimization algorithm (ISGWO). In the algorithm design, we introduce a position-update formula based on the improved sinusoidal to balance the exploration and exploitation abilities of the algorithm during the search for the optimal solution. To handle individuals that cross the boundary during optimization, we adopt a particle-crossing processing strategy based on the symmetry idea. It promptly updates the positions of the crossing individuals while retaining as much of the position information of the optimal individuals as possible, which improves the convergence speed toward the optimal solution. Finally, we use the differential cross-perturbation strategy to move the three gray wolf individuals with the worst fitness away from local optimal positions, which helps the algorithm escape local optima and improves its search accuracy.

To evaluate the performance and effectiveness of the algorithm, ISGWO was compared with three gray wolf-related algorithms (GWO, BGWO and FGWO) on the CEC2017 benchmark test functions. The experimental results show that ISGWO maintains a leading level in search accuracy, algorithmic performance and search speed, and achieves a balance among them. The algorithm was then applied to the parameter optimization problem of synchronous control, with the same three algorithms used for comparison. The experimental results show that, under the same conditions, ISGWO finds the smallest cost function value and the optimal parameter values the fastest, which demonstrates its effectiveness for synchronous control parameter optimization.

Although ISGWO contributes to algorithm design and control parameter optimization, it has some limitations. High convergence accuracy and short computation time can both be guaranteed for the control parameter optimization in this paper, but the algorithm is not universal enough across different types of functions.

In future work, we will try to optimize multiple parameters or multiple function indicators simultaneously. This places higher demands on the optimization algorithm and requires optimization of its process to improve convergence accuracy and computation time.

Author Contributions

Conceptualization, methodology, software and validation, S.S.; formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing and visualization, T.W.; supervision and project administration, B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wen, Z.; Xiong, B.; Wang, Y.; Ding, H.; Liu, W. Optimization Design of Remanufacturing Line-Starting Permanent Magnet Synchronous Motor Based on Ant Colony Algorithm. In The Proceedings of the 16th Annual Conference of China Electrotechnical Society; Springer: Singapore, 2022.

- He, F.; Ye, Q. A Bearing Fault Diagnosis Method Based on Wavelet Packet Transform and Convolutional Neural Network Optimized by Simulated Annealing Algorithm. Sensors 2022, 22, 1410.

- Hayyolalam, V.; Pourhaji Kazem, A.A. Black Widow Optimization Algorithm: A novel meta-heuristic approach for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2020, 87, 103249.

- Wang, J.; Li, Y.; Hu, G. Hybrid seagull optimization algorithm and its engineering application integrating Yin–Yang Pair idea. Eng. Comput. 2022, 38, 2821–2857.

- Makhadmeh, S.N.; Al-Betar, M.A.; Abasi, A.K.; Awadallah, M.A.; Abu Doush, I.; Alyasseri, Z.A.A.; Alomari, O.A. Recent Advances in Butterfly Optimization Algorithm, Its Versions and Applications. Arch. Comput. Methods Eng. 2023, 30, 1399–1420.

- Zhang, H.; Zhang, Y.; Niu, Y.; He, K.; Wang, Y. A Grey wolf optimizer combined with Artificial fish swarm algorithm for engineering design problems. Ain Shams Eng. J. 2024, 15, 102797.

- Sahayaraj, J.M.; Gunasekaran, K.; Verma, S.K.; Dhurgadevi, M. Energy efficient clustering and sink mobility protocol using Improved Dingo and Boosted Beluga Whale Optimization Algorithm for extending network lifetime in WSNs. Sustain. Comput. Inform. Syst. 2024, 43, 101008.

- Tripathy, S.N.; Kundu, S.; Pradhan, A.; Samal, P. Optimal design of a BLDC motor using African vulture optimization algorithm. e-Prime—Adv. Electr. Eng. Electron. Energy 2024, 7, 100499.

- Zhu, M.; Xu, W.; Ma, W. A novel prestress design method for cable-strut structures with Grey Wolf-Fruit Fly hybrid optimization algorithm. Structures 2024, 67, 106932.

- Liu, S.; Huang, Z.; Zhu, J.; Liu, B.; Zhou, P. Continuous blood pressure monitoring using photoplethysmography and electrocardiogram signals by random forest feature selection and GWO-GBRT prediction model. Biomed. Signal Process. Control 2024, 88, 105354.

- Li, Z.B.; Feng, X.Y.; Wang, L.; Xie, Y.C. DC–DC circuit fault diagnosis based on GWO optimization of 1DCNN-GRU network hyperparameters. Energy Rep. 2023, 9, 536–548.

- Dey, B.; Raj, S.; Mahapatra, S. A Variegated GWO Algorithm Implementation in Emerging Power Systems Optimization Problems. 2023. Available online: https://www.researchsquare.com/article/rs-1291323/v1 (accessed on 7 August 2024).

- Phan, Q.B.; Nguyen, T.T. Enhancing wind speed forecasting accuracy using a GWO-nested CEEMDAN-CNN-BiLSTM model. ICT Express 2024, 10, 485–490.

- Sharma, A.; Sharma, A.; Choudhary, S.; Pachauri, R.K.; Shrivastava, A.; Kumar, D. A review on artificial bee colony and it’s engineering applications. J. Crit. Rev. 2020, 7, 4097–4107.

- Deng, W.; Shang, S.; Cai, X.; Zhao, H.; Song, Y.; Xu, J. An improved differential evolution algorithm and its application in optimization problem. Soft Comput. 2021, 25, 5277–5298.

- Okoji, A.I.; Okoji, C.N.; Awarun, O.S. Performance evaluation of artificial intelligence with particle swarm optimization (PSO) to predict treatment water plant DBPs (haloacetic acids). Chemosphere 2023, 344, 140238.

- Dogruer, T. Grey Wolf Optimizer-Based Optimal Controller Tuning Method for Unstable Cascade Processes with Time Delay. Symmetry 2023, 15, 54–59.

- Xu, S.; Long, W. Improved grey Wolf Optimization Algorithm based on stochastic convergence factor and Differential Variation. Sci. Technol. Eng. 2018, 18, 5–9.

- Zhang, W.; Zhang, X.; Wang, C.; Sun, B. Path planning of substation inspection robot with improved grey Wolf Algorithm. J. Chongqing Univ. Technol. Nat. Sci. 2023, 37, 129–135.

- Liu, Y.; Zhu, H.; Fang, W. Uav path planning with improved grey Wolf Algorithm. Electro-Opt. Control 2023, 30, 1–7.

- Zheng, Q.G.; Yang, X.G.; Liu, D.; Li, X. Residual life prediction of lithium batteries by improved grey Wolf optimized least squares support Vector Machine. J. Chongqing Univ. 2022, 5, 1–13.

- Jitkongchuen, D.; Sukpongthai, W.; Thammano, A. Weighted distance grey wolf optimization with immigration operation for global optimization problems. In Proceedings of the 2017 18th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Kanazawa, Japan, 26–28 June 2017.

- Madhiarasan, M.; Deepa, S.N. Long-Term Wind Speed Forecasting using Spiking Neural Network Optimized by Improved Modified grey Wolf Optimization Algorithm. Int. J. Adv. Res. 2016, 4, 356–368.

- Raj, S.; Bhattacharyya, B. Reactive power planning by opposition-based grey wolf optimization method. Int. Trans. Electr. Energy Syst. 2018, 2, 551–558.

- Wang, L.; Wang, X.; Liu, K.; Sheng, Z. Multi-Objective Hybrid Optimization Algorithm Using a Comprehensive Learning Strategy for Automatic Train Operation. Energies 2019, 12, 1882.

- Deng, F.; Wei, Y.; Liu, Y. Improvement and application of grey Wolf optimization Algorithm. Stat. Decis. 2023, 11, 18–24.

- Masoumi, F.; Masoumzadeh, S.; Zafari, N.; Emami-Skardi, M.J. Optimal operation of single and multi-reservoir systems via hybrid shuffled grey wolf optimization algorithm (SGWO). Water Sci. Technol. Water Supply 2022, 22, 1663–1675.

- Wang, Z.; Cheng, F.; Juventus, L.S. Grey wolf optimization algorithm based on somersault foraging strategy. Appl. Res. Comput. 2021, 38, 4–6.

- She, W.; Ma, K.; Tian, Z.; Liu, W.; Kong, D. Optimal aiming point selection method for ground target based on improved Grey Wolf optimization algorithm. Fire Control Command. Control 2023, 48, 139–145.

- Li, Y.; Li, W.; Zhao, Y.; Liu, A. Grey wolf Algorithm based on Levy Flight and Random Walk Strategy. Comput. Sci. 2020, 47, 6–8.

- Liang, J.; Zhou, Z.; Liu, X. Robot path planning based on Particle swarm Optimization improved by grey Wolf Algorithm. Softw. Guide 2022, 21, 1–5.

- Huang, H.X.; Yu, G.; Cheng, S.; Li, C. Full coverage path planning of Bridge detection wall-climbing Robot based on Improved Gray Wolf optimization. J. Comput. Appl. 2024, 44, 966–971.

- Zhang, Z.; Xu, L.; Li, J.; Zhao, Y.; He, K. Algorithm based on improved wolves of the flexible job-shop scheduling research. J. Syst. Simul. 2023, 35, 10.

- Wang, L.; Wang, X.; Liu, G.; Li, Y. Improved Auto Disturbance Rejection Control Based on Moth Flame Optimization for Permanent Magnet Synchronous Motor. IEEJ Trans. Electr. Electron. Eng. 2021, 16, 1124–1135.

- Wang, T.; Sun, S. Adaptive multi-level differential coupling control strategy for dual-motor servo synchronous system based on global backstepping super-twisting control. IET Control Theory Appl. 2024, 18, 1892–1909.

- Patel, N.S.; Parihar, P.L.; Makwana, J.S. Parametric optimization to improve the machining process by using Taguchi method: A review. Mater. Today Proc. 2021, 47, 2709–2714.

- Wang, J.P. Optimal control of population with age distribution and weighted competition in polluted environment. J. Appl. Math. 2024, 37, 1014–1026.

- Zhuo, Z.; Yang, Y.; Fan, X.; Wang, X.; Huang, B.; Zhao, X. Research on particle boundary crossing processing by PSO algorithm in array pattern synthesis. Mod. Def. Technol. 2016, 44, 218–224. (In Chinese)

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).