Abstract

With many efficient solutions for a multi-objective optimization problem, this paper aims to cluster the Pareto Front in a given number of clusters K and to detect isolated points. K-center problems and variants are investigated with a unified formulation considering the discrete and continuous versions, partial K-center problems, and their min-sum-K-radii variants. In dimension three (or upper), this induces NP-hard complexities. In the planar case, common optimality property is proven: non-nested optimal solutions exist. This induces a common dynamic programming algorithm running in polynomial time. Specific improvements hold for some variants, such as K-center problems and min-sum K-radii on a line. When applied to N points and allowing to uncover points, K-center and min-sum-K-radii variants are, respectively, solvable in and time. Such complexity of results allows an efficient straightforward implementation. Parallel implementations can also be designed for a practical speed-up. Their application inside multi-objective heuristics is discussed to archive partial Pareto fronts, with a special interest in partial clustering variants.

1. Introduction

This paper is motivated by real-world applications of multi-objective optimization (MOO). Some optimization problems are driven by more than one objective function, with conflicting optimization directions. For example, one may minimize financial costs, while maximizing the robustness to uncertainties or minimizing the environmental impact [1,2]. In such cases, higher levels of robustness or sustainability are likely to induce financial over-costs. Pareto dominance, preferring one solution to another if it is better for all the objectives, is a weak dominance rule. With conflicting objectives, several non-dominated points in the objective space can be generated, defining efficient solutions, which are the best compromises. A Pareto front (PF) is the projection in the objective space of the efficient solutions [3]. MOO approaches may generate large sets of efficient solutions using Pareto dominance [3]. Summarizing the shape of a PF may be required for presentation to decision makers. In such a context, clustering problems are useful to support decision making to present a view of a PF in clusters, the density of points in the cluster, or to select the most central cluster points as representative points. Note than similar problems are of interest for population MOO heuristics such as evolutionary algorithms to archive representative points of a partial Pareto fronts, or in selecting diversified efficient solutions to process mutation or cross-over operators [4,5].

With N points in a PF, one wishes to define clusters while minimizing the measure of dissimilarity. The K-center problems, both in the discrete and continuous versions, define the cluster costs in this paper, covering the PF with K identical balls while minimizing the radius of the balls used. By definition, the ball centers belong to the PF for the discrete K-center version, whereas the continuous version is similar to geometric covering problems, without any constraint for the localization of centers. Furthermore, sum-radii or sum-diameter are min-sum clustering variants, where the covering balls are not necessarily identical. For each variant, one can also consider partial clustering variants, where a given percentage (or number) of points can be ignored in the covering constraints, which is useful when modelling outliers in the data.

The K-center problems are NP-hard in the general case, [6] but also for the specific case in using the Euclidean distance [7]. This implies that K-center problems in three-dimensional (3D) PF are also NP-hard, with the planar case being equivalent to an affine 3D PF. We consider the case of two-dimensional (2D) PF in this paper, focusing on the polynomial complexity results. It as an application to bi-objective optimization, the 3D PF and upper dimensions are shown as perspectives after this work. Note that 2D PF are a generalization of one-dimensional (1D) cases, where polynomial complexity results are known [8,9]. A preliminary work proved that K-center clustering variants in a 2D PF are solvable in polynomial time using a Dynamic Programming (DP) algorithm [10]. This paper improves these algorithms for these variants, with an extension to min-sum clustering variants, partial clustering, and Chebyshev and Minkowski distances. The properties of the DP algorithms are discussed for efficient implementation, including parallelization.

This paper is organized as follows. The considered problems are defined formally with unified notations in Section 2. In Section 3, related state-of-the-art elements are discussed. In Section 4 and Section 5, intermediate results and specific complexity results for sub-cases are presented. In Section 6, a unified DP algorithm with a proven polynomial complexity is designed. In Section 7, specific improvements are presented. In Section 8, the implications and applications of the results of Section 5, Section 6 and Section 7 are discussed. In Section 9, our contributions are summarized, with a discussion of future research directions.

2. Problem Statement and Notation

In this paper, integer intervals are denoted as . Let a set of N elements of , such that for all , defining the binary relations for all with

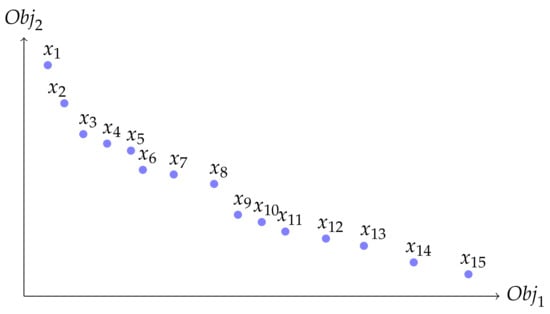

These hypotheses on E define 2D PF considering the minimization of two objectives [3,11]. Such configuration is illustrated by Figure 1. Without loss of generality, transforming objectives to maximize f into allows for the consideration of the minimization of two objectives. This assumption impacts the sense of the inequalities of . A PF can also be seen as a Skyline operator [12]. A 2D PF can be extracted from any subset of using an output-sensitive algorithm [13], or using any MOO approach [3,14].

Figure 1.

Illustration of a 2D Pareto Front (PF) with 15 points and the indexation implied by Proposition 1.

The results of this paper will be given using the Chebyshev and Minkowski distances, generically denoting the and norm-induced distance, respectively. For a given , the Minkowski distance is denoted , and given by the formula

The case corresponds to the Euclidean distance; it is a usual case for our application. The limit with defines the Chebyshev distance, denoted and given by the formula

Once a distance d is defined, a dissimilarity among a subset of points is defined using the radius of the minimal enclosing ball containing . Numerically, this dissimilarity function, denoted as , can be written as

A discrete variant considers enclosing balls with one of the given points as the center. Numerically, this dissimilarity function, denoted , can be written as

For the sake of having unified notations for common results and proofs, we define to indicate which version of the dissimilarity function is considered. (respectively, 1) indicates that the continuous (respectively, discrete) version is used, , thus denoting (respectively, ). Note that will be related to complexity results which motivated such a notation choice.

For each a subset of points and integer , we define , as the set of all the possible partitions of in K subsets. Continuous and discrete K-center are optimization problems with as a set of feasible solutions, covering E with K identical balls while minimizing the radius of the balls used

The continuous and discrete K-center problems in the 2D PF are denoted K--CP2DPF. Another covering variant, denoted min-sum-K-radii problems, covers the points with non-identical balls, while minimizing the sum of the radius of the balls. We consider the following extension of min-sum-K-radii problems, with being a real number

corresponds to the standard min-sum-K-radii problem. with the standard Euclidean distance is equivalent to the minimization of the area defined by the covering disks. For the sake of unifying notations for results and proofs, we define a generic operator to denote, respectively, sum-clustering and max-clustering. This defines the generic optimization problems

Lastly, we consider a partial clustering extension of problems (10), similarly to the partial p-center [15]. The covering with balls mainly concerns the extreme points, which make the results highly dependent on outliers. One may consider that a certain number of the points may be considered outliers, and that M points can be removed in the evaluation. This can be written as

Problem (11) is denoted K-M-⊕--BC2DPF. Sometimes, the partial covering is defined by a maximal percentage of outliers. In this case, if M is much smaller than N, we have , which we have to keep in mind for the complexity results. K-center problems, K--CP2DPF, are K-M-max--BC2DPF problems for all ; the value of does not matter for max-clustering, defining the same optimal solutions as . The standard min-sum-k-radii problem, equivalent to the min-sum diameter problem, corresponds to k-0-+--BC2DPF problems for discrete and continuous versions, k-M-+--BC2DPF problems consider partial covering for min-sum-k-radii problems.

3. Related Works

This section describes works related to our contributions, presenting the state-of-the-art for p-center problems and clustering points in a PF. For more detailed survey on the results for the p-center problems, we refer to [16].

3.1. Solving P-Center Problems and Complexity Results

Generally, the p-center problem consists of locating p facilities among possible locations and assigning n clients, called , to the facilities in order to minimize the maximum distance between a client and the facility to which it is allocated. The continuous p-center problem assumes that any place of location can be chosen, whereas the discrete p-center problem considers a subset o m potential sites, denoted , and distances for all and . Discrete p-center problems can be formulated with bipartite graphs, modeling that si unfeasibile for some assignments. In the discrete p-center problem defined in Section 2, points are exactly , and the distances are defined using a norm, so that triangle inequality holds for such variants.

P-center problems are NP-hard [6,17]. Furthermore, for all , any -approximation for the discrete p-center problem with triangle inequality is NP-hard [18]. Two approximations were provided for the discrete p-center problem running in time and in time, respecitvely [19,20]. The discrete p-center problem in with a Euclidean distance is also NP-hard [17]. Defining binary variables and with if and only if the customer i is assigned to the depot j, and if and only if location is chosen as a depot, the following Integer Linear Programming (ILP) formulation models the discrete p-center problem [21]

Constraints (12b) are implied by a standard linearization of the min–max original objective function. Constraint (12c) fixes the number of open facilities to p. Constraints (12d) assign each client to exactly one facility. Constraints (12e) are necessary to induce that any considered assignment implies that facility j is open with . Tighter ILP formulations than (12) were proposed, with efficient exact algorithms relying on the IP models [22,23]. Exponential exact algorithms were also designed for the continuous p-center problem [24,25]. An -time algorithm was provided for the continuous Euclidean p-center problem in the plane [26]. An -time algorithm is available for the continuous p-center problem in under Euclidean and -metric [27].

Specific cases of p-center problems are solvable in polynomial time. The continuous 1-center problem is exactly the minimum covering ball problem, which has a linear complexity in . Indeed, a “prune and search” algorithm finds the optimum bounding sphere and runs in linear time if the dimension is fixed as a constant [28]. In dimension d, its complexity is in time, which is impractical for high-dimensional applications [28]. The discrete 1-center problem is solved in time , using furthest-neighbor Voronoi diagrams [29]. The continuous and planar 2-center problem is solved in randomized expected time [30,31]. The discrete and planar 2-center problem is solvable in time [32].

1D p-center problems, or those with equivalent points that are located in a line, have specific complexity results with polynomial DP algorithms. The discrete 1D k-center problem is solvable in time [33]. The continuous and planar k-centers on a line, finding k disks with centers on a given line l, are solvable in polynomial time, in time in the first algorithm by [29], and in time and space in the improved version provided by [34]. An intensively studied extension of the 1D sub-cases is the p-center in a tree structure. The continuous p-center problem is solvable in time in a tree structure [7]. The discrete p-center problem is solvable in time in a tree structure [35].

Rectilinear p-center problems, using the Chebyshev distances, were less studied. Such distance is useful for complexity results; however, it has fewer applications than Euclidean or Minkowski norms. For the planar and rectangular 1-center and 2-center problems, algorithms are available for the 1-center problem, and such 3-center problems can be solved in time [36]. In a general dimension d, continuous and discrete versions of rectangular p-center problems are solvable in and running time, respectively. Specific complexity results for rectangular 2-center problems are also available [37].

3.2. Solving Variants of P-Center Problems and Complexity Results

Variants of p-center problems were studied less intensively than the standard p-center problems. The partial variants were introduced in 1999 by [15], whereas a preliminary work in 1981 considered a partial weighted one-center variant and a DP algorithm to solve it running in time [38]. The partial discrete p-center can formulated as an ILP starting from the formulation provided by [21] as written in (12). Indeed, considering that points can be uncovered, constraints (12.4) become inequalities for all and the maximal number of unassigned points is set to , adding one constraint . Similarly, the sum-clustering variants K-M-+--BC2DPF can be written as the following ILP

In this ILP formulation, binary variables are defined such that if and only if the points and are assigned in the same cluster, with being the discrete center. Continuous variables denote the powered radius of the ball centered in , if is chosen as a center, and otherwise. Constraint (13b) is a standard linearization of the non-linear objective function. indicates that if point is chosen as the center, then this implies with (13c) that K such variables are nonzero, and with (13f) that a nonzero variable implies that the corresponding is not null. (13d) and (13e) allow the extension with partial variants, as discussed before.

Min-sum radii or diameter problems were rarely studied. However, such objective functions are useful for meta-heuristics to break some “plateau” effects [39]. Min-sum diameter clustering is NP-hard in the general case and polynomial within a tree structure [40]. The NP-hardness is also proven, even in metrics induced by weighted planar graphs [41]. Approximation algorithms were studied for min-sum diameter clustering. A logarithmic approximation with a constant factor blowup in the number of clusters was provided by [42]. In the planar case with Euclidean distances, a polynomial time approximation scheme was designed [43].

3.3. Clustering/Selecting Points in Pareto Frontiers

Here, we summarize the results related to the selection or the clustering of points in PF, with applications for MOO algorithms. Polynomial complexity resulting in the use of 2D PF structures is an interesting property; clustering problems have a NP-hard complexity in general [17,44,45].

To the best of our knowledge, no specific work focused on PF sub-cases of k-center problems and variants before our preliminary work [10]. A Distance-Based Representative Skyline with similarities to the discrete p-center problem in a 2D PF may not be fully available in the Skyline application, which makes a significant difference [46,47]. The preliminary results proved that K--CP2DPF is solvable in time using additional memory space [10]. Partial extensions and min-sum-k-radii variants were not considered for 2D PF. We note that the 2D PF case is an extension of the 1D case, with 1D cases being equivalent to the cases of an affine 2D PF. In the study of complexity results, a tree structure is usually a more studied extension of 1D cases. The discrete k-center problem on a tree structure, and thus the 1D sub-case, is solvable in time [33]. 3F PF cases are NP-complete, as already mentioned in the introduction, this being a consequence of the NP-hardness of the general planar case.

Maximization of the quality of discrete representations of Pareto sets was studied with the hypervolume measure in the Hypervolume Subset Selection (HSS) problem [48,49]. The HSS problem is known to be NP-hard in dimension 3 (and greater dimensions) [50]. HSS is solvable with an exact algorithm in and a polynomial-time approximation scheme for any constant dimension d [50]. The 2D case is solvable in polynomial time with a DP algorithm with a complexity in time and space [49]. The time complexity of the DP algorithm was improved in by [51], and in by [52].

The selection of points in a 2D PF, maximizing the diversity, can also be formulated using p-dispersion problems. Max–Min and Max-Sum p-dispersion problems are NP-hard problems [53,54]. Max–Min and Max-Sum p-dispersion problems are still NP-hard problems when distances fulfill the triangle inequality [53,54]. The planar (2D) Max–Min p-dispersion problem is also NP-hard [9]. The one-dimensional (1D) cases of Max–Min and Max-Sum p-dispersion problems are solvable in polynomial time, with a similar DP algorithm running in time [8,9]. Max–Min p-dispersion was proven to be solvable in polynomial time, with a DP algorithm running in time and space [55]. Other variants of p-dispersion problems were also proven to be solvable in polynomial time using DP algorithms [55].

Similar results exist for k-means, k-medoid and k-median clustering. K-means is NP-hard for 2D cases, and thus for 3D PF [44]. K-median and K-medoid problems are known to be NP hard in dimension 2, since [17], where the specific case of 2D PF was proven to be solvable in time with DP algorithms [11,56]. The restriction of k-means to 2D PF would be also solvable in time with a DP algorithm if a conjecture was proven [57]. We note that an affine 2D PF is a line in , where clustering is equivalent to 1D cases. 1D k-means were proven to be solvable in polynomial time with a DP algorithm in time and space. This complexity was improved for a DP algorithm in time and space [58]. This is thus the complexity of K-means in an affine 2D PF.

4. Intermediate Results

4.1. Indexation and Distances in a 2D PF

Lemma 1.

≼ is an order relation, and ≺ is a transitive relation

Proposition 1 implies an order among the points of E, for a re-indexation in time

Proposition 1

(Total order). Points can be re-indexed in time, such that

Proof.

We index E such that the first coordinate is increasing. This sorting procedure runs in time. Let , with . We thus have . Having implies that . and is by definition . □

The re-indexation also implies monotonic relations among distances of the 2D PF

Lemma 2.

We suppose that E is re-indexed as in Proposition 1. Letting d be a Minkowski, Euclidean or Chebyshev distance, we obtain the following monotonicity relations

Proof.

We first note that the equality cases are trivial, so we can suppose that in the following proof. We prove the propriety (17); the proof of (18) is analogous.

Let . We note that , and . Proposition 1 re-indexation ensures and . With ,

With ,

Thus, for any , and also

. Hence, the result is proven for Euclidean, Minkowski and Chebyshev distances. □

4.2. Lemmas Related to Cluster Costs

This section provides the relations needed to compute or compare cluster costs. Firstly, one notes that the computation of cluster costs is easy in a 2D PF in the continuous clustering case.

Lemma 3.

Let , such that . Let i (resp ) be the minimal (respective maximal) index of points of P with the indexation of Proposition 1. Then, can be computed with .

To prove the Lemma 3, we use the Lemmas 4 and 5.

Lemma 4.

Let , such that . Let i (resp ) the minimal (resp maximal) index of points of P with the indexation of Proposition 1. We denote with the midpoint of . Then, using a Minkowski or Chebyshev distance d, we have for all : .

Proof of Lemma 4:

We denote with , with the equality being trivial as points are on a line and d is a distance. Let . We calculate the distances using a new system of coordinates, translating the original coordinates such that O, is a new origin (which is compatible with the definition of Pareto optimality). and have coordinates and in the new coordinate system, with and if a Minkowski distance is used, otherwise it is for the Chebyshev distance. We use to denote the coordinates of x. implies that and , i.e., and , which implies , using Minkowski or Chebyshev distances. □

Lemma 5.

Let such that . Let i (respective ) be the minimal (respective maximal) index of points of P with the indexation of Proposition 1. We denote, using , the midpoint of . Then, using a Minkowski or Chebyshev distance d, we have for all : .

Proof of Lemma 5:

As previously noted, let . Let . We have to prove that or . If we suppose that , this implies that . Then, having implies with Lemma 2. □

Proof of Lemma 3:

We first note that , using the particular point . Using Lemma 4, , and thus with . Reciprocally, for all , using Lemma 5, and thus . This implies that . □

Lemma 6.

Let such that . Let i (respective ) the minimal (respective maximal) index of points of P.

Proof.

Let . We denote , such that . Applying Lemma 2 to , for all , we have . Then

Lastly, we notice that extreme points are not optimal centers. Indeed, with Proposition 2, i.e., i, is not optimal in the last minimization, dominated by . Similarly, is dominated by . □

Lemma 7.

Let . Let . We have .

Proof.

Using the order of Proposition 1, let i (respectively, ) the minimal index of points of P (respectively, ) and let j (respectively, ) the maximal indexes of points of P (respectively, ). is trivial using Lemmas 2 and 3. To prove , we use , and Lemmas 2 and 6

□

Lemma 8.

Let . Let , such that . Let i (respectively, ) the minimal (respectively, maximal) index of points of P. For all , such that , we have

Proof.

Let such that . With Lemma 7, we have . is trivial using Lemma 3, so that we have to prove .

□

4.3. Optimality of Non-Nested Clustering

In this section, we prove that non-nested clustering property, the extension of interval clustering from 1D to 2D PF, allows the computation of optimal solutions, which will be a key element for a DP algorithm. For (partial) p-center problems, i.e., K-M-max--BC2DPF, optimal solutions may exist without fulfilling the non-nested property, whereas for K-M-+--BC2DPF problems, the nested property is a necessary condition to obtaining an optimal solution.

Lemma 9.

Let ; let . There is an optimal solution of 1-M-⊕--BC2DPF on the shape with .

Proof.

Let represent an optimal solution of 1-M-⊕--BC2DPF, let be the optimal cost, and with and . Let i (respectively, ) be the minimal (respectively maximal) index of using order of Proposition 1. , so Lemma 8 applies and . , thus defines an optimal solution of 1-M-⊕--BC2DPF. □

Proposition 2.

Let be a 2D PF, re-indexed with Proposition 1. There are optimal solutions of K-M-⊕--BC2DPF using only clusters on the shape .

Proof.

We prove the results by the induction on . For , Lemma 9 gives the initialization.

Let us suppose that and the Induction Hypothesis (IH) that Proposition 2 is true for K-M-⊕--BC2DPF. Let be an optimal solution of K-M-⊕--BC2DPF; let be the optimal cost. Let be the subset of the non-selected points, , and be the K subsets defining the costs, so that is a partition of E and . Let be the maximal index, such that , which is, necessarily, . We reindex the clusters , such that . Let i be the minimal index such that .

We consider the subsets , and for all . It is clear that is a partition of , and is a partition of E. For all , , so that (Lemma 7).

is a partition of E, and . is an optimal solution of K--⊕--BC2DPF. is an optimal solution of --⊕--BC2DPF, applied to points . Letting be the optimal cost of --⊕--BC2DPF, we have . Applying IH for of --⊕--BC2DPF to points , we have an optimal solution of --⊕--BC2DPF among on the shape . , and thus . is an optimal solution of K-M-⊕--BC2DPF in E using only clusters . Hence, the result is proven by induction. □

Proposition 3.

There is an optimal solution of K-M-⊕--BC2DPF, removing exactly M points in the partial clustering.

Proof.

Starting with an optimal solution of K-M-+--BC2DPF, let be the optimal cost, and let be the subset of the non-selected points, , and , the K subsets defining the costs, so that is a partition of E. Removing random points in , we have clusters such that, for all , , and thus (Lemma 7). This implies , and thus the clusters and outliers define and provide the optimal solution of K-M-⊕--BC2DPF with exactly M outliers. □

Reciprocally, one may investigate if the conditions of optimality in Propositions 2 and 3 are necessary. The conditions are not necessary in general. For instance, with , and the discrete function , ie , the selection of each pair of points defines an optimal solution, with the same cost as the selection of the three points, which do not fulfill the property of Proposition 3. Having an optimal solution with the two extreme points also does not fulfill the property of Proposition 2. The optimality conditions are necessary in the case of sum-clustering, using the continuous measure of the enclosing disk.

Proposition 4.

Let an optimal solution of K-M-+--BC2DPF be defined with as the subset of outliers, with , and as the K subsets defining the optimal cost. We therefore have

, in other words, exactly M points are not selected in π.

For each , defining and , we have .

Proof.

Starting with an optimal solution of K-M-+--BC2DPF, let be the optimal cost, and let be the subset of the non-selected points, , and be the K subsets defining the costs, so that is a partition of E. We prove and ad absurdum.

If , one may remove one extreme point of the cluster , defining . With Lemmas 2 and 3, we have , and . This is in contraction with the optimality of , , defining a strictly better solution for K-M-+--BC2DPF. is thus proven ad absurdum.

If is not fulfilled by a cluster , there is with . If , we have a better solution than the optimal one with and . If with , we have nested clusters and . We suppose that (otherwise, reasoning is symmetrical). We define a better solution than the optimal one with and . is thus proven ad absurdum. □

4.4. Computation of Cluster Costs

Using Proposition 2, only cluster costs are computed. This section allows the efficient computation of such cluster costs. Once points are sorted using Proposition 1, cluster costs can be computed in using Lemma 3. This makes a time complexity in to compute all the cluster costs for .

Equation (19) ensures that cluster costs can be computed in for all . Actually, Algorithm 1 and Proposition 5 allow for computations in once points are sorted following Proposition 1, with a dichotomic and logarithmic search.

Lemma 10.

Letting with . decreases before reaching a minimum , , and then increases for .

Proof: We define with and .

Let . Proposition 2, applied to i and any with and , ensures that g is decreasing. Similarly, Proposition 2, applied to and any , ensures that h is increasing.

Let . and , so that . A is a non-empty and bounded subset of , so that A has a maximum. We note that . and , so that and .

Let . and , using the monotony of and . and as . Hence, . This proves that is decreasing in .

and have to be coherent with the fact that .

Let . , so using the monotony of and .This proves that is increasing in .

Lastly, the minimum of f can be reached in l or in , depending on the sign of . If , there are two minimums . Otherwise, there is a unique minimum , , which decreases before increasing.

| Algorithm 1: Computation of |

| input: indexes , a distance d |

| output: the cost |

| define , , , , |

| while |

| ifthen and |

| else and |

| end while |

| return |

Proposition 5.

Let be N points of , such that for all , . The computing cost for any cluster has a complexity in time, using additional memory space.

Proof.

Let . Let us prove the correctness and complexity of Algorithm 1. Algorithm 1 is a dichotomic and logarithmic search; it iterates times, with each iteration running in time. The correctness and complexity of Algorithm 1 is a consequence of Lemma 10 and the loop invariant, which exists as a of minimum of , with , also having and . By construction in Algorithm 1, we have , and thus . This implies that , and thus , using Lemma 10. Similarly, we always obtain , and thus , , so that with Lemma 10. At the convergence of the dichotomic search, and is or ; therefore, the optimal value is . □

Remark 1.

Algorithm 1 improves the previously proposed binary search algorithm [10]. If it has the same logarithmic complexity, this leads to two times fewer calls of the distance function. Indeed, in the previous version, the dichotomic algorithm is computed at each iteration and to determine if is in the increasing or decreasing phase of . In Algorithm 1, the computations that are provided for each iteration are equivalent to the evaluation of only , computing and .

Proposition 5 can compute for all in . Now, we prove that the costs of all can be computed in time instead of using -independent computations. Two schemes are proposed, computing the lines of the cost matrix in time, computing for all for a given in Algorithm 2, and computing for all for a given in Algorithm 3.

Lemma 11.

Let , with . Let such that .

(i) If , then there is , such that , with .

(ii) If , then there is , such that , with .

Proof.

We prove ; we suppose that and we prove that, for all , so that either c is an argmin of the minimization, and the superior minimum to c. is similarly proven. Let . , which implies and, with Lemma 10, is decreasing in , i.e., for all We thus have , and, with lemma 2, . Thus, . With lemma 2, . implies that , and then . Thus , and . □

Proposition 6.

, Let be N points of , such that for all , . Algorithm 2 computes for all for a given in time using memory space.

Proof.

The validity of Algorithm 2 is based on Lemmas 10 and 11: once a discrete center c is known for a , we can find a center of with , and Lemma 10 gives the stopping criterion to prove a discrete center. Let us prove the time complexity; the space complexity is obviously within memory space. In Algorithm 2, each computation is in time; we have to count the number of calls for this function. In each loop in , one computation is used for the initialization; the total number of calls for this initialization is . Then, denoting, with , the center found for , we note that the number of loops is . Lastly, there are less that computations calls ; Algorithm 2 runs in time. □

| Algorithm 2: Computing for all for a given |

| Input: indexed with Proposition 1, , , N points of , |

| Output: for all , |

| define vector v with for all |

| define , |

| for to N |

| while |

| end while |

| end for |

| return vector v |

Proposition 7.

Let be N points of , such that for all , . Algorithm 3 computes for all for a given in time, using memory space.

| Algorithm 3: Computing for all for a given |

| Input: indexed with Proposition 1, , , N points of , |

| Output: for all , |

| define vector v with for all |

| define , |

| for to 1 with increment |

| while |

| end while |

| end for |

| return vector v |

Proof.

The proof is analogous with Proposition 6, applied to Algorithm 2. □

5. Particular Sub-Cases

Some particular sub-cases have specific complexity results, which are presented in this section.

5.1. Sub-Cases with

We first note that sub-cases show no difference between 1-0-+--BC2DPF and 1-0-max--BC2DPF problems, defining the continuous or the discrete version of 1-center problems. Similarly, 1-M-+--BC2DPF and 1-M-max--BC2DPF problems define the continuous or the discrete version of partial 1-center problems. 1-center optimization problems have a trivial solution; the unique partition of E in one subset is E. To solve the 1-center problem, it is necessary to compute the radius of the minimum enclosing disk covering all the points of E (centered in one point of E for the discrete version). Once the points are re-indexed with Proposition 1, the cost computation is in time for the continuous version using Proposition 3, and in time for the discrete version using Proposition 5. The cost of the re-indexation in forms the overall complexity time with such an approach. One may improve this complexity without re-indexing E.

Proposition 8.

Let , a subset of N points of , such that for all , . 1-0-⊕--BC2DPF problems are solvable in time using additional memory space.

Proof.

Using Lemma 3 or Lemma 6, computations of are, at most, in once the extreme elements following the order ≺ have been computed. Computation of the extreme points is also seen in , with one traversal of the elements of E, storing only the current minimal and maximal element with the order relation ≺. Finally, the complexity of one-center problems is in linear time. □

Proposition 9.

Let , let a subset of N points of , such that for all , . The continuous partial 1-center, i.e., 1-M-⊕--BC2DPF problems, is solvable in time. The discrete partial 1-center, i.e., 1-M-⊕--BC2DPF problems, is solvable in time.

Proof.

Using Proposition 2, -center problems are computed equivalently: .

For the continuous and the discrete case, re-indexing the whole PF with Proposition 1 runs in time, leading to M computations in or time, which are dominated by the complexity of re-indexing. The time complexity for both cases are highest in . In the continuous case, i.e., , one requires only the M minimal and maximal points with the total order ≺ to compute the cluster costs using. If , one may use one traversal of E, storing the current m minimal and extreme points, which has a complexity in . Choosing among the two possible algorithms, the time complexity is in . □

5.2. Sub-Cases with

Specific cases with define two clusters, and one separation as defined in Proposition 2. For these cases, specific complexity results are provided, enumerating all the possible separations.

Proposition 10.

Let N points of , , such that for all , . 2-0-⊕--BC2DPF problems are solvable in time, using additional memory space.

Proof.

Using Proposition 2, optimal solutions exist, considering two clusters: and . One enumerates the possible separations . First, the re-indexation phase runs in time, which will be the bottleneck for the time complexity. Enumerating the (N-1) values and storing the minimal value induces (N-1) computations in time for the continuous case , and uses additional memory space: the current best value and the corresponding index. Considering the discrete case, one uses additional memory space , to maintain the time complexity result. □

One can extend the previous complexity results with the partial covering extension.

Proposition 11.

Let be a subset of N points of , such that for all , . 2-M-⊕--BC2DPF problems are solvable in time and additional memory space, or in time and additional memory space.

Proof.

After the re-indexation phase running in time), Proposition 2 ensures that there is an optimal solution for 2-M-⊕--BC2DP, removing the first indexes, the last indexes, and points between the two selected clusters, with . Using Proposition 3, there is an optimal solution, exactly defining the M outliers, so that we can consider that . Denoting i as the last index of the first cluster, the first selected cluster is ; the second one is . We have and i.e., . We denote, with X, the following feasible

Computing an optimal solution for 2-M-⊕--BC2DP brings the following enumeration

In the continuous case (ie ), we use computations to enumerate the possible , and computations to enumerate the possible i once are defined. With cost computations running in time, the computation of (20) by enumeration runs in time, after the re-indexation in time. This induces the time complexity announced for . This computation uses additional memory space, storing only the best current solution and its cost; this is also the announced memory complexity .

In the discrete case (i.e., ), we use computations to enumerate the possible , and computations to enumerate the possible i once are fixed. This uses additional memory space, and the total time complexity is . To decrease the time complexity, one can use two vectors of size N to store a given , for which the cluster costs and are given by Algorithms 2 and 3, so that the total time complexity remains in with an additional memory space. These two variants, using or additional memory space, induce the time complexity announced in Proposition 11. □

5.3. Continuous Min-Sum K-Radii on A Line

To the best of our knowledge, the 1D continuous min-sum k-radii and the min-sum diameter problems were not previously studied. The specific properties hold, as proven in Lemma 12. This allows a time complexity of .

Lemma 12.

Let be N points in a line of , indexed such that for all , . The min-sum k-radii in a line, K-0-+--BC2DPF, is equivalent to selecting the highest values of the distance among consecutive points, with the extremity of such segments defining the extreme points of the disks.

Proof.

Let a feasible and non-nested solution of K-0-+--BC2DPF be defined with clusters such that . Using the alignment property, we can obtain

Reciprocally, this is equivalent to considering K-0-+--BC2DPF or the maximization of the sum of sa a different distance among consecutive points. The latter problem is just computing the highest distances among consecutive points. □

Proposition 12.

Let be a subset of N points of on a line. K-0-+--BC2DPF, the continuous min-sum-k-radii, is solvable in time and memory space.

Proof.

Lemma 12 ensures the validity of Algorithm 4, determining the highest values of the distance among consecutive points. The additional memory space in Algorithm 4 is in O(N), computing the list of consecutive distances. Sorting the distances and the re-indexation both have a time complexity in . □

| Algorithm 4: Continuous min-sum K-radii on a line |

| Input:, N points of on a line |

| re-index E using Proposition 1 |

| initialize vector v with for |

| initialize vector w with for |

| sort vector v with increasing |

| for the elements of v with the maximal value , store the indexes |

| i in w |

| sort w in the increasing order |

| initialize , , . |

| for in the increasing order |

| add in |

| end for |

| add in |

| return the optimal cost and the partition of selected clusters |

6. Unified DP Algorithm and Complexity Results

Proposition 2 allows the design of a common DP algorithm for p-center problems and variants, and to prove polynomial complexities. The key element is to design Bellman equations.

Proposition 13

(Bellman equations). Defining as the optimal cost of k-m-⊕--BC2DPF among points for all , and , we have the following induction relations

Proof. (21) is the standard 1-center case. (22) is a trivial case, where it is possible to fill the clusters with singletons, with a null and optimal cost. (23) is a recursion formula among the partial 1-center cases, an optimal solution of 1-m-⊕--BC2DPF among points , selecting the point , and the optimal solution is cluster with Proposition 3, with a cost or an optimal solution of 1--⊕--BC2DPF if the point is not selected. (24) is a recursion formula among the k-0-⊕--BC2DPF cases among points ; when generalizing the ones from [10] for the powered sum-radii cases, the proof is similar. Let and . Let , when selecting an optimal solution of k-0-⊕--BC2DPF among points indexed in , and adding cluster , a feasible solution is obtained for k-0-⊕--BC2DPF among the points indexed in with a cost . This last cost is lower than the optimal cost, thus . Such inequalities are valid for all ; this implies

Let indexes, such that defines the optimal solution of k-0-⊕--BC2DPF among the points indexed in ; its cost is . Necessarily, defines the optimal solution of -0-⊕--BC2DPF among the points indexed in . On the contrary, a better solution for would be constructed, adding the cluster . We thus have . Combined with (26), this proves .

Lastly, we prove (25). Let . ; each solution of k--⊕--BC2DPF among the points indexed in defines a solution of k-m-⊕--BC2DPF among the points indexed in , with the selecting point as an outlier. Let , with the cost of k-m-⊕--BC2DPF among the points indexed in ; necessarily selecting the point i, we obtain . is defined by a cluster and an optimal solution of k-m-⊕--BC2DPF among the points indexed in , so that . We thus have

Reciprocally, let indexes, such that defines an optimal solution of k-m-⊕--BC2DPF among the points indexed in ; its cost is . If , then and (27) is an equality. If , then defines an optimal solution of k--⊕--BC2DPF among the points indexed in ; its cost is . We thus have , and (27) is an equality. Finally, (25) is proven by disjunction. □

Bellman equations of Proposition 13 can compute the optimal value by induction. A first method is a recursive implementation of the Bellman equations to compute the cost and store the intermediate computations in a memoized implementation. An iterative implementation is provided in Algorithm 5, using a defined order for the computations of elements . An advantage of Algorithm 5 is that independent computations are highlighted for a parallel implementation. For both methods computing the optimal cost , backtracking operations in the DP matrix with computed costs allow for recovery of the affectation of clusters and outliers in an optimal solution.

In Algorithm 5, note that some useless computations are not processed. When having to compute , computations with are useless. will also not be called. Generally, triangular elements with are useless. The DP matrix is not fully constructed in Algorithm 5, removing such useless elements.

| Algorithm 5: unified DP algorithm for K-M-⊕--BC2DPF | ||

| ||

| sort E following the order of Proposition 1 | ||

| initialize matrix O with for all | ||

| compute for all and store in | ||

| for to N | ||

| compute and store for all | ||

| compute for all | ||

| for to | ||

| compute | ||

| for to | ||

| compute | ||

| end for | ||

| end for | ||

| delete the stored for all | ||

| end for | ||

| initialize , , | ||

| for to 1 with increment | ||

| compute | ||

| compute and store for all | ||

| find such that | ||

| add in | ||

| delete the stored for all | ||

| end for | ||

| return the optimal cost and the selected clusters |

Theorem 1.

Let a subset of N points of , such that for all , . When applied to the 2D PF E for , the K-M-⊕--BC2DPF problems are solvable to optimality in polynomial time using Algorithm 5, with a complexity in time and space.

Proof.

The validity of Algorithm 5 is proven by induction; each cell of the DP matrix is computed using only cells that were previously computed to optimality. Once the required cells are computed, a standard backtracking algorithm is applied to compute the clusters. Let us analyze the complexity. Let . The space complexity is in , along with the size of the DP matrix, with the intermediate computations of cluster costs using, at most, O(N) memory space, only remembering such vectors due to the deleting operations. Let us analyze the time complexity. Sorting and indexing the elements of E (Proposition 1) has a time complexity in . Once costs are computed and stored, each cell of the DP matrix is computed, at most, in time using Formulas (21)–(24). This induces a total complexity in time. The cluster costs are computed using N times Algorithm 3 and one time Algorithm 2; this has a time complexity in , which is negligible compared to the time computation of the cells of the DP matrix. The K backtracking operations requires a time computation of the costs for all and a given i, M operations in time to compute and operations in time to compute . Finally, the backtracking operations requires time, which is negligible compared to the previous computation in time. □

7. Specific Improvements

This section investigates how the complexity results of Theorem 2 may be improved, and how to speed up Algorithm 5, from a theoretical and a practical viewpoint.

7.1. Improving Time Complexity for Standard and Partial P-Center Problems

In Algorithm 5, the bottleneck for complexity are the computations , for , , . When , it is proven that such a minimization can be processed in instead of for the naive enumeration, leading to the general complexity results. This can improve the time complexity in the p-center cases.

Lemma 13.

Let and . The application is decreasing.

Proof.

Let . For each , any feasible solution of k--⊕--BC2DPF in is a feasible solution of k-m-⊕--BC2DPF, with the partial versions defined by problems (11). An optimal solution of k--⊕--BC2DPF is feasible for k--⊕--BC2DPF, it implies . □

Lemma 14.

Let and . The application is increasing.

Proof.

We yfirst note that the case is implied by the Lemma 7, so that we can suppose in the following, that . Let , and . Let be an optimal solution of k-m-⊕--BC2DPF among the points indexed in ; its cost is . Let , the subset of the non-selected points, , and with the k subsets defining the costs, so that is a partition of E and . If , then using Lemma 13, which is the result. We suppose to end the proof that and re-index the clusters such that . We consider the clusters . With X, a partition of is defined, with, at most, M outliers, so that it defines a feasible solution of the optimization problem, defining as a cost . Using Lemma 7, , so that . □

Lemma 15.

Let , , . Let . There is , such that is decreasing for , and then increases for . For , and for , .

Proof.

Similarly to the proof of Lemma 10, the following applications are monotone:

decreases with Lemma 7,

increases for all k with Lemma 14. □

Proposition 14.

Let . Let . Once the values in the DP matrix of Algorithm 2 are computed, Algorithm 6 computes calling cost computations . This induces a time complexity in using straightforward computations of the cluster costs with Propositions 3 and 5.

| Algorithm 6: Dichotomic computation of |

| input: indexes , , , ; |

| a vector v containing for all . |

| define , , |

| define , , |

| while |

| ifthen set and |

| else and |

| end while |

| return |

Proof.

Algorithm 6 is a dichotomic search based on Lemma 15, similarly to Algorithm 1, derived from Lemma 10. The complexity in Algorithm 6 is cost computations . In the discrete case, such computations run in time with Proposition 5, whereas it is in the continuous case with Lemma 3. In both cases, the final time complexity is given by . □

Computing in time instead of in the proof of Theorem 1 for p-center problem and variants, the complexity results are updated for these sub-problems.

Theorem 2.

Let be a subset of N points of , such that for all , . Whe napplied to the 2D PF E for , the K-M-max--BC2DPF problems are solvable to optimality in polynomial time using Algorithm 4, with a complexity in time and space.

Proof.

The validity of Algorithm 5 using Algorithm 6 inside is implied by the validity of Algorithm 6, proven in Proposition 14. Updating the time complexity with Proposition 14, the new time complexity for continuous K-center problems is seen in time instead of , as previously. For the discrete versions, using Proposition 14 with computations of discrete cluster costs with Proposition 5 induces a time complexity in . The complexity is decreased to , where the cluster costs are already computed and stored in Algorithm 5, and thus the computations of Algorithm 6 are seen in . tThisinduces the same complexity for discrete and continuous K-center variants. □

Remark 2.

For the standard discrete p-center, Theorem 2 improves the time complexity given in the preliminary paper [10], from to . Another improvement was given by Algorithm 1; the former computation of cluster costs has the same asymptotic complexity but requires two times more computations. tTis proportional factor is non negligible in practice.

7.2. Improving Space Complexity for Standard P-Center Problems

For standard p-center problems, Algorithm 5 has a complexity in memory space in , the size of the DP matrix. This section proves it is possible to reduce the space complexity into an memory space.

One can compute the DP matrix for k-centers “line-by-line”, with k increasing. This does not change the validity of the algorithm, with each computation using values that were previously computed to the optimal values. Two main differences occur compared to Algorithm 5. On one hand, the -center values use only k-center computations, and the computations with can be deleted once all the required k-center values are computed when having to compute only the K-center values, especially the optimal cost. On the other hand, the computations of cluster costs are not factorized, as in Algorithm 5; this does not make any difference in the continuous version, where Lemma 3 can to recompute cluster costs in time when needed, whereas recomputing each cost induces the computations running in for the discrete version with Algorithm 1.

The search order of operations slightly degrades the time complexity for the discrete variant, without inducing a change in the continuous variant. This allows only for computations of the optimal value; another difficulty is that the backtracking operations, as written in Algorithm 5, require storage of the whole stored values of the whole matrix. The issue is obtaining alternative backtracking algorithms that allow the computation of an optimal solution of the standard p-center problems using only the optimal value provided by the DP iterations, and with a complexity of, at most, time and memory space. Algorithms 7 and 8 have such properties.

| Algorithm 7: Backtracking algorithm using memory space |

| input: - to specify the clustering measure; |

| - N points of a 2D PF, , sorted such that for all , ; |

| - the number of clusters; |

| - , the optimal cost of K--CP2DPF; |

| output: an optimal partition of K--CP2DPF. |

| initialize , , , a set of sub-intervals of . |

| for to 2 with increment |

| set |

| whiledoend while |

| add in |

| end for |

| add in |

| return |

| Algorithm 8: Backtracking algorithm using memory space |

| input: - to specify the clustering measure; |

| - N points of a 2D PF, , sorted such that for all , ; |

| - the number of clusters; |

| - , the optimal cost of K--CP2DPF; |

| output: an optimal partition of K--CP2DPF. |

| initialize , , , a set of sub-intervals of . |

| for to K with increment |

| set |

| whiledoend while |

| add in |

| set |

| end for |

| add in |

| return |

Lemma 16.

Let . Let , sorted such that for all , . For the discrete and continuous K-center problems, the indexes given by Algorithm 7 are lower bounds of the indexes of any optimal solution. Denoting , the indexes given by Algorithm 7, and , the indexes of an optimal solution, we have, for all

Proof.

This lemma is proven a decreasing induction on k, starting from . The case is furnished by the first step of Algorithm 4, and decreaswa with Lemma 7. WIth a given k, , is implied by Lemma 2 and . □

Algorithm 8 is similar to Algorithm 7, with iterations increasing the indexes of the points of E. The validity is similarly proven, and this provides the upper bounds for the indexes of any optimal solution of K-center problems.

Lemma 17.

Let . Let , sorted such that for all , . For K-center problems, the indexes given by Algorithm 8 are upper bounds of the indexes of any optimal solution. Denoting , the indexes given by Algorithm 8, and , the indexes of an optimal solution, we have, for all .

Proposition 15.

Once the optimal cost of p-center problems are computed, Algorithms 7 and 8 compute an optimal partition in time using additional memory space.

Proof.

We consider the proof for Algorithm 7, which is symmetrical for Algorithm 8. Let be the optimal cost of K-center clustering with f. Let be the indexes given by Algorithm 7. Through this construction, all the clusters defined by the indexes for all verify . Let be the cluster defined by ; we have to prove that to conclude the optimality of the clustering defined by Algorithm 4. For an optimal solution, let be the indexes defining this solution. Lemma 16 ensures that , and thus Lemma 7 assures . Analyzing the complexity, Algorithm 7 calls for a maximum of times the clustering cost function, without requiring stored elements; the complexity is in time. □

Remark 3.

Finding the biggest cluster with an extremity given and a bounded cost can be acheived by a dichotomic search. Rhis would induce a complexity in . To avoid the separate case and , Algorithms 7 and 8 provide a common algorithm running in time, which is enough for the following complexity results.

The previous improvements, written in Algorithm 9, allow for new complexity results with a memory space for K-centrer problems.

| Algorithm 9: p-center clustering in a 2DPF with a O(N) memory space |

| Input: |

| - N points of , such that for all , ; |

| - to specify the clustering measure; |

| - the number of clusters. |

| initialize matrix O with for all |

| sort E following the order of Proposition 1 |

| compute and store for all (with Algorithm 2 if ) |

| for to |

| for to |

| compute and store (Algorithm 6) |

| end for |

| delete the stored for all i |

| end for |

| with Algorithm 6 |

| return the optimal cost and a partition given by backtracking Algorithm 7 or 8 |

Theorem 3.

Let a subset of N points of , such that for all , . When applied to the 2D PF E for , the standard continuous and discrete K-center problems, i.e., K-0-max--BC2DPF, are solvable with a complexity in time and space.

Remark 4.

The continuous case improves the complexity obtained after Theorem 2, with the same time complexity and an improvement in the space complexity. For the discrete variant, improving the space complexity in instead of induces a very slight degradation of the time complexity, from to . Depending on the value of K, it may be preferable, with stronger constraints in memory space, to have this second version.

7.3. Improving Space Complexity for Partial P-Center Problems?

This section tries to generalize the previous results for the partial K-center problems, i.e., K-M-max--BC2DPF with . The key element is to obtain a backtracking algorithm that does not use the DP matrix. Algorithm 10 extends Algorithm 7 by considering all the possible cardinals of outliers between clusters k and for and the outliers after the last cluster. A feasible solution of the optimal cost should be feasible by iterating Algorithm 7 for at least one of these sub-cases.

| Algorithm 10: Backtracking algorithm for K-M-max--BC2DPF with |

| input: - a K-M-max--BC2DPF problem |

| - N points of a 2D PF, , sorted such that for all , ; |

| - , the optimal cost of K-M-max--BC2DPF problem; |

| output: an optimal partition of K-M-max--BC2DPF problem. |

| for each vector x of elements such that |

| initialize , , , a set of sub-intervals |

| of . |

| for to 2 with increment |

| set |

| whiledoend while |

| add in |

| set |

| end for |

| ifthen add in and return |

| end for |

| return error “OPT is not a feasible cost for K-M-max--BC2DPF ” |

It is crucial to analyze the time complexity induced by this enumeration. If the number of vectors x of elements is such such that is in , then this complexity is not polynomial anymore. For , a time complexity in would be induced, which is acceptable within the complexity of the computation of the DP matrix. Having would dramatically degrade the time complexity. Hence, we extend the improvement results of space complexity only for , with Algorithm 11.

Theorem 4.

Let a subset of N points of , such that for all , . When applied to the 2D PF E for , partial K-center problems K-1-max--BC2DPF, are solvable with a complexity in time and space.

7.4. Speeding-Up DP for Sum-Radii Problems

Similarly to Algorithm 6, this section tries to speed up the computations , which are the bottleneck for the time complexity in Algorithm 5. This section presents the stopping criterion to avoid useless computations in the naive enumeration, but without providing proofs of time complexity improvements.

| Algorithm 11: Partial p-center K-1-max--BC2DPF with a O(N) memory space |

| Input: |

| - N points of , such that for all , ; |

| - to specify the clustering measure; |

| - the number of clusters. |

| initialize matrix O with for all |

| sort E following the order of Proposition 1 |

| compute and store for all (with Algorithm 2 if ) |

| compute and store for all (with Algorithm 2 if ) |

| compute and store for all |

| for to K |

| for to |

| compute and store (Algorithm 6) |

| compute and store |

| end for |

| delete the stored for all |

| end for |

| return the optimal cost and a partition given by backtracking Algorithm 10 |

Proposition 16.

Let , and . Let β an upper bound for . We suppose there exist , such that . Then, each optimal index , such that necessarily fulfills . In other words, .

Proof.

With , Lemma 7 implies that for all , . Using for all implies that for all , , and the optimal index gives , which is superior to . □

Proposition 16 can be applied to compute each value of the DP matrix using fewer computations than the naive enumeration. In the enumeration, is updated to the best current value of . The index would be enumerated in a decreasing way, starting from until an index is found, such that , and no more enumeration is required with Proposition 16, ensuring that the partial enumeration is sufficient to find the wished-for minimal value. This is a practical improvement, but we do not furnish proof of complexity improvements, as it is likely that this would not change the worst case complexity.

8. Discussion

8.1. Importance of the 2D PF Hypothesis, Summarizing Complexity Results

Planar p-center problems were not studied previously in the PF case. The 2D PF hypothesis is crucial for the complexity results and the efficiency of the solving algorithms. Table 1 compares the available complexity results for 1D and 2D cases of some k-center variants.

Table 1.

Comparison of the time complexity for 2D PF cases to the 1D and 2D cases.

The complexity for 2D PF cases is very similar to the 1D cases; the 2D PF extension does not induce major difficulties in terms of complexity results. 2D PF cases may induce significant differences compared to the general 2D cases. The p-center problems are NP-hard in a planar Euclidean space [17], since adding the PF hypothesis leads to the polynomial complexity of Theorem 1, which allows for an efficient, straightforward implementation of the algorithm. Two properties of 2D PF were crucial for these results: The 1D structure implied by Proposition 1, which allows an extension of DP algorithms [58,59], and Lemmas 3 and 6, which allow quick computations of cluster costs. Note that rectangular p-center problems have a better complexity using general planar results than using our Theorems 2 and 3. Our algorithms only use common properties for Chebyshev and Minkowski distances, whereas significant improvements are provided using specificities of Chebyshev distance.

Note that our complexity results are given considering the complexity of the initial re-indexation with Proposition 1. This phase may be the bottleneck for the final complexity. Some papers mention results which consider that the data are already in the memory (avoiding an O(N) traversal for input data) and already sorted. In our applications, MOO methods such as epsilon-constraint provide already sorted points [3]. Using this means of calculating the complexity, our algorithms for continuous and discrete 2-center problems in a 2D PF would have, respectively, a complexity in and time. A notable advantage of the specialized algorithm in a 2D PF instead of the general cases in 2D is the simple and easy to implement algorithms.

8.2. Equivalent Optimal Solutions for P-Center Problems

Lemmas 16 and 17 emphasize that many optimal solutions may exist; the lower and upper bounds may define a very large funnel. We also note that many optimal solutions can be nested, i.e., non-verifying the Proposition 2. For real-world applicationa, having well-balanced clusters is more natural, and often wished for. Algorithms 7 and 8 provide the most unbalanced solutions. One may balance the sizes of covering balls, or the number of points in the clusters. Both types of solutions may be given using simple and fast post-processing. For example, one may proceed with a steepest descent local search using two-center problem types for consecutive clusters in the current solution. For balancing the size of clusters, iterating two-center computations induces marginal computations in time for each iteration with Algorithm 6. Such complexity occurs once the points are re-indexed using Proposition 1; one such computation in allows for many neighborhood computations running in time, and the sorting time is amortized.

8.3. Towards a Parallel Implementation

Complexity issues are raised to speed-up the convergence of the algorithms in practice. An additional way to speed up the algorithms in practice is to consider implementation issues, especially parallel implementation properties in multi- or many-core environments. In Algorithm 5, the values of the DP matrix for a given requires only to compute the values for all . Independent computations can thus be operated at the iteration i of Algorithm 5, once the cluster costs for all have been computed, which is not the most time-consuming part when using Algorithms 2 and 3. This is a very useful property for a parallel implementation, requiring only synchronizations to process operations. Hence, a parallel implementation of Algorithm 5 is straightforward in a shared memory parallelization, using OpenMP for instance in C/C++, or higher-level programming languages such as Python, Julia or Chapel [60]. One may also consider an intensive parallelization in a many-core environment, such as General Purpose Graphical Processing Units (GPGPU). A difficulty when using this may be the large memory size that is required in Algorithm 5.

Section 7 variants, which construct the DP matrix faster, both for k-center and min-sum k-radii problems, are not compatible with an efficient GPGPU parallelization, and one would prefer the naive and fixed-size enumeration of Algorithm 5, even with its worse time complexity for the sequential algorithm. Comparing the sequential algorithm to the GPGPU parallelization, having many independent parallelized computations allows a huge proportional factor with GPGPU, which can compensate the worst asymptotic complexity for reasonable sized instances. Shared memory parallelization, such as OpenMP, is compatible with the improvements provided in Section 7. Contrary to Algorithm 5, Algorithms 9 and 11 compute the DP matrix with index k increasing, with independent computation induced at each iteration. With such algorithms, there are only synchronizations required, instead of for Algorithm 5, which is a better property for parallelization. The memory versions are also useful for GPGPU parallelization, where memory space is more constrained than when storing a DP matrix on the RAM.

Previously, the parallelization of the DP matrix construction was discussed, as this is the bottleneck in time complexity. The initial sorting algorithm can also be parallelized on GPGPU if needed; the sorting time is negligible in most cases. The backtracking algorithm is sequential to obtain clusters, but with a low complexity in general, so that a parallelization of this phase is not crucial. We note that there is only one case where the backtracking Algorithm has the same complexity as the construction of the DP matrix: the DP variant in memory space proposed in Algorithm 11 with Algorithm 10 as a specific backtrack. In this specific case, the tests with different positions of the chosen outlier are independent, which allows a specific parallelization for Algorithm 10.

8.4. Applications to Bi-Objective Meta-Heuristics

The initial motivation of this work was to support decision makers when an MOO approach without preference furnishes a large set of non-dominated solutions. In this application, the value of K is small, allowing for human analyses to offer some preferences. In this paper, the optimality is not required in the further developments. Our work can also be applied to a partial PF furnished by population meta-heuristics [5]. A posteriori, the complexity allows for the use of Algorithms 5, 9 and 11 inside MOO meta-heuristics. Archiving PF is a common issue of population meta-heuristics, facing multi-objective optimization problems [4,5]. A key issue is obtaining diversified points of the PF in the archive, to compute diversified solutions along the current PF.

Algorithms 5, 9 and 11 can be used to address this issue, embedded in MOO approaches, similarly to [49]. Archiving diversified solutions of Pareto sets has application for the diversification of genetic algorithms, to select diversified solutions for cross-over and mutation phases [61,62], but also for swarm particle optimization heuristics [63]. In these applications, clustering has to run quickly. The complexity results and the parallelization properties are useful in such applicationas.

For application to MOO meta-heuristics like evolutionary algorithms, the partial versions are particularly useful. Indeed, partial versions may detect outliers that are isolated from the other points. For such points, it is natural to process intensification operators to look for efficient solutions in the neighborhood, which will make the former outlier less isolated. Such a process is interesting for obtaining a better balanced distribution of the points along the PF, which is a crucial point when dealing with MOO meta-heuristics.

8.5. How to Choose ?

A crucial point in clustering applications the selection of an appropriate value of K. A too-small value of K can miss that instances which are well-captured with representative clusters. Real-world applications seek the best compromise between the minimization of K, and the minimization of the dissimilarity among the clusters. Similarly, with [11], the properties of DP can be used to achieve this goal. With the DP Algorithm 9, many couples are computed, using the optimal k-center values with k clusters. Having defined a maximal value , the complexity for computing these points is seen in . When searching for good values of k, the elbow technique, may be applied. Backtracking operations may be used for many solutions without changing the complexity. Rhe same ideas are applicable along the M index. In the previoulsy described context of MOO meta-heuristics, the sensitivity with the M parameter is more important than the sensitivity for the parameter K, where the number of archived points is known and fixed regarding other considerations, such as the allowed size of the population.

9. Conclusions and Perspectives

This paper examined the properties of p-center problems and variants in the special case of a discrete set of non-dominated points in a 2D space, using Euclidean, Minkowski or Chebyshev distances. A common characterization of optimal clusters is proven for the discrete and continuous variants of the p-center problems and variants. Thie can solve these problems to optimality with a unified DP algorithm of a polynomial complexity. Some complexity results for the 2D PF case improve the general ones in 2D. The presented algorithms are useful for MOO approaches. The complexity results, in time for the standard K-center problems, and in time for the standard min-sum k-radii problems, are useful for application with a large PF. When applied to N points and able to ncover points, partial K-center and min-sum-K-radii variants are, respectively, solvable in and time. Furthermore, the DP algorithms have interesting properties for efficient parallel implementation in a shared memory environment, such as OpenMP or using GPGPU. This allows their application for a very large PF with short solving times. For an application for MOO meta-heuristics such as evolutionary algorithms, the partial versions are useful for the detection of outliers where intensification phases around these isolated solutions may be processed in order to obtain a better distribution of the points along the PF.

Future perspectives include the extension of these results to other clustering algorithms. The weighted versions of p-center variants were not studied in this paper, which was motivated by MOO perspectives, and future perspectives shall consider extending our algorithms to weighted variants. Regarding MOO applications, extending the results to dimension 3 is a subject of interest for MOO problems with three objectives. However, clustering a 3D PF will be an NP-hard problem as soon as the general 2D cases are proven to be NP-hard. The perspective in such cases is to design specific approximation algorithms for a 3D PF.

Author Contributions

Conceptualization, N.D. and F.N.; Methodology, N.D. and F.N.; Validation, E.-G.T. and F.N.; Writing–original draft preparation, N.D.; Writing—review and editing, N.D.; Supervision, E.-G.T. and F.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Peugeot, T.; Dupin, N.; Sembely, M.J.; Dubecq, C. MBSE, PLM, MIP and Robust Optimization for System of Systems Management, Application to SCCOA French Air Defense Program. In Complex Systems Design & Management; Springer: Berlin/Heidelberg, Germany, 2017; pp. 29–40. [Google Scholar] [CrossRef]

- Dupin, N.; Talbi, E. Matheuristics to optimize refueling and maintenance planning of nuclear power plants. J. Heuristics 2020, 1–43. [Google Scholar] [CrossRef]

- Ehrgott, M.; Gandibleux, X. Multiobjective combinatorial optimization-theory, methodology, and applications. In Multiple Criteria Optimization: State of the Art Annotated Bibliographic Surveys; Springer: Berlin/Heidelberg, Germany, 2003; pp. 369–444. [Google Scholar]

- Schuetze, O.; Hernandez, C.; Talbi, E.; Sun, J.; Naranjani, Y.; Xiong, F. Archivers for the representation of the set of approximate solutions for MOPs. J. Heuristics 2019, 25, 71–105. [Google Scholar] [CrossRef]

- Talbi, E. Metaheuristics: From Design to Implementation; Wiley: Hoboken, NJ, USA, 2009; Volume 74. [Google Scholar]

- Hsu, W.; Nemhauser, G. Easy and hard bottleneck location problems. Discret. Appl. Math. 1979, 1, 209–215. [Google Scholar] [CrossRef]

- Megiddo, N.; Tamir, A. New results on the complexity of p-centre problems. SIAM J. Comput. 1983, 12, 751–758. [Google Scholar] [CrossRef]

- Ravi, S.; Rosenkrantz, D.; Tayi, G. Heuristic and special case algorithms for dispersion problems. Oper. Res. 1994, 42, 299–310. [Google Scholar] [CrossRef]

- Wang, D.; Kuo, Y. A study on two geometric location problems. Inf. Process. Lett. 1988, 28, 281–286. [Google Scholar] [CrossRef]

- Dupin, N.; Nielsen, F.; Talbi, E. Clustering a 2d Pareto Front: P-center problems are solvable in polynomial time. In Proceedings of the International Conference on Optimization and Learning, Cádiz, Spain, 17–19 February 2020; pp. 179–191. [Google Scholar] [CrossRef]

- Dupin, N.; Nielsen, F.; Talbi, E. k-medoids clustering is solvable in polynomial time for a 2d Pareto front. In Proceedings of the World Congress on Global Optimization, Metz, France, 8–10 July 2019; pp. 790–799. [Google Scholar] [CrossRef]

- Borzsony, S.; Kossmann, D.; Stocker, K. The skyline operator. In Proceedings of the 17th International Conference on Data Engineering, Heidelberg, Germany, 2–6 April 2001; pp. 421–430. [Google Scholar]

- Nielsen, F. Output-sensitive peeling of convex and maximal layers. Inf. Process. Lett. 1996, 59, 255–259. [Google Scholar] [CrossRef]

- Arana-Jiménez, M.; Sánchez-Gil, C. On generating the set of nondominated solutions of a linear programming problem with parameterized fuzzy numbers. J. Glob. Optim. 2020, 77, 27–52. [Google Scholar] [CrossRef]

- Daskin, M.; Owen, S. Two New Location Covering Problems: The Partial P-Center Problem and the Partial Set Covering Problem. Geogr. Anal. 1999, 31, 217–235. [Google Scholar] [CrossRef]

- Calik, H.; Labbé, M.; Yaman, H. p-Center problems. In Location Science; Springer: Berlin/Heidelberg, Germany, 2015; pp. 79–92. [Google Scholar]

- Megiddo, N.; Supowit, K. On the complexity of some common geometric location problems. SIAM J. Comput. 1984, 13, 182–196. [Google Scholar] [CrossRef]

- Hochbaum, D. When are NP-hard location problems easy? Ann. Oper. Res. 1984, 1, 201–214. [Google Scholar] [CrossRef]

- Hochbaum, D.; Shmoys, D. A best possible heuristic for the k-center problem. Math. Oper. Res. 1985, 10, 180–184. [Google Scholar] [CrossRef]

- Gonzalez, T. Clustering to minimize the maximum intercluster distance. Theor. Comput. Sci. 1985, 38, 293–306. [Google Scholar] [CrossRef]

- Daskin, M. Network and Discrete Location: Models, Algorithms and Applications; Wiley: Hoboken, NJ, USA, 1995. [Google Scholar]

- Calik, H.; Tansel, B. Double bound method for solving the p-center location problem. Comput. Oper. Res. 2013, 40, 2991–2999. [Google Scholar] [CrossRef]

- Elloumi, S.; Labbé, M.; Pochet, Y. A new formulation and resolution method for the p-center problem. INFORMS J. Comput. 2004, 16, 84–94. [Google Scholar] [CrossRef]

- Callaghan, B.; Salhi, S.; Nagy, G. Speeding up the optimal method of Drezner for the p-centre problem in the plane. Eur. J. Oper. Res. 2017, 257, 722–734. [Google Scholar] [CrossRef]

- Drezner, Z. The p-centre problem—heuristic and optimal algorithms. J. Oper. Res. Soc. 1984, 35, 741–748. [Google Scholar]

- Hwang, R.; Lee, R.; Chang, R. The slab dividing approach to solve the Euclidean P-Center problem. Algorithmica 1993, 9, 1–22. [Google Scholar] [CrossRef]

- Agarwal, P.; Procopiuc, C. Exact and approximation algorithms for clustering. Algorithmica 2002, 33, 201–226. [Google Scholar] [CrossRef]

- Megiddo, N. Linear-time algorithms for linear programming in R3 and related problems. SIAM J. Comput. 1983, 12, 759–776. [Google Scholar] [CrossRef]

- Brass, P.; Knauer, C.; Na, H.; Shin, C.; Vigneron, A. Computing k-centers on a line. arXiv 2009, arXiv:0902.3282. [Google Scholar]

- Sharir, M. A near-linear algorithm for the planar 2-center problem. Discret. Comput. Geom. 1997, 18, 125–134. [Google Scholar] [CrossRef]

- Eppstein, D. Faster construction of planar two-centers. SODA 1997, 97, 131–138. [Google Scholar]

- Agarwal, P.; Sharir, M.; Welzl, E. The discrete 2-center problem. Discret. Comput. Geom. 1998, 20, 287–305. [Google Scholar] [CrossRef]

- Frederickson, G. Parametric search and locating supply centers in trees. In Workshop on Algorithms and Data Structures; Springer: Berlin/Heidelberg, Germany, 1991; pp. 299–319. [Google Scholar]

- Karmakar, A.; Das, S.; Nandy, S.; Bhattacharya, B. Some variations on constrained minimum enclosing circle problem. J. Comb. Optim. 2013, 25, 176–190. [Google Scholar] [CrossRef]

- Chen, D.; Li, J.; Wang, H. Efficient algorithms for the one-dimensional k-center problem. Theor. Comput. Sci. 2015, 592, 135–142. [Google Scholar] [CrossRef]

- Drezner, Z. On the rectangular p-center problem. Nav. Res. Logist. (NRL) 1987, 34, 229–234. [Google Scholar] [CrossRef]

- Katz, M.J.; Kedem, K.; Segal, M. Discrete rectilinear 2-center problems. Comput. Geom. 2000, 15, 203–214. [Google Scholar] [CrossRef]

- Drezner, Z. On a modified one-center model. Manag. Sci. 1981, 27, 848–851. [Google Scholar] [CrossRef]

- Hansen, P.; Jaumard, B. Cluster analysis and mathematical programming. Math. Program. 1997, 79, 191–215. [Google Scholar] [CrossRef]

- Doddi, S.; Marathe, M.; Ravi, S.; Taylor, D.; Widmayer, P. Approximation algorithms for clustering to minimize the sum of diameters. Nord. J. Comput. 2000, 7, 185–203. [Google Scholar]

- Gibson, M.; Kanade, G.; Krohn, E.; Pirwani, I.A.; Varadarajan, K. On metric clustering to minimize the sum of radii. Algorithmica 2010, 57, 484–498. [Google Scholar] [CrossRef]

- Charikar, M.; Panigrahy, R. Clustering to minimize the sum of cluster diameters. J. Comput. Syst. Sci. 2004, 68, 417–441. [Google Scholar] [CrossRef][Green Version]

- Behsaz, B.; Salavatipour, M. On minimum sum of radii and diameters clustering. Algorithmica 2015, 73, 143–165. [Google Scholar] [CrossRef]