Abstract

In this article, we discuss the hierarchical fixed point and split monotone variational inclusion problems and propose a new inertial iterative method whose step size avoids the difficulty of calculating the operator norm in real Hilbert spaces. A strong convergence theorem for the proposed method is established under suitable control conditions. Furthermore, the proposed method is modified and used to derive a scheme for solving the split problems. Finally, we demonstrate the efficiency and applicability of our schemes, and compare them with existing methods, through numerical experiments and an example in image restoration.

Keywords:

variational inclusion problem; inertial technique; strong convergence; image restoration; hierarchical fixed point problem

MSC:

47H05; 47J20; 47J25; 65K05

1. Introduction

The variational inequality problem (VIP for short) is a significant branch of mathematics. Over the decades, it has been studied extensively as a tool for solving real-world problems in applied areas such as physics, economics, finance, optimization, network analysis, medical imaging, water resources and structural analysis. Moreover, it contains fixed point problems and optimization problems arising in machine learning, signal processing and linear inverse problems (see [1,2,3]). The set of solutions of the variational inequality problem is denoted by

where C is a nonempty closed convex subset of the Hilbert space H and is a mapping.

Moudafi and Mainge [4] established the hierarchical fixed point problem for a nonexpansive mapping T with respect to another nonexpansive mapping S on H by utilizing the concept of the variational inequality problem: Find such that

where is a nonexpansive mapping and . It is easy to see that (1) is equivalent to the following fixed point problem: Find such that

where stands for the metric projection on the closed convex set . The solution set of HFPP (1) is denoted by . It is obvious that . It is worth noting that (1) covers monotone variational inequalities on fixed point sets as well as minimization problems. Many iterative methods have been studied and developed for fixed point theory and for solving the hierarchical fixed point problem (1) (see [4,5,6,7,8]).

On the other hand, the split monotone variational inclusion problem (in short, SpMVIP) was proposed and studied by Moudafi [9]. It has been applied as a model in intensity-modulated radiation therapy treatment planning (see [10]). This concept appears in many inverse problems that arise in phase retrieval and in other real-world problems such as sensor networks, data compression and computerized tomography (see [11,12]): Find such that

and such that

where and are multi-valued monotone operators, , are two single-valued operators and is a bounded linear operator. The solution set of SpMVIP is denoted by .

Moudafi [9] introduced the following iterative method and studied a weak convergence theorem for SpMVIP: For a given , compute the iterative sequence generated by the following scheme:

where , such that and are the resolvent mappings of F and G, respectively (see the definition in Section 2), and I is the identity mapping. The operator A is a bounded linear operator, is the adjoint of A, and with L being the spectral radius of the operator . It was proved that the sequence converges weakly to a solution of SpMVIP.

In 2017, Kazmi et al. [13] developed a Krasnoselski–Mann type iterative method to approximate a common solution of a hierarchical fixed point problem for nonexpansive mappings and a split monotone variational inclusion problem. The method is defined as follows:

where , and the step size where L is the spectral radius of the operator . Under some suitable conditions on and :

.

They proved that the sequence converges weakly to a solution of hierarchical fixed point and split monotone variational inclusion problems. A natural question is how to obtain strong convergence results that approximate a solution of the split monotone variational inclusion and hierarchical fixed point problems.

In 2021, Dao-Jun Wen [14] modified the Krasnoselski–Mann type iteration (5) by replacing the operator T with , where D is a strongly monotone and L-Lipschitzian operator. Define a sequence in the following manner:

where , , are two nonexpansive mappings and the step size where L is the spectral radius of the operator . Wen established a strong convergence result under a new condition on the coefficients that replaces condition (C2).

If and , then the split monotone variational inclusion problem reduces to the following split variational inclusion problem (SVIP): Find such that

Byrne et al. [15] attempted to solve a special case of (6), defined as follows: Moreover, they obtained weak and strong convergence results for problem (6) using the resolvent operator technique and the step size . To accelerate convergence, Alvarez and Attouch [16] considered the following iterative scheme: , where F is a maximal monotone operator, and . This scheme is called the inertial proximal method, and is referred to as the inertial extrapolation term, which speeds up convergence under the condition that (see [17]). The inertial technique has since played an important role in the optimization community as a tool for building accelerated iterative methods (see [18,19]).
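To make the inertial proximal idea concrete, the following minimal Python sketch compares the plain proximal point iteration x_{n+1} = J(x_n) with the inertial version x_{n+1} = J(x_n + θ(x_n − x_{n−1})), where J denotes the resolvent. The operator F(x) = x on the real line (whose resolvent is x/(1+λ)), the constants λ = 0.1 and θ = 0.3, and all function names are assumptions made for illustration; they are not taken from [16].

```python
def resolvent_identity(x, lam):
    """Resolvent (I + lam*F)^(-1) for the illustrative operator F(x) = x."""
    return x / (1.0 + lam)


def proximal_point(x0, lam, theta, n_iter):
    """Run x_{n+1} = J(x_n + theta*(x_n - x_{n-1})); theta = 0 gives the plain method."""
    x_prev, x = x0, x0
    for _ in range(n_iter):
        w = x + theta * (x - x_prev)          # inertial extrapolation step
        x_prev, x = x, resolvent_identity(w, lam)
    return x


plain = proximal_point(1.0, lam=0.1, theta=0.0, n_iter=50)
inertial = proximal_point(1.0, lam=0.1, theta=0.3, n_iter=50)
print(abs(plain), abs(inertial))  # distances to the unique zero x* = 0 of F
```

In this toy setting the inertial iterate ends up closer to the zero of F after the same number of resolvent evaluations, which is the effect the extrapolation term is designed to produce.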

On the other hand, the iterative methods mentioned above share a common feature: their step size requires prior knowledge of the operator (matrix) norm . This norm may be difficult to calculate, and a fixed step size affects the practical implementation of an iterative method. To overcome this difficulty, the construction of self-adaptive step sizes has attracted interest among researchers. Recently, many iterative methods that do not require prior information about the operator (matrix) norm have been proposed (see [20,21,22]).
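The following Python sketch illustrates why a self-adaptive step size removes the need for the operator norm. It uses a rule that is common in the literature on split problems, τ = ρ‖r‖²/‖Aᵀr‖² for a residual vector r; this rule, the value ρ = 1 and the function name are assumptions for illustration and are not necessarily the rule used by the methods cited above. Since ‖Aᵀr‖ ≤ ‖A‖‖r‖, the resulting step is never smaller than ρ/‖A‖², yet it is computed from a single matrix-vector product.

```python
import numpy as np


def adaptive_step(A, r, rho=1.0):
    """Step size rho * ||r||^2 / ||A^T r||^2, computed without ||A||.

    Returns 0.0 when A^T r vanishes, in which case no update is needed.
    """
    g = A.T @ r
    denom = float(g @ g)
    if denom == 0.0:
        return 0.0
    return rho * float(r @ r) / denom


rng = np.random.default_rng(0)
A = rng.standard_normal((5, 3))
r = rng.standard_normal(5)

tau = adaptive_step(A, r)
fixed = 1.0 / np.linalg.norm(A, 2) ** 2   # classical step: needs the spectral norm
print(tau, fixed)
```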

Motivated and inspired by the work mentioned above, we further investigate a self-adaptive inertial Krasnoselski–Mann iterative method for solving hierarchical fixed point and split monotone variational inclusion problems. This manuscript modifies the results of [14] by applying an inertial scheme, which is effective for speeding up the iteration process, and by adding a step size that does not require prior information about the operator (matrix) norm. A strong convergence theorem for the proposed iterative method is established under suitable conditions. Furthermore, we present numerical experiments to demonstrate the advantages of the proposed method over existing methods [9,13,14] and apply our main results to the image restoration problem.

2. Materials and Methods

In this section, we recall some basic definitions and properties which will be frequently used in our later investigation. Some useful results proved already in the literature are also summarized.

Definition 1.

A mapping is said to be:

- (i)

- monotone, if

- (ii)

- α-inverse strongly monotone, if there exists a constant such that

- (iii)

- β-Lipschitz continuous, if there exists a constant such that
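For reference, the three properties in Definition 1 are usually stated as follows. The mapping is written here as D : H → H; the symbol D and the placement of the quantifiers are assumptions adopted only for this illustration.

```latex
\begin{align*}
\text{(i)}\quad   & \langle Dx - Dy,\, x - y \rangle \ge 0,
                  && \forall x, y \in H;\\
\text{(ii)}\quad  & \langle Dx - Dy,\, x - y \rangle \ge \alpha \,\|Dx - Dy\|^{2},
                  && \forall x, y \in H,\ \alpha > 0;\\
\text{(iii)}\quad & \|Dx - Dy\| \le \beta \,\|x - y\|,
                  && \forall x, y \in H,\ \beta > 0.
\end{align*}
```

By the Cauchy–Schwarz inequality, a mapping satisfying (ii) is monotone and (1/α)-Lipschitz continuous.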

Let be a multivalued operator on H. Then the graph of F is defined by

and

- (i)

- the operator F is called a monotone operator, if

- (ii)

- the operator F is called a maximal monotone operator, if F is monotone and the graph of F is not properly contained in the graph of other monotone mappings.

Let be a set-valued maximal monotone mapping, that is, a monotone mapping whose graph Graph(F) is not properly contained in the graph of any other monotone mapping. Then the resolvent operator is defined by

where I stands for the identity operator on H. It is well known that the resolvent operator is single-valued and firmly nonexpansive (hence nonexpansive) for .
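As a small numerical illustration of the resolvent, the sketch below evaluates (I + λF)^(-1) for the monotone operator F(y) = y³ on the real line by solving y + λy³ = x with bisection. It then checks the firm nonexpansiveness inequality |Jx − Jy|² ≤ (Jx − Jy)(x − y) on a few sample pairs. The operator F, the bisection solver and all names are assumptions chosen for this example.

```python
def resolvent_cubic(x, lam, n_bisect=200):
    """Evaluate the resolvent of F(y) = y**3 at x by solving y + lam*y**3 = x.

    The left-hand side is strictly increasing in y and the solution satisfies
    |y| <= |x|, so bisection on [-|x|, |x|] always brackets it.
    """
    lo, hi = -abs(x), abs(x)
    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        if mid + lam * mid**3 < x:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def is_firmly_nonexpansive(points, lam, tol=1e-12):
    """Check |Jx - Jy|^2 <= (Jx - Jy)*(x - y) for all pairs of sample points."""
    for x in points:
        for y in points:
            jx, jy = resolvent_cubic(x, lam), resolvent_cubic(y, lam)
            if (jx - jy) ** 2 > (jx - jy) * (x - y) + tol:
                return False
    return True


print(resolvent_cubic(2.0, 1.0))   # solves y + y**3 = 2
print(is_firmly_nonexpansive([-3.0, -0.5, 0.0, 1.0, 2.5], lam=0.7))
```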

Lemma 1.

Let f be a κ-inverse strongly monotone mapping and F be a maximal monotone mapping, then is averaged for all .

Lemma 2

([9]). Let f be a mapping and F be a maximal monotone mapping, then if and only if , i.e., for .
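Lemma 2, read in its usual form (a point solves the inclusion 0 ∈ f(x) + F(x) exactly when it is a fixed point of the resolvent of F composed with I − λf), can be checked by hand on a one-dimensional example. In the sketch below, the choices f(x) = x − 3 and F = ∂|·| are assumptions made for illustration; the resolvent of this F is the soft-thresholding map. The inclusion has the unique solution x = 2, and iterating the fixed point map should reach the same point.

```python
def soft_threshold(x, lam):
    """Resolvent of F = subdifferential of |.|: shrink x toward 0 by lam."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def f(x):
    """Illustrative single-valued operator f(x) = x - 3 (gradient of 0.5*(x-3)**2)."""
    return x - 3.0


def forward_backward(x0, lam, n_iter=100):
    """Iterate x <- resolvent(x - lam*f(x)), the fixed point map of Lemma 2."""
    x = x0
    for _ in range(n_iter):
        x = soft_threshold(x - lam * f(x), lam)
    return x


lam = 0.5
x_star = forward_backward(0.0, lam)
fixed_point_gap = abs(x_star - soft_threshold(x_star - lam * f(x_star), lam))
inclusion_gap = abs(f(x_star) + 1.0)   # for x > 0 the subdifferential of |x| is {1}
print(x_star, fixed_point_gap, inclusion_gap)
```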

Lemma 3.

For any , the following results hold:

- (i)

- (ii)

Lemma 4

([23]). Let be η-strongly monotone and L-Lipschitz continuous. Then is a -contraction, i.e.,

where is a sequence such that and with .

Lemma 5

([24]). Let and be two sequences of non-negative real numbers such that

where is a sequence in and is a sequence in . Assume that . If for some , then is a bounded sequence.

Lemma 6

([25]). Let be a sequence of non-negative real numbers, be a sequence of real numbers in with and be a sequence of real numbers such that

If for every subsequence of satisfying , then .

3. Results

In this section, we propose a self-adaptive method with an inertial extrapolation term for solving hierarchical fixed point and split monotone variational inclusion problems. To begin with, we assume that the following control conditions, referred to as Condition 1, are satisfied:

- (A1)

- for some such that , i.e.,

- (A2)

- such that and ;

- (A3)

- such that , ;

- (A4)

- is a positive sequence such that and ;

- (A5)

- , and

Remark 1.

From = and Algorithm 1, we have

Therefore

| Algorithm 1: Inertial Krasnoselski-Mann Iterative Method |

| Initialization: Let , , and . |

| Iterative steps: Calculate as follows: |

| Step 1. Given the iterates and for each , choose where = |

| Step 2. Compute ; . |

| Step 3. Compute , and , where = |

| Step 4. Compute . Set , and return to Step 1. |
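The ingredients of a scheme of this kind (inertial extrapolation, a step size computed without the operator norm, and a Krasnoselski–Mann relaxation) can be sketched on a toy split feasibility problem: find x ∈ C with Ax ∈ Q, where C and Q are boxes, so that the resolvents of the normal cone operators reduce to projections. This is a simplified illustration under assumed data and parameter choices. It is not Algorithm 1, and it omits the mappings S, T and D of the hierarchical problem.

```python
import numpy as np


def project_box(v, lo, hi):
    """Metric projection onto the box [lo, hi]^n (resolvent of its normal cone)."""
    return np.clip(v, lo, hi)


def inertial_km_split_feasibility(A, x0, n_iter=500, theta=0.3, alpha=0.8, rho=1.0):
    """Toy inertial Krasnoselski-Mann iteration for: find x in C with A x in Q.

    C = [0, 10]^n and Q = [1, 2]^m are hypothetical sets chosen for illustration.
    """
    x_prev, x = x0.copy(), x0.copy()
    for n in range(1, n_iter + 1):
        # Inertial weight, capped so that theta_n * ||x_n - x_{n-1}|| <= 1/n^2.
        diff = np.linalg.norm(x - x_prev)
        eps_n = 1.0 / n**2
        theta_n = theta if theta * diff <= eps_n else eps_n / diff
        w = x + theta_n * (x - x_prev)

        # Residual of the constraint A w in Q and a step size that avoids ||A||.
        r = A @ w - project_box(A @ w, 1.0, 2.0)
        g = A.T @ r
        gg = float(g @ g)
        tau = 0.0 if gg == 0.0 else rho * float(r @ r) / gg

        # Projected step followed by a Krasnoselski-Mann relaxation.
        y = project_box(w - tau * g, 0.0, 10.0)
        x_prev, x = x, (1.0 - alpha) * w + alpha * y
    return x


A = np.array([[2.0, 1.0], [1.0, 3.0]])
x = inertial_km_split_feasibility(A, np.array([5.0, 5.0]))
residual = np.linalg.norm(A @ x - project_box(A @ x, 1.0, 2.0))
print(x, residual)
```

The cap on the inertial weight mirrors a device often used in inertial methods to keep the extrapolation terms summable; the specific sequence 1/n² is a hypothetical choice.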

Lemma 7.

Assume that and are real Hilbert spaces and is a bounded linear operator with its adjoint operator Let and be set-valued maximal monotone operators. Let and be -inverse strongly monotone mappings with . Then, for any , and , the following statements hold:

- (i)

- and are nonexpansive;

- (ii)

- where .

Proof.

(i) Since f and g are -inverse strongly monotone mappings, respectively, it follows from Lemma 1 that is averaged. So U is nonexpansive for . Similarly, we can prove that is nonexpansive.

(ii) Since is nonexpansive, we have

It follows that

□

Lemma 8.

Let be the sequence generated by Algorithm 1. Then is bounded.

Proof.

If , then

Since A is bounded and linear, we get

Hence , thus is bounded. □

Lemma 9.

Assume that and are formed by Algorithm 1. If converges weakly to z and , then .

Proof.

Since U is nonexpansive, we get

which together gives that

Since

we get

This combines with Lemma 2 and yields . In view of the fact A is a linear operator, we get . From , we obtain . Therefore □

Theorem 1.

Let and be two real Hilbert spaces, let C be a nonempty closed convex subset of , and let be a bounded linear operator with adjoint operator . Assume that and are set-valued maximal monotone operators. Let and be -inverse strongly monotone mappings with . Let be η-strongly monotone and L-Lipschitzian, be a nonexpansive mapping, and be a continuous quasi-nonexpansive mapping such that is monotone and . Let be the sequence defined by Algorithm 1, and suppose that Condition 1 holds. Then the sequence converges strongly to in the norm, where

Proof.

We divide the proof into several steps.

Step 1. We will show that is bounded. Let us assume that . From the definition of , we get

According to Condition 1, one has . Therefore, there exists a constant such that

Set and , we get

From Lemma 4, we have

Indeed, by Lemma 7 and (7), we have

From , we obtain

Set , it follows that

By the definition of , we also have

Further, we have

By (16) and Condition 1, we get

From Lemma 5, this implies that is bounded. Together with (10), (13) and (16), we get , and are also bounded.

Step 2. We will show that

By the definition of , we get

for some From Lemma 3, we have

From Lemma 3, Lemma 4 and (10), we obtain

By the definition of , we have

for some . Substituting (18), (24) and using Condition 1 into (25), we obtain

for some . Thus

Step 3. We will show that

From the definition of , we get

Let . Then we have and

From the definition of , we obtain

This implies that

Step 4. We will show . Assume that is a subsequence of such that

Then,

From Step 2, we see that

which indicates that

From the definition of , we get

Thus

From , we have

So

Since T is nonexpansive, S is a continuous quasi-nonexpansive mapping, we have

So

Thus

Note that

From (35) and Condition 1, we get

By the definition of , we have

Since , we have . It follows that

So

Set . Now we estimate

By the definition of and Lemma 7, we get

Thus

Since is firmly nonexpansive, we obtain

So

Therefore

Since

we have

By the definition of , we get

Using (50) and Condition 1, we have

Note that

Since is bounded, there exists a subsequence which converges weakly to . Assume that . Then, by the Opial property,

This is a contradiction. Hence and . On the other hand, we note that and

Setting

In particular, for each , we have

which comes from the monotonicity of and

Replacing n with , we have

Moreover, by (32) and Condition 1, we get

If z is substituted by for , we get

Since is continuous, on taking limit , we have

That is . Next we will show that

Note that can be rewritten as

where is nonexpansive by Lemma 7. Taking in (54), and using (31), (49) and the fact that the graph of a maximal monotone mapping is weakly-strongly closed, we obtain . Moreover, since and have the same asymptotic behavior, we have . Since the resolvent operator is averaged and hence nonexpansive, we obtain

This shows that and so .

Step 5. We will show that where Since is bounded, there exists a subsequence of such that . Moreover,

Since , one has , which together with and Lemma 9 gives . From the definition of and (55), we obtain

Together with (50), Step 3 and Lemma 6, this shows that converges strongly to . Hence we obtain the desired conclusion. □

Remark 2.

We note that our results directly improve and extend some known results in the literature as follows:

- (i)

- Our proposed iterative method converges strongly in real Hilbert spaces, which is preferable to the weak convergence results of Kazmi et al. [7].

- (ii)

- We improve and extend Theorem 3.1 of Dao-Jun Wen [14]. In particular, we use quasi-nonexpansive mappings instead of nonexpansive mappings.

- (iii)

- The step size in the iterative methods of Dao-Jun Wen [14] and Kazmi et al. [7] requires prior information about the operator (matrix) norm, whereas our iterative method updates the step size at each iteration.

- (iv)

- Our iterative method improves and extends the iterative methods of Dao-Jun Wen [14] and Kazmi et al. [7].

4. Theoretical Applications

In this section, we derive a scheme for solving hierarchical fixed point and the split problems from Algorithm 1 and also extend and generalize the known results.

4.1. Split Variational Inclusion Problem

The split variational inclusion problem is an important special case of the split monotone variational inclusion problem. It is a fundamental problem in optimization theory and is applied in a wide range of disciplines. Specifically, if and , then the split monotone variational inclusion problem reduces to the split variational inclusion problem. Let Sol(SVIP) denote the solution set of the split variational inclusion problem.

Theorem 2.

Let and be two real Hilbert spaces, let C be a nonempty closed convex subset of , and let be a bounded linear operator with adjoint operator . Assume that and are set-valued maximal monotone operators. Let be η-strongly monotone and L-Lipschitzian, be a nonexpansive mapping, and be a continuous quasi-nonexpansive mapping such that is monotone and . Suppose that Condition 1 holds, and let be the sequence generated by the following scheme:

where and are defined as in Algorithm 1. Then the sequence converges strongly to in the norm, where

4.2. Split Variational Inequality Problem

Let C be a nonempty closed convex subset of a Hilbert space . Define the normal cone of C at a point by

It is known that for each , we get

This implies that . Let C and Q be nonempty closed convex subsets of Hilbert spaces of and , respectively. If and in the split monotone variational inclusion problem, the following split variational inequality problem is obtained: Find such that

where and are -inverse strongly monotone mappings with . In particular, if f is a -inverse strongly monotone mapping with , then is averaged. The following result can then be obtained from Theorem 1.

Theorem 3.

Let be the same as above. Let be a bounded linear operator with adjoint operator . Select arbitrary initial points , and let be generated by the following scheme:

where and are defined as in Algorithm 1. Suppose that Condition 1 is satisfied and . Then the sequence converges strongly to in the norm, where

5. Numerical Experiments

Some numerical results are presented in this section to demonstrate the effectiveness of our proposed method. The MATLAB codes were run in MATLAB version 9.5 (R2018b) on a MacBook Pro 13-inch, 2019 with a 2.4 GHz Quad-Core Intel Core i5 processor and 8.00 GB of RAM.

Example 1.

where . Choose and , we have

We consider an example in infinite dimensional Hilbert spaces. Assume with inner product and induced norm for all . Let be defined by and where . Let be defined by and where . Then f is 2-inverse strongly monotone, g is 4-inverse strongly monotone and are maximal monotone. Further, we have by a direct calculation that

The mapping is defined by and . Let , and where . Then T is a nonexpansive mapping with , and S is a continuous quasi-nonexpansive mapping with and is monotone, but S is not a nonexpansive mapping. Hence . Furthermore, it is easy to prove that . Therefore . We choose the iterative coefficients

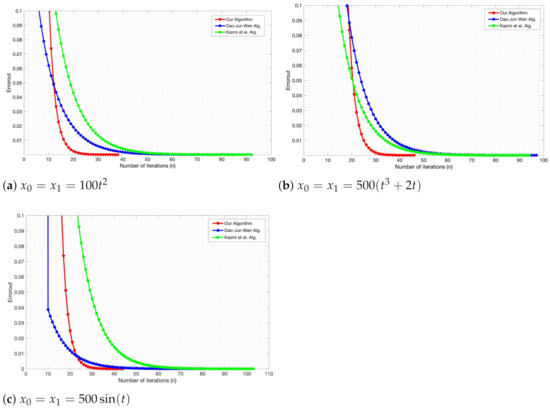

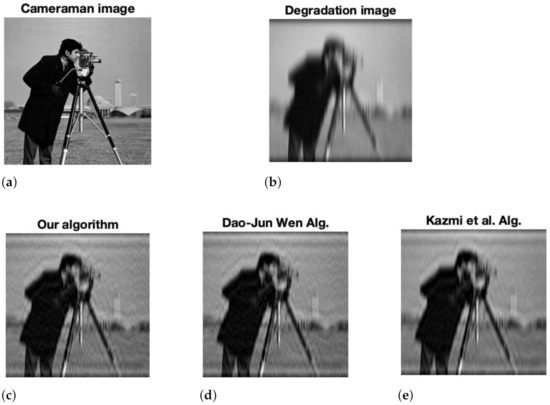

A further comparison of the convergence behavior of our proposed method with the methods of Dao-Jun Wen [14] and Kazmi et al. [7] is displayed in Figure 1 and Table 1, with the stopping criterion .

Figure 1.

Numerical behavior of all algorithms with different initial values in Example 1.

Table 1.

Results of all algorithms with different initial points in Example 1.

Remark 3.

Table 1 shows that the choice of initial point has almost no effect on the number of iterations, and that our proposed method requires fewer iterations and less CPU time than the methods of Dao-Jun Wen [14] and Kazmi et al. [7].

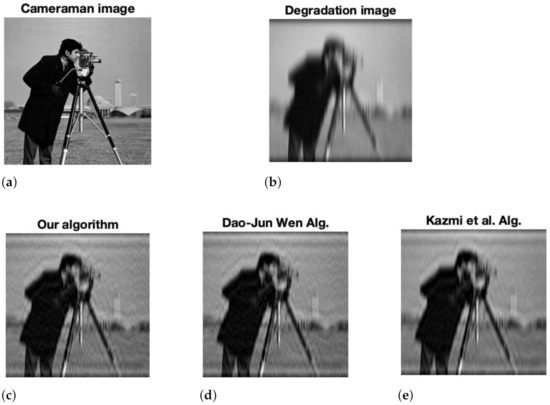

Example 2.

In this example, we apply our main result to solve the image restoration problem by using Algorithm 1. We consider the convex minimization problem as follows:

where is a smooth convex loss function and differentiable with L-Lipschitz continuous gradient where and is a continuous convex function. It is well known that the convex minimization problem (58) is equivalent to the following problem:

We consider the degradation model that represents image restoration problems as the following mathematical model:

where is a blurring matrix and is a noise term. The goal is to recover the original image by minimizing a noise term. We consider a model which produces the restored image given by the following minimization problem:

for some regularization parameter . In this situation, we choose and and set the operators , , , , , , and is defined as in Example 1. We set the parameters as follows:

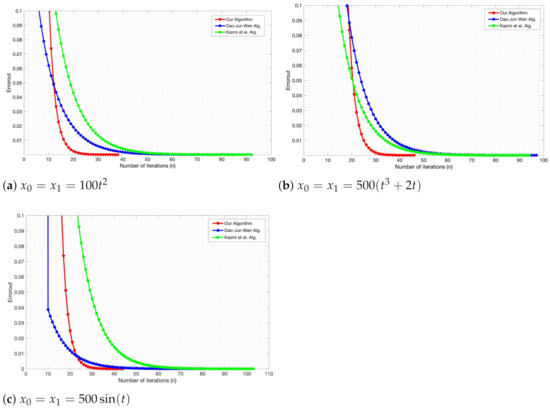

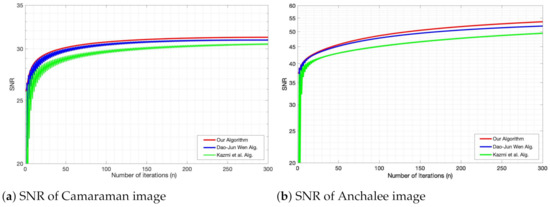

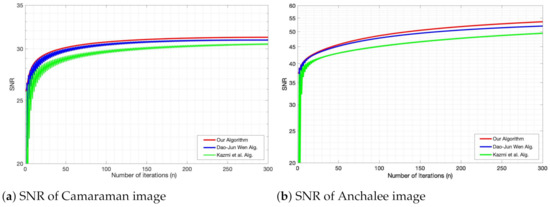

For this example, we choose the regularization parameter and the original gray and RGB images (see Figure 2a,f). We use an average blur and a motion blur to create the blurred and noisy images (see Figure 2b,g). The performance of the image restoration process is measured quantitatively by the signal-to-noise ratio (SNR), which is defined as

where x and denote the original image and the restored image at iteration n, respectively. A higher SNR implies that the recovered image is of higher quality. Our numerical results are reported in Table 2 and Figure 3.
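The SNR computation can be sketched in a few lines of Python. The formula used below, SNR = 20 log10(‖x‖ / ‖x − x_n‖), is a definition commonly used in the image restoration literature and is assumed here; the function and variable names are hypothetical.

```python
import numpy as np


def snr_db(original, restored):
    """Signal-to-noise ratio in decibels: 20*log10(||x|| / ||x - x_n||).

    Both images are flattened, so gray (2-D) and RGB (3-D) arrays are handled alike.
    """
    x = np.asarray(original, dtype=float).ravel()
    x_n = np.asarray(restored, dtype=float).ravel()
    error = np.linalg.norm(x - x_n)
    if error == 0.0:
        return float("inf")  # perfect reconstruction
    return 20.0 * np.log10(np.linalg.norm(x) / error)


original = np.array([[1.0, 2.0], [2.0, 0.0]])              # Euclidean norm 3
restored = original + np.array([[0.3, 0.0], [0.0, 0.0]])   # error norm 0.3
print(snr_db(original, restored))
```

With a signal norm of 3 and an error norm of 0.3, the ratio is 10, which corresponds to about 20 dB.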

Figure 2.

The original images are shown in (a) “Cameraman image” and (f) “Anchalee image”. The images degraded by an average blur and a motion blur are shown in (b,g), respectively. (c,h) show the reconstruction by our algorithm, (d,i) show the reconstruction by the Dao-Jun Wen algorithm and (e,j) show the reconstruction by the Kazmi et al. algorithm.

Table 2.

The performance of signal-to-noise ratio (SNR) in the gray and RGB images.

Figure 3.

Numerical behavior of all algorithms with SNR in Example 2.

Remark 4.

It can be observed from Figure 2 that the gray and RGB images restored by our algorithm are of better quality than those restored by the algorithms of Dao-Jun Wen [14] and Kazmi et al. [7]. This is confirmed by the higher SNR values of our algorithm in Table 2 and Figure 3.

6. Conclusions

In this article, the main contribution is a novel self-adaptive inertial Krasnoselski–Mann iterative method for solving hierarchical fixed point and split monotone variational inclusion problems in Hilbert spaces. The main advantage of this scheme is that it combines an inertial technique with a self-adaptive step size criterion that does not require prior knowledge of the operator (matrix) norm. Under standard assumptions, the strong convergence of the proposed method is established. A modified scheme derived from the proposed method is given for solving hierarchical fixed point and split problems. An application of the proposed method to image recovery and a comparison with the algorithms of Dao-Jun Wen [14] and Kazmi et al. [7] are presented.

Author Contributions

Conceptualization, P.C. and A.K.; methodology, P.C. and A.K.; formal analysis, P.C. and A.K.; investigation, P.C. and A.K.; writing—original draft preparation, P.C. and A.K.; writing—review and editing, P.C. and A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the Faculty of Science, Burapha University (SC05/2564) and the Faculty of Science, Naresuan University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editor and the anonymous referees for their valuable comments and suggestions, which helped to improve the original version of this paper. This work was financially supported by the Faculty of Science, Burapha University (SC05/2564), and the second author would like to express her deep thanks to the Faculty of Science, Naresuan University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Combettes, P.L.; Pesquet, J.-C. Deep neural structures solving variational inequalities. Set-Valued Var. Anal. 2020, 28, 491–518.

- Luo, M.J.; Zhang, Y. Robust solutions to box-constrained stochastic linear variational inequality problem. J. Ineq. Appl. 2017, 2017, 253.

- Juditsky, A.; Nemirovski, A. Solving variational inequalities with monotone operators in domains given by linear minimization oracles. arXiv 2013, arXiv:1312.1073v2.

- Moudafi, A.; Mainge, P.-E. Towards viscosity approximations of hierarchical fixed-point problems. Fixed Point Theory Appl. 2006, 1–10.

- Ćirić, L.J. Some Recent Results in Metrical Fixed Point Theory; University of Belgrade: Belgrade, Serbia, 2003.

- Faraji, H.; Radenović, S. Some fixed point results of enriched contractions by Krasnoseljski iterative method in partially ordered Banach spaces. Trans. A Razmadze Math. Inst. to appear.

- Kazmi, K.R.; Ali, R.; Furkan, M. Hybrid iterative method for split monotone variational inclusion problem and hierarchical fixed point problem for finite family of nonexpansive mappings. Numer. Algorithms 2018, 79, 499–527.

- Moudafi, A. Krasnoselski–Mann iteration for hierarchical fixed-point problems. Inverse Probl. 2007, 23, 1635–1640.

- Moudafi, A. Split monotone variational inclusions. J. Optim. Theory Appl. 2011, 150, 275–283.

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Combettes, P.L. The convex feasibility problem in image recovery. Adv. Imaging Electron Phys. 1996, 95, 155–453.

- Kazmi, K.R.; Ali, R.; Furkan, M. Krasnoselski–Mann type iterative method for hierarchical fixed point problem and split mixed equilibrium problem. Numer. Algorithms 2017, 77, 289–308.

- Wen, D.-J. Modified Krasnoselski–Mann type iterative algorithm with strong convergence for hierarchical fixed point problem and split monotone variational inclusions. J. Comput. Appl. Math. 2021, 393, 1–13.

- Byrne, C.; Censor, Y.; Gibali, A.; Reich, S. The split common null point problem. J. Nonlinear Convex Anal. 2012, 13, 759–775.

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operator via discretization of nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11.

- Chuang, C.S. Hybrid inertial proximal algorithm for the split variational inclusion problem in Hilbert spaces with applications. Optimization 2017, 66, 777–792.

- Olona, M.A.; Alakoya, T.O.; Owolabi, A.O.-E.; Mewomo, O.T. Inertial shrinking projection algorithm with self-adaptive step size for split generalized equilibrium and fixed point problems for a countable family of nonexpansive multivalued mappings. Demonstr. Math. 2021, 54, 47–67.

- Olona, M.A.; Alakoya, T.O.; Owolabi, A.O.-E.; Mewomo, O.T. Inertial algorithm for solving equilibrium, variational inclusion and fixed point problems for an infinite family of strictly pseudocontractive mappings. J. Nonlinear Funct. Anal. 2021, 2, 10.

- Kesornprom, S.; Cholamjiak, P. Proximal type algorithms involving linesearch and inertial technique for split variational inclusion problem in Hilbert spaces with applications. Optimization 2019, 68, 2369–2395.

- Tang, Y. Convergence analysis of a new iterative algorithm for solving split variational inclusion problems. J. Ind. Manag. Optim. 2020, 16, 945–964.

- Tang, Y.; Gibali, A. New self-adaptive step size algorithms for solving split variational inclusion problems and its applications. Numer. Algorithms 2020, 83, 305–331.

- Xu, H.K.; Kim, T.H. Convergence of hybrid steepest-descent methods for variational inequalities. J. Optim. Theory Appl. 2003, 119, 185–201.

- Maingé, P.E. Approximation methods for common fixed points of nonexpansive mappings in Hilbert spaces. J. Math. Anal. Appl. 2007, 325, 469–479.

- Saejung, S.; Yotkaew, P. Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. 2012, 75, 742–750.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).