Abstract

Probability theory is built around Kolmogorov’s axioms. To each event, a numerical degree of belief between 0 and 1 is assigned, which provides a way of summarizing the uncertainty. Kolmogorov’s probabilities of events are added, the sum of all possible events is one. The numerical degrees of belief can be estimated from a sample by its true fraction. The frequency of an event in a sample is counted and normalized resulting in a linear relation. We introduce quantum-like sampling. The resulting Kolmogorov’s probabilities are in a sigmoid relation. The sigmoid relation offers a better importability since it induces the bell-shaped distribution, it leads also to less uncertainty when computing the Shannon’s entropy. Additionally, we conducted 100 empirical experiments by quantum-like sampling 100 times a random training sets and validation sets out of the Titanic data set using the Naïve Bayes classifier. In the mean the accuracy increased from to .

1. Introduction

Quantum algorithms are based on different principles than classical algorithm. Here we investigate a simple quantum-like algorithm that is motivated by quantum physics. The incapability between Kolmogorov’s probabilities and quantum probabilities results from the different norms that are used. In quantum probabilities the length of the vector in norm representing the amplitudes of all events is one. Usually, Kolmogorov’s probabilities are converted in Quantum-like probabilities by the squared root operation, it is difficult to attach any meaning to the squared root operation. Motivated by the lack of interpretability we define quantum-like sampling, the sampling. The resulting Kolmogorov’s probabilities are not any more linear but related to the sigmoid function. In the following we will introduce the traditional sampling Then we will introduce the quantum-like sampling, the sampling. We will indicate the relation between the sampling, the sigmoid function and the normal distribution. The quantum-like sampling leads to less uncertainty. We fortify this hypothesis by empirical experiments with a simple Naïve Bayes classifier on the Titanic dataset.

2. Kolmogorovs Probabilities

Probability theory is built around Kolmogorov’s axioms (first published in 1933 [1]). All probabilities are between 0 and 1. For any proposition x,

and

To each sentence, a numerical degree of belief between 0 and 1 is assigned, which provides a way of summarizing the uncertainty. The last axiom expresses the probability of disjunction and is given by

where do these numerical degrees of belief come from?

- Humans can believe in a subjective viewpoint, which can be determined by some empirical psychological experiments. This approach is a very subjective way to determine the numerical degree of belief.

- For a finite sample we can estimate the true fraction. We count the frequency of an event in a sample. We do not know the true value because we cannot access the whole population of events. This approach is called frequentist.

- It appears that the true values can be determined from the true nature of the universe, for example, for a fair coin, the probability of heads is . This approach is related to the Platonic world of ideas. However, we can never verify whether a fair coin exists.

Frequentist Approach and Sampling

Relying on the frequentist approach, one can determine the probability of an event x by counting. If is the set of all possible events, and the cardinality determines the number of elements of a set and is the number of elements of the set x and with

This kind of sampling is the sampling. With n events that describe all possible events of the set ,

We can interpret as dimension of an n dimensional vector with the vector being

with the norm

it is as a unit-length vector in the norm

3. Quantum Probabilities

Quantum physics evaluates a probability of a state x as the squared magnitude of a probability amplitude , which is represented by a complex number

This is because the product of complex number with its conjugate is always a real number. With

Quantum physics by itself does not offer any justification or explanation beside the statement that it just works fine, see [2]. This quantum probabilities are as well called von Neumann probabilities. Converting two amplitudes into probabilities leads to an interference term ,

making both approaches, in general, incompatible

In other words, the summation rule of classical probability theory is violated, resulting in one of the most fundamental laws of quantum mechanics, see [2]. In quantum physics we interpret as dimension of an n dimensional vector with the vector being

In quantum physics the unit-length vector is computed in the in the norm

instead of the norm with a unit-length vector in the norm

By replacing the norm by the Euclidean norm in the classical probability theory (Kolmogorov probabilities), we obtain quantum mechanics [3] with all the corresponding laws. The incapability between the Kolmogorov’s probabilities and quantum probabilities results from the simple fact

This incapability is as well the basis for quantum cognition. Quantum cognition is motivated by clues from psychology indicate that human cognition is based on quantum probability rather than the traditional probability theory as explained by Kolmogorov’s axioms, see [4,5,6,7]. Empirical findings show that, under uncertainty, humans tend to violate the expected utility theory and consequently the laws of classical probability theory (e.g., the law of total probability [6,7,8]), In [4,9,10,11,12,13,14] leading to what is known as the “disjunction effect” which, in turn, leads to violation of the Sure Thing Principle. The violation results from an additional interference that influences the classical probabilities.

Conversion

The amplitude is the root of the belief multiplied with the corresponding phase [11,12,13]

With sampling it is difficult to attach any meaning to ,

Motivated by the lack of interpretability we define the quantum-like sampling, the sampling with n events that describe all possible events

and

with

The sampling leads to an interpretation of the amplitudes as a normalized frequency of occurrence of an event multiplied by the phase. is dependent on the distribution of all values . When developing empirical experiments that are explained by quantum cognition models sampling should be used rather than sampling. What is the interpretation of outside quantum interpretation?

4. Quantum-Like Sampling and the Sigmoid Function

To understand the difference between sampling and sampling (quantum-like sampling) we will analyze a simple binary event x

For sampling and is defined as

and for sampling

with

we can define the functions for binary and sampling as

and

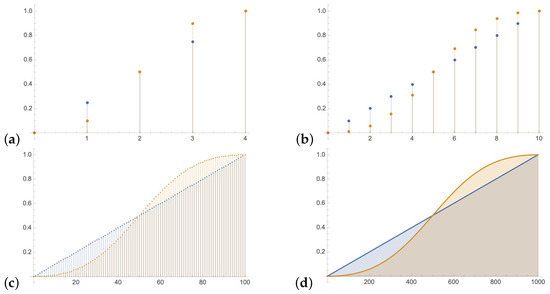

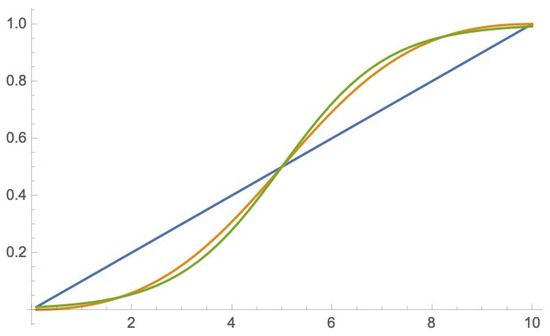

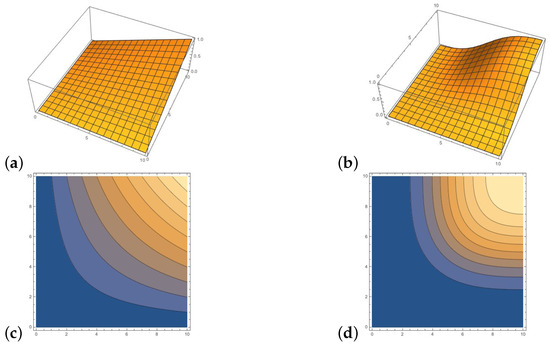

In Figure 1 is compared to for , (a) , (b) , (c) and (d) . With growing size of the function converge to a continuous sigmoid function. In Figure 2 the sigmoid function with is compared to the well known scaled to the logistic function.

Figure 1.

compared to for , (a) , (b) , (c) and (d) . With growing size of the function converge to a sigmoid function.

Figure 2.

Sigmoid functions: versus logistic function .

The derivative of the sigmoid function is a bell-shaped function. For the continuous function , the derivative is similar to the Gaussian distribution. The central limit theorem states that under certain (fairly common) conditions, the sum of many random variables will have an approximately Gausssian distribution. In Figure 3 the derivative of with , is indicated and compared to Gaussian distribution with and .

Figure 3.

(a) and ; (b) versus .

The derivative of the sigmoid function is less similar to the probability mass function of the the binomial distribution.

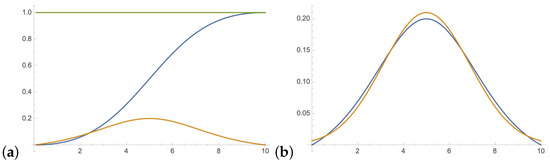

The sampling leads to natural sigmoid representation of probabilities that reflects the nature of Gaussian/Normal distribution. This leads to less uncertainty using compared to sampling represented by Shannon’s entropy.

as can be seen in Figure 4 for .

Figure 4.

Shannon’s entropy H with , blue discrete plot for sampling and yellow discrete plot sampling.

Combination

Depending on or sampling we get different results when we combine probabilities. Multiplying two independent sampled events x and y results in the joint distribution

when sampled with and

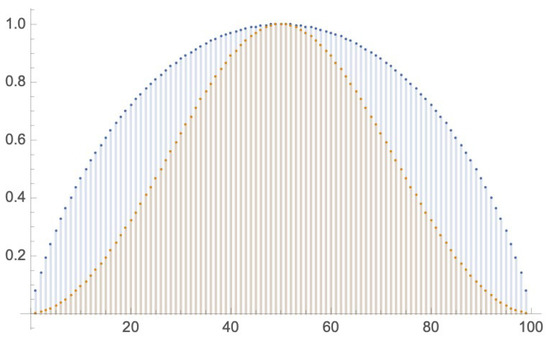

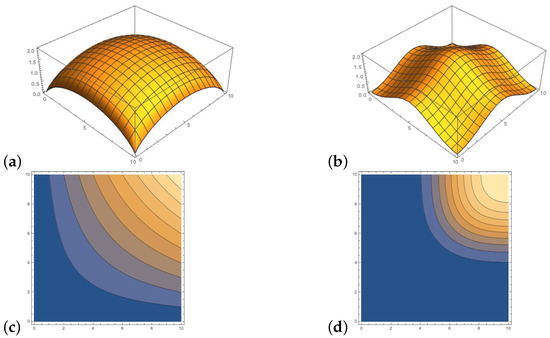

when sampled with as indicated in the Figure 5. The same applies for weighted sum of information, corresponding to the Shannon’s entropy as used in the algorithm [15,16,17] for symbolical machine learning to preform a greedy search for small decision trees. Computing

when sampled with and

when sampled with leads to different and values as indicated in the Figure 6.

Figure 5.

Multiplying two independent sampled events x and y with leads to different results: (a) , (b) , (c) counterplot of , (d) counterplot of .

Figure 6.

Different and values for sampled events x and y with : (a) , (b) , (c) counterplot of , (d) counterplot of .

Less uncertainty would lead to better results in machine learning. We preform empirical experiments with a simple Naïve Bayes classifier to fortify this hypothesis.

5. Naïve Bayes Classifier

For a target function , where each instance x described by attributes Most probable value of is:

The likelihood of the conditional probability is described by possible combinations. For n possible variables, the exponential growth of combinations being true or false becomes an intractable problem for large n since all possible combinations must be known. The decomposition of large probabilistic domains into weakly connected subsets via conditional independence,

is known as the Naïve Bayes assumption and is one of the most important developments in the recent history of Artificial Intelligence [16]. It assumes that a single cause directly influences a number of events, all of which are conditionally independent. The Naïve Bayes classifier is defined as

Titanic Dataset

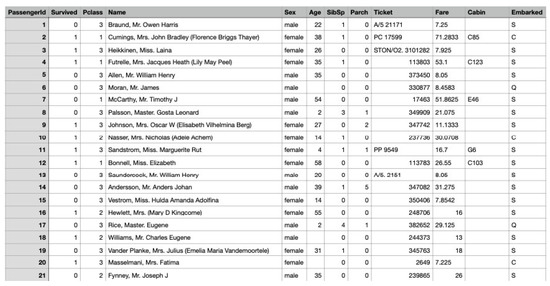

When the Titanic sank it killed 1502 out of 2224 passengers and crew. We are using a file titanic.cvs processed file from https://gist.github.com/michhar/ accessed on 1 July 2021 that corresponds to the file train.cvs from kaggle https://www.kaggle.com/c/titanic/data?select=train.csv accessed on 1 July 2021 that contains data for 891 of the real Titanic passengers. Each row represents one person. The columns describe different attributes about the person including their , whether they , heir passenger-class , their , their , their , and other six attributes, see Figure 7. In our experiment we will only use the attributes , , and .

Figure 7.

The Titanic data set is represented in an Excel table that contains data for 891 of the real Titanic passengers, some entries are not defined.

The binary attribute (=s) will be our target h resulting in the prior and . Out of the 891 passengers 342 survived and 549 did not survive. The attribute has three values 1 for upper class, 2 for middle class and 3 for lower class. It will be binarized into the attribute c indicating if the person had a lower class cabin by

in the case is not defined the default value is 0. The attribute has two binary values represented by the binary attribute m

in the case is not defined the default value is 0. The attribute will be binarized into the attribute g indicating if the person is a child or grown up by

in the case is not defined the default value is 1.

Using the sampling over the whole data set we get the values for the priors

and for the likelihoods,

Using the sampling over the whole data set we get the values for the priors

and for the likelihoods,

The sampled probability values are quite different.

We measure the accuracy of our algorithm by dividing the number of entries it correctly classified by the total number of entries. For sampling there 190 are entries wrong classified resulting in the accuracy of , for the sampling 179 entries are wrong classified resulting in the accuracy of .

In the next step we separate our data set in a training set and a validation set resulting in 295 elements in the validation set We use sklearn.model_selection accessed on 1 July 2021 import train_test_split accessed on 1 July 2021 with the parameters train_test_split(XH,yy,test_size=0.33,random_state=42) accessed on 1 July 2021. The later is used to validate how well our algorithm is doing. For sampling 60 entries are wrong classified resulting in the accuracy of , for the sampling 55 entries are wrong classified resulting in the accuracy of .

In the next we sample the 100 times a random training set and validation set We use sklearn.model_selection accessed on 1 July 2021 import train_test_split accessed on 1 July 2021 with the parameters train_test_split(XH,yy,test_size=0.33) accessed on 1 July 2021. For sampling there were in the mean entries wrong classified resulting in the accuracy of , for the sampling there were entries wrong classified resulting in the accuracy of .

The trend from this simple evaluation indicates that sampling leads to better results compared to sampling in the empirical experiments.

So far we looked at the performance of classification models in terms of accuracy. Specifically, we measured error based on the fraction of mistakes. However, in some tasks, there are some types of mistakes that are worse than others. However in our simple task the correctly classified results when sampled are also correctly classified when sampled.

6. Conclusions

We introduced quantum-like sampling also called the sampling. The sampling leads to an interpretation of the amplitudes as a normalized frequency of occurrence of an event multiplied by the phase

is dependent on the distribution of all values . When developing empirical experiments that are explained by quantum cognition models sampling should be used rather than sampling.

The quantum inspired sampling maps the probability values to a natural continuous sigmoid function, its derivative is is a bell-shaped function that is similar to the Gaussian distribution of events. The sampling improves the classification accuracy in machine learning models that are based on sampling as indicated by empirical experiments with Naïve Bayes classifier.

Funding

This work was supported by national funds through FCT, Fundação para a Ciência e a Tecnologia, under project UIDB/50021/2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper are provided within the main body of the manuscript.

Acknowledgments

We would like to thank the anonymous reviewers for their valuable feedback.

Conflicts of Interest

Compliance with Ethical Standards: The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. The author declare no conflict of interest. This article does not contain any studies with human participants or animals performed by any of the authors.

References

- Kolmogorov, A. Grundbegriffe der Wahrscheinlichkeitsrechnung; Springer: Berlin, Germany, 1933. [Google Scholar]

- Binney, J.; Skinner, D. The Physics of Quantum Mechanics; Oxford University Press: Oxford, UK, 2014. [Google Scholar]

- Aaronson, S. Is quantum mechanics an island in theoryspace? In Proceedings of the Växjö Conference Quantum Theory: Reconsideration of Foundations; Khrennikov, A., Ed.; University Press: Vaxjo, Sweden, 2004; Volume 10, pp. 15–28. [Google Scholar]

- Busemeyer, J.; Wang, Z.; Trueblood, J. Hierarchical bayesian estimation of quantum decision model parameters. In Proceedings of the 6th International Symposium on Quantum Interactions, Paris, France, 27–29 June 2012; pp. 80–89. [Google Scholar]

- Busemeyer, J.R.; Trueblood, J. Comparison of quantum and bayesian inference models. In Quantum Interaction; Lecture Notes in Computer, Science; Bruza, P., Sofge, D., Lawless, W., van Rijsbergen, K., Klusch, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5494, pp. 29–43. [Google Scholar]

- Busemeyer, J.R.; Wang, Z.; Lambert-Mogiliansky, A. Empirical comparison of markov and quantum models of decision making. J. Math. Psychol. 2009, 53, 423–433. [Google Scholar] [CrossRef]

- Busemeyer, J.R.; Wang, Z.; Townsend, J.T. Quantum dynamics of human decision-making. J. Math. Psychol. 2006, 50, 220–241. [Google Scholar] [CrossRef]

- Khrennikov, A. Quantum-like model of cognitive decision making and information processing. J. Biosyst. 2009, 95, 179–187. [Google Scholar] [CrossRef] [PubMed]

- Busemeyer, J.; Pothos, E.; Franco, R.; Trueblood, J. A quantum theoretical explanation for probability judgment errors. J. Psychol. Rev. 2011, 118, 193–218. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Busemeyer, J.; Wang, Z. Quantum cognition: Key issues and discussion. Top. Cogn. Sci. 2014, 6, 43–46. [Google Scholar] [CrossRef] [PubMed]

- Wichert, A. Principles of Quantum Artificial Intelligence: Quantum Problem Solving and Machine Learning, 2nd ed.; World Scientific: Singapore, 2020. [Google Scholar]

- Wichert, A.; Moreira, C. Balanced quantum-like model for decision making. In Proceedings of the 11th International Conference on Quantum Interaction, Nice, France, 3–5 September 2018; pp. 79–90. [Google Scholar]

- Wichert, A.; Moreira, C.; Bruza, P. Quantum-like bayesian networks. Entropy 2020, 22, 170. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Yukalov, V.; Sornette, D. Decision theory with prospect interference and entanglement. Theory Decis. 2011, 70, 283–328. [Google Scholar] [CrossRef] [Green Version]

- Luger, G.F.; Stubblefield, W.A. Artificial Intelligence, Structures and Strategies for Complex Problem Solving, 3rd ed.; Addison-Wesley: Boston, MA, USA, 1998. [Google Scholar]

- Mitchell, T. Machine Learning; McGraw-Hill: New York, NY, USA, 1997. [Google Scholar]

- Winston, P.H. Artificial Intelligence, 3rd ed.; Addison-Wesley: Boston, MA, USA, 1992. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).